Abstract

An incomplete-data Fisher scoring method is proposed for parameter estimation in models where data are missing and in latent-variable models that can be formulated as a missing data problem. The convergence properties of the proposed method and an accelerated variant of this method are provided. The main features of this method are its ability to accelerate the rate of convergence by adjusting the steplength, to provide a second derivative of the observed-data log-likelihood function using only the functions used in the proposed method, and the ability to avoid having to explicitly solve the first derivative of the object function. Four examples are presented to demonstrate how the proposed method converges compared with the EM algorithm and its variants. The computing time is also compared.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

As a method for estimating the parameters of probability models with incomplete data, Dempster et al. (1977) formulated the expectation-maximization (EM) algorithm. The EM algorithm has become the standard method of estimating such parameters in the presence of missing data. The EM algorithm has also been extended to estimate parameters for the estimating functions other than the probability models (Elashoff and Ryan 2004) and it can also be used in the context of latent variable models that can be formulated as a missing data problem (e.g., Rubin and Thayer 1982).

Two major limitations of the EM algorithm, which are often emphasized, are its slow convergence and the unavailability of standard errors as a byproduct of the algorithm (McLachlan and Krishnan 2008). Slow convergence means that the increment at one iteration is very small and thus the algorithm might be stopped erroneously (Bentler and Tanaka 1983). To accelerate convergence, various authors have proposed the use of the Newton–Raphson type iteration for the transformed EM algorithm (e.g., Meilijson 1989; Rai and Matthews 1993; Jamishidian and Jennrich 1993; Lange 1995a, b; Varadhan and Roland 2008) and other methods such as Aitken’s acceleration (e.g., Louis 1982; McLachlan 1995). The unavailability of standard errors occurs because the second derivative of the log-likelihood function cannot be used. As a result, judging whether a convergent point is a local maximum or a saddle point is impossible. Meng and Rubin (1991) proposed the supplemented EM algorithm to provide the second derivative through numerical differentiation.

In this paper, another method for parameter estimation in probability models with incomplete data is proposed, namely, the “incomplete-data Fisher scoring method” (hereafter the IFS method). The IFS method not only overcomes the limitations of the EM algorithm, but it also offers other merits. The IFS method can be accelerated by simple steplength adjustments and the second derivative can be produced through numerical differentiation. The IFS uses the iteration equation,

with steplength adjustment, where \(\varvec{\alpha }^{(t)}\) is the value of parameter \(\varvec{\alpha }\) at the tth iteration, \(q^{(t)}\) is the tth steplength, \(J_\mathrm{com}({\varvec{\alpha }}^{(t)})\) is the information matrix for a single complete datum evaluated at \(\varvec{\alpha }={\varvec{\alpha }}^{(t)}\), and \(\nabla \ell _\mathrm{obs}({\varvec{\alpha }}^{(t)})\) is the first derivative of the observed-data log-likelihood function with missing data of sample size n evaluated at \(\varvec{\alpha }={\varvec{\alpha }}^{(t)}\). Function \(\nabla \ell _\mathrm{obs}({\varvec{\alpha }}^{(t)})\) can be replaced with \(\nabla ^{10}Q({\varvec{\alpha }}^{(t)}|{\varvec{\alpha }}^{(t)})\) when necessary, where Q() is the Q-function of the EM algorithm, which is often easier to calculate than \(\nabla \ell _\mathrm{obs}({\varvec{\alpha }}^{(t)})\), and \(\nabla ^{10} Q({\varvec{\alpha }}^{(t)}|{\varvec{\alpha }}^{(t)})\) is the first derivative of the Q-function with respect to the first argument and is evaluated at \(\varvec{\alpha }={\varvec{\alpha }}^{(t)}\). This method is evidently a Fisher scoring method (Osborne 1992) modified for missing data, and also a scaled version of the steepest ascent (Bertsekas 2004). The first advantage of this form is that no exact solution to the first derivative of the observed-data log-likelihood function is required. In cases where an exact solution to the first derivative is not available, the EM algorithm would need to resort to numerical methods such as the steepest ascent (e.g., Lange 1995a). The second advantage of this form is that to develop the IFS method we were able to make use of the vast amount of knowledge that has already been generated with respect to the Newton–Raphson type algorithm. See Bertsekas (2004) on this development.

The IFS method is closely related to the EM algorithm, although the former is derived without starting from the EM map. For further details, this close relationship is exemplified by studies focused on different aspects of the EM algorithm (Titterington 1984; Lange 1995b; Xu and Jordan 1995; Salakhutdinov et al. 2002). Titterington (1984) proposed the same form of the equation as put forward here in terms of the IFS method, as an approximation to the EM algorithm. Lange (1995a) proposed the EM gradient method which converges exactly to Eq. (1) when the distribution belongs to an exponential family of distributions with a natural parameter \(\varvec{\alpha }\). However, application of Eq. (1) is not limited to an exponential family distribution. Xu and Jordan (1995) and Salakhutdinov et al. (2002), among others, expressed the EM algorithm in the form of a scaled version of the steepest ascent method for latent variable models. The IFS method becomes identical to the EM algorithm for certain steplengths as illustrated in the examples which follow. As such, the IFS method can be interpreted as another derivation of the EM algorithm, akin to Minka (1998).

In the next section, two examples are provided to demonstrate the close relationship between the proposed method and the EM algorithm and to emphasize the importance of steplength adjustment. In Sect. 3, a formal definition of the proposed IFS method, and the accelerated IFS, and their derivations are provided. In Sect. 4, properties of the method are described with proofs relegated to the Appendix. In Sect. 5, four numerical examples are shown with the computing time comparison. In Sect. 6, conclusions are offered along with suggestions for future research.

2 Examples

In the following two examples, I show how Eq. (1) of the IFS method is related to the iteration of the conventional EM algorithm (Dempster et al. 1977).

2.1 Normal distribution

Assume that missing data for variable \(\varvec{y}=(y_1,y_2)^{\prime }\) from the two-variate normal distribution \(N({\varvec{\mu }},\varSigma )\) are as follows:

where \({\varvec{\mu }}= (\mu _1,\mu _2)^{\prime }\) and \(\displaystyle \varSigma =\left( \begin{array}{cc} \sigma _{11} &{} \sigma _{12} \\ \sigma _{21} &{} \sigma _{22} \end{array} \right) \). The data on variable \(y_1\) are missing from the \((m+1)\)th to the nth cases. For these missing data, we construct a log-likelihood function which is called the “observed-data log-likelihood function” as follows:

and maximize this function to obtain the maximum likelihood estimates (MLE for short) of the parameters \(\varvec{\alpha }=({\varvec{\mu }}^{\prime },\sigma _{11},\sigma _{12},\sigma _{22})^{\prime }\). Note that \(\sigma _{12}=\sigma _{21}\). The problem in the maximization is that the function is not easy to handle, e.g., it is not easy to take the derivative

The difficulty in handling the observed-data log-likelihood function can be mitigated by using the complete data and the complete-data log-likelihood function. If the complete data \((y_{1i},y_{2i})\) (\(i=1,\ldots ,n\)) were available, the complete-data log-likelihood function,

would produce the MLE. However, this is not the case, since the data contain missing values. The basic idea of the EM algorithm is to replace the missing values in \(\ell _\mathrm{com}(\varvec{\alpha })\) with their conditional expectation on the observed data. Then, the EM algorithm produces Q-function, \(Q(\varvec{\alpha }|{\varvec{\alpha }}^{(t)})=E[\ell _\mathrm{com}(\varvec{\alpha })|Y_\mathrm{obs},{\varvec{\alpha }}^{(t)}]\), where \(Y_\mathrm{obs}\) is the observed data (Dempster et al. 1977).

Deriving the iterations of the EM algorithm by taking the derivative of \(Q(\varvec{\alpha }|{\varvec{\alpha }}^{(t)})\), we have iterations,

where vec(A) is a column vector stacking columns in matrix A. Since \(y_{1i}\) (\(i=m+1,\ldots ,n\)) are missing values, they are imputed by the conditionally expected value. The iterations can be written as

The function in the curly brackets is the first derivative of \(\ell _\mathrm{obs}(\varvec{\alpha })\) with respect to \({\varvec{\mu }}\). Thus, this iteration can be written as

In a similar way, the EM iteration for \(\varSigma \) becomes

See the Appendix on the equivalence between Eqs. (3) and (4).

In a concise way, by rewriting \(\varvec{\alpha }=({\varvec{\mu }}^{\prime },vec(\varSigma )^{\prime })^{\prime }\), the iterations of the EM algorithm for a normal distribution are given in the form of Eq. (1) as

where \(J_\mathrm{com}(\varvec{\alpha })=\displaystyle \left( \begin{array}{cc} \varSigma ^{-1} &{} O \\ O &{} .5(\varSigma ^{-1}\otimes \varSigma ^{-1}) \end{array}\right) \), which is the information matrix of a normal distribution for the complete data of size one. Apparently this is the iteration of Eq. (1) with the unit steplength. The resulting Eq. (5) implies that the expression of this equation might be generally related to the EM algorithm, and that appropriate adjustment of the steplength can accelerate the faster convergence rate than the EM algorithm.

2.2 A Mixture of Poisson distributions

In this example, we consider a mixture of Poisson distributions. This model involves no missing data, but a latent variable. However, the mixture of Poisson distributions can be formulated as a missing data problem by augmenting the data.

Let \(y_1,\ldots ,y_n\) be data taken independently from a mixture of two Poisson distributions: \(Po(y_i|\alpha _1)\) with probability \(\pi \)(\(>0\)) and \(Po(y_i|\alpha _2)\) with probability \(1-\pi \) (\(i=1,\ldots ,n\)). The parameters to be estimated are \(\varvec{\alpha }=(\pi ,\alpha _1,\alpha _2)^{\prime }\). The observed-data log-likelihood function is given as

Because taking the derivative is overly cumbersome, an avoidance strategy is needed. The strategy augments the original data by introducing a random binary variable \(z_i\)(\(i=1,\ldots ,n\)) which takes one if \(y_i\) belongs to \(Po(y_i|\alpha _1)\) and zero if \(y_i\) belongs to \(Po(y_i|\alpha _2)\), and constructs augmented complete data, \((y_i,z_i)\) (\(i=1,\ldots ,n\)). The complete-data log-likelihood function is

Using the complete-data log-likelihood function, we are able to construct two seemingly different but actually closely related iterations.

Applying the EM algorithm, we obtain the Q-function of the EM algorithm, \(Q(\varvec{\alpha }|{\varvec{\alpha }}^{(t)})= E\left[ \ell _\mathrm{com}(\varvec{\alpha }) |\varvec{y};{\varvec{\alpha }}^{(t)}\right] \). With the standard notation

(See McLachlan and Krishnan 2008), the iterations of the EM algorithm become

Applying the IFS method in Eq. (1), we need to compute \(\nabla \ell _\mathrm{obs}(\varvec{\alpha })\). However, in this case, \(\nabla \ell _\mathrm{obs}(\varvec{\alpha })\) is hard to compute. Instead, we use the useful relationship that \(\nabla \ell _\mathrm{obs}(\varvec{\alpha })=\nabla ^{10}Q(\varvec{\alpha }|{\varvec{\alpha }}^{(t)})\) which makes it less difficult to compute the derivative. Then, we have iterations,

where we have used different steplengths for each element of the parameters, \(q_1^{(t)}\), \(q_2^{(t)}\) and \(q_3^{(t)}\), and \(J_\mathrm{com}(\varvec{\alpha }) = \mathrm{diag}(1,\pi ,1-\pi )\).

The iterations of the EM algorithm again become identical to those of the IFS method by adjusting steplengths. By setting \(q_{1}^{(t)}=1\), the first iteration equation becomes that of the EM algorithm. The second and third iteration equations become those of the EM algorithm by setting \(q_2^{(t)}=n\pi ^{(t)}/\sum _{i=1}^nz_i^{(t)}\) and \(q_3^{(t)}=n(1-\pi ^{(t)})/\sum _{i=1}^n(1-z_i^{(t)})\), respectively. Let the direction provided by the IFS method be \(\varvec{d}=(d_1,d_2,d_3)^{\prime }\). The direction by the EM algorithm is

This is not the steepest ascent direction in terms of the IFS method because of the different steplengths for each element. Steplengths \(q_2^{(t)}\) and \(q_3^{(t)}\) converge to one as \(|\pi ^{(t)}-\pi ^{(t+1)}|\rightarrow 0\). This means that in the latter stages of convergence, the sequence produced by the IFS method approaches that of the EM algorithm when the IFS method and the EM algorithm start from the common initial values around a convergent point.

Steplengths for the EM algorithm are obtained as follows. The optimum steplength of \(q_1^{(t)}\) is a solution to

where \(d_1\ne 0\). The left-side term can be expressed as

where \(z_i^{(t)}\) is fixed as the current estimate, although it is actually a function of \(\varvec{\alpha }^{(t)}\). The solution is \(q_{1}^{(t)}=1\). In a similar way, for \(q_2^{(t)}\),

leads to \(q_1^{(t)}=n\pi ^{(t)}/\sum _{i=1}^nz_i^{(t)}\). The steplength \(q_3^{(t)}\) becomes identical to that given above in a similar way. Thus, from observation, we can state that the EM algorithm, or the IFS method with these steplengths, proceeds in the same direction as the IFS method in equation (1). The EM algorithm takes (inexact) different steplengths for each element of direction, while the IFS method proceeds on a common steplength. To proceed in the same direction, the common steplength is better than different steplengths for different elements in the same direction. How to determine an appropriate steplength will be discussed in Sect. 3.

3 Definition and derivation

3.1 Definition

As per the foregoing, assume that we have the observed-data log-likelihood function, denoted by \(\ell _\mathrm{obs}(\varvec{\alpha })\), for incomplete data of sample size n and \(r\times 1\) parameter vector \(\varvec{\alpha }\). The complete-data log-likelihood function for this sample size, denoted by \(\ell _\mathrm{com}(\varvec{\alpha })\), is not available for estimation since data contain missing values or the model contains a latent variable. However, the complete-data log-likelihood function can be used as a function of random variables. Thus, its information matrix for a single datum can be computed as \(-E[\nabla ^2 \ell _\mathrm{com}({\varvec{\alpha }}^{(t)})]=n J_\mathrm{com}({\varvec{\alpha }}^{(t)})\), where E is the expectation with respect to the joint distribution of the complete-data variables (Kenward and Molenberghs 1998). In the following, a theory is developed for independent and identically distributed data, which can also be extended for dependent data in a straightforward manner.

We define the IFS method as follows:

IFS method

-

Step 0.

Determine \(\epsilon \)(\(>0\)). Set \(t=0\) and an initial value for \(\varvec{\alpha }^{(0)}\).

-

Step 1.

If \(\Vert \nabla \ell _\mathrm{obs}({\varvec{\alpha }}^{(t)})\Vert <\epsilon \), do not proceed to the subsequent steps.

-

Step 2.

Compute \(\varvec{d}^{(t)}=n^{-1}J_\mathrm{com}(\varvec{\alpha }^{(t)})^{-1}\nabla \ell _\mathrm{obs}(\varvec{\alpha }^{(t)})\).

-

Step 3.

Provide the steplength \(q^{(t)}\) according to some method and adjust it using the Armijo Rule with \(\tilde{\varvec{d}}^{(t)}=q^{(t)}\varvec{d}^{(t)}\).

(Armijo Rule) For a given \(\beta \in (0,1)\) and a given \(\sigma \in (0,.5)\), find the maximum \(s^{(t)}\in \{\beta ^\ell |\ell =0,1,\ldots \}\) such that

$$\begin{aligned} \ell _\mathrm{obs}({\varvec{\alpha }}^{(t)}+s^{(t)}\tilde{\varvec{d}}^{(t)})-\ell _\mathrm{obs}({\varvec{\alpha }}^{(t)})> s^{(t)}\sigma \nabla \ell _\mathrm{obs}({\varvec{\alpha }}^{(t)})^{\prime }\tilde{\varvec{d}}^{(t)}. \end{aligned}$$ -

Step 4.

Improve the current estimate \({\varvec{\alpha }}^{(t)}\) to \({\varvec{\alpha }}^{(t+1)}\) by \({\varvec{\alpha }}^{(t+1)}={\varvec{\alpha }}^{(t)}+ s^{(t)}\tilde{\varvec{d}}^{(t)}\); set \(t\leftarrow t+1\) and go to Step 1.

In Step 1, the algorithm determines whether or not the convergence criterion is satisfied. In some practical cases, the distance between \({\varvec{\alpha }}^{(t)}\) and \({\varvec{\alpha }}^{(t+1)}\) is also used to determine the convergence. This convergence criterion is natural because if \(\varvec{d}^{(t)}={\varvec{0}}\), \(\nabla \ell _\mathrm{obs}({\varvec{\alpha }}^{(t)})\) is reduced to zero under the assumption that \(J_\mathrm{com}({\varvec{\alpha }}^{(t)})\) is nonsingular. In Step 2, if \(J_\mathrm{com}({\varvec{\alpha }}^{(t)})\) is positive definite, then \(\nabla \ell _\mathrm{obs}({\varvec{\alpha }}^{(t)})^{\prime }\varvec{d}^{(t)}>0\), which means that \(\varvec{d}^{(t)}\) is an ascent direction. Positive definiteness is a natural assumption that is usually satisfied. In Step 3, the steplength is determined to ensure that the likelihood increases. An alternative method such as the Wolfe condition (Nocedal and Wright 2006) is also available with the same direction vector \(\tilde{\varvec{d}}^{(t)}\). There are various ways to determine \(q^{(t)}\) including a random guess. With the results obtained from the previous steps, the current estimate \({\varvec{\alpha }}^{(t)}\) is improved to \({\varvec{\alpha }}^{(t+1)}\) in Step 4.

There is no definitive way to determine steplength. An initial steplength might need adjustment to increase the likelihood function, often by shortening the initial steplength. The problem is that the adjusted steplength may render convergence slower than the EM algorithm. Thus, we need a way to identify a good initial steplength that is likely to increase the likelihood function with as few adjustments as possible. The optimal steplength is \(q(\ge 0)\), which minimizes \(\ell _\mathrm{obs}({\varvec{\alpha }}^{(t)}+q\varvec{d}^{(t)})\) for a certain direction \(\varvec{d}^{(t)}\). However, this steplength cannot be derived exactly because the exact minimization is practically difficult. Instead, an inexact steplength is provided by the following Eq. (6). See Sect. 3.3 for its derivation. The steplength in Eq. (6) accelerates the convergence of the IFS when it is used as the initial steplength. We denote the IFS with Eq. (6) “AIFS” (which stands for “accelerated IFS”). The AIFS might require a steplength adjustment at the initial stage of iterations, while, around a convergent point, the AIFS accepts the initial steplength of Eq. (6) without any adjustment, which is proved in Lemma 1 (ii) in the Appendix. It is also shown that the AIFS converges faster than the EM algorithm (Theorem 2 with the subsequent comments).

To increase the convergence rate of the IFS method, the accelerated IFS (AIFS) method is thus proposed herein.

AIFS method

-

Step 0.

Determine \(\epsilon \)(\(>0\)). Set \(t=0\) and an initial value for \(\varvec{\alpha }^{(0)}\).

-

Step 1.

If \(\Vert \nabla \ell _\mathrm{obs}({\varvec{\alpha }}^{(t)})\Vert <\epsilon \), then do not proceed to subsequent steps.

-

Step 2.

Compute \(\varvec{d}^{(t)}=n^{-1}J_\mathrm{com}(\varvec{\alpha }^{(t)})^{-1}\nabla \ell _\mathrm{obs}(\varvec{\alpha }^{(t)})\).

-

Step 3.

Provide the steplength \(q^{(t)}\) and adjust it using the Armijo Rule with \(\tilde{\varvec{d}}^{(t)}=q^{(t)}\varvec{d}^{(t)}\), where

$$\begin{aligned} q^{(t)} =\frac{n \varvec{d}^{(t)\prime }J_\mathrm{com}({\varvec{\alpha }}^{(t)})\varvec{d}^{(t)}}{\varvec{d}^{(t)\prime } \varvec{D}({\varvec{\alpha }}^{(t)},{\varvec{\alpha }}^{(t)}+\varvec{d}^{(t)})}, \end{aligned}$$(6)and \(\varvec{D}(\varvec{\alpha }_1,\varvec{\alpha }_2) =\nabla \ell _\mathrm{obs}(\varvec{\alpha }_1)-\nabla \ell _\mathrm{obs}(\varvec{\alpha }_2)\).

-

Step 4.

Improve the current estimate \({\varvec{\alpha }}^{(t)}\) to \({\varvec{\alpha }}^{(t+1)}\) by \({\varvec{\alpha }}^{(t+1)}={\varvec{\alpha }}^{(t)}+ s^{(t)}\tilde{\varvec{d}}^{(t)}\); set \(t\leftarrow t+1\) and go to Step 1.

The only difference between the IFS and AIFS is with respect to \(q^{(t)}\). In the former, \(q^{(t)}\) is arbitrary, while in the latter \(q^{(t)}\) is systematically estimated. The latter brings greater advantage than the former. Not only does the latter accelerate the convergence, but it also provides the standard errors of the estimates. The following IFS-Variance method explains how to do the estimation.

On the termination of either of these methods, a numerical differential produces the second derivative of \(\ell _\mathrm{obs}(\varvec{\alpha })\) at the convergent point. Let the convergent point be \(\varvec{\alpha }^{*}\). Let \(\varvec{e}_b\) (\(b=1,\cdots ,r\)) be a \(r\times 1\) vector with one only at the bth element and zeros in all other elements, and let \(G=[g_{ab}]_{a,b=1,\ldots ,r}\) be the second derivative of \(\ell _\mathrm{obs}(\varvec{\alpha })\) at \(\varvec{\alpha }^{*}\) by numerical differentiation.

IFS-Variance method

-

Step 0.

Determine \(k(>0)\).

-

Step 1.

For \(a,b=1,\ldots ,r\) (\(a\le b\)), compute

$$\begin{aligned} g_{ab} = \frac{\varvec{D}_a(\varvec{\alpha }^{*}+k\varvec{e}_b,\varvec{\alpha }^{*}-k\varvec{e}_b)}{2k}, \end{aligned}$$where \(\varvec{D}_a(\varvec{\alpha }_1,\varvec{\alpha }_2)\) is the ath element of \(\varvec{D}(\varvec{\alpha }_1,\varvec{\alpha }_2)\).

-

Step 2.

\(G\leftarrow G + G^{\prime } - [g_{aa}]_{a=1,\ldots ,r}\), where \([g_{aa}]\) is a diagonal matrix of elements which are diagonal elements of G.

The standard error matrix of \(\varvec{\alpha }^{*}\) is computed by inverting \(-G/n\).

3.2 Derivation of direction vector

Assume that we improve the current estimate \({\varvec{\alpha }}^{(t)}\) to \({\varvec{\alpha }}^{(t+1)}\) by using the relation,

where \(q\ge 0 0\) is the unknown steplength and \(\varvec{d}\) is the unknown direction vector at the tth iteration. They must be chosen so that \(\ell _\mathrm{obs}({\varvec{\alpha }}^{(t+1)})> \ell _\mathrm{obs}({\varvec{\alpha }}^{(t)})\).

The IFS method can be derived as a modification of the Fisher scoring method (Osborne 1992). The Fisher scoring method for \(\ell _\mathrm{obs}(\varvec{\alpha })\) finds a direction vector \(\varvec{d}\) using a quadratic model function:

where E denotes the expectation with respect to random variables of data and the corresponding missing-data indicator variables (Kenward and Molenberghs 1998; Seaman et al. 2013). The last term contains \(E[\nabla ^2\ell _\mathrm{obs}({\varvec{\alpha }}^{(t)})]\) which is often difficult to compute. Thus, to avoid this computation, we invoke the idea of a lower-bound algorithm (Böhning and Lindsay 1988). The algorithm optimizes a quadratic model function which is lower than the original quadratic function. The lower bound of \(E[\nabla ^2\ell _\mathrm{obs}({\varvec{\alpha }}^{(t)})]\) is \(-nJ_\mathrm{com}({\varvec{\alpha }}^{(t)})\), that is, \(E[\nabla ^2\ell _\mathrm{obs}({\varvec{\alpha }}^{(t)})]\ge -nJ_\mathrm{com}({\varvec{\alpha }}^{(t)})\), where this inequality denotes that \(\varvec{x}^{\prime }(E[\nabla ^2\ell _\mathrm{obs}({\varvec{\alpha }}^{(t)})]+nJ_\mathrm{com}({\varvec{\alpha }}^{(t)}))\varvec{x}\ge 0\) for an arbitrary vector \(\varvec{x}\) of an appropriate size. See the Appendix for the derivation. Based on this relationship, we have another model which minorizes \(m_F^{(t)}(\varvec{d})\),

By taking the first derivative with respect to \(\varvec{d}\), we have

Using \(\nabla \ell _\mathrm{obs}({\varvec{\alpha }}^{(t)})=\nabla ^{10}Q({\varvec{\alpha }}^{(t)}|{\varvec{\alpha }}^{(t)})\) given in Fisher (1925) and McLachlan and Krishnan (2008), \(\varvec{d}^{(t)}\) can also be written as

where \(\nabla ^{10}\) indicates the first derivative with respect to the first parameter. As observed in the Poisson mixture example, the Q-function of the EM algorithm could be useful to compute the first derivative of the observed-data log-likelihood function.

Another reason why \(J_\mathrm{com}(\varvec{\alpha })\) appears as a scaled matrix is explored. If the complete data were available, the Fisher scoring method would be given as

The Fisher scoring method has several merits (Small and Wang 2003). However, we cannot use it here since we only have the observed data. An available primal estimating function is \(\ell _\mathrm{obs}(\varvec{\alpha })\). This function is known to produce a consistent estimator that converges to the same point as \(\ell _\mathrm{com}(\varvec{\alpha })\), that is, to the true value of \(\varvec{\alpha }\), under the assumption that data are missing at random (Little and Rubin 2002). When an estimating function is close to \(n^{-1}J_\mathrm{com}(\varvec{\alpha })^{-1}\nabla \ell _\mathrm{com}(\varvec{\alpha })\), we can expect that the estimate sequence will have properties as good as those of \(n^{-1}J_\mathrm{com}(\varvec{\alpha })^{-1}\nabla \ell _\mathrm{com}(\varvec{\alpha })\). Now, it is proposed that a scaled version of \(\nabla \ell _\mathrm{obs}(\varvec{\alpha })\) should be configured, denoted by \(B\nabla \ell _\mathrm{obs}({\varvec{\alpha }}^{(t)})\), as close to \(n^{-1}J_\mathrm{com}(\varvec{\alpha })^{-1}\nabla \ell _\mathrm{com}(\varvec{\alpha })\) as possible, where B is an appropriate-sized matrix-valued function of \(\varvec{\alpha }\). Let the distance between vectors \(\varvec{a}\) and \(\varvec{b}\) be measured by \(\mathrm{dist}(\varvec{a},\varvec{b})= E\left[ \Vert \varvec{a}-\varvec{b}\Vert ^2\right] \). The problem to be solved is

The solution is \(B=n^{-1}J_\mathrm{com}(\varvec{\alpha })^{-1}\). Thus, \(n^{-1}J_\mathrm{com}(\varvec{\alpha })^{-1}\nabla \ell _\mathrm{obs}(\varvec{\alpha })\) is the best approximation to the direction of the Fisher scoring method for complete data in the sense of expectation. Further, because of its derivation, the IFS direction is closer to \(n^{-1}J_\mathrm{com}(\varvec{\alpha })^{-1}\nabla \ell _\mathrm{com}(\varvec{\alpha })\) than \(E[\nabla ^2\ell _\mathrm{obs}(\varvec{\alpha })]^{-1}\nabla \ell _\mathrm{obs}(\varvec{\alpha })\) which is the direction provided by direct application of the Fisher scoring method to \(\ell _\mathrm{obs}(\varvec{\alpha })\). The direction by the IFS method for incomplete data aims to approach the direction by the Fisher scoring method for complete data.

These two derivations are not based on the EM algorithm. Finally, the EM-based derivation is given to show its close relationship with the EM algorithm. The EM gradient algorithm (Lange 1995a) provides an iteration,

where \(\nabla ^{20}\) indicates the second derivative of the Q-function with respect to the former part of \(\varvec{\alpha }\). In the same spirit as the Fisher scoring method, we take the expectation of the second derivative of the Q-function with respect to \(\varvec{y}\) and its indicator (Kenward and Molenberghs 1998; Seaman et al. 2013). As a result, the iteration of the same form as Eq. (1) with \(q^{(t)}=1\) is obtained.

Whether the IFS method is derived from the EM algorithm or not, the IFS method uses complete data and a complete-data log-likelihood function just as the EM algorithm does. The EM algorithm uses a complete-data log-likelihood function to derive a surrogate function \(Q(\varvec{\alpha }|{\varvec{\alpha }}^{(t)})= E\left[ \ell _\mathrm{com}(\varvec{\alpha })|Y_\mathrm{obs},{\varvec{\alpha }}^{(t)}\right] \) to \(\ell _\mathrm{obs}(\varvec{\alpha })\). The Q-function is expected to be easier to handle than \(\ell _\mathrm{obs}(\varvec{\alpha })\). On the other hand, the IFS method uses the complete-data log-likelihood function to compute the information matrix. It is intriguing that they produce similar iterations as in the previous examples.

3.3 Derivation of steplength

To ensure an increase in the likelihood function, the steplength is determined with an exact or inexact line search. The exact line search finds a steplength maximizing \(\ell _\mathrm{obs}({\varvec{\alpha }}^{(t)}+q\varvec{d}^{(t)})\) (\(q\ge 0\)). This type of line search is sometimes impractical because \(d\ell _\mathrm{obs}({\varvec{\alpha }}^{(t)}+q\varvec{d}^{(t)})/dq=0\) is difficult to solve. Instead, here it is proposed that an inexact line search should be used. This solves a quadratic approximation of \(\ell _\mathrm{obs}({\varvec{\alpha }}^{(t)}+q\varvec{d}^{(t)})\),

The solution is given as

where \(I_\mathrm{obs}({\varvec{\alpha }}^{(t)})=-n^{-1}\nabla ^2\ell _\mathrm{obs}({\varvec{\alpha }}^{(t)})\), and when transforming \(q_0\) to the last equation, we have used \(J_\mathrm{com}({\varvec{\alpha }}^{(t)})\varvec{d}^{(t)}=n^{-1}\nabla \ell _\mathrm{obs}({\varvec{\alpha }}^{(t)})\) which results from Eq. (7). It may be practically impossible to use matrix \(I_\mathrm{obs}({\varvec{\alpha }}^{(t)})\), because the form of \(\ell _\mathrm{obs}(\varvec{\alpha })\) is complicated and taking its derivative is difficult. Instead, we suggest here that the following should be used:

This estimate is derived by use of this relationship:

where \(o(\Vert \varvec{d}^{(t)}\Vert )\) indicates a small quantity compared with \(\Vert \varvec{d}^{(t)}\Vert \), that is, \(o(\Vert \varvec{d}^{(t)}\Vert )/\Vert \varvec{d}^{(t)}\Vert \rightarrow 0\) as \(\Vert \varvec{d}^{(t)}\Vert \rightarrow 0\). This relationship indicates that \(q^{(t)}\) gets close enough to \(q_0\) for sufficiently small \(\Vert \varvec{d}^{(t)}\Vert \)’s.

It happens in practice that when, for example, \(\Vert \varvec{d}^{(t)}\Vert \) is not sufficiently small, \(q^{(t)}\) may not qualify as the steplength. The steplength may be too long, or even negative. In cases where \(q^{(t)}\) is too long, the steplength is reduced until the increase in the likelihood value is ensured. Such a steplength is always found provided that the first derivative of \(\ell _\mathrm{obs}(\varvec{\alpha })\) is not zero, as will be shown in Theorem 1. When \(q^{(t)}\) is negative, after changing its sign or replacing it with an arbitrary positive number, appropriate reduction of the steplength results in a steplength that increases the likelihood value. To reduce the initial steplength, the Armijo Rule is used.

4 Properties

We investigate the properties of the (A)IFS method. In the following, the parameter space, denoted by \(\varOmega \), is a subset of the p-dimensional real space, \(R^p\). A positive integer set is denoted by Z.

To derive the convergence property, we make the following assumptions.

- (A1)

There exist positive constants \(c_1\) and \(c_2\) such that \(c_1 \Vert \varvec{v}\Vert ^2 \le \varvec{v}^{\prime }J_\mathrm{com}({\varvec{\alpha }}^{(t)}) \varvec{v}\le c_2 \Vert \varvec{v}\Vert ^2\) for the sequence \(\{{\varvec{\alpha }}^{(t)}\}\) and all \(\varvec{v}\in R^p\).

- (A2)

The level set \(L_0=\left\{ \varvec{\alpha }\in \varOmega |\ell _\mathrm{obs}(\varvec{\alpha })\ge \ell _\mathrm{obs}(\varvec{\alpha }^{(0)})\right. \left. >-\infty \right\} \) is compact.

- (A3)

\(\ell _\mathrm{obs}(\varvec{\alpha })\) is twice differentiable in the interior of \(\varOmega \).

Assumption (A1) is not restrictive. Indeed, in many practical cases, it is naturally met. As shown in the previous section, the EM algorithm for some models also requires this assumption, proceeding on the basis that it implicitly holds. Assumption (A2) guarantees that there exists an accumulation point of \(\{{\varvec{\alpha }}^{(t)}\}\) in \(L_0\). Assumption (A3) is required to conduct the convergence analysis, and is also used for this purpose with respect to the EM algorithm. Twice differentiability is not unnatural because the information matrix based on the twice differential log-likelihood usually exists on the parameter space.

Theorem 1

The IFS method for \(\ell _\mathrm{obs}(\varvec{\alpha })\) produces a sequence of \(\varvec{\alpha }^{(t)}\). The sequence satisfies the following properties.

-

(i)

Assume that \(\nabla \ell _\mathrm{obs}({\varvec{\alpha }}^{(t)})\ne {\varvec{0}}\). The steplength produced by the Armijo Rule is determined within a finite number of iterations and the likelihood function monotonically increases with the steplength.

-

(ii)

Every limit point of \(\left\{ {\varvec{\alpha }}^{(t)}\right\} \) is a stationary point.

Proof

See Proposition 1.2.1 in Bertsekas (2004). \(\square \)

Note that Theorem 1 does not imply that the IFS method converges to a global maximum from any initial value. Theorem 1 just indicates that \(\varvec{\alpha }^{*}\) may be a local maximum or a saddle point.

Corollary 1

If the log-likelihood function is concave with respect to \(\varvec{\alpha }\), the convergent point \(\varvec{\alpha }^{*}\) satisfies \(\ell _\mathrm{obs}(\varvec{\alpha })\le \ell _\mathrm{obs}(\varvec{\alpha }^{*})\) for \(\forall \varvec{\alpha }\in \varOmega \). Further, if “concavity” can be replaced with “strict concavity, ” then \(\ell _\mathrm{obs}(\varvec{\alpha })<\ell _\mathrm{obs}(\varvec{\alpha }^{*})\) for all \(\varvec{\alpha }\in \varOmega \setminus \{\varvec{\alpha }^{*}\}\).

Proof

See Appendix. \(\square \)

The rate of convergence is of interest here. The convergence rate of the proposed method is investigated in terms of the likelihood value \(\{\ell _\mathrm{obs}(\varvec{\alpha }^{(t)})\}_t\). The following notation o(1) indicates a quantity that goes to zero as \({\varvec{\alpha }}^{(t)}\) goes to \(\varvec{\alpha }^{*}\).

Theorem 2

Assume that the maximum and minimum eigenvalues of \(J_\mathrm{com}(\varvec{\alpha }^{*})^{-1}I_\mathrm{obs}(\varvec{\alpha }^{*})\) are \(\lambda _1\) and \(\lambda _r\) (\(1>\lambda _1>\lambda _r>0\)). Let \(\varDelta ({\varvec{\alpha }}^{(t)})=|\ell _\mathrm{obs}({\varvec{\alpha }}^{(t)})-\ell _\mathrm{obs}(\varvec{\alpha }^{*})|\).

(i) For IFS, there exists a sufficiently large \(t_1\) such that

where \(s^{(t)}q^{(t)}\in (0,1/\lambda _r)\).

(ii) For AIFS, there exists a sufficiently large \(t_2\) such that

Proof

See Appendix. \(\square \)

This theorem states that the AIFS method converges faster than, or at the same rate as, the IFS when

around the convergent point \(\varvec{\alpha }^{*}\). In other words, where steplengths do not satisfy this inequality, the IFS converges faster than the AIFS method. However, before executing the method, it is impossible to know these eigenvalues. In practice, the AIFS should be used from the outset. The EM algorithm (at least the variants appearing in this paper) is regarded as the IFS method with \(s^{(t)}=1\) and \(q^{(t)}\rightarrow 1\). Thus, it can be asserted that the IFS with \(s^{(t)}q^{(t)}>1\) converges at a faster rate than the EM algorithm. Note that this inequality does not express the whole sequence of the method/algorithm.

Corollary 2

Let \(\Vert \cdot \Vert _{I_o}\) be the norm with metric matrix \(I_\mathrm{obs}(\varvec{\alpha }^{*})\). Under the same setting as Theorem 2,

(i) For IFS, there exists a sufficiently large \(t_1\) such that

for \(\forall t\ge t_1\), where \(s^{(t)}q^{(t)}\in (0,1/\lambda _r)\).

(ii) For AIFS, there exists a sufficiently large \(t_2\) such that

for \(\forall t\ge t_2\).

Proof

See “Appendix”. \(\square \)

5 Examples

5.1 Normal distribution

To illustrate how the steplength adjustment works in an empirical context, the AIFS method is compared with the IFS method with \(q^{(t)}=1.5\), and also with the EM algorithm. Note that \(q^{(t)}=1.5\) is an arbitrary number, and the IFS method with \(q_1^{(t)}=q_2^{(t)}=1\) is identical to the EM algorithm. Hereafter, these are referred to as AIFS, IFS, and EM, respectively. Apple crop data from Table 7.4 of Little and Rubin (2002) are used for expository purposes. The data take the same form as in Sect. 2.1 when \(n=18\) and \(m=12\). The three methods to be compared share the initial values, \({\varvec{\mu }}^{(0)}=(30,30)^{\prime }\) and \(\varSigma ^{(0)}=\mathrm{diag}(100,100)\), and the convergence criterion \(\Vert \nabla \ell _\mathrm{obs}({\varvec{\alpha }}^{(t)})\Vert <10^{-4}\), where \(\mathrm{diag}(\varvec{x})\) denotes a diagonal matrix with \(\varvec{x}\) in its diagonal elements and zeros otherwise.

Table 1 gives \(\varDelta ({\varvec{\alpha }}^{(t)})=|\ell _\mathrm{obs}({\varvec{\alpha }}^{(t)})-\ell _\mathrm{obs}(\varvec{\alpha }^{*})|\), \(s^{(t)}q^{(t)}\) of AIFS, IFS, and \(q^{(t)}\) of EM. All values are rounded to four decimal places. Thus, note that 0.0000 is not equal to zero, but a rounded zero. Where “–” appears, this denotes an empty cell because the steplength is not used or the computation is terminated. In the initial stages, EM comes closest to \(\ell _\mathrm{obs}(\varvec{\alpha }^{*})\). In later stages, when \(\varDelta ({\varvec{\alpha }}^{(t)})\) comes closer to zero, EM is further from the convergent point than the other two methods, while AIFS and IFS become closer around \(\varvec{\alpha }^{*}\). As per Theorem 2, it is confirmed that AIFS converges faster than IFS and EM around the convergent point, and as per this example, it is also confirmed that AIFS converges faster than the others even for the whole sequence.

The standard error of the estimate obtained by the (A)IFS method is generated by the IFS-Variance method. In this example, the second derivative of \(\ell _\mathrm{obs}(\varvec{\alpha })\) can be explicitly obtained (e.g., Savalei 2010), and thus the standard error matrix is also obtained. The distance between the former and the latter, measured in terms of the sum of squares, was less than \(10^{-8}\). This indicates that the IFS-Variance method works well here.

5.2 Poisson mixture distribution

Three parameter estimation methods are compared using empirical data: the AIFS, the IFS with the randomly guessed initial value \(q^{(t)}=2\), and the EM algorithm. These are denoted by AIFS, IFS, and EM, respectively. Mortality data from the 1910-1912 London Times newspaper (Hasselblad 1969; Titterington 1984) are used for this purpose. The data record the number of deaths per day and the number of days with \(y_i\) deaths (\(i=1,\ldots ,1096\)). The data are a better fit to a two-component Poisson mixture model than a single component model (Lange 1995b). The initial value is the moment estimates \((\pi ^{(0)},\alpha _1^{(0)},\alpha _2^{(0)})=(0.2870,2.582,1.101)\) and the convergence criterion is \(\Vert \nabla \ell _\mathrm{obs}({\varvec{\alpha }}^{(t)})\Vert <10^{-4}\) for all models.

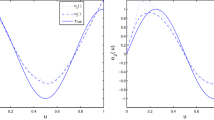

Figure 1 shows the convergence process for AIFS, IFS, and EM. The horizontal axis indicates the iteration number to the 300th iteration and the vertical axis is \(\varDelta ({\varvec{\alpha }}^{(t)})\). Notice that until the 300th iteration AIFS converges but the remaining two methods are still in the process of convergence. The log-likelihood values are all \(-1989.946\) for AIFS, IFS, and EM when these methods satisfy the convergence criterion. The value is reported as the maximum log-likelihood function value (Lange 1995b). The number of iterations until convergence is 196, 1474, and 2208 for AIFS, IFS, and EM, respectively. The figure shows that AIFS converges much faster than the other two methods and the decrease is not so smooth as the other two; IFS and EM behave smoothly. Around the convergent point of IFS and EM, which is not shown in the figure, IFS satisfies the convergence criterion faster than EM. The limitation of EM here is its slow behavior around the convergent point. It is confirmed that AIFS converges faster than IFS, whilst IFS is faster than EM for the whole sequence.

The standard error can be obtained through the IFS-Variance method. In this example, the standard error of the estimates for \((\pi ,\alpha _1,\alpha _2)\) is obtained in an explicit form by taking the second derivative of \(\ell _\mathrm{obs}(\varvec{\alpha })\). The sum of squares of the difference of all elements between the former and the latter is less than \(10^{-6}\). Thus, the IFS-Variance method again works well.

5.3 Multivariate t-distribution

Multivariate t-distributions are often used for data analysis, especially in the context of robust statistics (McLachlan and Peel 2000). Let the observed data, \(\varvec{y}_1,\ldots ,\varvec{y}_n\), follow p-variate t-distribution \(t_p({\varvec{\mu }},\varSigma ,v)\), where \({\varvec{\mu }}\) is a location vector, \(\varSigma \) is a scale matrix, and v is known degrees of freedom. The log-likelihood function of this t-distribution with \(\varvec{y}_i\) (\(i=1,\dots ,n\)) is denoted by \(\ell _\mathrm{obs}(\varvec{\alpha })\). In the following, the parameters, \({\varvec{\mu }}\) and the nonredundant elements in \(\varSigma \), to be estimated are written as \(\varvec{\alpha }\). To estimate \(\varvec{\alpha }\), it is assumed that the complete data are \((\varvec{y}_i,z_i)\) (\(i=1,\ldots ,n\)) and that

where \(N_p({\varvec{\mu }},z_i^{-1}\varSigma )\) is a normal distribution with mean \({\varvec{\mu }}\) and variance \(v^{-1}\varSigma \), and \(\chi _v^2\) is a chi-squared distribution with v degrees of freedom. The complete-data log-likelihood function is

The (A)IFS method is derived as follows. The first derivative of \(\ell _\mathrm{obs}({\varvec{\alpha }}^{(t)})\) is

where \(h_i^{(t)}=(\varvec{y}_i-{\varvec{\mu }}^{(t)})^{\prime } \left( \varSigma ^{(t)}\right) ^{-1}(\varvec{y}_i-{\varvec{\mu }}^{(t)})\). To derive \(\nabla \ell _\mathrm{obs}({\varvec{\alpha }}^{(t)})\), \(\nabla ^{10}Q({\varvec{\alpha }}^{(t)}|{\varvec{\alpha }}^{(t)})\) is used, since it is easier to calculate. The inverse matrix of the expected second derivative is

Thus, the iteration equation of the IFS method is given as

where \(q_1^{(t)}\) and \(q_2^{(t)}\) indicate the different steplengths.

The IFS method with

becomes the iteration of the EM algorithm. These steplengths can be obtained in the same method as in the mixture of Poisson distributions. Let \(\varvec{d}_1\) be the direction for \({\varvec{\mu }}\). The equation that produces the exact optimum steplength is

This is difficult to solve, thus the following is solved instead:

where \(h_i^{(t)}\) is fixed at the current estimate, although it is actually a function of \(\varvec{\alpha }^{(t)}\). This leads to \(q_1^{(t)}\) of Eq. (8). In a similar way for \(q_2^{(t)}\), note \(q_2^{(t)}=1\). The fixation is justified when the direction vector according to the IFS method is sufficiently close to zero, that is, when \({\varvec{\alpha }}^{(t)}\) is near the convergent point. Toward the end, the directions by the EM algorithm and the IFS method are almost identical.

The IFS method with

becomes the iteration of the parameter-expansion EM algorithm (PX-EM algorithm; Liu et al. 1998). This was developed to accelerate the convergence of the EM algorithm. The IFS method with the common steplength (i.e., the PX-EM algorithm) is expected to be faster than that with different steplengths (i.e., the EM algorithm). The problem is how this steplength can be improved.

Here, these four methods are compared: the AIFS method, the IFS method with the initial value \(q^{(t)}=1.2\), the PX-EM algorithm, and the EM algorithm. These are referred to as AIFS, IFS, PXEM, and EM, respectively. To compare these methods, data of size \(n=100\) are randomly generated from the multivariate t-distribution with the location parameter \({\varvec{\mu }}=(1,2,3)^{\prime }\) and the covariance matrix

with six degrees of freedom. For all four computation methods, the initial values are the moment estimates, and the convergence criterion is \(\Vert \nabla \ell _\mathrm{obs}({\varvec{\alpha }}^{(t)})\Vert <10^{-4}\).

Table 2 shows the process of convergence across the four methods in terms of \(\varDelta ({\varvec{\alpha }}^{(t)})\) and the steplength chosen to improve the current value at each iteration. Empty cells, “–”, occur for the same reason as per the foregoing normal distribution example. All methods attain the same maximum value of the log-likelihood function. All values are rounded to four decimal places; thus, again, 0.0000 for \(\varDelta ({\varvec{\alpha }}^{(t)})\) denotes a rounded zero. The number of iterations until convergence differ across the methods as follows: 10, 15, 11, and 19 for AIFS, IFS, PXEM, and EM, respectively. Neither AIFS or IFS required any steplength adjustments. AIFS and PXEM require almost the same number of iterations until convergence. From the first to the fourth iteration, AIFS is closer to the convergent point than IFS and EM, but farther than PXEM. After the fifth iteration, AIFS begins to get closer to the convergent point than PXEM. This indicates that AIFS improves itself more slowly than PXEM in the initial iterations, but more quickly in subsequent iterations. In this example, it is confirmed that AIFS converges almost equivalently to PXEM, that they are faster than IFS, and that IFS is faster than EM, even for the whole sequence. Although in the application of PXEM an extended model is needed that contains the original model as a special case, AIFS can be applied to cases where such an extended model cannot be found. In those cases, AIFS can substitute for PXEM.

5.4 Dirichlet distribution

The (A)IFS method is applied for the parameter estimation of a Dirichlet distribution. The Dirichlet distribution has no explicit form of the iteration for the EM algorithm since it involves the gamma function and, in practical cases, its derivative. The EM algorithm must resort to the Newton–Raphson type iterations, losing its simplicity. On the other hand, the (A)IFS method could compute the parameter without resorting to such a computational method.

Let \(\varvec{y}=(y_1,\ldots ,y_p)^{\prime }\) be a data vector taken independently from the Dirichlet distribution with density function,

where \((\alpha _1,\ldots ,\alpha _p)\) is the parameter. The Dirichlet random vector is derived by transforming the independent random variables from gamma distributions. Assume that \(x_1,\ldots ,x_p\) are independently positive random variables and each \(x_j\) is distributed as a gamma distribution with parameter \(\alpha _j\) (\(j=1,\ldots ,p\)). By setting

vector \((y_1,\ldots ,y_p)\) is distributed as Eq. (9) (Mosimann 1962). \((y_1,\ldots ,y_p)\) can be regarded as incomplete data and \((x_1,\ldots ,x_p)\) as complete data for the estimation of parameters \((\alpha _1,\ldots ,\alpha _p)\).

Assume that we have data of sample size n taken independently from the Dirichlet distribution, \(\varvec{y}_{1},\ldots ,\varvec{y}_{n}\) with \(\varvec{y}_i=(y_{i1},\ldots ,y_{ip})\). The observed-data log-likelihood function is

where \(\varvec{\alpha }=(\alpha _1,\ldots ,\alpha _p)^{\prime }\). The complete-data log-likelihood function is, ignoring an irrelevant term,

As mentioned earlier, applying the EM algorithm would produce an inexplicit form of the iteration. In fact, the EM algorithm leads to

This equation cannot be explicitly solved with respect to \(\varvec{\alpha }\) since the derivation of \(\varGamma (\alpha _j)\) is involved. To derive the M-step, we need to resort to the Newton–Raphson type iterations. In this case, use of the EM algorithm would not bring benefits such as simplicity.

On the other hand, the (A)IFS method does not solve the first derivative of the Q-function. To apply the (A)IFS method, we need a direction vector, \(\varvec{d}=n^{-1}J_\mathrm{com}(\varvec{\alpha })^{-1}\nabla \ell _\mathrm{obs}(\varvec{\alpha })\). First, we compute the Fisher information matrix for a single datum.

where \(\varPsi _1(\alpha _j)\) is the first derivative of a digamma function \(\varPsi (\alpha _j)\) with respect to \(\alpha _j\). Next, we compute the first derivative of the observed-data log-likelihood function.

As a result, the direction vector has its jth element as

In this case, the iteration becomes identical to that of the EM gradient algorithm (Lange 1995a) except for steplength.

The AIFS method is compared with the IFS method for the data from Mosimann (1962). The data represent the proportions of the diet ingredients for ducklings. The data have its size \(n=23\), and \(p=3\) variables. The same data are used in the paper about the EM gradient algorithm by Lange (1995a). The EM gradient algorithm has the same form of the iteration as that of the IFS method shown in Eq. (1). Both the EM gradient algorithm and the IFS method have an arbitrary steplength. I use the steplength of two, because Lange (1995a) suggests the steplength of two approximately halves the number of iterations until convergence for the EM gradient algorithm. Hereafter, the AIFS method, and the IFS method with the steplength of two are referred to as AIFS, and IFS, respectively.

Starting from the initial value (1.0., 1.0, 1.0), AIFS and IFS converge to the same value of the observed-data log-likelihood function 73.1250 with the same estimates (3.22, 20.38, 21.69), which is set to be \(\varvec{\alpha }^{*}\) for later use. The convergence criterion is \(\Vert \nabla \ell _\mathrm{obs}({\varvec{\alpha }}^{(t)})\Vert <10^{-4}\) as before. As a result, the numbers of iterations until convergence are 193 when AIFS is applied, and 735 when IFS is applied. The process of convergence to the 50th iteration is shown in Fig. 2. The horizontal axis indicates the iteration number to the 50th iteration and the vertical axis is \(\varDelta ({\varvec{\alpha }}^{(t)})=|\ell _\mathrm{obs}({\varvec{\alpha }}^{(t)})-\ell _\mathrm{obs}(\varvec{\alpha }^{*})|\). Notice that both methods do not converge yet in the graph. During initial iterations, IFS is closer to the convergent point than AIFS, and after those initial iterations, AIFS becomes closer. This example illustrates that AIFS and IFS work for models for which the EM algorithm could not be used without resorting to numerical methods, and, on the other hand, that AIFS and IFS treat such models without counting on the other computational methods. It also shows, with the data of diet ingredients for ducklings, that IFS, which is equivalent to the EM gradient algorithm, can be drastically improved to AIFS which appropriately adjusts the steplength at each iteration.

5.5 Computing time

In the previous four subsections, from the perspective of the number of iterations until convergence, the (A)IFS was compared with the EM algorithm and its variants. The theorems given above also mainly concern the number of iterations. Another interesting perspective is the computing time. In this subsection, we make the comparison among the AIFS method, the IFS method, the PX-EM algorithm, and the EM algorithm in terms of the computing time using a simulation technique.

We conduct four simulations corresponding to each example above. In each simulation, the same set of data as each example is used with the same initial values to make the same comparison. We make the parameter estimation 1000 times and measure the computing time with the unit of millisecond, obtaining the mean and standard deviation of the computing times. Note that although the multivariate t-distribution uses a randomly generated set of data, the simulation uses a fixed set of data as in Sect. 5.3.

At each iteration, the (A)IFS method takes more computation than the EM algorithm does. This is because the (A)IFS uses steplength adjustment, that is, the (A)IFS gives an initial steplength and adjusts it if necessary. Thus, if the numbers of iterations until convergence do not vary between the (A)IFS method and the EM algorithm for the same data, the (A)IFS method should take a longer computing time than the EM algorithm. However, this is not the case: the number of iterations until convergence varies between the (A)IFS method and the EM algorithm. As all the examples show, the (A)IFS method takes a smaller number of iterations until convergence. Although the computational amount due to use of the (A)IFS method increases at each iteration, the total computing time should be shorter under the condition that the number of iterations until convergence becomes small enough. Thus, the moderate decrease in the number of iterations due to use of the (A)IFS method would offset the increase in the computational amount due to use of the (A)IFS method, resulting in no change in the total computing time. The large decrease in the number of iterations would shorten the total computing time.

Table 3 shows the result of the simulation. In the table, AIFS, and IFS denote the AIFS method, the IFS with the initial steplength of 1.5 for the normal distribution example and 2 for the Poisson mixture distribution example and the Dirichlet distribution example, and 1.2 for the multivariate t-distribution example. PXEM, and EM denote the parameter-expansion EM algorithm, and the EM algorithm, respectively. The mean of computing times is given with its standard deviation in parentheses. All values are rounded to four decimal places.

In the normal distribution example, the number of iterations decrease from 24 of EM to 17 of IFS, and to 12 of AIFS. The decrease in the number of iterations is not large enough to offset the increase in the computation amount due to use of IFS and AIFS. The computing time order may almost reflect the computational amount order. AIFS needs more computational amount than IFS to compute the initial steplength and adjust it, and IFS needs more than EM to adjust the initial steplength of 1.5.

In the rest of the simulation result, AIFS always takes the shortest computing time. This reflects that the number of iterations due to use of AIFS is small enough, and the computational amount due to AIFS increases a little. AIFS uses a steplength of a theoretically good initial value, and the selected steplength needs almost no adjustment. IFS outperforms EM in the multivariate t-distribution example, and not in the normal distribution example and the Poisson mixture distribution example. The reason might be that IFS uses a steplength of a guessed initial value, and the steplength needs its appropriate adjustment if any.

The result as a whole indicates that the AIFS method works better in terms of computing time as well as the number of iterations. Taking into consideration that the AIFS method can compute the standard error of the estimates without additional programs, the AIFS method is all the more favorable than the IFS method, the EM algorithm, and its variants.

A practical problem in deciding whether to use the AIFS method is that it is unknown before executing the actual computation which of the AIFS method, the IFS method, or the EM algorithm takes the shortest computing time. However, it is beyond the scope of this paper and it will be dealt with in a future paper.

6 Conclusion

In this paper, the IFS method and its accelerated variant, the AIFS method, are proposed. These can be seen as Fisher scoring methods modified for incomplete data,and also as scaled versions of the steepest ascent method (Bertsekas 2004). The noteworthy feature of the IFS method is that it becomes nearly or exactly identical to the EM algorithm, but, at the same time, can be accelerated through steplength adjustment. With the first derivative of \(\ell _\mathrm{obs}(\varvec{\alpha })\) for the (A)IFS method, the second derivative is obtained by numerical differentiation, which produces the standard error of the MLE.

It is possible to further modify this proposed method. Observing the process of convergence in the examples provided herein, focusing on the normal distribution and the multivariate t-distribution, and switching from the EM algorithm at initial stages to the IFS or AIFS methods at later stages is expected to result in less iterations being required before convergence. This is a kind of “hybrid EM algorithm” (McLachlan and Krishnan 2008). The EM algorithm, at initial stages, can be replaced with the IFS with a fixed \(s^{(t)}q^{(t)}=1\) because it is exactly or nearly identical to the EM algorithm. The advantage of this hybrid method is its smooth transition, achieved by steplength changes. Using the IFS iterations, this transition can be applied to the Dirichlet distribution example as well. As shown in Fig. 2, switching from the IFS with a certain steplength to the AIFS is expected to result in faster convergence. However, observing the convergence process in the Poisson mixture example in Fig. 1, switching might not be effective for reducing the number of iterations in some cases.

Another modification is the memory-saved IFS method, which is expected to be generally effective if properly employed. Computing the inverse matrix of \(J_\mathrm{com}(\varvec{\alpha })\) at every iteration could be computationally onerous, and might cause a longer computing time. To avoid the onerous and long computation, after some iterations, \(J_\mathrm{com}(\varvec{\alpha })\) is fixed at its newest value. This is called the “Whittaker’s method” for the Newton–Raphson algorithm (Small and Wang 2003). However, it is unknown beforehand whether to use this method or not and, if the researcher does opt to use this method, when to switch to the AIFS method and when to fix \(J_\mathrm{com}(\varvec{\alpha })^{-1}\) is unclear. These are problems to be explored in the future.

This paper is just a starting point for the development of the (A)IFS. This paper focuses on the theoretical aspects of the (A)IFS, namely the convergence theorem (Theorem 1) and the convergence rate (Theorem 2). Beyond the theoretical results, users are likely to be more interested in knowing how much faster the (A)IFS is compared to the EM algorithm in empirical contexts. These practical aspects also need further exploration in the future.

References

Bentler, P.M., Tanaka, J.S.: Problems with EM algorithms for ML factor analysis. Psychometrika 48(2), 247–251 (1983)

Bertsekas, D.P.: Nonlinear Programming, 2nd edn. Athena Scientific, MA (2004)

Böhning, D., Lindsay, B.G.: Monotonicity of quadratic-approximation algorithms. Ann. I. Stat. Math. 40(4), 641–663 (1988). https://doi.org/10.1007/BF00049423

Dempster, A.P., Laird, N.M., Rubin, D.B.: Maximum likelihood from incomplete data via the EM algorithm. J. R. Stat. Soc. Ser. B (Stat. Methodol.) 39(1), 1–38 (1977)

Elashoff, M.E., Ryan, L.: An EM algorithm for estimating equations. J. Comput. Graph. Stat. 13(1), 48–65 (2004)

Fisher, R.A.: Theory of statistical estimation. Proc. Camb. Philos. Soc. 22, 700–725 (1925)

Hasselblad, V.: Estimation of finite mixtures of distributions from the exponential family. J. Am. Stat. Assoc. 64(238), 1459–1471 (1969)

Jamishidian, M., Jennrich, R.I.: Conjugate gradient acceleration of the EM algorithm. J. Am. Stat. Assoc. 88(421), 221–228 (1993)

Kenward, M.G., Molenberghs, G.: Likelihood based frequentist inference when data are missing at random. Stat. Sci. 13(3), 236–247 (1998)

Lange, K.: A gradient algorithm locally equivalent to the EM algorithm. J. R. Stat. Soc. Ser. B (Stat. Methodol.) 57(2), 425–437 (1995a)

Lange, K.: A quasi-Newton acceleration of the EM algorithm. Stat. Sin. 5(1), 1–18 (1995b)

Little, R.J.A., Rubin, D.B.: Statistical Analysis with Missing Data, 2nd edn. Wiley, Hoboken (2002)

Liu, C., Rubin, D.R., Wu, Y.N.: Parameter expansion to accelerate EM: the PX-EM algorithm. Biometrika 85(4), 755–770 (1998)

Louis, A.: Finding the observed information matrix when using the EM algorithm. J. R. Stat. Soc. Ser. B (Stat. Methodol.) 44(2), 226–233 (1982)

McLachlan, G.: On Aitken’s method and other approaches for accelerating convergence of the EM algorithm. In: Proceedings of the A.C. Aitken Centenary Conference. University of Otago Press, pp. 201–209 (1995)

McLachlan, G., Krishnan, T.: The EM Algorithm and Extensions, 2nd edn. Wiley, Hoboken (2008)

McLachlan, G., Peel, D.: Finite Mixture Models. Wiley, Hoboken (2000)

Meilijson, I.: A fast improvement to the EM algorithm on its own terms. J. R. Stat. Soc. Ser. B (Stat. Methodol.) 51(1), 127–138 (1989)

Meng, X.L., Rubin, D.B.: Using EM to obtain asymptotic matrices : the SEM algorithm. J. Am. Stat. Assoc. 86(416), 899–909 (1991)

Minka, T.P.: Expectation-maximization as lower bound maximization. http://www.cse.psu.edu/~rtc12/CSE598G/papers/minka98expectationmaximization.pdf (1998). Accessed 12 Jan 2020

Mosimann, J.E.: On the compound multinomial distribution, the multivariate \(\beta \)-distribution, and correlations among proportions. Biometrika 49(1 and 2), 65–82 (1962)

Nocedal, J., Wright, S.J.: Numerical Optimization. Springer, Berlin (2006)

Osborne, R.: Fisher’s method of Scoring. Int. Stat. Rev. 60(1), 99–117 (1992)

Rai, S.N., Matthews, D.E.: Improving the EM algorithm. Biometrics 49(2), 587–591 (1993)

Rubin, D.B., Thayer, D.T.: EM algorithm for ML factor analysis. Psychometrika 47(1), 69–76 (1982)

Salakhutdinov, R., Roweis, S., Ghahramani, Z.: Relationship between gradient and EM steps in latent variable models. http://www.utstat.toronto.edu/~rsalakhu/papers/report.pdf (2002). Accessed 12 Jan 2020

Savalei, V.: Expected versus observed information in SEM with incomplete normal and nonnormal data. Psychol. Methods 15(4), 352–367 (2010)

Seaman, S., Galati, J., Jackson, D., Carlin, J.: What is meant by “Missing at Random”? Stat. Sci. 28(2), 257–268 (2013)

Small, C.G., Wang, J.: Numerical Methods for Nonlinear Estimating Equations, Oxford Statistical Science Series, vol. 29. Oxford University Press, Oxford (2003)

Titterington, D.M.: Recursive parameter estimation using incomplete data. J. R. Stat. Soc. Ser. B (Stat. Methodol.) 46(2), 257–267 (1984)

Varadhan, R., Roland, C.: Simple and globally convergent methods for accelerating the convergence of any EM algorithm. Scand. J. Stat. 35, 335–353 (2008)

Xu, L., Jordan, M.I.: On convergence properties of the EM algorithm for Gaussian mixtures. Neural Comput. 8(1), 129–151 (1995)

Author information

Authors and Affiliations

Corresponding author

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

This work was supported by JSPS KAKENHI Grant Number JP18K11205.

Appendix

Appendix

Equivalence between equations (3) and (4) Define \(S=(0, 1)^{\prime }\). Equation (2) can be written as

Taking the derivative with respect to \(vec(\varSigma )\) leads to

Using the relationship that \(vec(ABC)=(C^{\prime }\otimes A) vec(B)\) for matrices A, B, and C of appropriate sizes, we have

Apparently, this is equivalent to the right-hand side in Eq. (3).

Proof of\(E[\nabla ^2\ell _\mathrm{obs}({\varvec{\alpha }}^{(t)})]\ge -nJ_\mathrm{com}({\varvec{\alpha }}^{(t)})\) We suppress the iteration number of \({\varvec{\alpha }}^{(t)}\) for convenience. The complete-data log-likelihood function is decomposed into

where \(\ell _\mathrm{miss|obs}(\varvec{\alpha })\) denotes the distribution of missing data conditional on the observed data. Taking the second derivative and the expectation of both sides gives,

where E is the expectation with respect to the joint distribution of random variables for the data and the corresponding missing-data indicators (Kenward and Molenberghs 1998; Seaman et al. 2013). The second term on the right side is

where \(E_\mathrm{miss|obs}\) indicates the expectation of the missing-data variables conditional on the observed-data variables and \(E_\mathrm{obs}\) is the expectation of the observed-data variables. Since \(-E_\mathrm{miss|obs}\left[ \nabla ^2\ell _\mathrm{miss|obs}(\varvec{\alpha })\right] \) is the Fisher information matrix, \(E[\nabla ^2\ell _\mathrm{miss|obs}(\varvec{\alpha })]\le O\) in the sense that \(\varvec{x}^{\prime } E[\nabla ^2\ell _\mathrm{miss|obs}(\varvec{\alpha })]\varvec{x}\le 0\) for any value of vector \(\varvec{x}\). Therefore, with Eq. (10), \(E[\nabla ^2\ell _\mathrm{com}(\varvec{\alpha })]\le E[\nabla ^2\ell _\mathrm{obs}(\varvec{\alpha })]\), that is, \(-nJ_\mathrm{com}({\varvec{\alpha }}^{(t)})\le E[\nabla ^2\ell _\mathrm{obs}({\varvec{\alpha }}^{(t)})]\).

Proof of Corollary 1

By the concavity, \(\ell _\mathrm{obs}(\varvec{\alpha })-\ell _\mathrm{obs}(\varvec{\alpha }^{*})\le \nabla \ell _\mathrm{obs}(\varvec{\alpha }^{*})^{\prime }(\varvec{\alpha }-\varvec{\alpha }^{*})\) (See Proposition B.3 of Bertsekas 2004). Since \(\nabla \ell _\mathrm{obs}(\varvec{\alpha }^{*})={\varvec{0}}\), the statement holds. The relation \(\ell _\mathrm{obs}(\varvec{\alpha })<\ell _\mathrm{obs}(\varvec{\alpha }^{*})\) directly follows from strict concavity. \(\square \)

Lemma 1

Under the same setting as Theorem 2,

-

(i)

For IFS, there exists a sufficiently large \(t_1\) such that \(s^{(t)}q^{(t)}\ge 1\) (\(t\ge t_1\)).

-

(ii)

For AIFS, there exists a sufficiently large \(t_2\) such that \(s^{(t)}= 1\) (\(t\ge t_2\)).

Proof

(i) To simplify notation, iteration numbers are again suppressed in the proof. To prove the lemma, it suffices to show that \(s^{(t)}q^{(t)}=1\) is accepted by the Armijo Rule.

Define \(\varvec{y}=I_\mathrm{obs}(\varvec{\alpha })^{\frac{1}{2}}\varvec{d}\). Since \(J_\mathrm{com}(\varvec{\alpha })\) and \(I_\mathrm{obs}(\varvec{\alpha })\) are continuous with respect to \(\varvec{\alpha }\),

Thus, there exists a sufficiently large \(t_1\) such that

for \(\forall t\ge t_1\).

(ii) As \(\Vert \varvec{d}\Vert \rightarrow 0\), \(q^{(t)}\rightarrow q_0\). Thus, it suffices to show \( \ell _\mathrm{obs}(\varvec{\alpha }+q_0\varvec{d}) -\ell _\mathrm{obs}(\varvec{\alpha })> \sigma q_0\varvec{d}^{\prime }\nabla \ell _\mathrm{obs}(\varvec{\alpha })\) when t is sufficiently large. In the following, iteration numbers are again suppressed. In the same way as (i),

since \(\sigma \in (0,.5)\). Because \(\Vert \varvec{d}\Vert \rightarrow 0\) as \(t\rightarrow 0\), the statement follows.

Proof of Theorem 2

Define \(\ell _t=\ell _\mathrm{obs}({\varvec{\alpha }}^{(t)})\) and \(I_\mathrm{obs}(\varvec{\alpha }^{*})=I_o\) for convenience. Applying the Taylor expansion produces

Since the direction produced by the IFS method is given as \(\varvec{d}^{(t)}=n^{-1}J_\mathrm{com}({\varvec{\alpha }}^{(t)})^{-1}\nabla \ell _t\),

Dividing both sides by \(\Vert {\varvec{\alpha }}^{(t)}-\varvec{\alpha }^{*}\Vert \), for sufficiently large t,

where \(\varvec{g}^{(t)}=\varvec{d}^{(t)}/\Vert {\varvec{\alpha }}^{(t)}-\varvec{\alpha }^{*}\Vert \), \(J_c=J_\mathrm{com}(\varvec{\alpha }^{*})\), and \(\varvec{e}^{(t)}=({\varvec{\alpha }}^{(t)}-\varvec{\alpha }^{*})/\Vert {\varvec{\alpha }}^{(t)}-\varvec{\alpha }^{*}\Vert \). Since \(\varvec{e}^{(t)}\) is a unit vector and \(\Vert J_c^{-1}I_o\Vert \) is nonzero and lower- and upper-bounded, \(\varvec{g}^{(t)}\) on the left-side term is also nonzero and bounded.

Since \(\nabla \ell _\mathrm{obs}(\varvec{\alpha }^{*})=0\), we have

In a similar way, we have

(i) By Lemma 1, there exists a sufficiently large \(t_1\) such that \((s^{(t)}q^{(t)})\ge 1\) for \(\forall t\ge t_1\). Thus, again maintaining simple notation, let \(k=s^{(t)}q^{(t)}\),

There exists an orthogonal matrix P satisfying \(P^{\prime }(I_o^{\frac{1}{2}}J_c^{-1} I_o^{\frac{1}{2}})P=\mathrm{diag}(\lambda _1,\lambda _2,\cdots ,\lambda _r)\), where \(\lambda _j\) is the eigenvalues of \(J_c^{-1}I_o\) (\(j=1,\ldots ,r\)). Letting \(\varvec{x}=P\varvec{y}=(x_1,\ldots ,x_p)^{\prime }\), we have

where we have used the inequality, \(k^2\lambda _j^2-2k\lambda _j\le k^{2}\lambda _r^2-2k\lambda _r\) for \(\forall j\in \left\{ 1,\ldots ,r\right\} \). We have the desired result.

(ii) By Lemma 1, there exists a sufficiently large \(t_2\) such that \(s^{(t)}=1\). Thus,

for \(\forall t\ge t_2\). Setting \(J_c^{\frac{1}{2}}\varvec{g}^{(t)}=\varvec{z}\), Eq. (11) becomes

by Kantrovich’s inequality (Lemma 3.1 in Bertsekas 2004). Therefore, we have the desired result. \(\square \)

Proof of Corollary 2

(i) Setting \(\varDelta ({\varvec{\alpha }}^{(t)})=.5({\varvec{\alpha }}^{(t)}-\varvec{\alpha }^{*})^{\prime }I_o({\varvec{\alpha }}^{(t)}-\varvec{\alpha }^{*})=.5 \Vert {\varvec{\alpha }}^{(t)}-\varvec{\alpha }^{*}\Vert _{I_o}^2\) in Theorem 2, we have the desired result.

(ii) Proved similarly to (i). \(\square \)

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Takai, K. Incomplete-data Fisher scoring method with steplength adjustment. Stat Comput 30, 871–886 (2020). https://doi.org/10.1007/s11222-020-09923-z

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s11222-020-09923-z