Abstract

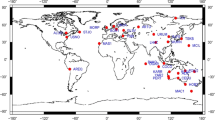

Knowledge of the long-range dependence (LRD) parameter is critical to studies of self-similar behavior. However, statistical estimation of the LRD parameter becomes difficult when the observed data are masked by short-range dependence and other noise, or are gappy in nature (i.e., some values are missing in an otherwise regular sampling). Currently, there is a lack of theory for spectral- and wavelet-based estimators of the LRD parameter for gappy data. To address this, we estimate the LRD parameter for gappy Gaussian semiparametric time series based upon undecimated wavelet variances. We develop estimation methods using novel estimators of the wavelet variances and provide asymptotic theory for the joint distribution of the wavelet variances and our estimator of the LRD parameter. We introduce sandwich estimators to compute standard errors for our estimates. We demonstrate the efficacy of our methods using Monte Carlo simulations and provide guidance on practical issues such as how to select the range of wavelet scales. We illustrate the methodology with two applications: one to gappy Arctic sea-ice draft data and another to gap-free and gappy daily average temperature data collected at 17 locations in south central Sweden.
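As a rough illustration of the kind of gappy wavelet variance estimator described above, the Python sketch below computes a level-j estimate from a series with missing values. It is a hypothetical sketch, not the authors' code: it assumes the Haar MODWT wavelet filter and a form in the spirit of Mondal and Percival (2010), averaging \(-\,1/2 \sum_l \sum_{l'} h_{j,l} h_{j,l'} (X_{t-l}-X_{t-l'})^2 \eta_{t-l}\eta_{t-l'}/\hat{\pi}_{l-l'}\) over the usable times, where \(\hat{\pi}_\tau\) is the observed fraction of lag-\(\tau\) pairs with both values present. The function names are illustrative only.

```python
import numpy as np

def haar_modwt_filter(j):
    """Level-j Haar MODWT wavelet filter h_{j,l}, l = 0..2**j - 1 (sums to zero)."""
    half = 2 ** (j - 1)
    return np.concatenate([np.full(half, 2.0 ** -j), np.full(half, -(2.0 ** -j))])

def gappy_wavelet_variance(x, eta, j):
    """Hypothetical sketch of a gappy level-j wavelet variance estimate.

    x   : series; entries where eta == 0 are ignored (they may be NaN)
    eta : 0/1 array, 1 where x is observed
    """
    eta = np.asarray(eta, dtype=float)
    x = np.where(eta == 1, x, 0.0)                 # gap values never enter the sum
    h = haar_modwt_filter(j)
    L, n = len(h), len(x)
    lags = np.arange(L)
    # pi_hat[tau]: fraction of pairs (t, t + tau) in which both values are observed
    pi_hat = np.array([np.mean(eta[: n - tau] * eta[tau:]) for tau in range(L)])
    hh = np.outer(h, h) / pi_hat[np.abs(lags[:, None] - lags[None, :])]
    total = 0.0
    for t in range(L - 1, n):                      # M_j = n - L + 1 usable times
        xs, es = x[t - lags], eta[t - lags]        # X_{t-l} and eta_{t-l}
        diff2 = (xs[:, None] - xs[None, :]) ** 2
        total += -0.5 * np.sum(hh * np.outer(es, es) * diff2)
    return total / (n - L + 1)

rng = np.random.default_rng(0)
n = 4000
x = rng.normal(size=n)                             # unit white noise: nu_j^2 = 2**-j
eta = (rng.uniform(size=n) > 0.1).astype(int)      # roughly 10% missing at random
est = gappy_wavelet_variance(np.where(eta == 1, x, np.nan), eta, 2)
print(f"level-2 estimate {est:.3f} (target 0.25)")
```

With no gaps (\(\eta_t \equiv 1\)) the sketch reduces to the usual average of squared MODWT wavelet coefficients, because the wavelet filter sums to zero.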

Notes

The dataset we use in this article can be downloaded from http://staff.washington.edu/dbp/DATA/draft-profile.txt.

References

Abry, P., Veitch, D.: Wavelet analysis of long-range-dependent traffic. IEEE Trans. Inf. Theory 44, 2–15 (1998)

Akaike, H.: Maximum likelihood identification of Gaussian autoregressive moving average models. Biometrika 60, 255–265 (1973)

Bardet, J., Lang, G., Moulines, E., Soulier, P.: Wavelet estimator of long-range dependent processes. Stat. Inference Stoch. Process. 3, 85–99 (2000)

Beran, J.: Statistics for Long Memory Processes. Chapman and Hall, New York, NY (1994)

Bloomfield, P.: Spectral analysis with randomly missing observations. J. R. Stat. Soc. Ser. B 32, 369–380 (1970)

Brillinger, D.R.: Time Series: Data Analysis and Theory. Holden-Day Inc, Toronto (1981)

Craigmile, P.F.: Simulating a class of stationary Gaussian processes using the Davies–Harte algorithm, with application to long memory processes. J. Time Ser. Anal. 24, 505–511 (2003)

Craigmile, P.F., Guttorp, P.: Space-time modeling of trends in temperature series. J. Time Ser. Anal. 32, 378–395 (2011)

Craigmile, P.F., Percival, D.B.: Asymptotic decorrelation of between-scale wavelet coefficients. IEEE Trans. Inf. Theory 51, 1039–1048 (2005)

Craigmile, P.F., Guttorp, P., Percival, D.B.: Trend assessment in a long memory dependence model using the discrete wavelet transform. Environmetrics 15, 313–335 (2004)

Craigmile, P.F., Guttorp, P., Percival, D.B.: Wavelet based estimation for polynomial contaminated fractionally differenced processes. IEEE Trans. Signal Process. 53, 3151–3161 (2005)

Dahlhaus, R.: Nonparametric spectral analysis with missing observations. Sankhyā Indian J. Stat. Ser. A 49, 347–367 (1987)

Daubechies, I.: Ten lectures on wavelets. In: CBMS-NSF Series in Applied Mathematics, SIAM, Philadelphia, PA (1992)

Davies, R.B., Harte, D.S.: Tests for Hurst effect. Biometrika 74, 95–101 (1987)

Dunsmuir, W., Robinson, P.M.: Asymptotic theory for time series containing missing and amplitude modulated observations. Sankhyā Indian J. Stat. Ser. A 43, 260–281 (1981)

Fan, J., Li, R.: Variable selection via nonconcave penalized likelihood and its oracle properties. J. Am. Stat. Assoc. 96, 1348–1360 (2001)

Fay, G., Moulines, E., Roueff, F., Taqqu, M.S.: Estimators of long-memory: Fourier versus wavelets. J. Econom. 151, 159–177 (2009)

Geweke, J., Porter-Hudak, S.: The estimation and application of long memory time series models. J. Time Ser. Anal. 4, 221–238 (1983)

Granger, C.W.J., Joyeux, R.: An introduction to long-memory time series models and fractional differencing. J. Time Ser. Anal. 1, 15–29 (1980)

Green, P.J.: Reversible jump Markov chain Monte Carlo computation and Bayesian model determination. Biometrika 82, 711–732 (1995)

Heidelberger, P., Welch, P.D.: A spectral method for confidence interval generation and run length control in simulations. Commun. ACM 24, 233–245 (1981)

Hosking, J.R.M.: Fractional differencing. Biometrika 68, 165–176 (1981)

Hurst, H.E.: The problem of long-term storage in reservoirs. Trans. Am. Soc. Civil Eng. 116, 776–808 (1951)

Hurvich, C., Lang, G., Soulier, P.: Estimation of long memory in the presence of a smooth nonparametric trend. J. Am. Stat. Assoc. 100, 853–871 (2005)

Hurvich, C.M., Chen, W.W.: An efficient taper for potentially overdifferenced long-memory time series. J. Time Ser. Anal. 21, 155–180 (2000)

Hurvich, C.M., Ray, B.K.: The local Whittle estimator of long-memory stochastic volatility. J. Financ. Econom. 1, 445–470 (2003)

Hurvich, C.M., Tsai, C.L.: Regression and time series model selection in small samples. Biometrika 76, 297–307 (1989)

Jensen, M.: Using wavelets to obtain a consistent ordinary least squares estimator of the long-memory parameter. J. Forecast. 18, 17–32 (1999)

Jones, R.H.: Spectrum estimation with missing observations. Ann. Inst. Stat. Math. 23, 387–398 (1971)

Knight, M.I., Nason, G.P., Nunes, M.A.: A wavelet lifting approach to long-memory estimation. Stat. Comput. 27, 1453–1471 (2016)

Ko, K., Vannucci, M.: Bayesian wavelet analysis of autoregressive fractionally integrated moving-average processes. J. Stat. Plann. Inference 136, 3415–3434 (2006)

Kouamo, O., Lévy-Leduc, C., Moulines, E.: Central limit theorem for the robust log-regression wavelet estimation of the memory parameter in the Gaussian semi-parametric context. Bernoulli 19, 172–204 (2013)

Künsch, H.: Discrimination between monotonic trends and long-range dependence. J. Appl. Probab. 23, 1025–1030 (1986)

Künsch, H.: Statistical aspects of self-similar processes. In: Proceedings of the First World Congress of the Bernoulli Society, VNU Science Press, Utrecht, Netherlands, vol. 1, pp. 67–74 (1987)

Mandelbrot, B.B., van Ness, J.W.: Fractional Brownian motions, fractional noises and applications. SIAM Rev. 10, 422–436 (1968)

McCoy, E.J., Walden, A.T.: Wavelet analysis and synthesis of stationary long-memory processes. J. Comput. Gr. Stat. 5, 26–56 (1996)

Mondal, D., Percival, D.: Wavelet variance analysis for gappy time series. Ann. Inst. Stat. Math. 62, 943–966 (2010)

Moulines, E., Soulier, P.: Semiparametric spectral estimation for fractional processes. In: Doukhan, P., Oppenheim, G., Taqqu, M.S. (eds.) Theory and Applications of Long-range Dependence, pp. 251–301. Birkhäuser, Boston, MA (2003)

Moulines, E., Roueff, F., Taqqu, M.S.: Central limit theorem for the log-regression wavelet estimation of the memory parameter in the Gaussian semi-parametric context. Fractals 15, 301–313 (2007a)

Moulines, E., Roueff, F., Taqqu, M.S.: On the spectral density of the wavelet coefficients of long-memory time series with application to the log-regression estimation of the memory parameter. J. Time Ser. Anal. 28, 155–187 (2007b)

Moulines, E., Roueff, F., Taqqu, M.S.: A wavelet Whittle estimator of the memory parameter of a nonstationary Gaussian time series. Ann. Stat. 36, 1925–1956 (2008)

Palma, W.: Long-Memory Time Series: Theory and Methods. Wiley-Interscience, Hoboken, NJ (2007)

Parzen, E.: On spectral analysis with missing observations and amplitude modulation. Sankhyā Indian J. Stat. Ser. A 25, 383–392 (1963)

Percival, D.B., Mondal, D.: A wavelet variance primer. In: Rao, T.S., Rao, C.R. (eds.) Time Series, Handbook of Statistics, vol. 30, chap. 22. Elsevier, Chennai, India (2012)

Percival, D.B., Walden, A.T.: Spectral Analysis for Physical Applications: Multitaper and Conventional Univariate Techniques. Cambridge University Press, Cambridge (1993)

Percival, D.B., Walden, A.T.: Wavelet Methods for Time Series Analysis. Cambridge University Press, Cambridge (2000)

Percival, D.B., Rothrock, D.A., Thorndike, A.S., Gneiting, T.: The variance of mean sea-ice thickness: effect of long-range dependence. J. Geophys. Res. 113, 1958–1970 (2008)

R Core Team: R: A Language and Environment for Statistical Computing. R Foundation for Statistical Computing, Vienna (2018). https://www.R-project.org/

Robinson, P.M.: Semiparametric analysis of long-memory time series. Ann. Stat. 22, 515–539 (1994)

Robinson, P.M.: Gaussian semiparametric estimation of long range dependence. Ann. Stat. 23, 1630–1661 (1995a)

Robinson, P.M.: Log-periodogram regression of time series with long range dependence. Ann. Stat. 23, 1048–1072 (1995b)

Roueff, F., von Sachs, R.: Locally stationary long memory estimation. Stoch. Process. Appl. 121, 813–844 (2010)

Roueff, F., Taqqu, M.S.: Asymptotic normality of wavelet estimators of the memory parameter for linear processes. J. Time Ser. Anal. 30, 534–558 (2009)

Sabatini, A.M.: Wavelet-based estimation of 1/f-type signal parameters: confidence intervals using the bootstrap. IEEE Trans. Signal Process. 47, 3406–3409 (1999)

Scheinok, P.A.: Spectral analysis with randomly missed observations: the binomial case. Ann. Math. Stat. 36, 971–977 (1965)

Schwarz, G.: Estimating the dimension of a model. Ann. Stat. 6, 461–464 (1978)

Velasco, C.: Gaussian semiparametric estimation of non-stationary time series. J. Time Ser. Anal. 20, 87–127 (1999)

Velasco, C., Robinson, P.M.: Edgeworth expansions for spectral density estimates and studentized sample mean. Econom. Theory 17, 497–539 (2001)

Walden, A.T.: A unified view of multitaper multivariate spectral estimation. Biometrika 87, 767–788 (2000)

White, H.: A heteroskedasticity-consistent covariance matrix estimator and a direct test for heteroskedasticity. Econometrica 48, 817–838 (1980)

Yaglom, A.M.: Correlation Theory of Stationary and Related Random Functions, vol. I. Springer, New York, NY (1987)

Zurbenko, I.G.: The Spectral Analysis of Time Series. Elsevier Science, Amsterdam (1986)

Acknowledgements

We thank Prof. Noel Cressie and Prof. Mario Peruggia for helpful comments that improved this work.

Additional information

Craigmile is supported in part by the US National Science Foundation (NSF) under Grants NSF-DMS-1407604 and NSF-SES-1424481, and the National Cancer Institute of the National Institutes of Health under Award Number R21CA212308. Mondal is supported by National Science Foundation Grants NSF-DMS-0906300 and NSF-DMS-1519890.

Proofs

We first need the following lemmas.

Lemma 1

Let \(U_{l,l',t} \) and \( V_{l,l',t} \) be stationary processes such that \(U_{k,k',t}\) and \(V_{l,l',t}\) are independent of each other for any choice of k, \(k'\), l and \(l'\), and let

\[ U_{l,l',t} = \int _{-1/2}^{1/2} e^{i2\pi f t}\,\text {d}Z_{U,l,l'}(f), \qquad V_{l,l',t} = \int _{-1/2}^{1/2} e^{i2\pi f t}\,\text {d}Z_{V,l,l'}(f) \]

be their respective spectral representations. For any \(k,k',l\) and \(l'\), let \(S_{k,k',l,l'}\) and \(G_{k,k',l,l'}\) denote the respective cross-spectrum between \(U_{k,k',t}\) and \(U_{l,l',t}\) and between \(V_{k,k',t}\) and \(V_{l,l',t}\). For fixed real numbers \(\{ a_{l,l'} \}\) define

Then, \(Q_t\) is a second-order stationary process whose spectral density function is given by

where \( S*G_{k,k',l,l'}(f) \equiv \int _{-1/2}^{1/2} G_{k,k',l,l'}(f-f') S_{k,k',l,l'}(f')\,\text {d}f'. \)

Proof

The proof is given in the preprint that accompanies Mondal and Percival (2010). \(\square \)
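The convolution \(S*G\) in Lemma 1 has a simple source. In the zero-mean scalar case (one process \(U_t\) with SDF S and an independent process \(V_t\) with SDF G), the product \(U_tV_t\) has autocovariance \(s_U(\tau)s_V(\tau)\), and the Fourier transform of a product of sequences is the convolution of their transforms, so the SDF of \(U_tV_t\) is exactly \(S*G\). The Python sketch below checks this numerically; the two AR(1) processes are an arbitrary illustrative choice and are not taken from the paper.

```python
import numpy as np

def ar1_sdf(f, phi):
    """SDF of a unit-innovation AR(1) process: 1 / |1 - phi e^{-i 2 pi f}|^2."""
    return 1.0 / np.abs(1.0 - phi * np.exp(-2j * np.pi * f)) ** 2

def ar1_acvs(tau, phi):
    """Autocovariance of the same AR(1) process at lag tau."""
    return phi ** np.abs(tau) / (1.0 - phi ** 2)

phi_u, phi_v = 0.5, -0.3                   # illustrative AR(1) coefficients for U_t, V_t
n_grid = 512
grid = -0.5 + np.arange(n_grid) / n_grid   # equally spaced f' on [-1/2, 1/2)

def conv_sdf(f):
    """(S*G)(f) = integral of G(f - f') S(f') df', rectangle rule (periodic integrand)."""
    return np.mean(ar1_sdf(f - grid, phi_v) * ar1_sdf(grid, phi_u))

def product_sdf(f, max_lag=200):
    """SDF of U_t V_t computed directly from its autocovariance s_U(tau) s_V(tau)."""
    taus = np.arange(-max_lag, max_lag + 1)
    s_q = ar1_acvs(taus, phi_u) * ar1_acvs(taus, phi_v)
    return np.real(np.sum(s_q * np.exp(-2j * np.pi * f * taus)))

for f in (0.0, 0.1, 0.37):
    print(f, conv_sdf(f), product_sdf(f))
```

Because both integrands are smooth and periodic, the equally spaced rectangle rule converges very quickly, so the two columns agree to many digits.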

Lemma 2

Let \(X_t\) be a real-valued Gaussian stationary process with zero mean, and SDF \(S_X\) that satisfies

Let \(\eta _t\) be a binary-valued strictly stationary process that is independent of \(X_t\) and satisfies the assumptions stated in Mondal and Percival (2010). With \(Y_{j,t}\) as defined by Eq. (9), the process \(Q_t\) defined by

is a second-order stationary process whose SDF at zero is a strictly positive finite number.

Proof

Note that \(Q_t\) in (22) can be written as

where \(U_{l,l',t} = -\,1/2 (X_{t-l} - X_{t-l'})^2\), \(V_{l,l',t} = \eta _{t-l} \eta _{t-l'}\), \(a_{l,l'} = \sum _{j=J_0}^J \gamma _j h_{j,l} h_{j,l'} \pi _{l-l'}\), \(\theta _{l,l'} = \gamma _{X, l-l'}\), and \( \omega _{l,l'} = \pi ^{-1}_{l-l'}\). Next, following the argument given in Mondal and Percival (2010), we can show that for any choice of \(k,k',l\) and \(l'\) the bivariate process \(\mathbf{U}_t = \left[ -\,1/2 (X_{t-k} - X_{t-k'})^2, -\,1/2 (X_{t-l} - X_{t-l'})^2 \right] ^T\) has a continuous spectral matrix \({{\mathcal {S}}}_\mathbf{U}\). In addition, if \(S_{k,k',l,l'}\) denotes the \((k,k',l,l')\) component of \({{\mathcal {S}}}_\mathbf{U}\), then

Now by Lemma 1, the SDF of \(Q_t\) is given by the right-hand side of Eq. (21), where \(G_{k,k',l,l'}\) is the cross-spectrum between \(\eta _{t-k} \eta _{t-k'}\) and \(\eta _{t-l} \eta _{t-l'}\). Since \(a_{l,l'} \omega _{l,l'} = \sum _{j=J_0}^J \gamma _j h_{j,l}h_{j,l'}\), we have

which, after a few lines of algebra, reduces to

where \(| H_j(f)|^2\) is the squared gain function associated with the level-j wavelet filter \(h_{j,l}\). This integral is a strictly positive finite number. Moreover, \(\sum _{k,k'} \sum _{l,l'}a_{k,k'} a_{l,l'} \theta _{k,k'} \theta _{l,l'} G_{k,k',l,l'}(0)\) and \(\sum _{k,k'} \sum _{l,l'}a_{k,k'} a_{l,l'} S*G_{k,k',l,l'}(0)\) are nonnegative and finite because \(G_{k,k',l,l'}\) and \(S_{k,k',l,l'}\) are entries of spectral density matrices. Hence the SDF of \(Q_t\) at zero is a strictly positive finite number, which completes the proof. \(\square \)
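For a concrete picture of the squared gain \(|H_j(f)|^2\), the sketch below assumes the Haar MODWT filter (an illustrative choice; the paper may use other wavelet filters). For this filter a short calculation gives \(|H_j(f)|^2 = \sin^4(\pi 2^{j-1} f)/(2^{2j-2}\sin^2(\pi f))\), and for a stationary process the level-j wavelet variance is \(\int_{-1/2}^{1/2} |H_j(f)|^2 S_X(f)\,\text{d}f\). For unit white noise this equals \(\sum_l h_{j,l}^2 = 2^{-j}\).

```python
import numpy as np

def haar_modwt_filter(j):
    """Level-j Haar MODWT wavelet filter (length 2**j, entries +-2**-j)."""
    half = 2 ** (j - 1)
    return np.concatenate([np.full(half, 2.0 ** -j), np.full(half, -(2.0 ** -j))])

def squared_gain(h, f):
    """|H(f)|^2 where H(f) = sum_l h_l exp(-i 2 pi f l)."""
    l = np.arange(len(h))
    H = np.exp(-2j * np.pi * np.outer(f, l)) @ h
    return np.abs(H) ** 2

def haar_squared_gain_closed_form(j, f):
    """sin^4(pi 2^{j-1} f) / (2^{2j-2} sin^2(pi f)), valid for non-integer f."""
    return np.sin(np.pi * 2 ** (j - 1) * f) ** 4 / (2.0 ** (2 * j - 2) * np.sin(np.pi * f) ** 2)

j, n = 3, 256
f = (np.arange(n) + 0.5) / n - 0.5        # midpoints of [-1/2, 1/2); avoids f = 0
gain = squared_gain(haar_modwt_filter(j), f)
# Unit white noise has S_X(f) = 1, so nu_j^2 = integral of |H_j(f)|^2 = 2**-j.
# The midpoint rule is exact here: |H_j|^2 is a trigonometric polynomial of degree < n.
nu2_white = np.mean(gain)
print(nu2_white)
```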

Before proving Theorem 1, we state two lemmas that are consequences of Brillinger (1981, p. 21); their proofs are given in Mondal and Percival (2010).

Lemma 3

Assume that \(X_t\) satisfies the conditions stated in Theorem 1. Let \(U_{p,t} = -\,1/2 (X_{t-l}- X_{t-l'})^2\) and \(E U_{p,t}=\theta _p\), where \(p=(l,l')\). Then, for \(n \ge 3\) and fixed \(p_1, \ldots , p_n\),

where each \(t_i\) ranges from 0 to \(M-1\).
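The factor \(-\,1/2\) in \(U_{p,t}\) comes from an elementary identity: for any filter with \(\sum_l h_l = 0\), \(-\frac{1}{2}\sum_l\sum_{l'} h_l h_{l'} (x_l - x_{l'})^2 = (\sum_l h_l x_l)^2\), because the \(x_l^2\) and \(x_{l'}^2\) terms are each multiplied by \(\sum_l h_l = 0\). The same cancellation removes the variance term from \(\sum_l\sum_{l'} h_l h_{l'}\, E U_{(l,l'),t}\), leaving a weighted sum of autocovariances. A quick numerical check with a random zero-sum filter (an arbitrary choice made for illustration):

```python
import numpy as np

rng = np.random.default_rng(1)
h = rng.normal(size=8)
h -= h.mean()                      # any filter with sum(h) == 0
x = rng.normal(size=8)             # stands in for X_{t-l}, l = 0..7

sq_diff = (x[:, None] - x[None, :]) ** 2
minus_half = -0.5 * np.sum(np.outer(h, h) * sq_diff)   # -1/2 convention
plus_half = 0.5 * np.sum(np.outer(h, h) * sq_diff)     # +1/2 gives the negative
w_squared = np.dot(h, x) ** 2                          # squared wavelet coefficient
print(minus_half, w_squared)
```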

Lemma 4

Let \(U_{p,t}\) be as in Lemma 3, and define \( \kappa _n(p_1, \ldots , p_n, t_1, \ldots , t_n) = \text{ cum }(U_{p_1, t_1}-\theta _{p_1}, \ldots , U_{p_n,t_n}-\theta _{p_n}). \) Define for \(i = 1,2,\ldots , n-1\)

where the summation over each \(t_j\) ranges from 0 to \(M-1\). Then \(\kappa _n(p_1, \ldots , p_n, t_1, \ldots , t_i)\) is bounded and satisfies

for \(i =1,2,\ldots ,n\).

Proof

(Proof of Theorem 1) We first apply the Cramér–Wold device to derive the asymptotic normality of the time averages of the multivariate process \((Y_{J_0,t}, Y_{J_0+1,t}, \ldots , Y_{J,t} )^T\). Thus, as in Lemma 2, let \(\gamma _j\), \(j=J_0, \ldots , J\), be real constants and consider the univariate time series formed by the linear combination, i.e.,

where \(U_{l,l',t} = -\,1/2 (X_{t-l} - X_{t-l'})^2\), \(V_{l,l',t} = \eta _{t-l} \eta _{t-l'}\), \(a_{l,l'} = \sum _{j=J_0}^J \gamma _j h_{j,l} h_{j,l'} \pi _{l-l'}\), \(\theta _{l,l'} = \gamma _{X, l-l'}\), and \( \omega _{l,l'} = \pi ^{-1}_{l-l'}\). We first prove a central limit theorem for \(R = M^{-1/2} \sum _{t=0}^{M-1} Q_t\). Following Zurbenko (1986, p. 2), we write the logarithm of the characteristic function of R as

where \(B_n\) is the nth-order cumulant of \(Q_t\), and each \(t_i\) ranges from 0 to \(M-1\). Since \(Q_t\) is centered, \(B_1 (t_1)=0\). By Lemma 2, \(Q_t\) is stationary, its autocovariances \(s_{Q,\tau }\) are absolutely summable, and \(M^{-1} \sum _{t_1}\sum _{t_2} B_2 (t_1,t_2) \rightarrow \sum _\tau s_{Q,\tau } = S_Q (0) > 0 \). To prove the CLT for R, it therefore suffices to show that \(\sum _{t_1,\ldots ,t_n} M^{-n/2} B_n (t_1, \ldots , t_n) \rightarrow 0\) for \( n=3,4,\ldots \).
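The second-order step above is the familiar fact that the variance of a normalized sum converges to the SDF at zero. For a hypothetical MA(1) process \(Q_t = e_t + \theta e_{t-1}\) with unit-variance white noise \(e_t\) (chosen only for illustration), \(M^{-1}\sum_{t_1}\sum_{t_2} s_Q(t_1-t_2) = (1+\theta)^2 - 2\theta/M\) exactly, so the \(O(1/M)\) approach to \(S_Q(0) = (1+\theta)^2\) is visible:

```python
import numpy as np

def acvs_ma1(tau, theta):
    """Autocovariance of Q_t = e_t + theta e_{t-1} with unit-variance white noise e_t."""
    tau = np.abs(tau)
    return np.where(tau == 0, 1 + theta ** 2, np.where(tau == 1, theta, 0.0))

def var_R(M, theta):
    """Var(M^{-1/2} sum_{t=0}^{M-1} Q_t) = M^{-1} sum_{t1} sum_{t2} s_Q(t1 - t2)."""
    t = np.arange(M)
    return acvs_ma1(t[:, None] - t[None, :], theta).sum() / M

theta = 0.6
S_Q0 = (1 + theta) ** 2                 # sum of all autocovariances = SDF at zero
for M in (10, 100, 1000):
    print(M, var_R(M, theta), S_Q0 - 2 * theta / M)
```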

Now using Brillinger (1981, p. 19), we break up the nth-order cumulant as follows:

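Applying the argument given in Mondal and Percival (2010), it follows that \( \sum _{t_1,\ldots ,t_n} \text{ cum }( U_{p_1,t_1} V_{p_1,t_1}-\theta _{p_1} \omega _{p_1}, \ldots , U_{p_n,t_n} V_{p_n,t_n}-\theta _{p_n} \omega _{p_n} ) = o(M^{n/2}). \) Because only finitely many index pairs \(p_1, \ldots , p_n\) carry nonzero weights \(a_{p_i}\), this gives \(\sum _{t_1,\ldots ,t_n} M^{-n/2} B_n (t_1, \ldots , t_n) \rightarrow 0\) for each \(n \ge 3\), which establishes the CLT for R.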

Note that \(\widehat{\nu }^2_j - \nu ^2_j\) is the average of \(Q_t\) over \(L_j-1 \le t\le N-1\) with \(\pi _{l-l'}\) replaced by its consistent estimate \({\hat{\pi }}_{l-l'}\). Slutsky’s theorem then completes the proof that \(\widehat{\nu }^2_j\), \(j=J_0, \ldots , J\), are jointly asymptotically normal. \(\square \)
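The Slutsky step needs a consistent estimate of the lag-dependent probability that both members of a pair of time points are observed. The sketch below takes this probability to be \(\pi_\tau = P(\eta_t = 1, \eta_{t+\tau} = 1)\) (our labeling, for illustration) and assumes bursty gaps generated by a two-state Markov chain, a hypothetical gap model for which \(\pi_\tau = p_1\{p_1 + (1-p_1)(1-a-b)^\tau\}\) with \(p_1 = b/(a+b)\). The empirical fraction of observed lag-\(\tau\) pairs tracks this closely for a long series.

```python
import numpy as np

def simulate_markov_gaps(n, a, b, rng):
    """Two-state Markov chain: P(1 -> 0) = a (gap starts), P(0 -> 1) = b (gap ends)."""
    eta = np.empty(n, dtype=int)
    eta[0] = rng.uniform() < b / (a + b)      # start in the stationary distribution
    u = rng.uniform(size=n)
    for t in range(1, n):
        if eta[t - 1] == 1:
            eta[t] = 0 if u[t] < a else 1
        else:
            eta[t] = 1 if u[t] < b else 0
    return eta

def pi_hat(eta, tau):
    """Fraction of pairs (t, t + tau) in which both values are observed."""
    n = len(eta)
    return np.mean(eta[: n - tau] * eta[tau:])

def pi_true(tau, a, b):
    """P(eta_t = 1, eta_{t+tau} = 1) for the stationary two-state chain."""
    p1 = b / (a + b)
    return p1 * (p1 + (1 - p1) * (1 - a - b) ** tau)

rng = np.random.default_rng(3)
a, b = 0.02, 0.2                               # hypothetical gap-start and gap-end rates
eta = simulate_markov_gaps(200_000, a, b, rng)
for tau in range(6):
    print(tau, round(pi_hat(eta, tau), 4), round(pi_true(tau, a, b), 4))
```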

Proof

(Proof of Theorem 2) Note that \(\varvec{a}\) depends on J. We have \( \varvec{a}^T \varvec{1} = 0 \) and \( \varvec{a}^T \varvec{w} = 1. \) Then, since \(K = J - J_0 + 1\) is finite and \(\varvec{a}\) is normalized, as \(J \rightarrow \infty \), \(\varvec{a}^T = O(\varvec{1}^T)\); that is, for each i, \(a_i = O(1)\).
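A simple way to obtain weights with \(\varvec{a}^T \varvec{1} = 0\) and \(\varvec{a}^T \varvec{w} = 1\) is the ordinary least squares slope contrast \(a_j = (w_j - \bar{w})/\sum_k (w_k - \bar{w})^2\). The sketch below assumes OLS weights and hypothetical regressors \(w_j = 2j\log 2\); the paper's weights may differ (for example, weighted least squares). When \(w_j\) is linear in j, shifting the scale range \(J_0, \ldots, J\) while keeping K fixed leaves \(\varvec{a}\) unchanged, which is one concrete way to see that each \(a_i = O(1)\).

```python
import numpy as np

def ols_slope_weights(w):
    """Weights a with a @ 1 == 0 and a @ w == 1 (ordinary least squares slope)."""
    w = np.asarray(w, dtype=float)
    centered = w - w.mean()
    return centered / np.sum(centered ** 2)

J0, J = 2, 6
w = 2 * np.log(2) * np.arange(J0, J + 1)                      # hypothetical w_j
a = ols_slope_weights(w)
a_shifted = ols_slope_weights(2 * np.log(2) * np.arange(J0 + 5, J + 6))
print(a)
```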

Letting \(\varvec{\xi } = (\log \nu _j^2: j=J_0,\ldots ,J)\), we have

An immediate consequence of Eqs. (2) and (13) is that the second term in the above equation goes to zero. The asymptotic normality of the first term follows from Theorem 1 and (14), together with the delta method and Slutsky’s theorem.
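The delta-method step can be made concrete. If the vector of wavelet variance estimates is approximately normal with mean \(\nu^2\) and covariance \(\Sigma/M\), then \(\varvec{a}^T \log \widehat{\nu}^2\) has approximate variance \(\varvec{a}^T D \Sigma D \varvec{a}/M\) with \(D = \text{diag}(1/\nu^2_j)\), since the gradient of \(\varvec{a}^T \log v\) is \(a_j/v_j\). The sketch compares this with a Monte Carlo variance; every input (\(\nu^2\), \(\Sigma\), M and the OLS weights) is hypothetical.

```python
import numpy as np

rng = np.random.default_rng(2)
nu2 = np.array([1.0, 0.8, 0.6, 0.5])       # hypothetical nu_j^2, j = J0..J
K = len(nu2)
R = 0.7 * np.eye(K) + 0.3                   # hypothetical correlation: 1 on diagonal, 0.3 off
Sigma = 2 * np.outer(nu2, nu2) * R          # hypothetical asymptotic covariance
w = np.arange(K, dtype=float)
a = (w - w.mean()) / np.sum((w - w.mean()) ** 2)   # OLS weights: a @ 1 = 0, a @ w = 1
M = 10_000

grad = a / nu2                              # gradient of a @ log(v) at v = nu2
var_delta = grad @ Sigma @ grad / M         # delta-method variance

draws = rng.multivariate_normal(nu2, Sigma / M, size=20_000)
var_mc = np.var(np.log(draws) @ a)          # Monte Carlo variance of a @ log(nu2_hat)
print(var_delta, var_mc)
```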

To see that condition (14) holds in the gap-free case, note that \(\sigma _{j,j}\) is equal to

where \( K_{1,\delta } = \int |f|^{-4 \delta } | \varPsi (f)|^4 \, \text {d}f. \) This implies that

for some constant \(c_1\). \(\square \)
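The constant \(K_{1,\delta }\) can be evaluated numerically once \(\varPsi\) is fixed. The sketch below assumes, purely for illustration, that \(\varPsi\) is the Fourier transform of the Haar wavelet, for which \(|\varPsi(f)| = 2\sin^2(\pi f/2)/(\pi |f|)\), and that the integral runs over the whole real line. As a sanity check it also confirms Parseval's relation \(\int |\varPsi(f)|^2\,\text{d}f = 1\), up to a small truncation error from cutting the integral off at \(|f| = 1000\).

```python
import numpy as np

def haar_psi_abs(f):
    """|Psi(f)| for the Haar wavelet (1 on [0, 1/2), -1 on [1/2, 1))."""
    f = np.asarray(f, dtype=float)
    out = np.zeros_like(f)
    nz = f != 0
    out[nz] = 2 * np.sin(np.pi * f[nz] / 2) ** 2 / (np.pi * np.abs(f[nz]))
    return out

def integrate_even(g, f_max=1000.0, n=1_000_001):
    """Integral of an even function g over R: trapezoid rule on [0, f_max], doubled."""
    f = np.linspace(0.0, f_max, n)
    y = g(f)
    dx = f[1] - f[0]
    return 2 * dx * (y.sum() - 0.5 * (y[0] + y[-1]))

def K1(delta):
    """K_{1,delta} = integral of |f|^{-4 delta} |Psi(f)|^4 (integrand set to 0 at f = 0)."""
    def integrand(f):
        out = np.zeros_like(f)
        nz = f != 0
        out[nz] = np.abs(f[nz]) ** (-4 * delta) * haar_psi_abs(f[nz]) ** 4
        return out
    return integrate_even(integrand)

parseval = integrate_even(lambda f: haar_psi_abs(f) ** 2)   # close to 1
print(parseval, K1(0.25))
```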

About this article

Cite this article

Craigmile, P.F., Mondal, D. Estimation of long-range dependence in gappy Gaussian time series. Stat Comput 30, 167–185 (2020). https://doi.org/10.1007/s11222-019-09874-0
