Abstract

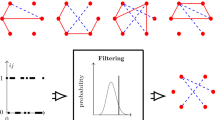

The increasing amount of data stored in the form of dynamic interactions between actors calls for methods that automatically extract relevant information. Such interactions can be represented by dynamic networks, and most existing methods summarize the data by looking for clusters of vertices. In this paper, a new framework is proposed to cluster the vertices while detecting change points in the intensities of the interactions. These change points are key to understanding the temporal interactions. The model involves non-homogeneous Poisson point processes with cluster-dependent piecewise constant intensity functions and common discontinuity points. A variational expectation maximization algorithm is derived for inference. We show that the pruned exact linear time method, originally developed for change point detection in univariate time series, can be used for the maximization step. This allows the detection of both the number of change points and their locations. Experiments on artificial and real datasets are carried out, and the proposed approach is compared with related methods.

Notes

Interaction times are assumed to be strictly positive and \(\nu _{M+1}\) is assumed to fall outside the time interval [0, T]: \(\mathbb {P}(\nu _{M+1} \ge T) = 1\).

Since the whole time interval [0, T] is considered, the intensity functions can be assumed (exceptionally) left continuous at \(t=T\). In formulas: \(\lambda _{kg}(T)=\lambda _{kg}(\eta _{D-1})\).
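The convention in this note can be illustrated with a minimal Python sketch. It assumes (this is not stated in the note itself) that the discontinuity points are stored as a sorted list `breakpoints` = \([\eta_0=0, \eta_1, \ldots, \eta_D=T]\) and that `levels[d]` is the constant value taken on \([\eta_d, \eta_{d+1})\); the function name and argument layout are hypothetical.

```python
from bisect import bisect_right


def intensity(t, breakpoints, levels):
    """Evaluate a piecewise constant intensity on [0, T].

    Assumed layout (hypothetical, for illustration only):
    breakpoints = [eta_0 = 0, eta_1, ..., eta_D = T], strictly increasing;
    levels[d] is the value on [eta_d, eta_{d+1}), so len(levels) == D.
    """
    if not breakpoints[0] <= t <= breakpoints[-1]:
        raise ValueError("t must lie in [0, T]")
    # Index of the last breakpoint that is <= t (right continuity inside [0, T)).
    d = bisect_right(breakpoints, t) - 1
    # At t = T the index would be D; reuse the last segment instead,
    # which is the left-continuity exception lambda(T) = lambda(eta_{D-1}).
    return levels[min(d, len(levels) - 1)]


example_breakpoints = [0.0, 1.0, 3.0, 5.0]
example_levels = [2.0, 0.5, 4.0]
value_at_T = intensity(5.0, example_breakpoints, example_levels)
```

Clamping the index at \(t=T\) is one simple way to encode the exception; any equivalent treatment of the right endpoint would do.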

We use the same notation to denote the set of \(K(K+1)/2\) intensity functions and the tensor because under our assumptions they correspond to two different views of the same object. Notice that the frontal slices of \({\varvec{\lambda }}\) are symmetric \(K\times K\) matrices since we are dealing with undirected graphs.

In the framework of change point detection for time series, a cost function to be minimized is associated with each time segment. In contrast, we introduce a gain function to be consistent with the problem formulation used so far. However, the two definitions are equivalent since the cost function can be thought of as a gain function multiplied by \(-\,1\).
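The gain/cost equivalence can be made concrete with a small sketch. The code below is not the paper's gain function (which depends on the clusters); it assumes a simplified univariate setting in which each elementary interval carries a count and a length, uses the maximized Poisson log-likelihood of a segment as a stand-in gain, and runs plain quadratic-time optimal partitioning without the pruning step of the pruned exact linear time method. All names and the `penalty` parameter are hypothetical.

```python
import math


def poisson_gain(count, length):
    """Stand-in gain: Poisson log-likelihood of a segment at its best rate count/length."""
    if count == 0:
        return 0.0
    return count * math.log(count / length) - count


def poisson_cost(count, length):
    """The matching cost is simply the gain multiplied by -1."""
    return -poisson_gain(count, length)


def best_segmentation(counts, lengths, penalty):
    """Maximize (sum of segment gains - penalty * number of segments).

    Plain O(n^2) optimal partitioning; no PELT pruning is applied here.
    Returns the best objective value and the change point indices.
    """
    n = len(counts)
    cum_counts, cum_lengths = [0], [0.0]
    for c, l in zip(counts, lengths):
        cum_counts.append(cum_counts[-1] + c)
        cum_lengths.append(cum_lengths[-1] + l)
    best = [0.0] + [-math.inf] * n   # best[e]: optimum for the first e intervals
    prev = [0] * (n + 1)             # prev[e]: start of the last segment ending at e
    for end in range(1, n + 1):
        for start in range(end):
            value = (best[start]
                     + poisson_gain(cum_counts[end] - cum_counts[start],
                                    cum_lengths[end] - cum_lengths[start])
                     - penalty)
            if value > best[end]:
                best[end], prev[end] = value, start
    # Backtrack through the stored segment starts to recover the change points.
    change_points, end = [], n
    while end > 0:
        end = prev[end]
        if end > 0:
            change_points.append(end)
    return best[n], change_points[::-1]


value, change_points = best_segmentation([1, 1, 1, 1, 10, 10, 10, 10], [1.0] * 8, penalty=2.0)
```

Minimizing the sum of `poisson_cost` values plus the same penalty would select the same segmentation, since the two objectives differ only by sign.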

The same holds for the selected number of groups K (see Sect. 3.5).

Notice that in case the minimal partition is used, \(X_{ij}^{(u)}\) is trivially equal to one for the pair (i, j) interacting at \(t_{u-1}\) and zero for all the other pairs.
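A short sketch may help to see why the counts are trivial under the minimal partition. It assumes, as an illustration only, that interactions are stored as triples `(i, j, t)`, that the partition is a sorted list `grid` = \([t_0, \ldots, t_U]\), and that \(X_{ij}^{(u)}\) counts the interactions of the unordered pair (i, j) falling in the half-open interval \([t_{u-1}, t_u)\). The function name and data layout are hypothetical.

```python
from bisect import bisect_right
from collections import Counter


def count_interactions(events, grid):
    """Count interactions per unordered pair and per interval.

    events: iterable of (i, j, t) triples (assumed layout).
    grid: sorted list [t_0, ..., t_U]; interval u is [t_{u-1}, t_u), u = 1..U.
    Events outside [t_0, t_U) are ignored.
    """
    counts = Counter()
    for i, j, t in events:
        u = bisect_right(grid, t)  # t lies in [grid[u-1], grid[u])
        if 1 <= u < len(grid):
            # Undirected interactions: store each pair in a canonical order.
            counts[(min(i, j), max(i, j), u)] += 1
    return counts


events = [(0, 1, 0.5), (2, 1, 1.2), (0, 1, 2.0)]
T = 3.0
# Minimal partition (assumed construction): one interval per interaction time.
minimal_grid = sorted(t for _, _, t in events) + [T]
minimal_counts = count_interactions(events, minimal_grid)
```

With `minimal_grid`, each interval starts at exactly one interaction time, so every stored count equals one and all other pairs have an implicit count of zero.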

Map data are available from http://www.openstreetmap.org and are copyrighted by OpenStreetMap contributors.

References

Achab, M., Bacry, E., Gaïffas, S., Mastromatteo, I., Muzy, J.F.: Uncovering causality from multivariate Hawkes integrated cumulants. ArXiv preprint arXiv:1607.06333 (2016)

Airoldi, E., Blei, D., Fienberg, S., Xing, E.: Mixed membership stochastic blockmodels. J. Mach. Learn. Res. 9, 1981–2014 (2008)

Boullé, M.: Optimum simultaneous discretization with data grid models in supervised classification: a Bayesian model selection approach. Adv. Data Anal. Classif. 3(1), 39–61 (2009)

Casteigts, A., Flocchini, P., Quattrociocchi, W., Santoro, N.: Time-varying graphs and dynamic networks. Int. J. Parallel Emerg. Distrib. Syst. 27(5), 387–408 (2012). doi:10.1080/17445760.2012.668546

Corneli, M., Latouche, P., Rossi, F.: Block modelling in dynamic networks with non-homogeneous Poisson processes and exact ICL. Soc. Netw. Anal. Min. 6(1), 1–14 (2016a). doi:10.1007/s13278-016-0368-3

Corneli, M., Latouche, P., Rossi, F.: Exact ICL maximization in a non-stationary temporal extension of the stochastic block model for dynamic networks. Neurocomputing 192, 81–91 (2016b). doi:10.1016/j.neucom.2016.02.031

Daley, D.J., Vere-Jones, D.: An Introduction to the Theory of Point Processes. Volume I: Elementary Theory and Methods. Springer, Berlin (2003)

Daudin, J.J., Picard, F., Robin, S.: A mixture model for random graphs. Stat. Comput. 18(2), 173–183 (2008)

Dempster, A.P., Laird, N.M., Rubin, D.B.: Maximum likelihood from incomplete data via the EM algorithm. J. R. Stat. Soc. Ser. B (Methodol.) 39(1), 1–38 (1977). http://www.jstor.org/stable/2984875

Dubois, C., Butts, C., Smyth, P.: Stochastic block modelling of relational event dynamics. In: International Conference on Artificial Intelligence and Statistics, Volume 31 of the Journal of Machine Learning Research Proceedings, pp. 238–246 (2013)

Durante, D., Dunson, D.B., et al.: Locally adaptive dynamic networks. Ann. Appl. Stat. 10(4), 2203–2232 (2016)

Fortunato, S.: Community detection in graphs. Phys. Rep. 486(3–5), 75–174 (2010)

Friel, N., Rastelli, R., Wyse, J., Raftery, A.E.: Interlocking directorates in Irish companies using a latent space model for bipartite networks. Proc. Natl. Acad. Sci. 113(24), 6629–6634 (2016). doi:10.1073/pnas.1606295113. http://www.pnas.org/content/113/24/6629.full.pdf

Guigourès, R., Boullé, M., Rossi, F.: A triclustering approach for time evolving graphs. In: Co-clustering and Applications, IEEE 12th International Conference on Data Mining Workshops (ICDMW 2012), Brussels, Belgium, pp. 115–122 (2012). doi:10.1109/ICDMW.2012.61

Guigourès, R., Boullé, M., Rossi, F.: Discovering patterns in time-varying graphs: a triclustering approach. Adv. Data Anal. Classif., pp. 1–28 (2015). doi:10.1007/s11634-015-0218-6

Hanneke, S., Fu, W., Xing, E.P., et al.: Discrete temporal models of social networks. Electron. J. Stat. 4, 585–605 (2010)

Hawkes, A.G.: Point spectra of some mutually exciting point processes. J. R. Stat. Soc. Ser. B (Methodol.) 33(3), 438–443 (1971)

Ho, Q., Song, L., Xing, E.P.: Evolving cluster mixed-membership blockmodel for time-evolving networks. In: International Conference on Artificial Intelligence and Statistics, pp. 342–350 (2011)

Hoff, P., Raftery, A., Handcock, M.: Latent space approaches to social network analysis. J. Am. Stat. Assoc. 97(460), 1090–1098 (2002)

Jackson, B., Scargle, J.D., Barnes, D., Arabhi, S., Alt, A., Gioumousis, P., Gwin, E., Sangtrakulcharoen, P., Tan, L., Tsai, T.T.: An algorithm for optimal partitioning of data on an interval. IEEE Signal Process. Lett. 12(2), 105–108 (2005)

Jernite, Y., Latouche, P., Bouveyron, C., Rivera, P., Jegou, L., Lamassé, S.: The random subgraph model for the analysis of an ecclesiastical network in Merovingian Gaul. Ann. Appl. Stat. 8(1), 55–74 (2014)

Killick, R., Fearnhead, P., Eckley, I.A.: Optimal detection of changepoints with a linear computational cost. J. Am. Stat. Assoc. 107(500), 1590–1598 (2012). doi:10.1080/01621459.2012.737745

Kim, M., Leskovec, J.: Nonparametric multi-group membership model for dynamic networks. Adv. Neural Inf. Process. Syst. 25, 1385–1393 (2013)

Kolda, T.G., Bader, B.W.: Tensor decompositions and applications. SIAM Rev. 51(3), 455–500 (2009)

Krivitsky, P.N., Handcock, M.S.: A separable model for dynamic networks. J. R. Stat. Soc. Ser. B (Stat. Methodol.) 76(1), 29–46 (2014)

Latouche, P., Birmelé, E., Ambroise, C.: Overlapping stochastic block models with application to the French political blogosphere. Ann. Appl. Stat. 5(1), 309–336 (2011)

Lewis, P., Shedler, G.: Simulation of nonhomogeneous Poisson processes by thinning. Naval Res. Logist. Q. 26(3), 403–413 (1979)

Matias, C., Miele, V.: Statistical clustering of temporal networks through a dynamic stochastic block model. J. R. Stat. Soc. Ser. B 79(4), 1119–1141 (2017)

Matias, C., Rebafka, T., Villers, F.: Estimation and clustering in a semiparametric Poisson process stochastic block model for longitudinal networks. arXiv:1512.07075 e-prints (2015)

Nouedoui, L., Latouche, P.: Bayesian non parametric inference of discrete valued networks. In: 21st European Symposium on Artificial Neural Networks, Computational Intelligence and Machine Learning (ESANN 2013), Bruges, Belgium, pp. 291–296 (2013)

Nowicki, K., Snijders, T.: Estimation and prediction for stochastic blockstructures. J. Am. Stat. Assoc. 96(455), 1077–1087 (2001)

Rand, W.M.: Objective criteria for the evaluation of clustering methods. J. Am. Stat. Assoc. 66(336), 846–850 (1971)

Robins, G., Pattison, P., Kalish, Y., Lusher, D.: An introduction to exponential random graph (p*) models for social networks. Soc. Netw. 29(2), 173–191 (2007)

Sarkar, P., Moore, A.W.: Dynamic social network analysis using latent space models. ACM SIGKDD Explor. Newsl. 7(2), 31–40 (2005)

Sewell, D.K., Chen, Y.: Latent space models for dynamic networks. J. Am. Stat. Assoc. 110(512), 1646–1657 (2015)

Sewell, D.K., Chen, Y.: Latent space models for dynamic networks with weighted edges. Soc. Netw. 44, 105–116 (2016)

Snijders, T.A.: Stochastic actor-oriented models for network change. J. Math. Sociol. 21(1–2), 149–172 (1996)

von Luxburg, U.: A tutorial on spectral clustering. Stat. Comput. 17(4), 395–416 (2007). doi:10.1007/s11222-007-9033-z

Wang, Y., Wong, G.: Stochastic blockmodels for directed graphs. J. Am. Stat. Assoc. 82, 8–19 (1987)

Xing, E.P., Fu, W., Song, L.: A state-space mixed membership blockmodel for dynamic network tomography. Ann. Appl. Stat. 4(2), 535–566 (2010). doi:10.1214/09-AOAS311

Xu, H., Farajtabar, M., Zha, H.: Learning Granger causality for Hawkes processes. In: Proceedings of the 33rd International Conference on Machine Learning, pp. 1717–1726 (2016)

Xu, K.S., Hero III, A.O.: Dynamic stochastic blockmodels for time-evolving social networks. IEEE J. Sel. Top. Signal Process. 8(4), 552–562 (2014)

Yang, T., Chi, Y., Zhu, S., Gong, Y., Jin, R.: Detecting communities and their evolutions in dynamic social networks: a Bayesian approach. Mach. Learn. 82(2), 157–189 (2011)

Zreik, R., Latouche, P., Bouveyron, C.: The dynamic random subgraph model for the clustering of evolving networks. Comput. Stat. 32(2), 501–533 (2016). doi:10.1007/s00180-016-0655-5

Appendix: Proofs

A.1 Proof of Proposition 1

Proof

Notice, first, that the central factor on the r.h.s. of the equality in Eq. (3) can be written as

where \({\mathbf {1}}_{{\mathcal {G}}}(\cdot )\) is the indicator function on a set \({\mathcal {G}}\) and \(\mathcal {A}^{(i,j)}\) has been defined in (1). By inverting the product on the right-hand side, because of the indicator function we get

where \(\nu _m^{(i,j)}\) are the interaction times in the set \(\mathcal {A}^{(i,j)}\), whose cardinality is \(M^{(i,j)}\). Thanks to (5), the following holds

Note that the last equality employs the definition of a counting process

By substituting (19) into (3) and using that

it suffices to take the logarithm of the likelihood and the proposition is proven. \(\square \)
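As a numerical companion to this proof, the sketch below evaluates the standard log-likelihood of a single non-homogeneous Poisson process with piecewise constant intensity, \(\sum _d \left( X_d \log \lambda _d - \lambda _d \Delta _d\right) \), where \(X_d\) is the number of events in segment d and \(\Delta _d\) its length. This is only the single-process building block, under the assumption that counts, lengths, and rates are given per segment; the statement of Proposition 1 additionally involves the cluster labels, which are not reproduced here. The function name is hypothetical.

```python
import math


def poisson_loglik(counts, lengths, rates):
    """Log-likelihood of one Poisson process with piecewise constant intensity.

    counts[d]: number of events in segment d (X_d)
    lengths[d]: length of segment d (Delta_d)
    rates[d]: intensity on segment d (lambda_d), assumed > 0 where counts[d] > 0
    """
    total = 0.0
    for x, delta, lam in zip(counts, lengths, rates):
        if x > 0:
            total += x * math.log(lam)  # sum of log-intensities at the event times
        total -= lam * delta            # minus the integrated intensity
    return total


segment_counts = [2, 0]
segment_lengths = [1.0, 2.0]
loglik = poisson_loglik(segment_counts, segment_lengths, [2.0, 0.5])
```

For a fixed segment, the expression is maximized at the empirical rate \(X_d/\Delta _d\), which is what makes segment-wise gain functions of this form easy to evaluate.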

A.2 Proof of Proposition 3

Proof

The following objective function is taken into account

This function has to be maximized with respect to both \(\tau \) and the N Lagrange multipliers \(l_1, \ldots , l_N\), introduced to take into account the normalization of the rows of \(\tau \). The most difficult step consists in taking the partial derivative of the objective function with respect to \(\tau _{i_0k_0}\). We first focus on those terms of \(\mathcal {L}(\cdot )\) depending on d

Hence

where the last equality comes from the symmetry (i.e., interactions are undirected) of the frontal slices of tensors X and \({\varvec{\lambda }}\). Notice that the last term in the above equation is the function inside the exponential in Proposition 3. The remaining terms of \(\mathcal {L}(\cdot )\), not involving d, can be differentiated straightforwardly. Setting the partial derivatives of \(\mathcal {L}(\cdot )\) equal to zero and using the above equation leads to the following system

The solution then follows after some straightforward manipulations, and this concludes the proof. \(\square \)

A.3 Proof of Proposition 4

Proof

The following definitions are introduced to keep the notation uncluttered

Moreover, for every \(t_{u_e}<t_{u_f}<t_{u_g}\), the following shorthand notation is used

and similarly for \(\Delta ^{f,g}\) and \(\Delta ^{e,g}\). Hence, we get

where the first and the last equalities come from the definition of \({\mathcal {G}}(\cdot )\). This concludes the proof. \(\square \)

Cite this article

Corneli, M., Latouche, P. & Rossi, F. Multiple change points detection and clustering in dynamic networks. Stat Comput 28, 989–1007 (2018). https://doi.org/10.1007/s11222-017-9775-1

DOI: https://doi.org/10.1007/s11222-017-9775-1