Abstract

Software inspection is a widely used approach to software quality assurance. Human-Machine Pair Inspection (HMPI) is a novel software inspection technology proposed in our previous work, which is characterized by the machine guiding programmers to inspect their own code during programming. While our previous studies have shown the effectiveness of HMPI in pointing out risky code fragments to the programmer, little attention has been paid to the issue of how the programmer can be effectively guided to carry out inspections. To address this important problem, in this paper we propose to combine Risk Number with Code Inspection Diagram (CID) to provide accurate guidance for programmers to efficiently carry out inspections of their own programs. By following the Code Inspection Diagram, the programmer inspects every checking item shown in the CID to efficiently determine whether the code actually contains bugs. We describe a case study that evaluates the performance of this method by comparing its inspection time and number of detected errors with those of our previous work. The result shows that the method is likely to guide the programmer to inspect the faulty code earlier and to be more efficient in detecting defects than the previous HMPI based on Cognitive Complexity.

1 Introduction

With the rapid development of computer hardware and the swift expansion of software functionality and size, software vulnerabilities continue to emerge. Software inspection is a static analysis technique with a wide range of practical uses; it can effectively identify code that may cause software vulnerabilities, thereby ensuring and improving the reliability and quality of software (Gregory, 1993; Liu et al., 2011; Parnas & Lawford, 2003). However, the continuous increase in the code size of modern software systems puts great pressure on software inspection. Since programmers know the details of their own code, they have great potential to be effective and efficient in identifying defects, especially subtle ones, in that code (Assal & Chiasson, 2019). Therefore, a mechanism that guides programmers to self-inspect their code can reduce the workload of code reviewers. Furthermore, the recent widespread use of Code-Generating Models by programmers makes self-inspection even more crucial. Code-Generating Models are trained on open-source projects on GitHub, so the code they generate is very likely to be risky. Meanwhile, programmers tend to pay less attention to the details of their own code because of overconfidence in the programs they develop and in Code-Generating Models (Barke et al., 2023). In our previous studies, we proposed Human-Machine Pair Inspection (HMPI) as a new technique to deal with this problem. We made some progress in pointing out risky code fragments based on Cognitive Complexity (Dai & Liu, 2021) and further improved the accuracy of pointing out high-risk code during the HMPI process in our recent work (Dai et al., 2023). However, we have not yet addressed the critical problem of how the programmer can be effectively and efficiently guided to actually conduct inspections.

In this paper, we propose a novel technique to deal with this problem. The technique is characterized by an effective combination of Risk Numbers with a Code Inspection Diagram (CID). The essential idea of the combination is first to assign Risk Numbers to code fragments according to how likely they are to contain defects and then to create a CID that shows the workflow for inspection. The advantage of this approach is that it unleashes the potential of both programmers and machines in the code inspection process: apart from enhancing the accuracy of identifying risky code, it also provides a visualization of the elements that require inspection. We use regression analysis, a statistical tool that investigates the relationship between variables, to represent more precisely the relationship between nested structure and software fault proneness. The degree of risk that a code fragment contains errors is thus calculated and expressed as a Risk Number, which is used to locate possible faults and then guide inspection. This allows developers to focus on more complex or error-prone code. To be more eye-catching than the existing checklist-based inspection, we propose to construct the checklist as a CID based on Risk Number. The construction principle of the CID is that questions for code with a higher Risk Number are placed in a higher priority position, and questions for code with the same priority are placed in the same priority position from left to right according to their order in the source code. Programmers are likely to be so familiar with the code they write that they overlook potential defects. Therefore, a CID reordered according to Risk Numbers is able to attract more attention than inspection in code order.

To make the inspection process more effective and efficient, we can utilize information about the relationships and dependencies of high-risk program elements to guide the reviewer in determining whether the code actually contains bugs. After the machine locates an instruction that may contain errors, there is usually more than one variable to analyze, so it is more suitable to use simultaneous slicing (Danicic & Harman, 1996), which is obtained by the joint calculation of multiple static slices. Owing to this technology, the checklist needs to contain only the instructions with a high Risk Number and the instructions they depend on, thereby narrowing the scope of inspection and improving its efficiency.

Our major contributions in this paper are:

- A software metric called Risk Number, based on statistical and regression analysis, is reused to accurately locate faulty code fragments for inspection.
- A Code Inspection Diagram, constructed from Risk Numbers and program slicing, visualizes the checklist according to defined rules and thereby guides programmers in performing inspections.
- A novel Human-Machine Pair Inspection method facilitates the inspection process. It improves the efficiency and effectiveness of inspection by using Risk Numbers and the CID to draw programmers’ attention to high-risk code fragments.

The remainder of the paper is arranged as follows. Section 2 gives a brief introduction to HMPI to prepare for the discussions in the paper. Section 3 elaborates on the method of Risk Number calculation, the technical principles of the CID, and how Risk Number and CID can help detect bugs. Section 4 analyzes our case study results and discusses their limitations. Section 5 gives an overview of related work. Section 6 concludes with insights and future work derived from our study.

2 Background

During the peer review process, when reviewers inspect complex and large amounts of code, it can sometimes be challenging to fully comprehend all the details of the code being reviewed due to a lack of background information or domain knowledge (Thongtanunam et al., 2015). Self-inspection of code by programmers can overcome the aforementioned issue. However, due to programmers’ excessive familiarity and confidence in the code constructed by themselves (Ruangwan et al., 2019), the effectiveness of code self-inspection may be compromised by challenges such as the absence of external perspectives and cognitive biases (Mohanani et al., 2018).

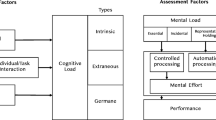

To mitigate these deficiencies of existing inspection techniques, we proposed Human-Machine Pair Inspection (HMPI) (Dai & Liu, 2021) in our previous work, which supports the Human-Machine Pair Programming (HMPP) (Liu, 2018) technology. HMPP means that a programmer and a computer work together to construct a program, where the programmer plays the role of driver and the computer plays the role of observer. Similarly, in Human-Machine Pair Inspection the computer points out where to inspect in the code and guides the programmer in carrying out the inspection. The main process is shown in Fig. 1.

Specifically, our proposed HMPI utilizes Cognitive Complexity to guide programmers to inspect their own code. The process consists of six major steps: Preprocess the recorded data, Generate the intermediate representation (IR) and control flow graph (CFG), Calculate the Cognitive Complexity, Generate the inspection syntax graph, Establish the checklist, Analyze the code to detect defects. Not precisely understanding the expressions involved in the process will not severely affect the reader in understanding the discussions on the novel HMPI in this paper. For brevity, we do not explain the details of every process.

The HMPI based on Cognitive Complexity cannot accurately point out which code fragments are structurally more complex and error-prone, nor can it subtly draw the inspector’s attention to error-prone code, resulting in inefficiency for large-scale software engineering projects. Therefore, we propose to use Risk Number and CID to further support HMPI.

3 Methodology

In this section, we begin with a software metric called Risk Number, which identifies high-risk code fragments for inspection. Then we introduce how to transform the identified code fragments into Code Inspection Diagrams based on the three fundamental structures of the code. We end the section by describing how Risk Number and CID guide the inspection process.

3.1 Calculation method of Risk Number

This subsection discusses the technique for calculating Risk Numbers, including why we proposed this novel software metric and how to get Risk Numbers based on statistical data.

Intuitively, a linear series of n loop structures would be more maintainable and less error-prone than the sequential nesting of the same n structures. Therefore, the Risk Number takes the nested structure into evaluation.

In Cognitive Complexity, each time a loop structure or a conditional statement is nested inside another such structure, a nesting increment is added for each level of nesting. As shown in Listing 1, the For statement is at one level of nesting, so the value of Cognitive Complexity is increased by 1, and the While statement is at two levels of nesting, so the value of Cognitive Complexity is increased by 2. The calculation is therefore fixed: it is the same for any project, any functional code, and programmers of any level of education and practice.

However, statistics of errors in different projects show that the trend of error occurrence with increasing nesting level is not fixed. For instance, experienced programmers may make far fewer errors than novice developers when dealing with projects with many nested statements. There is no doubt that the measure of complexity is influenced by the education level of team members and by the project itself, but it is extremely difficult to quantify the relationship between these factors and errors directly. Intuitively, as the level of nesting increases, the complexity is unlikely to increase by just 1 per level, since the difficulty of understanding the code increases greatly. Therefore, we take a statistical point of view and use the source code of the projects developed by the project team, together with the review results of the historical source code, as the data for statistical analysis.

Figure 2 shows the statistics from our case study on the relationship between the risk of code containing bugs and the level of nesting in the flow-break structures. From the historical errors made by programmers in our laboratory, we found that as the nesting level increases, error occurrence grows much faster than the nesting increment in Cognitive Complexity. Our statistical process and the calculation of the Risk Number are described in detail in the following subsections.

We evaluate the Risk Number based on two principles; in other words, it is composed of two different types of increments.

- Nesting increment (statistical-based): assessment of risk factors for nesting control flow structures.
- Judgment increment (add one): assessment for each break in the linear flow of the code.

For each statement, the Risk Number is the sum of these two increments, and for a code fragment, the Risk Number is the sum of the Risk Numbers of all statements in it. A guiding principle in the computation of the Risk Number is that it should reflect the influence of the complexity of the nested structure on whether the code contains errors. This may encourage a simplified code structure that makes the code more readable, maintainable and testable. To make the Risk Number better reflect the real impact of the nested structure on error proneness, we proceed in three steps.

3.1.1 Collecting statistical data from GitHub

- Select the project to be counted. Find an open-source systems project with multiple forks, because multiple forks usually mean that multiple people participate and that the project has more pull requests.
- Identify defective code fragments manually. Find closed pull requests that have been merged into master by code reviewers. Determine whether there are bugs based on the code changes.
- Identify the level of nesting in the flow-break structures. Convert the source code to a Control Flow Graph (CFG) (Ball & Horwitz, 1993), and perform loop analysis to get the loop nesting tree (Ramalingam, 2000). Get the nesting level according to the depth of the tree, and get the number of nested structures with different nesting levels according to the width of the tree.
- Assign labels to errors. According to the level of nesting in which the bug exists, assign the corresponding label to the error (e.g., if there is an error in a code fragment with 2-level nesting of If statements, the error is labeled level-2).
- Count the number of nested structures with errors for each label.

To explain how to identify errors and assign labels, we take defects in the ONNX project on GitHub (footnote 1) as an example, as shown in Fig. 3. In the red rows of the figure, the code with a minus sign in front of it is the erroneous code. In the corresponding green rows, the code with a plus sign in front of it is the corrected code. It can be seen that there is an “if” statement nested in the for loop, so the error exists in 1-level nesting and the label assigned to it is “level-1”.
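The nesting-level step can be approximated on an abstract syntax tree instead of a loop nesting tree. The following is a minimal sketch, not the procedure used in the study: it assumes Python source, treats only `for`, `while` and `if` as flow-break structures, and counts how many such structures occur at each nesting level (a structure directly inside one other structure is level 1, as in the ONNX example). The name `count_nesting_levels` and the sample program are hypothetical.

```python
import ast
from collections import Counter

# Assumption: only these Python constructs count as flow-break structures.
FLOW_BREAK = (ast.For, ast.While, ast.If)


def count_nesting_levels(source):
    """Return a Counter mapping nesting level i (>= 1) to the number of
    flow-break structures found at that level."""
    counts = Counter()

    def visit(node, depth):
        for child in ast.iter_child_nodes(node):
            if isinstance(child, FLOW_BREAK):
                if depth >= 1:  # depth 0 means "not nested in anything"
                    counts[depth] += 1
                visit(child, depth + 1)
            else:
                visit(child, depth)

    visit(ast.parse(source), 0)
    return counts


SAMPLE = """
for row in rows:
    if row:
        while row:
            row = row[1:]
    if not row:
        pass
"""

levels = count_nesting_levels(SAMPLE)
print(dict(levels))
```

One caveat of this simplification: Python represents `elif` as an `if` inside the `else` branch, so the sketch counts it one level deeper than a reader might expect.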

3.1.2 Calculating the probability of errors in nested structures

The number of structures with each nesting level in the project, obtained by statistics, is denoted as \(t_i\). That is, the number of 1-level nesting structures is represented by \(t_1\), the number of 2-level nesting structures by \(t_2\),..., and the number of n-level nesting structures by \(t_n\). Then the number of bugs for each label is counted and denoted as \(d_i\): the number of bugs labeled level-1 is \(d_1\), the number of bugs labeled level-2 is \(d_2\),..., and the number of bugs labeled level-n is \(d_n\). The probability that a structure at each level of nesting contains bugs is denoted by \(P_i\) and follows a 0-1 distribution: \(P_i\) is the probability that an i-level nesting structure contains errors, and \(1 - P_i\) is the probability that it does not. The probability that 1-level nesting contains bugs is \(P_1\),..., and the probability that n-level nesting contains bugs is \(P_n\). The probability of errors in different nested structures can be computed as

\[P_i = \frac{d_i}{t_i}, \quad i = 1, \ldots, n.\]
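As a small illustration, the probability for each level can be taken as the ratio of labeled bugs \(d_i\) to counted structures \(t_i\). The sketch below assumes that reading of the definitions; the counts are hypothetical and were chosen only so that the resulting probabilities match the values reported in Section 4.1, and the function name is not from the paper.

```python
def error_probabilities(structures, bugs):
    """Estimate P_i = d_i / t_i for every nesting level that was observed.

    structures: {level: t_i}, number of structures at that nesting level
    bugs:       {level: d_i}, number of bugs labeled with that level
    """
    return {
        level: bugs.get(level, 0) / count
        for level, count in structures.items()
        if count > 0  # a level with no structures yields no estimate
    }


# Hypothetical counts (illustration only, not data from the study).
t = {1: 200, 3: 50, 4: 200}
d = {1: 10, 3: 12, 4: 71}

P = error_probabilities(t, d)
print(P)
```

Levels that never occur in the data set (level 2 here) simply have no entry, which is the gap the regression step is meant to fill.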

3.1.3 Regression analysis on probability

To facilitate understanding, use, and comparison with existing complexity metrics, we normalize the statistically obtained probabilities to obtain the nesting increments (\(I_{nesting}\)) for different nesting levels. Specifically, the probability \(P_1\) of 1-level nesting is used as the reference standard, and every probability is divided by this value to obtain the nesting increment of the corresponding nesting level (including \(P_1\) divided by itself, which gives 1):

\[I_{nesting}(i) = \frac{P_i}{P_1}.\]

Then we obtain a function \(I_{nesting}(x)\) that fits these points by regression analysis (Sykes, 1993), which gives the relationship between the nesting level and the increment \(I_{nesting}\) for a nested structure. For convenience, the value of \(I_{nesting}(x)\) is rounded to one digit after the decimal point. As a result, even if the data for some nesting level is missing in our statistical process (for example, there is no 3-level nesting in the data set), we can still infer the increment for that nesting level from the relationship represented by the function.
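The normalization and fitting steps can be sketched as follows, using the probabilities reported later in Section 4.1 (5%, 24% and 35.5% for levels 1, 3 and 4). The sketch assumes an ordinary least-squares line, which is one possible choice of regression and not necessarily the procedure used in the study; all helper names are hypothetical. With these three points, ordinary least squares gives a slope of about 2.01 and an intercept of about -1.07, close to but not identical with the coefficients reported in Section 4.1.

```python
def normalize(probabilities):
    """Divide every probability by P_1 so that level 1 has increment 1."""
    base = probabilities[1]
    return {level: p / base for level, p in probabilities.items()}


def fit_line(points):
    """Ordinary least-squares fit y = slope * x + intercept (assumed model)."""
    xs = list(points)
    ys = [points[x] for x in xs]
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


# Probabilities reported in Section 4.1 (no 2-level nesting in the data).
probabilities = {1: 0.05, 3: 0.24, 4: 0.355}
increments = normalize(probabilities)
slope, intercept = fit_line(increments)


def nesting_increment(level):
    """Fitted increment, rounded to one digit after the decimal point."""
    return round(slope * level + intercept, 1)


print(increments, slope, intercept, nesting_increment(2))
```

The fitted line is what allows an increment to be inferred for the level that has no data.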

Another guiding principle of the Risk Number formula is that structures that break the normal linear flow of code demand extra effort from the people involved in reading, understanding, testing, and maintaining them. For this reason, Risk Number assesses a judgment increment for each judgment node in the CFG: for, while, do while, if, #if, #ifdef, else if, elif, catch, ternary operators, logical operators, case, and so on. In particular, for a switch, all its cases together cause a single judgment increment. Taking the QuickSortion program written in Java as an example, the two increments and the Risk Number are listed in Listing 2.
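To make the two increments concrete, the sketch below assigns a Risk Number to each line of a small Python fragment. It is an interpretation under stated assumptions, not the authors' tool: the Python node types stand in for the judgment nodes listed above, the nesting increment is only added to `for`, `while` and `if`, an un-nested structure gets no nesting increment, and the coefficients 2.01 and -1.05 are the rounded values from Section 4.1. The names `risk_numbers` and `nesting_increment` are hypothetical.

```python
import ast

# Assumed Python analogues of the judgment nodes (each adds 1).
JUDGMENT_NODES = (ast.For, ast.While, ast.If, ast.IfExp, ast.BoolOp,
                  ast.ExceptHandler)
# Assumed structures that open a new nesting level.
NESTING_NODES = (ast.For, ast.While, ast.If)


def nesting_increment(level):
    """Fitted increment from Section 4.1; zero when the node is not nested."""
    return round(2.01 * level - 1.05, 1) if level >= 1 else 0.0


def risk_numbers(source):
    """Map line number -> Risk Number (judgment + nesting increments)."""
    risks = {}

    def visit(node, level):
        for child in ast.iter_child_nodes(node):
            if isinstance(child, JUDGMENT_NODES):
                line = child.lineno
                risks[line] = risks.get(line, 0.0) + 1.0  # judgment increment
                if isinstance(child, NESTING_NODES):
                    risks[line] += nesting_increment(level)
                    visit(child, level + 1)
                    continue
            visit(child, level)

    visit(ast.parse(source), 0)
    return risks


SAMPLE = """\
total = 0
for x in data:
    if x > 0 and x < 10:
        total += x
"""

per_line = risk_numbers(SAMPLE)
fragment_risk = sum(per_line.values())
print(per_line, fragment_risk)
```

In this fragment the `for` on line 2 receives only its judgment increment, while line 3 accumulates the judgment increments of the `if` and of the logical operator plus the 1-level nesting increment. Lines without an entry have a Risk Number of zero.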

3.2 Code Inspection Diagram

The Risk Number can highlight code fragments with high risk. Therefore, to implement HMPI, the next issue to address is how to guide developers in inspecting the code they have written. In this subsection, we discuss how a high-risk program structure can be translated into a Code Inspection Diagram. Our goal is to guide programmers to inspect the code by listing questions about the code fragment.

A Code Inspection Diagram (CID) is a structure used to represent the checklist to be inspected by reviewers. A CID is composed of inspection module and inspection flow, where the inspection module includes statement information and checklist represented by rectangles, and the inspection flow is represented by an arrow. For the sake of simplicity, module is used to refer to inspection module in the following text. Each module consists of 2 to 3 rectangles, depending on whether the Risk Number of the statement is the largest. Since program slicing will be performed on the most risky statements resulting in slices, a rectangle representing the slice information will be added in the middle, see a detailed discussion in Section 3.3. The modules for other statements only have 2 rectangles. The rectangle on the left (and in the middle) represents statement information, which is a three-layer structure. The first layer represents the Risk Number and line number, the second layer is the abbreviation of the code, and the third layer displays the structural category of the code. The rectangle on the right shows various issues in the checklist used in the traditional code inspection process, reflecting possible errors in this code.

The main idea of module arrangement rules is that the higher the Risk Number, the higher the priority. If the Risk Numbers are the same, the source code order will be followed. Therefore, the module for the code with the highest Risk Number is placed in the first row, and the module for the code with the second highest Risk Number is placed in the second row, in descending order. Modules with the same Risk Number are arranged from left to right according to the source code order. Thus, the inspection flow is from top to bottom and from left to right. In other words, the reviewer inspects the code by answering questions in the CID according to this order.
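The arrangement rule amounts to a sort followed by a grouping. A minimal sketch, assuming the Risk Numbers are already available as a mapping from line number to value (the function name and the line numbers are hypothetical):

```python
from itertools import groupby


def cid_rows(risk_by_line):
    """Arrange statements into CID rows.

    Rows are ordered by descending Risk Number; within a row, statements
    keep their source order (ascending line number), left to right.
    """
    ordered = sorted(risk_by_line.items(), key=lambda kv: (-kv[1], kv[0]))
    return [
        [line for line, _ in group]
        for _, group in groupby(ordered, key=lambda kv: kv[1])
    ]


# Hypothetical Risk Numbers keyed by source line.
rows = cid_rows({3: 1, 5: 2, 8: 2, 10: 1, 12: 0})
print(rows)  # [[5, 8], [3, 10], [12]]
```

Reading the result row by row, and each row from left to right, gives the inspection flow described above.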

Since source code generally consists of three fundamental constructs (sequence, selection, and iteration), our discussion focuses on how to construct the CID for these three constructs. The modules given in this paper are not intended to cover all possible cases but to serve as examples that illustrate the essential principles of the construction.

Based on the top 25 most dangerous software weaknesses (footnote 2), we have set the following checking items for the sequential structure:

- Is the type in the declaration statement correct?
- Is the variable initialized properly?
- Is the function called correctly?
- Are there any defined but unused variables?

We set the following checking items for the selection structure:

- Is every logical expression (or condition) correct?
- Can the decision and conditions (logical expressions) of the if-then-else or if-then statement become true and false, respectively?

We set the following checking items for the iteration structure:

- Is the scope of the loop variable correct?
- Can the loop condition in a while or for statement become true and false, respectively? Is it written correctly?
- Is there an appropriate statement updating the loop variables inside the body of the loop statement (while, for)?

In addition to the above checking items, programmers can also add other checking items by themselves based on their frequently encountered problems.
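One way to hold these checking items, including programmer-defined additions, is a lookup table keyed by structure category. This is only a sketch of a possible representation: the dictionary layout, the function name and the extra item in the usage line are assumptions, while the item texts are the ones listed above.

```python
# Checking items per structure category, as listed in this subsection.
CHECKLISTS = {
    "sequence": [
        "Is the type in the declaration statement correct?",
        "Is the variable initialized properly?",
        "Is the function called correctly?",
        "Are there any defined but unused variables?",
    ],
    "selection": [
        "Is every logical expression (or condition) correct?",
        "Can the decision and conditions become true and false, respectively?",
    ],
    "iteration": [
        "Is the scope of the loop variable correct?",
        "Can the loop condition become true and false, respectively? "
        "Is it written correctly?",
        "Is there an appropriate statement updating the loop variables "
        "inside the loop body?",
    ],
}


def checking_items(structure, extra=()):
    """Return the default items for a structure plus any custom items."""
    return CHECKLISTS[structure] + list(extra)


# Hypothetical custom item added by a programmer.
items = checking_items("iteration", ["Is the loop bound off by one?"])
print(len(items))
```

Returning a new list keeps the defaults unchanged when individual programmers add their own items.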

3.2.1 Module of sequence

The sequential structure covers the statements other than conditional and loop statements, such as assignment statements, expression statements, and function call statements. Pattern 1: a sequence statement is constructed into a module in the CID as shown in Fig. 4. According to the calculation rules of Risk Number, the Risk Number of a sequential statement may consist of a judgment increment or may be zero. From this, it can be inferred that sequential structures appear in the program slicing results of the statement with the highest Risk Number, in the “else” structure, and in the last module of the CID.

3.2.2 Module of selection

There are two kinds of selection statements: \(if\ C\ then\ S_1\) and \(if\ C\ then\ S_1\ else\ S_2\). Their modules in the CID are described in the two patterns below.

Pattern 2: Let the selection statement be \(if\ C\ then\ S_1\). The statement is then constructed into a module in the CID as shown in Fig. 5. When the Risk Number of a statement is the maximum value in the CID, program slicing is performed on it. The obtained slice is basically composed of sequential statements, and its code information is placed in the middle rectangle, as shown by the blue rectangles in Fig. 5. Otherwise, no program slicing is done and the module consists of just two rectangles.

Pattern 3: Let the selection statement be \(if\ C\ then\ S_1\ else\ S_2\). The statement is then constructed into modules in the CID as shown in Fig. 6. This pattern consists of two modules, one built for \(if\ C\ then\ S_1\) and the other for \(else\ S_2\). The former is the same as in Pattern 2 and is not described again, so only the latter module is shown in Fig. 6. The module for \(else\ S_2\) retains only the statements in the else body, or the statements from the else up to the statement that causes a break in the linear flow of the code (if any). It follows that only sequential statements are retained in the module for \(else\ S_2\), so its checking items are the same as those of sequential statements. Note that, depending on their Risk Numbers, the modules for \(if\ C\ then\ S_1\) and for \(else\ S_2\) need not be adjacent in the CID.

3.2.3 Module of iterations

We focus our discussion on three iteration statements: \(while-do\), \(do-while\), and for.

Pattern 4: Let \(while\ C\ do\ S\) be a \(while-do\) statement, let \(do\ S\ while\ C\) be a \(do-while\) statement, and let \(for(int\ i = 0;\ C;\ S_1)\ S\) be a for iteration statement. There are two ways to construct such an iteration into a CID. If statement S implements a simple function, the whole \(while-do\), \(do-while\), or for statement can be translated into a single module, as shown in Fig. 7. However, if S implements a rather large or complex function, it is translated into a high-level process together with its decomposition based on Risk Number; the decomposition consists of further high-level modules that are themselves composed of these four patterns.

The four patterns discussed above have demonstrated how each kind of program structure or statement can be converted to a CID showing how programmers can perform code inspections. In the next subsection, we will elaborate on how to utilise Risk Number and CID to improve inspection process.

3.3 Code inspection based on Risk Number and CID

Based on the Risk Number calculation and the CID described above, we propose the novel HMPI method, which is also performed by two roles, namely machine and programmer. The machine is responsible for performing the following steps:

- Assign the increment to the code fragments to be inspected, line by line.
- Find the statement s with the maximum increment, and extract all the variables \(v_i\) contained in it.
- Utilize \(\{\langle s, v_1\rangle ,\cdots ,\langle s, v_n\rangle \}\) as the simultaneous slicing criterion, where \(\langle s,v_i\rangle ,i\le n\) is a static slicing criterion. In other words, perform the simultaneous slice calculation on the variable set \(\{v_1, v_2,...v_n\}\) in the statement s. If there are multiple statements with the same maximum Risk Number, perform the simultaneous slice calculation on all of them.
- Translate the code fragments and slices (if any) into a CID composed of statement information and checklist.

The human is a developer who changes the source code and inspects it by answering the questions listed in the CID from top to bottom and from left to right.
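The slicing step on the machine side can be illustrated with a deliberately simplified model. The sketch below assumes straight-line code and data dependences only (no control dependences, loops or aliasing), takes the variables read by the statement as the \(v_i\), and forms the simultaneous slice as the union of the static backward slices for all criteria \(\langle s, v_i\rangle\). The `Stmt` type, the helper names and the five-statement program are hypothetical; a real implementation would work on a dependence graph.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Stmt:
    line: int
    defs: frozenset  # variables written by the statement
    uses: frozenset  # variables read by the statement


def stmt(line, defs, uses):
    return Stmt(line, frozenset(defs), frozenset(uses))


def backward_slice(stmts, line, var):
    """Static backward slice for criterion <line, var> (data deps only)."""
    relevant = {var}
    sliced = set()
    earlier = sorted((s for s in stmts if s.line < line),
                     key=lambda s: s.line, reverse=True)
    for s in earlier:
        if s.defs & relevant:
            sliced.add(s.line)
            # The defined variables are resolved; their inputs become relevant.
            relevant = (relevant - s.defs) | s.uses
    return sliced


def simultaneous_slice(stmts, line):
    """Union of the slices for every variable read at the given statement."""
    target = next(s for s in stmts if s.line == line)
    result = {line}
    for var in target.uses:
        result |= backward_slice(stmts, line, var)
    return result


# Hypothetical fragment: line 5 plays the statement with the maximum risk.
PROGRAM = [
    stmt(1, {"a"}, set()),       # a = read_input()
    stmt(2, {"b"}, set()),       # b = 5
    stmt(3, {"c"}, {"a"}),       # c = a + 1
    stmt(4, {"d"}, set()),       # d = 7
    stmt(5, set(), {"c", "b"}),  # if c > b: ...
]

print(sorted(simultaneous_slice(PROGRAM, 5)))  # [1, 2, 3, 5]
```

Line 4 drops out of the slice because nothing read at line 5 depends on `d`, which is how slicing narrows the checklist to the high-risk statement and the statements it depends on.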

Figure 8 shows the process of our novel HMPI method, which mainly consists of two phases: Risk Number Calculation and CID Translation, where the former consists of tasks to draw the programmer’s attention to high-risk code, while the latter is the phase when the guidance is provided for inspection.

In the first phase, Risk Number Calculation, the Risk Number of the source code is calculated. The source code being written by the programmer is converted into a CFG. Then each statement is assigned a Risk Number based on the historical bug dataset.

In the second phase, CID Translation, the statement with the maximum Risk Number is simultaneously sliced to obtain the high-risk code fragment. The code is then converted to a CID, the programmer conducts the inspection according to the checklist in the CID, and finally the programmer continues programming. The two phases are repeated in this order as programming proceeds.

We use a part of a user verification and data processing system implemented in C language that contains errors as an example to illustrate how the general principle of our inspection method works for practical operations.

By applying our method, we have assigned the Risk Numbers for the code fragment as shown in Listing 3.

Figure 9 shows the CID for the example. It can be seen from the figure that the Risk Numbers in the first row are the highest, both equal to 2, and that the values then decrease row by row. The Risk Numbers in the second row are all equal to 1, and the Risk Number in the third row is 0. After slicing the two most risky statements, the first module gets the code information shown in the blue rectangle, and the second module has no remaining code after slicing. The programmer performs the inspection by answering the questions in the orange rectangles, following the flow of the CID marked by arrows. He or she will then find that the code contains four potential risks that may lead to vulnerabilities, namely hard-coded values, SQL injection, array out-of-bounds access, and integer overflow.

4 Case study

In this section we report the experimental campaign we performed to evaluate the effectiveness and efficiency of the proposed Risk Number metric and CID in inspection. We assess whether the proposed inspection strategy can assist programmers in practice through the detected error rate and inspection time. We use part of a stock reservation and purchase system implemented in Java as an example. Although we use Java in our examples, the principle of our inspection method is language-independent. We first discuss the calculation of Risk Number, and then we discuss the experimental results.

4.1 Calculating the Risk Number

After statistics and calculations, the probabilities of 1-level, 3-level and 4-level nesting are obtained, which are 5%, 24% and 35.5% respectively (there is no 2-level nested structure in the project). Next, the probabilities are normalized to obtain the nesting increments of the different levels of nesting. The increment obtained for 1-level nesting is 1, for 3-level nesting 4.8, and for 4-level nesting 7.1, as shown in Fig. 10.

Then we get a function to fit these points by regression analysis, as shown in Fig. 11.

The resulting function has a weight of 2.01 and a bias of -1.04999999. Therefore, the relationship between nesting level i and increment \(I_{nesting}\) for a nested structure in Risk Number is

\[I_{nesting}(i) = 2.01\,i - 1.05.\]

By substituting \(i=2\) into this formula, it can be calculated that the 2-level increment is about 3, which is represented by the blue dot in Fig. 11. The corresponding probability is 15%. Table 1 lists the nesting level, its probability, its nesting increment, and its Cognitive Complexity. It can be observed from Table 1 that the weight of our method is higher than Cognitive Complexity, which can better reflect the difficulty of understanding and maintaining the code due to the increase in nesting levels.
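Written out with the bias rounded to -1.05, and assuming the probability for the missing level is recovered by reversing the division by \(P_1\) from Section 3.1.3, the two figures above follow as

\[I_{nesting}(2) = 2.01 \times 2 - 1.05 = 2.97 \approx 3.0, \qquad P_2 \approx I_{nesting}(2) \times P_1 = 3.0 \times 5\% = 15\%.\]

For comparison, the same function gives \(I_{nesting}(1) = 0.96 \approx 1.0\), which agrees with the normalized increment of 1 for 1-level nesting.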

4.2 Experimental results

We invited 12 students from our university to take part in an experiment comparing the proposed method with our previous HMPI methods. For simplicity, the method proposed in this paper is referred to as RNCID-HMPI, the previous HMPI based on Cognitive Complexity as CCHMPI, and the HMPI based on Risk Number without CID as RNHMPI. All three methods were applied to the Human-Machine Pair Inspection of part of the stock system, and the results are analyzed and compared in terms of efficiency and effectiveness. Many existing toolkits (such as the ‘ast’ package in Python) can support identifying the nested structure in the CFG to help calculate the Risk Numbers, and several existing tools (such as SimSlice for simultaneous slicing on Java code) can support simultaneous slicing. However, the tools to be installed on the machine for the entire RNCID-HMPI process are still under development, so the 12 participants were divided into groups of two. One member is responsible for programming and inspection, while the other calculates the Risk Numbers and provides the CID after program slicing each time the partner completes the programming of a function (these subjects are recorded as Group1-Group6). After completing their respective tasks, the members switch roles and conduct the same experiment again (these subjects are recorded as Group1’-Group6’). Groups 1 and 2 used the RNCID-HMPI method, Groups 3 and 4 used the RNHMPI method, and Groups 5 and 6 used CCHMPI. The subjects are students of the same university, and it can be assumed that these 12 students have comparable programming ability. Each programming and inspection session was strictly controlled to ensure that the students worked independently.

The stock reservation and purchase system is required to provide the following functions: customer registration, cancel a customer registration, stock registration, cancel a stock information, remind the customer of a specific stock information, purchase a stock by a customer, sell a stock by a customer. Each group has the same task, which is to implement this system. The HMPI method they used, the number of errors inspected out according to the checklist, the total number of errors actually generated throughout the implementation, the detected error rate obtained by dividing the number of errors detected according to the checklist by the total number of errors and the time spent on inspection are all recorded in Table 2.

Comparing the number of errors detected under checklist guidance with the total number of errors in Table 2 shows that RNCID-HMPI is more effective at pointing out code that may contain errors. Specifically, dividing the number of errors detected according to the checklist by the total number of errors actually made shows that the checklist generated by RNCID-HMPI led to at least 75% of the errors being detected, whereas the rate for RNHMPI is around 60% and that for CCHMPI does not exceed 50%. The average detected error rates of RNCID-HMPI, RNHMPI, and CCHMPI are 77.85%, 64.79%, and 46.6%, respectively. We conducted a significance analysis comparing the detected error rates obtained by RNCID-HMPI with those obtained by RNHMPI and by CCHMPI. The computed p-values are approximately 0.000495 and 0.00000104, respectively, both below the significance level of 0.05. This indicates that the detected error rate of RNCID-HMPI differs significantly from that of each of the other two methods. It also suggests that the proposed method better reflects the impact of nested structures on software quality and directs the programmer’s attention more accurately. The groups using RNCID-HMPI also took less inspection time than the groups using the other methods, probably because of the reasonable inspection order given by the CID.
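The detected error rate used above is a simple ratio, and the following sketch shows how it and a per-method average could be computed. The function names are hypothetical, and the counts in the example are invented purely for illustration; they are not the values reported in Table 2.

```python
def detected_error_rate(detected_by_checklist, total_errors):
    """Percentage of all errors that were found by following the checklist."""
    if total_errors <= 0:
        raise ValueError("total_errors must be positive")
    if not 0 <= detected_by_checklist <= total_errors:
        raise ValueError("detected_by_checklist must lie between 0 and total_errors")
    return 100.0 * detected_by_checklist / total_errors


def average_rate(rates):
    """Arithmetic mean of a non-empty list of detected error rates."""
    return sum(rates) / len(rates)


# Hypothetical (detected, total) pairs for two groups using the same method.
# These numbers are NOT taken from Table 2.
example_groups = [(3, 4), (1, 2)]
example_rates = [detected_error_rate(d, t) for d, t in example_groups]
print(example_rates, average_rate(example_rates))
```

Averaging the per-group rates, as done here, weights every group equally regardless of how many errors it made; pooling all detected and total errors before dividing would be a different design choice and can give a different figure.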

4.3 Threats to validity

The proposed Risk Number is derived from statistical data, which allows it to reflect the actual situation more accurately. However, limited or inaccurate data can compromise the precision of high-risk code identification and thereby affect the construction of the CID.

HMPI harnesses the potential of both machines and programmers, albeit with a heavy reliance on programmer involvement. While we minimized variations in knowledge background and programming ability among participants in the experiment, real-world scenarios involve inherent differences among programmers. Therefore, the assistance provided by machine guidance to programmers will inevitably vary across individuals.

We present a framework for machine-guided programmer inspection that offers programmers checklists in the CID. However, the concerns raised in the CID are based on the code structure and may not be precise for every line of code. Further judgment by the programmer is required during the inspection process.

5 Related work

Code inspection

Since Fagan pointed out in his theoretical work (Fagan, 2002) that formal design and code inspections and face-to-face meetings reduced the number of errors detected during the testing phase in small development teams, many researchers have studied inspection. Early inspection research focused on variations of the inspection process (Ackerman et al., 1989; Aurum et al., 2002; Kollanus & Koskinen, 2009) and on in-process details such as reading techniques (Basili et al., 1996; Shull et al., 2000) and effectiveness factors (Biffl et al., 2001; Sauer et al., 2000). A commonly used method to guide inspection is checklist-based inspection (Thelin et al., 2003), which prompts reviewers to look for more than they otherwise might and so increases the number of bugs a reviewer finds. The aim of each reviewer is to find the maximum number of potential defects by answering all the questions in the checklist. However, a list format is less eye-catching than a diagram.

Due to the adoption of agile methods and distributed software development, code inspections are currently done in a less formal way than in the past, improving the efficiency of their early forms. The lightweight variant of code inspection has been referred to as modern code review (MCR) (Bacchelli & Bird, 2013), which is a flexible, tool-based, and asynchronous process. The general idea of MCR is that reviewers (who may be other developers) other than the author of the code (i.e., the programmer) evaluate code changes to identify defects and quality issues, and decide whether to discard or integrate the changes into the main project repository. Nowadays, most of the existing inspection techniques (Islam et al., 2022; Sultana et al., 2023; Tufano et al., 2022) focus on the support of the peer review approach such as MCR. But understanding code changes, their purpose, and motivations has always been the main challenge faced by reviewers when reviewing code changes (Davila & Nunes, 2021), which reduces the effectiveness of the methodologies, especially for complex systems.

There is no doubt that programmers comprehend their own code most accurately. In general, the reviewer in a peer review process does not understand the details of the code, while the programmer tends to be overconfident when inspecting code that he or she has written; both are likely to be hurdles for bug finding. Our HMPI (Dai & Liu, 2021) exploits the strengths of the machine by using it to intelligently guide the programmer in inspecting the program code during program construction. After further improving the accuracy of pointing out risky code and the efficiency of inspection (Dai et al., 2023), we provide in this paper a framework for visualizing the inspection process to guide inspections.

Recently, a number of approaches have leveraged Generative Pre-Trained Transformer (GPT) models for code inspection (Crandall et al., 2023; Georgsen, 2023; Szabó & Bilicki, 2023). Unlike our approach, these studies primarily focus on inspecting complete code rather than aiding programmers in inspecting code while programming. Moreover, improving the accuracy of GPT tools in code inspection and overcoming the reliability problems inherent to GPT models remain unresolved.

Software metric

Many software metrics have been proposed and used to assist software inspection by locating possible faults. Since the inspection method we conceived has the machine locate possible faults during the programming process, the more suitable software metrics to assist this localization are Cyclomatic Complexity (McCabe, 1976) and Cognitive Complexity (Campbell, 2018). While Cyclomatic Complexity accurately measures testability, most developers agree that it fails to measure understandability, which is also crucial for software quality. Although Cognitive Complexity is a metric designed specifically to measure understandability, it is a heuristic and simplistic approach to decision counting and does not accurately indicate the relationship between complex nested structures and fault probability.
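The difference between pure decision counting and nesting-aware counting can be made concrete with a small sketch. The two functions below are deliberately simplified stand-ins, not the full definitions of Cyclomatic Complexity or Cognitive Complexity: the first counts branching statements plus one, and the second charges each branching statement one plus its nesting level, which is a fixed heuristic increment. All names and the two sample functions are assumptions made for illustration.

```python
import ast

# Branching statements considered in this simplified sketch (an assumption).
BRANCH_NODES = (ast.If, ast.For, ast.While)


def decision_count_complexity(tree):
    """Cyclomatic-style score: 1 + number of branching statements (simplified)."""
    return 1 + sum(isinstance(n, BRANCH_NODES) for n in ast.walk(tree))


def nesting_weighted_complexity(node, level=0):
    """Cognitive-style score: each branch costs 1 + its nesting level (simplified)."""
    score = 0
    for child in ast.iter_child_nodes(node):
        if isinstance(child, BRANCH_NODES):
            score += 1 + level
            score += nesting_weighted_complexity(child, level + 1)
        else:
            score += nesting_weighted_complexity(child, level)
    return score


# Two hypothetical functions with the same number of decisions
# but different nesting.
FLAT = '''
def flat(a, b, c):
    if a:
        pass
    if b:
        pass
    if c:
        pass
'''

NESTED = '''
def nested(a, b, c):
    if a:
        if b:
            if c:
                pass
'''

flat_tree, nested_tree = ast.parse(FLAT), ast.parse(NESTED)
flat_scores = (decision_count_complexity(flat_tree),
               nesting_weighted_complexity(flat_tree))
nested_scores = (decision_count_complexity(nested_tree),
                 nesting_weighted_complexity(nested_tree))
print(flat_scores, nested_scores)
```

In this sketch the decision count cannot tell the two functions apart, while the nesting-weighted score can, but only through a fixed increment of one per level. Whether that increment matches the actual relationship between nesting and fault probability is exactly the question raised above.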

6 Conclusion

In this work, we have described a novel software quality metric derived from statistics, named Risk Number, and used it together with program slicing to construct a Code Inspection Diagram that improves the efficiency of HMPI. We have pointed out shortcomings of Cognitive Complexity and enhanced our previous work by collecting statistics from real programs and applying regression analysis to obtain new nesting increments. In addition, we have explained how to use Risk Number and program slicing to point out the statements that need to be inspected, and how to construct a suitable Code Inspection Diagram to guide the inspection. Furthermore, we conducted a case study to illustrate Risk Number and CID, which shows that our method is likely to be effective and efficient in detecting defects.

Several questions and areas could be investigated more carefully in the future, including making the process more automatic, incorporating more error-causing factors into the Risk Number, making full use of historical code data, and providing targeted inspection diagrams.

Data availability

No datasets were generated or analysed during the current study.

References

Ackerman, A. F., Buchwald, L. S., & Lewski, F. H. (1989). Software inspections: an effective verification process. IEEE Software, 6(3), 31–36.

Assal, H., & Chiasson, S. (2019). ‘Think secure from the beginning’: A survey with software developers. In: Brewster, S. A., Fitzpatrick, G., Cox, A. L., & Kostakos, V. (eds.) Proceedings of the 2019 CHI Conference on Human Factors in Computing Systems, CHI 2019, Glasgow, Scotland, UK, May 04-09, 2019, p. 289.

Aurum, A., Petersson, H., & Wohlin, C. (2002). State-of-the-art: Software inspections after 25 years. Software Testing, Verification and Reliability, 12(3), 133–154.

Bacchelli, A., & Bird, C. (2013). Expectations, outcomes, and challenges of modern code review. In: 2013 35th International Conference on Software Engineering (ICSE), IEEE, pp. 712–721.

Ball, T., & Horwitz, S. (1993). Slicing programs with arbitrary control-flow. In: International Workshop on Automated and Algorithmic Debugging, Springer, pp. 206–222.

Barke, S., James, M. B., & Polikarpova, N. (2023). Grounded copilot: How programmers interact with code-generating models. Proceedings of the ACM on Programming Languages, 7(OOPSLA1), 85–111.

Basili, V. R., Green, S., Laitenberger, O., Lanubile, F., Shull, F., Sørumgård, S., & Zelkowitz, M. V. (1996). The empirical investigation of perspective-based reading. Empirical Software Engineering, 1(2), 133–164.

Biffl, S., Freimut, B., & Laitenberger, O. (2001). Investigating the cost-effectiveness of reinspections in software development. In: Proceedings of the 23rd International Conference on Software Engineering. ICSE 2001, IEEE, pp. 155–164.

Campbell, G. A. (2018). Cognitive complexity: An overview and evaluation. In: Proceedings of the 2018 International Conference on Technical Debt, pp. 57–58.

Crandall, A. S., Sprint, G., & Fischer, B. (2023). Generative pre-trained transformer (gpt) models as a code review feedback tool in computer science programs. Journal of Computing Sciences in Colleges, 39(1), 38–47.

Dai, Y., & Liu, S. (2021). Applying cognitive complexity to checklist-based human-machine pair inspection. In: 2021 IEEE 21st International Conference on Software Quality, Reliability and Security Companion (QRS-C), IEEE, pp. 314–318.

Dai, Y., Liu, S., Xu, G., & Liu, A. (2023). Utilizing risk number and program slicing to improve human-machine pair inspection. In: 2023 27th International Conference on Engineering of Complex Computer Systems (ICECCS), IEEE, pp. 108–115.

Danicic, S., & Harman, M. (1996). A simultaneous slicing theory and derived program slicer. In: 4th RIMS Workshop in Computing.

Davila, N., & Nunes, I. (2021). A systematic literature review and taxonomy of modern code review. Journal of Systems and Software, 177, 110951.

Fagan, M. (2002). Design and code inspections to reduce errors in program development. In: Software Pioneers, Springer, pp. 575–607.

Georgsen, R. E. (2023). Beyond code assistance with gpt-4: Leveraging github copilot and chatgpt for peer review in vse engineering. In: Norsk IKT-konferanse for Forskning Og Utdanning.

Gregory, F. (1993). Software formal inspections standard. Technical Report NASA-STD-2202-93, NASA Office of Safety and Mission Assurance, Washington, DC, USA.

Islam, K., Ahmed, T., Shahriyar, R., Iqbal, A., & Uddin, G. (2022). Early prediction for merged vs abandoned code changes in modern code reviews. Information and Software Technology, 142, 106756.

Kollanus, S., & Koskinen, J. (2009). Survey of software inspection research. The Open Software Engineering Journal, 3(1).

Liu, S. (2018). Software construction monitoring and predicting for human-machine pair programming. In: International Workshop on Structured Object-Oriented Formal Language and Method, Springer, pp. 3–20.

Liu, S., Chen, Y., Nagoya, F., & McDermid, J. A. (2011). Formal specification-based inspection for verification of programs. IEEE Transactions on Software Engineering, 38(5), 1100–1122.

McCabe, T. J. (1976). A complexity measure. IEEE Transactions on Software Engineering, SE-2(4), 308–320.

Mohanani, R., Salman, I., Turhan, B., Rodríguez, P., & Ralph, P. (2018). Cognitive biases in software engineering: A systematic mapping study. IEEE Transactions on Software Engineering, 46(12), 1318–1339.

Parnas, D. L., & Lawford, M. (2003). The role of inspection in software quality assurance. IEEE Transactions on Software Engineering, 29(8), 674–676.

Ramalingam, G. (2000). On loops, dominators, and dominance frontier. ACM SIGPLAN Notices, 35(5), 233–241.

Ruangwan, S., Thongtanunam, P., Ihara, A., & Matsumoto, K. (2019). The impact of human factors on the participation decision of reviewers in modern code review. Empirical Software Engineering, 24, 973–1016.

Sauer, C., Jeffery, D. R., Land, L., & Yetton, P. (2000). The effectiveness of software development technical reviews: A behaviorally motivated program of research. IEEE Transactions on Software Engineering, 26(1), 1–14.

Shull, F., Lanubile, F., & Basili, V. R. (2000). Investigating reading techniques for object-oriented framework learning. IEEE Transactions on Software Engineering, 26(11), 1101–1118.

Sultana, S., Turzo, A. K., & Bosu, A. (2023). Code reviews in open source projects: How do gender biases affect participation and outcomes? Empirical Software Engineering, 28(4), 92.

Sykes, A. O. (1993). An introduction to regression analysis. Coase-Sandor Institute for Law & Economics Working Paper No. 20.

Szabó, Z., & Bilicki, V. (2023). A new approach to web application security: Utilizing gpt language models for source code inspection. Future Internet, 15(10), 326.

Thelin, T., Runeson, P., & Wohlin, C. (2003). An experimental comparison of usage-based and checklist-based reading. IEEE Transactions on Software Engineering, 29(8), 687–704.

Thongtanunam, P., Tantithamthavorn, C., Kula, R. G., Yoshida, N., Iida, H., & Matsumoto, K.-I. (2015). Who should review my code? A file location-based code-reviewer recommendation approach for modern code review. In: 2015 IEEE 22nd International Conference on Software Analysis, Evolution, and Reengineering (SANER), IEEE, pp. 141–150.

Tufano, R., Masiero, S., Mastropaolo, A., Pascarella, L., Poshyvanyk, D., & Bavota, G. (2022). Using pre-trained models to boost code review automation. In: Proceedings of the 44th International Conference on Software Engineering, pp. 2291–2302.

Funding

Open Access funding provided by Hiroshima University. This work is supported in part by the National Key R&D Program of China under No.2023YFB2703800, the National Science Foundation of China under Grants U22B2027, the China Guangxi Science and Technology Plan Project under Grant AD23026096, and Hainan Provincial Natural Science Foundation of China under Grant 622RC616.

Author information

Contributions

Shaoying Liu and Guangquan Xu designed the research and supervised the entire research process. Yujun Dai has presented the research idea, implemented it, and wrote the first draft of the manuscript. All authors commented on previous versions of the manuscript. All authors read and approved the final manuscript.

Ethics declarations

Competing interest

The authors declare no competing interests.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Dai, Y., Liu, S. & Xu, G. Enhancing human-machine pair inspection with risk number and code inspection diagram. Software Qual J (2024). https://doi.org/10.1007/s11219-024-09674-4
