Abstract

The Van Allen Probes mission operations followed a distributed model in which operational responsibility was divided between the Mission Operations Center (MOC) and separate instrument-specific SOCs. The sole MOC handled all aspects of telemetering and receiving tasks as well as certain scientifically relevant ancillary tasks. Each instrument science team developed its own instrument-specific SOC with unique capabilities in support of science data acquisition, data processing, instrument performance, and tools for the instrument team scientists. In parallel, project scientists took on the task of providing a significant modeling tool base usable by the instrument science teams and the larger scientific community. With a mission as complex as Van Allen Probes, scientific inquiry relied on constant and significant collaboration among the SOCs, in concert with the project science team. Planned cross-instrument coordinated observations resulted in critical discoveries during the seven-year mission. Instrument cross-calibration activities yielded a more seamless set of data products. Specific topics covered in this paper include post-launch changes and enhancements to the SOCs, coordination activities between the SOCs, SOC-specific analysis software, modeling software provided by the Van Allen Probes project, and lessons learned. One of the most significant lessons learned was the importance of the original decision to implement individual team SOCs providing timely and well-documented instrument data for the NASA Van Allen Probes Mission scientists and the larger magnetospheric and radiation belt scientific community.

1 Introduction

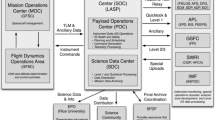

The Van Allen Probes Mission Science Operations Centers (SOCs) were designed to provide the highest level of science return in one of the most intense radiation environments in which an operational mission has flown around Earth. The success of the Van Allen Probes Mission (Fox and Burch 2014) is reflected by the simple fact that this mission has become the Gold Standard for NASA missions with regard to mission operations successes and scientific return. The new discoveries made by the research teams are too numerous to describe fully, but a simple measure of them can be gleaned by searching for scientific papers published using data from the Van Allen Probes instruments; the detailed metrics of the scientific success of the Van Allen Probes Mission can be found in the introduction by Ukhorskiy et al. (in prep). Science operations for this mission were broken into multiple levels that included command and control of the spacecraft and instruments, receipt of telemetry, and processing of telemetry into higher-level data products. The Mission Operations Center (MOC), as described in Kirby et al. (2013), managed communications between the ground segment and each spacecraft, handled spacecraft operations, and provided detailed ephemerides of the spacecraft for each of the instrument teams. The overall configuration of the Van Allen Probes operations was architected using a “bent-pipe” system in which the MOC handled all elements related to the spacecraft and the instrument SOCs handled all aspects of instrument operations.

The success of the extension of the standard satellite “bent-pipe” architecture for the data systems, coupled with the distribution of operational responsibilities between the central MOC and the instrument SOCs, cannot be overstated. This configuration provided the highest level of flexibility for the instrument teams, especially in situations where rapidly changing spacecraft and instrument conditions required a very fast response in order to capture the highest telemetry rates and best quality of data or, in some situations, to protect the health of the instrument. As telemetry was processed into higher-level data products, each team provided an instrument scientist and/or a data scientist to verify and validate the resulting data products. Because this responsibility was given to each instrument team and not to a centralized production center, the scientists involved in the production of each specific data product had a clearer understanding of the specifics of the instrumentation than might otherwise have been the case. The production software could also be modified quickly to handle changing flight configurations, rather than the teams submitting change order requests to a centralized production center to be implemented, tested, and verified.

With all of this in mind, this paper documents the updates to each of the instrument Science Operations Centers (SOCs), including changes, software development, and operations through the end of the mission. The following sections describe instrument configuration changes and other details under the following topical categories:

1) Post Launch Instrument and SOC Modifications
2) Science Coordination activities
3) Science Analysis Software
4) Science Gateway
5) Lessons Learned

It should be noted that in some instances the complexity of instrument operations and SOC operations was difficult to separate between the instrument papers of this journal and this paper. If the reader cannot find the desired information for which they are searching about a particular instrument, then they should also review the details provided in each of the specific instrument chapters of this journal.

2 Post Launch Instruments and SOC Modifications

The Van Allen Probes Mission started mission development with the announcement in May of 2006 of the selection of the Johns Hopkins Applied Physics Laboratory to build and operate the twin Radiation Belt Storm Probes spacecraft. The instrument selections were subsequently announced in early July of 2006. Preliminary mission design, Mission Phase B, occurred from instrument selection through 2008. The formal Mission Phases C and D occurred from January 2009 to launch. During Phase C/D, software design and development efforts were underway with the goal of supporting a launch sometime in 2012. The software design and development required the instrument teams to build as flexible a software system as possible for the instrument-specific targeted requirements. With the launch of the spacecraft in August of 2012, the instrument teams and SOC teams were required to shift into operations support regardless of the condition of the software systems. In many cases the primary software production systems for the higher-level data products were still in development, and in some cases still in design. Delay in development of the higher-level data products occurred in some instances because the teams needed to understand the instrument performance and have a reasonable understanding of the scientific capabilities before attempting to fully specify higher-level data products.

In this light it becomes understandable that changes were necessary to both instrument operations and SOC software to accommodate and adapt to the flight of the instruments in what is considered one of the most hostile environments for spacecraft operations in the solar system. The following subsections describe post-launch changes to SOC operations and/or software configuration as instrument performance and the radiation belt environments were better understood.

One specific project-wide decision that was made toward the end of the Critical Design Review stage of the instrument SOC development was the need for a production format that was easily utilized by the instrument teams and the outside scientists who would be using the various data products. The instrument teams selected the Common Data Format (CDF, see GSFC CDF Main Website). The criteria used by the teams included the following positive reasons for the selection:

1) CDF is a self-documenting data format containing data and metadata,
2) CDF stores data in a platform independent format (using the CDF software libraries),
3) CDF is widely used in the Heliophysics domain,
4) CDF is capable of easily handling multidimensional data (max is 10 dimensions),
5) CDF files can be compressed at the file level or variable by variable,
6) CDF data is accessible via the CDF Software Suite, which is distributed for many different programming languages and integrated into many commercial software programs such as L3HARRIS™ Corporation's Interactive Data Language or IDL© (IDL Main Website), MathWorks® analysis software MATLAB© (MATLAB Main Website), and Wolfram Research software Mathematica© (Mathematica Main Website), as well as Autoplot (Faden et al. 2010; Autoplot Main Website) and customized software programmed by the Van Allen Probes instrument teams for production and analysis of the data.

There are many other benefits that can be found at the Goddard Space Flight Center (GSFC) primary CDF website at CDF.GSFC.NASA.gov. When considering a data file format specification such as CDF, it is important to also consider the impact of the disadvantages in making the choice:

1) CDF files are stored as binary data. While the data in uncompressed CDF files can be read directly by user-developed software, in general proper access to the data is best done through the available CDF Software Suite, either directly integrated into a user’s program or accessed via commercial software.
2) CDF itself has the capability to store multidimensional data, but all of the data within the array must be of the same type. CDF does not have the capability to store “objects” or other records composed of a heterogeneous amalgamation of data with varying data types, such as one might find in a JSON or XML stream.
3) CDF itself has many software interface readers, but a CDF file cannot be read by other common software programs such as Microsoft Excel, which many data analysts find useful, especially for validation between various data records or for simple plotting without having to purchase a large-scale commercial software package such as IDL.

2.1 Energetic Particle, Composition, and Thermal Plasma Suite (ECT) (Spence et al. 2013)

HOPE Level 2 Processing Algorithms

HOPE data are affected by changes in on-board energy and angular bins, both over the course of the mission and within an orbit. These are described in Skoug et al. (in prep).

HOPE fluxes incorporate a time-varying efficiency correction. This algorithm, and other details of HOPE processing, are detailed in Voskresenskaya et al. (in prep). For each of the five sensor heads (pixels) and 72 energy channels, an absolute efficiency is calculated as (coincidences × coincidences)/(starts × stops) and normalized to January 2013, when the switch to the final 72-bin energy assignments on board occurred. This value is calculated hourly by summing each of the counts for the hour; if an hour contains fewer than 3600 coincidence counts, the time window is expanded until the threshold is reached, and the same relative efficiency value is used for all times within the window. Data gathered for \(\text{L}<2.5\) are excluded. Earlier releases of the data used a variant of this algorithm; the earliest releases contained no time-varying correction.
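The efficiency calculation described above can be sketched as follows. This is a minimal illustration, not the HOPE production code: the function names are hypothetical, the inputs are assumed to be hourly sums for a single pixel and energy channel, and the symmetric widening of the window is an assumption, since the text states only that the window is expanded until 3600 coincidences are reached.

```python
def windowed_efficiency(starts, stops, coincidences, threshold=3600):
    """Return one absolute efficiency per hour from hourly count sums.

    Efficiency is coincidences**2 / (starts * stops), summed over a window
    that is widened whenever it holds fewer than `threshold` coincidences.
    """
    n = len(coincidences)
    efficiencies = []
    for hour in range(n):
        lo, hi = hour, hour
        # Assumption: widen symmetrically until the threshold is met or the
        # whole series is covered. The source does not specify the direction.
        while sum(coincidences[lo:hi + 1]) < threshold and (lo > 0 or hi < n - 1):
            lo = max(lo - 1, 0)
            hi = min(hi + 1, n - 1)
        c = sum(coincidences[lo:hi + 1])
        s1 = sum(starts[lo:hi + 1])
        s2 = sum(stops[lo:hi + 1])
        efficiencies.append(c * c / (s1 * s2) if s1 and s2 else float("nan"))
    return efficiencies


def normalize(efficiencies, reference):
    """Express each efficiency relative to a reference-epoch value."""
    return [e / reference for e in efficiencies]
```

In this sketch the reference passed to `normalize` would be the efficiency derived for January 2013, so that the result is the relative efficiency applied to the fluxes.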

HOPE Level 3 Processing Algorithms

HOPE level 3 files are calculated from the level 1 (counts) files and an intermediate pitch angle tags product.

Time tags from level 1, which has a single tag for the entire spin and all energy sweeps, are converted to a single unique tag for each sector of the spin, and each energy value, time-resolving the energy sweep. The spacecraft spin is broken into sectors by HOPE, and during each sector a complete energy sweep is made. This means different energies are measured at different times, and thus slightly different spin phases and look directions. EMFISIS Level 2 data are used to find the magnetic field for each of these timestamps, with the field interpolated to the timestamp from available field data using the SLERP approach as implemented by LANLGeoMag (Henderson et al. 2018). The angle between the field and the HOPE look direction (rotated into the same frame as the magnetic field using SPICE) is calculated for each record, detector, sector, and energy, and recorded as the pitch angle (0–180 degrees) in the “tags” file. An orthogonal gyro angle (0–360 degrees) is also calculated. Zero gyroangle is defined as the direction of the cross product of the magnetic field direction and the spacecraft spin axis. Before EMFISIS level 2 files were available, EMFISIS quicklook files were used, but they have not been used for the final archive.
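The geometry of the pitch angle and gyro angle tags can be illustrated with a short sketch. It assumes the magnetic field, look direction, and spin axis are already expressed in the same frame (the SPICE rotation and SLERP interpolation are not shown), the function names are hypothetical, and the sense of increasing gyro angle is an assumption; the text fixes only the zero direction as the cross product of the field and the spin axis.

```python
import math


def _dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def _cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def _unit(a):
    norm = math.sqrt(_dot(a, a))
    return tuple(x / norm for x in a)


def pitch_and_gyro(b_field, look_dir, spin_axis):
    """Return (pitch angle, gyro angle) in degrees.

    Pitch angle (0-180) is the angle between the field and the look direction.
    Gyro angle (0-360) is measured in the plane perpendicular to the field,
    with zero along B x spin_axis. Undefined if B is parallel to the spin axis.
    """
    b = _unit(b_field)
    v = _unit(look_dir)
    cos_pa = max(-1.0, min(1.0, _dot(b, v)))  # guard against rounding
    pitch = math.degrees(math.acos(cos_pa))
    e1 = _unit(_cross(b, spin_axis))  # zero gyro angle direction
    e2 = _cross(b, e1)                # assumed direction of 90 degrees
    gyro = math.degrees(math.atan2(_dot(v, e2), _dot(v, e1))) % 360.0
    return pitch, gyro
```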

From the level 1 counts and the pitch angle tags, a general binning code creates binned level 3 files. This code sums counts into a 2D array (for each time) by pitch angle and gyrophase, also tracking the total number of samples in each bin. These arrays are treated identically to the 2D arrays indexed by detector number and sector. Thus, the same code that calibrates counts to fluxes (count rates, uncertainties) for level 2 is used to calculate fluxes in level 3. For level 3 pitch angle files, a single gyrophase bin is used (and removed on output), with eleven pitch angle bins: nine bins of 18 degrees, and half-width bins for 0–9 and 171–180 degrees, to provide higher resolution at the loss cone. The same code and inputs are used to produce files containing 5 pitch angle and 8 gyroangle bins as inputs to moment calculations.
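The eleven-bin pitch angle layout can be made concrete with a small sketch. It shows only the bin edges and the accumulation of counts and sample totals for a single gyrophase bin; the names `PA_EDGES` and `bin_counts` are illustrative, and the placement of values that fall exactly on an edge is an assumption.

```python
from bisect import bisect_right

# Edges in degrees: 0, 9, 27, 45, 63, 81, 99, 117, 135, 153, 171, 180.
# Two half-width bins (0-9, 171-180) bracket nine 18-degree bins.
PA_EDGES = [0.0] + [9.0 + 18.0 * i for i in range(10)] + [180.0]


def bin_counts(pitch_angles, counts):
    """Sum counts by pitch angle bin and track the number of samples per bin."""
    n_bins = len(PA_EDGES) - 1
    total = [0] * n_bins
    samples = [0] * n_bins
    for pa, c in zip(pitch_angles, counts):
        # A value on an edge goes to the higher bin (assumption), except
        # exactly 180 degrees, which is kept in the last bin.
        idx = min(bisect_right(PA_EDGES, pa) - 1, n_bins - 1)
        total[idx] += c
        samples[idx] += 1
    return total, samples
```

Keeping the sample totals alongside the summed counts is what allows the same counts-to-flux calibration to be applied to the binned arrays.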

REPT Processing Algorithms

The REPT processing algorithms are described in Baker et al. (2021). A summary of the basics of the algorithm proceeds in the following sequence. A particle enters the sensor stack and deposits charge in the silicon, which is fed into Charge Sensitive Amplifiers (CSA). The CSA collects the charge (or current) pulses from each detector in the stack and then generates a classification of the pulse using Pulse Height Analysis (PHA) techniques. The particles are classified by type and energy range by comparing the PHA values from the individual detectors and PHA sums of select detectors against sets of defined energy bounds; each set defines the energy and species of a particle. The identified bounding set is then used to increment the count for that particular species/energy combination. The energy bins are accumulated over each spacecraft spin into sectors (36/spin). Counts are then reported as a telemetry record, one per spin, providing fine pitch angle discrimination. The collective data for each spin are then analyzed by energy spectra per pitch angle.

Combined Electron Product

A combined electron product, using all ECT sensors (HOPE, MagEIS, REPT), is described by Boyd et al. (2021).

Magnetic Ephemeris variables

To provide easy context to the scientific observations, certain quantities from the magnetic ephemeris files (Reeves et al. in prep) are added to all ECT data files. These are interpolated from the one-minute MagEphem files to the same timestamps as the ECT data. This postprocessing step is applied after the generation of the L2 and L3 files with instrument-specific code, using a single generic code. Included quantities are MLT, Roederer L*, model magnetic field at the spacecraft, McIlwain L, model equatorial field, and spacecraft position in geographic coordinates. All are computed using the OP77Q model.
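The interpolation step can be sketched as a simple linear interpolation of a one-minute ephemeris series onto data timestamps. This is an assumption about the method, since the text does not state which interpolation the generic code uses, and the function name is hypothetical. Cyclic quantities such as MLT would additionally need wrap-around handling that is not shown here.

```python
from bisect import bisect_right


def interp_to_timestamps(eph_times, eph_values, data_times):
    """Linearly interpolate ephemeris values onto data timestamps.

    eph_times must be sorted and hold at least two samples. Timestamps
    outside the ephemeris coverage yield None rather than an extrapolation.
    """
    out = []
    for t in data_times:
        if t < eph_times[0] or t > eph_times[-1]:
            out.append(None)
            continue
        i = min(bisect_right(eph_times, t), len(eph_times) - 1)
        t0, t1 = eph_times[i - 1], eph_times[i]
        frac = (t - t0) / (t1 - t0)
        out.append(eph_values[i - 1] + frac * (eph_values[i] - eph_values[i - 1]))
    return out
```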

2.2 Electric and Magnetic Field Instrument Suite and Integrated Science (EMFISIS)

The EMFISIS instruments (Kletzing et al. 2013) have operated as planned throughout the mission with essentially no changes. A few parameters have been adjusted:

- From Oct 2012–Dec 2012, the length of the electric field booms was increasing, so the parameter used for calculating the electric field was adjusted to provide the correct length as the booms were extended. These factors are incorporated into the EMFISIS data products.
- After monitoring the response to large amplitude signals, the built-in attenuator was switched on continually after early 2013 to ensure minimal clipping of signals. This cut is 15 dB and is included in the physical units for EMFISIS data products.
- Approximately halfway through the mission, the threshold for changing magnetometer ranges was lowered by about 500 nT to ensure correct switching as the spacecraft moved outbound. Because of the rapid motion of the spacecraft, this change is essentially unnoticeable, moving the location of the change outbound by a fraction of an Earth radius. Indeed, after the change no data users ever noticed!

Beyond these operational changes in the instruments, EMFISIS steadily revised software to correct for the usual coding and calibration errors. For L2 and L3 products there has been essentially no change since the second quarter of 2021.

The EMFISIS L4 density product has remained unchanged in form. Because human intervention is needed to ensure accuracy, the data set is not 100% complete, but it is complete enough to be of great use to the community.

The L4 wave-normal analysis (WNA) project (described in the EMFISIS post-flight instrument paper) has been the subject of intensive work to improve the electric field accuracy by employing a model of the sheath impedance to the plasma to get correct amplitudes and phases. This effort has been quite successful and provides one of the most accurate sets of 3D electric and magnetic field wave products in terms of parameters such as Poynting flux, ellipticity, polarization, etc.

Some data products produced by EMFISIS were not originally planned for, but were developed because of their utility. These include records of thruster firings, spacecraft charging events, and axial boom shadowing. EMFISIS also developed a data product to provide a set of spacecraft housekeeping data so that instruments could understand housekeeping events which might affect their operation.

2.3 Electric Fields and Waves Suite (EFW)

A description of the instrument and science operations for the Electric Fields and Waves (EFW) instrument is provided by Wygant et al. (2013). The primary activities of the EFW Science Operating Center (SOC) – divided between the University of Minnesota and the University of California Berkeley – included data processing, instrument operation and commanding, scheduling of sensor diagnostic tests, and the collection and telemetry of burst data including support of collaborative campaigns with other missions.

Here we discuss the EFW data processing chain leading to the production of publicly available data products, and the operation of the burst 1 instrument. Further details are available in Breneman et al. (2022).

This section is an overview of the EFW data processing chain from raw telemetry (level 0) files to fully calibrated, publicly available level 3 files. On a near daily basis, the UCB SOC received raw telemetry files from the Mission Operations Center (MOC) at Johns Hopkins University Applied Physics Laboratory. These were decommutated and turned into time-tagged but uncalibrated (L1 ADC counts) science and housekeeping data quantities. These files were then transferred to the UMN SOC, where they underwent further calibration. This included the application of a rough calibration to attain physical units (such as mV/m) used to produce the daily survey quick look plots available at http://rbsp.space.umn.edu/survey/. Within a few days, after official ephemeris data and (roughly calibrated) EMFISIS magnetometer data became available, the quick look plots were updated to include the more accurate spin-fit survey electric fields. In addition, calibrated L2 files were developed, and these included quantities such as spin cadence (spin-fit) electric fields in modified GSE (mGSE) coordinates (see Breneman et al. 2022), survey cadence (16 or 32 samples/s) electric fields in mGSE, probe potentials, and estimates of plasma density. Finally, in the following weeks or months, L3 data containing the best calibration available were produced as ISTP-compliant CDF files. These files, available at CDAWeb, represent the best possible EFW calibrated data and are recommended for public use.

2.4 Radiation Belt Storm Probes Ion Composition Experiment Science Operations

The RBSPICE Science Operations Center (SOC) as described by Mitchell et al. (2013) was developed over the course of five years prior to launch. Development and enhancement of the operational and scientific software continued throughout the duration of the seven-year mission. This section describes the changes and enhancements to the RBSPICE SOC and data as compared to Mitchell et al. (2013). Figure 1 presents the final data flow schematic as implemented by the RBSPICE SOC, located at Fundamental Technologies, LLC (FTECS) in Lawrence, KS, and the RBSPICE SOC located at JHUAPL in Laurel, MD. Pre-release magnetic field data (EMFISIS-L0) were included to allow the RBSPICE SOC to create preliminary pitch angles for analysis in the MIDL software. Enhancements to the external interfaces from FTECS included the development of a RESTful API based upon the Heliophysics Application Programmer’s Interface (HAPI – see Vandergriff et al. 2019), which allows for streaming of RBSPICE data using a JSON object specification.
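As an illustration of how a HAPI data request is formed, the sketch below builds a request URL using the HAPI 2.x query parameters (`id`, `time.min`, `time.max`, `parameters`, `format`); HAPI 3.0 renames the first three to `dataset`, `start`, and `stop`. The server address and dataset identifier shown in the usage note are placeholders, not the actual RBSPICE endpoint, and the helper name is hypothetical.

```python
from urllib.parse import urlencode


def hapi_data_url(server, dataset, start, stop, parameters=None, fmt="json"):
    """Build a HAPI 2.x data request URL (no network access is performed)."""
    query = {"id": dataset, "time.min": start, "time.max": stop, "format": fmt}
    if parameters:
        # HAPI expects a comma-separated list of parameter names.
        query["parameters"] = ",".join(parameters)
    return f"{server.rstrip('/')}/data?{urlencode(query)}"
```

For example, `hapi_data_url("https://example.org/hapi", "HYPOTHETICAL_DATASET", "2013-01-01T00:00:00Z", "2013-01-02T00:00:00Z")` yields a URL whose JSON response could then be streamed by any HTTP client.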

Fig. 1 RBSPICE Data Flow Schematic. Figure derived from Mitchell et al. (2013) and updated with final implemented information

Summary of RBSPICE Data Pipeline and Products

The RBSPICE data processing pipeline was architected and designed using the Unified Modeling Language (UML – Rumbaugh et al. 2004; Booch et al. 1999). It was implemented in Microsoft C# (Hejlsberg et al. 2006) to run on Microsoft Windows 8.1 with final production occurring on Microsoft Windows 10. The production systems were based upon software and systems used for production of the Cassini Magnetospheric Imaging Instrument (MIMI) data (Krimigis et al. 2005) although significant modifications and enhancements were made to the overall software systems with a design toward utility and generalization instead of high-speed performance.

The RBSPICE data production pipeline was developed as a series of segments based upon NASA Data Level definitions (see the Appendix), with products written as Comma Separated Value (CSV) and Common Data Format (CDF; Kessel et al. 1995) files. Production of each NASA data level in the RBSPICE SOC occurred as a set of dependent steps, with all data products for any particular day being generated for each production segment (NASA data level). Enhancement to the L3 data production included two additional products using team-defined binning algorithms. The primary L3 data product includes the L2 differential flux along with calculated pitch and phase angles for each record for each telescope. Additionally, magnetic ephemeris coordinate information is included, taken directly from the ECT Magnetic Ephemeris (MagEphem) data product (Reeves et al. in prep). See Table 1 for details on RBSPICE data product time resolution, data formats, and primary data units.

The L3 Pitch Angle and Pressures (L3-PAP) product contains L2 differential flux binned by pitch angle, species-specific perpendicular and parallel partial pressures, OMNI flux, total intensity, and the species-specific partial density. Pressure and density calculations include binned flux for a limited set of energy channels chosen as reliable and uncontaminated by the RBSPICE instrument/science team. The L3 product called the Pitch Angle, Phase Angle, and Pressures (L3-PAPAP) contains L2 differential flux binned by pitch and phase angles. Phase angles are calculated using the Solar Magnetospheric (SM) reference frame with the zero-degree phase toward the Sun (\(\hat{x}_{SM}\)), and the \(90^{\circ}\) phase in the \(- \hat{y}_{SM}\) direction. L3-PAPAP includes the calculation of species-specific pressures, OMNI flux, intensity, and density as in the L3-PAP files. See Table 2 for details on each data category identifying the data sources, units, access, and overall mission data volume. The RBSPICE instruments were capable of distinguishing between electrons and individual ion species, specifically protons, helium, and oxygen – for further instrument details see Gkioulidou et al. (2022).

Time System Specifications

The RBSPICE time system utilized the NASA Navigation and Ancillary Information Facility (NAIF) SPICE software system (Acton 1996; Acton et al. 2017) to convert spacecraft time (SCLOCK) into the J2000 Ephemeris Time system (ET) (Fukushima 1995). The MOC was responsible for the production of SPICE kernels maintaining the temporal map between SCLOCK and ET (J2000 epoch). All spacecraft clock event resets were handled by the MOC without creating new SCLOCK partitions.

MOC-generated data files were produced for each SCLOCK day (86400 SCLOCK ticks). The first SCLOCK day was created in sync with the UTC day of launch. Each SOC produced UTC day files for each data product. This required correct handling of input telemetry files, recognizing that any particular day of telemetry might include data from as many as three different UTC days. The RBSPICE SOC system created a database map of the SCLOCK to UTC start and stop times for each MOC telemetry file. This allowed for a fast query to find telemetry files containing data for any particular UTC day.
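The idea of a database map from telemetry files to UTC coverage can be sketched with an in-memory SQLite table. The table layout, column names, and file names here are illustrative assumptions and do not describe the actual RBSPICE SOC schema; the point is the interval-overlap query that finds every file contributing to a given UTC day.

```python
import sqlite3
from datetime import date, timedelta


def build_index(files):
    """Create an index from (file name, UTC start, UTC stop) ISO 8601 strings."""
    db = sqlite3.connect(":memory:")
    db.execute("CREATE TABLE telemetry (name TEXT, utc_start TEXT, utc_stop TEXT)")
    db.executemany("INSERT INTO telemetry VALUES (?, ?, ?)", files)
    return db


def files_for_utc_day(db, day):
    """Return names of files whose coverage overlaps the UTC day 'YYYY-MM-DD'."""
    next_day = (date.fromisoformat(day) + timedelta(days=1)).isoformat()
    day_start = f"{day}T00:00:00"
    day_end = f"{next_day}T00:00:00"
    # Two intervals overlap when each one starts before the other ends.
    # ISO 8601 strings of equal layout compare correctly as text.
    rows = db.execute(
        "SELECT name FROM telemetry WHERE utc_start < ? AND utc_stop > ? "
        "ORDER BY utc_start",
        (day_end, day_start),
    )
    return [row[0] for row in rows]
```

Because a SCLOCK day need not align with a UTC day, a single UTC day typically maps to two telemetry files in this sketch.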

Telemetry Processing and Data Production

The RBSPICE Level 0 data contains 33 individual data products; see Table 3 for a listing of the primary counting data products together with the required ancillary data products used for production. Each product file contains an unpacked copy of the RBSPICE telemetry file, decoding the CCSDS Payload Telemetry Packet (PTP) (Packet Telemetry 2000) records into count and support data. The only time fields provided by each PTP are the spacecraft SCLOCK and the internal RBSPICE flight software derived time fields.

The produced L0 products include three time field formats: ET (double precision), SCLOCK (string), and Coordinated Universal Time (UTC, string) using the ISO(T) 8601 Ordinal Time Format Specification [ANSI INCITS 30-1997 (R2008)], formatted as “CCYY-DDDTHH:MM:SS.hhh”, where CCYY = century and year, DDD = ordinal Day of Year, HH = hour, MM = minute, SS = integer second, and hhh = decimal seconds to milliseconds resolution. SCLOCK values are formatted in NAIF Type 1 SCLOCK format (NASA NAIF SPICE 2010) as [part/ticks:fine] where part = integer partition (always 1), ticks = major ticks (\(\sim1~\text{second}\)), and fine = minor ticks of the spacecraft time system in \(2^{-16}\) increments.
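The two string formats can be illustrated with a brief sketch. The helper names are hypothetical, and truncating (rather than rounding) to milliseconds is an assumption that the text does not settle.

```python
from datetime import datetime


def utc_ordinal(dt):
    """Format a datetime as CCYY-DDDTHH:MM:SS.hhh (ordinal day of year)."""
    ms = dt.microsecond // 1000  # assumption: truncate to milliseconds
    return f"{dt.strftime('%Y-%jT%H:%M:%S')}.{ms:03d}"


def sclock_string(ticks, fine, partition=1):
    """Format an SCLOCK value as part/ticks:fine (partition is always 1)."""
    return f"{partition}/{ticks}:{fine}"
```

For instance, 17 March 2013 is day 076 of the year, so `utc_ordinal(datetime(2013, 3, 17, 6, 4, 5, 123456))` gives `2013-076T06:04:05.123`.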

The following sections provide updates to the algorithms used in the creation of the Level 0 Count Files, the Level 1 Rate files, and the Level 2 Intensity (flux) files. Subsequent sections provide the detailed algorithms used in the creation of the Level 3 Pitch Angle files, the Level 3 PAP files, and the Level 3 PAPAP files. A final section discusses the algorithms needed to calculate Level 4 Phase Space Density (PSD) data. Details presented for each of these steps are sufficient in conjunction with the details provided in the original MB-I and the RBSPICE Data Handbook (Manweiler and Zwiener 2019) to allow other software developers to write their own translation workflow.

Level 0 Data Product

The Level 0 data products are organized by ephemeris time (ET), spacecraft spin number, and the RBSPICE instrument created virtual sector number with 36 sectors per spin. The starting time of each sector is determined by the RBSPICE flight software coupled with the spacecraft 1 PPS (Pulse Per Second) status record sent to the RBSPICE instrument. The ground software calculates the beginning of each sector based upon the nominal spin period provided by the RBSPICE Auxiliary telemetry record for the current spin/sector using the Spin Duration field. In the situation where either spacecraft goes into eclipse and loses the nominal 1 PPS signal then the RBSPICE flight software utilizes a hard-coded nominal spin period of 12 sec to calculate the duration of each spin and to time tag the beginning of the next spin record.

Time Stamp Generation

The RBSPICE Auxiliary telemetry (Aux) product is the only component of the received RBSPICE telemetry that provides the ability to create a high time resolution conversion from the full SCLOCK to ET (J2000 epoch). Aux packets are generated by the RBSPICE instrument at the end of each spin, and each includes a time stamp derived from the timing information provided by the spacecraft 1 PPS (Pulse Per Second) signal. The SCLOCK value is a four-byte unsigned integer which cycles from 0 to (\(2^{32}-1\)). The Fine SCLOCK value is a two-byte unsigned integer number which cycles from 0 to (\(2^{16}-1\)) and is in units of (\(1/2^{16}\)) SCLOCK ticks. In general, each tick of the SCLOCK is approximately 1 second, although this relationship can drift depending upon the heating and cooling of the spacecraft. The SCLOCK value is not a unique value, but repeats approximately every 136.19 years. A compression of the Fine SCLOCK value from the instrument was necessary when converting into NAIF SCLOCK values since the NAIF Fine specification is in 1/50000 sec units. The x323 telemetry record time stamps are decoded by the RBSPICE SOC software system and the resulting SCLOCK and Fine SCLOCK values are converted into a time stamp using the algorithm in Fig. 2.
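The rescaling of the instrument fine count into the NAIF fine field, and the rollover period of the four-byte SCLOCK, can be checked with a few lines. This is a sketch of the arithmetic only and does not reproduce the algorithm of Fig. 2; the function names are hypothetical, and truncation toward zero in the rescaling is an assumption.

```python
FINE_MODULUS = 2 ** 16      # instrument fine counts per SCLOCK tick
NAIF_FINE_MODULUS = 50000   # NAIF fine units per SCLOCK tick, per the text


def to_naif_fine(fine):
    """Rescale a 16-bit fine count (0..65535) to the 0..49999 NAIF range."""
    if not 0 <= fine < FINE_MODULUS:
        raise ValueError("fine count must fit in two bytes")
    # Integer arithmetic avoids floating-point rounding; truncation assumed.
    return fine * NAIF_FINE_MODULUS // FINE_MODULUS


def rollover_years(days_per_year=365.0):
    """Years until a 32-bit counter of roughly 1-second ticks repeats."""
    return 2 ** 32 / (days_per_year * 86400.0)
```

With 365-day years, `rollover_years()` evaluates to about 136.19, matching the figure quoted above.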

Duration of Measurement and Start/Stop Times

Level 0 processing calculates the duration of each measurement at the same time the sector timestamp is calculated. The duration cannot be simply calculated as the difference between the next sector and current sector start times since the RBSPICE instrument has three possible measurement modes which can be assigned to one of the three available subsector accumulation time periods. Figure 3 displays the sector division into three unequal time sized subsector partitions: \(\Delta t_{0} = \frac{1}{2} t_{sect}\); \(\Delta t_{1} = \frac{1}{4} t_{sect}\); \(\Delta t_{2} = \frac{1}{4} t_{sect}\). The RBSPICE instrument can be commanded to use any measurement mode (electron energy, ion energy, and ion species) in any combination of subsectors, providing the ability to simultaneously measure electrons and ions within a sector or, alternatively, to use a single type of measurement for higher time resolution science. Sector “dead time (dt)”, also shown, occurs at the end of each subsector due to instrument electronic state changes, \(\Delta t_{01_{dt}} = 3.94~\text{ms}\); \(\Delta t_{12_{dt}} =3.95~\text{ms}\); \(\Delta t_{20_{\text{dt}}} =4.04~\text{ms}\). Subsector accumulation time is \(\Delta t_{0_{acc}} = \Delta t_{0} - \Delta t_{20_{dt}}\); \(\Delta t_{1_{acc}} = \Delta t_{1} - \Delta t_{01_{dt}}\); \(\Delta t_{2_{acc}} = \Delta t_{2} - \Delta t_{12_{dt}}\).
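As a worked example of the subsector formulas above, the sketch below evaluates the three accumulation times for a given spin period, using the dead times quoted in the text and the same pairing of dead time to subsector as in the formulas. The function and constant names are illustrative, and the 36 sectors per spin is taken from the Level 0 description.

```python
# Dead times in seconds for the transitions 0->1, 1->2, and 2->0.
DEAD_TIME_S = {"01": 3.94e-3, "12": 3.95e-3, "20": 4.04e-3}


def subsector_accumulation(spin_period_s, sectors_per_spin=36):
    """Return (acc0, acc1, acc2): accumulation time of each subsector in seconds."""
    t_sect = spin_period_s / sectors_per_spin
    dt0, dt1, dt2 = t_sect / 2, t_sect / 4, t_sect / 4
    return (
        dt0 - DEAD_TIME_S["20"],
        dt1 - DEAD_TIME_S["01"],
        dt2 - DEAD_TIME_S["12"],
    )
```

For a nominal 12 s spin, each sector lasts about 333.3 ms and the accumulation times come to roughly 162.6 ms, 79.4 ms, and 79.4 ms.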

The key values required to properly calculate the measurement duration are found in the Aux telemetry packet: Spin Duration (in seconds), Accumulation Mode Values (S, N1, N2, Spin), and Data Collection Pattern (DCP), the combination of instrument modes for each subsector. The timing system calculates the duration of the measurement using the algorithm in Fig. 4. The diagram showing the structured activity (green insert box) provides some detail of the calculation of the midpoint time for the accumulation. For single-spin accumulations this calculation is very straightforward: the midpoint is the start ET plus half the delta time for the accumulation, (\(t_{mid_{ET}} = t_{start_{ET}} + ( t_{end_{ET}} - t_{start_{ET}} )/2\)). Multi-spin accumulation involves a more complex calculation; see Fig. 5. In this example, the calculation is done for an accumulation starting in sector 0 and accumulating over 4 sectors and 10 spins, i.e., \(S=1\); \(N_{1} =2\); \(N_{2} =2\); \(Spin_{j} =10\). The sectors involved in the measurement are identified in the table as green with a white square in the middle. A “false” midpoint time is calculated using the simple algorithm \(t_{mid_{ET}} = t_{start_{ET}} + ( t_{end_{ET}} - t_{start_{ET}} )/2\), as indicated with the “\(x\)” in the red square outside the actual accumulation time.

The correctly calculated midpoint is shown as the bullseye in the middle of the two white squares. Even this calculation requires attention because the two white squares in the example are still one full spin apart. If the number of spins used in the accumulation is even, the midpoint time is the end of the first of the two white squares; if the number of spins is odd, there is only a single sector in the white square, so the midpoint is halfway between the start and stop of that sector.

The rest of the RBSPICE Level 0 data product production is thoroughly described in the online version of the RBSPICE Data Handbook (Manweiler and Zwiener 2019).

Level 1 Processing Algorithms

Level 1 processing converts Level 0 count data into Level 1 rate data in a series of algorithmic steps, of which the critical component is the Rate-in versus Rate-out (\(R_{in}\) vs \(R_{out}\)) algorithm. This correction is necessary because the instrument electronics have a maximum clock cycle that limits the highest rates observable before saturation, and because particle events lost during instrument dead time must be accounted for. Table 4 presents the fields, with their definitions, types, and default values, that are used in the subsequent \(R_{in}\) vs \(R_{out}\) formula.

\(R_{in}\) vs \(R_{out}\) Algorithm and Formula for Specific Data Products

Basic Rates: EBR (APID: x312), IBR (APID: x313), and ISBR (APID: x315)

Basic rate telemetry includes the measured counts (SSD), dead time correction values (SSDDead) per telescope, and the calculated duration of the accumulation. These values are converted to a rate value using the algorithm in Fig. 6.

Energy Rates

The conversion algorithm of the counts obtained for the following energy mode products, ESRLEHT (APID: x317), ISRHELT (APID: x318), and ESRHELT (APID: x319), requires an understanding of the spin information (APID: x323) and the \(R_{in}\) vs \(R_{out}\) corrected basic rate data (EBR for ESRLEHT and ESRHELT, IBR for ISRHELT) to calculate the rate. For purposes of this algorithm, the count values in the telemetry are referenced as \(h_{ij}\) where \(i\) refers to the telescope number and \(j\) refers to the energy channel of the measurement. Figure 7 shows the algorithm used in the RBSPICE SOC software for each telescope and each energy channel. The figure includes the formulas used in the calculations.

Species TOFxPH Rates

Figure 8 displays the algorithm used in the conversion of the species mode TOFxPH measurements for products TOFxPHHLEHT (APID: x31D) and TOFxPHHHELT (APID: x31E) which follows the algorithm for the calculation of Energy Rates (see Fig. 7). The key difference in the diagram is the use of the corrected Ion Species Basic Rates (ISBR – APID: x315) and differences in the formula used in the \(R_{in}\) vs \(R_{out}\) calculation.

Species TOFxE Rates

Figure 9 displays the algorithm used in the conversion of the species mode TOFxE measurements for products TOFxEIon (APID: x31A), TOFxEH (APID: x31B), and TOFxEnonH (APID: x31C), which follows an algorithm similar to that for Species TOFxPH rates (see Fig. 8). The key difference in the diagram is the formula used in the \(R_{in}\) vs \(R_{out}\) calculation.

Error Calculations for Rate Files

As counts are converted into rates, the Level 1 files capture the statistical Poisson error for the purposes of error propagation in later data levels. Additionally, since we are keeping track of the percent error and including the errors in higher level data products, we have the ability to easily propagate the errors when we do various integration or telescope combination activities in the level 3 data products, see discussion of errors in the Level 3 PAP/PAPAP sections and also Fig. 10 for the basic error propagation algorithm used in the RBSPICE production system.
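To make the bookkeeping concrete, the following Python sketch computes the Poisson error of a rate and propagates percent errors when several rates are summed. It uses the textbook rules (\(\sigma_{N} = \sqrt{N}\), independent errors added in quadrature) and is not a transcription of Fig. 10; the function names are hypothetical.

```python
import math


def rate_with_error(counts, duration_s):
    """Return (rate, 1-sigma error, percent error) for Poisson-distributed counts.

    duration_s is assumed to be a positive accumulation time in seconds.
    """
    if counts <= 0:
        return 0.0, 0.0, float("nan")  # percent error is undefined with no counts
    rate = counts / duration_s
    sigma = math.sqrt(counts) / duration_s
    return rate, sigma, 100.0 * sigma / rate


def combine_in_quadrature(values, percent_errors):
    """Sum values and propagate independent errors in quadrature.

    Returns (total, percent error of the total).
    """
    abs_errors = [v * p / 100.0 for v, p in zip(values, percent_errors)]
    total = sum(values)
    sigma = math.sqrt(sum(e * e for e in abs_errors))
    percent = 100.0 * sigma / total if total > 0 else float("nan")
    return total, percent
```

Carrying the percent error alongside each value, as described above, means the absolute error can always be recovered at the point where values are combined.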

Level 2 Processing Algorithms

The primary activity in processing the Level 1 data into Level 2 data is to convert the rate data into particle intensity (flux) data. This is done in a series of algorithmic steps in which the Level 1 rate data is read into memory, the calibration data for the SC and product are loaded, the intensities are calculated, and the intensities are then written to a Level 2 file. Additional fields are added to the Level 2 file in order to partially fulfill the standards defined by the Panel on Radiation Belt Environmental Modeling (PRBEM: COSPAR ISTP PRBEM Committee 2010).

Calculation of Intensities (Differential Flux)

Conversion of RBSPICE data into differential flux requires knowledge of the channel and product specific RBSPICE calibration factors. The calibration data can be found on the RBSPICE website at the following locations: http://rbspice.ftecs.com/RBSPICEA_Calibration.html and http://rbspice.ftecs.com/RBSPICEB_Calibration.html or archived at the CDAWeb RBSPICE archive. Note that the reference table in the calibration files of TOF-trigger_ion E is referring to the RBSPICE TOFxE_Ion data products.

The data is organized by product type and contains the necessary information needed to convert RBSPICE rates into differential flux. The calibration data fields are fully described in the RBSPICE Data Handbook. Rates are converted into Intensities using the following equation, \(flux[tele,enchan]= \frac{rate[tele,enchan]}{\left ( E_{High} - E_{Low} \right ) * G_{size} *eff}\). The value of the geometrical factor, \(G_{size}\), is based upon the current pixel value (small or large) identified in the Aux data packet for the current spin/sector and ET combination. The final CDF variable that is created to contain the intensities is a two-dimensional variable of type Double (or Double Precision) and sized as \(FxDU[tele,enchan]\) so that it contains the data for each telescope and energy channel combination.
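A minimal NumPy sketch of this conversion is shown below. The function name, argument layout, and the use of the CDF fill value for channels with a non-positive denominator are assumptions made for illustration; the actual calibration fields are described in the RBSPICE Data Handbook.

```python
import numpy as np

FILL_VALUE = -1.0e31  # CDF double-precision fill value quoted elsewhere in the text


def rates_to_flux(rate, e_low, e_high, g_size, eff):
    """Convert rate[tele, enchan] to differential flux.

    flux = rate / ((E_high - E_low) * G_size * eff)
    e_low, e_high, and eff may be per-channel arrays or scalars; g_size is the
    geometric factor for the current pixel size (small or large).
    """
    rate = np.asarray(rate, dtype=float)
    width = np.asarray(e_high, dtype=float) - np.asarray(e_low, dtype=float)
    denom = np.broadcast_to(width * g_size * np.asarray(eff, dtype=float), rate.shape)
    flux = np.full(rate.shape, FILL_VALUE)
    ok = denom > 0                      # assumed guard: skip uncalibrated channels
    flux[ok] = rate[ok] / denom[ok]
    return flux
```

For example, a rate of 10 counts/s in a channel 0.1 MeV wide, with a geometric factor of 0.5 and unit efficiency, gives a flux of 200 in the corresponding flux units.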

Ion Species Mode Flux Data (ISRHELT)

While the calibration of the rate and flux data measurements for the Ion Species Rates High Energy Resolution Low Time Resolution (ISRHELT) data product is very well understood, the measurement itself is not very specific as to species. In order to use the ISRHELT data it is necessary to have independent knowledge of which species dominates the measurements. Otherwise, it is difficult to draw scientific conclusions using this data product. Any research that utilizes this particular data product should at the very least do a relative comparison to the equivalent ECT-MagEIS measurements before using this data to make scientific conclusions.

RBSPICE Background Contamination

The current data files produced by the RBSPICE SOC are not corrected for background contamination. Work is ongoing within the RBSPICE team to correct for these issues, but at the time of this writing the rates are still potentially contaminated with accidentals (mostly during perigee) and other background rates. The reader is strongly encouraged to contact members of the RBSPICE team before undertaking significant scientific analysis, in order to avoid using contaminated data and deriving erroneous results. The two sets of products most likely to be contaminated with background or accidentals are a varying set of the lowest-energy TOFxPH proton channels and all of the TOFxPH oxygen channels. When the RBSPICE SOC reprocesses the data, the channel specific data quality flags contained within the CDF files for each flux variable will be tagged with a value indicating data contamination wherever background rates dominate over foreground rates on a channel-by-channel basis. As of the writing of this manuscript, all TOFxPH oxygen data records are tagged as contaminated. Work is ongoing to attempt to eliminate the background from the data.

Level 3 Processing Algorithms

Processing Level 2 data into Level 3 data requires the calculation of the look direction, pitch angle, and phase angle of each telescope, using the measured magnetic field received from the EMFISIS instrument as well as loading of ancillary data from the ECT Magnetic Ephemeris data files.

EMFISIS Magnetic Field Data

EMFISIS Level 2 UVW magnetic field data files were used to calculate the RBSPICE pitch angles. These files contain data sampled at 60 Hz with over 5 million samples per data file. In order to reduce memory utilization and processing requirements, these files were decimated by a programmable factor prior to pitch angle calculations. The final mission wide decimation factor was set to 8, giving an effective sampling frequency of 7.5 Hz, which results in approximately 2–3 magnetic field measurements per RBSPICE sector. No other filtering of the EMFISIS data was utilized during the decimation stage, although it is noted that data records tagged as ‘bad’ were not included.
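The reduction step can be sketched as follows. This is an illustration under stated assumptions: the function and argument names are hypothetical, and the order of operations (keep every eighth sample, then drop flagged records) is a guess, since the text does not say whether bad records were removed before or after the reduction.

```python
import numpy as np


def reduce_field_samples(times, b_uvw, bad_flags, factor=8):
    """Keep every `factor`-th magnetic field sample, skipping flagged records.

    times:     (n,) sample times
    b_uvw:     (n, 3) field vectors in spacecraft UVW coordinates
    bad_flags: (n,) booleans, True where the record is tagged as bad
    No smoothing or anti-alias filtering is applied, matching the text.
    """
    times = np.asarray(times)
    b_uvw = np.asarray(b_uvw)
    keep = np.zeros(times.shape, dtype=bool)
    keep[::factor] = True                       # 60 Hz / 8 = 7.5 Hz
    keep &= ~np.asarray(bad_flags, dtype=bool)  # exclude records tagged as bad
    return times[keep], b_uvw[keep]
```

With one second of 60 Hz data and a factor of 8, this returns at most eight samples, fewer if any of the retained records are flagged.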

ECT Magnetic Ephemeris Data

Additional fields loaded in the RBSPICE Level 3 CDF files were derived from ECT Magnetic Ephemeris data files. The definitive Olson-Pfitzer 1977 quiet time data were used as the source. Specific data fields used were deemed necessary and pertinent to provide for a full scientific understanding of the RBSPICE energetic particle data: \({L}_{dipole}\), \({L}^{*}\), \({L}_{eq}\), I (2nd adiabatic moment – single value and pitch angle dependent array), K (3rd adiabatic moment – single value and pitch angle dependent array), and Magnetic Local Time (MLT).

Calculation of Particle Flow Direction

The particle flow direction has been added to the RBSPICE Level 3 files since file version x.1.10. The calculation of particle flow direction, \(\hat{v}_{0},\dots,\hat{v}_{5}\) in Fig. 11, uses the definitive SPICE CK, FK, and IK kernels for each spacecraft at the time of the observations. The calculation utilizes the NAIF SPICE function \(\mathrm{pxform}\_c\left ( f,t,tmatrix \right ){:}\ i= \{ n \mid n \in \mathbb{N}, 0\leq n\leq 5\}\). The variable \(f\) represents the “From” reference frame and is the RBSPICE telescope reference frame (\(RBSP\{A/B\}\_RBSPICE\_T_{i}\)), e.g., \(RBSPB\_RBSPICE\_T_{3}\) represents RBSPICE telescope 3 of spacecraft B. The variable \(t\) represents the “To” reference frame and is the Spacecraft UVW reference frame. The RBSPICE telescope and spacecraft UVW reference frames are defined in the Van Allen Probes SPICE frame kernels: rbspa_vxxx.tf and rbspb_vxxx.tf where “xxx” is the highest version number.

The particle flow direction unit vector is then calculated as the negative or reverse of the telescope boresight unit vector transformed into the UVW reference frame, e.g., \(\hat{v}_{i} =- \hat{T}_{i_{SM}}\). Any exception occurring during this transformation results in the particle flow direction unit vector being set to \(\hat{v}_{i} =(0.0,0.0,0.0)\), representing an unknown direction.
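The negation and the fallback to a zero vector can be sketched as below. The rotation matrix is taken as an input, standing in for the matrix that the SPICE frame transformation would return; the function name and the assumption that the boresight lies along the telescope frame \(+z\) axis are illustrative only.

```python
import numpy as np

UNKNOWN_DIRECTION = np.zeros(3)  # (0.0, 0.0, 0.0) marks an unknown direction


def particle_flow_direction(rot_tel_to_uvw, boresight_tel=(0.0, 0.0, 1.0)):
    """Return the flow direction unit vector in UVW: minus the rotated boresight.

    rot_tel_to_uvw: 3x3 rotation from the telescope frame to spacecraft UVW.
    boresight_tel:  boresight in the telescope frame (assumed +z here).
    """
    try:
        rot = np.asarray(rot_tel_to_uvw, dtype=float)
        if rot.shape != (3, 3) or not np.all(np.isfinite(rot)):
            raise ValueError("invalid rotation matrix")
        look = rot @ np.asarray(boresight_tel, dtype=float)
        norm = np.linalg.norm(look)
        if norm == 0.0:
            raise ValueError("degenerate boresight")
        return -look / norm
    except (ValueError, TypeError):
        # Any failure in the transformation yields the unknown-direction marker.
        return UNKNOWN_DIRECTION.copy()
```

Returning a zero vector rather than raising keeps the record in the file while making the missing direction easy to detect downstream.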

Calculation of Pitch Angles

Figure 11 also displays the geometry used in the calculation of the RBSPICE pitch angle for each of the instrument's six telescopes. The overall orientation of the diagram is such that the spacecraft \(\hat{w}\)-axis points generally toward the Sun. The spacecraft rotation around the \(\hat{w} \)-axis is also shown and the fan of six RBSPICE telescopes allows for an almost \(4\pi\) steradian view of the sky for each spacecraft spin period: \(\tau _{SC} \cong 10.9~\text{sec}\). The conical elements of the figure display the telescope look direction unit vectors, \(\hat{t}_{i}\), centered on the aperture for each telescope as they are mounted on the spacecraft. The particle velocity unit vectors (or particle flow direction) are also shown in the diagram along with the representation of the pitch angles as the angle between the velocity unit vectors and the observed magnetic field unit vectors. The decimated 7.5 Hz magnetic field signal results in approximately 2–3 magnetic field vectors occurring in the RBSPICE sector (\(\sim0.3~\text{sec}\)) time window.

Algorithmically, a pitch angle is calculated for each of the magnetic vectors that exist within the accumulation period. The final pitch angle is the average of the calculated pitch angles and the deviation between all pitch angles is reported in the CDF variable FxDU_AlphaRange. If the deviation between the calculated pitch angles results in variations that are larger than 1/2 of a sector look direction then the sector pitch angle quality flag is set to a value indicating it is unusable (\(\text{AlphaQuality}_{\mathrm{i}}=\{0\text{-Good},1\text{-Bad}\}\)) and the pitch angle is set to the CDF Double Precision fill value of \(-1.0\times 10^{31}\). Calculation of pitch angles uses the algorithm in Fig. 12.
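A compact Python sketch of this per-sector logic follows. It is not the algorithm of Fig. 12: the function name is hypothetical, the "deviation" is interpreted as the spread between the largest and smallest pitch angle, and the threshold is passed in as a parameter because the angular size of half a sector look direction is not stated in the text.

```python
import numpy as np

FILL_VALUE = -1.0e31  # CDF double-precision fill value


def sector_pitch_angle(v_hat, b_vectors, max_range_deg):
    """Return (pitch angle in degrees, angle range in degrees, quality flag).

    v_hat:         particle flow direction for one telescope
    b_vectors:     (n, 3) field vectors falling within the accumulation period
    max_range_deg: largest allowed spread before the sector is flagged as bad
    Quality flag: 0 = good, 1 = bad.
    """
    v = np.asarray(v_hat, dtype=float)
    v = v / np.linalg.norm(v)
    b = np.asarray(b_vectors, dtype=float).reshape(-1, 3)
    b_hat = b / np.linalg.norm(b, axis=1, keepdims=True)
    cosines = np.clip(b_hat @ v, -1.0, 1.0)   # guard against rounding outside [-1, 1]
    alphas = np.degrees(np.arccos(cosines))   # one pitch angle per field vector
    alpha_range = float(alphas.max() - alphas.min())
    if alpha_range > max_range_deg:
        return FILL_VALUE, alpha_range, 1
    return float(alphas.mean()), alpha_range, 0
```

The range is returned in both branches so that it can be stored (as in FxDU_AlphaRange) even when the pitch angle itself is replaced by the fill value.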

Calculation of Phase Angles

The RBSPICE Level 3 data files, as of file version x.2.z, include a calculation of the phase angle of the RBSPICE telescope with respect to the Solar Magnetic (SM) reference frame (Laundal and Richmond 2017). Figure 13 displays the calculation of the phase angles in the \(SM\) reference frame. The magnetic field \(\hat{x}\)–\(\hat{y}\) plane is first projected into the SM reference frame and then the phase angles are calculated with respect to the SM coordinate system using the projected vectors of \(\vec{B}_{x_{SM}}\) and \(\vec{B}_{y_{SM}}\). The orientation of this figure is such that the \(\hat{z}\)-axis of the SM frame is up (approximately in the direction of \(\hat{B}_{dipole}\)); the \(\hat{x}\)-axis is away from the Sun; and the \(\hat{y} \)-axis completes the orthogonal system. The RBSPICE phase calculation is defined such that the zero-degree phase angle points toward the Sun, i.e., along \(+ \hat{x}_{SM}\) and the 90-degree phase angle is in the \(+ \hat{y}_{SM}\) direction. As the spacecraft orbits around the Earth, this reference frame always maintains the relationship between the solar drivers of magnetospheric activity and the phase angle of the particle distribution. The figure also shows the particle velocity vectors and the associated acceptance solid angles for each RBSPICE telescope.

Diagram of the calculation of the phase angle in the SM reference frame. Note that to reduce the complexity of the diagram, the rotation of the spacecraft is shown around the \(\hat{x}_{SM}\) axis but the actual rotation of the spacecraft is around the \(\hat{w}\) axis of the spacecraft which points approximately along the \(\hat{x}_{SM}\) axis

The phase angles are calculated in the \(XY_{SM}\) plane and are represented by the blue gradient circles with red lines/arrows starting at the \(\hat{x}_{B}\)-axis and going to the central point of each cone. An example phase angle is shown with \(\phi_{0}=357.2^{\circ}\) and each subsequent phase angle \(\sim15\) degrees rotated away from the Sun. If the phase angle cannot be calculated then that phase angle is set to the CDF Double Precision fill value of \(-1.0\times 10^{31}\). This figure also shows the calculation of the phase angle between the vector that points from the Earth toward the SC and the \(\hat{x}_{B}\)-axis in the \(XY_{SM}\) plane. This allows a phase shift calculation for scientific analysis of Earth centered radial, tangential, and normal particle flow/anisotropies. Figure 14 shows the algorithm used in the calculation of the RBSPICE phase angle.
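The angle convention described here (zero degrees along \(+\hat{x}_{SM}\), 90 degrees along \(+\hat{y}_{SM}\)) can be illustrated with a short function. This is only a sketch of the convention, not the algorithm of Fig. 14: it assumes the input vector has already been transformed into the SM frame, and the function name is hypothetical.

```python
import math

FILL_VALUE = -1.0e31  # CDF double-precision fill value


def phase_angle_sm(vec_sm):
    """Phase angle in degrees, in [0, 360), of a vector projected onto the SM XY plane.

    0 deg lies along +x_SM and 90 deg along +y_SM. The fill value is returned
    when the projection has zero length or contains non-finite components.
    """
    x, y = float(vec_sm[0]), float(vec_sm[1])
    if not (math.isfinite(x) and math.isfinite(y)) or (x == 0.0 and y == 0.0):
        return FILL_VALUE
    return math.degrees(math.atan2(y, x)) % 360.0
```

The modulo maps the negative angles returned by `atan2` into the 0 to 360 degree range, so a direction slightly clockwise of \(+\hat{x}_{SM}\) reports a value just under 360 degrees, as in the \(357.2^{\circ}\) example.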

Level 3 Pitch Angle and Pressure (PAP) Processing Algorithms

Level 3 differential flux data is used in the calculation of the Level 3 PAP data products by utilizing the pitch angle data from each telescope and a predefined set of pitch angle bins with centers at 7.5, 20, 30, 40, 50, 60, 70, 80, 90, 100, 110, 120, 130, 140, 150, 160, and 172.5 degrees. Part of the binning of the differential flux provides the ability to calculate partial moments of the distributions. The calculated species-specific moments include the perpendicular and parallel partial particle pressures, density for a select set of energy channels, the omnidirectional differential flux for each energy channel, and fully integrated particle flux over the entire energy range (Note: proceed with caution as this integrated particle flux includes noisy and background contaminated channels).
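The binning step can be sketched with NumPy as follows. The bin centers are those listed above; the bin edges (0, 15, 25, ..., 165, 180 degrees) are an assumption chosen so that each listed center is the midpoint of its bin, and averaging the flux within a bin is likewise an illustrative choice rather than the documented aggregation.

```python
import numpy as np

# Assumed edges: 0, 15, 25, ..., 165, 180 degrees (17 bins).
PA_EDGES = np.concatenate(([0.0], np.arange(15.0, 166.0, 10.0), [180.0]))
PA_CENTERS = 0.5 * (PA_EDGES[:-1] + PA_EDGES[1:])  # 7.5, 20, 30, ..., 160, 172.5


def bin_flux_by_pitch_angle(pitch_deg, flux):
    """Average flux samples in each pitch angle bin.

    Returns (mean flux per bin, number of samples per bin). Bins with no
    samples hold NaN. Pitch angles outside [0, 180], including fill values,
    are ignored.
    """
    pitch = np.asarray(pitch_deg, dtype=float)
    flux = np.asarray(flux, dtype=float)
    valid = (pitch >= 0.0) & (pitch <= 180.0) & np.isfinite(flux)
    n_bins = len(PA_CENTERS)
    idx = np.clip(np.digitize(pitch[valid], PA_EDGES) - 1, 0, n_bins - 1)
    sums = np.bincount(idx, weights=flux[valid], minlength=n_bins)
    counts = np.bincount(idx, minlength=n_bins)
    mean = np.full(n_bins, np.nan)
    filled = counts > 0
    mean[filled] = sums[filled] / counts[filled]
    return mean, counts
```

The `np.clip` call places a pitch angle of exactly 180 degrees in the last bin rather than discarding it.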

Binning of Pitch Angles and Calculation of Aggregate Data

PAP data calculation uses the algorithm shown in Fig. 15. The moments are calculated over a specific set of energy channels that the RBSPICE science team has determined to be reasonably reliable. Table 5 presents the energy channels used in moment calculation as a function of data product, energy channel indices (absolute and relative reference channel range with respect to the Level 3 CDF differential flux variable), and the energy channel passband range. Products that are set to “none” do not have moments calculated since the specific product has been identified by the RBSPICE team as untrustworthy either in data or in calibration. Untrustworthy data products also have data quality flags set to a value other than 0 = good or 10 = unknown, indicating that the data should not be used for science.

Special note: As of the writing of this paper the TOFxPH Oxygen observations are deemed unreliable and no aggregate values are calculated within the PAP data files. The data is nevertheless provided as a product so that the RBSPICE team can update the calibration information and reprocess the data once the causes of the contamination are understood and can be removed, resulting in newly calibrated data considered usable for science.

Level 3 Pitch Angle, Phase Angle, and Pressure (PAPAP) Processing Algorithms

Level 3 differential flux data is used in the calculation of the Level 3 PAPAP data products by utilizing the pitch angle data from each telescope and a predefined set of pitch angle bins with centers the same as for the Level 3 PAP data product. The predefined set of phase angle bins are calculated in thirty (30) degrees separation with the first center set at zero degrees. Moments are also calculated as with the Level 3 PAP data product and include calculated species specific perpendicular and parallel partial particle pressures, density for a select set of energy channels, the omnidirectional differential flux for each energy channel, and fully integrated particle flux over the entire energy range. An algorithm diagram is not shown for this product as it is almost exactly the same as for the Level 3 PAP algorithm with one change. At the point in which we identify the “PA Bin” number for the record we instead identify the PitchBin and the PhaseBin for the record. The FxDU related variables are expanded with one additional dimension, e.g. FxDU[#energy channels, #Pitch bins, #Phase bins].
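The additional phase angle dimension can be illustrated as follows. With 30-degree bins and the first center at zero degrees, the first bin spans 345 to 15 degrees and wraps through 360. The function name and the number of energy channels below are hypothetical.

```python
import numpy as np

N_PITCH_BINS = 17        # same pitch angle bins as the Level 3 PAP product
PHASE_WIDTH_DEG = 30.0   # phase bin separation stated in the text
N_PHASE_BINS = 12        # 360 / 30


def phase_bin_index(phase_deg):
    """Index of the phase bin whose center is nearest to phase_deg.

    Bin k is centered on k * 30 degrees; bin 0 wraps around 360 degrees.
    """
    shifted = (phase_deg + PHASE_WIDTH_DEG / 2.0) % 360.0
    return int(shifted // PHASE_WIDTH_DEG) % N_PHASE_BINS


# Hypothetical channel count, used only to show the expanded array shape.
n_energy = 14
fxdu = np.zeros((n_energy, N_PITCH_BINS, N_PHASE_BINS))
```

Shifting by half a bin width before the integer division is what centers bin 0 on zero degrees instead of starting it there.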

Level 4 Phase Space Density Data Products

One of the original lofty goals of the RBSPICE science team was to have the capability to generate a standardized Level 4 Phase Space Density (PSD) data product for each of the proton, helium, and oxygen species measured by the RBSPICE instruments. The development of that data product was strongly dependent upon available funding and unfortunately there were not enough resources to support this activity during the mission. The following describes some of the work that was accomplished as part of the initial investigation into the feasibility of generating a PSD product; it was presented by the lead author at the in-person Science Working Group (SWG) meeting of the larger Van Allen Probes science teams in May 2018.

In a traditional Phase Space Density analysis using magnetospheric plasma and energetic particle data, the analysis attempts to blend data observations from particle instruments with a chosen magnetic field model. This method provides a guide to understand the dynamics of the particles through Liouville’s theorem, where phase space density organized by an adiabatic invariant coordinate system is unaffected by slow temporal changes in the magnetic field. In the analysis one utilizes the collisionless Vlasov equation as the appropriate representative of Liouville’s theorem for plasma physics:
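For reference, the textbook form of the collisionless Vlasov equation in SI units is given below. The notation (charge \(q\), mass \(m\), fields \(\vec{E}\) and \(\vec{B}\)) is assumed here and may differ from the form used by the authors.

\[
\frac{\partial f}{\partial t} + \vec{v}\cdot\nabla_{\vec{r}} f + \frac{q}{m}\left(\vec{E} + \vec{v}\times\vec{B}\right)\cdot\nabla_{\vec{v}} f = 0
\]

It states that the distribution function \(f(\vec{r},\vec{v},t)\) is constant along particle trajectories in phase space, which is the content of Liouville's theorem for a collisionless plasma.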

As part of the algorithm, it is necessary to convert the observed intensities into a phase space density time series sampling of the distribution function. The conversion from flux, \(J(E,\alpha ; \vec{r},t)\) with \([J \left ( E,\alpha ; \vec{r} \right ) ]= \left (\text{cm}^{2}\,\text{sr}\,\text{s}\,\text{MeV}\right )^{-1}\), to phase space density, \(f( \mu _{k}, \alpha _{j}; \vec{r},t)\) with \([f \left ( E,\alpha ; \vec{r} \right ) ]= \text{s}^{3} / \text{m}^{6}\), is presented in Schulz and Lanzerotti (1974):

and when converting from a velocity space integration into an energy space integration becomes written as:
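As a hedged sketch, the relation commonly quoted for this conversion and its energy form are written out below. The symbols \(p\) (particle momentum), \(m_{0}\) (rest mass), and \(c\) (speed of light) are assumptions introduced here, and numerical unit conversion factors are omitted.

\[
f = \frac{J}{p^{2}}
\]

\[
p^{2}c^{2} = E\left(E + 2 m_{0}c^{2}\right)
\quad\Longrightarrow\quad
f = \frac{J\,c^{2}}{E\left(E + 2 m_{0}c^{2}\right)}
\]

Here \(E\) is the kinetic energy of the particle, so the second expression follows from the first by substituting the relativistic energy-momentum relation.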

The analysis is strongly dependent upon binning the data into model dependent adiabatic invariant coordinates: the first adiabatic invariant \(\mu\), the magnetic moment; the second adiabatic invariant \(K\), related to the bounce period; and \(L^{*}\), the Roederer L-shell parameter.

The RBSPICE SOC lead started the development of a model independent approach to packaging the particle data which would easily be used by the magnetospheric modeling community as a direct input for whatever specific field model desired which could then also provide comparative corrective feedback to the simulation. The work itself started as part of the calibration work between the RBSPICE/TOFxPH data and the ECT/HOPE data with the intent of using coupled energy spectra and PSD plots to better understand the dependencies in the \(R_{HR} \) factor (see Sect. 3 – Science Coordination Activities subsection on ECT and RBSPICE cross calibration).

The algorithm currently developed uses the in-situ measured magnetic field in the spacecraft coordinate system i.e., the EMFISIS Level 2 UVW data product (also used for RBSPICE pitch and phase angle calculations). The magnetic moment, \(\mu \), is calculated for each energy bin (\(E_{i} \)) and pitch angle (\(\alpha _{j} \)) combination for each spin record of the RBSPICE Level 3 PAP data product:

The observed flux is converted into phase space density using the above equation for energy space integration resulting in a measured value of the distribution function for each value of \(\mu _{i,j}\), i.e. \(f_{i,j} ( \mu _{i,j}, \alpha _{j}; \vec{r} )\). The magnetic moment is then divided into logarithmic bins, \(\mu _{i,j} \rightarrow \mu _{k}\). The PSD values are then binned and normalized into a two-dimensional data structure organized by \(\mu _{k}\) and \(\alpha _{j}\). Figure 16 shows an example of the calculated PSD data comprised of ECT/HOPE, RBSPICE/TOFxPH, and RBSPICE/TOFxE proton data. Each data set is plotted in an individual spectrogram panel, logarithmically binned in \(\mu \) and organized by pitch angle, \(\alpha \). The ECT/HOPE and RBSPICE/TOFxPH proton data overlap in \(\mu \) space with RBSPICE/TOFxE proton data at higher values of \(\mu \). The figure displays two sets of panels of PSD at different times during the St. Patrick’s Day storm on March 18, 2015 for Spacecraft B. The left three panels reflect a time when the magnetic field is weaker, sampling slightly higher values of \(\mu \) space compared to the right three panels. The \(x\)-axis in \(\mu \) space and the contour color scale are the same between each set of panels, although the associated energy range, \(\mu (E,\alpha )\), is higher for the earlier panels, as is reflected by the secondary \(x\)-axis displayed for each contour panel. This example calculation displays the capability of generating a combined PSD from ECT/HOPE and RBSPICE data that can be a direct input into modeling systems.
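The chain of steps (magnetic moment from the measured field, flux to PSD, logarithmic binning in \(\mu\)) can be sketched in Python as below. The formulas are the standard relativistic expressions, \(\mu = p_{\perp}^{2}/(2 m_{0} B)\) and \(f = J/p^{2}\), not a transcription of the SOC software; the function names, units, and bin limits are assumptions, and no unit conversion factor is applied to the PSD.

```python
import numpy as np

PROTON_REST_MEV = 938.272  # proton rest energy m0*c^2 in MeV


def magnetic_moment(energy_mev, alpha_deg, b_nt, rest_mev=PROTON_REST_MEV):
    """Relativistic magnetic moment mu = p_perp^2 / (2 m0 B), in MeV/G."""
    pc_squared = energy_mev * (energy_mev + 2.0 * rest_mev)  # (pc)^2 in MeV^2
    b_gauss = b_nt * 1.0e-5                                  # 1 nT = 1e-5 G
    return pc_squared * np.sin(np.radians(alpha_deg)) ** 2 / (2.0 * rest_mev * b_gauss)


def flux_to_psd(flux, energy_mev, rest_mev=PROTON_REST_MEV):
    """Phase space density as flux / (pc)^2, in flux units per MeV^2."""
    return flux / (energy_mev * (energy_mev + 2.0 * rest_mev))


def log_mu_bin(mu, mu_min, mu_max, n_bins):
    """Index of the logarithmic mu bin for each value, or -1 if out of range."""
    mu = np.asarray(mu, dtype=float)
    edges = np.logspace(np.log10(mu_min), np.log10(mu_max), n_bins + 1)
    idx = np.digitize(mu, edges) - 1
    return np.where((mu >= mu_min) & (mu < mu_max), idx, -1)
```

For a 100 keV proton at 90 degrees pitch angle in a 100 nT field, `magnetic_moment` returns approximately 100 MeV/G, close to the non-relativistic estimate \(E/B\).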

3 Science Coordination Activities

One of the key elements of the Van Allen Probes Mission was the intentional effort to have the instrument teams coordinate science activities, both within the mission specific group of instrument teams and with external teams such as the team from the Balloon Array for Radiation-belt Relativistic Electron Losses (BARREL) Mission, as well as with other assets such as ground radar stations. The most important coordination activities between the instrument teams involved the cross calibration of similar instruments, e.g., the overlap of proton energy channels between ECT-HOPE and RBSPICE/TOFxPH. The following section describes some of the key coordination activities and results that have been accomplished to this point during the Van Allen Probes Mission.

3.1 Electric and Magnetic Field Instrument Suite and Integrated Science (EMFISIS)

Over the course of the Van Allen Probes mission, EMFISIS conducted various science coordination activities. First and foremost, because magnetometer data is essential for calculating particle pitch angles and field-aligned coordinates for fields data, EMFISIS coordinated with all teams to provide accurate magnetic field data in spacecraft coordinates.

Other coordination efforts included:

- Working with the BARREL balloon team to coordinate burst mode data taking at times when the Van Allen Probes spacecraft were magnetically conjugate to regions in which the BARREL balloons were flying. This is described in full detail in the EFW section which follows.

- Coordinating with lightning researchers in the US and Hungary to take burst data over regions where they had good ground measurements and to which the Van Allen Probes were magnetically conjugate. This enabled more detailed studies of lightning-generated whistlers.

- Coordinating with researchers at Goddard Space Flight Center to take burst mode data when the Van Allen Probes were at perigee in regions where spread-F is observed. This resulted in some highly detailed observations of spread-F, including some unusual observations of magnetic signatures associated with these waves.

- Coordinating efforts to identify times when the Van Allen Probes and the Japanese Arase satellite had conjunctions in order to take burst mode data for cross comparisons between the two missions. This has led to several papers on conjugate observations.

In addition to these efforts, EMFISIS did its best to take burst mode data or implement different modes of operation on request for short periods of time.

3.2 Electric Field and Waves Suite (EFW)

During the Van Allen Probes mission the Electric Fields and Waves (EFW) instrument took part in several collaborative science campaigns with other missions including BARREL, FIREBIRD/AC6, and WWLLN. These collaborations were focused efforts to collect high time resolution burst waveform data, generally during times of magnetic or drift shell conjunctions.

The three most significant collaborations were:

- BARREL (Balloon Array for Radiation-belt Relativistic Electron Losses) – The EFW and BARREL teams worked closely together for six balloon campaigns in order to determine the temporal and spatial characteristics of magnetospheric waves and resulting electron loss. These campaigns included the 2013 and 2014 Antarctica campaigns (roughly Jan–Feb, 2013 and Dec, 2013–Feb, 2014), three Kiruna, Sweden turnaround campaigns (7 balloons in Aug, 2015; 7 balloons in Aug, 2016; 2 balloons in June, 2018), and an Antarctica superpressure campaign where a single balloon remained aloft from Dec, 2018 to Feb, 2019. Details on these campaigns from the BARREL perspective are discussed by Woodger et al. (2015), Millan et al. (in prep) and Johnson et al. (2020).

- FIREBIRD (Focused Investigations of Relativistic Electron Burst Intensity, Range, and Dynamics) and AC6 (AeroCube 6) – EFW provided burst 1 collection during times of close magnetic conjunction in order to further understand the connection of magnetospheric waves (primarily chorus) and microburst precipitation. This included several month-long campaigns from 2015–2019 (see Johnson et al. 2020 for details).

- WWLLN (Worldwide Lightning Location Network) – EFW provided burst collection during times when the Van Allen Probes mapped to magnetic field lines over the continental United States in order to study the manner in which lightning activity couples into whistler mode radiation in the inner magnetosphere. The decision to telemeter burst data was based on whether or not significant lightning activity was detected (Zheng et al. 2016).

By mission’s end, EFW had telemetered a substantial dataset of spatially separated, high time resolution data during dynamic times, leading to a number of publications (see Breneman et al. 2022).

EFW Campaign with Balloon Array for Radiation-Belt Relativistic Electron Losses (BARREL)

EFW’s first significant collaborative effort was with the first campaign, in 2013, of the Balloon Array for Radiation-belt Relativistic Electron Losses (BARREL) mission of opportunity (Millan et al. in prep; Woodger et al. 2015). During this roughly two-month long effort the BARREL team launched a total of 20 balloons from SANAE and Halley Bay stations in Antarctica. Balloons had an average duration aloft of approximately 12 days, and typically 6 balloons were aloft at any given time (Woodger et al. 2015). At altitudes of \(\sim30\text{--}40~\text{km}\) the balloons measured Bremsstrahlung X-rays created from external sources including electron precipitation from the radiation belts in addition to galactic cosmic rays, solar flares, and solar energetic protons. Using a forward folding technique the X-ray spectrum could be reliably used to estimate the spectrum of the incoming flux, particularly when constrained by in situ flux measurements from satellites (see Millan et al. in prep; Woodger et al. 2015 for more details). These measurements filled a gap in the near-equatorial Van Allen Probes observations by allowing a direct measurement of precipitating flux – not typically possible for near-equatorial satellites which cannot resolve the small (\(\sim1\text{--}2~\text{deg}\)) loss cone.

One of the key science goals of BARREL was to quantitatively investigate wave-particle interactions leading to electron precipitation by various wave types and other precipitation drivers at times of magnetic or drift shell conjunction. In 2013 (2014) conjunctions were focused in the morning (afternoon) sector, as shown in Fig. 17 (derived from Fig. 1 in Woodger et al. 2015). This location played an important role in the EFW burst 1 operation, with morning sector conjunctions typically sampled at the highest rate (16K) in order to resolve chorus waves, and with lower rates for the afternoon sector to resolve lower frequency hiss and EMIC waves.

The EFW/BARREL collaboration was highly successful for both missions; planning and communication between the teams were key components of this success. A lead time of approximately three days was needed to decide when burst data were to be collected, to ensure the commands would be successfully uplinked to the satellites. Shorter timeframes were sometimes available, but we tried to make decisions about burst collection three days out. It was therefore clear that we would need a well-defined method to plan and prioritize collection periods.

The relevant teams met informally at AGU a year prior to the first BARREL campaign to discuss strategy. A plan was developed for the BARREL team to create expected trajectories as Google Earth KML files to enable prediction of conjunctions between the balloons and satellites. These plots, which included the balloon flight paths and the magnetic footprint of the Van Allen Probes, ground stations, and other satellites, were then referenced when prioritizing burst collection and download.

Starting in mid-December 2012, when the team declared flight-ready in Antarctica, the BARREL team started sending daily emails that included a high-level summary of the space weather and any potential upcoming activity, followed by updates about which balloons were likely to be launched or terminated, as well as which were still afloat. A list of observed precipitation events followed, along with the current burst data collection times and data in the queue to be downloaded. These two pieces of information facilitated discussions between the teams to prioritize downloading data which was likely to be highly impactful. The emails continued with updates from other instruments, missions, and ground observations, along with a more detailed look at the current space environment and predictions of upcoming activity. As these emails were long, they were often ended with a fun fact. This may seem unnecessary to mention or add, but it aided in keeping spirits light which helped with a near 24/7 cadence over a few months.

After the daily emails were sent, the BARREL, EFW, and other instrument and mission teams held a daily phone call to tag up and plan for new burst data collection and downloads. Because we made sure that the emails described above were sent about 2 hours prior to the phone call, our chats were very focused and short. Even allowing a few minutes at the beginning to make sure everyone was on, say hi, ask about the weather, etc., the average length of these telecons was 6 minutes. Telecons were cancelled when not needed, and the team worked hard to avoid weekend tag-ups to give people some much deserved and needed downtime.

As many researchers were interested in the goings-on of the BARREL campaigns but did not want to receive daily emails, we offered a few other forms of communication. The emails were pared down to remove the identification of event times and other potentially sensitive information and then posted to a blog, http://relativisticballoons.blogspot.com/. In the later campaigns this blog was used for public outreach, and we added a second, science-focused blog for researchers. We also started posting when we were launching and terminating balloons, along with some other fun information, to a Twitter account, @keV_Balloons, and to a Facebook page. These interactions provided unexpected engagement with the broader research community. Specifically, the Twitter interactions with other space physics researchers led to the collection of extra ground data and resulted in successful proposals to get time on EISCAT (which was near conjugate to the Kiruna launch site) for the 3rd and 4th BARREL campaigns.

Through advance planning, respecting people’s time, and accommodating their preferred communication format, we were able to have a successful first campaign. This success led everyone to work extra hard, and even to look forward to a second campaign and additional intense follow-on campaigns.

Another aspect that enabled the success of BARREL as a mission of opportunity was the openness of the Van Allen Probes team to including BARREL in other activities. The BARREL team regularly had joint meetings with the EFW and other teams and was always included in the twice-yearly mission meetings. Perhaps most importantly, the BARREL team was included in the Van Allen Probes team's outreach efforts to the broader scientific community. This included the Van Allen Probes Data/Analysis help sessions during posters at AGU and GEM as well as inclusion within chapters such as this one. This was further enabled by the BARREL team ensuring that their data were available through CDAWeb and that analysis software was provided through SPEDAS (Angelopoulos et al. 2019).

EFW Campaign with JAXA Arase Mission

The Van Allen Probes EFW instrument team and the Japan Aerospace Exploration Agency (JAXA) Exploration of energization and Radiation in Geospace (ERG) science team (Miyoshi et al. 2018; the mission is also referred to as “Arase”) collaborated in a prolonged science campaign from 2017 to 2019, focusing on science topics that motivated the collection of high-rate burst waveform electric and magnetic field data (see Breneman et al. 2022 for more details). This dataset provides simultaneous observations of plasma waves with a range of spatial separations in L and MLT, as well as the first significant dataset of wave observations separated primarily by magnetic latitude, collected during more than 500 magnetic conjunctions. The latter is critical for understanding the way in which plasma waves propagate away from their (typically) near-equatorial source to higher latitudes. Results have led to significant increases in our understanding of the spatial and temporal dynamics of wave/particle interactions in the inner magnetosphere.

For example, Colpitts et al. (2020) used simultaneous conjunction observations at magnetic latitudes of 11 deg (Van Allen Probe A) and 21 deg (Arase) to directly show how rising tone chorus packets propagated from their equatorial source to higher magnetic latitudes. Details of this propagation (e.g., ducted vs non-ducted) are critical for determining how chorus waves interact with electrons with energies from tens to hundreds of keV. Figure 18 displays the conjugate observations of the RBSP B Magnetic Field wave measurements on the same time scale as the Arase Magnetic Field wave measurements. It is clear that both spacecraft observe the waves even though they are at different inclinations (\(10^{\circ}\) for RBSP and \(32^{\circ}\) for Arase (JAXA ARASE Website)).

Observations of the RBSP-B and ARASE magnetic field instruments while the spacecraft are on the same magnetic field line and are separated by \(\sim20~\text{deg}\) in magnetic latitude. Figure taken from Colpitts et al. (2020)

Comparison of ECT/HOPE, RBSPICE/TOFxPH, and RBSPICE/TOFxE spectra at 2017-02-02T02:09:36 UTC using the OMNI data variables from each data product. The left panel shows the raw spectra from each instrument's data product with HOPE in red, TOFxPH in blue, and TOFxE in green. There is a clear discrepancy between the RBSPICE TOFxPH/TOFxE OMNI differential flux and that of HOPE, as shown by the orange oval. The right panel shows the same data except that the HOPE data have been increased by a factor of \(R_{HR} \cong 1.98\), referenced in the figure as the HOPEMOD factor, which shifts the measurements such that they now form a continuous spectrum, excluding the lowest TOFxPH energy channels, as shown by the green oval. The black-circled TOFxPH energy channels lifted above the merger of the HOPE and TOFxPH/TOFxE spectra are due to lower energy oxygen ions in the TOFxPH system being interpreted as protons. The specific factor, \(R_{HR}\), was calculated using a simple algorithm as described in this section

The relative time of arrival difference and wave normal angles for chorus packets identified on both spacecraft were used to constrain a ray-tracing analysis which indicated that the waves were generated near the magnetic equator with nearly parallel wave normal angles and then propagated without any significant density ducting to higher latitudes.

A similar result was provided by Matsuda et al. (2021) for simultaneous observations of EMIC waves on Arase, RBSP, and ground magnetometers and their effect on ion heating. A comprehensive summary of these results and many others from this collaboration is presented in a submitted paper by Miyoshi et al. (2022).

3.3 ECT HOPE and RBSPICE Cross Calibration Factor – \(R_{HR}\)

The RBSPICE and ECT teams have worked on cross calibration of the species-specific observations between the ECT/HOPE, ECT/MagEIS, and the RBSPICE instrument observations for similar energy channels. These calibration activities resulted in adjustments to the efficiencies in the calibration table for the RBSPICE instrument with additional work still ongoing. One of the key cross calibration activities has been to resolve an apparent discrepancy between the upper energy channels of the HOPE and the lower energy channels of the RBSPICE proton differential flux measurements. As of the writing of this manuscript there is an approximate factor of 2 difference between the HOPE and RBSPICE proton data for the HOPE release 4 data set. Upon analysis, the problem is significantly more complex than a simple multiplicative factor although there is an expectation that some of this discrepancy will be resolved in the upcoming release 5 dataset.

For example, the left panel of Fig. 20 shows two combined proton spectra using OMNI data from HOPE (red), RBSPICE/TOFxPH (blue), and RBSPICE/TOFxE (green). Error bars reflect the width of each energy channel (\(\hat{x}\)-axis) and the Poisson counting errors (\(\hat{y}\)-axis). There is a clear mismatch between the HOPE OMNI differential flux higher energy channel measurements and the RBSPICE/TOFxPH measurements well outside the range of the error bars. In contrast, the TOFxPH and TOFxE measurements form a continuous spectrum within the limitations of the errors.

Distribution of the correction factor, \(R_{HR}\), for each spacecraft (A-left, B-right) accumulated over the entire mission. Each black curve includes all data and the rest of the curves provide the breakout by \({L}_{Dipole}\) segments between 3.0 and 7.0 in \(0.5R_{E}\) increments. The consistency across \({L}_{Dipole}\) is reflective of the significant work to cross calibrate the ECT-HOPE and RBSPICE observations throughout the mission

In the right panel, a simplistic algorithm has been used to match the HOPE upper energy observations with the RBSPICE/TOFxPH observations of similar energy. This figure includes a printout line, HOPEMOD Factor (t0), which identifies the scalar multiplicative factor used to change the HOPE flux to match that of the RBSPICE/TOFxPH flux for the time 0 observation. In this particular example, the calculation itself is only accurate for the upper energy channels of the HOPE data. This is in part because the lower energy channels of the RBSPICE/TOFxPH data for the observation time are contaminated with accidentals, causing the lifting of the TOFxPH spectra (black circled area). For this particular time, the factor needed to modify the HOPE flux is \(\sim1.98\). The algorithm used is described in the following steps:

1) \(\langle j_{HOPE} \rangle = \frac{1}{3}\sum_{i=68}^{70} j_{HOPE}(E_{i})\): \(\begin{array}[t]{l} E_{range} = [30.3~\text{keV}\text{--}47.8~\text{keV}],\ \Delta E = 17.5~\text{keV}\\ E_{68} = 32.7\pm 2.5~\text{keV}\\ E_{69} = 38.1\pm 2.8~\text{keV}\\ E_{70} = 44.4\pm 2.5~\text{keV} \end{array}\)

2) \(\langle j_{RBSPICE_{TOFxPH}} \rangle = \frac{1}{4}\sum_{i=15}^{18} j_{RBSPICE_{TOFxPH}}(E_{i})\): \(\begin{array}[t]{l} E_{range} = [31.2~\text{keV}\text{--}46.5~\text{keV}],\ \Delta E = 15.3~\text{keV}\\ E_{15} = 32.9\pm 3.3~\text{keV}\\ E_{16} = 36.3\pm 3.6~\text{keV}\\ E_{17} = 40.1\pm 3.9~\text{keV}\\ E_{18} = 44.3\pm 4.4~\text{keV} \end{array}\)

3) \(R_{HR} = \frac{\langle j_{RBSPICE_{TOFxPH}} \rangle}{\langle j_{HOPE} \rangle}\)

(Note: \(R_{HR}\) is referenced as the HOPEMOD factor, \(R_{HOPEMOD}\), or just HOPEMOD in some plots)

4) \(j_{HOPE_{ch}} \rightarrow R_{HR} \cdot j_{HOPE_{ch}}\), applied to each HOPE energy channel \(ch\)

This particular algorithm provides a zeroth-order calibration between the HOPE and RBSPICE instruments on a spin-by-spin basis. The full calibration problem is significantly more complex: it depends on where the spacecraft is within its orbit in both L and MLT, on the ongoing level of magnetospheric activity as measured by Sym-H (or Dst), and on whether the spacecraft is inside or outside the plasmasphere.
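The four steps above can be sketched in a few lines of Python. This is only an illustrative sketch, not the SOC code: the function names are hypothetical, the per-spin fluxes are assumed to arrive as plain sequences indexed by channel number, and whether the channel numbers quoted above are zero- or one-based in the actual data products is not stated in the text.

```python
from statistics import fmean

# Channel numbers quoted in steps 1 and 2. Using them directly as
# sequence indices is an assumption of this sketch.
HOPE_CHANNELS = (68, 69, 70)
TOFXPH_CHANNELS = (15, 16, 17, 18)


def mean_flux(flux, channels):
    """Average the differential flux over the listed channel indices."""
    return fmean(flux[i] for i in channels)


def hopemod_factor(hope_flux, tofxph_flux):
    """Return R_HR = <j_TOFxPH> / <j_HOPE> for one spin (steps 1-3).

    Returns None when the mean HOPE flux is not positive, since the
    ratio is then undefined.
    """
    j_hope = mean_flux(hope_flux, HOPE_CHANNELS)
    j_rbspice = mean_flux(tofxph_flux, TOFXPH_CHANNELS)
    if j_hope <= 0.0:
        return None
    return j_rbspice / j_hope


def apply_hopemod(hope_flux, r_hr):
    """Scale every HOPE channel by the same factor R_HR (step 4)."""
    return [r_hr * j for j in hope_flux]
```

With synthetic flat spectra, for instance a HOPE flux of 100 in every channel and a TOFxPH flux of 198 in every channel, `hopemod_factor` returns a ratio of about 1.98, and `apply_hopemod` lifts the HOPE spectrum onto the TOFxPH level. Real spectra would additionally need fill-value and quality-flag handling, which is omitted here.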

Figure 20 shows the distribution of the values of \(R_{HOPEMOD}\) for the entire mission for both spacecraft (A-left, B-right). In the plots, the black curve displays the distribution for the entire mission for all values of \(R_{HOPEMOD}\) within the cutoff limits: \(R_{range} = \left [ 0.01, 100.0 \right ]\) and \(L_{Dipole} =[3.0 R_{E}, 7.0 R_{E} ]\). The rest of the curves show the distributions of \(R_{HOPEMOD}\) for \(L_{Dipole}\) segments between \(3.0R_{E}\) and \(7.0R_{E}\) in \(0.5R_{E}\) increments. Each inset plot displays the location of the peak for each curve, with errors calculated based upon the width of the individual peaks.
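The binning behind such distributions can be illustrated with a short sketch. The cutoff limits and the \(0.5R_{E}\) segment width come from the text; everything else is an assumption made for illustration, including the function names, the use of logarithmically spaced bins in \(R\), the half-open treatment of the upper \(L_{Dipole}\) edge, and taking the peak as the centre of the most populated bin (the peak-width error estimate mentioned above is not reproduced).

```python
import math
from collections import Counter

R_MIN, R_MAX = 0.01, 100.0            # cutoff limits quoted in the text
L_MIN, L_MAX, L_STEP = 3.0, 7.0, 0.5  # L_Dipole range and segment width


def l_bin(l_dipole):
    """Return the lower edge of the 0.5 R_E segment holding l_dipole.

    Returns None outside [3.0, 7.0); the half-open upper edge is a
    simplification of this sketch.
    """
    if not (L_MIN <= l_dipole < L_MAX):
        return None
    return L_MIN + L_STEP * math.floor((l_dipole - L_MIN) / L_STEP)


def r_histograms(samples, bins_per_decade=20):
    """Histogram log10(R) separately for each L_Dipole segment.

    samples: iterable of (l_dipole, r_hr) pairs, one per spin.
    Returns {segment lower edge: Counter of log-bin index -> count}.
    """
    hist = {}
    for l_dipole, r in samples:
        edge = l_bin(l_dipole)
        if edge is None or not (R_MIN <= r <= R_MAX):
            continue  # outside the cutoff limits
        k = math.floor(math.log10(r) * bins_per_decade)
        hist.setdefault(edge, Counter())[k] += 1
    return hist


def peak_r(counter, bins_per_decade=20):
    """Return R at the centre of the most populated log bin."""
    k, _ = counter.most_common(1)[0]
    return 10 ** ((k + 0.5) / bins_per_decade)
```

Calling `r_histograms` on all per-spin `(L_Dipole, R_HR)` pairs for one spacecraft and then `peak_r` on each segment's counter gives one peak value per \(L_{Dipole}\) segment, which is the kind of quantity summarized in the inset plots.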

Figure 21 displays these peak measured values for the entire mission for both spacecraft (A-left, B-right) as a function of \(L_{Dipole}\) for different times throughout the mission. The time segments each represent one quarter of a precession of the petals of the Van Allen Probes orbits. Each time segment is centered on one of the primary MLT points of midnight, dusk, noon, or dawn, in order of precession periods over the 7-year mission. RBSPICE HV gain adjustments were made in 2013 and 2015; the ratio of HOPE to RBSPICE/TOFxPH flux observations remains fairly constant over those years but starts to drift downward thereafter. The final figure of this section displays the peak measured value of \(R_{HR}\) for different times within the mission (A-top, B-bottom). These curves show more clearly that there is a drift in the \(R_{HR}\) value, which is indicative of degradation of each of the RBSPICE detectors. Each curve shows a constant value of \(R_{HR}\) until the final calibration changes in 2015, and thereafter the value degrades. The remaining details of the calibration story of HOPE and RBSPICE proton observations are to be presented in a future paper.

Plot of the \(L_{Dipole}\) dependency of \(R_{HR}\) for different periods throughout the mission. The dependency on \(L_{Dipole}\) is fairly constant throughout the mission except for 1) the initial quarter period (2013-031 through 2013-166) where both instruments are adjusting HV gain to stabilize rates and 2) the final precession period (or so) where the RBSPICE instrument performance has degraded especially for \(L<5\)

Plot of the \(R_{HR}\) for SC-A (top) and SC-B (bottom) for individual segments of \(L_{Dipole}\) as a function of Mission time. After the last RBSPICE calibration adjustment in January 2015 there is a slow degradation of the RBSPICE instrument that is captured very clearly in these plots comparing the RBSPICE and HOPE proton flux over time

4 Science Analysis Software

Each of the Van Allen Probes Science Operations Centers (SOCs) used existing software or designed and programmed new software to provide a graphical view of the key indicators of instrument performance. The programs used were highly specific to the instruments and were built with a full understanding of the instrument variables and parameters, giving the engineering and science teams the capability to fine-tune instrument performance throughout the mission. The sections below provide details on the software programs used by each of the instrument teams.

4.1 EMFISIS and ECT