Abstract

The solar magnetic field dominates and structures the solar coronal plasma. Detailed insights into the coronal magnetic field are important to understand most physical phenomena there. While direct, routine measurements of the coronal magnetic field are not available, field extrapolation of the photospheric vector-field measurements into the corona is the only way to study the structure and dynamics of the coronal field. Here we focus on global coronal structures traditionally modeled using spherical grids and synoptic vector magnetograms as boundary conditions. We developed a new code that performs nonlinear force-free magnetic-field extrapolations in spherical geometry. Our new implementation is based on a well-established optimization principle on a Cartesian grid and a single spherical finite-difference grid. In the present work, for the first time, the algorithm is able to reconstruct the magnetic field in the entire corona, including the polar regions. The finite-difference numerical scheme that was employed in previous spherical-code versions suffered from numerical inefficiencies because of the convergence of those grids on the poles. In our new code, we implement the so-called Yin–Yang overhead grid, the structure of which addresses this difficulty. Consequently, both the speed and accuracy of the optimization algorithm are improved compared to the previous implementations. We tested our new code using the well known semi-analytical model (Low and Lou solution). This is a commonly used benchmark for nonlinear force-free extrapolation codes.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Reconstructing the global coronal magnetic field of the Sun remains an open research problem in solar physics. The magnetic structure and topology in the solar atmosphere are of significant importance in modeling and understanding the dynamics of the solar corona, as phenomena such as solar flares and coronal mass ejections are driven by coronal magnetic activity. Our understanding and ability to model and predict such phenomena require a highly accurate coronal magnetic-field model. Progress in this field is hindered partly by the current lack of direct measurements of the magnetic field in the solar corona and of other physical quantities, such as plasma temperature and density that define the state of the coronal plasma, and by the very high complexity that the solar corona exhibits as a dynamical system. Nevertheless, various methods have been developed during the past five decades for modeling the solar corona. The photospheric magnetic-field measurements have been used as boundary conditions for such models. These models have different levels of sophistication, including different assumptions for the state of the coronal plasma and different requirements of computational resources. Deriving the coronal electric currents plays an important role in characterizing the different models. The simplest approach is to assume that no current exists within the corona, which leads to potential fields (Altschuler and Newkirk, 1969; Schatten, Wilcox, and Ness, 1969). Global potential fields require only line-of-sight (LOS) magnetic fields as the boundary. A popular approach for the outer boundary is the assumption of a source surface, where all field lines become parallel to the radial direction. This mimics the effect of stretching the field lines as a consequence of the solar-wind flow. These are the so-called potential-field source surface (PFSS) models. Schatten (1972) extended the PFSS model by introducing current sheets. Other global models take into account that the real coronal magnetic field has currents and is non-potential. Solving the full MHD equations for coronal modeling was done for axi-symmetric solutions by Mikić and Linker (1994) and in 3D by Mikić et al. (1999). MHD codes with an adaptive mesh refinement have been developed to study the solar corona, including modeling the solar wind (Feng et al., 2012b; Feng, 2020). Also popular are codes that solve subclasses of the full MHD equations, e.g. a special class of global magneto-hydro-static equilibria (see Bogdan and Low, 1986; Neukirch, 1995, for details) and stationary MHD, including the solar-wind flow (Wiegelmann et al., 2020). For an overview of global coronal magnetic-field models, see the review articles by Mackay and Yeates (2012) and Wiegelmann, Petrie, and Riley (2017), and for force-free fields by Wiegelmann and Sakurai (2021).

Recent advances mainly within the last 15 years in both observational facilities, such as full-disk vector magnetograms from the Synoptic Optical Long-term Investigations of the Sun (SOLIS) and the Helioseismic and Magnetic Imager (HMI) onboard the Solar Dynamics Observatory (SDO) and the increase in the available computational resources have contributed significantly to the improvement of the global corona models. Although there is still a lack of direct measurements of the coronal field, in contrast to the past, when only line-of-sight magnetograms were used, high-quality photospheric vector magnetograms can be employed now as more strict boundary conditions. Moreover, because of the recent advances in stereoscopy and the availability of high-resolution extreme-ultraviolet coronal images, it is now possible to validate coronal magnetic-field models, as was proposed by De Rosa et al. (2009) for Cartesian, nonlinear, force-free models. Observing coronal loops simultaneously from several vantage points allows us to reconstruct their 3D geometric structure using stereoscopic techniques (see Aschwanden, 2011, for a review article on stereoscopy). Coronal observations can be used not only as a way to validate computational models, as done, e.g. by Yeates et al. (2018), but also as a tool to improve them, as demonstrated by Chifu, Wiegelmann, and Inhester (2017).

Currently, there are still limitations in deriving the full global structure of the coronal magnetic field. Two of the most significant challenges that one has to face when constructing such global models are the quality of the vector magnetograms at the polar regions and the numerical inefficiencies associated with the convergence of the regular spherical grids towards the poles. The reliability of the vector magnetograms in these high-latitude regions is questionable because of the large observation angle when the observations are performed from the ground (e.g. SOLIS) or spacecraft orbiting within the ecliptic plane (e.g. SDO). As nonlinear force-free models require vector magnetograms as a boundary condition, their applicability is not possible when the data are not reliable. Furthermore, single finite-difference spherical grids suffer from numerical issues at the poles. More specifically, the convergence of the spherical grid towards the poles affects the convergence of the extrapolation algorithms. The successful launch of Solar Orbiter in February 2020 promises new measurements of the vector magnetic field on the solar polar regions. Recent overviews about the Solar Orbiter mission can be found in the articles by Zouganelis et al. (2020) and Rouillard et al. (2020). The Polarimetric and Helioseismic Imager (PHI) onboard Solar Orbiter will provide the magnetic-field vector and the Extreme Ultraviolet Imager (EUI) imaging observations of coronal loops. To be ready for the time when polar-region vector magnetograms will become available, we developed a new code that can use these data as a boundary condition to compute the coronal global magnetic field. Our new code overcomes the problem of grid convergence in the polar regions because it is implemented on a Yin–Yang grid that is specifically developed for modeling spherical objects, such as planets, stars, etc. We structure the article as follows: In Section 2, we give details of our new Yin–Yang code for computing nonlinear force-free fields (NLFFF), and in Section 3 we test our code with a semi-analytic reference solution while also examining its efficiency. Finally, we summarize our results and discuss our future research plans based on the newly developed code in Section 4.

2 Code Description

2.1 Optimization Code

Force-free magnetic fields are defined by both a vanishing Lorentz force and a vanishing magnetic-field divergence. Therefore, they have to obey the following equations:

One possibility to solve Equations 1 and 2 was proposed by Wheatland, Sturrock, and Roumeliotis (2000) through the minimization of a functional \(L\). For the study of global coronal magnetic fields, we use a functional expressed in spherical geometry as introduced by Wiegelmann (2007) and defined as

The definition of the functional (Equation 3) is such that \(L = 0\) fulfils the requirement that the field is both force and divergence-free because the quadratic form in the functional ensures that Equations 1 and 2 are satisfied for \(L=0\). The functional derivative of the functional leads to

where \(\mu \) refers to a positive constant, and the pseudo-force \(\boldsymbol{F}\) is defined as

with

A typical approach to solving nonlinear force-free equilibrium by minimizing Equation 3 is to use a potential field as the initial state, which is a special class of a force-free equilibrium. Potential fields are usually not consistent with the horizontal magnetic-field measurements, which are used as the bottom boundary for our model. Subsequently, when calculating the functional (Equation 3) after having replaced the bottom layer of the potential field with the observed field, there is a (large) deviation of force- and divergence-freeness. The functional \(L\) is then minimized numerically by applying the iterative equation (Equation 4). For the tests performed in this study, we use synthetic synoptic vector magnetograms and generalize the implementation of our spherical optimization code for synoptic magnetograms as described by Tadesse et al. (2014).

2.2 Yin–Yang Grid

When the optimization algorithm is implemented on a regular finite-difference spherical grid, there are some limitations when the computational domain extends towards the poles. Close to the polar regions, the spatial-grid converges, which means that the minimum distance between the grid points decreases. The iteration step scales with the square of the spatial grid resolution and consequently the number of iteration steps until \(L\) reaches its minimum increases dramatically. The dynamic time-step control in our previous spherical code (and also in the Cartesian code) decreases the time-step automatically when \(L\) does not monotonically decrease. Consequently, the time-step decreases by several orders of magnitude, and the number of iterations steps until the convergence increases accordingly. Up to now, this limitation of the code was not significant for the application to currently available data because the lack of reliable magnetic-field measurements in solar polar regions did not allow accurate modeling of the coronal magnetic field above the poles in any case. Consequently, the computational domain was limited between 20 and 160 degrees in co-latitude to achieve a reasonable execution time. In the advent of polar magnetic-field measurements from the Solar Orbiter mission, we implement the optimization code on a Yin–Yang grid to overcome the polar convergence issue. The Yin–Yang grid was introduced by Kageyama and Sato (2004) for geophysical applications. This overhead grid was to our knowledge first applied to solar applications by Jiang, Feng, and Xiang (2012) who implemented a Yin–Yang grid in their CESE–MHD code to compute nonlinear force-free fields equilibria by MHD relaxation. A Yin–Yang grid was also implemented in the AMR–CESE–MHD code to study the global coronal evolution in a data-driven model by Feng et al. (2012a). The Yin–Yang grid consists of two identical spherical grids. The first component of the grid is a regular spherical grid with some cutout regions. More specifically, \(\frac{\pi}{4} < \theta < \frac{3\pi}{4}\) and \(\frac{\pi}{4} < \phi <\frac{7\pi}{4}\), which corresponds to \(45^{\circ }< \theta < 135^{\circ}\) and \(45^{\circ }< \phi < 315^{\circ}\) (\(\theta \) refers to the co-latitude and \(\phi \) to the longitude). The other component of the grid is the Yang grid, which is identical to the complementary Yin grid and perpendicular to it. More specifically, the North Pole of the Yang grid is located in the Equator of the Yin grid with spherical coordinates \(90^{\circ}\) longitude and \(90^{\circ}\) latitude. By North Pole we refer to the point of the sphere at the end of the positive part of the \(z\)-axis of each grid. While both grids do not have polar regions, the two combined grids cover the entire sphere. The spherical grids are defined close enough to the equatorial regions so that the finite-difference grid is almost equidistant. This allows the use of a larger iteration step. The price one has to pay is that the boundaries of each grid should be updated to capture the evolution of the field that happens within the other grid. This should be done while the optimization algorithm is running, thus increasing the algorithm complexity. The corresponding coordinate transformation equations between the two grids are given by

where the index \(e\) is used to refer to the Yin grid and the index \(n\) to the Yang grid. As the two-component grids are completely symmetrical to each other, the same expression is used to transform coordinates from the Yang to the Yin grid. The symmetry of the two grids is very convenient for the implementation of the code because all mathematical and algorithmic procedures can operate on both grids.

2.3 Yin–Yang Implementation

There are two basic differences between the previous version of the optimization code and the newly developed one. All numerical operations called to operate on one grid are also called to operate on the other. The lateral boundaries of each grid (which have been fixed to a potential field in previous code versions) are now updated with values taken from the complementary grid. In this way, the new method overcomes the problem of earlier codes where the lateral boundaries were required to be specified. The Yin–Yang implementation only requires boundary conditions at the photosphere and on the outer boundary. To update the boundaries of one grid using the values from the other, we apply the following procedure:

-

i)

For an arbitrary grid point, we perform a coordinate transformation to find its coordinates with respect to the other grid.

-

ii)

Compute the magnetic-field vector at this point by applying an interpolation method. It is important to note here that the new coordinates of our arbitrary point do not have to match with the coordinates of a grid point of the other grid, and this is why we have to interpolate to estimate the components of the magnetic-field vector.

-

iii)

Transform the magnetic vector back to the initial grid.

Because of the symmetry of the grids, this process is identical regardless of which is the first and which is the second grid. We describe below all the steps needed for the new Yin–Yang optimization code.

-

i)

Initialize the Yin grid by performing a potential extrapolation using the line-of-sight magnetogram as a boundary condition.

-

ii)

Replace the bottom boundary of the Yin grid with a vector magnetogram. Here, a synthetic one deduced from the Low and Lou solution (a semi-analytical force-free equilibrium found by Low and Lou (1990)) is used.

-

iii)

Initialize the Yang grid by coordinate transformation, interpolation, and vector transformation.

-

iv)

Calculate \(L\) with Equation 3 separately on both grids.

-

v)

Add \(L=L_{n} + L_{e}\).

-

vi)

The iterative Equation 4 is used to iterate the field towards minimizing \(L\) separately on both grids.

-

vii)

The boundaries of each grid are updated using the values of the field on the other grid (by coordinate transformation, interpolation, and vector transformation).

-

viii)

The code is terminated when \(L\) becomes stationary.

-

ix)

The final solution can be transformed to both grids.

3 Validation of the Code

3.1 Method

In this section, we want to evaluate the performance of our newly developed code. The aim is to find out whether our code is able to minimize the functional \(L\) down to the numerical precision and to compare the output of our code with a known nonlinear force-free field reference equilibrium. Checking the force-freeness and comparing with a reference solution are standard procedures to validate NLFFF codes implemented on Cartesian and spherical grids. A frequently used reference solution for this aim is a semi-analytical nonlinear force-free equilibrium solution found by Low and Lou (1990). This method solves a Grad–Shafranov equation in spherical coordinates, which is invariant in the \(\phi \)-direction, but displacements of the origin and rotation allow us to compute equilibria changing in all three spatial coordinates. It can be used to evaluate nonlinear force-free codes in 3D (as done for Cartesian geometry by, e.g., Wiegelmann, 2004; Amari, Boulmezaoud, and Aly, 2006; Schrijver et al., 2006; Inhester and Wiegelmann, 2006; Valori, Kliem, and Fuhrmann, 2007) and to evaluate spherical NLFFF codes (Wiegelmann, 2007; Song et al., 2007; Tadesse, Wiegelmann, and Inhester, 2009; Jiang, Feng, and Xiang, 2012; Guo et al., 2012; Contopoulos, 2013; Amari et al., 2013). Following these works, we use a spherical Low and Lou solution as reference to evaluate our code, which we do as follows:

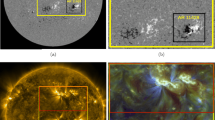

-

i)

Calculate the spherical Low and Lou solution in 3D, which, from now on, we will call the reference solution. A field-line plot for this reference solution is shown in Figure 1, left panel. The reference solution was computed on a spherical grid with \(n_{r} = 45\), \(n_{\theta} = 90\), and \(n_{\phi} = 180\), where \(n_{r}\) is the number of points in the radial direction, \(n_{\theta}\) is the number of points in latitude, and \(n_{\phi}\) is the number of points in longitude.

-

ii)

Using the radial component of the Low and Lou solution as a synthetic magnetogram, we performed a potential-field extrapolation on the same spherical grid, as shown in Figure 1, middle panel.

-

iii)

We use the potential field as computed in the last step as initial equilibrium, and the bottom boundary from the reference solution is taken as a synthetic vector map used as a boundary condition for our new code. The top boundary was selected to be either the top boundary of the potential field or the top boundary from the reference field. The effect of using these different top boundary conditions will be examined in Section 3.2.

-

iv)

We run the optimization code and compare the results with the reference Low and Lou solution and evaluate the influence of several effects as stated below. A visual representation of one of our outputs (dubbed Case 3, see also Table 1) is shown in Figure 1, right panel.

Table 1 The specifications of our test cases (Columns 1 – 4) and a quantitative evaluation of the results (Columns 5 – 11). The first column “Case” names the test cases. The second column “NIS” the Non-Interpolation Steps. This means the number of iterations are done on the Yin and Yang separately without communication between the two grids. Column three “Bound.” specifies the used boundary condition. Here “l” means that the Low and Lou reference field was specified in the photosphere and on the outer boundary, and “p” means that a potential field was specified on the outer boundary. Column 4 “\(\mu \) control” specifies whether a constant iteration time-step was used (value 1), or if and how the time-step was controlled dynamically (see the text for details). Column 5 “Iterations” shows the number of iteration steps until convergence and Column 6 “Time”, the computational time in minutes on Macbook pro with M1 processor. Columns 7 – 9 “L”, “L1”, “L2” show the final value of the minimized functional L (see Equation 3) and its two parts, which are the residual Lorentz force (see Equation 12) and the residual magnetic-field divergence (see Equation 13). Finally, we compare the output of our code with the reference solution by the vector correlation (see Equation 14) in Column 10 “VC” and the energy percentage (see Equation 15) in Column 11 labeled “EP”.

3.2 Code Specifications and Free Parameters

A number of different parameters are used to control the flow of the code and specify different conditions, as explained below. How these specifications influence the quality and computing time of our code is presented in Table 1.

-

i)

Interpolation method: Specifies the order of the interpolation method used during the vector-transformation process. As a first test, we use a bi-linear interpolation. Please note that the \(r\) is always identical on both grids and interpolation is needed only for the lateral directions. In principle, higher-order interpolation methods could be used.

-

ii)

Non-interpolation steps (NIS): Defines the number of optimization steps between updating the boundaries of the one grid with vectors interpolated and transformed from the other grid.

-

iii)

The initial iteration step is \(\mu =10^{-4}\), which is sufficiently small to ensure a strictly decreasing functional \(L\) if \(\mu \) is kept constant.

-

iv)

Iteration-step reducer: This parameter divides \(\mu \) if the functional \(L\) does not decrease after one iteration step. This is considered a non-successful iteration step, which is refused and repeated with the reduced iteration step when running the code with a dynamically controlled time-step.

-

v)

Iteration step increaser: This parameter multiplies \(\mu \) by 1.01 after every successful iteration step. This doubles the iteration step after 70 successful iterations. The optimization ensures a continuous decrease of \(L\) (see Wheatland, Sturrock, and Roumeliotis, 2000). In a numerical implementation, \(L\) can increase if the iteration time-step is too large. The time-step increaser and reducer help to search for the optimum time-step, as high as possible (for a short computing time) and as low as necessary (to ensure a strictly monotonically decreasing \(L\)). The code is terminated either when the maximum number of iterations is reached, or when \(\mu \) becomes smaller than a threshold number: \(10^{-9}\).

-

vi)

The original Yin–Yang grid spans the range of \(45 \leq \theta \leq 135\) and \(45 \leq \phi \leq 315\). In most cases, we use this standard grid, but we also investigate the effect of different (non-standard) grids.

3.3 First Qualitative Evaluation

Our first tool to validate our new code is the comparison between the field lines of the solution resulting from our code with the field lines of the reference solution and the potential solution, as shown in Figure 1. It is evident from the reference solution in the left panel that the output of our code depicts a very good qualitative agreement of the corresponding magnetic-field lines. The potential-field model, which was used as the initial equilibrium for our code, shows large deviations in the field-line plots.

3.4 Quantitative Evaluation

Visualizing our solution and comparing the corresponding magnetic-field-line plots with the reference solution, as done in Figure 1, is a fast way to qualitatively evaluate our results. Here we use a number of quantitative criteria. The value of the functional \(L\) (Equation 3) after the optimization evaluates how close the resulting field is to a force-free and divergence-free state. We compute this also separately for the Lorentz-force part, defined as

and the divergence integral, defined as

Additionally, we compare our results quantitatively with the reference resolution by a number of quantitative measures (as introduced by Schrijver et al., 2006).

Namely, we use the Vector Correlation, defined as

and the Energy Percentage, defined as

The magnetic-field output from our code \([\boldsymbol{b_{i}}]\) is compared with a reference solution \([\boldsymbol{B_{i}]}\) (here the Low and Lou solution). For a perfect agreement of our solutions with the reference, both VC and EP should be unity. To compute these numerical quantities, the sums are taken over all \(N\) data points of the computational grid. More specifically, for the computation of each quantity, we calculate the Yin and Yang parts separately and then add them. In order to not include the overlapping region twice in the computations for these regions, we use the points of the Yin grid. The vector correlation VC is an important indicator of how well the two vector fields agree because it involves the dot products between the vectors, as well as their magnitudes. The percentage of the magnetic energy EP not only helps us to compare the two vector fields but also has a physical meaning. If the energy in our solution is not close to the energy of the reference solution, our model is not that useful because being able to calculate the free magnetic energy stored in the corona is one of the reasons why nonlinear force-free extrapolations are useful. Having the energy percentage close to unity tells us that, overall, the vectors of our vector field have the correct magnitude.

3.5 Comparison of Different Test Cases

-

Case 0:

In this case, the initial potential field and the discretization error (\(L\), L1, and L2) are computed. This does not require optimization, but only one iteration to compute the functional \(L\). A comparison with the reference solution is also done. All comparison criteria should be close to their ideal values so that the potential-field solution is meaningful.

-

Case 1:

This case estimates the discretization error of the reference solution computed on the numerical grids. Again, no iteration was done, just one evaluation of \(L\) was computed.

-

Cases 2 – 4:

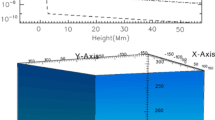

These are the first real tests, which use an initial potential field, and both the bottom and top boundaries are prescribed from the reference solution. This ensures fully consistent boundary conditions. This set-up of boundary conditions for the Yin–Yang code is equivalent to prescribing all six boundaries of the computational box of a Cartesian NLFFF code. All quantities show an almost perfect agreement with the reference solution (Case 1). The values of \(L\), L1, and L2 are even a bit smaller than the discretization error of the semi-analytical reference field. The reason for this is that the code optimizes these quantities on the numerical grids. The results are not affected if the update of the boundaries between the Yin–Yang grid is done after every iteration step (Case 2) or only after every 10 or 100 iteration steps as in Cases 3 and 4, respectively. When examining the convergence of the code, it seems that in Case 2, it converges faster than in Case 3, and in Case 3, it converges faster than in Case 4. This can be understood as a more frequent update of the boundaries that insures faster flow of information between the grids. When we talk about convergence speed, we refer to the number of iterations needed to reduce \(L\). The difference in convergence speed is so small that it is not visible in Figure 3. A significant difference is observed in the computational time, which is reduced by about 8 % as the updating of the boundaries is a lot less frequent in these cases. The reduction of the execution time is not that significant when going from 10 to 100 non-interpolation steps as the percentage of the execution time that the code spends on doing the updates is a lot smaller in Case 3 than in Case 2. In all cases, a fixed (small) iteration time-step was used, and the code was running until the maximum number of allowed steps (40,000) was reached. The evolution of \(L\) (a decrease in almost four orders of magnitude) during the iterations is shown in Figure 2 and the development of the vector correlation and energy percentage in Figure 3. These two quantities naturally start with the potential-field values and reach almost unity at the end of the iteration.

-

Cases 5 – 7:

They are similar to Cases 2 – 4 with the only difference that the bottom boundary of our computational domain was prescribed by the reference solution. The outer boundary was taken from the initial potential-field solution. These two boundaries are not necessarily consistent with each other. However, these test cases mimic the situation when the code is applied to measurements. When a full-Sun extrapolation is attempted, only photospheric magnetic-field measurements are available at present, and the outer boundary is a priori unknown. This set-up of boundary conditions is comparable to specifying only the bottom boundary of a Cartesian code. The inconsistent top and bottom boundary conditions lead to slightly higher values of \(L\), L1, and L2, but a still almost perfect vector correlation, and an error of about 3 % in the magnetic energy. Again the number of non-interpolation steps hardly influenced the result. Updating the interpolation only every ten steps resulted in the lowest computing time, but not by a large margin. To understand this difference, it is important to mention here that in Case 6 fewer iterations were needed to reach the state with a minimum \(L\) compared to Case 7. This is expected as the more frequent update of the boundaries ensures faster communication between the grids, thus accelerating the convergence. On the other hand, the code runs faster when the updates are not that frequent. Therefore, the combination of these two competing procedures gives us this complex dynamics.

-

Cases 8 – 10:

Here, instead of using a fixed, small iteration step, we controlled the iteration step dynamically. If both bottom and top boundaries are prescribed, we get a slight increase in the residual errors \(L\), L1, and L2 compared to the cases where a fixed time-step is used and an error in the energy percentage of 2 – 3 %. The vector correlation is almost one. Compared with a fixed time-step, we obtain a drastically reduced computational time by a factor of about 3.3 in the worst case. It is interesting to state here that while the code needs 11,000 iterations in Case 8 to reach the minimum L, during the last 6000 iterations it is converging so slowly that \(L\) is reduced only 10 %.

-

Cases 11 – 13:

Here, we explore the dynamic time-step control for the realistic case in which only the photospheric magnetic field is provided. The effects of a potential inconsistency between the bottom and outer boundaries and the effects of the dynamic control of time-step cumulate in a slightly higher residual error in L1, but not in L2. Compared with the fixed-time-step computation, the error in the energy percentage increases by another 3 %, and the magnetic energy is underestimated by 5 % compared to the reference solution. The computing time decreases by a factor of about 4.5 compared to the cases with a fixed time-step.

-

Cases 14 and 15:

Here, we investigate the effect of a non-standard Yin–Yang grid and changed the areas where both grids overlap. This was done for a case with a dynamically controlled time-step and the top boundary taken from the reference solution. All quantities (residual \(L\), L1, and L2 and comparison matrices VC and EP) are worse than with the standard Yin–Yang grid, and consequently, we do not further investigate non-standard grids.

4 Conclusion and Future Plans

We developed a new nonlinear force-free optimization code implemented on a Yin–Yang grid. The quality of the solutions is comparable to the ones produced by a previous version of the spherical NLFFF code as described by Wiegelmann (2007). Please note that the previous code version did not include proper treatment of the polar regions, and the earlier tests were done on a lower resolution grid. Within this work, we investigated the influence of several code specifications. The first, more technical, test was to investigate the new code under the condition of complete and consistent boundary conditions, where the reference solution was specified on all boundaries, which showed an almost perfect agreement of the computed equilibria with the reference solution.

Please note that the Yin–Yang grids have no lateral boundaries, and only bottom and top boundaries needed to be prescribed. On the Sun, we have, however, only magnetic-field measurements in the photosphere and the top boundary conditions are unknown. A natural guess is using a potential-field source-surface model to prescribe the boundary conditions on the source surface. Our code still provides a good agreement with the reference solution. The vector correlation with the reference solution has an error of about 0.5 %, and the error in the magnetic energy is in the range of 3 % (for a fixed, small time-step) and 5 % (for a dynamically controlled time-step). However, a dynamic time-step does reduce the computing time by about a factor of 4.5.

We also investigated to what extent the final result is influenced by how often the Yin and Yang grids communicate with each other (after every iteration step, after 10, and after 100 steps). It was found that these hardly influenced the solution. The lowest computing time was reached if the interpolation between the two grids was done every tenth iteration step. However much tempting it is to suggest using a dynamically controlled time-step and grid communication at every tenth step as standard for future application to data, the combination of these parameters may have different results when working with observational data, thus using an update every step is the safest suggestion. If computing time does not matter, a small, fixed time-step gives somewhat more accurate results.

Our next plans are to apply our new code to the forthcoming synoptic vector magnetograms created by observations from Solar Orbiter/PHI and SDO/HMI data. The higher-latitude orbit of Solar Orbiter allows a view of the poles, and combining data from both spacecraft will allow the removal of the 180-degree ambiguity by stereoscopy (as investigated by Valori et al., 2022). Our newly developed Yin–Yang implementation is timely for an application to these new vector maps, which are expected to be more accurate than the current data (due to the stereoscopic ambiguity-removal approach) because polar fields will be measured. We would like to point out that the resulting full-sphere global coronal magnetic-field model is not only important as a stand-alone result, but knowing the global magnetic topology and connectivity also helps to prescribe (lateral and top) boundary conditions for local computations, e.g. in active regions and at the poles.

References

Altschuler, M.D., Newkirk, G.: 1969, Magnetic fields and the structure of the Solar Corona. I: Methods of calculating coronal fields. Solar Phys. 9, 131. DOI. ADS.

Amari, T., Boulmezaoud, T.Z., Aly, J.J.: 2006, Well posed reconstruction of the solar coronal magnetic field. Astron. Astrophys. 446, 691. DOI. ADS.

Amari, T., Aly, J.-J., Canou, A., Mikić, Z.: 2013, Reconstruction of the solar coronal magnetic field in spherical geometry. Astron. Astrophys. 553, A43. DOI. ADS.

Aschwanden, M.J.: 2011, Solar stereoscopy and tomography. Liv. Rev. Solar Phys. 8, 5. DOI. ADS.

Bogdan, T.J., Low, B.C.: 1986, The three-dimensional structure of magnetostatic atmospheres. II - Modeling the large-scale corona. Astrophys. J. 306, 271. DOI. ADS.

Chifu, I., Wiegelmann, T., Inhester, B.: 2017, Nonlinear force-free coronal magnetic stereoscopy. Astrophys. J. 837, 10. DOI. ADS.

Contopoulos, I.: 2013, The force-free electrodynamics method for the extrapolation of coronal magnetic fields from vector magnetograms. Solar Phys. 282, 419. DOI. ADS.

De Rosa, M.L., Schrijver, C.J., Barnes, G., Leka, K.D., Lites, B.W., Aschwanden, M.J., Amari, T., Canou, A., McTiernan, J.M., Régnier, S., Thalmann, J.K., Valori, G., Wheatland, M.S., Wiegelmann, T., Cheung, M.C.M., Conlon, P.A., Fuhrmann, M., Inhester, B., Tadesse, T.: 2009, A critical assessment of nonlinear force-free field modeling of the Solar Corona for Active Region 10953. Astrophys. J. 696, 1780. DOI. ADS.

Feng, X.: 2020, Magnetohydrodynamic Modeling of the Solar Corona and Heliosphere, Springer, Singapore. DOI.

Feng, X., Jiang, C., Xiang, C., Zhao, X., Wu, S.T.: 2012a, A data-driven model for the global coronal evolution. Astrophys. J. 758, 62. DOI. ADS.

Feng, X., Yang, L., Xiang, C., Jiang, C., Ma, X., Wu, S.T., Zhong, D., Zhou, Y.: 2012b, Validation of the 3D AMR SIP-CESE solar wind model for four Carrington rotations. Solar Phys. 279, 207. DOI. ADS.

Guo, Y., Ding, M.D., Liu, Y., Sun, X.D., DeRosa, M.L., Wiegelmann, T.: 2012, Modeling magnetic field structure of a Solar Active Region Corona using nonlinear force-free fields in spherical geometry. Astrophys. J. 760, 47. DOI. ADS.

Inhester, B., Wiegelmann, T.: 2006, Nonlinear force-free magnetic field extrapolations: comparison of the Grad Rubin and Wheatland Sturrock Roumeliotis algorithm. Solar Phys. 235, 201. DOI. ADS.

Jiang, C., Feng, X., Xiang, C.: 2012, A new code for nonlinear force-free field extrapolation of the global corona. Astrophys. J. 755, 62. DOI. ADS.

Kageyama, A., Sato, T.: 2004, “Yin-Yang grid”: An overset grid in spherical geometry. Geochem. Geophys. Geosyst. 5, Q09005. DOI. ADS.

Low, B.C., Lou, Y.Q.: 1990, Modeling Solar Force-free magnetic fields. Astrophys. J. 352, 343. DOI. ADS.

Mackay, D.H., Yeates, A.R.: 2012, The Sun’s global photospheric and coronal magnetic fields: observations and models. Liv. Rev. Solar Phys. 9, 6. DOI. ADS.

Mikić, Z., Linker, J.A.: 1994, Disruption of coronal magnetic field arcades. Astrophys. J. 430, 898. DOI. ADS.

Mikić, Z., Linker, J.A., Schnack, D.D., Lionello, R., Tarditi, A.: 1999, Magnetohydrodynamic modeling of the global solar corona. Phys. Plasmas 6, 2217. DOI. ADS.

Neukirch, T.: 1995, On self-consistent three-dimensional analytic solutions of the magnetohydrostatic equations. Astron. Astrophys. 301, 628. ADS.

Rouillard, A.P., Pinto, R.F., Vourlidas, A., De Groof, A., Thompson, W.T., Bemporad, A., Dolei, S., Indurain, M., Buchlin, E., Sasso, C., Spadaro, D., Dalmasse, K., Hirzberger, J., Zouganelis, I., Strugarek, A., Brun, A.S., Alexandre, M., Berghmans, D., Raouafi, N.E., Wiegelmann, T., Pagano, P., Arge, C.N., Nieves-Chinchilla, T., Lavarra, M., Poirier, N., Amari, T., Aran, A., Andretta, V., Antonucci, E., Anastasiadis, A., Auchère, F., Bellot Rubio, L., Nicula, B., Bonnin, X., Bouchemit, M., Budnik, E., Caminade, S., Cecconi, B., Carlyle, J., Cernuda, I., Davila, J.M., Etesi, L., Espinosa Lara, F., Fedorov, A., Fineschi, S., Fludra, A., Génot, V., Georgoulis, M.K., Gilbert, H.R., Giunta, A., Gomez-Herrero, R., Guest, S., Haberreiter, M., Hassler, D., Henney, C.J., Howard, R.A., Horbury, T.S., Janvier, M., Jones, S.I., Kozarev, K., Kraaikamp, E., Kouloumvakos, A., Krucker, S., Lagg, A., Linker, J., Lavraud, B., Louarn, P., Maksimovic, M., Maloney, S., Mann, G., Masson, A., Müller, D., Önel, H., Osuna, P., Orozco Suarez, D., Owen, C.J., Papaioannou, A., Pérez-Suárez, D., Rodriguez-Pacheco, J., Parenti, S., Pariat, E., Peter, H., Plunkett, S., Pomoell, J., Raines, J.M., Riethmüller, T.L., Rich, N., Rodriguez, L., Romoli, M., Sanchez, L., Solanki, S.K., St Cyr, O.C., Straus, T., Susino, R., Teriaca, L., del Toro Iniesta, J.C., Ventura, R., Verbeeck, C., Vilmer, N., Warmuth, A., Walsh, A.P., Watson, C., Williams, D., Wu, Y., Zhukov, A.N.: 2020, Models and data analysis tools for the Solar Orbiter mission. Astron. Astrophys. 642, A2. DOI. ADS.

Schatten, K.H.: 1972, Current sheet magnetic model for the Solar Corona. In: Sonett, C.P., Coleman, P.J., Wilcox, J.M. (eds.) Solar Wind, SP-308. NASA, Washington, DC, 44. ADS.

Schatten, K.H., Wilcox, J.M., Ness, N.F.: 1969, A model of interplanetary and coronal magnetic fields. Solar Phys. 6, 442. DOI. ADS.

Schrijver, C.J., De Rosa, M.L., Metcalf, T.R., Liu, Y., McTiernan, J., Régnier, S., Valori, G., Wheatland, M.S., Wiegelmann, T.: 2006, Nonlinear force-free modeling of coronal magnetic fields Part I: a quantitative comparison of methods. Solar Phys. 235, 161. DOI. ADS.

Song, M.T., Fang, C., Zhang, H.Q., Tang, Y.H., Wu, S.T., Zhang, Y.A.: 2007, Reconstructing spherical nonlinear force-free field in the Solar Corona. Astrophys. J. 666, 491. DOI. ADS.

Tadesse, T., Wiegelmann, T., Inhester, B.: 2009, Nonlinear force-free coronal magnetic field modelling and preprocessing of vector magnetograms in spherical geometry. Astron. Astrophys. 508, 421. DOI. ADS.

Tadesse, T., Wiegelmann, T., Gosain, S., MacNeice, P., Pevtsov, A.A.: 2014, First use of synoptic vector magnetograms for global nonlinear, force-free coronal magnetic field models. Astron. Astrophys. 562, A105. DOI. ADS.

Valori, G., Kliem, B., Fuhrmann, M.: 2007, Magnetofrictional extrapolations of Low and Lou’s force-free equilibria. Solar Phys. 245, 263. DOI. ADS.

Valori, G., Löschl, P., Stansby, D., Pariat, E., Hirzberger, J., Chen, F.: 2022, Disambiguation of vector magnetograms by stereoscopic observations from the Solar Orbiter (SO)/Polarimetric and Helioseismic Imager (PHI) and the Solar Dynamic Observatory (SDO)/Helioseismic and Magnetic Imager (HMI). Solar Phys. 297, 12. DOI. ADS.

Wheatland, M.S., Sturrock, P.A., Roumeliotis, G.: 2000, An optimization approach to reconstructing force-free fields. Astrophys. J. 540, 1150. DOI. ADS.

Wiegelmann, T.: 2004, Optimization code with weighting function for the reconstruction of coronal magnetic fields. Solar Phys. 219, 87. DOI. ADS.

Wiegelmann, T.: 2007, Computing nonlinear force-free coronal magnetic fields in spherical geometry. Solar Phys. 240, 227. DOI. ADS.

Wiegelmann, T., Sakurai, T.: 2021, Solar force-free magnetic fields. Liv. Rev. Solar Phys. 18, 1. DOI. ADS.

Wiegelmann, T., Petrie, G.J.D., Riley, P.: 2017, Coronal magnetic field models. Space Sci. Rev. 210, 249. DOI. ADS.

Wiegelmann, T., Neukirch, T., Nickeler, D.H., Chifu, I.: 2020, An optimization principle for computing stationary MHD equilibria with Solar Wind Flow. Solar Phys. 295, 145. DOI. ADS.

Yeates, A.R., Amari, T., Contopoulos, I., Feng, X., Mackay, D.H., Mikić, Z., Wiegelmann, T., Hutton, J., Lowder, C.A., Morgan, H., Petrie, G., Rachmeler, L.A., Upton, L.A., Canou, A., Chopin, P., Downs, C., Druckmüller, M., Linker, J.A., Seaton, D.B., Török, T.: 2018, Global non-potential magnetic models of the Solar Corona during the March 2015 eclipse. Space Sci. Rev. 214, 99. DOI. ADS.

Zouganelis, I., De Groof, A., Walsh, A.P., Williams, D.R., Müller, D., St Cyr, O.C., Auchère, F., Berghmans, D., Fludra, A., Horbury, T.S., Howard, R.A., Krucker, S., Maksimovic, M., Owen, C.J., Rodríguez-Pacheco, J., Romoli, M., Solanki, S.K., Watson, C., Sanchez, L., Lefort, J., Osuna, P., Gilbert, H.R., Nieves-Chinchilla, T., Abbo, L., Alexandrova, O., Anastasiadis, A., Andretta, V., Antonucci, E., Appourchaux, T., Aran, A., Arge, C.N., Aulanier, G., Baker, D., Bale, S.D., Battaglia, M., Bellot Rubio, L., Bemporad, A., Berthomier, M., Bocchialini, K., Bonnin, X., Brun, A.S., Bruno, R., Buchlin, E., Büchner, J., Bucik, R., Carcaboso, F., Carr, R., Carrasco-Blázquez, I., Cecconi, B., Cernuda Cangas, I., Chen, C.H.K., Chitta, L.P., Chust, T., Dalmasse, K., D’Amicis, R., Da Deppo, V., De Marco, R., Dolei, S., Dolla, L., Dudok de Wit, T., van Driel-Gesztelyi, L., Eastwood, J.P., Espinosa Lara, F., Etesi, L., Fedorov, A., Félix-Redondo, F., Fineschi, S., Fleck, B., Fontaine, D., Fox, N.J., Gandorfer, A., Génot, V., Georgoulis, M.K., Gissot, S., Giunta, A., Gizon, L., Gómez-Herrero, R., Gontikakis, C., Graham, G., Green, L., Grundy, T., Haberreiter, M., Harra, L.K., Hassler, D.M., Hirzberger, J., Ho, G.C., Hurford, G., Innes, D., Issautier, K., James, A.W., Janitzek, N., Janvier, M., Jeffrey, N., Jenkins, J., Khotyaintsev, Y., Klein, K.-L., Kontar, E.P., Kontogiannis, I., Krafft, C., Krasnoselskikh, V., Kretzschmar, M., Labrosse, N., Lagg, A., Landini, F., Lavraud, B., Leon, I., Lepri, S.T., Lewis, G.R., Liewer, P., Linker, J., Livi, S., Long, D.M., Louarn, P., Malandraki, O., Maloney, S., Martinez-Pillet, V., Martinovic, M., Masson, A., Matthews, S., Matteini, L., Meyer-Vernet, N., Moraitis, K., Morton, R.J., Musset, S., Nicolaou, G., Nindos, A., O’Brien, H., Orozco Suarez, D., Owens, M., Pancrazzi, M., Papaioannou, A., Parenti, S., Pariat, E., Patsourakos, S., Perrone, D., Peter, H., Pinto, R.F., Plainaki, C., Plettemeier, D., Plunkett, S.P., Raines, J.M., Raouafi, N., Reid, H., Retino, A., Rezeau, L., Rochus, P., Rodriguez, L., Rodriguez-Garcia, L., Roth, M., Rouillard, A.P., Sahraoui, F., Sasso, C., Schou, J., Schühle, U., Sorriso-Valvo, L., Soucek, J., Spadaro, D., Stangalini, M., Stansby, D., Steller, M., Strugarek, A., Štverák, Š., Susino, R., Telloni, D., Terasa, C., Teriaca, L., Toledo-Redondo, S., del Toro Iniesta, J.C., Tsiropoula, G., Tsounis, A., Tziotziou, K., Valentini, F., Vaivads, A., Vecchio, A., Velli, M., Verbeeck, C., Verdini, A., Verscharen, D., Vilmer, N., Vourlidas, A., Wicks, R., Wimmer-Schweingruber, R.F., Wiegelmann, T., Young, P.R., Zhukov, A.N.: 2020, The Solar Orbiter Science Activity Plan. Translating solar and heliospheric physics questions into action. Astron. Astrophys. 642, A3. DOI. ADS

Acknowledgments

A. Koumtzis acknowledges IMPRS as this work was supported by the International Max-Planck Research School (IMPRS) for Solar System Science at the University of Göttingen. T. Wiegelmann acknowledges financial support by DLR-grant 50 OC 2101. The authors thank Maria S. Madjarska and Bernd Inhester for discussions and useful comments to the manuscript.

Funding

Open Access funding enabled and organized by Projekt DEAL.

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

Competing Interests

The authors declare that they have no conflicts of interest.

Additional information

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Koumtzis, A., Wiegelmann, T. A New Global Nonlinear Force-Free Coronal Magnetic-Field Extrapolation Code Implemented on a Yin–Yang Grid. Sol Phys 298, 20 (2023). https://doi.org/10.1007/s11207-023-02109-6

Received:

Accepted:

Published:

DOI: https://doi.org/10.1007/s11207-023-02109-6