Abstract

When modeling political participation as a latent variable, researchers usually choose whether to conceptualize and model participation as a latent continuous or a latent categorical variable. When participation is modeled as a continuous variable, factor analytic and item response theory models are used. When it is modeled as a categorical variable, latent class analysis is employed. However, both conceptualizations and modeling approaches rest upon very strong assumptions. In the continuous case, all subjects are assumed to come from the same homogenous population; in the categorical case, we assume that no quantitative heterogeneity exists within the latent classes. In this work, I argue that these assumptions are implausible and propose to model participation using zero-inflated measurement and regression models that assume the existence of two latent classes, the politically disengaged and the politically active, with the latter class being quantitatively heterogenous (people in that class are thought to participate to a varying degree). The results show that the models accounting for the latent class of politically disengaged have much better out-of-sample predictive accuracy. Moreover, modeling the zero-inflation changes the estimates of measurement and regression models and offers new research opportunities, because with zero-inflated models we can explicitly tackle the question of what affects the probability of ending up in the latent class of politically disengaged.

1 Introduction

For many years, researchers have resorted to various latent variable models to measure political participation (e.g. Marsh, 1974; van Deth, 1986; Teorell, Torcal and Montero 2007; García-Albacete, 2014; Talò & Mannarini, 2015; Oser, 2017; Theocharis & van Deth, 2018). Measurement models of this kind allow researchers to link observable indicators with the latent construct of political participation, to account for measurement error, and to check whether the chosen model and, consequently, the chosen conceptualization are supported by the data (cf. Fariss et al., 2020).

If we entertain the idea of political participation as a latent variable, one of the key decisions concerns which conceptualization and measurement model to choose. In the literature, the dominant view is that the latent construct of political participation should be treated as a continuous variable (García-Albacete, 2014; Marsh, 1974, 1977; Milbrath, 1965; Quaranta, 2013; van Deth, 1986; Verba et al., 1978; Vráblíková, 2014). According to this view, we would assume that people have various propensities to participate, and depending on these propensities, they choose different forms of participation. Measurement models of political participation as a continuous latent variable vary in complexity from simple additive indices to complex factor analytic models, such as the bifactor model (Koc, 2021). In an alternative conceptualization, participation is not conceived of as some kind of latent continuum. Researchers favouring this approach try to identify relatively homogenous groups of people, where the criterion of homogeneity is based on participation repertoires (Oser, Hooghe & Marien, 2013; Jeroense & Spierings, 2022; Johann et al., 2020; Oser, 2017, 2021; Quaranta, 2018), for example, people having a high probability of participating in all forms, those who choose only electoral participation, or people refraining from any participation (cf. Oser, 2017). To define these homogenous groups, scholars use Latent Class Analysis (LCA) and treat political participation as a categorical latent variable.

However, both of these approaches are based on strong assumptions and have their limitations. In the ‘participation as a continuous-variable’ case, all individuals are assumed to come from the same homogenous population, and any differences between individuals to arise only from different propensities on the latent continuum. Hence, there are no subpopulations with distinct participation repertoires. With ‘participation as a categorical variable’, we ignore the within-group quantitative differences and we assume that people in each group participate to the same extent.

In this article, I go beyond the dichotomy of continuous vs. categorical and investigate the possibility that political participation simultaneously exhibits features of both conceptualizations. Specifically, I propose to divide people into two latent classes: those who refrain from participating at all (politically disengaged) and those who participate to a varying degree. I argue that we should distinguish these two latent classes on two grounds: frequently used data from the European Social Survey, which show that most people do not participate in any non-electoral form of political participation, and substantive considerations, i.e. the theory of political apathy and alienation. To test the proposal, I utilize zero-inflated models, which assume that two different distributions, or processes, contribute to the all-zero answers (i.e., not participating in any form): one where subjects always produce zeros (“structural zeros” or “excessive zeros”), and another where subjects reported all-zero answers during the period of interest or at the time of assessment (“sampling zeros”) (Feng, 2021). That is, a person may report not participating in any non-electoral form either because they belong to the latent class of politically disengaged and do not participate at all, or because they did not participate in the particular period that the interviewer asked about.

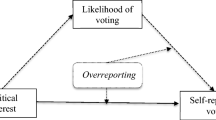

The analytical procedure consists of two steps. First, I estimate the proportions of the two latent classes in the population and investigate the heterogeneity within the class of those who participate using a Zero-Inflated Item Response Theory (ZI-IRT) model. I compare the fit of this model with a regular IRT model. Second, I estimate two regression models—a Beta-Binomial and Zero-Inflated Beta-Binomial—which closely mirror the data generating process of the two measurement models, with five frequently used antecedents of political participation: gender, age, education, political interest, and political efficacy. This allows me to check whether accounting for the zero-inflation matters for explanatory models. Additionally, with the Zero-Inflated Beta-Binomial, I will be able to find out if those antecedents of participation have the same effect on the participation intensity and the probability of belonging to the latent class of politically disengaged.

The results show that the ZI-IRT model fits the data better than the model not accounting for the zero-inflation. When taking the zero-inflation into account, parameters of the measurement model change: item difficulty and discrimination coefficients shrink, and the mass of the distribution of latent scores moves to the right (people seem to be more politically active). Moreover, accounting for the zero-inflation matters when we regress political participation on other variables: the fit of the Zero-Inflated Beta-Binomial model is substantially better and the regression coefficients of the model are different. Also, the zero-inflated part reveals that out of the five predictors, political interest and education have an impact on the probability of belonging to the latent class of politically disengaged, while political efficacy—a strong predictor of participation intensity—has no effect on the probability of belonging to the latent class of disengaged. Overall, this study provides suggestive evidence for the superiority of the approach distinguishing two latent classes while modeling political participation—the disengaged and the participating to a varying degree—and that we can and ought to model two separate processes—the intensity of participation and the probability of belonging to the latent class of politically disengaged.

2 Existing Perspectives on Political Participation as a Latent Variable

Following van Deth (2014), for a phenomenon to be counted as political participation, it needs to be a voluntary behavior conducted by individuals acting as citizens and not as professionals, that fulfills at least one of the following conditions: (1) it is located within the realm of politics, government, or the state; (2) it is targeted at a subject from one of these realms or aimed at solving a community problem; (3) it is placed in a political context (e.g., dressing up as money-eating zombies and walking outside the New York stock exchange during the height of the global financial crisis; see van Deth and Theocharis (2018)) or it is used to express political aims and intentions (van Deth, 2014; van Deth & Theocharis, 2018). This approach to conceptualizing political participation allows us, on the one hand, to capture old and new forms of participation and, on the other hand, to avoid falling into the trap of building a “concept of everything” (van Deth, 2001; van Deth & Theocharis, 2018).

2.1 Political Participation as a Continuous Variable

Thinking about political participation as a continuum has a long and well-established tradition in political behavior research. Probably the most famous early examples of this approach can be found in the works of Milbrath (1965) and Marsh (1974). According to Milbrath (1965), political participation is cumulative and forms a ranking of behavior types that is based on the resources needed to enact them (cf. Brady et al., 1995). Different forms of participation require different amounts of time and energy, with the least resources needed for easier activities such as voting, and significantly more resources required for more demanding acts such as taking part in demonstrations. In Milbrath’s (1965) work, the easiest acts are labeled as “spectator” activities, and the most difficult ones as “gladiator” activities. The fact that political participation, according to Milbrath (1965), is cumulative means that people participating in more difficult forms would very likely also participate in easier acts, with the opposite not being true. While Milbrath considered more “conventional” forms of participation, Marsh (1974) presents similar reasoning while focusing on citizens’ protest potential and finds that it is positively related to their socioeconomic status. The idea that political participation can be understood cumulatively and that participation acts can be ordered according to the costs they impose on the individual has later been discussed by other authors as well (e.g., García-Albacete, 2014; van Deth, 1986).

To translate the conceptualization of political participation as a continuum into a measurement model, researchers initially used either Guttman scaling (e.g., Marsh, 1974) or Mokken Scale Analysis (e.g., García-Albacete, 2014; van Deth, 1986). Technical details of the two techniques are not important for the discussion in this article (Footnote 1). What is important, though, is that in the case of both models (1) every subject is assumed to have a certain, unknown value on the latent variable of political participation, which we can call participation potential, participation intensity, or a propensity to participate, and (2) for each participation form, the probability of participating in that form increases with that latent variable (van Deth, 1986). Furthermore, forms of political participation, or acts, are thought to vary in terms of their difficulty (Footnote 2), which corresponds to the resources needed to perform those acts. It should be mentioned that these assumptions are also made when political participation is measured with more complicated cumulative latent variable models, as in Quaranta (2013), Vráblíková (2014), or Koc (2021).

2.2 Political Participation as a Categorical Variable

In the approach where participation is treated as a categorical variable, we would not think of participation as cumulative or of participation acts as forming some kind of ranking. There would be no latent propensity to participate that explains performing acts of various difficulty. Instead, we would adopt a person-centered approach, where the key question would be how individual actors engage in various political acts and simultaneously refrain from others (Oser, 2017). The level of analysis would be shifted from political acts to political actors (Oser, Hooghe & Marien, 2013; Oser, 2017). In this approach, we would try to classify people into homogenous groups, depending on the forms in which they choose to participate (Oser, 2021). The sets of forms people choose to participate in would be called repertoires (Oser, 2017).

For that classification into groups, researchers would use Latent Class Analysis. LCA provides us with two kinds of information that are useful if the purpose of the analysis is classification: conditional item probabilities and class probabilities (Collins & Lanza, 2010). First, the conditional item probabilities are class-specific and provide information about the probability that an individual in that class will provide a positive answer to a specific item (Clark et al., 2013). In the research on political participation, the conditional item probabilities indicate the likelihood that respondents in each participant type (a homogenous group of individuals distinguished based on the forms they use) engage in each of the political acts (Oser, 2021). Second, the class probabilities specify the relative size of each class, or the proportion of the population that is in a particular class (Clark et al., 2013; Collins & Lanza, 2010). The optimal number of latent classes would be chosen using information criteria, model comparison, and the inspection of conditional item probabilities and class probabilities (Collins & Lanza, 2010).
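To make the two LCA quantities concrete, the following minimal sketch computes the probability of a response pattern within each class and the resulting posterior class membership. The two classes, their sizes, and the item probabilities are hypothetical values chosen for illustration, not estimates from any of the cited studies; local independence of items within a class is the standard LCA assumption.

```python
from math import prod

def pattern_likelihood(pattern, item_probs):
    """P(response pattern | class), assuming local independence within the class."""
    return prod(p if y == 1 else 1.0 - p for y, p in zip(pattern, item_probs))

def class_posteriors(pattern, class_probs, cond_item_probs):
    """Posterior probability of each class given a 0/1 response pattern (Bayes' rule)."""
    joint = [
        pi * pattern_likelihood(pattern, rho)
        for pi, rho in zip(class_probs, cond_item_probs)
    ]
    total = sum(joint)
    return [j / total for j in joint]

# Hypothetical two-class example with two binary participation items
class_probs = [0.5, 0.5]            # class probabilities (relative class sizes)
cond_item_probs = [
    [0.1, 0.1],                     # class 0: low chance of endorsing either act
    [0.9, 0.9],                     # class 1: high chance of endorsing each act
]
posterior = class_posteriors([1, 1], class_probs, cond_item_probs)
print(posterior)
```

A respondent who endorses both items is assigned almost entirely to the second class, which is how conditional item probabilities and class probabilities jointly drive classification.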

2.3 Limitations

From the measurement perspective, both approaches have their limitations, which were briefly mentioned in the introduction. First, with cumulative latent variable models, such as Guttman scaling, Confirmatory Factor Analysis (CFA), and various parametric and non-parametric Item Response Theory (IRT) models, we assume that all individuals come from the same homogenous population and that the only differences between individuals are due to different levels on the latent trait(s). In the case of political participation, this means that all citizens allegedly participate in the same way, which is highly implausible. We know, for example, that young people participate differently than adults and that these differences concern not only the levels of participation but also its forms: young people choose different forms than adults (García-Albacete, 2014; Quintelier, 2007). In instances like the one with age groups, where the source of heterogeneity is observable, standard extensions to CFA and IRT (Multiple Group models) can be used. But once the source of heterogeneity is unobservable, these standard approaches fail and cannot account for the violation of the population homogeneity assumption (Lubke & Muthén, 2005). A way to circumvent the problem is to resort to LCA, which is meant to model unobserved sources of population heterogeneity (Collins & Lanza, 2010). But with LCA we assume that there are no quantitative differences within the classes, which is not convincing. If we take the classification provided by Oser (2017), who distinguishes four types of participants (“Disengaged”, “All-around activists”, “High-voting engaged”, and “Mainstream participants”), and focus on “Mainstream participants”, it is very likely that among people of that participant type we have individuals who are more and less active. The same can be said about the other types, except for those who are disengaged. Hence, with LCA we ignore the fact that people of a given participant type may participate to a different extent.

3 Analytical Strategy

3.1 Motivation for Zero-Inflated Models

One way to overcome the problems presented by the existing approaches is to resort to zero-inflated models. Using models of this kind can be motivated by data and by substantive considerations. From the data perspective, we should look at the proportion of people who report non-participation in forms other than voting. For example, for countries participating in rounds 8 and 9 of the European Social Survey (ESS 2016; ESS 2018), on average (across all countries), approx. 50% of respondents reported that they had not participated in any of the non-electoral forms listed in the questionnaire, with a maximum of 87% in Hungary and a minimum of 18% in Sweden. Figure 1 shows the proportions for all countries participating in both rounds. Concentrating on non-electoral forms of participation makes sense since researchers often do not include voting in their composite measures of participation (e.g., Hooghe & Marien, 2013; Li, 2021; Marien et al., 2010; Oser & Hooghe, 2018; Theocharis & van Deth, 2018; Vráblíková, 2014) because voting is a very frequent activity and therefore stands apart from the other indicators (Parry, Moyser and Day 1992).

Fig. 1 Proportions of all-zero answers for items measuring political participation across countries for rounds 8 and 9 of ESS. Average proportions across the two rounds are depicted above the bars for each country. Note: Calculations are based on the respondents with complete information on political participation items. Country codes: AT: Austria; BE: Belgium; CH: Switzerland; CZ: Czechia; DE: Germany; EE: Estonia; ES: Spain; FI: Finland; FR: France; GB: United Kingdom; HU: Hungary; IE: Ireland; IT: Italy; LT: Lithuania; NL: Netherlands; NO: Norway; PL: Poland; PT: Portugal; SE: Sweden; SI: Slovenia

From the substantive point of view, we might want to use zero-inflated models to account for the existence of a subpopulation of people who do not participate at all, described by Amnå and Ekman (2014) as "unengaged" and "disillusioned" citizens. In the literature on political participation, we could find at least two explanations for why people would refrain from participating altogether—political alienation and political apathy (Aberbach, 1969; Abramson & Aldrich, 1982; Dahl et al., 2018; Farthing, 2010; Finifter, 1970; Fox, 2015). Being alienated and apathetic could stop a person from enacting any form of political participation (Dahl et al., 2018).

These data and substantive considerations should result in modeling decisions. When it comes to measurement models, we should relax the assumption of population homogeneity and take into consideration the possibility that there are two subpopulations: those who do not participate at all (politically disengaged), and those who participate to various degrees. Furthermore, in the subpopulation of politically active, we should allow for quantitative differences among individuals. By doing so, we can overcome the limitations of the classical approaches to modeling political participation as a latent variable.

Given that we often want to know not only what the structure of political participation is, but also how it is related to other variables, the explanatory model should take into account the existence of the two subpopulations as well. As a result, we could include variables that would predict whether an observation is likely to end up in the latent class of politically disengaged (people whose count of political activities is always 0) and variables that would predict the intensity of participation.

Now, I will describe measurement and explanatory models that are well-suited for accounting for the excess of zeros in the data.

3.2 Zero-Inflated IRT

To account for the excess of zeros, methodologists came up with the idea of Zero-Inflated IRT models (Finkelman et al., 2011; Magnus & Liu, 2018; Wall et al., 2015). To better understand their mechanics, I will introduce an equation for the Zero-Inflated Graded Response Model, an IRT model which is meant to accommodate the zero-inflation for ordinal indicators, including binary ones. For the parametrization of the model proposed by Magnus and Garnier-Villarreal (2022), the probability function for the Zero-Inflated Graded Response Model could be written as:

where \({y}_{ij}\) denotes the response to item j by person i; \(\alpha\) is the slope for each indicator; \({\theta }_{i}\) denotes an individual’s score on the latent variable of political participation; \({\varvec{\kappa}}\) is a vector of threshold parameters; and \(\lambda\) is the proportion of excessive all-zeros, i.e. individuals belonging to the class of politically disengaged. Item thresholds are often understood in terms of difficulty, i.e. the value on the latent variable at which a person has a 50% probability of responding above the given threshold. In the binary case, this simplifies to a 50% probability of endorsing an item (for example, where one needs to fall on the participation intensity continuum to have a 50% probability of endorsing the item ‘taking part in demonstrations’). Slopes represent item discrimination, which indicates how well the item can differentiate among individuals located at different points on the latent continuum (Ayala, 2009) (for example, how well ‘taking part in demonstrations’ discriminates between people with rather high levels on the participation intensity continuum).

From Eq. 1 we can read off an important feature of the Zero-Inflated IRT model: The all-zero answers are due to two processes. That is, some all-zeros are contributed by the measurement model, \(\mathrm{OrderedLogit}\left(0|\alpha {\theta }_{i},{\varvec{\kappa}}\right)\) in the equation above, and some by a Bernoulli process, with mixing probability \(\lambda\). In other words, some of the all-zero answers originate from the latent class of people participating to a varying degree (the measurement model part), and the remaining ones come from those belonging to the latent class of politically disengaged, that is individuals who always respond with 0 to each item (cf. Magnus & Garnier-Villarreal, 2022). By contrast, the non-all-zero answers are only due to the measurement model.
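The two-process structure just described can be sketched in a piecewise form. This is a reconstruction from the symbol definitions and the verbal description in the text, not a verbatim reproduction of the published equation; the item subscripts on \(\alpha\) and \({\varvec{\kappa}}\) and the product over items (conditional independence given \({\theta }_{i}\)) are assumptions made for the sketch.

\[
P\left({\mathbf{y}}_{i} \mid {\theta }_{i}, \alpha, {\varvec{\kappa}}, \lambda\right) =
\begin{cases}
\lambda + \left(1-\lambda\right)\prod_{j}\mathrm{OrderedLogit}\left(0 \mid {\alpha }_{j}{\theta }_{i}, {{\varvec{\kappa}}}_{j}\right) & \text{if } {y}_{ij}=0 \text{ for all } j,\\
\left(1-\lambda\right)\prod_{j}\mathrm{OrderedLogit}\left({y}_{ij} \mid {\alpha }_{j}{\theta }_{i}, {{\varvec{\kappa}}}_{j}\right) & \text{otherwise.}
\end{cases}
\]

The first branch shows the two sources of all-zero patterns (the Bernoulli part with probability \(\lambda\) and the measurement model), while the second branch shows that any pattern with at least one endorsement can only come from the measurement model.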

Given the observations we made about the data generating process, it should be clear that estimating a Zero-Inflated IRT model is not the same as estimating a regular IRT model after discarding all-zero answers. The latter approach would very likely lead to biased estimates since “throwing out the all-zeros is the same as selecting who to fit the IRT model to conditional on their score” (Wall et al., 2015, p. 595). Moreover, simply reading off the percentage of people who have all-zero answers in the sample will not provide us with the proportion of people belonging to the latent class of politically disengaged.
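The point that the observed share of all-zero answers is not the same as \(\lambda\) can be illustrated numerically. The sketch below assumes binary items with P(y = 1) = inv_logit(αθ − κ), which is one common slope-threshold parametrization and may differ in detail from the one used in the cited code; all parameter values are hypothetical.

```python
import math

def inv_logit(x):
    return 1.0 / (1.0 + math.exp(-x))

def prob_item_zero(theta, alpha, kappa):
    """P(y = 0) for one binary item, with P(y = 1) = inv_logit(alpha * theta - kappa)."""
    return 1.0 - inv_logit(alpha * theta - kappa)

def prob_all_zero(theta, alphas, kappas, lam):
    """Probability of an all-zero pattern under the zero-inflated mixture."""
    from_measurement_model = math.prod(
        prob_item_zero(theta, a, k) for a, k in zip(alphas, kappas)
    )
    # Structural zeros (lam) plus sampling zeros from the measurement model
    return lam + (1.0 - lam) * from_measurement_model

# Hypothetical values: two items, a person at theta = 0, 20% structural zeros
p = prob_all_zero(theta=0.0, alphas=[1.0, 1.0], kappas=[0.0, 0.0], lam=0.2)
print(p)
```

In this toy setting the all-zero probability is 0.4 although only 0.2 of it is due to the disengaged class, so the raw all-zero percentage overstates the size of that class.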

Lastly, Eq. 1 highlights the major difference between the ZI-IRT approach and the LCA approach. Like in the LCA approach, we employ latent classes to model the unknown population heterogeneity (disengaged and those participating to a varying degree). However, the ZI-IRT model also allows us to model heterogeneity within the class of politically active. This is represented by a continuous latent variable of political participation and is quantified using each individual’s score on that variable, \({\theta }_{i}\). Therefore, with the ZI-IRT model, we effectively combine two perspectives of modeling participation as a latent variable—it is both categorical (capturing unobserved population heterogeneity with latent classes) and continuous (estimating the individuals’ scores denoting varying participation intensity levels within the class of politically active).

3.3 Zero-Inflated Beta-Binomial

To build an explanatory model for political participation, I treat political participation as an observable. I do so for two reasons. First, because this is the standard practice among students of political participation (García-Albacete, 2014; Kostelka, 2014; Letki, 2004; Oser & Hooghe, 2018). Second, estimating the zero-inflated measurement model with predictors would necessitate rather advanced programming skills in Stan (Carpenter et al., 2017), a general Bayesian statistical software program, potentially rendering the approach inaccessible for most applied researchers.

To show that accounting for the subpopulation of disengaged makes sense while regressing political participation on external variables, we should find a regression model that would closely mirror the data generating process as specified in the Zero-Inflated Graded Response Model. A very good candidate for that would be the Zero-Inflated Beta-Binomial model.

The Zero-Inflated Beta-Binomial is an extension of the Beta-Binomial model. The Beta-Binomial model is used to model grouped binary data, that is, a count of 1s out of a fixed number of trials, when we believe that the data might be correlated or that the probability of endorsing 1s across the trials is not constant (King, 1998). These are very plausible assumptions for modeling participation as a count of forms one participated in. We could write the probability function for Beta-Binomial as:

where \(n\) denotes the number of 1s (endorsements or successes) out of \(N\) trials, \(\mu\) is the expected average success probability, \(\phi\) is a measure of how much variation exists in \(\mu\) across the binary random variables, and \(B()\) denotes the beta function. What we usually want to predict is \(\mu\), which in our case can be understood as the expected average probability of participating.
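Using the symbols just defined, a Beta-Binomial probability function in a mean-precision parametrization can be sketched as follows. This form is an assumption based on the common parametrization with shape parameters \(\mu \phi\) and \((1-\mu )\phi\); the published equation may be written differently.

\[
P\left(n \mid N, \mu, \phi\right) = \binom{N}{n}\frac{B\left(n+\mu \phi,\; N-n+\left(1-\mu \right)\phi \right)}{B\left(\mu \phi,\; \left(1-\mu \right)\phi \right)}
\]

Under this parametrization, larger values of \(\phi\) correspond to less variation in the success probability, and the model approaches the ordinary binomial as \(\phi\) grows.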

The probability function for Zero-Inflated Beta-Binomial is a simple extension of Eq. 2, and could be written in the following way:

with \(\lambda\) being the proportion of excessive zeros, i.e. individuals belonging to the latent class of politically disengaged. The observations we have made about the data generating process with respect to the Zero-Inflated IRT model hold also here: (1) some zeros are contributed by the Beta-Binomial model, and the rest by a Bernoulli process; (2) non-zero answers are only due to the Beta-Binomial model. Again, we cannot treat all all-zero answers as coming from the individuals belonging to the latent class of politically disengaged.
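The mixture described here can be checked with a few lines of code. The sketch below assumes a mean-precision Beta-Binomial with shape parameters μφ and (1 − μ)φ, which is an assumption about the parametrization rather than a copy of the article's equations; the number of items (8) and all parameter values are hypothetical.

```python
from math import comb, exp, lgamma

def log_beta(a, b):
    return lgamma(a) + lgamma(b) - lgamma(a + b)

def beta_binomial_pmf(n, N, mu, phi):
    """P(n successes out of N) with shape parameters mu*phi and (1-mu)*phi."""
    a, b = mu * phi, (1.0 - mu) * phi
    return comb(N, n) * exp(log_beta(n + a, N - n + b) - log_beta(a, b))

def zi_beta_binomial_pmf(n, N, mu, phi, lam):
    """Zero-inflated version: extra point mass lam at n = 0."""
    base = (1.0 - lam) * beta_binomial_pmf(n, N, mu, phi)
    return lam + base if n == 0 else base

# Hypothetical setting: 8 participation items, 10% structural zeros
N, mu, phi, lam = 8, 0.5, 2.0, 0.1
probs = [zi_beta_binomial_pmf(n, N, mu, phi, lam) for n in range(N + 1)]
print(probs[0], sum(probs))
```

With these values the Beta-Binomial part is uniform over the counts 0 to 8, so the probability of a zero count is 0.1 + 0.9/9 = 0.2: half of the zeros come from the disengaged class and half from the count model.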

3.4 Data and Cases

To investigate the latent structure of political participation, I use the 8th and 9th rounds of the European Social Survey (ESS 2016; ESS 2018). I use both rounds of the ESS because the participation data matrix is very sparse, with few cases in the co-occurrence cells. Using only one round led to nonsensical parameter values for the zero-inflated model and to convergence problems. Using two consecutive waves for each country does not pose a problem since the scale of political participation, as measured by the ESS items, retains high levels of measurement invariance within countries across waves (Koc & Pokropek, 2022). The ESS battery of participation items has been frequently used in research on political participation (e.g., García-Albacete, 2014; Kostelka, 2014) and includes numerous forms. From the analyzed battery of items, I remove voting due to its special character and the common practice of constructing composite measures without that item.

The items that measure political participation were recoded into dichotomous variables, with values 1 “participation” and 0 “no participation”. Refusals, “No answer”, and “Don’t know” answers were recoded as missing values. In addition, all respondents who were minors were excluded from the analysis, as they could not participate in some participation forms for legal reasons. A full list of indicators can be found in Table 1.
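The recoding step can be sketched as follows. The raw codes used here (1 = yes, 2 = no, 7/8/9 = refusal, don't know, no answer) are an assumption about the source data file, and the function names are hypothetical; the sketch only illustrates the logic of dichotomizing items, treating non-substantive answers as missing, and flagging all-zero respondents among complete cases.

```python
# Assumed raw coding (hypothetical): 1 = yes, 2 = no; 7, 8, 9 = refusal, don't know, no answer
def recode_item(raw):
    """Return 1 for participation, 0 for no participation, None for missing."""
    if raw == 1:
        return 1
    if raw == 2:
        return 0
    return None

def participation_summary(raw_items):
    """Count of endorsed forms and all-zero flag; None if any item is missing."""
    recoded = [recode_item(v) for v in raw_items]
    if any(v is None for v in recoded):
        return None  # incomplete information on the participation items
    count = sum(recoded)
    return {"count": count, "all_zero": count == 0}

print(participation_summary([2, 2, 2]))   # respondent reporting no participation
print(participation_summary([1, 2, 8]))   # respondent with a "don't know" answer
```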

Additionally, for some regression models, which I will discuss later in detail, I use five variables as predictors: gender, age, years of education, political interest, and political efficacy. Political efficacy is constructed as an index out of four variables measuring efficacy: (1) political system allows people to have a say in what government does; (2) political system allows people to have an influence on politics; (3) able to take an active role in a political group; (4) confident in own ability to participate in politics. The values of the index range from 0 to 1. Again, refusals, “No answer”, and “Don’t know” answers were recoded as missing values. Also, I removed all respondents who were minors and individuals who reported more than 30 years of formal education, as such values are highly unrealistic.

3.5 Estimation and Model Comparison

To fit all the models, I use Stan. For the measurement models, I use the code provided by Magnus and Garnier-Villarreal (2022) and weakly informative priors (see Appendix for a detailed model specification). I run four chains, each with 2000 iterations, using the first 1000 iterations as a warm-up. Hence, inferences are based on the posterior distributions from 4000 draws. I set the adapt_delta argument to 0.99 to prevent divergent transitions after the warm-up phase.

For Beta-Binomial and Zero-Inflated Beta-Binomial regression models, I use multilevel versions of the said models to account for the fact that the observations are nested within countries. Additionally, I add a dummy variable denoting the ESS round to every model. Four models are estimated: (1) Beta-Binomial with the round dummy; (2) Beta-Binomial with the round dummy and a set of five frequently used antecedents of political participation: gender, age, years of education, political interest, and political efficacy; (3) Zero-Inflated Beta-Binomial where the expected average probability of participating is regressed on the set of five predictors and the round dummy, and the probability of belonging to the latent class of politically disengaged is regressed on the round dummy; (4) Zero-Inflated Beta-Binomial where the average probability of participating and the probability of belonging to the latent class of politically disengaged are regressed on the set of five predictors and the round dummy. In all the models, \(\phi\) parameters have random intercepts. In model 3, the probability of belonging to the latent class of politically disengaged has a random intercept and is regressed on the round dummy.

To fit the regression models I use brms (Bürkner, 2017), an R package that has Stan as its backend. I run four chains, each with 5000 iterations, using the first 2500 iterations as a warm-up. Hence, inferences are based on the posterior distributions from 10,000 draws. I set the adapt_delta argument to 0.99 to prevent divergent transitions after the warm-up phase. I use weakly informative priors, which are described in the Appendix.
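The number of posterior draws follows directly from the sampler settings: the number of chains times the post-warm-up iterations per chain. A minimal arithmetic check for the settings reported for the measurement and the regression models (the function name is hypothetical):

```python
def post_warmup_draws(chains, iterations, warmup):
    """Total posterior draws retained across all chains."""
    return chains * (iterations - warmup)

measurement_draws = post_warmup_draws(chains=4, iterations=2000, warmup=1000)
regression_draws = post_warmup_draws(chains=4, iterations=5000, warmup=2500)
print(measurement_draws, regression_draws)
```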

To compare the fit of the measurement and regression models, I follow the common practice in Bayesian statistics and use leave-one-out cross-validation and the difference in expected log pointwise predictive density (ELPD) (Lambert, 2018; McElreath, 2020). A model is thought to have superior out-of-sample predictive accuracy over another one when the difference in ELPD values is larger than twice the estimated standard error of the difference (Vehtari et al., 2017).
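The decision rule for model comparison can be written as a one-line check. The function name and the numbers below are hypothetical and serve only to illustrate the rule of thumb stated above; they are not results from the analysis.

```python
def clearly_better(elpd_diff, se_diff, multiplier=2.0):
    """True when the ELPD difference exceeds `multiplier` times its standard error."""
    return abs(elpd_diff) > multiplier * se_diff

# Hypothetical comparisons
print(clearly_better(elpd_diff=-150.0, se_diff=20.0))  # large difference relative to its SE
print(clearly_better(elpd_diff=10.0, se_diff=8.0))     # difference within two SEs
```

The absolute value is used because the sign of the difference depends only on which model is taken as the reference.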

Additionally, for the measurement models, I compare difficulty and discrimination parameters, and the distribution of the \(\theta\) parameters, which denote the individual scores on the latent variable of political participation. To be more precise, I compare the individual mean values of \(\theta\). For regression models, I compare the regression coefficients using predicted probabilities.

4 Results

4.1 Measurement Models

Table 2 displays the best fitting model and the difference in ELPD between this model and the alternative one, along with the standard error of this difference. In all countries, the zero-inflated version has a better fit. The difference in ELPD is much larger than twice the estimated standard error of the difference (except for Sweden, Norway, and Finland, the difference is of two orders of magnitude). This means that we should strongly prefer the zero-inflated model over the model not accounting for the zero-inflation.

What are the consequences of accounting for the zero-inflation for the model parameters? On average, the estimates of item discrimination decrease (move toward zero) by 0.46 and the estimates of item difficulty by 0.08. Table 3 helps us better understand how well the items measure the latent trait of political participation once the existence of a subpopulation of politically disengaged is taken into account (see footnote 3). It is worth inspecting the properties of the individual items because most students of political participation concentrate on the properties of the whole scale or latent factors (e.g., Koc, 2021; Ohme et al., 2018; Talò & Mannarini, 2015).

First, all item difficulty estimates are positive, meaning that to have a 50% chance of endorsing an item one needs to have an above-average level on the latent trait of political participation. On average, the most difficult items are working for a party or action group (average difficulty: 2.23), taking part in public demonstrations (2.10), and badging (1.82). Second, the greatest discriminatory power is provided by the item “working for a political party or action group”. That is, this item best detects differences between individuals on the latent trait of political participation. One obvious implication is that these items provide very little information about persons with below-average levels on the latent variable of participation.
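The interpretation of difficulty as the trait level at which endorsement has a 50% chance can be illustrated with a short sketch. It assumes binary items and a two-parameter logistic response function in the IRT metric; the discrimination value of 1.5 is hypothetical, while the difficulty of 2.23 is the average reported above for working for a party or action group.

```python
from math import exp


def p_endorse(theta: float, discrimination: float, difficulty: float) -> float:
    """Probability of endorsing an item under a two-parameter logistic model."""
    return 1.0 / (1.0 + exp(-discrimination * (theta - difficulty)))


difficulty = 2.23       # average difficulty reported in the text
discrimination = 1.5    # hypothetical value, for illustration only

# At theta equal to the difficulty, the endorsement probability is one half.
print(p_endorse(difficulty, discrimination, difficulty))

# A person at the latent mean (theta = 0) is very unlikely to endorse the item.
print(round(p_endorse(0.0, discrimination, difficulty), 3))
```

Because the curve is nearly flat for trait values far below the difficulty, such items distinguish poorly among respondents with below-average levels of participation.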

Another question that we can answer by comparing the regular and Zero-Inflated IRT models concerns the distribution of the latent trait of participation in the population. Figure 2 shows the densities representing average individual scores on the latent variable of political participation. As a result of modeling the excess of zeros, the mass of the distribution of the latent trait moves to the right, meaning that after taking into consideration the subpopulation of disengaged, the picture of the intensity of participation in the population changes: people seem to be more active. The size of this shift is a function of the proportion of the latent class of disengaged.

Figure 3 reveals that new democracies, along with Italy, feature the highest proportions of politically disengaged citizens. In Hungary, Lithuania, Poland, and Italy, the disengaged make up more than 50% of the population. The distribution of the latent trait in these countries shows high kurtosis, marked by a distinct peak and narrow spread, indicating minimal variance in the intensity of participation. In stark contrast, Scandinavian countries such as Finland, Norway, and Sweden have the smallest proportions of disengaged citizens. After accounting for the latent class of disengaged citizens in these countries, the mass of the distribution shifts only slightly. Furthermore, these Scandinavian countries exhibit a larger spread in the distribution of political participation, indicating more variance compared to the other countries analyzed in the study.

Nevertheless, even after modeling the excess of zeros, the majority of the population (representing 51% of the distribution's mass) falls below zero on the latent trait of participation and the distribution of this trait remains heavily skewed to the right in almost all countries. The notable exceptions are Finland and Sweden, where the majority neither falls below zero, nor is the distribution significantly skewed. Lastly, we observe that the proportions of the latent class of disengaged, as estimated by the Zero-Inflated IRT, do not match the proportions from Fig. 1.
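One way to see why the two sets of proportions can differ is to write out the probability of an all-zero response pattern under the mixture. The expression below is a sketch under assumed notation: \(\lambda\) is the probability of belonging to the class of disengaged, \(P_j(\theta)\) is the probability of endorsing item \(j\), \(f(\theta)\) is the density of the latent trait among the politically active, and items are taken to be conditionally independent given \(\theta\).

\[
\Pr(\mathbf{y} = \mathbf{0}) \;=\; \lambda \;+\; (1-\lambda)\int \prod_{j=1}^{J}\bigl[1 - P_j(\theta)\bigr]\, f(\theta)\, d\theta \;\ge\; \lambda .
\]

If Fig. 1 reports the raw share of respondents with no reported activity, that share also includes politically active people who happened to report zero activities, so it can only equal or exceed the estimated size of the disengaged class.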

4.2 Regression Models

Similarly to measurement models, regression models accounting for the zero-inflation have a better fit than the models not accounting for the excess of zeros, which is shown in Table 4. Zero-Inflated Beta-Binomial with predictors for both parts of the model has the best fit, followed by ZIBB with predictors only for the average probability of participating. ZIBB with predictors only for the average probability of participating has a better fit than the Beta-Binomial model with predictors (ELPD difference: −57.3; SE: 11.8). Hence, judging by how well the models fit the data, zero-inflated models are to be preferred. They seem to better reflect the data generating process.

Figure 4 shows partial predicted probabilities for ZIBB with predictors for both parts (Model 4) and Beta-Binomial (Model 2) with the same predictors. To calculate the probabilities, values of covariates were either set to their mean value or, in the case of political interest, ESS round, and gender, to “Quite interested”, round 9, and “Women”, respectively.

Fig. 4 Partial predicted probabilities for Beta-Binomial and Zero-Inflated Beta-Binomial (μ; expected average probability of participating). Note: To calculate partial predicted probabilities, covariates were either set to their mean value or, in the case of political interest, ESS round, and gender, to “quite interested”, 9, and “women”, respectively. The ribbons and error bars denote 95% credible intervals.

First, the predicted probabilities are systematically higher for ZIBB than for Beta-Binomial. For years of education, the line representing the impact of the variable on political participation is less steep if estimated with the ZIBB model. Overall, however, the tendencies and relative differences remain largely the same. The directions of the predictions in both models are in line with the expectations from the literature: political interest, political efficacy, and education have a positive impact on the probability of participation, the probability decreases with age, and women are slightly more likely to participate than men.

What is special about the ZIBB model is that, unlike the regular Beta-Binomial, it offers the possibility of modeling the zero-inflation, i.e. the probability of ending up in the latent class of politically disengaged. Figure 5 shows partial predicted probabilities, which were calculated in the same way as previously. We can see that two variables predict the probability of belonging to the class of disengaged well: political interest and education. Interestingly, political efficacy, a strong predictor of the probability of participating, has no effect on belonging to the class of disengaged. The same can be said about age. In the case of gender, at first sight, we would say that there is no effect. A more precise approach that calculates marginal effects (contrasts) shows that the effect of being a woman is extremely small, somewhere between 0.3 and 2.8 percentage points, with 1.3 points as the mean. It is relatively easy to explain why political interest predicts belonging to the class of disengaged: lack of interest is the key measure of political apathy (Dahl et al., 2018). It is more difficult to explain the role of education, as this variable often captures several different things, such as skills, knowledge, social status, and social network centrality (Persson, 2013). Interpreting the non-effect of political efficacy is also challenging, given that these models are mainly predictive. However, this non-effect aligns with correlational studies that use voter turnout as a dependent variable (Dahl et al., 2018). Still, such a dramatic change should prompt future research to single out and investigate the mechanism linking political efficacy to the probability of belonging to the latent class of politically disengaged. Full regression tables can be found in the Online Supplementary Materials (ESM 2).

Fig. 5 Partial predicted probabilities for Zero-Inflated Beta-Binomial (\(\lambda\); probability of belonging to the latent class of politically disengaged). Note: To calculate partial predicted probabilities, covariates were either set to their mean value or, in the case of political interest, ESS round, and gender, to “quite interested”, 9, and “women”, respectively. The ribbons and error bars denote 95% credible intervals.
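The contrast reported for gender can be obtained by computing, for every posterior draw, the difference between two predicted probabilities and then summarising those differences. The sketch below shows the mechanics only: the posterior draws are synthetic numbers generated for illustration (they are not the estimates from this study), the model is reduced to an intercept and one binary predictor on the logit scale, and all names are hypothetical.

```python
import random
from math import exp
from statistics import mean, quantiles


def inv_logit(x: float) -> float:
    """Map a value from the logit scale to a probability."""
    return 1.0 / (1.0 + exp(-x))


def contrast_in_points(intercepts, slopes):
    """Per-draw difference in predicted probability (predictor = 1 minus
    predictor = 0), in percentage points: mean and 95% quantile interval."""
    diffs = [100.0 * (inv_logit(a + b) - inv_logit(a))
             for a, b in zip(intercepts, slopes)]
    cuts = quantiles(diffs, n=40)  # 39 cut points: first is 2.5%, last is 97.5%
    return mean(diffs), cuts[0], cuts[-1]


# Synthetic draws standing in for a fitted model's posterior (assumption)
rng = random.Random(1)
intercepts = [rng.gauss(0.0, 0.1) for _ in range(4000)]
slopes = [rng.gauss(0.05, 0.03) for _ in range(4000)]

estimate, lower, upper = contrast_in_points(intercepts, slopes)
print(f"{estimate:.1f} points, 95% interval [{lower:.1f}, {upper:.1f}]")
```

Working draw by draw propagates the posterior uncertainty into the contrast, which is why a small effect can be reported with an interval even when the plotted probabilities look indistinguishable.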

5 Discussion

In this paper, I argued that political participation exhibits features of both categorical and continuous latent variables, i.e. that it makes sense to (1) distinguish two latent classes: a class of politically disengaged, with people refraining from participation altogether, and a class composed of people who participate to a varying degree; and (2) allow for quantitative differences within the class of politically active. To verify those claims, I used ESS data and analyzed them with zero-inflated measurement and regression models.

Existing studies on modeling political participation as a latent variable consider the possibility of political participation being either continuous or categorical. To the best of my knowledge, no approach has gone beyond this dichotomy. Modeling participation as a continuous latent variable rests upon a very strong assumption of population homogeneity, which, as the existing studies show (Oser, Hooghe & Marien, 2013; Oser, 2017, 2021), is not plausible. Accounting for the population heterogeneity by means of LCA is also problematic because with LCA we assume that there are no quantitative differences within the homogenous groups.

To overcome the limitations of the existing approaches, I used a Zero-Inflated IRT model and a regression model closely resembling the data generating process implied by that IRT model: the Zero-Inflated Beta-Binomial. Judging by the out-of-sample predictive accuracy, zero-inflated models that account for the latent class of politically disengaged are superior to the models not accounting for the existence of that class. Accounting for the zero-inflation proved to affect various model estimates: item difficulty and discrimination estimates decreased, the distribution of the latent trait in the population moved to the right, and predicted probabilities for the participation intensity part of the regression model increased. Also, thanks to the Zero-Inflated Beta-Binomial model, I showed that one can and should model the probability of belonging to the class of politically disengaged and that the predictors of belonging to that class do not function in the same way as they do in predicting the participation intensity. Considering all the evidence, not accounting for the excess of zeros, attributed to the latent class of politically disengaged, casts doubt on the measurement validity. This doubt arises because we (1) fail to translate the theoretical concept into the measurement specification (translation error; Fariss et al., 2020; Trochim & Donnelly, 2007), and (2) leave the mechanism leading to the zero-inflation unmodeled.

Moreover, the proposed methodology outlined in this study could be extended to a variety of contexts beyond the ESS data and European countries. Particularly in regions where democracy is fragile or in transitional stages, such as some parts of Latin America, this methodology might provide valuable insights into the nuances of political participation. It could also be applicable to data from other large-scale surveys like the World Values Survey, broadening the range of possible analyses and outcomes.

Yet, this research suffers from several limitations. First, the measurement models assume normality for the latent variables, which, as the density plots suggest, may not hold. Future research should consider other population distributions for the latent trait, such as the skew-normal (Azzalini, 2014). Second, even though ESS has eight participation items, this is still relatively few, and it would be beneficial to compare models with a larger battery of items. Third, the predictors used for the model comparison cannot be interpreted causally. For that, we would need to single out causal mechanisms and consider the conditions of identification (see Keele et al., 2020). Only then would we be able to understand, for example, why political efficacy does not have any effect on belonging to the latent class of politically disengaged. Finally, unlike the LCA approach, the ZI-IRT model proposed in this study does not permit differentiation beyond two latent classes. While modeling the continuous trait and accommodating more than two latent classes might be possible in a full Factor Mixture Modelling approach, this presents additional challenges due to the complexity of fitting these models (see Clark et al., 2013). Nevertheless, this is an avenue that future research could explore.

Data Availability

Data can be found on the ESS website (http://www.europeansocialsurvey.org/).

Code Availability

Code can be found in the Electronic Supplementary Materials (ESM 3).

Notes

It suffices to say that Mokken is a probabilistic version of Guttman, which means that a subject might have participated in a more cost-demanding activity even if he or she had not taken part in a less demanding one (for more on Guttman versus Mokken scaling, see van Schuur (2003)).

It should be emphasized that the difficulty of a participation form is not the sole significant factor explaining people’s political activism (see, for example, Chapters 32–39 in Giugni and Grasso (2022), Verba, Schlozman and Brady (1995)). In this section, 'difficulty' is used more in reference to a parameter of the latent cumulative model, which particularly in the IRT tradition is referred to as 'difficulty'. The intention of this discussion is to demonstrate how this abstract parameter from the measurement model can be connected to theoretical considerations on the nature of political participation.

Full tables, including 95% credible intervals, for each country separately can be found in the Online Supplementary Materials (ESM 1).

References

Aberbach, J. D. (1969). Alienation and political behavior. American Political Science Review. https://doi.org/10.2307/1954286

Abramson, P. R., & Aldrich, J. H. (1982). The decline of electoral participation in America. American Political Science Review. https://doi.org/10.2307/1963728

Amnå, E., & Ekman, J. (2014). Standby citizens: Diverse faces of political passivity. European Political Science Review. https://doi.org/10.1017/S175577391300009X

Azzalini, A. (2014). The skew-normal and related families (Institute of Mathematical Statistics monographs). Cambridge: Cambridge University Press.

Brady, H. E., Verba, S., & Schlozman, K. L. (1995). Beyond SES: A resource model of political participation. American Political Science Review. https://doi.org/10.2307/2082425

Bürkner, P.-C. (2017). brms: An R package for Bayesian multilevel models using Stan. Journal of Statistical Software. https://doi.org/10.18637/jss.v080.i01

Carpenter, B., Gelman, A., Hoffman, M. D., Lee, D., Goodrich, B., Betancourt, M., et al. (2017). Stan: A probabilistic programming language. Journal of Statistical Software. https://doi.org/10.18637/jss.v076.i01

Clark, S. L., Muthén, B., Kaprio, J., D’Onofrio, B. M., Viken, R., & Rose, R. J. (2013). Models and strategies for factor mixture analysis: An example concerning the structure underlying psychological disorders. Structural Equation Modeling: A Multidisciplinary Journal. https://doi.org/10.1080/10705511.2013.824786

Collins, L. M., & Lanza, S. T. (2010). Latent class and latent transition analysis: With applications in the social, behavioral, and health sciences (Wiley series in probability and statistics). New Jersey: Wiley.

Dahl, V., Amnå, E., Banaji, S., Landberg, M., Šerek, J., Ribeiro, N., et al. (2018). Apathy or alienation? Political passivity among youths across eight European Union countries. European Journal of Developmental Psychology. https://doi.org/10.1080/17405629.2017.1404985

de Ayala, R. J. (2009). The theory and practice of item response theory (Methodology in the social sciences). New York: Guilford Press.

van Deth, J. W. (2001). Studying political participation: Towards a theory of everything? Presented at the joint sessions of workshops of the ECPR. Grenoble. April 2001.

ESS Round 8: European Social Survey Round 8 Data. (2016). Data file edition 2.2. NSD - Norwegian Centre for Research Data, Norway: Data archive and distributor of ESS data for ESS ERIC. https://doi.org/10.21338/NSD-ESS8-2016

ESS Round 9: European Social Survey Round 9 Data. (2018). Data file edition 2.0. NSD - Norwegian Centre for Research Data, Norway: Data archive and distributor of ESS data for ESS ERIC. https://doi.org/10.21338/NSD-ESS9-2018

Fariss, C. J., Kenwick, M. R., & Reuning, K. (2020). Measurement Models. In L. Curini & R. Franzese (Eds.), The SAGE handbook of research methods in political science and international relations (pp. 353–370). United States: SAGE Publications Ltd.

Farthing, R. (2010). The politics of youthful antipolitics: Representing the ‘issue’ of youth participation in politics. Journal of Youth Studies. https://doi.org/10.1080/13676260903233696

Feng, C. X. (2021). A comparison of zero-inflated and hurdle models for modeling zero-inflated count data. Journal of Statistical Distributions and Applications. https://doi.org/10.1186/s40488-021-00121-4

Finifter, A. W. (1970). Dimensions of political alienation. American Political Science Review. https://doi.org/10.2307/1953840

Finkelman, M. D., Green, J. G., Gruber, M. J., & Zaslavsky, A. M. (2011). A zero- and K-inflated mixture model for health questionnaire data. Statistics in Medicine. https://doi.org/10.1002/sim.4217

Fox, S. (2015). Apathy, alienation and young people: the political engagement of British millennials. PhD Thesis. University of Nottingham.

García-Albacete, G. M. (2014). Young people’s political participation in western Europe. UK: Palgrave Macmillan.

Gelman, A., Jakulin, A., Pittau, M. G., & Su, Y.-S. (2008). A weakly informative default prior distribution for logistic and other regression models. The Annals of Applied Statistics. https://doi.org/10.1214/08-AOAS191

Gelman, A., Simpson, D., & Betancourt, M. (2017). The prior can often only be understood in the context of the likelihood. Entropy. https://doi.org/10.3390/e19100555

Giugni, M., & Grasso, M. (2022). The Oxford Handbook of Political Participation. Oxford: Oxford University Press.

Hooghe, M., & Marien, S. (2013). A comparative analysis of the relation between political trust and forms of political participation in Europe. European Societies. https://doi.org/10.1080/14616696.2012.692807

Jeroense, T., & Spierings, N. (2022). Political participation profiles. West European Politics. https://doi.org/10.1080/01402382.2021.2017612

Johann, D., Steinbrecher, M., & Thomas, K. (2020). Channels of participation: Political participant types and personality. PLoS ONE. https://doi.org/10.1371/journal.pone.0240671

Keele, L., Stevenson, R. T., & Elwert, F. (2020). The causal interpretation of estimated associations in regression models. Political Science Research and Methods. https://doi.org/10.1017/psrm.2019.31

King, G. (1998). Unifying political methodology: The likelihood theory of statistical inference. Ann Arbor: University of Michigan Press.

Koc, P. (2021). Measuring non-electoral political participation: Bi-factor model as a tool to extract dimensions. Social Indicators Research. https://doi.org/10.1007/s11205-021-02637-3

Koc, P., & Pokropek, A. (2022). Accounting for cross-country-cross-time variations in measurement invariance testing. A case of political participation. Survey Research Methods. https://doi.org/10.18148/srm/2022.v16i1.7909

Kostelka, F. (2014). The state of political participation in post-communist democracies: Low but surprisingly little biased citizen engagement. Europe-Asia Studies. https://doi.org/10.1080/09668136.2014.905386

Lambert, B. (2018). A student’s guide to Bayesian statistics. Los Angeles: SAGE.

Letki, N. (2004). Socialization for participation?: Trust, membership, and democratization in east-central Europe. Political Research Quarterly. https://doi.org/10.1177/106591290405700414

Li, O. (2021). Grievances and political action in Russia during Putin’s rise to power. International Journal of Sociology. https://doi.org/10.1080/00207659.2021.1930882

Lubke, G. H., & Muthén, B. (2005). Investigating population heterogeneity with factor mixture models. Psychological Methods. https://doi.org/10.1037/1082-989X.10.1.21

Magnus, B. E., & Garnier-Villarreal, M. (2022). A multidimensional zero-inflated graded response model for ordinal symptom data. Psychological Methods. https://doi.org/10.1037/met0000395

Magnus, B. E., & Liu, Y. (2018). A zero-inflated box-cox normal unipolar item response model for measuring constructs of psychopathology. Applied Psychological Measurement. https://doi.org/10.1177/0146621618758291

Marien, S., Hooghe, M., & Quintelier, E. (2010). Inequalities in non-institutionalised forms of political participation: A multi-level analysis of 25 countries. Political Studies. https://doi.org/10.1111/j.1467-9248.2009.00801.x

Marsh, A. (1974). Explorations in unorthodox political behaviour: A scale to measure “protest potential.” European Journal of Political Research. https://doi.org/10.1111/j.1475-6765.1974.tb01233.x

Marsh, A. (1977). Protest and political consciousness. Los Angeles: Sage Publications.

McElreath, R. (2020). Statistical Rethinking: A Bayesian Course with Examples in R and Stan. Boca Raton: CRC Press.

Milbrath, L. W. (1965). Political participation: How and why do people get involved in politics? Chicago: Rand McNally College.

Ohme, J., de Vreese, C. H., & Albæk, E. (2018). From theory to practice: How to apply van Deth’s conceptual map in empirical political participation research. Acta Politica. https://doi.org/10.1057/s41269-017-0056-y

Oser, J. (2017). Assessing how participators combine acts in their “political tool kits”: A person-centered measurement approach for analyzing citizen participation. Social Indicators Research. https://doi.org/10.1007/s11205-016-1364-8

Oser, J. (2021). Protest as one political act in individuals’ participation repertoires: Latent class analysis and political participant types. American Behavioral Scientist. https://doi.org/10.1177/00027642211021633

Oser, J., & Hooghe, M. (2018). Democratic ideals and levels of political participation: The role of political and social conceptualisations of democracy. The British Journal of Politics and International Relations. https://doi.org/10.1177/1369148118768140

Oser, J., Hooghe, M., & Marien, S. (2013). Is online participation distinct from offline participation? A latent class analysis of participation types and their stratification. Political Research Quarterly. https://doi.org/10.1177/1065912912436695

Parry, G., Moyser, G., & Day, N. (1992). Political participation and democracy in Britain. Cambridge: Cambridge University Press.

Persson, M. (2013). Education and Political Participation. British Journal of Political Science. https://doi.org/10.1017/S0007123413000409

Quaranta, M. (2013). Measuring political protest in Western Europe: Assessing cross-national equivalence. European Political Science Review. https://doi.org/10.1017/S1755773912000203

Quaranta, M. (2018). Repertoires of political participation: Macroeconomic conditions, socioeconomic resources, and participation gaps in Europe. International Journal of Comparative Sociology. https://doi.org/10.1177/0020715218800526

Quintelier, E. (2007). Differences in political participation between young and old people. Contemporary Politics. https://doi.org/10.1080/13569770701562658

Talò, C., & Mannarini, T. (2015). Measuring participation: Development and validation the participatory behaviors scale. Social Indicators Research. https://doi.org/10.1007/s11205-014-0761-0

Teorell, J., Torcal, M., & Montero, J. R. (2007). Political participation: Mapping the terrain. In J. W. van Deth, J. R. Montero, & A. Westholm (Eds.), Citizenship and involvement in European democracies: A comparative analysis (pp. 334–357). London: Routledge.

Theocharis, Y., & van Deth, J. W. (2018). The continuous expansion of citizen participation: A new taxonomy. European Political Science Review. https://doi.org/10.1017/S1755773916000230

Trochim, W. M. K., & Donnelly, J. P. (2007). The research methods knowledge base. Mason, OH: Cengage Learning.

van Deth, J. W. (1986). A note on measuring political participation in comparative research. Quality and Quantity. https://doi.org/10.1007/BF00227430

van Deth, J. W. (2014). A conceptual map of political participation. Acta Politica. https://doi.org/10.1057/ap.2014.6

van Deth, J. W., & Theocharis, Y. (2018). Political participation in a changing world: Conceptual and empirical challenges in the study of citizen engagement. New York: Taylor & Francis Ltd.

van Schuur, W. H. (2003). Mokken scale analysis: Between the Guttman scale and parametric item response theory. Political Analysis, 11(2), 139–163.

Vehtari, A., Gelman, A., & Gabry, J. (2017). Practical Bayesian model evaluation using leave-one-out cross-validation and WAIC. Statistics and Computing. https://doi.org/10.1007/s11222-016-9696-4

Verba, S., Nie, N. H., & Kim, J. (1978). Participation and political equality: A seven-nation comparison. Cambridge: Cambridge University Press.

Verba, S., Schlozman, K. L., & Brady, H. E. (1995). Voice and equality: Civic voluntarism in American politics. Cambridge: Harvard University Press.

Vráblíková, K. (2014). How context matters?: Mobilization, political opportunity structures, and nonelectoral political participation in old and new democracies. Comparative Political Studies. https://doi.org/10.1177/0010414013488538

Wall, M. M., Park, J. Y., & Moustaki, I. (2015). IRT modeling in the presence of zero-inflation with application to psychiatric disorder severity. Applied Psychological Measurement. https://doi.org/10.1177/0146621615588184

Acknowledgements

The author thanks Natalia Letki, Artur Pokropek, and Tomasz Żółtak for helpful comments on previous versions of this work.

Funding

No funding was received to assist with the preparation of this manuscript.

Ethics declarations

Conflict of interest

The author has no relevant financial or non-financial interests to disclose.

Appendix

Bayesian estimation details.

Thresholds in the code from Magnus and Garnier-Villarreal (2022) are reparametrized to the IRT metric, i.e. the thresholds from the Ordered-Logit distribution, \({\varvec{\kappa}}\), are divided by the slope parameters, \(\alpha\). Yet, for the specification of the priors, the original metric from the Ordered-Logit distribution is used. The following set of weakly informative priors is applied:

For the Zero-Inflated Beta-Binomial model with predictors for both parts of the model (Model 4), we could write down the model specification as follows:

The \(\sigma\)s and the parameters with bars (the bar denoting an average) are hyperparameters, i.e. parameters for parameters. The normal distributions with means \(\overline{\alpha }\), \(\overline{\gamma }\), \(\overline{\omega }\) and standard deviations \(\sigma\) are the priors for each country intercept. These priors in turn have their own priors for \(\overline{\alpha }\), \(\overline{\gamma }\), \(\overline{\omega }\) and the \(\sigma\)s. Hence, there are two levels in the model, each resembling a simpler model (McElreath, 2020). \(\sum{\varvec{\beta}}{\varvec{X}}\) is a compact notation for multiple predictors.

The priors were chosen to be weakly informative: they preclude implausibly large parameter values from being sampled while having only a small influence on the posterior distribution in the range of plausible parameter values (Gelman et al., 2008). To make sure they are weakly informative, I ran prior predictive simulations (see Gelman et al., 2017). The priors remain the same for the Beta-Binomial model and the zero-inflated model without the set of five predictors for the probability of belonging to the latent class of politically disengaged. These models have only a subset of the parameters, for example no predictors or no model for \(\lambda\).

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Koc, P. Beyond Continuous versus Categorical Dichotomy: Uncovering Latent Structure of Non-electoral Political Participation Using Zero-Inflated Models. Soc Indic Res 171, 215–236 (2024). https://doi.org/10.1007/s11205-023-03250-2

DOI: https://doi.org/10.1007/s11205-023-03250-2