Abstract

This paper demonstrates a simple methodological sequence for the statistical calculation of a context-sensitive quality of life index especially suitable for use in the Global South, i.e. lower and middle income countries. We draw on the large (n = 24,889), area-sampled, survey dataset of the fifth Quality of Life Survey (QoL V, 2017/18) of South Africa’s Gauteng province, conducted by the Gauteng City-Region Observatory. Using overtly exploratory analysis, and a fully reflective conception of indicators, we apply exploratory factor analysis (EFA) in two stages to generate our model, while defending our approach methodologically and empirically and indicating its philosophical foundations. We contrast this approach to previous analyses using the QoL I (2010) data, which defined their dimensions and assign their indicators in advance, drawing on literature and indexes largely from the Global North. We ran series of EFAs on 60 longitudinally available variables from the QoL V data set. This allowed us to determine the optimal number of factors/dimensions, guided by established criteria and the interpretability of the grouped indicators. Each dimension score is calculated arithmetically, using the indicators’ factor loadings as weights. Then the dimensions, weighted by their eigenvalues, are aggregated into the composite index, scaled to run from 0 to 100. The resulting seven-dimension, 33-indicator model was validated through confirmatory factor analysis (CFA) on the QoL V data; and its configural invariance, i.e. whether the pattern of indicators amongst the factors holds over time, was checked against the two previous QoL datasets and confirmed. This validates the ability of the approach outlined to generate a stable index. Analysis of the resulting quality of life index by race and municipality reveals trends consistent with the South African context. Overall, the White population group has the highest measured quality of life index, followed in turn by the Indian/Asian, Coloured and Black African population groups. Changes in the quality-of-life ranking of the nine municipalities comprising Gauteng province may be observed over time, using the validated previous models. The value of the exploratory approach in enabling context to influence the index construction is highlighted by the emergence of a distinct dimension of bottom-up political voice, which is relevant for democratic governance.

Similar content being viewed by others

Explore related subjects

Find the latest articles, discoveries, and news in related topics.Avoid common mistakes on your manuscript.

1 Introduction

Enhancing quality of life is a key objective of government and civil society globally. The creation of a composite quality of life index provides a statistically founded tool to measure progress and challenges in quality of life, creating a set of scores which is relatively straightforward to understand and interpret (Mazziotta & Pareto, 2017). To date, however, much of the work on creation of these indexes is driven by theoretical and empirical work shaped in the Global North. We argue that the creation of an index outside of this context must be empirically informed by an understanding of the relevant particularities of the context in question, rather than by application of an externally derived theoretical framework.

In this paper, we motivate the methodological and philosophical appropriateness of our approach, prior to using large-sample survey data from Gauteng province, South Africa to demonstrate a relatively straightforward statistical sequence for the calculation of a context-sensitive quality of life index. A large-scale, area-sampled Quality of Life Survey (QoL) has been conducted biennially since 2009 by the Gauteng City-Region Observatory (GCRO). To generate the index model, we apply exploratory factor analyses (EFA) in two stages to 60 longitudinally available variables in the data from this survey’s fifth iteration, QoL V (2017/18) n = 24,889. This yields a seven-dimensional, 33-indicator model, which is validated using confirmatory factor analysis (CFA) on the QoL V dataset. Configural invariance is additionally confirmed using the two previous survey datasets (i.e. QoL IV (2015/16) and QoL III (2013)), suggesting that the index model can be used over time to generate longitudinally comparable results.

Following identification and validation of the model, each dimension score is calculated arithmetically, using the indicators’ factor loadings as weights. These dimension scores, weighted by their eigenvalues, are then aggregated into a composite index, scaled to run from 0 to 100. We compare this construction of the index to the GCRO’s previous approach to index calculation (Everatt, 2017), and identify noteworthy differences in both the number and constitution of dimensions. We also highlight the importance of the exploratory approach in generating a context-sensitive grouping of indicators, for example enabling the emergence of a distinct dimension representing bottom-up political voice. While not a standard feature of many quality of life indexes, its importance for democracy has been emphasised by Stiglitz et al. (2009).

The aim of this study is therefore to devise a data-driven methodology for constructing a quality of life index in the context of South Africa (and Gauteng province in particular), a middle income country. But we expect that the straightforward methodological approach we outline to data-handling, model generation, validation, and index calculation, is suitable for application elsewhere in other low and middle income countries.

2 The GCRO’s Biennial QoL Surveys

2.1 Importance of the Surveys in the South African Context

Contemporary South Africa continues to struggle with many objective legacies bequeathed by the apartheid era (Statistics South Africa, 2019): racially aligned poverty, inequality, unemployment and spatial segregation. These come with behavioural and subjective concomitants including high levels of crime and violence (South African Police Service, 2017), widespread ongoing protests and pervasive mental distress (Mungai & Bayat, 2019). These challenges have proved intractable, and improvement has been gradual and uneven. These circumstances have been the subject of plans at national level (National Planning Commission, 2012) and below, along with innumerable local and international academic and stakeholder studies. The vast range of resulting official and academic statistics that are continually reported in the media make it nigh impossible for political principals, civil society, and laypersons alike to achieve an informed overall view of progress and change.

In this context, quality of life studies have played and continue to play an essential role in selecting salient indicators, integrating them into suitable dimensions and domains, and supplying a one-number index that can be tracked and differentiated, regionally and across relevant social groupings. Notable quality of life surveys were conducted and repeated in South Africa by independent academics during and since the apartheid years (Møller, 2014), and have been sustained in biennial national studies since the onset of democracy by the government-funded Human Sciences Research Council (Rule, 2007), while numerous once-off quality of life studies with particular topical or geographical foci continue to be mounted (Møller & Roberts, 2014).

Encouraged by the Stiglitz et al. report (2009), which both emphasised the importance of quality of life in sustainable development and stressed the feasibility of sample surveys of objective and subjective aspects of quality of life, the GCRO was established as a partnership of the University of the Witwatersrand, Johannesburg, the University of Johannesburg, the Gauteng Provincial Government and organised local government. A key objective of the GCRO is to provide government, researchers and civil society with regular and academically sound research and insight into key contributors to individual and societal well-being, including household circumstances, service delivery, perception of government, social attitudes, health, life satisfaction etc. This information has been collected by GCRO since 2009 through five large-scale, biennial, carefully area-sampled, surveys of randomly selected adult respondents in households across the province (Everatt, 2017; GCRO, 2014, 2016, 2019; de Kadt et al., 2019). To provide an accessible tool for rapid assessment of overall wellbeing in the province, survey data was used to calculate a single-number QoL index score from the outset.

Because of the variety of official and academic user needs, these surveys have had to be ‘omnibus’ in nature. Nevertheless they are named the Quality of Life Surveys, from QoL I in 2009, to QoL V in 2017/18. (The results of QoL VI were released in 2021, following completion of the analysis presented here.) The surveys carry both core and rotating modules that include, but are not confined to, variables relevant to the typical dimensions of quality of life identified by Stiglitz et al. (2009). The original range of questionnaire items, including those devoted or applicable to quality of life, was informed by an overview of relevant quality of life and other surveys (Jennings, 2012).

Given the breadth of coverage, the questionnaires are long, taking approximately an hour each on average (Orkin, 2020). So they have to be conducted face-to-face. Gauteng is South Africa’s most densely populated province, with its 15 million residents comprising a quarter of the country’s population (Statistics South Africa, 2020), yet contained in only 1.4% of the country’s land area. Even so, its residents live in dramatically different milieux, from urban houses and apartments through to effectively racially segregated ‘townships’Footnote 1 enduring from the apartheid era, dense informal settlements, and even some still-rural areas (Statistics South Africa, 2019). As a result, the surveys are expensive to mount, and achieving specified coverage and response rates is arduous. Access to sampled households sometimes requires multiple repeat visits out of working hours. The spatial sample distribution for the GCRO QoL Survey V (2017/18), stratified across the nine municipalities of Gauteng, and drawing from the 529 wards, is mapped in Fig. 1.

2.2 The Original Approach to Generating the Quality of Life Index

Over time, the GCRO QoL surveys have steadily expanded in scope and sample: from 187 questions and 6,600 households in QoL I to 248 questions and 24,800 households in QoL V (Orkin, 2020). A core set of 123 questions has been sustained across survey iterations. To create the original QoL index, guided by a review of the literature, a generous subset of 54 questions arranged into ten domains was specified at the outset (Everatt, 2017). By QoL V the number of questions feeding into the QoL index had increased slightly to 58.

These questions are a mix of ‘objective’ items (e.g., type of housing) and ‘subjective’ items (e.g., reported satisfaction with various aspects of life). It is not documented whether a range of experts (Greco, 2019) or stakeholders (Hagerty et al., 2012) were consulted for this original specification, to avoid the subjectivity of the developer’s perceptions (Booysen, 2002). The items are shown in the left-hand column of Fig. 2 (in Sect. 5.2), arranged into the original ten dimensions, and also with their levels of measurement in “Appendix”.

In calculating this original index, the selected questions—comprising dichotomies, three-option questions, and five-point Likert scales—were firstly reduced to dichotomies. Missing or inapplicable values were set to 0, resulting in their being treated as negative responses. The dichotomies, varying in number within in each dimension, were summed by dimension to yield the 10 dimension scores. These were scaled to run from 0 to 10, and then summed to yield the aggregate QoL index, in turn scaled to run from 0 to 10. Since the number of items per dimension ranged from 4 to 12, this approach was not unweighted, but amounted to an implicit, unequal weighting per item (Mikulić et al. 2015). An alternative approach was subsequently developed. This by-passed the dimensions and directly summed over all 54 dichotomies, and then scaled the total from 0 to 100. Use of this version has remained limited, and it will not be considered further. The questions, their dichotomisation, the arrangement into 10 dimensions, and the arithmetical compilation into dimension scores and an aggregated index were retained with negligible variation over the ten years from QoL I through to QoL V.

In preparation for the third survey, GCRO commissioned an exercise (Greyling, 2013) to consider alternative possible weightings of variables or dimensions during index compilation. This drew on quality of life theory and existing international indices to define 7 dimensions in advance, and select 27 of the 54 original indicator variables available from the QoL I survey. Using the QoL I data, it applied successive iterations of principal components analysis (PCA) to eliminate variables with the lowest communalities, and then applied a further PCA with varimax rotation to the remaining 15 variables. This approach did not—to anticipate the vocabulary of the next section—distinguish between reflective and formative indicators. The PCA indicated five dimensions, following the customary critera of eigenvalues greater than one, a scree plot, and interpretability (Tabachnik and Fidell 2007). Finally, the exercise followed the method of Nicoletti et al. (2000) in calculating each dimension’s score by scaling to unity the sum of the squared loadings of its variables; and then in aggregating the final quality of life score as the sum of the dimension scores, after weighting them by their share of the total variance (i.e. their eigenvalues).

The aim of this exercise was to see whether it ‘generated results that were significantly different’ from GCRO’s arithmetically compiled, 10-dimension index. According to Everatt (2017) ‘the simple answer was “no”.’ The original approach was accordingly retained through to QoL V (De Kadt and Crowe-Petterson 2020).

2.3 Revisiting the QoL Index Variables, Dimensions and Calculations

In 2019 GCRO commissioned a review of the overall QoL survey programme, and a synthesis report (Orkin, 2020). An element of the review was that the four authors of this paper consider the data-handling and the steps of index construction used to date, and quantify how the results of a suitable statistically-based computation of the index might or might not differ from those of the prevailing approach. An alternative approach was developed, initially drawing on the much larger dataset of 24,889 responses available from QoL V of 2017/18 (GCRO 2018). As described in Sect. 4, we worked with the slightly increased selection of 58 quality of life variables, incorporated just 3 additional variables, corrected the processing of the missing or inapplicable values, and retained the ordinal Likert scaling of variables where it was used. Our application of exploratory polychoric factor analysis, and differently weighted compilations of dimensions and of the final index, were informed—but not dictated—by the indexing literature in the review (Orkin, 2020), including the approach previously recommended by the Greyling (2013) exercise and its subsequent iterations (Greyling and Tregenna 2017), which were still based on the QoL I data.

An additional objective of this work was to achieve a sound and well-precedented statistical approach that would be straightforwardly replicable in subsequent GCRO QoL analyses, in other South African provinces, and by lower and middle income countries in Africa and elsewhere. We believe that this further expectation is met by the stages of our analysis, yielding a more parsimonious set of 33 variables, re-arranged in 7 rather than 10 quality of life dimensions that are conceptually coherent and theoretically familiar. The stages are described in Sect. 4, Methodology.

Commensurately with the indexing process, Sect. 4 also details how we used CFA to confirm the robustness of the dimensional structure of this model against the two immediately preceding QoL IV and QoL III survey datasets (GCRO 2014; GCRO 2016), which provided similarly ample sample sizes. By contrast, we found that the original ten-dimensional, 58-variable model (Everatt, 2017) could not be fitted to these three datasets with a CFA at all.

As the literature makes clear, conceiving and constructing a composite quality of life index that is conceptually and statistically defensible, longitudinally comparable, and user-friendly, requires various informed methodological and theoretical choices; and, we would suggest, ultimately even philosophical standpoints. These arise from the complex nature of the observed societal ‘reality’ and the particular focus of quality of life, as well as the nature of quantitative indicators and their compilation first into dimensions and then a quality of life index (Maggino, 2014; Maggino & Zumbo, 2012).

Accordingly, in Sect. 3, Theory, we seek to define quality of life and attend briefly to the issue of whether it is appropriate to aggregate dimensions into a composite index. But, in particular, our approach must be defensible in the context of recent, vigorous debate in the quality of life literature about the nature and appropriate statistical treatment of ‘reflective’ against ‘formative’ indicators. Reflective indicators are exemplified in the scaling of items that are the observable manifestations of an unobservable latent construct such as depression, i.e. they can be said to be ‘caused by’ it. By contrast, formative indicators are observable items that jointly comprise a construct broader concept such as socio-economic status, i.e. ‘cause’ it; and by extension the construct may not be regarded as an ‘underlying’ construct at all. However, this too may be contested, which is where a philosophical debate will briefly become relevant. But we shall see that quality of life indices in practice have often treated formative as well as reflective indicators reflectively, and that to do so has even been argued to be empirically preferable. Without attending to the issue, Stiglitz et al. (2009) clearly expected that both formative and prima facie reflective items, and by extension dimensions, would be present in a quality of life survey; and this is often the case, especially in respondent- rather than country-level surveys.

In Sect. 3 we therefore defend a coherent route through selected contributions to this demanding debate at the different levels, while noting the wise view of Greco et al. (2019) that ‘each choice made for the construction of a composite index appears to be “between the devil and the deep blue sea”.’ Therefore, we have accorded with his recommendation that ‘robustness analysis should follow the construction of an index’.

Finally, Sect. 5, Results, compares results from our reworked approach with the original approach, to provide some revealing indicative findings. Section 6, Discussion, reflects on illustrative differentiations of the results, and limitations and implications of the investigation.

3 Theory: Definitions and Debates

3.1 The Concept of Quality of Life

As Stiglitz et al. (2009) allow, the term ‘quality of life’ is frequently used interchangeably with ‘well-being’. According to Gasper (2010), ‘well-being’ is often used in psychology or health literature to refer to experiences or circumstances as experienced by an individual person, whereas ‘quality of life’ is more often used in sociology and social policy to include the societal environment in which individuals find themselves. Nonetheless, there is substantial overlap between the concepts, which extends to the measures and approaches used to quantify them. So, instruments for each tend to incorporate variables from the other (Lourenço et al., 2019). We shall generally refer to quality of life unless a source indicates otherwise.

On Glatzer’s (2012) distinction, indicators that capture individuals’ subjective experiences or circumstances (e.g. ‘satisfaction with your life as a whole’ or ‘feeling secure in your neighbourhood’) are said to measure quality of life subjectively. On the other hand, quality of life is said to be measured objectively when individuals’ living conditions (e.g. housing, or environmental conditions) are assessed without asking them for their opinion. (Respondents’ personal reports of objective features, e.g. of their income, are generally also classified as objective.)

The OECD (2020), for its country-level comparisons primarily using objective administrative and official data, has recently defined ‘well-being’ by 14 dimensions. Somewhat confusingly, it labels 11 of these dimensions as ‘quality of life’: work-life balance, health, education, social connections, civic engagement and governance, environmental quality, personal safety and subjective well-being. The other 3 dimensions are ‘material conditions’: income and wealth, jobs and earnings, and housing. We may take all 14 dimensions as a comprehensive ostensive definition of quality of life.

Several well-known quality of life surveys using objective or subjective indicators, aggregated into differing numbers of dimensions, are mentioned in Sect. 3.2 below. The individual-level GCRO QoL surveys contain both objective and subjective indicators as reported by respondents—this is noted in Sect. 4.1.

3.2 Composite Indices of Quality of Life

Nardo et al. (2005) define a composite index as a single index derived from the process of compiling individual variables based on a model designed to measure a multidimensional concept. Similarly, Michalos et al. (2011) define a composite index of well-being as a unidimensional index encapsulating a multidimensional conception of human well-being. Such a composite index is valuable because it permits simple communication about quality of life and its changes to an everyday audience, even though quality of life itself should not be regarded as a unidimensional socio-economic phenomenon that can be measured by a single variable (Mazziotta & Pareto, 2017).

Typically, a composite index is constructed in two broad steps: first by combining specifically operationalised variables—which can be put as questionnaire items to respondents, or extracted from administrative data—into several intermediate-level composite dimensions or constructs that reflect distinct aspects of quality of life (OECD, 2008); and then by aggregating the several dimensions into the single composite index (Mazziotta & Pareto, 2017).

The choice of whether or not to aggregate dimensions into a single index is debated in the literature (Greco et al., 2019; Saltelli, 2007). Proponents of stopping at the first step and retaining separate dimensions of quality of life argue that there is unacceptable ‘information loss’ (Lourenço et al., 2019) in the use of a single index, which may mask important changes in the dimensions. Instead, they advocate a ‘dashboard’ approach, by which the different dimensions of quality of life are individually monitored (Mazziotta & Pareto, 2017; OECD, 2008). Others (Saltelli, 2007) argue that this approach adds complexity and difficulty in communicating the results, especially to non-technical policy makers. Stakeholders, particularly those within government or the media, may expect and use a single composite index score, even as they may also be keen to engage with its component dimensions.

For example, in Italy, a newspaper published its composite quality of life index broken down by province, based on six dimensions of six variables each, and its trends have been widely scrutinised (Lun et al., 2006). Similarly, GCRO’s previous composite QoL index results have been launched biennially in a high-profile event attended by the Premier of the Gauteng province. The results receive extensive media attention, for example in comparisons of the overall quality of life standing of the province’s nine constituent municipalities (Hosken, 2018). Changes in municipal standing have been considered on the basis of each of the ten different constituent dimensions, as well as the overall quality of life score.

Accordingly, in re-working the previous GCRO QoL index this paper retains the overall two-step approach. Indicator variables are compiled statistically into different dimensions of quality of life, which can be presented and monitored on a ‘dashboard’. But in addition, the constructs are statistically aggregated into a single composite index of quality of life.

3.3 Theoretical Framework, with Applicable Schema from Prior Cognate Studies

The OECD Handbook (2008) provides a checklist for the creation of a composite index: theoretical framework, data selection, imputation of missing data, normalization of variables, multivariate analysis, weighting and aggregation, sensitivity analysis, and identification of main drivers (The last two items are beyond the scope of this paper). Mazziotta and Pareto (2017) have digested similar broad guidelines from a review of several instances. The theoretical framework ‘provides the basis for the selection and combination of variables into a meaningful composite indicator under a fitness-for-purpose principle’, with the aim of getting ‘a clear understanding and definition of the multidimensional phenomenon to be measured’. The remainder of this section, along with the next, explore approaches to establishing a theoretical framework to guide the creation of a composite index, and highlight how our approach differs.

Nine analogous studies were reassuring to different extents in their conception and number of indicators and dimensions, and their approach to aggregating dimension scores into a one-number index. Estes (2019) constructed the Weighted Index of Social Progress (WISP) based on forty indicators grouped into ten dimensions, namely education, health status, economic, demographic, environmental, social chaos, cultural diversity, and welfare effort. The statistical weights applied to the indicators and dimensions were derived from a two-stage principal component analysis and oblique factor analysis. The Australian Personal Well-being Index (Cummins et al., 2012) measures subjective well-being by averaging the level of satisfaction across seven dimensions of quality of life, namely standard of living, health, achieving in life, personal relationships, personal safety, community-connectedness and future security. Stanojević and Benčina (2019) constructed a composite index of well-being based on three dimensions, i.e. health and education, political system and economy, by computing a weighted sum of indicators from factor analysis.

Ihsan and Aziz (2019) ranked districts in three provinces of Pakistan on the basis of quality of life, with a set of 31 variables grouped into seven dimensions. They used the percentage variance explained by each of the factors, the eigenvalues, as weights in the aggregation of the index. Verdugo et al. (2005) built on a polychoric correlation matrix because their 41 items were ordinal in scale. They validated their five-dimension structure with a confirmatory factor analysis enriched by fit statistics. Helmes et al. (1998) noticed that oblique rotation improved the fit of their factor structure to the data.

But the devil is in the detail. In the context of South Africa, in the latest of their three successive papers Greyling and Tregenna (2020) retained the classical approach from their earlier two papers (Greyling, 2013; Greyling and Tregenna 2017): of defining their sets of dimensions a priori, by comparison with previous international quality of life studies. The dimensions in these studies are summarised in Table 1 in Greyling and Tregenna (2020). Their chosen dimensions are housing and infrastructure, social relationships, socio-economic status (SES), health, safety, and lastly ‘governance’. Then, again guided by the literature, they selected and assigned 33 variables to the six dimensions, out of the items used for quality of life in QoL I analysis; used EFA on each such dimension successively to check its unidimensionality; and finally validated the six-dimension model with CFA.

The point to carry forward is that their last dimension, ‘governance’ is incompletely defined and populated. A fuller understanding of this dimension is elaborated at length in Stiglitz et al. (2009) as ‘governance and voice’ [our italics]: the top-down emphasis of governance is importantly complemented (or countered!) by the bottom-up emphasis of citizen voice and active engagement. As stated (p. 177),

Political voice is an integral dimension of quality of life, having both intrinsic and instrumental worth. Intrinsically, the ability to participate as full citizens, to have a say in the framing of public policy, to be able to dissent without fear and to speak up against perceived wrongs—not only for oneself but also for others—are essential freedoms and capabilities. Political voice can be expressed both individually (such as by voting) and collectively (such as joining a protest rally).

Given the restricted emphasis of Greyling and Tregenna (2020) on governance, in choosing five variables for this dimension they include only one variable that, abstractly, addresses bottom-up voice and engagement: ‘Politics is not a waste of time’. This is an item on political alienation drawn from a survey by Everatt and Orkin (1993). Their other four items are respondents’ perceptions of top-down processes: ‘Country is going in right direction’, ‘Elections were free and fair’, ‘Satisfaction with local government’, and ‘Judiciary is free’.

This seemingly minor conceptual attenuation has methodological and empirical implications. We find empirically that our approach, within a robust model, results in a seventh dimension, distinct from Greyling and Tregenna’s (2020) largely top-down governance dimension of satisfaction with different levels of government. It comprises four bottom-up indicators of active political voice and engagement, close to that envisaged by Stiglitz et al. in the quotation above. As will be seen in Sect. 5, Results, the four indicators are: ‘Participated in the activities of any clubs’; ‘Attended community development forum’; ‘Communicated with municipality’; and ‘Voted in the 2016 local election’.

Methodologically, this points to the worth of our taking full advantage of the exploratory capacity of EFA, to both identify and populate dimensions, while drawing on the full set of available indicators (by the time of QoL V). By allowing the EFA to generate configurations of indicators and constituent variables, for empirically informed consideration, we let the relationships in the data—the painstakingly elicited responses of some 24,800 diverse Gauteng residents to 60 questions —revail on which indicators were to be selected, and how they congregate into dimensions. We believe that this is especially appropriate in very unevenly developed lower and middle income country contexts such as South Africa, where the patterns across multiple responses, and indeed the importance attached to the questions, may differ considerably in dense informal shack settlements or deep rural villages from those in formal suburban housing, for example. In this vein, within an overview of surveys in such contexts (Land et al., 2012), Shin (2008) stresses that despite Korea’s transition ‘from a low-income country into an economic powerhouse…Koreans neither interpret nor value democracy in the same way as Westerners do’. We believe that the manner and extent to which this variously manifests in the quality of life should be allowed, by the methodology, to be an empirical matter as far as possible.

Finally, there is the philosophical debate underlying the ‘exploratory’ approach to generating theory (or, more modestly, multidimensional quality of life models) out of data analysis, as against the ‘hypothetico-deductivist’ approach of testing prior theory (models) against the data, that we have now encountered. Indeed, operationally, they eventually converge, in that the exploratory approach will seek—in these instances—to validate its model by the same technique of CFA that is the end-point of the hypothetico-deductivism. This philosophical debate is beyond the scope of this paper, but we touch upon it in Sect. 3.5.

3.4 On the Nature of Quality of Life Indicators and Measurement Models: The Reflective/Formative Debate

The next, second item of the OECD Handbook’s checklist, data selection, conceals difficult and far reaching presuppositions: are the variables under consideration, and the dimensions into which they will be somehow combined as measurement models, ‘reflective’ or ‘formative’? As preliminarily characterised in Sects. 1 and 2, observable indicators are regarded as reflective if they are functions of a latent construct or variable, ‘whereby changes in the latent variable are reflected (i.e. manifested) in changes in the observable indicators’ (Diamantopoulos & Siguaw, 2006). An example of reflective variables is the list of questionnaire items put to respondents to measure the underlying construct of depression.

By contrast, sets of variables are said to be formative when ‘combined to form weighted linear composites intended to represent theoretically meaningful concepts’ (Edwards, 2011). For example, changes in any of the observable variables comprising socio-economic status—education, income, etc.—will result in changes in the composite variable to which they contribute. Precisely speaking, the reflective variables associated with a latent construct comprise a scale, while formative indicators jointly comprise an index. The contrast between them is sometimes expressed causally (Diamantopoulos & Winklhofer, 2001): the ‘direction of causality’ runs from a latent construct to its reflective indicators, but in the other direction from formative indicators to the construct, typically a composite index. The former are correctly handled by factor analysis and traditional structural equation modelling, and the latter by principal components analysis (Tabachnik and Fidell 2007) or, more recently, by partial least squares structural equation modelling (Hair et al., 2021).

There is discussion as to whether this distinction should be made primarily on empirical or theoretical grounds. Both are favoured by Finn and Kayande (2005), Petter et al. (2007), and Coltman et al. (2008). Additional relevant considerations are that reflective indicators in a scale are expected to be highly correlated, and some may thus be abandoned without changing the construct; whereas formative indicators in an index may well not be highly correlated, so that abandoning one may substantially change the conception of the composite index. Indicators and their respective constructs constitute measurement models, whereas structural models establish relationships among constructs (Jarvis et al., 2003; Maggino, 2014). It is possible that that the measurement models for the extraction of dimensions may be conceived as reflective, and the structural model for the aggregation of dimension scores into an index may be conceived as formative (Jarvis, 2003), or vice versa; or they may match.

In treating formative indicators as reflective or vice versa, the measurement model is said to be mis-specified. But, as Diamantopoulos and Siguaw (2006) note, ‘determining the nature and directionality of the relationship between a construct and a set of indicators can often be far from simple’. They show that the implications can be far-reaching. In an exercise comparing reflective and formative constructions of a dimension, they find that the number of indicators varies, and indeed that the indicators finally included are disparate.

In applying these careful distinctions to the original, stipulative GCRO approach, it appears initially (see Fig. 2) that the objective indicators constituting three of the dimensions were formative: in the socio-economic dimension, for example, the presence of piped water, flush toilet and electricity; in the dwelling dimension, whether the structure is made of bricks, and is owned; and in the connectivity dimension, highest education, and ownership of cellphone or television. The other seven dimensions appear to be more clearly reflective, containing items regarding satisfaction—with family, health, security, work etc., or overall.

On closer inspection, however, nearly all the ten stipulated dimensions are actually a mixture of reflective and formative items, to a greater or lesser extent: for example, there are seemingly reflective items of satisfaction within the infrastructure and dwelling dimensions, and conversely, formative elements of employment status and income in the work-satisfaction dimension. How should such combinations have best been handled? In effect, since the assignment of variables into dimensions as well as their combination into an index were originally stipulated by the developer and then treated additively, both the measurement models and the 10-dimensional structural model were effectively formative.

The challenge, however, for our QoL index revision was how to proceed in regard to this reflective/formative issue. It will be seen in Sect. 4 below that, not unusually, we treat the relation of measures to dimensions, and also of dimensions to index, reflectively. As with the adoption of an appropriate theoretical perspective above, so with our selection and construal of variables: the route we adopted was necessarily pragmatic, but again has methodological support, precedent, and a philosophical foundation. Pragmatically, the given selection of 60 subjective and objective indicators we were revisiting was fortunately extensive; relevant in coverage, having been informed by the prior review (Jennings, 2012); and covering, as noted just above, a spread of putatively formative or reflective items.

Methodologically the overall reflective approach that we take on the reflective/formative issue accords with the position of Edwards (2011) and Simonetto (2012). They distinguish five objections to the use of formative indicators, which quite largely follow from the respective definitions elaborated above. The first is dimensionality: a reflective latent construct is unidimensional, in that its indicators are highly correlated, and therefore replaceable. But formative indicators represent distinct aspects of the index they comprise, with low correlation. This renders the index conceptually ambiguous. Secondly, internal consistency is a prerequisite for the indicators of a reflective construct, in that they correlate; but a problem for formative indicators, in that multicollinearity becomes problematic the more consistent they may be. Thirdly, a reflective measurement model is identified provided it has three or more indicators; but a formative construct needs ‘to be inserted into a larger model’ (Simonetto, 2012) involving one or two reflective indicators, which may well affect its loadings and its meaning. Fourthly, reflective measurement models incorporate errors of the indicators, whereas formative models implausibly assume that these are error free. Finally, the construct validity of a reflective measure is based on the extent of the loadings of its measures on the latent construct, as evidenced for instance by Cronbach’s alpha. But such measures for formative constructs are altered by the additional indicators required for identification.

These advantages of the reflective over the formative approach may also be empirically sustained. As a counter to the experiment mentioned earlier of Diamantopoulos and Siguaw (2006), a full path-model study (von Stumm et al., 2013) measuring life-course pathways to psychological distress found that the formative model underestimated path coefficients, and there was difficulty in finding suitable identifier variables; whereas the reflective model was more sensitive, and had better fit.

We may also invoke precedent. Among the studies mentioned at the end of Sect. 3.3, Ihsan and Aziz (2019) notably use eigenvalues from their second-step factor analysis, i.e. of reflective dimensions, to weight the aggregation of their final quality of life score. And in South Africa Greyling and Tregenna (2020), considered above, moved in their successive analyses of the GCRO QoL I data from their earlier use of principal components analysis, consonant with formative measurement models, to using exploratory oblique factor analysis, i.e. presuming reflective indicators, to check the unidimensionality of their hypothesised dimensions; plus subsequent confirmation of the validity of the reflective dimensions using confirmatory factor analysis (CFA).

Finally, philosophically, this decision has a powerful implication. It makes easy sense with a psychological latent construct like depression that changes in the construct ‘cause’ its observable indicators to change. But surely the direction of causality is the other way around with SES? Not necessarily! This is where the standpoint of critical realism becomes relevant. We briefly take this up in the next section, including its relation to the exploratory approach broached in the previous section.

On all these grounds, we believe it is defensible to resort to a reflective extraction both of dimensions and of the ensuing one-number index. In Sect. 4, Methodology we describe in Sect. 4.2 our resulting methodology of multivariate analysis. The other steps of the OECD checklist are taken up in the remainder of the section.

3.5 Philosophical Foundations of Competing Approaches

We noted earlier the remark of Greco et al. (2019) that ‘each choice made for the construction of a composite index appears to be “between the devil and the deep blue sea”.’ In treading this perilous path, we defended our choice of starting with a boldly exploratory use of EFA, in Sect. 3.3. This entailed treating our dimensions and their constituent indicators as reflective, as argued in Sect. 3.4. As promised, the present section now signposts the competing standpoints in philosophy of science underpinning what we called the classical approach and our alternative—and indeed their rapprochement, if only in a final methodological step.

In Sect. 3.3 we noted that the ‘classical’ approach to compiling a composite multidimensional indicator applies, albeit in compacted form, the hypothetico-deductive philosophy (Popper [1935], 1959) of scientific theory testing. Having established their hypotheses—based on the prior literature or intuition—scientists deduce predictions that are testable against observable evidence. In this case, as we saw for Greyling and Tregenna (2020), the a priori configuration of six dimensions and their constituent indicators simultaneously provided the overall hypothesis and its testable implications. After the indicator assignments were separately tested for unidimensionality with EFA, the fit or otherwise of the overall CFA against the data, using standard criteria, provided the test of the configuration.

Our approach, by contrast, was informed by the equally venerable (e.g. Tukey, 1969) philosophy of exploratory enquiry. It basically asks the question, ‘What is going on here?’ (Behrens, 1997). This may be because of lack of relevant literature to inform prior hypotheses in a new field; or, as in our case, because we wished to allow for the possible effects of a context with highly uneven levels of development on indicator salience and dimension formation, rather than assume that influential quality of life measures primarily in the developed North would apply. The exploration begins with the data, for which we had the very large QoL V survey sample, including a generous set of 60 objective and subjective quality of life-related indicators. The exploratory enquirer fits tentative models with appropriate statistical techniques, in our case EFA, to supply configurations of dimensions with constituent indicators; and then seeks to improve their fit and interpretability by an iterative process of model re-specifications. For EFA this is constrained by a number of established criteria for a defensible number of dimensions.

In other words, exploratory enquiry is a mainly process of theory-generating. But to avoid over-fitting and Type I error, the enquirer expects (resorting to the hypothetico-deductive approach) to test the finalised model: on a ‘hold-out’ sub-sample, or more recently ‘bootstrapping’, or best of all a matching independent data-set. In our case, in addition to applying CFA on the modelling data, we had available the earlier QoL IV and III datasets, against which to check the model for configural invariance.

In practice, the CFA furnishes ‘modification indices’, which allow the hypothetico-deductivist some exploratory adjustments, provided they are minor, to ‘save the hypothesis’ (Lakatos, 1980). For the exploratory enquirer, as we saw, incorporating these adjustments in their last model is merely a further final stage in exploratory model-building, following their CFA test. This momentary rapprochement does suggest that in the stage of building a composite index in this way, the scientist expects to move through phases of both explanatory inquiry and hypothetico-deductivism: ‘hypothesis driven and data-driven science are not in competition with each other but are complementary and best carried out iteratively’ (Kell & Oliver, 2004).

Finally, there is the matter of critical realism, raised in Sect. 3.3, where we drew on Edwards (2011) for the position by which seemingly formative indicators are seen as actually reflective of latent constructs existing in in the world. This position is a corollary of realist philosophy of science (Gorski, 2013). On this view, SES—or, perhaps less surprisingly—social classes, economies, ideologies, agency, and so on are real entities that have ontological existence separately from their manifestations, measures, and our thought about them. This view crucially meets ‘the prerequisite for causality … that the variables involved refer to distinct entities’; whereas with formative constructs ‘one variable is part of another, [and] then their association is a type of part-whole correspondence, not a causal relationship’ (Edwards, 2011).

But realism does immediately pose the considerable challenge of researching the mediations relating theories and their empirical refractions to the ontology (Archer et al., 1999). A proposal particularly suitable to our approach, and our hopes for its policy impact, sees an adaptation of ‘grounded theory’ (Glaser & Strauss, 1967), a particular form of iterative exploratory enquiry, ‘providing a method for critical realism’ (Oliver, 2011).

4 Methodology

In the previous Theory section, we covered the first two points of the OECD checklist, theoretical framework and data selection, within the context of revisiting GCRO’s QoL index. We are positioned to take forward a two-step reflective analysis, drawing on 61 indicator variables listed in “Appendix”. The original 58 categorical variables were augmented with three new, longitudinally available, user-relevant variables from QoLs III to V (denoted r1, r4 and r5 in “Appendix”); and one variable, the participant’s rating of their overall quality of life, was removed to serve as a possible reference indicator. This left a total of 60 variables which were considered in our analysis.

As before, there are choices to be made among possible techniques at each juncture (Greco et al., 2019; Maggino & Zumbo, 2012). In this section we give a methodological account of how we handled the next five points of the OECD checklist: comparability of variables, imputation of missing data, multivariate analysis, weighting and aggregation, and our validation exercise in lieu of a sensitivity analysis. We shall touch on the last point, identification of main drivers, in Sect. 6, following the Results section.

4.1 Comparability of Variables

All the variables in the questionnaire were categorical: either dichotomies (0 or 1), or three- or five-point Likert scales. Variable coding was reversed where necessary so that higher values indicated increasingly positive responses. The 3 and 5-point scales were re-set to start from 0, like the dichotomies. In contrast with the original indexing, they were not dichotomised, in order to respect the nuances in the data. This work was conducted using SPSS v27.

4.2 Missing Values

Structurally missing values (e.g. missing values arising from ‘inapplicable’ questions such as a question on marriage satisfaction for single people) were set to the neutral mid-point for Likert scales. Values which could be classed as missing at random (i.e. where respondents said ‘Don’t know’ or declined to answer, or the fieldworker simply overlooked a question) were imputed using the ‘missForest’ software package in R (Stekhoven & Bühlmann, 2012), a non-parametric multiple imputation method suitable for categorical data.

The process of imputing the variables with missing values was desirable to avoid pairwise or listwise deletion of variables during computation of the correlation matrix for the EFA. It also prevented changes in sample size as variables were removed during EFA. For example, the variable w6, ‘Total monthly household income’, had missing values for approximately 35% of observations, meaning that listwise deletion would have resulted in a substantial reduction in sample size.

4.3 Multivariate Analysis

4.3.1 Exploratory Factor Analysis (EFA)

As discussed in Sect. 3.3, our two-stage approach to the use of factor analysis was guided jointly by our preference − based on theoretical, empirical and philosophical grounds —for a broad exploratory approach to both the selection of indicators and the population of factors (dimensions); and for reflective rather than formative measurement as discussed in Sect. 3.4. The EFA technique groups correlated observed variables, or ‘indicators’, into distinct unobserved dimensions which are also referred to as latent constructs (Stanojević & Benčina, 2019). The obtained latent constructs are then assumed to ‘cause’ the observed variables in the respective dimensions; equivalently, the observed variables ‘reflect’ the respective latent constructs. The correlation obtained between a variable and its causal factor is referred to as factor loading (Cooper, 1983). Ideally, each indicator loads strongly on only one dimension. However, dimensions are likely to be correlated in practice, as they are in the social world to varying extents. In factor analysis, the particular cross-loadings can be greatly reduced by invoking oblique rotation of the dimensions (Plucker, 2003).This makes factor analysis the method of choice in the construction of a composite QoL index.

By contrast, principal components analysis (PCA) seeks, ideally, a single principal component that is the linear combination of variables that explains the most variance in the data. It is consonant with the conception of lightly correlated indicators formatively contributing to an outcome, typically a multivariable index. However, more than one principal component (dimension) may be extracted in PCA, in which case the dimensions are by definition orthogonal and uncorrelated (Howard, 2016). This orthogonality is retained by the choice of rotation, e.g.varimax, that may be undertaken to ease interpretation.

In EFA, the characterisation of the dimensions is suggested by the one or two highest loading variables, and the process of retaining variables, including the choice of a minimum cut-off, is then informed by how variables load and also how they contribute to the meaning of the dimension under which they fall. For these reasons our choice departed from the use of PCA by, for example, Ihsan and Aziz (2019), as well as Greyling (2013) and her precedent in Nicoletti et al. (2000). But our comprehensive exploratory application of EFA also departed from the later approach of Greyling and Tregenna (2020), who confined their use of EFA to confirm or refine the unidimensionality of the dimensions and contents that they had selected previously, on the basis of prior specifications of dimensions in use in, primarily, the Global North.

To accommodate the categorical nature of variables, our EFA used a polychoric correlation matrix, like Verdugo et al. (2005). The most frequently used methods of extracting the factors in a factor analysis include the maximum likelihood method and principal axis factoring, which are suited for continuous (i.e. interval and ratio) variables. Given that the data employed in this study are categorical (i.e. binary and ordinal), weighted least squares (WLS) was the appropriate method of factor extraction. Oblique rotation was applied to allow for the correlation between dimensions, using the oblimin variant.

4.3.2 The Number of Dimensions

Four criteria were considered to determine the optimal number of dimensions, or factors (Afifi et al., 2019). Firstly, on the Kaiser criterion, dimensions with eigenvalues equal to or greater than one are retained. Secondly, parallel analysis compares eigenvalues obtained through factor analysis on the actual dataset and on a similar dataset populated with random numbers. Dimensions with higher eigenvalues from the actual dataset compared to their equivalent from the randomised dataset are then retained. The third criterion examines a scree plot for the ‘knee’. The fourth criterion is the conceptual coherence and interpretability of dimensions, given the distribution of variables among them.

4.3.3 Rationalising Indicators Per Dimension

In the model with the chosen number of dimensions, all variables with absolute factor loadings greater than or equal to 0.35 were considered for retention (Howard, 2016). Then, following Kenny’s (2016) recommendations for desirable numbers of indicators per dimension, all variables with loadings greater or equal to 0.5 were retained. Where there were fewer than five such variables in a dimension, additional variables with factor loadings less than 0.5 but greater than or equal to 0.35 were additionally retained, from highest factor loading to lowest, until the dimension included five variables, or until all variables with factor loadings of 0.35 or above were included.

The factor analysis yielded fit statistics and also an eigenvalue for each factor (dimension), to be used in weighting the dimension scores in the second stage, the aggregation of the index. The established criteria for an ‘excellent’ model (Brown, 2015) are root mean square error of approximation (RMSEA) < 0.05 and root mean square residual (RMSR) < 0.05; and comparative fit index (CFI) and Tucker-Lewis index > 0.95. However, earlier, less stringent criteria are taken as ‘good’ for more diverse, non-psychological models: < 0.06 and > 0.90, respectively. Our fit statistics were assessed against these criteria.

4.3.4 Testing the Dimensions’ Construct Validity

After factor analysis has identified the dimensions and the contributing variables have been established, Cronbach’s alpha is useful in testing the separate internal consistency of each dimension (Tavakol & Dennick, 2011), i.e. the extent to which the constituent variables measure the same concept. Cronbach’s alpha runs from 0 to 1, and a value of 0.7 or more is typically considered acceptable for uses such as testing a relatively homogeneous psychological scale for a latent variable. However, given the great diversity of indicators, we allowed for a marginal zone down to 0.6.

4.3.5 Checking EFA Model Fit with Confirmatory Factor Analysis (CFA)

Following the EFA establishment of dimensions and the refining of their indicator selections, the overall measurement model was tested by CFA, initially against the same GCRO QoL V data (Greyling & Tregenna, 2020), and then subsequently using the data from earlier iterations of the GCRO QoL survey (see Sect. 4.5). CFA is a type of structural equation modeling which requires the analyst to specify the model’s parameters a priori based on prior knowledge about the sample data (Brown, 2015). These parameters are estimated to predict a variance–covariance matrix which approximates the variance–covariance matrix of the sample data (Brown, 2015). The aim is to confirm that there is construct validity. Different levels of invariance can be tested across samples and also over time (Liu et al., 2017). This study sought to check ‘configural invariance’, which tests the assumption that the pattern of indicators among the dimensions has remained the same over successive QoL surveys.

We implemented all of our EFA and CFA in R.

4.4 Weighting and Aggregation

The composite QoL index was computed from the overall model in the following three steps:

4.4.1 Scaling of the Variables

All non-dichotomous variables were re-scaled to range from 0 and 1. For example, the variable ‘Total monthly household income’ (w6 in “Appendix”), a Likert scale ranging from 0 to 4 (after re-setting its start value to 0), was divided by 4. As monotonic transformations of variables, re-scaling did not alter the distribution of the original dataset.

4.4.2 Computation of the Weighted Dimension Scores

Each dimension score was calculated by weighting each of its transformed individual variables with the factor loading of that variable, then summing, and finally re-scaling the dimension total to run from 0 to 1.

In mathematical notation, the computation of dimension score \({D}_{s}\) is given by the expression (1) below.

where \({I}_{si}\) = the value of indicator i scaled to 0–1, \({f}_{si}\) = the factor loading of indicator i in dimension s, \({n}_{s}\)= total number of variables within dimension \({D}_{s}\).

4.4.3 Aggregation of the Dimension Scores into the QoL Index

The overall QoL index was calculated by weighting each of the dimension scores with its eigenvalue, then summing the weighted dimensions scores, and finally re-scaling the total to run from 0 to 100.

In mathematical notation, the computation of the overall index score is provided by the expression (2) below:

\({E}_{s}\) = eigenvalue of dimension s, m = total number of dimensions (in this case m = 7). The use of the value 100 as the numerator in this formula serves to scale the score to run from 0 to 100. The calculations outlined above were completed in SPSS.

4.5 Validation Exercise

The overall model validated by CFA on the QoL V data was then checked for ‘longitudinal configural invariance’ (Byrne, 2012) against the datasets for the two preceding GCRO QoL surveys—IV (2015/16) and III (2013/14). This checks whether the number of factors and the location of indicators is retained. The next level of invariance, ‘construct-level metric invariance’, of factor loadings and thus of the respective dimension scores, was not tested. Such changes, and the resulting change in the overall quality of life score, were expected; indeed, the examining thereof was much of the purpose of repeating the QoLs. Accordingly, in the runs against QoLs III and IV, parameters were freely estimated each time. Given the size of the model, minor adjustments were anticipated for convergence to be achieved. Consequently, we were able to calculate Index scores for QoLs III and IV using the model that was generated using QoL V.

5 Results

5.1 Modelling of QoL Indicators and Dimensions

5.1.1 Original EFA on 60 Variables

EFA with oblimin oblique rotation was applied to the re-coded data with imputed missing values for the 60-variable dataset for QoL V listed in “Appendix”.

5.1.2 Number of Factors

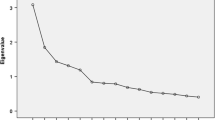

The four criteria for likely numbers of factors were examined. The Kaiser criterion, retaining dimensions with eigenvalues equal to or greater than one, supported a seven-dimension model. Parallel analysis, retaining dimensions with higher eigenvalues than their equivalent from the randomised dataset, preferred 16 dimensions. The ‘knee’ in a scree plot suggested seven dimensions.

Regarding conceptual coherence, models for 8, 9 and 10 factors were examined in case this extended insight. But this mainly had the effect of adding low-loading variables to the robust 7 factor solution. Despite some scepticism in the literature about the Kaiser criterion (Howard, 2016), and given the corroborating result from the scree plot, 7 dimensions were tentatively adopted. They are shown in the column of Table 1 marked ‘7 factor’, with the standardised loadings of their component indicators.

Application of Kenny’s (2016) recommendations, mentioned above, improved the parsimony by retaining all variables with loadings > 0.5, but augmenting factors with fewer than five such loadings with additional variables with loadings > 0.35. Following this process, a set of 33 variables remained.

5.1.3 Reduced EFA on 33 Variables

A follow-up EFA specifying seven dimensions was run on this reduced set of variables, to provide the factor loadings of the indicators on their respective dimensions that would be used in the stage 1 computations of the dimension scores; and also the eigenvalues for use as weights on those scores in the stage 2 computation of the overall QoL index score. The final dimensions and factor loadings are shown in Table 1, in the column ‘Final 7’ (Table 2). The eigenvalues for each dimension are provided in Table 2.

On the characterisation of Brown (2015), the fit statistics of this model were ‘good’ for RMSEA, 0.055, and excellent for RMSR, 0.02. Its TLI, 0.886, was a little short of the ‘good’ value of 0.9. This may be considered adequate in a large model with conceptually varied dimensions. (In Table 3 below, it will be seen that the CFA conducted with this model had excellent RMSEA and TLI, but only good RMSR, 0.058).

When the anomalous parallel analysis test was checked against the reduced set of 33 variables, it now suggested 8 factors. But it is seen in Table 1 that an 8th factor added only one variable ‘Dwelling owned’, rather surprisingly in the ‘Political Engagement’ dimension. Hence, the 7 factor solution remained optimal.

5.1.4 Cronbach’s Alpha

Application of Cronbach’s alpha to each of the 7 dimensions shows that, in this reduced 33-variable solution, three dimensions have alpha > 0.7, and three are > 0.6, our suggested criterion of internal consistency for such a variegated model. Only the 7th dimension, political engagement, is < 0.6, reflecting that two of its four factor loadings are < 0.5 but > 0.35.

5.1.5 Testing Model Fit with Confirmatory Factor Analysis (CFA)

Following development of the 33-variable, 7-factor model, this was then tested against the same GCRO QoL V data using CFA. Results for this model are presented in the top line of Table 3.

The table also shows the fit results of a test for overall configural invariance (Byrne, 2012), i.e. whether the number of factors and their respective indicators from QoL V fitted the datasets from QoL IV and QoL III. For QoL IV, only one variable among the 33—indicated by modification indices to be p10, ‘Attended community development forum’—had to be dropped for convergence to be achieved. In QoL III, variable h1, ‘Health status in the past 4 weeks’ had not been included in the questionnaire. Given these small variations, Table 3 shows that according to RMSEA and CFI the fit of the model was ‘excellent’ on the two earlier data sets, and ‘good’ on RMSR. This licensed the absolute comparison across the scores of the Index as calculated using data from all three survey iterations.

5.1.6 Oblique Rotation

The need for oblique (oblimin) rotation in the EFAs is confirmed in the co-variations among dimensions obtained from the CFA, shown in Table 4. All the significances were p < 0.001 except for that between Political Engagement and Government Satisfaction, p = 0.063, and between Infrastructure and services and Health, p = 0.165.

5.2 Comparison of Configurations Between the Original and Factor-Analysed Approaches

The differing composition of the dimensions, between the original, 10-dimension, 60 variable model and the factor-analysed, 7-dimension, 33 variable model is shown in Fig. 2.

Obviously, the numbers of variables in cognate dimensions will mostly differ in two such different models. But the placing of variables is sometimes revealingly different, showing how the use of the EFAs exposes conceptually plausible patterning in the data that undercuts the original, stipulative approach. These shifts are taken up in the Discussion section.

5.3 Comparison of Index Scores Between the Original and Factor-Analysed Approaches

Aggregate QoL index scores for various demographic groups using the original 10-dimensional approach (Everatt, 2017) and the 7-dimension factor-analysed approaches are compared by population group and gender in Table 5. (The original QoL index scores were historically presented as scores out of ten. For ease of comparison, these have been scaled to scores out of 100.)

At the aggregated QoL index level across large numbers of variables (58 and 33 respectively), it is not surprising that the differences between the original and new indices are numerically slight. The trends—slightly differing improvements of overall quality of life—are sustained. However, when the scores are broken down by municipality, differences over time emerge which may be important in considering their relative progress. The differences are most clearly seen in terms of rankings of the municipalities, as in Table 6.

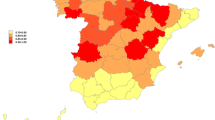

Most of the changes between the original and factor-analysed methods are by one rank position. In the two earlier QoLs there are some changes by two positions, e.g. in QoL IV, Merafong City from 7 to 5th. The rankings of Ekurhuleni are different for the two methods in all three survey iterations, moving from 3rd to 2nd to 4th over the last three QoLs, rather than 5th to 3rd to 3rd. Only the City of Tshwane’s rankings were unaffected by the choice of method: a deterioration from 1st to 4th to 6th. These rankings are graphed, for the new approach, in the Discussion section.

6 Discussion of Results, Philosophical Foundations, and Limitations

This section consists of three parts. In the first part we consider some selected salient results of the revised QoL index based on our all-reflective, 2-stage, weighted, EFA using the QoL V data, and subsequently validated through CFA on earlier datasets. We make comparison between the original GCRO approach and our new analysis. This also allows us to point to selected ‘drivers’ for future research, our last duty to the schema from the OECD Handbook (2008).

In the second part, as promised in Sects. 3.3 and 3.4, we contrast our methodological sequence with that of a closely relevant and recent South African study using the QoL I dataset, by Greyling and Tregenna (2020). We indicate the consequent, marked difference in dimensions that arise empirically. Finally, in the third part we touch on some limitations and strengths of our analysis.

6.1 Salient Results

Beforehand, we may recall the seven dimensions of our model, drawn on 33 of the 60 selected quality of life indicators available in GCRO’s QoL V (2017/18) dataset. The distribution of indicators in the original model and in our factor model was shown in Fig. 2. It was noted there that using factor analysis to determine the actual import of several indicators from respondents’ replies through factor analysis revealed notable differences from the assignment of indicators to dimensions in the original model by the developer. ‘Having medical aid’ was a telling example, actually functioning in the EFA-based model as the strongest indicator in the SES dimension (because of its relation to formal better-paid employment), rather than in Health as allocated in the original model. This poses a challenge for the theory- and literature-based approach championed by the OECD (2008), to which we return below.

The dimensions (and rounded eigenvalues) of the revised model, from Table 2, are: Infrastructure and Services (3.8), Government Satisfaction (2.6), Health Status (1.9), Safety (1.8), Life Satisfaction (1.7) and Political Engagement (1.4). All but Health Status have four or more indicators, while all but Political Engagement have nearly all their indicators loadings > 0.5. That the highest eigenvalue is nearly three times the lowest is a reminder that applying them to weight the dimension scores in aggregating them (like Ihsan & Aziz, 2019) is a substantial adjustment to not weighting them, as in the original approach. Of the latter approach, Chowdhury and Squire (2006) remark that.

This is ‘obviously convenient but also universally considered to be wrong.’ Further, against the objection that choosing one over another weighting approach is subjective, Greco et al. (2019) note that not weighting is indeed also subjective.

In Table 3 in Sect. 5.1 we reported empirical evidence based on CFA validity checks across survey iterations, showing configural invariance of dimensions and their indicator content over time. Consequently, we are encouraged to examine trends in the revised QoL index scores over time. As the original index was extensively used for comparison over time, it is appropriate to explore the variation in the longitudinal trends derived from use of each model. For convenience, results first presented in Sect. 5 are illustrated here with graphs. The solid lines in Fig. 3, drawn from Table 5, show the difference over time between the original and the factor-analysed overall QoL index scores. Given the aggregation over a large number of indicators common to both, it is to be expected that the differences at overall index level are small: at most 1.7 points at QoL IV. But these small differences are noteworthy given the very large samples. Figure 3 also shows the differences by sex over time.

On the original index, shown by the lower set of three lines, it appears that females’ quality of life, from being 1 point behind males’ at QoL III, had risen to slightly exceed that of males by QoL IV, and then fell back by QoL V. However, according to the new factor-based index, shown by the upper set of three lines, females’ quality of life increased more sharply from QoL III to IV by 2.8 points, clearly to exceed that of males; so that, although it fell back, it still equalled that of males in QoL V. Further research could show which dimensions were responsible for the sharp initial improvement—a matter of considerable relevance in South Africa, where, for example, representation by sex has been legislated at all levels of government (Vyas-Doorgapersad & Bangani, 2020).

Thus sex is one ‘driver’ (OECD Handbook 2008) of index differences, albeit not a strong one. In post-apartheid South Africa, unsurprisingly, another driver is race. Using the factor index, Fig. 4 reflects the different levels, and different rates of improvement over time, among the four main population groups. White people have the highest average score, while Black African people have the lowest. Disturbingly, the overall quality of life gap between them is also still widening over time. (This was also evident in the original index.) Even though some QoL indicators show improved satisfaction with national government, others such as sanitation and health care have not, and some have turned downwards. The interplay between indicators suggesting progress, and those suggesting growing challenges, needs to be differentiated and further examined over time.

Another evident driver is the separate municipalities’ reported organizational performance. Table 6 above, not graphed, showed how municipal rank orders differed at each point in time according to original and factor index scores. There were five such changes among the nine municipalities in QoL III, six in QoL IV, and two in QoL 5. Most were changes of one place in the ranking, but in a few cases there were changes of two places, and in one instance three. This positioning is accorded prominence by the Premier of Gauteng in response to QoL survey launches, and the extensive ensuing newspaper publicity (Sunday Times, 2018)—much as with analogous rankings in Italy (Lun et al., 2006). The empirically selected and validated configuration in the factor index will help to obviate debate about changes in rank.

Changes over time in municipalities’ seven scores for the constituent dimensions can also encourage investigations of the reasons for noteworthy changes, as seen in Fig. 5. The changes are quite striking. Notably, the initially highest index scores of Johannesburg and Tshwane at QoL III were exceeded just four years later, by the time of QoL V, by very rapid increases by Midvaal, from a low base. From a better base Lesedi, Emfuleni and Merafong also improved, but more gradually, and remained comparatively low. Rand West fell back at QoL V; and Tshwane actually fell back at QoL IV, and failed fully to recover by QoL V. This regress in Tshwane in 2016 evidently reflected citizen reactions to the widespread rioting and looting in Tshwane following the imposition by the ruling party of an unpopular executive mayor. (Maverick, 2016).

Municipal management, and monitoring by the provincial government, can be informed in even greater detail by the changes at indicator and dimensional level—for instance, respondents’ assessment of the objective provision of services in the Infrastructure dimension, and their subjective satisfaction with the municipality in the dimension of Satisfaction with Government. These two dimensions were seen to have the second highest covariance, in Table 4.

6.2 Limitations

This study escapes some limitations common in the literature, such as those of the representivity and adequate size of the sample. Nonetheless, it has a number of limitations. The first of these is that the 60 candidate indicators listed in “Appendix”, and the approach taken to their measurement, were a given—for reasons of economy and comparability. Sound practice would have reviewed their suitability. But the list was extensive, and informed by a review of previous developmental surveys. Certain objective variables, for example related to housing, were perhaps under-represented. Unfortunately, inclusion of new variables in future survey iterations, and incorporation into an index, would require adjustments to weighting and aggregation, in turn invalidating direct comparison with index scores from earlier survey iterations.

Secondly, because of the original mis-coding of variables, and the smaller earlier samples, recoding for use in the extra CFAs was taken back only to QoLs IV and III. However, before that the area sampling was less fastidious (Orkin, 2020) so this is perhaps as well. Thirdly, the successive samples are cross-sectional at the individual level rather than a panel, which would be prohibitively expensive on the necessary scale. But using the largely unchanged wards and municipalities (529 across the nine municipalities) as the unit of analysis, multi-level statistical analysis of trends would be entirely appropriate. In addition, more referencing with external variables to understand trends would be revealing: for example, with the Auditor General’s (2020) five levels of annual audit outcomes.

Against these limitations may be set the evident utility of the index: the great attention to the results by the province’s Premier, municipal governments, and residents; and as a result the many requests by municipal service-delivery departments, for example, for special analyses with which to respond to the Premier’s queries and criticisms.

7 Concluding Remarks

Our proximate purpose, in undertaking a statistically based reworking of the GCRO’s original QoL index, was to correct the misleading data-coding, discard extraneous indicators that are costly to collect, and replace the ad hoc indicator assignments and unweighted original aggregations. Using the QoL V data we were able to provide a parsimonious model—inally consisting of 33 indicators with appropriately weighted aggregations into 7 dimensions and a single QoL score—and establish its configural invariance across the two prior datasets. Additionally, this model may be updated in future, if a CFA fits well and survives validation, by obtaining from it revised weights for dimension scores and the single QoL score.

The indicative results are promisingly revealing, and can prompt a research programme within the GCRO and beyond: for instance, into drivers of the index and its dimensions, as well as dis-aggregations—subjective as well as objective—that will be informative for monitoring and planning.

Our distal purpose was to shape a simple but defensible methodological sequence, based entirely on straightforward recoding of variables, imputation of missing values, and some runs of EFA before use of CFA for validation. All techniques are available in any widely known statistical package, and could easily be replicated: in other provinces of South Africa, in fellow lower and middle income countries in Africa, and beyond.

This approach did prompt some penetrating enquiries by the journal’s reviewers. Answering these required a brisk journey through methodological and finally philosophical questions. Our factor-analytic approach is overtly exploratory, which may not be comfortable to people schooled in prior hypothesizing and subsequent tests. And it in turn presumes that indicators (and scores for the aggregation of dimensions) are all reflective rather than formative, even for indicators such as SES. This posits a known but perhaps unusual philosophical understanding of what one is measuring.

A major benefit of the approach, alongside its coherent statistical simplicity, is its sensitivity to local context. This is highlighted by the discovery in our particular milieu of a separate seventh dimension of bottom-up political engagement or ‘voice’ that complements the dimension of satisfaction with top-down governance, and confirms the importance accorded to such measures by Stiglitz et al. (2009).

Data Availability

The datasets that were analysed in this study, along with related documentation, is available from the DataFirst repository: 2017/18 QoL V dataset: https://doi.org/10.25828/8yf7-9261, 2015/16 QoL IV dataset: https://doi.org/10.25828/w490-a496, 2013/14 QoL III dataset: https://doi.org/10.25828/gn3g-vc93

Code Availability

SPSS and R scripts were used to analyse datasets considered in this study by following the methodology described in Sect. 3. The scripts are available on request.

Notes