Abstract

For many years, journal evaluation systems have centered on impact indicators, so their results do not reflect the academic innovation of journals. To address this issue, this study constructs the Journal Disruption Index (JDI) by measuring the disruption of each article a journal publishes. We first measured the disruption of the articles in 22 selected virology journals using the OpenCitations Index of Crossref open DOI-to-DOI citations (COCI). We then calculated the JDI of the 22 journals from the absolute disruption index (\({D}_{Z}\)) of their articles. Finally, we conducted an empirical study of the differences and correlations between impact indicators and disruption indicators, and of the evaluation effect of the disruption index. The results show the following. (1) Journal rankings based on disruption indicators differ considerably from those based on impact indicators. Of the 22 journals, 12 are ranked higher by JDI than by the Cumulative Impact Factor for 5 years (CIF5), the Journal Index for PR6 (JIPR6) and the average Percentile in Subject Area (aPSA), and for 17 journals the ranking difference between the two kinds of indicators is 5 or more. (2) Disruption indicators and impact indicators are moderately correlated at both the journal level and the paper level. JDI is moderately correlated with CIF5, JIPR6 and aPSA, with correlation coefficients of 0.486, 0.471 and −0.448, respectively. \({D}_{Z}\) is also moderately correlated with Cumulative Citation (CC), Percentile Ranking with 6 Classifications (PR6) and Percentile in Subject Area (PSA), with correlation coefficients of 0.593, 0.575 and −0.593, respectively. (3) Compared with traditional impact indicators, the results of journal disruption evaluation are more consistent with the results of expert peer review.
JDI reflects the innovation level of journals to a certain extent and can help promote the evaluation of innovation in sci-tech journals.



Introduction

At present, scientific and technological innovation has become a decisive factor in a country's core competitiveness. Because sci-tech journals are an important platform for researchers to exchange academic achievements, the way they are evaluated shapes, for better or worse, the value orientation of the research they publish. Since Garfield (Garfield, 1972) proposed in 1972 that “citation analysis can be used as a tool for journal evaluation”, mainstream evaluation systems have been built on citation indicators. Evaluating journals by citation indicators, however, has a problem that cannot be ignored: citation indicators essentially represent a journal's influence rather than its academic innovation. Moreover, external emergencies such as COVID-19 can severely distort citation indicators (Fassin, 2021).

On June 28, 2022, JCR 2021 was officially released; it was the first edition in which publications related to COVID-19 were included in the calculation of journal impact factors. The impact indicators of journals in infectious diseases, intensive care and public health increased significantly. Zhang et al. (Zhang et al., 2022) found that, among the 10 medical journals whose COVID-related papers contributed most to their impact factors, COVID-related papers accounted for about 50% of the impact factor. This phenomenon poses an unprecedented challenge to the existing evaluation system. If evaluation continues to rely on citation indicators, it will keep affecting the development of academic journals, and even of academic research, and will place artificial obstacles in the way of unpopular fields (Waltman & van Eck, 2013) and disruptive research (Du et al., 2016).

As early as 2012, the San Francisco Declaration on Research Assessment (DORA) stated that journal impact factors should not be used as a surrogate measure of the quality of individual research articles (O’Connor, 2022). In February 2020, the Chinese Ministry of Education and the Ministry of Science and Technology issued the notice "Several Opinions on Standardizing the Use of Relevant Indicators of SCI Papers in Colleges and Universities and Establishing a Correct Evaluation Orientation", which states clearly that innovation and actual contribution should be highlighted when evaluating innovation ability and that indicators related to SCI papers should not serve as the direct basis for evaluation. On July 8, 2022, the European Union released the “Reform Research Evaluation Plan”, proposing that the scientific community reject the inappropriate use of journal- and publication-based indicators in research evaluation and that research evaluation rest on qualitative assessment supported by quantitative indicators.

On November 9, 2022, eight Chinese departments, namely the Ministry of Science and Technology, the Ministry of Education, the Ministry of Industry and Information Technology, the Ministry of Finance, the Ministry of Water Resources, the Ministry of Agriculture and Rural Affairs, the National Health Commission and the Chinese Academy of Sciences, issued the "Work Plan for Carrying Out the Pilot Reform of Scientific and Technological Talent Evaluation". The plan highlighted the key problem of how to “set new standards” after “breaking the four onlys” (the tendency to evaluate solely by papers, titles, educational background and awards) (Pan et al., 2022). It is therefore both important and necessary to find a scientific and reasonable method of evaluating journal innovation that has theoretical as well as practical value. To this end, this study constructs the Journal Disruption Index (JDI) by measuring the disruption of journal articles, and empirically examines the differences and correlations between impact indicators and disruption indicators as well as the evaluation effect of the disruption indicators.

Concept and research status of the disruption index

Concept of the disruption index

Innovation is not a new concept. More than 1,000 years ago, it was already mentioned many times in ancient Chinese books and records. For example, “the 50th Biography in the Book of Wei” speaks of “reform and innovation”, and “the Book of Zhou” of “innovation and renewing the old”. It was not until 1912, however, that the Austrian economist Schumpeter (Schumpeter, 1992) first set out the basic connotation of innovation in his classic book “The Theory of Economic Development: An Inquiry into Profits, Capital, Credit, Interest and the Business Cycle”. He regarded innovation as the “establishment of a new production function”, that is, a new combination of production factors and production conditions introduced into the production system, and thereby laid the foundation for research on innovation theory.

Subsequently, to better understand the essence of innovation, Henderson and Clark (Henderson & Clark, 1990) divided innovation into four categories from the perspective of knowledge management: incremental, architectural, modular and radical innovation. In 1996, Christensen and Bower (Bower & Christensen, 1996) of Harvard Business School first proposed the “disruptive innovation theory”. In his book “The Innovator’s Dilemma” (Christensen, 1997), Christensen divided innovation into sustaining and disruptive innovation according to the value networks on which innovations depend, establishing the basic framework of disruptive innovation theory. Disruptive innovation has since become an important paradigm in innovation research.

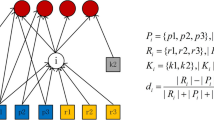

Building on this theory, Huang et al. (Huang et al., 2013) proposed the ‘Disruption Score’ and argued that disruptive research breaks existing citation paths and forms a new research paradigm. Funk and Owen-Smith (Funk & Owen-Smith, 2017) proposed an index that measures technological disruption from the dynamic citation network of patents: it gauges how disruptive a new patent is by measuring its effect on the existing citation network. Wu et al. (Wu et al., 2019) published ‘Large teams develop and small teams disrupt science and technology’ in Nature and proposed the disruption index (D index, formula 1), which measures disruption by how far a focus paper displaces its references in the citation network (Fig. 1; each single arrow denotes a citation, with its tail at the citing paper and its head at the cited paper). Using this index, Bornmann et al. (Bornmann et al., 2020a) studied disruptive papers published in Scientometrics. Horen et al. (Horen et al., 2021), Sullivan et al. (Sullivan et al., 2021), Meyer et al. (Meyer et al., 2021) and Jiang and Liu (Jiang & Liu, 2023a) identified disruptive papers in craniofacial surgery, pediatric surgery, synthetic biology and energy security, respectively. This series of studies has made the innovation evaluation of research papers possible, and the approach is gradually maturing.

$$D=\frac{{N}_{F}-{N}_{B}}{{N}_{F}+{N}_{B}+{N}_{R}}$$(1)

In formula 1, \({N}_{F}\) is the number of papers that cite the focus paper (FP) but none of its references (R), \({N}_{B}\) is the number of papers that cite both the focus paper and at least one of its references, and \({N}_{R}\) is the number of papers that cite at least one reference of the focus paper but not the focus paper itself.
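As a minimal sketch of how the three counts can be derived, the Python below takes a list of (citing, cited) pairs and computes \({N}_{F}\), \({N}_{B}\), \({N}_{R}\) and the D index in its standard form D = (N_F − N_B)/(N_F + N_B + N_R). It assumes the pairs have already been restricted to the citation window after the focus paper's publication, so older papers citing a reference do not inflate \({N}_{R}\); returning 0.0 when all counts are zero is likewise an assumption. The function names are illustrative and not part of any published tool.

```python
from typing import Dict, Iterable, Set, Tuple


def disruption_counts(focus: str, citations: Iterable[Tuple[str, str]]) -> Tuple[int, int, int]:
    """Return (N_F, N_B, N_R) for `focus` from (citing, cited) pairs.

    Assumes the pairs are already limited to the citation window, so papers
    published before the focus paper do not inflate N_R.
    """
    outgoing: Dict[str, Set[str]] = {}
    for citing, cited in citations:
        outgoing.setdefault(citing, set()).add(cited)

    references = outgoing.get(focus, set())  # the focus paper's references R
    n_f = n_b = n_r = 0
    for paper, cited_set in outgoing.items():
        if paper == focus:
            continue
        cites_focus = focus in cited_set
        cites_reference = not references.isdisjoint(cited_set)
        if cites_focus and cites_reference:
            n_b += 1  # cites FP and at least one R
        elif cites_focus:
            n_f += 1  # cites FP only
        elif cites_reference:
            n_r += 1  # cites at least one R but not FP
    return n_f, n_b, n_r


def d_index(n_f: int, n_b: int, n_r: int) -> float:
    """D = (N_F - N_B) / (N_F + N_B + N_R); 0.0 by convention when all counts are 0."""
    total = n_f + n_b + n_r
    return (n_f - n_b) / total if total else 0.0


citations = [
    ("FP", "R1"), ("FP", "R2"),                  # focus paper and its references
    ("P1", "FP"), ("P6", "FP"),                  # cite FP only
    ("P2", "FP"), ("P2", "R1"),                  # cite FP and a reference
    ("P3", "R2"), ("P4", "R1"), ("P4", "R2"),    # cite references only
    ("P5", "X"),                                 # unrelated citation
]
counts = disruption_counts("FP", citations)
print(counts, d_index(*counts))
```

In the toy network, P1 and P6 cite only FP, P2 cites FP and R1, and P3 and P4 cite only references, so the counts are (2, 1, 2) and D = 0.2.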

Data sources for studies related to the disruption index

With the development of computer technology and the rise of the Internet, online citation databases have greatly extended the boundaries of scientometric exploration and improved researchers' ability to conduct large-scale studies. As scientometrics is an important field of the science of science, this improved capacity for large-scale research has greatly enhanced its practical value and policy significance. In the first two decades of the twenty-first century, however, most research relied on commercial citation databases, which offer fast data updates, sustainable operation and rich functionality.

However, the current mainstream international commercial citation databases (such as Web of Science and Scopus) usually do not allow researchers to make unrestricted, in-depth use of their data, nor to redistribute the data obtained from them. This makes it very difficult for scholars working on related research to verify results, study problems from multiple angles and make baseline comparisons (Freese & Peterson, 2017; Vasilevsky et al., 2017). Shotton (Shotton, 2018) pointed out that the citation link data of scientific papers are provided by the papers' authors, so authors who need citation data should be able to obtain them free of charge; yet these data are held by the major publishing houses. This reflects a sharp conflict between database vendors' intellectual property and data disposal rights on the one hand and the academic community's rights to data knowledge and autonomy on the other.

This study needs two main types of data: journal indicator data and citation relationship data. Journal indicator data can be obtained easily through JCR, but obtaining citation relationship data requires a very large amount of work. This study therefore first analyzes the sources of citation relationship data used in related studies based on the disruption index. We surveyed the citation data sources and data acquisition methods of 29 articles that use the D index and related variants to measure disruptive innovation; the results, in chronological order, are shown in Table 1.

Of the 29 papers, 13 used commercial databases and 17 used open data. The main commercial database used by these 13 papers is Web of Science, but the ways of obtaining the data differ. The research teams led by Wu, Li and Bornmann relied mainly on citation data provided by Clarivate (obtained from Clarivate, MPDL and Indiana University, respectively), while Song obtained the data with web crawlers. The remaining papers do not state the source of their WoS data. The 17 papers using open data drew on many kinds of open datasets and databases, including USPTO Open Data, ORCID, APS Open Data, third-party datasets, MAG and PubMed.

Variants of the disruption index

Since Wu proposed the D index, many scholars have improved it and applied it further to specific scenarios (Table 2). As Table 2 shows, however, every variant ultimately depends on the same three parameters, \({N}_{F}\), \({N}_{B}\) and \({N}_{R}\). Therefore, only these three parameters of a focus paper need to be obtained to measure its disruption.

Concept and calculation method of journal disruption index

The above review of research on the D index shows that no study has yet evaluated disruptive innovation at the journal level. This paper therefore identifies the disruption of research papers by measuring how far each research paper (focus paper) substitutes for its references, and then evaluates journals with a new index based on academic disruptive innovation rather than academic influence: the Journal Disruption Index (JDI, formula 2).

In formula 2, n is the number of 'article'-type papers in the journal and \({Dz}_{i}\) is the \({D}_{Z}\) of the ith paper in the journal, i.e., the absolute disruption of that paper.

First, note that although \({D}_{Z}\) is called the absolute disruption index, it is not the absolute value of the D index; the name simply follows the terminology used by the indicator's proposers. Second, \({D}_{Z}\), rather than the D index or another variant, is used to calculate paper-level disruption in JDI for the following reasons. (1) Of the three parameters needed to calculate a paper's disruption, only \({N}_{F}\) reflects the paper's disruption, so the influence of \({N}_{F}\) on the evaluation result should be strengthened. (2) Papers counted in \({N}_{R}\) may differ greatly from the research topic of the focus paper (e.g., a bibliometric focus paper may cite a research paper on a natural-science topic, and the later papers citing that reference are then largely unrelated to bibliometrics), so \({N}_{R}\) should carry appropriately less weight than the other two types of citations. (3) On the tacit assumption that the conclusions of all research papers are scientifically valid, the disruption of any article should be non-negative. Finally, 1 is added in the calculation to avoid computational problems when \({D}_{Z}\) is 0.

Liu et al. (Liu et al., 2020) verified the evaluation effect of \({D}_{Z}\) using journal papers and milestone papers selected by the American Physical Society (APS). As Table 3 shows, \({D}_{Z}\) identifies disruptive innovations better than the original D index. Overall, therefore, \({D}_{Z}\) is the more appropriate choice for evaluating the disruptive innovation of research papers.

Empirical research

Research object

Because, as most scholars agree, the \({D}_{Z}\) index cannot be used for interdisciplinary evaluation, this paper studies only journals in a single discipline, virology. In 2016, Web of Science included 34 virology journals. Since disruptive innovation is measured mainly for research papers, and review articles have no disruptive-innovation attribute, this study excluded journals in which research papers account for less than 50% of all published papers, as well as journals whose citation relationships are not included in COCI (Acta Virologica, Advances in Virus Research, AIDS Research and Human Retroviruses, Annual Review of Virology, Antiviral Therapy, Current HIV Research, Future Virology, Intervirology, Journal of Neurovirology, Retrovirology, Reviews in Medical Virology and Virology). A total of 22 journals were finally included in the study (Table 4). In addition, this study selected Faculty Opinions and the “High-quality Journals Classification Catalogue” published by the China Association for Science and Technology as peer review results against which to compare the disruption evaluation results.

Data acquisition and processing

After surveying related research, this study chose COCI, the main database of OpenCitations, as its source of citation relationship data. OpenCitations is an independent not-for-profit infrastructure organization dedicated to publishing open bibliographic and citation data using semantic technologies. It is closely associated with the Initiative for Open Citations (I4OC), launched in 2017.

The purpose of this initiative is to promote structured, separable and open citation data. Structured means that the data representing each publication and each citation instance are expressed in a common machine-readable format and can be accessed programmatically. Separable means that citation instances can be accessed and analyzed without access to the source documents (such as journal articles and books) in which the citations were made. Open means that the data can be freely accessed and reused.

To achieve these goals, COCI treats each citation as an independent data entity (Heibi et al., 2019). This storage model has four advantages: (1) descriptive attributes can be assigned to citations; (2) all information about a citation is found in one place, because it is defined as an attribute of the citation itself; (3) citations become easier to describe, distinguish, count and process; and (4) bibliometric analysis becomes easier.

COCI now contains more than 77 million bibliographic resources and more than 1.463 billion citation links (as of January 2023) across all scholarly subject areas. According to an independent analysis published in 2021 by Martín-Martín et al. (Martín-Martín et al., 2021), the coverage of OpenCitations is approaching that of the two major proprietary citation indexes, Web of Science and Scopus. Compared with the commercial Web of Science database, COCI strikes a reasonable balance between accessibility and domain coverage.

OpenCitations provides four ways to access all COCI data: (1) queries via a SPARQL endpoint; (2) retrieval via a REST API; (3) searches via the OpenCitations search interface; and (4) CSV, N-Triples and Scholix data dumps available on Figshare. Offering several access methods meets the needs of different users in different scenarios and effectively reduces the network load on the service provider. For large-scale computation, the most suitable option is to download the dumps from Figshare and work with them locally (Fig. 2).

Once downloaded from Figshare, the data must be processed before researchers can use them efficiently. Take the CSV dump on Figshare as an example. A CSV file is a plain-text file containing a list of records and is commonly used to exchange data between applications; its value lies in storing, transmitting and sharing data across application environments rather than in direct use. Given the scale of the COCI dataset, converting these files into a local database makes it much easier for researchers to measure disruption at all levels.

Because of Figshare's file storage settings, the COCI dump on Figshare is split into multiple CSV files labeled by date. The data import tool of a visual database management tool such as Navicat can merge these scattered files into a single database. If the research hardware does not support graphical operations, the same result can be achieved directly with local database software such as SQLite, or with a programming language that can manipulate databases, such as Python (using the sqlite3 library).
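A minimal sketch of the Python/sqlite3 route follows. It assumes the Figshare archive has already been unzipped into one directory of CSV files whose header row is `oci,citing,cited,creation,timespan,journal_sc,author_sc` (the seven COCI fields; check the header of the dump actually downloaded). The table name `citation` and the function `load_coci_dumps` are hypothetical choices for illustration.

```python
import csv
import glob
import os
import sqlite3

# Assumed header of each COCI CSV file; verify against the downloaded dump.
COCI_COLUMNS = ("oci", "citing", "cited", "creation", "timespan", "journal_sc", "author_sc")


def load_coci_dumps(csv_dir: str, db_path: str) -> int:
    """Merge every *.csv file in csv_dir into one SQLite table; return its row count."""
    conn = sqlite3.connect(db_path)
    try:
        conn.execute(
            "CREATE TABLE IF NOT EXISTS citation ("
            "oci TEXT PRIMARY KEY, citing TEXT, cited TEXT, creation TEXT, "
            "timespan TEXT, journal_sc TEXT, author_sc TEXT)"
        )
        for path in sorted(glob.glob(os.path.join(csv_dir, "*.csv"))):
            with open(path, newline="", encoding="utf-8") as fh:
                reader = csv.DictReader(fh)
                # Stream rows straight into SQLite; INSERT OR IGNORE skips an OCI
                # that already appeared in an earlier file.
                conn.executemany(
                    "INSERT OR IGNORE INTO citation VALUES (?, ?, ?, ?, ?, ?, ?)",
                    ([row[c] for c in COCI_COLUMNS] for row in reader),
                )
            conn.commit()  # commit once per file
        return conn.execute("SELECT COUNT(*) FROM citation").fetchone()[0]
    finally:
        conn.close()
```

Committing once per file keeps memory use modest while avoiding a transaction per row, which matters at the scale of the full dump.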

After the basic format conversion, this study sliced the database into multi-dimensional, multi-level data by attributes such as citation creation time, journal source and author, and indexed the data tables appropriately (Fig. 3). This saves time in the subsequent measurement of focus-paper disruption and further streamlines the measurement process. Given the data scale and the performance requirements of actual research, this setup suits a single researcher or a small team carrying out small-scale disruption measurement. If the researcher's institution can provide high-performance equipment, an in-memory database such as Redis is a better choice for data processing, and such equipment also makes interactive research operations possible (Light et al., 2014).
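One way to realize the slicing and indexing step in SQLite is sketched below. It assumes a `citation` table with the COCI fields and `creation` values that begin with a four-digit year; the index names, the view name `citation_window` and the year bounds are illustrative choices, not the schema used in the study.

```python
import sqlite3


def prepare_citation_table(conn: sqlite3.Connection, first_year: int, last_year: int) -> None:
    """Index both citation directions and add a view for one citation window."""
    # Lookups by 'cited' find who cites a paper; lookups by 'citing' find its references.
    conn.execute("CREATE INDEX IF NOT EXISTS idx_citation_cited ON citation(cited)")
    conn.execute("CREATE INDEX IF NOT EXISTS idx_citation_citing ON citation(citing)")
    # Slice by creation year (first four characters of 'creation').
    # SQLite views cannot take bound parameters, so the years are cast to int
    # before being formatted into the statement.
    conn.execute("DROP VIEW IF EXISTS citation_window")
    conn.execute(
        "CREATE VIEW citation_window AS SELECT * FROM citation "
        f"WHERE CAST(substr(creation, 1, 4) AS INTEGER) "
        f"BETWEEN {int(first_year)} AND {int(last_year)}"
    )
    conn.commit()
```

Called as `prepare_citation_table(conn, 2016, 2020)`, the view exposes only citations created in the five-year window used later for JDI.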

With the COCI data processed, disruption can be measured from the local data. COCI provides 7 fields (Table 5). After selecting the focus papers, researchers can build ‘Journal’ and ‘Article’ tables from the journal information obtained from JCR and the COCI data, and then calculate the three parameters \({N}_{F}\), \({N}_{B}\) and \({N}_{R}\) of each focus paper from its DOI and the ‘Citing’, ‘Cited’ and ‘Creation’ fields in COCI. With these three parameters, the paper-level and journal-level disruption indices follow from their respective formulas. The entity relationship diagram of the local database is shown in Fig. 4.
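The three parameters can be computed with a single SQL query over such a local table. The sketch below is hypothetical: it assumes a `citation` table with `citing`, `cited` and `creation` columns (DOIs as text, `creation` starting with the year), counts citing papers only within a year window such as 2016 to 2020, and leaves out the ‘Journal’ and ‘Article’ tables.

```python
import sqlite3
from typing import Tuple

NFBR_SQL = """
WITH refs AS (            -- references R of the focus paper
    SELECT cited FROM citation WHERE citing = :fp
),
win AS (                  -- citations created inside the citation window
    SELECT citing, cited FROM citation
    WHERE CAST(substr(creation, 1, 4) AS INTEGER) BETWEEN :y0 AND :y1
),
cite_fp AS (SELECT DISTINCT citing FROM win WHERE cited = :fp),
cite_r AS (
    SELECT DISTINCT citing FROM win
    WHERE cited IN (SELECT cited FROM refs) AND citing <> :fp
)
SELECT
    (SELECT COUNT(*) FROM cite_fp WHERE citing NOT IN (SELECT citing FROM cite_r)),  -- N_F
    (SELECT COUNT(*) FROM cite_fp WHERE citing IN (SELECT citing FROM cite_r)),      -- N_B
    (SELECT COUNT(*) FROM cite_r WHERE citing NOT IN (SELECT citing FROM cite_fp))   -- N_R
"""


def nfbr(conn: sqlite3.Connection, focus_doi: str, first_year: int, last_year: int) -> Tuple[int, int, int]:
    """Return (N_F, N_B, N_R) for one focus paper within [first_year, last_year]."""
    params = {"fp": focus_doi, "y0": first_year, "y1": last_year}
    row = conn.execute(NFBR_SQL, params).fetchone()
    return tuple(row)
```

Because only the citing side is filtered by year, the focus paper's references are collected regardless of their age, while papers that cite those references before or after the window are excluded from \({N}_{R}\).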

Comparative indicators

To measure the innovativeness of papers accurately with the disruption index, researchers must wait until the focus paper has accumulated citations for some time. Bornmann and Tekles (Bornmann & Tekles, 2019a) argue that a 3-year citation window is necessary whichever discipline's disruptiveness is measured. In the study by Liu and colleagues (Liu et al., 2021b) on the stable time windows of the disruption index across disciplines, the stable window for biology, biochemistry and immunology, the fields most closely related to virology, is 4 years after publication. To ensure valid results, this paper therefore uses a 5-year citation window for the focus papers (2016 to 2020) when calculating JDI. In addition, to examine the relationship between journal influence and academic innovation, several important journal evaluation indicators were selected for comparison. The comparative indicators are:

(1) Cumulative Impact Factor for 5 years (\({\mathrm{CIF}}_{5}\)). \({\mathrm{CIF}}_{5}\) is the total number of citations recorded in COCI from 2016 to 2020 to the research papers a journal published, divided by the number of those papers. In formula 3, \({a}_{i,t}\) is the number of citations received by the ith research paper of the journal in year t, and n is the number of research papers published in the journal.

$${\mathrm{CIF}}_{5}=\frac{\sum_{i=1}^{n}\sum_{t=2016}^{2020}{a}_{i,t}}{n}$$(3)

(2) Average Percentile in Subject Area (aPSA). In 2015, the InCites database provided the exact percentile of each paper's citation frequency within its subject area (Percentile in Subject Area, PSA). Here, the pth percentile is the value such that p% of the data items are less than or equal to it. aPSA is the mean PSA of all papers in a journal; the smaller the value, the greater the journal's influence. The indicator turns the exact citation percentiles of a journal's citable items into a journal evaluation indicator and can be regarded as an exact percentile indicator (Liu et al., 2021a). In formula 4, \({PSA}_{i}\) is the PSA of the ith research paper in the journal, and n is the number of research papers published in the journal.

$$\mathrm{aPSA}=\frac{\sum_{i=1}^{n}{PSA}_{i}}{n}$$(4)

(3) Journal Index of Percentile Ranking with 6 Classifications (JIPR6). All papers are arranged in ascending order of PSA and divided into 6 percentile segments, (0, 1%], (1%, 5%], (5%, 10%], (10%, 25%], (25%, 50%] and (50%, 100%], whose papers receive Sp scores of 60, 50, 40, 30, 20 and 10, respectively (Bornmann & Mutz, 2011). The mean Sp of a journal's papers is its JIPR6. In formula 5, \({Sp}_{i}\) is the Sp of the ith research paper of the journal, and n is the number of research papers published in the journal.

$$\mathrm{JIPR6}=\frac{\sum_{i=1}^{n}{Sp}_{i}}{n}$$(5)
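Formulas 3 to 5 can be sketched in a few lines of Python. The sketch assumes per-paper citation counts by year and per-paper PSA values (on a 0 to 100 scale, smaller is better) have already been obtained, and it maps each paper's PSA value directly into the six PR6 segments listed above; the function names are hypothetical.

```python
from typing import Dict, Iterable, Sequence

# (upper bound of the PSA segment in %, Sp score), following the six PR6 segments.
PR6_SEGMENTS = ((1, 60), (5, 50), (10, 40), (25, 30), (50, 20), (100, 10))


def cif5(citations_by_year: Sequence[Dict[int, int]], years: Iterable[int] = range(2016, 2021)) -> float:
    """Formula 3: citations each paper received in 2016-2020, averaged over n papers."""
    years = list(years)  # materialize so the years can be reused for every paper
    total = sum(sum(paper.get(t, 0) for t in years) for paper in citations_by_year)
    return total / len(citations_by_year)


def apsa(psa: Sequence[float]) -> float:
    """Formula 4: mean PSA of the journal's papers (smaller means more influential)."""
    return sum(psa) / len(psa)


def sp_score(psa_value: float) -> int:
    """Map one paper's PSA to its PR6 score Sp."""
    for upper, score in PR6_SEGMENTS:
        if 0 < psa_value <= upper:
            return score
    raise ValueError(f"PSA must lie in (0, 100], got {psa_value}")


def jipr6(psa: Sequence[float]) -> float:
    """Formula 5: mean Sp over the journal's papers."""
    return sum(sp_score(p) for p in psa) / len(psa)
```

For example, a journal with two papers whose PSA values are 0.5 and 80 scores Sp = 60 and 10, giving a JIPR6 of 35.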

Statistical processing methods

This study used IBM SPSS Statistics 25 for statistical processing. Kolmogorov–Smirnov normality tests in SPSS showed that the data for each indicator are not normally distributed, so Spearman correlation tests were used to analyze the correlations among the indicators.
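Spearman's coefficient is the Pearson correlation computed on ranks, with tied values given their average rank. The self-contained sketch below shows that computation for two indicator lists; it is illustrative only and is not the SPSS routine used in the study (in Python, `scipy.stats.spearmanr` computes the same coefficient together with a p-value). Because a smaller aPSA or PSA means greater influence, correlations with those indicators come out negative, as in the results.

```python
from typing import List, Sequence


def average_ranks(values: Sequence[float]) -> List[float]:
    """Rank values 1..n in ascending order; tied values share their mean rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    start = 0
    while start < len(order):
        end = start
        # Extend the run while the next value ties with the current one.
        while end + 1 < len(order) and values[order[end + 1]] == values[order[start]]:
            end += 1
        mean_rank = (start + end) / 2 + 1  # positions are 0-based, ranks 1-based
        for k in range(start, end + 1):
            ranks[order[k]] = mean_rank
        start = end + 1
    return ranks


def spearman_rho(x: Sequence[float], y: Sequence[float]) -> float:
    """Spearman's rho as the Pearson correlation of average ranks."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two sequences of equal length >= 2")
    rx, ry = average_ranks(x), average_ranks(y)
    n = len(rx)
    mean_x, mean_y = sum(rx) / n, sum(ry) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(rx, ry))
    sd_x = sum((a - mean_x) ** 2 for a in rx) ** 0.5
    sd_y = sum((b - mean_y) ** 2 for b in ry) ** 0.5
    if sd_x == 0 or sd_y == 0:
        raise ValueError("rho is undefined when one variable is constant")
    return cov / (sd_x * sd_y)
```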

Results and analysis

Comparison of journal rankings based on the disruption index and citation indicators

The 22 journals were ranked by JDI, CIF5, JIPR6 and aPSA (aPSA in reverse order, since a smaller aPSA indicates greater influence); the results are shown in Table 6. Of the 22 journals, 12 (54.5%) are ranked higher by JDI than by \({\mathrm{CIF}}_{5}\), JIPR6 or aPSA (in reverse order), and 17 (77%) show a gap of 5 or more places between their JDI ranking and their \({\mathrm{CIF}}_{5}\), JIPR6 or aPSA (in reverse order) rankings. This shows a substantial difference between journal evaluations based on traditional citation indicators and those based on the innovation of the research papers the journals publish.
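The ranking comparison can be reproduced with a few lines of Python. The sketch assumes each indicator is supplied as a dict from journal name to value, ranks journals so that rank 1 is best (reversing the order for aPSA, where smaller is better) and breaks ties alphabetically; the tie rule and the function names are assumptions, not taken from the study.

```python
from typing import Dict, List, Tuple


def rank_best_first(scores: Dict[str, float], higher_is_better: bool = True) -> Dict[str, int]:
    """Rank journals 1..n with rank 1 = best; ties are broken alphabetically."""
    sign = -1 if higher_is_better else 1
    ordered = sorted(scores, key=lambda j: (sign * scores[j], j))
    return {journal: rank for rank, journal in enumerate(ordered, start=1)}


def compare_with_jdi(
    jdi: Dict[str, float],
    other: Dict[str, float],
    other_higher_is_better: bool = True,
    min_gap: int = 5,
) -> Tuple[List[str], List[str]]:
    """Return (journals ranked higher by JDI, journals whose rank gap >= min_gap)."""
    r_jdi = rank_best_first(jdi)
    r_other = rank_best_first(other, other_higher_is_better)
    ranked_higher = [j for j in jdi if r_jdi[j] < r_other[j]]
    large_gap = [j for j in jdi if abs(r_jdi[j] - r_other[j]) >= min_gap]
    return ranked_higher, large_gap
```

For aPSA the call would pass `other_higher_is_better=False`; the lengths of the two returned lists give counts such as the 12 and 17 journals reported above.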

Correlation analysis of each index

The Spearman correlations among the journal indicators are shown in Table 7. As Table 7 shows, the innovation indicator JDI is moderately correlated with the citation indicators CIF5, JIPR6 and aPSA, with coefficients of 0.486, 0.471 and −0.448, respectively, while the citation indicators CIF5, JIPR6 and aPSA are highly correlated with one another, with coefficients all close to 1. The Spearman correlations among the paper-level indicators are shown in Table 8. As Table 8 shows, \({D}_{Z}\) is moderately correlated with the citation indicators CC, PR6 and PSA, with coefficients of 0.593, 0.575 and −0.593, respectively.

Comparison of evaluation results based on the disruption index and peer review

Article level

Faculty Opinions, the most authoritative peer-review database in global biomedicine over the past 20 years, brings together the work of more than 8,000 leading international experts and serves as a knowledge discovery tool for evaluating published research. Its reviewers are leading experts in the life sciences and medicine who comment on, give opinions on and validate key papers in their fields, and their quality and rigor give researchers confidence in the quality of the papers they recommend. In this study, the 22 journals published a total of 5,566 research papers (focus papers), 140 of which were included in Faculty Opinions. The average \({D}_{Z}\) of all focus papers and of the papers included in Faculty Opinions is shown in Table 9; the average for the Faculty Opinions papers is much higher than that for all focus papers.

Journal level

Since 2019, to help build a system of science and technology journals commensurate with a world science and technology power and to promote the high-quality development of Chinese science and technology journals, the China Association for Science and Technology has guided and supported its national societies in publishing graded catalogues of high-quality domestic and foreign science and technology journals in various disciplines, following the principles of "peer review, value orientation and homogeneity", as a reference for researchers and institutions when publishing papers and conducting academic evaluation. This study selected 13 Chinese SCI journals in two fields, mechanical engineering and environmental science, for verification (relevant indicators are shown in Tables 10 and 11). In each field, the average JDI of T1 journals is higher than that of T2 and T3 journals. Some lower-rated journals have a higher JDI than higher-rated ones, which may be due to differences in the journals' sub-disciplines and in the evaluation habits of the experts from the different societies that grade the journals.

Conclusion

JDI is moderately correlated with traditional journal citation indicators

In this paper, 22 virology journals were measured, and the results show that JDI is correlated to a certain degree with the selected citation indicators. We believe the two different evaluation systems are correlated because the breakthroughs achieved by disruptive innovations drive the rapid development of related research, which in turn generates a large number of subsequent citations to them; at the level of citation indicators, this appears as an increase in citation frequency.

JDI is significantly different from traditional journal citation indicators

This method evaluates the innovation of journals by measuring the disruption of their papers at the level of knowledge structure. Journal evaluation results based on JDI differ considerably from those based on impact indicators. The method brings new ideas to journal evaluation and may help institutions and scholars break free of the constraints of the impact factor and promote the sound development of scientific research, sci-tech journals and the academic ecosystem.

JDI reflects the innovation level of journals to a certain extent

With reference to the peer review results of Faculty Opinions and the high-quality journal classification catalogue published by the China Association for Science and Technology, this study found that both \({D}_{Z}\) and JDI reflect differences in innovation among the measured samples, at both the paper level and the journal level. JDI can therefore serve as a reference index for evaluating the innovation level of journals.

Limitations and prospects

Lack of a gold standard for comparison

Bornmann and Leydesdorff noted that comparing indicators with peer assessments is widely accepted as a way of validating indicators (Bornmann & Leydesdorff, 2013). The academic community currently regards peer review as the best route to evaluating innovation, because it compensates for the inability of bibliometric evaluation to assess the content of journals. However, studies have shown that reviewers' cognitive bias (Truex et al., 2009) and emotional bias (Serenko & Bontis, 2011) can significantly affect evaluation results. Moreover, if too few experts take part, the results may be unreliable (Serenko & Bontis, 2011), whereas if too many take part, the review becomes difficult to organize (Liu & Guo, 2020). Peer review is therefore an imperfect benchmark, and the evaluative performance of JDI still needs to be tested against a true gold standard.

The application details of JDI need to be further explored

As interdisciplinary research grows, journals and the work they publish are becoming increasingly interdisciplinary. However, neither the D index nor its improved variant \({D}_{Z}\) is designed for cross-disciplinary comparison, so JDI, which is built on them, is likewise unsuitable for comparing journals across disciplines. We will study the application of JDI to interdisciplinary fields in future work. In addition, Ruan et al. (2021) pointed out that the more references a focal paper has, the harder it is for the focal paper to supersede all of them and be cited by subsequent papers in their place; conversely, a focal paper with too few references is likely to bias the D index. Bornmann et al. (2020b) argued that the D index should only be calculated for research papers with at least 10 citations and 10 references. However, no empirical study has yet verified how the number of references affects the D index. Resolving this question is therefore crucial to making JDI more usable.
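To make the role of references concrete, the sketch below computes the classic disruption index of Funk and Owen-Smith (2017) and Wu et al. (2019), \(D = (N_i - N_j)/(N_i + N_j + N_k)\), from a citation map. It is not the \({D}_{Z}\) variant used in this study. The optional thresholds mimic the rule of at least 10 citations and 10 references discussed by Bornmann et al. (2020b). The function name and the toy network are hypothetical, and the caller is assumed to have already restricted the map to the focal paper plus papers published after it within the chosen citation window.

```python
def disruption_index(focal, refs_of, min_citations=0, min_references=0):
    """Classic disruption index D = (n_i - n_j) / (n_i + n_j + n_k).

    focal   -- identifier (e.g. a DOI) of the focal paper
    refs_of -- dict mapping the focal paper and each later paper in the
               citation window to the set of works it cites (assumed input)
    n_i: papers citing the focal paper but none of its references
    n_j: papers citing the focal paper and at least one of its references
    n_k: papers citing at least one reference but not the focal paper
    Returns None when a threshold is not met or no paper is counted.
    """
    focal_refs = refs_of.get(focal, set())
    n_i = n_j = n_k = 0
    for paper, cited in refs_of.items():
        if paper == focal or paper in focal_refs:
            continue  # skip the focal paper and its own references
        cites_focal = focal in cited
        cites_refs = bool(cited & focal_refs)
        if cites_focal and not cites_refs:
            n_i += 1
        elif cites_focal and cites_refs:
            n_j += 1
        elif cites_refs:
            n_k += 1
    if n_i + n_j < min_citations or len(focal_refs) < min_references:
        return None
    total = n_i + n_j + n_k
    return (n_i - n_j) / total if total else None


# Hypothetical toy network: F cites R1 and R2; P1 to P5 come later.
refs_of = {
    "F": {"R1", "R2"},
    "P1": {"F"},        # n_i
    "P2": {"F"},        # n_i
    "P3": {"F", "R1"},  # n_j
    "P4": {"R2"},       # n_k
    "P5": {"X"},        # unrelated, not counted
}
print(disruption_index("F", refs_of))
print(disruption_index("F", refs_of, min_citations=10, min_references=10))
```

One way to read the concern raised by Ruan et al. (2021) in this setting: a longer reference list gives later papers more opportunities to cite at least one reference, which tends to raise \(N_j\) and \(N_k\) and thus pull D downward.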

Data acquisition and processing still need improvement

This study calculated JDI from open citation data rather than retrieving citation data directly from commercial databases, which substantially reduces the difficulty of obtaining large-scale citation data (Narock & Wimmer, 2017) and ensures that the research is reproducible. Our procedure for computing the index from citation relationships follows the automated program for calculating the D index from Web of Science data developed by Leydesdorff and Bornmann (2021). Because COCI indexes only DOI-to-DOI citations, references and citations without DOIs are missing from our data, which reduces the accuracy of the journal innovation evaluation to some extent. Future research could obtain more accurate results by combining multiple data sources.
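The coverage gap can be seen in a minimal sketch of loading COCI-style citation pairs. It assumes a CSV export whose `citing` and `cited` columns hold DOIs, following the column names of the COCI CSV dumps, and normalizes DOIs so that letter case or a URL prefix does not split one work into two. The function names and DOIs are hypothetical; a work without a DOI never enters the resulting maps, which is exactly the limitation noted above.

```python
import csv
import io
from collections import defaultdict


def normalize_doi(doi):
    """Lower-case a DOI and strip common prefixes so equal DOIs compare equal."""
    doi = doi.strip().lower()
    for prefix in ("https://doi.org/", "http://doi.org/", "doi:"):
        if doi.startswith(prefix):
            doi = doi[len(prefix):]
    return doi


def load_citation_maps(csv_text):
    """Build (refs_of, cited_by) dicts from CSV rows with 'citing'/'cited' columns."""
    refs_of = defaultdict(set)
    cited_by = defaultdict(set)
    for row in csv.DictReader(io.StringIO(csv_text)):
        citing = normalize_doi(row["citing"] or "")
        cited = normalize_doi(row["cited"] or "")
        if not citing or not cited:
            continue  # only complete DOI-to-DOI links can be represented
        refs_of[citing].add(cited)
        cited_by[cited].add(citing)
    return dict(refs_of), dict(cited_by)


# Hypothetical rows; the last one has no cited DOI and is dropped.
sample = """oci,citing,cited
1-2,10.1000/A,10.1000/B
1-3,10.1000/a,https://doi.org/10.1000/c
4-2,10.1000/d,10.1000/b
5-6,10.1000/e,
"""
refs_of, cited_by = load_citation_maps(sample)
print(refs_of)
print(cited_by)
```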

Data availability

All reference relationship data used in this study can be obtained from opencitations.net.

References

Becerra, A. Z., Aquina, C. T., Hayden, D. M., & Torquati, A. F. (2021). The top 100 most disruptive publications in academic surgery journals: 1954–2014. The American Journal of Surgery, 221(3), 614–617. https://doi.org/10.1016/j.amjsurg.2020.07.037

Becerra, A. Z., Grimes, C. E., Grunvald, M. W., Underhill, J. M., Bhama, A. R., Govekar, H. R., Saclarides, T. J., & Hayden, D. M. (2022). A new bibliometric index: The top 100 most disruptive and developmental publications in colorectal surgery journals. Diseases of the Colon & Rectum, 65(3), 429–443. https://doi.org/10.1097/DCR.0000000000002118

Bittmann, F., Tekles, A., & Bornmann, L. (2021). Applied usage and performance of statistical matching in bibliometrics: The comparison of milestone and regular papers with multiple measurements of disruptiveness as an empirical example. Quantitative Science Studies, 2(4), 1246–1270. https://doi.org/10.1162/qss_a_00158

Bornmann, L., Devarakonda, S., Tekles, A., & Chacko, G. (2020a). Disruptive papers published in Scientometrics: Meaningful results by using an improved variant of the disruption index originally proposed by Wu, Wang, and Evans (2019). Scientometrics, 123(2), 1149–1155. https://doi.org/10.1007/s11192-020-03406-8

Bornmann, L., Devarakonda, S., Tekles, A., & Chacko, G. (2020b). Are disruption index indicators convergently valid? The comparison of several indicator variants with assessments by peers. Quantitative Science Studies, 1(3), 1242–1259. https://doi.org/10.1162/qss_a_00068

Bornmann, L., & Leydesdorff, L. (2013). The validation of (advanced) bibliometric indicators through peer assessments: A comparative study using data from InCites and F1000. Journal of Informetrics, 7(2), 286–291. https://doi.org/10.1016/j.joi.2012.12.003

Bornmann, L., & Mutz, R. (2011). Further steps towards an ideal method of measuring citation performance: The avoidance of citation (ratio) averages in field-normalization. Journal of Informetrics, 5(1), 228–230. https://doi.org/10.1016/j.joi.2010.10.009

Bornmann, L., & Tekles, A. (2019). Disruption index depends on length of citation window. El Profesional de la Información. https://doi.org/10.3145/epi.2019.mar.07

Bornmann, L., & Tekles, A. (2021). Convergent validity of several indicators measuring disruptiveness with milestone assignments to physics papers by experts. Journal of Informetrics, 15(3), 101159. https://doi.org/10.1016/j.joi.2021.101159

Bower, J. L., & Christensen, C. M. (1996). Disruptive technologies: Catching the wave. The Journal of Product Innovation Management, 13(1), 75–76.

Christensen, C. M. (1997). The Innovator’s Dilemma: When New Technologies Cause Great Firms to Fail. Harvard Business Review Press.

Du, J., Tang, X., & Wu, Y. (2016). The effects of research level and article type on the differences between citation metrics and F1000 recommendations. Journal of the Association for Information Science and Technology, 67(12), 3008–3021. https://doi.org/10.1002/asi.23548

Fassin, Y. (2021). Research on Covid-19: A disruptive phenomenon for bibliometrics. Scientometrics, 126(6), 5305–5319. https://doi.org/10.1007/s11192-021-03989-w

Freese, J., & Peterson, D. (2017). Replication in social science. Annual Review of Sociology, 43(1), 147–165. https://doi.org/10.1146/annurev-soc-060116-053450

Funk, R. J., & Owen-Smith, J. (2017). A dynamic network measure of technological change. Management Science, 63(3), 791–817. https://doi.org/10.1287/mnsc.2015.2366

Garfield, E. (1972). Citation analysis as a tool in journal evaluation: Journals can be ranked by frequency and impact of citations for science policy studies. Science, 178(4060), 471–479. https://doi.org/10.1126/science.178.4060.471

Heibi, I., Peroni, S., & Shotton, D. (2019). Software review: COCI, the OpenCitations Index of Crossref open DOI-to-DOI citations. Scientometrics, 121(2), 1213–1228. https://doi.org/10.1007/s11192-019-03217-6

Henderson, R. M., & Clark, K. B. (1990). Architectural innovation: The reconfiguration of existing product technologies and the failure of established firms. Administrative Science Quarterly, 35(1), 9–30. https://doi.org/10.2307/2393549

Horen, S. R., Hansdorfer, M. A., Kronshtal, R., Dorafshar, A. H., & Becerra, A. Z. (2021). The most disruptive publications in craniofacial surgery (1954–2014). Journal of Craniofacial Surgery, 32(7), 2426–2430. https://doi.org/10.1097/SCS.0000000000007804

Huang, Y.-H., Hsu, C.-N., & Lerman, K. (2013). Identifying transformative scientific research. In 2013 IEEE 13th International Conference on Data Mining (pp. 291–300). https://doi.org/10.1109/ICDM.2013.120

Jiang, Y., & Liu, X. (2023a). A bibliometric analysis and disruptive innovation evaluation for the field of energy security. Sustainability, 15(2), 969. https://doi.org/10.3390/su15020969

Jiang, Y., & Liu, X. (2023b). The relationship between absolute disruption index, peer review index and CNCI: A study based on virology papers. Library and Information Service, 67(3), 96–105.

Kaltenberg, M., Jaffe, A. B., & Lachman, M. E. (2023). Invention and the life course: Age differences in patenting. Research Policy, 52(1), 104629. https://doi.org/10.1016/j.respol.2022.104629

Khusid, J. A., Gupta, M., Sadiq, A. S., Atallah, W. M., & Becerra, A. Z. (2021). Changing the status quo: The 100 most-disruptive papers in urology? Urology, 153, 56–67. https://doi.org/10.1016/j.urology.2020.10.073

Leydesdorff, L., & Bornmann, L. (2021). Disruption indices and their calculation using web-of-science data: Indicators of historical developments or evolutionary dynamics? Journal of Informetrics, 15(4), 101219. https://doi.org/10.1016/j.joi.2021.101219

Leydesdorff, L., Tekles, A., & Bornmann, L. (2021). A proposal to revise the disruption index. El Profesional de la Información, 30(1), e300121. https://doi.org/10.3145/epi.2021.ene.21

Li, J., Yin, Y., Fortunato, S., & Wang, D. (2019). A dataset of publication records for Nobel laureates. Scientific Data. https://doi.org/10.1038/s41597-019-0033-6

Liang, G., Lou, Y., & Hou, H. (2022). Revisiting the disruptive index: Evidence from the Nobel Prize-winning articles. Scientometrics, 127(10), 5721–5730. https://doi.org/10.1007/s11192-022-04499-z

Liang, Z., Mao, J., Lu, K., & Li, G. (2021). Finding citations for PubMed: A large-scale comparison between five freely available bibliographic data sources. Scientometrics, 126(12), 9519–9542. https://doi.org/10.1007/s11192-021-04191-8

Light, R. P., Polley, D. E., & Börner, K. (2014). Open data and open code for big science of science studies. Scientometrics, 101(2), 1535–1551. https://doi.org/10.1007/s11192-014-1238-2

Lin, Y., Evans, J. A., & Wu, L. (2022). New directions in science emerge from disconnection and discord. Journal of Informetrics, 16(1), 101234. https://doi.org/10.1016/j.joi.2021.101234

Liu, X., & Guo, J. (2020). Evaluation of Chinese scientific journals: Status, problems and suggestions. Acta Editologica, 32(1), 5–9.

Liu, X., Shen, Z., Liao, Y., & Yang, L. (2020). The research about the improved disruption index and its influencing factors. Library and Information Service, 64(24), 84–91. https://doi.org/10.13266/j.issn.0252-3116.2020.24.010

Liu, X., Shen, X., Guo, J., & Shen, L. (2021a). Application of percentile in subject area of papers in creating journal indicator of percentage rank. Chinese Journal of Scientific and Technical Periodicals, 32(1), 118–124.

Liu, X., Shen, Z., Liao, Y., Zhu, M., & Yang, L. (2021b). Research on the stable time window of disruption index. Library and Information Service, 65(18), 49–57. https://doi.org/10.13266/j.issn.0252-3116.2021.18.006

Liu, X., Yi, B., Li, M., & Li, J. (2021c). Is interdisciplinary collaboration research more disruptive than monodisciplinary research? Proceedings of the Association for Information Science and Technology, 58(1), 264–272. https://doi.org/10.1002/pra2.454

Lyu, D., Gong, K., Ruan, X., Cheng, Y., & Li, J. (2021). Does research collaboration influence the “disruption” of articles? Evidence from Neurosciences. Scientometrics, 126(1), 287–303. https://doi.org/10.1007/s11192-020-03757-2

Lyu, D., Ruan, X., Li, J., & Cheng, Y. (2022). Research on the relationship between the interdisciplinarity and radicalness of papers from the perspective of knowledge integration. Journal of the China Society for Scientific and Technical Information, 41(3), 263–274.

Martín-Martín, A., Thelwall, M., Orduna-Malea, E., & López-Cózar, E. D. (2021). Correction to: Google Scholar, Microsoft Academic, Scopus, Dimensions, Web of Science, and OpenCitations’ COCI: A multidisciplinary comparison of coverage via citations. Scientometrics, 126(1), 907–908. https://doi.org/10.1007/s11192-020-03792-z

Meyer, C., Nakamura, Y., Rasor, B. J., Karim, A. S., Jewett, M. C., & Tan, C. (2021). Analysis of the innovation trend in cell-free synthetic biology. Life-Basel, 11(6), 551. https://doi.org/10.3390/life11060551

Narock, T., & Wimmer, H. (2017). Linked data scientometrics in semantic e-Science. Computers & Geosciences, 100, 87–93. https://doi.org/10.1016/j.cageo.2016.12.008

O’Connor, S. (2022). The San Francisco Declaration on Research Assessment (DORA) in nursing science. Nursing Science Quarterly, 35(2), 275–276. https://doi.org/10.1177/08943184211070602

Osório, A., & Bornmann, L. (2021). On the disruptive power of small-teams research. Scientometrics, 126(1), 117–133. https://doi.org/10.1007/s11192-020-03756-3

Pan, J., Wang, G., & Lu, X. (2022). “Break the four only” and “establish new standards” based on five value orientations. Chinese Science Bulletin, 67(3), 236–241.

Patel, P. A., Patel, P. N., Becerra, A. Z., & Mehta, M. C. (2022). Bibliometric analysis of the 100 most-disruptive articles in ophthalmology. Clinical and Experimental Ophthalmology, 50(6), 690–695. https://doi.org/10.1111/ceo.14109

Ruan, X., Lyu, D., Gong, K., Cheng, Y., & Li, J. (2021). Rethinking the disruption index as a measure of scientific and technological advances. Technological Forecasting and Social Change, 172, 121071. https://doi.org/10.1016/j.techfore.2021.121071

Schumpeter, J. A. (1992). The Theory of Economic Development: An Inquiry Into Profits, Capital, Credit, Interest, and the Business Cycle. Transaction Publishers.

Serenko, A., & Bontis, N. (2011). What’s familiar is excellent: The impact of exposure effect on perceived journal quality. Journal of Informetrics, 5(1), 219–223. https://doi.org/10.1016/j.joi.2010.07.005

Shotton, D. (2018). Funders should mandate open citations. Nature, 553(7687), 129–129. https://doi.org/10.1038/d41586-018-00104-7

Song, P., Feng, C., Long, C., Yang, Z., & Song, Y. (2022). Study on discovery of outstanding scientific and technological talents in specific domains based on optimized disruptive index. Journal of Intelligence, 41(5), 61–65.

Sullivan, G. A., Skertich, N. J., Gulack, B. C., Becerra, A. Z., & Shah, A. N. (2021). Shifting paradigms: The top 100 most disruptive papers in core pediatric surgery journals. Journal of Pediatric Surgery, 56(8), 1263–1274. https://doi.org/10.1016/j.jpedsurg.2021.02.002

Truex, D., Cuellar, M., & Takeda, H. (2009). Assessing scholarly influence: Using the Hirsch indices to reframe the discourse. Journal of the Association for Information Systems. https://doi.org/10.17705/1jais.00203

Vasilevsky, N. A., Minnier, J., Haendel, M. A., & Champieux, R. E. (2017). Reproducible and reusable research: Are journal data sharing policies meeting the mark? PeerJ, 5, e3208. https://doi.org/10.7717/peerj.3208

Waltman, L., & van Eck, N. J. (2013). Source normalized indicators of citation impact: An overview of different approaches and an empirical comparison. Scientometrics, 96(3), 699–716. https://doi.org/10.1007/s11192-012-0913-4

Wang, S., Ma, Y., Mao, J., Bai, Y., Liang, Z., & Li, G. (2023). Quantifying scientific breakthroughs by a novel disruption indicator based on knowledge entities. Journal of the Association for Information Science and Technology, 74(2), 150–167. https://doi.org/10.1002/asi.24719

Wu, L., Wang, D., & Evans, J. A. (2019). Large teams develop and small teams disrupt science and technology. Nature, 566(7744), 378–382. https://doi.org/10.1038/s41586-019-0941-9

Zhang, J., Liu, Y., & Shen, Z. (2022). Covid-related papers contribute 50% on the JIF of high impact medicine journals. Journal of Data and Information Science, 7(4), 1–2. https://doi.org/10.2478/jdis-2022-0020

Zhao, Z., Li, J., Min, C., Bu, Y., Kang, L., & Bian, Y. (2019). Scientists’ academic disruptiveness significantly increased after they moved to China. Proceedings of the Association for Information Science and Technology, 56(1), 852–854. https://doi.org/10.1002/pra2.201

Acknowledgements

We are grateful to the anonymous reviewers for their valuable comments.

Funding

This research was financially supported by the National Social Science Foundation of China (Grant No. 19BTQ087) and the Xinxiang Medical University Postgraduate Research Innovation Support Program (Grant No. YJSCX202277Y).

Author information

Contributions

YJ: literature review, data collection and processing, programming, and manuscript writing. XL: proposed the topic, determined the content framework, and reviewed and revised the manuscript.

Ethics declarations

Conflict of interest

The authors have no financial or non-financial interests to disclose.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Jiang, Y., Liu, X. A construction and empirical research of the journal disruption index based on open citation data. Scientometrics 128, 3935–3958 (2023). https://doi.org/10.1007/s11192-023-04737-y
