Abstract

We propose an innovative use of the Leiden Rankings (LR) in institutional management. Although LR only consider the research output of major universities reported in Web of Science (WOS) and share the limitations of other existing rankings, we show that they can be used as the base of a heuristic approach to identify “outlying” institutions that perform significantly below or above expectations. Our approach is a non-rigorous, intuitive method (“heuristic”) because it is affected by all the biases due to the technical choices and incompleteness that affect the LR, but it offers the possibility of discovering interesting findings to be systematically verified later. We propose to use LR as a departure base on which to apply statistical analysis and network mapping to identify “outlier” institutions to be analyzed in detail as case studies. Outliers can inform and guide science policies about alternative options. Analyzing the publications of the Politecnico di Bari in more detail, we observe that “small teams” led by young and promising scholars can push the performance of a university up to the top of the LR. As argued by Moed (Applied evaluative informetrics. Springer International Publishing, Berlin, 2017a), supporting “emerging teams” can provide an alternative to research support policies adopted to encourage virtuous behaviours and best practices in research. The results obtained by this heuristic approach need further verification and systematic analysis but may stimulate further studies and insights on the topics of university rankings policy, institutional management, dynamics of teams, good research practice and alternative funding methods.

Introduction

Universities are subject to constant scrutiny by various stakeholders. The “evaluation society” (Dahler-Larsen, 2011) in which we live has not spared universities. Universities must be transparent and accountable for the public money invested in their activities. However, evaluating the performance of universities is far from simple. The multiple activities that universities carry out, which include teaching, research, and the “third mission”, interact with one another and with the objectives of policy makers and institutional missions.

Rankings have been established as a tool for informing the governance of universities. Rankings, however, influence institutional behaviour and increase competition in the higher education system, calling for policy measures in response. Even today, some twenty years after the introduction of the first rankings, there is still a great deal of interest in the theory and methodology of rankings and in their impact and influence (see e.g., Hazelkorn & Mihut, 2021).

In a number of studies the inconsistencies of rankings have been analyzed from a methodological perspective. For example, Fauzi et al. (2020) analyzed five of the leading world rankings which include: Quacquarelli Symonds (QS), Times Higher Education (THE), Academic Ranking of World Universities (ARWU), Leiden Rankings (LR), and Webometrics ranking. Similarly, Olcay and Bulu (2017) analyzed the following rankings: University Ranking by Academic Performance (URAP), THE, ARWU, QS and LR. Moed (2017b) compared the ARWU, LR, THE, QS and U-Multirank rankings. These authors also analyzed the geographic coverage, the overlap of the institutions across rankings, and how the indicators were calculated from the raw data.

Moed (2017b) argued that existing rankings provide purposeful information on a single aspect rather than a multidimensional information system. In response to this critique, Daraio et al. (2015) proposed a new approach that makes it possible to overcome the four main limitations of university rankings, namely: (1) mono-dimensionality; (2) lack of robustness from a statistical point of view; (3) dependence on the scale and subject specialization of universities; and (4) the absence of considerations of the input–output structure of academic activities. These authors proposed to rank universities based on the integration of different kinds of information and the use of more robust ranking techniques, based on advanced nonparametric efficiency methods. Moed and Halevi (2015) proposed a multidimensional matrix of scientific indicators to support the choice of metrics to be applied in a research evaluation process depending on the unit of evaluation, the dimension of the research to be evaluated, and the aims and policy contexts of a specific evaluation.

On the basis of an analysis of the main problems of existing rankings, Daraio and Bonaccorsi (2017), furthermore, proposed to invest in a data infrastructure instead of investing heavily in the creation of integrated datasets for specific indicators. Data may soon prove obsolete given the need for policy makers to have more and more granular and contextualized indicators available. Given the dynamic of the landscape, these authors proposed to co-produce indicators within open data platforms “combining heterogeneous sources of data to generate indicators that address a variety of user requirements” (Daraio & Bonaccorsi, 2017, p. 508). Vernon et al. (2018) claimed that no single ranking system provides a comprehensive assessment of the quality of academic research and stated that the measurement of university research performance through standardized rankings remains a candidate to be further investigated.

In the next sections, we analyze the information provided by LR to investigate whether it is possible to provide higher education institutions with useful feedback on their scientific achievements in order to improve their performance. We propose a heuristic approach that uses the information included in LR as a base to identify outliers that can be further analyzed through case studies. Following Leydesdorff and Bornmann (2012) and Leydesdorff et al. (2019), we used the so-called “excellence indicator” PPtop-10% of LR, that is, the proportion of the top-10% most-highly-cited papers assigned to a specific unit of analysis (e.g., a university; cf. Waltman et al., 2012), to cluster universities into groups which are not significantly different in terms of relevant statistics. Universities that are not statistically different in terms of their output can be considered as belonging to the same group. PPtop-10% is a percentile indicator and therefore size-independent. Percentiles can be used as an alternative to normalized citation impact of scientific publications (Wagner et al., 2022). Bornmann et al. (2013) reviewed the advantages and limitations of percentile ranks in bibliometrics. Leydesdorff et al. (2019) thus analyzed 902 universities in 54 countries, focusing on the UK, Germany, Brazil, and the USA. Applying the same methodology, Leydesdorff et al. (2021) analyzed and compared 205 Chinese universities with 197 US universities in LR 2020.

Behind the production of the LR there is a huge standardization and data cleaning effort with regard to the names of the organizations. This activity, pioneered by Henk F. Moed, led to the development of an extensive and sophisticated system to identify, register, and harmonize organization names (Calero-Medina et al., 2020). In this study, we elaborate on LR to identify outlying universities, i.e. those that outperform expected results. Once the outliers have been identified through statistical analysis and mappings, one can carry out case studies on individual successful institutions. Starting from the bibliometric data derived from the LR, we download from WOS the raw data of the identified outliers, which are then used to characterize their topic specialization and research organization. We show how this new use of the LR can work in practice by elaborating the analysis for the Politecnico di Bari as a case.

The paper is organized as follows. In the next section we describe the main objective of the work and its contribution to the literature. Section “Data” describes the data used for the analysis carried out, while “Methods” section illustrates the methods used in the empirical analysis. Section “Results” reports the main results. “Discussion and policy implications” section discusses the obtained results and “Concluding remarks” section concludes the paper. More detailed information and data are reported in the Supplementary Materials (SM) available online.

Aims and contribution

In this paper we investigate the following research questions:

1. What is the position of Italian universities in the European landscape as it appears in the LR?

2. What is the position of Italian universities within Italy, as shown by the LR?

3. How can we interpret the obtained results in terms of institutional management, that is, in terms of providing universities with useful feedback to improve their performance?

The main objective of this work is to propose a heuristic approach to identify outperforming higher education institutions (“outliers”) using the information provided by LR as a departure base. Outlier institutions are institutions that outperform expected results, i.e. institutions whose performance differs significantly from the expectation.

We are well aware of the limitations associated with using LR as the baseline for our analyses. In fact, LR only provide information on outputs from the WOS database, and are affected by bias due to underlying technical choices (such as normalizations and weights assigned to different disciplines) and incompleteness arising from the selection of institutions to be included for each country. For this reason, we called our approach a “heuristic” approach, i.e., not a rigorous one, whose outcomes should subsequently be systematically investigated for confirmation. Nevertheless, we will show that analyzing LR through statistical analysis (Leydesdorff & Bornmann, 2012; Leydesdorff et al., 2019) and network mapping (Blondel et al., 2008; Van Eck & Waltman, 2010), can be useful for delving into the complex performance of universities.

We will focus on the position of Italian universities in the European scientific landscape, on the basis of their respective values as depicted by the LR. Italy provides an interesting international case study as the Italian academic system is primarily a public system, but has one of the lowest public funding rates in Europe. Nonetheless, it has levels of scientific production (measured in terms of published articles) and scientific impact (measured by the number of citations received) comparable to the majority of other countries with a similar level of economic development. In Italy, two thirds of the funds are allocated to universities on the basis of the number of students enrolled at the university and the remainder on the basis of scientific production weighted with the quality of research.

Despite the exclusive focus of LR on research output insofar as it is indexed in Web of Science, our heuristic approach uses LR to represent the positioning of the different universities, from which to identify outlier institutions on which to carry out in-depth case studies. This will be shown in the case of the Politecnico di Bari, which is the only polytechnic in the South of Italy and also the youngest polytechnic in Italy. Politecnico di Bari ranks first not only in Italy but also in Europe in the Social Science and Humanities research field, and it is second worldwide in the LR.

We first provide an interpretation of the characteristics of the clusters of universities identified at the European and Italian levels. Surprisingly, we find that the best-performing Italian universities are located in both the North and the South. Thus, Politecnico di Bari represents a special case of Southern development that we would instead expect in the North. Using raw data from Politecnico di Bari’s publications downloaded from WOS, we try to understand what is behind this outstanding result, investigating in particular the dynamics of “teams”. Such teams were expected on the basis of previous common research, also emerged as a result of the analysis, and are analyzed through co-authorships.

Data

Data of LR 2021 were downloaded in Excel format from http://www.leidenranking.com/downloads. LR 2021 analyzed 902 universities in 69 countries. The file contains ranks for these universities in the preceding years (in intervals of four years). Rankings are counted both fractionally and in whole numbers. Data is provided for “All sciences” and five major fields: (i) biomedical and health sciences (BIO), (ii) life and earth sciences (LIFE), (iii) mathematics and computer science (MAT), (iv) physical sciences and engineering (FIS), (v) social sciences and humanities (SSH).

First, we explored “All sciences” (cf. Strotmann & Zhao, 2015), the last available period (2016–2019), and fractional counting. Only the fully-covered core journals are included; non-core journals are not. Thereafter, we also analyze the five major fields of science as distinguished in LR. If desired, the analysis can be repeated analogously with differently classified data. See SM 1 for additional information and for an available routine to extract the data.

Methods

We propose a heuristic approach that uses the information provided by LR in an innovative way. We consider the information of the LR as a base to identify “outlying” institutions that perform significantly below or above expectations. Our approach is a non-rigorous, intuitive method (“heuristic”) because it may be affected by all the biases and distortions due to the technical choices and incompleteness that affect the LR, but it offers the possibility of discovering interesting findings to be systematically verified later. We use the “excellence indicator” PPtop-10% of the LR as a baseline on which to apply statistical analysis and network mapping to identify “outlier” institutions to be analyzed in detail as case studies.

Our approach is based on Leydesdorff and Bornmann (2012) who use LR to group universities into clusters characterized by a non-statistically significant difference in their research outputs. To test the statistical significance of differences in performance, Leydesdorff and Bornmann (2012) propose using the z-test by considering the “excellence indicator” PPtop-10% of LR. The z-test allows us to assess whether an observed proportion differs significantly from the expectation and whether the proportions of two institutions are significantly different. See some examples reported below.

Following Leydesdorff et al. (2019), we combine the calculation of the z-test on the PPtop-10% indicator provided in the LR with network and mapping techniques (Blondel et al., 2008; Van Eck & Waltman, 2010) to analyze and visualize groups and intergroup relationships by means of network analysis tools. In particular, as we will see in the “Results” section, the size of the nodes reported in the maps is proportional to their z-values and the links among universities are based on significance levels of the chi-square: connections are thus drawn between institutions that are not statistically different. Leydesdorff et al. (2019) compared three methodologies for the identification of homogeneous groups vs. statistically significant differences using the PPtop-10% as the dependent variable: (1) stability intervals (e.g., Colliander & Ahlgren, 2011, p. 105), (2) the z-test, which is based on the chi-square distribution (Leydesdorff & Bornmann, 2012), and (3) power analysis (Cohen, 1977). The conclusion of the comparison between the UK and German universities was that the first two methods provided comparable and meaningful results, but the third one (that is, power analysis) led to very different results, which the authors were not able to explain. In order to focus on the substantive research question about Italian universities, we limit the discussion to using the z-test for the delineation of groups.

We are aware of the limits of the use of statistical significance tests in research assessment. However, given the exploratory nature of our approach, which seeks to identify outlier institutions to be further analyzed by means of in-depth case studies, we are less affected by the limits identified in the literature (see e.g. Schneider, 2013). We use the Louvain algorithm for community finding (Blondel et al., 2008), because it provides fewer isolates than the algorithm of VOSviewer. Note that we use VOSviewer for the visualization (cf. Abramo et al., 2016), but not for the decomposition. We complement these methods with descriptive analyses to try to identify and interpret the main features of the identified clusters of universities. In addition, we use co-authorship maps and keyword frequency graphs from VOSviewer and the three-fields plot produced with the R package bibliometrix (Aria & Cuccurullo, 2017) to better explain the outperforming results of Politecnico di Bari.

Some examples

The z-test can be used to measure the extent to which an observed proportion differs significantly from expectation. In the case of PPtop 10%, the expectation is 10%: without prior knowledge, one can expect a randomly selected sample to contain 10% of publications in the top 10%. The test statistic can be formulated as follows:

$$z = \frac{p_1 - p_2}{\sqrt{p\,(1 - p)\left(\dfrac{1}{n_1} + \dfrac{1}{n_2}\right)}} \qquad (1)$$

where n1 and n2 are the numbers of all the papers published by institutions 1 and 2 (under the column “P” in LR), and p1 and p2 are the values of PPtop 10% of institutions 1 and 2. The pooled estimate for the proportion (p) is defined as:

$$p = \frac{t_1 + t_2}{n_1 + n_2} \qquad (2)$$

where t1 and t2 are the numbers of top-10% papers of institutions 1 and 2. These numbers can be calculated on the basis of “P” and “PPtop 10%”. When testing values for a single university, n1 = n2, p1 is the value of the PPtop 10%, p2 = 0.1, and t2 = 0.1 * n2 (that is, the expected number in the top-10%).

An absolute value of z larger than 1.96 indicates that the difference between two ratings is statistically significant at the five percent level (p < 0.05). The threshold value for a test at the one percent level (p < 0.01) is 2.576; |z| > 3.29 for p < 0.001. In a series of tests for many institutions, one may wish to avoid a family-wise accumulation of Type-I errors by using the Bonferroni correction; that is, pBonferroni = α/n, where α is the original significance level and n the number of comparisons.
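The thresholds and the Bonferroni adjustment described above can be checked with a few lines of Python. This is a minimal sketch using only the standard library; the function names `critical_z` and `bonferroni_alpha` are introduced here for illustration, and the scenario of all pairwise comparisons among 42 institutions is a hypothetical example, not a procedure reported in the paper.

```python
from statistics import NormalDist


def critical_z(alpha):
    """Two-sided critical value of |z| for significance level alpha."""
    return NormalDist().inv_cdf(1 - alpha / 2)


def bonferroni_alpha(alpha, n_comparisons):
    """Per-test significance level under the Bonferroni correction."""
    return alpha / n_comparisons


# Thresholds quoted in the text: roughly 1.96, 2.576 and 3.29.
for alpha in (0.05, 0.01, 0.001):
    print(alpha, round(critical_z(alpha), 3))

# Hypothetical scenario: every pair among 42 institutions is tested.
n_institutions = 42
n_pairs = n_institutions * (n_institutions - 1) // 2
alpha_adj = bonferroni_alpha(0.05, n_pairs)
print(n_pairs, alpha_adj, round(critical_z(alpha_adj), 2))
```

With many comparisons the adjusted threshold for |z| rises well above 1.96, which is why the correction makes it harder to declare two institutions different.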

For example, we shall elaborate below on the Politecnico di Bari in Italy. This university has 800 publications in the set of universities used in the construction of the LR. Of these 800, 112 are “excellent” publications in the top-10% layer, whereas one would expect only 10% of 800; that is, 80. The number of top-10% publications is thus 112/80 = 1.4 times its expected value.

One can use this figure as an indicator in comparisons within Italy or Europe. For Politecnico di Milano, one would expect 10% of 4268 (= 427) publications to belong to the top-10% layer in the same period (for stochastic reasons). In fact, 492 of its publications are thus classified, so the number of top-10% publications of Politecnico di Milano is 492/427 = 1.15 times its expected value. Using test statistics, one can also show that this difference between the two polytechnics is significant at the 5% level (Leydesdorff & Bornmann, 2012).

Universities which are not statistically significantly different can be considered as belonging to the same performance group. Despite differences in PPtop 10% the performance of these universities can be denoted as similar in statistical terms. As noted above, this group membership is represented as links of a network, so that groups can be visualized and analyzed using network software.
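As a rough illustration of how group membership becomes network links, the sketch below connects two institutions whenever |z| stays below 1.96 in a pooled two-proportion z-test. The figures for Politecnico di Bari and Politecnico di Milano are those quoted in the text; “Hypothetical University A” and its values are invented for illustration, and the helper names are assumptions. Community detection (the Louvain algorithm in the paper) would then run on the resulting edge list; it is not implemented here.

```python
import math
from itertools import combinations


def pooled_z(n1, p1, n2, p2):
    """Two-proportion z-test using the pooled estimate of the proportion."""
    p = (p1 * n1 + p2 * n2) / (n1 + n2)
    return (p1 - p2) / math.sqrt(p * (1 - p) * (1 / n1 + 1 / n2))


# (P, PPtop 10% as a fraction); the third entry is hypothetical.
universities = {
    "Politecnico di Bari": (800, 0.14),
    "Politecnico di Milano": (4268, 0.115),
    "Hypothetical University A": (3000, 0.12),
}

# Link two institutions when their difference is NOT significant at 5%.
edges = []
for (name_a, (n_a, p_a)), (name_b, (n_b, p_b)) in combinations(
    universities.items(), 2
):
    z = pooled_z(n_a, p_a, n_b, p_b)
    if abs(z) < 1.96:
        edges.append((name_a, name_b))

for edge in edges:
    print(edge)
```

In this toy example the hypothetical university is linked to both polytechnics, while the two polytechnics are not linked to each other. "Not significantly different" is therefore not a transitive relation, which is one reason to delineate groups with a community-detection algorithm rather than by reading off the links directly.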

At http://www.leydesdorff.net/leiden11/index.htm the user can retrieve a file leiden11.xls into which the P and PPtop 10% values harvested from the LR can be entered for any two universities. The spreadsheet provides the significance level of the difference measured as a z-score. For example, Politecnico di Bari is listed (in the category “All sciences” of LR 2021) with P = 800 articles, of which 112 (14%) participate in the top-10% layer for the comparable set worldwide (PPtop 10%); z = 6.29. The Politecnico di Milano has 4268 articles with PPtop 10% = 11.5%. For the z-test one needs the pooled estimate:

$$p = \frac{t_1 + t_2}{n_1 + n_2} = \frac{112 + 0.115 \times 4268}{800 + 4268} \approx 0.119$$

Using Eq. (1), it follows that z = 2.004. The difference between Politecnico di Bari and Politecnico di Milano is thus statistically significant at the 5% level. Figure 1 illustrates the Excel sheet cited above (leiden11.xls) that enables the user to perform the test for data between two universities (Leydesdorff & Bornmann, 2012, p. 781).
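The comparison between the two polytechnics can be reproduced with a short script. This is a minimal sketch of the pooled two-proportion z-test as defined in the text (n for the number of papers, p for PPtop 10%, t for the number of top-10% papers); the function name `pooled_z` is an assumption, and the inputs are the rounded values quoted above.

```python
import math


def pooled_z(n1, p1, n2, p2):
    """z-test for the difference between two proportions.

    n1, n2: numbers of papers (column "P" in LR).
    p1, p2: PPtop 10% of the two institutions, as fractions.
    """
    t1 = p1 * n1  # number of top-10% papers of institution 1
    t2 = p2 * n2  # number of top-10% papers of institution 2
    p = (t1 + t2) / (n1 + n2)  # pooled estimate of the proportion
    standard_error = math.sqrt(p * (1 - p) * (1 / n1 + 1 / n2))
    return (p1 - p2) / standard_error


# Politecnico di Bari vs. Politecnico di Milano (LR 2021, "All sciences").
z = pooled_z(800, 0.14, 4268, 0.115)
print(round(z, 2))  # about 2.0, in line with the z = 2.004 reported in the text
print(abs(z) > 1.96)  # significant at the 5% level
```

Because the inputs are rounded percentages, the last decimals of z can differ slightly from the spreadsheet's output.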

Results

Clusters of European Universities

We analysed 302 European universities included in the LR of 2021, employing a multi-level Louvain community detection approach (see Blondel et al., 2008). Figure 2 shows the three clusters obtained from the European universities by applying the approach of Blondel et al. (2008). We observe a strong presence of Dutch and Belgian universities. North-western Europe (including Denmark and part of Germany) is central. We assigned a number to each cluster of Fig. 2: the red cluster is Cluster 1, the green cluster is Cluster 2 and the blue cluster is Cluster 3.

Fig. 2 The 302 European universities: three clusters distinguished by the Louvain algorithm (Cluster 1 in red, Cluster 2 in green, Cluster 3 in blue); modularity Q = 0.165. Size of nodes is proportional to their z-values; links are based on significance levels of the chi-square. Parameters of VOSviewer: scale 1.19; size-variation 0.38; Normalization = Association Link; attraction = 4; repulsion = 0

Table 1 reports the averages of the main indicators of the LR (P, P top 10, total citations and PP top 10%) in the three clusters of European universities shown in Fig. 2. Cluster 1 includes the group of universities with the highest average of top 10 publications, the highest total citation average (more than 30,000 citations), the highest average of publications (more than 3900 publications) and an average PP top 10% above 11%. Cluster 2 is composed of universities with an average number of publications close to 2000, the lowest average number of citations (around 10,000) and a PP top 10% below 7%. Cluster 3 is characterized by an average number of publications close to 3000 and an average PP top 10% around 10%. Thanks to this characterization, we can identify Cluster 1 as the group of the best-performing universities, Cluster 3 as the medium-performing group and Cluster 2 as the group of the lowest-performing universities.

Table 2 reports the top 50 universities in the EU27, which also include the Politecnico di Bari as one of the best Italian universities in the LR. All these universities are in Cluster 1 (the red one in Fig. 2), identified previously as the cluster of the best-performing universities. Interested readers can find the full list of the universities included in the three clusters, with their data about P, P top 10 and PP top 10%, in SM 2.

Apart from the different scientific performances of the obtained clusters, illustrated in Table 1, we note that there is much heterogeneity among the universities included in each cluster. Let us try to see if it is possible to identify and explain an institution’s membership in a certain cluster by considering size, age and geographic area.

Table 3 shows the distribution of Size and Foundation Year in the 3 clusters. Data come from ETER (European Tertiary Education Register, https://eter-project.com/). Three universities (Institut Polytechnique de Paris, Polytechnic University of Bucharest and University of Bucharest) were excluded from Table 3 because they did not report data in ETER. Size is given by the total number of students enrolled in ISCED (International Standard Classification of Education) 5–7, years 2016–2019.

From Table 3, the universities in Cluster 1 appear to be the smallest in terms of number of students and the youngest in terms of Foundation Year. Those in Cluster 2 appear to be the largest in terms of number of students enrolled, and those in Cluster 3 are intermediate in size. However, a high degree of heterogeneity is present in all 3 clusters.

Table 4 shows a final characterization of the 3 European university clusters from a geographical perspective. EUZONE in Table 4 is given by NORTH EUROPE which includes: Denmark, Sweden, Ireland, Finland, Estonia and Lithuania; CENTRAL EUROPE which includes: the Netherlands, Belgium, France, Germany, Austria, Luxembourg, Poland, Czech Republic, Romania and Hungary; SOUTH EUROPE which includes: Italy, Spain, Portugal, Cyprus, Slovenia, Slovakia and Croatia.

As can be seen from Table 4, the universities in the three clusters are spread in all the three territorial areas of the EUZONE, with a preponderance of universities from NORTH EUROPE and CENTRAL EUROPE in Cluster 1, and a prevalence of those from SOUTH EUROPE in Cluster 3, while Cluster 2 is characterized by a low presence of universities from NORTH EUROPE.

Overall, the results presented in the previous tables do not give us a clear picture of the three groups of European universities shown in Fig. 2. For this reason, we believe that the analysis of outliers and the study of small group dynamics can provide further insights. This is exactly what we will do in “The case of Politecnico di Bari” after analyzing Italian universities in depth in “Clusters of Italian Universities”.

Clusters of Italian Universities

According to the Italian Ministry of Universities and Research (MUR), the Italian university system is composed of 97 universities, of which 67 are state universities, 19 legally recognized non-state universities and 11 legally recognized non-state telematics universities.

The state universities are further distinguished into: 56 universities, 3 polytechnics, 6 schools of advanced studies, 2 universities for foreigners.

Forty-two Italian universities are included in the Leiden Ranking 2021:

- 3 state polytechnics (Politecnico di Milano, Politecnico di Torino, Politecnico di Bari);

- 2 non-state universities (Vita-Salute San Raffaele University, Università Cattolica del Sacro Cuore);

- 37 state universities.

Figure 3 shows the two clusters of Italian universities obtained by applying the Blondel et al. (2008) approach, with the following parameters: NCl = 2; Modularity Q = 0.081. Cluster 1 includes 22 universities and Cluster 2 contains 20 universities.

Fig. 3 The 42 Italian universities in LR: two clusters distinguished by the Louvain algorithm (Cluster 1 in red, Cluster 2 in green); modularity Q = 0.081; NCl = 2. Size of nodes is proportional to their z-values; links are based on significance levels of the chi-square. Parameters of VOSviewer: scale 1.19; size-variation 0.38; Normalization = Association Link; attraction = 4; repulsion = 0

In Fig. 3 we have applied the same methodology as in Fig. 2. As described in the “Methods” section, following Leydesdorff and Bornmann (2012) and Leydesdorff et al. (2019), the clusters are built by treating universities that are not statistically significantly different as homogeneous sets. The size of the nodes is proportional to their z-values and the links are based on the significance levels of the chi-square, meaning that only universities that are not statistically different are connected.

Table 5 shows the list of the universities included in the two clusters and reports the z score, rank in LR, number of Publications (P), number of publications in the top 10% of most frequently cited (P_top10) and % of publications in the top 10% most cited (PP_top10).

We observe that the Italian universities included in Table 2 among the top 50 European universities all belong to Cluster 2.

We did some descriptive analysis to try to characterize and differentiate the two groups of Italian universities obtained by applying the Louvain clustering methodology.

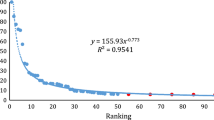

Figure 4 shows the linear regressions over the two clusters of Italian universities obtained with the Louvain method. The number reported close to the points in Fig. 4 is the value of the PP top 10%. It appears that the universities belonging to Cluster 2 have a higher percentage of papers in the top 10% of most cited works: the regression line of Cluster 2 dominates (i.e., lies above) the regression line of Cluster 1. Using Discriminant Analysis, the difference is statistically significant (Wilks' Lambda = 0.631; p < 0.010). For example, the Politecnico di Bari is located at the beginning of the regression line of Cluster 2, meaning that it has a small number of publications (P), but it has the highest value of PP top 10%.

Table 6 shows the distribution of Size and Foundation Year in the 2 clusters. Data come from ETER. Size is given by the total number of students enrolled in ISCED 5–7, years 2016–2019. Table 6 shows that the distribution of Foundation Year in the two clusters is very similar, while size in terms of total number of enrolled students at ISCED 5–7 differs: Cluster 1 includes larger universities than Cluster 2.

We pursued the analysis for the Italian universities by fields of science: Biomedical and health sciences (BIO), Life and earth sciences (LIFE), Mathematics and computer science (MAT), Physical sciences and engineering (FIS) and Social sciences and humanities (SSH). In Table 7 we underline the values of Politecnico di Bari, on which we will carry out the case study in the following.

Table 7 shows the % of P(top 10%) in All sciences and in each of the five fields considered in LR 2021 (BIO, LIFE, MAT, FIS and SSH) for each Italian university. For example, for the Politecnico di Bari, the percentage of publications in the top 10%, PP(top 10%), is around 32% in SSH and around 13% in both FIS and MAT, while in LIFE and BIO it is below the expected value of 10%, at 8.57% and 7.6% respectively. To show at a glance which values of PP(top 10%) are above or below the expected value of 10%, Table 7 reports percentages above 10% in green and percentages below 10% in red.

Table 8 shows the average of the % of P(top 10%) in All sciences and in each of the five fields considered in LR (BIO, LIFE, MAT, FIS and SSH) by each cluster of Italian universities. It appears that Cluster 2 shows average values above the expected values in All sciences and in each field of science. On the contrary, Cluster 1 displays average values of the % of P(top 10%) systematically below Cluster 2 and in most of the cases below the expected values of 10%.

We calculated Spearman correlations between the LR rank according to PP(top 10%) and our z scores for “All fields” and by Fields of Science (FOS): BIO, LIFE, MAT, FIS and SSH. We obtain a Spearman correlation of − 0.99 of LR rank with z “All fields”, of − 0.68 with z BIO, of − 0.71 with z LIFE, of − 0.56 with z MAT, of − 0.57 with z FIS and of − 0.46 with z SSH. The correlations are negative because a lower rank number denotes a better position. Interestingly, the two fields most correlated with the LR ranks are BIO and LIFE.
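For readers who wish to repeat this kind of check, the following sketch computes a Spearman correlation as the Pearson correlation of average ranks, using only the standard library. The function names and the five data points are hypothetical and serve only to illustrate the calculation; they are not taken from the LR.

```python
import math


def average_ranks(values):
    """Ranks starting at 1; tied values receive the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1
    return ranks


def spearman(x, y):
    """Spearman correlation: Pearson correlation computed on the ranks."""
    rx, ry = average_ranks(x), average_ranks(y)
    n = len(x)
    mean_x, mean_y = sum(rx) / n, sum(ry) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(rx, ry))
    var_x = sum((a - mean_x) ** 2 for a in rx)
    var_y = sum((b - mean_y) ** 2 for b in ry)
    return cov / math.sqrt(var_x * var_y)


# Hypothetical data: ranking position (1 = best) and z-scores.
lr_rank = [1, 2, 3, 4, 5]
z_all = [6.3, 4.1, 2.0, -0.5, -3.2]    # ordering fully consistent with rank
z_field = [6.3, 2.0, 4.1, -0.5, -3.2]  # two institutions swapped

rho_all = spearman(lr_rank, z_all)
rho_field = spearman(lr_rank, z_field)
print(round(rho_all, 2), round(rho_field, 2))
```

A value close to −1 means that the z-scores order the institutions almost exactly as the ranking does; the weaker field-level correlations indicate that a field's ordering departs from the overall one.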

Table 9 compares the 2 clusters of Italian universities by Total Revenue, Educational Income, Income from Competitive Research, Income from Commissioned Research, and Staff for Research and Teaching. Data refer to the years 2016–2018, come from the balance sheets of universities, and cover 39 out of the 42 Italian universities in the LR, because data are not available for 2 private universities (Università Cattolica del Sacro Cuore and Vita-Salute San Raffaele University) and for University of Bari Aldo Moro. By inspecting Table 9 we observe that there is high heterogeneity in both clusters. Compared to Cluster 1, Cluster 2 (the cluster of the best-performing universities) contains universities that are on average smaller (in terms of Staff for Research and Teaching), with lower Income from Competitive Research, lower Educational Income and lower Total Revenue, but with higher Income from Commissioned Research.

We investigated the geographical location of Italian universities to check whether the two clusters are concentrated in specific regions of the country. One would expect the universities in Cluster 2, which have the highest shares of publications in the top 10%, to be located in the Northern and more developed regions of Italy.

Figure 5 shows a map of the location of the Italian universities included in the LR. Contrary to common wisdom, the two groups detected by the Louvain algorithm (Cluster 1 and Cluster 2) are both spread across the country: many high-performing universities are in the South of Italy, and universities from the two groups coexist in the same geographical areas (for instance, in the city of Bari, the Politecnico di Bari belongs to Cluster 2 and the University of Bari Aldo Moro to Cluster 1).

The case of Politecnico di Bari

All the analyses reported in the previous sections point to the Politecnico di Bari as an outlier, so we analyzed this case in more detail. According to LR, Politecnico di Bari is the top-ranked Italian university and the 37th in Europe for PP(top 10%), with a total of 800 publications (fractional count), 14% of which are in the top 10%, considering all fields. Politecnico di Bari was founded in 1990. It is the only polytechnic in the South of Italy and also the youngest polytechnic in Italy. Its disciplinary specialization is in the fields of architecture, engineering, and industrial design.

In the category of Social science and humanities (SSH), the Politecnico di Bari ranks first not only in Italy but also in Europe and it is ranked second worldwide. Full details of Politecnico di Bari’s results in Leiden Rankings are shown in Table 10 with the values both with and without fractional counts.

We analyzed Politecnico di Bari’s publications in social science and humanities (SSH) to try to understand the causes of its Italian, European and even worldwide excellence in this field, since it is unusual for a polytechnic to excel in social science and humanities subjects. To characterize the kind of research done at Politecnico di Bari, we also looked in more detail at the total publications of the Apulian university. All the publications of Politecnico di Bari, including the 123 SSH publications (integer count) analyzed, were extracted from WOS. The publications used in these analyses were identified through the process detailed in SM 3.

Figure 6 shows the keyword frequency graph for all publications of Politecnico di Bari. Keywords from publications in Physics prevail; this is the field of science (FOS) in which more than half of all Politecnico di Bari publications appear, as shown in Table 10. In the central part of the graph in Fig. 6 we observe a small cluster in red that includes keywords related to social science, such as “open innovation”, connected to more technical keywords such as “data mining”. To better visualize the main keywords of the SSH publications of Politecnico di Bari, we illustrate them in Fig. 7, which shows the frequency distribution of keywords in SSH. This distribution is characterized by a mixture of social science keywords, such as “open innovation” and “innovation”, and more technical keywords, such as “internet of things” and “big data analytics”.
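A keyword frequency distribution of this kind can be sketched with a simple counter over the author keywords of each record. The keywords below are those mentioned in the text, but their assignment to publications is hypothetical; the normalization (lower-casing, counting each keyword once per publication) is an assumption and not necessarily the one used to produce Figs. 6 and 7.

```python
from collections import Counter

# Hypothetical publication records, each with its list of author keywords.
publications = [
    ["open innovation", "data mining"],
    ["Open Innovation", "internet of things"],
    ["innovation", "big data analytics", "open innovation"],
]


def keyword_frequencies(records):
    """Count in how many publications each normalized keyword occurs."""
    counts = Counter()
    for keywords in records:
        # The set ensures a keyword is counted once per publication.
        counts.update({kw.strip().lower() for kw in keywords})
    return counts


freq = keyword_frequencies(publications)
for keyword, count in freq.most_common():
    print(f"{keyword}: {count}")
```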

Figure 8 shows the co-authorship analysis performed on the SSH publications of Politecnico di Bari using VOSviewer.

Figure 8 shows that a prominent role in the Politecnico di Bari’s SSH publication network is played by the green sub-network, located in the eastern part of the graph. This sub-network is driven by a young and promising scholar, Antonio Messeni Petruzzelli, and by another emerging star, Lorenzo Ardito. Antonio Messeni Petruzzelli is a full professor of Management Engineering. He was born on 10 February 1980 in Bari and completed his undergraduate and postgraduate studies, including his PhD, at the Politecnico di Bari. Lorenzo Ardito is an assistant professor of Management Engineering at the Politecnico di Bari, where he completed his studies, including his PhD under the supervision of Antonio Messeni Petruzzelli.
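The raw material of a co-authorship map is a count of how often each pair of authors publishes together. The sketch below builds these link weights and a weighted degree per author from hypothetical author lists; it does not reproduce the layout and clustering performed by VOSviewer, and all names are placeholders.

```python
from collections import Counter
from itertools import combinations

# Hypothetical author lists, one per publication.
papers = [
    ["Author A", "Author B"],
    ["Author A", "Author B", "Author C"],
    ["Author C", "Author D"],
]


def coauthor_links(author_lists):
    """Count joint publications for every unordered pair of co-authors."""
    links = Counter()
    for authors in author_lists:
        for pair in combinations(sorted(set(authors)), 2):
            links[pair] += 1
    return links


def weighted_degree(links):
    """Sum the link weights attached to each author."""
    degree = Counter()
    for (first, second), weight in links.items():
        degree[first] += weight
        degree[second] += weight
    return degree


links = coauthor_links(papers)
degree = weighted_degree(links)
for author, weight in degree.most_common():
    print(f"{author}: total link strength {weight}")
```

Authors with a high total link strength are the candidates for the “driving” nodes of a sub-network.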

Figure 9 confirms what we found in the previous figures and provides additional details about the relationships among institutions, authors, and author keywords in the so-called “three fields plot”, made using the R package bibliometrix (Aria & Cuccurullo, 2017).

Fig. 9 shows that most of the scientific production in SSH of Politecnico di Bari is driven by Antonio Messeni Petruzzelli and by Lorenzo Ardito, as already noted for Fig. 8. In addition, Fig. 9 reports the main keywords of the scientific production of Politecnico di Bari, which include innovation, open innovation, simulation, big data analysis, performance evaluation, sustainable development, knowledge maturity, Italy, “servitization”, strategic planning, “ambidexterity”, artificial intelligence, rough set theory, fuzzy logic, optimization, automated valuation model, business process management and data envelopment analysis.

We asked a few members of the Politecnico di Bari’s team, namely Vito Albino (the historical leader of the group), Antonio Messeni Petruzzelli and Rosa Maria Dangelico, to give us the list of topics on which they are active and to describe the strategy they used in organizing their research team.

We observe an important overlap between the author keywords shown in Fig. 9, which come from our bibliometric analysis of the publications retrieved from WoS, and the main topics on which the team reported being active, which are the following:

- knowledge search and recombination;
- R&D alliance;
- industrial symbiosis;
- crowdfunding;
- patent analysis;
- digital innovation;
- sustainability.

We observe that the keywords listed by the team are predominantly socio-economic and are not typical of polytechnics, where the more technical and quantitative areas prevail.

This is especially true for Politecnico di Bari, in which the physics area clearly prevails, as we showed earlier. The team’s speciality is management engineering, which lies at the intersection of management research and quantitative methods such as those shown in the right column of Fig. 9.

As stated by the management engineering research group of the Politecnico di Bari, the team implemented the following strategies: (i) orientation to social challenges; (ii) creation of national and international research networks; (iii) thematic specialization among members of the team and (iv) valorization of team members with a high propulsive thrust.

From the analyses carried out, we can infer that Politecnico di Bari ranks first in SSH because it developed research projects that led to publications at the intersection between social sciences and quantitative methods. This outstanding result also seems related to the intuition and research strategy of the management engineering group of Politecnico di Bari, which invested before others in hot topics such as “sustainability”, “digital innovation” and “industrial symbiosis”, with an interdisciplinary methodological approach that combines economics and management with quantitative methods and focuses on Italy.

Discussion and policy implications

Understanding, modelling and evaluating the scientific performance of universities is a complex activity. Scientific performance is influenced by various factors and by the political-institutional context. Without claiming to be exhaustive, the factors that influence research performance, in addition to the scientific merit and individual capabilities of academic staff, include the available funding and infrastructure, the complementarity and substitutability of other activities carried out (such as teaching and the so-called “third mission”), and the contribution provided by the technical and administrative staff of the university (Avenali et al., 2022).

The Italian university system is characterized by “endemic” public underfunding, and it is an “undifferentiated” system in which all academics have to do both research and teaching. Universities, having similar institutional incentives, therefore show a certain degree of isomorphism (DiMaggio & Powell, 1983), which makes it difficult to discriminate between them. In this context, existing university rankings, including LR, can hardly help to understand what lies behind the ranks and indicator values they report. Moreover, Bruni et al. (2020), studying the heterogeneity of European universities, including Italian ones, warned against using one-dimensional approaches to the performance of higher education institutions.

Hence, the analyses carried out in this paper using the LR are far from being a comprehensive and complete analysis of Italian universities. Besides the theoretical problems mentioned above concerning the complexity of research assessment, this is due to several potential limitations and biases in the analyses performed, which can be attributed to problems with the data and the methodologies adopted.

First of all, the Leiden Rankings share the main limits of other existing rankings (see e.g. Daraio & Bonaccorsi, 2017 and Fauzi et al., 2020) and consider only the publications indexed in the Web of Science database to build the proposed bibliometric indicators. It is well known that the coverage of Web of Science differs by discipline: for the social sciences and humanities, the outputs reported in WoS represent a smaller share of the total production of the universities considered.

Secondly, the coverage of universities in the LR is not complete. Of the 97 Italian universities, only 42 are included in the LR.

Thirdly, the classification by fields of science proposed in the LR implies technical choices, is highly aggregated and combines two quite different disciplinary fields, such as physics and engineering. This may imply, for example, that universities with a solid tradition of excellence in physics, such as the University of Rome La Sapienza, whose professor Giorgio Parisi won the Nobel Prize in Physics in 2021, are not well positioned in the LR.

Fourthly, in this paper we analyzed only a small number of indicators, focusing mainly on the PP(top10%) indicator, i.e., the percentage of publications in the top 10%. Although this is a percentile indicator and therefore “size-independent”, our analyses show that the Politecnico di Bari outperforms all other Italian universities with a PP(top10%) equal to 14%, i.e., 112 publications in the top 10% out of a total of 800 publications (all science disciplines together, fractional counting). A larger university such as Sapienza shows a PP(top10%) equal to 9.3%, with 852 publications in the top 10% out of a total of 9150 publications, a total far larger than that of Politecnico di Bari. It thus seems more difficult for larger universities to achieve a high PP(top10%). Furthermore, an important role is played by the disciplinary specialization of the university: a generalist university suffers more from the poor coverage of social science and humanities research outputs in the WoS database.
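The arithmetic behind these figures can be sketched as follows. PP(top10%) is the ratio of top-10% publications to total publications; the z score shown here is a one-sample test of the observed proportion against the expected 10%. This test is an assumption made for illustration: it treats publications as independent binomial trials, which is questionable for fractional counts, and it may differ from the exact procedure of Leydesdorff et al. (2019).

```python
from math import sqrt


def pp_top10(p_top10, p_total):
    """Percentage of a university's publications that are in the top 10%."""
    return 100.0 * p_top10 / p_total


def z_against_expected(p_top10, p_total, expected=0.10):
    """One-sample z score of the observed proportion against the expected one.

    Assumption: publications are independent binomial trials.
    """
    observed = p_top10 / p_total
    standard_error = sqrt(expected * (1.0 - expected) / p_total)
    return (observed - expected) / standard_error


# (top-10% publications, total publications), fractional counts from the text.
universities = {
    "Politecnico di Bari": (112, 800),
    "Sapienza": (852, 9150),
}

results = {
    name: (pp_top10(top, total), z_against_expected(top, total))
    for name, (top, total) in universities.items()
}
for name, (pp, z) in results.items():
    print(f"{name}: PP(top10%) = {pp:.1f}%, z = {z:.2f}")
```

Because the standard error shrinks as the number of publications grows, the same distance from 10% yields a larger absolute z for a larger university.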

Finally, the methodology used in the paper (Leydesdorff & Bornmann, 2012; Leydesdorff et al., 2019) may be subject to the limits of the use of statistical significance tests in research assessments that are well known in the existing literature (see e.g. Schneider, 2013). However, in this paper we use the statistical information with an exploratory purpose, i.e., as a starting point for further investigation that analyzes the identified outlying institutions in more depth through case studies. Moreover, to check the robustness of our analyses, we provided several rank correlations between the results obtained by applying the statistical test procedure proposed by Leydesdorff et al. (2019) and the bibliometric indicators reported in the LR.

The new way of looking at and using LR that we propose in this paper could be further explored and linked to the “science of science” perspective (Wang & Barabási, 2021) by analyzing the performance of “emerging” groups or scholars within their networks. The mechanisms by which groups are created and assembled influence both the structure of collaboration networks (analysed through co-authorship) and the performance of scientific teams (Guimera et al., 2005). As shown by Wuchty et al. (2007), there is an increasing dominance of relatively small groups in the production of knowledge. In all disciplines, both for scientific production (measured in terms of publications) and for innovative production (measured in terms of patents), research is increasingly conducted in teams, which produce more cited and higher-impact works than individual authors.

Although there is a growing trend (Wu et al., 2019) in all scientific fields toward the presence of large teams, Wu et al. (2019) showed that alongside large teams there are small groups that are responsible for high impact research. Small teams tend to produce disruptive work while large teams are inclined to carry out developing work. According to Wu et al. (2019) one major implication is that both small and large teams are essential to scientific development.

The problem of optimal allocation of research funds to researchers, groups, and departments within a university is a long-standing one to which too few studies have been devoted. Despite some notable exceptions, such as Ioannidis (2011) and Stephan (2012), the problem of how to best fund individuals and groups within universities is still not thoroughly addressed today.

Wu et al. (2019) suggested that research policies should aim to support both large and small teams. Along the same lines, Moed (2017a, pp. 150–151) proposed a method of funding basic research which would be expected to cushion the Matthew effect implied when funding is given on the basis of previous performance. He proposed to focus on “emerging groups” rather than on the total academic staff of universities. An emerging group is considered to be a small research group that is expected to have great research potential. The director of this group should normally be a young “rising star” with a promising research agenda. The assessment of these emerging groups should be based on quantitative minimum standards in terms of bibliometric indicators and peer review. The main idea behind this type of research policy, based on the awarding of emerging groups, is to support and develop good research practices. As shown by Daraio and Vaccari (2020), reflection on good research practices and the role of researchers in them provides important information for both individual development and self-assessment and improvement. The results of our approach might be useful in helping to identify productive and emerging researchers and groups.

Concluding remarks

In this work we proposed a new use of the Leiden Rankings as a source of information to be further elaborated and combined with statistical analysis and mapping, in order to identify groups of universities that are not statistically different in terms of their scientific production and to highlight outlier institutions that perform particularly well, to be further investigated through case studies. We applied this approach to analyze the position of Italian universities, as it appears in the LR 2021, in the European landscape and within Italy. We provided an interpretation of the characteristics of the clusters of universities identified at the European and Italian levels. We found great heterogeneity among Italian universities. Surprisingly, we found that the best-performing Italian universities are located in both the North and the South. Thus, Politecnico di Bari represents a special case of Southern development that we would instead expect in the North. Using raw data on Politecnico di Bari’s publications downloaded from WOS, we tried to understand what lies behind this outstanding result, investigating in particular the dynamics of “teams”. Thanks to the elaborations carried out on the LR and the case study of the Politecnico di Bari, we were able to identify an “emerging team”, a small team led by a young and promising scholar, that pushes for the highest scientific results in the whole university. This shows that the organization of a research team and the choice of research topics and journal outlets can affect the positioning of a university in the LR. Furthermore, the analyses carried out showed that the disciplinary composition of the research of a university can affect the overall position of that university in the LR. This is a topic that should be further investigated.

Analyzing the publications of the Politecnico di Bari in more detail, we observe that “small teams” led by young and promising scholars can push the performance of a university up to the top of the LR. As argued by Moed (2017a), supporting “emerging teams” can provide an alternative to research support policies, adopted to encourage virtuous behaviours and best practices in research. The results obtained by our heuristic approach need further verification and systematic analysis, but they could stimulate further studies and insights on university rankings policy and institutional management, connecting those topics to the research line on teams and good research practices, and stimulating discussion on research policies related to alternative ways of allocating research funds.

References

Abramo, G., D’Angelo, A. C., & Grilli, L. (2016). From rankings to funnel plots: The question of accounting for uncertainty when assessing university research performance. Journal of Informetrics, 10(3), 854–862.

Aria, M., & Cuccurullo, C. (2017). bibliometrix: An R-tool for comprehensive science mapping analysis. Journal of Informetrics, 11(4), 959–975.

Avenali, A., Daraio, C., & Wolszczak-Derlacz, J. (2022). Determinants of the incidence of non-academic staff in European and US HEIs. Higher Education. https://doi.org/10.1007/s10734-022-00819-7

Blondel, V. D., Guillaume, J. L., Lambiotte, R., & Lefebvre, E. (2008). Fast unfolding of communities in large networks. Journal of Statistical Mechanics: Theory and Experiment, 2008(10), P10008.

Bornmann, L., Leydesdorff, L., & Mutz, R. (2013). The use of percentiles and percentile rank classes in the analysis of bibliometric data: Opportunities and limits. Journal of Informetrics, 7(1), 158–165.

Bruni, R., Catalano, G., Daraio, C., Gregori, M., & Moed, H. F. (2020). Studying the heterogeneity of European higher education institutions. Scientometrics, 125(2), 1117–1144.

Calero-Medina, C., Noyons, E., Visser, M., & De Bruin, R. (2020). Delineating organizations at CWTS: A story of many pathways. In C. Daraio & W. Glänzel (Eds.), Evaluative Informetrics: The art of metrics-based research assessment: Festschrift in Honour of Henk F. Moed (pp. 163–177). Springer Nature.

Cohen, J. (1977). Statistical power analysis for the behavioral sciences. Academic Press.

Colliander, C., & Ahlgren, P. (2011). The effects and their stability of field normalization baseline on relative performance with respect to citation impact: A case study of 20 natural science departments. Journal of Informetrics, 5(1), 101–113.

Dahler-Larsen, P. (2011). The evaluation society. Stanford University Press.

Daraio, C., Bonaccorsi, A., & Simar, L. (2015). Rankings and university performance: A conditional multidimensional approach. European Journal of Operational Research, 244, 918–930.

Daraio, C., & Bonaccorsi, A. (2017). Beyond university rankings? Generating new indicators on universities by linking data in open platforms. Journal of the Association for Information Science and Technology, 68(2), 508–529.

Daraio, C., & Vaccari, A. (2020). Using normative ethics for building a good evaluation of research practices: Towards the assessment of researcher’s virtues. Scientometrics, 125(2), 1053–1075.

DiMaggio, P. J., & Powell, W. W. (1983). The iron cage revisited: Institutional isomorphism and collective rationality in organizational fields. American Sociological Review, 48, 147–160.

Fauzi, M. A., Tan, C. N. L., Daud, M., & Awalludin, M. M. N. (2020). University rankings: A review of methodological flaws. Issues in Educational Research, 30, 79–96.

Guimera, R., Uzzi, B., Spiro, J., & Amaral, L. A. N. (2005). Team assembly mechanisms determine collaboration network structure and team performance. Science, 308(5722), 697–702.

Hazelkorn, E., & Mihut, G. (Eds.). (2021). Research Handbook on University Rankings: Theory, Methodology, Influence and Impact. Edward Elgar.

Ioannidis, J. (2011). Fund people not projects. Nature, 477(7366), 529–531.

Leydesdorff, L., & Bornmann, L. (2012). Testing differences statistically with the Leiden ranking. Scientometrics, 92(3), 781–783.

Leydesdorff, L., Bornmann, L., & Mingers, J. (2019). Statistical significance and effect sizes of differences among research universities at the level of nations and worldwide based on the Leiden rankings. Journal of the Association for Information Science and Technology, 70(5), 509–525.

Leydesdorff, L., Wagner, C. S., & Zhang, L. (2021). Are university rankings statistically significant? A comparison among Chinese universities and with the USA. Journal of Data and Information Science, 6(2), 67–95.

Moed, H. F. (2017a). Applied evaluative informetrics. Springer International Publishing.

Moed, H. F. (2017b). A critical comparative analysis of five world university rankings. Scientometrics, 110(2), 967–990.

Moed, H. F., & Halevi, G. (2015). Multidimensional assessment of scholarly research impact. Journal of the Association for Information Science and Technology, 66(10), 1988–2002.

Olcay, G. A., & Bulu, M. (2017). Is measuring the knowledge creation of universities possible?: A review of university rankings. Technological Forecasting and Social Change, 123, 153–160.

Schneider, J. W. (2013). Caveats for using statistical significance tests in research assessments. Journal of Informetrics, 7(1), 50–62.

Stephan, P. (2012). How economics shapes science. Harvard University Press.

Strotmann, A. & Zhao, D. (2015). An 80/20 data quality law for professional scientometrics? In ISSI 2015 Proceedings.

Van Eck, N. J., & Waltman, L. (2010). Software survey: VOSviewer, a computer program for bibliometric mapping. Scientometrics, 84(2), 523–538.

Vernon, M. M., Balas, E. A., & Momani, S. (2018). Are university rankings useful to improve research? A systematic review. PLoS ONE, 13(3), e0193762.

Wagner, C. S., Zhang, L., & Leydesdorff, L. (2022). A discussion of measuring the top-1% most-highly cited publications: Quality and impact of Chinese papers. Scientometrics, 127(4), 1825–1839.

Waltman, L., Calero-Medina, C., Kosten, J., Noyons, E. C., Tijssen, R. J., van Eck, N. J., van Leeuwen, T. N., van Raan, A. F., Visser, M. S., & Wouters, P. (2012). The Leiden Ranking 2011/2012: Data collection, indicators, and interpretation. Journal of the American Society for Information Science and Technology, 63(12), 2419–2432.

Wang, D., & Barabási, A. L. (2021). The science of science. Cambridge University Press.

Wu, L., Wang, D., & Evans, J. A. (2019). Large teams develop and small teams disrupt science and technology. Nature, 566(7744), 378–382.

Wuchty, S., Jones, B. F., & Uzzi, B. (2007). The increasing dominance of teams in production of knowledge. Science, 316(5827), 1036–1039.

Web links consulted and latest access dates

https://eter-project.com/, last access 8/08/2022, 11.30

https://www.leidenranking.com, last access 8/08/2022, 11.30

https://www.webofscience.com/wos/woscc/basic-search, last access 15/07/2022, 15.20

https://www.leidenranking.com/information/fields, last access 15/07/2022, 17.00

https://www.miur.gov.it/web/guest/istituzioni-universitarie-accreditate, last access 10/08/2022, 11.30

Acknowledgements

We thank Vito Albino, Alessandro Avenali, Rosa Maria Dangelico and Antonio Messeni Petruzzelli for the comments provided during the writing of the work. The financial support of Sapienza University of Rome, Research Award no. RM11916B8853C925 and no. RM12117A8A5DBD18, is gratefully acknowledged.

Funding

Open access funding provided by Università degli Studi di Roma La Sapienza within the CRUI-CARE Agreement.

Ethics declarations

Competing interests

The first author (Cinzia Daraio) and the third author (Loet Leydesdorff) are members of the Board of Scientometrics.

Additional information

Some of the ideas of this work were first discussed with Henk F. Moed. After his sudden passing, we decided to complete this paper and dedicate it to his memory.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Daraio, C., Di Leo, S. & Leydesdorff, L. A heuristic approach based on Leiden rankings to identify outliers: evidence from Italian universities in the European landscape. Scientometrics 128, 483–510 (2023). https://doi.org/10.1007/s11192-022-04551-y

DOI: https://doi.org/10.1007/s11192-022-04551-y