Abstract

Quantified indicators are increasingly used for performance evaluations in the science sectors worldwide. However, relatively little information is available on the expanding use of research metrics in certain transition countries. Central Asia is a post-Soviet region where newly independent states achieved lower research performance than comparators in key indicators of productivity and integrity. Most countries in this region showed declining or stagnating research impact over the decade since 2008. This study discusses the implications of research metrics for transition countries using the framework of the ten principles of the Leiden Manifesto. These principles can guide Central Asian policymakers in creating systems for a more objective evaluation of research performance based on globally recognized indicators. Given the local conditions of authoritarianism and corruption, broader use of transparent indicators in decision-making can help improve the position of Central Asian science in international rankings.

Introduction

Although starting conditions were broadly similar when the Soviet Union (USSR) collapsed in 1991, the five newly independent Central Asian countries (Kazakhstan, Uzbekistan, Kyrgyzstan, Tajikistan, and Turkmenistan) later diverged from other transition economies in the characteristics of their national academic sectors (Nessipbayeva & Dalayeva, 2013). Introducing new metrics can have powerful effects in transition and developing countries that have recently begun emphasizing scientific indicators, as happened in Central Europe and China (Grančay et al., 2017; Roach, 2018).

To the best of the authors' knowledge, the most recent reports on the research output of Central Asia as a separate region provided only a limited comparison within the area, without a detailed analysis of quantified indicators (Ovezmyradov & Kepbanov, 2020, 2021; UNESCO, 2016). Peer-reviewed studies employing bibliometric analysis do provide specific comparisons within and between countries in the region, but they focus either on individual countries or on foreign research about Central Asia in general (Wang et al., 2015; Kuzhabekova & Ruby, 2018b; Cмaгyлoв et al., 2018; Wang et al., 2019; Eshchanov et al., 2021). Yet this lesser-known part of the world presents an intriguing case for scientometric study.

This study aims to answer two research questions: (i) Did the policies of introducing metrics in science sectors change the quantity and quality of local publications in Central Asia? (ii) What are the best practices for using quantified indicators to improve research performance in Central Asian countries? The research contributes to both scientometrics and regional studies by investigating, as the subsequent analysis shows, how differences in research outcomes among the Central Asian countries can be explained by each country's success or failure in introducing quantified indicators. This topic is significant because it could provide insights into scientific development in less-known or understudied regions, where local specifics could require different approaches to evaluating and improving research performance. Furthermore, the findings could be relevant for policymakers in the area, including administrators, managers, officials, and other representatives of the private and particularly the public sectors responsible for managing, funding, and promoting institutions and researchers in the national science sectors.

The analysis in this study is based on global academic databases and uses a mix of quantitative and qualitative methods. Its results are interpreted according to the principles of the Leiden Manifesto, which offer helpful insights into improving the use of metrics (Hicks et al., 2015). The central argument of the analysis is that the balanced use of globally recognized measures of research performance and internationalization should become a necessary condition for improving scientific development and global status.

The paper is divided into ten sections. The following sections consecutively describe the research design, background, international performance, regional performance, local research excellence, language skills, academic integrity, and data issues. The study discusses implications for policymakers and summarizes the main findings in the final two sections.

Methods

This study employs mixed methods: quantitative (visualization, regression, and analysis of publicly accessible datasets) and qualitative (document review and interviews). The author considers this approach best suited to the broad topic of comparing science sectors within a region about which limited information is available.

This section first clarifies the data-driven approach the author applied to the Central Asian case. Conceptually, this study is organized around the analysis of a variety of quantified indicators widely used to gain insights into research performance. The indicators are calculated as ratios, percentages, weighted values, and other relative measures, primarily based on the numbers of published documents and citations: citation share, publication share, citations per document, h-index, citations per GDP, and citations per population. These leading indicators affect the relative positions of countries and institutions in global academic rankings (Cantu-Ortiz, 2017; World Bank Group, 2014). The number of publications, sometimes restricted to citable documents, is the traditional measure of research productivity. The total number of citations is the primary measure of research impact (Mingers & Leydesdorff, 2015). A paper that has never been cited is not necessarily of low quality, but its content is likely disconnected from its scientific field.
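The h-index listed above is the least self-explanatory of these indicators. A minimal sketch of its standard definition (the largest h such that h documents each have at least h citations) is shown below in Python, assuming plain per-document citation counts as input. It is not SCImago's implementation; the function name `h_index` and the citation counts are hypothetical.

```python
def h_index(citations):
    """Return the largest h such that h documents have at least h citations each."""
    # Rank documents from most to least cited.
    ranked = sorted(citations, reverse=True)
    h = 0
    for rank, cites in enumerate(ranked, start=1):
        if cites >= rank:
            h = rank  # the top `rank` documents all have >= rank citations
        else:
            break  # later documents have even fewer citations
    return h


# Hypothetical citation counts for one country's documents (illustration only).
example_citations = [25, 8, 5, 3, 3, 1, 0]
print(h_index(example_citations))  # → 3
```

Sorting first means the loop can stop at the first document whose citation count falls below its rank.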

Relative measures are preferred over absolute values (numbers of documents and citations) because they facilitate comparisons between countries. For example, comparing countries by citations per paper can help correct for differences in size (InCites, 2021). The global distribution of scientific effort across countries and regions is uneven and has long been dominated by developed countries, and research output is correlated with a country's economic output. Weighted scientific output is therefore a reasonable measure when comparing countries (World Bank Group, 2014), and such weighted indicators can serve as stringent criteria in a study of country performance (Xu et al., 2014).
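To illustrate how relative measures correct for size differences, the following Python sketch computes ratio indicators of the kind listed in this section for two hypothetical countries. The `CountryRecord` fields, the world totals, and all figures are invented for illustration and do not come from SCImago, WoS, or GII.

```python
from dataclasses import dataclass


@dataclass
class CountryRecord:
    """Hypothetical country totals for one period (illustration only)."""
    name: str
    documents: int        # citable documents
    citations: int        # citations received by those documents
    population_m: float   # population, millions
    gdp_bn_usd: float     # GDP, billions of USD


def relative_indicators(c, world_documents, world_citations):
    """Convert absolute counts into size-adjusted ratios and shares."""
    return {
        "citations_per_document": c.citations / c.documents,
        "publication_share_pct": 100 * c.documents / world_documents,
        "citation_share_pct": 100 * c.citations / world_citations,
        "citations_per_million_people": c.citations / c.population_m,
        "citations_per_bn_usd_gdp": c.citations / c.gdp_bn_usd,
    }


# Invented figures: A is large in absolute terms, B is small.
WORLD_DOCS, WORLD_CITES = 1_000_000, 10_000_000
country_a = CountryRecord("Country A", 10_000, 50_000, 100.0, 500.0)
country_b = CountryRecord("Country B", 1_000, 10_000, 5.0, 40.0)

for country in (country_a, country_b):
    indicators = relative_indicators(country, WORLD_DOCS, WORLD_CITES)
    print(country.name, {k: round(v, 2) for k, v in indicators.items()})
```

In this toy example, Country A has five times as many citations as Country B, yet B scores higher on citations per document (10 versus 5), per million people, and per billion USD of GDP.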

The preference for relative or weighted indicators is visible in the subsequent figures, most of which are column charts or time series. The data analysis in this study relies heavily on visualizations. Most figures are column charts comparing entire regions; time series showing change over longer periods are used only for the five Central Asian countries to avoid clutter. Regression analysis accompanies two figures in the section on implications, supporting the discussion of principles 2 and 8, which relate to institutional missions and precision, respectively.
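The regression specification is not detailed at this point. As a generic illustration only, a simple ordinary least squares fit of one indicator on another (for example, citations per document against R&D expenditure as a share of GDP) can be sketched in plain Python as follows. The function `ols_fit` and the data points are hypothetical and should not be read as the study's actual model or results.

```python
def ols_fit(x, y):
    """Simple ordinary least squares for y = a + b * x.

    Returns the intercept a, slope b, and coefficient of determination R^2.
    """
    n = len(x)
    if n != len(y) or n < 2:
        raise ValueError("x and y must have equal length of at least 2")
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    if sxx == 0:
        raise ValueError("x must not be constant")
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    b = sxy / sxx
    a = mean_y - b * mean_x
    ss_res = sum((yi - (a + b * xi)) ** 2 for xi, yi in zip(x, y))
    ss_tot = sum((yi - mean_y) ** 2 for yi in y)
    # R^2 is undefined when y is constant; report NaN in that case.
    r_squared = 1 - ss_res / ss_tot if ss_tot else float("nan")
    return a, b, r_squared


# Hypothetical pairs: R&D expenditure (% of GDP) vs citations per document.
rd_share = [0.1, 0.2, 0.3, 0.6, 1.0]
cites_per_doc = [3.0, 4.5, 4.0, 6.5, 8.0]
intercept, slope, r2 = ols_fit(rd_share, cites_per_doc)
print(f"intercept={intercept:.2f}, slope={slope:.2f}, R^2={r2:.2f}")
```

A positive slope would only indicate an association in these invented numbers; with country-level data, such a fit says nothing about causation.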

The primary tool of this study is the analysis of publication data, drawn mainly from SCImago (Scopus) and, to a lesser extent, from WoS (Web of Science), imported into an Excel spreadsheet. These two global databases underpin widely accessible web-based tools for comparing research productivity and impact (Cantu-Ortiz, 2017; Hicks et al., 2015). The SCImago portal is based on Elsevier's annually updated version of the Scopus database (SCImago, 2021). The WoS platform (Clarivate, 2021), with its multiple databases, appeared to cover a broader range of documents and a longer timespan than Scopus. However, these leading academic databases may occasionally be contaminated by illegitimate content from predatory or hijacked journals (Abalkina, 2021). The analysis gives preference to Scopus over WoS because of Scopus's prevalence in publication requirements and the convenience of exporting and validating its data.

In addition to Scopus and WoS, the Global Innovation Index dataset proved helpful for specific R&D comparisons across regions (GII, 2020). This study relies on these databases because the region's countries provide limited official statistics on research metrics. Where available, reports and other documents are reviewed to complement the quantitative indicators with qualitative findings. Finally, the author interviewed a local expert in Turkmenistan to obtain local knowledge that was unavailable elsewhere.

This study concentrates on comparisons between Central Asia and other post-Soviet regions and neighbouring states to illustrate relative progress in research metrics. Notably, a quick look at various sources reveals that organizations using their own classifications place Central Asia in different regional categories: South and Central Asia (US Department of State), Europe and Central Asia (World Bank), Eastern Europe and Central Asia (UNICEF), Caucasus and Central Asia (UN), Eastern Europe and Eurasia (International Energy Agency), and Former Soviet Union (International Institute for Applied Systems Analysis). Accordingly, the comparisons also include Mongolia, China, Afghanistan, Iran, Turkey, Poland, and Finland. The experience of Central and Eastern Europe is especially relevant for Central Asia, as both were strongly influenced by Soviet science. Finland, one of the leading Western countries in R&D, is included as a benchmark since it used to be part of the Russian Empire but later developed outside the Soviet sphere. Finally, the document review and the interpretation of results (especially the propositions under each principle) frequently refer to the experience of Kazakhstan, which is well studied relative to its Central Asian neighbours and has the region's largest economy and highest research performance. The choice of comparators is considered relevant given the shared historical, geopolitical, and economic background (Fig. 1).

Location of Central Asian countries (the area outlined with a yellow line) and the neighbouring regions. Image source: adapted from World Bank (2021)

Recommendations for policymakers in this study rely on the Leiden Manifesto, whose ten principles describe how best to use research metrics (Hicks et al., 2015). Each principle is briefly outlined in the dedicated section on implications at the end of this study, so this section omits their details.

Soviet legacy

Why did Central Asia experience grave problems with academic performance and integrity? Part of the explanation lies in the historical consequences of the communist past. The authoritarian and ideologically troubled history of Russian and Soviet science is still felt in the region. The USSR purged thousands of its best scholars in earlier periods, yet by the time of its collapse it had more researchers than the US, its main geopolitical rival; Soviet science thrived during certain periods of communist rule but declined during the democratization of the post-Soviet states in the 1990s; and it was a world leader in atomic power and space exploration but weak in many fields that developed rapidly in the West (Graham, 1993; Graham & Dezhina, 2008). The research performance of university-based researchers was low compared with Western peers owing to salaries tied to teaching, heavy teaching and administrative workloads, dependence on local non-English-language journals, and, in particular, the traditional separation of teaching from research, with the Academy of Sciences and specialized R&D institutions leading science instead (Kuzhabekova & Ruby, 2018b). Overall, the European countries of the former Warsaw Pact, which were under strong Soviet influence, demonstrated lower research impact than the world average as measured by Western metrics (Kozak et al., 2015).

The positive legacy of Soviet science diminished with the aging of its infrastructure (built in the Soviet era) and of its senior research staff, while the negative legacy of poor internationalization continued to affect Central Asia. The considerable drawbacks of the Soviet economy, such as central planning and rigid structures, proved difficult to remove quickly from science sectors (Kozak et al., 2015). The problem particularly affected the more closed countries of Central Asia: Uzbekistan, Tajikistan, and Turkmenistan. In Kazakhstan, faculty members published primarily in journals based in the Commonwealth of Independent States (most of the post-Soviet area), which rarely used thorough peer review by foreign experts to ensure quality and academic integrity. Staff of research centers outside universities published more articles in international journals, but these still constituted only one-third of their publications (Kuzhabekova & Ruby, 2018b). With Kazakhstan representing best practice in Central Asia, the remaining countries of the region were likely even less integrated into the global research community.

Academic institutions in Central Asia could not stay immune to the post-Soviet ills of widespread bribery and nepotism (ETICO, 2004; Heyneman, 2010; AsiaNews.it, 2011; Wickberg, 2013; Yun, 2016; ETICO, 2017; Bussen, 2017; Horák, 2020; Dissernet.org, 2021). Despite inevitable progress in internationalization, academic standards are believed to have degraded overall in most post-Soviet countries immediately after the collapse of the USSR, as economic hardship and worsening corruption took hold in the 1990s (Brunner & Tillett, 2007).

The regional governments made efforts to reform the academic sectors, and changes in the advanced degree structure were among the most consequential for science. Kazakhstan and Uzbekistan completely switched from the Soviet Candidate of Science degree to its rough Western equivalent, the Ph.D., while the remaining three countries maintained a hybrid structure. The requirement to publish in Scopus-indexed journals had dramatic effects in Kazakhstan: the number of degrees in social sciences and humanities fell more than tenfold in the five years following the introduction of stricter criteria after 2011 (Cмaгyлoв et al., 2018). The decline was so sudden and sharp that the publication requirements were somewhat relaxed in 2015. Kazakhstan only recently started expanding the postdoctoral system at its leading university (Holley et al., 2018). Postdoctoral fellows did not exist in Soviet science, and such positions appeared to be rare or non-existent in the other countries of Central Asia as of 2020.

Central Asian economies seemed to promote research relevant to policy, industry, or the public rather than advances at the frontiers of academic knowledge. Such interest could be motivated by heightened expectations of technology (Brunner & Tillett, 2007). The declared state priorities for the academic sectors before 2013 were manufacturing in Kazakhstan, small business in Kyrgyzstan, and industry and infrastructure in Uzbekistan (Nessipbayeva & Dalayeva, 2013). If science and engineering remain priorities for local policymakers, this is an additional argument for broader use of research metrics, as these fields lend themselves to international publication more readily than regional studies.

Performance issues in Central Asia were not an isolated phenomenon—other countries of the former Warsaw Pact experienced them too. The end of the communist regime did not lead to a considerable boost in the scientific publications of the Eastern European countries, and international cooperation among authors was lower than expected (Kozak et al., 2015). One could also argue that the European parts of the Soviet Union had better starting conditions than Central Asia in infrastructure and human capital levels when they gained independence.

Finland stands out among the comparators as a former part of the Russian Empire that avoided communist rule and became one of the most developed Western countries. Numerous examples of Finnish leadership in the academic and technological spheres attest to the overall superiority of the Western neoliberal system over the communist regimes in economic and scientific terms. This suggests that the Baltic states would likely have achieved stronger research performance had they not been part of the Soviet Union. However, the same conclusion is more problematic for the Central Asian states, which have less geographical and cultural proximity to Finland, even though all of them were part of the vast Russian Empire before the formation of the Soviet Union. It may be more appropriate to conjecture that Central Asian science would be comparable to that of its Asian neighbours had the region developed apart from the Soviet Union: China in an optimistic scenario, or Afghanistan in a pessimistic one. This study later discusses some related implications of a country's level of liberalization for scientific progress.

Finally, the experience of China, a large neighbour with a growing economic and geopolitical presence in Central Asia, deserves mention before further analysis. Historically subject to strong Soviet influence, China began allocating funds and ranking research based on publication metrics in reputable journals earlier than the Central Asian countries. Researchers were sometimes given cash rewards for publishing. The shift to scientific indicators helped achieve remarkable success: the number of articles increased almost fourfold between 2009 and 2019. However, the Chinese science and education ministries recently instructed institutions not to promote researchers solely on the basis of their output or citation numbers, since these practices pushed quantity at the expense of quality (Mallapaty, 2020). This study does not advocate financial rewards for publications because of their questionable ethics and perverse incentives. Nevertheless, China's scientific achievements since it began emphasizing metrics make its approach worth considering in Central Asia, despite some negative consequences for publication quality.

Comparison of performance

This section discusses the progress of Central Asia in research metrics chiefly relative to other post-Soviet areas and neighbouring regions. Figures 2, 3, 4 and 5 show that research funding and most metrics in Central Asia were much lower than the average for comparator countries, on par with Mongolia and Afghanistan, the bottom-ranking countries. Kazakhstan nevertheless managed to become the regional leader in this respect. The Kyrgyz Republic demonstrated decent performance in citations per document and h-index.

Source: GII (2020); data were unavailable across all years for Moldova, Uzbekistan, and Turkmenistan

Research and development (R&D) score of comparator countries according to the Global Innovation Index (score 0–100; average value between 2013 and 2020).

Source: GII (2020); data were unavailable for Turkmenistan and Afghanistan

Gross expenditure on R&D (% of GDP).

Source: SCImago (2021)

SCImago citations per document in comparator countries.

Source: SCImago (2021)

h-index of comparator countries.

SCImago (2021) data reveal that other post-Soviet areas, such as the Baltic states, account for a larger share of global output than Central Asia despite having populations several times smaller. Figure 6 nevertheless shows positive trends that allow for cautious optimism, with Central Asia's global share of publications and citations increasing since 2011. However, only Kazakhstan drove this progress, while the other four states showed little improvement. An increasing number of studies from the region were published in open access (SCImago, 2021).

Source: SCImago (2021)

Share of Central Asian countries in global research output (citable documents).

Unfortunately, research from the post-Soviet area generally does not seem to have caught the public eye globally: a recent Altmetric ranking by Attention Score included only one Russian contribution (Altmetric, 2018). Central Asian research may take even longer than that of other transition regions to be noticed by a general audience worldwide. Its low research performance relative to post-Soviet peers is noticeable. Still, there is reason for cautious optimism, as Kazakhstan has set an example of growth in indexed institutions and global output share. Research metrics can become both indicators of and incentives for further progress when used in line with best practices (the principles of the Leiden Manifesto discussed later).

Intraregional variations

One of the national research missions that could strongly motivate Central Asian countries to catch up with global scientific progress is adopting the best practices of neighbouring countries as a matter of national prestige. Figures 7 and 8 illustrate how Kazakhstan and Kyrgyzstan, the Central Asian countries that achieved higher internationalization of education and research, also made decent progress in scientific indicators (Ovezmyradov & Kepbanov, 2020). Research metrics thus become crucial components of benchmarking in science. Objective country-level comparisons then require metrics beyond mere numbers of publications and citations. In contrast to Kazakhstan, Turkmenistan, which remained at the bottom of the ranking in relative performance, illustrates that economic wealth from hydrocarbon resources does not necessarily translate into higher research output. The importance of global research metrics becomes all the more evident in more closed countries such as Turkmenistan, where objective measures give more accurate results than the rosy picture of national scientific achievements painted by the official media. Uzbekistan's performance first increased until 2008 and then declined for reasons yet to be identified. Healthy competition between science sectors within the region could stimulate better results in research metrics.

The conjecture made in this study is that the inefficient and ineffective use of research metrics in Central Asia could be one of the reasons the region mostly lagged behind post-Soviet comparator countries in significant indicators of R&D, as Figs. 2, 3, and 4 already illustrated. Overall, Central Asia seemed less reliant on quantified indicators than other post-Soviet areas and Western countries before the 2010s. Kazakhstan and Kyrgyzstan started requiring publications in journals with a positive impact factor for faculty hiring and promotion in 2011 and 2015, respectively (Kuzhabekova & Ruby, 2018b). In the 2010s, the Supreme Attestation Commission of Uzbekistan revised its list of journals approved for publishing dissertation results for doctoral degrees, in an effort to include reputable international journals (Eshchanov et al., 2021). To the best of the author's knowledge, no accurate data have been available on the prevalence of using research metrics for promotion and funding in Turkmenistan and, partially, Tajikistan; the limited results of interviews conducted by the author with representatives of the local science sectors suggest that evaluations there are still led mainly by judgment rather than data. Meanwhile, Kazakhstan and Uzbekistan, the two largest Central Asian countries by economic and population size, were estimated to have earned the dubious distinction of leading the world in the share of articles published in predatory journals (Kessenov, 2020). This unfortunate result of promoting metrics was highly publicized in both countries (Trilling, 2021).

During the studied period, Kazakhstan, the leading Central Asian economy, achieved spectacular success with its national innovation system reforms as measured by research output, eventually leaving the remaining countries of the region far behind in impact (number of citations). Over the same period, Uzbekistan not only failed to increase its research output but reversed the positive trends observed before 2008. Differences in policies on research metrics seem the most plausible explanation. Kazakhstan not only required higher-level publications but also empowered academic institutions to meet this goal through substantial infrastructure support, subscriptions to global databases, international mobility programs, and a peer-review-based system of grant distribution (Kuzhabekova & Ruby, 2018b). Funding is another explanatory variable: in the five years after introducing stricter publication requirements, Kazakhstan nearly doubled government expenditure on science and increased its research staff by more than one-third (Kuzhabekova & Ruby, 2018b). No other country in the region appeared able to afford or match such investments in reforming national science.

Meanwhile, Uzbekistan imposed unsustainable annual publication targets for researchers' promotion and bonuses, which led to widespread publishing in predatory journals (Eshchanov et al., 2021). In contrast, publication in impact factor journals at Kazakhstan's universities was not necessarily linked to promotion but could lead to salary increases (Kuzhabekova & Ruby, 2018a).

Turkmenistan, in this context, appears to demonstrate the least favourable approach to international research metrics, almost completely neglecting them in promoting its research staff: advanced academic degrees in the country have been awarded based on publications in a short list of approved local journals (not indexed in the global academic databases; see Science.gov.tm, 2022), while recognition of foreign Ph.D. qualifications (even from top Western universities) required cumbersome procedures. It is therefore not surprising that local researchers and institutions did not find the metrics very attractive. Turkmenistan remained the lowest-ranking economy in regional science comparisons despite having sufficient financial resources from its vast hydrocarbon export revenues to support the academic sector.

The Supreme Attestation Commission within the Ministry of Education and Science of the Russian Federation seemed to be the exclusive government agency awarding advanced degrees to Candidate of Science and doctoral candidates in Tajikistan (Maльцeвa & Бapcyкoвa, 2019). This implies publications in approved Russian-language journals. The minimum number of required publications seemed to be 3 for the Candidate of Science degree and 15 for the Doctor of Science degree (RTSU, 2022). Plagiarism in dissertations became so widespread among high-ranking Tajik officials, who held advanced degrees in large numbers, that it attracted comments from the presidents of Tajikistan and the Russian Federation (Centre1.com, 2018).

Kyrgyzstan's scholars also defended degrees in the Russian Federation, though likely to a lesser extent than those of Tajikistan (Maльцeвa & Бapcyкoвa, 2019). The country took a relatively flexible, point-based approach to publication requirements, allowing doctoral degrees to be awarded based on seven publications, with the Russian Science Citation Index (РИНЦ) database accepted in addition to Scopus and WoS. Reasonable limits were introduced on publication dates before the degree defence and on the number of articles in the same journal, and higher points were given for foreign publications indexed in more reputable databases. The Kyrgyz Republic is also known as the most liberalized state in the region, with a high level of internationalization in its academic sector (Ovezmyradov & Kepbanov, 2020, 2021). All these factors appear to have contributed to its higher-than-average performance in Central Asian science.

The lack of local journals indexed by WoS or Scopus undoubtedly hampers the progress in metrics. There were only 15 such journals in the region, mainly in Kazakhstan, and few globally-ranked research institutions based in Kazakhstan (11) and Uzbekistan (2) as of 2021, none of them in the Kyrgyz Republic, Tajikistan, and Turkmenistan (Ovezmyradov & Kepbanov, 2021). The unique experience of the Eastern and Central European transition countries shows how strong the impact of domestic outlets can be: a fourfold increase in the number of publications between 2000 and 2015 was primarily due to the inclusion of local journals in the WoS rather than a sign of improving research (Grančay et al., 2017).

Locally relevant research

In Western countries, promotion is commonly judged by publication counts in academic databases. These counts are usually attributed by the institutional affiliations listed on published papers: a paper is credited to a country or region if it has at least one address there, and all addresses are credited equally for a cited article (InCites, 2021). This practice could lead to underestimating the actual scientific potential of many post-Soviet countries that experienced considerable emigration of local talent. For instance, brain drain seems to have been a significant issue since the 1990s for Turkmenistan, the Central Asian country with the lowest research productivity, when many highly skilled citizens, including researchers, emigrated (UNESCO, 2010).
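The attribution rule described above, in which every country listed in a paper's addresses receives full credit, is commonly called whole (or full) counting. The Python sketch below uses hypothetical papers and a hypothetical function `whole_count` to show how a paper by an emigrant researcher who lists only a foreign affiliation is credited solely to the host country. This is the mechanism by which such counting can understate a sending country's scientific potential. It is an illustration under these assumptions, not the InCites implementation.

```python
from collections import Counter


def whole_count(papers):
    """Whole counting: each country in a paper's addresses gets full credit.

    `papers` is a list of (addresses, citations) pairs, where `addresses`
    lists the country of every author affiliation on the paper.
    """
    documents, citations = Counter(), Counter()
    for addresses, n_citations in papers:
        for country in set(addresses):  # one credit per country per paper
            documents[country] += 1
            citations[country] += n_citations
    return documents, citations


# Hypothetical papers (illustration only). The second one is written by an
# emigrant researcher from Turkmenistan who lists only a German address,
# so Turkmenistan receives no credit for it.
papers = [
    (["Kazakhstan", "Kazakhstan", "Germany"], 10),
    (["Germany"], 4),
    (["Turkmenistan", "Russia"], 2),
]
docs, cites = whole_count(papers)
print(dict(docs))
print(dict(cites))
```

De-duplicating addresses with `set` ensures that a country with several co-authors on one paper is still credited only once for that paper.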

The focus on WoS and Scopus figures in this study thus likely excludes the majority of local publications. Limited interviews with representatives of academic institutions in the region suggest that local publications generally compare unfavourably with those indexed in these global databases (this finding, however, is preliminary; no strong statements about the overall quality or other characteristics of local scientific publishing can be made from anecdotal evidence at this point). Ironically, one respondent even claimed that it could be easier to publish in an average international journal than in a local journal at an institution where the researcher has no affiliation or other connection facilitating acceptance. Moreover, a publication even in a top international journal with the highest impact in a relevant field would not qualify for an advanced academic degree in Turkmenistan, whereas a local publication could. The acceptance of international publications was better, and seemed to be improving, in countries that achieved higher internationalization, such as Kazakhstan, Kyrgyzstan, and increasingly Uzbekistan. Unfortunately, progress appears slow compared with many other parts of the post-Soviet area. We nevertheless acknowledge that, in some cases, local publications could be more relevant to the society of each Central Asian state, even if their quality is lower on average than that of foreign ones.

Local non-English journals must remain essential parts of national science, and research metrics should be applied to achieve their excellence. Unfortunately, concerns remain about academic standards, the potential misuse of power, and the transparency of decision-making in local publishing, just as in the promotion and funding of national science sectors in general, as discussed earlier. Many journals in Central Asia appear to be based at academic institutions where affiliated researchers can conveniently publish. Because space in any academic volume is limited, factors other than merit could play a role in accepting and allocating submitted papers in academic systems prone to questionable practices. Most Central Asian researchers seemed to continue publishing in numerous local journals even decades after the USSR collapse; such journals had a limited pool of potential peer reviewers, which led to minimal or low-quality reviews (Nauryzbayev et al., 2015). Requiring publication in an acceptable international journal, rather than a local institutional one, for academic programs in Central Asian countries could help prevent the lowering of standards.

A viable strategy for benefiting from the academic rigour of high-impact international publication while preserving local research relevance could be to consider reputable English-language journals with a regional focus. For instance, Central Asian Survey and Central Asian Affairs publish research of sufficient quality on topics highly relevant to the region or to individual countries within it. Alternatively, English-language journals with both an international and a local focus could be created, or converted from a local language, in each Central Asian country. However, they could be prone to the same potential weaknesses that characterize local non-English publications. A chemistry journal in Kazakhstan developed a strategy for entering Scopus and WoS by ensuring quality peer review, which necessitated a shift to English to attract international expert reviewers (Nauryzbayev et al., 2015). The language skill factor is discussed in detail in the next section.

English proficiency

English proficiency is deliberately discussed in this section as a critical concern (with numerous implications for Principle 3, discussed later). It was the most commonly reported barrier, and strategy, in meeting publication requirements among Kazakhstan's faculty, as English-speaking staff contributed more to internationalization (Kuzhabekova & Ruby, 2018b). For faculty lacking the language skills, academic English services in Kazakhstan, including translation and formatting fees, could cost over one thousand USD per article, exceeding a monthly faculty salary (Kurambayev & Freedman, 2021). Poor English proficiency was an important reason behind Central Asian researchers' preference for local publishing (Nauryzbayev et al., 2015). A survey found that only 23% of faculty had a sufficient level of English to understand an article (Kuzhabekova & Ruby, 2018a). This was in Kazakhstan, the Central Asian country that led the region in early support for trilingualism among the wider population and in requiring English-language publications from Ph.D. students (Agbo, 2013). Proficiency in the neighbouring countries may be even lower: Fig. 9 shows that, except for Kazakhstan, Central Asian countries rank lowest in the English Proficiency Index (EF EPI, 2020).

Figure 10 illustrates the relative performance and participation of Central Asian test-takers in TOEFL, a standardized test that has been gaining popularity in recent years. Since 2004, Kazakh and Uzbek test-takers seem to have significantly improved their skills as measured by TOEFL, though the same cannot be said of the other countries. Interestingly, speakers of the main local language of each Central Asian country seemed to show lower results and participation in TOEFL than all test-takers in that country taken together; minorities in the region were, on average, better prepared and more willing to study abroad.

Source: ETS (2020)

The proportion of the population taking the TOEFL test (top) and the corresponding performance converted to paper-based test (PBT) scores (bottom) in selected post-Soviet countries.

Kazakhstan and Turkmenistan are both compelling cases: economies benefiting from prosperous extractive industries whose governments declared the importance of learning English in the past. Unlike Turkmenistan, Kazakhstan was an example of a more open country achieving rapid progress in internationalization and foreign language skills, though it still experienced foreign language-related issues in science. Most of the articles were published in Russian journals (including the larger share of publications in Kazakhstan) that were seldom cited and rarely indexed in Scopus or WoS (Kuzhabekova & Ruby, 2018a, 2018b). Despite ranking low in English proficiency globally, the country still achieved the best results in Central Asia.

Systemic deficiencies

Data and metrics are now routinely used for research evaluations rather than relying solely on peers' judgment (Cronin et al., 2014). The proliferating metrics are often ill-applied by institutions without knowledge of good practices (Hicks et al., 2015). Universities pay attention to global rankings such as Times Higher Education's list, even though such rankings may rely on inaccurate data and arbitrary indicators. Researchers are increasingly promoted and funded based on their publications in high-impact journals and other figures. The risks of forcing quantified indicators on the academic sector were illustrated earlier by the example of predatory publishing by Central Asian authors: Kazakhstan and Uzbekistan had the highest proportion of articles in publications discontinued by Scopus (Kessenov, 2020). Less severe but still significant adverse effects were reported elsewhere: Eastern Europe and China, for example, experienced a proliferation of fraudulent research and retractions as one of the consequences of emphasizing publication metrics (Grančay et al., 2017; Roach, 2018).

Uzbekistan's recent experience illustrates the dangers of focusing on a single required number of publications in a given period. Defending a doctoral dissertation in the country required at least ten scientific publications (including one international), and the attestation of researchers required two publications (Eshchanov et al., 2021). As even experienced researchers in developed countries would probably attest, such strict requirements seem unrealistically high for many scholars if only reputable journals are counted. In contrast, most universities in Central and Eastern Europe required two or three publications for promotion to Associate Professor (Grančay et al., 2017). Not surprisingly, researchers from Uzbekistan reacted to the harsh requirements by rapidly increasing their share of predatory publishing in 2010, coinciding with the period of the "Publish or Perish" policy. This likely diverted their limited resources and effort away from publishing in reputable journals, as reflected by the unfavourable changes in Fig. 11.

Kazakhstan experienced declining average citations per journal article over the same period, from 2010 to 2017, while the country boosted its number of publications. This increase in productivity at the expense of quality, as measured by publication in less-cited or predatory journals, could be driven by stricter quantitative requirements and so-called salami slicing of research output (Cмaгyлoв et al., 2018). In the first years after the requirements were introduced, proliferating intermediaries and, sometimes, fraudsters started offering publishing services (Kurambayev & Freedman, 2021). However, unlike Uzbekistan, Kazakhstan appeared to reverse the negative trend in predatory publishing after 2016. Social and interdisciplinary sciences were the fields with the highest share of predatory journals among those in which the country's authors published (Cмaгyлoв et al., 2018).

Predatory publishing venues carry not only reputational but also economic costs. Given a typical publication charge of USD 500 (much higher if authors employed an intermediary), Kazakhstan's total losses due to predatory publications were estimated to exceed two million USD between 2009 and 2017 (Cмaгyлoв et al., 2018). Articles and authorship for sale appear to be a multimillion-dollar business with a considerable (negative) economic impact elsewhere in the post-Soviet and Eastern European areas, too (Cмaилoвa, 2020; Aбaлкинa et al., 2020; Perron et al., 2021; Xaн, 2022b). Lack of money to publish in open-access journals, together with lack of time, was the top barrier to research publication reported by respondents to a survey in Kazakhstan (Kuzhabekova & Ruby, 2018a).

Retraction data suggest that the common reasons for retraction notices included fake peer review in Kazakhstan, withdrawal in Kyrgyzstan, and plagiarism in Uzbekistan and Tajikistan (Retraction Watch, 2021). Non-authentic content made up a significant share of Scopus-indexed papers from Uzbekistan in 2021 (Abalkina, 2021). Kazakhstan's researchers cited themselves more often than others in the region, whereas the Kyrgyz Republic compared favourably in self-citations (SJR – SCImago, 2021).
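The self-citation comparison above reduces to a simple ratio of self-citations to all citations received. The minimal sketch below assumes per-country citation totals of the kind SCImago reports; the country labels and numbers are invented placeholders, not SCImago data.

```python
# Illustrative sketch: self-citation share as a simple integrity indicator.
# Country labels and counts are invented placeholders, not SCImago data.

def self_citation_share(self_cites: int, total_cites: int) -> float:
    """Return self-citations as a percentage of all citations received."""
    if total_cites <= 0:
        raise ValueError("total_cites must be positive")
    if not 0 <= self_cites <= total_cites:
        raise ValueError("self_cites must lie between 0 and total_cites")
    return 100.0 * self_cites / total_cites


# (self-citations, total citations) per hypothetical country
counts = {
    "Country A": (1_200, 4_000),
    "Country B": (300, 2_500),
}

shares = {name: self_citation_share(s, t) for name, (s, t) in counts.items()}
for name, share in sorted(shares.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{name}: {share:.1f}% self-citations")
```

A lower share is generally read as more favourable, though field size and national research concentration also affect the ratio.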

The general public attitude towards the value of advanced degrees must be mentioned as a critical detrimental factor shaping science in Central Asia. Bureaucrats and managers at various levels may too often have simply "bought" their way to publications and degrees for prestige and higher wages rather than out of genuine devotion to science. The problem is by no means confined to the region; it is characteristic of the entire post-Soviet area. There is sadly no shortage of scandals and controversies in the former USSR surrounding the rampant problems of plagiarism (by translation in particular), predatory publications, ghostwriting, and academic integrity, involving many professors, heads of departments, governors, parliament members, ministers, assistants to a president, and even the presidents of two countries (Toппыeв, 2003; Дpючкoвa, 2017; Aбдyвaитoвa, 2018; Centre1.com, 2018; Клeмeнкoвa, 2018; Aбaлкинa et al., 2020; Abalkina & Libman, 2020; Trilling, 2021; Uznews, 2021; Xaн, 2022a; Xaн, 2022b). A prominent paper mill selling authorship in academic journals appears to have been targeting Russian-speaking scholars via several websites (Perron et al., 2021). This may be only one well-investigated case among numerous intermediaries offering fraudulent publishing services to researchers under pressure from proliferating "Publish or Perish" policies in the post-Soviet countries (Cмaилoвa, 2020; Aбaлкинa et al., 2020; Eshchanov, 2021). In contrast, high-ranking officials in Western countries seemed to have a harder time dismissing plagiarism charges and avoiding resignation, reprimands, and other severe sanctions (Abalkina & Libman, 2020).

To summarize, the hidden impact of the widespread academic fraud in the region is likely to be enormous. Its connection with increasingly strict policies on research metrics needs further investigation.

Implications for policymakers

This study discusses the implications of research metrics for stakeholders seeking to make national economies more competitive in research and development. While qualitative evidence can be objective, quantitative research metrics can provide information that is difficult to acquire through individual expertise. To prevent the widespread abuse of research metrics, the Leiden Manifesto, presented in 2015, sets out best practices in metrics-based assessment so that evaluators and their indicators can be held to account (Hicks et al., 2015). The manifesto's ten principles, used in this study, distil into one framework the best practices of scientific evaluation previously stated by experts in scientometrics. The following ten subsections cover the implications of each principle separately and discuss their applicability for policymakers seeking to improve scientific indicators in the Central Asian countries. Each subsection starts by briefly introducing a principle (based on Hicks et al., 2015), then describes an example (based on Wildgaard et al., 2018), and proposes ways to improve Central Asian performance in the respective area of concern, summarizing the principle's guidelines. The recommendations for policymakers presented at the end of each subsection are mainly based on opinions that local experts expressed in existing studies.

Principle 1: Quantitative evaluation should support qualitative, expert assessment

The first principle of the Leiden Manifesto states that quantitative metrics can challenge bias in peer review and facilitate deliberation. The metrics strengthen peer review; however, the numbers must not substitute for informed judgment, and everyone should retain responsibility for their assessments. For instance, applications from researchers or departments could include bibliometric analyses or background materials to be evaluated by a panel from the relevant scientific field.

Acknowledging the weaknesses of research metrics, this study argues, in the context of Central Asia, for more data, not less, in a suitable combination of qualitative and quantitative assessments. Ignorance (in the case of Turkmenistan) and excessive emphasis (in the case of Uzbekistan) are the two extremes of applying metrics without accompanying support policies, and both can be detrimental to country-level performance. Kazakhstan's adoption of the standard Western approach linking research productivity to promotion created powerful incentives to prioritize research as a new and vital career target in addition to teaching. This policy motivated local academic staff to seek collaboration, attend seminars, learn from good examples, conduct literature reviews, and eventually publish more articles of improving quality (Kuzhabekova & Ruby, 2018b). Avoiding the broader use of metrics because of potential issues can also be detrimental. Even Chinese research experts, despite welcoming the recent policy of de-emphasizing metrics, worried that it could reduce the country's competitiveness in science because local researchers could feel less pressure to publish (Mallapaty, 2020).

The traditional alternative to scientific indicators has long been peer review: the evaluation of research output by experts. Qualitative judgment based on peer review has traditionally been highly valuable in academic publishing. Unfortunately, such judgments are also known to be time-consuming and occasionally biased. Local specifics raise doubts about the region's continued heavy reliance on peer review. Notably, there are strong reasons to be concerned about the extent to which local systems are merit-based: Central Asian countries achieved the lowest performance in many indicators of corruption and governance among post-Soviet countries (World Bank, 2021). Personal judgment should therefore be de-emphasized in places known for widespread nepotism and abuse of power. Arguably, scientific indicators are preferable to peer review as a low-cost and relatively accurate way to determine country performance in Central Asia, where expert knowledge and qualitative indicators are less available. Quantified indicators have the added benefit of producing results that are harder to argue with and can be updated quickly during decision-making. A balanced approach supported by national institutions can help avoid shortcomings, as illustrated by the most recent progress in Kazakhstan.

Principle 2: Measure performance against the research missions of the institution, group, or researcher

The second principle suggests that indicators used to evaluate performance should relate clearly to goals that take into account the broader socio-economic and cultural contexts. A single evaluation model cannot apply to all contexts of diverse research missions. For example, if a department's mission is international collaboration, an indicator could be the share of publications with a foreign co-author, calculated automatically from a global database export. In Kazakhstan, internationalization at a world-class university created conditions for many foreign faculty members to contribute to research areas aligned with national priorities defined as strategic by the government (Kuzhabekova et al., 2016).
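The foreign co-author indicator mentioned above can be computed directly from a database export. The sketch is illustrative only: it assumes a hypothetical export in which each record lists its authors' affiliation countries under a `countries` field (real WoS or Scopus exports use their own column layouts), and the records are made up.

```python
# Minimal sketch, assuming a hypothetical export format in which each record
# carries a "countries" list of author affiliation countries. The field name
# and the records below are illustrative, not a real WoS/Scopus layout.

def foreign_coauthor_share(records: list[dict], home: str) -> float:
    """Share (0-1) of the unit's papers with at least one non-home affiliation."""
    own = [r for r in records if home in r["countries"]]
    if not own:
        return 0.0
    international = sum(1 for r in own if any(c != home for c in r["countries"]))
    return international / len(own)


# Invented records for a department based in country "KZ".
export = [
    {"title": "Paper 1", "countries": ["KZ"]},
    {"title": "Paper 2", "countries": ["KZ", "DE"]},
    {"title": "Paper 3", "countries": ["KZ", "KZ"]},
    {"title": "Paper 4", "countries": ["KZ", "US", "JP"]},
]

print(f"Foreign co-author share: {foreign_coauthor_share(export, 'KZ'):.0%}")
```

Because the indicator is a plain ratio over exported records, the same calculation can be repeated by those being evaluated, which also serves the transparency principles discussed below.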

The Baltic states, Georgia, and Armenia are post-Soviet democracies achieving relatively higher results. Poland illustrates yet another post-communist success story: an increasing publication trend after early liberalization efforts that opened the country to the West, welcomed collaboration with foreign colleagues, gave universities more autonomy from the government, rejected the ideological constraints of the communist past, and provided access to global sources (Kozak et al., 2015). If anything, Turkmenistan, with a population comparable to Kyrgyzstan's but larger state revenues from its rich hydrocarbon resources, could have been expected to achieve higher performance. Instead, the most closed country in the region showed the lowest indicators in both absolute and relative measures.

Meanwhile, Kyrgyzstan achieved regional leadership in internationalization in terms of the share of international students and institutions (Ovezmyradov & Kepbanov, 2021). More relevantly, Kyrgyzstan, the most liberalized state in Central Asia, achieved the best performance in relative measures (Figs. 7 and 8).

Figure 12 suggests a strong correlation between research performance and liberalization indicators in the post-Soviet states. Correlation does not, of course, imply a cause-and-effect relationship (especially with so few data points). It does suggest, though, that liberalization could at a minimum play an important role, which has yet to be analyzed. Furthermore, the h-index is an imperfect measure of both productivity and impact. Yet it was chosen as the dependent variable for research performance because alternatives such as citations per article reflect combined output and quality even less adequately. Voice and accountability (a survey-based Worldwide Governance Indicator, WGI) is likewise not an exhaustive measure of political liberalization, yet no better alternative was found for the analysis (Fig. 12).

Indicators of questionable academic practices: left, the percentage of research output published in sources discontinued by Scopus; right, the percentage of self-citations. Data sources: Kesenov (2020) for discontinued Scopus sources (no data available for Kyrgyzstan and Turkmenistan); SCImago (2021) for self-citations between 1996 and 2017

Regression analysis with post-Soviet countries' h-indices as the dependent variable and governance indicators related to liberalization as the independent variable. Data sources: TCdata360 (2021) and GII (2020); the 14 data points correspond to the former Soviet republics (2020 indicators); the Russian Federation was excluded as an outlier because its population size distorts the h-index value

Among the various ideas empirically and theoretically linking science to liberalism, this study embraces the concept of open societies exhibiting a higher level of innovation. The argument supported by the above analysis is that liberalism correlates with scientific and technological progress (McCloskey, 2019; Mokyr, 2016). In a narrow regulatory and cultural context, the lack of liberal attitudes hampers the research process in the region (Jonbekova & Kuchumova, 2020). In a broader sense, the authoritarian regimes of Central Asia create an environment that makes it difficult to exchange ideas freely and to debate openly the pressing socio-political issues considered sensitive in the region. The post-Soviet Baltic states (Latvia, Lithuania, and Estonia), which oriented themselves early toward neoliberalism and soon joined the EU, achieved better progress, as the next section will show. The two extremes of post-communist paths thus illustrate the point: Estonia, the most open liberal state, made the most outstanding scientific progress, while Turkmenistan, the most closed authoritarian state among the comparators, remained the lowest-ranking country in research metrics. Benchmarking against other post-Soviet countries can become an essential part of the national research mission, providing powerful incentives for using appropriate research metrics; this, however, is impossible without more comprehensive institutional reforms beyond the academic sectors in Central Asia.

Principle 3: Protect excellence in locally relevant research

The third principle raises concerns about equating research excellence with English-language publications indexed in academic databases based primarily in Western countries, which creates biases against the more regionally engaged fields with societal relevance, such as the social sciences and humanities. The bias also steers interest toward studies of more abstract models or foreign (often US-based) data that are publishable in high-impact journals. More broadly, the established relationships of the polarized North–South antithesis could primarily benefit certain countries (the North) while undermining the potential of the former Soviet research community to contribute to global knowledge on equal terms (Kuzhabekova, 2020). Metrics built on high-quality non-English literature are necessary to identify and reward excellence in locally relevant research on topics such as labour, law, health care, and migration. The principle is less applicable to research with an international profile, though.

Biased or not, the focus on English proficiency is crucial for conducting international-level research. Research metrics at both the international and regional levels of relevance can be substantially improved with targeted support for publication in English and, where appropriate to specific fields, the choice of international journals with a regional focus. Financial support for English-language learning and publication was among the forms of support most desired by faculty surveyed in Kazakhstan (Kuzhabekova & Ruby, 2018a). While language training and testing remain necessary, given the low language skills currently prevailing among the region's population, it could take Central Asian academic staff too long to improve their English proficiency for this to have an immediate impact on research performance. With limited grants, mobility to an English-speaking environment abroad is also not a viable way for most research staff to improve their language skills.

It could be more effective to provide targeted support for researchers in translating and editing papers for publication in international journals. The relatively high publication fees of easier-to-publish and open-access academic journals are a heavy financial burden relative to local faculty wages (Kuzhabekova & Ruby, 2018b). Subsidizing or fully reimbursing publication charges and professional translation into English would encourage scientists who are otherwise talented but have a poor command of the language. Similar proofreading services would increase the English-language output of the substantial part of academic staff with intermediate language skills. Where funding is limited, institution-wide subscriptions to more affordable services such as Grammarly could be provided at no cost to individual users. Finally, the importance of basic training in academic writing that explains the nuts and bolts of publishing in specific fields cannot be overstated. Kazakhstan can be considered a success story of publication requirements encouraging researchers to improve their level of English (Kuzhabekova & Ruby, 2018a, 2018b).

Principle 4: Keep data collection and analytical processes open, transparent and simple

Black-box evaluation machines are not acceptable. The established bibliometric evaluation methodology, built over several decades by academic and commercial groups, enabled scrutiny by publishing referenced protocols in the peer-reviewed literature. An evaluation must strive for simple indicators that enhance transparency. At the same time, metrics should not be so simplistic that they distort the record; they should remain true to the complexity of the research process. Department heads and staff should be consulted throughout an evaluation and should be familiar with the databases, indicators, and analyses used.

Initially adopting an existing evaluation system with proven open and straightforward protocols, while allowing for future adaptation, would minimize the risks of misunderstanding and manipulation in Central Asia, where transparency is vital. At least in the introductory period, adopting an established evaluation methodology "as is" seems reasonable given the region's lack of experience and expertise. Before and during the introduction process, public debate should take place on the technical properties of indicators as applied in local settings. Qualitative and quantitative assessments of local research output in non-English languages can be included. Codifying and quantifying qualitative evaluation, whenever possible, would allow it to benefit from the same strengths that research metrics boast, provided bias is minimized. In particular, comprehensive data analysis, complementing theoretical support, is needed soon to develop effective policies for the objective evaluation of research performance at the level of local institutions. Finally, the governments of all countries in the region should improve the availability and depth of data on scientific indicators at all levels. The transition to a more objective system should be supported by advanced data collection systems, including big data and machine learning.

Principle 5: Allow those evaluated to verify data and analysis

The creation of a system that processes and verifies data among all stakeholders should become a priority at the planning stage of implementing evaluation within institutions at risk of less transparent decision-making. Evaluated researchers should be able to check that their outputs have been correctly identified. Managers of evaluation processes should allow self-verification or third-party audits of data accuracy. Heads of evaluated departments, for example, could be given a chance to provide feedback on draft analysis results. Institutions could budget for research information systems capable of processing accurate, high-quality data. The fact that research metrics can be more objective than judgment does not mean they cannot be affected by corruption and abuse of power by influential decision-makers during evaluations. The ability to quickly verify and dispute each evaluation step would deter actions leading to outcomes that depart from purely merit-based ones.
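One simple way to let researchers verify identified outputs is to reconcile the list harvested for an evaluation against the list each researcher submits. The sketch below is a hypothetical illustration that assumes outputs are matched on DOI; the DOIs are made-up placeholders, and a real system would also need to handle outputs that have no DOI.

```python
# Hypothetical sketch: reconcile outputs harvested for an evaluation with the
# list a researcher submits, matching on normalized DOIs (placeholders below).

def normalize_doi(doi: str) -> str:
    """Lower-case a DOI and strip a resolver prefix so formats compare equal."""
    doi = doi.strip().lower()
    for prefix in ("https://doi.org/", "http://doi.org/", "doi:"):
        if doi.startswith(prefix):
            doi = doi[len(prefix):]
    return doi


def reconcile(harvested: list[str], claimed: list[str]) -> dict[str, set[str]]:
    """Split DOIs into matched, missing-from-evaluation and unclaimed sets."""
    h = {normalize_doi(d) for d in harvested}
    c = {normalize_doi(d) for d in claimed}
    return {
        "matched": h & c,
        "missing_from_evaluation": c - h,    # researcher can flag these
        "not_claimed_by_researcher": h - c,  # possible misattribution to review
    }


report = reconcile(
    harvested=["10.1000/abc.1", "https://doi.org/10.1000/ABC.2", "10.1000/xyz.9"],
    claimed=["doi:10.1000/abc.1", "10.1000/abc.2", "10.1000/abc.3"],
)
for key, dois in report.items():
    print(key, sorted(dois))
```

Both discrepancy sets are natural inputs for the feedback round on draft results described above.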

Principle 6: Account for variation by field in publication and citation practices

Specific research areas might receive a relatively low rating in peer-review assessment at a national level because their significant outputs (for instance, books rather than journal articles in the social sciences, or conference papers in computer science) are less likely to be indexed by global databases. Furthermore, citation rates of top-ranked journals vary substantially by field. Therefore, normalized indicators, such as the share of papers in the top 1%, 10%, or 20% most cited in their field, should be used. The evaluation analysis could support comparisons with indicators used in other research fields.

Percentile-based measures, such as the share of papers among a given top percentage of the most-cited papers, are increasingly adopted in research evaluation in Western countries, and there is no reason to ignore these and other normalization methods in Central Asia when differentiating across scientific fields. As for scientific specialization, WoS data indicate that Kazakhstan and Uzbekistan were more productive in physics and chemistry, Tajikistan in mathematics and chemistry, the Kyrgyz Republic in environmental and geosciences, and Turkmenistan in mathematics (Clarivate, 2021). Biomedical fields reflect the strength of each country in critically essential sciences. In July 2021, a PubMed search using country names in the affiliation field yielded the following numbers of outputs: 29 for Turkmenistan, 264 for Tajikistan, 461 for Kyrgyzstan, 1081 for Uzbekistan, and 3456 for Kazakhstan (PubMed.gov, 2021).
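A percentile-based indicator can be sketched as follows. This is a simplified illustration, not the exact procedure of any particular ranking: papers are compared only within their own field (real implementations usually also condition on publication year and document type), all ties at the threshold count as top papers (some more refined methods split ties fractionally), and the paper records are invented.

```python
import math
from collections import defaultdict

# Simplified, illustrative sketch of a field-normalized "top p%" indicator.
# Records are invented: (country, field, citations).

def top_share(papers: list[tuple[str, str, int]], country: str, p: float = 0.10) -> float:
    """Share of `country`'s papers that fall in the top p of their field."""
    by_field: dict[str, list[int]] = defaultdict(list)
    for _, field, cites in papers:
        by_field[field].append(cites)

    thresholds = {}
    for field, cites in by_field.items():
        ranked = sorted(cites, reverse=True)
        k = max(1, math.ceil(p * len(ranked)))  # number of "top" slots in the field
        thresholds[field] = ranked[k - 1]       # citations of the k-th ranked paper

    own = [(field, cites) for ctry, field, cites in papers if ctry == country]
    if not own:
        return 0.0
    return sum(1 for field, cites in own if cites >= thresholds[field]) / len(own)


# Mathematics is cited far less than chemistry in this toy data set.
math_cites = {"A": [8, 3, 1], "B": [4, 3, 2, 2, 1, 0, 0]}
chem_cites = {"A": [40, 20, 5], "B": [90, 50, 30, 25, 15, 12, 10]}
papers = [(c, "mathematics", x) for c, xs in math_cites.items() for x in xs]
papers += [(c, "chemistry", x) for c, xs in chem_cites.items() for x in xs]

for country in ("A", "B"):
    print(country, f"top 10%: {top_share(papers, country):.2f}",
          f"top 20%: {top_share(papers, country, 0.20):.2f}")
```

Country A's 8-citation mathematics paper counts as a top paper even though most chemistry papers are cited more often, which is exactly what field normalization is meant to achieve.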

Principle 7: Base assessment of individual researchers on a qualitative judgment of their portfolio

Combining research metrics with a quantified qualitative evaluation of individuals, while taking measures against widespread biases in peer judgment, could ensure a fairer process. Reading an individual's research output and considering information about their expertise, experience, activities, and influence is more appropriate than relying on a single number.

Reliance on research metrics can be risky when funding and promotion decisions compare institutions or individuals with low numbers of publications and citations. Even a slight advantage over evaluated counterparts can be decisive in such an environment. Available statistics reveal a lack of detailed and accurate data on many indicators in Central Asia, and little information was available at the level of individual researchers. When a double-blind process is impossible, it could be challenging to avoid the influence of cultural or psychological factors such as power distance, reverence for seniors, groupthink, and personal attitudes in peer review. Anonymous or strictly confidential surveys and interviews with peer researchers could be an essential part of an evaluation, complementing data-driven decision-making with qualitative findings. Additional evaluation weight should be given to researchers who publish in various foreign journals rather than in the same local journal (Grančay et al., 2017).

Given the relatively high teaching workload in post-Soviet academia, a faculty portfolio may need to consider teaching talent as well as research. A survey in Kazakhstan revealed concerns about the unfair dismissal or demotion of some faculty who could not reach publication targets but were otherwise accomplished educators (Kuzhabekova & Ruby, 2018a).

Principle 8: Avoid misplaced concreteness and false precision

When the difference in quantitative indicators between evaluated researchers or institutions is insignificant, additional evaluation criteria should be included in the comparisons that decide funding and promotion outcomes. Technical indicators can be conceptually ambiguous and uncertain, and they rest on strong assumptions. Distinctions based on tiny differences and false precision should be avoided. The best evaluation practices use multiple indicators to be more robust, and the data used in an analysis should ensure the best possible coverage. A single indicator used on its own can be unreliable. For instance, junior researchers tend to have a lower h-index, which also varies by field, so additional judgment becomes necessary.
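To make the h-index concrete: it is the largest number h such that h of a researcher's papers have each been cited at least h times. The short sketch below, using invented citation counts, shows why the index caps junior researchers with few papers and why very different citation profiles can share the same value.

```python
def h_index(citations: list[int]) -> int:
    """Largest h such that h papers each have at least h citations."""
    h = 0
    for rank, cites in enumerate(sorted(citations, reverse=True), start=1):
        if cites < rank:
            break
        h = rank
    return h


# Invented citation profiles for illustration.
junior = [10, 8]                     # two well-cited papers
senior = [10, 8, 5, 4, 3, 1, 1, 0]   # longer record, modest citations
star_paper = [100, 100, 2]           # two very highly cited papers
modest = [2, 2]

for label, record in [("junior", junior), ("senior", senior),
                      ("star_paper", star_paper), ("modest", modest)]:
    print(label, h_index(record))
```

The `star_paper` and `modest` profiles receive the same h-index despite very different citation records, one reason the principle warns against reading too much into a single number.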

Instead of devising unique or untested evaluation techniques, Central Asian research evaluation should adopt proven metrics already in wide use among the foreign leaders in science. Because of data availability and other limitations, statistical analysis can still be conducted at a basic level, without advanced methods such as tests of difference and regression. Frequency analysis and selected summary statistics on citations can deepen understanding of research performance when enough data are available. For instance, histograms and summary statistics for citations of papers published in Central Asian countries reveal a highly skewed distribution with medians close to zero, meaning that many papers are never cited (Clarivate, 2021; Ovezmyradov & Kepbanov, 2021).
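The summary statistics mentioned above are straightforward to compute. The following sketch uses invented citation counts for a single country, not the Clarivate data, to show how a skewed distribution produces a mean well above the median and a large share of uncited papers; the bin edges are arbitrary choices for a text histogram.

```python
import statistics
from collections import Counter

# Invented citation counts for one country's papers (illustration only).
cites = [0, 0, 0, 0, 0, 1, 1, 2, 3, 5, 8, 40]

mean = statistics.mean(cites)
median = statistics.median(cites)
uncited_share = sum(1 for c in cites if c == 0) / len(cites)


def bin_label(c: int) -> str:
    """Coarse citation bins for a text histogram."""
    if c == 0:
        return "0"
    if c < 5:
        return "1-4"
    if c < 10:
        return "5-9"
    return "10+"


hist = Counter(bin_label(c) for c in cites)
for label in ("0", "1-4", "5-9", "10+"):
    print(f"{label:>4} | {'#' * hist[label]}")
print(f"mean={mean:.1f}, median={median}, uncited={uncited_share:.0%}")
```

A single highly cited paper pulls the mean far above the median, so the median and the uncited share describe typical output better than the average does.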

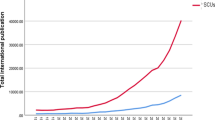

A further example illustrates the pitfalls of more advanced analysis. Figure 13 shows absolute values of the metrics (the previous figures showed relative values) together with trendlines. Such visualizations, combined with statistical techniques, help identify significant long-term changes. Suppose the ministries of education and science in Central Asian countries tasked analysts with assessing whether national science had made any progress as a result of major reforms between 1996 and 2018. Low coefficients of determination (R-squared below 0.6) indicate a poor model fit for all countries, except for the more or less significant upward trend in the number of documents in Kyrgyzstan, Tajikistan, and Kazakhstan. Without additional analysis, one could conclude that no convincing changes took place in citations across the region. Even an absence of significant changes could by itself be an important result with implications for government bodies. However, such a statement would be justified only if the trend were clearly linear. For Uzbekistan, the nonlinear pattern in citations is evident from the visualization, so a linear regression over the entire period is unsuitable. Figure 14 shows a more appropriate use of piecewise regression: two segments with 2008 as the breakpoint clearly distinguish the two periods, compared with other techniques such as nonlinear models or binary variables. This time the model fits well (R-squared > 0.7), leading to a worrying conclusion for the relevant ministry: national research impact declined significantly in the 2010s after growing before. Eshchanov (2021) similarly identified the breakpoint and associated it with the open access model and predatory publishing. The finding would warrant further investigation. The previous section discussed possible explanations for the country's low indicators. A similar analysis could be applied at least to the Tajikistan data.

The research productivity (left axes, Doc: number of publications) and impact (right axes, Cite: number of citations) of Central Asian states between 1996 and 2018; regression lines present trends over the selected period. Data source: SCImago (2021)

Regression analysis with Uzbekistan's output and citations as dependent variables and time as the independent variable. Data source: SCImago (2021)

When no conclusive statement can be made about a significant difference between the evaluated subjects, other quantitative and qualitative criteria should guide final decisions. Funding, promotion, and other important decisions should never be based merely on negligible numerical differences.
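One way to check whether a gap between two evaluated units is more than noise is a permutation test on, for example, citations per paper. The sketch below rests on assumptions of our own (the function name, the use of a difference in means, and the number of permutations are illustrative choices, not part of the Leiden Manifesto). A large p-value would indicate that the observed gap is compatible with random variation and should not by itself drive funding or promotion decisions.

```python
import random
from statistics import fmean


def permutation_p_value(a, b, n_permutations=5000, seed=0):
    """Two-sided permutation test for a difference in group means.

    Returns the share of random relabelings whose absolute mean difference is
    at least as large as the observed one, with a +1 correction so that the
    estimate is never exactly zero.
    """
    rng = random.Random(seed)  # fixed seed for reproducible results
    observed = abs(fmean(a) - fmean(b))
    pooled = list(a) + list(b)
    n_a = len(a)
    extreme = 0
    for _ in range(n_permutations):
        rng.shuffle(pooled)
        diff = abs(fmean(pooled[:n_a]) - fmean(pooled[n_a:]))
        # Small tolerance guards against floating-point rounding of equal differences.
        if diff >= observed - 1e-12:
            extreme += 1
    return (extreme + 1) / (n_permutations + 1)


# Hypothetical citations per paper for two departments (illustrative only).
dept_a = [0, 1, 1, 2, 3, 3, 4, 8, 12, 20]
dept_b = [0, 1, 2, 2, 3, 4, 4, 9, 11, 22]
print(f"mean A = {fmean(dept_a):.1f}, mean B = {fmean(dept_b):.1f}, "
      f"p = {permutation_p_value(dept_a, dept_b):.2f}")
```

Here the two hypothetical departments differ by only 0.4 citations per paper on average, while individual papers range from 0 to over 20 citations; the test makes explicit how easily such a gap can arise from chance alone.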

Principle 9: recognize the systemic effects of assessment and indicators

Wider use of research metrics will inevitably create extra incentives for undesirable publication practices. Hence, a set of indicators and measures has to be thoroughly planned to maintain a balance between quantity and quality of output. Indicators establish incentives that change the system, so a suite of indicators is preferable. For example, funding university research using a formula based on the number of papers could increase output but decrease quality.
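The difference between a count-only funding formula and a suite of indicators can be illustrated with two hypothetical units. The indicators, the scaling of each indicator by its maximum, and the equal weights below are illustrative assumptions rather than a recommended formula. The point is only that a unit publishing many poorly cited papers in non-indexed venues gains most under a count-only rule and loses that advantage once quality-related indicators enter the mix.

```python
from dataclasses import dataclass


@dataclass
class Unit:
    name: str
    papers: int             # all publications in the evaluation period
    indexed_share: float    # share of papers in reputable indexed journals, 0..1
    cites_per_paper: float  # mean citations per paper


def count_only_shares(units):
    """Funding shares proportional to the raw number of papers."""
    total = sum(u.papers for u in units)
    return {u.name: u.papers / total for u in units}


def suite_shares(units, weights=(1 / 3, 1 / 3, 1 / 3)):
    """Funding shares from a weighted suite of indicators, each scaled by its maximum."""
    max_p = max(u.papers for u in units)
    max_s = max(u.indexed_share for u in units)
    max_c = max(u.cites_per_paper for u in units)
    w_p, w_s, w_c = weights
    scores = {
        u.name: w_p * u.papers / max_p
        + w_s * u.indexed_share / max_s
        + w_c * u.cites_per_paper / max_c
        for u in units
    }
    total = sum(scores.values())
    return {name: score / total for name, score in scores.items()}


# Hypothetical units: A publishes a lot in poorly cited, mostly non-indexed venues.
units = [Unit("A", 400, 0.3, 0.5), Unit("B", 150, 0.9, 4.0)]
by_count = count_only_shares(units)
by_suite = suite_shares(units)
print({k: round(v, 2) for k, v in by_count.items()})  # A gets the larger share
print({k: round(v, 2) for k, v in by_suite.items()})  # B gets the larger share
```

With these hypothetical numbers, the count-only rule gives unit A roughly 73% of the funds, while the equally weighted suite gives unit B roughly 62%.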

As research metrics are increasingly introduced in Central Asia, certain kinds of academic fraud are likely to spread. No other country illustrates the dangers of unrealistic publication targets better than Uzbekistan: the high number of articles required for degree awards and attestation led local authors to become the global leader in predatory publishing (Eshchanov et al., 2021). Success stories of universities in European transition countries, such as Estonia and Hungary, demonstrate that decent indicators of research output can be achieved with a more flexible approach and less ambitious targets (Grančay et al., 2017).

Although research output in reputable journals also increased, more than half of survey respondents in Kazakhstan reported using predatory journals to avoid the adverse effect of the new publication requirements on their salaries (Kuzhabekova & Ruby, 2018a, 2018b). The country updated regulations in the academic sector several times between 2011 and 2018 to improve the situation (Смагулов et al., 2018). These efforts appear to have had a positive effect: unlike Uzbekistan, Kazakhstan reduced its predatory publishing rates after 2016. Local experts suggested the following measures against low-quality publication practices: awarding degrees only after a certain period has passed since publication (for instance, one year) so that discontinued journals can be excluded; involving experts in the use of academic databases; internationalizing research aimed at Scopus/WoS publication; inviting foreign scholars; providing full-text access to scientific sources; and standardizing national publications in accordance with reputable global databases (Смагулов et al., 2018).

Furthermore, the experience of European transition countries that also saw increases in predatory publishing (though smaller than in Central Asia) in response to "publish or perish" policies can be valuable here. It suggests that the following measures might help: allowing, but limiting, the number of publications in local journals eligible for promotion; setting less controversial targets; and creating a blacklist of predatory journals (Grančay et al., 2017). The Chinese policy of first reprimanding authors who published in predatory journals and, if the reprimand is ignored, cutting their grants or even dismissing them can also be considered (Клеменкова, 2018).
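Several of the measures above (a waiting period after publication so that discontinued journals can be excluded, a blacklist of predatory journals, and a cap on local-journal publications counted toward promotion) can be combined into a single screening rule. The sketch below is hypothetical: the data structure, the one-year waiting period, and the cap of two local publications are placeholders for whatever values a ministry or attestation commission would set. In practice, the blacklist and the list of still-indexed journals would come from curated external sources.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class Publication:
    journal: str
    published: date
    local: bool  # True if published in a domestic journal outside WoS/Scopus


def eligible_publications(pubs, today, blacklist, still_indexed,
                          max_local=2, waiting_days=365):
    """Return the publications that would count toward a degree or promotion.

    Hypothetical rules:
    - journals on the blacklist never count;
    - a publication counts only after `waiting_days` have passed, and a
      non-local one only if its journal is still indexed at evaluation time,
      so journals discontinued in the meantime are excluded;
    - at most `max_local` local-journal publications count.
    """
    accepted, local_used = [], 0
    for pub in sorted(pubs, key=lambda item: item.published):
        if pub.journal in blacklist:
            continue
        if (today - pub.published).days < waiting_days:
            continue
        if pub.local:
            if local_used >= max_local:
                continue
            local_used += 1
        elif pub.journal not in still_indexed:
            continue
        accepted.append(pub)
    return accepted


# Illustrative inputs; journal names are placeholders.
pubs = [
    Publication("Local A", date(2019, 1, 1), local=True),
    Publication("Local B", date(2019, 2, 1), local=True),
    Publication("Local C", date(2019, 3, 1), local=True),        # over the local cap
    Publication("Predatory J", date(2020, 1, 1), local=False),  # blacklisted
    Publication("Dropped J", date(2020, 3, 1), local=False),    # no longer indexed
    Publication("Indexed J", date(2020, 6, 1), local=False),
    Publication("Indexed J", date(2021, 12, 1), local=False),   # too recent
]
counted = eligible_publications(
    pubs, today=date(2022, 6, 1),
    blacklist={"Predatory J"}, still_indexed={"Indexed J"},
)
print([(p.journal, p.published.isoformat()) for p in counted])
```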

Expanding the presence of local journals in WoS or Scopus is one strategy to boost metrics, but it is a daunting task that could take years. Alternative databases can be considered for certain fields of science only if they have already gained an international reputation, for instance, EBSCO in management, ERIH in the social sciences, and РИНЦ (the Russian Science Citation Index) for publications in Russian.

As with the other principles discussed, combining carefully chosen indicators in research evaluation would help minimize the unwanted effects of emphasizing metrics. Practical training and appropriate sanctions against poor practices would also be helpful.

Principle 10: scrutinize indicators regularly and update them

Administrators cannot improve what they cannot measure continuously. Research missions and assessment goals can shift, making some metrics inadequate. Indicators have to be reviewed and updated as necessary. Sometimes, simplistic formulas have to be replaced with more complex ones. Information on when and how indicators were updated should be publicly available.
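Keeping every version of an indicator, together with its effective date and a published note on the change, makes it possible to state which formula applied to any past evaluation. The registry below is a minimal hypothetical sketch; the class names, the example formulas, and the record fields are invented for illustration.

```python
from dataclasses import dataclass
from datetime import date
from typing import Callable


@dataclass(frozen=True)
class IndicatorVersion:
    version: str
    effective_from: date
    formula: Callable[[dict], float]
    change_note: str  # publicly stated reason for the update


class IndicatorRegistry:
    """Keeps every version of an indicator so past evaluations stay reproducible."""

    def __init__(self):
        self._versions = []

    def publish(self, version):
        self._versions.append(version)
        self._versions.sort(key=lambda v: v.effective_from)

    def in_force(self, on):
        """Return the latest version whose effective date is on or before `on`."""
        candidates = [v for v in self._versions if v.effective_from <= on]
        if not candidates:
            raise LookupError(f"no indicator version in force on {on}")
        return candidates[-1]

    def changelog(self):
        """Public record of when and how the indicator changed."""
        return [f"{v.effective_from.isoformat()} {v.version}: {v.change_note}"
                for v in self._versions]


# Hypothetical example: a simple count replaced by a formula weighting indexed output.
registry = IndicatorRegistry()
registry.publish(IndicatorVersion(
    "v1", date(2015, 1, 1), lambda r: r["papers"],
    "Count of all papers."))
registry.publish(IndicatorVersion(
    "v2", date(2019, 1, 1), lambda r: r["indexed_papers"] + 0.1 * r["citations"],
    "Count only indexed papers and add a citation term."))

record = {"papers": 40, "indexed_papers": 12, "citations": 50}
print(registry.in_force(date(2017, 6, 1)).formula(record))  # v1 applies
print(registry.in_force(date(2020, 6, 1)).formula(record))  # v2 applies
print("\n".join(registry.changelog()))
```

Because old versions are never overwritten, an evaluation from 2017 can still be recomputed with the formula that was in force at the time, and the changelog doubles as the public record of updates.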

As Central Asian academic institutions review progress and update research metrics, leading foreign partners can become the most important sources to rely on. Regular, objective assessment of researchers and their institutions in Central Asia is crucial. This study does not advocate developing purely local rankings without international partners. The experience of Uzbekistan shows why: domestically produced research platforms may underperform (Eshchanov et al., 2021). The country's Supreme Attestation Commission approved a list of journals in which publications qualify candidates for advanced degrees, and the list included predatory journals widely used by local authors. Furthermore, local web platforms for science monitoring and evaluation, such as www.salohiyat.uz and www.fan-portal.mininnovation.uz, showed poor usability and a lack of integration with global databases.

Conclusions

Research metrics have been developed and increasingly used in the science sectors of many countries and regions, but their use has received relatively little attention in certain regions such as Central Asia. This region presents intriguing cases for the theory and practice of scientometrics, offering the valuable experience of transition countries introducing metrics. This study explores the region's scientific development and how quantitative evaluation might become highly relevant once adapted to local specifics.

Central Asia showed lower-than-average research performance compared to the neighbouring countries of Eastern Europe and Asia. Science in the region suffered from predatory publishing, academic fraud, low investment, and poor English proficiency. The country-specific results relative to the region's peers can be summarized as follows.

- Kazakhstan has been the most influential economy, quickly achieving higher and fast-growing research productivity and impact in the recent decade thanks to adequate investment and modern indicators.
- Kyrgyzstan has been the most efficient economy, using limited resources to achieve decent and growing research performance, with its relatively liberal socio-political environment likely a contributing factor.
- Uzbekistan showed a worrying decline in research indicators during the recent decade despite the size of its available resources, possibly due to ill-applied metrics with excessive numeric targets.
- Tajikistan remained an economy with low and stagnating indicators, likely due to the slow adoption of research metrics in an academic sector facing institutional and financing constraints.
- Turkmenistan presents a case of largely neglecting international research metrics under institutional constraints such as an illiberal regime, with lower performance than all other regional economies despite considerable financial resources.

This research discusses best practices for using research metrics in the Central Asian context. The ten principles of the Leiden Manifesto can guide administrators in science sectors towards higher performance without risking unintended effects and disruptions. These principles should be considered in the development of science and its interactions with society. Policymakers in Central Asia should worry less about the possible shortcomings of research metrics than about the danger of continued scientific decline caused by excessive reliance on peer review and other subjective evaluations in a region that lacks progress in the transparency and funding of science. Kazakhstan has shown exemplary improvement in gradually overcoming the issues accompanying the expanded use of quantitative indicators. The question is not whether research metrics should be used, but how to use them based on the best international practice outlined by the Leiden Manifesto.

Admittedly, the presented analysis, with its limited examples, could not cover many aspects of science in the studied region due to information and space constraints. These remain highly desirable to investigate. Each of the Leiden Manifesto principles could become an interesting direction for future research on applications of scientometrics in Central Asia. The principles could allow more expansive interpretations in some cases. For instance, interpretations of principle 3 may differ yet be seen as complementary, and interpretations of principles 3 and 6 can overlap (Wildgaard et al., 2018). Open discussion of indicators must guide policymaking in improving research and development in the region. As a relevant case, the visible decline in research performance in Uzbekistan after 2008 could be studied in the context of the stricter socio-political measures taken by the authoritarian government following the dramatic events of the preceding years, starting in 2004. The propositions suggested in this research are the results of a preliminary analysis based on limited quantitative and qualitative findings. They should be refined and verified as new studies on Central Asian research metrics emerge in each country of the region.

References

Abalkina, A. (2021). How hijacked journals keep fooling one of the world's leading databases. Retraction Watch. https://retractionwatch.com/2021/05/26/how-hijacked-journals-keep-fooling-one-of-the-worlds-leading-databases/. Accessed 20 Mar 2021.

Abalkina, A., & Alexander, L. (2020). The real costs of plagiarism: Russian governors, plagiarized PhD theses, and infrastructure in Russian regions. Scientometrics, 125(3), 2793–2820.

Agbo, S. (2013). New perspectives on higher education reform in post-Soviet Kazakhstan: the dilemma of English language competence. library.iated.org

Altmetric (2018). The 2018 Altmetric Top 100. https://www.altmetric.com/top100/2018/. Accessed 20 Mar 2021.

AsiaNews.it (2011). Tajikistan: Corruption reaches crisis level in Tajik universities.

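Абалкина, А. А., Касьян, А. С., & Мелихова, Л. Г. (2020). Иностранные хищные журналы в Scopus и WoS: переводной плагиат и российские недобросовестные авторы [Foreign predatory journals in Scopus and WoS: Translation plagiarism and Russian unscrupulous authors]. Комиссия РАН по противодействию фальсификации научных исследований [Commission of the Russian Academy of Sciences for Countering the Falsification of Scientific Research].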

Абдуваитова, А. (2018). Преподаватели, доценты и депутаты. Имена тех, кто еще попался на плагиате диссертаций [Lecturers, associate professors, and MPs: The names of others caught plagiarizing dissertations]. Kaktus media. https://kaktus.media/doc/376960_prepodavateli_docenty_i_depytaty._imena_teh_kto_eshe_popalsia_na_plagiate_dissertaciy.html. Accessed 26 July 2022.

Brunner, J. J., & Tillett, A. (2007). Higher education in Central Asia: The challenges of modernization – an overview. World Bank.

Bussen, T. (2017). The Kyrgyz Republic and bribes in the classroom: increase wages and oversight, decrease corruption. ETICO.

Cantu-Ortiz, F. J. (Ed.). (2017). Research analytics: Boosting university productivity and competitiveness through scientometrics. Boca Raton: CRC Press.

Centre1.com (2018). Президент Таджикистана осудил чиновников за плагиат [Tajikistan's president condemned officials for plagiarism]. https://centre1.com/tajikistan/prezident-tadzhikistana-osudil-chinovnikov-za-plagiat

Clarivate (2021). Web of Science. Web of Science Group.

Cronin, B., & Sugimoto, C. R. (Eds.). (2014). Beyond bibliometrics: Harnessing multidimensional indicators of scholarly impact. Cambridge: MIT Press.

Dissernet.org (2021). Туркменского кардиолога лишили степени кандидата наук за плагиат [Turkmen cardiologist stripped of Candidate of Sciences degree for plagiarism].

EF EPI (2020). English Proficiency Index. EF Education First.

Eshchanov, B., Abduraimov, K., Ibragimova, M., & Eshchanov, R. (2021). Efficiency of “publish or perish” policy—Some considerations based on the Uzbekistan experience. Publications, 9(3), 33.

ETICO (2004). Central Asia: buying ignorance – Corruption in education widespread, corrosive. UNESCO.

ETS (2021). https://www.ets.org/toefl. Accessed 20 Mar 2021.

GII (2020). Global Innovation Index. https://www.wipo.int/global_innovation_index/en/. Accessed 20 Mar 2021.

Graham, L. R. (1993). Science in Russia and the Soviet Union: A short history. Cambridge: Cambridge University Press.

Graham, L. R., & Dezhina, I. (2008). Science in the new Russia: Crisis, aid, reform. Indiana University Press.

Grančay, M., Vveinhardt, J., & Šumilo, Ē. (2017). Publish or perish: How Central and Eastern European economists have dealt with the ever-increasing academic publishing requirements 2000–2015. Scientometrics, 111(3), 1813–1837.

Heyneman, S. P. (2010). A comment on the changes in higher education in the former Soviet Union. European Education, 42(1), 76–87.

Hicks, D., Wouters, P., Waltman, L., De Rijcke, S., & Rafols, I. (2015). Bibliometrics: The Leiden Manifesto for research metrics. Nature News, 520(7548), 429.

Holley, K., Kuzhabekova, A., Osbaldiston, N., Cannizzo, F., Mauri, C., Simmonds, S., & van der Weijden, I. (2018). Global perspectives on the postdoctoral scholar experience. In A. Jaeger & A. J. Dinin (Eds.), The Postdoc Landscape (pp. 203–226). Cambridge: Academic Press.

Horák, S. (2020). Education in Turkmenistan Under the Second President: Genuine Reforms or Make Believe? In D. Egéa (Ed.), Education in Central Asia: A Kaleidoscope of Challenges and Opportunities (pp. 71–91). Cham: Springer.