Abstract

A major problem in the scientific literature is the citation of retracted research. Until now, no long-term follow-up of the citations of such articles has been published. In the present study, we determined the development of citations of the retracted articles of anaesthesiologist and pain researcher Scott S. Reuben over a period of 10 years and compared them with matched controls. We screened four databases to find retracted publications by Scott S. Reuben and reviewed the full text of citing publications for any indication of retraction status. To obtain a case-controlled analysis, the citations of each of Reuben’s retracted articles were compared with those of the preceding and subsequent neighbouring articles in the same journal. There were 420 citations between 2009 and 2019, of which only 40% indicated that the cited publication had been retracted. Over the 10-year period, citations of Reuben’s retracted articles that do not report the retraction show an increasing linear trend (R² = 0.3647). Reuben’s retracted articles were cited 92% more often than the neighbouring non-retracted articles. This study highlights a major scientific problem: invented or falsified data are still being cited more than a decade later, distorting the evidence base and scientometric parameters.

Introduction

Scientific research is the foundation of technical, economic, socio-political, and medical decisions; accuracy, high quality, and integrity are therefore essential requirements (Kretser et al., 2019). However, the scientific community is confronted with the fact that not all researchers meet these ideals. Scientific misconduct is a major problem (Gross, 2016; Hesselmann et al., 2017). Such unethical practices may be motivated by a variety of factors, including financial gain, scientific recognition, or pressure to publish (George, 2016).

Ethical misconduct can be attributed to adverse socio-organizational factors (Parlangeli et al., 2020). In some countries and scientific institutions, the pressure to publish is also linked to career progression, increasing the risk that manipulated or falsified work is produced (Aspura et al., 2018; Davies, 2019). The institutional use of evaluation metrics and of incentives such as pay-per-paper schemes has been linked to data fabrication and fraudulent publications (Ma, 2019).

If a violation of these basic scientific and ethical principles is discovered, the publication must be retracted and can no longer be regarded as valid evidence. The number of retracted publications has risen substantially in recent years across all scientific disciplines (Steen et al., 2013). Scientific misconduct is the cause of the majority of retractions (Campos-Varela & Ruano-Raviña, 2019; Craig et al., 2020). Nevertheless, only a tiny proportion of all publications are retracted (Grieneisen & Zhang, 2012; Aspura et al., 2018).

One challenging issue is that retracted publications continue to be cited, even 5 years after retraction, without any indication that they have been retracted (Bornemann-Cimenti et al., 2016). A large-scale analysis of over 12,000 retractions revealed that more than half of the retracted papers are cited at least once (Sharma, 2021). This allows erroneous or invented conclusions to spread through the scientific ecosystem. However, the extent to which retracted research persists in the long term remains unclear.

In 2009, it emerged that Scott S. Reuben, a formerly highly reputed anaesthesiologist and pain researcher, had fabricated data on a large scale (Shafer, 2009a, b, c). He subsequently pleaded guilty to health care fraud and was sentenced to six months’ imprisonment and more than $400,000 in fines and restitution (U.S. Attorney’s Office, 2010). Consequently, 25 of his publications were retracted, most of them from highly esteemed journals in his research field (Shafer, 2009a, b, c). At the time, this was the highest number of retractions due to scientific misconduct by a single researcher. Although others have since exceeded the extent of his misconduct (e.g., Fujii (Dyer, 2012) and Boldt (Dyer, 2011)), a detailed examination of Reuben’s case can help us understand how the scientific community deals with retracted work.

In this article, we use his case to provide the first long-term, case-controlled follow-up of citations of retracted articles. We focused on three research questions: (1) What is the long-term trend of citations of retracted work, and do these citations indicate that the references have been retracted? (2) How does retracted work perform compared with a matched control? (3) Is there a difference in the citation of retracted articles between high-impact and low-impact journals?

Method

We examined all scientific publications citing publications by Scott S. Reuben over a 10-year period, from 2009 to 2019. For this purpose, we consulted the following sources: Web of Science (Clarivate™ Analytics, Philadelphia, Pennsylvania), PubMed (U.S. National Library of Medicine, National Institutes of Health), and Google Scholar (Google LLC).

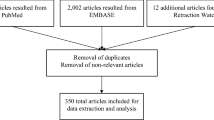

Further characteristics, such as journal subject, average journal impact factor (JIF) percentile (Yu & Yu, 2016), and journal impact factor, were extracted from Journal Citation Reports (Clarivate™ Analytics, Philadelphia, Pennsylvania). All papers citing Scott S. Reuben were content-analysed for an indication of a retraction. Four procedures were used to determine whether the cited papers by Scott S. Reuben had an “official” retraction. We first verified whether the cited paper was listed as retracted by the journal in which it was published. In addition, we searched for a retraction of the referenced paper in Web of Science (Clarivate™ Analytics, Philadelphia, Pennsylvania), PubMed (U.S. National Library of Medicine, National Institutes of Health), and Google Scholar (Google LLC). If Scott S. Reuben’s paper was indicated as retracted in at least one of these sources, it was assigned the status “retracted” (Addendum 1). Two investigators (IZS, HBC) carried this out independently. All non-English publications identified were in French; these were also analysed and included in our study. Figure 1 illustrates a flowchart showing all of the steps that were taken.
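The status rule above (a cited paper counts as “retracted” if at least one of the four sources flags it) and the resulting share of citations that omit the retraction can be sketched in Python. This is a minimal illustration under stated assumptions, not the authors’ workflow: the class `CitedPaper`, the function `non_indicated_share`, and all records are hypothetical, and the independent two-rater assessment is not modelled.

```python
from dataclasses import dataclass


@dataclass
class CitedPaper:
    """Hypothetical record of one cited paper and the retraction flag found in each source."""
    key: str
    journal: bool  # listed as retracted on the publishing journal's site
    web_of_science: bool
    pubmed: bool
    google_scholar: bool

    def is_retracted(self) -> bool:
        # Rule from the Method: "retracted" if at least one source indicates it.
        return any((self.journal, self.web_of_science, self.pubmed, self.google_scholar))


def non_indicated_share(citations, papers):
    """Fraction of citations of retracted papers whose citing article does not mention the retraction.

    `citations` is a list of (cited_key, indicated) pairs, one per citing publication.
    """
    retracted_keys = {p.key for p in papers if p.is_retracted()}
    flags = [indicated for key, indicated in citations if key in retracted_keys]
    if not flags:
        return 0.0
    return sum(not indicated for indicated in flags) / len(flags)


# Toy data (hypothetical): paper "A" is flagged by Web of Science only; "B" by no source.
papers = [
    CitedPaper("A", journal=False, web_of_science=True, pubmed=False, google_scholar=False),
    CitedPaper("B", journal=False, web_of_science=False, pubmed=False, google_scholar=False),
]
citations = [("A", False), ("A", True), ("A", False), ("B", False)]
share = non_indicated_share(citations, papers)
```

Here the citation of “B” is ignored because no source flags it, and two of the three citations of “A” omit the retraction. With real data, `citations` would hold one entry per citing publication, with `indicated` set by the full-text content analysis.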

To allow a case-controlled analysis, we followed the methodology used by Mott et al. (2019). Each of Reuben’s retracted articles was compared with its preceding and subsequent neighbouring articles in the journal in which it was published. Only articles of the same category (e.g., original articles, reviews) were selected as neighbouring articles. If the preceding or subsequent article belonged to a different category (e.g., an editorial or case report instead of an original article), the next article of the same category as Reuben’s publication was used (Mott et al., 2019). To control for differences in study design (e.g., randomized controlled trial, retrospective analysis), the closest article with a comparable design was chosen. The mean citation count of the two neighbouring articles was used as the matched control. Comparing the citations of a publication with those of its neighbours has been proposed as a method to measure the success of research output (Franceschini et al., 2012).
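The matched-control comparison reduces to a short calculation. The sketch below uses hypothetical function names and made-up citation counts. It also shows that a figure such as “percent more citations than the control” depends on how it is aggregated; the text does not specify whether the reported value is a mean of per-article ratios or a ratio of summed citations, so both are computed here as assumptions.

```python
from statistics import mean


def matched_control(prev_citations: int, next_citations: int) -> float:
    # Matched control = mean citation count of the preceding and subsequent neighbours.
    return (prev_citations + next_citations) / 2


def excess_citations(case: int, prev_citations: int, next_citations: int) -> float:
    """Percentage by which a retracted article out-cites its matched control."""
    control = matched_control(prev_citations, next_citations)
    if control == 0:
        raise ValueError("matched control has zero citations")
    return 100.0 * (case - control) / control


# Hypothetical counts: (retracted article, preceding neighbour, subsequent neighbour).
triplets = [(30, 10, 20), (12, 8, 8), (5, 6, 4)]
per_article = [excess_citations(*t) for t in triplets]

# Aggregation option 1: mean of the per-article percentages.
mean_excess = mean(per_article)

# Aggregation option 2: ratio of summed citations (cases vs. matched controls).
total_case = sum(case for case, _, _ in triplets)
total_control = sum(matched_control(p, n) for _, p, n in triplets)
overall = 100.0 * (total_case - total_control) / total_control
```

With these toy numbers the per-article excesses are 100%, 50%, and 0% (mean 50%), whereas the ratio of sums gives about 68%, because heavily cited articles weigh more in the second definition.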

To gain insight into the role of journal ranking, we calculated the proportions of citations in high- vs. low-impact journals, using the 50th JIF percentile as the cut-off. A chi-square test was used to assess the frequency distribution of cited retracted and non-retracted papers across the quartile classification of the citing journals. Correlation analyses were performed to identify possible contributors to a “retraction” indication.
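A minimal sketch of the high/low impact split and a Pearson chi-square test of independence follows, written with the Python standard library only so that it runs without SPSS or SciPy. The helper names and toy records are hypothetical. The p-value uses the standard recurrence for the chi-square survival function with integer degrees of freedom. This illustrates the test named above; it does not reproduce the reported statistics.

```python
import math


def chi2_sf(x: float, df: int) -> float:
    """Upper-tail probability of the chi-square distribution for integer df, via
    Q(x; k + 2) = Q(x; k) + (x/2)^(k/2) * exp(-x/2) / Gamma(k/2 + 1)."""
    if x <= 0:
        return 1.0
    if df % 2 == 0:
        q, k = math.exp(-x / 2), 2           # Q(x; 2) = exp(-x/2)
    else:
        q, k = math.erfc(math.sqrt(x / 2)), 1  # Q(x; 1) = erfc(sqrt(x/2))
    while k < df:
        q += (x / 2) ** (k / 2) * math.exp(-x / 2) / math.gamma(k / 2 + 1)
        k += 2
    return q


def chi_square_independence(table):
    """Pearson chi-square test of independence for an r x c table of counts."""
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    n = sum(row_totals)
    stat = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            expected = row_totals[i] * col_totals[j] / n
            stat += (observed - expected) ** 2 / expected
    df = (len(table) - 1) * (len(table[0]) - 1)
    return stat, df, chi2_sf(stat, df)


def is_high_impact(jif_percentile: float) -> bool:
    # High vs. low impact, split at the 50th JIF percentile.
    return jif_percentile > 50


def high_low_table(records):
    """2 x 2 table: rows = high/low impact, columns = retraction indicated / not indicated."""
    table = [[0, 0], [0, 0]]
    for jif_percentile, indicated in records:
        row = 0 if is_high_impact(jif_percentile) else 1
        col = 0 if indicated else 1
        table[row][col] += 1
    return table


# Hypothetical citing-journal records: (JIF percentile, retraction indicated?).
records = [(82.0, True), (75.5, False), (40.0, False), (12.3, False), (66.1, True), (30.2, True)]
stat, df, p_value = chi_square_independence(high_low_table(records))
```

The toy table is far too small for the chi-square approximation to be reliable (expected counts below 5); it only demonstrates the mechanics. For the quartile analysis, a table with two rows and one column per quartile gives (2 − 1) × (4 − 1) = 3 degrees of freedom.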

The results were analysed and evaluated descriptively using Microsoft Excel (Microsoft Office 365; Microsoft Corp., Redmond, Washington) and SPSS (IBM, Armonk, New York).

Results

Scott S. Reuben’s articles were cited a total of 420 times over the 10-year period from 2009 to 2019. Of these, 360 citations referred to retracted articles. In 60% of the publications that cited Reuben’s retracted papers, no indication was made that the cited paper had been retracted. A 10-year comparison since 2009 shows a declining trend in total Reuben citations, but a significant increasing trend in citations of retracted articles that are not identified as retracted. The corresponding coefficient of determination is R² = 0.3647 (Fig. 2).

Fig. 2 Frequency of citations of retracted and non-retracted publications by Scott S. Reuben, and whether the citation indicates the retraction, over a period of ten years. The yearly percentage of citations of retracted publications not indicated as retracted, together with a regression trend line, highlights the increasing trend of papers that have been retracted but are not identified as such
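The trend line and coefficient of determination reported for Fig. 2 come from a linear regression of a yearly series on calendar year. A minimal ordinary least-squares sketch follows. The function name and the yearly values are hypothetical (they are not the study’s data), and a significance test on the slope is not included.

```python
def linear_trend(years, values):
    """Fit values = intercept + slope * year by ordinary least squares; return (slope, intercept, R^2)."""
    n = len(years)
    if n != len(values) or n < 2:
        raise ValueError("need at least two paired observations")
    mean_x = sum(years) / n
    mean_y = sum(values) / n
    sxx = sum((x - mean_x) ** 2 for x in years)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(years, values))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    # R^2 = 1 - (residual sum of squares) / (total sum of squares)
    ss_res = sum((y - (intercept + slope * x)) ** 2 for x, y in zip(years, values))
    ss_tot = sum((y - mean_y) ** 2 for y in values)
    if ss_tot == 0:
        raise ValueError("values are constant; R^2 is undefined")
    return slope, intercept, 1 - ss_res / ss_tot


# Made-up yearly percentages of non-indicated citations (illustrative only).
years = list(range(2009, 2020))
percent_not_indicated = [40, 35, 50, 45, 55, 48, 60, 52, 58, 65, 62]
slope, intercept, r_squared = linear_trend(years, percent_not_indicated)
```

An R² of 0.3647 means that a straight line explains roughly 36% of the year-to-year variance in the series. The remaining variance is scatter around the trend.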

Over the 10-year period, ten of Reuben’s retracted articles were cited more often than their neighbouring non-retracted articles. On average, Reuben’s articles received 92% more citations than their matched controls.

To examine whether journal impact influences the occurrence of citations of retracted work without a retraction indication, citations in journals with a JIF percentile above 50 were compared with those in journals with a JIF percentile below 50. A significantly higher proportion of non-indicated citations was published in journals with lower impact (30.9% vs. 21.2%, p = 0.0271). In other words, there is a clear tendency for journals with a higher average JIF percentile to indicate that Reuben’s articles have been retracted. The same pattern is evident when using the quartile ranking of the journals in which Reuben’s papers were cited: journals in higher quartiles tend to indicate that Scott S. Reuben’s articles were retracted. A chi-square test reveals a highly significant frequency distribution, χ²(3, N = 420) = 61.174, p < 0.01 (Fig. 3). The following factors were associated with the indication of a retraction: the year of citation, the average journal impact factor, and the journal quartile ranking of the citing article (Table 1).

Discussion

Our results show that, despite an overall decline in citations, retracted articles are still cited even after 10 years without any indication of their retraction status. This has many negative effects: the conclusions of systematic reviews can be distorted, the arguments of other studies rest on invalid data, and methods are regarded as established although they have never been carried out in this form (Avenell et al., 2019). This list could be continued. In Reuben’s case, an analysis of review articles showed that the majority of qualitative reviews citing his retracted publications were distorted by his fraudulent data (Marret et al., 2009). It is therefore essential for the reliability of science to raise awareness of the problem of citing retracted papers.

When interpreting our results, the “life cycle” of scholarly publications, which depends on the research field, has to be taken into consideration. In medicine, publications receive approximately 35% of their citations within the first 5 years after publication, and the annual citation rate decreases thereafter (Galiani & Gálvez, 2019). In Reuben’s case, the mean age of the publications at the time of retraction was 5.4 ± 3.3 years. This means that for most of Reuben’s publications, a large share of citations occurred before the publication was retracted. Because these citations were made while the work was still regarded as valid science, we did not include them in our analysis.

Our data show that only a minority of publications citing Reuben’s articles indicated that the work had been retracted: only about a quarter of the citing literature included such a statement. Why authors cite retracted literature has not yet been fully investigated. Possible explanations include authors not recognizing that an article was retracted because they obtained it from an outdated source (e.g., paper files, online repositories), or authors considering the described methodology or conclusions useful despite the retraction (Da Silva & Dobránszki, 2017). We found large differences in the indication of a retraction across the four approaches we used to check retraction status, and we assume that other authors encounter similar inconsistencies (Addendum 1).

To improve retraction procedures and the quality assurance of scientific work, the Committee on Publication Ethics (COPE Council, 2009) developed retraction guidelines that set out clear criteria for retraction notices (Wager et al., 2009; Council, 2019). An analysis from 2019 showed that not all of Reuben’s retractions fulfil the COPE criteria, and they are therefore often not recognized as retracted by citing authors (McHugh & Yentis, 2019). Likewise, retraction notices frequently lack relevant information (Vuong, 2020). The use of printed versions or non-updated online repositories is another possible factor that leads authors to cite retracted publications (Da Silva & Bornemann-Cimenti, 2017; Davis, 2012).

Our data show that the ranking of the citing journal is associated with the occurrence of inappropriate citations: journals with higher JIF percentiles indicate the retracted status of a cited article significantly more often. This may mean that high-ranking journals have effective mechanisms, such as more robust peer review or more vigilant editors, that reduce the incidence of such citations. Alternatively, authors who publish in top journals may be less likely to use such problematic sources. Regardless, more than one third of the citations without a retraction indication were published in high-ranking journals, which shows that there is still room and need for improvement, even among top journals.

Our study reveals a further issue: many of Reuben’s retracted articles continue to be cited more frequently than the non-retracted control articles, which may lead to the continued wide dissemination of falsified data (Fig. 3, Fig. 4). This may be partly because Reuben’s falsified data were very appealing and his conclusions fit a variety of subsequent publications.

The entire scientific community, including readers, authors, editors, and reviewers, has an essential responsibility to take measures against the continued citation of retracted papers (Da Silva & Bornemann-Cimenti, 2017; Teixeira Da Silva et al., 2020). Only in this way can a potential loss of confidence in science as a whole be avoided.

We have previously proposed a possible solution to prevent the perpetuation of retracted research: incorporating an electronic “retraction check” into reference management software and into the submission and review process (Bornemann-Cimenti et al., 2016; Da Silva & Bornemann-Cimenti, 2017). Since the citation of retracted work presumably results far more often from carelessness and inadvertence than from conscious malpractice, such automated systems could be a suitable way to reduce its incidence. Several good approaches that implement this idea already exist. For example, the open-source software “Zotero” already provides a way to automatically match references against the “Retraction Watch” database (retractiondatabase.org), which is a very comprehensive source (Berenbaum, 2021; Stillman, 2019). Furthermore, “Scite” (scite.ai) analyses scientific publications on given topics, provides an overview of citations, and also integrates with “Zotero”. ReTracker is another open-source plugin for “Zotero”, designed to detect retracted scientific journal articles (Dinh et al., 2019).

The manuscript review software “retractcheck” compares the Digital Object Identifiers (DOIs) in a manuscript with the “OpenRetractions” database (http://openretractions.com/), a database of retractions drawn only from CrossRef and PubMed (Hartgerink et al., 2019). These projects raise hope that the citation of retracted papers will become less prevalent in the future.
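The DOI-matching idea behind such tools can be sketched as follows. This is not the implementation of “retractcheck”, nor any Zotero, Retraction Watch, or OpenRetractions API. It assumes a locally available set of retracted DOIs, and the function names, regular expression, and DOIs are hypothetical. DOI names are case-insensitive, so both sides are lower-cased before comparison.

```python
import re
from typing import Iterable, List

# Loose DOI pattern: "10.", a 4-9 digit registrant code, "/", then a non-blank suffix.
# This approximates, but does not fully implement, the DOI syntax.
DOI_PATTERN = re.compile(r'\b10\.\d{4,9}/[^\s"<>]+')

RESOLVER_PREFIXES = ("https://doi.org/", "http://doi.org/", "https://dx.doi.org/", "http://dx.doi.org/", "doi:")


def normalize_doi(doi: str) -> str:
    """Lower-case a DOI and drop a resolver prefix and trailing punctuation."""
    doi = doi.strip().lower()
    for prefix in RESOLVER_PREFIXES:
        if doi.startswith(prefix):
            doi = doi[len(prefix):]
            break
    # Sentence punctuation often sticks to DOIs in running text; this may over-strip
    # the rare DOI that legitimately ends in one of these characters.
    return doi.rstrip(".,;)")


def flag_retracted(manuscript_text: str, retracted_dois: Iterable[str]) -> List[str]:
    """Return the sorted DOIs cited in the text that occur in a local list of retracted DOIs."""
    retracted = {normalize_doi(d) for d in retracted_dois}
    cited = {normalize_doi(m.group(0)) for m in DOI_PATTERN.finditer(manuscript_text)}
    return sorted(cited & retracted)


# Hypothetical retraction list and manuscript excerpt.
retracted = {"10.9999/hypothetical.001"}
text = ("As reported previously (https://doi.org/10.9999/HYPOTHETICAL.001). "
        "See also doi:10.9999/hypothetical.002.")
hits = flag_retracted(text, retracted)
```

Matching on DOIs only misses references cited without a DOI; a real check would also need title or PubMed-ID matching and an up-to-date retraction source.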

In conclusion, our study reveals a major scientific problem: invented or falsified data continue to be cited more than a decade later, distorting the evidence base. Our results quantify this problem over a ten-year period using a case-control design. The handling of retracted articles remains a challenge for the scientific community; optimized retraction procedures and automatic retraction checks in reference managers and submission systems are needed to reduce the dissemination of falsified data to an unavoidable minimum.

Data availability

Data will be shared on request.

References

Aspura, M. Y. I., Noorhidawati, A., & Abrizah, A. (2018). An analysis of Malaysian retracted papers: Misconduct or mistakes? Scientometrics, 115(3), 1315–1328. https://doi.org/10.1007/s11192-018-2720-z

Avenell, A., Stewart, F., Grey, A., Gamble, G., & Bolland, M. (2019). An investigation into the impact and implications of published papers from retracted research: Systematic search of affected literature. BMJ Open, 9(10). https://doi.org/10.1136/bmjopen-2019-031909

Berenbaum, M. R. (2021). On zombies, struldbrugs, and other horrors of the scientific literature. Proceedings of the National Academy of Sciences USA. https://doi.org/10.1073/pnas.2111924118

Bornemann-Cimenti, H., Szilagyi, I. S., & Sandner-Kiesling, A. (2016). Perpetuation of retracted publications using the example of the Scott S. Reuben case: Incidences, reasons and possible improvements. Science and Engineering Ethics, 22(4), 1063–1072. https://doi.org/10.1007/s11948-015-9680-y

Campos-Varela, I., & Ruano-Raviña, A. (2019). Misconduct as the main cause for retraction. A descriptive study of retracted publications and their authors. Gaceta Sanitaria, 33, 356–360. https://doi.org/10.1016/j.gaceta.2018.01.009

Council, C. (2019). COPE guidelines: retraction guidelines. https://doi.org/10.1080/10236660903474522

Craig, R., Cox, A., Tourish, D., & Thorpe, A. (2020). Using retracted journal articles in psychology to understand research misconduct in the social sciences: What is to be done? Research Policy, 49(4), 103930. https://doi.org/10.1016/j.respol.2020.103930

da Silva, J. A. T., & Bornemann-Cimenti, H. (2017). Why do some retracted papers continue to be cited? Scientometrics, 110(1), 365–370.

da Silva, J. A. T., & Dobránszki, J. (2017). Highly cited retracted papers. Scientometrics, 110(3), 1653–1661.

Davies, S. R. (2019). An ethics of the system: Talking to scientists about research integrity. Science and Engineering Ethics, 25(4), 1235–1253. https://doi.org/10.1007/s11948-018-0064-y

Davis, P. M. (2012). The persistence of error: A study of retracted articles on the Internet and in personal libraries. Journal of the Medical Library Association: JMLA, 100(3), 184. https://doi.org/10.3163/1536-5050.100.3.008

Dinh, L., Cheng, Y.-Y., & Parulian, N. (2019). ReTracker: An open-source plugin for automated and standardized tracking of retracted scholarly publications. 2019 ACM/IEEE Joint Conference on Digital Libraries (JCDL), 406–407. https://doi.org/10.1109/JCDL.2019.00092

Dyer, C. (2011). Researcher didn’t get ethical approval for 68 studies, investigators say. BMJ. https://doi.org/10.1136/bmj.d833

Dyer, C. (2012). Japanese doctor is heading for record number of retracted research papers. British Medical Journal Publishing Group.

Franceschini, F., Galetto, M., Maisano, D., & Mastrogiacomo, L. (2012). The success-index: An alternative approach to the h-index for evaluating an individual’s research output. Scientometrics, 92(3), 621–641. https://doi.org/10.1007/s11192-011-0570-z

Galiani, S., & Gálvez, R. H. (2019). An empirical approach based on quantile regression for estimating citation ageing. Journal of Informetrics, 13(2), 738–750.

George, S. L. (2016). Research misconduct and data fraud in clinical trials: Prevalence and causal factors. International Journal of Clinical Oncology, 21(1), 15–21. https://doi.org/10.1007/s10147-015-0887-3

Grieneisen, M. L., & Zhang, M. (2012). A comprehensive survey of retracted articles from the scholarly literature. PLoS ONE, 7(10). https://doi.org/10.1371/journal.pone.0044118

Gross, C. (2016). Scientific misconduct. Annual Review of Psychology, 67, 693–711.

Hartgerink, C., Aust, F., & Sobrak-Seaton, P. (2019). retractcheck [Computer software]. Retrieved March 17, 2021, from https://github.com/libscie/retractcheck

Hesselmann, F., Graf, V., Schmidt, M., & Reinhart, M. (2017). The visibility of scientific misconduct: A review of the literature on retracted journal articles. Current Sociology, 65(6), 814–845.

Kretser, A., Murphy, D., Bertuzzi, S., Abraham, T., Allison, D. B., Boor, K. J., Dwyer, J., Grantham, A., Harris, L. J., Hollander, R., Jacobs-Young, C., Rovito, S., Vafiadis, D., Woteki, C., Wyndham, J., & Yada, R. (2019). Scientific integrity principles and best practices: Recommendations from a scientific integrity consortium. Science and Engineering Ethics, 25(2), 327–355. https://doi.org/10.1007/s11948-019-00094-3

Ma, L. (2019). Money, morale, and motivation: A study of the output-based research support scheme in University College Dublin. Research Evaluation, 28(4), 304–312. https://doi.org/10.1093/reseval/rvz017

Marret, E., Elia, N., Dahl, J. B., McQuay, H. J., Møiniche, S., Moore, R. A., Straube, S., & Tramèr, M. R. (2009). Susceptibility to fraud in systematic reviews: Lessons from the Reuben case. Anesthesiology, 111(6), 1279–1289.

McHugh, U., & Yentis, S. (2019). An analysis of retractions of papers authored by Scott Reuben, Joachim Boldt and Yoshitaka Fujii. Anaesthesia, 74(1), 17–21. https://doi.org/10.1111/anae.14414

Mott, A., Fairhurst, C., & Torgerson, D. (2019). Assessing the impact of retraction on the citation of randomized controlled trial reports: An interrupted time-series analysis. Journal of Health Services Research & Policy, 24(1), 44–51.

Parlangeli, O., Guidi, S., Marchigiani, E., Bracci, M., & Liston, P. M. (2020). Perceptions of work-related stress and ethical misconduct amongst non-tenured researchers in Italy. Science and Engineering Ethics, 26(1), 159–181. https://doi.org/10.1007/s11948-019-00091-6

Shafer, S. L. (2009a). Notice of retraction. Anesthesia & Analgesia, 108(4), 1350. https://doi.org/10.1213/01.ane.0000346785.39457.f4

Shafer, S. L. (2009b). Anesthesia & Analgesia, 108(5), 1361–1363.

Shafer, S. L. (2009c). Tattered threads. Anesthesia and Analgesia, 108(5), 1361–1363.

Sharma, K. (2021). Team size and retracted citations reveal the patterns of retractions from 1981 to 2020. Scientometrics, 126(10), 8363–8374. https://doi.org/10.1007/s11192-021-04125-4

Steen, R. G., Casadevall, A., & Fang, F. C. (2013). Why has the number of scientific retractions increased? PLoS ONE, 8(7). https://doi.org/10.1371/journal.pone.0068397

Stillman, D. (2019). Retracted item notifications with Retraction Watch integration. Zotero Blog. Retrieved June 14, 2019, from https://www.zotero.org/blog/retracted-item-notifications/

Teixeira da Silva, J. A., Bornemann-Cimenti, H., & Tsigaris, P. (2020). Optimizing peer review to minimize the risk of retracting COVID-19-related literature. Medicine, Health Care and Philosophy. https://doi.org/10.1007/s11019-020-09990-z

U.S. Attorney’s Office. (2010). Anesthesiologist pleads guilty to health care fraud. Retrieved February 22, https://archives.fbi.gov/archives/boston/press-releases/2010/bs022210.htm

Vuong, Q.-H. (2020). The limitations of retraction notices and the heroic acts of authors who correct the scholarly record: An analysis of retractions of papers published from 1975 to 2019. Learned Publishing, 33(2), 119–130. https://doi.org/10.1002/leap.1282

Wager, E., Barbour, V., Yentis, S., & Kleinert, S., on behalf of COPE Council. (2009). Retraction guidelines. Committee on Publication Ethics. https://doi.org/10.24318/cope.2019.1.4

Yu, L., & Yu, H. (2016). Does the average JIF percentile make a difference? Scientometrics, 109(3), 1979–1987.

Acknowledgements

None

Funding

Open access funding provided by Medical University of Graz. This project was supported by institutional funding.

Author information

Contributions

ISS: Conceptualization, investigation, formal analysis, data curation. GAS: Data extraction, drafting the manuscript. CK: Data extraction, drafting the manuscript. HS: Data extraction, drafting the manuscript. TU: Data extraction, drafting the manuscript. HB-C: Conceptualization, methodology, writing – review and editing.

Ethics declarations

Conflict of interest

Authors have no conflict of interest relevant to this article.

Supplementary Information

Below is the link to the electronic supplementary material.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Szilagyi, IS., Schittek, G.A., Klivinyi, C. et al. Citation of retracted research: a case-controlled, ten-year follow-up scientometric analysis of Scott S. Reuben’s malpractice. Scientometrics 127, 2611–2620 (2022). https://doi.org/10.1007/s11192-022-04321-w

DOI: https://doi.org/10.1007/s11192-022-04321-w