Abstract

This article presents a novel application of a two-phase Data Envelopment Analysis (DEA) for evaluating the efficiency of innovation systems based on the Triple Helix neo-evolutionary model. The authors identify a niche to measure Triple Helix-based efficiency of innovation systems scrutinizing different methodologies for measuring Triple Helix performance and indicating different perspectives on policy implications. The paper presents a new Triple Helix-based index that engages a comprehensive dataset and helps provide useful feedback to policymakers. It is based on a set of 19 indicators collected from the official reports of 34 OECD countries and applied in a two-phase DEA model: the indicators are aggregated into pillars according to the Assurance Region Global and DEA super-efficiency model; pillar scores are aggregated according to the Benefit-of-the-Doubt based DEA model. The results provide a rank of 34 countries outlining strengths and weaknesses of each observed innovation system. The research implies a variable set of weights to be a major advantage of DEA allowing less developed countries to excel in evaluating innovation systems efficiency. The results of Triple Helix efficiency index measurement presented in this paper help better account for the European Innovation Paradox.

Introduction

The COVID-19 pandemic shows the importance of synergistic effects of multiple stakeholders’ joint efforts in overcoming threats and reducing the risks of a global crisis which leads to disease, death, economic decline, business failure and job losses. Effective collaboration between government, industry and academia is crucial for economic survival, where a crisis may be overcome using innovative solutions (Layos & Peña, 2020; Niankara et al., 2020). It is argued that in regular circumstances, collaboration and cross-sectoral joint efforts are equally effective and substantially contribute to the prosperity and sustainability of an innovative society (Lerman et al., 2021).

The Triple Helix model, originated by Etzkowitz and Leydesdorff (1995), is widely acknowledged as a conceptual tool that promotes innovation and entrepreneurship through better understanding, cooperation and interaction between university, industry, and government institutions, and in turn supports economic growth and innovation policy design (Cai & Liu, 2020; Galvao et al., 2019). The Triple Helix is effective at both the national and regional levels (Leydesdorff & Deakin, 2011; Rodrigues & Melo, 2012), while its cross-sectoral interactions are recognized as a key force of circular economy and sustainability (Anttonen et al., 2018; Scalia et al., 2018; Ye & Wang, 2019). In addition to social benefits, the usefulness of Triple Helix interactions has also been demonstrated at the organizational level. Hernández-Trasobares and Murillo-Luna (2020) have confirmed that the cooperation of industry, academia, and government in R&D contributes to success in business innovation.

National efforts to enhance innovation policies and competitiveness (e.g., Smart Specialization Strategy by EC JRC, EC HEG KET) point to a paradox of increased innovation investments not fulfilling the proclaimed sustainable development goals, designated as the European Innovation Paradox (Dosi, Llerna, & Labini, 2006). Some recent studies have attempted to find methods and tools for overcoming this Paradox, focusing on the assessment of innovation system indicators. Their conclusions highlighted the changes that needed to be made, based on the approaches and methodology used for innovation systems evaluation (Argyropoulou et al., 2019). Argyropoulou et al. recognize that the Triple Helix model is a tool to be adapted for wider application in overcoming the effects of the European Innovation Paradox, aimed at reaching a “harmony for knowledge-based economy” (Argyropoulou, Soderquist, & Ioannou, 2019).

While the development of the Triple Helix model needs to be pre-structured and coordinated (Cai & Etzkowitz, 2020), the interplay of the Triple Helix actors is difficult to manage as it requires activities of multiple, disparate sectors within complex subsystems (Jovanović et al., 2020; Ranga & Etzkowitz, 2013). New approaches to the theory and practice of the Triple Helix concept are constantly arising (see e.g., Todeva et al., 2019), but challenges remain on how to best manage Triple Helix interactions in order to improve the effectiveness and efficiency of the innovation ecosystem. Successful Triple Helix dynamics requires us to measure and evaluate the performance of Triple Helix actors (Dankbaar, 2019; Sá et al., 2019). For this purpose, a range of measurements have been developed (e.g., Jovanović et al., 2020; Leydesdorff, 2003; Leydesdorff & Ivanova, 2016; Mêgnigbêto, 2018; Priego, 2003; Xu & Liu, 2017). Measuring Triple Helix performance helps identify both good practices and possible deficiencies in interactions, enables comparison between countries and regions, solicits solutions to challenges, and points to strategies taking advantage of opportunities.

Cirillo et al. (2018) emphasize that the origins of the European Paradox may be better examined when using a proper set of measurements and scientific indicators. An extensive literature review has helped identify three major research gaps. Firstly, although literature on quantitative measurement of Triple Helix abounds, there are still very few studies which compare Triple Helix performance measurement tools. Secondly, the existing approaches are narrowly focused and are not designed to detect the strengths and weaknesses of the systems measured, so they fail to contribute to innovation strategy and policy development. Finally, the European Innovation Paradox has been insufficiently examined from the Triple Helix perspective, which is recognized as having the strength to shed more light on causal relationships within this phenomenon.

To bridge the above stated literature gaps, this paper poses two research questions: (1) How may the existing approaches of measuring Triple Helix be assessed? and (2) How can Triple Helix-based efficiency at a national level be measured using a comprehensive set of indicators that provide understandable and useful feedback to policymakers on system improvement? The first research question is tackled by analyzing different research studies on measuring Triple Helix. This helped evaluate the observed aspects of Triple Helix interactions and identify a niche for further research. As for the second research question, we have developed a novel application of the Data Envelopment Analysis (DEA) to measuring Triple Helix-based efficiency scores of innovation systems. Empirically, 34 OECD countries are compared according to their efficiency in transforming inputs of an innovation system into innovative outputs fostered by Triple Helix actors. The results of this measurement should provide useful feedback on innovation action for policymakers, particularly as a potential solution for the European Innovation Paradox.

The paper is structured as follows: Section 2 provides a literature review on the existing approaches to measuring Triple Helix performance and on Data Envelopment Analysis. Section 3 introduces the development of the proposed index of Triple Helix-based efficiency. Section 4 presents the results of measuring the Triple Helix efficiencies of 34 OECD countries. Section 5 discusses the implications of our findings for existing and potential stakeholders. Finally, we conclude by indicating the limitations of our study and suggesting directions for further research.

2. Literature review: Triple Helix concept and measures

The concept of the Triple Helix model originated in 1995 to help comprehend the dynamics of interactions between university, industry, and government, which in turn would promote innovation and entrepreneurship (Etzkowitz & Leydesdorff, 1995). Since then, it has become prominent in scholarly research and policy discourse. An area of Triple Helix studies has focused on measuring Triple Helix performance. Literature review in this paper is mostly based on studies related to Triple Helix measurement found in prominent scientific databases (Web of Science, Scopus, and Google Scholar) under the key words ‘Triple Helix indicators’, ‘Triple Helix measures’, ‘Triple Helix measuring’, ‘Triple Helix performance’ and ‘Triple Helix evaluation’. This section aims to clarify the state-of-the-art of research in the field and to identify existing research gaps, rather than provide a systematic literature review report.

Why is measuring the Triple Helix important?

Cross-sectoral collaborations are key to successful innovation, since joint forces and efforts allow for a better understanding of diverse perspectives (Singer & Oberman Peterka, 2012), facilitate knowledge exchange and distribution, provide additional opportunities (e.g., in funding, projects, products), reduce knowledge redundancy (Leydesdorff & Ivanova, 2016), and boost innovative and economic performance (Luengo & Obeso, 2013; Razak & White, 2015). The Triple Helix model is aimed at better understanding of complex interactions among multiple university, industry and government actors which may foster innovation and entrepreneurship (Etzkowitz & Leydesdorff, 2000). Recognizing the usefulness of this model, governments and decision-making bodies have strived to not only design policies which would improve their innovation systems, but also allocate resources to promote Triple Helix interactions (Cai & Etzkowitz, 2020). However, such policies may only be effective when informed by a purely evidence-based Triple Helix model, e.g., through measuring Triple Helix synergies (Leydesdorff & Smith, 2021). Park and Leydesdorff (2010) come to important conclusions using the so-called Triple Helix indicator to evaluate the effectiveness of governmental policies in South Korea and their impact on co-authorship collaboration patterns.

Three major arguments are put forward concerning the importance of Triple Helix measurement. First, it provides a control mechanism for policy implementation which helps estimate its efficiency and effectiveness (e.g., Brignall & Modell, 2000; Ivanova & Leydesdorff, 2015). Secondly, performance evaluation is essential to improve Triple Helix interactions as it permits the detection of weak links and good practices within the Triple Helix systems observed (e.g., Keramatfar & Esparaein, 2014; Lebas, 1995). Finally, measuring the Triple Helix efficiency may be used in developing ranking tools for innovation competitiveness on a global scale (e.g., Jovanović et al., 2020; Ye & Wang, 2019).

Evaluating Triple Helix performance – current methodological approaches

The following table presents a summary of approaches to measuring Triple Helix performance (Table 1).

Identified gaps in Triple Helix performance measurements

An extensive literature review has suggested a niche for further development of a Triple Helix-based index for measuring comprehensive performance. While current measurements are mainly based on a single indicator or multiple measure reports, some available comprehensive datasets have not been fully utilized for measuring Triple Helix performance due to methodological challenges. For instance, the OECD Science, Technology and Innovation (STI) Outlook provides a comprehensive overview of major trends in STI development of OECD countries and may assist policymakers in detecting global patterns and help define and update their STI strategies accordingly (OECD, 2020a). With a set of almost 130 indicators, it chiefly evaluates R&D and patent activity performance, providing separate values for university, government and business sectors, thereby offering an insight into the performance of all three Triple Helix actors. However, the indicators are not aggregated and do not provide a composite measure of a country’s performance, so it might be challenging to compare, benchmark or rank countries by observing separate indicators individually.

Although there are multivariate approaches to Triple Helix measurement, the existing approaches neglect some important aspects. Meyer et al. (2014) highlight that “…more enriched indicators that are multi-layered and multidimensional are required to unpick the situation from different angles, thus allowing for the heterogeneity of the different actors to be voiced and heard”. Triple Helix performance measures focus more on R&D activities observed through patent and publishing activity (Leydesdorff & Meyer, 2006; Meyer et al., 2003; Xu et al., 2015). Patent activity is one of the main determinants of Triple Helix measures, since it is one of the major results of R&D activities within the innovation ecosystem (see e.g., Meyer et al., 2003; Ivanova et al., 2019). However, Baldini (2009) and Alves and Daniel (2019) stress that institutions and individuals (especially in academia) face numerous hurdles in the patenting process. Thus, this aspect should not be observed as a unique and ultimate result of Triple Helix activities. The number of spin-off companies is another R&D mechanism that may support the entrepreneurial ecosystem which is also an important indicator of the entrepreneurial level of a university (Ferri et al., 2019; Fini et al., 2017; Lawton Smith & Ho, 2006).

In the context of the Triple Helix model, support from all three actors is crucial for higher entrepreneurial intentions and the number of academic ventures (Fini et al., 2017; Samo & Huda, 2019). Nonetheless, researchers have yet to apply this indicator sufficiently, due to limited or missing national data on the total number of spin-off companies. From another perspective, Villanueva-Felez et al. (2013) evaluated the importance of a social network and its relationship to research output (i.e., the number of research papers, books and conference papers published). Although the approach shows and evaluates the impact of interpersonal networks on research performance, it does not cover the overall performance and efficiency of a Triple Helix society. Publishing activity, assessed through bibliometric analysis, is another crucial aspect of Triple Helix collaboration (Xu et al., 2015; Priego, 2003). While Tijssen’s (2006) extensive research sheds light onto the R&D cooperation of industry and academia, it fails to incorporate a legislative perspective in order to support a holistic approach to Triple Helix measuring.

Extant approaches of measuring Triple Helix performance sometimes fail to pinpoint policy implications as a main tool for directing Triple Helix actors. Tijssen’s (2006) model, for instance, does not emphasize the importance of implications for policymakers and strategists, although the selected indicators are pertinent to the subject matter. As a further example, Egorov and Pospelova (2019) evaluated innovative activities of the Russian Arctic based on three factors: (1) the number of Russian patents granted for inventions per workforce, (2) the share of innovative goods, works and services in the total volume, and (3) the share of budget expenditures on scientific research. The results provided ranks, but they did not specify weak links and implications for all actors. Marinković et al. (2016) analyzed a broad set of multidimensional indicators, but the research only evaluated governmental performance within the Triple Helix model.

To the best of our knowledge, the most developed and applied approach to measuring Triple Helix performance thus far has been proposed by Loet Leydesdorff based on Shannon’s entropy formula as it evaluates the strength of synergy within a system based on the joint work on papers and projects. It has been further adapted and applied at a national and regional level in Germany, Norway, the Netherlands, Japan, Russia, among other countries (Leydesdorff et al., 2006; Leydesdorff & Fritsch, 2006; Leydesdorff & Sun, 2009; Leydesdorff & Strand, 2012a; b; Leydesdorff et al., 2015). The approach provides comparisons among both regions and countries and suggests a different perspective for further strategies and policies. For example, application of the mutual information Triple Helix indicator in South Korea signaled that their governmental policies failed to improve their national system by connecting actors in the field of science, technology, and industry (Park & Leydesdorff, 2010). The research provided important implications for strategists, but the conclusions were based on publication activity within the Korean innovation system. In sum, the potential of Triple Helix measurement for policy implications should be further strengthened.

Current approaches measure Triple Helix performance in the form of Triple Helix synergies and outcomes. However, there is no ready-made method to measure Triple Helix efficiency (e.g., how resources allocated to innovation can generate expected outputs). Literature review justifies focusing on the comprehensive efficiency measurement approach to improve policy. Ivanova and Leydesdorff (2015) have posed a related question: “What innovation systems are most efficient and why?” Some attempts to respond to the question have also informed our study. For instance, Mêgnigbêto (2018) used game theory to structure a model of Triple Helix relations and examine synergy indicators based on the number of papers. Tarnawska and Mavroeidis (2015) used DEA to evaluate the efficiency of 25 EU-member states based on six indicators of national innovation system performance. Another multivariate approach proposed by Jovanović et al. (2020) examines the measure of the Triple Helix synergy of 34 OECD countries through a two-step Composite I-distance method to create multivariate composite measures based on a set selected from OECD Main Science and Technology Indicators. The result was a categorization of indicators into pillars (Triple Helix actors). Jovanović et al. analyzed the performance of every pillar and the overall Triple Helix performance and rank but did not analyze the efficiency of the countries selected. Building upon the previous experience (Jovanović et al., 2020; Tarnawska & Mavroeidis, 2015), we address these issues and use DEA on a set of OECD indicators, extending the analysis using additional official data. To do so, we propose a multi-criteria efficiency approach by applying the Data Envelopment Analysis (DEA) to a dataset from 34 countries.

The approach proposed to measure Triple Helix efficiency of innovation systems

To overcome the challenge of comprehensive performance measurement, we have designed a model to measure Triple Helix efficiency. Current approaches to measuring Triple Helix performance mainly focus on activities and outcomes, and do not fully consider the level of efficiency, in particular how input resources are efficiently used to deliver outcomes through Triple Helix interactions. Literature highlights three concepts concerning the Triple Helix interactions: spheres, spaces, and functions. Spheres refer to university, industry and government (Etzkowitz, 2008; Etzkowitz & Leydesdorff, 1995). While Etzkowitz and Leydesdorff jointly developed the Triple Helix model with a shared understanding of synergy building among the three spheres/helices, they have further elaborated on the mechanisms of Triple Helix interactions by using the concepts of spaces and functions, respectively (Leydesdorff, 2012). From a neo-institutional perspective, Etzkowitz draws attention to Triple Helix interactions of knowledge, consensus and innovation spaces, taking place in parallel with the interactions of the spheres (Etzkowitz, 2008; Etzkowitz & Zhou, 2017). From a neo-evolutionary perspective, Leydesdorff considers that the three helices also operate “as selection mechanisms asymmetrically on one another, but mutual selections may shape a trajectory as in a coevolution” (Leydesdorff, 2012, p. 28). In such a lens, the Triple Helix is perceived as three functions—namely, wealth creation, knowledge production, and normative control (Leydesdorff, 2012). In our measurement of Triple Helix efficiency, we focus on the performance of these functions.

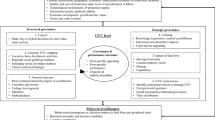

As previously noted, current approaches overlook some aspects of the Triple Helix performance, so we propose a set of 19 indicators (Table 2) that offer a more comprehensive approach and address additional areas of the innovation system. The proposed model is a multi-criteria approach specifying Triple Helix functions: wealth creation, knowledge production, and normative control (Fig. 1). The model uses the efficiency approach measured by Data Envelopment Analysis (DEA). The approach examines the success of an entity (an observed unit, e.g., a country, department, sector, region, etc.) in using the provided inputs and transforming them into desired outputs (Ćujić et al., 2015). In comparison to the method developed by Leydesdorff (2003), the proposed DEA approach may imply areas to be improved for better efficiency results within the countries observed. Upon calculation, this method suggests improvements an entity should undertake to increase efficiency, improve its potential, and reach better results with the resources provided. It therefore aims to provide critical contributions and feedback to policymakers for the further development of innovation policies and technological strategies.

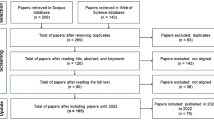

In the process of designing a measure of Triple Helix efficiency, we followed the OECD framework for the creation of composite indicators (OECD, 2008): (1) developing a theoretical framework; (2) selecting variables; (3) imputation of missing data; (4) multivariate analysis; (5) normalization of data; (6) weighting and aggregation; (7) robustness and sensitivity; (8) back to the details; (9) links to other variables; and (10) presentation and dissemination.

The presented index evaluates the efficiency of the selected countries based on the neo-evolutionary Triple Helix concept and its three main neo-evolutionary functions (pillars): Novelty Production (NP), Normative Control (NC) and Wealth Generation (WG) (Leydesdorff & Meyer, 2006; Leydesdorff & Zawdie, 2010). The database draws on four sources (Table 2): (1) OECD Main Science and Technology Indicators, (2) the Global Innovation Index (GII), (3) the SCImago Journal & Country Rank, and (4) the World Bank.

Table 2 outlines a list of the selected indicators and the source database. Indicators are classified according to the following two criteria:

1. The Triple Helix function they refer to (wealth generation, normative control and/or novelty production), and

2. Input or Output, in relation to the nature of the indicator (i.e., whether it is a resource or a result) and whether it is intended to be minimized or maximized.

The indicators used in our measurement to combine multiple aspects are based on a synthesis of the literature. These indicators mainly concern R&D activities such as patents, published papers and research staff. Jovanović et al. (2020) identified and tested a similar set of indicators; this paper adds some essential aspects of innovative activity (i.e., new business density, intellectual property receipts, university and industry collaboration, and tertiary education graduates). Some input indicators are associated with two functions, normative control and wealth generation (NC-WG), so the values of these indicators were assigned to both Triple Helix functions (1/2 of the value each). As it is impossible to divide all output indicators by Triple Helix function, their values were assigned to each Triple Helix pillar (1/3 of the value each). A scheme of the Triple Helix efficiency index is given in Fig. 1.
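The allocation of shared indicators can be illustrated with a minimal sketch. It assumes that an indicator's value is divided equally among the pillars it is associated with; the function name and the numeric values are hypothetical and serve only to show the arithmetic.

```python
def split_across_pillars(value, pillars):
    """Divide an indicator value equally among the pillars it belongs to."""
    share = value / len(pillars)
    return {pillar: share for pillar in pillars}

# Hypothetical input indicator shared by normative control (NC)
# and wealth generation (WG): each pillar receives 1/2 of the value.
shared_input = split_across_pillars(2.4, ["NC", "WG"])

# Hypothetical output indicator that cannot be attributed to one function:
# each of the three pillars receives 1/3 of the value.
shared_output = split_across_pillars(3.0, ["NP", "NC", "WG"])

print(shared_input)
print(shared_output)
```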

Composite index-based performance measurement is sensitive at several stages: availability and reliability of data, preprocessing, choice of the weighting system and selection of an aggregation method (Jovanović et al., 2020). To assure comprehensive data, this paper uses only reliable sources: OECD, GII, SCImago and the World Bank. The initial set consisted of 38 measures, but due to redundancy and high correlations, the final set was limited to the 19 indicators presented. Indicator values were collected for 2015, the most recent year for which all data were available. Because the databases were complete for that year, imputation was not necessary. Research results, implications and conclusions are based on the 2015 data for 34 OECD member countries. The efficiency analysis, including normalization, weighting and aggregation, was performed through a two-phase DEA approach.

Data envelopment analysis

Data Envelopment Analysis is a non-parametric operational research method used to evaluate the efficiency of the entities studied, termed decision-making units (DMUs). Charnes, Cooper, and Rhodes (1978) introduced this method to estimate how successful a DMU is in transforming multiple inputs into desired outputs (Ćujić et al., 2015). If a unit is efficient, it has an efficiency score of 1. To allow for the ranking of efficient units, a super-efficiency model (Andersen & Petersen, 1993) is used, which can assign efficient units scores above 1.
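The difference between the standard score (capped at 1) and the super-efficiency score can be seen in the simplest possible setting: one input and one output per unit under constant returns to scale. In that special case no linear program is needed, because a unit's score reduces to its output/input ratio divided by the best ratio in the comparison set. The sketch below relies on that simplification; the three units and their values are hypothetical.

```python
def ccr_scores(inputs, outputs):
    """Relative efficiency with one input and one output per unit.

    Each unit's output/input ratio is divided by the best ratio
    observed, so efficient units score exactly 1.
    """
    ratios = [y / x for x, y in zip(inputs, outputs)]
    best = max(ratios)
    return [r / best for r in ratios]

def super_efficiency_scores(inputs, outputs):
    """Same setting, but each unit is compared only with the OTHER units.

    Excluding the evaluated unit from its own comparison set lets
    efficient units score above 1, which makes them rankable.
    """
    ratios = [y / x for x, y in zip(inputs, outputs)]
    scores = []
    for k, ratio_k in enumerate(ratios):
        best_other = max(r for j, r in enumerate(ratios) if j != k)
        scores.append(ratio_k / best_other)
    return scores

# Hypothetical units: (input, output) = (2, 4), (4, 6), (5, 5)
inputs = [2.0, 4.0, 5.0]
outputs = [4.0, 6.0, 5.0]

standard = ccr_scores(inputs, outputs)
super_eff = super_efficiency_scores(inputs, outputs)
print(standard)
print(super_eff)
```

Only the efficient unit changes its score between the two functions; inefficient units keep the same value, since their best comparator is unaffected by their own removal.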

DEA is applicable for Triple Helix-based efficiency evaluation at a national level since this approach allows a country to achieve outstanding results, despite limited resources. This feature is especially important for smaller and less-privileged countries with restricted funds, but still able to exploit their full potential. The efficiency approach is also needed to estimate if the employed inputs result in the expected outcomes, especially in innovative activities, since practice indicates this is not usually the case (e.g., the Swedish, European and Serbian paradox) (Levi Jakšić et al., 2015).

An additional feature of the DEA method is that it allows every entity to determine the most suitable weights. As such, each DMU (in this study, a country) can choose its own set of weights to maximize its efficiency. The feature is significant for this type of evaluation, as some countries may have superior publishing activity, but an insufficient number of patents. A unique set of weights allows units to compensate for underperformance in some aspects with strong outcomes in others. DEA also evaluates strengths and weaknesses for every unit, and provides a benchmark country and possible improvements for greater efficiency (Ćujić et al., 2015). Thus, the implications could be useful for policymakers as input for creating and proposing national strategies.
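The effect of unit-specific weights can be sketched with a toy case of two outputs (say, publishing and patent activity) and an identical unit input for every country. This is a deliberate simplification and not the model used in the paper: with only two weights, the optimum of the underlying linear program lies at a vertex of the feasible region, so plain vertex enumeration suffices. The function name and all data are hypothetical.

```python
from itertools import combinations

def best_own_weight_score(units, k, tol=1e-9):
    """Best weighted score unit k can reach when choosing its own weights.

    Solves: maximize   u1*a_k + u2*b_k
            subject to u1*a_j + u2*b_j <= 1 for every unit j,
                       u1 >= 0, u2 >= 0,
    by testing the vertices of the two-dimensional feasible region.
    """
    def feasible(u1, u2):
        return (u1 >= -tol and u2 >= -tol
                and all(u1 * a + u2 * b <= 1 + tol for a, b in units))

    candidates = [
        (min(1 / a for a, _ in units), 0.0),  # all weight on the first output
        (0.0, min(1 / b for _, b in units)),  # all weight on the second output
    ]
    # Intersections of pairs of constraint lines (Cramer's rule).
    for (a1, b1), (a2, b2) in combinations(units, 2):
        det = a1 * b2 - a2 * b1
        if abs(det) > tol:  # skip parallel lines
            candidates.append(((b2 - b1) / det, (a1 - a2) / det))

    a_k, b_k = units[k]
    return max(u1 * a_k + u2 * b_k
               for u1, u2 in candidates if feasible(u1, u2))

# Hypothetical normalized outputs: (publishing, patents)
units = [(0.9, 0.2),   # strong publishing, weak patents
         (0.3, 0.8),   # weak publishing, strong patents
         (0.5, 0.5)]   # balanced but dominated

flexible = [best_own_weight_score(units, k) for k in range(len(units))]
fixed = [0.5 * a + 0.5 * b for a, b in units]  # equal fixed weights
print(flexible)
print(fixed)
```

Under equal fixed weights the two specialized units score 0.55, barely above the balanced unit at 0.50. Under flexible weights both specialized units reach the frontier, while the balanced unit stays below 1 because no weighting lets it match the others.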

DEA has proven superior when comparing countries from multiple, disparate perspectives:

- Technology and educational efficiency (Aristovnik, 2012; Xu & Liu, 2017)
- Innovation performance (Cai, 2011; Carayannis et al., 2015; Yesilay & Halac, 2020)
- Sustainability (Ouyang & Yang, 2020; Vierstraete, 2012; Halkos & Petrou, 2019)
- Public sector performance (Afonso et al., 2010; Baciu & Botezat, 2014; Msann & Saad, 2020)
- Energy efficiency (Guo et al., 2017; Song et al., 2013; Dogan & Tugcu, 2015; Ziolo et al., 2020)

Nevertheless, DEA has rarely been applied to the Triple Helix model. Tarnawska and Mavroeidis (2015) applied this method, employing six indicators at most, which is an insufficient number for such a complex problem as knowledge triangle policy in the EU countries. Our research aims to introduce a comprehensive measure of Triple Helix-based efficiency, for which we provide a detailed model structure in the following section. The research involves a set of 34 OECD countries and a selected set of 19 indicators. The results will compare the efficiency of OECD countries based on the cooperation between the three pillars.

The two-phase DEA approach

DEA has proven to be a useful method when constructing a composite index due to its specific characteristics (Cherchye et al., 2008) in which individual indicators are aggregated free of a predefined set of weights. This allows each unit observed to determine its own weighting system. Every assessed entity also takes into consideration the performance of the other entities observed, which is known as the “benefit of the doubt—BOD” approach (Cherchye et al., 2007; Savić & Martić, 2017). DEA-based composite indices are proven to be an effective tool for the evaluation and comparison of entities from disparate perspectives: logistic performance, sustainability, human development and eco-efficiency as well as company performance (see e.g. Mariano et al., 2017; Halkos et al., 2016; Shi & Land, 2020; Huang et al., 2018; Dutta et al., 2020).

A number of DEA mathematical model formulations may be applied depending on the type of the problem examined (e.g., input-oriented, output-oriented, BCC, CCR, undesired outputs, BOD, hierarchical approach) (Paradi et al., 2018). This paper uses the two-phase approach to construct a composite measure of Triple Helix-based efficiency.

In the first phase, indicators were aggregated within each Triple Helix pillar and the scores were provided by a combination of Assurance Region Global (Cooper et al., 2007) and DEA super-efficiency models:

where n is the number of DMUs, i.e., countries (j = 1,…,n); m – the number of inputs (i = 1,…,m); s – the number of outputs (r = 1,…,s); \({x}_{ij}\) – the known amount of the i-th input of DMUj (\({x}_{ij}\) > 0, i = 1,2,…,m, j = 1,2,…,n); \({y}_{rj}\) – the known amount of the r-th output of DMUj (\({y}_{rj}\) > 0, r = 1,2,…,s, j = 1,2,…,n); \({h}_{k}\) (k = 1,…,n) – the efficiency score of DMUk; \({v}_{i}\) (i = 1,…,m) – the weight assigned to the i-th input by DMUk; \({u}_{r}\) (r = 1,…,s) – the weight assigned to the r-th output by DMUk; and the range [lb, ub] bounds the share of each input in the total weighted input. This model provides relative efficiency scores and ranks by comparing countries within the studied set of 34 OECD countries, for each of the three pillars: wealth generation, normative control and novelty production.
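A standard multiplier-form statement consistent with the symbols defined above is sketched below. It is an assumption rather than the authors' exact model: the input orientation, the placement of the Assurance Region Global bounds on the input side, and the simple non-negativity bounds on the weights are illustrative choices. The super-efficiency feature appears as the exclusion of DMUk from its own comparison set (j ≠ k).

\[
\begin{aligned}
\max\ & h_k = \sum_{r=1}^{s} u_r y_{rk} \\
\text{s.t.}\ & \sum_{i=1}^{m} v_i x_{ik} = 1, \\
& \sum_{r=1}^{s} u_r y_{rj} - \sum_{i=1}^{m} v_i x_{ij} \le 0, \quad j = 1,\dots,n,\ j \ne k, \\
& lb \le \frac{v_i x_{ik}}{\sum_{i'=1}^{m} v_{i'} x_{i'k}} \le ub, \quad i = 1,\dots,m, \\
& u_r \ge 0,\ r = 1,\dots,s; \qquad v_i \ge 0,\ i = 1,\dots,m.
\end{aligned}
\]

Because the first constraint fixes the total weighted input of DMUk at 1, the assurance-region constraint reduces to \(lb \le v_i x_{ik} \le ub\), which keeps every input from being ignored or from dominating the score.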

In the second phase, the pillar scores were aggregated for every country through a “BOD”-based DEA model that had no explicit inputs (Cherchye et al., 2007):

where n is the number of DMUs (countries); s – the number of pillars; \({h}_{rj}\) – the efficiency score obtained in the previous phase for the r-th pillar (\({h}_{rj}\) > 0, r = 1,2,3, j = 1,2,…,n); \({eff}_{k}\) (k = 1,…,n) – the efficiency score of DMUk; \({\overline{u} }_{r}\) (r = 1,…,s) – the weight assigned to the r-th output (pillar score) by DMUk.
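A benefit-of-the-doubt model without explicit inputs is commonly written as follows, using the symbols defined above. This is a sketch under stated assumptions, not the authors' exact formulation. In particular, the version below keeps DMUk in the constraint set, which caps scores at 1; excluding it (j ≠ k) would give the super-efficiency variant, with scores above 1 for the best performers.

\[
\begin{aligned}
\max\ & eff_k = \sum_{r=1}^{s} \overline{u}_r h_{rk} \\
\text{s.t.}\ & \sum_{r=1}^{s} \overline{u}_r h_{rj} \le 1, \quad j = 1,\dots,n, \\
& \overline{u}_r \ge 0, \quad r = 1,\dots,s.
\end{aligned}
\]

Each country is thus evaluated with the pillar weights most favourable to it, subject to the condition that no country exceeds a score of 1 under those same weights.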

The first model yields an efficiency score for each sub-index, i.e., for each Triple Helix function. The second model aggregates these sub-index scores into an overall Triple Helix super-efficiency score for each of the countries selected, and the countries are ranked according to the scores obtained.

Measuring the Triple Helix-based efficiency of OECD countries

Following the evaluation of each Triple Helix pillar, Table 4 presents the efficiency of the selected countries within each Triple Helix function, as well as the overall Triple Helix efficiency. Appendices A–C provide a detailed calculation and the impact of the indicators for every country. Figure 2 gives a graphical overview of the scores in Table 4, which enables visual comparison of country scores. The values in the Appendix represent data-driven weights illustrating the importance of each input and output indicator informing the efficiency score. The value of the efficiency score specifies whether a country has been efficient. When an entity has an efficiency score equal to or higher than 1, it is considered efficient, meaning that the given resources result in a high outcome level. Table 3 provides the results of descriptive statistics for all three pillars: the number of efficient countries, the maximum, minimum and average efficiency as well as the standard deviation of the efficiency scores.
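The descriptive statistics listed above are straightforward to compute from a list of pillar scores. The sketch below uses only the six wealth generation scores quoted in the results discussion, not the full set of 34 countries, so its output will not match the full-sample figures in Table 3. The function name is hypothetical, and the use of the population standard deviation is an assumption.

```python
from statistics import mean, pstdev

def summarize(scores):
    """Descriptive statistics for a list of pillar efficiency scores."""
    return {
        "efficient": sum(1 for s in scores if s >= 1.0),  # score >= 1 counts as efficient
        "max": max(scores),
        "min": min(scores),
        "mean": mean(scores),
        "std": pstdev(scores),  # population form; statistics.stdev gives the sample form
    }

# Subset of wealth generation scores quoted in the text (6 of 34 countries).
wg_subset = {
    "Luxembourg": 2.857,
    "Iceland": 2.495,
    "Estonia": 2.088,
    "Canada": 0.274,
    "Spain": 0.592,
    "Netherlands": 0.690,
}

summary = summarize(list(wg_subset.values()))
print(summary)
```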

A total of 10 countries are efficient in terms of normative control performance (efficiency score > 1). The results of the first phase indicate the most efficient countries to be South Korea, Iceland and Latvia. South Korea’s high score is based on its relatively high level of patent activity (0.9554). Latvia shows strong publishing activity (0.7803) in addition to somewhat significant trade exports (0.1123) and patents (0.1024). Iceland is marked as efficient due to its exceptional intellectual property receipts (0.9850) in comparison to invested resources. Conversely, Mexico, Germany, and Turkey all have low efficiency scores (0.278, 0.362, and 0.368, respectively). Turkey has a high level of government investment in GERD but does not sufficiently commercialize intellectual property (0-weight) or generate trade exports (0.005). Similarly, Mexico scores well on published papers (0.6450) and exports (0.3490), but is brought down by its low number of patents and intellectual property receipts. Given its high investments, it may come as a surprise that Germany’s insufficient outcomes result in it being ranked as inefficient. Although Germany is strongest in patent activity (0.6782) and trade exports (0.2947) for innovative activity, these indicators do not sufficiently compensate for its low commercialization of intellectual property and its low number of published papers.

The results show 25 efficient countries in the wealth generation pillar among the innovation systems examined. Most of the countries studied perform this function efficiently: the average efficiency score is 1.317 with a standard deviation of 0.542. The most efficient are Luxembourg (2.857), Iceland (2.495), and Estonia (2.088). Luxembourg scores high due to two innovative outputs, new business density (0.4660) and intellectual property receipts (0.4881), while Estonia has an astonishing new business density as a leading innovative output, followed by a modest number of scientific articles. Iceland again owes its high rank to intellectual property receipts (0.8682), but its new business density also plays an important role in its innovative output (0.1168). On the other hand, Canada (0.274), Spain (0.592) and the Netherlands (0.690) score the lowest, which may come as a surprise. Although Canada does present high patent activity, this is insufficient to compensate for its other outputs, and its activity does not match the investments provided. Spain’s innovative activity output is based solely on scientific articles, while its intellectual property receipts are so low that they only gain a 0-weight and cannot contribute to a better efficiency score. Similarly, the Netherlands scores no weight for intellectual property, signifying that this factor should be improved through policy intervention.

Evaluating novelty production performance shows 24 efficient countries. As in the wealth generation pillar, most are efficient, achieving a high average efficiency score of 1.400 with a standard deviation of 0.686. The United States (2.857), Slovenia (2.599) and Luxembourg (2.553) have the most efficient universities in relation to innovative activity. Although the United States presents outstanding university output for all indicators, its level of intellectual property receipts has the highest impact (0.98) in comparison to the other countries examined. Slovenia achieves a balanced innovative output mainly focused on patents (0.2613), journal articles (0.2344) and intellectual property receipts (0.4943). In contrast, the results show that Latvia (0.474), Estonia (0.512), and Turkey (0.666) have the least efficient knowledge creation sectors. Even though Latvia and Estonia do have a substantial number of scientific articles, their numbers of graduates and intellectual property receipts are not sufficiently high in comparison to their investments. With a low value of university/industry collaboration, Turkey scored the lowest, with a 0-weight for intellectual property receipts.

In terms of the Triple Helix super-efficiency index, the results of the second phase analysis show only three countries to be efficient: South Korea (1.220), Iceland (1.162) and the USA (1.018). The remaining countries fall below the efficiency frontier. South Korea, for which wealth generation is not a crucial factor (0.050), achieves its high score mainly through its strong normative control results (0.882) and moderate novelty production (0.288). The United States bases its high score on its strong knowledge creation performance (0.8795), while Iceland, leading with wealth creation (0.6481) and normative control (0.3478), shows more balanced efficiency across the Triple Helix functions. Turkey (0.2486), Canada (0.2607) and Austria (0.2776) have the least efficient Triple Helix-based innovation systems. In comparison to the other countries studied, Turkey has highly inefficient normative control and novelty production (0.050), possessing only slightly stronger wealth-generation performance (0.1486). Canada is similar in its weak novelty creation and normative control (0.0500), although it does present a stronger legislative function (0.1607).
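As a quick arithmetic check on the figures reported above, and assuming that the values in parentheses are each pillar's weighted contribution to the overall index, the contributions appear to add up to the reported overall scores for the countries where all three values are given:

\[
\begin{aligned}
\text{South Korea:}\quad & 0.050 + 0.882 + 0.288 = 1.220 \\
\text{Turkey:}\quad & 0.050 + 0.050 + 0.1486 = 0.2486 \\
\text{Canada:}\quad & 0.0500 + 0.0500 + 0.1607 = 0.2607
\end{aligned}
\]

Under this reading, 0.050 acts as a floor on the contribution of any single pillar.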

Discussion and implications

The results presented provide six important implications for the proposed model.

1. The main competitive advantage of DEA is its variable set of weights, which allows every unit to compensate for its weaker indicators through those on which it performs best. As for the efficiency of the legislative function, the top three countries have their high scores rooted in separate aspects: Ireland’s high trade exports, Latvia’s outstanding level of published articles and trade exports, and South Korea’s superior number of patents. Estonia emerged as the third-ranked country by wealth generation efficiency due to its high intensity of newly established businesses, which is another example of DEA’s advantage of taking into consideration the specific competitive strengths of an innovative ecosystem. Japan, for example, focuses its efforts on patents, Iceland is excellent in charging for the use of its intellectual property, while Estonia creates new business ventures. South Korea’s superiority in innovative performance has already been confirmed by Bloomberg’s Innovation Index methodology – ranked 1st (Bloomberg, 2020, 2016), and Global Innovation Index (Cornell University, INSEAD, and WIPO, 2016) – ranked 11th.

2. The approach presented in this paper evaluates whether a country is efficient, that is, whether it exploits its invested resources to produce an appropriate output. Despite its remarkable innovative output, Germany is surprisingly not highly ranked. These results imply that Germany might achieve higher scores considering its resources and investments. Likewise, France’s high GOVERD financed by the business sector has not resulted in high outputs within the normative control function.

3. Descriptive statistics in Table 3 indicate the greatest deviations in the normative control sector, which has also been shown to be the least efficient. This is as expected: the main role of this sector is to provide sufficient funds and legislative support, whereas the creation of innovative outputs falls mainly to the wealth generation and novelty production functions.

4. In comparison to the Global Innovation Index aggregation method, the presented approach considers the size of a country and its available resources. Figure 3 highlights these differences, comparing the normalized GII (in comparison to the countries studied) and the Triple Helix super-efficiency rank. Beneath the red line are those countries that have a higher GII score than their Triple Helix super-efficiency score, while above the red line are those countries that have a higher Triple Helix super-efficiency score in comparison to the GII.

The first (bottom left) quadrant represents those countries successful by both criteria, while the second (bottom right) lists countries that are successful innovators, but not efficient from a Triple Helix functions perspective. The top left quadrant shows Triple Helix efficient countries that maximize the utilization of their resources but are not listed in the top national innovative systems according to GII methodology. The top right quadrant shows the countries with a lower rank in both scores. The closer the countries are to the top right corner of the quadrant, the less innovative they are according to both criteria. This matrix might prove to be a valuable visual tool for policymakers.

According to Fig. 3, the United States is the best among all the countries examined, while Switzerland also achieved significant results. Were Switzerland to improve its novelty production efficiency (which scores the lowest weight in the Triple Helix index, 0.050), it could upgrade its position in this innovation matrix. Moreover, some countries, such as Estonia, are successful in the utilization of their resources but do not follow the same trend in GII, which might imply higher potential should additional resources be invested. This result is especially important for highly efficient countries, such as Iceland, Luxembourg, Japan, and South Korea, all of which have a high potential to grow even more innovative with added resources. On the other hand, countries such as Sweden, the Netherlands, the United Kingdom, Germany and Canada must create higher outputs to justify their resources, as Estonia does. For example, Germany’s novelty production and legislative function efficiency are surprisingly low, as are the Netherlands’ wealth generation and Canada’s normative control.

5. A particular quality of the DEA method is that it provides feedback on improvements that should be made within an entity in order for it to achieve a higher level of efficiency (Paradi et al., 2018). The results help identify weak links within each innovation system, providing the exact measure of the improvement that an entity should make to become efficient. For policymakers, this advantage could be a crucial contribution, applicable when determining the need to improve measures and policies within the system examined.

The results of this method may be used as a direct input for a national-level decision-making process to improve the performance of Triple Helix pillars. The proposed model is scalable and, with proper data collection, could be applicable regionally or locally. Such an approach should indicate where countries stand from a Triple Helix functions perspective and provide further steps to be taken for a higher level of innovativeness within their system. Low weighting scores for a number of the outputs in Appendices A-C indicate where improvements could be made. Policymakers could use their expertise to target these quantitative results to forge better policies that may strengthen their national system’s innovativeness and efficiency.

6. The results provide insight into the European Innovation Paradox. The DEA Triple Helix score presented in Fig. 3 clearly points to lower innovation system efficiency for European countries within the innovation efficiency rankings. The newly proposed Triple Helix-based efficiency measurement tool has indicated the domains of inefficiency specifically related to the Triple Helix functions: normative control, novelty production and wealth generation. It clearly lays out the need to develop and implement policy measures towards the interconnectivity and harmonization of these functions. Listed below are five factors that help account for the innovation paradox as indicated by the results of the Triple Helix efficiency measurements:

1. The “Valley of Death” phenomenon within the innovation cycle (Beard et al., 2009), with losses of innovation potential in phases of innovation transition from ‘discoveries to ideas to implementation and diffusion’ due to the limited efficiency of the Triple Helix actors involved in innovation creation (Research) and in innovation implementation and diffusion (Development).

2. The lack of a sufficiently established and implemented comprehensive approach that incorporates the complements to investment necessary to achieve complex sustainable development returns.

3. Weak managerial and organizational practices, as key firm capabilities, to bring innovation successfully to the market, especially for new emerging technology and innovation entrepreneurial ventures.

4. Ineffective innovation policies not finely tuned to the characteristics of concrete innovation ecosystems.

5. Weak government capabilities in developing and implementing effective innovation policies.

The analysis presented provides thorough and substantial answers to the research questions posited. The results firmly establish the opportunity of developing an efficiency-based Triple Helix composite index. This approach allows smaller countries to excel even with limited resources, provided these are used efficiently. In addition, the research illustrates potential implications for policymakers where additional expertise may improve certain national environments. Nevertheless, there are prerequisites for the DEA method: sufficient observation units, available indicators, non-negative data and classification according to the Triple Helix agents. This paper proposes a framework for measuring Triple Helix-based efficiency. With an updated set of indicators, the solution is scalable to any level (i.e., national, regional, local) and can be used to measure unit efficiency within each Triple Helix pillar (government, university or industry) with the data available.

Conclusion

The presented research and results point to a novel approach to creating a composite index for Triple Helix-based efficiency evaluation. Leydesdorff and Ivanova (2016) have highlighted that the Triple Helix model has become neo-evolutionary in relation to interactions among selection environments as determined by demand, supply and technological capabilities. The approach presented in this paper supports this claim and considers the technological capabilities (inputs) of the selected environment (a country) and evaluates the results obtained (outputs) in comparison to the available resources of a national innovation system.

In response to the first research question, we have summarized and evaluated approaches to measuring Triple Helix performance (Table 1). The analysis identified gaps in existing methodologies, which in turn served as a foundation to propose a novel application of DEA. Answering the second research question is an original methodology aimed at introducing a holistic, systemic approach to measuring Triple Helix-based efficiency of innovation systems. A combined set of indicators from verified official databases is classified into three separate pillars building up a comprehensive composite index of a Triple Helix-based innovation ecosystem.

To estimate the innovation efficiency of the 34 OECD countries examined, a multilevel DEA model was applied. The findings imply the possibility of creating a comprehensive measure of Triple Helix efficiency at the national level that may provide performance scores for all Triple Helix functions (Novelty Production, Normative Control and Wealth Generation) as well as an overall Triple Helix index based on the scores of these three pillars. The outcomes presented provide valuable data on weak links within an ecosystem and the improvements that could be made to create a more innovative and efficient national system based on the examination of indicators within the pillars. The measurement findings point to multiple important factors that help account for the European Innovation Paradox: weak governmental capacities for policy implementation, ineffective and unadjusted innovation policies, weak managerial practices, lack of comprehensive approaches and practices to utilize investment, and loss of innovation potential that may be attributed to the limited efficiency of the Triple Helix actors involved in both research and development activities.

This study provides three main scholarly contributions: (1) a summary and critical analysis of approaches to measuring the Triple Helix; (2) an application of the Data Envelopment Analysis method that can be utilized further, together with a demonstration of its functionality; and (3) a step towards measuring Triple Helix-based performance nationally.

While this study provides an innovative and useful approach to measuring Triple Helix-based efficiency, there are five distinct limitations that must be acknowledged:

1. The method is sensitive to the number of indicators in the model. In DEA, the number of indicators that can be used is limited by the total number of units (for the purpose of our research, countries). In general, the product of the number of inputs and the number of outputs should not exceed the number of countries examined (e.g., four input and five output indicators require at least 20 countries). As this paper uses a two-phase approach and aggregates indicators into pillars, this limitation is mitigated.

2. The set of indicators presented may be expanded, as some data that are important markers of innovation activity were unavailable. The data in this research were mainly collected from the OECD’s Main Science and Technology Indicators, providing official, functional and available data. However, numerous important aspects may not be covered by these indicators. For instance, a crucial factor of a university’s innovative performance is its number of spin-off companies. Unfortunately, to the best of our knowledge, there are no publicly available national data. This indicator would enhance a holistic approach and improve the reliability of the results provided. This limitation could be resolved by adapting methodologies and instructions for measuring innovative performance at a national level.

3. The weights presented are data-driven, based on the presented indicator values. If data or a country is excluded from the ranking, the values and efficiency scores might change.

4. The boundaries provided for the weight values are subject to debate and might be changed in a what-if analysis to provide more reliable information. In this research, no zero-weights were permitted for any indicator, but the restrictions were not strict, following the theoretical approach of the Triple Helix concept, in which actors may take one another’s roles within the ecosystem (Cai & Etzkowitz, 2020).

5. For those indicators that affect two or three helices, indicator values were arbitrarily assigned (equally distributed).
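The rule of thumb mentioned in the first limitation can be expressed as a small check. This is a minimal sketch only: the function names are hypothetical, and the rule encoded is the one stated in the text (the number of units should be at least the number of inputs multiplied by the number of outputs), not a general DEA requirement.

```python
def min_units_required(n_inputs: int, n_outputs: int) -> int:
    """Minimum number of units under the rule of thumb stated in the text."""
    return n_inputs * n_outputs


def enough_units(n_units: int, n_inputs: int, n_outputs: int) -> bool:
    """True if the sample of units is large enough for the indicator set."""
    return n_units >= min_units_required(n_inputs, n_outputs)


if __name__ == "__main__":
    # Example from the text: four inputs and five outputs need 20 units.
    print(min_units_required(4, 5))
    # 34 countries comfortably cover a pillar of that size.
    print(enough_units(34, 4, 5))
```

Aggregating indicators into pillars first keeps the indicator count in each individual DEA run small, which is how the two-phase design eases this constraint.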

Further studies are needed to improve the measurement and its applications, and in particular to overcome the limitations noted above. Future research will assess an updated set of indicators, by which we will attempt to identify novel metrics necessary to shed light onto all important perspectives of Triple Helix-based innovative activity. In order to compare the results with the most renowned approach in this field, the mutual information Triple Helix indicator, it would be useful to calculate that indicator for the OECD countries examined. This indicator provides valuable conclusions for policymakers, while the presented efficiency approach could offer additional information and a different perspective on implications that would be significant for strategies. We shall also test alternative DEA models and a modified set of weights to identify the most relevant approach to measuring Triple Helix-based efficiency. Network DEA is suitable for the Triple Helix perspective, as it may include indicators of inputs and outputs created individually or mutually by actors. That way, the interactions between the Triple Helix actors may also be covered.

Finally, the applicability of the model will be tested at other levels (regional and local) to assess the scalability of the model and derive additional applications of the proposed framework. Furthermore, a modification similar to the Fair DEA model (Radovanović et al., 2021) may be applied to eliminate disparate impact on the efficiency measure between developed and undeveloped units.

With proper model adjustments and the domain expertise of local strategists and analysts, the model presented might prove to be a valuable tool for policymakers by providing essential results through an approach scalable at national, regional and local levels.

Availability of data and material

The datasets analysed during the current study are available in the OECD repository, https://stats.oecd.org/Index.aspx?DataSetCode=MSTI_PUB, World Bank repository, https://databank.worldbank.org/source/education-statistics-%5e-all-indicators, SCImago JR repository, https://www.scimagojr.com/countryrank.php, and Global Innovation Index repository, https://www.wipo.int/edocs/pubdocs/en/wipo_pub_gii_2016.pdf. The datasets generated during the current study are available from the corresponding author on reasonable request.

Code availability

Not applicable.

References

Afonso, A., Schuknecht, L., & Tanzi, V. (2010). Public sector efficiency: Evidence for new EU member states and emerging markets. Applied Economics, 42(17), 2147–2164. https://doi.org/10.1080/00036840701765460

Alves, L., & Daniel, A.D. (2019). Protection and Commercialization of Patents in Portuguese Universities: Motivations and Perception of Obstacles by Inventors. In: J. Machado, F. Soares, G. Veiga (Eds), Innovation, Engineering and Entrepreneurship. HELIX 2018. Lecture Notes in Electrical Engineering, (vol 505, pp. 471–477). Springer, Cham. https://doi.org/10.1007/978-3-319-91334–6_

Andersen, P., & Petersen, N. C. (1993). A Procedure for Ranking Efficient Units in Data Envelopment Analysis. Management Science, 39(10), 1261–1264. https://doi.org/10.1287/mnsc.39.10.1261

Anttonen, M., Lammi, M., Mykkänen, J., & Repo, P. (2018). Circular economy in the triple Helix of innovation systems. Sustainability, 10(8), 2646. https://doi.org/10.3390/su10082646

Argyropoulou, M., Soderquist, K. E., & Ioannou, G. (2019). Getting out of the European Paradox trap: Making European research agile and challenge driven. European Management Journal, 37(1), 1–5. https://doi.org/10.1016/j.emj.2018.10.005

Aristovnik, A. (2012). The relative efficiency of education and R&D expenditures in the new EU member states. Journal of Business Economics and Management, 13(5), 832–848. https://doi.org/10.3846/16111699.2011.620167

Baciu, L., & Botezat, A. (2014). A comparative analysis of the public spending efficiency of the new EU member states: A DEA approach. Emerging Markets Finance and Trade, 50(sup4), 31–46. https://doi.org/10.2753/REE1540-496X5004S402

Baldini, N. (2009). Implementing Bayh–Dole-like laws: Faculty problems and their impact on university patenting activity. Research Policy, 38(8), 1217–1224. https://doi.org/10.1016/j.respol.2009.06.013

Beard, T. R., Ford, G. S., Koutsky, T. M., & Spiwak, L. J. (2009). A Valley of Death in the innovation sequence: An economic investigation. Research Evaluation, 18(5), 343–356. https://doi.org/10.3152/095820209X481057

Bloomberg (2016). The Bloomberg’s most innovative countries 2015. Retrieved February, 20, 2021. https://www.bloomberg.com/graphics/2015-innovative-countries/

Bloomberg (2020). Germany breaks Korea’s six-year streak as most innovative nation. Retrieved February, 20, 2021. https://www.bloomberg.com/news/articles/2020-01-18/germany-breaks-korea-s-six-year-streak-as-most-innovative-nation

Brignall, S., & Modell, S. (2000). An institutional perspective on performance measurement and management in the ‘new public sector’. Management Accounting Research, 11(3), 281–306. https://doi.org/10.1006/mare.2000.0136

Cai, Y. (2011). Factors affecting the efficiency of the BRICSs' national innovation systems: A comparative study based on DEA and Panel Data Analysis. Economics Discussion Paper, No 2011–52. Kiel: Kiel Institute for the World Economy.

Cai, Y., & Etzkowitz, H. (2020). Theorizing the triple Helix model: Past, present, and future. Triple Helix, 7(2–3), 189–226. https://doi.org/10.1163/21971927-bja10003

Cai, Y., & Liu, C. (2020). The Role of University as Institutional Entrepreneur in Regional Innovation System: Towards an Analytical Framework. In M. T. Preto, A. Daniel, & A. Teixeira (Eds.), Examining the Role of Entrepreneurial Universities in Regional Development (pp. 133–155). IGI Global. https://doi.org/10.4018/978-1-7998-0174-0.ch007

Carayannis, E. G., Goletsis, Y., & Grigoroudis, E. (2015). Multi-level multi-stage efficiency measurement: The case of innovation systems. Operational Research, 15(2), 253–274. https://doi.org/10.1007/s12351-015-0176-y

Cetin, V. R., & Bahce, S. (2016). Measuring the efficiency of health systems of OECD countries by data envelopment analysis. Applied Economics, 48(37), 3497–3507. https://doi.org/10.1080/00036846.2016.1139682

Charnes, A., Cooper, W. W., & Rhodes, E. (1978). Measuring the efficiency of decision making units. European Journal of Operational Research, 2(6), 429–444. https://doi.org/10.1016/0377-2217(78)90138-8

Cherchye, L., Moesen, W., Rogge, N., & Van Puyenbroeck, T. (2007). An Introduction to ‘Benefit of the Doubt’ composite indicators. Social Indicators Research, 82, 111–145. https://doi.org/10.1007/s11205-006-9029-7

Cherchye, L., Moesen, W., Rogge, N., Van Puyenbroeck, T., Saisana, M., Saltelli, A., Liska, R., & Tarantola, S. (2008). Creating composite indicators with DEA and robustness analysis: The case of the Technology Achievement Index. Journal of the Operational Research Society, 59(2), 239–251. https://doi.org/10.1057/palgrave.jors.2602445

Cirilloa, V., Martinelli, A., & Trancheroa, A. N. (2018). How it all began: The long term evolution of scientific and technological performance and the diversity of National Innovation Systems. Retrieved August, 5, 2021. http://www.isigrowth.eu/wp-content/uploads/2018/05/working_paper_2018_12.pdf

Cooper, W., Seiford, L., & Tone, K. (2007). Models with Restricted Multipliers. In: Data Envelopment Analysis. Springer, Boston, MA. https://doi.org/10.1007/978-0-387-45283-8_6

Cornell University, INSEAD, and WIPO. (2016). The Global Innovation Index 2016: Winning with Global Innovation. Ithaca, Fontainebleau, and Geneva. Retrieved May, 15, 2020. https://www.wipo.int/edocs/pubdocs/en/wipo_pub_gii_2016.pdf

Ćujić, M., Jovanović, M., Savić, G., & Levi Jakšić, M. (2015). Measuring the efficiency of air navigation services system by using DEA method. International Journal for Traffic and Transport Engineering, 5(1), 36–44. https://doi.org/10.7708/ijtte.2015.5(1).05

Dankbaar, B. (2019). Design rules for ‘Triple Helix’ organizations. Technology Innovation Management Review, 9(11), 54–63. https://doi.org/10.22215/timreview/1283

Dogan, N. O., & Tugcu, C. T. (2015). Energy efficiency in electricity production: A data envelopment analysis (DEA) approach for the G-20 countries. International Journal of Energy Economics and Policy, 5(1), 246–252.

Dosi, G., Llerena, P., & Labini, M. S. (2006). The relationships between science, technologies and their industrial exploitation: An illustration through the myths and realities of the so-called ‘European Paradox.’ Research Policy, 35(10), 1450–1464. https://doi.org/10.1016/j.respol.2006.09.012

Dutta, P., Jain, A., & Gupta, A. (2020). Performance analysis of non-banking finance companies using two-stage data envelopment analysis. Annals of Operations Research, 295, 91–116. https://doi.org/10.1007/s10479-020-03705-6

Egorov, N., & Pospelova, T. (2019). Assessment of Performance Indicators of Innovative Activity of Subjects of The Russian Arctic Based on The Triple Helix Model. In IOP Conference Series: Earth and Environmental Science, 272(3), 032178. IOP Publishing. https://doi.org/10.1088/1755-1315/272/3/032178

Etzkowitz, H. & Leydesdorff, L. (1995). The Triple Helix -- University-Industry-Government Relations: A Laboratory for Knowledge Based Economic Development. EASST Review, 14(1), 14–19. Available at SSRN: https://ssrn.com/abstract=2480085

Etzkowitz, H., & Leydesdorff, L. (2000). The dynamics of innovation: From National Systems and “Mode 2” to a Triple Helix of university-industry-government relations. Research Policy, 29(2), 109–123. https://doi.org/10.1016/S0048-7333(99)00055-4

Etzkowitz, H., & Zhou, C. (2017). The triple helix: University–industry–government innovation and entrepreneurship. Routledge.

Ferri, S., Fiorentino, R., Parmentola, A., & Sapio, A. (2019). Patenting or not? The dilemma of academic spin-off founders. Business Process Management Journal, 25(1), 84–103. https://doi.org/10.1108/BPMJ-06-2017-0163

Fini, R., Fu, K., Mathisen, M. T., Rasmussen, E., & Wright, M. (2017). Institutional determinants of university spin-off quantity and quality: A longitudinal, multilevel, cross-country study. Small Business Economics, 48(2), 361–391. https://doi.org/10.1007/s11187-016-9779-9

Galvao, A., Mascarenhas, C., Marques, C., Ferreira, J., & Ratten, V. (2019). Triple helix and its evolution: A systematic literature review. Journal of Science and Technology Policy Management, 10(3), 812–833. https://doi.org/10.1108/JSTPM-10-2018-0103

Guo, X., Lu, C. C., Lee, J. H., & Chiu, Y. H. (2017). Applying the dynamic DEA model to evaluate the energy efficiency of OECD countries and China. Energy, 134, 392–399. https://doi.org/10.1016/j.energy.2017.06.040

Halkos, G. E., Tzeremes, N. G., & Kourtzidis, S. A. (2016). Measuring sustainability efficiency using a two-stage data envelopment analysis approach. Journal of Industrial Ecology, 20(5), 1159–1175. https://doi.org/10.1111/jiec.12335

Halkos, G., & Petrou, K. N. (2019). Assessing 28 EU member states’ environmental efficiency in national waste generation with DEA. Journal of Cleaner Production, 208, 509–521. https://doi.org/10.1016/j.jclepro.2018.10.145

Hernández-Trasobares, A., & Murillo-Luna, J. L. (2020). The effect of triple helix cooperation on business innovation: The case of Spain. Technological Forecasting and Social Change, 161, 11. https://doi.org/10.1016/j.techfore.2020.120296

Huang, J., Xia, J., Yu, Y., & Zhang, N. (2018). Composite eco-efficiency indicators for China based on data envelopment analysis. Ecological Indicators, 85, 674–697. https://doi.org/10.1016/j.ecolind.2017.10.040

Ivanova, I., & Leydesdorff, L. (2015). Knowledge-generating efficiency in innovation systems: The acceleration of technological paradigm changes with increasing complexity. Technological Forecasting and Social Change, 96, 254–265. https://doi.org/10.1016/j.techfore.2015.04.001

Ivanova, I., Strand, Ø., & Leydesdorff, L. (2019). An eco-systems approach to constructing economic complexity measures: Endogenization of the technological dimension using Lotka-Volterra equations. Advances in Complex Systems, 22(1), 1850023. https://doi.org/10.1142/S0219525918500236

Jovanović, M. M., Rakićević, J. Đ., Jeremić, V. M., & Levi Jakšić, M. I. (2020). How to Measure Triple Helix Performance? A Fresh Approach. In A. Abu-Tair, A. Lahrech, K. Al Marri, B. Abu-Hijleh (Eds.), Proceedings of the II International Triple Helix Summit. THS 2018. Lecture Notes in Civil Engineering, 43, 245–261. Springer, Cham. https://doi.org/10.1007/978-3-030-23898-8_18

Keramatfar, A., & Esparaein, F. (2014). University, Industry, Government Measuring Triple Helix in the Netherlands, Russia, Turkey, Iran; Webometrics approach. In H. Etzkowitz, A. Uvarov, E. Galazhinsky (Eds.), Proceedings of Triple Helix XII International Conference «The Triple Helix and Innovation-Based Economic Growth: New Frontiers and Solutions» (pp. 209–212). TUSUR, Tomsk. https://doi.org/10.13140/2.1.3668.8646

Lawton Smith, H., & Ho, K. (2006). Measuring the performance of Oxford University, Oxford Brookes University and the government laboratories’ spin-off companies. Research Policy, 35(10), 1554–1568. https://doi.org/10.1016/j.respol.2006.09.022

Layos, J. J. M., & Peña, P. J. (2020). Can Innovation Save Us? Understanding the Role of Innovation in Mitigating the COVID-19 Pandemic in ASEAN-5 Economies. De La Salle University Business Notes & Briefings (BNB), 8(2). https://doi.org/10.2139/ssrn.3591428

Lebas, M. J. (1995). Performance measurement and performance management. International Journal of Production Economics, 41(1–3), 23–35. https://doi.org/10.1016/0925-5273(95)00081-X

Lerman, L. V., Gerstlberger, W., Lima, M. F., & Frank, A. G. (2021). How governments, universities, and companies contribute to renewable energy development? A municipal innovation policy perspective of the triple helix. Energy Research and Social Science, 71, 101854. https://doi.org/10.1016/j.erss.2020.101854

Levi Jakšić, M., Jovanović, M., & Petković, J. (2015). Technology entrepreneurship in the changing business environment—A triple Helix performance model. Amfiteatru Economic, 17(38), 422–440.

Leydesdorff, L. (2003). The mutual information of university-industry-government relations: An indicator of the Triple Helix dynamics. Scientometrics, 58, 445–467. https://doi.org/10.1023/A:1026253130577

Leydesdorff, L. (2012). The triple helix, quadruple helix, …, and an N-Tuple of helices: Explanatory models for analyzing the knowledge-based economy? Journal of the Knowledge Economy, 3(1), 25–35. https://doi.org/10.1007/s13132-011-0049-4

Leydesdorff, L., & Deakin, M. (2011). The triple-Helix model of smart cities: A neo-evolutionary perspective. Journal of Urban Technology, 18(2), 53–63. https://doi.org/10.1080/10630732.2011.601111

Leydesdorff, L., & Fritsch, M. (2006). Measuring the knowledge base of regional innovation systems in Germany in terms of a triple Helix dynamics. Research Policy, 35, 1538–1553. https://doi.org/10.1016/j.respol.2006.09.027

Leydesdorff, L., & Ivanova, I. (2016). “Open innovation” and “triple helix” models of innovation: Can synergy in innovation systems be measured? Journal of Open Innovation: Technology, Market, and Complexity, 2, 11. https://doi.org/10.1186/s40852-016-0039-7

Leydesdorff, L., & Meyer, M. (2006). Triple Helix indicators of knowledge-based innovation systems: Introduction to the special issue. Research Policy, 35(10), 1441–1449. https://doi.org/10.1016/j.respol.2006.09.016

Leydesdorff, L., & Smith, H. L. (2021). Triple, Quadruple, and Higher-Order Helices: Historical Phenomena and (Neo-)Evolutionary Models. SSRN: https://papers.ssrn.com/sol3/papers.cfm?abstract_id=3817410

Leydesdorff, L., & Strand, Ø. (2012a). The Swedish system of innovation: Regional synergies in a knowledge-based economy. Journal of the American Society for Information Science and Technology, 62(11), 2133–2146. https://doi.org/10.1002/asi.22895

Leydesdorff, L., & Strand, Ø. (2012b). Triple-Helix Relations and Potential Synergies Among Technologies, Industries, and Regions in Norway. Procedia: Social and Behavioural Sciences, 52, 1–4. https://doi.org/10.1016/j.sbspro.2012.09.435

Leydesdorff, L., & Sun, Y. (2009). National and international dimensions of the Triple Helix in Japan: University–industry–government versus international co-authorship relations. Journal of the American Society for Information Science and Technology, 60(4), 778–788. https://doi.org/10.1002/asi.20997

Leydesdorff, L., & Zawdie, G. (2010). The triple helix perspective of innovation systems. Technology Analysis and Strategic Management, 22(7), 789–804. https://doi.org/10.1080/09537325.2010.511142

Leydesdorff, L., Dolfsma, W., & Van der Panne, G. (2006). Measuring the knowledge base of an economy in terms of triple-Helix relations among ‘technology, organization, and territory.’ Research Policy, 35, 181–199. https://doi.org/10.1016/j.respol.2005.09.001

Leydesdorff, L., Perevodchikov, O., & Uvarov, A. (2015). Measuring triple-Helix synergy in the Russian innovation systems at regional, provincial, and national levels. Journal of the Association for Information Science and Technology, 66(6), 1229–1238. https://doi.org/10.1002/asi.23258

Luengo, M. J., & Obeso, M. (2013). El efecto de la triple hélice en los resultados de innovación. Revista De Administração De Empresas, 53, 388–399. https://doi.org/10.1590/S0034-75902013000400006

Mariano, E. B., Gobbo, J. A., Jr., de Castro Camioto, F., & do Nascimento Rebelatto, D. A. (2017). CO2 emissions and logistics performance: A composite index proposal. Journal of Cleaner Production, 163, 166–178. https://doi.org/10.1016/j.jclepro.2016.05.084

Marinković, S., Rakićević, J., & Levi Jakšić, M. (2016). Technology and Innovation Management Indicators and Assessment Based on Government Performance. Management: Journal of Sustainable Business and Management Solutions in Emerging Economies, 21(78), 1–10. https://doi.org/10.7595/management.fon.2016.0001

Mêgnigbêto, E. (2018). Modelling the Triple Helix of university-industry-government relationships with game theory: Core, Shapley value and nucleolus as indicators of synergy within an innovation system. Journal of Informetrics, 12(4), 1118–1132. https://doi.org/10.1016/j.joi.2018.09.005

Meyer, M., Grant, K., Morlacchi, P., & Weckowska, D. (2014). Triple Helix indicators as an emergent area of enquiry: A bibliometric perspective. Scientometrics, 99(1), 151–174. https://doi.org/10.1007/s11192-013-1103-8

Meyer, M., Siniläinen, T., & Utecht, J. (2003). Towards hybrid Triple Helix indicators: A study of university-related patents and a survey of academic inventors. Scientometrics, 58(2), 321–350. https://doi.org/10.1023/A:1026240727851

Msann, G., & Saad, W. (2020). Assessment of public sector performance in the MENA region: Data envelopment approach. International Review of Public Administration, 25(1), 1–21. https://doi.org/10.1080/12294659.2019.1702777

Niankara, I., Muqattash, R., Niankara, A., & Traoret, R. I. (2020). COVID-19 Vaccine development in a quadruple helix innovation system: Uncovering the preferences of the fourth Helix in the UAE. Journal of Open Innovation: Technology, Market, and Complexity, 6(4), 132. https://doi.org/10.3390/joitmc6040132

OECD. (2008). Handbook on Constructing Composite Indicators: Methodology and User Guide. OECD. Retrieved May 15, 2020, from https://www.oecd.org/sdd/42495745.pdf

OECD. (2019). Main Science and Technology Indicators. OECD. Retrieved December 10, 2019, from http://www.oecd.org/sti/msti.htm

OECD. (2020a). OECD Science, Technology and Innovation Outlook. OECD. Retrieved May 20, 2020, from https://www.oecd.org/sti/science-technology-innovation-outlook/

OECD. (2020b). Main Science and Technology Indicators. OECD. Retrieved May 20, 2020, from https://stats.oecd.org/Index.aspx?DataSetCode=MSTI_PUB

Ouyang, W., & Yang, J. B. (2020). The network energy and environment efficiency analysis of 27 OECD countries: A multiplicative network DEA model. Energy, 197, 117161. https://doi.org/10.1016/j.energy.2020.117161

Paradi, J. C., Sherman, H. D., & Tam, F. K. (2018). DEA Models Overview. In Data Envelopment Analysis in the Financial Services Industry. International Series in Operations Research & Management Science, 266 (pp. 3–40). Springer, Cham. https://doi.org/10.1007/978-3-319-69725-3_1

Park, H. W., & Leydesdorff, L. (2010). Longitudinal trends in networks of university-industry-government relations in South Korea: The role of programmatic incentives. Research Policy, 39, 640–649. https://doi.org/10.1016/j.respol.2010.02.009

Priego, J. L. O. (2003). A Vector Space Model as a methodological approach to the Triple Helix dimensionality: A comparative study of Biology and Biomedicine Centres of two European National Research Councils from a Webometric view. Scientometrics, 58(2), 429–443. https://doi.org/10.1023/a:1026201013738

Radovanović, S., Savić, G., Delibašić, B., & Suknović, M. (2021). FairDEA—Removing disparate impact from efficiency scores. European Journal of Operational Research, Online First. https://doi.org/10.1016/j.ejor.2021.12.001

Ranga, M., & Etzkowitz, H. (2013). Triple Helix systems: An analytical framework for innovation policy and practice in the knowledge society. Industry and Higher Education, 27(4), 237–262. https://doi.org/10.5367/ihe.2013.0165

Razak, A. A., & White, G. R. T. (2015). The Triple Helix model for innovation: A holistic exploration of barriers and enablers. International Journal of Business Performance and Supply Chain Modelling, 7(3), 278. https://doi.org/10.1504/ijbpscm.2015.071600

Rodrigues, C., & Melo, A. (2012). The triple Helix model as an instrument of local response to the economic crisis. European Planning Studies, 20(9), 1483–1496. https://doi.org/10.1080/09654313.2012.709063

Sá, E., Casais, B., & Silva, J. (2019). Local development through rural entrepreneurship, from the Triple Helix perspective: The case of a peripheral region in northern Portugal. International Journal of Entrepreneurial Behavior & Research, 25(4), 698–716. https://doi.org/10.1108/IJEBR-03-2018-0172

Samo, A. H., & Huda, N. U. (2019). Triple Helix and academic entrepreneurial intention: Understanding motivating factors for academic spin-off among young researchers. Journal of Global Entrepreneurship Research, 9, 12. https://doi.org/10.1186/s40497-018-0121-7

Savić, G., & Martić, M. (2017). Composite Indicators Construction by Data Envelopment Analysis: Methodological Background. In V. Jeremić, Z. Radojičić, & M. Dobrota (Eds.), Emerging Trends in the Development and Application of Composite Indicators (pp. 98–126). Hershey, PA: IGI Global. https://doi.org/10.4018/978-1-5225-0714-7.ch005

Scalia, M., Barile, S., Saviano, M., & Farioli, F. (2018). Governance for sustainability: A triple-helix model. Sustainability Science, 13, 1235–1244. https://doi.org/10.1007/s11625-018-0567-0

SCImago JR. (2020). Scimago Journal & Country Rank. Retrieved May 15, 2020, from https://www.scimagojr.com/countryrank.php

Shi, C., & Land, K. C. (2020). The data envelopment analysis and equal weights/minimax methods of composite social indicator construction: A methodological study of data sensitivity and robustness. Applied Research in Quality of Life. https://doi.org/10.1007/s11482-020-09841-2

Singer, S., & Oberman Peterka, S. (2012). Triple Helix evaluation: How to test a new concept with old indicators? Ekonomski Pregled, 63(11), 608–626.

Song, M. L., Zhang, L. L., Liu, W., & Fisher, R. (2013). Bootstrap-DEA analysis of BRICS’ energy efficiency based on small sample data. Applied Energy, 112, 1049–1055. https://doi.org/10.1016/j.apenergy.2013.02.064

Tarnawska, K., & Mavroeidis, V. (2015). Efficiency of the knowledge triangle policy in the EU member states: DEA approach. Triple Helix, 2, 17. https://doi.org/10.1186/s40604-015-0028-z

Tijssen, R. J. W. (2006). Universities and industrially relevant science: Towards measurement models and indicators of entrepreneurial orientation. Research Policy, 35(10), 1569–1585. https://doi.org/10.1016/j.respol.2006.09.025

Todeva, E., Alshamsi, A. M., & Solomon, A. (2019). Triple Helix Best Practices—The Role of Government/Academia/Industry in Building Innovation-Based Cities and Nations, Vol. 1. The Triple Helix Association.

Top, M., Konca, M., & Sapaz, B. (2020). Technical efficiency of healthcare systems in African countries: An application based on data envelopment analysis. Health Policy and Technology, 9(1), 62–68. https://doi.org/10.1016/j.hlpt.2019.11.010

Vierstraete, V. (2012). Efficiency in human development: A data envelopment analysis. The European Journal of Comparative Economics, 9(3), 425–443.

Villanueva-Felez, A., Molas-Gallart, J., & Escribá-Esteve, A. (2013). Measuring personal networks and their relationship with scientific production. Minerva, 51(4), 465–483. https://doi.org/10.1007/s11024-013-9239-5

World Bank. (2020). World Bank Education Statistics. Retrieved May 15, 2020, from https://databank.worldbank.org/source/education-statistics-%5e-all-indicators

Xu, H., & Liu, F. (2017). Measuring the efficiency of education and technology via DEA approach: Implications on national development. Social Sciences, 6(4), 136. https://doi.org/10.3390/socsci6040136

Xu, H.-Y., Zeng, R.-Q., Fang, S., Yue, Z.-H., & Han, Z.-B. (2015). Measurement methods and application research of triple Helix model in collaborative innovation management. Qualitative and Quantitative Methods in Libraries, 4(2), 463–482.

Ye, W., & Wang, Y. (2019). Exploring the triple helix synergy in Chinese national system of innovation. Sustainability, 11(23), 6678. https://doi.org/10.3390/su11236678

Yesilay, R. B., & Halac, U. (2020). An Assessment of Innovation Efficiency in EECA Countries Using the DEA Method. In S. Grima, E. Özen, & H. Boz (Eds.), Contemporary Issues in Business Economics and Finance, 104 (pp. 203–215). https://doi.org/10.1108/S1569-375920200000104014

Ziolo, M., Jednak, S., Savić, G., & Kragulj, D. (2020). Link between energy efficiency and sustainable economic and financial development in OECD Countries. Energies, 13(22), 5898. https://doi.org/10.3390/en13225898

Funding

No funding was received for conducting this study.

Author information

Contributions

Conceptualization of the study: MJ; Methodology: MJ and GS; Formal analysis and investigation: all authors; Writing—original draft preparation: MJ; Writing—review and editing: all authors; Resources: MJ and GS; Supervision: YC, MLJ. All authors read and approved the final manuscript.

Ethics declarations

Conflict of interest

All authors certify that they have no affiliations with or involvement in any organization or entity with any financial interest or non-financial interest in the subject matter or materials discussed in this manuscript.

Ethics approval

This paper does not contain any studies with human participants or animals performed by any of the authors.

Consent to participate

Not applicable.

Consent for publication

Not applicable.

Appendices

Appendix A Normative Control sub-index performance

| Country | GERD financed by govt (input) | Gov. researchers (input) | BERD financed by govt (input) | GOVERD financed by bus (input) | Civil GBARD for gen. uni. funds (input) | Gov. expenditure on tert. edu (input) | Number of patents (output) | Scientific & technical articles (output) | Intellectual property receipts (output) | Trade exports (output) | Efficiency (result) | Gov. rank (result) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Austria | 0.3558 | 0.1791 | 0.0500 | 0.0500 | 0.1110 | 0.6000 | 0.8051 | 0.1849 | 0.0050 | 0.0050 | 0.743 | 23 |
| Belgium | 0.3793 | 0.0500 | 0.0500 | 0.0500 | 0.0568 | 0.6000 | 0.5803 | 0.0953 | 0.3195 | 0.0050 | 0.843 | 21 |