Abstract

The beauty of science lies in its core assumption that it seeks to identify the truth, and as such, the truth stands alone and does not depend on the person who proclaims it. However, people's proclivity to succumb to various stereotypes is well known, and the scientific world may not be especially immune to the tendency to judge a book by its cover. An interesting example is geographical bias, that is, distorted judgments based on the geographical origin of, inter alia, a given work rather than on its actual quality or value. Here, we tested whether laypersons (N = 1532) and scientists (N = 480) are prone to geographical bias when rating scientific projects in one of three scientific fields (biology, philosophy, or psychology). We found that all participants favored biological projects from the USA over those from China; in particular, expert biologists were more willing to grant further funding to American researchers. In philosophy, however, laypersons rated Chinese projects as better than projects from the USA. Our findings indicate that geographical biases affect public perception of research and influence the results of grant competitions.


Introduction

Numerous economic studies have provided evidence that the country of origin of a given product influences how the product is perceived (Halkias et al., 2016; Ivens et al., 2015). Typically, products originating from prosperous, economically developed countries are perceived as better than products from less economically developed countries (Al-Sulaiti & Baker, 1998; Sean Burns & Fox, 2017; Verlegh & Steenkamp, 1999). For instance, products made in well-developed countries are rated as more trustworthy and more durable than products made in less-developed countries. These differences are even more pronounced for expensive and complex products (e.g., cars, computers) than for simpler and cheaper products (e.g., clothes, food; Al-Sulaiti & Baker, 1998; Magnusson et al., 2011; Verlegh & Steenkamp, 1999).

To date, experimental studies have focused mainly on ratings of consumer goods, without analyzing how stereotyping affects evaluations of the quality of scientific publications. Most scientific discoveries and publications come from the wealthiest countries. For instance, King (2004) found that 98% of the world's most highly cited papers originated from only 31 countries, leaving 2% for the remaining > 150 countries (Sumathipala et al., 2004). This disparity may lead to stereotypically lower ratings of scientific papers from those other countries, decreasing their chances of publication (Merton, 1973). Researchers from less-developed countries commonly share this view, believing that their papers are rejected because of a substantial editorial bias against studies conducted in their countries (Horton, 2000). Some studies support this assumption (Harris et al., 2017; Yousefi-Nooraie et al., 2006). For instance, at the American Journal of Roentgenology, the acceptance rate was 23.5% for manuscripts from North America, 12.8% for those from Europe, and 2.5% for those from Asia, whereas the rejection rate was 37.2%, 59%, and 80%, respectively (Kliewer et al., 2004). Most recently, the Association for Psychological Science honored 18 scientists at its annual awards, 17 of whom were from US institutions (Association for Psychological Science, 2020).

Notably, geographical bias may be more pronounced in some countries than in others. Opthof et al. (2002) observed that although American and British reviewers rated manuscripts from their own countries (the USA and the UK, respectively) more favorably, they differed substantially in their ratings of foreign manuscripts. Namely, reviewers from the USA rated non-USA manuscripts higher than non-USA reviewers did, whereas reviewers from the UK rated non-UK manuscripts lower than non-UK reviewers did.

However, it is still unclear whether the effect of affiliation on study ratings reflects the quality of the studies (i.e., researchers from well-developed countries and prestigious universities are assumed to be better scientists who conduct higher-quality research) or country bias. We hypothesize that:

1. A study's country of origin influences ratings of the study's impact on the research field, the appropriateness of its methodology, and the justifiability of its funding.

2. Geographical bias in the reception of a given study differs across disciplines.

3. The patterns of geographical bias in the reception of a given study differ depending on whether the study is rated by scholars or by laypersons.

Methods

The present studies were preregistered on OSF, and the preregistration is available from the OSF Registries website (see https://osf.io/rk2wh). The Institutional Ethics Committee at the Institute of Psychology, University of Wroclaw, approved the study protocols. The committee gave ethical approval both for conducting the study and for not revealing the study's true purpose to the participants. This was essential because knowing the true aim of the study could have affected participants' responses (e.g., participants might have modified their ratings to be perceived as more objective and tolerant). The study was conducted in 2019.

Study 1

Participants

The survey sample consisted of 1532 participants (876 women) from a non-scientific background: 510 were French, 516 were German, and 506 were British. Participants were recruited through an external survey firm and provided informed, written consent to participate in the study.

Materials and procedure

Participants were invited to take part in a study in which they would rate a scientific press release. Experts from three research fields (biology, philosophy, and psychology) created three different press releases. Each text described the findings of a recently 'conducted' research study in its field. All texts outlined fictional studies, but the statement that the press release 'will soon be published in the international press' prevented participants from uncovering their fictional nature. All press releases were short and of similar length (325 words for philosophy, 393 for biology, and 345 for psychology). Three versions of each press release were produced that varied in the researchers' country of origin (the USA, Poland, or China). In each version, the name of the lead researcher appeared three times (Johnson in the American version, Nowak in the Polish version, and Wong Xiaoping in the Chinese version), and the researcher's nationality and city (Washington in the American version, Warsaw in the Polish version, and Beijing in the Chinese version) were each mentioned once. We did not mention any particular university to avoid potential bias related to the prestige of a given institution.

In total, there were three press releases for the three research fields (biology, philosophy, and psychology), each in three versions attributing the study to a different country of origin (the USA, Poland, or China). Each participant was randomly assigned to read only one press release. All press releases are available in the Supplementary Material (deposited on OSF under Stimuli, see https://osf.io/zf3dj/).
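For Study 1, this design amounts to nine between-subjects cells (three fields × three countries of origin). The text does not say how the survey firm implemented randomization, so the following is only a minimal sketch assuming simple uniform randomization, in which each participant independently receives one of the nine versions. The names `FIELDS`, `COUNTRIES`, and `assign_conditions` are illustrative, not part of the authors' materials.

```python
import random
from collections import Counter
from itertools import product

FIELDS = ("biology", "philosophy", "psychology")
COUNTRIES = ("USA", "Poland", "China")

# All 3 x 3 = 9 press-release versions (field x country of origin).
CONDITIONS = list(product(FIELDS, COUNTRIES))


def assign_conditions(participant_ids, seed=None):
    """Give each participant exactly one press-release version, drawn uniformly at random.

    Simple (unrestricted) randomization is an assumption of this sketch;
    cell sizes therefore vary somewhat by chance.
    """
    rng = random.Random(seed)
    return {pid: rng.choice(CONDITIONS) for pid in participant_ids}


if __name__ == "__main__":
    assignment = assign_conditions(range(1532), seed=2019)
    for condition, n in sorted(Counter(assignment.values()).items()):
        print(condition, n)
```

Simple randomization keeps each assignment independent; a blocked scheme (shuffling repeated copies of the nine conditions) would instead equalize cell sizes.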

The questionnaire created for the present study is available in the Supplementary Material (https://osf.io/zf3dj/). Participants responded to ten questions on a seven-point Likert scale (ranging from 1 = 'I completely disagree' to 7 = 'I completely agree'). The first seven were buffer questions unrelated to our hypotheses and concerned the quality of the press release (e.g., 'The press release is interesting and riveting.'; 'The press release is of sufficient length.'). The last three items tested the main hypothesis of the present study: (1) 'The described study is likely to have a big impact on the research field'; (2) 'The described study was conducted appropriately (without major limitations)'; and (3) 'The grant funding provided to this research project seems justified.'

Study 2

Participants

The survey sample comprised 480 participants (205 women) from a scientific background, who were recruited (1) through email invitations sent to corresponding authors of papers published in journals indexed in the Journal Citation Reports in one of the three research areas (biology, philosophy, or psychology) and (2) with the help of an external survey company, which recruited doctoral graduates in biology, philosophy, and psychology. We obtained data on the nationality of 455 participants, among whom the five most numerous groups were British (N = 107), German (N = 101), French (N = 79), Polish (N = 52), and American (N = 34). See the Supplementary Material for a detailed list of the scientists' nationalities.

Materials and procedure

The press releases and questionnaire used in the second study were the same as those used in the first study, with the addition of an 11th question: 'How much of an expert are you to evaluate the press release?', with responses ranging from 1 = 'I do not feel like I have the expert knowledge to evaluate the press release', to 7 = 'I have the expert knowledge to evaluate the press release.'
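The expertise item is what the scholar subsamples in the Statistical analyses rely on (self-rated expertise of four or above, and a subsample without scholars from the USA). A minimal sketch of those filters follows; the record layout and field names are hypothetical, and the text does not specify whether "from the USA" refers to nationality or affiliation, so `country` stands in for either.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ScholarResponse:
    """Hypothetical record layout; field names are illustrative only."""
    discipline: str   # field of the press release rated: "biology", "philosophy" or "psychology"
    country: str      # respondent's country (nationality or affiliation; not specified in the text)
    expertise: int    # answer to the 11th (self-rated expertise) item, 1-7
    funding: int      # answer to the funding item, 1-7


def experts_only(responses, threshold=4):
    """Keep respondents whose self-rated expertise is at or above the threshold."""
    return [r for r in responses if r.expertise >= threshold]


def exclude_country(responses, country="USA"):
    """Drop respondents from the given country."""
    return [r for r in responses if r.country != country]


def in_discipline(responses, discipline):
    """Keep respondents who rated the press release from one discipline."""
    return [r for r in responses if r.discipline == discipline]


# Made-up records for illustration.
sample = [
    ScholarResponse("biology", "Poland", 5, 6),
    ScholarResponse("biology", "USA", 6, 7),
    ScholarResponse("biology", "Germany", 2, 4),
    ScholarResponse("philosophy", "France", 7, 5),
]

biologists = in_discipline(sample, "biology")
print(len(experts_only(biologists)))                   # 2
print(len(exclude_country(biologists)))                # 2
print(len(experts_only(exclude_country(biologists))))  # 1
```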

Statistical analyses

To test the hypotheses about geographical bias in ratings of a study's (from the USA, Poland, or China) impact on the research field, appropriateness of methodology, and justifiability of funding across three disciplines (biology, psychology, and philosophy), we ran 3 (country: USA, Poland, China) × 3 (discipline: biology, psychology, philosophy) ANOVAs including the interaction term, separately for scholars and non-scholars. For scholars, we repeated the analyses (1) only with scholars who described themselves as experts (answers of four or above on the question about being an expert) and (2) excluding scholars from the USA. We followed all significant results with post hoc analyses (Fisher's least significant difference procedure; Meier, 2006). All analyses were performed in Jamovi (1.8.1) and JASP (0.14.1.0).
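To make the mechanics concrete, here is a minimal plain-Python sketch; it is not the authors' Jamovi/JASP workflow. It shows a one-way ANOVA of the kind used for the within-discipline follow-ups (three country groups among 138 biologists give F(2, 135)), Fisher's LSD t statistics that reuse the ANOVA's pooled error term, and the degrees-of-freedom arithmetic for a full 3 × 3 factorial, which is consistent with reading the reported F(4, 1523) and F(4, 471) as tests of the country × discipline interaction. Turning the t statistics into p values would additionally need a t distribution (for example `scipy.stats.t.sf`), which is left out to keep the sketch dependency-free. The toy ratings are made up.

```python
from itertools import combinations
from math import sqrt
from statistics import mean


def one_way_anova(groups):
    """Return (F, df_between, df_within, MS_within) for a dict of name -> ratings."""
    all_values = [x for g in groups.values() for x in g]
    grand = mean(all_values)
    k, n = len(groups), len(all_values)
    ss_between = sum(len(g) * (mean(g) - grand) ** 2 for g in groups.values())
    ss_within = sum((x - mean(g)) ** 2 for g in groups.values() for x in g)
    df_between, df_within = k - 1, n - k
    ms_within = ss_within / df_within
    f_stat = (ss_between / df_between) / ms_within
    return f_stat, df_between, df_within, ms_within


def fisher_lsd_t(groups, ms_within):
    """Pairwise t statistics using the pooled ANOVA error term (Fisher's LSD).

    Each t is referred to a t distribution with the ANOVA's within-group df.
    """
    out = {}
    for a, b in combinations(groups, 2):
        ga, gb = groups[a], groups[b]
        se = sqrt(ms_within * (1 / len(ga) + 1 / len(gb)))
        out[(a, b)] = (mean(ga) - mean(gb)) / se
    return out


def interaction_df(levels_a, levels_b, n_total):
    """df of the A x B interaction and of the error term in a full factorial ANOVA."""
    return (levels_a - 1) * (levels_b - 1), n_total - levels_a * levels_b


if __name__ == "__main__":
    toy = {"USA": [1, 2, 3], "Poland": [4, 5, 6], "China": [7, 8, 9]}  # made-up ratings
    f_stat, df_b, df_w, ms_w = one_way_anova(toy)
    print(f"F({df_b},{df_w}) = {f_stat:.1f}")  # F(2,6) = 27.0
    for pair, t in fisher_lsd_t(toy, ms_w).items():
        print(pair, round(t, 3))
    print(interaction_df(3, 3, 1532))  # (4, 1523)
    print(interaction_df(3, 3, 480))   # (4, 471)
```

Fisher's LSD applies no multiplicity correction of its own; its protection comes from running the pairwise tests only after a significant omnibus F.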

Results

Results of Study 1

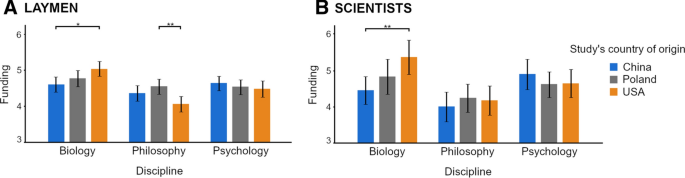

Our analysis of the answers provided by non-scientists (N = 1532; 34% from Germany, 33% from France, and 33% from Great Britain; 57% women) revealed a significant country effect on ratings of the magnitude of the study’s impact on the research field (F(4,1523) = 5.8; p < 0.001) and on willingness to grant funding to the research project (F(4,1523) = 4.4; p = 0.001). The effect on ratings of the appropriateness of the study methodology approached statistical significance (F(4,1523) = 2.3; p = 0.05). In biology, participants rated American studies higher (p < 0.05) than Chinese studies on all three scales: Impact (MUSA = 5.03, SDUSA = 1.34 vs MChina = 4.69, SDChina = 1.32), Methodology (MUSA = 4.97, SDUSA = 1.31 vs MChina = 4.66, SDChina = 1.18), and Funding (MUSA = 5.03, SDUSA = 1.48 vs MChina = 4.61, SDChina = 1.23). In philosophy, participants rated American studies as having a lower impact on the field (MUSA = 3.98, SDUSA = 1.43) than Chinese (MChina = 4.39, SDChina = 1.60; p < 0.01) or Polish studies (MPoland = 4.53, SDPoland = 1.42; p < 0.01), and as less justifiably funded (MUSA = 4.08, SDUSA = 1.41) than Polish studies (MPoland = 4.55, SDPoland = 1.37; p < 0.01). In psychology, Chinese studies were rated as having a higher impact (MChina = 4.70, SDChina = 1.37) than studies from the USA (MUSA = 4.26, SDUSA = 1.51; p < 0.01). All other within-discipline comparisons were non-significant (see Fig. 1 and Figs. S1–S2 in the Supplementary Material). For detailed descriptive statistics of the dependent variables, see Table S1 in the Supplementary Material.

Fig. 1 Granting funds to support research projects. Means and 95% confidence intervals of non-scientists' (N = 1532; Panel A) and scientists' (N = 480; Panel B) ratings of their willingness to grant funding to the given research project, by discipline (biology, philosophy, or psychology) and country of origin of the study (China, Poland, or the USA). * p < 0.05; ** p < 0.01

Results of Study 2

The same analysis as for non-scientists was conducted for scholars (N = 480 from 37 countries: 138 biologists, 163 philosophers, and 179 psychologists; 43% women; see the Supplementary Material). The results were statistically non-significant for ratings of the magnitude of the study’s impact on the research field (F(4,471) = 0.71; p = 0.58), ratings of the appropriateness of the study methodology (F(4,471) = 1.16; p = 0.32), and ratings of willingness to grant funding to the research project (F(4,471) = 2.1; p = 0.07) (see Figs. S1–S2 in the Supplementary Material).

Nevertheless, in biology, even though the raters were biologists, their willingness to grant funding to the research project was biased by the study's country of origin (F(2,135) = 5.19; p < 0.01). Biology researchers considered granting funds to biological research conducted by American researchers (MUSA = 5.38, SDUSA = 1.39) more reasonable than granting funds to the same research conducted by Chinese researchers (MChina = 4.46, SDChina = 1.53; post hoc test: p < 0.01) (see Fig. 1). Moreover, this effect remained significant (1) when only experts were considered, that is, biology researchers who rated their expert knowledge to evaluate the press release at four or higher on the seven-point scale (funding for American researchers: MUSA = 5.73, SDUSA = 1.24; funding for Chinese researchers: MChina = 4.67, SDChina = 1.56; F(2,49) = 4.2; p = 0.02); and (2) when researchers from the USA were excluded from the analysis (funding for American researchers: MUSA = 5.28, SDUSA = 1.37; funding for Chinese researchers: MChina = 4.49, SDChina = 1.52; F(2,85) = 4.8; p = 0.03). Because a conservative approach advises against performing post hoc tests when the main effect is non-significant (as in the present case: p = 0.07), we also performed a Bayesian independent samples t-test to evaluate the likelihood of the alternative hypothesis relative to the null hypothesis (Jarosz & Wiley, 2014). When comparing willingness to fund a study from China versus the USA, we found that the data were 24 times more likely under the alternative hypothesis (that the American study is rated as more justifiable for funding than the Chinese study) than under the null hypothesis, which indicates strong evidence in support of the alternative hypothesis (Raftery, 1995). The results were non-significant for philosophy and psychology. See Table S2 in the Supplementary Material for a detailed comparison of the dependent variables' descriptive statistics. We also tested for foreign bias (all results are presented in the Supplementary Material). In brief, American scholars rated non-American studies significantly worse than non-American scholars did. Note, however, that these analyses were based on small samples and do not allow any reliable conclusions to be drawn.
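The verbal label "strong evidence" follows Raftery's (1995) bands for a Bayes factor: 1–3 weak, 3–20 positive, 20–150 strong, and above 150 very strong (equivalently 0–2, 2–6, 6–10, and above 10 on his 2 ln B scale). A minimal sketch of that lookup follows; the function name is illustrative, and assigning exact boundary values (e.g., exactly 20) to the lower band is a convention chosen here, not something the source specifies.

```python
import math


def raftery_label(bf10):
    """Verbal evidence category for a Bayes factor BF10 >= 1, after Raftery (1995).

    Bands: 1-3 weak, 3-20 positive, 20-150 strong, above 150 very strong.
    Boundary values are assigned to the lower band (a convention of this sketch).
    """
    if bf10 < 1:
        raise ValueError("BF10 < 1 favors the null; classify 1 / bf10 instead")
    if bf10 <= 3:
        return "weak"
    if bf10 <= 20:
        return "positive"
    if bf10 <= 150:
        return "strong"
    return "very strong"


bf = 24  # Bayes factor reported for biologists' funding ratings (USA vs China)
print(raftery_label(bf))           # strong
print(round(2 * math.log(bf), 2))  # 6.36, inside the 6-10 "strong" band of the 2 ln B scale
```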

Discussion

Although there is an ongoing discussion about gender biases in the scientific peer-review process (Caelleigh et al., 2003; Gilbert et al., 1994; Johansson et al., 2002; van den Besselaar et al., 2018), geographical bias is much less recognized and debated (Song et al., 2010). Nevertheless, it has been suggested to substantially affect the chances of papers being published and of research grants being awarded (Kliewer et al., 2004; Opthof et al., 2002). Some of the findings may seem intuitive, such as the same paper receiving a better assessment of its scientific value when written in English than in another language (e.g., a Scandinavian language; Nylenna et al., 1994), whereas others may seem less intuitive. For instance, Ross and colleagues (2006) provided evidence that blinding reviewers to authors' identities significantly reduced the tendency of reviewers from countries where English is an official language to prefer abstracts by American authors over those by authors from other countries.

Interestingly, the results of our study, which was preregistered and conducted with a relatively large sample, showed that geographical bias might not be as pronounced as previously thought (Song et al., 2010; Thaler et al., 2015). The finding that geographical origin had only a minor impact on ratings by reviewers with a scientific background corroborates some previous findings (Harris et al., 2015). In one case, however, we found a clear preference for American authors: biologists indicated that granting funds to a study allegedly conducted by American authors was more reasonable than granting funds to the very same study allegedly conducted by Chinese authors. This geographical bias may be a considerable barrier for Chinese scientists (and most likely for scientists from other developing countries) in obtaining research grants, especially in the current competitive climate, in which a single point may decide who wins or loses in the race for funding. Therefore, geographical bias may be one of the factors sustaining the Matthew effect in science funding (Merton, 1973).

We observed much larger differences among laypersons' ratings of the scientific press releases than the relatively modest differences among experts' ratings (except for funding biological studies). Surprisingly, laypersons' assessments were sometimes counterintuitive, as they did not always favor American authors. In philosophy, laypersons rated the study attributed to Chinese authors higher than the one attributed to American authors, which, we believe, might be explained by the study's strong association with Confucius, a renowned Chinese philosopher (Ames & Rosemont, 1999).

If we extrapolate the present findings to a real-world example (see Note 1), people would judge the same biological study as having a 5% lower impact on the research field, a 5.8% less appropriate methodology, and 9.7% less justified funding if it originated from China rather than the USA. Conversely, the same philosophical study would be rated as having a 4.1% lower impact, a 2.8% less appropriate methodology, and 3.2% less justified funding if it allegedly originated from the USA rather than China. Future studies could build on these findings by investigating the real-life outcomes of international grant competitions, since, for instance, biological applications from Chinese authors may be systematically undervalued compared with applications from American authors.
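The note on these percentages says that the mean coefficient estimate for scholars and laypersons was expressed as a percentage "concerning a 1–7-point scale". It does not state whether the denominator is the scale maximum (7) or the scale range (6), and the coefficient estimates are not reported here, so the sketch below does not reproduce the published figures. It only shows both readings side by side for a made-up difference; the names are illustrative. The choice matters: the same 0.35-point difference is 5.0% of 7 but about 5.8% of 6.

```python
SCALE_MIN, SCALE_MAX = 1, 7  # the seven-point Likert scale used in both studies


def percent_of_scale(difference, denominator):
    """Express a difference in rating points as a percentage of `denominator`."""
    return 100 * difference / denominator


diff = 0.35  # hypothetical difference in scale points, NOT a value from the study
print(percent_of_scale(diff, SCALE_MAX))              # relative to the scale maximum (7)
print(percent_of_scale(diff, SCALE_MAX - SCALE_MIN))  # relative to the scale range (6)
```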

Although the present studies contribute to a better understanding of geographical bias, we want to emphasize their main limitation: participants read and evaluated press releases, not full papers or grant proposals. Thus, they based their opinions on superficial information without extensively reviewing the given work. In contrast, reviewers of grant proposals can usually read at least a shortened synopsis, not only an abstract.

In conclusion, we want to emphasize that geographical biases should not be underestimated. There are many examples of how public opinion can affect research policy (Pullman et al., 2013), which shows the importance of the public perception of research, especially nowadays in the era of social media and trending news. Moreover, as our results corroborate previous research conducted within the realms of biology (e.g., Kliewer et al., 2004), we hypothesize that there may be a pronounced pattern of geographical bias, especially in funding biology studies. Thus, we believe that more studies are needed to evaluate the impact of geographical biases in the scientific world.

Availability of data and material

The data for the findings of this study are available from OSF (https://osf.io/zf3dj/).

Notes

1. To make this comparison, we computed the mean of the coefficient estimates for scholars and laypersons and expressed it as a percentage of the 1–7-point scale used in this study, to make the comparison more tangible.

References

Al-Sulaiti, K. I., & Baker, M. J. (1998). Country of origin effects: A literature review. Marketing Intelligence and Planning, 16(3), 150–199. https://doi.org/10.1108/02634509810217309

Ames, R. T., & Rosemont, H., Jr. (1999). The analects of Confucius: A philosophical translation. Ballantine.

Association for Psychological Science. (2020). APS Awards. https://www.psychologicalscience.org/2020awards/

Caelleigh, A. S., Hojat, M., Steinecke, A., & Gonnella, J. S. (2003). Effects of reviewers’ gender on assessments of a gender-related standardized manuscript. Teaching and Learning in Medicine, 15(3), 163–167. https://doi.org/10.1207/S15328015TLM1503_03

Gilbert, J. R., Williams, E. S., & Lundberg, G. D. (1994). Is there gender bias in JAMA’s peer review process? JAMA: the Journal of the American Medical Association, 272(2), 139–142. https://doi.org/10.1001/jama.1994.03520020065018

Halkias, G., Davvetas, V., & Diamantopoulos, A. (2016). The interplay between country stereotypes and perceived brand globalness/localness as drivers of brand preference. Journal of Business Research, 69(9), 3621–3628. https://doi.org/10.1016/j.jbusres.2016.03.022

Harris, M., Macinko, J., Jimenez, G., Mahfoud, M., & Anderson, C. (2015). Does a research article’s country of origin affect perception of its quality and relevance? A national trial of US public health researchers. BMJ Open, 5(12), e008993. https://doi.org/10.1136/bmjopen-2015-008993

Harris, M., Macinko, J., Jimenez, G., & Mullachery, P. (2017). Measuring the bias against low-income country research: An implicit association test. Globalization and Health, 13(1), 1–9. https://doi.org/10.1186/s12992-017-0304-y

Horton, R. (2000). North and south: Bridging the information gap. Lancet, 355(9222), 2231–2236. https://doi.org/10.1016/S0140-6736(00)02414-4

Ivens, B. S., Leischnig, A., Muller, B., & Valta, K. (2015). On the role of brand stereotypes in shaping consumer response toward brands: An empirical examination of direct and mediating effects of warmth and competence. Psychology and Marketing, 32(8), 808–820. https://doi.org/10.1002/mar.20820

Jarosz, A. F., & Wiley, J. (2014). What are the odds? A practical guide to computing and reporting Bayes factors. Journal of Problem Solving, 7(1), 2–9. https://doi.org/10.7771/1932-6246.1167

Johansson, E. E., Risberg, G., Hamberg, K., & Westman, G. (2002). Gender bias in female physician assessments: Women considered better suited for qualitative research. Scandinavian Journal of Primary Health Care, 20(2), 79–84. https://doi.org/10.1080/02813430215553

King, D. A. (2004). The scientific impact of nations. Nature, 430(6997), 311–316. https://doi.org/10.1038/430311a

Kliewer, M. A., DeLong, D. M., Freed, K., Jenkins, C. B., Paulson, E. K., & Provenzale, J. M. (2004). Peer review at the American Journal of Roentgenology: How reviewer and manuscript characteristics affected editorial decisions on 196 major papers. American Journal of Roentgenology, 183(6), 1545–1550. https://doi.org/10.2214/ajr.183.6.01831545

Magnusson, P., Westjohn, S. A., & Zdravkovic, S. (2011). “What? I thought Samsung was Japanese”: Accurate or not, perceived country of origin matters. International Marketing Review, 28(5), 454–472. https://doi.org/10.1108/02651331111167589

Meier, U. (2006). A note on the power of Fisher’s least significant difference procedure. Pharmaceutical Statistics, 5(4), 253–263. https://doi.org/10.1002/pst.210

Merton, R. K. (1973). The Matthew effect in science. In N. W. Storer (Ed.), The sociology of science: Theoretical and empirical investigations (pp. 439–459). University of Chicago Press.

Nylenna, M., Riis, P., & Karlsson, Y. (1994). Multiple blinded reviews of the same two manuscripts: effects of referee characteristics and publication language. JAMA: the Journal of the American Medical Association, 272(2), 149–151. https://doi.org/10.1001/jama.1994.03520020075021

Opthof, T., Coronel, R., & Janse, M. J. (2002). The significance of the peer review process against the background of bias: Priority ratings of reviewers and editors and the prediction of citation, the role of geographical bias. Cardiovascular Research, 56(3), 339–346. https://doi.org/10.1016/S0008-6363(02)00712-5

Pullman, D., Zarzeczny, A., & Picard, A. (2013). Media, politics and science policy: MS and evidence from the CCSVI Trenches. BMC Medical Ethics, 14(1), 6. https://doi.org/10.1186/1472-6939-14-6

Raftery, A. E. (1995). Bayesian model selection in social research. In D. M. Melamed & M. Vuolo (Eds.), Sociological methodology (pp. 111–196). Blackwell.

Ross, J. S., Gross, C. P., Desai, M. M., Hong, Y., Grant, A. O., & Daniels, S. R. (2006). Effect of blinded peer review on abstract acceptance. Journal of the American Medical Association, 295(14), 1675–1680. https://doi.org/10.1001/jama.295.14.1675

Sean Burns, C., & Fox, C. W. (2017). Language and socioeconomics predict geographic variation in peer review outcomes at an ecology journal. Scientometrics, 113, 1113–1127. https://doi.org/10.1007/s11192-017-2517-5

Song, F., Parekh, S., Hooper, L., Loke, Y. K., Ryder, J., & Sutton, A. J. (2010). Dissemination and publication of research findings: An updated review of related biases. Health Technology Assessment, 14(8), 1–220. https://doi.org/10.3310/hta14080

Sumathipala, A., Siribaddana, S., & Patel, V. (2004). Under-representation of developing countries in the research literature: Ethical issues arising from a survey of five leading medical journals. BMC Medical Ethics, 5, 1–6. https://doi.org/10.1186/1472-6939-5-5

Thaler, K., Kien, C., Nussbaumer, B., Van Noord, M. G., Griebler, U., Klerings, I., & Gartlehner, G. (2015). Inadequate use and regulation of interventions against publication bias decreases their effectiveness: A systematic review. Journal of Clinical Epidemiology, 68(7), 792–802. https://doi.org/10.1016/j.jclinepi.2015.01.008

van den Besselaar, P., Sandström, U., & Schiffbaenker, H. (2018). Studying grant decision-making: A linguistic analysis of review reports. Scientometrics, 117, 313–329. https://doi.org/10.1007/s11192-018-2848-x

Verlegh, P. W. J., & Steenkamp, J. B. E. M. (1999). A review and meta-analysis of country-of-origin research. Journal of Economic Psychology, 20(5), 521–546. https://doi.org/10.1016/S0167-4870(99)00023-9

Yousefi-Nooraie, R., Shakiba, B., & Mortaz-Hejri, S. (2006). Country development and manuscript selection bias: A review of published studies. BMC Medical Research Methodology, 6(1), 37. https://doi.org/10.1186/1471-2288-6-37

Funding

The study was made possible due to funds from the University of Wroclaw.

Author information


Contributions

All authors designed the study. Piotr Sorokowski and Marta Kowal collected the data, conducted statistical analyses, and wrote the manuscript. All authors revised the manuscript.


Ethics declarations

Conflict of interest

All authors declare no competing interests.


Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Kowal, M., Sorokowski, P., Kulczycki, E. et al. The impact of geographical bias when judging scientific studies. Scientometrics 127, 265–273 (2022). https://doi.org/10.1007/s11192-021-04176-7


DOI: https://doi.org/10.1007/s11192-021-04176-7