Abstract

Identifying the evolution path of a research field is essential to scientific and technological innovation. There have been many attempts to identify technology evolution paths based on topic models or social network analysis, but many of them have methodological deficiencies. First, many studies consider only a single type of information in the scientific literature (text or citations), which may lead to incomplete technology path mapping. Second, the number of topics in each period cannot be determined automatically, which makes dynamic topic tracking difficult. Third, data mining methods are not effectively combined with visual analysis, which reduces the efficiency and flexibility of mapping. In this study, we developed a method for mapping the technology evolution path using a novel non-parametric topic model, the citation-involved Hierarchical Dirichlet Process (CIHDP), to achieve better topic detection and tracking in scientific literature. To better present and analyze the path, D3.js is used to visualize the splitting and fusion of evolutionary paths. We applied the model to the artificial intelligence research domain, and the successful mapping of its evolution path demonstrates the validity and merits of the proposed method. After incorporating citation information, we found that CIHDP can map a complete path evolution process and performs better than the Hierarchical Dirichlet Process (HDP) and LDA. This method can help researchers understand and analyze the development of technical topics. Moreover, it can be applied to map science or technology within an innovation ecosystem. It may also interest researchers of technology evolution paths and policymakers.


Introduction

The technology evolution path describes the emergence, transition, and extinction of topics in a research field. It helps researchers understand the history and current state of the field so that they can quickly identify research hotspots and gaps.

In the study of technology evolution paths, discovering and presenting topic information is a crucial problem. In recent years, an increasing number of researchers have used machine-learning methods to identify the development of specific research domains from literature data. Probabilistic topic models are useful for detecting research topics and mining research hotspots. In particular, Probabilistic Latent Semantic Analysis (PLSA) (Hofmann 1999) and Latent Dirichlet Allocation (LDA) (Blei et al. 2003) have drawn much attention in topic discovery because they are effective at analyzing sparse, high-dimensional data such as literature data (Jeong and Min 2014; Yau et al. 2014).

Two main questions usually arise when topic models are used for technology mapping. First, is non-textual technical information useful for analyzing technology evolution, and if so, how can it be incorporated into the topic model? Second, can technical topics be identified and tracked dynamically across periods? Doing so would let us discover the evolution path of a technology more flexibly.

Most existing topic models only consider textual information. However, scientific literature contains textual information, citation information, co-author information, and so on. When topic models using only textual information are applied to analyze scientific literature, many useful features of literature are ignored. In particular, the citation relationship, which also contains robust technical evolution information, cannot be ignored when analyzing the development of specific research domains (Kajikawa et al. 2007; Zhou et al. 2016, 2019b, 2020).

Determining the number of topics is essential for technology evolution analysis, but it is usually troublesome. Topic models such as PLSA, LDA, and their extensions generally require the number of topics to be preset. Two strategies are commonly used to handle this problem. The first is to run experiments repeatedly and compare the results using quantitative indicators such as perplexity or Normalized Mutual Information (NMI) to determine the optimal number of topics. However, this requires extensive experimentation, and the best result depends on the chosen indicator. The second is to set a relatively large number of topics and then aggregate similar topics using Kullback–Leibler divergence, cosine similarity, or another measure. With this approach, the topics finally extracted are often hard to interpret (Griffiths and Steyvers 2004; Yao et al. 2011; Ding and Chen 2014).

When performing technology mapping, we want the algorithm to determine the number of topics automatically from the structure of the data. This not only adapts well to different data but also allows changes in technical topics to be tracked dynamically over time. The Hierarchical Dirichlet Process (HDP), introduced by Teh et al. (2006), addresses this problem: by exploiting the ability of Dirichlet processes to generate an unbounded number of clusters, HDP can automatically determine the appropriate number of mixture components.
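The stick-breaking construction is one standard way to see why a Dirichlet process yields an unbounded number of clusters. The Python sketch below is illustrative only: the function name, truncation level, and seed are our own choices, and it is not how the paper's model is implemented.

```python
import random


def stick_breaking_weights(alpha: float, truncation: int, seed: int = 0) -> list:
    """First `truncation` mixture weights of a Dirichlet process (stick-breaking).

    Each step breaks off a Beta(1, alpha) fraction of the stick that remains,
    so weights shrink but never run out: the number of clusters is unbounded.
    """
    rng = random.Random(seed)
    remaining = 1.0  # probability mass not yet assigned to any cluster
    weights = []
    for _ in range(truncation):
        fraction = rng.betavariate(1.0, alpha)
        weights.append(remaining * fraction)
        remaining *= 1.0 - fraction
    return weights


if __name__ == "__main__":
    # A larger concentration alpha spreads mass over more clusters.
    for alpha in (0.5, 5.0):
        w = stick_breaking_weights(alpha, truncation=20)
        print(alpha, [round(x, 3) for x in w[:5]], "mass in first 20:", round(sum(w), 3))
```

With a small alpha, most of the mass sits in the first few clusters; with a large alpha, it is spread thinly, which is why the effective number of topics can adapt to the data.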

In this paper, we combine textual and citation information on the basis of HDP to propose a new non-parametric topic model (one that does not require the number of topics to be preset) that better maps technology evolution paths. We name this model the citation-involved Hierarchical Dirichlet Process (CIHDP). Using citation information, we apply node2vec to convert the papers in the citation network into vectors, and then calculate the similarity of each pair of papers in the data set to construct a similarity matrix. Unlike in HDP, the topic distribution of each document in CIHDP is influenced by all other documents to different degrees, determined by the similarity between the two articles: the lower their similarity in the citation network, the smaller the influence. As in previous work, we use Gibbs sampling to estimate the model parameters. Quantitative experiments show that CIHDP achieves better topic modeling performance than LDA, and a case analysis shows that CIHDP finds more complete path evolution information than HDP.

Finally, the technology evolution path tracked dynamically by CIHDP is visualized with D3.js. For those less familiar with technology mapping methods, visualization helps in adjusting the topic model (for example, tuning its parameters) and makes it easier to understand and discuss the technology path.

The rest of this paper is organized as follows. The “Related work” section reviews prior research. The “Methodology” section presents the overall research process and the methods used. The “Result and discussion” section presents a case study in the field of AI research and evaluates the validity of the model. The “Conclusions” section summarizes our key findings and future work. Finally, the Appendix provides details of the improved algorithm and the experimental results. The code of CIHDP and sample data are available in a GitHub repository (see Footnote 1).

Related work

Technology evolution path

As a powerful representation of technological development, the technology evolution path can be used to track historical development, explore knowledge diffusion, and predict future trends (Adomavicius et al. 2007; Yu 2011; Huang et al. 2016; Huang et al. 2020). Given the explosive growth of the literature, analysis of technology evolution paths is usually based on data mining. Existing research on technology paths using literature data falls into two kinds: bibliometric methods and topic-model-based methods.

Most bibliometric methods are based on citation analysis of scientific and technological literature (Zhou and Minshall 2014; Li et al. 2015, 2016b; Zhou et al. 2018; Xu et al. 2017, 2020; Nordensvard et al. 2018; Pan et al. 2019; Wang et al. 2018; Liu et al. 2019; Miao et al. 2020). Some methods can be used to extract simple information, such as keywords, influential authors, or core articles in a field, whose changes over time can then be analyzed to study the evolution of technology. These methods include co-word analysis (Callon et al. 1983), co-author analysis (Braun et al. 2001), bibliographic coupling (Kessler 1963), and co-citation analysis (Small 1973). Based on the citation network, some researchers also use main path analysis for path identification. For instance, Xiao et al. (2014) explored knowledge diffusion paths through main path analysis, and Kim and Shin (2018) identified the main path of high-voltage direct current transmission technology. Recently, researchers have begun to use clustering methods based on citation networks, which can identify the major research communities in a field. Chen et al. (2013) clustered a patent network and found that fuel cell technology consisted of several communities (clusters), which were then used to detect and analyze technology evolution. However, paths drawn from citation information alone are not convincing, and bibliometric methods fail to consider citation and text information together.

Approaches based on topic models have gained increasing attention in recent years (Kong et al. 2017; Zhou et al. 2019a; Li et al. 2020). The content of the literature contains much information about technology development, and by analyzing the distribution of words in a corpus, topic models perform well at extracting the latent topics of documents. Among the early methods, TF-IDF, PLSA, and LDA are the most frequently used for mining topics in a corpus. Based on topic models, some researchers explore how technology topics change from period to period to analyze the development path of a technology. For example, Zhang et al. (2016) used TF-IDF to cluster associated terms and phrases into meaningful technological topics and forecast future developments. Xu (2020) explored a method for identifying innovation paths based on the linkage of scientific and technological topics. Wei et al. (2020) traced the evolution of 3D printing technology in China using LDA-based mining of patent abstracts.

Nevertheless, topic models such as LDA also have a methodological flaw: the number of topics must be set in advance. Because HDP can automatically determine the number of topics in a given corpus, it has attracted increasing attention from scholars. See the next section for an introduction to topic modeling.

To sum up, of the two approaches to path recognition, bibliometric methods tend to use citation information, while topic model methods are good at exploiting large amounts of textual information. However, a path drawn from one type of information alone is not convincing, and there have been few attempts to combine citation and textual information. In this paper, we map the technology evolution path with a novel method that integrates citation information into the topic model.

Topic modeling

With the rapid growth of text data and continuous advances in machine learning, many latent topic discovery methods have been proposed (Hofmann 1999; Blei et al. 2003; Blei and Lafferty 2006; Teh et al. 2006; Chang and Blei 2010; Rosen-Zvi et al. 2012; Cheng et al. 2014; Fu et al. 2016; Chen et al. 2020). We first outline the research context of topic modeling and then introduce LDA and HDP, two typical and representative algorithms.

Most topic models, such as LDA and HDP, treat the corpus only as bags of words. However, many datasets contain other information: web pages contain hyperlinks, comment text comes with user information, and scientific literature carries citation and author information. Because LDA, PLSA, and HDP are highly modular, they can be extended easily. For example, BTM integrates word co-occurrence information into LDA to infer topics from large-scale short texts (Cheng et al. 2014).

Similarly, On-Line LDA (Alsumait et al. 2008) and Dynamic Online HDP (Fu et al. 2016) integrate time information into LDA and HDP, respectively, to address topic detection and tracking. Some researchers integrated author information into LDA, PLSA, or HDP to mine author–topic distributions (Steyvers et al. 2004; Rosen-Zvi et al. 2012; Ming and Hsu 2016); for example, Dai and Storkey (2009) integrated author information into HDP to solve the author disambiguation problem. Other researchers integrated information beyond authorship, such as recipient information (Mccallum et al. 2007) and conference information (Jie et al. 2008).

These models have been shown to perform well on specific tasks and data. However, when they are applied to scientific literature, citation information is ignored, even though citations indicate strong topical relevance between papers.

Several studies have already incorporated citation information into topic modeling, and these works fall into two categories. The first treats citations as undirected links. For instance, the Relational Topic Model (RTM) (Chang and Blei 2010) uses LDA to model each document and uses binary variables indicating whether a link exists between two documents to optimize the model parameters. The second treats citations as directed links. Cohn and Hofmann (2000) combined PLSA and PHITS into a joint probabilistic model that generates terms and citations under a common set of underlying factors, an idea that link-LDA carries over to LDA. Building on link-LDA, pairwise-link LDA uses the Mixed Membership Stochastic Block (MMSB) model to generate each citation relationship separately, explicitly modeling the topicality of citations. Moreover, link-PLSA-LDA divides the data into a citing part and a cited part and uses the same global parameters to generate terms and citations (Nallapati et al. 2008); it models the cited part with PLSA and the citing part with LDA, which reduces the computational cost compared with pairwise-link LDA. Kataria et al. (2010) proposed cited-LDA and cited-PLSA-LDA, which extend link-LDA and link-PLSA-LDA by explicitly modeling how the influence of words propagates through citations. In these two models, however, whether a word belongs to a citation is treated as known information, whereas the Inheritance Topic Model (ITM) treats it as uncertain information (He et al. 2009).

Specifically, LDA (Blei et al. 2003) treats each document as a bag of words. It assumes that the corpus contains a limited number of hidden topics, fixed as a constant, so the number of topics must be preset before the model is used. LDA extracts latent topics from the corpus, each composed of a set of words with different weights. It also yields the probability of each topic in the corpus, which can be understood as the topic's proportion in the corpus.
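LDA's generative story makes the fixed topic number explicit. The sketch below generates a toy corpus from that story in plain Python; the function names, the symmetric priors `alpha` and `eta`, and their default values are illustrative assumptions, and fitting LDA (inference) is a separate step not shown here.

```python
import random


def sample_dirichlet(rng: random.Random, alphas: list) -> list:
    """Draw from Dirichlet(alphas) by normalizing independent Gamma draws."""
    draws = [rng.gammavariate(a, 1.0) for a in alphas]
    total = sum(draws)
    if total == 0.0:  # guard against underflow with very small alphas
        return [1.0 / len(alphas)] * len(alphas)
    return [d / total for d in draws]


def generate_lda_corpus(n_docs, doc_len, vocab, n_topics, alpha=0.5, eta=0.5, seed=0):
    """Generate a toy corpus from LDA's generative process.

    n_topics (K) must be fixed up front: this is the preset topic number.
    """
    rng = random.Random(seed)
    # Each topic is a distribution over the whole vocabulary.
    topics = [sample_dirichlet(rng, [eta] * len(vocab)) for _ in range(n_topics)]
    corpus = []
    for _ in range(n_docs):
        theta = sample_dirichlet(rng, [alpha] * n_topics)  # document-topic proportions
        doc = []
        for _ in range(doc_len):
            z = rng.choices(range(n_topics), weights=theta)[0]  # topic of this word
            doc.append(rng.choices(vocab, weights=topics[z])[0])  # word drawn from topic z
        corpus.append(doc)
    return topics, corpus


if __name__ == "__main__":
    topics, corpus = generate_lda_corpus(3, 8, ["neural", "network", "robot", "planning"], n_topics=2)
    print(corpus[0])
```

Nothing in this process can create a third topic once `n_topics=2` is chosen, which is the limitation HDP removes.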

HDP is a topic model that determines the number of topics automatically and can support dynamic topic mining. It does not depend on a preset number of topics; as the data change, the model adapts, learning its parameters and updating the number of clusters automatically. HDP assumes that the number of topics in the corpus is potentially infinite and infers an appropriate set of topics from the data. It builds a hierarchy of Dirichlet processes, which provides a way to share an unbounded number of clusters among multiple documents. Like LDA, it outputs the latent topics of the corpus, each described by its high-frequency words.
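HDP is commonly described through the Chinese restaurant franchise metaphor. The sketch below shows only its single-restaurant building block, the Chinese restaurant process, to illustrate that the number of clusters (tables) is not preset but grows with the data, roughly logarithmically in the number of customers. The franchise level, where tables in different documents share dishes (topics) from a global menu, is omitted; the function name is ours.

```python
import random


def chinese_restaurant_process(n_customers: int, alpha: float, seed: int = 0) -> list:
    """Seat customers sequentially; return the number of customers per table.

    With n customers already seated, the next one joins table k with
    probability count_k / (n + alpha) or opens a new table with
    probability alpha / (n + alpha).
    """
    rng = random.Random(seed)
    tables = []
    for _ in range(n_customers):
        weights = tables + [alpha]  # existing tables followed by "new table"
        k = rng.choices(range(len(weights)), weights=weights)[0]
        if k == len(tables):
            tables.append(1)
        else:
            tables[k] += 1
    return tables


if __name__ == "__main__":
    for n in (10, 100, 1000):
        print(n, "customers ->", len(chinese_restaurant_process(n, alpha=1.0)), "tables")
```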

Building on this overview, we focus on two problems that must be solved to make topic models better suited to technology path mapping. How can the number of topics be determined automatically? How can citation information be used to mine more coherent topics? To address them, we propose a citation-involved topic model that determines the number of topics automatically (see “Methodology”). Because HDP determines the number of topics automatically, we chose it as the baseline for the improved algorithm. Unlike the link- and content-based topic models mentioned above, we use citation information to calculate the similarity of each pair of documents; when sampling the topics of a given document, this similarity is used to adjust the influence of the topic distributions of other documents.

Path visualization

The process of technology path mapping can be divided into two parts: one is to mine the path information based on the topic model and other methods; the other is to visualize the evolution path information of technology effectively.

Visualizing the path helps us understand and analyze the development of technology more intuitively, and many visualization methods exist. CiteSpace (Chaomei 2006), a Java-based tool, can perform citation analysis and visualize time-sliced networks. However, it cannot show the whole path of technology evolution in a single graph. TopicRiver (Havre et al. 2002) uses rivers of varying width to represent technical topics, with changes in width indicating changes in topic strength. This method suits topics that develop continuously, but it struggles to represent isolated topics and the developmental relationships between different topics. TextFlow is more intuitive: it shows the splitting and fusion of topics through the merging and branching of rivers (Cui et al. 2011), using topic associations derived from semantic similarity calculations. However, when there are many topics, the resulting evolution path looks cluttered and the information may be presented poorly. Building on these two river-graph representations, more targeted visual models have been designed for specific data sets. For example, TopicFlow (Malik et al. 2013) and OpinionFlow (Wu et al. 2014) visualize Twitter data and can be used to analyze the spread of public opinion. In addition, Guo et al. (2012) used a technology-roadmap-style chart to represent evolution trends, which has difficulty expressing complex information when the evolution path is complicated (for example, cross-development paths and changes in topic intensity).

D3.js (Data-Driven Documents) is a JavaScript library for interactive and dynamic data visualization (Bostock et al. 2011) that supports highly flexible visualization through programming. Tools built on D3.js include CellWhere, which shows local interaction networks organized by subcellular location (Heberle et al. 2017), and SPV, which simplifies the visualization of biological signaling pathways (Calderone and Cesareni 2018).

Combining the advantages of TopicRiver and TextFlow, we use D3.js to present the evolution path of technology: the technical topics identified by the novel topic model are visualized, and changes in the rivers represent the development of technology. Note that the topic model and the visualization method are not isolated; together they serve to map the path of technology evolution.

Methodology

How do we map the technology evolution path? This section summarizes the overall research process, describes how citation information is integrated into the topic model to form a dynamic topic detection model, and then explains the process of mapping the evolution path in detail.

Framework

To make the proposed mapping process clearer, Fig. 1 shows the overall methodological framework. First, the collected documents are processed in two ways. On the one hand, we construct the citation network formed by the documents, embed the citation information of each document into a vector, calculate the citation-based similarity of each pair of documents, and construct a document similarity matrix. On the other hand, the documents are grouped into periods of uniform length by publication year. Second, we integrate document content with the document similarity information and use CIHDP to detect the topics of the documents in each period dynamically. Third, based on the topics of each period obtained by CIHDP, we track topic paths in two steps: first tagging the topics of each period, and then analyzing the correlation of topics in adjacent periods to obtain their evolution paths. Finally, D3.js is used to visualize the evolution path, which reveals the major technology branches of the field as well as the splitting and fusion of its evolution paths.

Measuring the similarity between documents

A citation network is a graph in which each vertex represents a paper and each edge represents a citation relationship. Vertex attributes are details about the paper, such as its id, publication year, abstract, keywords, and content. When paper \(P_{i}\) cites paper \(P_{j}\), an arrow points from the vertex representing \(P_{i}\) to the vertex representing \(P_{j}\). The citation network therefore has the following characteristics. (1) It is a directed graph in which each edge is an arrow from one paper to another. (2) Citation arrows almost always point backwards in time to older papers, so the graph is (nearly) acyclic and reflects the development of the research field over time. (3) Most importantly, the topics of a paper are directly related to the topics of the papers it cites.
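Characteristic (2) can be checked directly on real data before running any method that assumes a directed acyclic graph. The sketch below uses Kahn's topological sort and assumes the network is given as hypothetical (citing, cited) pairs; it returns False if any cycle exists, for example when two papers published around the same time cite each other.

```python
from collections import defaultdict, deque


def is_acyclic(edges: list) -> bool:
    """Kahn's algorithm: True if the directed citation graph has no cycle.

    An edge (a, b) means paper a cites paper b.
    """
    out_links = defaultdict(list)
    in_degree = defaultdict(int)
    nodes = set()
    for citing, cited in edges:
        out_links[citing].append(cited)
        in_degree[cited] += 1
        nodes.update((citing, cited))
    # Start from papers that no one in the set cites.
    queue = deque(n for n in nodes if in_degree[n] == 0)
    visited = 0
    while queue:
        node = queue.popleft()
        visited += 1
        for nxt in out_links[node]:
            in_degree[nxt] -= 1
            if in_degree[nxt] == 0:
                queue.append(nxt)
    # Nodes left unvisited lie on (or behind) a cycle.
    return visited == len(nodes)


if __name__ == "__main__":
    print(is_acyclic([("P3", "P2"), ("P2", "P1"), ("P3", "P1")]))
    print(is_acyclic([("A", "B"), ("B", "A")]))
```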

In bibliometrics, more researchers are using citation networks to identify and forecast development in the field of science and technology (Kajikawa et al. 2007). Therefore, many algorithms have been proposed to identify the similarity of each pair of documents in the citation network, such as bibliographic coupling, co-citation, Amsler, SimRank, P-Rank. Bibliographic coupling takes only out-links into account, so the similarity between two papers is computed based on the number of papers directly cited by both of them (Kessler 1963). Unlike bibliographic coupling, co-citation considers only in-links, and the similarity between the two papers depends on the number of papers that directly cite both of them (Small 1973). Amsler considers both direct in-links and out-links by combining the results of co-citation and bibliographic coupling (Amsler 1972). SimRank, a recursive version of co-citation, considers only in-links recursively, so the similarity between two papers is computed based on the papers that cite them (Jeh and Widom 2002). P-Rank, a recursive version of Amsler, considers both in-links and out-links recursively, so the similarity between two papers is computed based on the papers that cite them and are cited by them (Zhao et al. 2009).

However, because these measures rely on co-citing and co-cited papers, they perform poorly at calculating the similarity of document pairs that are linked by a direct citation.
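The limitation above is easy to see in a small example. The sketch below computes bibliographic coupling (shared references, i.e., shared out-links) and co-citation (shared citing papers, i.e., shared in-links) from hypothetical (citing, cited) pairs. In the usage example, paper A cites paper B directly, yet that link adds nothing to either score for the pair (A, B).

```python
from collections import defaultdict


def coupling_and_cocitation(edges):
    """Return (bibliographic coupling, co-citation) counts for unordered paper pairs.

    edges: iterable of (citing, cited) pairs.
    Coupling of (a, b): number of papers cited by both a and b.
    Co-citation of (a, b): number of papers citing both a and b.
    """
    refs = defaultdict(set)      # paper -> papers it cites
    cited_by = defaultdict(set)  # paper -> papers citing it
    for citing, cited in edges:
        refs[citing].add(cited)
        cited_by[cited].add(citing)
    papers = sorted(set(refs) | set(cited_by))
    coupling, cocitation = {}, {}
    for i, a in enumerate(papers):
        for b in papers[i + 1:]:
            coupling[(a, b)] = len(refs[a] & refs[b])
            cocitation[(a, b)] = len(cited_by[a] & cited_by[b])
    return coupling, cocitation


if __name__ == "__main__":
    edges = [("A", "C"), ("B", "C"), ("A", "D"), ("B", "D"),
             ("E", "A"), ("E", "B"), ("A", "B")]  # A also cites B directly
    coupling, cocitation = coupling_and_cocitation(edges)
    print(coupling[("A", "B")], cocitation[("A", "B")])
```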

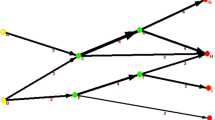

As shown in Fig. 2, node2vec (Grover and Leskovec 2016) was chosen to calculate the similarity of each pair of documents. The model uses biased random walks to capture similarity between nodes and then embeds the nodes into a low-dimensional space. In this study, node2vec was first used to obtain a vector representation of each node in the citation network. Cosine similarity (cosineSim) was then used to calculate the similarity of each pair of documents. To avoid negative similarity values, we use the following formula to calculate document similarity (docSim), whose range is [0,1]:

where doc denotes a document, i and j index the documents (nodes), with i, j = 1, 2, …, N, docVector denotes the embedding vector of a node in the citation network, and cosineSim denotes the cosine similarity function.
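The core of node2vec is a biased second-order random walk. The plain-Python sketch below assumes the citation graph is treated as undirected and unweighted for walking, and its parameter defaults are illustrative; the paper's actual settings are not stated here. In node2vec, many such walks are then fed as "sentences" to a skip-gram model (for example, gensim's Word2Vec) to learn the node vectors, a step omitted here.

```python
import random


def node2vec_walk(adj: dict, start: str, length: int,
                  p: float = 1.0, q: float = 1.0, seed: int = 0) -> list:
    """Generate one second-order biased random walk in the style of node2vec.

    adj: undirected adjacency sets. A high return parameter p discourages going
    straight back; an in-out parameter q < 1 favors moving outward.
    """
    rng = random.Random(seed)
    walk = [start]
    while len(walk) < length:
        cur = walk[-1]
        neighbors = sorted(adj[cur])  # sorted for reproducibility
        if not neighbors:
            break  # dead end
        if len(walk) == 1:
            walk.append(rng.choice(neighbors))  # first step is uniform
            continue
        prev = walk[-2]
        weights = []
        for x in neighbors:
            if x == prev:
                weights.append(1.0 / p)  # return to the previous node
            elif x in adj[prev]:
                weights.append(1.0)      # stay at distance 1 from prev
            else:
                weights.append(1.0 / q)  # move away from prev
        walk.append(rng.choices(neighbors, weights=weights)[0])
    return walk


if __name__ == "__main__":
    edges = [("P1", "P2"), ("P1", "P3"), ("P2", "P3"), ("P3", "P4"), ("P4", "P5")]
    adj = {}
    for a, b in edges:
        adj.setdefault(a, set()).add(b)
        adj.setdefault(b, set()).add(a)
    print(node2vec_walk(adj, "P1", length=8, p=1.0, q=0.5))
```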

Fig. 2 Flow chart of document similarity calculation (adapted from Perozzi et al. 2014)

Finally, the pairwise similarities form an N × N matrix, where N is the number of documents in the data set, which is used to adjust the degree of influence between the topics of different documents. In this way, citation information is transformed into a document similarity matrix through graph embedding, and the matrix is used to influence the topic assignment of documents to improve the quality of topic detection and tracking.
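Once each paper has a vector, the N × N matrix can be filled as in the sketch below. The exact docSim formula is not reproduced in this text, so the sketch ASSUMES the common linear rescaling docSim = (cosineSim + 1) / 2, which maps [-1, 1] onto [0, 1]; other mappings, such as clipping negative values to 0, would also satisfy the stated range. Function names are ours.

```python
import math


def cosine_sim(u, v):
    """Cosine similarity of two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def doc_similarity_matrix(vectors):
    """N x N matrix of document similarities in [0, 1].

    ASSUMPTION: docSim = (cosineSim + 1) / 2; the paper's exact mapping may differ.
    """
    n = len(vectors)
    return [[(cosine_sim(vectors[i], vectors[j]) + 1.0) / 2.0 for j in range(n)]
            for i in range(n)]


if __name__ == "__main__":
    # Toy 2-D "embeddings"; real node2vec vectors have many more dimensions.
    for row in doc_similarity_matrix([[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0]]):
        print(row)
```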

Citation involved Hierarchical Dirichlet Process

In this section, citation information is introduced indirectly into the novel dynamic topic model (CIHDP) through the document similarity matrix. Documents are grouped by time, and the CIHDP algorithm dynamically extracts the topics of each period. This is the core of the path mapping work.

In most cases, when researchers extract topics from scientific literature, only textual information (such as titles and abstracts) is used. Most topic models (such as LDA and HDP) treat topics as distributions over words and the corpus as bags of words. How, then, can citations be used appropriately in topic extraction? The main idea of our improved model is to use citation information to enhance the textual representation of documents and thereby discover more coherent technology evolution paths. We use node2vec to construct a similarity matrix from the citation network of the scientific literature. In our model, the topics of a paper are influenced to different degrees by all other papers in the corpus, and the similarity between papers determines the degree of influence. In this way, document similarity shapes the topic modeling process.

The directed graphical model of CIHDP is shown in Fig. 3. Open circles represent latent variables, shaded circles represent observed variables, rounded rectangles represent parameters or base distributions, and rectangular plates represent repetition, with the number in the lower-right corner of each plate giving the number of repetitions. \(G_{0}\) represents the global topic distribution shared by all documents, \(G_{j}\) the local topic distribution of the j-th document, \(\theta_{ji}\) the word distribution of the topic assigned to the i-th word of document j, and \(x_{ji}\) the i-th observed word in document j.

CIHDP is very similar to HDP, but it has an additional influencing factor, c, which captures the influence of document similarity. Put simply, the topic distribution of each document is affected not only by the global topic distribution but also by the topic distributions of similar documents. The higher the citation-based similarity of two documents, the more similar their topic distributions tend to be, which helps identify more coherent topics and improves topic modeling. Because the added citation information comes from the global citation network, topic evolution is also more coherent, which improves topic tracking. To explain our model, we provide a brief introduction to HDP (see “Appendix A”) and a metaphor for CIHDP, the “USA Local Specialties Restaurant Franchise” (see “Appendix B”).
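The role of factor c can be illustrated with a deliberately simplified, HYPOTHETICAL sketch in which other documents "vote" for topics in proportion to their similarity to document j. This is not the CIHDP Gibbs update (the paper gives its algorithm in the Appendix); it only shows how a similarity matrix turns into similarity-weighted topic influence. The function name and inputs are ours.

```python
def neighbor_topic_influence(sim, topic_counts, j):
    """Similarity-weighted topic mixture that other documents exert on document j.

    HYPOTHETICAL illustration only, not the paper's sampler.
    sim: N x N document similarity matrix with values in [0, 1].
    topic_counts: N lists of per-topic word counts (shared topic indexing).
    """
    n_topics = len(topic_counts[0])
    influence = [0.0] * n_topics
    for d, counts in enumerate(topic_counts):
        total = sum(counts)
        if d == j or total == 0:
            continue  # a document does not influence itself; skip empty documents
        for k, c in enumerate(counts):
            # Document d's topic proportion, weighted by its similarity to j.
            influence[k] += sim[j][d] * c / total
    norm = sum(influence)
    return [x / norm for x in influence] if norm else influence


if __name__ == "__main__":
    sim = [[1.0, 0.9, 0.1], [0.9, 1.0, 0.2], [0.1, 0.2, 1.0]]
    counts = [[0, 0], [10, 0], [0, 10]]  # doc 1 is all topic 0, doc 2 all topic 1
    print(neighbor_topic_influence(sim, counts, 0))
```

Because document 0 is far more similar to document 1 than to document 2, the influence on it is dominated by document 1's topic.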

To verify the effectiveness of the proposed algorithm in topic detection and tracking, we compared LDA, HDP, and CIHDP, both in terms of their strengths and weaknesses as algorithms and in terms of their effectiveness for technology path mapping. We use perplexity to compare the models at the algorithm level.

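Perplexity is an important measure from information theory and a common way of evaluating language models; the lower the perplexity, the better the model fits the data. Perplexity is computed as follows:

\[
{\text{perplexity}}(D) = \exp \left\{ { - \frac{{\sum\nolimits_{d = 1}^{M} {\log p\left( {\omega_{d} } \right)} }}{{\sum\nolimits_{d = 1}^{M} {N_{d} } }}} \right\}
\]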

where D denotes the data set of M documents, \(N_{d}\) is the number of words in document d, so that \(\sum\nolimits_{d = 1}^{M} {N_{d} }\) is the number of words in the data set, and \(p\left( {\omega_{d} } \right)\) denotes the probability that the model assigns to the words \(\omega_{d}\) of document d.
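Perplexity can be evaluated from a fitted model's point estimates, as in the sketch below. It assumes a document–topic vector per document and a topic–word matrix `phi` indexed by word id (names are ours); perplexity is usually reported on held-out documents. A model that gives every word in a four-word vocabulary probability 1/4 has perplexity exactly 4, a handy sanity check.

```python
import math


def doc_log_likelihood(word_ids, theta, phi):
    """log p(w_d) under point estimates: sum_i log sum_k theta[k] * phi[k][w_i]."""
    return sum(math.log(sum(t * phi[k][w] for k, t in enumerate(theta)))
               for w in word_ids)


def perplexity(docs, thetas, phi):
    """exp(-sum_d log p(w_d) / sum_d N_d); lower values indicate a better fit."""
    total_log_lik = sum(doc_log_likelihood(d, th, phi) for d, th in zip(docs, thetas))
    total_words = sum(len(d) for d in docs)
    return math.exp(-total_log_lik / total_words)


if __name__ == "__main__":
    phi = [[0.25] * 4, [0.25] * 4]  # two topics, both uniform over 4 words
    docs = [[0, 1, 2], [3, 3]]      # documents as lists of word ids
    thetas = [[0.5, 0.5], [0.2, 0.8]]
    print(perplexity(docs, thetas, phi))
```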

Dynamic topic tracking and path identification

To complete the technology path mapping, this section post-processes the topic modeling results to obtain the technology evolution path. We also designed a path representation and used visual methods to present the path.

The process of dynamic topic detection and tracking using CIHDP is shown in Fig. 1. First, documents are grouped by period, and each group is modeled to detect the topics of that period. During topic modeling, the document similarity matrix calculated in the previous subsection is used to influence the distribution of document topics. Topic names are determined by manually reading the corresponding high-frequency topic words.
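Grouping documents into uniform periods is a small preprocessing step. The sketch below assumes each record is a dict with hypothetical `id` and `year` fields; the start year and interval are parameters because the period length used in the case study is not given here.

```python
from collections import defaultdict


def group_by_period(docs, start_year, interval):
    """Bucket documents into consecutive periods of `interval` years.

    Returns {(first_year, last_year): [doc ids]} ordered by period.
    """
    periods = defaultdict(list)
    for doc in docs:
        index = (doc["year"] - start_year) // interval
        label = (start_year + index * interval, start_year + (index + 1) * interval - 1)
        periods[label].append(doc["id"])
    return dict(sorted(periods.items()))


if __name__ == "__main__":
    docs = [{"id": "a", "year": 2001}, {"id": "b", "year": 2003}, {"id": "c", "year": 2006}]
    print(group_by_period(docs, start_year=2000, interval=5))
```

Each resulting group would then be modeled separately, with the global similarity matrix still available across periods.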

Through the topic model, we get the distribution of each topic in each period (the probability of each word appearing under each topic). First, the tag of each topic is determined by manual calibration, and then the correlation analysis of the topics in different periods is carried out.

To determine the tag of each topic, we output the 25 words with the highest probability in each topic–word distribution and, by manual reading, assign each topic one of the tags provided in the original data set. Two issues are critical in this process. (1) Tiny topics (whose total word frequency is less than 100) that contain no clearly indicative words are considered background topics and filtered out. (2) If several topics in the same period share a tag, the domain represented by that tag has evolved into sub-domains within the period. Because this study does not analyze technologies at the sub-domain level, we fuse topics with the same tag in the same period (see “Appendices D and E”), retaining the one with the highest word frequency.
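The two rules above can be partly automated once tags have been assigned by hand. In the sketch below, each topic is a hypothetical dict with a manually assigned `tag` and its word `counts`; only the frequency threshold is automated, while the "no clearly indicative words" judgment stays manual. Fusion is implemented as keeping the highest-frequency topic per tag, following the last sentence above.

```python
def top_words(word_counts: dict, n: int = 25) -> list:
    """The n most frequent words of a topic (ties broken alphabetically)."""
    ranked = sorted(word_counts.items(), key=lambda kv: (-kv[1], kv[0]))
    return [word for word, _ in ranked[:n]]


def clean_period_topics(topics: list, min_freq: int = 100) -> list:
    """Filter tiny topics and keep one topic per tag within a single period.

    topics: dicts like {"tag": str, "counts": {word: frequency}}, with tags
    already assigned by manual reading.
    """
    best = {}  # tag -> (total word frequency, topic)
    for topic in topics:
        total = sum(topic["counts"].values())
        if total < min_freq:
            continue  # treated as a background topic
        if topic["tag"] not in best or total > best[topic["tag"]][0]:
            best[topic["tag"]] = (total, topic)
    return [topic for _, topic in best.values()]


if __name__ == "__main__":
    topics = [
        {"tag": "NLP", "counts": {"language": 80, "parsing": 40}},
        {"tag": "NLP", "counts": {"translation": 200}},
        {"tag": "Vision", "counts": {"image": 50}},
    ]
    print([(t["tag"], top_words(t["counts"], 3)) for t in clean_period_topics(topics)])
```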

Topics with the same tag in two adjacent periods are connected directly: the topic in the later period is regarded as a continuation of the topic in the earlier period. For topics with different tags, their association is judged by similarity, which we characterize with the Jensen–Shannon (JS) divergence:

\[
{\text{JS}}(T_{1} ||T_{2} ) = \frac{1}{2}{\text{KL}}(T_{1} ||M) + \frac{1}{2}{\text{KL}}(T_{2} ||M),\quad M = \frac{1}{2}(T_{1} + T_{2} )
\]

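where \({\text{KL}}(T_{1} ||T_{2} )\) is the Kullback–Leibler divergence between two topic–word distributions over the vocabulary \(W\):

\[
{\text{KL}}(T_{1} ||T_{2} ) = \sum\nolimits_{w \in W} {T_{1} (w)\log_{2} \frac{{T_{1} (w)}}{{T_{2} (w)}}}
\]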

With base-2 logarithms, the JS divergence ranges from 0 to 1, and the smaller the JS divergence, the more similar the topics. After sorting topic pairs by JS divergence, we set a similarity threshold S, and only associations within this threshold are presented.
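Both divergences and the linking rule can be sketched together. Assumptions: topic–word distributions are aligned over one shared vocabulary (topics from different periods may need re-indexing first), logarithms are base 2 so JS stays in [0, 1], and `threshold` plays the role of S, whose value in the case study is not given here. Function names are ours.

```python
import math


def kl_divergence(p, q):
    """KL(p || q) in bits; terms with p(w) = 0 contribute nothing."""
    return sum(pw * math.log2(pw / qw) for pw, qw in zip(p, q) if pw > 0)


def js_divergence(p, q):
    """Jensen-Shannon divergence in bits, bounded in [0, 1]."""
    m = [(pw + qw) / 2 for pw, qw in zip(p, q)]  # m(w) > 0 wherever p(w) or q(w) > 0
    return 0.5 * kl_divergence(p, m) + 0.5 * kl_divergence(q, m)


def link_adjacent_periods(prev_topics, next_topics, threshold):
    """Return (prev_tag, next_tag, JS) links between two adjacent periods.

    prev_topics / next_topics: dict tag -> word distribution over one shared
    vocabulary. Same tags are linked directly; different tags are linked only
    when their JS divergence is within the threshold S.
    """
    links = []
    for tag_a, dist_a in prev_topics.items():
        for tag_b, dist_b in next_topics.items():
            d = js_divergence(dist_a, dist_b)
            if tag_a == tag_b or d <= threshold:
                links.append((tag_a, tag_b, d))
    return sorted(links, key=lambda link: link[2])  # most similar first


if __name__ == "__main__":
    prev = {"A": [0.5, 0.5, 0.0], "B": [0.0, 0.0, 1.0]}
    nxt = {"A": [0.4, 0.6, 0.0], "C": [0.0, 0.1, 0.9]}
    for link in link_adjacent_periods(prev, nxt, threshold=0.3):
        print(link)
```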

Based on D3.js, this paper visualizes the evolution path, which makes development trends in the technology field easier to understand. The graph shows the tag of each topic, the topic intensity, and the relations between topics in adjacent periods. Each topic output by the topic model is a set of words, and topic intensity indicates how actively the topic is researched. In this study, topic intensity is measured by the number of words under the topic, and the topic intensity (\(T_i\)) is calculated as follows:

In the s-th period, \(n(T_{si})\) represents the total word frequency of the i-th topic, \(\sum\nolimits_{j \in s} {T_{sj} }\) represents the total word frequency of all topics, and \(n\left( {doc_{s} } \right)\) represents the total number of documents.

Our visual design has two elements: points and lines. Points represent the topics in each period, whereas lines (rivers) represent the relationships between topics. Each river in the figure represents a technology evolution path and reflects the change in intensity of the technical topic as well as its start and end times.

Results and discussion

To verify the effectiveness and usefulness of the proposed methodology for technology path mapping, we selected the field of artificial intelligence for a case study and compared the method with similar methods.

In the first section of this part, three data sets are selected for model comparison and the case study, and the data are described and preprocessed. The second section sets the model parameters. The third section compares the topic modeling performance of CIHDP, HDP, and LDA using the perplexity index and performs dynamic topic detection on the Aminer data set. The fourth section identifies paths based on manual calibration and topic similarity calculation. The fifth section visualizes the paths with D3.js and compares the technology path mapping capabilities of CIHDP and HDP.

Data collection

The data sets used in this paper are listed in Table 1. They include two benchmark data sets (Citeseer and Cora) and one real-world data set (Aminer), all of which were used to verify the effectiveness of CIHDP. The case study of an evolution path is based on the Aminer data set. The data are described below.

Citeseer (Footnote 2): This data set contained 3312 scientific publications, each with a unique category label. There were 6 categories: Agents, Artificial Intelligence, Database, Human–Computer Interaction, Machine Learning, and Information Retrieval. The citation network consisted of 4732 links, and the data set had 3703 unique words after stemming and stop-word removal. The data set does not provide the spelling of the vocabulary words, and a word repeated multiple times in a paper was counted only once.

Cora (Footnote 3): This data set contained 2708 scientific publications, each also with a unique category label. There were 7 categories: Neural Networks, Rule Learning, Reinforcement Learning, Probabilistic Methods, Theory, Genetic Algorithms, and Case-Based. The citation network consisted of 5429 links, and the data set had 1433 unique words after stemming and stop-word removal. As in Citeseer, the spelling of the vocabulary words is not provided, and words that appeared multiple times in the same paper were recorded only once.

Aminer (Footnote 4): The papers in this data set were mainly compiled by the Aminer team and cover 10 research fields of artificial intelligence: “Data Mining/Association Rules” (DM/AR), “Web Services”, “Bayesian Networks/Belief function” (Bayesian networks), “Web Mining/Information Fusion” (web mining), “Semantic Web/Description Logics” (SW/DL), “Machine Learning”, “Database Systems/XML Data” (DS/XD), “Information Retrieval”, “Pattern recognition/Image analysis”, and “Natural Language System/Statistical Machine Translation” (NLS/SMT). Because the raw data lacked abstracts, we retrieved the abstracts from the Web of Science database and deleted records whose abstracts could not be found. In addition, papers in “Database Systems/XML Data” accounted for almost half of the data, so some papers in this category were also deleted to avoid data skew. The final data set contained 1000 scientific publications and 1109 citations, had 670 unique words after stemming and stop-word removal, and covered the years 1990–2007. As with Cora and Citeseer, words that appeared multiple times in the same paper were recorded only once.

Based on the Aminer data, we conducted a case study on mapping the technology evolution path in the field of artificial intelligence. The overall time span of the analysis, 1990 to 2007, is divided into five periods, each of which includes four consecutive years. Because 2006 and 2007 contain few documents, these two years are included in the last period (T5). The final period settings and the corresponding numbers of papers are shown in Table 2.

Parameter setting

When using the HDP and CIHDP models for topic modeling, determining the model parameters \(\left( {\beta ,\alpha ,\gamma } \right)\) is tricky. We applied parameter combinations reported in previous literature to our data sets and judged them by the number of topics (because our data sets are labeled, the number of labels is known, and we want the number of topics to be close to it) and by perplexity, but the results were not satisfactory. Therefore, we conducted orthogonal experiments to obtain the optimal parameter combination.

Combining the prior distributions of the parameters \(\left( {\alpha \sim \varGamma \left( {5,0.1} \right),\gamma \sim \varGamma \left( {0.1,0.1} \right)} \right)\) with the parameter values used in previous HDP studies, we determined the value ranges of the three parameters. We divided each range into 5 levels and conducted orthogonal experiments. The value ranges and level divisions are shown in Table 3, and the results of the orthogonal experiments are given in the “Appendix”.
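To illustrate why an orthogonal design is economical here: a full factorial over three parameters at 5 levels needs \(5^3 = 125\) runs, whereas a strength-2 orthogonal array needs only 25 runs while still pairing every level of each parameter with every level of each other parameter exactly once. The sketch below uses the standard construction for a prime number of levels, with columns \(i\), \(j\), and \((i + j) \bmod 5\). It is an assumption that the authors' array (in the Appendix) has this property; the level values in the example are hypothetical placeholders, not the values in Table 3.

```python
from itertools import product


def l25_design(levels_beta, levels_alpha, levels_gamma):
    """Return 25 (beta, alpha, gamma) runs forming a strength-2 orthogonal array."""
    if any(len(levels) != 5 for levels in (levels_beta, levels_alpha, levels_gamma)):
        raise ValueError("each factor needs exactly 5 levels")
    runs = []
    for i, j in product(range(5), repeat=2):
        k = (i + j) % 5  # for any two columns, each pair of levels occurs once
        runs.append((levels_beta[i], levels_alpha[j], levels_gamma[k]))
    return runs


# Hypothetical level values for illustration only (not taken from Table 3).
beta_levels = [0.1, 0.2, 0.3, 0.4, 0.5]
alpha_levels = [0.1, 0.3, 0.5, 0.7, 0.9]
gamma_levels = [0.1, 0.4, 0.7, 1.0, 1.3]
for run in l25_design(beta_levels, alpha_levels, gamma_levels)[:3]:
    print(run)
```

Each run would then be fitted, and its number of topics and perplexity recorded for the selection criterion described next.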

When conducting the orthogonal experiments, we must consider how different parameter combinations affect both the number of topics and the perplexity. Ding and Chen (2014) designed an S value that accounts for both in the parameter selection process:

Parameter selection aims for a sufficiently low perplexity without an unnecessarily large number of topics, so we chose the parameter combination with the lowest S value as optimal. However, because the number of tags in our data sets is known, and the number of topics selected by the S value may differ considerably from it, we assign different weights to the perplexity term and the number-of-topics term for labeled data sets:

Under different weight distributions, the optimal parameters obtained from the S value were used in multiple repeated experiments to ensure that the topic model finally yields about 10 topics. Finally, we determined the weight distribution of the S value for the three data sets and the optimal parameter combinations of the three data sets under the two topic models.

The algorithm comparison in this paper requires the different algorithms to identify roughly the same number of topics before their perplexity and path mapping are compared. For simplicity, if a parameter combination in the orthogonal test table meets this requirement, we use it directly as the model parameters.

According to the orthogonal experiment table (see “Appendix C”), some parameter combinations yield 7 and 6 topics, and we set these combinations as the topic model parameters for the Cora and Citeseer data, respectively. Because the Aminer data are used for the path mapping comparison, we searched for a better parameter combination for them, using the weights a = 0.7 and b = 0.3. The parameter settings for the three data sets are shown in Table 5.

In our experiments, several parameters had to be set for CIHDP and for the benchmark models LDA and HDP; see “Appendix C” for details. Because Aminer is the data set used in the empirical test, we use it below as an example of parameter setting.

For LDA, the parameters α, β, K, and the number of iterations need to be set (see Table 4). To compare the three models, we try to make the number of topics generated by each model consistent with the number of categories in the data set. Thus, the number of topics was set to K = 10 for Aminer, the same as its number of categories. The Dirichlet prior parameters α and β influence model performance; we set α = 50/K and β = 0.01, which have been shown to be effective for LDA (Heinrich 2005; Cheng et al. 2014; Li et al. 2016a; Liu et al. 2016). In all experiments, the number of Gibbs sampling iterations was set according to the perplexity index. Because the perplexity of LDA converges more slowly, its number of iterations was set to 2000.

For CIHDP and HDP, we used a symmetric Dirichlet distribution with parameter β as the prior H over topics, and concentration parameters γ and α that govern the hierarchical DP. For both models, we set β = 0.2, γ = 0.7, α = 0.5, and 150 iterations (these two models reach good performance in far fewer iterations than LDA).

In our method, CIHDP requires the relevance between documents. We used node2vec, which captures information from the network through biased random walks (Grover and Leskovec 2016), and then calculated the similarity of each document pair. Two parameters control the walk: the return parameter p and the in–out parameter q. As in Kim et al. (2018), our goal was to identify nodes that are closely interconnected and belong to the same communities (homophily equivalence), so we set p = 2 and q = 0.125. The other node2vec parameters were set to d = 128, r = 10, l = 10, and k = 10, where d, r, l, and k denote the embedding dimension, walks per node, walk length, and context size, respectively. These values were also chosen based on the parameter sensitivity analysis in the original paper (Grover and Leskovec 2016) for the best performance.
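The second-order walk that p and q control can be sketched as follows. This is a simplified illustration of the node2vec sampling rule described by Grover and Leskovec (2016), not their reference implementation (which precomputes alias tables for speed): from the current node, a neighbour equal to the previous node gets unnormalized weight 1/p, a neighbour also adjacent to the previous node gets weight 1, and any other neighbour gets weight 1/q. The unweighted adjacency-set graph format, the function names, and the use of cosine similarity between document embeddings are assumptions for illustration. In a full pipeline, r walks of length l per node would be fed to a skip-gram model with context size k and d dimensions, which is not shown here.

```python
import math
import random


def biased_walk(graph, start, length, p=2.0, q=0.125, rng=random):
    """One node2vec-style walk; graph maps each node to a set of neighbours."""
    walk = [start]
    while len(walk) < length:
        cur = walk[-1]
        nbrs = sorted(graph[cur])
        if not nbrs:
            break  # dead end: stop the walk early
        if len(walk) == 1:
            walk.append(rng.choice(nbrs))  # first step is uniform
            continue
        prev = walk[-2]
        weights = []
        for x in nbrs:
            if x == prev:
                weights.append(1.0 / p)  # return to the previous node
            elif x in graph[prev]:
                weights.append(1.0)      # stay at distance 1 from prev
            else:
                weights.append(1.0 / q)  # move outward (distance 2 from prev)
        walk.append(rng.choices(nbrs, weights=weights, k=1)[0])
    return walk


def cosine_similarity(u, v):
    """Cosine similarity of two embedding vectors (0.0 if either is zero)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


citations = {"a": {"b"}, "b": {"a", "c"}, "c": {"b", "d"}, "d": {"c"}}
print(biased_walk(citations, "a", 10, rng=random.Random(0)))
```

With p = 2 and q = 0.125, stepping away from the previous node is weighted 8 while returning is weighted 0.5, so the sketch favours outward, DFS-like exploration.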

Topic modeling

This section compares the topic modeling performance of CIHDP, HDP, and LDA using the perplexity index and performs dynamic topic detection on the Aminer data set. Using the parameters set above for CIHDP, HDP, and LDA, and taking the Citeseer data set as an example, we performed topic modeling with the three algorithms and recorded the perplexity during the modeling process. We ran five repeated experiments for each algorithm and plotted the average perplexity to show how it changes during sampling.
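Perplexity is commonly defined as the exponential of the negative average per-token log-likelihood, \(\exp\left(-\frac{1}{N}\sum_d \sum_{w \in d} \log \sum_k \theta_{dk}\phi_{kw}\right)\), where \(\theta_{dk}\) is the topic proportion of document d, \(\phi_{kw}\) is the probability of word w under topic k, and N is the total number of tokens. A minimal sketch follows; whether the perplexity here is computed on training or held-out documents is not stated, and the argument layout is an assumption.

```python
import math


def perplexity(docs, theta, phi):
    """docs: word-id lists; theta[d][k] = p(k | d); phi[k][w] = p(w | k)."""
    log_lik, n_tokens = 0.0, 0
    for d, words in enumerate(docs):
        for w in words:
            # Marginal probability of the token, mixing over all topics.
            p_w = sum(theta[d][k] * phi[k][w] for k in range(len(phi)))
            log_lik += math.log(p_w)
            n_tokens += 1
    return math.exp(-log_lik / n_tokens)


# Sanity check: a topic spreading probability evenly over 4 words
# gives a perplexity of (approximately) 4.
print(perplexity([[0, 1, 2, 3]], [[1.0]], [[0.25, 0.25, 0.25, 0.25]]))
```

Lower values indicate a better fit; averaging this quantity over the five repeated runs at each iteration gives curves of the kind compared below.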

We ran LDA on Citeseer and plotted the perplexity against the number of iterations, as shown in Fig. 4. The figure shows that the perplexity of LDA changes slowly, so the model needs many iterations to achieve good results.

To compare the performance of the three algorithms, we plot their perplexity curves in the same coordinate system. Because LDA needs far more iterations than CIHDP and HDP, we use a secondary x-axis for LDA so that the three algorithms can be compared in one graph (see Fig. 5a).

The figure shows that CIHDP and HDP have similar perplexity curves and almost equal converged perplexity values, so their performance on this measure is similar. In contrast, the perplexity of LDA decreases very slowly (2000 iterations are needed), and its converged value is higher than those of the other two. Thus, CIHDP and HDP outperform LDA in terms of perplexity (Fig. 4).

Topic modeling on Cora and Aminer led to the same conclusion; the corresponding perplexity curves are shown in subplots (b) and (c) of Fig. 5.

As shown above, the perplexity comparison does not clearly distinguish CIHDP from HDP. Both algorithms determine the number of topics automatically, which makes dynamic topic detection and subsequent tracking possible, so the following compares their strengths and weaknesses in path mapping, with HDP as the benchmark model.

To compare technology path mapping, we used CIHDP and HDP to model the topics of the Aminer data, identified the topics of each period, and obtained the word distribution of each topic (see “Appendix E”).

Path identification

Before calibrating the topics in each period, we first performed topic modeling and pre-calibration on the combined data set (see “Appendix D”). Pre-calibration gives the subsequent calibration a clearer direction and improves its efficiency. Based on the topic modeling results, we then performed manual calibration (see “Appendix E”) and traced the paths from the calibration results.

We divide evolution paths into two categories: paths within the same topic and paths between different topics. In two adjacent time slices, topics with the same tag are associated directly, whereas the association between topics with different tags must be judged by their semantic similarity. As mentioned above, if the semantic similarity between two topics in adjacent time slices is high, we consider there to be an evolutionary relationship between them. In this paper, the JS divergence is used to measure semantic similarity; we set a similarity threshold and connect the more similar topics into paths. First, we calculate the JS divergence for every pair of topics with different tags in adjacent periods and rank the pairs by JS divergence.

Different similarity thresholds yield different evolution paths. We set the similarity threshold (S) to 10%, 20%, and 30% and obtained the six different evolution paths shown in Fig. 6. Taking machine learning as an example, the red circles in Fig. 6 mark the paths that differ across thresholds. We analyzed how much useful information the paths carry under each threshold and finally chose the threshold whose evolution paths present the most useful information.
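The linking rule can be sketched as follows. The sketch assumes one reading of the threshold: S is taken as the fraction of the ranked different-tag pairs (lowest JS divergence first) that are kept, which fits thresholds expressed as 10%, 20%, and 30%, but the exact definition is an assumption. The JS divergences are taken as precomputed inputs, and the names `build_links`, `tags_by_period`, and `js` are hypothetical.

```python
def build_links(tags_by_period, js, s=0.2):
    """Link topics in adjacent periods.

    tags_by_period: one list of topic tags per period (T1, T2, ...).
    js: dict mapping (t, tag_a, tag_b) to the JS divergence between topic
        tag_a in period t and topic tag_b in period t + 1.
    s: assumed reading of S, the fraction of ranked different-tag pairs kept.
    """
    links = []
    # Same tag in adjacent periods: connect directly.
    for t in range(len(tags_by_period) - 1):
        for tag in tags_by_period[t]:
            if tag in tags_by_period[t + 1]:
                links.append((t, tag, tag))
    # Different tags: rank all pairs globally, most similar (lowest JS) first.
    candidates = [(key, d) for key, d in js.items() if key[1] != key[2]]
    candidates.sort(key=lambda item: item[1])
    n_keep = round(s * len(candidates))
    links.extend(key for key, _ in candidates[:n_keep])
    return links


tags = [["ML", "DB"], ["ML", "WM"]]
js = {(0, "ML", "WM"): 0.3, (0, "DB", "WM"): 0.1, (0, "DB", "ML"): 0.6, (0, "ML", "ML"): 0.05}
print(build_links(tags, js, s=0.3))
```

Raising s admits more cross-tag links, which is how the 10%, 20%, and 30% settings produce progressively denser path maps.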

Mapping the evolution path

Based on the Aminer data, we conducted a case study of path identification in the field of artificial intelligence. We used CIHDP and HDP separately to map the evolution paths in this field and compared the results to demonstrate the usefulness and advantages of the proposed method (CIHDP). Topic detection and topic tracking on the Aminer data reveal the correlations among artificial intelligence technology topics from 1990 to 2007; that is, we obtain multiple paths of technological evolution.

To analyze the path evolution in this field intuitively, we use D3.js to visualize it. The previous section calculated the semantic similarity between topics with different tags and connected every two topics whose semantic similarity exceeded the similarity threshold. In this paper, the similarity threshold for both CIHDP and HDP is set to S = 20%, because this setting yields the most useful path evolution information.

The visualization results are shown in Fig. 7. In the visual design, different rivers describe the evolution paths of technology. (1) Each vertical line represents a period and is labeled with its year interval; time advances from left to right. (2) The topics of each period are placed on the corresponding vertical line, and the red dots with tags represent the technical topics. (3) Related topics are connected by lines to form a series of rivers, each representing a different technical evolution path. (4) Different colors represent different paths, and color gradients indicate the fusion and splitting of paths. (5) The width of a river expresses the topic intensity: the stronger the topic, the wider the river.
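The data behind such a river chart can be sketched as a node/link JSON document of the kind D3 layouts commonly consume (the d3-sankey layout, for instance, reads `source`, `target`, and a numeric `value` on each link). It is an assumption that the authors used this structure; the node id scheme and the choice of the source topic's intensity as the river width are hypothetical, and intensity values are taken as inputs rather than computed with the topic intensity formula.

```python
import json


def to_d3_graph(topics, links):
    """topics: (period_index, tag, intensity) triples; links: (t, tag_a, tag_b)."""
    nodes = [
        {"id": f"T{t + 1}:{tag}", "period": t + 1, "tag": tag, "intensity": w}
        for t, tag, w in topics
    ]
    intensity = {(t, tag): w for t, tag, w in topics}
    edges = [
        {
            "source": f"T{t + 1}:{a}",  # topic in period t
            "target": f"T{t + 2}:{b}",  # topic in the next period
            "value": intensity[(t, a)],  # river width from source intensity
        }
        for t, a, b in links
    ]
    return json.dumps({"nodes": nodes, "links": edges})


print(to_d3_graph([(0, "ML", 0.4), (1, "ML", 0.6)], [(0, "ML", "ML")]))
```

The period index stored on each node can drive the horizontal position (one vertical line per period), while the tag can drive the path colour.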

After visualizing the path information, we can conveniently conduct technical evolution analysis, and at the same time, we can also compare the effectiveness of CIHDP and HDP in path mapping.

Sub-figure (a) of Fig. 7 shows the technology evolution path mapped using CIHDP. Over the whole time span, CIHDP identified 10 types of topics in total, and the overall topic recognition is satisfactory. We first analyze the development trend of each topic. The topics that appeared first represent basic and supporting research areas. They include “database systems and XML data” (DS/XD), “semantic web and description logic” (SW/DL), “natural language systems and statistical machine translation” (NLS/SMT), “Bayesian network”, and “information retrieval”; of these, DS/XD and SW/DL exist throughout almost the entire period.

The topics that emerged later represent more application-oriented and advanced R&D areas. They include “data mining”, “web mining”, “machine learning”, “pattern recognition”, and “web services”. In T4 and T5, the intensity of machine learning increased dramatically, making it a hot research topic that occupies a mainstream position. In T5, the new topic “web services” appeared with a low intensity, which means that a new path has emerged and is in the initial stage of its evolution.

Next, using the color gradients, we analyze the details of path evolution between different topics, following the direction of time: path splitting, fusion, emergence, and disappearance. (1) From T2 to T3, “database system”, “information retrieval”, and “data mining” merge to form “web mining”. (2) From T3 to T4, “web mining” continues to integrate DS/XD. (3) Given the development of related technologies such as database systems, information retrieval, and data mining, the emergence of the “web mining” path is reasonable. Similar path fusion and splitting occur elsewhere. (4) For example, DS/XD, as a basic technology, repeatedly splits during its development and merges with “data mining”, “web mining”, and SW/DL. (5) Likewise, the “Bayesian network” path splits and merges with “pattern recognition” and “machine learning”. (6) The figure also reveals an interesting phenomenon: “Bayesian network” and “machine learning” alternately fuse and split. From T2 to T3, part of “Bayesian network” splits off and merges into “machine learning”; from T3 to T4, part of “machine learning” merges into “Bayesian network”, showing that these two research areas are closely related. The same situation exists between DS/XD and SW/DL.

We also reviewed the history of artificial intelligence and noted several key events. In 1995, Corinna Cortes and Vladimir Vapnik proposed the support vector machine. In 1997, (a) IBM's Deep Blue computer defeated Kasparov at chess; (b) Long Short-Term Memory (LSTM) was first proposed by Sepp Hochreiter and Jürgen Schmidhuber; and (c) AdaBoost, which combines weak classifiers into a strong classifier, was also proposed. In 1998, Tim Berners-Lee proposed the Semantic Web; earlier research leaned more toward description logics. In 2001, Conditional Random Fields (CRFs) were proposed by Lafferty et al. These critical events indicate that the above findings are consistent with the facts and suggest that these significant scientific advances promoted the emergence of new paths in T3 and T4.

Similarly, sub-figure (b) of Fig. 7 shows the technology evolution path mapped using HDP. HDP also identified 10 types of topics and obtained some effective technological evolution paths. However, many of the paths identified by HDP lack actual meaning; in other words, the paths tracked using HDP are less effective than those tracked using CIHDP.

Because the paths mapped by CIHDP have been analyzed in detail above, we only briefly analyze the paths traced by HDP. The HDP-based figure shows little path information between T1, T2, and T3, and subgraph (b) contains many unexplained correlations and evolution paths. In particular, from T3 to T4 there are many-to-many associations. For example, data mining, web mining, information retrieval, web services, and statistical machine translation are all integrated into the database system, which covers almost all the topics of T3, making it difficult to identify the core evolution path. Apart from the T3 to T4 transition, useful information is very hard to find.

Based on the above analysis, the technology evolution paths we identified are consistent with the facts, indicating that the proposed method is valid for mapping technology evolution paths. A comparative analysis of the paths produced by CIHDP and HDP shows that the former finds more complete and detailed path evolution information, which indicates that CIHDP is better at mapping technological evolution paths.

Conclusion

In this study, we developed a method of mapping the technology evolution path that uses a novel non-parametric topic model (CIHDP) to achieve better dynamic topic detection and tracking of scientific literature. We performed a visual analysis of the evolution path based on D3.js. By incorporating citation information into the topic modeling process, the method combines textual and citation information. Using CIHDP, we mapped technological evolution paths and obtained more detailed and complete information on path splitting and fusion.

The method proposed in this paper is general and suitable for technology path mapping. In principle, CIHDP is designed to mine and analyze data that contain both textual information (such as the titles and abstracts of publications) and citation information, including commonly used paper and patent data. Therefore, CIHDP can also be applied to patent data. Considering data availability and standardization, we used paper data for the technology path analysis in this study.

It is worth mentioning that the method and process in this paper can also help to solve general technology management problems, such as (1) analyzing the overall development trend of technology in a field, (2) identifying mainstream or emerging technologies in the process of technology evolution, and (3) evaluating technology life cycles.

The key findings and contributions are as follows. First, this paper proposes a technology path mapping method based on an improved topic model and compares CIHDP with traditional methods (LDA and HDP). We used three data sets to evaluate the proposed method. The algorithm comparison and case study show that the proposed method finds more detailed and complete technical evolution path information, and that the identified evolution paths are more interpretable than those of HDP.

Second, CIHDP makes full use of the information in the literature data, taking into account both textual semantic information and citation information, and thus mines the data from a more comprehensive perspective. This method can identify technological evolution paths, and the experimental results also indicate that it effectively avoids the loss of information caused by single-perspective analysis.

Third, few previous studies have explained how to set the parameters of non-parametric Bayesian models such as HDP. In this paper, extensive orthogonal parameter experiments were carried out on three different data sets, providing reference values and a procedure that users of HDP and CIHDP can follow to set optimal parameters. In addition, traditional evaluation indexes for topic modeling algorithms (such as perplexity) are not sufficient to reveal the pros and cons of a model, so verification on actual cases should also be carried out.

Finally, this study conducted a visual analysis of the technology evolution path based on D3.js. We found this visualization method suitable for analyzing complex evolution paths; combining data mining with visual analysis makes it more efficient to discover the splitting and fusion of paths.

However, this study has limitations that point to future work. First, considering the workload and time, the case study uses the core literature of the AI research field compiled by the Aminer team, and the data set is small. In future work, we will apply the method to other fields and verify it on larger data sets. Second, this paper has conducted in-depth data mining on scientific and technological literature; to discover more complete path evolution information, different types of literature data could be fused and analyzed in the future. Third, scientific literature is rich in information, including not only text and citation relationships but also author co-occurrence, journal quality, and other factors. Incorporating these factors into the model could yield more accurate path information.

References

Adomavicius, G., Bockstedt, J. C., Gupta, A., & Kauffman, R. J. (2007). Technology roles and paths of influence in an ecosystem model of technology evolution. Information Technology and Management, 8(2), 185–202.

Aldous, D. J. (1985). Exchangeability and related topics. In: École d'Été de Probabilités de Saint-Flour XIII, 1983 (Lecture Notes in Mathematics, Vol. 1117, pp. 1–198). Springer.

Alsumait, L., Barbará, D., & Domeniconi, C. (2008). On-Line LDA: Adaptive topic models for mining text streams with applications to topic detection and tracking. In: Eighth IEEE international conference on data mining.

Amsler, R. A. (1972). Applications of citation-based automatic classification. Linguistics Research Center, University of Texas at Austin.

Blackwell, D., & MacQueen, J. B. (1973). Ferguson distributions via Pólya urn schemes. Annals of Statistics, 1(2), 353–355.

Blei, D. M., & Lafferty, J. D. (2006). Dynamic topic models. In: Proceedings of the twenty-third international conference on machine learning (ICML 2006).

Blei, D. M., Ng, A. Y., & Jordan, M. I. (2003). Latent Dirichlet allocation. Journal of Machine Learning Research, 3, 993–1022.

Bostock, M., Ogievetsky, V., & Heer, J. (2011). D³: Data-driven documents. IEEE Transactions on Visualization and Computer Graphics, 17(12), 2301–2309.

Braun, T., Glänzel, W., & Schubert, A. (2001). Publication and cooperation patterns of the authors of neuroscience journals. Scientometrics, 51(3), 499–510.

Calderone, A., & Cesareni, G. (2018). SPV: a javascript signaling pathway visualizer. Bioinformatics, 34(15), 2684–2686.

Callon, M., Courtial, J. P., Turner, W. A., & Bauin, S. (1983). From translations to problematic networks: An introduction to co-word analysis. Social Science Information, 22(2), 191–235.

Chang, J., & Blei, D. M. (2010). Hierarchical relational models for document networks. Annals of Applied Statistics, 4(1), 124–150.

Chen, C. (2006). CiteSpace II: Detecting and visualizing emerging trends and transient patterns in scientific literature. Journal of the American Society for Information Science and Technology, 57(3), 359–377.

Chen, J., Zhang, K., Zhou, Y., Chen, Z., Liu, Y., Tang, Z., et al. (2020). A novel topic model for documents by incorporating semantic relations between words. Soft Computing, 24(15), 11407–11423.

Chen, S.-H., Huang, M.-H., & Chen, D.-Z. (2013). Exploring technology evolution and transition characteristics of leading countries: A case of fuel cell field. Advanced Engineering Informatics, 27(3), 366–377.

Cheng, X., Yan, X., Lan, Y., & Guo, J. (2014). BTM: Topic modeling over short texts. IEEE Transactions on Knowledge and Data Engineering, 26(12), 2928–2941.

Cohn, D., & Hofmann, T. (2000). The missing link: A probabilistic model of document content and hypertext connectivity. In: International conference on neural information processing systems

Cui, W., Liu, S., Tan, L., Shi, C., Song, Y., Gao, Z., et al. (2011). TextFlow: Towards better understanding of evolving topics in text. IEEE Transactions on Visualization and Computer Graphics, 17(12), 2412–2421.

Dai, A. M., & Storkey, A. J. (2009). Author disambiguation: A nonparametric topic and co-authorship model. NIPS workshop on applications for topic models text and beyond.

Ding, W., & Chen, C. (2014). Dynamic topic detection and tracking: A comparison of HDP, C-word, and cocitation methods. Journal of the Association for Information Science and Technology, 65(10), 2084–2097.

Fu, X., Li, J., Yang, K., Cui, L., & Lei, Y. (2016). Dynamic Online HDP model for discovering evolutionary topics from Chinese social texts. Neurocomputing, 171, 412–424.

Griffiths, T. L., & Steyvers, M. (2004). Finding scientific topics. Proceedings of the National Academy of Sciences, 101(Suppl 1), 5228–5235.

Grover, A., & Leskovec, J. (2016). node2vec: Scalable feature learning for networks. In: Proceedings of the 22nd ACM SIGKDD international conference on knowledge discovery and data mining.

Guo, Y., Ma, T., Porter, A. L., & Huang, L. (2012). Text mining of information resources to inform forecasting innovation pathways. Technology Analysis & Strategic Management, 24(8), 843–861.

Havre, S., Hetzler, E., Whitney, P., & Nowell, L. (2002). ThemeRiver: Visualizing thematic changes in large document collections. IEEE Transactions on Visualization and Computer Graphics, 8(1), 9–20.

He, Q., Chen, B., Pei, J., Qiu, B., Mitra, P., & Giles, L. (2009). Detecting topic evolution in scientific literature: how can citations help? In: Proceedings of the 18th ACM conference on Information and knowledge management.

Heberle, H., Carazzolle, M. F., Telles, G. P., Meirelles, G. V., & Minghim, R. (2017). CellNetVis: A web tool for visualization of biological networks using force-directed layout constrained by cellular components. BMC Bioinformatics, 18(10), 395.

Heinrich, G. (2005). Parameter estimation for text analysis. Technical report.

Hofmann, T. (1999). Probabilistic latent semantic analysis. In: Fifteenth conference on uncertainty in artificial intelligence.

Huang, Y., Zhu, F., Guo, Y., Porter, A. L., Zhang, Y., & Zhu, D. (2016). Exploring technology evolution pathways to facilitate technology management: A study of dye-sensitized solar cells (DSSCs). In: 2016 Portland international conference on management of engineering and technology (PICMET).

Huang, Y., Zhu, F., Porter, A. L., Zhang, Y., Zhu, D., & Guo, Y. (2020). Exploring technology evolution pathways to facilitate technology management: From a technology life cycle perspective. IEEE Transactions on Engineering Management, PP(99), 1–13.

Jeh, G., & Widom, J. (2002). SimRank: A measure of structural-context similarity. In: Eighth ACM SIGKDD international conference on knowledge discovery & data mining.

Jeong, D. H., & Min, S. (2014). Time gap analysis by the topic model-based temporal technique. Journal of Informetrics, 8(3), 776–790.

Jie, T., Jing, Z., Yao, L., Li, J., Li, Z., & Zhong, S. (2008). ArnetMiner: Extraction and mining of academic social networks. In: ACM SIGKDD international conference on knowledge discovery & data mining.

Kajikawa, Y., Ohno, J., Takeda, Y., Matsushima, K., & Komiyama, H. (2007). Creating an academic landscape of sustainability science: An analysis of the citation network. Sustainability Science, 2(2), 221–231.

Kataria, S., Mitra, P., & Bhatia, S. (2010). Utilizing context in generative Bayesian models for linked corpus. In: Twenty-fourth AAAI conference on artificial intelligence.

Kessler, M. M. (1963). Bibliographic coupling between scientific papers. American Documentation, 14(1), 10–25.

Kim, M., Baek, S. H., & Song, M. (2018). Relation extraction for biological pathway construction using node2vec. BMC Bioinformatics, 19(Suppl 8), 206.

Kim, J., & Shin, J. (2018). Mapping extended technological trajectories: Integration of main path, derivative paths, and technology junctures. Scientometrics, 116(3), 1439–1459.

Kong, D., Zhou, Y., Liu, Y., & Xue, L. (2017). Using the data mining method to assess the innovation gap: A case of industrial robotics in a catching-up country. Technological Forecasting & Social Change, 119.

Li, C., Wang, H., Zhang, Z., Sun, A., & Ma, Z. (2016a). Topic modeling for short texts with auxiliary word embeddings. In: Proceedings of the 39th international ACM SIGIR conference on research and development in information retrieval (SIGIR ’16), pp. 165–174.

Li, X., Zhou, Y., Xue, L., & Huang, L. (2015). Integrating bibliometrics and roadmapping methods: A case of dye-sensitized solar cell technology-based industry in China. Technological Forecasting and Social Change, 97, 205–222.

Li, X., Zhou, Y., Xue, L., & Huang, L. (2016b). Roadmapping for industrial emergence and innovation gaps to catch-up: A patent-based analysis of OLED industry in China. International Journal of Technology Management, 72(1/2/3), 105.

Li, Y., Li, Y., Wang, J., & Sherratt, R. S. (2020). Sentiment analysis for E-commerce product reviews in Chinese based on sentiment lexicon and deep learning. IEEE Access, 8(1), 23522–23530.

Liu, Y., Wang, J., & Jiang, Y. (2016). PT-LDA: A latent variable model to predict personality traits of social network users. Neurocomputing, 210, 155–163.

Liu, Y., Zhou, Y., Liu, X., Dong, F., Wang, C., & Wang, Z. (2019). Wasserstein GAN-based small-sample augmentation for new-generation artificial intelligence: A case study of cancer-staging data in biology. Engineering, 2019(5), 156–163.

Malik, S., Smith, A., Hawes, T., Papadatos, P., Li, J., Dunne, C., & Shneiderman, B. (2013). TopicFlow: Visualizing topic alignment of Twitter data over time. In: Proceedings of the 2013 IEEE/ACM international conference on advances in social networks analysis and mining.

McCallum, A., Wang, X., & Corrada-Emmanuel, A. (2007). Topic and role discovery in social networks with experiments on Enron and academic email. Journal of Artificial Intelligence Research, 30(2), 249–272.

Miao, Z., Du, J., Dong, F., Liu, Y., & Wang, X. (2020). Identifying technology evolution pathways using topic variation detection based on patent data: A case study of 3D printing. Futures, 118, 102530.

Ming, Y., & Hsu, W. H. (2016). HDPauthor: A new hybrid author-topic model using latent Dirichlet allocation and hierarchical Dirichlet processes. In: International conference companion on world wide web.

Nallapati, R. M., Ahmed, A., Xing, E. P., & Cohen, W. W. (2008). Joint latent topic models for text and citations. In: ACM SIGKDD international conference on knowledge discovery & data mining.

Nordensvard, J., Zhou, Y., & Zhang, X. (2018). Innovation core, innovation semi-periphery and technology transfer: The case of wind energy patents. Energy Policy, 120, 213–227.

Pan, M., Zhou, Y., & Zhou, D. (2019). Comparing the innovation strategies of Chinese and European wind turbine firms through a patent lens. Environmental Innovation and Societal Transitions, 30, 6–18.

Perozzi, B., Al-Rfou, R., & Skiena, S. (2014). DeepWalk: Online learning of social representations. In: Proceedings of the 20th ACM SIGKDD international conference on knowledge discovery and data mining, pp. 701–710.

Rosen-Zvi, M., Griffiths, T. L., Steyvers, M., & Smyth, P. (2012). The author-topic model for authors and documents. In: Conference on uncertainty in artificial intelligence.

Small, H. (1973). Co-citation in the scientific literature: A new measure of the relationship between two documents. Journal of the American Society for Information Science, 24(4), 265–269.

Steyvers, M., Smyth, P., Rosen-Zvi, M., & Griffiths, T. (2004). Probabilistic author-topic models for information discovery. In: Tenth ACM SIGKDD international conference on knowledge discovery & data mining.

Teh, Y. W., Jordan, M. I., Beal, M. J., & Blei, D. M. (2006). Hierarchical Dirichlet processes. Journal of the American Statistical Association, 101(476), 1566–1581.

Wang, B., Liu, Y., Zhou, Y., & Wen, Z. (2018). Emerging nanogenerator technology in China: A review and forecast using integrating bibliometrics, patent analysis and technology roadmapping methods. Nano Energy, 46, 322–330.

Wei, C., Chaoran, L., Chuanyun, L., Lingkai, K., & Zaoli, Y. (2020). Tracing the evolution of 3-D printing technology in China using LDA-based patent abstract mining. IEEE Transactions on Engineering Management, PP, 1–14.

Wu, Y., Liu, S., Yan, K., Liu, M., & Wu, F. (2014). OpinionFlow: Visual analysis of opinion diffusion on social media. IEEE Transactions on Visualization and Computer Graphics, 20(12), 1763–1772.

Xiao, Y., Lu, L. Y., Liu, J. S., & Zhou, Z. (2014). Knowledge diffusion path analysis of data quality literature: A main path analysis. Journal of Informetrics, 8(3), 594–605.

Xu, H. (2020). Topic-linked innovation paths in science and technology. Journal of Informetrics, 14(2), 101014.

Xu, G., Hu, W., Qiao, Y., & Zhou, Y. (2020). Mapping an innovation ecosystem using network clustering and community identification: A multi-layered framework. Scientometrics, 124, 2057–2081. https://doi.org/10.1007/s11192-020-03543-0.

Xu, G., Wu, Y., Minshall, T., & Zhou, Y. (2017). Exploring the emerging ecosystem across science, technology and business: A case of 3D printing in China. Technological Forecasting and Social Change. https://doi.org/10.1016/j.techfore.2017.06.030.

Yao, Q., Song, Z., & Peng, C. (2011). Research on text categorization based on LDA. Computer Engineering and Applications, 47(13), 150–153.

Yau, C. K., Porter, A., Newman, N., & Suominen, A. (2014). Clustering scientific documents with topic modeling. Scientometrics, 100(3), 767–786.

Yu, J. (2011). From 3G to 4G: Technology evolution and path dynamics in China’s mobile telecommunication sector. Technology Analysis & Strategic Management, 23(10), 1079–1093.

Zhang, Y., Zhang, G., Chen, H., Porter, A. L., Zhu, D., & Lu, J. (2016). Topic analysis and forecasting for science, technology and innovation: Methodology with a case study focusing on big data research. Technological Forecasting and Social Change, 105, 179–191.

Zhao, P., Han, J., & Sun, Y. (2009). P-Rank: A comprehensive structural similarity measure over information networks. In: ACM conference on information & knowledge management.

Zhou, Y., & Minshall, T. (2014). Building global products and competing in innovation: The role of Chinese university spin-outs and required innovation capabilities. International Journal of Technology Management, 64(2), 180–209.

Zhou, Y., Dong, F., Kong, D., & Liu, Y. (2019b). Unfolding the convergence process of scientific knowledge for the early identification of emerging technologies. Technological Forecasting and Social Change, 144, 205–220.

Zhou, Y., Dong, F., Liu, Y., Li, Z., Du, J., & Zhang, L. (2020). Forecasting emerging technologies using data augmentation and deep learning. Scientometrics, 123(1), 1–29.

Zhou, Y., Li, X., Lema, R., & Urban, F. (2016). Comparing the knowledge bases of wind turbine firms in Asia and Europe: Patent trajectories, networks, and globalisation. Science and Public Policy, 43(4), 476–491. https://doi.org/10.1093/scipol/scv055.

Zhou, Y., Lin, H., Liu, Y., & Ding, W. (2019a). A novel method to identify emerging technologies using a semi-supervised topic clustering model: A case of 3D printing industry. Scientometrics, 120, 167.

Zhou, Y., Pan, M., & Urban, F. (2018). Comparing the international knowledge flow of China’s wind and solar photovoltaic (PV) industries: Patent analysis and implications for sustainable development. Sustainability, 10(6), 1883.

Funding

This work was supported by the National Natural Science Foundation of China (Nos. 71974107, 91646102, L1824043, L1924058, L1824039, L1724034), the MOE (Ministry of Education in China) Project of Humanities and Social Sciences (16JDGC011), the Construction Project of China Knowledge Center for Engineering Sciences and Technology (No. CKCEST-2020-2-5), the UK–China Industry Academia Partnership Program (UK-CIAPP/260) and Tsinghua University Project of Volvo-supported Green Economy and Sustainable Development (20153000181). The findings and observations contained in this paper are those of the authors and do not necessarily reflect the views of the National Natural Science Foundation.

Appendices

Appendix A: Hierarchical Dirichlet Process

The Hierarchical Dirichlet Process (HDP) is a non-parametric Bayesian topic model that allows a potentially infinite number of topics in the corpus. To understand the HDP, we first introduce the Dirichlet Process (DP).

The DP is a stochastic process whose draws are themselves probability distributions. It is parameterized by a concentration (scaling) parameter \(\gamma\) and a base probability measure H, and we write \(G_{0} \sim DP\left( {\gamma ,H} \right)\). The Chinese restaurant process (CRP) (Aldous 1985) offers an intuitive view of the Dirichlet process. Let \(\theta_{1} ,\theta_{2} , \ldots\) be a sequence of variables that, conditional on \(G_{0}\), are independent and identically distributed according to \(G_{0}\). In this metaphor, \(\theta_{i}\) is a customer entering a restaurant with infinitely many tables, each serving a unique dish \(\phi_{k}\). Each arriving customer sits at an occupied table with probability proportional to the number of customers already sitting there, denoted \(m_{k}\), or, with probability proportional to \(\gamma\), at a new, previously unoccupied table. Integrating out \(G_{0}\), this process can be written as follows (Blackwell and MacQueen 1973):

\[ \theta_{i} \mid \theta_{1}, \ldots, \theta_{i-1}, \gamma, H \sim \sum_{k=1}^{K} \frac{m_{k}}{i - 1 + \gamma}\,\delta_{\phi_{k}} + \frac{\gamma}{i - 1 + \gamma}\,H \]

where K is the number of tables occupied by the first \(i-1\) customers and \(\delta_{\phi_{k}}\) is a point mass at \(\phi_{k}\).
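The seating rule above can be simulated directly. The following Python sketch is illustrative only: the function name `chinese_restaurant_process` and its arguments are hypothetical, and it tracks only the table occupancies \(m_{k}\), not the dishes \(\phi_{k}\) that would be drawn from H.

```python
import random


def chinese_restaurant_process(n_customers, gamma, seed=0):
    """Seat customers one at a time following the CRP with concentration gamma.

    Returns a list whose k-th entry is m_k, the number of customers at table k.
    """
    rng = random.Random(seed)
    table_counts = []  # m_k for each occupied table
    for i in range(n_customers):
        # The arriving customer sees i seated customers; total weight is i + gamma.
        weights = table_counts + [gamma]  # last entry: open a new table
        choice = rng.choices(range(len(weights)), weights=weights, k=1)[0]
        if choice == len(table_counts):
            table_counts.append(1)  # new table (its dish would be a fresh draw from H)
        else:
            table_counts[choice] += 1  # join table `choice` with prob. m_k / (i + gamma)
    return table_counts
```

For example, `chinese_restaurant_process(1000, gamma=2.0)` returns the table sizes for 1,000 customers. The expected number of occupied tables is \(\sum_{i=0}^{n-1} \gamma/(\gamma + i)\), which grows only logarithmically in the number of customers n, so the number of clusters is driven by the data rather than fixed in advance.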

The Dirichlet process can be used to model grouped data, and the HDP links group-specific Dirichlet processes so that clusters can be shared among groups. The graphical model is shown in Fig. 8.

The HDP has a two-level DP structure. For each group j, \(G_{j}\) is distributed as a DP with concentration parameter \(\alpha\) and base distribution \(G_{0}\); \(G_{0}\) is in turn distributed as a DP with concentration parameter \(\gamma\) and base distribution H. Here J is the number of observed groups, \(n_{j}\) is the number of observed variables in group j, \(x_{ji}\) is the \(i\)th observed variable in group j, \(\theta_{ji}\) is the factor associated with \(x_{ji}\), and \(F\left( {\theta_{ji} } \right)\) is the distribution of \(x_{ji}\) given \(\theta_{ji}\). The generative model for the HDP is as follows:

\[ \begin{aligned} G_{0} \mid \gamma, H &\sim DP(\gamma, H) \\ G_{j} \mid \alpha, G_{0} &\sim DP(\alpha, G_{0}), \quad j = 1, \ldots, J \\ \theta_{ji} \mid G_{j} &\sim G_{j}, \quad i = 1, \ldots, n_{j} \\ x_{ji} \mid \theta_{ji} &\sim F(\theta_{ji}) \end{aligned} \]

To make the HDP concrete, we explain it in terms of a topic model over documents. In the HDP, the \(\theta_{ji}\) are exchangeable within each group, and the \(G_{j}\) are exchangeable across groups. H is taken to be a Dirichlet distribution whose dimension equals the vocabulary size; that is, it is a distribution over the uncountable set of term distributions. \(G_{0}\) is a distribution over a countably infinite number of topic-word distributions. For each document j, \(G_{j}\) is a distribution over a countably infinite number of categorical term distributions, i.e., the topic distribution of the document. Here, \(\theta_{ji}\) is a categorical distribution over terms, i.e., a topic, and \(x_{ji}\) is the observed \(i\)th word of document j.
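The generative story above can be sketched in Python with NumPy. This is a minimal sketch under stated assumptions, not the inference procedure used in this paper, and it models text only (no citation links, so it is a plain HDP rather than CIHDP). It truncates \(G_{0}\) to a finite number of topics by stick-breaking (a common approximation), takes H to be a symmetric Dirichlet with a hypothetical parameter `eta`, and relies on the fact that when \(G_{0}\) has finitely many atoms with weights \(\beta\), a draw \(G_{j} \sim DP(\alpha, G_{0})\) gives those atoms Dirichlet(\(\alpha\beta\)) weights. The function name `sample_hdp_corpus` and all default values are hypothetical.

```python
import numpy as np


def sample_hdp_corpus(n_docs, doc_len, vocab_size, gamma=1.0, alpha=1.0,
                      eta=0.1, truncation=30, seed=0):
    """Generate a toy corpus from a truncated HDP topic model (a sketch)."""
    rng = np.random.default_rng(seed)

    # G_0 ~ DP(gamma, H), approximated by truncated stick-breaking weights beta.
    v = rng.beta(1.0, gamma, size=truncation)
    v[-1] = 1.0  # close the stick so the truncated weights sum to one
    beta = v * np.concatenate(([1.0], np.cumprod(1.0 - v[:-1])))
    beta = np.maximum(beta, 1e-12)  # keep Dirichlet parameters strictly positive
    beta /= beta.sum()

    # H = symmetric Dirichlet(eta) over the vocabulary; its draws phi_k are topics.
    topics = rng.dirichlet(np.full(vocab_size, eta), size=truncation)

    docs = []
    for _ in range(n_docs):
        # G_j ~ DP(alpha, G_0) restricted to G_0's atoms is Dirichlet(alpha * beta).
        pi_j = rng.dirichlet(alpha * beta)
        # theta_ji: pick a topic index for every word slot of document j.
        z = rng.choice(truncation, size=doc_len, p=pi_j)
        # x_ji ~ F(theta_ji): draw each word from its topic's term distribution.
        words = [rng.choice(vocab_size, p=topics[k]) for k in z]
        docs.append(words)
    return beta, topics, docs
```

Calling `sample_hdp_corpus(n_docs=5, doc_len=40, vocab_size=25)` returns the global topic weights, the topic-word distributions, and five documents of word indices. Although up to `truncation` topics are available, the expected stick weights decay geometrically, so most of the probability mass typically falls on a few topics that all documents share.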

We can also understand the HDP through the Chinese restaurant franchise (Teh et al. 2006). In this metaphor, many restaurants share the same menu, which contains an unlimited number of dishes. Each restaurant has an unlimited number of tables, and each table can seat an unlimited number of customers but serves only one dish. In the description used here, dishes are assigned only after the customers of all restaurants have chosen their tables. As in the CRP, when the \(i\)th customer \(\theta_{ji}\) enters the \(j\)th restaurant, the customer chooses an occupied table or a new table according to

\[ \theta_{ji} \mid \theta_{j1}, \ldots, \theta_{j,i-1}, \alpha, G_{0} \sim \sum_{t=1}^{m_{j\cdot}} \frac{n_{jt\cdot}}{i - 1 + \alpha}\,\delta_{\psi_{jt}} + \frac{\alpha}{i - 1 + \alpha}\,G_{0} \]

where \(m_{j\cdot}\) is the number of occupied tables in the \(j\)th restaurant, \(n_{jt\cdot}\) is the number of customers at the \(t\)th table of the \(j\)th restaurant, \(\psi_{jt}\) is the dish served at the \(t\)th table of the \(j\)th restaurant, and \(\delta_{{\psi_{jt} }}\) is a probability measure concentrated at \(\psi_{jt}\).

Once all customers in all restaurants have been seated, a dish is chosen for each table in turn. Customers do not know in advance which dish is good, so they look at how often each dish has already been chosen: the more tables a dish has been served at, the more likely it is to be chosen again, while a new dish is chosen with probability proportional to \(\gamma\). Letting K be the number of distinct dishes served so far, this process follows

\[ \psi_{jt} \mid \psi_{11}, \psi_{12}, \ldots, \psi_{21}, \ldots, \psi_{j,t-1}, \gamma, H \sim \sum_{k=1}^{K} \frac{m_{\cdot k}}{m_{\cdot\cdot} + \gamma}\,\delta_{\phi_{k}} + \frac{\gamma}{m_{\cdot\cdot} + \gamma}\,H \]

where \(m_{\cdot k}\) is the number of tables, across all restaurants, that already serve the \(k\)th dish, \(m_{\cdot\cdot}\) is the total number of tables, across all restaurants, that have already been assigned a dish, and \(\phi_{k}\) is the \(k\)th dish.

In this metaphor, each restaurant corresponds to a document, each customer to a word, and each dish \(\phi_{k}\) to a global topic. Because the topics of every document are drawn from the same measure \(G_{0}\), global topics can be shared across all documents; and because of the two-level DP structure, each document can contain words from several different topics.
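To see how the table rule and the dish rule of the Chinese restaurant franchise combine, the sketch below computes, for the next customer (word) entering restaurant (document) j, the probability of being served each dish (topic) once \(G_{0}\) is integrated out. An occupied table contributes \(n_{jt\cdot}/(i-1+\alpha)\) to its dish, and the new-table mass \(\alpha/(i-1+\alpha)\) is split among existing dishes in proportion to \(m_{\cdot k}\) and a new dish in proportion to \(\gamma\). The function and argument names are hypothetical; exact fractions are used so the numbers can be checked by hand, and the code assumes every dish served in restaurant j also appears in the global table counts.

```python
from fractions import Fraction


def next_customer_dish_probs(restaurant_tables, dish_table_counts, alpha, gamma):
    """Probability that the next customer in restaurant j eats each dish.

    restaurant_tables: list of (n_jt, k_jt) pairs, i.e. the number of customers
        at table t of restaurant j and the index of the dish served there.
    dish_table_counts: dict k -> m_.k, tables serving dish k across all
        restaurants (including restaurant j's own tables).
    Returns a dict dish -> probability, with key "new" for an unseen dish.
    """
    n_j = sum(n for n, _ in restaurant_tables)  # i - 1 customers already seated
    m_total = sum(dish_table_counts.values())  # m_..
    new_table = Fraction(alpha) / (n_j + alpha)  # mass of opening a new table

    probs = {k: Fraction(0) for k in dish_table_counts}
    # Table rule: join occupied table t and eat its dish psi_jt.
    for n_jt, k_jt in restaurant_tables:
        probs[k_jt] += Fraction(n_jt) / (n_j + alpha)
    # Dish rule: a new table reuses dish k in proportion to m_.k ...
    for k, m_k in dish_table_counts.items():
        probs[k] += new_table * Fraction(m_k) / (m_total + gamma)
    # ... or orders a brand-new dish phi ~ H in proportion to gamma.
    probs["new"] = new_table * Fraction(gamma) / (m_total + gamma)
    return probs
```

With tables `[(3, 0), (1, 1)]` in restaurant j, global counts `{0: 2, 1: 1, 2: 3}`, \(\alpha = 1\) and \(\gamma = 2\), dish 0 receives probability 13/20, dish 1 receives 9/40, dish 2 receives 3/40 even though no table in restaurant j serves it, and a new dish receives 1/20. That nonzero mass on dish 2 is exactly the mechanism by which topics are shared across documents.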