Abstract

A small number of studies have sought to establish that research papers with more funding acknowledgements achieve higher impact and have claimed that such a link exists because research supported by more funding bodies undergoes more peer review. In this paper, a test of this link is made using recently available data from the Web of Science, a source of bibliographic data that now includes funding acknowledgements. The analysis uses 3,596 papers from a single year, 2009, and a single journal, the Journal of Biological Chemistry. Analysis of this data using OLS regression and two ranks tests reveals the link between count of funding acknowledgements and high impact papers to be statistically significant, but weak. It is concluded that count of funding acknowledgements should not be considered a reliable indicator of research impact at this level. Relatedly, indicators based on assumptions that may hold true at one level of analysis may not be appropriate at other levels.


Introduction

This paper seeks to examine an important bibliometric relationship that has been assumed to exist between the count of the funding acknowledgements received by a research paper and the paper’s impact. The relationship between the count of funding acknowledgements of a paper and its citation impact is examined within the context of a single journal. Such a focus upon the count of citations as a measure of the impact of a paper, rather than upon the impact factor, facilitates a more precise statistical test of the relationship between the count of funding sources and impact. Account is also taken in the analysis of background or implicit funding, which is indicated by the address information in the publication record.

The paper begins by reviewing the evidence on the link between the funding acknowledgements of papers and the impact of papers, and considers the reasons that have been given for such a relationship. An empirical study of a dataset of papers is then presented, the results of the analysis are given and discussed, and conclusions are drawn.

Literature

Funding acknowledgement data

Funding acknowledgement information on research papers identifies organisations that have supported the research that led to those publications. Information is provided by authors on their journal papers and bibliographic information companies compile it into their databases. Funding bodies may also keep records of papers that are assumed to result from their funding. Bibliographic databases have held this type of information for some time. The US Medline database which is made freely available through the PubMed service provides grant information on the indexed journals of its database. However, coverage of grant information is not complete. While in the PubMed database major international biomedical funders are identified, including from the United States, there are omissions. Likewise, the Web of Science also holds grant data, which are termed funding acknowledgement data. Again, not all funding acknowledgements in papers in the journals that are indexed are recorded in the electronic records of the Web of Knowledge although work is under way here, as in Medline, to extend coverage.

Currently in the Web of Science, funding acknowledgement data is comprised of three fields, information about the funding body, information about the grant, if available, and any further information to elaborate how the funds were employed. Funding acknowledgement data provides not only a link or relationship from organisations to papers but also through the property of co-occurrence, a means of associating the entities of the bibliographic record in new ways. For example, the new data make it possible to map the research outputs and priorities of different funding bodies to identify areas of common interest, and to facilitate comparisons between funders in terms of the impact achieved by the papers they fund.

The development of funding acknowledgement data

Before the recent advent of bibliographic databases, those wishing to use funding acknowledgement data could only acquire such information by manually trawling through the paper copies of published papers to scan the acknowledgements section for grant information and, if desired and when possible, cross-referencing to the databases of funding bodies whose support might have led to the papers. The difficulties inherent in this process, such as its large scale, the time intensity, the likelihood of omissions and errors, have led to attempts to use data mining methods to examine the published record to identify funding body and grant data information (Boyack 2004). Recently, as a result of increasing provision of funding data by Thomson Reuters and Medline within their respective databases, there has been a growing sense that there is now a new dimension to bibliometric data that can be systematically exploited for the purposes of evaluation and understanding scientific practice (Lewison 2009; Rigby 2011).

Uses of funding acknowledgement data

Funding acknowledgement information has been seen to be important both to evaluation and to those seeking to understand better the structures of science and processes of knowledge production. Naturally, there has been some overlap between evaluation and science studies in their respective consideration and use of funding acknowledgement data. But difficulties in generating large and reliable data sets have prevented the systematic examination of this question.

Use in evaluation

Research evaluation has used funding data for a variety of purposes, but generally for two main ones, finding out what has been done, and making comparisons to judge quality and support strategy making. In terms of locating research output, funding acknowledgement data on publications or within returns to funding bodies has been used as a means of tracing what research has been done, and indeed whether any research has been carried out at all (Albrecht 2009). Finding out what research has been undertaken as well as attributing research from particular funding bodies has been the first step in answering questions about the quality of research achieved by one organisation’s funding compared with various baselines. These comparisons have included comparisons with other programmes, countries and funding instruments (Rangnekar 2005) (van Leeuwen et al. 2001); and whether the funding bodies obtained the best researchers to carry out the research (van Leeuwen et al. 2001) (Campbell et al. 2010) (van der Velde et al. 2010). Also there have been a series of other enquiries concerning value for money, cost of a publication, and impact upon other scientists who subsequently cited it (Lewison 1994). Funding acknowledgment data can also be used as a way of answering strategic questions about the strength and depth of research in particular contexts (Lewison and Markusova 2010).

However, while funding acknowledgement data should provide a link with acknowledgement of funding or funding body, such a link is, in practice, difficult to prove. Correct attribution of funding is likely to be related to the extent to which a particular grant has been instrumental in the work (Lewison 1994) but establishing just how important a particular source or grant was may not be simply related to quantity of funding given or the eminence of the funding body. Indeed, generally attribution of outputs to grants appears to remain difficult, as Butler has noted (Butler 2001).

Emerging within this largely evaluative body of work has been the distinctive claim by Lewison of a link between the count of funding acknowledgements of a paper and its citation impact. This work has contributed to a larger debate about what leads to citation impact, one of the essential projects in science studies in which a great many factors have been considered.

Critical examinations of funding and impact relationship

The relationship between funding and research impact has been explored by a number of scholars, with the predominant and not unreasonable assumption being made by many that, as resources such as funding provide the conditions for research to be undertaken (Pao 1991), funding leads to impact and therefore quality. Indeed, research policy makers have generally allowed research funding to stand as a measure of research impact, and recently, in Germany, where greater autonomy is being given to universities, research income from third parties is now employed as an indicator of research performance (Hornbostel 2001).

It is the work of Lewison and Dawson (1998) and Lewison and Devey (1999) which has established the link between the count of funding sources and the impact of papers. Their analysis adopts the principle of using a classification of research by research level employed by Narin et al. (1976) as a quality measure. In their 1998 paper, Lewison and Dawson suggest the causal process for the outcome they observe: “The result for the number of funding bodies tends to confirm the original hypothesis that multiple funding acknowledgements are correlated with research impact because their presence in the acknowledgements of a paper shows that the work has passed one or more screening processes, mostly through peer review.” page 25 (Lewison and Dawson 1998). Other work notes similar links between reputation of the funding body, the number of funding bodies and the mean rank of the papers (Lewison and Dawson 1998; Lewison et al. 2001; Boyack 2004). Lewison and van Rooyen (1999) also seek to rule out the effect of reviewer bias that might favour papers with more funding acknowledgements, providing evidence that the link between the count and identity of funding acknowledgements and quality is a real effect and not an issue of selection bias (by journal or funding referees).

Bourke and Butler (1999) also found an association between the nature of funding sources of a paper and its citation impact, but a more important predictor in their data was the form of employment of the member of staff. A study conducted not at the specific level of papers but at the level of individual scholars (Sandstrom 2009) did find a link between the quantity of funding and the overall quantity and quality of papers but the study did not formally examine the identities of the funding bodies from which grants had been obtained. However, neither the earlier systematic study of factors associated with citation by Baldi (1998) nor the recent study, limited to the field of social and personality psychology (Haslam et al. 2008), found evidence of a link between the funded status of papers and their citedness.

Approaches that contradict or cast doubt on the positive relationship between count of funding and impact comprise both case studies and bibliometric analysis. The case study approach by Heinze et al. (2009), examining two fields of science, has argued against an explicit link between quantity of research funding and impact, and in favour of flexibility in how grants can be used. This research also emphasized the importance of long term funding. By contrast, the study by Tatsioni et al. (2010) on the importance of funding of the work for which Nobel Prizes were awarded suggests that a substantial proportion of the very highest-level work was “unfunded”. This latter piece of work led to a controversy over the role of NIH in funding breakthrough research (Berg 2008; Capecchi 2008).

Importance of link between funding and impact

The claim that the count and the identities of funding sources should have a link to the quality of research produced reflects powerful and commonly held assumptions that have prima facie credibility and underpin scientific practice and research policy, extending to its systems of reward at many levels. However, evidence from the literature is that such a link, observable either bibliometrically or through other forms of evidence about the conduct and outcomes of scientific practice, is not established beyond question. Moreover, the mechanism proposed to explain the link, namely that more funding for a research activity implies greater peer review of the research proposals and that this then leads to higher quality in the form of a greater number of citations of publications, has both supporting and contrary evidence.

Given the importance of this question to evaluation and policy it is therefore proposed to re-examine the issue, taking advantage of the systematic data now available in the Web of Science. Rather than undertaking a case study based study, the approach here is to focus at the bibliometric level and examine the link between count of funding acknowledgements and citation impact of publications.

Making tests of the link between funding acknowledgements of a paper and a paper’s impact

Statistical tests that establish links between impact of papers and other variables are more reliable when citation counts (adjusted for elapsed time) are used instead of aggregated or gross measures such as impact factors or research level (Narin et al. 1976). More objective tests of the relationship of funded status, including the count of funding acknowledgements, with citation impact are those which focus upon a single journal, cover a period long enough for papers to acquire sufficient citations for there to be an effect to observe, and use papers all of one kind (i.e. not a mix of review papers and cases, or of different research levels (Narin et al. 1976)) to control for refereeing effects (peer review effects) a posteriori.

Methods and data sources

The central question pursued by the research reported here is whether funded status and count of funding acknowledgements are related to or independent of the impact of papers and, if a relation is found, to measure its extent and to consider any other likely covariates with explanatory power over the dependent variable. While earlier approaches have observed evidence of a link between the impact factor and a paper’s citedness on the one hand and the count of a paper’s funding acknowledgements on the other (Lewison and Dawson 1998), here the test of the relationship is confined to the publications within a single journal and a single year. The test carried out here can focus more closely on the effect of the count of funding acknowledgements on the citation count of the individual paper by using data from a large and homogeneous data set in which there is close similarity of subject matter and type of publication.

While it is recognized that there is some relationship between the individual impact of the papers in a journal and the journal’s impact factor statistic (whether current or 5 year), it is now possible, given the opportunity to examine the publications within a single journal, to avoid the use of impact factors. Any analysis of papers within a single journal should nevertheless take into account differences in the form of academic output, as journals commonly include papers of different types. The analysis used data about only one type of paper, including only research papers in the sample and excluding review papers, letters, case reports and book reviews, as these latter forms of output result from work that may have different funding mechanisms.

Normalization of the citation impact by reference to the journal impact factor has not been required here as all papers are from the same journal. However, account must be taken of elapsed time, as publications taken over a period of a year will include papers that have had less time to accumulate citations. A method of accounting for elapsed time in the analysis is introduced below.

Selection of papers

Papers from the whole of 2009 were identified in the scientific journal the Journal of Biological Chemistry. The analysis was carried out on the Journal of Biological Chemistry for the following reasons: coverage of the funding record was extensive; the number of publications was large enough to give scope for the observation of relatively small effects; on first inspection, a significant number of papers had funding acknowledgements and the frequency count of funding acknowledgements for the papers showed a large number with high counts of funding acknowledgements; the proportion of cited papers was large, giving scope to identify differences in the impact of publications; and the journal, as the pre-eminent journal of the subject area, was assumed to have a strong quality threshold for papers and an effective and standardized peer review process that would lead to consistency of quality. Papers that were research papers and articles were identified, while review papers were excluded from the data set, as review papers are generally less likely to be written with the assistance of funding. The citation counts of the set of papers were then collected, as was other relevant information concerning authors, funding bodies and the addresses of the authors of the paper. Information was also collected about the point in time when the paper was published, from the date and the journal volume and issue number. This allowed the time between the moment when the citation counts were made (2nd February 2012, the date of download) and the date of publication of the paper to be determined. An assessment of the elapsed time from the point of publication was then made using issue numbers, which are allocated on a monthly basis.
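The elapsed-time step can be sketched as follows. This is a minimal illustration rather than the procedure actually used: it assumes that issue n of 2009 corresponds to calendar month n, and the function name `elapsed_months` is hypothetical.

```python
from datetime import date

# Date of download of the citation counts, as given in the text.
CENSUS_DATE = date(2012, 2, 2)


def elapsed_months(issue_number: int, year: int = 2009) -> int:
    """Whole months between an issue's publication month and the census month.

    Assumption: issue n is published in calendar month n of `year`.
    """
    if not 1 <= issue_number <= 12:
        raise ValueError("issue_number must be between 1 and 12")
    return (CENSUS_DATE.year - year) * 12 + (CENSUS_DATE.month - issue_number)
```

Under this assumption the earliest papers in the set have had 37 months to accumulate citations and the latest 26 months, which is why raw citation counts cannot be compared directly.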

A data file was then created with the following fields to facilitate the analysis:

Index variables

(a) Paper number (a unique record number from 1 to 3,596 created for the analysis of the data set, although the Thomson unique identifier, UT, could have been used);

(b) Issue number in which the article appeared, corresponding to the month of publication;

Citation/impact relevant variables

(c) Response variable

A response variable to measure citation impact was developed from the actual citation counts by adjusting them to a standardized citation rate that takes account of the elapsed time. The first step in this process was to regress the mean of the total citations for each time period of a month (a journal issue) on the elapsed time, measured in months from the date of census. This regression was carried out using the weighted least squares approach, as mean citation rates for each elapsed time period had different variances. The regression and equation are shown below (Fig. 1; Table 1).

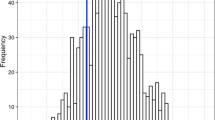
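As a rough illustration of the weighted least squares step, the sketch below fits a straight line of mean citations per issue against elapsed months. The weights are assumed here to be the inverse variances of the per-issue means, which the text does not specify; the function name and the toy figures are invented for illustration and are not the paper's data.

```python
def weighted_least_squares(x, y, w):
    """Fit y = intercept + slope * x, minimising sum(w_i * residual_i ** 2)."""
    total_w = sum(w)
    # Weighted means of x and y.
    x_bar = sum(wi * xi for wi, xi in zip(w, x)) / total_w
    y_bar = sum(wi * yi for wi, yi in zip(w, y)) / total_w
    # Weighted cross-product and sum of squares about the means.
    sxy = sum(wi * (xi - x_bar) * (yi - y_bar) for wi, xi, yi in zip(w, x, y))
    sxx = sum(wi * (xi - x_bar) ** 2 for wi, xi in zip(w, x))
    slope = sxy / sxx
    intercept = y_bar - slope * x_bar
    return intercept, slope


# Toy data (hypothetical): mean citations for four issues and their variances.
months = [26, 29, 32, 35]
mean_citations = [7.8, 8.7, 9.6, 10.5]
variances = [4.0, 5.0, 6.5, 9.0]
weights = [1.0 / v for v in variances]  # assumed inverse-variance weights

intercept, slope = weighted_least_squares(months, mean_citations, weights)
```

Issues whose mean citation rate is estimated with more noise receive less weight, so they pull the fitted line less than they would under ordinary least squares.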

The model fitted to the data could then be used to standardize the actual counts, producing a standardized citation count for each paper that could be used in an OLS regression. As the distribution of this standardized variable was skewed, as is often the case with citation counts (Leydesdorff and Bensman 2006), the log transformed variable was used as the response variable. The distribution of the transformed variable is shown below. The distribution approximates normality, with a small level of kurtosis and some negative skew. In order to avoid significant negative skew in the distribution of transformed values for citation impact arising from the presence of 66 papers in the data set without citations, the standardized value was calculated as (actual citations + 1) divided by the expected citation count (Fig. 2; Table 2).
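The standardization and transformation can be sketched as below. This is an illustration under stated assumptions: the natural logarithm is used because the text does not give the base, and `standardized_log_impact` is a hypothetical name. The expected count would come from the fitted time model for the paper's issue.

```python
import math


def standardized_log_impact(citations: int, expected: float) -> float:
    """Log of (citations + 1) / expected citations.

    Adding one keeps uncited papers finite after the log transform.
    Assumption: natural logarithm.
    """
    if citations < 0:
        raise ValueError("citations must be non-negative")
    if expected <= 0:
        raise ValueError("expected must be positive")
    return math.log((citations + 1) / expected)
```

A paper whose citations plus one equal the expected count for its issue scores zero; positive values indicate more citations than expected for the elapsed time, negative values fewer.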

Related to production of the paper

(d) Count of authors;

(e) Count of countries involved;

(f) Count of institutions;

(g) Count of countries;

Related to the production of the paper—funding specific

Independent variables measuring funding influence upon the publications were developed to cover implicit funding and explicit funding, given the possible importance of core funding, i.e. implicit funding, in the production of the papers. A variable for implicit funding was created by examining the addresses of all authors in the data set, with the aid of the data mining programme VantagePoint and with further examination by eye, and counting as an instance of “implicit funding” any address that contained the details of a government laboratory, research institute or firm. Laboratories were identified by the presence of the word “lab” or “laboratory”, research institutes by the word “institute” and firms by the string “Ltd” or “Co”. The purpose of this coding was to attempt to identify and quantify the influence upon each paper of work carried out by individuals whose funding was core funding or implicit funding. An explicit funding variable was also quantified such that for each paper there was a count of the number of funding acknowledgements received.

(h) Count of implicit funding: the count of author addresses in which the address field contained the address of a laboratory, research institute or company;

(i) Count of explicit funding: the count of funding bodies explicitly acknowledged in each paper as supporting the research.
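The keyword rule for implicit funding could be approximated as in the sketch below. It is not the VantagePoint workflow, and it omits the check by eye that the study also applied. Matching on whole words, and treating “Ltd” and “Co” as case-sensitive, are assumptions made here to avoid false hits such as “Colorado”; the example addresses are invented.

```python
import re

# Laboratories and institutes: whole words, any capitalisation (assumption).
LAB_OR_INSTITUTE = re.compile(r"\b(?:lab|laboratory|institute)\b", re.IGNORECASE)
# Firms: the strings "Ltd" or "Co" as whole words, case-sensitive (assumption).
FIRM = re.compile(r"\b(?:Ltd|Co)\b")


def count_implicit_funding(addresses):
    """Count addresses that look like a laboratory, research institute or firm."""
    return sum(
        1 for address in addresses
        if LAB_OR_INSTITUTE.search(address) or FIRM.search(address)
    )


# Hypothetical author addresses for one paper.
example_addresses = [
    "Univ Manchester, Sch Chem, Manchester, England",
    "Natl Inst Hlth, Lab Mol Biol, Bethesda, MD USA",
    "Max Planck Institute Biochem, Martinsried, Germany",
    "Acme Pharma Co Ltd, Tokyo, Japan",
    "Univ Colorado, Boulder, CO USA",
]
implicit_count = count_implicit_funding(example_addresses)
```

A purely automatic rule of this kind will miss abbreviated forms such as “Inst” and may admit university laboratories, which is presumably why manual inspection accompanied the automated coding.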

General features of the data

Of the 3,596 papers, 66 (1.8 %) were uncited. One paper had 240 citations. The count of citations has a mean of 10.2, a mode of 6 and a variance of 100, and is positively skewed with a high level of kurtosis (Table 3).

The following table analyses papers by the number of their funding acknowledgements: implicit, explicit and both kinds. In total, 2,175 papers had no implicit sources, while 237 papers had no explicit sources; 128 papers had neither. Across the whole set of papers, 9,951 sources were indicated, of which 1,945 (19.5 %) were implicit and 8,006 (80.5 %) were explicit. Explicit sources are therefore more common on papers than implicit sources. Multiplying the count of acknowledgements in the left column by the frequency count of papers in the next three columns gives the actual totals of funding acknowledgements for the three categories of papers (Table 4).

Papers were subdivided into four mutually exclusive categories according to their funding status: no funding either implicit or explicit (1), implicit only (2), explicit only (3) and explicit and implicit (4). The distribution of papers across these sets of papers is shown below. It can be seen that the number of papers noted as without funding either implicit or explicit (128 papers) corresponds to the papers in the table above whose count of funding is 0.
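The four-way classification can be written as a small function, shown here as a sketch with a hypothetical name. The second part derives the category sizes implied by the counts quoted in the text (3,596 papers, 2,175 without implicit sources, 237 without explicit sources, 128 with neither); these are arithmetic consequences of those counts, not figures copied from the paper's tables.

```python
def funding_status(implicit_count: int, explicit_count: int) -> int:
    """Return the funding category (1 to 4) used in the text."""
    if implicit_count == 0 and explicit_count == 0:
        return 1  # no funding, implicit or explicit
    if explicit_count == 0:
        return 2  # implicit only
    if implicit_count == 0:
        return 3  # explicit only
    return 4      # explicit and implicit


# Category sizes implied by the counts quoted in the text.
TOTAL, NO_IMPLICIT, NO_EXPLICIT, NEITHER = 3596, 2175, 237, 128
implied_sizes = {
    1: NEITHER,
    2: NO_EXPLICIT - NEITHER,  # no explicit source, but an implicit one
    3: NO_IMPLICIT - NEITHER,  # no implicit source, but an explicit one
}
implied_sizes[4] = TOTAL - sum(implied_sizes.values())
```

On these figures the explicit-only group is by far the largest and the unfunded group the smallest, so any comparison across the four groups involves very unequal group sizes.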

Analysis of the data

Two forms of analysis were carried out: an OLS regression using the response variable described above (Log Transformed Standardized Citation Impact) and two non-parametric ranks tests. The OLS regression used the response variable and a forced selection of the independent variables related to the production of the paper, including the funding of the paper. The first ranks test compared papers in terms of their citation impact, measured by the response variable, when grouped by category of funding as shown in Table 5 (distribution of papers by funded status). The second ranks test compared papers without funding acknowledged, either implicit or explicit (128 papers), with papers that had funding acknowledged (3,468 papers). The ranks tests could have employed the untransformed variable without making any difference to the result (Table 6).

OLS regression

The model that was tested used variables to indicate the implicit funding, explicit funding, the count of authors, the count of countries and a number of variables relating to the paper itself (count of pages and count of cited references). The model is statistically significant but accounts for relatively little of the variance of citation impact (Table 7).

The coefficients with influence upon the response variable were the count of explicit funding, the count of authors and the count of cited references. Implicit funding is not linked here to greater or lesser impact, although its coefficient is estimated as negative. Other variables without influence upon the citation impact are the number of countries from which the authors of a paper come and the length of the paper (Table 8).
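For readers unfamiliar with the mechanics, a forced-entry OLS fit of this kind can be sketched in plain Python by solving the normal equations. This stands in for whatever statistical package was actually used, which the text does not name; the function name and the toy rows (two hypothetical predictors, such as counts of explicit funding and of authors) are illustrative only, and no standard errors or significance tests are computed.

```python
def ols(rows, y):
    """Least squares coefficients, intercept first.

    `rows` holds one list of predictor values per paper. A constant column is
    added, then the normal equations (A^T A) b = A^T y are solved by
    Gauss-Jordan elimination with partial pivoting.
    """
    design = [[1.0] + [float(v) for v in row] for row in rows]
    k = len(design[0])
    # Augmented matrix [A^T A | A^T y].
    m = [
        [sum(r[i] * r[j] for r in design) for j in range(k)]
        + [sum(r[i] * yi for r, yi in zip(design, y))]
        for i in range(k)
    ]
    for col in range(k):
        pivot = max(range(col, k), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-12:
            raise ValueError("predictors are collinear")
        m[col], m[pivot] = m[pivot], m[col]
        divisor = m[col][col]
        m[col] = [v / divisor for v in m[col]]
        for r in range(k):
            if r != col:
                factor = m[r][col]
                m[r] = [a - factor * b for a, b in zip(m[r], m[col])]
    return [m[i][k] for i in range(k)]


# Toy data (hypothetical): the response is exactly 1 + 2*x1 - 0.5*x2.
toy_rows = [[1, 2], [2, 1], [3, 5], [4, 3], [5, 8]]
toy_response = [1 + 2 * x1 - 0.5 * x2 for x1, x2 in toy_rows]
coefficients = ols(toy_rows, toy_response)
```

Because all predictors are entered together, each coefficient describes the association of one variable with citation impact while the others are held fixed.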

Ranks test

To further investigate the role of funded status upon the citation impact, two ranks tests were carried out, one comparing four groups of papers, the other comparing two groups of papers (Tables 9, 10).

The Kruskal–Wallis test of ranks indicates no statistically significant relationship between the funded status of papers and the citation impact of papers. The Mann–Whitney test shown below likewise indicates that funded status makes no difference to the impact of papers (Tables 11, 12).
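The two rank statistics can be computed as in the sketch below, written in plain Python for transparency. It returns only the test statistics: the Kruskal–Wallis H here omits the correction for ties, and no p-values are calculated. In practice a statistics library would normally be used (SciPy, for instance, provides `scipy.stats.kruskal` and `scipy.stats.mannwhitneyu`). The function names and toy values are hypothetical.

```python
def average_ranks(values):
    """Ranks starting at 1, with tied values sharing their mean rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1
        for pos in range(i, j + 1):
            ranks[order[pos]] = mean_rank
        i = j + 1
    return ranks


def kruskal_wallis_h(groups):
    """Kruskal-Wallis H statistic for several groups (no tie correction)."""
    pooled = [v for group in groups for v in group]
    ranks = average_ranks(pooled)
    n = len(pooled)
    total, start = 0.0, 0
    for group in groups:
        rank_sum = sum(ranks[start:start + len(group)])
        total += rank_sum ** 2 / len(group)
        start += len(group)
    return 12 / (n * (n + 1)) * total - 3 * (n + 1)


def mann_whitney_u(first, second):
    """Mann-Whitney U statistic for the first of two groups."""
    ranks = average_ranks(list(first) + list(second))
    rank_sum_first = sum(ranks[:len(first)])
    return rank_sum_first - len(first) * (len(first) + 1) / 2
```

Both statistics depend only on the ordering of the values, which is why a monotonic transformation such as the logarithm leaves the result of a ranks test unchanged.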

Discussion

The evidence presented above has been obtained from a focused analysis of the papers from a single journal. This approach aimed to ensure as reliable and accurate a test as possible of the relationship between the counts of funding acknowledgements of papers (explicit and implicit) and their citation impact. The analysis suggests that the count of explicit funding acknowledgements is statistically significantly related at this scale of analysis to the citation impact of papers, but has an extremely small influence upon impact. It is only with multivariate methods that the link between funding and impact is visible. The two ranks tests carried out on the data suggest that no link exists: papers with funding acknowledgements of either the explicit or the implicit kind, or of both kinds, are no more or less likely to have a greater citation impact than papers without funding acknowledgements (i.e. papers that have a count of funding acknowledgements of 0).

It may therefore be suggested that the count of funding acknowledgements on a paper as an indicator of quality, a view based on the assumption of funding being associated with greater peer review of a research proposal, may need some re-examination. Data presented above gives little credible evidence that the most highly cited papers are those that result where there is funding acknowledged. If the count of grants received in terms of explicit funding and the count of implicit funding received does not indicate the extent of quality control of research, where do differences in impact arise? Could such differences in citation impact arise after the fact, once the funding has been allocated and the research is being done and cited? The dynamic character of scientific knowledge discovery and its uncertainty of outcome suggest this is more likely as an explanation.

Limitations and considerations of the study

There are a number of limitations in a study such as this which concern how well the analysis has covered the relationship of the key dependent variable of citation impact with the role of the count of funding acknowledgements.

The first of these limitations concerns the elapsed time from the date of publication. This study is limited to the available data and none is available prior to 2009. However, if data for a longer period were available, a stronger relationship between the count of funding acknowledgements and citation impact might be found. It is however more likely in the period immediately after publication that papers with more funding acknowledgements become more well-known than papers without funding and are therefore more cited. If, as is widely assumed, papers ultimately acquire citations based upon their usefulness and less upon their being known by the community, on the basis of the analysis carried out here, the already weak relationship between funded status and impact might be seen to reduce further if data from a longer period of time were to be used.

The second and very clear limitation of a study such as this is that no account is taken of the identity of the authors writing the papers or of the institutions in which such authors are based, although such factors could significantly influence citation impact. Controlling for authors is desirable, but is in practice very difficult to achieve, particularly given multiple authorship of papers. Differences in the impact of publications do generally arise from the capacities of the authors and institutions and the unique combinations of authors that carry out the research and write the papers. Such differences in the quality of the applicants for funding may be the significant causal variable, with funding acknowledgements on papers reflecting the quality of the researchers and their specific application for funds, rather than the funding acknowledgements themselves having any, or even a substantial, influence upon impact.

If authors and the combinations of authors are the key influence upon quality, the count of funding bodies could be a token of quality, i.e. an indicator of the quality of the application itself rather than a direct influence. The count of funding acknowledgements would then constitute a mediating variable, with the quality of the original funding application mediated through a “peer review” effect operating by way of the scrutiny by the reviewers of multiple grant awarding bodies.

Thirdly, the effect observed in this journal may not be typical of the effects present in other journals and subject categories. While the size of the database used here is large, it is confined to a single journal.

Fourthly, the analysis carried out makes the assumption that funding acknowledgement data as reported by authors and identified in journals and recorded by the Web of Science reflects the actual acknowledgements of funding which supported a paper. It may however be the case that such data is in fact erroneously recorded by authors.

Fifthly, core funding by institutions may not be properly recognized in this analysis, although account has been taken of organisations that provide such funding through an analysis of implicit funding. Very little research is undertaken without some resources being used; papers that do not carry funding acknowledgements may nevertheless be funded by researchers’ host organisations. However, such implicit funding would not normally constitute a peer review influence upon the quality of research as explicit in character as would the peer review process of a formal grant awarding body such as NIH, or the MRC.

Sixthly, limitation of the data set to papers in a single journal located within two scientific fields may limit the range of funding organisations from which resources are received by research teams, although no comparisons with other journals or fields have been made. The presence of such a limit to the number of organisations funding the papers in this data set might also reduce the number of types of organisations. Such a reduction in the types of funding bodies involved may result in a more meaningful measure of the impact of funding sources on quality, increasing confidence in the validity of estimates of an effect of count of funding on citation impact.

Finally, it might be argued that a true measure of the impact of research funding needs to take into account not only the citations of publications produced, but also the quantity of the publications produced, and potentially, impacts of training and skills development which may not yield increased citations to a paper. The research undertaken here has not carried out analysis that takes both quantity and quality into account. Such a step would require a great deal of additional information not presently collected either by journals, Thomson Reuters’ Web of Knowledge or Medline. Were grant information and the amount of funding to be provided, such an analysis could be carried out since the publications, and their impact could be linked directly to funding.

Conclusions

This paper has sought to examine the relationship between funding of research, as evidenced by the count of funding acknowledgements on the paper, and the extent of a paper’s impact, measured here by the count of citations. The aim has been to examine in detail the relationship between the count of funding acknowledgements and the impact of publications, using actual citation impacts rather than journal impact factors, and taking the entire publication set of a foremost journal of the biochemical sciences for a single year, with citations accumulated over roughly two and a half years.

The relationships found between the count of funding acknowledgements and the impact of papers suggest little, if any, peer review effect on the quality of publications. Other factors, such as the quality of the researchers involved and the post-publication activities of other researchers, may exert more of an influence upon quality than the enhanced peer review effect of multiple grant-awarding bodies.
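The distinction between a relationship that is statistically detectable and one that is strong can be illustrated with a minimal sketch. This is not the analysis code used in this paper: the data are synthetic, and the function names `ols_fit` and `synthetic_papers`, the assumed effect size (0.03 log-citations per funder) and the noise level are all hypothetical choices made only for illustration. The sketch fits a one-variable OLS regression of log citations on count of funding acknowledgements and reports the slope, its t-statistic and the share of variance explained.

```python
import math
import random


def ols_fit(x, y):
    """Fit y = a + b*x by ordinary least squares.

    Returns (intercept, slope, r_squared, t_statistic_of_slope).
    """
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    syy = sum((yi - mean_y) ** 2 for yi in y)

    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    r_squared = (sxy * sxy) / (sxx * syy) if syy > 0 else 1.0

    # Residual sum of squares; clamp tiny negative rounding errors to zero.
    rss = max(syy - slope * sxy, 0.0)
    std_err = math.sqrt(rss / (n - 2) / sxx)
    t_stat = slope / std_err if std_err > 0 else math.inf
    return intercept, slope, r_squared, t_stat


def synthetic_papers(n, effect, noise_sd, seed=0):
    """Generate hypothetical (funder count, log citations) pairs.

    The effect size and noise level are assumptions, not estimates
    taken from the paper.
    """
    rng = random.Random(seed)
    funders = [rng.randint(0, 8) for _ in range(n)]
    log_cites = [1.5 + effect * f + rng.gauss(0.0, noise_sd) for f in funders]
    return funders, log_cites


if __name__ == "__main__":
    # Sample size matches the number of papers analysed; all else is assumed.
    funders, log_cites = synthetic_papers(n=3596, effect=0.03, noise_sd=0.8)
    a, b, r2, t = ols_fit(funders, log_cites)
    print(f"slope={b:.4f}  t={t:.2f}  R^2={r2:.4f}")
```

With a sample of several thousand papers, even a small slope can produce a large t-statistic while the share of variance explained stays very low, which is the sense in which a link can be significant but weak.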

The evidence suggests that the link observed by some, but not all, other scholars is very weak at the level of a specific journal. Further work would appear to be justified to determine whether an effect is visible across a larger number of journals in the same field and across scientific fields, using citation impact rather than aggregate measures such as impact factors.

The paper also has implications for indicator development within science policy. Where indicators are used, it is regularities of relationship between variables, positive or negative, that provide the basis upon which indicators can be created. This paper has argued that while a relationship may be found at certain levels of aggregation, analysis of empirical data presented here shows the relationship between funding and impact to be negligible at the level of the individual journal. It is suggested therefore that indicators are only useful when they can be shown to apply at the level at which they are to be used.

Finally, while multiple awards, which give a paper a higher count of funding acknowledgements, might appear to be a sign of quality, the need to manage a multiplicity of funds for research projects and to subject the research to the possibly contrasting and conflicting criteria of different funding bodies may in fact constrain, rather than enhance, the quality of research. The association between grant winning and carrying out research is a commonplace of research policy and practice, and it leads to the simplistic assumption that more funding leads to work of higher quality. However, the evidence presented here is that such a relationship, measured at this scale and in terms of citation counts, is evident but weak and questionable. Further investigation into the connection between resource input, the identity of funding bodies and other variables noted in the literature would appear timely.

References

Albrecht, C. (2009). A bibliometric analysis of research publications funded partially by the Cancer Association of South Africa (CANSA) during a 10-year period (1994–2003). South African Family Practice, 73(1), 73–76.

Baldi, S. (1998). Normative versus social constructivist processes in the allocation of citations: A network-analytic model. American Sociological Review, 63(6), 829–846.

Berg, J. M. (2008). A Nobel lesson: The grant behind the prize. Science, 319(5865), 900. (letter).

Bourke, P., & Butler, L. (1999). Analysis of impact depending on mode of funding—Austria biomed papers. Research Policy, 28(5), 489–499.

Boyack, K. W. (2004). Mapping knowledge domains: Characterizing PNAS. Proceedings of the National Academy of Sciences of the United States of America, 101, 5192–5199. doi:10.1073/pnas.0307509100.

Butler, L. (2001). Revisiting bibliometric issues using new empirical data. Research Evaluation, 10(1), 59–65. (proceedings paper).

Campbell, D., Picard-Aitken, M., Cote, G., Caruso, J., Valentim, R., Edmonds, S., et al. (2010). Bibliometrics as a performance measurement tool for research evaluation: The case of research funded by the National Cancer Institute of Canada. American Journal of Evaluation, 31(1), 66–83. doi:10.1177/1098214009354774.

Capecchi, M. (2008). Response. Science, 319(5865), 900–901. (letter).

Haslam, N., Ban, L., Kaufmann, L., Loughnan, S., Peters, K., Whelan, J., et al. (2008). What makes an article influential? Predicting impact in social and personality psychology. Scientometrics, 76(1), 169–185. doi:10.1007/s11192-007-1892-8.

Heinze, T., Shapira, P., Rogers, J. D., & Senker, J. M. (2009). Organizational and institutional influences on creativity in scientific research. Research Policy, 38(4), 610–623. doi:10.1016/j.respol.2009.01.014. (article; proceedings paper).

Hornbostel, S. (2001). Third party funding of German universities. An indicator of research activity? Scientometrics, 50(3), 523–537.

Lewison, G. (1994). Publications from the European Community Biotechnology Action Program (BAP): Multinationality, acknowledgment of support, and citations. Scientometrics, 31(2), 125–142.

Lewison, G. (2009). Financial acknowledgements on the Web of Science: A new resource for bibliometric analysis. Proceedings of ISSI 2009—12th International Conference of the International Society for Scientometrics and Informetrics, 2(2), 968–969.

Lewison, G., & Dawson, G. (1998). The effect of funding on the outputs of biomedical research. Scientometrics, 41(1–2), 17–27.

Lewison, G., & Devey, M. E. (1999). Bibliometric methods for the evaluation of arthritis research. Rheumatology, 38(1), 13–20.

Lewison, G., Grant, J., & Jansen, P. (2001). International gastroenterology research: Subject areas, impact, and funding. Gut, 49(2), 295–302.

Lewison, G., & Markusova, V. (2010). The evaluation of Russian cancer research. Research Evaluation, 19(2), 129–144.

Lewison, G., & van Rooyen, S. (1999). Reviewers’ and editors’ perceptions of submitted manuscripts with different numbers of authors, addresses and funding sources. Journal of Information Science, 25(6), 509–511.

Leydesdorff, L., & Bensman, S. (2006). Classification and power laws: The logarithmic transformation. Journal of the American Society for Information Science and Technology, 57, 1470–1486.

Narin, F., Pinski, G., & Gee, H. H. (1976). Structure of the biomedical literature. Journal of the American Society for Information Science, 27(1), 25–45. (article).

Pao, M. L. (1991). On the relationship of funding and research publications. Scientometrics, 20(1), 257–281.

Rangnekar, D. (2005). Acknowledged: Analysing the bibliometric presence of the Multiple Sclerosis Society. Aslib Proceedings, 57(3), 247–260.

Rigby, J. (2011). Systematic grant and funding body acknowledgement data for publications: New dimensions and new controversies for research policy and evaluation. Research Evaluation, 20(5), 11. doi:10.3152/095820211X13164389670392.

Sandstrom, U. (2009). Research quality and diversity of funding: A model for relating research money to output of research. Scientometrics, 79(2), 341–349. doi:10.1007/s11192-009-0422-2.

Tatsioni, A., Vavva, E., & Ioannidis, J. P. A. (2010). Sources of funding for Nobel Prize-winning work: Public or private? FASEB Journal, 24(5), 1335–1339. doi:10.1096/fj.09-148239.

van der Velde, A., van Leeuwen, T., van Welie, S., & Stam, H. A. K. (2010). High quality of research supported by the Netherlands Heart Foundation (‘Nederlandse Hartstichting’). Nederlands Tijdschrift voor Geneeskunde, 154, A803.

van Leeuwen, T. N., van der Wurff, L. J., & van Raan, A. F. J. (2001). The use of combined bibliometric methods in research funding policy. Research Evaluation, 10(3), 195–201.

Acknowledgments

The author would like to thank an anonymous referee, Dr. Fred Wheeler and Professor Keith Julian for a number of useful suggestions.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 2.0 International License ( https://creativecommons.org/licenses/by/2.0 ), which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

About this article

Cite this article

Rigby, J. Looking for the impact of peer review: does count of funding acknowledgements really predict research impact?. Scientometrics 94, 57–73 (2013). https://doi.org/10.1007/s11192-012-0779-5

DOI: https://doi.org/10.1007/s11192-012-0779-5