Abstract

Platform companies use techniques of algorithmic management to control their users. Though digital marketplaces vary in their use of these techniques, few studies have asked why. This question is theoretically consequential. Economic sociology has traditionally focused on the embedded activities of market actors to explain competitive and valuation dynamics in markets. But restrictive platforms can leave little autonomy to market actors. Whether or not the analytical focus on their interactions makes sense thus depends on how restrictive the platform is, turning the question into a first-order analytical concern. The paper argues that we can explain why platforms adopt more and less restrictive architectures by focusing on the design logic that informs their construction. Platforms treat markets as search algorithms that blend software computation with human interactions. If the algorithm requires actors to follow narrow scripts of behavior, the platform should become more restrictive. This depends on the need for centralized computation, the degree to which required inputs can be standardized, and the misalignment of interests between users. The paper discusses how these criteria can be mobilized to explain the architectures of four illustrative cases.

Introduction

Digital marketplaces have become a cornerstone of the global economy. In 2023, nearly a fifth of global retail sales took place on digital platforms. This trend extends far beyond retail, encompassing wholesale commodities, freelance labor, and financial products. Sociologists therefore describe the platform model as the ‘distinguishing organizational form of the early decades of the 21st century’ (Stark & Pais, 2020) and dedicate substantial attention to the way platforms shape users’ experiences, social lives, and economic opportunities (Schor et al., 2020; Kenney & Zysman 2016; Rahman & Thelen, 2019; Kenney et al., 2021).

Under headings such as ‘surveillance capitalism’ (Zuboff, 2019) or ‘algorithmic management’ (Kellogg et al., 2020), much of this work has examined how platforms use the affordances of digital technologies to control their users (Farrell & Fourcade, 2023). Studies explore how machine learning algorithms, ranking systems, user interfaces, and other technical features regulate users’ experiences on these platforms (Orlikowski & Scott, 2014, Barrett et al., 2016, Rosenblat & Stark, 2016, Griesbach et al., 2019, Wood et al., 2019, Fourcade & Johns, 2020, Cameron, 2021). A central insight is that platforms do not just enforce compliance directly, but also rely on subtle strategies to nudge users toward desirable behavior (Shapiro, 2018, Rahman, 2021). Techniques of ‘gamification’ and ‘incentive design’ ensure that users further platforms’ objectives willingly (Kornberger et al., 2017, Stark & Pais, 2020).

Yet, platforms vary in their use of algorithmic control (Vallas & Schor, 2020). Some ‘gig platforms’ subject practically every interaction to granular oversight and restrictive constraints (Griesbach et al., 2019, Rahman, 2021), while others leave users free to transact with whomever they want in practically whatever way they want (Lingel, 2020). Even within the same industry (e.g. food delivery), companies might adopt different platform architectures and thereby give rise to radically different dynamics of control and resistance (Lei, 2021). ‘Malleability’ and ‘hybridity’ therefore have been described as constitutive properties of platforms (Schüssler et al., 2021). Yet, very little research has asked what explains this architectural variation. Why do some platforms deploy more restrictive architectures than others?

I pursue two goals in this paper. First, I want to recover the significance of this question for economic sociology more generally. Going beyond the literature on algorithmic control, I show that this question is crucial to explain the order of digital markets – the basic way markets organize competition, valuation, and cooperation (Fligstein 2002; Beckert, 2009, 2012, Koçak et al., 2014, Aspers & Darr, 2022). Second, the paper suggests that the answer to this question requires attention to the designers who put the platform architectures into place, joining a growing chorus of authors who have called for greater attention to the designers behind transformative technologies (Bailey & Barley, 2020, Christin, 2020, Torpey, 2020, Schüssler et al., 2021). I argue that we can explain why some platforms are more restrictive than others by focusing on the practical problems that platform companies need to solve within the purview of a particular design philosophy. In that way, the paper suggests that we can only begin to understand the social order of digital markets by including in our analysis the work of those who design them.

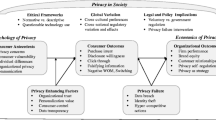

My argument will unfold in the following steps. In the second section, I survey the literature on algorithmic management and suggest that we can classify platforms’ control regimes along two dimensions. Platform architectures consist of the technical, legal, and organizational foundations that structure the market process (Lei, 2021). Concretely, this includes the rules, procedures, and interfaces that organize how actors can perceive and act in the market (communication structure) as well as the governance regimes that actively monitor and intervene in the market (control structure). Platforms can be restrictive or open on both dimensions. Restrictive means that they minimize users’ autonomy to a set of deterministic choices. Open means that users can define the terms of their transactions freely. To capture some of the resulting variation, a third section introduces four illustrative cases that mark extremes in the spectrum of possible control regimes.

Before asking why companies may choose to make communication and control structures restrictive or open, the fourth section establishes why this is a crucial question for economic sociology more generally. In the past, economic sociology has privileged the endogenous dynamics between market actors to explain how markets operate. This was a sensible choice because the institutional and legal frameworks that govern markets are neither exogenously given nor do they overdetermine the market process. Rather, market actors creatively adapt institutional rules, normative values, and social connections in their interactions (Fligstein & Dauter, 2007, Fligstein & McAdam, 2012). How these macro-structures organize the logic of competition, cooperation, and valuation thus depends on their enactment by market actors (Krippner & Alvarez, 2007).

Platform architectures, in contrast, can control the logic of the market more directly. They can define actors’ choice environment and insulate the design process from external influence. On digital platforms, the basic operation of the market therefore becomes conditional on platforms’ decisions about the definition of users’ choice environment. The more restrictive the platform architecture, the less we can understand it in terms of endogenous dynamics in the market and the more we must focus on those who put the platform architecture into place. Why designers adopt more and less restrictive architectures thus becomes a first-order analytical concern.

The fifth section begins to address this question. Drawing on specialized literature in management, operations research, economics, and information systems research, I reconstruct basic tenets of the philosophy behind platform design. I then suggest that this philosophy provides a basic rationale for why platform companies adopt restrictive architectures. They try to build markets that work like algorithms for the solution of constrained optimization problems. These algorithms are executed by a combination of human activities and software. Whether or not the platform needs to be restrictive depends on what it takes to realize the algorithm.

The sixth section identifies three relevant criteria and applies them to the four illustrative cases: the need for centralized computation, the degree to which the required information can be standardized, and the alignment of interest among the different users of the platform. Each factor influences how difficult it is to get the right input from market participants and thereby modulates whether the architecture needs to be restrictive.

The conclusion draws out implications for existing research on the platform economy and economic sociology. It suggests that research on digital marketplaces requires a sociology of social engineering that teases apart the interactions between the design logic of the platform and the endogenous dynamics among embedded market actors.

Constraints and control in the platform economy

This section reviews the literature on algorithmic control, suggests that there is substantial variation in the way platforms control their users, and that this variation can be understood along the two dimensions of platform architectures: their control and communication structures.

Research on the platform economy can be divided into two broad camps. One set of studies establishes what differentiates the platform as a novel organizational form from hierarchies, networks, and markets (Kenney & Zysman, 2016, Kornberger et al., 2017, Srnicek, 2017, Watkins & Stark, 2018, Stark & Pais, 2020). This literature has identified multiple distinctive features of platforms, but most are connected to one fundamental aspect of this organizational form. Platforms co-opt assets that are not themselves part of the platform firm: Airbnb rents apartments and Uber schedules cabs, but neither company owns these assets. Instead, the platform coordinates their use by providing a technological architecture that mediates the interactions between owners, buyers, and other stakeholder groups (‘sides’). All profits flow from this process of intermediation in one way or another (Watkins & Stark, 2018, Lei, 2021). Fundamentally, platforms are therefore socio-material and organizational arrangements that coordinate interactions between different user groups for economic gain (Orlikowski & Scott, 2014, Barrett et al., 2016, Davis & Sinha, 2021).

A second and much larger set of studies examines how specific platforms produce or ameliorate frictions in the interactions between users, companies, and workers (Griesbach et al., 2019, Vallas & Schor, 2020, Schüssler et al., 2021). The research on ‘algorithmic management’ finds that platforms both intensify and adapt traditional techniques to control the labor process (Edwards, 1979, Burawoy, 1982, Braverman, 1998), though they vary in their use of these techniques (Vallas & Schor, 2020, Schüssler et al., 2021).

To capture this variation, it is helpful to distinguish between two dimensions of platforms’ technological architectures. Platforms are feedback control systems. They organize a process of economic exchange and then adjust this process based on constant feedback (Turnbull, 2017, Fourcade & Johns, 2020, Stark & Pais, 2020, Farrell & Fourcade, 2023). This corresponds to two basic components of the platform architecture: the communication and the control structure.Footnote 1 The former consists of the interfaces, rules, and procedures that define the core flow of the platform. This core flow is a cycle in which users submit structured queries, the system processes this information, and returns a response to the user (Baldwin, 2023). The latter, the control structure, is the system that collects data and adjusts the operation of the system in a continual feedback loop (Wiener, 1965, Doyle et al., 2013). The two structures depend on each other but remain distinct. The communication structure first makes the market legible to the control structure. It establishes what data the control structure can collect and analyze. But many elements of the communication structure only work if they receive feedback from the control structure (Leveson, 2011). In sum, while the communication structure defines the fundamental properties of the market process, the control structure actively observes and manages the interactions that unfold via the communication structure.
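To make the distinction concrete, the following minimal sketch separates the two structures in code. It is an illustration under stated assumptions, not a description of any real platform: the class `Platform`, the action names, and the decline threshold are all hypothetical. The `submit` method stands in for the communication structure (a fixed choice set and a logged core flow), while `control_step` stands in for the control structure (it reads the log and feeds back into who may use the core flow).

```python
from collections import Counter
from dataclasses import dataclass, field
from typing import List, Set, Tuple


@dataclass
class Platform:
    # Communication structure: the predefined set of choices users may submit.
    allowed_actions: Set[str]
    # Control parameter (hypothetical): declines tolerated before intervention.
    max_declines: int = 2
    event_log: List[Tuple[str, str]] = field(default_factory=list)
    restricted_users: Set[str] = field(default_factory=set)

    def submit(self, user: str, action: str) -> bool:
        """Core flow: take a structured query, process it, return a response."""
        if user in self.restricted_users or action not in self.allowed_actions:
            return False  # the interface offers no way to act outside the script
        # Only actions that pass through the interface become legible as data.
        self.event_log.append((user, action))
        return True

    def control_step(self) -> None:
        """Feedback loop: analyze logged events and adjust access to the core flow."""
        declines = Counter(u for u, a in self.event_log if a == "decline")
        for user, count in declines.items():
            if count > self.max_declines:
                self.restricted_users.add(user)
```

In this toy setup, the control structure can only react to what the communication structure records, and the communication structure only stays effective because the control structure updates `restricted_users`. That mutual dependence is the point of the sketch.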

The communication and control structure enable distinct forms of algorithmic management. First of all, companies can use the communication structure to exercise traditional forms of ‘technical’ control (Möhlmann et al., 2021). Interfaces, rules, and procedures establish the market’s basic ‘ontology.’ They determine how market actors perceive the market and each other, what their options are, and how they can make use of them (Agre, 1994, Galloway, 2004, Kornberger et al., 2017). By narrowly defining possible choices in the market, the platform can force users to go through a fixed decision-tree of predefined choices and thereby limit their autonomy to a minimum. The transaction system then becomes analogous to the assembly line in a Fordist factory. Each action follows a tightly deterministic script that leaves little room to deviate from the course of action that is optimal for the platform company (Kellogg et al., 2020).

But communication structures also allow for more subtle forms of control. They inscribe the market with basic incentives because they define what behavior is reasonable and what is not, what will be rewarded and punished. The company can therefore design the communication structure to align users’ interests with their own (Rosenblat, 2018, Rahman, 2021, Raveendhran & Fast, 2021, Rahman et al., 2023). For instance, designers can ‘gamify’ the interfaces and rules of a platform. They then present the user with tightly specified and granular objectives for different activities, scoring rules, and a reward system. Constant feedback, visual cues, and financial rewards lock the user into a pleasurable competition with themselves and ensure that the user behaves in ways that further the platform’s objectives (Schüll, 2012, Ranganathan & Benson, 2020).

Another strategy involves obfuscation. By restricting what users know about how exactly they are being evaluated, it becomes easier to ensure that they do not begin to game the system (Horton et al., 2011, Rahman et al., 2023). When users do not know the rules by which they are governed, it becomes more difficult for them to relate to these rules reflexively. This makes it harder for them to find loopholes and workarounds. Obfuscation is a control strategy that limits users’ autonomy to approach the system as they see fit.

While the communication structure enables techniques of control that operate on the basic reality of the market, the control structure facilitates active intervention into the market process once trading has started (Gillespie, 2010, Chen et al., 2022, Lehdonvirta, 2022). The most basic use of the control structure once again mirrors the Taylorist factory. Companies can use it to monitor the market process and intervene when users violate rules. Because the market takes place in a software environment, platform companies can collect data about practically any aspect of user interactions on a granular level (e.g. with event logging) and deploy machine learning techniques to identify suspicious patterns of behavior automatically (Curchod et al., 2020). More subtle forms of control enlist the help of other users to enforce company policies. Platforms can set up rating systems that ask users to evaluate and discipline each other. By using the results from these rating systems to punish users who violate company policy, the platform effectively distributes active control to users, but retains centralized power over the criteria guiding behavioral control (Kornberger et al., 2017, Curchod et al., 2020, Filippas et al., 2021).

In addition to these forms of coercive control, platforms can also put governance systems into place that mediate disputes and make decisions on the basis of the recorded transaction record (Corporaal & Lehdonvirta, 2023). Here, platforms perform the role of the judiciary. They negotiate between parties in disagreement and arbitrate competing interpretations of the company’s rules and procedures (Zhang et al., 2020).

Finally, platforms use the control structure to implement and evaluate changes to the platform’s communication structure. Most platforms develop regimes of experimental testing that systematically collect data about the market process, implement changes to account for changes in users’ behavior – including strategies of resistance – and measure their impact (Horton et al., 2011, Rahman et al., 2023). Such experimental change is a form of adaptive control that relies on careful observation of deviant behavior and efforts to eradicate the incentives for such behavior on the level of the platform’s interfaces (Yeung, 2017).

In sum, then, platform architectures consist of communication and control structures that can deploy both active and passive, direct and indirect, static and adaptive forms of control.

Sketching the terrain: four extreme examples

Platforms’ architectures do not just allow for different modes of control, they can also exercise more or less of it. Both the communication and the control structure can be restrictive or open. This section introduces four illustrative examples that mark extremes in the resulting space of possible control regimes (see Fig. 1 for an illustration). I have chosen these examples because they are well documented and because they widen the perspective beyond the labor platforms of the ‘gig economy’, which have typically been the focus of studies about algorithmic control (Schor & Vallas, 2021). Going forward, the paper will return to these examples to illustrate the theoretical argument and demonstrate its explanatory power.

The Feeding America auction market (top left of Fig. 1) combines a highly restrictive communication structure with an open control structure. Researchers from the University of Chicago built this system as an exercise in mechanism design (Prendergast, 2017, 2022). The central office of Feeding America receives roughly 300 million pounds of food per year. It needs to redistribute these donations to 210 regional foodbanks in a way that minimizes waste. To solve this problem, Prendergast and his team created an auction platform where regional foodbanks can bid on items they need for their patrons. The web page lists available offerings with some limited descriptions such as the kind of food, its weight, and location. Beneath the offering, the interface provides a box where food banks can input their sealed bid. The range of acceptable values is restricted. A button allows them to submit the bid. The website comes online twice a day, once for offerings at noon and once at 4 PM. Once the bidding concludes, the winners are notified via email (Prendergast, 2017).

The communication structure for this auction is restrictive and simple: actors can only submit a single piece of information per item. They cannot communicate with each other, receive additional information, or negotiate the price. Upon closing, the auction simply assigns the food item to the highest bidder (a sealed-bid, first-price auction). No further communication takes place. By contrast, the control structure is open. If individual foodbanks feel aggrieved about the process or outcomes of the auction, they can petition an independent panel to review the auction rules. But the designers built no system to monitor patterns of transactions for manipulative behavior and they created no governance structure to actively police the market process.
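The allocation rule of a sealed-bid, first-price auction can be written down in a few lines, which shows how little the communication structure asks of participants. The sketch below is purely illustrative and is not the Feeding America implementation: the names `Bid` and `run_sealed_bid_auction`, the bid bounds, and the tie-breaking rule (the earliest submitted bid wins) are assumptions introduced for this example.

```python
from dataclasses import dataclass
from typing import Optional, Sequence, Tuple


@dataclass(frozen=True)
class Bid:
    bidder: str  # hypothetical food bank identifier
    amount: int  # the single piece of information a bidder may submit


def run_sealed_bid_auction(
    bids: Sequence[Bid], min_bid: int, max_bid: int
) -> Optional[Tuple[str, int]]:
    """Return (winner, price) for one item, or None if no valid bid was made."""
    # Restricted input range: bids outside the acceptable values are discarded.
    valid = [b for b in bids if min_bid <= b.amount <= max_bid]
    if not valid:
        return None
    # max() returns the first maximal element, so ties go to the
    # earliest submitted bid (an assumption made for this sketch).
    winner = max(valid, key=lambda b: b.amount)
    # First-price rule: the winner pays exactly what they bid.
    return winner.bidder, winner.amount
```

Note that nothing in this function inspects bidding patterns over time or sanctions bidders. In the vocabulary used here, it is a pure communication structure without a control structure.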

Uber is the classic example of a platform that is highly restrictive on both dimensions (top right). It is also the paradigmatic case of ‘surveillance capitalism’ (Thelen, 2018, Rahman & Thelen, 2019, Lei, 2021) and arguably what provided the motivation for much of the literature on the gig economy. The Uber platform aims to replace traditional taxi companies. Drivers own their own cars and use the Uber platform to identify potential customers. Passengers use the app to find taxis. To optimize the match between buyers and sellers, Uber exercises substantial levels of control over the way these transactions unfold. The interfaces are highly deterministic. They ferry both drivers and passengers through a set of either/or choices (e.g., Standard or XL cab, now or later, etc.) without room for customization. The literature has found that the intensity of control grows over the span of the labor process (Cameron & Rahman, 2022). Before drivers switch on the app, they have some room to game the system by positioning themselves at strategic locations and coordinating their app-use with other drivers. But the longer they remain online and the longer the system can track their actions, the less room they have to deviate from the intended logic of action. Drivers can choose to accept or decline a ride, but they cannot change the price, choose the route, or adjust the service level they need to deliver. The control structure is similarly restrictive. The ranking and monitoring systems nudge drivers and passengers towards highly scripted interactions and punish violations of Uber’s code of conduct automatically by either restricting drivers’ access to customers or expelling them from the platform (Rosenblat, 2018).

Turning to the opposite extreme, we find Craigslist (Lingel, 2020), a classified advertisements webpage with a global traffic of 250 million visitors per month, and over 80 million postings (bottom left).Footnote 2 In many ways, Craigslist represents the original promise of the sharing economy (Schor & Vallas, 2021), offering “an alternative to impersonal, big-media sites,” that is “restoring the human voice to the Internet, in a humane, non-commercial environment.”Footnote 3 The website is a largely unmoderated bulletin board where anyone can post offers or requests for practically any service or commodity. Hewing closely to the simple design from the 1990s, it offers a set of general categories to classify offers (e.g., apt/housing; housing swap; etc.) and is split among 700 different geographic locations worldwide. Users can choose whatever categories they think fit their offer and they can offer as much or as little information as they see fit. The interfaces identify crucial information that users should provide for items in different categories but leave it up to them whether to provide it. Most information is provided in a ‘description’ box, which puts no formal constraints on users’ inputs.

Craigslist’s control structure is similarly weak. Users do not need accounts to post or respond to offers; in other words, most users remain anonymous and the website neither tracks them nor sells information about them to third parties. Instead, users simply negotiate deals among themselves on and off the platform. While they can report fraudulent activity to customer service, the website does not moderate trades, and does not offer recourse if users fall victim to fraud. When the “Personals” section became a marketplace for illegal services, the company hesitated to intervene until the issue had become a public controversy (Lingel, 2020).

Etsy, finally, represents the last extreme: a platform with open communication but restrictive control structure (bottom right). It is an online store that virtualizes the idea of a craft fair, offering to connect private craftspeople, artisans, and owners of vintage items to potential customers.Footnote 4 In contrast to Craigslist, the communication structure is highly regulated. Yet, the website offers so many different behavioral options and avenues for customization that users experience very few restrictions on their interactions. For instance, while sellers go through a set of predefined choices to post a new offering, there are countless different categories they can choose from, and they can always opt to provide the most important information in an unstructured description box. Sellers also have access to a dashboard where they can customize their offerings, modify their storefront, and access analytic tools to monitor and manage their customers. Customers, in turn, can directly contact sellers, search freely for alternative offers, and choose to reject the proposed ranking of search results (Church & Oakley, 2018). While the interfaces are highly structured, they are complex enough to effectively give buyers and sellers nearly the same level of freedom they would have offline.Footnote 5

The control structure, in contrast, is highly restrictive. Like Uber, Etsy provides an extensive code of conduct for interactions on its website and monitors user transactions on a granular level. For instance, if sellers do not reply to customers’ queries within 24 hours, automatic penalties kick in and downgrade sellers’ offers. Etsy also relies on user ratings to collect information about product quality and then uses this information to determine sellers’ search rank. A complex governance structure helps to resolve conflicts. Formal complaints trigger an arbitration process that draws on granular information about the transaction history. If sellers or buyers refuse to accept the results of the arbitration, they can be barred from the page. Etsy also encourages ‘community work,’ and rewards artisans who curate the work of other craftspeople into ‘treasures’ that Etsy then promotes on the front page. The company even runs educational courses that teach sellers how to do this curatorial work (Aspers & Darr, 2022).
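A toy version of such a rule-based control structure helps to show how monitoring data can feed directly into market visibility. The sketch below is not Etsy’s actual ranking algorithm, which is not described in the sources used here. Only the 24-hour reply deadline and the use of ratings for search rank come from the account above; the `Seller` fields, the penalty size, and the scoring formula are hypothetical.

```python
from dataclasses import dataclass
from typing import List

REPLY_DEADLINE_HOURS = 24  # deadline mentioned in the text
LATE_REPLY_PENALTY = 1.0   # hypothetical size of the automatic downgrade


@dataclass
class Seller:
    name: str
    mean_rating: float          # average buyer rating, e.g. on a 1-5 scale
    slowest_reply_hours: float  # longest time taken to answer a buyer query


def search_score(seller: Seller) -> float:
    """Combine peer ratings with an automatic penalty for late replies."""
    score = seller.mean_rating
    if seller.slowest_reply_hours > REPLY_DEADLINE_HOURS:
        score -= LATE_REPLY_PENALTY
    return score


def rank_sellers(sellers: List[Seller]) -> List[str]:
    """Order sellers by score, best first; sorted() is stable, so ties keep input order."""
    ordered = sorted(sellers, key=search_score, reverse=True)
    return [s.name for s in ordered]
```

In this sketch a highly rated seller who answers late can fall behind a lower rated but responsive one. This is how a restrictive control structure can discipline behavior even when the communication structure itself leaves sellers free to describe and present their goods as they wish.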

Much more could be said about the way these platforms operate, but these brief sketches should illustrate the range from more to less restrictive control and communication structures. Before asking what explains this architectural variation, I want to take a step back and consider the broader question: why should we care? What is the theoretical significance of platform restrictiveness for economic sociology?

From market actors to market designers

A central project within economic sociology is to understand the ‘social order of markets’ (Beckert, 2009), to explain, that is, why markets differ on three fundamental dimensions: how they organize competition between sellers, how they ensure that actors heed contracts, and how they establish the price for products and services. Across traditions, economic sociology has pointed to social macro-structures to explain this variation (Krippner & Alvarez, 2007). Field-theoretical studies show how institutional logics impact dynamics of competition (Dobbin & Dowd, 2000, Fligstein & Dauter, 2007, Carruthers, 2015, Fligstein & McAdam, 2019). Cultural sociologists trace how normative beliefs resonate in valuation practices (Zelizer, 2018) and network researchers show how social and political networks affect both dynamics and outcomes of market competition (Aspers et al., 2020; Podolny 2001; Smith-Doerr & Powell, 2005; Beckert 2010).

The analytical focus of most explanations lies on socially embedded market actors. The interactions between market actors hold the key to understanding where social macro structures come from and how they organize the market (Beckert, 2009, 2012). The reason for this analytical focus is straightforward. None of the macro-structures are exogenously given. Neither social networks nor cultural scripts, nor political institutions shape the market as monolithic artifacts from without. Instead, they are themselves the product of dynamics in and around the market (Krippner & Alvarez, 2007). Economic sociology has paid particular attention to two types of endogenous processes. First, market actors actively lobby the political apparatus for the rules they need and struggle to impose their own ‘conception of control’ on the market (Fligstein, 2002). Political and regulatory institutions thus appear as the product of ‘regulatory conversations’ that take place in networks of politicians, regulators, and stakeholders (Black, 2002, Thiemann & Lepoutre, 2017). This is one of the crucial reasons why all markets are ‘embedded’ in society: they are governed by institutional logics that emerge from the interactions between market actors and their broader, social worlds.Footnote 6

Second, and more importantly, social macro structures do not affect the market directly because they do not exist independently from the activity of market actors. Unless market actors decide to rely on a given network connection, unless they invoke legal codes in their contracts, and unless they follow the institutional rules of their industry, these macro-structures do not shape the market process. The operation of the market is anchored in the volition of market actors because they control the terms of transactions (White, 1981). This is analytically consequential. If market actors have to invoke institutional rules, cultural values, or social networks before they can have an effect, the market actors can, to some extent, shape what that effect will be. Indeed, market actors deploy their social embeddedness strategically. They bend rules to their own purposes and creatively adapt their meaning to the situation at hand. They draw selectively on their social connections and appeal to different norms in different circumstances. Variation in the way these macro-structures operate therefore has to be explained by focusing on how market actors use them. Even when we look at the creation of markets by regulatory, legal, or political fiat (Fligstein & Mara-Drita, 1996, Goldstein & Fligstein, 2017), we must therefore consider the endogenous dynamics between market actors to understand how these frameworks shape the order of the market.

To make this argument even clearer, consider legal doctrines for a moment (Edelman et al., 1999, Pistor, 2019). The law is often treated as an external framework that is imposed on the market from without. For instance, Katharina Pistor has shown that certain market dynamics depend on sets of preexisting (and contingent) legal codes. She gives the example of approximately ‘efficient markets.’ For a market to successfully aggregate information into a single price, the hallmark of an efficient financial market, a set of legal codes first needs to provide a shared baseline about what counts as relevant information. This would suggest that we need to explain market dynamics by focusing on the activities of judges, legislative bodies, and other elements of the legal system. Yet, Pistor and other authors in the ‘legal endogeneity literature’ are careful to point out that we cannot understand legal institutions along these lines. The relevant legal codes evolve in the practice of using them. The very meaning of the law depends on the actions of those who invoke its categories (Pistor, 2019). Even here, we cannot understand how the law shapes the market without carefully attending to the activities of market actors.

In short, economic sociology has made market actors the focal point of analysis because these actors realize the social macro structures and thereby affect how these structures can shape the operation of the market (Beckert, 2009). Just as the impact of new technologies in organizations is tethered to their creative adoption by workers (Barley, 1986), so does the impact of institutions, values, and networks in markets depend on the activities of market actors who first adopt them for their own uses (Padgett & Powell, 2012).

While this approach has served the discipline well in the past, the insights from the literature on algorithmic management challenge the analytical focus on market actors for the purpose of understanding digital markets. Platform architectures differ in two ways from the social macrostructures that economic sociology has focused on so far. First, platform architectures can shape the reality of markets much more directly. While social networks, laws, institutional rules, and culture operate through the individuals who use them, platform architectures confront actors from without. The choice environment of a platform places the user in a reality they cannot fundamentally change through use. If the platform company chooses to, it can confront the user with a closed environment where the user is limited to a set of predefined choices and information. This is what Agre (1994) meant when he claimed that the supreme power of the software engineer is the ability to define the ‘ontology’ of a platform. If you can define the choice environment users encounter, you control the space of possible actions directly (Galloway 2004; Yeung 2017). Of course, many platforms still leave substantial room for market actors to act autonomously, as we have seen in the last section. But that is a choice. Platforms can effectively choose how much freedom they give to their users to shape the logic of the market (Levy, 2015), at least to the extent that this logic unfolds on the software interfaces of the platform (Newlands, 2021).

Second, proprietary platforms are not the result of democratic processes. Instead, relatively small groups of experts build them in proprietary organizations. [Footnote 7] Most platforms have to operate at scale because they rely on network effects to lower transaction and search costs for their users. To ensure low latency for millions of queries in short periods of time, platform companies tend to integrate the different components of the platforms tightly with one another (Sutherland & Jarrahi, 2018). Transaction platforms also rely on machine learning for core processes. Search and classification systems require large quantities of data, which are stored in centralized repositories. Such repositories develop what has been called ‘data gravity’ (Vergne, 2020) – they tend to attract further data – because software will build on the information streams from the machine learning algorithms. The technological architecture of a platform is therefore a relatively centralized system (Baldwin, 2023), which, in turn, will be built and maintained by relatively small groups of technical experts. As such, it is not as open to external interference as democratic processes are. Again, many platforms do elicit user input to make design decisions. But whether or not they do, and what they do with these inputs, is largely up to them (Pan, 2020, Luca & Bazerman, 2021).

In sum, within the software environment of the platform, companies can limit the influence of market actors on the operation of the market. They can directly shape actors’ choice environment via interfaces and procedures, and they can insulate the construction of the software from stakeholder influence. Actors’ ability to shape the operation of the market thus becomes conditional on the underlying platform architecture. Just as intelligent technologies in the workplace may leave little flexibility to workers (Bailey & Barley, 2020), digital markets may leave market actors little room to shape the terms of trade.

Depending on how restrictive the market architecture turns out to be, the endogenous dynamics between market actors, the platform architecture, or their mutual interplay may drive the terms of competition, cooperation, and valuation. While a platform like Craigslist allows users the freedom to do practically anything they want, companies like Uber constrain actors along all possible dimensions. If we want to understand the order of the market on Craigslist, we have to attend to users’ interactions. If we want to understand how competition, valuation, and cooperation are organized on Uber, we need to focus on the designers of the platform. If we want to understand how these dynamics work on Etsy, we should look at the interplay between users’ interactions and designers’ reactions. In other words, the degree to which platforms rely on restrictive architectures determines where the analytical focus should be for attempts to understand the basic logic of competition, cooperation, and valuation in the market. It is a first order analytical concern. Accordingly, economic sociology should begin to ask why platform companies adopt more and less restrictive platform architectures. Because the control regimes are the product of intentional design, I propose that we can make some headway here by examining the philosophy that animates the construction of these platforms. I will now turn to this question.

The market as a search algorithm

Following recent calls to include the designers in the analysis of transformative technologies (Christin, 2020, Viljoen et al., 2021, Rilinger, 2022a, b, Li, 2023), this section reconstructs central tenets of the philosophy that guides platform design. I begin by reviewing the basic problem that platforms need to solve (Rietveld et al., 2019, Chen et al., 2022, Kretschmer et al., 2022) and then recover the basic approach to this problem from market design philosophy.

Digital marketplaces facilitate transactions between two or more parties when these transactions would not happen otherwise or only at greater cost. For instance, users value Grubhub because they can more easily compare offers on price and quality than in the Yellow Pages. The platform lowers users’ search and transaction costs relative to the telephone book (Evans, 2003, Evans et al., 2011). Typically, platforms rely on same-side or cross-side network effects to achieve these benefits. As more and more users join the platform, it becomes easier to find the right transaction partner. The more drivers are on Uber, the more easily users will find cabs. And the more users are on Uber, the more easily cabs can find business (Gawer, 2011). [Footnote 8]

Contrary to economic theory, however, proprietary platforms are not just trying to lower search and transaction costs. To sustain their user base in the face of competition with other marketplaces, they must generally enable all sides to profit more than they could in alternative settings (Gawer, 2014, Huber et al., 2017, Cusumano et al., 2019). If a platform simply eradicated all search and transaction costs, it would quickly become unattractive for the side that derives benefits from these costs (Boudreau & Hagiu, 2009, Cennamo, 2021). [Footnote 9]

This means that platforms are in the business of solving coordination problems by balancing competing interests in a way that is optimal. What counts as ‘optimal’ depends on the availability of alternative marketplaces and on the underlying power dynamics between the different platform users. In some situations, platforms can get away with providing a moderate benefit to users because their outside options are limited. In others, they may have to practically eradicate all transaction costs. In some situations, they may excessively favor the interests of one side. In others, they may have to balance them more carefully. But the basic problem always remains the same: coordinating transactions between multiple sides in a way that balances the interests of the different stakeholder groups on the aggregate level.

This is true even for public platforms that help to allocate goods like health care policies, school slots, or emission certificates (Kominers et al., 2017). Such platforms must typically balance competing normative objectives. For instance, when determining the rules for an auction that assigns public school slots to children there may be tradeoffs between rules that are easy to understand, fair results, and efficiency (Budish & Kessler, 2016, Hitzig, 2020, Pathak et al., 2021).

To understand how platforms approach such fundamental balancing problems, I turn to more specialized literatures in computer science (Hanson, 2003), economics (Vulkan et al., 2013, Roth 2023; Chen et al., 2021), operations research (Bapna et al., 2022, Chen et al., 2022, Joshi et al., 2022), as well as studies about these domains of expertise (Mirowski & Nik-Khah, 2017, Möhlmann et al., 2021, Viljoen et al., 2021, Rilinger, forthcoming). These literatures develop some of the intellectual frameworks behind market design and thus give insight into the philosophy behind the construction of platforms.

The most fundamental idea of market design is that markets are algorithms that solve constrained optimization problems (Papadimitriou & Steiglitz, 1998; Hanson, 2003; Bichler, 2017; Chen et al., 2021). A combination of human interactions and software gradually identifies the optimal allocation of buyers to sellers, given a set of constraints on that solution. The constraints could, for example, reflect tradeoffs between competing goals like quantity and quality, as in: find the allocation of freelancers to clients that maximizes the number of jobs filled, subject to reputational thresholds.
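The freelancer example can be written down as a small program. The following Python sketch is an illustration under stated assumptions, not a description of any platform's actual system: the names, ratings, and thresholds are hypothetical, and the standard augmenting-path method for bipartite matching stands in for whatever solver a platform might use. It maximizes the number of jobs filled while respecting each client's reputational threshold.

```python
from typing import Dict, List, Set


def max_jobs_filled(
    ratings: Dict[str, float],
    min_rating: Dict[str, float],
) -> Dict[str, str]:
    """Return a client -> freelancer matching that fills as many jobs as possible.

    A freelancer is eligible for a client only if the freelancer's rating
    meets that client's threshold (the constraint on the solution).
    """
    # Feasible pairs: the reputational constraint prunes the search space.
    eligible: Dict[str, List[str]] = {
        client: [f for f, r in ratings.items() if r >= threshold]
        for client, threshold in min_rating.items()
    }
    assigned_to: Dict[str, str] = {}  # freelancer -> client

    def try_assign(client: str, visited: Set[str]) -> bool:
        # Augmenting-path step: take a free freelancer, or re-route the
        # client currently holding one to another eligible freelancer.
        for freelancer in eligible[client]:
            if freelancer in visited:
                continue
            visited.add(freelancer)
            holder = assigned_to.get(freelancer)
            if holder is None or try_assign(holder, visited):
                assigned_to[freelancer] = client
                return True
        return False

    for client in min_rating:
        try_assign(client, set())

    return {client: freelancer for freelancer, client in assigned_to.items()}


# Hypothetical data for illustration only.
ratings = {"ana": 4.9, "ben": 4.2, "cy": 3.8}
min_rating = {"client_1": 4.0, "client_2": 4.8, "client_3": 3.5}
matching = max_jobs_filled(ratings, min_rating)
```

In this toy instance all three jobs are filled. If client_1 simply hired the top-rated freelancer, client_2 would be left without any eligible candidate, which is the kind of interdependence the constrained formulation is meant to resolve.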

The idea of the market as a kind of search algorithm traces back to the 19th century, when political economists first likened the economy to an information processor (Bockman, 2011). Austrian philosophers, such as Ludwig von Mises and Friedrich Hayek, famously built on this idea to develop arguments against centralized planning (Hayek, 1945). They suggested that markets assign society’s ‘factors of production’ (e.g. resources, machines, etc.) to their most efficient use relative to consumers’ ultimate wants. The distributed transactions among companies, guided by nothing but price signals, implicitly realize a search process that identifies the best assignment of resources to production processes. Writing before the invention of computers, Hayek and Mises suggested that a centralized planner would be unable to compute the solution to this complex optimization problem (Hayek, 1945, Cockshott & Cottrell, 1993). Only the invisible hand, that quasi-theological entity, could be trusted to deliver the result.

Throughout the 20th century, market design emerged as gaps in this argument became visible. Formal mathematical models suggested that the algorithm of the perfect market was not as far beyond human comprehension as the Austrians had thought, while decades of research in information and behavioral economics established that most real markets deviate substantially from that ideal. If real markets did not work as expected, but the models revealed how the markets could do what they were meant to, maybe, so the early market designers thought, they could turn to institutional design to enforce the assumptions of the ideal. This was a central insight of auction design (Vickrey, 1962) and it still resonates with much of the writing on platforms by economists as well as the professed ideology of many platform companies. The inventor of eBay, for example, supposedly started the company because he believed that the information technology of the internet could produce the institutional foundation for a 'perfect market' (Lehdonvirta, 2022, Rilinger, 2022a, b). Market design, then, began as a project to correct the discrepancies in the institutional structure of real markets that preclude them from realizing the optimal search algorithm they were meant to realize.

However, the invention and development of computers brought forth an even more consequential insight (Mirowski & Nik-Khah, 2017, Nik-Khah & Mirowski, 2019), fully inverting Hayek’s original argument. Once it became normal to think about markets as information processors that execute search algorithms, market designers realized that conventional markets presented but a flawed algorithm. Even under ideal conditions, systems of bilateral transactions can only solve a limited set of problems and do so only very slowly. As individuals transact with each other, slowly searching for cheap offers and willing buyers, the market process very gradually identifies an allocation of homogenous goods that is Pareto efficient. But, as we saw earlier, platforms are not trying to identify such an optimal allocation of homogenous goods. Instead, they have to strike a balance between competing interests and often deal with complex tradeoffs. The optimization problems they are trying to solve are often far more complex than the basic problem envisioned in micro-economic theory. Even a perfect system of distributed negotiations can no longer ‘find’ the globally optimal solution to most of these problems (Velupillai & Zambelli, 2013).

For example, consider a market that is supposed to allocate spectrum licenses among companies in a way that satisfies companies’ preferences optimally. A spectrum license allows a company to use a part of the broadband spectrum for electronic transmissions in a geographic area. Because companies may want to use adjacent bands of the spectrum, such licenses are commodities with complementarities. That is, the value of a license depends on the other licenses the company holds (Bichler & Goeree, 2017). Optimal bundles of licenses therefore differ by company, and because the composition of each company’s bundle has an impact on the possible combinations that other companies can choose, identifying the globally ideal allocation of bundles is very complicated – it is an ‘NP-hard’ combinatorial problem (Kominers & Teytelboym, 2020). Distributed, bilateral trading is not only unable to sort through all options in reasonable time; such bilateral trades would also never converge on the required equilibrium. Even under ideal conditions, a standard market of bilateral negotiations would produce market failures (Velupillai & Zambelli, 2013). This is not just true for spectrum licenses, but for many problems that platforms tackle. For instance, Uber must find the cheapest allocation of drivers to passengers, given current location, destination, traffic conditions, onward journeys, distribution of other drivers and so on. A system of bilateral transactions in a hypothetically centralized market for ‘cab options’ would never identify the optimal assignment of taxis to passengers.
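A toy version of the spectrum problem shows why complementarities call for a joint search over bundles. The Python sketch below is only an illustration: the two licenses, the two companies, and their valuation functions are hypothetical, and exhaustive enumeration is feasible only because the instance is tiny. The number of cases grows as the number of bidders raised to the number of licenses, which hints at why realistic instances are computationally hard.

```python
from itertools import product
from typing import Callable, Dict, FrozenSet, List, Tuple

Bundle = FrozenSet[str]
Valuation = Callable[[Bundle], float]


def best_allocation(
    licenses: List[str],
    valuations: Dict[str, Valuation],
) -> Tuple[float, Dict[str, Bundle]]:
    """Enumerate every assignment of licenses to bidders and keep the best one."""
    bidders = list(valuations)
    best_value = float("-inf")
    best_bundles: Dict[str, Bundle] = {}
    # Each license goes to exactly one bidder:
    # len(bidders) ** len(licenses) candidate allocations.
    for owners in product(bidders, repeat=len(licenses)):
        bundles = {
            bidder: frozenset(
                lic for lic, owner in zip(licenses, owners) if owner == bidder
            )
            for bidder in bidders
        }
        total = sum(valuations[b](bundles[b]) for b in bidders)
        if total > best_value:
            best_value, best_bundles = total, bundles
    return best_value, best_bundles


def value_a(bundle: Bundle) -> float:
    # Hypothetical company A: adjacent bands are worth far more together.
    return 10.0 if len(bundle) == 2 else 2.0 * len(bundle)


def value_b(bundle: Bundle) -> float:
    # Hypothetical company B: purely additive values, no complementarity.
    return 4.0 * len(bundle)


total_value, allocation = best_allocation(
    ["band_1", "band_2"], {"A": value_a, "B": value_b}
)
```

Here the joint search awards both bands to company A for a total value of 10. Selling each band separately to whoever values it most on its own would hand both to company B for a total of 8, because A's stand-alone value of 2 per band hides the value of the bundle.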

Yet, computer science and operations research offer suites of tools to solve these kinds of optimization problems. The resulting algorithms are very fast and efficient, but they have very little to do with how we imagine conventional markets. Techniques like simulated annealing or genetic matching are specialized mathematical procedures that cannot be translated into schedules of transactions. Yet, they work better for certain types of optimization problems than any system of distributed, interactive processes (Milgrom, 2017). Accordingly, market designers develop custom-tailored algorithms, implemented in software, that solve the allocation problem of the platform. Market design does, in other words, gradually turn the analogy between markets and algorithms into operational reality. The market is an algorithm that solves an optimization problem (Nik-Khah & Mirowski, 2019).

However, platforms cannot implement the search algorithm in software alone. The appeal of markets is that they aggregate ‘private’ information: contextual information that only market participants have. For Uber, this would be parameters such as users’ willingness to pay, desired service, the level of prices that can secure drivers’ participation, and so on (Jia et al., 2017). Parts of the algorithm cannot be executed unless the platform first elicits this information from users. Platforms therefore build interfaces, rules, and procedures that generate a market process to unearth this distributed information and feed it into the software that identifies the globally optimal solution to the optimization problem at hand. Together, these computer-human hybrids then realize the search algorithm that solves the platform’s problem.

In short, the platform market is a software environment that leads market actors to realize subroutines of a larger algorithm, transforming the idea of the market as algorithm from an analogy to operational reality (Nik-Khah & Mirowski, 2019). This algorithm links the actions of individuals on the platform to the optimal balance the company needs to strike between competing interests in the final allocation of trades. This has a simple implication. The structure of the search algorithm – specifically the balance between human inputs and software – determines how restrictive the platform will have to be: the more specific the required inputs to the software, the more restrictive the control regime (Kyprianou, 2018, Chen et al., 2021). I will now identify three basic criteria that shape this balance.

The criteria to explain variation in platforms’ control regimes

This section draws on the market design literature to identify three criteria shaping the choice between more and less restrictive platform architectures. These criteria capture whether the platform’s search algorithm requires users to follow a narrow behavioral script or whether the algorithm requires unstructured interactions between individuals. To be clear: this argument captures what would be optimal given the intellectual background of market design. As I will outline in the conclusion, empirical research still needs to investigate to what extent this philosophy shapes the actual design work in the platform company, which will be inflected by a variety of other forms of expertise as well as organizational and political factors. Yet, even the ideal-typical investigation of this paper yields a framework that can explain variation we observe in platform architectures.

The first criterion is the need for centralized computation by specialized software (Sutherland & Jarrahi, 2018). The last section has established that systems of bilateral transactions can, under ideal conditions, work like a slow search algorithm that identifies an optimal balance between supply and demand. If platforms solve matching problems that deviate from the logic of this algorithm, they will increasingly have to rely on software algorithms to identify the correct solution (Kominers & Teytelboym, 2020). We already saw that the combinatorial problem posed by the optimal allocation of spectrum licenses is very complex. A specialized software suite must collect information about users’ preferences and then traverse the vast space of potential license bundles to identify the optimal allocation. Because of the complex interdependencies between these bundles, the software must include all or most information about companies’ preferences in its computation. Accordingly, the solution must be identified by a centralized process.

There are a variety of reasons why platforms might need to rely on centralized computation. Whenever the market violates fundamental assumptions of the microeconomic ideal, interdependencies between user preferences or commodities may show up. As soon as such interdependencies affect the optimal result, centralized assessment may become necessary. A centralized entity can collect all relevant information in a single location and then sort through the relevant interdependencies. The same is true when there are too many actors in the system to allow individuals to take stock of all potential trades in the available time (Stanton et al., 2019, Shi, 2023), or when the platform has to identify optimal results very fast and tolerances for errors are low (Kominers & Teytelboym, 2020, Roth, 2023). The external environment can also play an important role here. If the platform faces competitors with similar business models, it needs to become more efficient and tolerances for deviation from the optimal solution diminish (King, 1983). Thus, the need for centralized computing grows as the environment becomes more competitive. In short, centralized computation becomes necessary when the platform has to solve problems with complex constraints very accurately and quickly.

In turn, the more the platform needs to rely on centralized computing to implement the search algorithm, the more restrictive the platform architecture should become. This closely tracks the basic insight that centralized decision making in organizations is more consistent than decentralized decision making, but that it can accommodate far less variance in local information (March & Simon, 1958). The more centralized software has to contribute to identifying the solution to the platform’s balancing problem, the simpler the world of the platform has to become – leading to more restrictive architectures. A user’s choice environment needs to reflect the need for ever more specific inputs that the center can process.

To make this more concrete, consider the two illustrative examples Craigslist and Uber from above (cf. Fig. 1). The two platforms are characterized by very open and very restrictive structures respectively. Craigslist makes money by selling classified ads to sellers in a few select categories (e.g., used cars, jobs, etc.). The large number of buyers and sellers ensures that this is an attractive offer to potential advertisers. Buyers and sellers find Craigslist attractive because they can use it for free and they are typically looking to quickly buy or sell in a narrow geographic territory. To keep these users happy, Craigslist needs to optimize the intersection of supply and demand in these geographic regions – buyers and sellers need to find each other based on their respective price points. Because the market is geographically local, idiosyncratic, and slow, there are never too many potential matches that need to be considered. Even just a rudimentary search bar with keyword search helps buyers and sellers to find each other and negotiate a price that works for both parties. A simple system of distributed transactions – similar to the idealized market of micro-economic theory – is sufficient to optimize the schedule of matches relative to alternative arrangements (flea markets, classified ads, etc.). For this system to work, very little information needs to be collected and processed about the users and their transactions. Accordingly, Craigslist can impose little structure on the system and can let the market unfold without much interference (Kyprianou, 2018).

Uber marks the opposite extreme. To be cheaper than taxis taking a random walk through the city, the company must solve a very complex optimization problem very quickly and iteratively: find the regionally optimal allocation of drivers to passengers that considers geographic proximity, desired destination, traffic, level of service, onward journeys, and thousands of other parameters (Jia et al., 2017, Wang et al., 2018, Kominers & Teytelboym, 2020). Only a centralized software algorithm can compute a reasonable approximation to this matching problem. This software algorithm needs to collect a vast set of real time information from the different users, compute solutions for all requests, and then update the process as time moves on. To collect this information, human actors need to follow a narrow behavioral logic that leaves very little room for creative deviation from the mechanistic requirements the platform lays out. Competition thus turns into an analytical abstraction – an ‘as-if.’ The algorithm simply figures out the solution, choosing the most efficient driver for a given customer at a given point in time and at a specific price. The drivers and passengers then simply do what the app tells them to do. For an illustration of this argument, see the first step of Fig. 2. [Footnote 10]
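The contrast between decentralized choice and centralized computation can be illustrated with a deliberately small dispatch problem. The Python sketch below is not Uber's dispatch algorithm. The pickup times are hypothetical, only a single cost dimension is considered, and brute-force enumeration stands in for the specialized solvers a real system would need. It compares passengers who each grab the nearest free driver in turn with a central process that evaluates all assignments jointly.

```python
from itertools import permutations
from typing import Dict, Set, Tuple

# cost[passenger][driver] = pickup time in minutes (hypothetical numbers)
Cost = Dict[str, Dict[str, float]]


def sequential_choice(cost: Cost) -> Tuple[float, Dict[str, str]]:
    """Each passenger, in arrival order, takes the nearest driver still free."""
    taken: Set[str] = set()
    match: Dict[str, str] = {}
    total = 0.0
    for passenger, options in cost.items():
        driver = min(
            (d for d in options if d not in taken),
            key=lambda d: options[d],
        )
        taken.add(driver)
        match[passenger] = driver
        total += options[driver]
    return total, match


def central_assignment(cost: Cost) -> Tuple[float, Dict[str, str]]:
    """Evaluate every passenger-driver assignment and keep the cheapest."""
    passengers = list(cost)
    drivers = list(next(iter(cost.values())))
    best_total = float("inf")
    best_match: Dict[str, str] = {}
    for perm in permutations(drivers, len(passengers)):
        total = sum(cost[p][d] for p, d in zip(passengers, perm))
        if total < best_total:
            best_total, best_match = total, dict(zip(passengers, perm))
    return best_total, best_match


cost = {
    "p1": {"d1": 1.0, "d2": 3.0},
    "p2": {"d1": 2.0, "d2": 10.0},
}
sequential_total, sequential_match = sequential_choice(cost)
central_total, central_match = central_assignment(cost)
```

In this instance the sequential process costs 11 minutes in total, because the first passenger takes the driver the second passenger needs, while the centralized assignment costs 5. The centralized result is only available if every participant reports a location in a form the software can read and then accepts the driver assigned to them.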

While the need for centralized computation demands a restrictive platform architecture, two other factors tell us whether restrictions should primarily unfold via the communication structure, via the control structure, or both (see step 2 in Fig. 2). In what follows, I thus consider cases where the required algorithm deviates far enough from bilateral systems of transactions to require some measure of centralized computation (Uber, Etsy, and Feeding America).

A central authority can only control a complex social process from its ‘synoptic position’ if the social process is legible to it (Scott, 1998). Because centralized authorities need to rely on abstraction to comprehend a relatively more complex world around them (March & Simon, 1958), they tend to read the social process in terms of categories that allow aggregation and quantitative analysis. If the social process does not already conform to these categories the central authority must first force the social process to conform to them (Scott, 1998, Espeland & Stevens, 2008, Fourcade & Healy, 2016). Platforms therefore need to standardize and restrict the interfaces of the communication structures as much as possible.

However, there is a clear limit to standardization. Designers can only standardize interfaces if they know in advance what the structure and range of desired inputs will be. For some optimization problems, this is easy to infer. For instance, the designers of the Feeding America markets simply needed all foodbanks to submit their preference for a given food item on a standardized scale (Prendergast, 2022). This was sufficient to calculate a global preference ranking and assign food items to those who needed them most. Uber, likewise, solves a problem that has very specific input requirements. To allocate cabs to passengers in a way that minimizes the cost to the system and reflects users’ price sensitivity, they need standardized information such as location, service preference, traffic, and so on (Jia et al., 2017, Wang et al., 2018).
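What a fully standardized input looks like can be sketched in a few lines of Python. The five-point scale, the food bank names, and the tie-breaking rule below are assumptions made for illustration; they do not describe the actual Feeding America mechanism. The point is only that the interface accepts nothing except values the central computation can process.

```python
from typing import Dict

SCALE = range(1, 6)  # hypothetical five-point preference scale (1 to 5)


def submit(preferences: Dict[str, int], food_bank: str, score: object) -> None:
    """Record a preference, rejecting anything that is not on the fixed scale."""
    if isinstance(score, bool) or not isinstance(score, int) or score not in SCALE:
        raise ValueError(f"score must be an integer from 1 to 5, got {score!r}")
    preferences[food_bank] = score


def assign_item(preferences: Dict[str, int]) -> str:
    """Give the item to the food bank with the highest score.

    Ties are broken alphabetically so that the result is deterministic;
    this rule is an arbitrary choice for the sketch.
    """
    return min(preferences, key=lambda bank: (-preferences[bank], bank))


preferences: Dict[str, int] = {}
submit(preferences, "food_bank_a", 2)
submit(preferences, "food_bank_b", 5)
submit(preferences, "food_bank_c", 4)
winner = assign_item(preferences)
```

A free-text message such as "we urgently need pasta" would raise a `ValueError` in this sketch. Contextual information of that kind has no channel into the computation, which is what a restrictive communication structure amounts to.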

But platforms often need to solve problems whose precise parameters cannot be known ex ante. For example, if a platform has a very broad scope and offers heterogeneous goods across a variety of categories (Hasker & Sickles, 2010), it is simply not clear which information will be required to identify the optimal schedule of matches in each category. Sellers on Etsy can offer highly idiosyncratic items. Which of their characteristics will be most relevant for buyers is not clear before the products enter the marketplace. The platform therefore has to give sellers and buyers direct lines of communication for unstructured exchanges, effectively allowing the relevant information to surface organically (Tiwana, 2015, Cennamo, 2021, Rietveld & Schilling, 2021).

If platforms do not know the range of possible inputs, but understand the structure of the required information, they can control users’ inputs via incentive design. Etsy might not know exactly which aspects of a product are most relevant to consumers. But they can design their rating system to incentivize users to report whether that information was provided (Filippas et al., 2021). This can be more difficult than it might appear at first. For instance, research has shown that generalized rules of reciprocity can lead users to leave positive reviews if they receive one in turn. The review may therefore no longer reflect information about the product, but about the social relation between buyers and sellers. Platforms employ various tricks to suppress such social mechanisms and get users to submit only information pertinent to future exchanges (Vulkan et al., 2013).

Digital labor markets offer another example. These platforms do not know ex ante all criteria that clients will use to look for a freelancer and vice versa. To generate better matches, the company can give freelancers the option to bid for a higher ranking in the client’s search results. By charging an advertisement fee to freelancers, the platform creates an incentive for freelancers to identify clients who are most likely to hire them. Rather than simply suggesting an optimal match, the platform draws on the distributed logic of traditional markets and combines it with centralized computation (Horton, 2019, Barach et al., 2020). More indirect and subtle forms of behavioral control can thus be the result of difficulties in standardizing the inputs required for centralized computation. If, in contrast, the platform has no way to know what kind of information will be most important for the optimization problem at hand, it must adopt an open communication structure.

In sum, the second criterion is the degree to which required inputs can be standardized. In a strange echo of socialist economists speaking during the socialist calculation debate (Hayek, 1940, Lange, 1967), transaction platforms aspire to plan the logic of the market. They collect information from users and then process this information to facilitate matches that meet global criteria of optimality. When they know exactly what information they need, they can simply confront the user with the deterministic choices of a perfectly rigid communication structure. But when they cannot anticipate the structure of the required information, they have to rely on market interactions between users to surface this information for the software (Boudreau, 2010, Stanton et al., 2019, Chen et al., 2022). In lieu of full control, they might still try to nudge market actors to supply information required for the larger search algorithm (see step 2 in Fig. 2).

The third factor explains why platforms might deploy restrictive control structures (see step 3 in Fig. 2). Market design philosophy suggests that platforms will adopt restrictive control structures when users have incentives to deviate from the intended logic of action and when the platform is unable to simply enforce compliance via its communication structure. As outlined above, platforms need to balance the interests between different user groups and between these user groups and their own goals. To the extent that users’ interests are opposed to each other or to the platform’s, these users might try to game the system and extract bandit profits. This is a constant danger for platform companies. As ample research has shown, even very restrictive communication structures may leave users room to violate the logic of action the platform is trying to realize (Cameron & Rahman, 2022). Because this would derail the intended logic of the market, the platform should adopt behavioral controls to restrict these deviations (Roth & Wilson, 2019).

Consider the two cases on the right-hand side of Fig. 1. Uber tries to control not just interactions on the platform, but also face-to-face interactions offline. In the past, drivers have coordinated offline to trigger surge pricing. They drove to strategic locations with sudden surges in demand (e.g., airports) and then collectively switched off their apps to trigger surge pricing. Once the prices started to spike, they came back online and reaped the high prices collectively (Möhlmann & Zalmanson, 2017). This behavior was undesirable to Uber not only because it distorted the balance of supply and demand, but because it effectively assigned disproportionate profits to collusive drivers. This made the platform less attractive to anyone outside the cartel. The problem occurred because Uber’s interests were misaligned with those of drivers. It had to balance drivers’ interests in higher wages with passengers’ desire for lower fares and its own interest in a percentage of drivers’ earnings. Drivers therefore perceived the deck as stacked against them and found it rational to game the system if possible. To avoid such gaming, the platform needs to resort to active measures of control: it tracks as many of drivers’ interactions as possible and punishes deviant behavior aggressively (Cameron & Rahman, 2022).

A parallel misalignment exists on Etsy. The platform primarily profits by charging merchants listing and transaction fees. In addition, it requires merchants to provide information that aids the search process and potentially forces them to help their direct competitors (Aspers & Darr, 2022). While the merchants want to maximize individual market share, Etsy wants consumers to find the best match to maximize total revenues on the platform. Yet, the control problem is slightly different from Uber’s. While Uber tries to control a process that unfolds outside the platform itself, Etsy needs to compensate for its open communication structure. Because it leaves users substantial freedom to interact as they please and needs to solve a complicated optimization problem (Stanton et al., 2019), it must ensure that users do not abuse this freedom. Unlike centralized planners of old, the company can rely on machine learning techniques to facilitate this type of control. It can collect unstructured text, information from beyond the platform, and divergent sources of data to arrive at conclusions about deviant behavior (Yeung, 2017). Analytic technologies of the digital age can thus compensate for lack of standardization. They preserve the quantitative legibility of behavior in noisy contexts and allow the company to actively intervene if necessary. Conversely, Etsy’s dispute resolution systems reflect the need to deal with the misalignments between buyers and sellers themselves. Feeding America’s food donation system marks the opposite extreme (left side of Fig. 1). The auction market is part of a large NGO whose branches are committed to the goal of equitable and efficient distribution of donations. Because there is no real misalignment between the interests of the different branches and the central office, the platform can forgo an active control regime. The highly restrictive communication structure prevents inadvertent violations of the required market logic.

To sum up the argument of the last two sections, market design conceptualizes digital markets as algorithms that solve constrained optimization problems. Depending on the nature of the problem, these algorithms may deviate from the logic behind systems of distributed transactions. As interdependencies between individual choices grow and centralized software begins to take over from human interactions, the platform has to become more restrictive to ensure that the inputs to the software are correct. If designers can anticipate the structure of the required information, they can implement restrictive communication structures to ensure that actors only input the range of acceptable values to the software. Incentive design and ‘nudging’ become important when platforms hit limits of standardization. The less the platform can predict what relevant information looks like, the more it needs to relax the communication structures. Depending on the alignment of interests between the different sides, the platform can either compensate with restrictive control structures or relax its governance regimes. The three factors thus offer a design rationale for the basic choice between more or less restrictive forms of algorithmic management. Table 1 summarizes these three criteria and the main points developed in this section. Figure 2 illustrates how they are related in platforms’ choice of control regimes. I will now discuss what implications this argument has for the literature on algorithmic management and economic sociology more generally.

Conclusion

In this paper, I have joined scholars who call for greater attention to the values, goals, and philosophies of those who design the technologies that mediate our social lives (Kornberger et al., 2017; Bailey & Barley, 2020; Christin, 2020; Kellogg et al., 2020; Schüssler et al., 2021). These authors observe that digital technologies exercise a growing influence over social interactions. As designers’ intent begins to imprint social dynamics via these technologies, we can no longer bracket them from our analysis (Bailey et al., 2022). The first part of this paper has suggested that a similar argument applies to economic sociology. Because platform architectures determine how much freedom market actors have to act, research on digital markets should question the traditional focus on these actors (Krippner & Alvarez, 2007; Beckert, 2009). Whether or not this focus is appropriate, I have argued, depends on how restrictive the communication and control structures of the platform are. This insight has several implications for research on the platform economy.

First, the paper raises questions about the scope conditions for theories about competition, cooperation, and valuation in digital markets. From research on social networks to work on the regulatory state, economic sociology has put market actors at the center of analysis. This tendency has carried through to research on digital marketplaces (Diekmann et al., 2014; Diekmann & Przepiorka, 2019). Yet, we need to reconsider how generalizable the resulting findings are if endogenous dynamics between market actors can no longer be considered the obvious starting point for research about digital markets. We should ask under which architectural frameworks empirical findings hold true and how to account for cases where they do not. For instance, sociological research has shown that generalized norms of reciprocity guide how market participants rate each other in platforms' review systems (Diekmann et al. 2014). Yet, studies in market design show that the structure of the reputation mechanism determines which social norms actors come to invoke in their decisions (Vulkan, Roth et al. 2013). Similarly, we might wonder whether findings from other contexts extend to digital marketplaces. To give a concrete example: research on labor markets has shown that task structures associated with jobs can drive inequality in payment (Wilmers 2020). But what task structures look like in online labor markets and whether they can influence wage negotiations may well depend on the architecture of the underlying matching system. Online labor markets may thus moderate or even substitute for social mechanisms that explain inequality in other contexts. In short, the paper suggests that research on digital marketplaces should include considerations about the underlying platform architecture in the specification of scope conditions. Conversely, future research should explore how these architectures influence competitive dynamics in cases where available theories do not apply.

Second, the paper offers a framework to explain variation in platform architectures themselves. The literature on algorithmic management (Griesbach et al., 2019; Burrell & Fourcade 2020; Kellogg et al., 2020; Stark & Pais, 2020) has long asked why platforms adopt different kinds of control regimes (Schüssler et al. 2021). The paper suggests that we should approach this question from the perspective of the platform companies. They are pursuing projects of centralized planning in which they try to coordinate the interactions between market actors to strike an optimal balance on the aggregate level. The intellectual framework of market design suggests that they will approach this problem by building infrastructures that guide market actors to realize the logic of an appropriate search algorithm. A platform's control regime ideally reflects the input requirements for the algorithm that can deliver the best schedule of transactions on the platform. This argument implies that designers' imagination of the market is not innocent. Analogies and metaphors can dictate architectural choices with real implications for workers and other users of these platforms. As other scholars have also observed (Rahman et al., 2024), we may better understand user experiences on these platforms by attending to the guiding ideas of the scientific disciplines that inform their construction.

The paper identifies a basic rationale for design decisions, but it does not examine how this rationale comes to be translated into reality. It ignores, in particular, the organizational dynamics that shape real design work in platform companies. A burgeoning literature on ‘critical algorithm studies’ explores such design work (Kelkar, 2017; Shestakofsky, 2017; Seaver, 2022), but only a few studies have tried to understand the relation between designers’ activities and market logics (Li, 2023). Software infrastructures are complex and path-dependent creations that are often opaque to their own creators (Ziewitz, 2016). Creating the affordances of platforms for users is not typically a simple matter of deploying technologies, but a process that combines technologies and risky relationship work by the platform organization (Karunakaran, 2022). Design work occurs in a piecemeal and experimental fashion (Rahman et al., 2023) and is often driven not by profit motives alone, but also by subjective preferences of designers (Seaver, 2022), occupational conflicts between different expert groups (Rilinger, 2022a, b), and organizational (Rilinger, 2022a, b) and political (Muniesa, 2011) dynamics. How do these dynamics influence what architecture a platform is going to adopt? How do they influence what market dynamics will unfold on the platform? In raising these questions, the paper points to the need for an organizational sociology that asks what enables platform designers to realize the markets they envision or prevents them from doing so. This research would return economic sociology to its roots in organizational theory (Nedzhvetskaya & Fligstein, 2020).

Once we realize that organizational, political, and strategic dynamics can affect platforms' ability to coordinate the market as they see fit, another implication for the literature on the platform economy looms. Experiences of precarity, dependence, and exploitation may not simply reflect the rational calculus of surveillance capitalism (Zuboff, 2019). Instead, these experiences may result from problems that emerge when companies try to substitute the invisible hand of the market with their own and set out to plan the interactions of formally independent market actors (Rilinger, forthcoming). To truly understand why the experiences of workers on platforms vary, we may therefore need to study how the technocratic vision of the market as search algorithm collides with the organizational and political reality of design work in companies that try to survive in an uncertain environment.

To conclude, this paper suggests that the literature about the platform economy should turn to the designers of platform architectures. Because platform architectures condition endogenous dynamics in markets, this research promises to clarify the scope conditions of existing theories, reveal new social mechanisms behind the operation of digital markets, and explain why experiences of control and resistance differ between platforms. Given that so much of our economic life is now mediated by platforms whose markets transcend the boundaries of nation states and are not beholden to their constituents, I believe that this is a project worthy of sociological attention.

Notes

https://www.craigslist.org, last visited on 02/27/2024.

https://www.craigslist.org/about/mission_and_history, last accessed 03/05/2024.

http://www.etsy.com, last accessed 03/05/2024. Since 2013, the site has allowed mass-manufactured goods, but it continues to reserve certain categories (e.g., vintage) for its traditional user base.

Cf. https://www.wikihow.com/List-an-Item-in-Your-Etsy-Shop, last accessed 03/05/2024.

Greta Krippner has pointed out that the embeddedness literature comprises two distinct theoretical projects: one that seeks to embed markets into broader social systems and one that seeks to understand the relational foundations of economic action (Krippner & Alvarez, 2007). Though these explanatory projects are distinct, they both invoke the effects of social macro-structures that are not presumed to be given exogenously (Beckert, 2012).

Many public platform organizations have moved to privatized and centralized organizational models in the last thirty years. Examples are stock exchanges, credit card syndicates, and even more marginal commodity markets such as Flora Holland, a Dutch flower exchange (Baldwin, 2023).

If the network effects are sufficiently powerful, they can dominate the larger market environment and effectively displace alternative marketplaces. At this point, a platform becomes the only game in town and can dictate the terms of trade. This is the ‘winner take all logic’ of platform services.

This explains the collapse of the very first platforms in the early 2000s.

https://qz.com/1367800/ubernomics-is-ubers-semi-secret-internal-economics-department/, last accessed 09/30/2022.

References

Agre, P. E. (1994). Surveillance and capture: Two models of privacy. The Information Society, 10(2), 101–127.

Aspers, P., Bengtsson, P., & Dobeson, A. (2020). Market fashioning. Theory and Society, 49(3), 417–438.

Aspers, P., & Darr, A. (2022). The social infrastructure of online marketplaces: Trade, work and the interplay of decided and emergent orders. The British Journal of Sociology, 73(4), 822–838. https://doi.org/10.1111/1468-4446.12965

Bailey, D. E., & Barley, S. R. (2020). Beyond design and use: How scholars should study intelligent technologies. Information and Organization, 30(2), 100286.

Bailey, D. E., Faraj, S., Hinds, P. J., Leonardi, P. M., & von Krogh, G. (2022). We are all theorists of technology now: A relational perspective on emerging technology and organizing. Organization Science, 33(1), 1–18.

Baldwin, C. (2023). Capturing value in digital exchange platforms. Harvard Business School.

Bapna, R., Day, B., Ketter, W., & Bichler, M. (2022). Introduction to the information systems research special section on market design and analytics. Information Systems Research, 33(4), 1126–1129.

Barach, M. A., Golden, J. M., & Horton, J. J. (2020). Steering in online markets: The role of platform incentives and credibility. Management Science, 66(9), 4047–4070.

Barley, S. R. (1986). Technology as an occasion for structuring: Evidence from observations of CT Scanners and the social order of radiology departments. Administrative Science Quarterly, 31(1), 78–108.

Barrett, M., Oborn, E., & Orlikowski, W. (2016). Creating value in online communities: The sociomaterial configuring of strategy, platform, and stakeholder engagement. Information Systems Research, 27(4), 704–723.

Beckert, J. (2009). The social order of markets. Theory and Society, 38(3), 245–269.

Beckert, J. (2010). How do fields change? The interrelations of institutions, networks, and cognition in the dynamics of markets. Organization Studies, 31(5), 605–627.

Beckert, J. (2012). The social order of markets approach: A reply to Kurtuluş Gemici. Theory and Society, 41(1), 119–125.

Bichler, M. (2017). Market design: A linear programming approach to auctions and matching. Cambridge University Press.

Bichler, M., & Goeree, J. K. (2017). Frontiers in spectrum auction design. International Journal of Industrial Organization, 50, 372–391.

Black, J. (2002). Regulatory conversations. Journal of Law and Society, 29(1), 163–196.

Bockman, J. (2011). Markets in the name of socialism. Stanford University Press.

Boudreau, K. (2010). Open platform strategies and innovation: Granting access vs. devolving control. Management Science, 56(10), 1849–1872.

Boudreau, K. J., & Hagiu, A. (2009). Platform rules: Multi-sided platforms as regulators. Platforms, Markets and Innovation, 1, 163–191.

Braverman, H. (1998). Labor and monopoly capital: The degradation of work in the twentieth century. NYU Press.

Budish, E., & Kessler, J. B. (2016). Can agents report their types? An experiment that changed the course allocation mechanism at Wharton. Chicago Booth Research Paper No. 15-08.

Burawoy, M. (1982). Manufacturing consent: Changes in the labor process under monopoly capitalism. University of Chicago Press.