Abstract

We construct a distraction measure based on extreme industry returns to gauge whether analysts’ attention is away from certain stocks under coverage. We find that temporarily distracted analysts make less accurate forecasts, revise forecasts less frequently, and publish less informative forecast revisions, relative to undistracted analysts. Further, at the firm level, analyst distraction carries real negative externalities by increasing information asymmetry for stocks that suffer from a larger extent of analyst distraction during a given quarter. Our findings thus augment our understanding of the determinants and effects of analyst effort allocation and broaden the literature on distraction and information spillover in financial markets.


Notes

In our research design, we use alternative broad industry classification schemes, such as the Fama-French 12 and 17 industry classifications or GICS sectors, to determine our distraction proxy. Our results are unaffected by this choice.

In untabulated tests, we find that our results hold if we restrict our sample to analysts we can identify by their last name on I/B/E/S and analysts who presumably are less likely to be part of a team.

Bradley et al. (2017a) find that analysts with previous industry experience issue earnings forecasts that are on average 1.6% more accurate than forecasts issued by analysts lacking this experience. Harford et al. (2019) find that analysts issue earnings forecasts that are on average 1.9% more accurate for firms for which they have high career concerns than for other firms. Finally, Fang and Hope (2021) show that analyst teams generate more accurate earnings forecasts than individual analysts (with a lower-bound estimate of 2.6%). In additional unreported analyses, we find that distraction also affects the rank of analysts in terms of forecast error for a given firm-quarter. Being distracted leads to a decrease in rank by one notch for 42% of the distribution.

The nonbehavioral factors considered in the literature include the analyst’s forecasting experience (e.g., Clement 1999), the coverage portfolio complexity (e.g., Clement 1999), the prestige of the brokerage (e.g., Clement 1999), the geographical location (e.g., Malloy 2005; O’Brien and Tan 2015), the analyst’s industry expertise (Bradley et al. 2017a), the analyst’s career concerns (e.g., Hong and Kubik 2003; Harford et al. 2019), the analyst’s cultural background (e.g., Du et al. 2017; Merkley et al. 2020), and the changing business model of sell-side research (Drake et al. 2020).

Examples of this literature include a focus on attribution bias (Hilary and Menzly 2006), anchoring bias (Cen et al. 2013), seasonal affective disorder (Lo and Wu 2018), weather-induced inactivity (DeHaan et al. 2017), the availability heuristic (Bourveau and Law 2021), and the affect heuristic (Antoniou et al. 2021). Other academic research has studied the role of characteristics such as the economic conditions when analysts grew up (Clement and Law 2018) and their political ideology (Jiang et al. 2016) in permanently shaping analysts’ future forecasts toward conservatism.

Hirshleifer et al. (2019) explicitly discuss the nonrandom ranking rule that analysts potentially use to allocate effort on days with multiple forecasts and conclude that it could be consistent with findings on decision fatigue.

We focus on analysts’ limited attention for two reasons. First, anecdotal evidence and recent academic work have suggested that analysts do not always exert the same effort for all of their stocks under coverage, and that this level of effort often relates to the market behavior of those stocks. Analysts realize that, when they publish their notes and forecasts, those pertaining to stocks that have recently exhibited noticeable market behavior in terms of returns or trading will typically receive most of the attention from their internal and external clients. Second, our focus on analyst distraction is a natural extension of recent work in finance that documents the role of distraction in the context of institutional investors.

In additional robustness analyses, we also compute a value-weighted measure of analyst distraction. See Section 4.

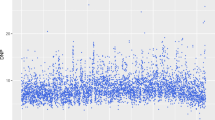

Analysts in our sample on average have 13 stocks in their portfolios. For a given stock-quarter, a value of our measure of analyst distraction greater than or equal to 20 percent for this analyst implies that at least three of the 12 other stocks in the portfolio belong to attention-grabbing industries. Our results are qualitatively similar when we use alternative thresholds (e.g., 15 percent or 30 percent).
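To make the construction concrete, the following Python sketch computes an equal-weighted distraction score for each stock in one analyst’s quarterly portfolio. It rests on assumptions inferred from these notes rather than on the paper’s exact formula: a stock’s score counts the other stocks in the portfolio whose industry is attention-grabbing and differs from the stock’s own industry, scaled by the total number of stocks covered. Scaling by portfolio size reproduces the 50 percent value in the two-firm illustration in these notes, and excluding same-industry stocks is consistent with the note on single-industry analysts. The function names are hypothetical.

```python
from typing import Dict, Set


def distraction(portfolio: Dict[str, str], extreme_industries: Set[str]) -> Dict[str, float]:
    """Equal-weighted distraction score for every stock in one analyst-quarter portfolio.

    portfolio maps a stock identifier to its industry; extreme_industries holds the
    industries flagged as attention-grabbing in that quarter (taken as given here).
    """
    n = len(portfolio)
    scores: Dict[str, float] = {}
    for stock, industry in portfolio.items():
        # Count the other stocks that sit in a *different* attention-grabbing industry.
        hits = sum(
            1
            for other, other_industry in portfolio.items()
            if other != stock
            and other_industry != industry
            and other_industry in extreme_industries
        )
        # Assumption: scale by the total number of stocks the analyst covers.
        scores[stock] = hits / n
    return scores


def is_distracted(score: float, cutoff: float = 0.20) -> int:
    """Dummy equal to 1 when the score reaches the cutoff (20 percent in the main tests)."""
    return int(score >= cutoff)


if __name__ == "__main__":
    # Two-firm illustration: a shock to firm A's industry.
    print(distraction({"A": "Energy", "B": "Retail"}, {"Energy"}))  # {'A': 0.0, 'B': 0.5}

    # 13-stock portfolio: 3 of the 12 other stocks are in an attention-grabbing industry.
    book = {"X": "Tech"}
    book.update({f"E{i}": "Energy" for i in range(3)})
    book.update({f"R{i}": "Retail" for i in range(9)})
    score = distraction(book, {"Energy"})["X"]
    print(round(score, 4), is_distracted(score))  # 0.2308 1
```

With 13 stocks, three attention-grabbing neighbours give 3/13 ≈ 23 percent and trigger the dummy, while two give 2/13 ≈ 15 percent and do not, matching the "at least three of the 12 other stocks" reading above.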

Our measure of analyst distraction is based on extreme industry-wide returns rather than on extreme returns for individual stocks. Therefore, while shared analyst coverage may create firm connections (e.g., Ali and Hirshleifer 2020), our measure of analyst distraction remains plausibly exogenous to the fundamentals of the stocks for which the analysts will be considered distracted.
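The industry-level flag on which the measure relies can be sketched as follows. The rule used here is an assumption for illustration only: an industry is treated as attention-grabbing in a quarter if its return is among the k highest or k lowest across the industries of the chosen classification (for example, the Fama-French 12 industries). The paper’s exact definition of extreme returns is not reproduced in these notes, and k is a hypothetical parameter. Because the flag depends only on industry-wide returns, it does not use any single covered stock’s own return, which is the property this note relies on.

```python
from typing import Dict, Set


def attention_grabbing(industry_returns: Dict[str, float], k: int = 1) -> Set[str]:
    """Industries whose quarterly return is among the k highest or k lowest.

    Hypothetical rule for illustration; the paper's definition of "extreme" may differ.
    """
    if not 0 < 2 * k <= len(industry_returns):
        raise ValueError("k must leave room for distinct top and bottom groups")
    ranked = sorted(industry_returns, key=industry_returns.__getitem__)  # ascending return
    return set(ranked[:k]) | set(ranked[-k:])


if __name__ == "__main__":
    quarter_q = {"Energy": 0.25, "Retail": 0.01, "Tech": -0.18, "Utilities": 0.02, "Health": 0.03}
    print(sorted(attention_grabbing(quarter_q)))  # ['Energy', 'Tech']
```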

In unreported tests, we find that our main results are robust to using two-year-ahead EPS forecasts. Admittedly, these results are more sensitive to the inclusion of fixed effects, presumably because our panel becomes smaller (by about 30%) and less balanced. We cannot run a similar analysis for EPS forecasts three or more years ahead, because the number of unique analyst forecasts for the same firm-quarter becomes too small to identify the effect of distraction.

Comparing the forecast accuracy of analysts across firms using forecast errors expressed as nominal values or as a percentage of actual earnings is potentially misleading because of differences in scale. The relative accuracy measure we use instead becomes meaningless when analyst coverage of the firm is equal to one. Therefore, we exclude from the sample firms covered by fewer than two analysts in a given quarter.
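A common way to remove scale differences is to rescale each analyst’s absolute forecast error by the range of absolute errors among analysts covering the same firm-quarter. The sketch below assumes this range-based form (the notes do not give the paper’s exact formula) and shows why a firm-quarter with a single analyst must be dropped: there is no range to scale by. The function name and the output convention (100 = most accurate, 0 = least accurate) are illustrative.

```python
from typing import Dict, Optional


def relative_accuracy(forecasts: Dict[str, float], actual: float) -> Dict[str, Optional[float]]:
    """Range-scaled accuracy within one firm-quarter (assumed form).

    score_i = 100 * (max AFE - AFE_i) / (max AFE - min AFE), where AFE is the
    absolute forecast error of each analyst covering the firm in that quarter.
    """
    if len(forecasts) < 2:
        # A single analyst has no peers to be compared with: the firm-quarter is dropped.
        return {}
    afe = {analyst: abs(f - actual) for analyst, f in forecasts.items()}
    worst, best = max(afe.values()), min(afe.values())
    if worst == best:
        # Every analyst is equally accurate, so the scaling is undefined.
        return {analyst: None for analyst in afe}
    return {analyst: 100 * (worst - e) / (worst - best) for analyst, e in afe.items()}


if __name__ == "__main__":
    scores = relative_accuracy({"a1": 1.10, "a2": 1.00, "a3": 0.90}, actual=1.02)
    print({a: round(s, 1) for a, s in scores.items()})  # {'a1': 40.0, 'a2': 100.0, 'a3': 0.0}
    print(relative_accuracy({"a1": 1.10}, actual=1.02))  # {}
```

Because the score is defined only relative to peers covering the same firm-quarter, it is unaffected by the level of earnings, which is the motivation given in this note.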

We focus on one-year-ahead earnings forecasts as opposed to multi-year forecasts not only to maximize our sample size but also because these forecasts likely receive the most attention from analysts. Consistent with this assumption, Bradshaw et al. (2012) find that, on average, naïve extrapolations of one-year-ahead EPS forecasts outperform analysts’ two- and three-year-ahead forecasts.

We use several initial rules to drop observations from the sample: 1) observations for which the variable cusip is equal to “00000000” or missing; 2) observations with missing values for the variables ticker and analys; 3) observations for which the forecast date (anndats) is later than the announcement date of the earnings (actdats); 4) observations for which either the forecast value (value) or the actual earnings value (actual) is missing.
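The four screens can be written as a simple record filter. The sketch below assumes each I/B/E/S detail record is a dictionary keyed by the variable names used in this note, with dates stored as `datetime.date` objects and missing values as `None`. It reads rule 2 as dropping a record when either ticker or analys is missing, and it drops records with missing dates because rule 3 cannot be checked for them; both are interpretive assumptions.

```python
from datetime import date
from typing import Any, Dict, List

Record = Dict[str, Any]


def keep(row: Record) -> bool:
    """Apply the four initial screens to one forecast record (missing values are None)."""
    if row.get("cusip") in (None, "", "00000000"):               # rule 1
        return False
    if row.get("ticker") is None or row.get("analys") is None:   # rule 2
        return False
    if row.get("anndats") is None or row.get("actdats") is None:
        return False  # assumption: undated records cannot pass rule 3
    if row["anndats"] > row["actdats"]:                          # rule 3
        return False
    if row.get("value") is None or row.get("actual") is None:    # rule 4
        return False
    return True


def screen(rows: List[Record]) -> List[Record]:
    """Return only the records that survive all four screens."""
    return [r for r in rows if keep(r)]


if __name__ == "__main__":
    ok = {"cusip": "12345678", "ticker": "ABC", "analys": 101,
          "anndats": date(2010, 1, 5), "actdats": date(2010, 2, 1),
          "value": 1.00, "actual": 1.10}
    late = {**ok, "anndats": date(2010, 3, 1)}  # forecast issued after the announcement
    print(len(screen([ok, late])))  # 1
```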

Our results are qualitatively unchanged when we do not exclude forecasts with a horizon shorter than 30 days.

We are grateful to Kenneth French for sharing this data on his website.

Within the sample, we winsorize the forecast accuracy, the accounting, and the continuous market control variables at the 1st and 99th percentiles.
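Winsorizing replaces extreme values with the chosen percentile cut-offs instead of dropping them. A minimal sketch in plain Python follows. The linear-interpolation percentile rule is an assumption: statistical packages compute sample percentiles in slightly different ways, so the cut-offs may differ marginally from the ones used in the paper.

```python
from typing import List


def percentile(sorted_vals: List[float], q: float) -> float:
    """Percentile q (between 0 and 1) of pre-sorted data, by linear interpolation."""
    pos = q * (len(sorted_vals) - 1)
    lower = int(pos)
    upper = min(lower + 1, len(sorted_vals) - 1)
    return sorted_vals[lower] + (pos - lower) * (sorted_vals[upper] - sorted_vals[lower])


def winsorize(values: List[float], low: float = 0.01, high: float = 0.99) -> List[float]:
    """Clip each value to the [low, high] percentile range of the sample."""
    ordered = sorted(values)
    floor_value, cap_value = percentile(ordered, low), percentile(ordered, high)
    return [min(max(v, floor_value), cap_value) for v in values]


if __name__ == "__main__":
    sample = [float(i) for i in range(101)]  # 0, 1, ..., 100
    clipped = winsorize(sample)
    print(clipped[0], clipped[50], clipped[-1])  # 1.0 50.0 99.0
```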

An alternative approach to controlling for firm-year fixed effects is to adjust variables by their firm-year means (e.g., Clement 1999; Malloy 2005; Clement et al. 2007; Bradley et al. 2017a). Gormley and Matsa (2014) caution that de-meaning variables in this way may produce inconsistent estimates and distort the results; they recommend using the raw values of the variables and controlling for fixed effects. In robustness tests, we check that our results hold if we adjust variables by their firm-year means instead of controlling for firm-quarter fixed effects.
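The econometric point behind this note can be illustrated with a small simulation. The sketch assumes a hypothetical data-generating process in which the regressor varies both across and within groups (think firm-years) and the outcome contains a group effect. Adjusting only the dependent variable by its group mean, the practice Gormley and Matsa (2014) focus on, attenuates the slope, whereas the within (fixed-effects) estimator, which removes group means from both sides, recovers it. This illustrates the general mechanism only; it is not the paper’s robustness test.

```python
import random
from collections import defaultdict
from typing import Dict, List, Tuple


def ols_slope(x: List[float], y: List[float]) -> float:
    """Slope from an OLS regression of y on x with an intercept."""
    mx, my = sum(x) / len(x), sum(y) / len(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    return sxy / sxx


def demean(v: List[float], groups: List[int]) -> List[float]:
    """Subtract each observation's group mean."""
    totals: Dict[int, float] = defaultdict(float)
    counts: Dict[int, int] = defaultdict(int)
    for val, g in zip(v, groups):
        totals[g] += val
        counts[g] += 1
    return [val - totals[g] / counts[g] for val, g in zip(v, groups)]


def simulate(beta: float = 2.0, n_groups: int = 500, per_group: int = 10,
             seed: int = 7) -> Tuple[float, float]:
    """Return (adjusted-y slope, within slope) estimated on one simulated panel."""
    rng = random.Random(seed)
    x: List[float] = []
    y: List[float] = []
    groups: List[int] = []
    for g in range(n_groups):
        common = rng.gauss(0, 1)            # group-level part of the regressor
        effect = common + rng.gauss(0, 1)   # group effect, correlated with x
        for _ in range(per_group):
            xi = common + rng.gauss(0, 1)   # add the within-group part
            x.append(xi)
            y.append(beta * xi + effect + rng.gauss(0, 1))
            groups.append(g)
    y_dm = demean(y, groups)
    adjusted_y = ols_slope(x, y_dm)              # de-mean y only
    within = ols_slope(demean(x, groups), y_dm)  # de-mean both sides (fixed effects)
    return adjusted_y, within


if __name__ == "__main__":
    adj, fe = simulate()
    print(f"adjusted-y slope: {adj:.2f}, within slope: {fe:.2f} (true beta = 2)")
```

With these parameters, the adjusted-y slope should land near 2 × 0.9 / 2 ≈ 0.9, because only the within-group share of the regressor’s variance survives, while the within slope should be close to 2.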

In untabulated tests, we find similar results when we exclude from our sample analysts whose covered stocks are concentrated exclusively in one industry. In our main tests, these analysts serve only as a benchmark: when their industry experiences extreme returns, they have, by definition, no stocks in their portfolio that would receive our “distraction treatment.”

Following the recommendation of Mummolo and Peterson (2018) and DeHaan (2021), we also compute the standard deviation of the analyst distraction shock after residualizing it with respect to firm-quarter fixed effects. The economic effect of distraction shocks on analysts’ forecast accuracy becomes smaller (about 1%) when we account for the likelihood of finding distracted analysts within a given firm-quarter.
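Following the logic of Mummolo and Peterson (2018), the variation that identifies the coefficient is what remains after removing firm-quarter means, so the relevant scale for an economic-magnitude calculation is the standard deviation of the residualized regressor rather than its raw standard deviation. The sketch below residualizes on the fixed effects only (other controls are omitted for brevity, an assumption) and uses hypothetical data.

```python
from collections import defaultdict
from statistics import pstdev
from typing import Dict, Hashable, List, Tuple


def raw_and_within_sd(values: List[float], groups: List[Hashable]) -> Tuple[float, float]:
    """Raw SD of a regressor and its SD after removing group (e.g., firm-quarter) means."""
    totals: Dict[Hashable, float] = defaultdict(float)
    counts: Dict[Hashable, int] = defaultdict(int)
    for v, g in zip(values, groups):
        totals[g] += v
        counts[g] += 1
    residuals = [v - totals[g] / counts[g] for v, g in zip(values, groups)]
    return pstdev(values), pstdev(residuals)


if __name__ == "__main__":
    # Hypothetical distraction scores for four forecasts in two firm-quarters.
    scores = [0.0, 0.5, 0.5, 0.5]
    fq = ["F1-2005Q1", "F1-2005Q1", "F2-2005Q1", "F2-2005Q1"]
    raw_sd, within_sd = raw_and_within_sd(scores, fq)
    # within_sd is smaller: the F2 firm-quarter contributes no within variation.
    print(f"raw SD {raw_sd:.3f}, within SD {within_sd:.3f}")
```

An economic magnitude in this spirit multiplies the estimated coefficient by `within_sd` rather than by `raw_sd`.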

In unreported analyses, we also estimate the models with a stricter fixed-effect structure that includes both firm-quarter and firm-analyst fixed effects. Our results remain qualitatively similar, but the coefficients on the continuous and the dummy distraction variables are significant only at the 10% and 8% levels, respectively. We do not favor this stricter structure, because we want to avoid a specification that creates many units with zero within-unit variation. In Section 1.2 of his paper, DeHaan (2021) cautions against creating such so-called zero-variation firms: because they do not contribute to the estimation of the coefficients of interest, only a subset of the sample identifies these coefficients, and the contributing observations may differ from the ones that effectively drop out.
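Units with zero within-unit variation can be flagged before estimation. The sketch below handles a single fixed-effect dimension (for example, firm-analyst pairs) with hypothetical identifiers. With several overlapping fixed effects, as in the stricter specification, identifying the non-contributing observations requires residualizing the treatment on all fixed effects jointly, which this simplified version does not attempt.

```python
from collections import defaultdict
from typing import Dict, Hashable, List, Set, Tuple


def zero_variation_units(treatment: List[float],
                         units: List[Hashable]) -> Tuple[Set[Hashable], float]:
    """Units whose treatment never changes, and the share of observations they hold.

    Under unit fixed effects, these observations carry no information about the
    treatment coefficient.
    """
    seen: Dict[Hashable, Set[float]] = defaultdict(set)
    for t, u in zip(treatment, units):
        seen[u].add(t)
    flat = {u for u, vals in seen.items() if len(vals) == 1}
    share = sum(1 for u in units if u in flat) / len(units)
    return flat, share


if __name__ == "__main__":
    dummy = [0, 0, 1, 0, 1, 1]                    # hypothetical distraction dummy
    pairs = ["a1-F1", "a1-F1", "a2-F1", "a2-F1", "a3-F2", "a3-F2"]
    flat, share = zero_variation_units(dummy, pairs)
    print(sorted(flat), round(share, 3))          # ['a1-F1', 'a3-F2'] 0.667
```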

As an illustration, consider an analyst covering two firms, A and B, in a given quarter. If firm A is affected by an attention-grabbing shock during the quarter, analyst distraction is, by construction, equal to 50 percent for the analyst’s forecasts for firm B. Intuitively, however, when an analyst covers only two (or a few) firms, the attention-grabbing shock will shift attention toward firm A, but the analyst is still likely to be able to dedicate enough time and resources to firm B.

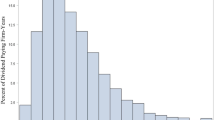

Untabulated statistics show that an industry experiences extreme returns over two consecutive quarters (quarters q and q + 1) only 10 percent of the time, and over three consecutive quarters (quarters q, q + 1, and q + 2) only 1 percent of the time. We thus expect the distraction shocks to vary substantially from one quarter to the next and to affect analysts’ information production in the specific quarter in which they occur.
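These persistence statistics can be computed from a panel of quarterly attention-grabbing flags. The sketch below assumes the percentages are conditional on an industry being extreme in quarter q (the note does not state the denominator) and uses a hypothetical layout in which each industry maps to its sequence of quarterly flags.

```python
from typing import Dict, List, Tuple


def persistence(flags_by_industry: Dict[str, List[bool]]) -> Tuple[float, float]:
    """Share of extreme industry-quarters still extreme in q+1, and in both q+1 and q+2."""
    base = stay_two = stay_three = 0
    for flags in flags_by_industry.values():
        for q in range(len(flags) - 2):  # q+1 and q+2 must be observed
            if flags[q]:
                base += 1
                if flags[q + 1]:
                    stay_two += 1
                    if flags[q + 2]:
                        stay_three += 1
    if base == 0:
        raise ValueError("no extreme industry-quarters to condition on")
    return stay_two / base, stay_three / base


if __name__ == "__main__":
    panel = {
        "Energy": [True, True, False, True, False, False],
        "Tech": [True, False, False, False, False, False],
    }
    print(persistence(panel))  # (0.25, 0.0)
```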

Some analysts were working before the start of our sample period, which might create a bias against finding an effect of the first distraction event. In our sample, 5.36 percent of the analysts were already active in 1985, the first year of our sample period. We find similar results if we exclude these analysts from this test.

Descriptive statistics reported in Table 1 show that the fraction of forecasts made by analysts affected by first-distraction events is roughly equal to the fraction made by analysts affected by nonfirst-distraction events (about 3% in each case). Our results on first-time distraction are therefore unlikely to be driven by the relative scarcity of nonfirst-distraction events.

Similarly, in an unreported test, we find that the probability of revising a forecast at least once is significantly lower for distracted analysts.

We drop observations for which there are several forecast revisions on the same day, because in this case it is unclear which forecast the market reacts to. We also exclude absolute cumulative abnormal returns greater than 5 percent.
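Both screens are straightforward to implement. In the sketch below, a same-day cluster is assumed to be defined at the firm-day level (several revisions for the same firm on the same day, regardless of analyst), cumulative abnormal returns are assumed to be stored as decimals, and the field names are hypothetical.

```python
from collections import Counter
from typing import Any, Dict, List

Revision = Dict[str, Any]


def screen_revisions(revisions: List[Revision], car_cap: float = 0.05) -> List[Revision]:
    """Keep revisions that are alone on their firm-day and whose |CAR| is at most car_cap."""
    per_firm_day = Counter((r["firm"], r["date"]) for r in revisions)
    return [
        r
        for r in revisions
        if per_firm_day[(r["firm"], r["date"])] == 1 and abs(r["car"]) <= car_cap
    ]


if __name__ == "__main__":
    revs = [
        {"firm": "F1", "date": "2010-03-01", "car": 0.01},   # same firm-day as next: dropped
        {"firm": "F1", "date": "2010-03-01", "car": 0.02},
        {"firm": "F2", "date": "2010-03-01", "car": 0.03},   # kept
        {"firm": "F2", "date": "2010-04-01", "car": -0.08},  # |CAR| above 5 percent: dropped
    ]
    print(screen_revisions(revs))
```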

Like Ivković and Jegadeesh (2004), we set the denominator equal to 0.01 if the absolute value of the previous forecast is smaller than 0.01. We also multiply values by 100 and truncate the sample to observations between −50 percent and 50 percent. Our results are robust to deflating the forecast revision by the stock price instead.
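The scaling and truncation can be expressed in a few lines. The sketch assumes the revision is the change from the analyst’s previous forecast divided by the absolute value of that previous forecast, with the 0.01 floor described in this note, and it treats the ±50 percent bounds as inclusive; both are interpretive assumptions. Truncated observations are returned as `None` so that they can be dropped, which differs from winsorizing.

```python
from typing import Optional


def scaled_revision(new: float, previous: float, floor: float = 0.01,
                    bound: float = 50.0) -> Optional[float]:
    """Revision in percent of the previous forecast; None if outside [-bound, bound]."""
    denominator = max(abs(previous), floor)   # 0.01 floor for near-zero previous forecasts
    revision = 100 * (new - previous) / denominator
    return revision if -bound <= revision <= bound else None  # truncate, do not winsorize


if __name__ == "__main__":
    print(scaled_revision(1.05, 1.00))    # about 5 percent
    print(scaled_revision(0.004, 0.002))  # floor applies: about 20 percent
    print(scaled_revision(2.00, 1.00))    # None (100 percent is truncated)
```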

As mentioned, our findings relate to but differ from the results of Han et al. (2020), as our evidence suggests that resource constraints affect analysts’ forecast revision frequency.

Our results are robust to alternative definitions of earnings surprises, such as the difference between the actual earnings per share and the average of all analysts’ latest forecasts made within a [−180, −4] day window prior to the earnings announcement date, rounded to the nearest cent (Caskey and Ozel 2017).
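The alternative surprise can be sketched as follows, assuming each analyst contributes only the most recent forecast dated between 180 and 4 days before the announcement and that the rounding to the nearest cent applies to the consensus. The data layout is hypothetical. Python’s `round` operates on binary floating-point values, so an exact half-cent consensus may not round up; an implementation that must match a specific convention might prefer `decimal.Decimal`.

```python
from datetime import date
from typing import Dict, List, Tuple

Forecast = Tuple[str, date, float]  # (analyst id, forecast date, EPS forecast)


def surprise(actual: float, forecasts: List[Forecast], announcement: date,
             window: Tuple[int, int] = (-180, -4)) -> float:
    """Actual EPS minus the rounded mean of each analyst's latest in-window forecast."""
    latest: Dict[str, Tuple[date, float]] = {}
    for analyst, fdate, value in forecasts:
        offset = (fdate - announcement).days
        if window[0] <= offset <= window[1]:
            if analyst not in latest or fdate > latest[analyst][0]:
                latest[analyst] = (fdate, value)
    if not latest:
        raise ValueError("no forecasts in the window")
    consensus = round(sum(v for _, v in latest.values()) / len(latest), 2)  # nearest cent
    return actual - consensus


if __name__ == "__main__":
    ann = date(2020, 4, 30)
    fcsts = [
        ("a1", date(2020, 1, 10), 1.00),  # superseded by a1's later forecast
        ("a1", date(2020, 3, 15), 1.04),
        ("a2", date(2020, 2, 1), 1.02),
        ("a3", date(2020, 4, 28), 2.00),  # only 2 days before: outside the window
    ]
    print(round(surprise(1.10, fcsts, ann), 2))  # 0.07
```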

We drop observations for firms with two-digit SIC codes 49 (utilities) and 60–69 (financial firms). Our results remain qualitatively the same if we keep these observations.

Our results hold when we control for lagged average analyst distraction over the past quarter or the past two quarters. The coefficients on the lagged variables are insignificant, which further indicates that our effect precisely coincides with the distraction of the analysts covering a given stock.

In the same vein, Bochkay and Joos (2021) find that analysts weigh distinct types of information (soft versus hard) differently when forming risk forecasts as a function of changing underlying macroeconomic uncertainty at the time of the forecast. Abis (2022) compares investment decisions made by humans and machines: consistent with quantitative funds having more learning capacity but less flexibility to adapt to changing market conditions than discretionary funds, she finds that quantitative funds hold more stocks, specialize in stock picking, and engage in more overcrowded trades.

References

Abis, S. 2022. Man vs. machine: quantitative and discretionary equity management. Available at SSRN 3717371.

Abramova, I., J.E. Core, and A. Sutherland. 2020. Institutional investor attention and firm disclosure. The Accounting Review 95: 1–21.

Ali, U., and D. Hirshleifer. 2020. Shared analyst coverage: unifying momentum spillover effects. Journal of Financial Economics 136 (3): 649–675.

Amihud, Y. 2002. Illiquidity and stock returns: cross-section and time-series effects. Journal of Financial Markets 5: 31–56.

Antoniou, C., A. Kumar, and A. Maligkris. 2021. Terrorist attacks, analyst sentiment, and earnings forecasts. Management Science 67: 2579–2608.

Balakrishnan, K., M.B. Billings, B. Kelly, and A. Ljungqvist. 2014. Shaping liquidity: on the causal effects of voluntary disclosure. Journal of Finance 69: 2237–2278.

Barber, B., and T. Odean. 2008. All that glitters: the effect of attention and news on the buying behavior of individual and institutional investors. Review of Financial Studies 21: 785–818.

Bhojraj, S., C. Lee, and D. Oler. 2003. What’s my line? A comparison of industry classification schemes for capital markets research. Journal of Accounting Research 41: 745–774.

Blankespoor, E., E. deHaan, and C. Zhu. 2018. Capital market effects of media synthesis and dissemination: evidence from robo-journalism. Review of Accounting Studies 23: 1–36.

Bochkay, K., and P.R. Joos. 2021. Macroeconomic uncertainty and quantitative versus qualitative inputs to analyst risk forecasts. The Accounting Review 96: 59–90.

Boni, L., and K. Womack. 2006. Analysts, industries, and price momentum. Journal of Financial and Quantitative Analysis 41: 85–109.

Bourveau, T., and K. Law. 2021. Do disruptive life events affect how analysts assess risk? Evidence from deadly hurricanes. The Accounting Review 96: 121–140.

Bradley, D., S. Gokkaya, and X. Liu. 2017a. Before an analyst becomes an analyst: does industry experience matter? Journal of Finance 72: 751–792.

Bradley, D., S. Gokkaya, X. Liu, and F. Xie. 2017b. Are all analysts created equal? Industry expertise and monitoring effectiveness of financial analysts. Journal of Accounting and Economics 63: 179–206.

Bradshaw, M.T., M.S. Drake, J.N. Myers, and L.A. Myers. 2012. A re-examination of analysts’ superiority over time-series forecasts of annual earnings. Review of Accounting Studies 17: 944–968.

Bradshaw, M.T., L.D. Brown, and K. Huang. 2013. Do sell-side analysts exhibit differential target price forecasting ability? Review of Accounting Studies 18: 930–955.

Bradshaw, M.T., Y. Ertimur, and P. O’Brien. 2017. Financial analysts and their contribution to well-functioning capital markets. Foundations and Trends in Accounting 11: 119–191.

Brennan, M.J., and A. Subrahmanyam. 1995. Investment analysis and price formation in securities markets. Journal of Financial Economics 38: 361–381.

Brown, L.D., A.C. Call, M.B. Clement, and N.Y. Sharp. 2015. Inside the “black box” of sell-side financial analysts. Journal of Accounting Research 53: 1–47.

Caskey, J., and N.B. Ozel. 2017. Earnings expectations and employee safety. Journal of Accounting and Economics 63: 121–141.

Cao, S., W. Jiang, J.L. Wang, and B. Yang. 2021. From man vs. machine to man + machine: the art and AI of stock analyses. Available at SSRN 3840538.

Cen, L., G. Hilary, and K.C. Wei. 2013. The role of anchoring bias in the equity market: evidence from analysts’ earnings forecasts and stock returns. Journal of Financial and Quantitative Analysis 48: 47–76.

Chiu, P.C., B. Lourie, A. Nekrasov, and S.H. Teoh. 2021. Cater to thy client: analyst responsiveness to institutional investor attention. Management Science 67: 7455–7471.

Clement, M.B. 1999. Analyst forecast accuracy: do ability, resources, and portfolio complexity matter? Journal of Accounting and Economics 27: 285–303.

Clement, M.B., and S.Y. Tse. 2003. Do investors respond to analysts’ forecast revisions as if forecast accuracy is all that matters? The Accounting Review 78: 227–249.

Clement, M.B., and S.Y. Tse. 2005. Financial analyst characteristics and herding behavior in forecasting. Journal of Finance 60: 307–341.

Clement, M.B., and K. Law. 2018. Labor market dynamics and analyst ability. Available at SSRN 2833954.

Clement, M.B., L. Rees, and E.P. Swanson. 2003. The influence of culture and corporate governance on the characteristics that distinguish superior analysts. Journal of Accounting, Auditing, and Finance 18: 593–618.

Clement, M.B., L. Koonce, and T.J. Lopez. 2007. The roles of task-specific forecasting experience and innate ability in understanding analyst forecasting performance. Journal of Accounting and Economics 44: 378–398.

Coleman, B., K.J. Merkley, and J. Pacelli. 2021. Do robot analysts outperform traditional research analysts? The Accounting Review, forthcoming.

Core, J.E., W.R. Guay, and T.O. Rusticus. 2006. Does weak governance cause weak stock returns? An examination of firm operating performance and investors’ expectations. Journal of Finance 61: 655–687.

Costello, A.M., A.K. Down, and M.N. Mehta. 2020. Machine + man: a field experiment on the role of discretion in augmenting AI-based lending models. Journal of Accounting and Economics 70: 101360.

Cowen, A., B. Groysberg, and P. Healy. 2006. Which types of analyst firms are more optimistic? Journal of Accounting and Economics 41: 119–146.

Dechow, P.M., and H. You. 2020. Understanding the determinants of analyst target price implied returns. The Accounting Review 95: 125–149.

De Franco, G., and Y. Zhou. 2009. The performance of analysts with a CFA® designation: the role of human-capital and signaling theories. The Accounting Review 84: 383–404.

DeHaan, E. 2021. Using and interpreting fixed effects models. Available at SSRN 3699777.

DeHaan, E., T. Shevlin, and J. Thornock. 2015. Market (in)attention and the strategic scheduling and timing of earnings announcements. Journal of Accounting and Economics 60: 36–55.

DeHaan, E., J. Madsen, and J.D. Piotroski. 2017. Do weather-induced moods affect the processing of earnings news? Journal of Accounting Research 55: 509–550.

DellaVigna, S., and J.M. Pollet. 2009. Investor inattention and Friday earnings announcements. Journal of Finance 64: 709–749.

Derrien, F., and A. Kecskés. 2013. The real effects of financial shocks: evidence from exogenous changes in analyst coverage. Journal of Finance 68: 1407–1440.

Dessaint, O., and A. Matray. 2017. Do managers overreact to salient risks? Evidence from hurricane strikes. Journal of Financial Economics 126: 97–121.

Dessaint, O., T. Foucault, L. Frésard, and A. Matray. 2018. Noisy stock prices and corporate investment. Review of Financial Studies 32: 2625–2672.

Dong, G.N., and Y. Heo. 2014. Flu epidemic, limited attention and analyst forecast behavior. Available at SSRN 3353255.

Drake, M., P. Joos, J. Pacelli, and B. Twedt. 2020. Analyst forecast bundling. Management Science 66: 4024–4046.

Driskill, M., M. Kirk, and J.W. Tucker. 2020. Concurrent earnings announcements and analysts’ information production. The Accounting Review 95: 165–189.

Du, Q., F. Yu, and X. Yu. 2017. Cultural proximity and the processing of financial information. Journal of Financial and Quantitative Analysis 52: 2703–2726.

Falkinger, J. 2008. Limited attention as a scarce resource in information-rich economies. The Economic Journal 118: 1596–1620.

Fang, B., and O.K. Hope. 2021. Analyst teams. Review of Accounting Studies 26: 425–467.

Foucault, T., O. Kadan, and E. Kandel. 2013. Liquidity cycles and make/take fees in electronic markets. Journal of Finance 68: 299–341.

Garel, A., J. Martin-Flores, A. Petit-Romec, and A. Scott. 2021. Institutional investor distraction and earnings management. Journal of Corporate Finance 66: 101801.

Gleason, C.A., and C.M. Lee. 2003. Analyst forecast revisions and market price discovery. The Accounting Review 78: 193–225.

Gormley, T.A., and D.A. Matsa. 2014. Common errors: how to (and not to) control for unobserved heterogeneity. Review of Financial Studies 27: 646–651.

Green, T.C., R. Jame, S. Markov, and M. Subasi. 2014. Access to management and the informativeness of analyst research. Journal of Financial Economics 114: 239–255.

Groysberg, B., P.M. Healy, and D.A. Maber. 2011. What drives sell-side analyst compensation at high-status investment banks? Journal of Accounting Research 49: 969–1000.

Han, Y., C.X. Mao, H. Tan, and C. Zhang. 2020. Climatic disasters and distracted analysts. Available at SSRN 3625803.

Hand, J. R., H. Laurion, A. Lawrence, A., and N. 2021. Explaining firms’ earnings announcement stock returns using FactSet and I/B/E/S data feeds. Review of Accounting Studies, forthcoming.

Harford, J., F. Jiang, R. Wang, and F. Xie. 2019. Analyst career concerns, effort allocation, and firms’ information environment. Review of Financial Studies 32: 2179–2224.

Healy, P.M., and K.G. Palepu. 2001. Information asymmetry, corporate disclosure, and the capital markets: a review of the empirical disclosure literature. Journal of Accounting and Economics 31: 405–440.

Hilary, G., and L. Menzly. 2006. Does past success lead analysts to become overconfident? Management Science 52: 489–500.

Hirshleifer, D., Y. Levi, B. Lourie, and S.H. Teoh. 2019. Decision fatigue and heuristic analyst forecasts. Journal of Financial Economics 133: 83–98.

Hong, H., and J.D. Kubik. 2003. Analyzing the analysts: career concerns and biased earnings forecasts. Journal of Finance 58: 313–351.

Hong, H., and M. Kacperczyk. 2010. Competition and bias. Quarterly Journal of Economics 125: 1683–1725.

Ivković, Z., and N. Jegadeesh. 2004. The timing and value of forecast and recommendation revisions. Journal of Financial Economics 73: 433–463.

Jacob, J., T.Z. Lys, and M.A. Neale. 1999. Expertise in forecasting performance of security analysts. Journal of Accounting and Economics 28: 51–82.

Jiang, D., A. Kumar, and K. Law. 2016. Political contributions and analyst behavior. Review of Accounting Studies 22: 37–88.

Kacperczyk, M., S. Van Nieuwerburgh, and L. Veldkamp. 2016. A rational theory of mutual funds’ attention allocation. Econometrica 84: 571–626.

Kadan, O., L. Madureira, R. Wang, and T. Zach. 2012. Analysts’ industry experience. Journal of Accounting and Economics 54: 95–120.

Ke, B., and Y. Yu. 2006. The effect of issuing biased earnings forecasts on analysts’ access to management and survival. Journal of Accounting Research 44: 965–999.

Kelly, B., and A. Ljungqvist. 2012. Testing asymmetric-information asset pricing models. Review of Financial Studies 25: 1366–1413.

Kempf, E., A. Manconi, and O. Spalt. 2017. Distracted shareholders and corporate actions. Review of Financial Studies 30: 1660–1695.

Kothari, S.P., E. So, and R. Verdi. 2016. Analysts’ forecasts and asset pricing: a survey. Annual Review of Financial Economics 8: 197–219.

Lo, K., and S.S. Wu. 2018. The impact of seasonal affective disorder on financial analysts. The Accounting Review 93: 309–333.

Loh, R., and R. Stulz. 2011. When are analyst recommendation changes influential? Review of Financial Studies 24: 593–627.

Loh, R., and R. Stulz. 2019. Is sell-side research more valuable in bad times? Journal of Finance 73: 959–1013.

Malloy, C.J. 2005. The geography of equity analysis. Journal of Finance 60: 719–755.

Merkley, K.J., R. Michaely, and J. Pacelli. 2020. Cultural diversity on Wall Street: evidence from consensus earnings forecasts. Journal of Accounting and Economics 70: 101330.

Mikhail, M.B., B.R. Walther, and R.H. Willis. 1997. Do security analysts improve their performance with experience? Journal of Accounting Research 35: 131–157.

Mummolo, J., and E. Peterson. 2018. Improving the interpretation of fixed effects regression results. Political Science Research and Methods 6 (4): 829–835.

O’Brien, P., and H. Tan. 2015. Geographic proximity and analyst coverage decisions: evidence from IPOs. Journal of Accounting and Economics 59: 41–59.

Petersen, M.A. 2009. Estimating standard errors in finance panel data sets: comparing approaches. Review of Financial Studies 22: 435–480.

Pisciotta, K. 2021. Analyst workload and information production: evidence from IPO assignments. Available at SSRN 2826911.

Schneemeier, J. 2018. Shock propagation through cross-learning in opaque networks. Available at SSRN 2864608.

Acknowledgements

We thank Patricia Dechow (the editor) and two anonymous referees for valuable comments and suggestions. We also thank Alberta Di Giuli, Bart Frijns, Trevor Harris, Alberto Manconi, José Martin-Flores, Alireza Tourani-Rad, Armin Schwienbacher, and Alexander Wagner for helpful comments.


Appendices

Appendix 1

Appendix 2

Appendix 3


About this article

Cite this article

Bourveau, T., Garel, A., Joos, P. et al. When attention is away, analysts misplay: distraction and analyst forecast performance. Rev Account Stud 29, 916–958 (2024). https://doi.org/10.1007/s11142-022-09733-w


DOI: https://doi.org/10.1007/s11142-022-09733-w