Abstract

Purpose

To evaluate the feasibility of implementing systematic patient symptom monitoring during treatment using a smartphone.

Methods

Endometrial [n = 50], ovarian [n = 70], and breast [n = 193] cancer patients participated in text-based symptom reporting for up to 12 months. To promote equity, patients without a smartphone were provided with a device, with phone charges paid by program funds. Each month, patients rated 4 single items assessing fatigue, sleep quality, pain, and global quality of life during the past 7 days on a 0 (low) to 10 (high) scale; gynecologic oncology patients also completed the Patient Health Questionnaire (PHQ-9). Patients' responses were captured using REDCap, and oncologists received monthly feedback. Lay navigators assisted patients with non-medical needs.

Results

Patients taking part in this voluntary program had a mean age of 60.5 years (range 26–87), and 85% were non-Hispanic white. iPhones were provided to 42 patients, and 69 patients used navigation services. Average adherence to the monthly surveys ranged from 75% to 77%, with lower adherence among breast cancer patients after 5 months. The most commonly reported symptoms across cancer types were moderate levels (scores of 4–7) of fatigue and sleep disturbance. At 6 months, 71–77% of patients believed the surveys were useful to them and their health care team.

Conclusions

We established the feasibility of initiating and managing patients in a monthly text-based symptom-monitoring program. The provision of smartphones and patient navigation were unique and vital components of this program.

Introduction

Symptom management utilizing patient-reported outcomes is an important area of focus in cancer care [1]. Cancer patients often experience symptoms related to their treatment regimens [2] and/or the disease itself [3], as well as psychosocial concerns [4, 5]. Common cancer-related symptoms, such as pain, fatigue, and depression [3, 6], may resolve after treatment completion or may persist. Past research indicates that health care providers systematically underestimate their patients’ moderate or severe symptoms compared to what patients report themselves [7]. Under-estimation, which tends to be more common than the over-estimation of patients’ symptoms [7], leads to poorer health outcomes and the under-treatment of patients [8, 9].

Routine monitoring of patients’ physical and psychological symptoms is becoming increasingly common [5]. The use of patient-reported outcome (PRO) measures during cancer treatment has been shown to improve survival and to result in fewer emergency department (ED) visits and hospitalizations, as well as less symptom burden during hospital stays [10, 11]. Having their symptoms addressed, or at least relayed to their physicians, has been found to improve patients’ satisfaction with care [12], and can help reduce patient anxiety and promote self-care [13]. In addition, monitoring patients’ health-related quality of life has been found to improve communication between patients and their health care providers [12, 14, 15].

While there are many ways to track patients’ symptoms, such as in-clinic assessments and telephone calls between visits, technological advances are making it easier to collect symptom and adverse event reports using electronic devices, such as computers, tablets, or mobile phones. These devices can speed the relay of patient information to providers [16, 17], allow more accurate detection of adverse events [18], and may help reduce the use of avoidable services such as ED visits or hospitalizations [19].

Recent work has shown that health systems and providers are increasingly likely to adopt electronic patient-reported outcomes (ePROs) with their patients [18, 20, 21]. Successful remote ePRO assessments have used email or text-message reminders with direct links to patient forms [20], invitations through patient communication portals (e.g., MyChart) [18], and REDCap with automated emails [22, 23]. However, routine cancer care has been slower to adopt ePROs. Barriers to implementing ePROs in clinical practice have been identified, and attempts to address them have included involving stakeholders (physicians/staff and patients) [19, 24] and interviewing patients, caregivers, and providers to ensure the relevance of the measures selected for PRO assessments [20, 25, 26].

In 2014, a systematic review was conducted on ePRO use in clinical oncology settings (n = 27) [27]. Results indicated that 30% of the ePRO systems reviewed were accessible from the home, and 37% were accessible from both the home and the clinic. Many of the assessments were conducted on computers or tablets, but few used cell phones. Most of the ePROs were designed to be completed by patients during active treatment (63%), but some were also used for follow-up care (40%). Reminders to complete the ePRO surveys were sent in 63% of the systems, with email being the most common method (53%), and 33% using phone, text, or letter reminders. Real-time alerts were used in 85% of systems to send patients’ responses to their providers. The systems reported a variety of PRO data to providers (e.g., current scores, longitudinal changes, population norms, or reference values), with the content varying according to the needs of the health care providers in caring for their patients.

The current study reports on a text-based symptom-monitoring program with patient navigation to assist endometrial, ovarian, and breast cancer patients during treatment. The purpose of the program was to identify patients’ symptoms and needs in a timely manner, before symptoms or problems intensified and compromised effective treatment. This paper reports on the feasibility of implementing the program in these patient populations. The a priori goals for program success were that (1) ≥ 85% of patients approached would participate in the monitoring program; and (2) adherence to the surveys during the 12-month period would be ≥ 75% for all cancer types.

Methods

Overview

The aims of this program were to (1) monitor patients’ symptoms and needs for up to 12 months during cancer treatment; (2) encourage the use of the patient portal (MyChart) to assist patients in communicating with their health care team and managing their care; and (3) provide navigation services to patients with personal needs that might impede treatment adherence, such as a lack of reliable transportation to clinic visits. The Institutional Review Board of The Ohio State University exempted this program from informed consent requirements as a quality improvement activity. Nevertheless, all patients had the right to decline participation in this clinical quality improvement program.

Patients were recruited from the Gynecologic Oncology and Breast Oncology clinics at The Ohio State University Comprehensive Cancer Center (OSUCCC) in Columbus, Ohio. In Gynecologic Oncology, program staff identified post-operative ovarian and endometrial cancer patients using the Epic electronic health record (EHR). The five participating gynecologic oncologists gave final approval to approach their patients for symptom monitoring. Gynecologic oncology patients entered the program from February 2018 through October 2018. Patients completed symptom surveys using a smartphone, a computer, or with staff, and were monitored monthly for 12 months or until the end of active therapy, entry into hospice, or a patient or physician request to stop the surveys, whichever came first. English proficiency was not an inclusion criterion; however, all patients for whom English was a second language were able to complete the monthly surveys.

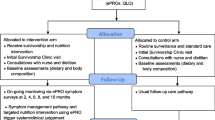

The same procedures were followed in the Breast Oncology clinic, except that the three participating oncologists elected to identify patients for the symptom-monitoring program themselves (i.e., program staff did not systematically use the EHR to identify patients). These patients were either currently undergoing adjuvant therapy or were judged by their health care teams as likely to benefit from additional monitoring or patient navigation. Breast oncology patients entered the program from December 2018 through June 2019 and were followed for up to 12 months or until the end of active therapy or a patient or physician request to stop the surveys, whichever came first. Figure 1 provides the schema of the symptom-monitoring program, with details described below.

At program entry, patients were asked to complete brief text-based surveys once a month for up to 12 months. Patients not already enrolled in the OSU MyChart online patient portal were encouraged to create an account, and patients were also encouraged to sign up for text-message or phone call appointment reminders. Program staff helped interested patients set up MyChart and appointment reminders and provided education on how to use MyChart for their personal care.

Patients completed the first survey in clinic on an iPad using REDCap. Questions were formatted as they would appear in the monthly text surveys to familiarize patients with the items and formats. Patients could also complete paper forms or have an interviewer read the questions to them if they preferred those modes of administration. Patients reported or verified their demographic characteristics, including age, race, ethnicity, education, income, employment, and marital status. Cancer stage was obtained from the OSUCCC cancer registry.

In addition, patients completed two subscales from the James Supportive Care Screening [28] at program entry and at 6 months (mid-treatment) to identify factors that might impede effective treatment. The 4-item “Health Care Decision-Making and Communication Issues” subscale covers decision-making concerns, problems communicating with the medical team, long-term health care planning, and lack of information about treatment or conditions. The 6-item “Social/Practical Problems” subscale covers the patient’s living situation, housing problems, lack of support, financial or insurance problems, transportation problems, and problems obtaining medications. Items on both subscales were scored as 0 = none, 1 = mild, 2 = moderate, and 3 = severe, and subscale totals were calculated by summing the items. Alerts, however, were triggered at the item level: any individual item response ≥ 2 (i.e., moderate or higher) was designated an alert value. These alerts were handled by program patient navigators, who telephoned the patients to assess their difficulties and provide assistance or referral. The navigators worked closely with the social workers and patient care resource managers (PCRMs) on each clinical service, as needed. Clinical concerns were generally handled by the PCRMs, and practical problems by the navigators. This patient navigation model was patterned after our past experience with navigator programs [29]. All patient navigation encounters, including the patients’ problems/concerns and the services provided, were documented in REDCap.
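The scoring rule above separates subscale totals from item-level alerts. The following is a minimal Python sketch, assuming responses arrive as a mapping from item name to a 0–3 score; the item keys and the function name are hypothetical labels, not the instrument's official item names or a REDCap feature.

```python
from typing import Dict, List

# James Supportive Care Screening item scale: 0 = none, 1 = mild, 2 = moderate, 3 = severe
ALERT_LEVEL = 2  # moderate or higher triggers a navigator alert


def score_subscale(responses: Dict[str, int]) -> dict:
    """Return the subscale total and the items that reached alert level."""
    for item, value in responses.items():
        if value not in (0, 1, 2, 3):
            raise ValueError(f"{item}: expected a score of 0-3, got {value}")
    alerts: List[str] = [item for item, value in responses.items() if value >= ALERT_LEVEL]
    return {"total": sum(responses.values()), "alerts": alerts}


# Hypothetical item keys for the 6-item Social/Practical Problems subscale
social_practical = {
    "living_situation": 0,
    "housing": 0,
    "lack_of_support": 1,
    "financial_insurance": 1,
    "transportation": 2,
    "obtaining_medications": 0,
}
result = score_subscale(social_practical)
print(result)  # {'total': 4, 'alerts': ['transportation']}
```

A patient with four mild items would also have a total of 4 but would generate no alert. This illustrates why alerts were tied to individual items rather than to totals: a single moderate transportation problem is actionable, whereas scattered mild concerns may not be.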

Monthly survey administration

Monthly symptom assessments were scheduled and administered through REDCap for up to 12 months. Patients received a survey link via text message or email, and REDCap sent up to three automated reminders if the survey remained incomplete. Survey texts were always sent on a Monday so that staff could manage patient alert values on weekdays, if needed. If a patient did not complete the survey in month 1 or month 2, program staff called the patient to confirm receipt of the texts and to change the preferred method of contact, if desired. After this two-month period, reminder messages continued to be delivered monthly, but program staff made no further telephone calls. For patients who were not comfortable with technology, program staff administered the monthly surveys by phone or in person during clinic visits. Each monthly survey contained the following common items:

Physical symptom items

Patients completed four items, rated from 0 (low) to 10 (high) for the past 7 days, assessing pain, fatigue, sleep quality, and overall quality of life [3, 6]. Single-item numerical linear analogue self-assessment (LASA) scales were used because they are reliable and valid, easily understood by people with differing educational backgrounds, and easier to translate into multiple languages [30, 31]. Pain or fatigue scores ≥ 4, and sleep quality or quality of life scores < 4, were flagged as patient alert values. The participating clinicians set these thresholds in advance; in particular, they set a lower threshold for pain and fatigue so that these symptoms could be addressed earlier in treatment, before they persisted and/or became severe.
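The LASA alert rule runs in opposite directions for the two kinds of items: a high score is bad for pain and fatigue but good for sleep quality and quality of life. The following is a minimal sketch, assuming integer scores on the 0–10 scale. The function name is hypothetical.

```python
def lasa_alerts(pain: int, fatigue: int, sleep_quality: int, quality_of_life: int) -> list:
    """Return the names of 0-10 items that meet the program's alert thresholds."""
    scores = {"pain": pain, "fatigue": fatigue,
              "sleep_quality": sleep_quality, "quality_of_life": quality_of_life}
    for name, value in scores.items():
        if not 0 <= value <= 10:
            raise ValueError(f"{name} must be between 0 and 10, got {value}")
    alerts = []
    # Symptom-burden items: a high score is bad, so alert at 4 or above.
    for name in ("pain", "fatigue"):
        if scores[name] >= 4:
            alerts.append(name)
    # Well-being items: a high score is good, so alert below 4.
    for name in ("sleep_quality", "quality_of_life"):
        if scores[name] < 4:
            alerts.append(name)
    return alerts


print(lasa_alerts(pain=2, fatigue=5, sleep_quality=3, quality_of_life=7))
# ['fatigue', 'sleep_quality']
```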

Patient Health Questionnaire-9 (PHQ-9)

Gynecologic oncology patients completed the PHQ-9 to assess depressive and psychological symptoms. The American Society of Clinical Oncology recommends this measure as a valid and reliable screening tool for cancer patients [32]. Patients reported on symptoms during the past two weeks using the following response categories: 0 = not at all, 1 = several days, 2 = more than half the days, and 3 = nearly every day. PHQ-9 scores range from 0 to 27, with 0–4 indicating no or minimal depression, 5–9 mild depression, 10–14 moderate depression, 15–19 moderately severe depression, and 20–27 severe depression. Patients were flagged with an alert value if they scored 10 or higher on the PHQ-9 or 1 or higher on the single item concerning suicidal ideation (i.e., “Thoughts that you would be better off dead or of hurting yourself in some way”). Breast clinic patients did not receive the PHQ-9 in their monthly surveys because it was already administered routinely as part of their clinical care.
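The PHQ-9 severity bands and the two alert conditions fit in a short function. This is a sketch that assumes the nine item responses are supplied in questionnaire order, with the suicidal ideation item as the ninth item (its position in the standard PHQ-9). The name `score_phq9` is hypothetical.

```python
from typing import List

# Upper bound of each PHQ-9 severity band, in ascending order
SEVERITY_BANDS = [
    (4, "none/minimal"),
    (9, "mild"),
    (14, "moderate"),
    (19, "moderately severe"),
    (27, "severe"),
]


def score_phq9(items: List[int]) -> dict:
    """Score nine PHQ-9 responses (0-3 each) and apply the program's alert rule."""
    if len(items) != 9 or any(v not in (0, 1, 2, 3) for v in items):
        raise ValueError("PHQ-9 needs nine responses scored 0-3")
    total = sum(items)
    severity = next(label for upper, label in SEVERITY_BANDS if total <= upper)
    suicidal_ideation = items[8] >= 1  # item 9: better off dead / hurting yourself
    return {"total": total, "severity": severity,
            "alert": total >= 10 or suicidal_ideation}


print(score_phq9([2, 2, 2, 1, 1, 1, 1, 0, 0]))
# {'total': 10, 'severity': 'moderate', 'alert': True}
```

Because the suicidal ideation check is independent of the total, a patient with a total of 1 still raises an alert if that single point comes from item 9.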

Other symptoms or needs

Each month, patients were also asked to report any other major or bothersome symptoms or treatment concerns that they wanted forwarded to their health care team, and whether they needed assistance with any non-treatment concerns before their next clinic visit. Non-treatment concerns (e.g., transportation issues or locating supportive services in the community) were forwarded to the patient navigators, who followed up directly with the patient. Treatment- or clinic-related issues were sent to each physician’s designated staff person by email or by an “in-basket” message through the Integrated Healthcare Information System (IHIS) in Epic. The clinic staff person then followed up with the patient regarding these concerns.
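The timing rules under Monthly survey administration can be sketched as follows: surveys sent on Mondays, up to three automated reminders, and staff calls only for surveys missed in months 1 and 2. This is an illustration under stated assumptions, not the program's REDCap configuration. The text does not say how monthly send dates were derived or how reminders were spaced, so the one-calendar-month offset from enrollment, the one-day reminder gap, and all function names are hypothetical.

```python
import calendar
from datetime import date, timedelta


def add_months(d: date, months: int) -> date:
    """Shift a date by whole calendar months, clamping to the month's last day."""
    month_index = d.month - 1 + months
    year = d.year + month_index // 12
    month = month_index % 12 + 1
    last_day = calendar.monthrange(year, month)[1]
    return date(year, month, min(d.day, last_day))


def next_monday(d: date) -> date:
    """Return d if it is a Monday (weekday 0), otherwise the following Monday."""
    return d + timedelta(days=(7 - d.weekday()) % 7)


def survey_schedule(enrolled: date, months: int = 12, reminder_gap_days: int = 1):
    """Hypothetical schedule: one Monday send per month plus up to 3 reminders."""
    schedule = []
    for k in range(1, months + 1):
        send = next_monday(add_months(enrolled, k))
        # A 1-day gap keeps the reminders on Tuesday-Thursday (an assumption)
        reminders = [send + timedelta(days=reminder_gap_days * i) for i in range(1, 4)]
        schedule.append((send, reminders))
    return schedule


def needs_staff_call(month: int, completed: bool) -> bool:
    """Staff telephoned only when the survey was missed in month 1 or 2."""
    return month in (1, 2) and not completed


first_send, first_reminders = survey_schedule(date(2018, 2, 14))[0]
print(first_send.isoformat(), [r.isoformat() for r in first_reminders])
```

Rolling each send forward to a Monday, instead of sending exactly one month after enrollment, trades a few days of timing precision for predictable weekday staffing when alert values arrive.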

Smartphone provision

Smartphones were used to facilitate communication and to optimize the management of patients’ therapy. Through a partnership with a national wireless company, patients were provided with an iPhone 6s or 7 if they did not have a smartphone or if their plan limited monthly data, calling minutes, or text messages. The wireless company provided the phones at no cost, and program funds paid for 12 months of phone service, including unlimited text messaging and cellular data. At the end of the 12-month program period, patients could keep the iPhones but had to secure their own phone plan if they wanted continued service; otherwise, they could use the phone’s internet-based functions (other than calling or texting) wherever wireless internet service was available.

Program staff helped patients set up the iPhones: they provided basic education on the phone’s features, walked patients through the MyChart application, signed patients up for clinic text messages and appointment reminders (if the patients agreed), and installed phone numbers for the oncology clinic, program staff, and supportive services at the cancer center. The iPhone set-up encounters lasted between 30 min and 1 h.

Symptom reporting to the physicians

Patients’ monthly survey scores for all measures were exported from REDCap to a spreadsheet and sent to their health care teams by secure email. Alert values for pain, fatigue, poor sleep quality, and quality of life were highlighted on the reports, as were any patient self-reported issues. Program staff also sent the oncology team a direct message in the EHR within 24 h for patients reporting moderate-severe depression on the PHQ-9 (gynecologic oncology patients only) or severe pain (≥ 7). Oncology teams followed up with the patients and/or placed referrals as necessary, following standard-of-care procedures. The alert cut-off scores, the content and presentation of the spreadsheets, and the process of relaying and responding to the reports were modified over time based on feedback from the oncology staff and physicians, as well as from the patients themselves. For example, patients with spikes in worsening symptoms were highlighted relative to their symptom levels in previous months to better indicate change. Similarly, patients with chronic moderate to severe depressive symptoms, who often scored high on the screenings, were re-verified to ensure they were receiving follow-up for these symptoms. Individual physicians could also specify what information they wanted reported each month (e.g., only high alert values, with no information on patients doing well), so reporting was not uniform across physicians after the first several months of monitoring.
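The monthly report logic can be sketched in Python, assuming each patient's history is a list of monthly score dictionaries (oldest first). Two elements are assumptions rather than published program rules. First, the spike rule (a rise of 3 or more points in pain or fatigue over the patient's mean in earlier months) stands in for an undefined "spike". Second, "moderate-severe depression" is read as a PHQ-9 total of 10 or higher. The output is CSV text that a spreadsheet could open.

```python
import csv
import io
from statistics import mean
from typing import Dict, List

SPIKE_POINTS = 3  # hypothetical: the program did not publish a spike definition
FIELDS = ["patient_id", "month", "pain", "fatigue", "sleep_quality",
          "quality_of_life", "phq9", "alerts", "urgent_ehr_message", "spike"]


def monthly_report(histories: Dict[str, List[dict]]) -> str:
    """Build one CSV row per patient from their latest month of scores.

    Each history is a list of monthly dicts, oldest first, with 0-10 scores for
    pain, fatigue, sleep_quality, and quality_of_life, plus an optional phq9
    total (gynecologic oncology patients only).
    """
    out = io.StringIO()
    writer = csv.DictWriter(out, fieldnames=FIELDS)
    writer.writeheader()
    for patient_id, history in histories.items():
        current = history[-1]
        phq9 = current.get("phq9")
        # Highlighted alert values, using the LASA thresholds
        alerts = [s for s in ("pain", "fatigue") if current[s] >= 4]
        alerts += [s for s in ("sleep_quality", "quality_of_life") if current[s] < 4]
        # Direct EHR message within 24 h: severe pain, or PHQ-9 >= 10 (assumed cut-off)
        urgent = current["pain"] >= 7 or (phq9 is not None and phq9 >= 10)
        # Spike: current score well above this patient's mean in earlier months
        spikes = []
        if len(history) > 1:
            for s in ("pain", "fatigue"):
                if current[s] - mean(m[s] for m in history[:-1]) >= SPIKE_POINTS:
                    spikes.append(s)
        writer.writerow({
            "patient_id": patient_id,
            "month": len(history),
            "pain": current["pain"],
            "fatigue": current["fatigue"],
            "sleep_quality": current["sleep_quality"],
            "quality_of_life": current["quality_of_life"],
            "phq9": "" if phq9 is None else phq9,
            "alerts": ";".join(alerts),
            "urgent_ehr_message": urgent,
            "spike": ";".join(spikes),
        })
    return out.getvalue()


report = monthly_report({
    "A": [{"pain": 2, "fatigue": 3, "sleep_quality": 6, "quality_of_life": 7, "phq9": 5},
          {"pain": 2, "fatigue": 7, "sleep_quality": 3, "quality_of_life": 6, "phq9": 11}],
    "B": [{"pain": 8, "fatigue": 2, "sleep_quality": 5, "quality_of_life": 5}],
})
print(report)
```

Comparing against each patient's own earlier months, rather than only against fixed cut-offs, is one way to surface the "spikes in worsening symptoms" the clinicians asked for without flagging patients whose chronic scores are stable.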

Program evaluation

Patients, participating physicians, and lead staff all completed structured questionnaires providing feedback on the content and conduct of the monitoring program. Patients provided feedback as part of the 6-month text-based survey, and evaluation surveys were emailed to the physicians and lead staff for completion at 12 months.

Results

Across the three cancer types, 346 patients were approached to take part in the program, and 313 (90.5%) agreed. Program declines by cancer type were 9/79 (11.4%) ovarian, 5/55 (9.1%) endometrial, and 19/212 (9.0%) breast. Program declines and the reasons for declining did not differ significantly by age, race, ethnicity, or cancer type. The major reasons for not participating were lack of interest (36.4%) or believing the program would not be useful (33.3%).
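The participation percentages can be checked against the ≥ 85% a priori goal in a few lines. This sketch takes each cancer type's approached count as its enrolled count (from the Abstract) plus the number who declined. The names are illustrative only.

```python
ENROLLED = {"endometrial": 50, "ovarian": 70, "breast": 193}
DECLINED = {"endometrial": 5, "ovarian": 9, "breast": 19}
PARTICIPATION_GOAL = 0.85  # a priori goal: at least 85% of patients approached participate


def participation(enrolled: dict, declined: dict, goal: float = PARTICIPATION_GOAL) -> dict:
    """Participation rate per cancer type and overall, compared with the goal."""
    groups = dict(enrolled, overall=sum(enrolled.values()))
    group_declines = dict(declined, overall=sum(declined.values()))
    summary = {}
    for name, n in groups.items():
        approached = n + group_declines[name]
        rate = n / approached
        summary[name] = {"approached": approached, "rate": round(rate, 3),
                         "meets_goal": rate >= goal}
    return summary


for name, row in participation(ENROLLED, DECLINED).items():
    print(name, row)
```

Each group, and the overall rate, clears the 85% goal.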

Demographic and clinical characteristics of the participating patients are provided in Table 1. On average, breast cancer patients were younger than ovarian or endometrial cancer patients and were more likely to be married, to have higher educational attainment, and to be employed. Of note is the wide age range across all three cancer types, with the oldest patients over age 80. The majority of patients were non-Hispanic white, reflecting the OSUCCC catchment area [33], which is 22% rural and includes the Ohio Appalachian region. More than 20% of patients across all cancer types reported incomes below $35,000 per year.

A summary of key program components is provided in Table 2.

MyChart

At program entry, 74.1% of all patients were already enrolled in MyChart. Patients not enrolled were asked to enroll, with refusals ranging from 37% to 50% of the non-enrolled patients. Reasons for refusing were lack of reliable access to the internet or computers, a preference for calling or talking to health professionals in person, or simply a lack of interest in using MyChart. Approximately 55% of patients over age 65 refused enrollment in MyChart, mostly among the ovarian and endometrial patient groups.

iPhone provision

iPhones were provided to 42 (13.4%) patients across all cancer types. Compared with patients who already had a smartphone, patients receiving iPhones were more likely to have incomes below $50,000/year (p = 0.03) and an educational level of high school or less (p < 0.0001). Program staff had little difficulty training patients to operate the phones correctly, and patients had few problems adhering to the surveys after receiving the iPhones. Phone service charges for patients receiving iPhones averaged approximately $40 per month, or about $500 per person for the 12-month period.
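As a rough check on the service-cost figures: $40 per month over 12 months is $480, which rounds to the reported figure of about $500. The sketch below also computes a program-wide upper bound. This bound assumes, without support from the text, that all 42 phone recipients kept paid service for the full 12 months; patients who withdrew early would lower the actual total.

```python
MONTHLY_SERVICE_USD = 40   # approximate average monthly charge reported in the text
PROGRAM_MONTHS = 12
PHONES_PROVIDED = 42


def service_cost(phones: int, monthly_usd: float = MONTHLY_SERVICE_USD,
                 months: int = PROGRAM_MONTHS) -> float:
    """Total phone service cost, assuming every phone stays in service for `months`."""
    return phones * monthly_usd * months


per_patient = service_cost(1)                # 480, i.e., roughly $500
upper_bound = service_cost(PHONES_PROVIDED)  # 20160: an upper bound, not a reported figure
print(per_patient, upper_bound)
```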

Survey adherence and mode of administration

Figure 2 shows the proportion of patients who completed the surveys at each time point. Overall adherence averaged 75% to 77%, varying by cancer type and month of assessment. Patients were censored at formal withdrawal from the program or death, so each monthly percentage reflects the proportion of patients still active in the program who completed the survey at that time point. Beginning at month 5, monthly survey adherence declined, particularly among breast cancer patients, coinciding with the completion of chemotherapy/radiation as patients finished active therapy. However, unless patients asked to be formally withdrawn from the program, they continued to receive the monthly surveys. Formal withdrawals were highest among ovarian and endometrial cancer patients, owing to death, disease progression, or entry into hospice during the monitoring period (Table 2).
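The censoring rule changes the denominator from month to month. The following is a minimal sketch, assuming a patient leaves the denominator starting with the month of withdrawal or death (the text does not say whether that month itself is counted). The record layout is hypothetical.

```python
from typing import Dict, List, Optional, Set, TypedDict


class PatientRecord(TypedDict):
    completed: Set[int]          # months (1-12) in which the survey was completed
    censored_at: Optional[int]   # month of formal withdrawal or death, or None


def monthly_adherence(records: List[PatientRecord], months: int = 12) -> Dict[int, float]:
    """Proportion of still-active patients completing the survey in each month.

    Assumption: a patient leaves the denominator starting with the month of
    withdrawal or death. Patients who simply stop responding stay in it.
    """
    rates: Dict[int, float] = {}
    for month in range(1, months + 1):
        active = [r for r in records
                  if r["censored_at"] is None or r["censored_at"] > month]
        if not active:
            continue
        completed = sum(1 for r in active if month in r["completed"])
        rates[month] = completed / len(active)
    return rates


records: List[PatientRecord] = [
    {"completed": {1, 2, 3}, "censored_at": 4},   # withdrew in month 4
    {"completed": {1, 2, 4}, "censored_at": None},
    {"completed": {1}, "censored_at": None},      # stopped responding but never withdrew
]
print(monthly_adherence(records, months=4))
```

Under this rule, patients who finished active therapy but never formally withdrew stay in the denominator, so their missed surveys lower the monthly rate. This is consistent with the decline among breast cancer patients after month 5.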

Notably, response rates to the monthly surveys did not differ by age, and older patients were as able as younger patients to use, or be trained to use, the iPhone to complete the surveys. Only a small number of patients (n = 21, 6.7%) preferred to have one or more of the monthly surveys administered by program staff via telephone or in person. Most of these patients were older than 70 and/or lacked reliable internet access, which is not uncommon in rural areas and the Appalachian region of Ohio. No patients completed the monthly surveys on paper forms after baseline.

Symptom alert values

Alert values for PHQ-9 scores ≥ 10 occurred in roughly one-third of the gynecologic oncology patients, with 3% expressing suicidal ideation. The majority of these patients were already receiving behavioral health services, with those not under care referred to behavioral health services in their areas. Graphs of the mean scores for the fatigue, sleep quality, pain, and quality of life 0–10 items are presented in Figs. 3, 4, and 5. The major persistent symptoms over the 12-month period, across all cancer types, were moderate levels (i.e., 4–7) of fatigue and poorer sleep quality. Pain was generally well controlled for all patient groups, and overall quality of life averaged between 6 and 8 for all patient groups.

Patient navigation

Navigation was used by approximately 13% of ovarian, 14% of endometrial, and 27% of breast cancer patients. Navigators contacted patients based on their responses to the monthly text-based surveys and to the two subscales of the James Supportive Care Screening at baseline and month 6. Patients needed assistance with transportation to and from clinic visits, information about cancer support groups and supportive services, cancer-related information, treatment questions, and financial or insurance concerns, such as paying for medications or monthly bills and dealing with insurance issues. Questions about treatment, medications, and insurance were forwarded to the nurse PCRMs in each clinic after the navigator had talked with the patient to better understand their needs. Assistance with transportation, and information about supportive services or social programs that help with monthly bills or housing, were handled by the navigators. The average number of navigator encounters per patient was between 2 and 3, most of them by telephone rather than in person. Reported problems decreased between program entry and 6 months as patients’ problems and needs were addressed (Table 3).

Patient evaluation

Formal quantitative evaluations of the delivery and value of the program were conducted with patients of all cancer types at month 6 (Table 4). Between 97.5% and 100% found it easy to complete the surveys on their phone/computer, and 71–77% found the program useful to themselves and their health care teams. Approximately 81% of breast and 77.6% of gynecologic oncology patients believed the monthly symptom questions helped them communicate better with their health care team. Approximately 86% of endometrial and ovarian cancer patients, and 92% of breast cancer patients, also believed other patients would benefit from the program during their treatment. Patients who did not find the monthly surveys useful primarily commented that the questions were redundant with assessments during treatment visits, that they were already aware of their symptom levels, and that they were not hesitant to talk to their health care provider about their concerns during clinic visits.

Patients were also asked at 6 months about the frequency of the surveys. Most patients (92% of ovarian and endometrial, and 81% of breast cancer patients) believed that receiving the surveys once a month was “just about right.” The remainder suggested completing surveys at 6-week to 3-month intervals, depending on the stage of a patient’s treatment and when they were next scheduled to be seen in clinic.

Oncologist/lead staff evaluation

The oncologists and lead staff provided feedback on the symptom-monitoring program through an emailed survey; with some providers, a follow-up interview or further email correspondence was used to better understand their suggestions or concerns for program improvement. In both gynecologic oncology and breast oncology, a lead oncologist or “clinic champion” helped design the program for the target populations and brought other oncologists on board to participate. The components considered most effective were patients completing the surveys on smartphones/electronic devices, encouraging patients to use MyChart, and the ability to link patients to a patient navigator for assistance. Several oncologists/staff were surprised to learn that some patients were more forthcoming on the surveys than in clinic conversations, which opened up better communication with these patients. However, an unintended consequence was that a small number of patients (fewer than 10) waited until their monthly survey was due to report severe symptoms to their oncologist, instead of reporting concerns to their health care team as they occurred. This sometimes delayed the treatment of symptoms.

Suggestions for improving the program were to find more succinct ways to report patient alert values to the health care team, including graphs of patients’ symptoms over time; to report each month only on patients with alert values; to focus primarily on severe rather than moderate symptoms; to time some assessments for a week before the patient’s next clinic visit instead of only at monthly intervals; to allow oncologists to tailor the timing and content of symptom monitoring to individual patients’ needs; and to include a report of the services provided by the patient navigator in the monthly report. In addition, several oncologists were uncertain of the value of continuing the monthly surveys after their patients had completed active therapy, given that these patients were scheduled to return to clinic only at 3- or 6-month intervals for follow-up visits and should be transitioning back to primary care or their routine health care providers.

Discussion

This paper reported on the feasibility of implementing text-based symptom monitoring with patient navigation to assist ovarian, endometrial, and breast cancer patients undergoing treatment. A major focus was developing a symptom-monitoring system that most patients could use and that did not perpetuate biases against patients who lacked electronic devices. Unique aspects of this program included providing smartphones and training to patients without these devices, as well as instituting alert values that triggered patient navigators to triage patients’ clinical and non-clinical care needs. Our focus was primarily on larger health systems or academic medical centers that may have resources, through research grant funds or other sources, to support these programs. We also sought to use or build on existing resources to offset program costs. For example, REDCap is available at many academic health centers in the U.S. and can support these types of monitoring programs economically.

Successes of this program were that more than 90% of the patients approached elected to participate in this voluntary activity and complete the text-based surveys for up to 12 months. Adherence to the monthly surveys averaged approximately 75%, but adherence was lowest among breast cancer patients after 5 months, coinciding in part with the completion of active therapy. In general, most patients valued completing the monthly surveys and having another means of communicating with their health care team. The oncologists and staff in the participating clinics provided critical feedback. They found merit in being able to monitor patients remotely between clinic visits, although the frequency and timing of patient assessments, the instructions given to patients to contact their health care team directly with severe symptoms or concerns, and the presentation of survey results to the health care team will need further streamlining. In addition, “real-time” symptom reporting to the health care team was requested, and the ability to focus on selected patients with customized monitoring was considered an important future use of this technology. Providing cell phones and navigation services to patients went smoothly.

A limitation of this program, however, was that, as a pilot quality improvement program rather than a randomized intervention study, it had no control group for comparison. In addition, although we made headway in training older patients to use smartphones or other electronic devices to complete the monthly surveys, older patients were still more likely to prefer telephone administration of these assessments and/or to decline MyChart enrollment. These results are similar to those reported by other investigators [21, 23, 27].

A recent review of mobile health interventions/programs found a positive impact on symptom control among application users and identified changes in patterns of communication between patients and providers as one of the most beneficial aspects of mobile health [25]. Patients in our text-based program reported similar benefits, with more than 70% indicating that completing the symptom surveys helped them communicate better with their providers. In addition, more than 85% thought that other patients would benefit from this type of symptom monitoring during treatment.

Our original intent was to develop a system that used MyChart to collect patients’ symptoms and needs over time. However, a major drawback of MyChart is that, unlike REDCap, it lacks the flexibility to quickly add or modify questionnaire items. In addition, lower MyChart enrollment among older adults, who constitute the majority of cancer patients, and among those without electronic devices excludes these patients from such monitoring and perpetuates health disparities. This is particularly problematic in Ohio, given its large rural and Appalachian populations with sometimes unreliable internet service. Thus, we elected to use REDCap for survey delivery and data capture. This system worked well for our program purposes and was efficient and easy to manage. We will continue to refine this program to discern who might benefit most from this type of monitoring and how best to meet the needs of the oncologists and staff in treating their patients, and to explore options for integrating these data into the EHR, if desired by the health care teams, or using MyChart for some program components.

The value of this and similar programs will be determined by whether they yield cost savings through fewer hospitalizations and emergency department visits, improve patients’ mental health and social support, or help patients resolve personal and economic barriers to treatment through the use of patient navigators. Not all health care systems can access every one of these program components, but routine symptom monitoring using a smartphone or a computer/website may be accessible to many. Monitoring can be done in a variety of ways when patients are not in clinic; this program was one way to assess patients’ symptoms in “real time” using a common technology to address patient needs during therapy.

Conclusion

This study established the feasibility of implementing a text-based symptom-management program with navigation support for cancer patients undergoing treatment. Future reports will examine the effects of this program on clinic flow and metrics (emergency department visits and hospitalizations), as well as provide more in-depth analyses of its impact on patient quality of life.

References

Levit, L., et al. (Eds.). (2013). Delivering high-quality cancer care: Charting a new course for a system in crisis. Washington, DC: National Academies Press.

Cheng, K. K., & Yeung, R. M. (2013). Impact of mood disturbance, sleep disturbance, fatigue and pain among patients receiving cancer therapy. European Journal of Cancer Care, 22(1), 70–78.

Cleeland, C. S. (2007). Symptom burden: Multiple symptoms and their impact as patient-reported outcomes. Journal of the National Cancer Institute Monographs, 37, 16–21.

National Comprehensive Cancer Network. (1999). NCCN practice guidelines for the management of psychosocial distress. Oncology (Williston Park), 13(5A), 113–147.

Jacobsen, P. B., & Wagner, L. I. (2012). A new quality standard: the integration of psychosocial care into routine cancer care. Journal of Clinical Oncology, 30(11), 1154–1159.

Pachman, D. R., et al. (2012). Troublesome symptoms in cancer survivors: Fatigue, insomnia, neuropathy, and pain. Journal of Clinical Oncology, 30(30), 3687–3696.

Laugsand, E. A., et al. (2010). Health care providers underestimate symptom intensities of cancer patients: A multicenter European study. Health and Quality of Life Outcomes, 8, 104.

Khuri, S. F., et al. (2005). Determinants of long-term survival after major surgery and the adverse effect of postoperative complications. Annals of Surgery, 242(3), 326–341; discussion 341–343.

Rochefort, M. M., & Tomlinson, J. S. (2012). Unexpected readmissions after major cancer surgery: An evaluation of readmissions as a quality-of-care indicator. Surgical Oncology Clinics of North America, 21(3), 397–405.

Basch, E., et al. (2016). Symptom monitoring with patient-reported outcomes during routine cancer treatment: A randomized controlled trial. Journal of Clinical Oncology, 34(6), 557–565.

Nipp, R. D., et al. (2019). Pilot randomized trial of an electronic symptom monitoring intervention for hospitalized patients with cancer. Annals of Oncology, 30(2), 274–280.

Kotronoulas, G., et al. (2014). What is the value of the routine use of patient-reported outcome measures toward improvement of patient outcomes, processes of care, and health service outcomes in cancer care? A systematic review of controlled trials. Journal of Clinical Oncology, 32(14), 1480–1501.

Donaldson, M. S. (2008). Taking PROs and patient-centered care seriously: Incremental and disruptive ideas for incorporating PROs in oncology practice. Quality of Life Research, 17(10), 1323–1330.

Velikova, G., et al. (2004). Measuring quality of life in routine oncology practice improves communication and patient well-being: A randomized controlled trial. Journal of Clinical Oncology, 22(4), 714–724.

Yang, L. Y., et al. (2018). Patient-reported outcome use in oncology: A systematic review of the impact on patient-clinician communication. Supportive Care in Cancer, 26(1), 41–60.

Cleeland, C. S., et al. (2011). Automated symptom alerts reduce postoperative symptom severity after cancer surgery: A randomized controlled clinical trial. Journal of Clinical Oncology, 29(8), 994–1000.

Basch, E., Bennett, A., & Pietanza, M. C. (2011). Use of patient-reported outcomes to improve the predictive accuracy of clinician-reported adverse events. Journal of the National Cancer Institute, 103(24), 1808–1810.

Garcia, S. F., et al. (2019). Implementing electronic health record-integrated screening of patient-reported symptoms and supportive care needs in a comprehensive cancer center. Cancer, 125, 4059.

Stover, A. M., et al. (2019). Using stakeholder engagement to overcome barriers to implementing patient-reported outcomes (PROs) in cancer care delivery: Approaches from 3 prospective studies. Medical Care, 57(Suppl 5), S92–S99.

Avery, K. N. L., et al. (2019). Developing a real-time electronic symptom monitoring system for patients after discharge following cancer-related surgery. BMC Cancer, 19(1), 463.

Albaba, H., et al. (2019). Acceptability of routine evaluations using patient-reported outcomes of common terminology criteria for adverse events and other patient-reported symptom outcome tools in cancer outpatients: Princess Margaret Cancer Centre experience. The Oncologist, 24, 0830.

Nielsen, L. K., et al. (2019). Strategies to improve patient-reported outcome completion rates in longitudinal studies. Quality of Life Research, 29, 335.

Harris, P. A., et al. (2009). Research electronic data capture (REDCap)—A metadata-driven methodology and workflow process for providing translational research informatics support. Journal of Biomedical Informatics, 42(2), 377–381.

Skovlund, P. C., et al. (2020). The development of PROmunication: A training-tool for clinicians using patient-reported outcomes to promote patient-centred communication in clinical cancer settings. Journal of Patient-Reported Outcomes, 4(1), 10.

Osborn, J., et al. (2020). Do mHealth applications improve clinical outcomes of patients with cancer? A critical appraisal of the peer-reviewed literature. Supportive Care in Cancer, 28(3), 1469–1479.

Warrington, L., et al. (2019). Electronic systems for patients to report and manage side effects of cancer treatment: Systematic review. Journal of Medical Internet Research, 21(1), e10875.

Jensen, R. E., et al. (2014). Review of electronic patient-reported outcomes systems used in cancer clinical care. Journal of Oncology Practice, 10(4), e215–e222.

Wells-Di Gregorio, S., et al. (2013). The James Supportive Care Screening: Integrating science and practice to meet the NCCN guidelines for distress management at a comprehensive cancer center. Psycho-Oncology, 22(9), 2001–2008.

Paskett, E. D., et al. (2012). The Ohio patient navigation research program: Does the American Cancer Society patient navigation model improve time to resolution in patients with abnormal screening tests? Cancer Epidemiology, Biomarkers & Prevention, 21(10), 1620–1628.

Buchanan, D. R., et al. (2005). Quality-of-life assessment in the symptom management trials of the National Cancer Institute-supported Community Clinical Oncology Program. Journal of Clinical Oncology, 23(3), 591–598.

Singh, J. A., et al. (2014). Normative data and clinically significant effect sizes for single-item numerical linear analogue self-assessment (LASA) scales. Health and Quality of Life Outcomes, 12, 187.

Andersen, B. L., et al. (2014). Screening, assessment, and care of anxiety and depressive symptoms in adults with cancer: An American Society of Clinical Oncology guideline adaptation. Journal of Clinical Oncology, 32(15), 1605–1619.

Ohio Department of Health. (2015). Cancer incidence surveillance system. Columbus, Ohio: Ohio Department of Health.

Acknowledgements

The program was supported by a grant from the Merck Foundation’s Alliance to Advance Patient-Centered Cancer Care. REDCap services were provided by the Recruitment, Intervention, and Survey Shared Resource (RISSR) of the Ohio State University Comprehensive Cancer Center (P30CA16058).

Author information

Contributions

Conceptualization: MN, RS, ML, EP, CD. Methodology: MN, JM, HL, CD. Formal analysis and investigation: JP, MN. Writing—original draft preparation: MN, CB, JM, HL, EP. Writing—review and editing: MN, JM, HL, CB, EP, RS, ML, CD, JP. Funding acquisition: MN and EP.

Ethics declarations

Conflict of interest

Electra Paskett held stock ownership in Pfizer during part of the conduct of this study. The other authors declare that they have no conflicts of interest relevant to this manuscript.

Ethical approval

All procedures involving patients were performed in accordance with the ethical standards of The Ohio State University Institutional Review Board and with the 1964 Declaration of Helsinki and its later amendments or comparable ethical standards.

Informed consent

Informed consent was not obtained from individual patients included in the study, because this was a quality improvement program that The Ohio State University Institutional Review Board (IRB) deemed exempt from human subjects review. All patients could decline participation in the clinical quality improvement program at any time.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Naughton, M.J., Salani, R., Peng, J. et al. Feasibility of implementing a text-based symptom-monitoring program of endometrial, ovarian, and breast cancer patients during treatment. Qual Life Res 30, 3241–3254 (2021). https://doi.org/10.1007/s11136-020-02660-w

DOI: https://doi.org/10.1007/s11136-020-02660-w