Abstract

A number of indications, such as the number of Nobel Prize winners, show Japan to be a scientifically advanced country. However, standard bibliometric indicators place Japan as a scientifically developing country. The present study is based on the conjecture that Japan is an extreme case of a general pattern in highly industrialized countries. In these countries, scientific publications come from two types of studies: some pursue the advancement of science and produce highly cited publications, while others pursue incremental progress and their publications have a very low probability of being highly cited. Although these two categories of papers cannot be easily identified and separated, the scientific level of Japan can be tested by studying the extreme upper tail of the citation distribution of all scientific articles. In contrast to standard bibliometric indicators, which are calculated from the total number of papers or from sets of papers in which the two categories of papers are mixed, in the extreme upper tail, only papers that are addressed to the advance of science will be present. Based on the extreme upper tail, Japan belongs to the group of scientifically advanced countries and is significantly different from countries with a low scientific level. The number of Clarivate Citation laureates also supports our hypothesis that some citation-based metrics do not reveal the high scientific level of Japan. Our findings suggest that Japan is an extreme case of inaccuracy of some citation metrics; the same drawback might affect other countries, although to a lesser degree.

1 Introduction

Citation bibliometrics is a widely used and well-established procedure for research assessment (van Raan 2019); it is used by the US National Science Board (e.g., National Science Board 2020), the European Commission (e.g., European Commission 2022), and the CWTS of Leiden (e.g., https://www.leidenranking.com/ranking/2021), among other well-known institutions. Despite this and multiple demonstrations of its accuracy, bibliometric assessment of Japan fails to identify its unquestionably high research level. This problem is clearly summarized in a review by Pendlebury (2020, p. 134):

National science indicators for Japan present us with a puzzlement. How can it be that an advanced nation, a member of the G7, with high investment in R&D, a total of 18 Nobel Prize recipients since 2000, and an outstanding educational and university system looks more like a developing country than a developed one by these measures? The citation gap between Japan and its G7 partners is enormous and unchanging over decades. Japan’s underperformance in citation impact compared to peers seems unlikely to reflect a less competitive or inferior research system to the degree represented.

Among the reasons proposed by several authors to explain the poor bibliometric performance of Japan, Pendlebury (2020, p. 134) highlights “modest levels of international collaborations and comparatively low levels of mobility” and “Japan’s substantial volume of publication in nationally oriented journals that have limited visibility and reduced citation opportunity.” If these explanations are correct, citation-based metrics, which are widely used, could not be used in Japan for predicting the contribution to the advancement of knowledge.

There are also other explanations, based on calculation failures, that might affect all countries. Inadequate normalization procedures (Bornmann and Leydesdorff 2013) are the most evident failure, but even if normalization is correct, the mix of different types of research might still be the cause. Research focused on different objectives produces different citation distributions with different probabilities of achieving highly cited publications; after aggregation, in the resulting citation distribution, the visibility of the research with a higher probability of being highly cited may be obscured.

This might occur in Japan because a significant amount of its research activity is applied to achieving incremental innovations of processes and products. This type of research is unlikely to receive a high number of citations and might conceal the research that is focused on pushing the boundaries of knowledge (Rodríguez-Navarro and Brito 2021a). Therefore, frequently used bibliometric indicators, such as the top 10% and 1% of cited papers, would be lower than expected from the size of the system if all publications were for the advancement of science. Consequently, Japan appears as a developing country. If it were possible to separate the papers on incremental innovations from the papers that aim to push the boundaries of knowledge, the bibliometric analysis of the latter would reveal the real, high scientific level of Japan.

This last cause has a general character and would affect all countries. In this case, Japan would be only an extreme and visible case. In other countries the effects might remain unnoticed, because either the causes are less relevant or they affect many countries similarly. Consequently, the study of the causes of the poor results of citation-based metrics in Japan is of general interest in scientometrics. The results would be especially relevant if the causes of Japan’s erroneous assessments affected most countries and were avoidable by improving the metrics.

2 Aim of this study

The aim of this study is to demonstrate that the apparent poor performance of Japan in national science indicators can be corrected with appropriate citation-based metrics, which would place Japan at the same level as other developed countries. We conjecture that inaccurate research evaluations of Japan are not the result of specific publication habits, but rather constraints of common citation metrics in describing complex research systems in which two research systems coexist, each pursuing different objectives. If this conjecture is correct, inaccurate research assessments may occur in many countries, among which Japan is only an extreme and noticeable case.

Our working hypothesis is that the number of publications that push the boundaries of knowledge in Japan is comparable to the numbers in other advanced countries, but that using standard bibliometric indicators their weight is concealed by a large proportion of publications that are lowly cited. Unfortunately, it is not feasible to identify the publications that push the boundaries of knowledge, but, alternatively, the scientific level of Japan will be correctly identified if the number of papers in the extreme upper tail of the citation distribution of Japan is similar to those of scientifically advanced countries, and not to those of scientifically developing countries.

Before addressing this hypothesis we investigated if the citation distribution of Japanese publications is different from those from other countries, especially the USA for its dominant role in science and technology.

3 Materials and methods

3.1 Data retrieval

Publication data and number of citations were retrieved from the Web of Science (WoS) Core Collection database (Clarivate Analytics), Edition: Science Citation Index Expanded, using the Advanced Search feature.

In the first part of this study, we obtained the citation distributions for publications from Japan, the USA, and Germany in the topics (TS=) of semiconductors and lithium batteries. The citation window was 2020–2022, and the publication window was separated from the citation window by approximately six years. The number of years of the publication window was chosen so that the number of publications was similar in all cases, around 1000, but varied across cases due to the variability in the number of publications per year. In lithium batteries: Germany, 2010–2014; the USA, 2011–2012; Japan, 2011–2013. In semiconductors: Germany, 2013–2014; the USA, 2014; Japan, 2014. We would like to emphasize that the selection of the citation and publication windows does not affect the qualitative analysis regarding the proportion of papers that are uncited or have a very low number of citations, which is largely independent of these windows.

The second part of the study was addressed to identify very highly cited papers and, because of the infrequency of these publications, we used a publication window of 10 years and selected the “Research Areas” of WoS (SU=): chemistry, physics, polymer science, meteorology & atmospheric sciences, biochemistry & molecular biology, biotechnology & applied microbiology, cell biology, general & internal medicine, genetics & heredity, energy & fuels, engineering, materials science, and science & technology - other topics.

We restricted the search to “Articles,” because of the abundance of review papers in the extreme upper tail (Miranda and Garcia-Carpintero 2018) that are unlikely to be a source of knowledge. We also recorded only domestic papers. Although fractional counting is recommended for ranking purposes (Waltman and van Eck 2015), international collaborations “create false impression of the real contribution of countries” (Zanotto et al. 2016, p. 1790) by increasing the number of highly cited papers (Allik et al. 2020), most probably in different proportions across countries. More importantly, because it has been proposed that the low citation-based performance of Japan is due to the low visibility of its publications (Sect. 1), international collaborations could hide this problem, at least partially, making it difficult to investigate.

To retrieve domestic papers, we created a list of the 50 countries with the highest number of publications, which publish 98% of all the papers in the selected research areas. The country query (CU=) was constructed with one country while excluding all others. We used a time span of 10 years (2008–2017) so that small countries with competitive research could be studied; the total number of papers was 5,922,804. As explained above, our study is centered on publications that occur with very low frequency.
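The construction of the domestic-paper query described above can be sketched as follows. This is only an illustration: the function name `domestic_query`, the quoting of multi-word country names, and the exact boolean layout are assumptions, since the text gives only the field tag (CU=) and the principle of one country with all others excluded.

```python
def _term(name: str) -> str:
    """Quote multi-word country names so they are searched as a phrase (assumed convention)."""
    return f'"{name}"' if " " in name else name


def domestic_query(country: str, all_countries: list[str]) -> str:
    """Build a search string for papers from `country` with every other listed country excluded.

    Hypothetical helper: the authors' exact query text is not given in the paper.
    """
    others = [c for c in all_countries if c != country]
    if not others:
        # Nothing to exclude, so the query is just the country itself.
        return f"CU=({_term(country)})"
    excluded = " OR ".join(_term(c) for c in others)
    return f"CU=({_term(country)}) NOT CU=({excluded})"


if __name__ == "__main__":
    # Short illustrative list; the study used the 50 most productive countries.
    print(domestic_query("Japan", ["USA", "Japan", "Germany"]))
```

In the study, the exclusion list would contain the other 49 countries, and the query would be combined with the research-area (SU=), document-type, and publication-year restrictions.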

3.2 Calculation of the Ptop 10%/P ratio

The Ptop 10%/P ratio was used to confirm that Japan looks like a developing country under the terms of our search. However, in a period of 10 years, the large number of global papers in our list did not allow its calculation from the limited list of papers offered by Clarivate Analytics. Therefore, we calculated the Ptop 1%/P ratio and obtained the Ptop 10%/P ratio by using the following equation (Rodríguez-Navarro and Brito 2019, 2021b):

$$\frac{P_{\mathrm{top\,10\%}}}{P} = \left(\frac{P_{\mathrm{top\,1\%}}}{P}\right)^{1/2} \qquad (1)$$

For comparison, we also calculated the Ptop 10%/P ratio in a single year.
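As a minimal sketch of this calculation, the Ptop 10%/P ratio can be estimated as the square root of the Ptop 1%/P ratio, as described in Sect. 4.2. The function name and the input checks are illustrative assumptions, not part of the original method.

```python
import math


def top10_ratio_from_top1(p_top1: int, p_total: int) -> float:
    """Estimate Ptop 10%/P as the square root of Ptop 1%/P.

    p_top1:  number of the country's papers in the global top 1% by citations
    p_total: total number of the country's papers
    """
    if p_total <= 0:
        raise ValueError("p_total must be positive")
    if not 0 <= p_top1 <= p_total:
        raise ValueError("p_top1 must lie between 0 and p_total")
    return math.sqrt(p_top1 / p_total)


if __name__ == "__main__":
    # Hypothetical numbers: 100 of 10,000 papers in the global top 1%.
    print(top10_ratio_from_top1(100, 10_000))
```

A country whose papers are distributed exactly like the world's would have Ptop 1%/P = 0.01 and therefore an estimated Ptop 10%/P of 0.1.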

3.3 Upper tail analyses

In our initial analysis of the high citation tails, we used the number of citations within the three-year window 2018–2020 for the publications within the 10-year window 2008–2017. This timeframe enabled us to study the 15 selected countries (Sect. 3.1). First, we recorded the number of country papers in the global list of the most cited papers (starting with the highest), considering the top 5000, 2000, 1000, and 500 papers. This method is equivalent to percentile metrics for very narrow percentiles (e.g., the top 5000 papers correspond to the top 0.084% most cited papers). Next, to conduct a more complete analysis of these tails, we studied the double rank plot in each country (Rodríguez-Navarro and Brito 2018a). To obtain the double rank of each paper, in both the global and local lists, papers were ranked according to their number of citations in descending order. The same paper in both lists was identified by its title and characterized by its ranks in both lists. Double rank plots follow a power law, which is also obtained when the number of papers is plotted against the top percentiles (Brito and Rodríguez-Navarro 2018).
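The two steps described above (counting a country's papers among the global top N, and assigning each paper a local and a global rank) can be sketched as follows. The `Paper` record, the function names, and the handling of ties (ties keep their input order) are assumptions made for illustration; the sketch also uses a single list with a country field instead of matching papers by title across two lists.

```python
from typing import NamedTuple


class Paper(NamedTuple):
    title: str
    country: str
    citations: int


def double_ranks(papers: list[Paper], country: str) -> list[tuple[int, int]]:
    """Return (local_rank, global_rank) pairs for one country's papers.

    Both ranks start at 1 for the most cited paper.
    """
    ordered = sorted(papers, key=lambda p: p.citations, reverse=True)
    pairs = []
    local_rank = 0
    for global_rank, paper in enumerate(ordered, start=1):
        if paper.country == country:
            local_rank += 1
            pairs.append((local_rank, global_rank))
    return pairs


def count_in_global_top(papers: list[Paper], country: str, n: int) -> int:
    """Number of the country's papers among the n most cited papers overall."""
    return sum(1 for _, g in double_ranks(papers, country) if g <= n)


def top_n_as_percentile(n: int, total: int) -> float:
    """Express 'top n papers' as a percentage of all papers."""
    return 100.0 * n / total


if __name__ == "__main__":
    # The top 5000 of the 5,922,804 papers in the study, as a percentile.
    print(round(top_n_as_percentile(5000, 5_922_804), 3))
```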

In the double rank power law, the last few data points of countries often deviate from the trend of the rest of the data points (Rodríguez-Navarro and Brito 2018a). Therefore, to address this issue without losing the valuable information that can be derived from double rank analysis, we recorded four datasets from the extreme upper tail: the global rank of papers in local ranks 1, 15, and 40, and the constant ep calculated from the data points with local ranks between 15 and 40. This constant was calculated from the fitted power laws (Rodríguez-Navarro and Brito 2018b). The constant ep equals 0.1 raised to a power that is the exponent of the power law. In a statistically fit double rank power law, the constant ep equals Ptop 10%/P. Therefore, when only Ptop 10% and P are known, Ptop 10%/P is a proxy of ep. If a country had the two categories of papers described in Sect. 2, the constant ep of the data points in the extreme upper tail would be different from the constant ep of the majority of the papers.

It is important to note that a power-law fit to 25 data points (from ranks 15 to 40) that vary by less than two orders of magnitude in the global rank must be taken with caution (Clauset et al. 2009). However, the differences in the constant ep that are significant for our study are much larger than any possible fitting error.
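The calculation of the constant ep described in this section can be sketched as below, under several stated assumptions. The text does not say which rank is the independent variable or which fitting procedure was used; this sketch assumes local rank is modelled as a power of global rank and uses ordinary least squares on the log-log data. With that orientation, ep = 0.1^α coincides with Ptop 10%/P for an ideal power law covering all papers, which matches the property stated above. The function name `fit_ep` and the inclusive rank bounds are also assumptions.

```python
import math


def fit_ep(pairs: list[tuple[float, float]], lo: int = 15, hi: int = 40) -> tuple[float, float]:
    """Fit log10(local_rank) = a + alpha * log10(global_rank) and return (alpha, ep).

    pairs: (local_rank, global_rank) tuples for one country.
    Only points with lo <= local_rank <= hi are used (inclusive bounds, an assumption).
    ep is computed as 0.1 ** alpha.
    """
    pts = [(math.log10(g), math.log10(l)) for l, g in pairs if lo <= l <= hi]
    if len(pts) < 2:
        raise ValueError("need at least two points in the selected rank range")
    n = len(pts)
    mean_x = sum(x for x, _ in pts) / n
    mean_y = sum(y for _, y in pts) / n
    sxx = sum((x - mean_x) ** 2 for x, _ in pts)
    if sxx == 0:
        raise ValueError("global ranks in the selected range are all identical")
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in pts)
    alpha = sxy / sxx  # least-squares slope in log-log space
    return alpha, 0.1 ** alpha


if __name__ == "__main__":
    # Synthetic, exactly power-law data with exponent 0.8 (hypothetical values).
    synthetic = [(l, (l / 0.05) ** (1 / 0.8)) for l in range(1, 61)]
    print(fit_ep(synthetic))
```

If the opposite orientation were used (global rank as a power of local rank), the fitted exponent would be the reciprocal, so the orientation has to be fixed before comparing ep values across countries.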

3.4 Nobel prizes and citation laureates

A notable contrast in the research evaluation of Japan occurs between standard bibliometric indicators and the number of Nobel Prizes (Pendlebury 2020). Therefore, we complemented our bibliometric study by recording the number of Citation laureates (https://clarivate.com/citation-laureates/hall-of-citation-laureates/, visited on 11/10/2021) and the number of Nobel Prizes. Because in several cases the country where the prize-winning work was done and the country where the prize was awarded are different (Schlagberger et al. 2016), we used the list of where the prize-winning work was done.

4 Results

4.1 Citation distributions

As explained in Sect. 1, two causes have been given to explain the low citation-based performance of Japan: a high proportion of technological research and the low visibility of Japanese publications. Both causes can affect many citation-based metrics, but it is unlikely that they affect the number of very highly cited publications reporting breakthroughs. Therefore, our working hypothesis is that the number of publications that push the boundaries of knowledge in Japan is comparable to the numbers in other advanced countries, but that under standard bibliometric indicators their significance is obscured by a large proportion of other publications that are cited infrequently (Sect. 2).

As a first step to test our hypothesis, we investigated the distribution of citations of Japanese publications in two technologies, semiconductors and lithium batteries, in which Japanese researchers obtained Nobel Prizes in 2014 and 2019, respectively. We studied the data plots using logarithmic binning, along with the numbers of papers that were uncited and those with one and two citations (Fig. 1). In both technologies, it is evident that the numbers of these publications are much higher than would be expected from lognormal distributions. Even the number of publications with 3–4 citations is much higher than expected from the lognormal distribution. These results are consistent with our working hypothesis (Sect. 2). It appears that two distributions overlap: a lognormal distribution (a normal distribution with logarithmic binning) and a distribution with a progressive decrease in the number of papers as the number of citations increases from zero to four. For comparison, we also considered two other countries: Germany and the USA (Fig. 2). In these two countries, the distributions are qualitatively similar to those of Japan, although with significant quantitative differences. For example, in the distribution of the US publications in lithium batteries, the proportion of publications with a very low number of citations is very low.

Fig. 1 Plots of the number of articles versus the citations received for papers produced by Japan in two leading technologies: semiconductors and lithium batteries. Solid lines are nonlinear curve fits of lognormal distributions to the data for papers with more than 5 citations. Search conditions are described in the text
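The binning used for these plots can be sketched as follows. The exact bin edges are not given in the text; this sketch assumes separate bins for 0, 1, and 2 citations followed by base-2 logarithmic bins (3–4, 5–8, 9–16, and so on), which is consistent with the "3–4 citations" bin mentioned above but remains an assumption, as does the function name.

```python
from collections import Counter


def log_binned_counts(citations: list[int]) -> dict[tuple[int, int], int]:
    """Count papers per citation bin.

    Bins (inclusive bounds): (0, 0), (1, 1), (2, 2), then (3, 4), (5, 8), (9, 16), ...
    Returns a dict mapping (low, high) to the number of papers, sorted by bin.
    """
    counts: Counter = Counter()
    for c in citations:
        if c < 0:
            raise ValueError("citation counts cannot be negative")
        if c <= 2:
            low, high = c, c
        else:
            # Find the smallest power of two (from 4 upward) that is >= c.
            high = 4
            while high < c:
                high *= 2
            low = high // 2 + 1
        counts[(low, high)] += 1
    return dict(sorted(counts.items()))


if __name__ == "__main__":
    # Hypothetical citation counts for a handful of papers.
    for (low, high), n in log_binned_counts([0, 0, 1, 2, 3, 4, 5, 8, 9, 16, 17]).items():
        label = str(low) if low == high else f"{low}-{high}"
        print(label, n)
```

Plotting these counts against the bin positions on a logarithmic citation axis makes a lognormal population appear as a bell-shaped curve, so an excess of papers in the lowest bins stands out visually.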

These results show that Japan is not qualitatively different from the USA and Germany in terms of the papers with a medium or high number of citations. The difference lies in the proportion of publications that are uncited or have a very low number of citations. These simple comparisons suggest that counting the number of publications in the extreme upper tail of the citation distribution might provide a way to evaluate the contribution of Japan to the progress of knowledge.

4.2 Standard citation metrics

Before focusing our analysis on the very highly cited publications in several research areas (Sect. 3.1), we checked whether, under our search conditions and evaluating by standard citation metrics, Japan was classified as a developing country. For this purpose, a convenient indicator was the Ptop 10%/P ratio.

Fig. 2 Plots of the number of articles versus the citations received for papers produced by Germany and the USA in semiconductors and lithium batteries. Solid lines are nonlinear curve fits of lognormal distributions to the data for papers with more than 5 citations. Search conditions are described in the text

Unfortunately, in our 10-year window, the number of papers in the global top 10% of cited papers is very large and it is not possible to calculate the Ptop 10%/P ratio using the papers listed by Clarivate Analytics. Therefore, we calculated the Ptop 10%/P ratio within the 10-year window by taking the square root of the Ptop 1%/P ratio (Eq. 1). Additionally, for comparison, we also examined the Ptop 10%/P ratio for a single year, specifically 2012, which falls in the middle of the 10-year window. The disparities between the two calculations of Ptop 10%/P ratio can be attributed to deviations of percentile data from the theoretical power law (Brito and Rodríguez-Navarro 2018). These deviations are not relevant to this study. Nevertheless, assuming the deviation of the power law that is inherent to our hypothesis regarding the two distributions of papers, the calculation of the Ptop 10%/P ratio from Ptop 1%/P will lead to an improved ranking for Japan, as the Ptop 1% is positioned higher in the upper tail than the Ptop 10%.

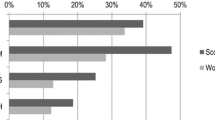

Table 1 displays the results for a selection of 15 countries under the conditions of our study. As expected, Japan is at the bottom of the table. In a single-year window (2012), the Ptop 10%/P ratio in Japan was 0.059, similar to that of India, 0.056, much lower than those of the USA, Switzerland, the Netherlands, and the UK, all in the range of 0.121–0.153, and lower even than that of Italy, 0.073. These results are consistent with the Ptop 10%/P ratios in the 10-year window.

These results confirm that, based on the Ptop 10%/P ratio, Japan does not look like a highly industrialized developed country. However, it is worth noting that, in the research areas evaluated in this study, domestic papers from other countries such as Germany, France, and Canada also have low indicator values. Therefore, we checked the accuracy of these results by performing an analysis in InCites Benchmarking and Analytics (Clarivate Analytics), reproducing the conditions of our analysis performed using the Advanced Search feature. The Ptop 10%/P ratios in Table 1 were similar to those found from the InCites analysis (results not shown).

4.3 Number of papers in the extreme upper tail

Next, we analyzed the presence of each country in the upper tail of world publications by recording the numbers of papers among the global top 5,000, 2,000, 1,000, and 500 most-cited papers (Table 2). Japan is the sixth country by number of papers in the top 5,000, 1,000, and 500, and fifth in the top 2,000, ahead of countries with top positions in international scientific rankings, including Switzerland, France, and the Netherlands. It is worth noting that the results presented in Table 2 depend on the probability that the research system has of publishing a highly cited paper and on the size of the system that pursues the advancement of knowledge. This size remains unknown if a part of the whole research system pursues incremental innovations for the national industry (Sect. 2).

Ignoring this difficulty, an intuitive approach is to obtain the ratios between the numbers of top and total papers in each country (Table 3). According to these ratios, Japan is 10th in the global top 5,000, 2,000, and 1,000 papers, and ninth in the global top 500 papers. Although according to this ratio Japan still keeps a reasonable position among developed countries, its decline in the ranking is evident.

4.4 Double rank analysis

Next, we studied the double rank plot of the publications in the high citation tail (Sect. 3.3). First, we recorded the global ranks of the papers in country ranks 1, 15, and 40. Then we fit 25 data points to the power law (local ranks 15 to 40) and calculated the constant ep from the exponent of the power law; Table 4 presents the results. It is worth noting that these global ranks of the country papers are size dependent, while the constant ep is size independent.

According to the global ranks, the USA shows the best performance (ranks 6-48-123), better than the UK and Germany (ranks 5-342-1192 and 3-198-1224, respectively), but with 5.5 and 4.1 times more publications than the UK and Germany, respectively. China publishes 1.3 times more papers than the USA, but its ranks (40-473-1233) demonstrate a worse performance than the USA. Germany publishes 1.4 times more papers than France, but this difference in size may not be sufficient to explain the notable differences in global rankings (3-198-1224 versus 33-1320-3946). Japan publishes twice as many papers as France and shows better global ranks (16-759-3092 versus 33-1320-3946), which could be explained by the difference in size. India publishes 1.8 times more papers than France, but despite this difference, its global ranks are worse than those of France (858-4487-8795 versus 33-1320-3946). Japan publishes only 11% more papers than India, but its global ranks have much lower values (16-759-3092 versus 858-4487-8795), which indicates a much better performance of Japan, in contrast with the data in Table 1.

The comparisons of the constant ep are easier to interpret because this constant is size independent. In this case, Japan lies in second position after Switzerland, matched with the Netherlands and ahead of France, Germany, Australia, the UK and the USA.

4.5 Citation laureates

Our citation-based study contradicts common research rankings and reveals the high scientific level of Japan. This is corroborated by other approaches that do not rely on common citation metrics. The number of Nobel laureates is a prominent example, but Clarivate Analytics provides further evidence. More than 50 years ago, Eugene Garfield established a connection between Nobel Prizes and a very high number of citations (Garfield and Malin 1968; Garfield and Welljams-Dorof 1992). More recently, based on citations, Thomson Reuters and Clarivate Analytics have published lists of candidates to win the Nobel Prize; currently the list of Citation laureates from 2002 to 2021 is available (https://clarivate.com/citation-laureates/hall-of-citation-laureates/, visited on 11/10/2021). The selection of Citation laureates is based on a very high number of citations and the resulting list is further refined with other criteria. Table 5 shows that the high position of Japan in Citation laureates is consistent with its high position in Nobel Prizes.

5 Discussion

Standard citation-based metrics do not identify Japan to be the scientifically advanced country that it is (Pendlebury 2020); we conjecture that the failure might be due to the existence of two categories of papers, one with a low probability of being highly cited that prevents citation-based metrics from revealing the relevance of the other category of papers with a higher probability of being highly cited (Sects. 1 and 2).

Our first step to investigate this failure was to characterize the citation distribution of Japanese publications in two technological fields in which Japan won Nobel Prizes in recent years: semiconductors and lithium batteries. Therefore, it can be assumed that Japan’s contribution to the progress of knowledge in these two fields has to be high, comparable or even superior to that of other developed countries; a reliable citation metric should reveal this conclusion.

Although it has been proposed that citations to scientific publications show a universal lognormal distribution (Radicchi et al. 2008; reviewed by Golosovsky 2021), Waltman and van Eck (2012) have shown that this claim is not warranted for all research fields. Our results show that citations to publications in two research fields that are highly cited and in which Nobel Prizes are awarded do not show a lognormal distribution of citations (Figs. 1 and 2). There are apparently two populations of papers; above a certain number of citations the distribution of citations seems to be lognormal, but for zero and low numbers of citations, the number of papers shows an obvious decrease when the number of citations increases.

The causes of these two populations of publications are beyond the scope of this study, but the shape of these complex distributions alone shows that the most common bibliometric indicators are misleading. It seems clear that dividing the number of papers with high or medium numbers of citations (e.g., Ptop 1% or Ptop 10%) by the total number of papers is not going to provide reliable information about the real probability that a country has of achieving important breakthroughs. Comparison of countries using these ratios will produce reliable results only if their citation distributions are similar, but not if they are very different. Everything seems to indicate that in Japan the proportion of publications in which the citation distribution is lognormal is smaller than in other countries, which suggests that indicators such as Ptop 10%/P and Ptop 1%/P will not produce a reliable comparison of Japan with other countries.

Overcoming these bibliometric difficulties in evaluating the contribution to the progress of knowledge would require identifying the population of papers whose citation distribution is lognormal, which is currently impossible. Therefore, we centered our study on the upper tail of the citation distribution, where the population with almost zero probability of being highly cited will be absent. We selected a series of research areas that include both technological and scientific papers and a long period (10 years), to obtain information about publications that are infrequent. Before addressing the key question, we checked that under the conditions considered herein and consistently with many previous studies (Pendlebury 2020), Japan is classified as a scientifically developing rather than advanced country. For this purpose, we used the common bibliometric indicator Ptop 10%/P ratio (Table 1). Our general analysis based on the Ptop 10%/P ratio indicates that Japan looks like a developing country, in contradiction with its high research success that is deduced from the number of Nobel Prizes (Pendlebury 2020; Schlagberger et al. 2016).

In the first analysis of the extreme upper tail of the citation distribution, we used the number of papers from a country that make it into the global top 5,000, 2,000, 1,000 and 500 papers (Table 2). In addition, because the numbers of these top papers are obtained from very different numbers of total papers, we also calculated the ratios between the number of these papers and the total number of papers (Table 3). According to the number of papers, Japan is fifth or sixth in the list of 15 countries, ahead of Switzerland and the Netherlands; based on the ratio with respect to the total number of publications, Japan drops to 10th or 11th position. This does not imply that Japan’s research that is addressed to the progress of science is not highly efficient. In fact, it is what would be expected if our hypothesis were correct because the total number of papers includes those with almost zero or extremely low probability of being highly cited.

Due to the complexity of the citation distributions shown in Figs. 1 and 2, the only conclusion that can be drawn from the data presented in Tables 2 and 3 is that Japan’s contribution to the progress of knowledge is much greater than that obtained from the analysis of the data in Table 1.

In the second approach we used the double rank analysis. For a country or institution, the plot of the local rank of its papers versus their global rank (in both cases, the most cited papers rank first) is a power law. The problem with this approach is that the last few data points of citation upper tails are noisy (Rodríguez-Navarro and Brito 2018a). Therefore, we fitted the power law to the data points between ranks 15 and 40 in all countries. Furthermore, to provide another view of the competitiveness of the countries, we also recorded the global rank of those papers whose local ranks are 1, 15 and 40 (Table 4). The smaller these values, the higher the contribution of the country to the progress of knowledge. It is worth noting that the global ranks of these papers are size dependent while the constant ep of the power law is size independent.

According to the global rank of the paper ranking first in the local list, Japan lies in fourth position after Germany, the UK, and the USA; in the global ranks of papers in positions 15 and 40, Japan is fifth after the same countries plus China. On the basis of the constant ep, Japan and the Netherlands have the same value, the highest after that of Switzerland.

Taken together, these analyses of the extreme upper tail of the citation distribution indicate that Japan is unquestionably a scientifically advanced country, which is consistent with other types of assessments (Table 5). Our analyses of the extreme upper tail do not allow the production of a ranking of countries, but this was not our intention. The purpose of our study was to investigate whether the failure of citation-based metrics with Japan is due to a general failure produced by the low visibility of Japan’s scientific publications (Sect. 1) or a failure of the citation-based metrics that are normally used. The results indicate that a general failure can be ruled out and that appropriate metrics could correctly evaluate Japan. To explain the failure of some citation-based metrics, the hypothesis of the two types of paper categories is consistent with the results presented in Figs. 1 and 2.

6 Implications

The failure of common citation metrics to reveal the high scientific level of Japan suggests that similar shortcomings may exist in other countries. In some countries, this might be due to a significant proportion of research aimed at incremental innovations. In others, it may occur because a certain proportion of research is focused on teaching or simply lacks competitiveness. Regardless of the reason, the key point is that in country rankings, the existence of these types of research should not overshadow the research that advances the boundaries of knowledge, if such research exists.

Our results indicate that this issue can be addressed by designing citation metrics that focus on the extreme upper tail of the citation distribution. This does not imply excluding common citation metrics, but rather complementing them to provide comprehensive information, which can be crucial for accurate research assessment in certain countries.

References

Allik, J., Lauk, K., Realo, A.: Factors predicting the scientific wealth of nations. Cross Cult. Res. 54, 364–397 (2020)

Bornmann, L., Leydesdorff, L.: Macro-indicators of citation impacts of six prolific countries: InCites data and the statistical significance of trends. PLoS ONE 8, e56768 (2013)

Brito, R., Rodríguez-Navarro, A.: Research assessment by percentile-based double rank analysis. J. Informetrics. 12, 315–329 (2018)

Clauset, A., Shalizi, C.R., Newman, M.E.J.: Power-law distributions in empirical data. SIAM Rev. 51, 661–703 (2009)

European Commission: Science, Research and Innovation Performance of the EU. Building a Sustainable Future in Uncertain Times. Publication Office of the EU, Luxembourg (2022)

Garfield, E., Malin, M.V.: Can Nobel Prize winners be predicted. 135th Annual Meeting, American Association for the Advancement of Science, Dallas, Texas, December 26–31 (1968)

Garfield, E., Welljams-Dorof, A.: Of Nobel class: A citation perspective on high impact research authors. Theoret. Med. 13, 117–135 (1992)

Golosovsky, M.: Universality of citation distributions: A new understanding. Quant. Sci. Stud. 2, 527–543 (2021)

Miranda, R., Garcia-Carpintero, E.: Overcitation and overrepresentation of review papers in the most cited papers. J. Informetrics. 12, 1015–1030 (2018)

National Science Board, N. S. F.: Science and Engineering Indicators 2020: The State of U.S. Science and Engineering. NSB-2020-1. Alexandria, VA: (2020)

Pendlebury, D.A.: In: Daraio, C., Glänzel, W. (eds.) When the data don’t mean what they say: Japan’s Comparative Underperformance in Citation Impact. The Art of Metrics-based Research Assessment, Evaluative Informetrics (2020)

Radicchi, F., Fortunato, S., Castellano, C.: Universality of citation distributions: Toward an objective measure of scientific impact. Proc. Natl. Acad. Sci. USA. 105, 17268–17272 (2008)

Rodríguez-Navarro, A., Brito, R.: Double rank analysis for research assessment. J. Informetrics. 12, 31–41 (2018a)

Rodríguez-Navarro, A., Brito, R.: Technological research in the EU is less efficient than the world average. EU research policy risks Europeans’ future. J. Informetrics. 12, 718–731 (2018b)

Rodríguez-Navarro, A., Brito, R.: Probability and expected frequency of breakthroughs – basis and use of a robust method of research assessment. Scientometrics. 119, 213–235 (2019)

Rodríguez-Navarro, A., Brito, R.: The link between countries’ economic and scientific wealth has a complex dependence on technological activity and research policy. Scientometrics. 127, 2871–2896 (2021a)

Rodríguez-Navarro, A., Brito, R.: Total number of papers and in a single percentile fully describes research impact-revisiting concepts and applications. Quant. Sci. Stud. 2, 544–559 (2021b)

Schlagberger, E.M., Bornmann, L., Bauer, J.: At what institutions did Nobel laureates do their prize-winning work? An analysis of bibliographical information on Nobel laureates from 1994 to 2014. Scientometrics. 109, 723–767 (2016)

van Raan, A.F.J.: In: Glänzel, W., Moed, H.F., Schmoch, U., Thelwall, M. (eds.) Measuring Science: Basic Principles and Application of Advanced Bibliometrics. Springer Handbook of Science and Technology Indicators (2019)

Waltman, L., van Eck, N.J.: Field-normalized citation impact indicators and the choice of an appropriate counting method. J. Informetrics. 9, 872–894 (2015)

Waltman, L., van Eck, N.J., van Raan, A.F.J.: Universality of citation distributions revisited. J. Am. Soc. Inform. Sci. 63, 72–77 (2012)

Zanotto, S.R., Haeffner, C., Guimaraes, J.A.: Unbalanced international collaboration affects adversely the usefulness of countries’ scientific output as well as their technological and social impact. Scientometrics. 109, 1789–1814 (2016)

Funding

This work was supported by the Spanish Ministerio de Ciencia e Innovación [grant number PID2020-113455GB-I00].

Open Access funding provided thanks to the CRUE-CSIC agreement with Springer Nature.

Author information

Contributions

AR-N: conceptualization, methodology, and writing original draft preparation and editing; RB: methodology, writing original draft preparation and editing, and funding acquisition.

Ethics declarations

Competing interests

The authors have no competing interests to declare that are relevant to the content of this article.

Additional information

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Rodríguez-Navarro, A., Brito, R. The extreme upper tail of Japan’s citation distribution reveals its research success. Qual Quant (2024). https://doi.org/10.1007/s11135-024-01837-6

DOI: https://doi.org/10.1007/s11135-024-01837-6