Abstract

The rise in longevity in the twentieth century has led to a growing interest in modeling mortality, and new advanced techniques such as machine learning have recently joined more traditional models, such as the Lee–Carter or the Age Period Cohort. However, the performances of these models, in terms of fitting to the observed data, are difficult to compare in a unified framework, because the goodness-of-fit measures summarizing the discrepancy between the model estimates and the observed values differ between traditional mortality models and machine learning. We therefore employ a new technique, Contrast trees, which, leveraging decision trees, provides a general approach for evaluating the quality of fit of different kinds of models by detecting the regions in the input space where models work poorly. Once the low-performance regions are detected, we use Contrast boosting to reduce the inaccuracies of the mortality estimates provided by each model. To verify the ability of this approach, we consider both standard stochastic mortality models and machine learning algorithms in the estimation of the Italian mortality rates from the Human Mortality Database. The results are discussed using both graphical and numerical tools, with particular attention to the high-error regions.

1 Introduction

Since 1980, innovative approaches and developments in mortality modeling have been constantly proposed. Mortality analysis has received a considerable contribution from statistical science, building solid foundations for the evolution of mortality methods. Estimating longevity is not a trivial task; accuracy depends on the particular situation or trends, and figuring out if or when a certain method will be effective is not straightforward. Indeed, new mortality models keep appearing in the literature, but it may take years before they can be fully evaluated. As stated by Booth and Tickle (2008), the accuracy of mortality estimates should be regularly tested to provide evidence of improvement. Researchers appear to be more focused on the technical progress of a method, and on minimizing bias, than on the accuracy of the estimates it provides.

Several approaches have been used to model the mortality surface, determining how death rates change over time. Until the 1980s, relative simplicity and personal judgment were common features of mortality models (see Pollard (1987) for a detailed review on this aspect). Since then, mortality data have become more easily available, which, jointly with the development of a wider array of statistical-mathematical tools, led to the creation of increasingly complex mortality models. According to Booth and Tickle (2008), three main paradigms for demographic modeling can be identified. The first one (explanation) is based on structural or epidemiological models from certain causes of death. A widely known example is the connection between lung cancer and tobacco smoking. Expectation, the second paradigm, relies on expert opinion and involves varying degrees of formality. Finally, making use of the regular structures typically found in age patterns and trends over time leads to the widely adopted extrapolative paradigm. This last approach includes the more complex stochastic mortality models such as the Lee–Carter (Lee and Carter 1992) and, more generally, the Generalized Age Period Cohort (GAPC) model. Despite the Lee–Carter model being recognized as one of the most influential proposals for mortality modeling and forecasting, in recent years scholars have suggested additional approaches that sparked interest in actuarial and demographic literature (Brouhns et al. 2002; Renshaw and Haberman 2006; Cairns et al. 2006, 2009).

Although models like the Lee–Carter and its variants have been widely used, becoming a benchmark for many newly proposed methodologies, they present several shortfalls. In this line, Cairns et al. (2008) tried to address the issue of what would be the best way to estimate mortality, setting out criteria that a good mortality model should satisfy. They referred to good-practice guidelines such as consistency with historical data and biologically reasonable long-term dynamics. Following this line of research, the recent longevity literature has stimulated the use of machine learning techniques in demographic research, allowing the integration of stochastic models into a data-driven approach.

Machine learning techniques can support and integrate traditional mortality models in order to significantly reduce forecasting errors, both for application and research purposes. The main contributions in this area, from Deprez et al. (2017), Levantesi and Pizzorusso (2019), and Levantesi and Nigri (2020), take advantage of machine learning algorithms to improve the fitting accuracy of canonical models. That is to say, the mortality surface produced by a standard stochastic mortality model is corrected by adjusting the mortality rates estimated by the original model. Such adjustments are obtained by calibrating a machine learning estimator. As shown by these authors, machine learning better captures complex patterns that traditional models fail to identify. Other notable contributions in this area are from Bjerre (2022), who uses random forests and gradient boosting to produce more accurate mortality forecasts compared with forecasts from traditional stochastic mortality models, and from Perla and Scognamiglio (2023), who develop a neural network model requiring the coherence of the mortality forecasts within sub-groups of similar populations, stressing the similarities among countries.

Understanding mortality evolution more accurately therefore calls for new tools for comparing the performance of different models.

Despite the growing literature on tree-based machine learning and mortality (beyond the above-cited papers, see also the recent contribution from Wen et al. (2023)), tree-based methodologies are not commonly used to compare estimations from different mortality models. Compared to the existing literature related to mortality modeling, this paper explores an innovative approach belonging to the tree-based machine learning techniques that demographers have not yet investigated. This approach, namely Contrast trees, recently proposed by Friedman (2020) and here applied to mortality data, helps evaluate the accuracy of the mortality estimates (fitted mortality rates) given by models that are not treatable with model selection criteria based on the likelihood function. Given the wide range of applications, we believe the proposed methodology may provide a unified framework to assess and compare the goodness-of-fit to historical data of conventional mortality models and machine learning algorithms. Using this methodology to evaluate mortality estimates is entirely new, and it is the main contribution of this paper.

Rather than addressing mortality forecasting, the proposed methodology is a diagnostic tool that contributes to the literature on evaluating the mortality estimates produced by mortality models. The main ability of Contrast trees is to identify the regions in the predictor variables space that show very high values of the error rate quantified by a discrepancy measure. The regions’ width and shape change from model to model.

Moreover, in addition to evaluating the accuracy of the models, Contrast trees make it possible to improve the performance of the models through a boosting procedure that reduces the inaccuracies. We use this methodology, namely Contrast boosting, to enhance the fitting of historical mortality data. According to the demographic literature, the reliable estimation of mortality data may refer not only to the extrapolation but also to an accurate fitting of the historical mortality surface. For instance, in longevity analysis it is common to deal with subpopulations, e.g. regions or provinces, characterized by a high level of stochasticity, often due to small death counts at single ages. In such cases, specific ages or years may not be covered by data, making mortality estimation challenging. Our approach is useful both to evaluate the mortality matrix estimated by a mortality model and to ensure estimation effectiveness by comparing different methods.

To summarize, through this new technique based on Contrast trees, we aim to find the best model that fits observed mortality rates by grasping and detecting the inaccuracies of any model and boosting its predictive power.

The remainder of this paper is organized as follows: Sect. 2 introduces the model framework, both Contrast trees and Contrast boosting. In Sect. 3, we describe the numerical implementation, also providing an overview of the mortality models, expressed in a regression framework, which we assess by the Contrast trees approach. We devote a specific sub-section to explanation and discussion of the numerical results. Section 4 concludes the paper, providing other possible practical implementations of the method in mortality assessment and the limitations of our research.

2 Materials and methods

2.1 Data source

We consider the Italian mortality data available in the Human Mortality Database (HMD) over the period 1950–2018. We refer to the male population aged 0–90, analyzing the age groups 0–29, 30–60, and 61–90 separately to provide further evidence of the differences in mortality that characterize the younger, adult, and older ages. Data are split into a training set and a test set according to the common 70–30% splitting rule. We use the training set to estimate the parameters of each model, and then apply these estimates to the test set to evaluate the out-of-sample performance, computing the out-of-sample errors on the test data. The dataset is partitioned using the dissimilarity-based compound selection proposed in Willett (1999).

2.2 Mortality rate

We calculate the central death rates \(m_{x,t}\) for each age x and year t according to the following formula:

\[ m_{x,t} = \frac{D_{x,t}}{E_{x,t}} \tag{1} \]

where \(D_{x,t}\) is the number of deaths at age x in year t, and \(E_{x,t}\) is the exposure-to-risk at age x in year t.
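As a minimal sketch of this calculation, the snippet below computes central death rates from death counts and exposures keyed by (age, year). The dictionary layout and the function name `central_death_rates` are assumptions made for illustration and are not taken from the paper or from the HMD file format.

```python
def central_death_rates(deaths, exposures):
    """Return m[(age, year)] = D[(age, year)] / E[(age, year)].

    `deaths` and `exposures` are dicts keyed by (age, year); this layout is
    a hypothetical choice for the example, not the HMD file format.
    """
    rates = {}
    for key, d in deaths.items():
        e = exposures[key]
        # A cell with zero exposure has no defined rate; mark it as missing.
        rates[key] = d / e if e > 0 else float("nan")
    return rates
```

Cells with zero exposure are returned as NaN here; how such cells should be treated in practice is a modeling decision the paper does not discuss.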

2.3 Mortality models

In the following, we briefly describe the four models to which the Contrast trees methodology is applied. The scope is to evaluate the models’ quality of fit. The first two models belong to the family of generalized age-period-cohort (GAPC) that are expressed in a regression framework to be suitable for applying Contrast trees, which requires data organized in columns. The last two are well-known machine learning techniques also used for regression tasks.

2.3.1 Lee–Carter (LC) model

We consider the extension of the LC model (Lee and Carter 1992) proposed by Brouhns et al. (2002), who assume that the number of deaths is a Poisson random variable. The Lee–Carter model under the specification of Brouhns et al. (2002) describes the logarithm of the central death rate at age x and time t as:

\[ \log m_{x,t} = \alpha _x + \beta _{x}\cdot \kappa _t \tag{2} \]

The age-specific parameter \(\alpha _x\) provides the average age profile of mortality, while the age-period term \(\beta _{x}\cdot \kappa _t\) describes the mortality trends, with \(\kappa _t\) the time index and \(\beta _{x}\) modifying the effect of \(\kappa _t\) across ages. The model is subject to the following constraints on \(\kappa _t\) and \(\beta _x\): \(\sum _{t}{\kappa _t}=0\) and \(\sum _{x}{\beta _x}=1\). This model can be reformulated into a Generalized Non-linear Model (GNM) framework, as in Villegas et al. (2018), who use a GNM and apply the maximum likelihood method to fit the model to historical data. Under this specification, the LC model can be seen as a non-linear regression model where mortality rates are the target variable, predicted using the features age and time (Richman and Wüthrich 2021).
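To make the roles of \(\alpha _x\), \(\beta _x\), and \(\kappa _t\) and of the two constraints concrete, the following sketch fits the log-bilinear structure by a rank-one least-squares approximation, in the spirit of the original Lee and Carter (1992) approach. This is a swapped-in simplification and not the Poisson maximum likelihood fit of Brouhns et al. (2002) used in this paper; the function name and the matrix layout are assumptions.

```python
import math

def fit_lee_carter_rank_one(log_m, n_iter=200):
    """Least-squares Lee-Carter fit; log_m[x][t] has ages in rows, years in columns.

    Illustrative only: the paper fits the Poisson version by maximum likelihood.
    """
    n_ages, n_years = len(log_m), len(log_m[0])
    # alpha_x is the average log-rate at each age over the fitting period.
    alpha = [sum(row) / n_years for row in log_m]
    z = [[log_m[x][t] - alpha[x] for t in range(n_years)] for x in range(n_ages)]
    # Power iteration for the leading singular pair of the centered matrix z.
    beta = [1.0] * n_ages
    kappa = [0.0] * n_years
    for _ in range(n_iter):
        kappa = [sum(z[x][t] * beta[x] for x in range(n_ages)) for t in range(n_years)]
        norm = math.sqrt(sum(k * k for k in kappa))
        if norm == 0.0:
            break  # no age-period signal along the current direction
        kappa = [k / norm for k in kappa]
        beta = [sum(z[x][t] * kappa[t] for t in range(n_years)) for x in range(n_ages)]
    # Rescale so that sum_x beta_x = 1 (assumes the sum is non-zero);
    # sum_t kappa_t = 0 already holds because each row of z sums to zero.
    s = sum(beta)
    beta = [b / s for b in beta]
    kappa = [k * s for k in kappa]
    return alpha, beta, kappa
```

The fitted surface is then \(\alpha _x + \beta _x \kappa _t\) for each age and year. A Poisson fit weights cells by their death counts and will generally give different parameter values, especially at ages with few deaths.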

2.3.2 Age-period-cohort (APC)

We use the version of the model reformulated into a Generalized Linear Model (GLM) framework (Alai and Sherris 2014):

\[ \log m_{x,t} = \beta _{1,x} + \beta _{2,t} + \beta _{3,t-x} \tag{3} \]

where the regression coefficients \(\beta _{1,x}\), \(\beta _{2,t}\), and \(\beta _{3,t-x}\) are the age trend, the period trend, and the cohort trend, respectively (\(t-x\) represents the year of birth).
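Expressing a mortality model as a regression requires the mortality surface to be arranged in columns, one row per age-year cell, with the cohort obtained as \(t-x\). The sketch below shows one possible way to do this; the function name, the input layout, and the column names are hypothetical.

```python
def to_long_format(rates, ages, years):
    """Flatten an age-by-year matrix into one record per (age, year) cell.

    rates[i][j] is the rate at ages[i] in years[j] (assumed layout).
    """
    rows = []
    for i, age in enumerate(ages):
        for j, year in enumerate(years):
            rows.append({
                "age": age,
                "year": year,
                "cohort": year - age,  # year of birth, t - x
                "rate": rates[i][j],
            })
    return rows
```

Treating `age`, `year`, and `cohort` as categorical factors in a GLM would give one coefficient per level, matching the three groups of coefficients described above.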

2.3.3 Gradient boosting machine (GBM)

GBM is a tree-based algorithm proposed by Friedman (2001) that uses fixed-size decision trees as weak learners. The prediction is obtained by a sequential approach, where each decision tree uses the information from the previous one to improve the current fit. Given a current model fit, \(F_{m}({\textbf{x}})\), the algorithm provides a new estimate, \(F_{m+1}({\textbf{x}})=F_{m}({\textbf{x}})+ h_m({\textbf{x}})\), where \(h_m({\textbf{x}})\) is the weak learner fitted on the model residuals \(y-F_{m}({\textbf{x}})\), with y the target variable.
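The sequential update can be illustrated with a minimal sketch for squared-error loss on a single feature, using one-split trees (stumps) as weak learners. The function names and the `learning_rate` shrinkage factor are assumptions added for the example; setting `learning_rate=1.0` reproduces the update \(F_{m+1}=F_m+h_m\) exactly as written above. This is not the GBM implementation used in the paper.

```python
def fit_stump(x, r):
    """Fit a one-split regression tree to residuals r on a 1-D feature x."""
    best = None
    for s in sorted(set(x))[:-1]:  # candidate thresholds between distinct values
        left = [ri for xi, ri in zip(x, r) if xi <= s]
        right = [ri for xi, ri in zip(x, r) if xi > s]
        ml, mr = sum(left) / len(left), sum(right) / len(right)
        sse = sum((ri - ml) ** 2 for ri in left) + sum((ri - mr) ** 2 for ri in right)
        if best is None or sse < best[0]:
            best = (sse, s, ml, mr)
    if best is None:  # all x identical: fall back to the mean residual
        mean_r = sum(r) / len(r)
        return lambda xi: mean_r
    _, s, ml, mr = best
    return lambda xi: ml if xi <= s else mr

def gradient_boost(x, y, n_rounds=50, learning_rate=0.5):
    """Return a predictor F(x) built by repeatedly fitting stumps to residuals."""
    f0 = sum(y) / len(y)  # initial fit: the mean of the target
    learners = []
    pred = [f0] * len(y)
    for _ in range(n_rounds):
        resid = [yi - pi for yi, pi in zip(y, pred)]  # y - F_m(x)
        h = fit_stump(x, resid)
        learners.append(h)
        pred = [pi + learning_rate * h(xi) for xi, pi in zip(x, pred)]
    return lambda xi: f0 + learning_rate * sum(h(xi) for h in learners)
```

Each round can only lower the training squared error, because the stump predicts the mean residual on each side of its split.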

2.3.4 eXtreme gradient boosting machine (XGBM)

XGBM is an efficient implementation of gradient boosting decision trees proposed by Chen et al. (2015), and designed to be fast to execute and highly effective. To verify if a simple data preprocessing has some meaningful effect on the quality of models, we apply XGBM to both raw and preprocessed data: the latter is obtained by centering and scaling the raw data using mean and standard deviation.
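The centering and scaling step can be sketched as follows. The paper does not state whether the mean and standard deviation are computed on the training set only, nor whether the sample or population standard deviation is used; both choices below (statistics passed in explicitly, sample standard deviation) are assumptions, as are the function names.

```python
import statistics

def fit_scaler(values):
    """Return the mean and sample standard deviation of a feature column."""
    return statistics.mean(values), statistics.stdev(values)

def standardize(values, mean, sd):
    """Center and scale a column using previously estimated statistics."""
    return [(v - mean) / sd for v in values]
```

Estimating the statistics once and reusing them when transforming held-out data keeps information from the test set out of the preprocessing.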

2.4 Traditional diagnostic tools

In the following, we briefly mention some traditional diagnostic tools that are often used in the literature to assess the goodness-of-fit of a mortality model.

- Analysis of mortality residuals (or standardized mortality residuals), calculated as the difference between the crude estimate of the mortality rate by age and year based on observed data and the corresponding mortality rate estimated using a specified mortality model. For example, Cairns et al. (2010) verified that they are consistent with the hypothesis of i.i.d. N(0, 1) and have zero correlation both across adjacent ages and across adjacent years.
- Proportion of variance explained (\(R^2\)) by the model or the parameters of the model (see, e.g., Bongaarts (2005)).
- Model selection criteria that penalize the log-likelihood with the increase in the number of parameters: Akaike Information Criterion (AIC), Schwarz-Bayes Criterion (SBC) [or Bayes Information Criterion (BIC)] and Likelihood-ratio test (LRT) (Li et al. 2009). Note that in this case the evaluation of the goodness-of-fit is given on the basis of the log-likelihood.
- Qualitative model selection criteria: Cairns et al. (2008) provide a list of criteria that might be considered desirable in a mortality model, such as ease of implementation, parsimony, and transparency. Regarding the ability to fit the observed data, the model should be consistent with historical data, and parameter estimates should be robust relative to the range of data used. For example, Djeundje et al. (2022) consider consistency, stability, and parsimony in addition to standard goodness-of-fit indices (deviance residual, BIC, and residual patterns).
- Checking for the absence of autocorrelation in the residuals of the model by the Portmanteau test (see, e.g., Torri (2011)).

2.5 Contrast trees

Contrast trees is an innovative approach that, leveraging tree-based machine learning techniques, allows an in-depth assessment of the goodness-of-fit of a model by identifying where the model performs worst. Specifically, the goal of the Contrast trees method is to uncover regions in the predictor variables space presenting very high values of the error rate quantified by a discrepancy measure (Friedman 2020). In the context of mortality modeling, the main feature that distinguishes this method from the traditional diagnostic methods mentioned above is the ability to automatically identify the regions in which a given model provides a high error for certain combinations of ages and calendar years. Furthermore, Contrast trees have the advantage of being easy to interpret and can be used as a diagnostic tool to detect the inaccuracies of any kind of model, both those whose parameter estimation is based on a likelihood function and those based on machine learning algorithms. Our analysis shows how Contrast trees can be used for assessing the goodness-of-fit of different mortality models to observed data.

Suppose we have a set of predictor variables \(x = (x_1, x_2,...,x_p)\) and two outcome variables y and z for each x. We aim to find those values of x for which the respective distributions of y|x and z|x, or some statistics such as mean or quantiles, are most different. In summary, Contrast trees provide a lack-of-fit measure for the conditional distribution \(p_y(y | x)\), or some statistics.

Consider the \(M^{th}\) iteration, where the tree splits the space of the predictor variables into M disjoint regions \(\lbrace R_m\rbrace ^M_{m=1}\), each one containing a subset of the data. We denote by \(f_m^{(l)}\) and \(f_m^{(r)}\) the fractions of the observations in \(R_m\) that fall in the left and right regions obtained by splitting \(R_m\), respectively. The quantities \(d_m^{(l)}\) and \(d_m^{(r)}\) represent the discrepancy measures associated with these left and right regions, respectively. Given a specified subset of the data \(\lbrace x_i,y_i,z_i \rbrace _{x_i\in R_m}\), a discrepancy measure between y and z values can be generally defined as:

\[ d_m = D\left( \lbrace y_i\rbrace _{x_i\in R_m}, \lbrace z_i\rbrace _{x_i\in R_m}\right) \tag{4} \]

The quality of a split is quantified by the following measure:

\[ Q_m(l,r) = \left( f_m^{(l)} \cdot f_m^{(r)}\right) \cdot \max \left( d_m^{(l)}, d_m^{(r)} \right) ^{\beta } \tag{5} \]

The factor \(\left( f_m^{(l)} \cdot f_m^{(r)}\right) \) discourages highly asymmetric splits in anticipation of further splitting, while the other factor \(\max \left( d_m^{(l)}, d_m^{(r)} \right) ^{\beta }\) attempts to isolate the \(R_m^{(l)}\) and \(R_m^{(r)}\) regions with high discrepancy. The parameter \(\beta \) regulates the relative influence of the two factors but, as stated by Friedman (2020), results are insensitive to its value. We will use \(\beta =2\) in our analysis.
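The split-quality measure can be sketched in a few lines. The discrepancy used here, the mean absolute difference between y and z, is an illustrative assumption and is not necessarily the measure adopted in the paper; the function names are likewise hypothetical.

```python
def mean_abs_discrepancy(y, z):
    """Illustrative discrepancy: mean absolute difference between y and z."""
    return sum(abs(a - b) for a, b in zip(y, z)) / len(y)

def split_quality(y_left, z_left, y_right, z_right, beta=2.0,
                  discrepancy=mean_abs_discrepancy):
    """Quality of a candidate split: (f_l * f_r) * max(d_l, d_r) ** beta."""
    n_l, n_r = len(y_left), len(y_right)
    f_l = n_l / (n_l + n_r)  # fraction of the parent region going left
    f_r = n_r / (n_l + n_r)  # fraction of the parent region going right
    d_l = discrepancy(y_left, z_left)
    d_r = discrepancy(y_right, z_right)
    return f_l * f_r * max(d_l, d_r) ** beta
```

For a fixed discrepancy, the product of the two fractions is largest for a balanced split (0.25) and falls towards zero as the split becomes more lopsided, which is how the first factor penalizes asymmetric splits.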

The choice of the discrepancy measure depends on the problem to be solved, allowing Contrast trees to be applied to a variety of problems (Friedman 2020). They are similar to loss criteria in prediction problems. The discrepancy measures that could be appropriate to represent the problem under investigation are the following:

where \(N_m\) is the number of observations in the region \(R_m\), \(t_{(i)}\) is the \(i^{th}\) value of t in sorted order, and \({\hat{F}}_y\) and \({\hat{F}}_z\) are the respective empirical cumulative distributions of y and z. See Friedman (2020) for further details about the tree split procedure.

In the numerical applications, for the sake of simplicity, we use the discrepancy measure \(d_m^{[1]}\).

2.5.1 Lack-of-fit contrast curves

The results obtained by applying the Contrast trees to different models can be summarized in the lack-of-fit contrast curves, which have point coordinates \(\left( f_m, {\bar{d}}_m\right) \), where \(f_m=\frac{1}{N}\sum _{d_j\ge d_m}N_j\) is the fraction of the N observations that fall in the regions with discrepancy at least as large as that of \(R_m\) (with \(N_j\) the number of observations in region \(R_j\)), and \({\bar{d}}_m=\frac{\sum _{d_j\ge d_m}d_j N_j}{\sum _{d_j\ge d_m}N_j}\) is the average discrepancy over those regions.

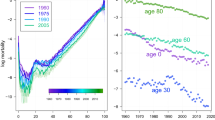

From the above expressions, we can deduce that the lack-of-fit curves by construction are decreasing. By way of example, we show a typical pattern of this curve in Fig. 1, where the leftmost point on the abscissa-axis provides the fractions of observations that fall into the regions with the higher discrepancy, while the rightmost point corresponds to all the observations (\(f_m=1\)). Looking at the ordinate-axis, the leftmost point on each curve represents the \({\bar{d}}_m\) value of the largest discrepancy region of its corresponding tree; the rightmost point provides the \({\bar{d}}_m\) value across all regions. Points in between give a \({\bar{d}}_m\) value over the regions with the highest discrepancy that contain the corresponding fraction of observations (Friedman 2020).
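The construction of the curve from the terminal regions of a tree can be sketched as follows. The input format, a list of (discrepancy, number of observations) pairs, and the function name are assumptions made for the example.

```python
def lack_of_fit_curve(regions):
    """Return the curve points (f_m, d_bar_m), ordered by decreasing discrepancy.

    `regions` is a list of (d_j, N_j) pairs, one per terminal region.
    Regions with tied discrepancies produce separate consecutive points here,
    a small simplification of the ">=" sums in the definition.
    """
    total = sum(n for _, n in regions)
    ordered = sorted(regions, key=lambda r: r[0], reverse=True)
    points = []
    cum_n = 0      # observations in regions at least as discrepant as the current one
    cum_dn = 0.0   # running sum of d_j * N_j over those regions
    for d, n in ordered:
        cum_n += n
        cum_dn += d * n
        points.append((cum_n / total, cum_dn / cum_n))
    return points
```

Because regions are added in order of decreasing discrepancy, each new point averages in a value no larger than the running mean, so the ordinate cannot increase from left to right.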

2.6 Contrast boosting

To improve the models’ accuracy, Friedman (2020) proposes a contrast-boosting technique that enables models to provide more accurate predictions by means of an iterative procedure that reduces the uncovered errors. Boosting gradually modifies a starting value of z using an additive term, reducing its discrepancy with y. The resulting prediction for z is then adjusted accordingly. We now briefly describe the Estimation Contrast Boosting technique, which takes z as an estimate of a parameter of the full conditional distribution of a target variable given a set of predictor variables, \(p_y(y | x)\). The procedure starts with the estimation of a contrast tree (CT). The z values within each region \(R_m^{(1)}\) of the CT are then updated so that their discrepancy with y is zero, i.e. \(d_m=0\) in Eq. 4. The resulting update \(\delta ^{(1)}_m\) is then used to adjust the prediction from z to \(z^{(1)}=z+\delta ^{(1)}_m\) for \(x\in R^{(1)}_m\). The updated predictions \(z^{(1)}\) are then used as input for a second CT, and updated from \(z^{(1)}\) to \(z^{(2)}=z^{(1)}+\delta ^{(2)}_m\) for \(x\in R^{(2)}_m\), and so on. The final boosted estimate is then \({\tilde{z}}(x)=z(x)+\sum _{k=1}^{K}\delta _{m}^{(k)}\), where K is the maximum number of iterations and, at each iteration k, m indexes the region \(R^{(k)}_m\) containing x. In practice, each updated value of z is contrasted with y, producing new regions \(R^{(k)}_m\) (\(1\le k\le K\)) with corresponding updates \(\delta _{m}^{(k)}\).
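The iterative update can be sketched under two simplifying assumptions that are not taken from the paper: the discrepancy is the absolute difference between the region means of y and z, so that the update \(\delta _m\) zeroing it is the mean of \(y-z\) in the region, and the partitions are supplied by the caller as functions mapping x to a region label rather than being grown as contrast trees. All names are hypothetical.

```python
from collections import defaultdict

def boost_once(x, y, z, region_of):
    """One boosting step: shift z within each region so that mean(z) = mean(y)."""
    groups = defaultdict(list)
    for i, xi in enumerate(x):
        groups[region_of(xi)].append(i)
    # delta_m = mean(y - z) over region m zeroes |mean(y) - mean(z)| there.
    delta = {m: sum(y[i] - z[i] for i in idx) / len(idx) for m, idx in groups.items()}
    z_new = [z[i] + delta[region_of(x[i])] for i in range(len(x))]
    return z_new, delta

def contrast_boost(x, y, z, partitions):
    """Apply the update once per partition; each step starts from the previous z."""
    for region_of in partitions:
        z, _ = boost_once(x, y, z, region_of)
    return z
```

In the actual procedure, each partition would come from a new contrast tree grown on the current z, so later iterations target whatever discrepancy remains after the earlier updates.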

3 Results

We implement the analyses using the conTree R package developed by Friedman and Narasimhan (2020). We set to 100 the maximum tree size corresponding to the number of regions. It is worth noting that the choice of this parameter is not straightforward because it involves a trade-off between discrepancy and interpretability. The smaller the trees, the larger the regions (defined by simple rules and easy to be interpreted). The larger the trees, the higher the potential to uncover small high discrepancy regions (defined by complex rules).

The models’ performance results on the test set are summarized in the lack-of-fit contrast curves, obtained by contrasting the observed mortality data with the estimates provided by each model. These curves are shown in Figs. 2, 3, and 4 for the age groups 0–29, 30–60, and 61–90, respectively. Panels (a) of these figures refer to the lack-of-fit curves obtained without applying Contrast boosting (Base models), while panels (b) refer to the lack-of-fit curves obtained after applying Contrast boosting to the output of the models (Boosted models).

For the 0–29 age group (see Fig. 2), both the APC and XGBM models have the lowest discrepancy values for each fraction of observations, providing the best fit. The average discrepancy for this age group is higher than for the 30–60 age group. The 0–29 age group is known to be characterized by high accidental mortality, the so-called “accident hump” around ages 20–25, due to accidental deaths or suicides caused by increased risk-taking behavior. Mortality at ages 0–29 is therefore hard to predict, and Contrast boosting is not able to appreciably reduce the average discrepancy.

For the 30–60 age group (see Fig. 3), the APC model seems to perform best across all regions, since its discrepancy values are consistently lower than those of the other models. For the XGBM models, we can observe that the model applied to preprocessed data (XGBM_preproc) performs better than the model applied to raw data in the regions with the highest average discrepancy. From the scale of the plots, we can see that Contrast boosting reduces discrepancy across almost all regions for the GBM and LC models, where the relative effect of boosting is particularly evident.

For the 61–90 age group (see Fig. 4), the GBM model seems by far the worst-performing model. Although the application of Contrast boosting significantly reduces the discrepancy, the GBM continues to be less accurate than the other models. It should also be noted that the effect of Contrast boosting in high-discrepancy regions for the other models is negligible, except for the APC.

Table 1 reports the values of the average discrepancy measure for both the base and the boosted models considered in the analysis. The APC and the XGBM base models provide the lowest average discrepancy values (0.000410 and 0.000417, respectively), which remain substantially unchanged after the Contrast boosting procedure. The APC model shows the lowest value of \({\bar{d}}_m\) also for the age group 30–60, in line with the dynamics of the lack-of-fit curves depicted in panel (b) of Fig. 2 and panel (a) of Fig. 4. However, the lack-of-fit curves provide more structured information than the average discrepancy, in particular, regarding how and how much \({\bar{d}}_m\) varies across the input space. For example, for the age group 61–90 in the base model (Fig. 2, panel a), we can appreciate that the main difference among models (except for GBM, which is out of range) measured by the average discrepancy is caused by the high discrepancy regions (where the fraction of observation is less than about 0.20). For ages 61–90, the GBM base model shows the worst fitting to the observed mortality data. Although Contrast boosting produces a strong improvement in the discrepancy measure, GBM remains the worst model in terms of discrepancy. Contrast boosting is very effective also for the GBM model in the age group 30–60, as it heavily lowers (−30%) the average discrepancy between observed and estimated values.

For a comparison with the average discrepancy, we also calculate the Root Mean Square Error (RMSE) and Mean Absolute Percentage Error (MAPE) on the base model and the boosted one. Intuitively, the three measures \({\bar{d}}_m\), RMSE, and MAPE quantify the "distance" between the estimates and the actual observations. However, the average discrepancy is an innovative measure summarizing the discrepancy over all the regions identified by the Contrast trees, while RMSE and MAPE are commonly used error measures calculated on the overall input space without distinguishing by region.
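For reference, the two global error measures can be computed as in the sketch below, with y the observed rates and z the model estimates. Reporting MAPE as a percentage is a convention assumed here; the function names are illustrative.

```python
import math

def rmse(y, z):
    """Root mean square error between observations y and estimates z."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(y, z)) / len(y))

def mape(y, z):
    """Mean absolute percentage error, in percent; requires non-zero y."""
    return 100.0 * sum(abs((a - b) / a) for a, b in zip(y, z)) / len(y)
```

Both measures pool all age-year cells into a single number, so a large error confined to a small region of the input space can be masked by good fit elsewhere.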

By comparing Table 2 showing the values of RMSE and MAPE with Table 1 reporting the values of the average discrepancy, we note a greater convergence of the error measures in the boosted models rather than in the base models. This result is intuitively straightforward since the boosted models are obtained by just reducing the discrepancy measure.

We also calculate average discrepancy, RMSE, and MAPE on the logarithm of the central death rates (Tables 3, 4). These measures assign a relatively large weight to errors at young ages, while error measures calculated on the central death rates assign a large weight to errors at older ages. Indeed, for the age group 0–29, all the errors reported in Tables 3, 4 are significantly higher than those in Tables 1, 2. The errors calculated on the logarithm of the central death rates highlight the ability of Contrast boosting to reduce the inaccuracy of GBM and XGBM_preproc in fitting observed mortality at ages 0–29.

The most interesting feature of the application of Contrast trees to the field of mortality estimate is the automatic identification of the regions of the predictors’ space where a given model provides high discrepancy values for certain combinations of ages-years obtained by comparing the model estimates with the observed mortality rates. These regions can be easily detected and possibly interpreted, providing a further explanation of the model performances as well as helping to assess whether a model can be reliable or not. Figures 5 and 6 show the heatmap of all the error regions for the base model and the boosted one, respectively. Low discrepancy regions are painted in green, while high discrepancy regions are painted in red. For the sake of plot readability, we colored in purple the regions presenting a discrepancy value exceeding 3e-04, 6e-04, and 0.008 for the age groups 0–29, 30–60, and 61–90, respectively.

We can generally observe that the regions’ width and shape change from model to model. Some regions show remarkable mortality estimation errors in specific age groups, others in specific intervals of years, others in a specific range of cohorts. All the models considered show high discrepancy values in the first year of age (Fig. 5, age group 0–29, left panels), confirming the difficulty of adequately estimating the mortality of newborns. This situation remains unchanged after the application of Contrast boosting, which, in this case, seems to be not effective (Fig. 6, age group 0–29, left panels). For the age group 30–60 in the base model (Fig. 5, central panels), the two XGBM models show high discrepancy values after age 45–46, while GBM in the years 2000–2018. The LC model instead evidences high errors in estimating the mortality of cohorts born between 1920 and 1932. Considering the 61–90 age group (Fig. 5, right panels), we notice that the GBM model continues to fail in estimating mortality rates in the years 2000–2018, while the LC model (and also APC) mortality rates in the cohorts born between 1920 and 1932. By comparing the results for the base models (Fig. 5) with those for the boosted ones (Fig. 6), we observe a clear effect of boosting on the GBM model for the 30–60 and 61–90 age groups and the XGBM for the 30–60 age group.

4 Discussion

Evaluating, and thus eventually improving, the fit of mortality models is crucial for both demographers and actuaries. Indeed, in particular situations that are common in actuarial practice, data quality can make mortality estimation difficult. A prime example is the case of small subpopulations, where a common method such as the Lee–Carter may not guarantee reliable estimation. In mortality modeling, the objective of diagnostic checking is to ascertain whether the model fits the historical data by obeying an underlying probabilistic hypothesis. This procedure is carried out through residual diagnostics under a Gaussian or, more often, a Poisson assumption (see, e.g., Renshaw and Haberman (2006)). Leveraging Friedman (2020), who introduces contrast trees to estimate the full conditional probability distribution without any parametric assumptions, we propose a notable alternative, with particular regard to the intersection of the machine learning and mortality modeling fields. In this sense, our proposal fills the gap between mortality modeling and model diagnostics, particularly for nontraditional modeling frameworks such as machine learning.

Contrast trees are a general method based on machine learning that can be applied to any model, expressed as a regression model, to evaluate the goodness of fit and identify the worst-performing regions in the input space. The main characteristic that distinguishes this method from traditional diagnostic tools is the automatic identification of the regions in which a given model produces a high error for certain combinations of ages and calendar years. Well-known diagnostic tools often used in the literature to assess the goodness-of-fit of a mortality model, such as BIC and AIC, require the likelihood function, which is not available for machine learning models. Therefore, Contrast trees provide a unified approach for assessing and comparing the accuracy of traditional mortality models with machine learning algorithms.

In Contrast trees, the detection of the regions in which a model performs worst can be considered an evolution of the standard analysis of residuals, in which the detection of the highest residuals is typically left to graphical analyses using heatmaps and scatter plots (Cairns et al. 2009; Villegas et al. 2018), and to summary measures like RMSE and MAPE calculated on the overall input space and not by region. Conversely, the decision tree structure of Contrast trees enables quantifying the discrepancy between the estimates provided by a model and the actual observations in each region identified by Contrast trees.

References

Alai, D.H., Sherris, M.: Rethinking age-period-cohort mortality trend models. Scandinavian Actuarial J. 3, 208–227 (2014)

Bjerre, D.S.: Tree-based machine learning methods for modeling and forecasting mortality. ASTIN Bull. 52(3), 765–787 (2022)

Bongaarts, J.: Long-range trends in adult mortality: models and projection methods. Demography 42(1), 23–49 (2005)

Booth, H., Tickle, L.: Mortality modelling and forecasting: a review of methods. Ann. Actuarial Sci. 3(1–2), 3–43 (2008). https://doi.org/10.1017/S1748499500000440

Brouhns, N., Denuit, M., Vermunt, J.: A Poisson log-bilinear approach to the construction of projected life tables. Insurance Math. Econ. 31, 373–393 (2002)

Chen, T., He, T., Benesty, M., Khotilovich, V., Tang, Y., Cho, H.: Xgboost: extreme gradient boosting. R Package Version 0.4–2, 1(4), 1–4 (2015)

Cairns, A.J.G., Blake, D., Dowd, K.: A two-factor model for stochastic mortality with parameter uncertainty: theory and calibration. J. Risk Insurance 73, 687–718 (2006)

Cairns, A.J.G., Blake, D., Dowd, K.: Modelling and management of mortality risk: a review. Scandinavian Actuarial J. 73(2–3), 79–113 (2008)

Cairns, A.J.G., Blake, D., Dowd, K., Coughlan, G.D., Epstein, D., Ong, A., Balevich, I.: A quantitative comparison of stochastic mortality models using data from England and Wales and the United States. North Am. Actuarial J. 13, 1–35 (2009)

Cairns, A.J.G., Blake, D., Dowd, K., Coughlan, G.D., Epstein, D., Khalaf-Allah, M.: Evaluating the goodness of fit of stochastic mortality models. Insurance Math. Econ. 47(3), 255–265 (2010)

Deprez, P., Shevchenko, P.V., Wüthrich, M.V.: Machine learning techniques for mortality modeling. Eur. Actuarial J. 7, 337–352 (2017). https://doi.org/10.1007/s13385-017-0152-4

Djeundje, V.B., Haberman, S., Bajekal, M., et al.: The slowdown in mortality improvement rates 2011–2017: a multi-country analysis. Eur. Actuarial J. (2022). https://doi.org/10.1007/s13385-022-00318-0

Friedman, J.H.: Greedy function approximation: a gradient boosting machine. Ann. Stat. 29, 1189–1232 (2001)

Friedman, J.H.: Contrast trees and distribution boosting. Proc. Nat. Acad. Sci. 117(35), 21175–21184 (2020). https://doi.org/10.1073/pnas.1921562117

Friedman, J.H., Narasimhan, B.: conTree: contrast trees and distribution boosting. R package version 0.2–8 (2020)

Lee, R.D., Carter, L.R.: Modeling and forecasting US mortality. J. Am. Stat. Assoc. 87(419), 659–671 (1992)

Levantesi, S., Nigri, A.: A random forest algorithm to improve the Lee-Carter mortality forecasting: impact on q-forward. Soft Comput. 24, 8553–8567 (2020). https://doi.org/10.1007/s00500-019-04427-z

Levantesi, S., Pizzorusso, V.: Application of machine learning to mortality modeling and forecasting. Risks 7(1), 26 (2019). ISSN: 2227-9091. https://doi.org/10.3390/risk7010026

Li, J.S.H., Hardy, M.R., Tan, K.S.: Uncertainty in mortality forecasting: an extension to the classical Lee-Carter approach. Astin Bull. 39(1), 137–164 (2009)

Nigri, A., Barbi, E., Levantesi, S.: The relay for human longevity: country-specific contributions to the increase of the best-practice life expectancy. Qual. Quant. 56, 4061–4073 (2022). https://doi.org/10.1007/s11135-021-01298-1

Perla, F., Scognamiglio, S.: Locally-coherent multi-population mortality modelling via neural networks. Decis. Econ. Finance 46, 157–176 (2023). https://doi.org/10.1007/s10203-022-00382-x

Pollard, J.H.: Projection of age-specific mortality rates. In: Population Bulletin of the United Nations 21/22, 55–69 (1987)

Renshaw, A.E., Haberman, S.: A cohort-based extension to the Lee-Carter model for mortality reduction factors. Insurance Math. Econ. 38(3), 556–570 (2006)

Richman, R., Wüthrich, M.: A neural network extension of the Lee-Carter model to multiple populations. Ann. Actuarial Sci. 15, 346–366 (2021)

Scognamiglio, S., Marino, M.: Backtesting stochastic mortality models by prediction interval-based metrics. Qual. Quant. https://doi.org/10.1007/s11135-022-01537-z

Torri, T.: Building blocks for a mortality index: an international context. Eur. Actuar. J. 1(Suppl 1), S127–S141 (2011)

Villegas, A.M., Kaishev, V., Millossovich, P.: StMoMo: An R Package for Stochastic Mortality Modelling. J. Stat. Softw. 84(3), 1–38 (2018)

Wen, J., Cairns, A., Kleinow, T.: Modelling socio-economic mortality at neighbourhood level. ASTIN Bull. 53(2), 285–310 (2023). https://doi.org/10.1017/asb.2023.12

Willett, P.: Dissimilarity-based algorithms for selecting structurally diverse sets of compounds. J. Comput. Biol. 6(3–4), 447–457 (1999)

Acknowledgements

A preliminary version of this paper was presented at the 10th International Conference IES 2022 Innovation & Society 5.0: Statistical and Economic Methodologies for Quality Assessment. An extended previous version was published in the Book of short papers of the conference, edited by Rosaria Lombardo, Ida Camminatiello and Violetta Simonacci.

Funding

Open access funding provided by Università di Foggia within the CRUI-CARE Agreement. The authors have not disclosed any funding.

Ethics declarations

Conflict of interest

Andrea Nigri declares that he is a member of the editorial board. However, he does not benefit from this position in the journal at any point between submission and publication.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Levantesi, S., Lizzi, M. & Nigri, A. Enhancing diagnostic of stochastic mortality models leveraging contrast trees: an application on Italian data. Qual Quant 58, 1565–1581 (2024). https://doi.org/10.1007/s11135-023-01711-x

DOI: https://doi.org/10.1007/s11135-023-01711-x