Abstract

This study provides a systematic comparative analysis of seven common cross-national measures of state capacity by focusing on three measurement issues: convergent validity, interchangeability, and case-specific disagreement. The author finds that the convergent validity of the measures is high, but their interchangeability is low. This means that even highly correlated measures of state capacity can lead to completely different statistical inferences. The cause of this puzzling finding lies in strikingly large disagreements on some of the country scores. The author shows that these disagreements depend on two factors: differences in underlying components and the level of state capacity. Given these findings, users of measures of state capacity must not assume that any highly correlated indicator is appropriate. Instead, they should examine what the indicators actually measure and ensure that their definition of state capacity matches the chosen indicator.



1 Introduction

The state has been “brought back in”, and today scholarship on state capacity is flourishing in many fields of social science. State capacity, typically defined as the ability of the state to reach its objectives (Acemoglu and Robinson 2019), has been linked to numerous important social, political, and economic factors. To give a few examples, empirical studies have shown that higher state capacity is related to economic growth (e.g., Dincecco 2015), public goods provision (e.g., D’Arcy and Nistotskaya 2017), democratisation (e.g., Wang and Xu 2018), international conflicts (e.g., Besley and Persson 2008), human rights protection (Englehart 2009), regime stability (Andersen et al. 2014), welfare state generosity (Rothstein et al. 2012), and better pandemic outcomes (Serikbayeva et al. 2021).

Despite an ever-growing number of quantitative studies on the topic, there is a serious lack of consensus on how state capacity should be measured in cross-national comparative research. Scholars have used a variety of measures to operationalise the concept, ranging from judgement-based proxies such as Varieties of Democracy’s Rigorous and Impartial Public Administration (e.g., Grundholm and Thorsen 2019), Transparency International’s Corruption Perceptions Index (e.g., Englehart 2009), and the World Bank’s Government Effectiveness (e.g., Serikbayeva et al. 2021) to fully observational proxies such as life expectancy (e.g., DeRouen and Bercovitch 2008), GDP/capita (e.g., Fearon and Laitin 2003), and total taxes/GDP (e.g., Andersen et al. 2014). What we know about state capacity is thus based on a rather heterogeneous set of measures.

While some scholars have argued that “exactly how the quality of government is measured is not so important” (Tabellini 2008, p. 264), the apparent diversity of these proxies in terms of what they actually measure raises a natural question: can we draw collective conclusions about state capacity from studies that use such different measures? This study addresses the question by providing a systematic comparative analysis of currently relevant cross-national measures of state capacity and by analysing the empirical implications of choosing one measure over another through three specific measurement issues: convergent validity, interchangeability, and case-specific disagreement. If measures of state capacity lead to similar inferences, then indeed “it makes sense to talk about the quality of government as a general feature of countries” (Tabellini 2008, p. 263), and we need not worry about selecting one measure over another.Footnote 1 If this is not the case, however, the process of data selection becomes fundamentally important.

Even though we have been warned that “we often lack the concrete knowledge of how the specific measures we select affect the empirical inferences we draw” (Mudde and Schedler 2010, p. 410), the literature on the measurement of state capacity has not adequately addressed these problems. Previous comparative studies of measures of other core concepts relevant to multiple fields of social science research, such as democracy (e.g., Vaccaro 2021), rule of law (e.g., Møller and Skaaning 2011), and ethnicity (e.g., McDoom and Gisselquist 2016), have investigated the empirical implications of the choice of measure. Existing comparisons of cross-national measures of state capacity (e.g., Cingolani 2018; Hanson 2018; Hendrix 2010; Fortin 2010; Savoia and Sen 2015), by contrast, have focused predominantly on conceptual questions rather than on the sensitivity of results to different measures of state capacity. This study adds to this literature by examining the sensitivity of both descriptive and inferential results on state capacity to the chosen measure and by providing an account of currently relevant proxies of state capacity.

I want to emphasise that the objective of my study is to scrutinise the numbers that scholars commonly use to represent state capacity, not to address conceptual issues on the topic. The reasons for this choice are twofold. First, I follow the advice of Adcock and Collier (2001, p. 533), according to whom “arguments about the background concept and those about validity can be addressed adequately only when each is engaged on its own terms”. Second, I address the problem raised by Jerven (2013, p. 119), according to whom “scholars pay great attention to defining the concepts and devote great effort to theorizing the existence of the phenomenon and spend comparatively little time critically probing the numbers that are supposed to represent them”. Simply put, concepts are important, but so are numbers, because our knowledge of state capacity is affected by how it is quantified.

Finally, before moving on to the next section, it is important to make plain that if researchers regularly opted for a measure that closely matched their definition of state capacity, the sensitivity of results to the chosen measure would be less of a problem. More often than not, however, data users choose among competing measures uncritically and irrationally (Mudde and Schedler 2010). Sometimes proxies of state capacity are chosen out of convenience, as in DeRouen and Bercovitch (2008), who choose life expectancy over GDP/capita because of better data availability. At other times, it remains unclear why one measure is chosen over another. For instance, Serikbayeva et al. (2021) and Shyrokykh (2017) operationalise state capacity via the World Bank’s Government Effectiveness but do not explain why they chose this particular measure over the alternatives, and Dincecco (2015) remains silent on his choice of the Brookings Institution’s State Weakness Index to operationalise state capacity. When the fit between measure and concept remains unclear, we may end up studying many different things under the name of state capacity, with obvious negative consequences for the accumulation of knowledge on the topic.

2 Selecting measures of state capacity

Since it is impossible to analyse every proxy of state capacity in detail in a single paper, I have selected only some measures for further analysis. To carry out the selection, I extensively reviewed the literature and selected common measures of state capacity according to five criteria.

First, selected measures must have current academic relevance. This criterion is met if a measure has been used by different authors to proxy state capacity in many (≥ 5) recent (published in 2015 or later) cross-national comparative studies. Second, fully observational measures are excluded a priori because “in many areas of governance, there are few alternatives to relying on perceptions data” (Kaufmann et al. 2011, p. 240). In other words, observational measures are inherently different from subjective measures, and fully observational measures cannot capture many aspects of state capacity. Given that state capacity is a latent concept, perception-based measures should be able to capture it comprehensively. Third, I select only measures that provide annual scores over time for most countries worldwide. Fourth, I scrutinise only measures that are publicly available free of charge. Fifth, if a given data producer offers multiple proxies of state capacity, I select only its most frequently used measure. This last criterion should minimise the influence on our results of potential bias originating from so-called method factors.

Ultimately, seven measures fulfil the above criteria. These measures and their main characteristics are presented below. Table 1 provides a summary of the measures and a list of studies in which these measures are used to proxy state capacity.

The Quality of Government Institute, University of Gothenburg, publishes the well-known Quality of Government Index (QOG) (Teorell et al. 2019). The index is based entirely on data from PRS Group’s (2018) International Country Risk Guide (ICRG), more specifically on the ICRG indicators Bureaucracy Quality, Corruption, and Law and Order. It thus primarily captures procedural aspects of state capacity, such as corruption and impartiality. QOG is computed as the simple average of its three sub-indicators, all of which are coded in-house by ICRG staff. The final index ranges from 0 (low) to 1 (high) and provides data for almost 150 countries from 1984 onwards.

Hanson and Sigman’s (2013) State Capacity Index (HSI) has quickly become one of the most popular indices of state capacity in comparative cross-national studies. It was explicitly created to measure the “three dimensions of state capacity that are minimally necessary to carry out the functions of contemporary states: extractive capacity, coercive capacity, and administrative capacity” (Hanson and Sigman 2013, p. 3). These three dimensions are captured by 24 different sub-indicators and synthesised into a single latent variable through Bayesian factor analysis. HSI runs from low to high on a standardised (z-score) scale with a mean of 0 and a standard deviation of 1. It provides annual data for up to 163 countries over 50 years (1960–2009).Footnote 2

Government Effectiveness (WGI) is one of the World Bank’s six widely used Worldwide Governance Indicators. The index “captures perceptions of the quality of public services, the quality of the civil service and the degree of its independence from political pressures, the quality of policy formulation and implementation, and the credibility of the government’s commitment to such policies” (Kaufmann et al. 2011, p. 4) by aggregating numerous sub-indicators (48 in 2018) on the quality of public services and public administration. WGI therefore focuses strongly on the administrative aspects of state capacity. The index is standardised (z-score) to have a mean of 0 and a standard deviation of 1, and runs from low to high. It covers nearly all countries in the world and is available annually from 2003 onwards.Footnote 3

The State Fragility Index (SFI) is developed by the Center for Systemic Peace. The index captures state capacity in a broad sense and measures the “capacity to manage conflict, make and implement public policy, and deliver essential services” (Marshall and Elzinga-Marshall 2017, p. 51). SFI is based on two sub-dimensions, state effectiveness and state legitimacy, which are additively aggregated into the final index. These two dimensions in turn aggregate 14 sub-indicators related to four aspects (political, social, economic, security) of state performance. SFI focuses especially on coercive aspects of state capacity such as political violence and war. It ranges from 0 to 25, where a lower score indicates more capacity, and provides annual scores for all countries in the world with a population of at least 500,000 since 1995.

The Fragile States Index (FSI), produced by the Fund for Peace, is conceived to provide an entry point “into deeper interpretative analysis by civil society, government, businesses and practitioners alike—to understand more about a state’s capacities and pressures which contribute to levels of fragility and resilience” (Fund for Peace 2019, p. 33). It is based on a mix of expert evaluations, content analysis of articles and reports, and quantitative secondary data on 12 dimensions such as security, public services, and rule of law. The final index synthesises more than 100 indicators, but the Fund for Peace does not disclose complete information on these sub-indicators. FSI has been published annually since 2005, and its 2019 report ranked 178 countries. Its overall score ranges from 0 to 120, where a lower score indicates more capacity.

Transparency International’s Corruption Perceptions Index (CPI) aggregates some of the most important existing measures of corruption and closely related issues, such as transparency, accountability, and professionalisation of the bureaucracy. “The CPI scores and ranks countries/territories based on how corrupt a country’s public sector is perceived to be by experts and business executives” (Transparency International 2019, p. 1). CPI has been published annually since 1995, it is based on secondary expert survey data from multiple sources, and the 2018 edition covers 180 countries in the world. It ranges from 0 (low) to 10 (high) until 2011 and from 0 (low) to 100 (high) from 2012 on.

Varieties of Democracy Institute’s Rigorous and Impartial Public Administration (VDEM) provides information about “the extent to which public officials generally abide by the law and treat like cases alike, or conversely, the extent to which public administration is characterized by arbitrariness and biases” (Coppedge et al. 2019, p. 162). VDEM is based on evaluations by multiple country experts (mainly academics) and provides annual data from 1789 onwards for almost all countries in the world. It is approximately normally distributed with a mean of −0.11 and ranges from −3.69 to 4.46. A higher score denotes higher state capacity.

Even though the above seven measures have all been commonly used to capture state capacity, it is worth recalling that most of them were not created to capture state capacity in the first place. In fact, only HSI and SFI mention state capacity in their “descriptions”. Measures of fragility (SFI and FSI) have been used to proxy state capacity because “fragility is closely associated with a state’s capacity to make and implement public policy” (Hiilamo and Glantz 2015, p. 242) and because state capacity is sometimes seen as a dimension of state fragility (Grävingholt et al. 2015). The remaining four measures (CPI, QOG, VDEM, and WGI) focus in one way or another on administrative procedures, and in fact, “traditionally, state capacity indicators would focus on the competence and ability of bureaucracy” (Savoia and Sen 2015, pp. 442–443). The rationale for using these measures to proxy state capacity is that without a well-functioning bureaucracy the state is unlikely to be able to reach its objectives. Measures of corruption have also been used to proxy state capacity simply because corruption has been argued to be “a fundamental component of state capacity” (Fortin 2010, p. 665).

3 Research strategy

Now that we have selected some of the most currently relevant measures of state capacity we can proceed to their empirical analysis. To ease comparison, measures are normalised (min–max) to range from 0 (low) to 1 (high). FSI and SFI are reversed so that higher scores indicate more state capacity.
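The normalisation step can be sketched as follows. This is a minimal illustration, not the author's code. It assumes that min–max scaling uses the observed minimum and maximum of each measure, although the text does not say whether theoretical bounds (e.g., 0–120 for FSI) were used instead. The scores are invented for four hypothetical countries.

```python
import numpy as np

def min_max(x, reverse=False):
    """Rescale scores to [0, 1]; optionally flip so that higher = more capacity."""
    x = np.asarray(x, dtype=float)
    lo, hi = np.nanmin(x), np.nanmax(x)   # observed bounds (an assumption)
    scaled = (x - lo) / (hi - lo)
    return 1.0 - scaled if reverse else scaled

# Invented raw scores for four hypothetical countries (not real data).
fsi_raw = np.array([20.0, 60.0, 90.0, 110.0])   # FSI: lower score = more capacity
cpi_raw = np.array([85.0, 50.0, 30.0, 15.0])    # CPI: higher score = more capacity

fsi = min_max(fsi_raw, reverse=True)   # reversed so that 1 = most capacity
cpi = min_max(cpi_raw)
```

Applying the reversal after scaling (1 − x) keeps the range at 0–1 while flipping the direction, so the country with the lowest FSI score receives the highest normalised capacity score. Pooling CPI scores from before and after 2012 would additionally require putting the 0–10 and 0–100 scales on a common footing before rescaling.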

I begin by assessing the convergent validity of the measures through correlations. Correlation analysis is a conventional tool for assessing the convergent validity of instruments measuring the same construct (e.g., Seawright and Collier 2014). Since correlations only provide information about the strength of bivariate associations, I also use principal component analysis (PCA) to explore the multivariate association among the measures and the structure of the data. “The main objective of a PCA is to reduce the dimensionality of a set of data” (Jolliffe 2002, p. 87). Thus, if the bulk of the total variance in the data is best explained by a single component, we can conclude that common measures of state capacity are closely related to one another and statistically represent a single unidimensional construct.
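The two correlation coefficients can be computed as in the sketch below. It uses only NumPy and assumes a complete-case matrix `X` with one row per country-year and one column per measure. The function names are hypothetical. Spearman's coefficient is Pearson's coefficient applied to ranks, which is why it captures monotonic rather than strictly linear association.

```python
import numpy as np

def average_ranks(x):
    """1-based ranks of x; tied values share the mean of their ranks."""
    x = np.asarray(x, dtype=float)
    ranks = np.empty(len(x))
    ranks[np.argsort(x, kind="mergesort")] = np.arange(1, len(x) + 1)
    for value in np.unique(x):
        tied = x == value
        ranks[tied] = ranks[tied].mean()
    return ranks

def pearson_matrix(X):
    """Pairwise Pearson correlations between the columns (measures) of X."""
    return np.corrcoef(np.asarray(X, dtype=float), rowvar=False)

def spearman_matrix(X):
    """Spearman correlations: Pearson correlations of the column-wise ranks."""
    X = np.asarray(X, dtype=float)
    ranks = np.column_stack([average_ranks(X[:, j]) for j in range(X.shape[1])])
    return np.corrcoef(ranks, rowvar=False)

# Toy example: a monotonic but nonlinear relationship between two "measures".
a = np.array([1.0, 2.0, 3.0, 4.0, 5.0])
X = np.column_stack([a, a ** 3])
pearson = pearson_matrix(X)[0, 1]    # below 1: the relationship is not linear
spearman = spearman_matrix(X)[0, 1]  # 1: the rankings agree perfectly
```

In the toy example, Spearman's coefficient is exactly 1, while Pearson's is somewhat lower. The two methods can therefore diverge when an association is monotonic but nonlinear, and this is why computing both serves as a robustness check.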

Second, after evaluating the convergence of the selected measures, I assess their interchangeability. To be considered as interchangeable, “equivalent measures should produce similar causal inferences” (Seawright and Collier 2014, p. 124). In practice, such interchangeability can be evaluated through regressions. To give an example, in assessing the interchangeability of seven measures of rule of law, Møller and Skaaning (2011, p. 384) “test whether the results are relatively similar or dissimilar when the seven measures are used interchangeably as dependent variables in multiple OLS regression analyses”. Following their approach, I evaluate the interchangeability of common measures of state capacity by regressing the measures on the same set of external predictors in a multivariate setting.
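A stripped-down version of this interchangeability check is sketched below. The same specification is fitted once for each measure, and the coefficients and t-statistics are then compared across fits. This is only an illustration under simplifying assumptions: it uses plain pooled OLS with classical standard errors and synthetic data, whereas the replicated studies use their own longitudinal specifications. The helper names `ols_with_se` and `compare_measures` are hypothetical.

```python
import numpy as np

def ols_with_se(y, X):
    """Pooled OLS with an intercept; returns coefficients and classical SEs."""
    y = np.asarray(y, dtype=float)
    design = np.column_stack([np.ones(len(y)), np.asarray(X, dtype=float)])
    coef, *_ = np.linalg.lstsq(design, y, rcond=None)
    resid = y - design @ coef
    dof = design.shape[0] - design.shape[1]
    sigma2 = resid @ resid / dof
    cov = sigma2 * np.linalg.inv(design.T @ design)
    return coef, np.sqrt(np.diag(cov))

def compare_measures(measures, X):
    """Fit the same specification once per measure; collect coefs and t-stats."""
    results = {}
    for name, y in measures.items():
        coef, se = ols_with_se(y, X)
        results[name] = {"coef": coef, "t": coef / se}
    return results

# Synthetic data: two predictors shared by every model.
rng = np.random.default_rng(0)
X = rng.normal(size=(500, 2))
measures = {
    "measure_a": 1 + 2 * X[:, 0] - X[:, 1] + rng.normal(scale=0.1, size=500),
    "measure_b": 1 - X[:, 1] + rng.normal(scale=0.1, size=500),  # ignores X[:, 0]
}
results = compare_measures(measures, X)
```

In the synthetic data, `measure_a` responds to the first predictor and `measure_b` does not. The two fits therefore disagree on that coefficient, even though both respond to the second predictor in the same way. This is the kind of divergence the interchangeability test is meant to reveal.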

Gaining knowledge not only of the convergence of the measures but also of their interchangeability is essential, because previous studies show that even highly correlated measures can lead to completely different inferences (e.g., Casper and Tufis 2003; Vaccaro 2021). In fact, if one’s goal is sound statistical inference, assessing interchangeability might be more important than assessing convergence (Seawright and Collier 2014). A competing view, however, suggests that if measures capturing broadly similar institutional features are highly correlated, any of them will do (see e.g., Tabellini 2008). If my results indicate a substantial divergence between convergence and interchangeability, researchers who use measures of state capacity for inferential purposes should not rely solely on correlations when evaluating the empirical equivalence of the measures.

I assess the interchangeability of the selected measures of state capacity by replicating two influential studies on the relationship between state capacity and democracy. The choice to replicate studies on this specific topic is not arbitrary: the state-democracy literature constitutes one of the largest strands of research in which state capacity is examined as an outcome. As noted above, the aim of these regressions is to assess whether different proxies of state capacity lead to similar conclusions. As a by-product, however, we can also evaluate the external validity of the replicated studies. If the choice of the measure of state capacity matters substantially for the conclusions drawn, we can infer not only that the interchangeability of the measures is weak but also that the replicated studies have weak external validity, that is, that their findings cannot be generalised across frequently used measures of state capacity.

As we shall see, different measures do indeed lead to different interpretations (i.e., are not interchangeable). This means that common measures of state capacity must disagree substantially on the scores of at least some of the countries. Hence, finally, drawing on the so-called case-based approach to measurement validity, where “attention centers on fine-grained empirical detail for each case” (Seawright and Collier 2014, p. 113), I explore our measures of state capacity through individual country scores. First, these scores are analysed in bivariate settings. Then, by creating an original indicator of multivariate case-specific disagreement, I identify the countries that have particularly dissimilar (or similar) scores across the measures. Last, I briefly discuss the possible causes of case-specific disagreement.
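The paper's own indicator of multivariate case-specific disagreement is not defined in this section. Purely as a hypothetical stand-in, one simple way to quantify disagreement is the standard deviation of each country's normalised scores across the measures, as sketched below. The function name and the choice of statistic are assumptions. Countries with the largest values are those on which the measures disagree most.

```python
import numpy as np

def disagreement(scores, countries):
    """Hypothetical indicator: SD of each country's normalised scores across measures.

    scores: array of shape (n_countries, n_measures) with values in [0, 1];
    NaN marks a missing score and is ignored. Returns (country, sd) pairs,
    sorted from most to least disagreement.
    """
    sd = np.nanstd(np.asarray(scores, dtype=float), axis=1, ddof=1)
    order = np.argsort(-sd, kind="mergesort")
    return [(countries[i], float(sd[i])) for i in order]

# Invented scores for three countries on three measures.
ranking = disagreement(
    [[0.80, 0.80, 0.80],   # full agreement
     [0.20, 0.90, 0.50],   # strong disagreement
     [0.10, 0.20, 0.15]],  # mild disagreement
    ["A", "B", "C"],
)
```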

4 Results and discussion

4.1 Convergent validity

Correlation coefficients for the years of common coverage (Table 2) show that the measures are highly related to each other. Correlations are computed with both Pearson’s and Spearman’s methods to ensure the robustness of the results. Pearson’s method measures the strength of the linear association between two variables, whereas Spearman’s method measures the strength of their monotonic association. The interpretation of the results is not substantively affected by the chosen method. In general, the results show that measures of state capacity are strongly associated with each other.

With Pearson’s method, the weakest correlations are between SFI and VDEM (0.70) and between HSI and VDEM (0.72), whereas the strongest correlations are between CPI and WGI (0.94) and between QOG and WGI (0.93). Likewise, with Spearman’s method, the weakest correlations are between SFI and VDEM (0.70) and between HSI and VDEM (0.70). The strongest correlations, in turn, are between CPI and WGI (0.92), QOG and WGI (0.90), and HSI and WGI (0.90). VDEM is somewhat less strongly associated with the other measures: its correlation coefficients never exceed 0.83, regardless of the method. Overall, nonetheless, these findings indicate high convergence among the measures.

So far, we have examined the convergent validity of measures of state capacity in the years of common coverage and found that the measures are strongly related to each other from 2005 to 2009. Strong correlations, however, also hold over a longer time period (Tables S1–S7, Supplementary Material).Footnote 4 In fact, the over-time consistency of the bivariate relationships among measures of state capacity is striking. Only for two pairs of measures does the strength of the correlation vary by more than 0.10 over the examined period: the correlation between QOG and SFI ranges from 0.73 to 0.84, and the correlation between QOG and CPI ranges from 0.82 to 0.93. Interestingly, the bivariate correlations between CPI and the other measures rise markedly from 1995 to 1996, suggesting that there may be something anomalous in the 1995 CPI scores. The comparatively small number of countries (40) rated by CPI in 1995 may also affect the correlations.

The above bivariate correlations describe the relationship between pairs of variables. However, we can also analyse the relationships among our measures of state capacity with multivariate methods. PCA is commonly used as a variable-reduction technique, but it can also help us better understand the association among multiple variables and the structure of a dataset.
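A PCA of this kind can be sketched by eigendecomposing the correlation matrix of the measures, as below. The sketch assumes complete data and a correlation-matrix PCA, which is the setting in which the Kaiser criterion is usually applied. The helper name is hypothetical. The synthetic example builds seven noisy copies of one latent factor, so a single dominant component is expected by construction.

```python
import numpy as np

def pca_on_correlations(X):
    """Eigendecompose the correlation matrix of the columns of X.

    Returns eigenvalues (largest first), explained-variance shares, and the
    number of components retained under the Kaiser criterion (eigenvalue > 1).
    """
    corr = np.corrcoef(np.asarray(X, dtype=float), rowvar=False)
    eigenvalues = np.linalg.eigvalsh(corr)[::-1]   # eigvalsh returns ascending order
    shares = eigenvalues / eigenvalues.sum()
    n_kaiser = int(np.sum(eigenvalues > 1.0))
    return eigenvalues, shares, n_kaiser

# Synthetic data: seven "measures" = one latent factor + independent noise.
rng = np.random.default_rng(1)
latent = rng.normal(size=300)
X = latent[:, None] + 0.3 * rng.normal(size=(300, 7))
eigenvalues, shares, n_kaiser = pca_on_correlations(X)
```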

The results of the PCA (Table 3) show that around 87% of the total variance can be attributed to a single component, while the second component explains less than 5% of the total variance. According to the Kaiser criterion, components with eigenvalues below 1.00 should not be retained. Thus, the PCA indicates that our measures of state capacity are best represented by a single component and suggests that, statistically, the measures capture the same phenomenon. Robustness tests with extended year coverage (Tables S8–S9, Supplementary Material) do not change this interpretation, and the bottom line remains the same: measures of state capacity are strongly related to each other and seem to capture empirically a unidimensional concept of state capacity.
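A little arithmetic makes the link between the reported variance shares and the Kaiser criterion explicit. Suppose the PCA is run on the correlation matrix R of the seven measures; the use of the Kaiser criterion implies this, although the text does not state it. The eigenvalues then sum to the number of measures, so each variance share translates directly into an eigenvalue:

```latex
\sum_{k=1}^{7} \lambda_k = \operatorname{tr}(\mathbf{R}) = 7,
\qquad
\lambda_1 \approx 0.87 \times 7 \approx 6.09 > 1,
\qquad
\lambda_2 < 0.05 \times 7 = 0.35 < 1.
```

Under this assumption, only the first component passes the threshold of 1.00. Because eigenvalues are ordered, no later component passes it either.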

4.2 Interchangeability

So far, we have found that common measures of state capacity are highly convergent and seem to quantify the same concept. Nevertheless, as some previous studies on measurement validity have shown, strong correlations do not necessarily translate into high interchangeability. Therefore, to assess the empirical consequences of choosing one measure instead of another more thoroughly, I replicate two longitudinal regression models published in two influential studies on the relationship between state capacity and democracy: Bäck and Hadenius (2008) and Carbone and Memoli (2015).

Replication data are available only for Carbone and Memoli (2015). The model taken from Bäck and Hadenius (2008) is replicated to the best of my ability by scrupulously following the procedure described by the authors. I want to stress that these replications are in no way intended to criticise the studies concerned. In fact, I believe that the replicated studies have made an impressive contribution to the literature on the state-democracy nexus.

First, I replicate Bäck and Hadenius’ (2008) study (Table S10, Supplementary Material), in which the authors find evidence of a curvilinear relationship between democracy and state capacity: at low levels of democracy the relationship is negative, whereas at high levels of democracy it is positive. To operationalise state capacity, the authors of the original study aggregate Bureaucracy efficiency and Corruption from PRS Group’s ICRG into an additive index covering the years 1984 to 2002. Only three (QOG, HSI, VDEM) of our seven common measures of state capacity cover the entire period of Bäck and Hadenius’ study, so the original study is re-estimated with only three “alternative” models.

The “original” Model 1 confirms that the relationship between democracy and state capacity is curvilinear. As claimed by Bäck and Hadenius (2008), at low levels of democracy the “effect” of democracy is negative, whereas at high levels of democracy it is positive. In Model 2, state capacity is measured with QOG. The predicted effect is now similar, but the main democracy term is significant only at a lower (90%) level. The strong equivalence between the two models is not surprising, because Bäck and Hadenius’ measure of state capacity and QOG are based on almost the same sub-indicators. The curvilinear effect, however, does not hold even approximately in Model 3, in which state capacity is measured with HSI. Model 4, in which state capacity is measured with VDEM, departs even more strikingly from the original study. It also finds a curvilinear relationship between democracy and state capacity (although the democracy² term is significant only at the 90% level), but the curve is the opposite of that in the original model. With VDEM, democracy and state capacity are positively, if weakly, related at low levels of democracy, and this positive relationship seems to fade out at higher levels of democracy.
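A quadratic democracy term can yield a U-shape in one model and an inverted U in another because of the marginal effect the specification implies. The following uses generic notation introduced here only for illustration: SC for state capacity, D for the level of democracy, and Z for the control variables. The replicated models may include further terms, such as lags. Under this notation, the effect of democracy depends on its own level, and its sign switches at the turning point D*:

```latex
\mathit{SC}_{it} = \beta_0 + \beta_1 D_{it} + \beta_2 D_{it}^2 + \boldsymbol{\gamma}^{\top}\mathbf{Z}_{it} + \varepsilon_{it},
\qquad
\frac{\partial \mathit{SC}_{it}}{\partial D_{it}} = \beta_1 + 2\beta_2 D_{it},
\qquad
D^{*} = -\frac{\beta_1}{2\beta_2}.
```

Assume democracy is measured on a non-negative scale starting at zero. Then β1 < 0 < β2 gives the pattern reported for the original model (negative at low levels, positive at high levels). Conversely, β1 > 0 > β2 gives the inverted pattern found with VDEM (positive at low levels, fading at higher levels).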

Average marginal effect plots (Fig. 1) paint a more detailed picture of the consequences of choosing one measure over another. With Bäck and Hadenius’ measure of state capacity the effect of democracy is negative in countries with a complete absence of democracy (e.g., North Korea from 1994 to 2002 and Saudi Arabia from 1992 to 2002), nonsignificant in countries with a low level of democracy, and positive and statistically significant in countries with an intermediate or high level of democracy (≥ 5). Considering the levels of democracy in 2002, this means that already in countries such as Russia, Nigeria, and Burkina Faso the relationship between democracy and state capacity is positive and statistically significant.
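Plots of this kind can be reproduced from a fitted model's coefficients and their covariance matrix. The sketch below computes the marginal effect of democracy at each level, with a 95% confidence band from the delta method. Consider a model that is linear in its parameters and contains democracy only as a linear and a squared term. In such a model, the average marginal effect at a given democracy level reduces to this expression. The coefficient values, the variances, and the 0–10 democracy scale are hypothetical, not estimates from the replicated studies.

```python
import numpy as np

def marginal_effect_curve(b1, b2, var_b1, var_b2, cov_b12, levels, z=1.96):
    """Marginal effect of democracy D in a model with D and D**2 terms.

    effect(d)      = b1 + 2 * b2 * d
    Var(effect(d)) = Var(b1) + 4 d^2 Var(b2) + 4 d Cov(b1, b2)   (delta method)
    Returns the effects and the lower/upper bounds of the confidence band.
    """
    d = np.asarray(levels, dtype=float)
    effect = b1 + 2 * b2 * d
    se = np.sqrt(var_b1 + 4 * d**2 * var_b2 + 4 * d * cov_b12)
    return effect, effect - z * se, effect + z * se

# Hypothetical estimates on an assumed 0-10 democracy scale (U-shaped pattern).
levels = np.arange(0, 11)
effect, lower, upper = marginal_effect_curve(
    b1=-0.02, b2=0.004, var_b1=1e-4, var_b2=1e-6, cov_b12=-5e-6, levels=levels
)
significant = (lower > 0) | (upper < 0)  # 95% band excludes zero
```

With these invented numbers, the band excludes zero at level 0 (negative effect). It includes zero at levels 1–4 and excludes it again from level 5 upwards (positive effect). This mimics the negative, nonsignificant, positive sequence described for the original model.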

Fig. 1 Average marginal effects of democracy on state capacity, conditional on the level of democracy: replications of Bäck and Hadenius (2008)

The results are broadly equivalent when state capacity is measured with QOG. With VDEM, by contrast, the results point in the opposite direction. From low to intermediate levels of democracy (< 6), the relationship between democracy and state capacity is positive and statistically significant. Considering again the levels of democracy in 2002, this means that the effect of democracy is positive both in completely undemocratic countries such as North Korea, Saudi Arabia, and Iraq and in partially democratic countries such as Russia, Nigeria, and Burkina Faso. With VDEM, however, the relationship between democracy and state capacity becomes nonsignificant as the level of democracy increases. When state capacity is measured with HSI, the results provide no evidence of any statistically significant curvilinear association between democracy and state capacity.

As for the other independent variables, GDP/capita is positively and significantly related to state capacity with HSI and VDEM but not with the original measure of state capacity or QOG. Trade openness, in turn, is negatively related to state capacity with VDEM but not with the other three measures of state capacity. All four models agree at least on the relationship between state capacity and British colony, which is nonsignificant regardless of the chosen measure of state capacity, albeit with a positive sign with the original measure, QOG, and HSI and a negative sign with VDEM.

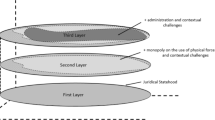

Second, I test whether Carbone and Memoli’s (2015) findings are sensitive to the choice of the measure of state capacity (Table S11, Supplementary Material). Model 1 is replicated with the original measure used in Carbone and Memoli’s study, in which Monopoly on the Use of Force and Basic Administration from Bertelsmann Stiftung are multiplicatively aggregated. The original model finds a curvilinear effect of democracy on state capacity: at extremely low levels of democracy the effect is negative, but it turns positive once a certain level of democracy has been reached.

In Models 2–8, the original measure is replaced, one by one, with our seven measures of state capacity. Even though the main democracy and democracy² terms have the same positive signs across models, choosing one measure over another can produce completely different statistical inferences. In the re-estimated models, the strong curvilinear association between democracy and state capacity holds only with FSI (Model 2) and SFI (Model 5). When state capacity is measured with CPI (Model 7), neither of the democracy terms is statistically significant. When it is measured with WGI (Model 6) or VDEM (Model 8), only the main democracy term is statistically significant. With QOG (Model 3) and HSI (Model 4), both democracy terms are statistically significant at conventional levels, but the curvilinear relationship is much weaker than with FSI and SFI.

As before, average marginal effect plots (Fig. 2) can guide a more exhaustive interpretation of the results. The plots show that the main finding of the original model holds in only two of the re-estimated models. Using FSI or SFI leads to findings relatively similar to those of the original model. Using QOG, HSI, or VDEM instead suggests that the positive effect of democracy on state capacity begins only once a country has reached an intermediate level of democracy. With none of these three measures, however, does democracy have a statistically significant negative effect on state capacity in autocracies. Models with WGI and CPI, in turn, support none of the above findings. With WGI, the effect of democracy on state capacity is statistically significant only at intermediate levels of democracy. With CPI, the relationship between democracy and state capacity does not depend on the level of democracy at all.

Fig. 2 Average marginal effects of democracy on state capacity, conditional on the level of democracy: replications of Carbone and Memoli (2015)

The original study also investigates the role of democratic duration in the relationship between democracy and state capacity. Model 1 confirms that “democratic duration becomes a crucial [positive] factor when combined with the degree of democracy” (Carbone and Memoli 2015, p. 18),Footnote 5 but none of the re-estimated models supports this interpretation. Carbone and Memoli (2015, p. 15) also find that “both a country’s level of development and its size matter for state consolidation, whereas ethnic diversity largely seems to play no role”. The replications show, however, that besides the original model, ethnic diversity plays no role only with QOG, CPI, and VDEM, and that a country’s size matters only with FSI, SFI, WGI, and CPI. GDP/capita, by contrast, is significantly and positively related to state capacity in all models.

The replication and re-analysis of two influential studies with several measures of state capacity has demonstrated convincingly that common measures of state capacity are only weakly interchangeable. The choice of measure plays a key role in the conclusions drawn, undermining the generalisability of these studies. Since the measures of state capacity do not cover exactly the same sample of countries, my findings could be driven by differences in samples rather than in measures. To rule out this so-called “selection bias”, I re-run the previous sets of models on a common sample of observations. The results (Tables S12–S13, Supplementary Material) are not substantially affected by restricting the models to the same sample. Selection bias thus does not drive our findings: the different conclusions stem from heterogeneity in the measures, not in the samples.
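Restricting all models to a common sample amounts to listwise deletion across measures: an observation is kept only if every measure covers it. The sketch below shows this intersection step on a toy dataset keyed by (country, year); the country labels and values are hypothetical, and `common_sample` is an illustrative helper, not a function from the replication files.

```python
def common_sample(data):
    """Keep only the (country, year) observations covered by every measure.

    `data` maps measure name -> {(country, year): score}.
    """
    shared = set.intersection(*(set(obs) for obs in data.values()))
    return {measure: {key: obs[key] for key in sorted(shared)}
            for measure, obs in data.items()}


# Hypothetical coverage: each measure misses a different observation.
data = {
    "QOG": {("A", 2009): 0.40, ("B", 2009): 0.70, ("C", 2009): 0.20},
    "CPI": {("A", 2009): 0.30, ("B", 2009): 0.80},
    "HSI": {("A", 2009): 0.50, ("B", 2009): 0.60, ("D", 2009): 0.90},
}

restricted = common_sample(data)
print(sorted(restricted["QOG"]))  # [('A', 2009), ('B', 2009)]
```

Every model is then re-estimated on the restricted data, so that any remaining differences across measures cannot come from differing country coverage.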

We have found strong evidence that frequently used measures of state capacity are highly correlated and seem to tap empirically into the same unidimensional concept. It is commonly thought that highly correlated variables are interchangeable. Nevertheless, my findings suggest that researchers should not take for granted that strongly correlated measures lead to similar inferences. In fact, they show that the interchangeability of measures of state capacity is generally weak. As a consequence, the results of quantitative studies on state capacity are unlikely to hold when alternative measures of state capacity are used. Not a single pair of measures produces consistently similar results across the replicated models. It is worrisome that previous findings on the state-democracy nexus are so sensitive to the chosen measure.

4.3 Case-specific disagreement

We have found robust evidence that the interchangeability of measures of state capacity is weak. Put differently, many research results are idiosyncratic to the chosen measure of state capacity, even though the measures are strongly correlated with each other. These seemingly contradictory findings require further investigation, and we are likely to understand their causes better if we turn our attention to individual country-level scores.

With bivariate scatter plots of the state capacity measures (Fig. S1, Supplementary Material), we can see how similarly individual countries are rated in the most recent year of common observations. Overall, as the correlation analysis suggested, many countries are rated with high consistency by each pair of measures. For example, Somalia has an extremely low score on all measures, whereas the Nordic countries, Switzerland, and New Zealand have extremely high scores on all measures. Yet it also becomes evident that some countries are rated very differently by our measures. Given that all measures are normalised to range from 0 to 1, some of the bivariate case-specific disagreements are remarkably large (Table S14, Supplementary Material).
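One common way to put measures on a 0–1 scale, assumed in the sketch below, is min-max rescaling over the observed values, with the scale flipped for fragility indices such as FSI and SFI, on which higher raw values indicate more fragility rather than more capacity. The paper's exact procedure may differ; `rescale` and the raw values are hypothetical.

```python
def rescale(values, reverse=False):
    """Min-max rescale to [0, 1] using the observed minimum and maximum.

    reverse=True flips the scale (1 - x), e.g. for fragility indices where a
    higher raw score means less state capacity.
    """
    lo, hi = min(values), max(values)
    scaled = [(v - lo) / (hi - lo) for v in values]
    return [1.0 - s for s in scaled] if reverse else scaled


raw_fragility = [10, 20, 30]  # hypothetical raw scores, higher = more fragile
print(rescale(raw_fragility))                # [0.0, 0.5, 1.0]
print(rescale(raw_fragility, reverse=True))  # [1.0, 0.5, 0.0]
```

Once every measure lies on the same 0–1 scale, a gap such as 0.40 units can be read on a common scale for every pair of measures, which is what makes the bivariate disagreements comparable.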

The most substantial disagreements in country scores seem to be between SFI and CPI and between SFI and VDEM. As many as 45 countries are rated at least 0.40 units higher by SFI than by CPI. In seven of these, the discrepancy between the two ratings exceeds 0.60 units: Argentina (0.70), Belarus (0.68), Jamaica (0.65), Albania (0.63), Ukraine (0.63), Greece (0.63), and Italy (0.61). Differences between SFI and VDEM are likewise substantial: SFI rates Belarus 0.71 units higher than VDEM, and in total 34 countries are rated at least 0.40 units higher by SFI than by VDEM.
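The bivariate disagreements reported in this section are signed differences between two measures' scores for the same country, and the counts are directional (how many countries measure A rates at least a given number of units above measure B). The sketch below computes both from the Belarus and Iran scores quoted in this section; the helper names `gap` and `count_gaps` are hypothetical, and countries lacking either measure are simply skipped.

```python
# Scores on the common 0-1 scale, as quoted in this section; only the
# measures reported for each country are included.
SCORES = {
    "Belarus": {"SFI": 0.84, "HSI": 0.68, "QOG": 0.36, "CPI": 0.16,
                "WGI": 0.24, "VDEM": 0.13, "FSI": 0.37},
    "Iran": {"HSI": 0.67, "QOG": 0.45, "CPI": 0.08},
}

def gap(scores, country, higher, lower):
    """Units by which measure `higher` rates the country above measure `lower`."""
    s = scores[country]
    return round(s[higher] - s[lower], 2)  # round away float noise

def count_gaps(scores, higher, lower, threshold=0.40):
    """Countries that `higher` rates at least `threshold` units above `lower`.

    Directional: count_gaps(A, B) and count_gaps(B, A) generally differ.
    Countries missing either measure are skipped.
    """
    return sum(
        1 for s in scores.values()
        if higher in s and lower in s
        and round(s[higher] - s[lower], 2) >= threshold
    )

print(gap(SCORES, "Belarus", "SFI", "CPI"))  # 0.68
print(gap(SCORES, "Iran", "QOG", "CPI"))     # 0.37
print(count_gaps(SCORES, "HSI", "CPI"))      # 2
print(count_gaps(SCORES, "CPI", "HSI"))      # 0
```

Applied to the full set of countries, the same two helpers yield counts like those reported here; the asymmetry between the last two calls mirrors the finding that some measures systematically rate countries higher than others.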

In many cases, SFI disagrees with most of the other measures as well. SFI rates Belarus 0.60 units higher than WGI, and six other countries are rated at least 0.40 units higher by SFI than by WGI. SFI rates Albania 0.55 units higher than QOG, and in total 20 countries are rated at least 0.40 units higher by SFI than by QOG. SFI rates Belarus 0.47 units higher than FSI, and five other countries are rated with a discrepancy of at least 0.40 units between SFI and FSI. By contrast, case-specific disagreements between SFI and HSI are smaller: the most discrepantly rated country is Argentina, which SFI rates 0.36 units higher than HSI.

Differences in country ratings between HSI and the other five measures are also relatively large. HSI rates 21 countries at least 0.40 units higher than CPI. Six of these countries are rated at least 0.50 units higher: Iran (0.59), Russia (0.54), Venezuela (0.53), Belarus (0.52), Armenia (0.50), and Kazakhstan (0.50). For example, Iran has a score of 0.67 with HSI but only 0.08 with CPI. HSI rates seven countries at least 0.50 units higher than VDEM: Egypt (0.66), Belarus (0.55), Kuwait (0.55), Malaysia (0.53), Tunisia (0.50), Azerbaijan (0.50), and Kazakhstan (0.50). Iran is the country with the largest rating discrepancy between HSI and FSI: HSI rates it 0.44 units higher. Venezuela shows the largest discrepancy between HSI and QOG: HSI rates it 0.50 units higher. As for HSI and WGI, Belarus is rated 0.44 units higher by HSI; it is the only country that the two indices rate with a case-specific disagreement of 0.40 units or more.

CPI tends to assign comparatively low scores. Nine countries are rated at least 0.30 units higher by FSI than by CPI, but no country is rated at least 0.30 units higher by CPI than by FSI. The country with the largest disagreement between the two measures is Argentina, rated 0.50 units higher by FSI. A somewhat similar pattern emerges when comparing CPI and VDEM: four countries are rated at least 0.30 units higher by VDEM, and only one is rated at least 0.30 units higher by CPI. As for CPI and QOG, Iran shows the largest discrepancy: QOG rates Iran 0.45 while CPI rates it 0.08, so its score is 0.37 units higher with QOG. WGI and CPI rate countries fairly similarly. The Philippines shows the largest disagreement between the two measures: its score is 0.33 units higher with WGI than with CPI.

The country with the largest disagreement between WGI and FSI is Cyprus, which is rated 0.81 by WGI and 0.48 by FSI. WGI and QOG rate countries relatively homogeneously: no country is rated with a discrepancy of more than 0.30 units. Differences in country scores between WGI and VDEM are more pronounced. Three countries show a disagreement of more than 0.40 units between the two measures: Tunisia (0.45), Malaysia (0.45), and Egypt (0.42). In all three cases, WGI assigns a higher score than VDEM. As for QOG and VDEM, only Egypt is rated with a difference of more than 0.40 units: its score is 0.01 with VDEM and 0.42 with QOG. Differences in country scores between QOG and FSI are also relatively small: only two countries are rated with a disagreement of more than 0.30 units. The largest case-specific disagreement between FSI and VDEM concerns Libya, which is rated 0.05 by VDEM and 0.47 by FSI. Hence, Libya's level of state capacity is 0.42 units higher with FSI than with VDEM. No other country is rated more than 0.40 units higher by FSI than by VDEM, or vice versa.

The above analysis shows that, on the whole, common measures of state capacity do not always rate countries similarly, even though they are highly correlated. Given how large some bivariate case-specific disagreements are, it becomes more understandable why the interchangeability of the measures is weak. To sum up, the most discrepantly rated countries (Table 4) tend to have relatively high scores with SFI or HSI and relatively low scores with CPI and VDEM.

We might expect countries that repeatedly appear among the most divergently rated in the bivariate comparisons, such as Belarus and Kuwait, to stand out in multivariate discrepancy as well. To determine multivariate case-specific disagreement, I compute, for each country, the standard deviation of its scores across all measures. A higher standard deviation indicates that a country's ratings are more spread out across measures, and a lower standard deviation indicates the opposite.
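The multivariate disagreement indicator is a per-country standard deviation across measures. The sketch below applies it to the seven Belarus scores reported later in this section and to a hypothetical "Country X" whose ratings barely differ. Whether the sample or the population standard deviation is used is not stated here, so the choice of `statistics.stdev` (sample SD) is an assumption.

```python
import statistics

scores = {
    # Belarus: scores as reported in the text (0-1 scale).
    "Belarus": {"SFI": 0.84, "HSI": 0.68, "QOG": 0.36, "CPI": 0.16,
                "WGI": 0.24, "VDEM": 0.13, "FSI": 0.37},
    # Hypothetical country rated almost identically by all measures.
    "Country X": {"SFI": 0.90, "HSI": 0.88, "QOG": 0.91, "CPI": 0.89,
                  "WGI": 0.92, "VDEM": 0.90, "FSI": 0.87},
}

# Multivariate disagreement: spread of a country's scores across measures
# (sample standard deviation, an assumption).
disagreement = {country: statistics.stdev(list(s.values()))
                for country, s in scores.items()}

# Rank countries from most to least discrepantly rated.
for country, sd in sorted(disagreement.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{country}: SD = {sd:.3f}")
```

Ranking all countries by this value yields lists of the most and least discrepantly rated countries.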

As expected, Belarus, Albania, and Kuwait are among the countries with the largest multivariate disagreement (Fig. 3, left panel). This group of countries has fairly heterogeneous characteristics. It includes both developed and developing countries and both democratic and authoritarian regimes, although there are no liberal democracies besides Italy and Greece. Politico-geographically, most of these countries are located in Eastern Europe, the Middle East/North Africa, or Latin America/the Caribbean. Sub-Saharan African countries, by contrast, are completely absent from the left panel. Nearly half of the 20 most discrepantly rated countries have a Muslim-majority population.

Countries with the smallest multivariate disagreement (Fig. 3, right panel) can be more straightforwardly categorised into two distinct groups: highly dysfunctional states (e.g., Somalia, Iraq, Liberia) and Western liberal democracies (e.g., Denmark, France, Australia). These countries have either very low or very high capacity, but they share the characteristic that their scores are more or less equivalent across measures.

Figure 4 provides illustrative multivariate information about the scores of the most discrepantly rated countries. It confirms a pattern previously suggested by the bivariate comparisons: most of these countries have relatively high scores with SFI and HSI but relatively low scores with CPI and VDEM. Countries with similar-looking “nets” also have fairly similar scores across the different measures (i.e., they are multivariately equivalent). For instance, the shapes of the nets of Italy, Greece, Albania, and Ukraine match quite closely: all four countries have higher ratings with SFI, HSI, and FSI but lower ratings with the other four measures. Russia, Belarus, and Kazakhstan also share some interesting similarities: comparatively high ratings with SFI and HSI, intermediate levels of state capacity with QOG, FSI, and WGI, and relatively low scores with CPI and VDEM. Tunisia, Egypt, Libya, and Cuba, in turn, are rated particularly low with VDEM.

Some of these discrepancies are likely to stem from differences in the defining attributes of the measures. VDEM and CPI focus on corruption and related procedural aspects. SFI and HSI capture a broader set of dimensions: in both indices the coercive dimension of state capacity plays a relatively important role, and both contain sub-indicators related to violence, security, and stability. WGI and QOG focus mainly on the quality of the bureaucracy, although the former also emphasises the quality of public services, whereas the latter also gives weight to corruption and the rule of law. FSI takes into consideration various aspects related to state capacity, such as the provision of public services, the influence of external actors, the ability to collect taxes, the rule of law, environmental pressures, structural inequality, and public finances. Hence, FSI conceives of state capacity more broadly than the other measures do.

If we examine the ratings of individual countries in relation to the aspects covered by each measure, we can indeed better understand some of the causes of the rating inconsistencies. Many of the countries with large multivariate disagreement seem to have a highly corrupt bureaucracy but exert strong control over society (e.g., Belarus, Russia, Kazakhstan, Cuba, Venezuela, Malaysia, Egypt, Kuwait). All these countries tend to have comparatively high scores with SFI and HSI but lower scores with the other measures.

For instance, Belarus, the country with both the largest multivariate and the largest bivariate rating discrepancy, is a stable and ethnically homogeneous country with powerful state institutions (Way 2005) and a high capacity to control society (Silitski 2005). Its public apparatus is characterised by low autonomy and a lack of impartiality, and its public services are broad-ranging but qualitatively deficient (Dimitrova et al. 2021). Given these characteristics, it is unlikely to be a coincidence that Belarus has relatively high scores with SFI (0.84) and HSI (0.68) but much lower scores with QOG (0.36), CPI (0.16), WGI (0.24), VDEM (0.13), and FSI (0.37). SFI and HSI focus on some of the areas in which Belarus performs well, but neither of the two measures focuses on corruption or the rule of law, which instead play a bigger role in the other five measures.

The comparative analysis of country ratings and the analysis of rating discrepancy have shown that measures disagree considerably on the level of state capacity in certain countries. Some of these disagreements can be attributed to the different aspects of state capacity quantified by each instrument, which is encouraging: by rigorously matching a chosen definition of state capacity with a chosen measure, and by making these choices clear to the reader, scholars can effectively push the research agenda on state capacity forward. Less encouraging, however, is the finding that case-specific disagreements depend systematically on the level of state capacity (Fig. 5).

Regardless of the measure, there is a non-linear relationship between the level of state capacity and multivariate disagreement. Measures tend to agree about countries with extreme levels of state capacity, whereas the largest case-specific divergences systematically occur at intermediate levels. This is understandable, because survey experts and coders are more likely to agree about clear-cut cases at the extreme ends of the spectrum. Less clear cases are simply harder to code, and experts can be expected to have diverging perceptions of state capacity in these countries. This systematic discrepancy can thus be attributed to the subjective nature of our measures, and it affects our knowledge of state capacity even when a given working definition matches the selected measure perfectly.
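One simple way to check the pattern described above is to average the disagreement indicator within low, intermediate, and high bands of state capacity. The sketch below does this with hypothetical (level, disagreement) pairs constructed to mimic the inverted-U shape; the cut-points at 1/3 and 2/3 and the helper `mean_by_level` are illustrative choices, not the procedure behind Fig. 5.

```python
def mean_by_level(pairs, cuts=(1 / 3, 2 / 3)):
    """Average disagreement (e.g. a country's SD across measures) within
    low / intermediate / high bands of the state capacity level."""
    bins = {"low": [], "intermediate": [], "high": []}
    for level, disagreement in pairs:
        if level < cuts[0]:
            bins["low"].append(disagreement)
        elif level < cuts[1]:
            bins["intermediate"].append(disagreement)
        else:
            bins["high"].append(disagreement)
    # Skip empty bands to avoid division by zero.
    return {band: sum(v) / len(v) for band, v in bins.items() if v}


# Hypothetical (level of state capacity, multivariate disagreement) pairs.
pairs = [(0.05, 0.03), (0.15, 0.06), (0.25, 0.09),
         (0.45, 0.20), (0.55, 0.22), (0.60, 0.18),
         (0.80, 0.07), (0.90, 0.04), (0.95, 0.02)]

for band, mean_sd in mean_by_level(pairs).items():
    print(f"{band}: mean disagreement = {mean_sd:.3f}")
```

With real data, the same comparison could be run separately for the level measured by each index, or complemented by a quadratic fit of disagreement on level.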

5 Conclusions

This study has comprehensively analysed and compared seven frequently used operationalisations of state capacity. More specifically, I have examined the empirical implications of the choice of measure by evaluating the convergent validity, interchangeability, and case-specific disagreement of the measures. The main findings of this paper are manifold. In general terms, through one of the first systematic quantitative comparisons of cross-national measures of state capacity, the study provides important guidance for future quantitative research on the topic and shows persuasively that we should refrain from drawing common conclusions about state capacity from studies that use different measures.

First, we found that the convergent validity of the seven surveyed measures of state capacity is high. All measures are positively correlated with each other, and the bivariate correlations are strong and consistent over time. PCA confirms the multivariate association and empirical unidimensionality of the measures. Qualitatively, each measure captures slightly different aspects of state capacity, but statistically they seem to tap into the same concept.
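Unidimensionality in a PCA is usually judged by how much of the total variance the first principal component captures, which for standardised variables equals the largest eigenvalue of the correlation matrix divided by the number of variables. The sketch below estimates that share by power iteration; the uniform correlation of 0.85 among seven measures is a hypothetical value chosen so that the answer is known in closed form (largest eigenvalue 1 + 6·0.85 = 6.1, i.e. a share of about 0.87). `first_pc_share` is an illustrative helper.

```python
import math

def first_pc_share(R, iters=200):
    """Share of total variance captured by the first principal component of a
    correlation matrix R: its largest eigenvalue divided by its size.
    The largest eigenvalue is found by power iteration."""
    k = len(R)
    # Positive start vector; for a correlation matrix with positive entries it
    # is not orthogonal to the leading eigenvector, so the iteration converges.
    v = [1.0 / math.sqrt(k)] * k
    for _ in range(iters):
        w = [sum(R[i][j] * v[j] for j in range(k)) for i in range(k)]
        norm = math.sqrt(sum(x * x for x in w))
        v = [x / norm for x in w]
    # Rayleigh quotient v'Rv with unit-length v gives the eigenvalue.
    lam = sum(v[i] * sum(R[i][j] * v[j] for j in range(k)) for i in range(k))
    return lam / k


# Hypothetical: seven measures, every pair correlated at 0.85.
R = [[1.0 if i == j else 0.85 for j in range(7)] for i in range(7)]
print(round(first_pc_share(R), 3))  # 0.871
```

In practice one would compute all eigenvalues with a linear algebra library and also inspect how many exceed 1; the point here is only how the "share of the first component" summary is obtained.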

Second, despite the strong associations among the measures, the replicated regression models revealed that their interchangeability is weak and that findings are sensitive to the chosen measure. In the most worrisome cases, different measures of state capacity led to completely opposite interpretations. Scholars working on state capacity must be aware that their results are unlikely to generalise across common measures unless they provide explicit evidence that the results are robust to multiple measures. The results of the replications also cast doubt on existing knowledge of the state-democracy nexus. How solid is our knowledge of the topic if findings are so sensitive to the chosen measure?

Third, to make sense of the seemingly contradictory finding of strongly correlated but weakly interchangeable measures, we shifted the level of analysis to individual countries and found striking differences in country scores among measures. By creating an indicator of multivariate disagreement, we identified the countries that our seven measures of state capacity most agree or disagree upon. The countries with the highest rating discrepancy were then analysed against each measure. We found that high case-specific disagreement can be attributed to at least two factors: the different aspects of state capacity that each measure captures and the systematic disagreement at intermediate levels of state capacity.

Despite high convergent validity, my findings demonstrate that measures of state capacity are by no means equivalent. SFI and HSI rate countries comparatively high, CPI and VDEM rate them comparatively low, and in many cases individual disagreements are overwhelming. This study has focused on country scores in 2009, the most recent year of common observations. However, an analysis of the scores over time could provide additional insights into the disagreements among the measures. For instance, between 1999 and 2013, under Chávez’s rule, Venezuela’s level of state capacity increases slightly with HSI and FSI, stays more or less the same with SFI, and decreases with WGI, CPI, VDEM, and QOG; with QOG, the decrease is substantial. If one’s theoretical approach presumes that Venezuela’s state capacity decreased under Chávez’s rule, then HSI, FSI, and SFI should probably not be used, given that these three measures suggest otherwise. Scholars need to be aware of these disagreements and of the empirical implications of using one measure instead of another. At the very least, the choice of a given measure should be theoretically justified, and scholars should show that the chosen measure fits their working definition of state capacity.

Last, the findings of this study have an interesting methodological implication. Even though convergent validity is traditionally assessed with correlations, this study has shown that high convergence does not automatically imply equivalence or interchangeability. As we have seen, highly correlated measures can rate countries in surprisingly different ways and thus produce completely contrasting statistical inferences. Scholars should therefore not assume that highly correlated measures (of any given concept) portray the same picture.
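The methodological point can be illustrated with a toy example: two hypothetical measures that agree exactly on ten of eleven countries and differ by 0.40 units on the remaining one still correlate at about 0.94. The numbers are invented for illustration, and `pearson` is a plain implementation of Pearson's r.

```python
import math

def pearson(x, y):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


# Hypothetical scores for eleven countries on a 0-1 scale.
measure_a = [i / 10 for i in range(11)]
measure_b = list(measure_a)
measure_b[5] = 0.1  # one country rated 0.40 units lower by measure_b

r = pearson(measure_a, measure_b)
largest_gap = max(abs(a - b) for a, b in zip(measure_a, measure_b))
print(round(r, 2), round(largest_gap, 2))  # 0.94 0.4
```

A correlation this high would normally be read as strong convergence, yet the country at the centre of the distribution is placed very differently by the two measures.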

Notes

1. According to some scholars, state capacity and quality of government are not exactly the same thing, but given that the two concepts are at the very least highly interconnected, the same reasoning applies to both.

2. An updated version of the index has recently been published in Hanson and Sigman (2021), but the study at hand uses the 2013 version of the index, which is the one that has been extensively used in the literature.

3. WGI is also available biennially from 1996 to 2002.

4. 1995–2017; data before 1995 are not analysed because most of the measures do not cover earlier years.

5. Text in square brackets added by the author.

References

Acemoglu, D., Robinson, J.A.: The Narrow Corridor: States, Societies, and the Fate of Liberty. Penguin Press, New York (2019)

Adcock, R., Collier, D.: Measurement validity: a shared standard for qualitative and quantitative research. Am. Polit. Sci. Rev. 95(3), 529–546 (2001)

Anaya-Muñoz, A., Murdie, A.: The will and the way: how state capacity and willingness jointly affect human rights improvement. Hum. Rights Rev. (2021). https://doi.org/10.1007/s12142-021-00636-y

Andersen, D., Møller, J., Rørbæk, L.L., Skaaning, S.-E.: State capacity and political regime stability. Democratization 21(7), 1305–1325 (2014)

Bäck, H., Hadenius, A.: Democracy and state capacity: exploring a J-shaped relationship. Governance 21(1), 1–24 (2008)

Besley, T., Persson, T.: Wars and state capacity. J. Eur. Econ. Assoc. 6(2–3), 522–530 (2008)

Bizzarro, F., Gerring, J., Knutsen, C.H., Hicken, A., Bernhard, M., Skaaning, S.E., Coppedge, M., Lindberg, S.I.: Party strength and economic growth. World Polit. 70(2), 275–320 (2018)

Carbone, G., Memoli, V.: Does democratization foster state consolidation? Democratic rule, political order, and administrative capacity. Governance 28(1), 5–24 (2015)

Casper, G., Tufis, C.: Correlation versus interchangeability: the limited robustness of empirical findings on democracy using highly correlated data sets. Polit. Anal. 11(2), 196–203 (2003)

Cingolani, L.: The role of state capacity in development studies. J. Dev. Perspect. 2(1–2), 88–114 (2018)

Conway, B., Spruyt, B.: Catholic commitment around the globe: a 52-country analysis. J. Sci. Study Relig. 57(2), 276–299 (2018)

Coppedge, M., Gerring, J., Knutsen, C.H., Lindberg, S.I., Teorell, J., Altman, D. et al.: V-Dem Codebook v9. Varieties of Democracy (V-Dem) Project (2019)

D’Arcy, M., Nistotskaya, M.: State first, then democracy: using cadastral records to explain governmental performance in public goods provision. Governance 30(2), 193–209 (2017)

Daxecker, U., Prins, B.C.: Enforcing order: territorial reach and maritime piracy. Confl. Manag. Peace Sci. 34(4), 359–379 (2017)

DeRouen, K.R., Bercovitch, J.: Enduring internal rivalries: a new framework for the study of civil war. J. Peace Res. 45(1), 55–74 (2008)

Dimitrova, A., Mazepus, H., Toshkov, D., Chulitskaya, T., Rabava, N., Ramasheuskaya, I.: The dual role of state capacity in opening socio-political orders: assessment of different elements of state capacity in Belarus and Ukraine. East Eur. Polit. 37(1), 19–42 (2021)

Dincecco, M.: The rise of effective states in Europe. J. Econ. Hist. 75(3), 901–918 (2015)

Englehart, N.A.: State capacity, state failure, and human rights. J. Peace Res. 46(2), 163–180 (2009)

Fearon, J.D., Laitin, D.D.: Ethnicity and civil war. Am. Polit. Sci. Rev. 97(1), 75–90 (2003)

Fortin, J.: A tool to evaluate state capacity in post-communist countries, 1989–2006. Eur. J. Polit. Res. 49(5), 654–686 (2010)

Fund for Peace: Fragile States Index Annual Report 2019. Fund for Peace, Washington, DC (2019)

Gjerløw, H., Knutsen, C.H., Wig, T., Wilson, M.C.: One Road to Riches? How State Building and Democratization Affect Economic Development. Cambridge University Press, Cambridge (2021)

Grassi, D., Memoli, V.: Political determinants of state capacity in Latin America. World Dev. 88, 94–106 (2016)

Grävingholt, J., Ziaja, S., Kreibaum, M.: Disaggregating state fragility: a method to establish a multidimensional empirical typology. Third World Q. 36(7), 1281–1298 (2015)

Grundholm, A.T., Thorsen, M.: Motivated and able to make a difference? The reinforcing effects of democracy and state capacity on human development. Stud. Comp. Int. Dev. 54(3), 381–414 (2019)

Hanson, J.K.: State capacity and the resilience of electoral authoritarianism: conceptualizing and measuring the institutional underpinnings of autocratic power. Int. Polit. Sci. Rev. 39(1), 17–32 (2018)

Hanson, J.K., Sigman, R.: Leviathan’s latent dimensions: measuring state capacity for comparative political research. Unpublished Manuscript. Version: September 2013 (2013)

Hanson, J.K., Sigman, R.: Leviathan’s latent dimensions: measuring state capacity for comparative political research. J. Polit. 83(4), 1495–1510 (2021)

Hendrix, C.S.: Measuring state capacity: theoretical and empirical implications for the study of civil conflict. J. Peace Res. 47(3), 273–285 (2010)

Hiilamo, H., Glantz, S.A.: Implementation of effective cigarette health warning labels among low and middle income countries: state capacity, path-dependency and tobacco industry activity. Soc. Sci. Med. 124, 241–245 (2015)

Jerven, M.: Poor Numbers. Cornell University Press, Ithaca, NY (2013)

Jimenez-Ayora, P., Ulubaşoğlu, M.A.: What underlies weak states? The role of terrain ruggedness. Eur. J. Polit. Econ. 39, 167–183 (2015)

Jolliffe, I.T.: Principal Component Analysis, 2nd edn. Springer, New York (2002)

Joshi, D.K., Hughes, B.B., Sisk, T.D.: Improving governance for the post-2015 sustainable development goals: scenario forecasting the next 50 years. World Dev. 70, 286–302 (2015)

Kaufmann, D., Kraay, A., Mastruzzi, M.: The worldwide governance indicators: methodology and analytical issues. Hague J. Rule Law 3(2), 220–246 (2011)

Lee, M.M., Zhang, N.: Legibility and the informational foundations of state capacity. J. Polit. 79(1), 118–132 (2017)

Lin, T.H.: Governing natural disasters: state capacity, democracy, and human vulnerability. Soc. Forces 93(3), 1267–1300 (2015)

Marshall, M.G., Elzinga-Marshall, G.: Global Report 2017: Conflict, Governance, and State Fragility. Center for Systemic Peace, Vienna, VA (2017)

McDoom, O.S., Gisselquist, R.M.: The measurement of ethnic and religious divisions: spatial, temporal, and categorical dimensions with evidence from Mindanao, the Philippines. Soc. Indic. Res. 129(2), 863–891 (2016)

Mogues, T., Erman, A.: Institutional arrangements to make public spending responsive to the poor: when intent meets political economy realities. Dev. Policy Rev. 38(1), 100–123 (2020)

Møller, J., Skaaning, S.-E.: On the limited interchangeability of rule of law measures. Eur. Polit. Sci. Rev. 3(3), 371–394 (2011)

Mudde, C., Schedler, A.: Introduction: rational data choice. Polit. Res. Q. 63(2), 410–416 (2010)

Povitkina, M., Bolkvadze, K.: Fresh pipes with dirty water: how quality of government shapes the provision of public goods in democracies. Eur. J. Polit. Res. 58(4), 1191–1212 (2019)

PRS Group: International Country Risk Guide Methodology. Political Risk Group (2018). https://www.prsgroup.com/wp-content/uploads/2018/01/icrgmethodology.pdf

Rothstein, B., Samanni, M., Teorell, J.: Explaining the welfare state: power resources vs. the quality of government. Eur. Polit. Sci. Rev. 4(1), 1–28 (2012)

Savoia, A., Sen, K.: Measurement, evolution, determinants, and consequences of state capacity: a review of recent research. J. Econ. Surv. 29(3), 441–458 (2015)

Seawright, J., Collier, D.: Rival strategies of validation: tools for evaluating measures of democracy. Comp. Polit. Stud. 47(1), 111–138 (2014)

Serikbayeva, B., Abdulla, K., Oskenbayev, Y.: State capacity in responding to COVID-19. Int. J. Public Adm. 44(11–12), 920–930 (2021)

Shyrokykh, K.: Effects and side effects of European Union Assistance on the former soviet republics. Democratization 24(4), 651–669 (2017)

Silitski, V.: Preempting democracy: the case of Belarus. J. Democr. 16(4), 83–97 (2005)

Tabellini, G.: Institutions and culture. J. Eur. Econ. Assoc. 6(2–3), 255–294 (2008)

Teorell, J., Dahlberg, S., Holmberg, S., Rothstein, B., Pachon, N.A., Svensson, R.: The QoG Standard Dataset 2019 (2019)

Transparency International: Corruption Perceptions Index 2019: Frequently Asked Questions (2019). https://www.transparency.org/en/cpi

Vaccaro, A.: Comparing measures of democracy: statistical properties, convergence, and interchangeability. Eur. Polit. Sci. 20, 666–684 (2021)

Van Ham, C., Seim, B.: Strong states, weak elections? How state capacity in authoritarian regimes conditions the democratizing power of elections. Int. Polit. Sci. Rev. 39(1), 49–66 (2018)

Walther, D., Hellström, J., Bergman, T.: Government instability and the state. Polit. Sci. Res. Methods 7(3), 579–594 (2019)

Wang, E.H., Xu, Y.: Awakening leviathan: the effect of democracy on state capacity. Res. Polit. 5(2), 1–7 (2018)

Way, L.A.: Authoritarian state building and the sources of regime competitiveness in the fourth wave: the cases of Belarus, Moldova, Russia, and Ukraine. World Polit. 57(2), 231–261 (2005)

White, D., Herzog, M.: Examining state capacity in the context of electoral authoritarianism, regime formation and consolidation in Russia and Turkey. J. Southeast Eur. Black Sea 16(4), 551–569 (2016)

Acknowledgements

The author would like to thank the participants of the QoG Lunch Seminar at the University of Gothenburg on April 29th 2020 and the participants of the WIDER Seminar Series event at the United Nations University World Institute for Development Economics Research on April 29th 2020. The author is particularly grateful to (in alphabetical order) Nicholas Charron, Agnes Cornell, Rachel M. Gisselquist, Marcia Grimes, Victor Lapuente, Marina Nistotskaya, Luigi M. Solivetti, and Jan Teorell for their helpful comments and suggestions on earlier versions of this paper. The author is also grateful to Giovanni Carbone and Vincenzo Memoli for kindly sharing their replication dataset.

Funding

Open access funding provided by Università degli Studi dell'Insubria within the CRUI-CARE Agreement. The author declares that no funds, grants, or other support were received during the preparation of this manuscript.


Ethics declarations

Competing interest

The author has no relevant financial or non-financial interests to disclose.



Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Vaccaro, A. Measures of state capacity: so similar, yet so different. Qual Quant 57, 2281–2302 (2023). https://doi.org/10.1007/s11135-022-01466-x


DOI: https://doi.org/10.1007/s11135-022-01466-x