Abstract

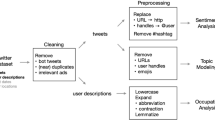

Owing to recent advances in natural language processing, social scientists increasingly use automatic text classification methods. This article asks how researchers’ subjective decisions affect the performance of supervised deep learning models. The aim is to offer researchers practical advice on: (1) whether it is more efficient to monitor coders’ work to ensure a high-quality training dataset, or to have every document coded once and obtain a larger dataset instead; (2) whether lemmatisation improves model performance; (3) whether it is better to apply passive or active learning approaches; and (4) whether the answers depend on the models’ classification tasks. The models were trained to detect whether a tweet concerns current affairs or political issues, what the tweet’s subject matter is, and what stance its author takes on it. The study uses a sample of 200,000 manually coded tweets published by Polish political opinion leaders in 2019. The consequences of these decisions under different conditions were tested by simulating 52,800 results with the fastText algorithm (dependent variable: F1-score). Linear regression analysis suggests that researchers’ choices not only strongly affect model performance but may also, in the worst case, lead to a waste of funds.
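The abstract names the F1-score as the dependent variable and contrasts passive with active learning. The minimal Python sketch below is not the author's code. It shows one way to compute per-class and macro-averaged F1, and how the two sampling strategies could pick the next batch of tweets to send to human coders. Macro-averaging, least-confidence uncertainty sampling, the function names and the toy labels are all illustrative assumptions: the study may have averaged F1 differently or used another active learning criterion, and fastText training itself is not reproduced here.

```python
import random
from collections import Counter


def f1_scores(y_true, y_pred):
    """Per-class F1 and macro-averaged F1 for single-label classification.

    Macro-averaging (unweighted mean over classes) is an assumption here.
    """
    labels = sorted(set(y_true) | set(y_pred))
    tp, fp, fn = Counter(), Counter(), Counter()
    for t, p in zip(y_true, y_pred):
        if t == p:
            tp[t] += 1
        else:
            fp[p] += 1  # the model predicted p, but the coder assigned t
            fn[t] += 1  # the coder's label t was missed
    per_class = {}
    for label in labels:
        p_denom = tp[label] + fp[label]
        r_denom = tp[label] + fn[label]
        precision = tp[label] / p_denom if p_denom else 0.0
        recall = tp[label] / r_denom if r_denom else 0.0
        per_class[label] = (2 * precision * recall / (precision + recall)
                            if precision + recall else 0.0)
    macro = sum(per_class.values()) / len(per_class) if per_class else 0.0
    return per_class, macro


def select_passive(pool_ids, batch_size, seed=0):
    """Passive learning: draw the next batch to hand-code at random."""
    rng = random.Random(seed)
    return rng.sample(list(pool_ids), batch_size)


def select_active(pool_probs, batch_size):
    """Active learning via least-confidence sampling (one common criterion):
    pick the documents whose highest predicted class probability is lowest.

    pool_probs maps a (hypothetical) document id to its class probabilities.
    """
    ranked = sorted(pool_probs, key=lambda doc_id: max(pool_probs[doc_id]))
    return ranked[:batch_size]


if __name__ == "__main__":
    # Hypothetical toy labels, not data from the study.
    y_true = ["politics", "politics", "other", "other", "other"]
    y_pred = ["politics", "other", "other", "other", "politics"]
    per_class, macro = f1_scores(y_true, y_pred)
    print(per_class, macro)  # politics 0.5, other ≈ 0.667, macro ≈ 0.583

    probs = {"t1": [0.9, 0.1], "t2": [0.55, 0.45], "t3": [0.7, 0.3]}
    print(select_active(probs, 2))  # ['t2', 't3']: the least certain tweets
    print(select_passive(probs.keys(), 2))  # a random pair of tweet ids
```

The design choice is the trade-off the abstract raises: passive learning spends coding effort uniformly, while active learning concentrates it on documents the current model finds ambiguous. That may pay off for some classification tasks and not others.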

Data availability

Data available on request from the author.

Code availability

Not applicable.

Funding

The research leading to these results received funding from the National Science Centre (Cracow, Poland) under Grant Agreement No. 2019/03/X/HS6/00882.

Author information

Contributions

Not applicable.

Ethics declarations

Conflict of interest

The author has no relevant financial or non-financial interests to disclose.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

About this article

Cite this article

Matuszewski, P. How to prepare data for the automatic classification of politically related beliefs expressed on Twitter? The consequences of researchers’ decisions on the number of coders, the algorithm learning procedure, and the pre-processing steps on the performance of supervised models. Qual Quant 57, 301–321 (2023). https://doi.org/10.1007/s11135-022-01372-2

DOI: https://doi.org/10.1007/s11135-022-01372-2