Abstract

Consider a stable M/G/1 system in which, at time \(t=0\), there are exactly n customers with residual service times equal to \(v_1,v_2,\ldots ,v_n\). In addition, assume that there is an extra customer c who arrives at time \(t=0\) and has a service requirement of x. The externalities which are created by c are equal to the total waiting time that others will save if her service requirement is reduced to zero. In this work, we study the joint distribution (parameterized by \(n,v_1,v_2,\ldots ,v_n,x\)) of the externalities created by c when the underlying service discipline is either last-come, first-served with preemption or first-come, first-served. We start by proving a decomposition of the externalities under these two service disciplines. Then, this decomposition is used to derive several other results regarding the externalities: moments, asymptotic approximations as \(x\rightarrow \infty \), asymptotics of the tail distribution, and a functional central limit theorem.


1 Introduction

Consider a stable M/G/1 queue with an arrival rate \(\lambda \) and a service distribution \(G(\cdot )\). In addition, assume that at time \(t=0\), there are \(n\ge 0\) customers in the system whose residual service times are respectively equal to \(v_1,v_2,\ldots ,v_n\). Now, assume that an additional customer with a service requirement \(x\ge 0\) arrives at time \(t=0\). In general, we say that the externalities which are generated by this additional customer are equal to the total amount of waiting time that others would save if she reduced her service requirement from x to zero. The properties of the externalities are relevant in several contexts, e.g., the choice of a management scheme [18], the regulation of queues with discretionary services [22, 23] and server-allocation (scheduling) problems in multiclass queues (especially with dynamic class types) [3, 20, 21, 29]. For further discussion of these aspects, see [24, Sect. 1.1].

Naturally, the externalities which are created by the additional customer are stochastic, and their analysis relies heavily on the underlying service discipline. Previous work [24] analysed the externalities under the assumption that the service discipline is first-come, first-served (FCFS). The main motivation for the current research is to examine the externalities under a different service discipline, viz., last-come, first-served with preemption (LCFS-PR), and to obtain insight into the effect of the service discipline on the externalities. This is motivated by the observation that FCFS and LCFS-PR are, in some respect, two extreme cases w.r.t. externalities: In FCFS, the additional customer only affects later arrivals, whereas in LCFS-PR she only affects the customers who are already present. To the best of our knowledge, the only existing result under the LCFS-PR discipline concerns the expected externalities in a stationary queue [18, Theorem 2.2].

The analysis of the current work is based on a joint representation of the externalities under LCFS-PR and FCFS in terms of a bivariate compound Poisson process. The arrival rate of this process is \(\lambda \) and its bivariate jump distribution is determined uniquely by the primitives of the model (\(\lambda \) and \(G(\cdot )\)), as explained in the sequel. Notably, this decomposition generalizes the univariate decomposition which was introduced in [24, Corollary 2] for the externalities under the FCFS discipline. A formal statement of this decomposition requires a precise definition of the externalities under both the LCFS-PR and FCFS disciplines, which is given in Sect. 2. Then, Sect. 3 is dedicated to the statement and the proof of the decomposition result. The remaining sections present various applications of this decomposition:

1. In Sect. 4, the decomposition is used to derive the first and second moments of the externalities under the LCFS-PR discipline, and their correlation with the externalities under the FCFS discipline. In particular, the results include: (i) a generalization of [18, Theorem 2.2] and (ii) an explicit expression for the variance of the externalities in a stationary M/M/1 LCFS-PR queue.

2. In Sect. 5, the decomposition is used to derive a first-order approximation of the externalities under LCFS-PR as \(x\rightarrow \infty \). In addition, it is applied to determine the rate at which the externalities under FCFS tend to infinity as \(x\rightarrow \infty \).

3. In Sect. 6, utilizing the decomposition, we study the tail asymptotics of the externalities under both LCFS-PR and FCFS. In particular, we show that if the service times belong to a specific large subclass of the subexponential distributions, then so do the externalities.

4. In Sect. 7, a functional central limit theorem (CLT) for the externalities under the LCFS-PR service discipline is derived. This result is analogous to [24, Theorem 4], which states a functional CLT for the externalities under the FCFS discipline.

Finally, Sect. 8, and also Sects. 6.1 and 7.2, contain a discussion about open problems which arise from the current research and might be interesting to consider in the future.

2 Model description

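Assume \(\lambda >0\) and let \(G(\cdot )\) be a distribution function such that \(G(0-)=0\). In addition, denote its LST (Laplace-Stieltjes transform) by

$$\begin{aligned} {\tilde{G}}(\alpha )\equiv \int _{[0,\infty )}\textrm{e}^{-\alpha u}\textrm{d}G(u),\ \, \ \ \alpha \ge 0, \end{aligned}$$(1)

and, for each \(m \ge 1\), let

$$\begin{aligned} \mu _m\equiv \int _{[0,\infty )}u^m\textrm{d}G(u). \end{aligned}$$(2)

In particular, assume that \(\rho \equiv \lambda \mu _1<1\).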

Let \(\{J(t); t \ge 0\}\) be a compound Poisson process with rate \(\lambda \) and jump distribution \(G(\cdot )\). Also, let \({\varvec{v}}\equiv (v_1,\dots ,v_n)\) be a vector with positive coordinates and \(v\equiv v_1+v_2+\ldots +v_n\). Then, define the process

$$\begin{aligned} X_v(t)\equiv v+J(t)-t,\ \, \ \ t\ge 0, \end{aligned}$$(3)

and denote its reflection at the origin (for more details, see, e.g., [7, Sect. 2.4]) by \(W_v(t)\). Note that \(W_v(t)\) represents the workload process of an M/G/1 system with a strong service discipline (i.e., the order in which customers are served is not a function of their service requirements; cf. [18]), an arrival rate \(\lambda \) and a service distribution \(G(\cdot )\) under the following assumption: At time \(t=0\), there are \(n\ge 1\) customers \(c_1,c_2,\ldots , c_n\) such that for every \(1\le i\le n\), the remaining service time of \(c_i\) is equal to \(v_i\). In addition, without loss of generality we shall assume that for every \(1\le i<j\le n\), \(c_i\) arrived at the queue before \(c_j\).

2.1 The externalities under LCFS-PR

Assume that the service discipline is LCFS-PR and observe that the preemption implies that an arrival of an additional customer c with a service requirement \(x\ge 0\) at time \(t=0\) increases the waiting times of \(c_1,c_2,\ldots ,c_n\), while the waiting times of the customers who arrive after time \(t=0\) remain the same. Now, for every \(0\le j\le n\) let \({\overline{v}}_j=\sum _{s=1}^j v_s\) (an empty sum being zero). Since \(c_k\) departs as soon as the workload first drops to level \({\overline{v}}_{k-1}\), the additional waiting time of \(c_k\) (\(1\le k\le n\)) due to the arrival of the additional customer at time \(t=0\) is equal to

$$\begin{aligned} E_k(x,{\varvec{v}})\equiv \inf \left\{ t\ge 0:X_{v+x}(t)={\overline{v}}_{k-1}\right\} -\inf \left\{ t\ge 0:X_v(t)={\overline{v}}_{k-1}\right\} . \end{aligned}$$(4)
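It is common to refer to \(E_k(x, {\varvec{v}})\) as the externalities which are imposed on \(c_k\) and, correspondingly, to define the total externalities by

$$\begin{aligned} {\mathcal {E}}_L(x,{\varvec{v}})\equiv \sum _{k=1}^nE_k(x,{\varvec{v}}). \end{aligned}$$(5)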
Here and in the sequel we generally suppress n when denoting externalities.

2.2 The externalities under FCFS

Assume that the service discipline is FCFS and for every \(i\ge 1\), let \(\tau _i\) be the time when \(J(\cdot )\) has its i-th jump. Assume that an additional customer c with a service requirement \(x\ge 0\) arrives at time \(t=0\). Then, her arrival can only affect the waiting times of those who arrive after time \(t=0\). Specifically, for every \(i\ge 1\), the externalities which are imposed on the customer who arrived at \(\tau _i\) are equal to \(W_{v+x}(\tau _i)-W_{v}(\tau _i)\). Thus, the total externalities are

$$\begin{aligned} {\mathcal {E}}_F(x,{\varvec{v}})\equiv \sum _{i=1}^\infty \left[ W_{v+x}(\tau _i)-W_v(\tau _i)\right] , \end{aligned}$$(6)
and it is instructive to notice that they depend on \({\varvec{v}}\) only through v. A thorough analysis of (6) is provided in [24].
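Definition (6) can be evaluated on a simulated sample path by running the Lindley recursion for \(W_{v+x}\) and \(W_v\) on one common arrival stream. The following is a minimal Monte Carlo sketch, not the method of [24]; the function name `fcfs_externalities` is hypothetical, and exponential service times are assumed purely for illustration (any \(G(\cdot )\) could be plugged in). As a sanity check, (10) together with \({\mathbb {E}}N=1/(1-\rho )\) gives \({\mathbb {E}}{\mathcal {E}}_F(x,{\varvec{v}})=\lambda (vx+x^2/2)/(1-\rho )\).

```python
import random


def fcfs_externalities(x, v, lam, mean_service, rng):
    """Sample E_F(x, v) directly from definition (6).

    Both workloads are driven by one common arrival stream; exponential
    service times are an illustrative assumption of this sketch.
    """
    w_with, w_without = v + x, v          # W_{v+x} and W_v at the current epoch
    total = 0.0
    while w_with > w_without:             # equal workloads: nobody else is affected
        gap = rng.expovariate(lam)        # time until the next arrival tau_i
        w_with = max(w_with - gap, 0.0)   # drain at unit rate, reflected at 0
        w_without = max(w_without - gap, 0.0)
        total += w_with - w_without       # externality imposed on this arrival
        s = rng.expovariate(1.0 / mean_service)
        w_with += s                       # the arrival adds the same work to both
        w_without += s
    return total
```

For \(\lambda =0.5\), unit mean service times and \(v=x=1\), the predicted mean is 1.5. The loop stops at the first arrival after \(W_{v+x}\) has hit zero, since from then on both workloads coincide.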

3 Decomposition

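Define the externalities vector

$$\begin{aligned} {\mathcal {E}}(x,{\varvec{v}})\equiv \left( {\mathcal {E}}_L(x,{\varvec{v}}),{\mathcal {E}}_F(x,{\varvec{v}})\right) ,\ \, \ \ x\ge 0, \end{aligned}$$(7)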
and note that once the vector \({\varvec{v}}\) is fixed, then \(\left\{ {\mathcal {E}}(x,{\varvec{v}});x\ge 0\right\} \) is a bivariate stochastic process. In Sect. 3.1 we present a decomposition of this process in terms of a compound Poisson process; cf. Theorem 1. In Sect. 3.2 we briefly discuss such a decomposition for non-preemptive LCFS.

3.1 LCFS-PR and FCFS

Consider an FCFS M/G/1 queue with an arrival rate \(\lambda \) and a service distribution \(G(\cdot )\) which is empty at \(t=0\). Let B be the length of the first busy period and let N be the number of customers who receive service during this period. The LST of the joint distribution of (B, N) is given on p. 250 of [5]; see also Formula (28) below. Additionally, let \(w_j \equiv v-{\overline{v}}_{j-1}\) for every \(1\le j\le n\).

Theorem 1

In the probability space of the model, there exists a bivariate compound Poisson process \(S\equiv \left( S_1,S_2\right) \) with rate \(\lambda \) and jumps which are distributed like the vector (B, N) such that equations (8), (9) and (10) below hold pointwise:

1. For each \(1\le k\le n\),

$$\begin{aligned} E_k(x,{\varvec{v}})=x+S_1(x+w_k)-S_1(w_k),\ \, \ \ x\ge 0, \end{aligned}$$(8)

and hence

$$\begin{aligned} {\mathcal {E}}_L(x,{\varvec{v}})=nx+\sum _{k=1}^n\left[ S_1(x+w_k)-S_1(w_k)\right] ,\ \, \ \ x\ge 0. \end{aligned}$$(9)

2. For the FCFS discipline,

$$\begin{aligned} {\mathcal {E}}_F(x,{\varvec{v}})=\int _0^xS_2(v+y)\textrm{d}y,\ \, \ \ x\ge 0. \end{aligned}$$(10)

Remark 1

Assume that the initial workload is \(v+x\). In addition, consider the customers whose arrival times determine the descending ladder process (of the workload). Due to the strong Markov property of the workload process, each of these customers initiates a busy period which ends when the workload returns to its original level (the level just before the first arrival epoch of that busy period). For each of these busy periods we may compute: (i) the length of the busy period and (ii) the number of customers who arrive until it ends. Since these busy periods are iid, so are the resulting bivariate random vectors. Furthermore, standard properties of the Poisson process imply that the number of customers whose arrival times determine the descending ladder process has a Poisson distribution with mean \(\lambda (v+x)\), and that it is independent of the busy periods initiated by these customers. Thus, the sum of the bivariate random vectors has a bivariate compound Poisson distribution. This observation is the basis of the construction of \(S=(S_1,S_2)\). In [24], it was shown that \({\mathcal {E}}_F(x,{\varvec{v}})\) is determined uniquely by \(S_2(\cdot )\) via the integral (10). Here, we complete the picture by expressing \({\mathcal {E}}_L(x,{\varvec{v}})\) as a functional of \(S_1(\cdot )\). Notably, Theorem 1 provides a joint decomposition of \({\mathcal {E}}(x,{\varvec{v}})\) as a functional of \(S(\cdot )\). We will demonstrate the additional value of this joint decomposition by computing the cross-covariance (and correlation) of \({\mathcal {E}}_L(x,{\varvec{v}})\) and \({\mathcal {E}}_F(x,{\varvec{v}})\) in Sect. 4.2. In particular, this computation reveals that the coordinates of \({\mathcal {E}}(x,{\varvec{v}})\) are positively correlated, and quite strongly so in heavy traffic. This is not surprising in view of the fact that the number of customers and the length of each busy period in an M/G/1 queue are positively dependent. Furthermore, in Sects. 6 and 7 we mention two open problems for which we suspect that the joint decomposition in Theorem 1 might be useful.

Remark 2

Note that the stationary increments of the compound Poisson process imply that for every \(x\ge 0\) and \(1\le k\le n\),

$$\begin{aligned} E_k(x,{\varvec{v}})\overset{d}{=}x+S_1(x). \end{aligned}$$(11)

Notably, the distribution of the right-hand side is invariant with respect to \({\varvec{v}}\) and k, which may seem surprising. In practice, this means that when the service discipline is LCFS-PR, then for every \(1\le k\le n\), the distribution of the externalities which are imposed on \(c_k\) is invariant with respect to: (i) the vector of the remaining service times \({\varvec{v}}\) and (ii) the place k of \(c_k\) in the order of service.
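Remark 2 lends itself to a quick Monte Carlo check. By (11), every \(E_k(x,{\varvec{v}})\) has mean \(x(1+\lambda {\mathbb {E}}B)=x/(1-\rho )\) and variance \(\lambda {\mathbb {E}}[B^2]x\), where \({\mathbb {E}}[B^2]=\mu _2/(1-\rho )^3\) is the standard second moment of the M/G/1 busy period, whatever \({\varvec{v}}\) and k are. The sketch below computes each \(E_k\) directly from first-passage times of one workload path, without using Theorem 1, so the agreement is a genuine (if statistical) check. The function names are hypothetical and exponential service times are assumed only for illustration.

```python
import random


def first_passage_times(start, targets, lam, mean_service, rng):
    """First times at which X(t) = start + J(t) - t hits each level in `targets`.

    All targets must be <= start. J is compound Poisson with rate lam;
    exponential jump sizes are assumed only for this sketch. Because J jumps
    upwards, each level is first reached while X drifts down at unit rate,
    so the levels are hit in decreasing order.
    """
    pending = sorted(set(targets), reverse=True)
    hits, t, level = {}, 0.0, start
    while pending:
        gap = rng.expovariate(lam)                 # time until the next jump
        while pending and level - gap <= pending[0]:
            y = pending.pop(0)
            hits[y] = t + (level - y)              # reached before the jump
        t += gap
        level = level - gap + rng.expovariate(1.0 / mean_service)
    return hits


def lcfs_pr_externalities(x, v_vec, lam, mean_service, rng):
    """[E_1, ..., E_n] on one path: customer k departs when the workload first
    drops to vbar_{k-1}, and X_v = X_{v+x} - x on a common arrival stream."""
    v = sum(v_vec)
    vbars = [sum(v_vec[:k]) for k in range(len(v_vec))]   # vbar_0, ..., vbar_{n-1}
    shifted = [b + x for b in vbars]
    hits = first_passage_times(v + x, vbars + shifted, lam, mean_service, rng)
    return [hits[b] - hits[b2] for b, b2 in zip(vbars, shifted)]
```

With \(\lambda =0.3\) and unit mean service times (so \(\rho =0.3\)) and \(x=1\), the sample means for all k should be close to \(1/0.7\approx 1.43\), regardless of the chosen vector \({\varvec{v}}\).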

Remark 3

In [24] it is observed that for fixed \({\varvec{v}}\) the externalities process \(x\mapsto {\mathcal {E}}_F(x,{\varvec{v}})\) is an integral of a nondecreasing right-continuous process and hence it is convex. An open problem that is discussed there concerns the characterization of the set of service disciplines for which the externalities process is convex. Notably, LCFS-PR does not belong to this set: The sample paths of \(x\mapsto {\mathcal {E}}_L(x,{\varvec{v}})\) are composed of a positive drift and positive jumps, such that \(x\mapsto {\mathcal {E}}_L(x,{\varvec{v}})\) is neither convex nor concave in x.

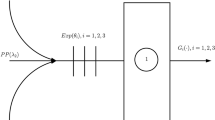

Proof

(Theorem 1) Fix \({\varvec{v}}\) and let \(T_1\) equal \(\tau _1\), the time of the first jump of the process \(J(\cdot )\). Under LCFS-PR, this arrival interrupts the service of one of the customers present at time 0, whose service is resumed once the workload process is back at level \(X_{{\overline{v}}_n+x}(T_1-)\), i.e., the level just before the jump; we denote this time by \(U_1\). Now, let \(T_2\) be the time of the first jump of the process \(J(\cdot )\) after \(U_1\), and \(U_2\) the first time after \(T_2\) at which the process \(X_{{\overline{v}}_n+x}(\cdot )\) is back at level \(X_{{\overline{v}}_n+x}(T_2-)\). We may continue recursively with this construction, yielding the sequences \((T_i)_{i \ge 1}\) and \((U_i)_{i \ge 1}\). An illustration of these sequences for a single sample path of \(J(\cdot )\) is given in Fig. 1.

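Let \(U_0\equiv 0\) and, for each \(i \ge 1\), \(I_i\equiv T_i - U_{i-1}\). Note that \(I_1,I_2,\dots \) is an iid sequence of exponentially distributed random variables with rate \(\lambda \). Furthermore, define for each \(i \ge 1\)

$$\begin{aligned} B_i\equiv U_i-T_i\ \ \text {and}\ \ N_i\equiv \left| \left\{ j\ge 1:T_i\le \tau _j<U_i\right\} \right| , \end{aligned}$$(12)

and observe that \((B_1,N_1),(B_2,N_2),\ldots \) are iid bivariate random vectors with the distribution of (B, N). Importantly, the sequences \(I_1,I_2,\ldots \) and \((B_1,N_1),(B_2,N_2),\ldots \) are independent, and hence may be used to construct a bivariate compound Poisson process. Specifically, with

$$\begin{aligned} P(t)\equiv \max \left\{ m\ge 0:I_1+I_2+\ldots +I_m\le t\right\} ,\ \, \ \ t\ge 0, \end{aligned}$$(13)

a Poisson process with rate \(\lambda \), we consider the bivariate process

$$\begin{aligned} S(t)\equiv \left( S_1(t),S_2(t)\right) \equiv \sum _{i=1}^{P(t)}\left( B_i,N_i\right) ,\ \, \ \ t\ge 0. \end{aligned}$$(14)

Let

$$\begin{aligned} \theta _u(y)\equiv \inf \left\{ t\ge 0:X_u(t)=y\right\} ,\ \, \ \ 0\le y\le u, \end{aligned}$$

and observe that

$$\begin{aligned} \theta _{v+x}(y)=v+x-y+S_1(v+x-y),\ \, \ \ 0\le y\le v+x. \end{aligned}$$(15)

In addition, since \(X_v(\cdot )=X_{v+x}(\cdot )-x\), for every \(0\le y\le v\),

$$\begin{aligned} \theta _v(y)=\theta _{v+x}(y+x)=v-y+S_1(v-y), \end{aligned}$$(16)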

and hence, by combining (15) and (16) with \(y={\overline{v}}_{k-1}\), we deduce (8). Finally, [24, Corollary 2] implies (10). \(\square \)

Fig. 1. The blue graph illustrates a path of the workload process from level \(v+x\) to the lower level v. Note that \(U_1-T_1,U_2-T_2\) and \(U_3-T_3\) are three iid random variables which are distributed like B. In addition, observe that \(x=(v+x)-v=T_1+T_2-U_1+T_3-U_2+{\theta _{v+x}}(v)-U_3\)
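The construction in the proof can also be checked pathwise. The sketch below (all names hypothetical; exponential service times assumed for illustration) generates one arrival stream, extracts the ladder busy periods \((B_i,N_i)\) together with the depth at which each starts (i.e., the jumps of S), and compares the right-hand sides of (8) and (10) with \(E_k(x,{\varvec{v}})\) and \({\mathcal {E}}_F(x,{\varvec{v}})\) computed directly from first-passage times and from the Lindley recursion, respectively. Up to floating-point error the two sides should coincide on every path.

```python
import random


class Path:
    """One sample path of the free process X_u(t) = u + J(t) - t.

    J is compound Poisson with rate lam; exponential jump sizes are an
    assumption of this sketch. Arrivals are generated lazily and cached, so
    every quantity below is computed on the same path.
    """

    def __init__(self, lam, mean_service, seed):
        self.rng, self.lam, self.mean = random.Random(seed), lam, mean_service
        self.times, self.sizes = [], []

    def arrival(self, i):
        """Time and size of the i-th jump of J (0-based)."""
        while len(self.times) <= i:
            last = self.times[-1] if self.times else 0.0
            self.times.append(last + self.rng.expovariate(self.lam))
            self.sizes.append(self.rng.expovariate(1.0 / self.mean))
        return self.times[i], self.sizes[i]

    def passage(self, t, level, target, i):
        """Starting from `level` at time t with jump i next, return the first
        time X reaches `target` (<= level) and the index of the next jump."""
        while True:
            tau, s = self.arrival(i)
            if level - (tau - t) <= target:
                return t + (level - target), i
            level, t, i = level - (tau - t) + s, tau, i + 1


def ladder_jumps(path, top):
    """(depth, B_i, N_i) for each ladder busy period while X descends from
    `top` to 0, i.e. the jumps of S = (S_1, S_2) located in [0, top]."""
    jumps, t, level, i = [], 0.0, top, 0
    while True:
        tau, s = path.arrival(i)
        below = level - (tau - t)                  # X just before the jump
        if below <= 0.0:
            return jumps
        end, nxt = path.passage(tau, below + s, below, i + 1)
        jumps.append((top - below, end - tau, nxt - i))
        t, level, i = end, below, nxt


def check_theorem_1(v_vec, x, lam=0.5, mean_service=1.0, seed=0):
    """Largest discrepancies between direct computations and (8), (10)."""
    path, v = Path(lam, mean_service, seed), sum(v_vec)
    jumps = ladder_jumps(path, v + x)

    def s1(y):                                     # S_1(y) on this path
        return sum(b for d, b, _ in jumps if d <= y)

    def theta(y):                                  # theta_{v+x}(y)
        return path.passage(0.0, v + x, y, 0)[0]

    err_l = 0.0
    for k in range(len(v_vec)):
        vbar = sum(v_vec[:k])                      # vbar_{k-1} for customer k+1
        w = v - vbar                               # the corresponding w
        direct = theta(vbar) - theta(vbar + x)     # theta_v(y) = theta_{v+x}(y+x)
        err_l = max(err_l, abs(direct - (x + s1(x + w) - s1(w))))
    total, wb, ws, t, i = 0.0, v + x, v, 0.0, 0    # Lindley recursion, as in (6)
    while wb > ws:
        tau, s = path.arrival(i)
        wb, ws = max(wb - (tau - t), 0.0), max(ws - (tau - t), 0.0)
        total += wb - ws
        wb, ws, t, i = wb + s, ws + s, tau, i + 1
    integral = sum(n * max(0.0, v + x - max(d, v)) for d, _, n in jumps)
    return err_l, abs(total - integral)
```

Each call returns the largest absolute discrepancies for the LCFS-PR and the FCFS parts; looping over several seeds exercises different sample paths.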

3.2 Non-preemptive LCFS

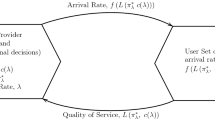

One may wonder why we did not include the non-preemptive LCFS (LCFS-NP) in the decomposition which was stated in Theorem 1. The reason is that there exist some crucial differences between LCFS-PR and LCFS-NP. In order to explain these differences, we also provide a concrete example with a pictorial illustration in Fig. 2.

Consider an M/G/1 queue under the LCFS-NP discipline, and recall that for every \(1\le i<j\le n\), \(c_i\) arrived at the queue before \(c_j\). Then, \(c_n\) is not preempted by the additional customer c who arrives at \(t=0\) with a service requirement \(x\ge 0\). Thus, the externalities which are imposed on \(c_n\) by c equal zero. In addition, for every \(1 \le k < n\), there are no interruptions during the service period of \(c_k\). Therefore, the externalities which are imposed on \(c_k\) by c equal

While this is in line with the decomposition (8), a major difference now emerges: Unlike under the LCFS-PR discipline, under LCFS-NP the additional customer might influence the waiting times of the customers who arrive after time \(t=0\), and the computation of the externalities for these customers is very complicated. This is mainly because their waiting times might be affected by the customer who was in the service position when they arrived. In fact, externalities can be negative under LCFS-NP.

To demonstrate the possibility of negative externalities, consider an LCFS-NP M/G/1 queue with \(n=2\), \(v_1=3\) and \(v_2=2\), to which an additional customer c with service requirement x arrives. In addition, assume that a customer \({\hat{c}}\) arrives at \(t=2.5\), and that besides him, no one else arrives during the time interval (0, 5]. If \(x=1\), the service of \({\hat{c}}\) starts at \(t=3\); if \(x=0\), it starts at \(t=5\). Hence, increasing x from 0 to 1 reduces the waiting time of \({\hat{c}}\) from 2.5 to 0.5, i.e., c imposes a negative externality on \({\hat{c}}\). For convenience, we also provide a graphical illustration of the timelines under both scenarios (\(x=1\) versus \(x=0\)) in Fig. 2 below.

Fig. 2. Comparison between two timelines representing the service periods of customers under different service requirements (\(x=1\) versus \(x=0\)) of the customer c who arrives at time \(t=0\). In both timelines it is assumed that upon arrival of c there are only two customers (\(c_1\) and \(c_2\)) with service requirements which are respectively given by \(v_1=3\) and \(v_2=2\). Also, it is assumed that there is only one customer \({\hat{c}}\) who arrives during the time interval (0, 5]
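The LCFS-NP example can be replayed with a tiny deterministic simulator of non-preemptive LCFS. In this sketch (function names hypothetical), the arrival times \(-2\) and \(-1\) of \(c_1\) and \(c_2\) are placeholders that only encode their order, \(c_2\) is assumed to be in service at \(t=0\) (as it is not preempted), and the service time 1 of \({\hat{c}}\) is an arbitrary choice that does not affect when \({\hat{c}}\) starts service. The case \(x=0\) is modelled as a zero-length service of c.

```python
def lcfs_np_start_times(customers, first):
    """Service start times under non-preemptive LCFS on a single server.

    customers: dict name -> (arrival_time, service_time). The customer
    `first` is in service at t = 0 and is never preempted; whenever the
    server becomes free, the most recently arrived waiting customer starts.
    """
    start = {first: 0.0}
    t = customers[first][1]                        # departure time of `first`
    waiting = [name for name in customers if name != first]
    while waiting:
        present = [n for n in waiting if customers[n][0] <= t]
        if not present:                            # idle until the next arrival
            t = min(customers[n][0] for n in waiting)
            continue
        nxt = max(present, key=lambda n: customers[n][0])   # last come
        start[nxt] = t
        t += customers[nxt][1]
        waiting.remove(nxt)
    return start


def example(x):
    """Scenario of the text; arrival times -2 and -1 only encode that c1
    arrived before c2, and c_hat's service time 1.0 is a hypothetical value."""
    customers = {"c1": (-2.0, 3.0), "c2": (-1.0, 2.0),
                 "c": (0.0, x), "c_hat": (2.5, 1.0)}
    return lcfs_np_start_times(customers, first="c2")
```

Here `example(1.0)["c_hat"]` is 3.0 and `example(0.0)["c_hat"]` is 5.0, matching the start times stated above.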

4 The moments of \({\mathcal {E}}(x,{\varvec{v}})\)

When \({\varvec{v}}\) is fixed, the moments of \({\mathcal {E}}_F(\cdot ,{\varvec{v}})\) are given in [24, Sect. 4]. If \({\varvec{v}}\) is a random vector which is independent of the arrival process and the service demands of the customers, then the moments of \({\mathcal {E}}_F(\cdot ,{\varvec{v}})\) are given in [24, Sect. 7]. In Sect. 4.1 we analyse the first two moments of \({\mathcal {E}}_L(\cdot ,{\varvec{v}})\) and in Sect. 4.2 the joint moment of the coordinates of \({\mathcal {E}}(x,{\varvec{v}})\). A mean-variance analysis for LCFS-PR is performed in Sect. 4.3. Initially, \({\varvec{v}}\) is assumed to be fixed, but this assumption is relaxed in Sect. 4.4.

4.1 Mean and auto-covariance of \({\mathcal {E}}_L(\cdot ,{\varvec{v}})\)

It is well-known (cf. [5], Sect. II.4.4) that

Thus, (11) implies that

Also, a standard calculation yields that

and for simplicity of notations write

Therefore, for every \(1\le k, l\le n\) and \(x_1,x_2\ge 0\) deduce that

where we have used the standard notations \(u^+\equiv \max \{u,0\}\) and \(u^-\equiv \max \{-u,0\}\). As a result, this formula implies that

where

For future reference, we mention that taking \(x_2=0\) in (23) gives

Remark 4

Observe that \(R_{{\varvec{v}}}(x_1,x_1+x_2)\) is positive whenever \(x_1>0\).

Remark 5

The auto-correlation function of \(E_k(\cdot , {\varvec{v}})\) is easily seen to equal

$$\begin{aligned} \text{ Corr }\left[ E_k(x_1,{\varvec{v}}),E_k(x_1+x_2,{\varvec{v}})\right] =\sqrt{\frac{x_1}{x_1+x_2}},\ \, \ \ x_1>0,\ x_2\ge 0. \end{aligned}$$

Notably, this expression is invariant with respect to the parameters of the model, i.e., the arrival rate and the service distribution. The auto-correlation function of the total externalities satisfies the same invariance, and so does the auto-correlation function of \({\mathcal {E}}_F(x,{\varvec{v}})\) (see [24, Eq. (33)]).

4.2 Cross-covariance of \({\mathcal {E}}_L(\cdot ,{\varvec{v}})\) and \({\mathcal {E}}_F(\cdot ,{\varvec{v}})\)

We first mention two results from [24]:

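Next consider the joint distribution of the length B of an M/G/1 busy period and the number of customers N served in it. It is well-known (cf. p. 250 of [5]) that for every \(\alpha , \beta \ge 0\),

$$\begin{aligned} {\mathbb {E}}\textrm{e}^{-\alpha B-\beta N}=\psi (\alpha ,\beta ), \end{aligned}$$(28)

where \(\psi (\alpha ,\beta )\) is the unique root in [0, 1] of \(\psi =\textrm{e}^{-\beta }\int _{[0,\infty )}\textrm{e}^{-[\alpha +\lambda (1-\psi )]u}\textrm{d}G(u)\). Observe that

$$\begin{aligned} {\mathbb {E}}\left[ BN\right] =\frac{\partial ^2}{\partial \alpha \partial \beta }{\mathbb {E}}\textrm{e}^{-\alpha B-\beta N}\Big |_{\alpha =\beta =0}. \end{aligned}$$

Therefore, differentiating both sides of (28) once with respect to \(\beta \), then with respect to \(\alpha \), and taking both \(\alpha \) and \(\beta \) equal to zero yields

$$\begin{aligned} {\mathbb {E}}\left[ BN\right] =\frac{\mu _1}{(1-\rho )^2}+\frac{\lambda \mu _2}{(1-\rho )^3}. \end{aligned}$$(29)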

In addition, observe that for every \(u,y > 0\),

The last step follows from the fact that, for \(0 \le t_1 \le t_2 < \infty \),

Therefore, using (26) in the third step below,

Together with the expression (27) for the variance of \({\mathcal {E}}_F(x,{\varvec{v}})\) this directly yields that

which is easily seen to be positive. In particular, denote the variance of the service distribution by \(\sigma ^2\) and observe that

which is bounded from above by \(\sqrt{3}/2\) (the value that is attained in heavy traffic). Note that the positive correlation between the externalities processes is due to their bivariate decomposition in terms of a compound Poisson process with jumps which are distributed like (B, N).
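Because the cross-covariance rests on the mixed moment of (B, N), a simulation check of that moment is useful. A direct computation (for instance, conditioning on the first service time S and using \(B=S+\sum _{j=1}^{A}B_j\), \(N=1+\sum _{j=1}^{A}N_j\), where A is the number of arrivals during S) gives \({\mathbb {E}}[BN]=\mu _1/(1-\rho )^2+\lambda \mu _2/(1-\rho )^3\), alongside \({\mathbb {E}}B=\mu _1/(1-\rho )\) and \({\mathbb {E}}N=1/(1-\rho )\). The sketch below samples (B, N) through this branching representation; exponential service times and the function name are assumptions made for illustration.

```python
import random


def busy_period(lam, mean_service, rng):
    """Sample (B, N) for an M/G/1 busy period via its branching structure.

    Each served customer brings in the customers who arrive during her own
    service; B is the total work served and N the number of customers.
    Exponential service times are an assumption made for this sketch.
    """
    pending, b, n = 1, 0.0, 0
    while pending:
        pending -= 1
        s = rng.expovariate(1.0 / mean_service)
        b, n = b + s, n + 1
        t = rng.expovariate(lam)                   # count arrivals during s
        while t < s:
            pending += 1
            t += rng.expovariate(lam)
    return b, n
```

With \(\lambda =0.3\) and unit mean service times (so \(\mu _2=2\)), the three targets are about 1.43, 1.43 and 3.79.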

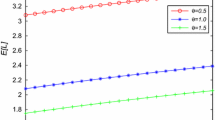

4.3 Mean-variance analysis

Fix \(x>0\), some positive integer n, and set \(V({\varvec{v}};x,n)\equiv \text{ Var }\left[ {\mathcal {E}}_L(x,{\varvec{v}})\right] \). Recall that (19) implies that the mean of \({\mathcal {E}}_L(x, {\varvec{v}})\) is determined uniquely by the parameters x and n (which is the length of \({\varvec{v}}\)). This motivates the following natural question: Given fixed values of x and n, what are the extreme values for the variance of \({\mathcal {E}}_L(x,{\varvec{v}})\)?

Corollary 1

For every x and n,

Proof

Observe that \(V({\varvec{v}};x,n)\), given by (25), is determined uniquely by \(v_1,v_2,\ldots ,v_{n-1}\), i.e., it is invariant with respect to the value of \(v_{n}\). Furthermore, \(V(\cdot ;x,n)\) is decreasing in each of the coordinates \(v_1,v_2,\ldots ,v_{n-1}\). This immediately yields the result. \(\square \)
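The monotonicity argument can be made concrete. Writing \(c(y)\) for the number of intervals \((w_k,w_k+x]\) that contain y, (9) expresses \({\mathcal {E}}_L(x,{\varvec{v}})-nx\) as \(\int c(y)S_1(\textrm{d}y)\), so the standard variance formula for compound Poisson integrals gives \(\lambda {\mathbb {E}}[B^2]\int c(y)^2\textrm{d}y\) with \({\mathbb {E}}[B^2]=\mu _2/(1-\rho )^3\). This is our own evaluation and is meant to agree with (25), which is not reproduced here. The integral depends only on the gaps \(w_k-w_{k+1}=v_k\), \(k<n\), and lies between nx (all gaps at least x) and \(n^2x\) (all gaps tending to zero). The function name below is hypothetical.

```python
def variance_lcfs_pr(v_vec, x, lam, mu1, mu2):
    """Var[E_L(x, v)] = lam * E[B^2] * (integral of c(y)^2 dy), where c(y)
    counts the intervals (w_k, w_k + x] containing y and
    E[B^2] = mu2 / (1 - rho)^3 (second moment of the M/G/1 busy period)."""
    rho = lam * mu1
    v = sum(v_vec)
    w = [v - sum(v_vec[:k]) for k in range(len(v_vec))]    # w_1, ..., w_n
    # integral of c^2 = sum over ordered pairs (k, l) of |I_k intersect I_l|
    overlap = sum(max(0.0, x - abs(a - b)) for a in w for b in w)
    return lam * mu2 / (1.0 - rho) ** 3 * overlap
```

For n = 3, x = 1, \(\lambda =0.5\), \(\mu _1=1\) and \(\mu _2=2\), the variance ranges from 24 (well-separated \(w_k\)'s) up towards 72 (all \(w_k\)'s merging), and changing \(v_3\) alone has no effect.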

Remark 6

The current discussion resembles the classical mean-variance analysis, in which the aim is to construct a portfolio with minimal variance under the constraint that the expected return is fixed (see, e.g., [30]).

Remark 7

Note that for every \(n>1\) and \(v>0\),

This means that gradually reducing the remaining service times of the customers towards zero does not have the same effect as removing these customers from the system. In particular, Corollary 1 implies that by reducing the remaining service times of \(c_1,c_2,\ldots ,c_{n-1}\) to be arbitrarily close to zero, the variance of the total externalities becomes arbitrarily close to its maximum. However, once the remaining service time of a customer has elapsed, she leaves the system. Thus, in that sense, the maximum which appears in the statement of Corollary 1 should be understood as a supremum which is not attained.

4.4 Random initial condition

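Assume that the vector \({\varvec{v}}\) is a random vector with a random size M such that \({\varvec{v}}\) is independent of all other stochastic objects of the model. An application of (19) with proper conditioning implies that

$$\begin{aligned} {\mathbb {E}}{\mathcal {E}}_L(x,{\varvec{v}})=\frac{x{\mathbb {E}}M}{1-\rho },\ \, \ \ x\ge 0. \end{aligned}$$(37)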
In addition, observe that for every \(x_1,x_2\ge 0\):

Therefore, a proper conditioning with the help of (23) and the law of total covariance yields that

Especially, by setting \(x_1=x\ge 0\) and \(x_2 = 0\), deduce that:

Moreover, observe that for every \(x \ge 0\),

and hence the law of total variance can be used to find that

4.4.1 The stationary distribution as an initial condition

Assume that \({\varvec{v}}\) is distributed according to the stationary distribution of the remaining service times and the queue length under LCFS-PR. By applying [26, Corollary 1] and PASTA, this distribution is as follows:

1. M is a geometric random variable, i.e.,

$$\begin{aligned} {\mathbb {P}}\left\{ {M=m}\right\} ={(1-\rho )\rho ^m},\ \, \ \ {m\ge 0}. \end{aligned}$$(40)

2. For every \(m\ge 1\), \((v_1,v_2,\ldots ,{v_m})|\{{M=m}\}\) is a random vector with iid coordinates such that \(v_1\) is distributed according to the residual time distribution of \(G(\cdot )\), i.e., with density \({\overline{G}}(u)/\mu _1\), \(u>0\).

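Consequently, (37) implies that

$$\begin{aligned} {\mathbb {E}}{\mathcal {E}}_L(x,{\varvec{v}})=\frac{\rho x}{(1-\rho )^2},\ \, \ \ x\ge 0, \end{aligned}$$

which yields a new derivation of [18, Theorem 2.2]. Also, (39) yields the following result.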

Proposition 1

If the service distribution is exponential with rate \(\nu \) such that \(\frac{\lambda }{\nu }<1\), then the variance of the externalities in the stationary LCFS-PR M/M/1 queue is equal to

Proof

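For each \(i\ge 1\), let \(X_i\) be a random variable which is distributed according to

$$\begin{aligned} {\mathbb {P}}\left\{ X_i\in \textrm{d}t\right\} =\frac{\nu ^it^{i-1}}{(i-1)!}\textrm{e}^{-\nu t}\textrm{d}t,\ \, \ \ t>0, \end{aligned}$$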
i.e., an Erlang distribution with shape i and rate \(\nu \). Then, conditioning on M yields:

It is well-known that a geometric sum (with success probability p) of iid exponential random variables with rate \(\nu \) is exponentially distributed with rate \(p\nu \), and hence

This identity may also be used to obtain that

Thus, the expression in (44) equals

Insertion of this formula into (39) yields the desired result.\(\square \)
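The conditioning argument can be traced numerically. Under our own evaluation (not a reproduction of the displayed formula in Proposition 1), the conditional variance given \({\varvec{v}}\) is \(\lambda {\mathbb {E}}[B^2]\big (Mx+2\sum _{k<l}(x-|w_k-w_l|)^+\big )\) with \({\mathbb {E}}[B^2]=2/(\nu ^2(1-\rho )^3)\) and \(\rho =\lambda /\nu \); the differences \(|w_k-w_l|\) are Erlang with shape \(l-k\), and \({\mathbb {E}}(M-i)^+=\rho ^{i+1}/(1-\rho )\) under (40). The sketch evaluates the law of total variance twice: once by summing the Erlang terms one by one, and once after collapsing the geometric mixture into a single exponential law with rate \((1-\rho )\nu \), as in the proof. Function names are hypothetical.

```python
import math


def erlang_cdf(i, nu, t):
    """P(X_i <= t) for X_i ~ Erlang(shape i, rate nu)."""
    term, acc = math.exp(-nu * t), 0.0
    for j in range(i):
        acc += term                                # P(Poisson(nu t) = j)
        term *= nu * t / (j + 1)
    return 1.0 - acc


def var_series(x, lam, nu, terms=400):
    """Law of total variance, summing the Erlang terms one by one."""
    rho = lam / nu
    lam_eb2 = lam * (2.0 / nu ** 2) / (1.0 - rho) ** 3     # lam * E[B^2]
    cross = 0.0
    for i in range(1, terms + 1):
        # E(x - X_i)^+ = x F_i(x) - (i / nu) F_{i+1}(x)
        e_plus = x * erlang_cdf(i, nu, x) - i / nu * erlang_cdf(i + 1, nu, x)
        cross += rho ** (i + 1) / (1.0 - rho) * e_plus       # weight E(M - i)^+
    cond = lam_eb2 * (rho / (1.0 - rho) * x + 2.0 * cross)    # E[Var(E_L | v)]
    return cond + x ** 2 * rho / (1.0 - rho) ** 4            # + Var(M x / (1 - rho))


def var_closed(x, lam, nu):
    """Same quantity after collapsing the geometric mixture of Erlang laws
    into one exponential law with rate (1 - rho) nu."""
    rho = lam / nu
    theta = (1.0 - rho) * nu
    lam_eb2 = lam * (2.0 / nu ** 2) / (1.0 - rho) ** 3
    e_plus = x + math.expm1(-theta * x) / theta              # E(x - Y)^+
    cond = lam_eb2 * (rho / (1.0 - rho) * x + 2.0 * rho ** 2 / (1.0 - rho) ** 2 * e_plus)
    return cond + x ** 2 * rho / (1.0 - rho) ** 4
```

The two routes agree up to rounding error, and multiplying the result by \((1-\rho )^4\) gives a quantity that stays bounded as \(\rho \uparrow 1\), in line with Remark 8.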

Remark 8

The variance of the externalities is highly sensitive to the traffic load near saturation. Concretely, notice that

and hence \(\text{ Var }\big [{\mathcal {E}}_L(x,{\varvec{v}})\big ]\) is of the order \((1-\rho )^{-4}\) as \(\rho \uparrow 1\).

5 Rates of increase

Note that \(x\mapsto {\mathcal {E}}_L(x,{\varvec{v}})\) (resp. \(x\mapsto {\mathcal {E}}_F(x,{\varvec{v}})\)) represents the evolution of the externalities generated by the additional customer as a function of her service requirement under the LCFS-PR (resp. FCFS) discipline. By definition, under both of these service disciplines, the corresponding externalities processes are nondecreasing. Theorem 2 below provides approximations of these processes as \(x\uparrow \infty \). Specifically, it asserts that the externalities process under LCFS-PR grows linearly in x, while its analogue under FCFS grows quadratically in x. An intuitive explanation for the difference is as follows: The mean number of customers who are affected by the additional customer under LCFS-PR does not depend on x, while under FCFS this number is linear in x. More results about the externalities process under FCFS in an M/G/1 setup appear in [24]. For an additional discussion about externalities processes in the context of spectrally-positive Lévy queues, see [15, Sect. 4].

Theorem 2

For every \({\varvec{v}}\) (with nonnegative coordinates whose sum is positive), the following statements hold with probability one:

1. $$\begin{aligned} {\mathcal {E}}_L(x,{\varvec{v}})\sim \frac{nx}{1-\rho } \ \ \text {as}\ \ x\rightarrow \infty . \end{aligned}$$(45)

2. $$\begin{aligned} \frac{\lambda }{4(1-\rho )}\le \liminf _{x\rightarrow \infty }\frac{{\mathcal {E}}_F(x,{\varvec{v}})}{x^2}\le \limsup _{x\rightarrow \infty }\frac{{\mathcal {E}}_F(x,{\varvec{v}})}{x^2}\le \frac{\lambda }{1-\rho }. \end{aligned}$$(46)

Remark 9

Note that Theorem 2 states that \(x\mapsto {\mathcal {E}}_L(x,{\varvec{v}})\) (resp. \(x\mapsto {\mathcal {E}}_F(x,{\varvec{v}})\)) increases at the same rate as \(x\mapsto {\mathbb {E}}{\mathcal {E}}_L(x,{\varvec{v}})\) (resp. \(x\mapsto {\mathbb {E}}{\mathcal {E}}_F(x,{\varvec{v}})\)) (see (19) and also [24, Eq. (28)]).

Remark 10

It is important to notice that the rate which is stated in (45) and the bounds on the rate which are given in (46) are all uniform in \({\varvec{v}}\). Since \({\mathbb {E}}{\mathcal {E}}_L(x,{\varvec{v}})\) is invariant with respect to \({\varvec{v}}\) (see (19)), it is not surprising that \({\varvec{v}}\) has no effect on the first order approximation of \(x\mapsto {\mathcal {E}}_L(x,{\varvec{v}})\) as \(x\rightarrow \infty \). However, [24, Eq. (28)] implies that \({\varvec{v}}\) influences \({\mathbb {E}}{\mathcal {E}}_F(x,{\varvec{v}})\) and hence we suspect that the bounds in (46) are sub-optimal.

Remark 11

Theorem 2 implies that

is finite with probability one.

Proof

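(Theorem 2) Fix \({\varvec{v}}\) and observe that, for each \(1\le k\le n\), Theorem 1 and the renewal reward theorem imply that, with probability one,

$$\begin{aligned} \lim _{x\rightarrow \infty }\frac{E_k(x,{\varvec{v}})}{x}=1+\lim _{x\rightarrow \infty }\frac{S_1(x+w_k)-S_1(w_k)}{x}=1+\lambda {\mathbb {E}}B=\frac{1}{1-\rho }. \end{aligned}$$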
This yields (45) via summation over k.

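In addition, since \(S_2(\cdot )\) is nondecreasing, Theorem 1 and the renewal reward theorem can be applied once again to deduce that, with probability one,

$$\begin{aligned} \limsup _{x\rightarrow \infty }\frac{{\mathcal {E}}_F(x,{\varvec{v}})}{x^2}\le \lim _{x\rightarrow \infty }\frac{xS_2(v+x)}{x^2}=\lambda {\mathbb {E}}N=\frac{\lambda }{1-\rho }. \end{aligned}$$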
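Similarly, for every \(\alpha \in (0,1)\), with probability one,

$$\begin{aligned} \liminf _{x\rightarrow \infty }\frac{{\mathcal {E}}_F(x,{\varvec{v}})}{x^2}\ge \lim _{x\rightarrow \infty }\frac{(1-\alpha )xS_2(v+\alpha x)}{x^2}=\frac{\alpha (1-\alpha )\lambda }{1-\rho }. \end{aligned}$$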
Finally, inserting \(\alpha =\frac{1}{2}\) completes the proof. \(\square \)
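Theorem 2 can be illustrated by simulating S directly instead of the queue: jump positions form a Poisson process with rate \(\lambda \) on \([0,v+x]\), and each jump is an independent copy of (B, N). The sketch then evaluates (9) and (10) on that path. By the strong law of large numbers applied to (9), \({\mathcal {E}}_L(x,{\varvec{v}})/x\) should be close to \(n/(1-\rho )\) for large x, and \({\mathcal {E}}_F(x,{\varvec{v}})/x^2\) should fall within the bounds in (46). Exponential service times and all names are illustrative assumptions.

```python
import random


def sample_busy_period(lam, mean_service, rng):
    """(B, N) of an M/G/1 busy period (branching construction); exponential
    service times are an assumption of this sketch."""
    pending, b, n = 1, 0.0, 0
    while pending:
        pending -= 1
        s = rng.expovariate(1.0 / mean_service)
        b, n = b + s, n + 1
        t = rng.expovariate(lam)                   # arrivals during this service
        while t < s:
            pending += 1
            t += rng.expovariate(lam)
    return b, n


def externalities_via_theorem_1(v_vec, x, lam, mean_service, rng):
    """Simulate S on [0, v + x] and evaluate (9) and (10) on that path."""
    v = sum(v_vec)
    jumps, pos = [], rng.expovariate(lam)
    while pos <= v + x:                            # Poisson jump positions
        b, n = sample_busy_period(lam, mean_service, rng)
        jumps.append((pos, b, n))
        pos += rng.expovariate(lam)
    w = [v - sum(v_vec[:k]) for k in range(len(v_vec))]
    # (9): n x plus the B-jumps falling in each window (w_k, w_k + x]
    e_l = len(v_vec) * x + sum(b for p, b, _ in jumps for wk in w if wk < p <= wk + x)
    # (10): a jump at p contributes N times the length of [max(p, v), v + x]
    e_f = sum(n * max(0.0, v + x - max(p, v)) for p, _, n in jumps)
    return e_l, e_f
```

For \(x=20000\), \(\lambda =0.3\) and unit mean service times, a single sample path already gives ratios close to these limits.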

6 Tail asymptotics

Our goal in this section is to determine the tail behavior of \({\mathcal {E}}_L(x,{\varvec{v}})\) and \({\mathcal {E}}_F(x,{\varvec{v}})\) in the case of heavy-tailed service times. Consider \(x>0\) and, for every distribution function \(F(\cdot )\), let \({\overline{F}}\equiv 1-F\). In addition, denote by \(\star \) the convolution of two distribution functions. In this section, we assume that \(G(\cdot )\) is subexponential, i.e.,

$$\begin{aligned} \overline{G\star G}(u)\sim 2{\overline{G}}(u)\ \ \text {as}\ \ u\rightarrow \infty . \end{aligned}$$
We have already seen that the M/G/1 busy period length B plays a key role in \({\mathcal {E}}_L(x,{\varvec{v}})\), and that the corresponding number of customers N in that busy period plays a key role in \({\mathcal {E}}_F(x,{\varvec{v}})\). In the literature it was first shown [8] for the M/G/1 queue with regularly varying service times (a subclass of the class of subexponential distributions) that

$$\begin{aligned} {\mathbb {P}}\left\{ B>u\right\} \sim \frac{1}{1-\rho }{\overline{G}}\left[ u(1-\rho )\right] \ \ \text {as}\ \ u\rightarrow \infty . \end{aligned}$$(53)
Exactly the same result was subsequently derived for the larger classes of intermediate regularly varying service times [34] and of service times with a tail that is heavier than \(\textrm{e}^{-\sqrt{u}}\); see [25] and finally [2]. An explanation why it is impossible to get (53) when \({\overline{G}}(u)\) is lighter than \(\textrm{e}^{-\sqrt{u}}\) as \(u\rightarrow \infty \) can be found in [1, Sect. 6.2].

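Under very similar conditions (basically either intermediate regular variation or a tail heavier than \(\textrm{e}^{-\sqrt{u}}\), and some moment conditions), it has been shown [9, 10] that

$$\begin{aligned} {\mathbb {P}}\left\{ N>u\right\} \sim \frac{1}{1-\rho }{\overline{G}}\left[ \frac{u(1-\rho )}{\lambda }\right] \ \ \text {as}\ \ u\rightarrow \infty . \end{aligned}$$(54)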
The precise conditions for both formulas to hold are rather delicate; for details we refer to Theorem 1.2 of [2] (for B) and Proposition 3.2 of [9] (for N). In the remainder of this section we assume that these conditions are satisfied, so that (53) and (54) hold. For clarity, the statements of the main results (Theorems 3 and 4) appear in Sect. 6.1 while the proofs are included in Sect. 6.2.

6.1 Results and some open problems

6.1.1 LCFS-PR

Assume that \({\varvec{v}}=(v_1,v_2,\ldots ,v_n)\) is such that \(v_i>0\) for every \(1\le i\le n\). For the statement of the upcoming Theorem 3, we introduce the set \({\mathcal {D}} \equiv \{w_1,\ldots ,w_n\} \cup \{w_1+x,\ldots ,w_n+x\}\) and its cardinality \(d \equiv |{\mathcal {D}}|\). Also, let \({\widetilde{w}}_1< {\widetilde{w}}_2< \ldots < {\widetilde{w}}_d\) be the elements of \({\mathcal {D}}\) in increasing order and, for each \(1\le j\le d-1\), let \(q_j\) be the number of k's (\(1\le k\le n\)) such that \(\left[ {\widetilde{w}}_j,{\widetilde{w}}_{j+1}\right] \subseteq [w_k,w_k+x]\), i.e.,

$$\begin{aligned} q_j\equiv \left| \left\{ 1\le k\le n:\left[ {\widetilde{w}}_j,{\widetilde{w}}_{j+1}\right] \subseteq [w_k,w_k+x]\right\} \right| . \end{aligned}$$(55)
Accordingly, let \({\mathcal {J}}\equiv \left\{ 1\le j\le d-1;q_j>0\right\} \), which is a non-empty set. To see this, note that if \(1\le j\le d-1\) and \({\widetilde{w}}_j=w_k\) for some \(1\le k\le n\), then \({\widetilde{w}}_{j+1}\le w_k+x\) and hence \(\left[ {\widetilde{w}}_{j},{\widetilde{w}}_{j+1}\right] \subseteq \left[ w_k,w_k+x\right] \), which implies that \(q_j\ge 1\); in particular, this applies to \(j=1\), since \({\widetilde{w}}_1=w_n\).

Theorem 3

Assume that (53) holds. Then:

1. For each \(1\le k\le n\), \(E_k(x,{\varvec{v}})\) has a subexponential distribution such that

$$\begin{aligned} {\mathbb {P}}\left\{ E_k(x,{\varvec{v}}) > u\right\} \sim \frac{\lambda x}{1-\rho }{\overline{G}}\left[ u(1-\rho )\right] \ \ \text {as}\ \ u\rightarrow \infty . \end{aligned}$$(56)

2. \({\mathcal {E}}_L(x,{\varvec{v}})\) has a subexponential distribution such that

$$\begin{aligned} {\mathbb {P}}\left\{ {\mathcal {E}}_L(x,{\varvec{v}}) > u\right\} \sim \frac{\lambda }{1-\rho }\sum _{j\in {\mathcal {J}}}({\widetilde{w}}_{j+1}-{\widetilde{w}}_j){\overline{G}}\left[ \frac{u(1-\rho )}{q_j}\right] \ \ \text {as}\ \ u\rightarrow \infty . \end{aligned}$$(57)
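Evaluating the right-hand side of (57) only requires the combinatorics of \({\mathcal {D}}\), the counts \(q_j\) and the index set \({\mathcal {J}}\). The sketch below (hypothetical function name; the tail function `g_bar` is supplied by the user, e.g., a Pareto tail) computes it for given \({\varvec{v}}\), \(x>0\) and u. For n = 1 it reduces to the right-hand side of (56).

```python
def lcfs_pr_tail_approx(u, v_vec, x, lam, rho, g_bar):
    """Right-hand side of (57) for x > 0 and a user-supplied tail g_bar."""
    v = sum(v_vec)
    w = [v - sum(v_vec[:k]) for k in range(len(v_vec))]       # w_1, ..., w_n
    grid = sorted(set(w) | {wk + x for wk in w})               # the set D
    total = 0.0
    for lo, hi in zip(grid, grid[1:]):                         # [w~_j, w~_{j+1}]
        q = sum(1 for wk in w if wk <= lo and hi <= wk + x)    # q_j
        if q > 0:                                              # j belongs to J
            total += (hi - lo) * g_bar(u * (1 - rho) / q)
    return lam / (1 - rho) * total
```

For example, with \({\varvec{v}}=(0.5,2)\), x = 1 and \(\lambda =\rho =0.5\), the three consecutive intervals of \({\mathcal {D}}\) have \(q_j=1,2,1\), so the approximation equals \({\overline{G}}(u/2)+\frac{1}{2}{\overline{G}}(u/4)\).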

Remark 12

Since \({\overline{G}}(\cdot )\) is nonincreasing, (57) implies that

This means that, as \(u\rightarrow \infty \), \({\mathbb {P}}\left\{ {\mathcal {E}}_L(x,{\varvec{v}}) > u\right\} \) vanishes at the same rate (up to a multiplicative constant) as \({\overline{G}}\left[ \frac{u(1-\rho )}{\max \left\{ q_j;j\in {\mathcal {J}}\right\} }\right] \).

Remark 13

Observe that \({\mathcal {E}}_L(x,{\varvec{v}})=\sum _{k=1}^nE_k(x,{\varvec{v}})\) is a sum of dependent random variables. There is an extensive literature on the tail asymptotics of sums of dependent random variables; see, e.g., [14, 19, 32, 33] and the references therein. Nevertheless, we are not aware of any existing result that could be used to prove Theorem 3.

6.1.2 FCFS

The analogue of Theorem 3 for the externalities under the FCFS discipline is the following Theorem 4.

Theorem 4

Assume that (54) holds. In addition, let

Then \({\mathcal {E}}_F(x,{\varvec{v}})\) has a subexponential distribution such that

Remark 14

There are cases in which \(\omega _1=\omega _2\). In such cases, Theorem 4 yields a first-order approximation of the tail distribution of \({\mathcal {E}}_F(x,{\varvec{v}})\). For example, suppose that \(G(\cdot )\) is regularly varying, i.e.,

$$\begin{aligned} {\overline{G}}(u)=u^{-\alpha }L(u),\quad u>0, \end{aligned}$$

for some \(\alpha >0\) and \(L:(0,\infty )\rightarrow (0,\infty )\) such that \(L(uy)\sim L(u)\) as \(u\rightarrow \infty \) for every \(y>0\) (i.e., \(L(\cdot )\) is slowly varying at infinity). Then, standard calculations yield that \(\omega _1=\omega _2=\frac{1}{1+\alpha }\).

6.1.3 Open problem: Is \({\mathcal {E}}(x,{\varvec{v}})\) bivariate regularly varying?

Theorems 3 and 4 provide conditions on a heavy-tailed \(G(\cdot )\) under which the (univariate) tail distributions of \({\mathcal {E}}_L(x,{\varvec{v}})\) and \({\mathcal {E}}_F(x,{\varvec{v}})\) are just as heavy as \({\overline{G}}(\cdot )\). It would be interesting to also study the bivariate tail asymptotics of \(\left( {\mathcal {E}}_L(x,{\varvec{v}}),{\mathcal {E}}_F(x,{\varvec{v}})\right) \). For example, if \(G(\cdot )\) is regularly varying, is the joint distribution of \(\left( {\mathcal {E}}_L(x,{\varvec{v}}),{\mathcal {E}}_F(x,{\varvec{v}})\right) \) also regularly varying? For details about regularly varying random vectors, see, e.g., [28, Sect. 2].

A natural first step would be to determine whether a regularly varying \(G(\cdot )\) implies a bivariate regularly varying distribution of (B, N). Once such a relation is established, it could be used to derive conditions on the service distribution under which the distribution of \(\left( {\mathcal {E}}_L(x,{\varvec{v}}),{\mathcal {E}}_F(x,{\varvec{v}})\right) \) is bivariate regularly varying. To the best of our knowledge, there are no existing results in this direction.

6.1.4 Open problem: the stationary distribution as an initial condition

If the additional customer arrives when the M/G/1 LCFS-PR queue is in steady state, then she sees a geometric(\(\rho \)) distributed number of customers (cf. Sect. 4.4.1). If, moreover, the service time of the additional customer has distribution \(G(\cdot )\), then all customers already present experience the same M/G/1 busy period as extra delay. One can now use the above-mentioned known results about the tail behavior of such a busy period to conclude that the externalities distribution is just as heavy-tailed as \(G(\cdot )\).
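As a rough illustration of this argument (our sketch, under explicit assumptions): suppose the externalities equal \(N B\), where N has the geometric law \({\mathbb {P}}\{N=n\}=(1-\rho )\rho ^n\), \(n\ge 0\), B is a busy period independent of N, and \({\mathbb {P}}\{B>u\}\) is regularly varying with index \(-\alpha \) for some \(\alpha >0\). Since N has moments of all orders, Breiman's lemma (a standard result on products of independent random variables, not one of the results of this paper) gives

$$\begin{aligned} {\mathbb {P}}\left\{ NB>u\right\} =\sum _{n=1}^{\infty }(1-\rho )\rho ^n\,{\mathbb {P}}\left\{ B>\frac{u}{n}\right\} \sim {\mathbb {E}}\left[ N^{\alpha }\right] {\mathbb {P}}\left\{ B>u\right\} \ \ \text {as}\ \ u\rightarrow \infty , \end{aligned}$$

where \({\mathbb {E}}[N^{\alpha }]=\sum _{n=1}^\infty (1-\rho )\rho ^n n^{\alpha }<\infty \). Thus, in this case the externalities inherit the tail index of B, and hence that of \(G(\cdot )\) whenever B and \(G(\cdot )\) share the same index.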

For FCFS, however, the situation is more complicated, because the remaining workload v of the customers already present now plays a role in the externalities. Consider the tail behavior of \(\int _v^{v+x} S_2(y) \textrm{d}y\) (cf. (10)) when v and x are heavy-tailed random variables: the ‘principle of the one big jump’ (cf. [13]) suggests that the workload v will be dominant and will determine the tail behavior of the externalities. For example, when \({\overline{G}}(\cdot )\) is regularly varying at infinity with index \(-\nu \in (-2,-1)\), the steady-state workload v is regularly varying with index \(1-\nu \) [6], and we conjecture that \({\mathcal {E}}_F\) is then also regularly varying at infinity with index \(1-\nu \), i.e., one degree heavier than the service time and the busy period.
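The index shift from \(-\nu \) to \(1-\nu \) can be traced through two classical facts, recalled here only as background (they are not results of this paper). Write V for the steady-state workload (our notation) and \(\mu _1\) for the mean service time, and suppose that \({\overline{G}}(u)=u^{-\nu }L(u)\) with \(\nu \in (1,2)\) and \(L(\cdot )\) slowly varying. The subexponential asymptotics of the M/G/1 workload, combined with Karamata's theorem, then give

$$\begin{aligned} {\mathbb {P}}\left\{ V>u\right\} \sim \frac{\rho }{(1-\rho )\mu _1}\int _u^\infty {\overline{G}}(y)\textrm{d}y \sim \frac{\rho }{(1-\rho )\mu _1(\nu -1)}\,u^{1-\nu }L(u)\ \ \text {as}\ \ u\rightarrow \infty , \end{aligned}$$

which is regularly varying with index \(1-\nu \).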

6.2 Proofs

The proof of Theorem 3 (resp. Theorem 4) combines the decomposition stated in Theorem 1 (resp. [24, Theorem 2]) with known results from the theory of subexponential distributions. The next lemma summarizes the existing results that are needed.

Lemma 1

Assume that X is a nonnegative random variable with a subexponential distribution. In addition, let Y be a nonnegative random variable which is independent of X. Then:

(i) For every \(h>0\), \(hX\) also has a subexponential distribution.

(ii) For every \(h>0\),

$$\begin{aligned} {\mathbb {P}}\left\{ X>u\right\} \sim {\mathbb {P}}\left\{ X>u+h\right\} \ \ \text {as} \ \ u\rightarrow \infty . \end{aligned}$$(62)

(iii) If Y is bounded, then XY has a subexponential distribution.

(iv) Assume that Y is unbounded and that there are \(0<z_1< z_2<\infty \) such that

$$\begin{aligned} z_1\le \frac{{\mathbb {P}}\left\{ X>u\right\} }{{\mathbb {P}}\left\{ Y>u\right\} }\le z_2 \end{aligned}$$(63)

for every \(u>0\). Then, Y has a subexponential distribution.

(v) Assume that Y is unbounded and satisfies

$$\begin{aligned} \sup _{u>0}\frac{{\mathbb {P}}\left\{ Y>u\right\} }{{\mathbb {P}}\left\{ X>u\right\} }<\infty , \end{aligned}$$(64)

and, for every \(h>0\),

$$\begin{aligned} {\mathbb {P}}\left\{ Y>u\right\} \sim {\mathbb {P}}\left\{ Y>u+h\right\} \ \ \text {as}\ \ u\rightarrow \infty . \end{aligned}$$(65)

Then,

$$\begin{aligned} {\mathbb {P}}\left\{ X+Y>u\right\} \sim {\mathbb {P}}\left\{ X>u\right\} +{\mathbb {P}}\left\{ Y>u\right\} \ \ \text {as}\ \ u\rightarrow \infty . \end{aligned}$$(66)

(vi) Assume that Y has a Poisson distribution with mean \(\gamma >0\). If \(X,X_1,X_2,\dots \) is an iid sequence of random variables which is independent of Y, then \(S_Y\equiv X_1+X_2+\ldots +X_Y\) has a subexponential distribution such that

$$\begin{aligned} {\mathbb {P}}\left\{ S_Y> u\right\} \sim \gamma {\mathbb {P}}\{X > u\}\ \ \text {as}\ \ u\rightarrow \infty \,. \end{aligned}$$(67)
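Lemma 1(vi) can be checked numerically. The sketch below, under our own assumptions, estimates \({\mathbb {P}}\{S_Y>u\}\) by Monte Carlo for the Pareto law \({\mathbb {P}}\{X>y\}=y^{-\alpha }\), \(y\ge 1\), which is subexponential, and compares it with \(\gamma {\mathbb {P}}\{X>u\}\) from (67). The distribution, parameters, sample size and seed are illustrative choices. Python's `random.Random.paretovariate` draws exactly this Pareto law; the Poisson sampler is a hand-written multiplication-of-uniforms routine, since the standard library has none.

```python
import math
import random


def poisson_sample(mean: float, rng: random.Random) -> int:
    """Poisson(mean) draw: count uniforms multiplied before the product drops below exp(-mean)."""
    threshold = math.exp(-mean)
    count, product = 0, rng.random()
    while product > threshold:
        count += 1
        product *= rng.random()
    return count


def compound_poisson_tail(gamma: float, alpha: float, u: float, samples: int, seed: int = 1) -> float:
    """Monte Carlo estimate of P{X_1 + ... + X_Y > u}, Y ~ Poisson(gamma), X_i ~ Pareto(alpha) on [1, inf)."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(samples):
        y = poisson_sample(gamma, rng)
        if sum(rng.paretovariate(alpha) for _ in range(y)) > u:
            hits += 1
    return hits / samples


gamma, alpha, u = 2.0, 1.5, 50.0
estimate = compound_poisson_tail(gamma, alpha, u, samples=100_000)
asymptotic = gamma * u ** (-alpha)  # gamma * P{X > u} for the tail y^(-alpha)
ratio = estimate / asymptotic
print(f"MC: {estimate:.5f}  asymptotic: {asymptotic:.5f}  ratio: {ratio:.2f}")
```

Because (67) is only an asymptotic equivalence, the ratio at a moderate level u need not be close to 1; it approaches 1 slowly as u grows (which in turn requires larger samples to estimate).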

Proof

(Lemma 1) (i) is an immediate consequence of the definition in (52). (ii) is [13, Lemma 3.2], (iii) is [4, Corollary 2.5], (iv) is due to [27, Theorem 2.1(a)], (v) is [12, Lemma 2] and (vi) is due to [11, Theorem 3]. \(\square \)

Proof

(Theorem 3) We prove only (57); the proof of (56) follows from similar, and more standard, arguments.

By assumption, \(G(\cdot )\) is subexponential. Thus, by (53) and Lemma 1(iv), B has a subexponential distribution. Next, for every \(1\le i\le d-1\), let

Therefore, Theorem 1 yields that

Since \(S_1(\cdot )\) has stationary and independent increments, the following hold:

1. \(\left\{ q_j{\hat{S}}_j;j\in {\mathcal {J}}\right\} \) is a collection of independent random variables.

2. For each \(j\in {\mathcal {J}}\), the random variable \(q_j{\hat{S}}_j\) has a compound Poisson distribution with rate \(\lambda \left( {\widetilde{w}}_{j+1}-{\widetilde{w}}_j\right) \) and jumps distributed like \(q_jB\).

In particular, for each \(j\in {\mathcal {J}}\), \(q_jB\) has a subexponential distribution by Lemma 1(i), and hence Lemma 1(vi) implies that \(q_j{\hat{S}}_j\) has a subexponential distribution such that

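Let \(r\equiv \left| {\mathcal {J}}\right| \) and, relabeling the elements of \({\mathcal {J}}\) if necessary, assume without loss of generality that \({\mathcal {J}}=\{1,\ldots ,r\}\) and \(q_1\le q_2\le \ldots \le q_r\). In particular, observe that for every \(1\le i_1<i_2\le r\),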

Thus, Lemma 1(v) implies that

In particular, (72) and the assumption that \(G(\cdot )\) is subexponential imply that \(q_1{\hat{S}}_1+q_2{\hat{S}}_2\) is a nonnegative random variable which is independent of \(q_3{\hat{S}}_3\) and satisfies condition (65). Therefore, Lemma 1(v) and (71) can be applied once again to deduce that

such that \(q_1{\hat{S}}_1+q_2{\hat{S}}_2+q_3{\hat{S}}_3\) is a nonnegative random variable which is independent of \(q_4{\hat{S}}_4\) and satisfies condition (65). Repeating this argument yields

such that \(\sum _{i=1}^rq_i{\hat{S}}_i\) satisfies the condition (65). Therefore, (57) follows via (69) with Lemma 1(ii). Finally, since \(q_r{\hat{S}}_r\) has a subexponential distribution, (57) implies that \({\mathcal {E}}_L(x,{\varvec{v}})\) has a subexponential distribution via Lemma 1(iv) (see also Remark 12).

\(\square \)

The proof of Theorem 4 relies on [24, Theorem 2], which we restate here.

Theorem 5

(Jacobovic and Mandjes [24]) Assume that \(M_1\), \(M_2\), \((\psi _{m,1})_{m=1}^\infty \), \((\psi _{m,2})_{m=1}^\infty \), \((\varphi _m)_{m=1}^\infty \) are independent random variables such that:

1. \(M_1\sim \text {Poi}(\lambda v)\) and \(M_2\sim \text {Poi}(\lambda x)\).

2. \((\psi _{m,1})_{m=1}^\infty \) and \((\psi _{m,2})_{m=1}^\infty \) are two iid sequences of random variables which are distributed like N.

3. \((\varphi _m)_{m=1}^\infty \) is an iid sequence of random variables which are distributed uniformly on [0, 1].

Then,

Proof

(Theorem 4) Assume that \(v>0\); the case \(v=0\) follows from the same arguments and is much simpler.

Consider the random variables that appear in the statement of Theorem 5. Since \(G(\cdot )\) is subexponential and (54) holds, Lemma 1(iv) implies that N has a subexponential distribution. Therefore, Lemma 1(vi) implies that \(\sum _{m=1}^{M_1}\psi _{m,1}\) has a subexponential distribution such that

In addition, as was shown above, \(\psi _{1,2}\) has a subexponential distribution and \(\varphi _1\) is bounded from above. As a result, Lemma 1(iii) implies that \(\psi _{1,2}\varphi _1\) has a subexponential distribution. Consequently, Lemma 1(vi) yields that \(\sum _{m=1}^{M_2}\psi _{m,2}\varphi _m\) has a subexponential distribution such that

To evaluate the probability in the RHS, Fatou’s lemma and (54) yield

Similarly, applying the reverse Fatou lemma together with (54) gives

Now, for every \(u>0\)

Using Lemma 1(vi), the upper bound in (80) converges to \(\frac{x}{v}\) as \(u\rightarrow \infty \). Therefore,

and hence Lemma 1(v) implies that

from which (60) follows.

Due to Lemma 1(iv), this yields that \({\mathcal {E}}_F(x,{\varvec{v}})/x \) has a subexponential distribution, and hence \({\mathcal {E}}_F(x,{\varvec{v}})\) also has a subexponential distribution via Lemma 1(i). \(\square \)

7 Functional CLT

The model which was described in Sect. 2 is characterized by the triplet \((\lambda , G(\cdot ), {\varvec{v}})\). Fix \({\varvec{v}} = (v_1,\dots ,v_n)\) with positive coordinates, and consider a sequence (in m) of models

such that the m-th model is associated with an arrival rate \(\lambda _m\) and service distribution \(G_m(\cdot )\). Accordingly, for each \(m\ge 1\) let:

In addition, for each \(m \ge 1\), denote the externalities process under LCFS-PR in the m-th model by \({\mathcal {E}}_L^{(m)}(\cdot ,{\varvec{v}},n)\) and let \(B_m\) be a random variable distributed like the length of the busy period in the m-th model. In particular, the fixed-point relation (28) yields that, for each \(m\ge 1\) (see also [5, p. 251, (II.4.66)]),

and hence
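For concrete sequences of models, the busy-period moments that enter Theorem 6 can be computed from the service-time moments. The sketch below uses the classical M/G/1 formulas \({\mathbb {E}}[B]=\mu _1/(1-\rho )\), \({\mathbb {E}}[B^2]=\mu _2/(1-\rho )^3\) and \({\mathbb {E}}[B^3]=\mu _3/(1-\rho )^4+3\lambda \mu _2^2/(1-\rho )^5\), where \(\mu _i\) denotes the i-th moment of the service time; they follow by conditioning on the service time that starts the busy period. Reading \(\sigma _m\) and \(\nu _m\) in Condition (III) as the second and third moments of \(B_m\) is our assumption, suggested by the normalization \(\sqrt{\lambda _m\sigma _m}\) in the proof of Theorem 6; the function names are hypothetical.

```python
import math


def busy_period_moments(lam: float, mu1: float, mu2: float, mu3: float):
    """First three moments of the M/G/1 busy period from the service-time moments mu1, mu2, mu3."""
    rho = lam * mu1
    if not 0.0 <= rho < 1.0:
        raise ValueError("stability requires rho = lam * mu1 < 1")
    eb1 = mu1 / (1.0 - rho)
    eb2 = mu2 / (1.0 - rho) ** 3
    eb3 = mu3 / (1.0 - rho) ** 4 + 3.0 * lam * mu2 ** 2 / (1.0 - rho) ** 5
    return eb1, eb2, eb3


def lyapunov_ratio(lam: float, eb2: float, eb3: float) -> float:
    """nu / sqrt(lam * sigma^3), reading sigma = E[B^2] and nu = E[B^3] (an assumption)."""
    return eb3 / math.sqrt(lam * eb2 ** 3)


# Exponential(1) service times: mu1 = 1, mu2 = 2, mu3 = 6; with lam = 0.5, rho = 0.5.
eb1, eb2, eb3 = busy_period_moments(0.5, 1.0, 2.0, 6.0)
print(eb1, eb2, eb3)  # 2.0 16.0 288.0
print(lyapunov_ratio(0.5, eb2, eb3))
```

For exponential(1) service and \(\lambda =0.5\), the value \({\mathbb {E}}[B]=2\) agrees with the familiar M/M/1 busy-period mean \(1/(1-\lambda )\).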

Theorem 6

For \(m\ge 1\), define a stochastic process (in x):

In addition, let \(W(\cdot )\) be a standard Wiener process on \([0,\infty )\) and define a stochastic process (in x):

Assume that all of the following conditions are satisfied:

(I): \(\lambda _m \rightarrow \infty \) as \(m \rightarrow \infty \),

(II): There is \(m' \ge 1\) such that \(\rho _m < 1\) for every \(m \ge m'\),

(III): \(\frac{\nu _m}{\sqrt{\lambda _m\sigma _m^3}} \rightarrow 0\) as \(m \rightarrow \infty \).

Then,

where \(\Rightarrow \) denotes weak convergence on \({\mathcal {D}}[0,\infty )\) equipped with the uniform metric (on compacta).

Proof

For each \(m\ge 1\), let \(S^{(m)}(\cdot )\) be a compensated compound Poisson process with rate \(\lambda _m\) and jumps which are distributed like \(B_m\).

Let \(K>0\). By [24, Theorem 5], Conditions (I)-(III) together imply that

where \(\Rightarrow \) denotes weak convergence in \({\mathcal {D}}[0,\infty )\) equipped with the uniform metric on compacta. Since the limit process is concentrated on \(C[0,x+v]\), the representation theorem [31, Theorem IV.13] yields that there is a probability space with stochastic processes \({\widetilde{W}}(\cdot )\) and \(\left\{ {\widetilde{S}}_m(\cdot );m\ge 1\right\} \) such that:

1. \({\widetilde{W}}(\cdot ) \overset{d}{=}\ W(\cdot )\).

2. \({\widetilde{S}}_m(\cdot ) \overset{d}{=}\ S^{(m)}(\cdot )\) for every \(m\ge 1\).

3. The following holds:

$$\begin{aligned} \lim _{m\rightarrow \infty }\sup _{0 \le y \le v + x} \left| \frac{{\widetilde{S}}_m(y)}{\sqrt{\lambda _m \sigma _m}} - {\widetilde{W}}(y) \right| = 0\ \ \text {w.p.\ 1}. \end{aligned}$$(86)

Then, notice that

and the RHS tends to zero with probability one. As a result, by Theorem 1, the sequence of processes \(\hat{{\mathcal {E}}}_{{\varvec{v}}}^{(m)}(\cdot )\) converges weakly to \(H_{{\varvec{v}}}(\cdot )\) on \({\mathcal {D}}[0,K]\) equipped with the uniform metric. Since this convergence holds for every \(K>0\) and the limiting process \(H_{{\varvec{v}}}(\cdot )\) is concentrated on \(C[0,\infty )\), it extends to \({\mathcal {D}}[0,\infty )\) via [31, Theorem V.23], and the result follows. \(\square \)

7.1 The conditions

The conditions of Theorem 6 are not straightforward. Condition (I) states that \(\lambda _m\rightarrow \infty \) as \(m\rightarrow \infty \). Since \(\rho _m=\lambda _m\mu _{1,m}\), Condition (II) then implies that \(\mu _{1,m}\) vanishes as \(m\rightarrow \infty \). In addition, since \(\lambda _m\rightarrow \infty \), it must be that \(\mu _{2,m}\rightarrow \infty \) as \(m\rightarrow \infty \) in order to make the first term of (85) vanish.

Below, we give an example in which all the conditions of Theorem 6 are satisfied, which shows that Theorem 6 is not vacuous. That said, it would be interesting to look for more general conditions under which the asymptotic distribution of the externalities process can be identified.

7.1.1 Example

For each \(m\ge 1\), assume that \(G_m(\cdot )\) is a distribution function of a random variable \(\tau _m\) such that

for some \(p,k>0\). Then, for each \(m\ge 1\), observe that

Also, let \(\lambda _m\equiv q m^{p-k}\) for some \(q\in (0,1)\). Condition (I) is then satisfied if and only if \(p>k\). Moreover, \(\rho _m=q<1\), so Condition (II) holds as well. It remains to check Condition (III). To this end, note that

and hence we require \(6p-11k<0\). Similarly, note that

and hence we require \(-5.5k+3p<0\), which (after multiplication by 2) is the same requirement as \(6p-11k<0\). Thus, for any \(p,k>0\) such that \(\frac{6p}{11}<k<p\), all the conditions of Theorem 6 are satisfied. Indeed, one may verify that, for such p and k, \(\mu _{1,m}\rightarrow 0\) and \(\mu _{2,m}\rightarrow \infty \) as \(m\rightarrow \infty \).
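The parameter constraints of the example can be encoded directly. The sketch below (hypothetical helper names) checks the range \(\frac{6p}{11}<k<p\) and evaluates \(\lambda _m=qm^{p-k}\) together with \(\mu _{1,m}=\rho _m/\lambda _m=m^{k-p}\), which uses \(\rho _m=q\) and assumes \(\rho _m=\lambda _m\mu _{1,m}\). It does not verify Condition (III) itself, since those computations depend on the distribution of \(\tau _m\).

```python
def example_conditions(p: float, k: float) -> bool:
    """Range from Example 7.1.1: p, k > 0 with 6p/11 < k < p.

    k < p gives Condition (I); 6p - 11k < 0 is the requirement derived for Condition (III).
    """
    return p > 0 and k > 0 and 6 * p / 11 < k < p


def rate_and_mean(m: int, p: float, k: float, q: float) -> tuple:
    """lambda_m = q * m**(p - k) and mu_{1,m} = rho_m / lambda_m with rho_m = q."""
    lam = q * m ** (p - k)
    return lam, q / lam


p, k, q = 2.0, 1.5, 0.5
print(example_conditions(p, k))  # True, since 12/11 < 1.5 < 2
for m in (10, 100, 1000):
    lam, mu1 = rate_and_mean(m, p, k, q)
    print(m, lam, mu1)  # lambda_m grows and mu_{1,m} shrinks like m**0.5 and m**-0.5
```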

7.2 Open problem: Is \({\mathcal {E}}(x,{\varvec{v}})\) asymptotically Gaussian?

Theorem 6 is a functional CLT for the process \(x\mapsto {\mathcal {E}}_L(x,{\varvec{v}})\). An analogous result for \(x\mapsto {\mathcal {E}}_F(x,{\varvec{v}})\) appears in previous work [24, Theorem 4]. Both univariate results rely on the bivariate decomposition given in Theorem 1 together with [24, Theorem 5]. Since the decomposition in Theorem 1 is bivariate, it seems promising to unify both results into a bivariate functional CLT for \(x\mapsto \left( {\mathcal {E}}_L(x,{\varvec{v}}),{\mathcal {E}}_F(x,{\varvec{v}})\right) \). The proof of such a result would likely require a bivariate version of [24, Theorem 5], which is yet to be developed and may be complicated. To keep the focus of the current work, we do not pursue this generalization here and only mention the possibility of such a bivariate functional CLT in passing.

8 Discussion

This work concerns the externalities in an M/G/1 queue under the LCFS-PR and FCFS disciplines. The cornerstone of the current analysis is Theorem 1: the externalities have a joint decomposition in terms of a bivariate compound Poisson process with an arrival rate \(\lambda \) and jumps which are distributed like (B, N). We demonstrated the usefulness of this decomposition via several applications, including moment computations, approximations of the externalities as \(x\rightarrow \infty \), tail asymptotics of the externalities and a functional CLT. The rest of this section discusses the particular meaning of the externalities analysis under FCFS and LCFS-PR.

Observe that under FCFS, the additional customer affects only customers who arrive after her. By contrast, under LCFS-PR, the additional customer affects only customers who arrived before her. In that sense, FCFS and LCFS-PR are edge cases. As demonstrated in Sect. 3.2, there are service disciplines under which the additional customer affects both some customers who were in the queue at time \(t=0\) and some customers who arrive after this time. The externalities in these intermediate cases have not been analysed. It might be interesting to examine in what sense their properties interpolate between those of the externalities under FCFS and LCFS-PR.

References

[1] Asmussen, S., Klüppelberg, C., Sigman, K.: Sampling at subexponential times, with queueing applications. Stoch. Process. Appl. 79, 265–286 (1999)

[2] Baltrunas, A., Daley, D.J., Klüppelberg, C.: Tail behaviour of the busy period of a GI/GI/1 queue with subexponential service times. Stoch. Process. Appl. 111, 237–258 (2004)

[3] Chan, C.W., Huang, M., Sarhangian, V.: Dynamic server assignment in multiclass queues with shifts, with applications to nurse staffing in emergency departments. Oper. Res. 69, 1936–1959 (2021)

[4] Cline, D.B., Samorodnitsky, G.: Subexponentiality of the product of independent random variables. Stoch. Process. Appl. 49, 75–98 (1994)

[5] Cohen, J.W.: The Single Server Queue. North-Holland Publishing Company, Amsterdam (1969)

[6] Cohen, J.W.: Some results on regular variation for distributions in queueing and fluctuation theory. J. Appl. Probab. 10, 343–353 (1973)

[7] Dȩbicki, K., Mandjes, M.: Queues and Lévy Fluctuation Theory. Springer, New York (2015)

[8] De Meyer, A., Teugels, J.L.: On the asymptotic behaviour of the distributions of the busy period and service time in M/G/1. J. Appl. Probab. 17, 802–813 (1980)

[9] Denisov, D.E., Shneer, V.: Global and local asymptotics for the busy period of an M/G/1 queue. Queueing Syst. 64, 383–393 (2010)

[10] Doney, R.A.: On the asymptotic behaviour of first passage times for transient random walks. Probab. Theory Relat. Fields 81, 239–246 (1989)

[11] Embrechts, P., Goldie, C.M., Veraverbeke, N.: Subexponentiality and infinite divisibility. Probab. Theory Relat. Fields 49, 335–347 (1979)

[12] Embrechts, P., Goldie, C.M.: On closure and factorization properties of subexponential and related distributions. J. Aust. Math. Soc. 29, 243–256 (1980)

[13] Foss, S., Korshunov, D., Zachary, S.: An Introduction to Heavy-Tailed and Subexponential Distributions. Springer, New York (2011)

[14] Geluk, J., Tang, Q.: Asymptotic tail probabilities of sums of dependent subexponential random variables. J. Theor. Probab. 22, 871–882 (2009)

[15] Glynn, P.W., Jacobovic, R., Mandjes, M.: Moments of polynomial functionals in Lévy-driven queues with secondary jumps. Unpublished manuscript (2023)

[16] Goldie, C.M.: Subexponential distributions and dominated-variation tails. J. Appl. Probab. 15, 440–442 (1978)

[17] Grandell, J.: Mixed Poisson Processes, vol. 77. CRC Press, Boca Raton (1997)

[18] Haviv, M., Ritov, Y.A.: Externalities, tangible externalities and queue disciplines. Manage. Sci. 44, 850–858 (1998)

[19] Hazra, R.S., Maulik, K.: Tail behavior of randomly weighted sums. Adv. Appl. Probab. 44, 794–814 (2012)

[20] Hu, Y., Chan, C.W., Dong, J.: Optimal scheduling of proactive service with customer deterioration and improvement. Manage. Sci. 68, 2533–2578 (2022)

[21] Huang, J., Carmeli, B., Mandelbaum, A.: Control of patient flow in emergency departments, or multiclass queues with deadlines and feedback. Oper. Res. 63, 892–908 (2015)

[22] Jacobovic, R.: Internalization of externalities in queues with discretionary services. Queueing Syst. 100, 453–455 (2022)

[23] Jacobovic, R.: Regulation of a single-server queue with customers who dynamically choose their service durations. Queueing Syst. 101, 245–290 (2022)

[24] Jacobovic, R., Mandjes, M.: Externalities in queues as stochastic processes: the case of FCFS M/G/1. Stoch. Syst. (2022). https://doi.org/10.48550/arXiv.2207.02599

[25] Jelenkovic, P.R., Momcilovic, P.: Large deviations of square root insensitive random sums. Math. Oper. Res. 29, 398–406 (2004)

[26] Kelly, F.P.: The departure process from a queueing system. Math. Proc. Camb. Philos. Soc. 80, 283–285 (1976)

[27] Klüppelberg, C.: Subexponential distributions and integrated tails. J. Appl. Probab. 25, 132–141 (1988)

[28] Kulik, R., Soulier, P.: Heavy-Tailed Time Series. Springer, New York (2020)

[29] Liu, Y., Sun, X., Hovey, K.: Scheduling to differentiate service in a multiclass service system. Oper. Res. 70, 527–544 (2022)

[30] Markowitz, H.M., Todd, G.P.: Mean-Variance Analysis in Portfolio Choice and Capital Markets, vol. 66. Wiley, Hoboken (2000)

[31] Pollard, D.: Convergence of Stochastic Processes. Springer, New York (2012)

[32] Tang, Q.: Insensitivity to negative dependence of asymptotic tail probabilities of sums and maxima of sums. Stoch. Anal. Appl. 26, 435–450 (2008)

[33] Zhang, Y., Shen, X., Weng, C.: Approximation of the tail probability of randomly weighted sums and applications. Stoch. Process. Appl. 119, 655–675 (2009)

[34] Zwart, A.P.: Tail asymptotics for the busy period in the GI/G/1 queue. Math. Oper. Res. 26, 485–493 (2001)

Acknowledgements

This research is funded by the NWO Gravitation project networks under grant no. 024.002.003.


Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Jacobovic, R., Levering, N. & Boxma, O. Externalities in the M/G/1 queue: LCFS-PR versus FCFS. Queueing Syst 104, 239–267 (2023). https://doi.org/10.1007/s11134-023-09878-8
