Abstract

Quantum annealing has the potential to find low energy solutions of NP-hard problems that can be expressed as quadratic unconstrained binary optimization problems. However, the hardware of the quantum annealer manufactured by D-Wave Systems, which we consider in this work, is sparsely connected and moderately sized (on the order of thousands of qubits), thus necessitating a minor-embedding of a logical problem onto the physical qubit hardware. The combination of relatively small hardware sizes and the necessity of a minor-embedding can mean that solving large optimization problems is not possible on current quantum annealers. In this research, we show that a hybrid approach combining parallel quantum annealing with graph decomposition allows one to solve larger optimization problem accurately. We apply the approach to the Maximum Clique problem on graphs with up to 120 nodes and 6395 edges.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Quantum annealers are devices that aim to use quantum mechanical fluctuations to search for low energy solutions to combinatorial optimization problems [1,2,3,4]. Quantum annealers have been physically manufactured, such as those manufactured by D-Wave Systems, Inc. [5,6,7,8,9], and allow approximate solutions of NP-hard problems to be computed that are hard to solve classically. D-Wave quantum annealers are designed to find high quality solutions of so-called quadratic unconstrained binary optimization (QUBO) and Ising problems by minimizing the function

where the linear weights \(a_i \in {\mathbb {R}}\), \(i \in \{1,\ldots ,n\}\), and the quadratic couplers \(a_{ij} \in {\mathbb {R}}\) for \(i<j\) are chosen by the user to define the problem under investigation. The variables \(x_i\), \(i \in \{1,\ldots ,n\}\), are binary and unknown. The function of Eq. (1) is called a QUBO if \(x_i \in \{0,1\}\), and an Ising problem if \(x_i \in \{-1,+1\}\) for all \(i \in \{1,\ldots ,n\}\). Both QUBO and Ising formulations are equivalent [10, 11].

After mapping Eq. (1) to the physical quantum system, the D-Wave quantum annealer aims to find the values of \(x_1,\ldots ,x_n\) that minimize H by trying to obtain a minimum-energy state of the quantum system. To this end, the coefficients of the linear terms in Eq. (1) are mapped onto the corresponding qubits’ physical parameters, and the coefficients for the quadratic terms are mapped onto parameters of the links connecting the corresponding qubits. However, this process is subject to a variety of constraints in practice, which limit the applicability of the quantum annealer. First, the number of available hardware qubits is relatively small, which restricts the admissible problem size of Eq. (1). Second, the connectivity of the hardware qubits on the D-Wave quantum chip is limited and local. Therefore, for two logical qubits \(x_i\) and \(x_j\) with \(a_{ij} \ne 0\) in Eq. (1), it is not guaranteed that a physical link between qubits i and j exists on the quantum hardware. To alleviate this issue, a minor embedding of the connectivity structure of Eq. (1) onto the D-Wave qubit hardware graph is needed, where one logical qubit is represented by a connected set of physical qubits, called a chain. However, the existence of chains often results in a severe reduction in the number of available qubits [11, 12]. Third, excessive chain lengths may cause the solution quality to decrease [13,14,15]. Therefore, the quality of the solutions found by the D-Wave annealer is typically higher for smaller problems and, specifically, for problems with shorter chain lengths.

This research shows that, by combining an exact classical graph decomposition algorithm [16] with a method for solving multiple smaller problems on the quantum annealing in parallel [17], one can solve accurately problems of sizes larger than what fits on the quantum annealer hardware. We focus on solving the Maximum Clique (MC) problem, an NP-hard problem with important applications in network analysis, bioinformatics, and computational chemistry [16]. We test the method on Erdős-Rényi random graphs [18] of up to 120 vertices. The decomposition algorithm splits a given graph instance for which MC is to be computed into subproblems that are strictly smaller than the input graph. By applying the decomposition in a recursive fashion, an arbitrary sized input can be broken down to subproblems suitable for the D-Wave quantum annealer. Importantly, though the algorithm of [16] has an exponential worst-case complexity, it is exact, in the sense that an optimal solution of the input problem is guaranteed given all generated subproblems are solved exactly by the quantum annealer.

This work is an extension of the two articles of [16, 19], in which the decomposition algorithm for the MC problem was introduced. However, both previous articles only investigated the decomposition from a classical point of view, in the sense that the generated subproblems were never actually solved on the D-Wave quantum annealer. In contrast to the previous works, in this contribution we employ disjoint clique embeddings to actually solve the generated problems simultaneously on the D-Wave quantum hardware. This is possible due to the advancement in the quantum annealing hardware, and particularly the availability of the D-Wave Advantage computers with over 5000 qubits, and the parallel quantum annealing method introduced in [17]. Parallel quantum annealing can be equivalently referred to as tiling.Footnote 1 We note that a similar paradigm of parallel computation exists for universal gate model quantum computers [20,21,22]. We investigate the performance and solution quality of the proposed quantum algorithms to solve the MC problem on multiple graphs. Performance measures include the number of subgraphs generated as a function of the recursion cutoff, the number of disjoint clique embeddings used for parallel quantum annealing, the success rate of finding ground states (optimal solutions) at certain subgraph sizes, and time-to-solution (TTS) measurements for finding a maximum clique.

This work is structured as follows. After a literature review in Sects. 1.1 and 2 introduces the MC problem and its formulation as a QUBO and introduces the decomposition method for MC, as well as discusses a generalization of the TTS metric for solving MC instances with parallel embeddings. All experimental results on using D-Wave in connection with decomposition and parallel embeddings can be found in Sect. 3. The article concludes with a discussion in Sect. 4.

1.1 Literature review

Exact algorithms for solving NP-hard problems on real-life problems of practical importance have received continuous attention in the literature [23,24,25]. One can broadly distinguish between three flavors of such methods: exact exponential-time algorithms to decompose NP-hard problems, (polynomial-time) algorithms for special cases of NP instances, and hybrid algorithms relying on both decomposition and quantum annealing.

The idea of an exact decomposition for NP-hard problems, which also lays at the heart of the present contribution, dates back to at least [26]. Similar techniques for other NP-hard problems such as graph coloring are known [27]. For MC, the exact decomposition algorithm that serves as the basis of the present work has been proposed in [11]. In [16], the authors consider several techniques to speed up the decomposition by pruning subproblems that cannot contribute to the optimal solution, for instance by computing bounds on clique sizes in subproblems. Although bounding and pruning subproblems can considerably speed up the computations and allow one to solve problems of practical importance exactly, there is no guarantee that exact decomposition techniques terminate in reasonable time, and they do not asymptotically lower the exponential runtime complexity.

For the Maximum Independent Set problem, the complimentary problem of MC, a variety of exact algorithms are available [28,29,30,31]. Additionally, special cases can be solved in polynomial-time [32,33,34]. Some known algorithms for such special cases rely on graph decomposition [35, 36]. Similarly to before, the aforementioned exact techniques still have an exponential runtime complexity, and the special cases that can be solved in polynomial time are rather specific, meaning they usually do not apply to problems of practical importance.

The algorithm of [16] has been generalized in [37] to decompose large Minimum Vertex Cover (MVC) problems, a related problem to MC, and turned into a framework for decomposing certain NP-hard graph problems that does not explicitly consider quantum annealing [19]. Further exponential-time algorithms for MVC have been proposed [38,39,40,41], including some aiming to reduce the size of an MVC instance [42, 43], and some considering the weighted MVC problem [44, 45].

The related problem of enumerating all maximum cliques of a graph has been addressed in the literature with the algorithms of [46, 47], which both do not rely on graph decomposition. A parallel version of the algorithm of [46] can be found in [48].

The present work falls under the area of branch-and-prune algorithms, specifically those that employ a different solver once the generated subproblems of the decomposition are sufficiently small. A survey of such algorithms can be found in [49] and [50]. Recent works that specifically employ the D-Wave quantum annealer in connection with a classical decomposition include [51], who use the heuristic D-Wave tool “QBsolv”.

Although having their merits for practical use, the aforementioned algorithms cannot solve arbitrary instances in time that is asymptotically lower than exponential. Moreover, techniques using decomposition in connection with quantum annealing do not give a guarantee of optimality, as solutions of subproblems solved via quantum annealing are of a heuristic nature.

Finally, a survey of classical simplification techniques for QUBO problems, including problem-agnostic decomposition algorithms for QUBOs that are applicable in special cases, can be found in [52, 53].

2 Methods

This section introduces the MC problem that we aim to solve (Sect. 2.1) and the DBK algorithm, which is able to decompose an MC instance recursively into smaller instances of prespecified size (Sect. 2.2). Moreover, we introduce the D-Wave Advantage System 4.1 and its hardware (Sect. 2.3), as well as the idea of parallel quantum annealing (Sect. 2.4), which allows one to solve several problem instances in a single call to the quantum annealer. A generalization of the time-to-solution (TTS) metric for measuring the performance of the DBK algorithm is introduced in Sect. 2.5.

2.1 The maximum clique problem

Let \(G=(V,E)\) be an undirected graph with vertex set V and edge set \(E \subseteq V \times V\). A subgraph G(S) of G induced by a subset \(S \subseteq V\) is called a clique of G if it is a complete subgraph, that is, if \((v,w) \in E\) for all \(v,w \in S\), \(v \ne w\). A maximum clique of G is a clique of G of maximum size.

Many NP-hard problems can be easily reformulated as QUBO or Ising problems of the form of Eq. (1). This includes, for instance, graph coloring, graph partitioning, or the MC problem [10]. As given in [16], the QUBO formulation for the MC problem on a graph \(G=(V,E)\) is given by

where \({\overline{E}}\) denotes the edge set of the complement graph of G and the constants \(A>0\) and \(B>0\) need to satisfy \(A<B\). Without loss of generality, we fix \(A=1\) and \(B=2\) in the remainder of this work. Each binary variable \(x_v \in \{0,1\}\) for \(v \in V\) indicates if vertex v belongs to the maximum clique (\(x_v=1\)) or not (\(x_v=0\)).

2.2 The DBK algorithm

The DBK algorithm [16, 19, 37] was designed to decompose an input graph recursively into smaller subgraphs such that, in each recursion level, (a) each generated subgraph is strictly smaller than the graph that was split, and (b) the maximum clique of the input graph can be reconstructed exactly from the maximum cliques determined for the leaf graphs, given all subgraphs can be solved exactly. The name of the algorithm stands for decomposition, bounds, and k-core. These three components work as follows.

First, the decomposition of an input graph G is illustrated in Fig. 1. We choose a random vertex \(v \in G\) and use it to split G into two graphs, the subgraph \(G_v\) induced by the neighbor vertices of v, and the subgraph \(G'\) obtained by removing v from G including all its incident edges. If v is part of a maximum clique then that maximum clique, minus vertex v, will be contained in \(G_v\). Hence, the size of the maximum clique of \(G_v\) will be one less than the size of the maximum clique of G. On the other hand, if v is not part of a maximum clique, then v can be removed from G, resulting in a new graph \(G'\) that contains the same maximum clique as G. Therefore, the maximum clique can be reconstructed after determining the maximum clique in both \(G_v\) and \(G'\), with each of these graphs being strictly smaller than G. The decomposition is valid irrespective of the choice of the vertex v used for splitting the graph, though some choices might be advantageous in that they make the algorithm terminate faster. As shown in [16, 19], choosing v as the vertex of lowest degree yields the fastest solutions.

Second, before recursing into any of the generated subgraphs, the size of the clique contained in a subgraph can be lower and upper bounded using various techniques. For instance, the chromatic number computed with a fast heuristic (we use the Python module NetworkX of [54]), and the upper bound of [55] provide efficiently computable upper bounds. Likewise, a trivial lower bound on any subproblem in the decomposition tree is obtained by simply solving the other subbranch. These upper and lower bounds are employed in the DBK algorithm. These bounds were selected due to their low runtime complexity and good performance compared to other bounds (thus allowing one to prune many subproblems).

Third, the DBK algorithm attempts to simplify any newly generated subproblem in the decomposition tree before solving it further in a recursive fashion. After exploring several techniques, the k-core algorithm was selected in [16]. This algorithm works as follows. The k-code of a graph is the subgraph consisting of all vertices having degree at least k. Clearly, at any point during the decomposition, one can simplify a subgraph by replacing it with its k-core, where k is set to the largest clique number found so far, thus potentially reducing the size of some of the subproblems before continuing the recursion.

The complete algorithm is summarized in Algorithm 1, which uses a stack called subgraphs instead of a recursion. Starting from the input graph G, Algorithm 1 first queries a lower bound on the maximum clique and then simplifies G by replacing it with its k-core. Next, G is split at the lowest degree vertex of G. Both resulting subgraphs, denoted \(g'\) and \(g''\), are then examined recursively. After replacing them with their k-core as done before, the maximum clique size is lower bounded using the MCs found in \(g'\) and \(g''\) and k is updated if necessary. If the size of a subgraph is at most L, the size cutoff at which we attempt to solve the maximum clique problem, we query the provided solver method, otherwise a subgraph is added to the stack subgraph for further decomposition. Though proven to be exact, the DBK algorithm has a worst-case exponential runtime (which is to be expected since the MC problem is NP-hard).

The DBK algorithm can be specifically defined based on what Maximum Clique solver method is used. For example a heuristic Maximum Clique solver could be used, in which case, despite the decomposition being exact, the solution may not be optimal. In this case we use two different versions of DBK.

The first we call DBK-pQA; here the Maximum Clique problem is sampled using the D-Wave Advantage System 4.1 quantum annealer with parallel quantum annealing. In this case the solver method that is used in the DBK algorithm (line 16 of Algorithm 1) is querying the quantum annealer for 1000 samples, which are then post processed into solutions for the maximum clique problem. The largest clique found in those 1000 samples is returned to the main DBK algorithm. The DBK-pQA method is a hybrid quantum-classical algorithm.

The second variant of DBK we call DBK-fmc. In this case the Maximum Clique solver method (line 16 of Algorithm 1) is the Fast Maximum Clique solver of [56], which is an exact classical solver. Therefore the DBK-fmc algorithm is guaranteed to find the Maximum Clique of the given graph.

Illustration of the DBK vertex splitting applied to vertex v, resulting in the induced subgraph \(G_v\) of v, and a graph \(G'=(V',E')\) with \(V'=V \setminus \{v\}\) and all edges incident to v removed from E. Figure taken from [16]

2.3 The D-wave advantage system 4.1

The hardware connectivity of the D-Wave Advantage System systems is referred to as Pegasus [57], and the connectivity graphs of the D-Wave 2000Q systems are referred to as Chimera [58, 59]. Both of these connectivity graphs are relatively sparse, and they do not allow for the direct mapping of problem QUBO or Ising formulations with arbitrary connectivity onto the hardware. As an alternative to the direct embedding, a minor embedding allows a problem with arbitrary connectivity (up to the hard limit of the size of the hardware) to be embedded [12]. In a minor embedding, a representation of the logical problem is created in which sets of physical qubits are linked together into chains [60], where each chain represents a single logical qubit. Usually, the programmed weight of the logical variable is uniformly distributed across the chain qubits, although alternative weight distributions are possible [61, 62]. Computing a minor embedding with minimum chain length is NP-hard, but there are heuristics that can be used in order to efficiently generate minor embeddings [15]. Another viable method to circumvent repeatedly generating minor-embeddings is to generate a fixed clique minor-embedding [58], which then allows one to embed an arbitrary graph of any connectivity so long as its size is at most the one of the clique embedding.

One of the disadvantages of minor embeddings is that at large chain lengths, the chains may begin to disagree on the logical variable state when the final state of the qubits is read out. We call these instances broken chains. Broken chains typically indicate poor solution quality [63, 64], and the solutions with broken chains also need to either be repaired in some way or discarded entirely. Repairing broken chains is referred to as unembedding [65]. A simple method for resolving broken chains, and thus to form a logical variable state, is to simply take the majority vote on the qubit states of all physical qubits in a chain. Note that in the edge case of a broken chain being evenly split between states then a random choice with \(p=0.5\) is used to resolve the chain. In this research we always apply the majority vote unembedding method.

2.4 Parallel quantum annealing

One of the other disadvantages of minor embeddings is that a fixed clique embedding does not typically make maximal use of the hardware available (i.e., many qubits and couplers available on the hardware stay idle as nothing is mapped onto them). This problem, in conjunction with poorer solution quality at larger embedding sizes, gives rise to the natural idea of embedding multiple smaller (disjoint) cliques onto the quantum annealing hardware and thus solving multiple minor-embedded problems on the quantum annealer during the same anneal [17]. This idea is referred to as parallel quantum annealing, which makes better use of the available hardware compared to the sequentially solving of all individual problems. Parallel quantum annealing can be applied in order to solve a set of different QUBOs on a quantum annealer, or it can be used to solve the same QUBO multiple times on the quantum annealer. In this research we apply the method of solving the same maximum clique QUBO on as many embeddings (this is determined by the size of the QUBOs) as can be fit using the heuristic minor-embedding tool minorminer [15].

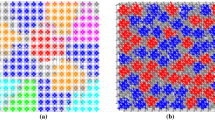

The quantum annealing backend used is the D-Wave Advantage System 4.1, hereafter referred to as D-Wave. Figure 2 shows the disjoint clique minor embeddings on the Pegasus connectivity graph of sizes ranging from two 100-vertex clique embeddings to twelve 50-vertex clique embeddings. Each of the disjoint embeddings can be used to solve either the same QUBO repeatedly in the same anneal, or to solve different QUBOs.

For usage in conjunction with the DBK algorithm, we solve each subproblem that DBK generates by computing the QUBO of the MC problem for that graph and embedding the QUBO as many times as possible given the fixed disjoint clique embeddings displayed in Fig. 2. The size of the clique embeddings being used is always equal to the DBK cutoff value used. This increases the probability that a maximum clique will be found during a single D-Wave call [17]. This is different from previous DBK approaches [16, 19] which solved subproblems using sequential quantum annealing.

While running the experiments in the context of the DBK algorithm, there is a potential inefficiency in the embedding usage, which is due to the fact that the sizes of the subgraphs generated by the DBK algorithm can be less than the DBK cutoff value. In such a case, the utilized embedding is larger than required, thus resulting in inefficient usage of the available qubits and unnecessarily long chains, which in turn potentially decreases the solution quality. However, for the purposes of being able to solve multiple problems simultaneously, this is a reasonable cost to take on.

Disjoint minor embeddings for parallel problem solving on the quantum annealer. The chip topology is for the D-Wave Advantage System 4.1. The minor-embeddings are of cliques of sizes \(N \in \{100, 90, 80, 70, 60, 50\}\) from top left to bottom. Red and Blue coloring is used (randomly) in order to help to visually differentiate neighboring minor embeddings

2.5 The time-to-solution (TTS) metrics

Since the D-Wave quantum annealer is a probabilistic solver and because of its imperfect hardware, it only samples a ground state solution for a QUBO or Ising problem with a certain probability. Therefore, many samples are usually necessary to obtain an optimal, or at least a sufficiently good, solution. In order to estimate the time it takes for D-Wave to find an optimal solution, one can use the so-called time-to-solution (TTS) metric [66, 67]. TTS is a measure of the time it takes to reach an optimal solution with a 99 percent probability.

When using parallel quantum computing, i.e., when solving \(N \in {\mathbb {N}}\) problem instances simultaneously on the D-Wave hardware, the standard method to compute the TTS does not apply. Let \(A_i \in {\mathbb {N}}\) be the number of samples for problem \(i \in \{1,\ldots ,N\}\), let \(T_{\text {QPU}_i}\) be the QPU access time (in seconds), and \(T_{\text {unembed}_i}\) be the classical processing time (in seconds) to unembed the solution vectors across all disjoint minor-embeddings. Since the same problem is embedded multiple times on the hardware chip, it is possible that a single anneal might have found the ground state solution multiple times. However, for the purposes of computing the ground state probability \(p_i\), we solely count the number of samples (among the total \(A_i\) samples) that found the ground state solution at least once. If \(p_i = 0\) we can not compute \(\text {TTS}_\text {opt}\). The quantity \(p_i\) is also called the ground state probability (GSP) for subgraph \(i \in \{1,\ldots ,N\}\). Lastly, we record the DBK processing time required to carry out the DBK decomposition (i.e., excluding the time required to solve each subgraph), denoted as \(T_\text {DBK\_proc\_time}\). We obtain the formula

if \(0<p_i<1\). The coefficient \(\frac{\log (0.01)}{\log (1-p_i)}\) corresponds to the number of times the algorithm should be run for the corresponding subgraph to ensure \(99\%\) probability of finding an optimal solution. In the special case where \(p_i = 1\) for some \(i \in \{1,\ldots ,N\}\), we set the TTS for that subgraph to be \((T_{\text {QPU}_i} + T_{\text {unembed}_i})/A_i\). Also, since we use a fixed minor-embedding, we can set the minor embedding computation time to zero because the embedding can be pre-computed.

Measuring the time-to-solution of a probabilistic algorithm is a useful way of comparing it to other algorithms. However, in computing the TTS for an algorithm that uses a quantum annealer, there are some important assumptions to note. First, when estimating the time to run an anneal (and the time to unembed that solution), we are assuming that the QPU time (and unembedding time) are proportional to the number of samples. This assumption is not necessarily true, in particular because one can gain efficiency by batching samples together into the same job. Second, the TTS formula aims to compute the minimum amount of time that is required to reach the optimal solution with \(99\%\) confidence. However this does not take into account that determining the optimal number of samples to take in order to reach this ideal TTS in general is not known before actually running the algorithm. Therefore, quantifying TTS in this manner gives a lower bound on the ideal computation time.

When using a quantum annealer in order to solve optimization problems in practice, it is easier to set the number of samples per problem to some fixed number. However, this means that when the quantum annealer is used as a subsolver in a larger hybrid algorithm (for instance DBK), then the optimal TTS given by formula (3) will not be achieved. In this case, we can consider computing the TTS of the DBK algorithm, where the success rate p is the number of trials where DBK succeeded in finding the maximum clique and \(T_{\text {QPU}_i}\) and \(T_{\text {unembed}_i}\) will be the same for all problems (since they only depend on the number of samples \(A_i\)). Importantly in this case the number of samples to use when querying the quantum annealer \(A_i=A\) can be selected by the user. The resulting TTS formula for fixed number of samples is

Here, \(T_\text {classical}\) is the classical time used by D-Wave, e.g., mapping the problem onto the D-Wave hardware and postprocessing the samples.

In the case \(p=1\), we set \(\text {TTS}_\text {fixed} = T_\text {QPU}+T_\text {classical}\). If \(p=0\), then \(\text {TTS}_\text {fixed}\) is not defined.

3 Experiments

This section presents some experimental results. After introducing the experimental setup in Sect. 3.1, we apply the DBK-fmc algorithm of Sect. 2.2 to a set of test graphs. We study how the number of subgraphs as well as their size and density behaves during the decomposition (Sect. 3.2), and we investigate the probability of finding an optimal solution at the leaf level of the decomposition tree, when using parallel quantum annealing, as a function of the DBK-fmc cutoff value (Sect. 3.3). Moreover, we consider a comparison of the DBK-fmc algorithm where the sub-problems are solved using parallel quantum annealing and the classical FMC solver using a modified TTS measure that emulates an optimal usage of quantum annealing in conjunction with DBK (Sect. 3.4). The experiments conclude with an application of the DBK-pQA algorithm where a quantum annealer is used as a real time subsolver with a fixed number of samples (Sect. 3.5).

3.1 Experimental set-up

All experiments are performed on a series of 60 Erdős-Rényi random graphs [18]. Each of these 60 graphs has 120 vertices and density sampled from a continuous uniform distribution in [0.1, 0.9]. Additionally, we ensure that each of the test graphs is connected, meaning there is a path between any pair of vertices.

We apply the DBK algorithm to each of the 60 graphs using different cutoff sizes for the subgraphs. To be precise, we study the behavior of the DBK algorithm for the cutoff values \(\{ 110, 100, 90, 80, 70, 60, 50\}\), where the solver used is the classical Fast Maximum Clique solver of [56]. Then, we investigate under what parameters the D-Wave quantum annealer would be able to solve the generated subgraph problems (as well as what the precise Time-to-Solution characteristics are). In this way, similarly to [16, 19, 37], we can emulate the expected quantum annealer behavior in the DBK algorithm. Next, using the information gained from the previous step we run DBK using the quantum annealer as the real-time subsolver.

The D-Wave settings we use are annealing time 50 microseconds, 1000 samples per backend call, readout thermalization 0 microseconds, programming thermalization 0 microseconds, and the reduce intersample correlation Boolean flag is set to True. The chain strength value is dynamically computed based on the individual QUBO properties using the D-Wave Ocean SDK function uniform torque compensation [68] (with a user-specified prefactor of 0.2). The uniform torque compensation method attempts to provide a chain strength that reduces the number of broken chains, chosen as the square root of the mean of the quadratic couplers of the QUBO. We found empirically that for the large Maximum Clique minor embedded problems, a uniform torque compensation prefactor less than 0.5 and greater than 0.1 gave the overall best results; we chose to use 0.2, which resulted in favorable approximation ratios overall (see Fig. 4). Because the settings across these experiments are constant, the resulting QPU time (specifically qpu-access-time) is relatively constant in these experiments at about 1 second per backend call.

In Figs. 3, 5, and 7 we color code lower input graph densities with dark blue, intermediate densities (0.5) are colored orange and yellow corresponds to higher input graph densities (before decomposition).

3.2 Number, density, and size of subgraphs with DBK-fmc

Figure 3 shows the number of subgraphs, the average subgraph density, as well as the average size of the subgraphs generated at each cutoff level of the DBK-fmc algorithm. Note that the number of generated subgraphs can be zero (in particular, if a generated subgraph is a clique itself then it is not necessary to solve it, and therefore it not counted as a subgraph to be solved).

First, Fig. 3 (top left) shows that the number of generated subgraphs generally increases as the DBK cutoff decreases, as one should expect, especially for higher density graphs. At lower densities, the number of subgraphs is generally quite small (sometimes even zero) and does not greatly increase as the cutoff level decreases. An interesting case occurs for some high density graphs, whereby the trend of increasing generated subgraph counts actually reverses at a cutoff value of 60. The precise reason why the reversal occurs at a cutoff value of 60 is not clear, but the existence of a reversal is somewhat expected. This is due to the fact that two phenomena work against each other. A lower cutoff value causes the decomposition to run longer, thus producing more subgraphs. At the same time, an increase in the number of subgraphs results in all bounds working better, thus leading to more pruning.

Second, Fig. 3 (top right) shows that on average, as the cutoff decreases, the subgraph density increases in comparison to the density of the input graph. This is again a result of the design of the DBK algorithm, as the DBK algorithm preferentially removes lower degree nodes, both with the help of the k-core reduction and the low degree vertex removal when partitioning.

Third, Fig. 3 (bottom) shows the average subgraph size at each cutoff level. By construction of the DBK algorithm, the size of the subgraphs will be either equal to or smaller than the cutoff level. The latter case happens if either the bounds or the k-core reduction worked particularly well. As can be seen, the size of most subgraphs is indeed roughly equal to the cutoff for high densities, while subgraph sizes for lower densities vary widely.

3.3 Solving the maximum clique problem on the subgraphs

We are interested in exploring the accuracy of the solutions found by parallel quantum annealing on the leaf level of the DBK-fmc decomposition. First, for the test graphs of Sect. 3.1, Fig. 2 shows the disjoint minor-embeddings for different all-to-all problem graphs embedded on the Advantage System 4.1 Pegasus connectivity graph. We observe that a single anneal can find the maximum clique multiple times in a single anneal when using parallel embeddings. However, when calculating the ground state probability p in Eq. (3) over multiple samples, we only consider a binary indicator (maximum clique found at least once vs. not found) per sample. Note that, typically, single set of samples will contain a small number of optimal solutions (e.g., once or twice), if any.

Figure 4 shows the approximation ratios (specifically the approximation ratio of the largest clique found among the 1000 anneals) across all subgraphs generated during decomposition across the varying DBK cutoff values. Importantly, the approximation ratios consistently decrease as cutoff gets larger, and at a DBK cutoff of 50, all of the subgraphs can be solved exactly. The difficult task is that for the original large graphs there is not a consistent way to know a-priori if the quantum annealer will find the maximum clique or not; this approximation ratio plot shows that decomposing the graph into smaller subgraphs increases the approximation ratio of the solutions found, up to being able to always find the maximum clique (at least for these 60 random graphs). The approximation ratios in Fig. 4 at a DBK cutoff of 120 correspond to the non-parallel QA results from embedding a single 120 node clique onto the hardware and running the MC QUBO for each of the 60 graphs on the hardware. Once the DBK decomposition is applied we see a gradual decrease in the failure rate to reach the optimal solution.

Scatter plot of the Maximum Clique approximation ratios across all subgraphs that were solved as a function of the DBK cutoff. Computed by taking the best Maximum Clique solution (unembedded with majority vote) out of the 1000 samples used for each subgraph. The data at a DBK cutoff of 120 corresponds to the original input graphs

Next, Fig. 5 looks at the failure rate for finding ground state solutions over all 60 test graphs as a function of the DBK cutoff value. We observe that for higher graph densities, ground state solutions are almost never found when using a DBK cutoff of 90 or more, while for graphs decomposed down to sizes 70 or less, the ground state of the subproblems is almost always found. As already observed in Sect. 3.2, the size and density of the subgraphs for lower input densities varies widely, resulting in either very easy or very hard MC problems for the quantum annealer. This causes the failure rate to be very volatile for lower input densities.

Left: Failure rate (failure to reach a ground state solution) as a function of the DBK cutoff values (after majority vote unembedding). Graph densities ranging from 0.1 to 0.9 (see color legend). Right: Averaged results for four groups of input graph densities; 0.1-\(-\)0.3, 0.3-\(-\)0.5, 0.5-\(-\)0.7, and 0.7-\(-\)0.9 (the coloring corresponds to the median graph density of those ranges)

Last, we visualize the number of subgraphs stratified by the proportion of samples that correctly found the optimal solution, referred to as the ground state probability (GSP) of the Maximum Clique QUBO(s), as a histogram. Figure 6 shows that, as seen before, hardly any subproblem can be solved for a DBK cutoff of 110. The lower the cutoff, the most subproblems can be solved to optimality by D-Wave. As seen in Fig. 5, the GSP for a DBK cutoff of 50 is always nonzero, meaning that when decomposing down to a subproblem size of 50, all problems could be solved at least once to optimality in the 1000 samples from the quantum annealer.

3.4 Comparison of the DBK algorithm and FMC with the TTS measure

We compare the full DBK algorithm to the classical FMC solver using the TTS measure introduced in Eq. (3).

We note that the current noisy intermediate-scale quantum (NISQ) technology [69], including D-Wave’s quantum annealers, is not advanced enough and, hence, not competitive with classical computers on solving NP-hard optimization problems when dedicated algorithms are used. Regardless, we compare our algorithm against a highly optimized MC solver, the Fast Maximum Clique (FMC) solver from [56] in order to determine in which cases the quantum algorithm performance gets closer to the classical one.

Figure 7 shows several aspects of the comparison. First, Fig. 7 (left) shows the the TTS time of Eq. (3) for the full DBK algorithm (including time for decomposition and unembedding) as a function of the DBK cutoff. We observe that the lower the density, the faster a test graph can be solved by the DBK algorithm. Moreover, high density graphs can only be solved when decomposing them to relatively low DBK cutoffs of 50 to 60, whereas lower density graphs can be decomposed at higher cutoffs as well. Importantly, at larger DBK cutoffs we have a lack of TTS values in the figure (especially at higher densities); this is because those TTS values could not be computed because for at least one subgraph \(p=0\). This means that none of the 1000 quantum annealing samples found the maximum clique at least once, which causes \(p_i=0\) in Eq. (3) which means a TTS value cannot be computed. Thus another important aspect of Fig. 7 is showing at what densities and DBK cutoff values can all subgraphs, that were generated by DBK-fmc, be solved by the quantum annealer in 1000 samples.

Second, Fig. 7 (right) shows the ratio of the classical FMC process time over the TTS time for the full DBK algorithm. Here, values above one indicate that FMC is slower than the quantum annealer sampling all subgraphs generated by the DBK-fmc algorithm. Indeed, we observe that the DBK-fmc algorithm with quantum annealing is superior to the entirely classical approach for high densities at low cutoff values of around 50 or 60, and computes maximum cliques up to two orders of magnitude faster than FMC. For either higher densities, or for higher cutoff values than 60, the classical FMC algorithm is the better choice when solving the MC problem.

TTS as a function of the DBK cutoff for solving each of the 60 test graphs. Each line represents a single graph being decomposed over different DBK cutoff values. Log scale on the y-axis. Graph densities ranging from 0.1 to 0.9 (see color legend). TTS time for the full DBK algorithm (including time for decomposition and unembedding) according to Eq. 3 (left), and FMC time divided by TTS time for the full DBK algorithm (right)

3.5 Using D-wave as a real-time subsolver for DBK

In the previous experiments, we have emulated the procedure of executing DBK where the D-Wave quantum annealer is the subsolver. This was accomplished by exactly solving the problem graphs using FMC, and then determining what parameters (for example, annealing parameter and the DBK cutoff level) to use in order to consistently find the Maximum Clique.

In this section, we present results when using the quantum annealer, with the fixed disjoint clique embeddings for parallel quantum annealing, in the real time execution of DBK-pQA (with no classical solver). Based on our previous experiments (see Fig. 6), we would expect that with a DBK-pQA cutoff level of 50 and using 1000 samples per subgraph, the Maximum Clique solution would be consistently found.

In order to perform this experiment we ran the first 20 random graphs of the 60 generated for consistent experiments a total of 5 times, while using the quantum annealer (with majority vote unembedding) as the subsolver. Using Eq. (4) in order to compute the \(TTS_{\text {fixed}}\), we can use the 5 different runs for each graph in order to compute p. However, in all 100 experiments, the algorithm found the maximum clique upon termination. Thus, \(p=1\) in all cases, and therefore the time-to-solution was simply the average sum of the used QPU time plus the used classical processing time. Figure 8 shows the Time-to-Solution results.

The \(TTS_{\text {fixed}}\) metric quantifies the success rate of the DBK-pQA algorithm, as opposed to the success rate of the individual samples from the quantum annealer. Therefore, for a large number of anneal samples (i.e., 1000), we would expect the \(TTS_{\text {fixed}}\) metric to be larger than the \(TTS_{\text {opt}}\) metric. We observe this to be the case in Fig. 8. Figure 8 shows that the DBK-pQA TTS when using the quantum annealer as a real-time subsolver is exponential with respect to the graph density.

Most importantly, for all 20 random graphs used in this experiment, DBK-pQA found an optimal solution to each original graph. This is despite the fact that D-Wave is a probabilistic sampler and DBK may generate up to 10,000 subgraphs for each input graph that are each sent to D-Wave for finding a MC.

4 Discussion

In this work, we consider solving the Maximum Clique problem using a combination of graph decomposition and parallel quantum annealing. To be precise, we base our solution on the DBK algorithm [16] to decompose an arbitrary input graph to subgraphs of any pre-specified cutoff level sizes. We then employ the D-Wave Advantage System 4.1, a quantum annealer manufactured by D-Wave Systems, Inc., to solve the subproblems generated during the decomposition. In order to best leverage the capabilities of the D-Wave annealer, we embed and solve several of the generated subproblems simultaneously, an idea previously introduced under the name of parallel quantum annealing in the literature [17]. Therefore, this work shows the end-to-end process required to solve large maximum clique problems to (near) optimality using a quantum annealer.

Using the DBK algorithm in connection with D-Wave Advantage System 4.1, we are able to sample ground state solutions of MC problems of up to 120 vertices, and additionally employ parallel quantum annealing to speed up the computations. We demonstrate that in some cases, the resulting algorithm can compute maximum cliques in high density graphs up to around two to three orders of magnitude faster than a classical solver. Current NISQ annealing devices are not necessarily expected to outperform classical algorithms, although a scaling advantage using quantum annealing has been demonstrated in [70]. The experiment reported in [70] considers the simulation of geometrically frustrated magnets (which reduces to a 0–1 integer programming problem on a given geometric lattice) using quantum annealing, and demonstrates that a quantum annealing processor can provide a computational advantage over the classical counterpart, path-integral Monte Carlo (PIMC).

This work leaves scope for further research avenues:

-

1.

The methodology of this article (the exact graph decomposition in connection with multiple embeddings) can be applied to other NP-hard problems such as the minimum vertex cover problem.

-

2.

The performance of the quantum annealing backend can be improved by using further tunable features such as h-gain schedules, anneal offsets, flux bias offsets, and spin reversals.

-

3.

It remains to be analyzed how different minor embeddings solve the same QUBO when they are all used in the same annealing cycle. In particular, it is unclear if some embeddings contribute more than others to finding the ground state solutions. If so, what characteristics of those embeddings cause them to perform better than the other embeddings? Additionally, the spatial and temporal correlations with regard to which embeddings are finding maximum cliques remain to be investigated.

-

4.

Do minor embeddings that have the same connectivity perform the same or differently during the same anneal(s)? In other words, if all of the disjoint embeddings used in the parallel quantum annealing method are exactly the same, just acting on different qubits, are the ground states found across the different embeddings with (nearly) equal probabilities?

-

5.

Utilizing an efficient algorithm to compute the minimum number of samples required in order to obtain an optimal TTS (as opposed to using a fixed number of samples) would significantly reduce the real time computation required to run DBK with a quantum annealer.

Data availability

Python implementation and data for parallel quantum annealing and the DBK graph decomposition algorithm can be found on Github [71].

References

Hauke, P., Katzgraber, H.G., Lechner, W., Nishimori, H., Oliver, W.D.: Perspectives of quantum annealing: methods and implementations. Rep. Prog. Phys. 83(5), 054401 (2020). https://doi.org/10.1088/1361-6633/ab85b8

Morita, S., Nishimori, H.: Mathematical foundation of quantum annealing. J. Math. Phys. 49(12), 125210 (2008). https://doi.org/10.1063/1.2995837

Kadowaki, T., Nishimori, H.: Quantum annealing in the transverse Ising model. Phys. Rev. E 58(5), 5355–5363 (1998). https://doi.org/10.1103/physreve.58.5355

Das, A., Chakrabarti, B.K.: Colloquium: quantum annealing and analog quantum computation. Rev. Mod. Phys. 80(3), 1061 (2008). https://doi.org/10.1103/revmodphys.80.1061

Johnson, M.W., Amin, M.H., Gildert, S., Lanting, T., Hamze, F., Dickson, N., Harris, R., Berkley, A.J., Johansson, J., Bunyk, P., et al.: Quantum annealing with manufactured spins. Nature 473(7346), 194–198 (2011)

D-Wave: Technical Description of the D-Wave Quantum Processing Unit. D-Wave. https://docs.dwavesys.com/docs/latest/doc_qpu.html (2022)

Kadowaki, T., Nishimori, H.: Quantum annealing in the transverse Ising model. Phys. Rev. E 58, 5355–5363 (1998). https://doi.org/10.1103/PhysRevE.58.5355

Lanting, T., Przybysz, A.J., Smirnov, A.Y., Spedalieri, F.M., Amin, M.H., Berkley, A.J., Harris, R., Altomare, F., Boixo, S., Bunyk, P., Dickson, N., Enderud, C., Hilton, J.P., Hoskinson, E., Johnson, M.W., Ladizinsky, E., Ladizinsky, N., Neufeld, R., Oh, T., Perminov, I., Rich, C., Thom, M.C., Tolkacheva, E., Uchaikin, S., Wilson, A.B., Rose, G.: Entanglement in a quantum annealing processor. Phys. Rev. X 4, 021041 (2014). https://doi.org/10.1103/PhysRevX.4.021041

Boixo, S., Albash, T., Spedalieri, F.M., Chancellor, N., Lidar, D.A.: Experimental signature of programmable quantum annealing. Nat. Commun. 4(1), 1–8 (2013). https://doi.org/10.1038/ncomms3067

Lucas, A.: Ising formulations of many NP problems. Front. Phys. 2, 5 (2014). https://doi.org/10.3389/fphy.2014.00005

Chapuis, G., Djidjev, H., Hahn, G., Rizk, G.: Finding maximum cliques on a quantum annealer. In: Proceedings of the Computing Frontiers Conference, pp. 63–70. New York, NY, USA. https://doi.org/10.1145/3075564.3075575. arXiv:1801.08649 (2017)

Choi, V.: Minor-embedding in adiabatic quantum computation: I. The parameter setting problem. Quantum Inf. Process. 7, 193–209 (2008)

Marshall, J., Di Gioacchino, A., Rieffel, E.G.: Perils of embedding for sampling problems. Phys. Rev. Res. 2, 023020 (2020). https://doi.org/10.1103/PhysRevResearch.2.023020

Grant, E., Humble, T.: Benchmarking embedded chain breaking in quantum annealing. Quantum Sci. Technol. https://doi.org/10.1088/2058-9565/ac26d2 (2022)

Cai, J., Macready, W.G., Roy, A.: A practical heuristic for finding graph minors. arXiv:1406.2741 (2014)

Pelofske, E., Hahn, G., Djidjev, H.N.: Solving large maximum clique problems on a quantum annealer. In: Proceedings of the First International Workshop on Quantum Technology and Optimization Problems QTOP’19. arXiv:1901.07657 (2019)

Pelofske, E., Hahn, G., Djidjev, H.N.: Parallel quantum annealing. Sci. Rep. 12, 4499 (2022)

Erdős, P., Rényi, A.: On the evolution of random graphs. Publ. Math. Inst. Hung. Acad. Sci. 5, 17–61 (1960)

Pelofske, E., Hahn, G., Djidjev, H.N.: Decomposition algorithms for solving NP-hard problems on a quantum annealer. J. Signal Process. Syst. 93(4), 405–420 (2021). https://doi.org/10.1007/s11265-020-01550-1

Ohkura, Y., Satoh, T., Van Meter, R.: Simultaneous execution of quantum circuits on current and near-future NISQ systems. IEEE Trans. Quantum Eng. 3, 1–10 (2022). https://doi.org/10.1109/TQE.2022.3164716

Niu, S., Todri-Sanial, A.: Enabling multi-programming mechanism for quantum computing in the NISQ era. Quantum 7, 925 (2023). https://doi.org/10.22331/q-2023-02-16-925

Das, P., Tannu, S.S., Nair, P.J., Qureshi, M.: A case for multi-programming quantum computers. In: Proceedings of the 52nd Annual IEEE/ACM International Symposium on Microarchitecture. MICRO ’52, pp. 291–303. Association for Computing Machinery, New York, NY, USA. https://doi.org/10.1145/3352460.3358287 (2019)

Johnson, D.S., Tricks, M.A.: Cliques, Coloring and Satisfiability, Second DIMACS Implementation Challenges, vol. 26. American Mathematical Society, Providence (1996)

DIMACS: Workshop on Faster Exact Algorithms for NP-Hard Problems. Princeton, NJ (2000)

Woeginger, G.J.: Open problems around exact algorithms. Discrete Appl. Math. 156(3), 397–405 (2008)

Tarjan, R.E.: Decomposition by clique separators. Discrete Math. 55(2), 221–232 (1985)

Rao, M.: Solving some NP-complete problems using split decomposition. Discrete Appl. Math. 156(14), 2768–2780 (2008)

Robson, J.M.: Algorithms for maximum independent sets. J. Algorithms 7, 425–440 (1986)

Robson, J.M.: Finding a maximum independent set in time \(O(2^{n/4})\). https://www.labri.fr/perso/robson/mis/techrep.html (2001)

Fomin, F.V., Grandoni, F., Kratsch, D.: Measure and conquer: a simple \(O(2^{0.288n})\) independent set algorithm. In: SODA ’06: Proceedings of the Seventeenth Annual ACM-SIAM Symposium on Discrete Algorithm, pp. 18–25 (2006)

Xiao, M., Nagamochi, H.: Exact algorithms for maximum independent set. In: Cai, L., Cheng, S.W., Lam, T.W. (eds.) Algorithms and Computation. ISAAC 2013. Lecture Notes in Computer Science, vol. 8283. Springer, Berlin, Heidelberg (2013)

Minty, G.J.: On maximal independent sets of vertices in claw-free graphs. J. Comb. Theory Ser. B 28(3), 284–304 (1980)

Grötschel, M., Lovász, L., Schrijver, A.: Geometric Algorithms and Combinatorial Optimization. Springer, Berlin (1988)

Dabrowski, K., Lozin, V., Müller, H., Rautenbach, D.: Parameterized Algorithms for the Independent Set Problem in Some Hereditary Graph Classes. Combinatorial Algo. Lecture Notes in Comp Sc. Springer, Berlin (2011)

Giakoumakis, V., Vanherpe, J.: On extended P4-reducible and extended P4-sparse graphs. Theoret. Comput. Sci. 180, 269–286 (1997)

Courcelle, B., Makowsky, J.A., Rotics, U.: Linear time solvable optimization problems on graphs of bounded clique-width. Theory Comput. Syst. 33(2), 125–150 (2000)

Pelofske, E., Hahn, G., Djidjev, H.N.: Solving large minimum vertex cover problems on a quantum annealer. In: Proceedings of the Computing Frontiers Conference CF’19. arXiv:1904.00051 (2019)

Balasubramanian, R., Fellows, M., Raman, V.: An improved fixed parameter algorithm for vertex cover. Inf. Process. Lett. 65, 163–168 (1998)

Stege, U., Fellows, M.: An improved fixed-parameter-tractable algorithm for vertex cover. Technical Report 318, Department of Computer Science, ETH Zurich (1999)

Chen, J., Liu, L., Jia, W.: Improvement on vertex cover for low degree graphs. Networks 35, 253–259 (2000)

Chen, J., Kanj, I., Jia, W.: Vertex cover: further observations and further improvements. J. Algorithms 41, 280–301 (2001)

Niedermeier, R., Rossmanith, P.: Upper bounds for vertex cover further improved. In: Annual Symposium on Theoretical Aspects of Computer Science (STACS). https://doi.org/10.1007/3-540-49116-3_53 (2007)

Chen, J., Kanj, I., Xia, G.: Improved upper bounds for vertex cover. Theoret. Comput. Sci. 411, 3736–3756 (2010)

Niedermeier, R., Rossmanith, P.: On efficient fixed-parameter algorithms for weighted vertex cover. J. Algorithms 47, 63–77 (2003)

Xu, H., Kumar, T., Koenig, S.: A new solver for the minimum weighted vertex cover problem. In: Quimper, C.G. (ed.) Integration of AI and OR Techniques in Constraint Programming. CPAIOR 2016. Lecture Notes in Computer Science, vol. 9676. Springer, Cham (2016)

Bron, C., Kerbosch, J.: Algorithm 457: finding all cliques of an undirected graph. Commun. ACM 16(9), 575–577 (1973)

Carraghan, R., Pardalos, P.: An exact algorithm for the maximum clique problem. Oper. Res. Lett. 9(6), 375–382 (1990)

Rossi, R., Gleich, D., Gebremedhin, A.: Parallel maximum clique algorithms with applications to network analysis. SIAM J. Sci. Comput. 37(5), 589–616 (2015)

Hou, Y.T., Shi, Y., Sherali, H.D.: hou_shi_sherali_2014. In: Applied Optimization Methods for Wireless Networks, pp. 95–121. Cambridge, Cambridge University Press (2014). https://doi.org/10.1017/CBO9781139088466

Morrison, D., Jacobson, S., Sauppe, J., Sewell, E.: Branch-and-bound algorithms: a survey of recent advances in searching, branching, and pruning. Discrete Optim. 19, 79–102 (2016). https://doi.org/10.1016/j.disopt.2016.01.005

Bass, G., Henderson, M., Heath, J., Dulny III, J.: Optimizing the Optimizer: Decomposition Techniques for Quantum Annealing. arXiv:2001.06079 (2020)

Boros, E., Hammer, P.L.: Pseudo-boolean optimization. Discrete Appl. Math. 123(1–3), 155–225 (2002)

Boros, E., Hammer, P.L., Tavares, G.: Preprocessing of Unconstrained Quadratic Binary Optimization. Rutcor Research Report RRR 10-2006, vol. 1–58 (2006)

Hagberg, A., Schult, D., Swart, P.: Exploring network structure, dynamics, and function using NetworkX. In: Proceedings of SciPy2008, pp. 11–15 (2008)

Budinich, M.: Exact bounds on the order of the maximum clique of a graph. Discrete Appl. Math. 127(3), 535–543 (2003)

Pattabiraman, B., Patwary, M.A., Gebremedhin, A.H., Liao, W.-k., Choudhary, A.: Fast algorithms for the maximum clique problem on massive sparse graphs. In: Algorithms and Models for the Web Graph, pp. 156–169. Springer International Publishing (2013)

Boothby, K., Bunyk, P., Raymond, J., Roy, A.: Next-Generation Topology of D-Wave Quantum Processors. arXiv:2003.00133 (2020)

Boothby, T., King, A.D., Roy, A.: Fast clique minor generation in chimera qubit connectivity graphs. Quantum Inf. Process. 15(1), 495–508 (2016)

Vert, D., Sirdey, R., Louise, S.: On the limitations of the chimera graph topology in using analog quantum computers. In: Proceedings of the 16th ACM International Conference on Computing Frontiers, pp. 226–229 (2019)

King, A.D., McGeoch, C.C.: Algorithm engineering for a quantum annealing platform. arXiv preprint arXiv:1410.2628 (2014)

Raymond, J., Stevanovic, R., Bernoudy, W., Boothby, K., McGeoch, C., Berkley, A.J., Farré, P., King, A.D.: Hybrid quantum annealing for larger-than-QPU lattice-structured problems. https://doi.org/10.48550/ARXIV.2202.03044. arXiv:2202.03044 (2022)

Barbosa, A., Pelofske, E., Hahn, G., Djidjev, H.N.: Optimizing embedding-related quantum annealing parameters for reducing hardware bias. In: International Symposium on Parallel Architectures, Algorithms and Programming, pp. 162–173 (2020). Springer

Grant, E., Humble, T.S.: Benchmarking embedded chain breaking in quantum annealing. Quantum Sci. Technol. 7(2), 025029 (2022)

Marshall, J., Mossi, G., Rieffel, E.G.: Perils of embedding for quantum sampling. Phys. Rev. A 105(2), 022615 (2022). https://doi.org/10.1103/physreva.105.022615

Pelofske, E., Hahn, G., Djidjev, H.: Advanced unembedding techniques for quantum annealers. In: 2020 International Conference on Rebooting Computing (ICRC), pp. 34–41. https://doi.org/10.1109/ICRC2020.2020.00001 (2020)

Venturelli, D., Kondratyev, A.: Reverse quantum annealing approach to portfolio optimization problems. Quantum Mach. Intell. 1, 17–30 (2019)

Perdomo-Ortiz, A., Fluegemann, J., Narasimhan, S., Biswas, R., Smelyanskiy, V.N.: A quantum annealing approach for fault detection and diagnosis of graph-based systems. Eur. Phys. J. Spec. Top. 224, 131–148 (2015)

Inc., D.-W.S.: Uniform torque compensation. https://docs.ocean.dwavesys.com/projects/system/en/stable/reference/generated/dwave.embedding.chain_strength.uniform_torque_compensation.html (2022)

Preskill, J.: Quantum computing in the NISQ era and beyond. Quantum 2, 79 (2018). https://doi.org/10.22331/q-2018-08-06-79

King, A.D., Raymond, J., Lanting, T., Isakov, S.V., Mohseni, M., Poulin-Lamarre, G., Ejtemaee, S., Bernoudy, W., Ozfidan, I., Smirnov, A.Y., Reis, M., Altomare, F., Babcock, M., Baron, C., Berkley, A.J., Boothby, K., Bunyk, P.I., Christiani, H., Enderud, C., Evert, B., Harris, R., Hoskinson, E., Huang, S., Jooya, K., Khodabandelou, A., Ladizinsky, N., Li, R., Lott, P.A., MacDonald, A.J.R., Marsden, D., Marsden, G., Medina, T., Molavi, R., Neufeld, R., Norouzpour, M., Oh, T., Pavlov, I., Perminov, I., Prescott, T., Rich, C., Sato, Y., Sheldan, B., Sterling, G., Swenson, L.J., Tsai, N., Volkmann, M.H., Whittaker, J.D., Wilkinson, W., Yao, J., Neven, H., Hilton, J.P., Ladizinsky, E., Johnson, M.W., Amin, M.H.: Scaling advantage over path-integral Monte Carlo in quantum simulation of geometrically frustrated magnets. Nat. Commun. 12(1), 1113 (2021)

Pelofske, E.: Python implementation of DBK and Parallel QA. https://github.com/lanl/Parallel-Quantum-Annealing and https://github.com/lanl/Decomposition-Algorithms-for-Scalable-Quantum-Annealing (2022)

Acknowledgements

This work was supported by the U.S. Department of Energy through the Los Alamos National Laboratory. Los Alamos National Laboratory is operated by Triad National Security, LLC, for the National Nuclear Security Administration of U.S. Department of Energy (Contract No. 89233218CNA000001). The research presented in this article was supported by the Laboratory Directed Research and Development program of Los Alamos National Laboratory under project number 20220656ER. The work of Hristo Djidjev has been also partially supported by the Grant No BG05M2OP001\(-\)1.001-0003, financed by the Science and Education for Smart Growth Operational Program (2014–2020) and co-financed by the European Union through the European structural and Investment funds. This research used resources provided by the Darwin testbed at Los Alamos National Laboratory (LANL), which is funded by the Computational Systems and Software Environments subprogram of LANL’s Advanced Simulation and Computing program (NNSA/DOE). This research used resources provided by the Los Alamos National Laboratory Institutional Computing Program. Research presented in this article was supported by the NNSA’s Advanced Simulation and Computing Beyond Moore’s Law Program at Los Alamos National Laboratory.

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

Conflict of interest

The authors declare no Competing Interests.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Pelofske, E., Hahn, G. & Djidjev, H.N. Solving larger maximum clique problems using parallel quantum annealing. Quantum Inf Process 22, 219 (2023). https://doi.org/10.1007/s11128-023-03962-x

Received:

Accepted:

Published:

DOI: https://doi.org/10.1007/s11128-023-03962-x