Abstract

The entropic uncertainty relations are a very active field of scientific inquiry. Their applications include quantum cryptography and studies of quantum phenomena such as correlations and non-locality. In this work we find entanglement-dependent entropic uncertainty relations in terms of Tsallis entropies for states with a fixed amount of entanglement. Our main result is stated as Theorem 1. Taking the special case of von Neumann entropy and utilizing the concavity of conditional von Neumann entropies, we extend our result to mixed states. Finally, we provide a lower bound on the amount of extractable key in a quantum cryptographic scenario.

1 Introduction

Formulated by Heisenberg [1], the uncertainty relation gives insight into the differences between classical and quantum mechanics. According to the relation, the outcomes of measurements of certain non-commuting observables of a particle cannot be simultaneously predicted with arbitrary precision.

Numerous studies of uncertainty relations led to the entropic formulation by Białynicki-Birula and Mycielski [2,3,4], expressed as a sum of two continuous Shannon entropies of the position and momentum probability distributions. Since our goal is to consider general observables, let us choose two non-commuting Hermitian operators X and Y. The first uncertainty relation valid for an arbitrary pair of observables was derived by Deutsch [5]:

\[ H(X) + H(Y) \ge -2\log \frac{1+c}{2}, \tag{1} \]

where H(X) and H(Y) denote the Shannon entropies of the outcome distributions of measurements of X and Y, respectively. If \(|\phi _j\rangle \) and \(|\psi _k\rangle \) are the eigenvectors of X and Y, then \(c=\max _{j,k}|\langle {\phi _j}|{\psi _k}\rangle |\). Kraus conjectured [6], and Maassen and Uffink [7] proved, the stronger result

\[ H(X) + H(Y) \ge -2\log c, \tag{2} \]

where H(X), H(Y) and c are the same as in Deutsch's relation.
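To make the comparison concrete, the short Python sketch below evaluates Deutsch's bound \(-2\log \frac{1+c}{2}\) and the Maassen–Uffink bound \(-2\log c\) as functions of the overlap c. It assumes base-2 logarithms, since the text leaves the base implicit, and the function names are our own. Because \(c \le \frac{1+c}{2}\), the Maassen–Uffink bound is never weaker. For a qubit, c ranges over \([1/\sqrt{2}, 1]\).

```python
import math


def deutsch_bound(c: float) -> float:
    """Deutsch's lower bound -2 log2((1 + c) / 2) on H(X) + H(Y), in bits."""
    return -2.0 * math.log2((1.0 + c) / 2.0)


def maassen_uffink_bound(c: float) -> float:
    """Maassen-Uffink lower bound -2 log2(c) on H(X) + H(Y), in bits."""
    return -2.0 * math.log2(c)


if __name__ == "__main__":
    # For a qubit, c lies in [1/sqrt(2), 1]; c = 1/sqrt(2) means mutually unbiased bases.
    for c in (1 / math.sqrt(2), 0.8, 0.9, 1.0):
        print(f"c = {c:.4f}: Deutsch {deutsch_bound(c):.4f}, "
              f"Maassen-Uffink {maassen_uffink_bound(c):.4f}")
```

At \(c=1/\sqrt{2}\) the Maassen–Uffink value is exactly one bit, while Deutsch's bound is noticeably smaller.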

The entropic uncertainty relations are a very active field of scientific inquiry [8, 9]. One reason is their application in quantum cryptography [10,11,12]. Another area where entropic uncertainty relations are widely used is the study of quantum phenomena such as correlations and non-locality [13,14,15]. Some of these results have been generalized; for instance, entropic formulations of the uncertainty relation in terms of Rényi entropies can be found in [16]. Uncertainty relations for mutually unbiased bases and symmetric informationally complete measurements in terms of the generalized entropies of Rényi and Tsallis can be found in [17].

In [18] it was shown that entropic uncertainty relations for binary observables can be derived from effective anti-commutation, which can be important in device-independent cryptography. This result was generalized in [19] to entropic uncertainty relations in the presence of quantum memory.

Majorization-based bounds for uncertainty relations were first introduced by Partovi [20] and later generalized in [21, 22]. In [21], majorization techniques were applied to obtain a lower bound for the uncertainty relation that can be stronger than the well-known result of Maassen and Uffink. The formulation of the strong majorization uncertainty relation presented in [23] is involved, but in the case of qubits it can be expressed as

The asymptotic analysis of entropic uncertainty relations for random measurements has been provided in [24] using majorization bounds. Further results along these lines can be found in [25,26,27].

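In [28], Berta et al. considered the uncertainty relation for a system in the presence of quantum memory. In this setup, the system is described by a bipartite density matrix \(\rho _{AB}\). The quantum conditional entropy can be defined as

\[ S(A|B) = S(AB) - S(B), \tag{4} \]

where S(B) denotes the von Neumann entropy of the state \(\rho _B=\mathrm {Tr}_A \rho _{AB}\). Equation (4) is also known as the chain rule. We also introduce the states \(\rho _{XB}\) and \(\rho _{YB}\) as

\[ \rho _{XB} = \sum _j \left( |\phi _j\rangle \langle \phi _j| \otimes \mathbb {I}\right) \rho _{AB} \left( |\phi _j\rangle \langle \phi _j| \otimes \mathbb {I}\right), \qquad \rho _{YB} = \sum _k \left( |\psi _k\rangle \langle \psi _k| \otimes \mathbb {I}\right) \rho _{AB} \left( |\psi _k\rangle \langle \psi _k| \otimes \mathbb {I}\right), \tag{5} \]

which are the post-measurement states obtained when X or Y is measured on subsystem A. Berta et al. [28] showed that the bound on the uncertainties of the measurement outcomes depends on the amount of entanglement between the measured particle and the quantum memory. As a consequence, they formulated the conditional uncertainty relation

\[ S(X|B) + S(Y|B) \ge B_{MU} + S(A|B), \qquad B_{MU} = -2\log c. \tag{6} \]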

The conditional entropy S(A|B) quantifies the amount of entanglement between the particle and the memory. The bound of Berta et al. [28] was improved by Coles and Piani [29], who replaced the state-independent value \(B_{MU}\) with a larger quantity. The result of Coles and Piani was in turn improved in [30]. This relation has also been generalized to Rényi entropies, and several important results can be found in [31,32,33]. The uncertainty relation has also been considered in the context of quantum-to-classical randomness extractors (QC-extractors) [34], where it was proved that QC-extractors give rise to uncertainty relations in the presence of quantum memory.

In the absence of quantum memory, bound (6) reduces to (2) for pure states. The results by Berta et al. [28] and by Li et al. [35] can be applied to witnessing entanglement, since S(A|B) can be negative only if \(\rho _{AB}\) is entangled. Another field of application of entropic uncertainty relations in the presence of quantum memory is quantum cryptography [9]. The bound derived by Berta et al. [28] has been experimentally validated [36].
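As an illustration on the family of states used later in this paper, the following sketch evaluates both sides of the Berta et al. relation \(S(X|B)+S(Y|B) \ge -2\log c + S(A|B)\). The state is \(\sqrt{\lambda }|00\rangle +\sqrt{1-\lambda }|11\rangle \), and the two measurement bases are real rotations by θ and θ+ε.

The sketch rests on the following assumptions of ours:

- logarithms are base 2;
- the overlap is \(c=\max \{\cos \varepsilon ,\sin \varepsilon \}\);
- by our own calculation, \(\rho _{XB}\) has nonzero eigenvalues \(p\) and \(1-p\) with \(p=\lambda \cos ^2\theta +(1-\lambda )\sin ^2\theta \), while \(\rho _B\) has eigenvalues \(\lambda \) and \(1-\lambda \).

For this pure state, \(S(A|B)=-S(B)\) is negative whenever \(0<\lambda <1\). This is the entanglement-witnessing mechanism mentioned above.

```python
import math


def h2(p: float) -> float:
    """Binary Shannon entropy in bits, with the convention 0 log 0 = 0."""
    return sum(-x * math.log2(x) for x in (p, 1.0 - p) if x > 0.0)


def outcome_probability(lam: float, angle: float) -> float:
    """lam cos^2(angle) + (1 - lam) sin^2(angle): a nonzero eigenvalue of rho_XB (our calculation)."""
    return lam * math.cos(angle) ** 2 + (1.0 - lam) * math.sin(angle) ** 2


def conditional_sum(lam: float, theta: float, eps: float) -> float:
    """S(X|B) + S(Y|B) for sqrt(lam)|00> + sqrt(1 - lam)|11>, via S(X|B) = S(XB) - S(B)."""
    s_b = h2(lam)  # rho_B = diag(lam, 1 - lam)
    s_x = h2(outcome_probability(lam, theta)) - s_b
    s_y = h2(outcome_probability(lam, theta + eps)) - s_b
    return s_x + s_y


def berta_bound(lam: float, eps: float) -> float:
    """-2 log2 c + S(A|B), with c = max(cos eps, sin eps) for eps in [0, pi/2]."""
    c = max(math.cos(eps), math.sin(eps))
    s_a_given_b = -h2(lam)  # pure state: S(AB) = 0, hence S(A|B) = -S(B) <= 0
    return -2.0 * math.log2(c) + s_a_given_b


# For the maximally entangled state and mutually unbiased bases both sides are (numerically) zero.
print(berta_bound(0.5, math.pi / 4), conditional_sum(0.5, 0.3, math.pi / 4))
```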

In this paper we aim at finding entanglement-dependent entropic uncertainty relations in terms of von Neumann and Tsallis entropies. Our results apply to states with a fixed amount of entanglement, described by the parameter \(\lambda \). This allows us to find non-trivial bounds for the entropic uncertainty relation. Otherwise, minimizing over all states would yield a lower bound equal to zero, attained by the maximally entangled state. Notice that Berta et al. formulated their bound in a similar way, with the information about entanglement contained in the term S(A|B). In that case the bound is also trivial for the maximally entangled state.

Let us now recall the notion of Tsallis entropy [37], a non-additive generalization of the von Neumann entropy. For a state \(\rho _X\), it is defined as

\[ T_q(X) = \frac{1 - \sum _i \nu _i^q}{q-1}, \tag{7} \]

where \(\nu _i\) are the eigenvalues of \(\rho _X\) and \(q\in [0, \infty )\), with the case \(q=1\) understood as a limit. Tsallis entropy is identical to the Havrda–Charvát structural \(\alpha \) entropy [38] known from information theory. Note that when \(q\rightarrow 1\) we have \(T_q(X) \rightarrow S(X)\). The chain rule applies to Tsallis entropies; hence

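We will use the following notation for the Tsallis point entropy:

\[ \eta _q(x) = \frac{x - x^q}{q-1}, \qquad \text {so that} \quad T_q(X) = \sum _i \eta _q(\nu _i). \tag{9} \]

In the limit \(q \rightarrow 1\) we recover

\[ \lim _{q\rightarrow 1} \eta _q(x) = \eta (x), \tag{10} \]

where \(\eta (x)=-x\log x\).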
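A minimal Python sketch of the Tsallis entropy and the point entropy defined above follows. It computes \(T_q\) as a sum of point entropies over the spectrum. It assumes natural logarithms, which is what the limit \(q\rightarrow 1\) of \((x-x^q)/(q-1)\) produces. The function names and the tolerance used to detect \(q=1\) are our own choices. Zero eigenvalues are skipped; for \(q>0\) they contribute nothing anyway.

```python
import math


def eta_q(x: float, q: float) -> float:
    """Tsallis point entropy (x - x**q) / (q - 1); at q = 1 it is -x ln x."""
    if x <= 0.0:
        return 0.0  # zero eigenvalues are skipped
    if abs(q - 1.0) < 1e-12:
        return -x * math.log(x)  # the q -> 1 limit (von Neumann, natural log)
    return (x - x ** q) / (q - 1.0)


def tsallis_entropy(eigenvalues, q: float) -> float:
    """T_q = (1 - sum_i nu_i**q) / (q - 1), computed as sum_i eta_q(nu_i)."""
    return sum(eta_q(nu, q) for nu in eigenvalues)


spectrum = [0.2, 0.8]
print(tsallis_entropy(spectrum, 2.0))         # approximately 0.32
print(tsallis_entropy(spectrum, 1.0 + 1e-6))  # close to the von Neumann entropy in nats
print(tsallis_entropy(spectrum, 1.0))
```

Writing \(T_q\) through \(\eta _q\) keeps the \(q\rightarrow 1\) case in one place, which is convenient when the same code is reused for conditional quantities.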

2 Qubit conditional uncertainty relations

Without loss of generality, let us assume that we start with an entangled state \(\rho _{AB}=|\psi _{AB}\rangle \langle \psi _{AB}|\), where \(|\psi _{AB}\rangle =\sqrt{\lambda }|00\rangle +\sqrt{1-\lambda }|11\rangle \). The parameter \(\lambda \) describes the entanglement between the parties A and B. We choose the eigenvectors of X and Y as \(|\phi _i\rangle = O(\theta )|i\rangle \) and \(|\psi _i\rangle = O(\theta +\varepsilon )|i\rangle \), where

\[ O(\theta ) = \begin{pmatrix} \cos \theta & -\sin \theta \\ \sin \theta & \cos \theta \end{pmatrix} \tag{11} \]

is a real rotation matrix. Hence, instead of optimizing the uncertainty relation over all possible states \(\rho _{AB}\), we optimize over \(\theta \). Hereafter we assume \(\theta ,\varepsilon \in [0, \pi /2]\). In this case we have

\[ c = \max _{j,k}|\langle \phi _j|\psi _k\rangle | = \max \{\cos \varepsilon , \sin \varepsilon \}, \tag{12} \]

since \(\langle \phi _j|\psi _k\rangle = \langle j|O(\varepsilon )|k\rangle \).

It is important to notice that we can restrict our attention to real rotation matrices. This follows from the fact that any \(2\times 2\) unitary matrix is equivalent to a real rotation matrix. Two matrices are called equivalent, \(U \sim V\), if \(V=P_1D_1 U D_2 P_2\) for some permutation matrices \(P_1,P_2\) and diagonal unitary matrices \(D_1,D_2\) [21]. Moreover, the eigenvalues of the states \(\rho _{XB}\) are invariant under this equivalence relation.
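Both claims above can be checked numerically with the sketch below. It assumes the sign convention \(O(\theta )=\begin{pmatrix}\cos \theta & -\sin \theta \\ \sin \theta & \cos \theta \end{pmatrix}\); the opposite convention works the same way. It also uses only the diagonal-phase part of the equivalence.

- `max_overlap` confirms that the overlap \(c=\max \{\cos \varepsilon ,\sin \varepsilon \}\) does not depend on θ.
- The hypothetical helper `to_real_rotation` multiplies a 2×2 unitary by diagonal unitaries \(D_1\) and \(D_2\) until it becomes a real rotation.

Both functions are our illustrations, not code from [21].

```python
import math

Matrix = list[list[complex]]


def rotation(theta: float) -> Matrix:
    """O(theta) = [[cos, -sin], [sin, cos]]; this sign convention is an assumption."""
    return [[math.cos(theta), -math.sin(theta)], [math.sin(theta), math.cos(theta)]]


def max_overlap(theta: float, eps: float) -> float:
    """c = max_{j,k} |<phi_j|psi_k>| for |phi_j> = O(theta)|j>, |psi_k> = O(theta + eps)|k>."""
    a, b = rotation(theta), rotation(theta + eps)
    # |phi_j> is column j of O(theta); entries are real, so no conjugation is needed.
    return max(abs(sum(a[m][j] * b[m][k] for m in range(2)))
               for j in range(2) for k in range(2))


def to_real_rotation(u: Matrix, tol: float = 1e-12) -> Matrix:
    """Return D1 U D2 for diagonal unitaries D1, D2 such that the result is a real rotation."""
    # D2 strips the phases of the first row, which becomes (|u00|, |u01|).
    d2 = [u[0][k].conjugate() / abs(u[0][k]) if abs(u[0][k]) > tol else 1.0 for k in range(2)]
    v = [[u[i][k] * d2[k] for k in range(2)] for i in range(2)]
    # Orthonormality of the rows forces the second row to be phase * (-|u01|, |u00|).
    phase = v[1][1] / abs(v[1][1]) if abs(v[1][1]) > tol else -v[1][0] / abs(v[1][0])
    # D1 = diag(1, conj(phase)) removes that common phase.
    return [v[0], [v[1][0] * phase.conjugate(), v[1][1] * phase.conjugate()]]


print(max_overlap(0.3, 0.2), max_overlap(1.1, 0.2))  # equal: c depends only on eps
```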

We should also note here that the two-qubit scenario, simple as it is, may be easily generalized to an arbitrary dimension of system B.

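Since \(\rho _{AB}\) is pure and the measurements are binary, the states \(\rho _{XB}\) and \(\rho _{YB}\) are rank-2 operators. The nonzero eigenvalues of \(\rho _{XB}\) are easily obtained as

\[ \lambda \cos ^2\theta + (1-\lambda )\sin ^2\theta \quad \text {and} \quad \lambda \sin ^2\theta + (1-\lambda )\cos ^2\theta . \tag{13} \]

To obtain the eigenvalues of \(\rho _{YB}\), we replace \(\theta \) with \(\theta +\varepsilon \).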
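The sketch below computes these eigenvalues directly from the post-measurement state of Eq. (5), as a check on the closed form. For a pure \(\rho _{AB}\), one has \(\rho _{XB}=\sum _i |\phi _i\rangle \langle \phi _i|\otimes |\beta _i\rangle \langle \beta _i|\) with \(|\beta _i\rangle =(\langle \phi _i|\otimes \mathbb {I})|\psi _{AB}\rangle \). The nonzero eigenvalues are therefore \(\Vert \beta _i\Vert ^2\). By our own calculation, these equal \(\lambda \cos ^2\theta +(1-\lambda )\sin ^2\theta \) and \(\lambda \sin ^2\theta +(1-\lambda )\cos ^2\theta \). The column convention for \(O(\theta )\) and the function name are assumptions.

```python
import math


def post_measurement_eigenvalues(lam: float, theta: float) -> list[float]:
    """Nonzero eigenvalues of rho_XB for |psi> = sqrt(lam)|00> + sqrt(1 - lam)|11>.

    rho_XB = sum_i |phi_i><phi_i| (x) |beta_i><beta_i| with |beta_i> = (<phi_i| (x) I)|psi>,
    so its nonzero eigenvalues are the squared norms ||beta_i||^2.
    """
    psi = [[math.sqrt(lam), 0.0], [0.0, math.sqrt(1.0 - lam)]]  # psi[a][b] = <ab|psi>
    phis = [(math.cos(theta), math.sin(theta)),   # |phi_0> = O(theta)|0>
            (-math.sin(theta), math.cos(theta))]  # |phi_1> = O(theta)|1>
    eigenvalues = []
    for phi in phis:
        # Real amplitudes, so <phi_i| needs no complex conjugation.
        beta = [sum(phi[a] * psi[a][b] for a in range(2)) for b in range(2)]
        eigenvalues.append(sum(x * x for x in beta))
    return eigenvalues


lam, theta, eps = 0.2, 0.6, 0.3
print(post_measurement_eigenvalues(lam, theta))        # spectrum of rho_XB
print(post_measurement_eigenvalues(lam, theta + eps))  # rho_YB: replace theta by theta + eps
```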

2.1 Analytical minima

Using the eigenvalues of \(\rho _{XB}\) and \(\rho _{YB}\), we arrive at

Let us analyze in detail the case \(q\rightarrow 1\), i.e., the von Neumann entropy. We get

In order to obtain an uncertainty relation, we need to minimize this quantity over the parameter \(\theta \). This is a complicated task even in the case \(\lambda =0\), which has been studied earlier [39]. We conjecture that \(\theta = \pi /2-\varepsilon /2\) is an extremal point of (14). Unfortunately, this point is the global minimum only when

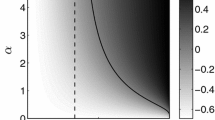

A numerical solution of this inequality is shown in Fig. 1. When this condition is satisfied, the uncertainty relation is

When the condition in Eq. (16) is not satisfied, the conjectured extremal point becomes a maximum and two minima emerge, placed symmetrically around \(\theta =\pi /2-\varepsilon /2\). The reasoning can be generalized to \(T_q\) in a straightforward, yet cumbersome, way; the details are presented in the Appendix. The solutions of inequality (16), along with those of inequality (32) for various values of q, are shown in Fig. 1.

Fig. 1: Numerical solution of inequality (16) (\(q=1\)) as a function of \(\lambda \), along with solutions of the corresponding inequality for other chosen values of \(q\).
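Because the minimization over θ is hard to carry out analytically, a brute-force grid search is a useful sanity check. The sketch below assumes that, for \(q\rightarrow 1\), the quantity to minimize is

\[ S(X|B)+S(Y|B)=h(p_\theta )+h(p_{\theta +\varepsilon })-2h(\lambda ), \qquad p_\theta =\lambda \cos ^2\theta +(1-\lambda )\sin ^2\theta , \]

where h is the binary entropy in bits. This form is our own calculation from the eigenvalues of \(\rho _{XB}\), \(\rho _{YB}\) and \(\rho _B\), not a transcription of Eq. (15). The grid size and function names are arbitrary choices.

For \(\lambda =0\) and \(\varepsilon =0.2\), the grid minimizer sits at \(\theta =\pi /2-\varepsilon /2\). For \(\lambda =0\) and \(\varepsilon =\pi /4\), the minimizer moves away from that point to \(\theta =\pi /4\) or \(\pi /2\). This is in line with the two regimes described above.

```python
import math


def h2(p: float) -> float:
    """Binary Shannon entropy in bits (0 log 0 = 0)."""
    return sum(-x * math.log2(x) for x in (p, 1.0 - p) if x > 0.0)


def vn_sum(lam: float, theta: float, eps: float) -> float:
    """S(X|B) + S(Y|B) for sqrt(lam)|00> + sqrt(1 - lam)|11>, using our closed-form spectra."""
    def p(t: float) -> float:
        return lam * math.cos(t) ** 2 + (1.0 - lam) * math.sin(t) ** 2
    return h2(p(theta)) + h2(p(theta + eps)) - 2.0 * h2(lam)


def grid_minimum(lam: float, eps: float, n: int = 2000) -> tuple[float, float]:
    """Brute-force minimum of vn_sum over theta in [0, pi/2] on a uniform grid of n + 1 points."""
    thetas = [k * (math.pi / 2) / n for k in range(n + 1)]
    best = min(thetas, key=lambda t: vn_sum(lam, t, eps))
    return best, vn_sum(lam, best, eps)


for lam, eps in ((0.0, 0.2), (0.0, math.pi / 4), (0.3, 0.2)):
    theta_star, value = grid_minimum(lam, eps)
    print(f"lam={lam}, eps={eps:.3f}: argmin {theta_star:.4f} "
          f"(pi/2 - eps/2 = {math.pi / 2 - eps / 2:.4f}), min {value:.4f}")
```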

2.2 Bounding the conditional entropies

In order to study the case of general Tsallis entropies \(T_q\), we introduce the following proposition.

Proposition 1

Let \(\alpha \in [0,1]\) and \(q \in [0, 2] \cup [3,\infty )\), then

In the cases \(q=2\) and \(q=3\) we have an equality.

Proof

We define

Next we note that \(f(0) = f(\frac{1}{2})=0\). We will show that f has no other zeros in the interval \((0, \frac{1}{2})\). We calculate

which is positive for \(q\in [0,2)\cup (3,\infty )\) and \(x \in (0, \frac{1}{2})\). Therefore \(f'\) is strictly convex on \((0, \frac{1}{2})\); moreover, \(f'(1/2)=0\).

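Now let us assume that \(f(x_0) = 0\) for some \(x_0 \in (0,1/2)\). Since also \(f(0)=f(\frac{1}{2})=0\), Rolle’s theorem gives points \(0< y_0< x_0< y_1 < \frac{1}{2}\) such that \(f'(y_0) = f'(y_1) = 0\). Together with \(f'(1/2)=0\), the function \(f'\) would then have three distinct zeros in \((0,\frac{1}{2}]\). This contradicts the strict convexity of \(f'\) on \((0,\frac{1}{2})\), because a strictly convex function vanishes at no more than two points.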

It remains to show that \(f(\varepsilon ) >0\) for some \(\varepsilon \in (0,\frac{1}{2})\). To this end we write

Now we note that \(g(\alpha )\) is positive for \(\alpha \in (0,1) \setminus \left\{ \frac{1}{2} \right\} \) and \(q \in [0,2)\cup (3,\infty )\). This follows from the convexity of g on these sets and the fact that it attains its minimum \(g\left( \frac{1}{2}\right) = 0\). Hence there exists \(\varepsilon >0\) such that \(f(\varepsilon )>0\). Since f is continuous and has no zeros in \((0,\frac{1}{2})\), it is positive on the whole interval.

The equalities in the case \(q=2,3\) follow from a direct inspection. \(\square \)

Now we are ready to state and prove the main result of this work.

Theorem 1

Let \(\rho _{AB}=|\psi _{AB}\rangle \langle \psi _{AB}|\), where \(|\psi _{AB}\rangle =\sqrt{\lambda }|00\rangle +\sqrt{1-\lambda }|11\rangle \). Let us choose two observables X and Y with eigenvectors \(|\phi _i\rangle = O(\theta )|i\rangle \) and \(|\psi _i\rangle = O(\theta +\varepsilon )|i\rangle \), where \(O(\theta )\) is as in Eq. (11). Then the Tsallis entropic conditional uncertainty relation reads

Proof

Applying Proposition 1 to Eq. (14) we get

The right-hand side achieves its unique minimum at \(\theta =\pi /2-\varepsilon /2\) for \(\varepsilon \le \pi /4\) and at \(\theta =\pi /4-\varepsilon /2\) for \(\varepsilon > \pi /4\). Inserting this value, we recover Eq. (22). \(\square \)
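The sketch below evaluates, at the minimizer quoted in the proof, the exact sum of conditional Tsallis entropies. Note that this is not the right-hand side of the bound obtained from Proposition 1. It compares that sum with the closed form

\[ 2\left[ \eta _q(p_c)+\eta _q(1-p_c)\right] -2\left[ \eta _q(\lambda )+\eta _q(1-\lambda )\right] , \qquad p_c=\frac{1+(1-2\lambda )c}{2}, \qquad c=\max \{\cos \varepsilon ,\sin \varepsilon \}. \]

It assumes that the conditional Tsallis entropy is defined through the chain rule, \(T_q(X|B)=T_q(XB)-T_q(B)\), and it uses our own spectra of \(\rho _{XB}\) and \(\rho _B\). The check illustrates why both minimizers lead to the same expression in terms of c. At \(\theta =\pi /4-\varepsilon /2\), the two outcome probabilities are \(\frac{1\pm (2\lambda -1)\sin \varepsilon }{2}\), and the binary Tsallis entropy is symmetric under \(p\mapsto 1-p\).

```python
import math


def eta_q(x: float, q: float) -> float:
    """Tsallis point entropy (x - x**q) / (q - 1); natural log in the q = 1 limit."""
    if x <= 0.0:
        return 0.0
    if abs(q - 1.0) < 1e-12:
        return -x * math.log(x)
    return (x - x ** q) / (q - 1.0)


def binary_tsallis(p: float, q: float) -> float:
    """Tsallis entropy of the two-point spectrum (p, 1 - p)."""
    return eta_q(p, q) + eta_q(1.0 - p, q)


def tsallis_sum(lam: float, theta: float, eps: float, q: float) -> float:
    """T_q(X|B) + T_q(Y|B), ASSUMING T_q(X|B) = T_q(XB) - T_q(B) for the Schmidt state."""
    def p(t: float) -> float:
        return lam * math.cos(t) ** 2 + (1.0 - lam) * math.sin(t) ** 2
    return (binary_tsallis(p(theta), q) + binary_tsallis(p(theta + eps), q)
            - 2.0 * binary_tsallis(lam, q))


def candidate_theta(eps: float) -> float:
    """Minimizer quoted in the proof of Theorem 1."""
    return math.pi / 2 - eps / 2 if eps <= math.pi / 4 else math.pi / 4 - eps / 2


def candidate_value(lam: float, eps: float, q: float) -> float:
    """Closed form of tsallis_sum at candidate_theta, written through c = max(cos eps, sin eps)."""
    c = max(math.cos(eps), math.sin(eps))
    return 2.0 * binary_tsallis((1.0 + (1.0 - 2.0 * lam) * c) / 2.0, q) - 2.0 * binary_tsallis(lam, q)


print(tsallis_sum(0.2, candidate_theta(1.0), 1.0, 2.0), candidate_value(0.2, 1.0, 2.0))
```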

Remark 1

In the limit \(q\rightarrow 1\) we get the following uncertainty relation for von Neumann entropies

Remark 2

Using the concavity of the conditional von Neumann entropy, we may generalize bound (24) to mixed states \(\rho _{AB}\). We get

To see this, consider a system in a mixed state \(\rho _{AB}\) and its decomposition into pure states, \(\rho _{AB} = \sum _i p_i \rho ^{(i)}_{AB}\). A lower index on an entropy indicates the state in which it is evaluated. The post-measurement states \(\rho _{XB},\rho _{YB}\) are defined as in Eq. (5). Now we write

The first inequality above follows from the concavity of the conditional von Neumann entropy, the second from (24), and the third from the concavity of the von Neumann entropy.
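The first inequality can be spot-checked numerically. The sketch below computes \(S(X|B)=S(XB)-S(B)\) for a two-qubit mixture of pure states with real amplitudes. It uses the fact that \(\rho _{XB}\) is block diagonal with blocks \(\langle \phi _i|\rho _{AB}|\phi _i\rangle \), and that these blocks also sum to \(\rho _B\). All eigenvalue problems are then 2×2. The mixture weights, the second state \(\sqrt{0.3}|01\rangle +\sqrt{0.7}|10\rangle \), the base-2 logarithms and all names are illustrative choices of ours.

```python
import math


def eta(x: float) -> float:
    """-x log2 x; tiny (possibly negative) rounding residues are treated as 0."""
    return -x * math.log2(x) if x > 1e-15 else 0.0


def sym_eigenvalues(m):
    """Eigenvalues of a real symmetric 2x2 matrix [[a, b], [b, d]]."""
    (a, b), (_, d) = m
    disc = math.sqrt((a - d) ** 2 + 4.0 * b * b)
    return [(a + d + disc) / 2.0, (a + d - disc) / 2.0]


def schmidt(lam: float):
    """Amplitude matrix psi[a][b] = <ab|psi> of sqrt(lam)|00> + sqrt(1 - lam)|11>."""
    return [[math.sqrt(lam), 0.0], [0.0, math.sqrt(1.0 - lam)]]


def cond_entropy_x(states, theta: float) -> float:
    """S(X|B) = S(XB) - S(B) for rho_AB = sum_k w_k |psi_k><psi_k| with real amplitudes.

    `states` is a list of (w_k, amplitude matrix); X is measured in |phi_i> = O(theta)|i>.
    """
    phis = [(math.cos(theta), math.sin(theta)), (-math.sin(theta), math.cos(theta))]
    s_xb = 0.0
    rho_b = [[0.0, 0.0], [0.0, 0.0]]
    for phi in phis:
        block = [[0.0, 0.0], [0.0, 0.0]]  # <phi_i| rho_AB |phi_i>, an operator on B
        for w, psi in states:
            beta = [sum(phi[a] * psi[a][b] for a in range(2)) for b in range(2)]
            for r in range(2):
                for s in range(2):
                    block[r][s] += w * beta[r] * beta[s]
        s_xb += sum(eta(e) for e in sym_eigenvalues(block))  # rho_XB is block diagonal
        for r in range(2):
            for s in range(2):
                rho_b[r][s] += block[r][s]  # the blocks sum to rho_B
    return s_xb - sum(eta(e) for e in sym_eigenvalues(rho_b))


w = 0.4
flipped = [[0.0, math.sqrt(0.3)], [math.sqrt(0.7), 0.0]]  # sqrt(0.3)|01> + sqrt(0.7)|10>
mixture = [(w, schmidt(0.1)), (1.0 - w, flipped)]
for theta in (0.0, 0.5, 1.2):
    lhs = cond_entropy_x(mixture, theta)
    rhs = (w * cond_entropy_x([(1.0, schmidt(0.1))], theta)
           + (1.0 - w) * cond_entropy_x([(1.0, flipped)], theta))
    print(f"theta={theta}: S(X|B) of mixture {lhs:.4f} >= weighted average {rhs:.4f}")
```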

Remark 3

The state-dependent entropic uncertainty relation for \(q\rightarrow 1\) reads

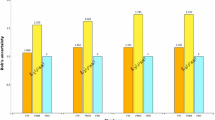

A comparison with the known entropic uncertainty relations for \(\lambda =0\) and \(q\rightarrow 1\) is shown in Fig. 2. As can be seen, our result gives a tighter bound than the one obtained by Maassen and Uffink for all values of \(\varepsilon \). The bound is also tighter than \(B_{Maj2}\) when \(\varepsilon \) is in the neighborhood of \(\pi /4\).

A comparison of the exact value (15), the state-dependent lower bound (27) and \(B_{BCCRR}\) for different parameters \(\lambda \), \(\theta \) and \(\varepsilon \) is presented in Figs. 3 and 4.

3 Security of quantum key distribution protocols

One of the possible applications of the uncertainty relation is quantum cryptography, where the relation allows us to bound the amount of key the parties are able to extract per state.

Assume that an eavesdropper creates a quantum system \(\rho _{ABE}\). Next, parts A and B are distributed to Alice and Bob. The generation of a secret key is based on measurements X, Y and \(X',Y'\) performed by Alice and Bob, respectively. Subsequently, Alice and Bob inform each other of their choices of measurements. The security of the key depends on the correlation between the measurement outcomes.

According to Devetak and Winter [40], the amount of extractable key is bounded from below as \(K\ge H(X|E)-H(X|B)\). Using our bound, we can bound the amount of extractable key in terms of von Neumann entropies by

In the above, \(S(X|X')\) is the conditional entropy of the state shared by Alice and Bob when they perform the measurements X and \(X'\), respectively. This relates our result to [41]. In our case Alice and Bob need to upper-bound the entropies \(S(A|B)\), \(S(X|X')\) and \(S(Y|Y')\). The latter two can be bounded using quantities such as the frequency with which the outcomes agree.
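One standard way to turn the frequency of agreement into an upper bound on \(S(X|X')\) for binary outcomes is Fano's inequality, \(H(X|X')\le h(P_{\mathrm {err}})\) with \(P_{\mathrm {err}}=\Pr [X\ne X']\). The additional term \(P_{\mathrm {err}}\log (|\mathcal {X}|-1)\) vanishes for a binary alphabet. The sketch below plugs in the empirical disagreement rate and uses base-2 logarithms. It is our illustration, not the procedure of [41], and it ignores the finite-statistics corrections that a real security analysis would need.

```python
import math


def h2(p: float) -> float:
    """Binary Shannon entropy in bits (0 log 0 = 0)."""
    return sum(-x * math.log2(x) for x in (p, 1.0 - p) if x > 0.0)


def fano_upper_bound(agreements: int, rounds: int) -> float:
    """Upper bound on H(X|X') in bits for binary outcomes, from the observed agreement rate.

    Fano's inequality for a binary alphabet gives H(X|X') <= h2(Pr[X != X']); here the
    probability is replaced by its empirical frequency (finite-size effects are ignored).
    """
    if rounds <= 0 or not 0 <= agreements <= rounds:
        raise ValueError("need rounds > 0 and 0 <= agreements <= rounds")
    return h2(1.0 - agreements / rounds)


print(fano_upper_bound(9_700, 10_000))  # 3% observed disagreement
```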

4 Conclusion

In this paper, we have derived new entanglement-dependent uncertainty relations in terms of von Neumann and Tsallis entropies for qubits and binary observables in the presence of quantum side information. Our bounds were compared with the well-known bounds derived by Maassen and Uffink [7], Rudnicki et al. [23] and Berta et al. [28]. This paper can also be viewed as a generalization of the results of [39].

We expect the presented results to find applications in witnessing entanglement and in quantum cryptography, as a measure of information in quantum key distribution protocols. Verifying our results in such applications seems to be an interesting task.

References

Heisenberg, W.: Über den anschaulichen Inhalt der quantentheoretischen Kinematik und Mechanik. Zeitschrift für Physik 43(3–4), 172–198 (1927)

Hirschman, I.I.: A note on entropy. Am. J. Math. 79(1), 152–156 (1957)

Beckner, W.: Inequalities in Fourier analysis. Ann. Math. 102, 159–182 (1975)

Białynicki-Birula, I., Mycielski, J.: Uncertainty relations for information entropy in wave mechanics. Commun. Math. Phys. 44(2), 129–132 (1975)

Deutsch, D.: Uncertainty in quantum measurements. Phys. Rev. Lett. 50(9), 631 (1983)

Kraus, K.: Complementary observables and uncertainty relations. Phys. Rev. D 35(10), 3070 (1987)

Maassen, H., Uffink, J.B.: Generalized entropic uncertainty relations. Phys. Rev. Lett. 60(12), 1103 (1988)

Wehner, S., Winter, A.: Entropic uncertainty relations-a survey. New J. Phys. 12(2), 025009 (2010)

Coles, P.J., Berta, M., Tomamichel, M., Wehner, S.: Entropic uncertainty relations and their applications. Rev. Mod. Phys. 89(1), 015002 (2017)

Koashi, M.: Simple security proof of quantum key distribution via uncertainty principle. New J. Phys. 11, 045018 (2009)

DiVincenzo, D.P., Horodecki, M., Leung, D.W., Smolin, J.A., Terhal, B.M.: Locking classical correlations in quantum states. Phys. Rev. Lett. 92(6), 067902 (2004)

Damgård, I.B., Fehr, S., Salvail, L., Schaffner, C.: Cryptography in the bounded-quantum-storage model. SIAM J. Comput. 37(6), 1865–1890 (2008)

Gühne, O.: Characterizing entanglement via uncertainty relations. Phys. Rev. Lett. 92(11), 117903 (2004)

Oppenheim, J., Wehner, S.: The uncertainty principle determines the nonlocality of quantum mechanics. Science 330(6007), 1072–1074 (2010)

Rastegin, A.E.: Separability conditions based on local fine-grained uncertainty relations. Quantum Inf. Process. 15(6), 2621–2638 (2016)

Zozor, S., Bosyk, G.M., Portesi, M.: On a generalized entropic uncertainty relation in the case of the qubit. J. Phys. A: Math. Theor. 46(46), 465301 (2013)

Rastegin, A.E.: Uncertainty relations for MUBs and SIC-POVMs in terms of generalized entropies. Eur. Phys. J. D 67(12), 269 (2013)

Kaniewski, J., Tomamichel, M., Wehner, S.: Entropic uncertainty from effective anticommutators. Phys. Rev. A 90(1), 012332 (2014)

Xiao, Y., Jing, N., Li-Jost, X.: Uncertainty under quantum measures and quantum memory. Quantum Inf. Process. 16(4), 104 (2017)

Partovi, M.H.: Majorization formulation of uncertainty in quantum mechanics. Phys. Rev. A 84(5), 052117 (2011)

Puchała, Z., Rudnicki, Ł., Życzkowski, K.: Majorization entropic uncertainty relations. J. Phys. A: Math. Theor. 46(27), 272002 (2013)

Friedland, S., Gheorghiu, V., Gour, G.: Universal uncertainty relations. Phys. Rev. Lett. 111(23), 230401 (2013)

Rudnicki, Ł., Puchała, Z., Życzkowski, K.: Strong majorization entropic uncertainty relations. Phys. Rev. A 89(5), 052115 (2014)

Adamczak, R., Latała, R., Puchała, Z., Życzkowski, K.: Asymptotic entropic uncertainty relations. J. Math. Phys. 57(3), 032204 (2016)

Rudnicki, Ł.: Majorization approach to entropic uncertainty relations for coarse-grained observables. Phys. Rev. A 91(3), 032123 (2015)

Rastegin, A.E., Życzkowski, K.: Majorization entropic uncertainty relations for quantum operations. J. Phys. A: Math. Theor. 49(35), 355301 (2016)

Puchała, Z., Rudnicki, Ł., Chabuda, K., Paraniak, M., Życzkowski, K.: Certainty relations, mutual entanglement, and nondisplaceable manifolds. Phys. Rev. A 92(3), 032109 (2015)

Berta, M., Christandl, M., Colbeck, R., Renes, J.M., Renner, R.: The uncertainty principle in the presence of quantum memory. Nat. Phys. 6(9), 659–662 (2010)

Coles, P.J., Piani, M.: Improved entropic uncertainty relations and information exclusion relations. Phys. Rev. A 89(2), 022112 (2014)

Xiao, Y., Jing, N., Fei, S.-M., Li-Jost, X.: Improved uncertainty relation in the presence of quantum memory. J. Phys. A: Math. Theor. 49(49), 49LT01 (2016)

Coles, P.J., Colbeck, R., Yu, L., Zwolak, M.: Uncertainty relations from simple entropic properties. Phys. Rev. Lett. 108(21), 210405 (2012)

Müller-Lennert, M., Dupuis, F., Szehr, O., Fehr, S., Tomamichel, M.: On quantum Rényi entropies: a new generalization and some properties. J. Math. Phys. 54(12), 122203 (2013)

Tomamichel, M., Berta, M., Hayashi, M.: Relating different quantum generalizations of the conditional Rényi entropy. J. Math. Phys. 55(8), 082206 (2014)

Berta, M., Fawzi, O., Wehner, S.: Quantum to classical randomness extractors. IEEE Trans. Inf. Theory 60(2), 1168–1192 (2014)

Li, C.-F., Xu, J.-S., Xu, X.-Y., Li, K., Guo, G.-C.: Experimental investigation of the entanglement-assisted entropic uncertainty principle. Nat. Phys. 7, 752–756 (2011)

Prevedel, R., Hamel, D.R., Colbeck, R., Fisher, K., Resch, K.J.: Experimental investigation of the uncertainty principle in the presence of quantum memory and its application to witnessing entanglement. Nat. Phys. 7, 757–761 (2011)

Tsallis, C.: Possible generalization of Boltzmann-Gibbs statistics. J. Stat. Phys. 52(1), 479–487 (1988)

Havrda, J., Charvát, F.: Quantification method of classification processes. Concept of structural \(a\)-entropy. Kybernetika 3(1), 30–35 (1967)

Bosyk, G.M., Portesi, M., Plastino, A., Zozor, S.: Comment on “Improved bounds on entropic uncertainty relations”. Phys. Rev. A 84(5), 056101 (2011)

Devetak, I., Winter, A.: Distillation of secret key and entanglement from quantum states. In: Proceedings of the Royal Society of London A: Mathematical, Physical and Engineering Sciences, vol. 461, pp. 207–235. The Royal Society (2005)

Shor, P.W., Preskill, J.: Simple proof of security of the BB84 quantum key distribution protocol. Phys. Rev. Lett. 85(2), 441 (2000)

Acknowledgements

The authors acknowledge the support by the Polish National Science Center under the Project Numbers 2013/11/N/ST6/03090 (D. K.), 2015/17/B/ST6/01872 (Ł. P.) and 2016/22/E/ST6/00062 (Z. P.).

Appendix: Generalization of Eq. (14) to Tsallis entropy case

We start by introducing the following notation

Using this notation we note that

Now we recall Eq. (14)

Again, we conjecture that the right-hand side has a minimum at \(\theta =\pi /2-\varepsilon /2\). Similarly to the case \(q\rightarrow 1\), we find that this is a minimum only when

This follows from the second derivative of Eq. (14) with respect to \(\theta \). The plots of the solutions to this inequality are shown in Fig. 1. Note that when \(q \rightarrow 1\) we recover bound (16). When this is a minimum, we obtain

In the case when \(q\rightarrow 1\) we recover Eq. (17).

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Kurzyk, D., Pawela, Ł. & Puchała, Z. Conditional entropic uncertainty relations for Tsallis entropies. Quantum Inf Process 17, 193 (2018). https://doi.org/10.1007/s11128-018-1955-1
