Abstract

Many sexual health programs transitioned to virtual implementation during the COVID-19 pandemic. Despite its devastation, the pandemic provided an opportunity to learn how virtual implementation of a sexual health promotion program, El Camino, compares with in-person implementation. As part of a rigorous evaluation in Maryland high schools with large Latino populations, this study assessed differences in program attendance, engagement, quality, and student ratings between virtual and in-person implementation of El Camino. Drawing on positive youth development practices, El Camino helps participants identify personal goals and learn about sexual and reproductive health and healthy relationships. This mixed-methods study incorporates data from performance measures, baseline and post-intervention participant surveys, observations, monthly implementation reports, and debriefs with facilitators to describe and compare virtual and in-person program implementation. At baseline, participants were an average of 16.2 years old; in 8th to 12th grade; 61% female; 79% Hispanic, Latino, or of Spanish origin; and 54% spoke mostly Spanish at home. Recruiting and retaining students for programming outside of school classes was challenging in both forms of implementation. However, attendance was higher during in-person implementation and in schools where the organization implementing El Camino had a strong presence before the pandemic. Findings indicate high fidelity, excellent quality ratings, and positive student perceptions of the program and facilitators in both the virtual and in-person cohorts, suggesting that the two forms of implementation were comparable and highlighting the strength of the virtual adaptation of El Camino.

The COVID-19 pandemic was linked to declines in school attendance, increases in mental health issues among adolescents, and spikes in school failure, particularly among the most vulnerable students (Cockerham et al., 2021; Centers for Disease Control and Prevention [CDC], 2022; Office for Civil Rights, 2021). During the pandemic, Latino youth and English language learners were especially likely to live in families that experienced economic, physical, and mental health hardships that could affect school engagement (Padilla & Thomson, 2021). Although it was possible to maintain or even increase knowledge gains when transitioning from in-person to virtual implementation, organizations encountered several disadvantages with virtual implementation, including the effort required to adapt curricula, time constraints, difficulty building relationships in a virtual environment, and difficulty maintaining student engagement in programming outside of regular school classes or activities (Fernandez et al., 2021; Ogletree & Bey, 2021). Existing research on adapting sexual health curricula for virtual implementation focuses primarily on how youth participants received the adapted programs and finds that reception to be generally positive (Patel et al., 2022). The current study expands on this research by examining both facilitators’ experiences delivering a sexual health curriculum to participants who were largely Latino youth and English language learners and participants’ ratings of the program and its mode of implementation.

El Camino is an 11-lesson, research-based sexual health promotion curriculum informed by positive youth development and designed for adolescents, particularly Latino youth (Child Trends, 2021a). Originally developed for in-person implementation, the program was adapted for virtual implementation in Fall 2020 in response to the pandemic. As part of a randomized controlled trial, Child Trends (program developer) partnered with Identity, Inc. (Identity; implementation partner) and the University of Maryland (UMD; independent evaluator) to implement El Camino in high schools with large Latino populations in Montgomery County, MD, from February 2021 through early 2023. The pandemic worsened educational disparities for Black, Latino, and low-income students in Montgomery County Public Schools (MCPS) (MCPS, n.d.; Lewin & Roy, 2020), and the proportion of MCPS students with limited English proficiency who received failing grades increased markedly during remote learning (St. George, 2020). Furthermore, MCPS students wanted and needed regular interaction with supportive adults, as well as more frequent and more effective communication (Lewin & Roy, 2020).

This study describes and compares virtual and in-person implementation of El Camino and, drawing on participant and facilitator experiences, explores how delivering the program during the pandemic affected both forms of implementation. It uses data from the first three cohorts: Spring 2021 (virtual, February–May 2021), Summer 2021 (in-person, July 2021), and Fall 2021 (in-person, October 2021–January 2022). Quantitative data came from participants’ baseline and post-intervention surveys, observer reports, and student attendance records; qualitative data came from facilitators’ fidelity logs, monthly reports from Identity’s program manager, and notes from discussions with facilitators.[1]

Methods

Intervention

The El Camino evaluation study was reviewed and approved by the Child Trends Institutional Review Board (FWA00005835); it began in February 2021 and is ongoing through 2023. El Camino can be implemented in both English and Spanish and consists of eleven 45-min lessons divided into three sections (or arcs) that focus on goal setting, sexual and reproductive health, and healthy relationships (see Table 1). Throughout the curriculum, youth are encouraged to identify and set goals, make informed reproductive health choices, and have healthy relationships (Child Trends, 2022). Child Trends collaborated with curriculum writers, Identity, and UMD to adapt, pilot, and revise the curriculum for virtual implementation while preserving the core components[2] of the in-person curriculum (Parekh et al., 2021) (see Table 2).

The current study took place in nine[3] high schools with large Latino populations, including Spanish-speaking students, in Montgomery County, MD. Three of the implementation sites were MCPS high schools with School-Based Health and Wellness Centers (Wellness Center schools), which Identity operates and through which it has established relationships with the school and student community. The remaining schools (five per cohort) were the result of new partnerships that Identity established for the study (non-Wellness Center schools). Each school recruited students into two groups (5–18 students per group) that were to be randomly assigned to a condition. Parental consent and student assent were obtained before students were enrolled in the study. Once enrolled students completed the baseline survey, groups were randomly assigned to receive either the intervention curriculum (El Camino) or PODER, an unrelated leadership development program of the same length (11 lessons). Randomization of the condition was altered by semester. Each curriculum was delivered by two trained facilitators, and implementation was conducted in English and Spanish (as appropriate), both virtually and in person. For the Spring 2021 and Fall 2021 cohorts, most schools implemented El Camino once a week, with some exceptions in which lessons were held twice a week. The Summer 2021 cohort was implemented over a 2-week period and was notably smaller (n = 19).[4]

Data Collection and Analysis

Quantitative Data

Student survey data were collected at baseline, before randomization, and immediately after program implementation (post-intervention). This paper focuses on the experiences of 185 students (17 classes) who received El Camino: 76 students in 8 classes in the Spring 2021 virtual cohort and 109 students in 9 classes in the Summer 2021 and Fall 2021 in-person cohorts. Surveys were self-administered and included questions on student demographics, psychological distress, and student ratings of the program and facilitators. Psychological distress was assessed using the six-item Kessler Screening Scale for Psychological Distress (K6; Kessler et al., 2002), which has been validated in youth samples and has shown excellent internal consistency reliability (Cronbach’s α = 0.89). Each K6 item is rated on a 5-point scale from 0 (none of the time) to 4 (all of the time), and the six item scores are summed to give a total score ranging from 0 to 24. Study data were collected and managed using REDCap electronic data capture tools hosted at Child Trends (Harris et al., 2009, 2019). Participants received a $10 gift card for completing each survey and for each session they attended. Survey procedures were the same for virtual and in-person implementation.
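
As a concrete illustration of the K6 scoring rule, the sketch below sums six item responses coded 0 to 4 into a total between 0 and 24. It is a minimal Python sketch, not the study's scoring procedure (data were managed in REDCap and analyzed in SAS). The function name `score_k6` is hypothetical, and rejecting incomplete responses is an assumption, because the paper does not describe how missing items were handled.

```python
from typing import Sequence

# Hypothetical helper, not the study's scoring code.
# Assumes each of the six K6 items is coded 0 ("none of the time") to 4 ("all of the time").
K6_ITEM_COUNT = 6
K6_ITEM_MIN, K6_ITEM_MAX = 0, 4


def score_k6(responses: Sequence[int]) -> int:
    """Return the K6 total score (0-24) as the sum of six item responses.

    Incomplete or out-of-range responses raise ValueError; this handling of
    missing or invalid items is an assumption made for the sketch.
    """
    if len(responses) != K6_ITEM_COUNT:
        raise ValueError(f"expected {K6_ITEM_COUNT} item responses, got {len(responses)}")
    for value in responses:
        if not K6_ITEM_MIN <= value <= K6_ITEM_MAX:
            raise ValueError(f"item response {value} is outside {K6_ITEM_MIN}-{K6_ITEM_MAX}")
    return sum(responses)


# Made-up responses for one respondent (not study data).
print(score_k6([2, 3, 1, 2, 3, 2]))  # prints 13
```

Group means such as the K6 means reported in the Results would then be ordinary averages of these per-student totals.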

Facilitators monitored attendance and tracked, as a performance measure, the percentage of students who attended at least 75% of lessons (9 of 11 sessions). Trained observers from Child Trends and UMD (n = 15) observed 29 sessions across the three cohorts. Before conducting observations, all observers completed an annual training on culturally responsive observation provided by UMD. Each implementation site was observed at least once, and observations were spread across the implementation period so that a variety of lessons were observed. Observers completed an observation form in REDCap with Likert-scale items assessing student engagement, facilitator qualities, and overall session quality; these ratings were reported as performance measures. Observers counted different actions as student engagement in virtual and in-person implementation. For virtual implementation, engagement included a student turning on their camera, sharing verbally, using the chat, or using the reaction feature; for in-person implementation, engagement included students sharing verbally, following the lesson in their workbook, participating in activities, and completing worksheets.
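
The attendance performance measure depends on rounding up: 75% of 11 lessons is 8.25 sessions, so a student must attend at least 9 sessions to count. The hypothetical Python helpers below sketch that calculation and the resulting percentage for a group. The names `sessions_required`, `met_attendance_measure`, and `percent_meeting_measure` are illustrative, not part of the study's tooling, and the attendance counts in the example are made up.

```python
import math
from fractions import Fraction
from typing import Sequence

# Hypothetical helpers, not the study's code. The threshold is stored as an exact
# fraction so the ceiling is not affected by floating-point rounding.
ATTENDANCE_THRESHOLD = Fraction(3, 4)  # "at least 75% of lessons"
TOTAL_LESSONS = 11


def sessions_required(total_lessons: int = TOTAL_LESSONS,
                      threshold: Fraction = ATTENDANCE_THRESHOLD) -> int:
    """Smallest whole number of sessions that is at least `threshold` of `total_lessons`."""
    return math.ceil(threshold * total_lessons)


def met_attendance_measure(sessions_attended: int, total_lessons: int = TOTAL_LESSONS) -> bool:
    """True if a student attended enough sessions to count toward the measure."""
    return sessions_attended >= sessions_required(total_lessons)


def percent_meeting_measure(sessions_attended: Sequence[int],
                            total_lessons: int = TOTAL_LESSONS) -> float:
    """Percentage of students in a group who met the attendance measure."""
    if not sessions_attended:
        raise ValueError("no students supplied")
    met = sum(met_attendance_measure(n, total_lessons) for n in sessions_attended)
    return 100 * met / len(sessions_attended)


print(sessions_required())                     # prints 9 (75% of 11 is 8.25)
print(percent_meeting_measure([11, 9, 8, 3]))  # prints 50.0 (made-up attendance counts)
```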

Qualitative Data

Facilitators completed fidelity forms (n = 182) via REDCap after every lesson to monitor how closely their program delivery adhered to the written program. Facilitators were trained on the importance of fidelity and how to complete the fidelity logs prior to implementation (Child Trends, 2021b). Facilitators provided open-ended feedback whenever they indicated activity adaptations or noncompletion and gave additional feedback as relevant. Child Trends conducted debriefs (n = 6) with facilitators after lessons 4, 8, and 11 during the Spring and Fall 2021 semesters. During these debriefs, facilitators reflected on the successes, challenges, and overall experiences implementing each curriculum arc, and exchanged strategies related to engagement and retention. Monthly reports (n = 13) were written by Identity’s program manager with input from facilitators and included information about activities completed and successes and challenges encountered. The reports were informed by conversations and email updates from facilitators about implementation in their respective schools.

Quantitative Analysis

We tested for statistically significant differences between virtual and in-person participants in baseline characteristics, attendance, and post-test program ratings using two-sample t-tests for continuous measures and chi-squared tests for categorical measures. We conducted significance tests of student program ratings by implementation type (virtual vs. in-person), controlling for grade level, length of time in the USA, and whether the student had ever had sex. These control variables were selected because baseline responses on them differed between implementation types. We tested for statistically significant differences in the percentage of students attending 75% or more of lessons by implementation setting and by school type (Wellness Center vs. non-Wellness Center) using the chi-squared test of independent proportions. Data from observation forms were used to calculate mean observed quality ratings by implementation type; statistical significance was not tested for these ratings because of small sample sizes. All analyses were completed using SAS Studio (version 5.4, 2019).
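
For the attendance comparisons, a chi-squared test of independent proportions can be computed as a Pearson chi-squared statistic on a 2×2 table (group by met/did not meet the 75% threshold) with 1 degree of freedom. The authors used SAS Studio; the Python function below is only an illustrative sketch that assumes no continuity correction is applied. Its name, `chi_squared_two_proportions`, and the example counts are hypothetical and are not study data.

```python
import math
from typing import Tuple


def chi_squared_two_proportions(met_a: int, n_a: int,
                                met_b: int, n_b: int) -> Tuple[float, float]:
    """Pearson chi-squared test (1 df, no continuity correction) for a 2x2 table.

    Rows are the two groups being compared (e.g., virtual vs. in-person);
    columns are "met the attendance measure" vs. "did not".
    """
    if n_a <= 0 or n_b <= 0 or not (0 <= met_a <= n_a and 0 <= met_b <= n_b):
        raise ValueError("counts must satisfy 0 <= met <= n and n > 0")
    observed = [[met_a, n_a - met_a], [met_b, n_b - met_b]]
    row_totals = [n_a, n_b]
    col_totals = [met_a + met_b, (n_a - met_a) + (n_b - met_b)]
    if 0 in col_totals:
        raise ValueError("statistic is undefined when a column total is zero")
    grand_total = n_a + n_b

    # Sum (observed - expected)^2 / expected over the four cells.
    chi2 = 0.0
    for i in range(2):
        for j in range(2):
            expected = row_totals[i] * col_totals[j] / grand_total
            chi2 += (observed[i][j] - expected) ** 2 / expected

    # With 1 degree of freedom, chi2 is the square of a standard normal z,
    # so the p-value is P(|Z| > sqrt(chi2)) = erfc(sqrt(chi2 / 2)).
    p_value = math.erfc(math.sqrt(chi2 / 2))
    return chi2, p_value


# Made-up counts for illustration only (not study data).
chi2, p = chi_squared_two_proportions(30, 50, 20, 50)
print(round(chi2, 3), round(p, 4))  # prints 4.0 0.0455
```

The Pearson approximation assumes reasonably large expected cell counts; with very small groups, an exact test may be more appropriate.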

Qualitative Analysis

Monthly reports, facilitator debrief notes, and responses to open-ended questions from the facilitator logs were transferred into Excel for thematic analysis (Maguire & Delahunt, 2017). Two trained researchers coded the data and identified commonalities across the three data sources, including attendance, incentives, student engagement, staffing/capacity, uncertainty amid the pandemic, working with school partners, changes in modality, and fidelity. Through extensive discussion, the coders agreed on the larger themes presented in this paper.

Results

Student Characteristics

The self-administered baseline survey showed that, across virtual and in-person implementation, 61.4% of participants were female and participants were 16.2 years old on average (see Table 3). Most participants (79.0%) were of Hispanic origin; 54.4% spoke mostly Spanish at home and 21.7% spoke both Spanish and English at home. Almost one quarter (22.9%) of the sample had ever had sex, and 9.7% had had sex in the last 3 months. A marginally lower percentage of in-person than virtual participants had ever had sex. Psychological distress did not differ between in-person and virtual participants; the mean K6 score was 13.4 in both groups. This mean K6 score is higher than those reported in general adolescent samples in the USA (Mewton et al., 2016; Peiper et al., 2015). In-person participants were younger than virtual participants, and a higher percentage of virtual participants reported having arrived in the USA recently (< 3 years ago). Finally, virtual participants (94.7%) were more likely than in-person participants (82.6%) to be in 9th to 11th grade.

Program Implementation Findings: Comparing Virtual and In-Person Implementation

Our analysis identified three main themes: recruitment, attendance, and student engagement. Notably, all aspects of program implementation were challenging during the pandemic for both virtual and in-person cohorts.

The Pandemic Presented Challenges to Recruitment for Both the Virtual and In-Person Cohorts

Staff at Identity described challenges in identifying and reaching potential participants in a virtual setting. Recruitment was conducted via Zoom or email, and school partners reported overall low engagement and attendance of students at prospective information sessions. Identity staff shared, “COVID-19 devastated our client community as [a] disproportionate number of partners and caregivers lost jobs and became ill or both. Students who were already challenged are struggling to succeed with remote schooling.” As a result, Identity expanded recruitment beyond the initially targeted classrooms to reach an ideal enrollment of at least 10 students per classroom and extended the amount of time scheduled for recruitment to at least three weeks.

After returning to in-person implementation for the Summer and Fall 2021 cohorts, schools limited in-person time for students, parents, and outside program providers to reduce the risk of COVID-19 exposure. Identity had limited access to activities that would normally aid recruitment, such as new student orientation, back to school nights, and regular school class visits. The program manager reported, “Due to the restrictions that have resulted from the pandemic, staff has limited ability to devote time to engaging students, building relationships, and checking in with them face-to-face.” They also shared that recruitment, including time needed to consent participants, required more time and staff than originally allocated.

Attendance Was Higher In Person and in Wellness Center Schools, Which Had Existing Relationships with the Implementation Partner

Despite facilitators’ substantial efforts to increase and maintain attendance, the percentage of students who attended 75% or more of lessons was significantly higher for in-person (45.8%) than for virtual implementation (29.0%; see Table 4). This difference was driven by higher in-person (77.6%) than virtual attendance (33.3%) in Wellness Center schools.[5] In contrast, attendance among students in non-Wellness Center schools was below 30% for both virtual (25.6%) and in-person (19.0%) implementation. Across cohorts, facilitators observed that attendance was higher when students in a group knew each other and were friends, which was more difficult to achieve in virtual implementation.

In general, student attendance was significantly higher in Wellness Center schools than other schools (59.8% vs. 21.8% attended at least nine of 11 sessions). The program manager attributed the higher attendance at Wellness Center schools to existing relationships with school partners and students: “Many of the students recruited had a personal relationship with Identity staff or were part of a class where the teacher worked closely with Identity staff and were intimately familiar with programs available.”

During virtual implementation, most El Camino lessons were held on weekdays outside of school class time. Lower virtual attendance was due in part to competing demands on students, such as providing childcare, working, or being ill. Program staff offered flexible options, such as make-up sessions for students who could not attend scheduled sessions. Nevertheless, Zoom fatigue (Peper et al., 2021) complicated program planning, delivery, and implementation. The program manager shared:

Since Identity operates as voluntary, extracurricular programming, much of what Identity does has become an additional effort and demand, in some cases. Pre-pandemic, teachers, school staff and students alike showed more enthusiasm for after school extracurricular activities. During these times, … they are ready to check out.

During in-person implementation, most El Camino sessions were held during lunch in both types of schools, which created its own challenges. Students often spent their lunch periods meeting with teachers or guidance counselors, making up assignments, taking tests, or using their free time for other purposes. Facilitators also noted that lunchtime implementation in non-Wellness Center schools was challenging because students there were less familiar with Identity.

To maintain regular attendance during both virtual and in-person implementation, facilitators sent participants reminders by text or phone call on the morning of each session. These reminders were particularly important after the school district began allowing students to opt in to in-person instruction during the Spring 2021 cohort, and they helped facilitators provide an appropriate amount of food for participants during in-person implementation.

Student Engagement Looked Different Across Implementation Modalities

Facilitators shared that engaging youth was crucial for both attendance and participation. They also noted key differences in student engagement depending on whether implementation occurred virtually or in person. In both modes, facilitators used many different methods (as described above) to stay connected with participants between lessons and throughout implementation. Facilitators stated that participants who attended lessons became more engaged over time, especially when they had friends in the group and during more interactive activities such as skits and role-playing. One facilitator noted that some participants were “apprehensive about discussing or opening up but as [the] group went on and they felt comfortable, they started to open up.” Another facilitator shared, “we are seeing the students participate more and more as the lessons got more intense. They use their voice more, participate well with one another. [I’m] excited to see how the next weeks will go as the lessons become more interactive.”

Student engagement looked different in virtual and in-person sessions, and the criteria used to measure engagement during virtual implementation were adapted to account for differences in lesson delivery. During virtual implementation, facilitators shared that building rapport and engaging students required more time, patience, effort, and creativity than in their past experiences with in-person implementation (Parekh et al., 2021). In fidelity logs, facilitators reported difficulty engaging students in discussion in virtual sessions, particularly at the beginning of the semester. One facilitator reported there was “low student participation” in Lesson 1, and another facilitator said “participation is still a little slow and minimal” for Lesson 2. Facilitators noted that students used Zoom’s chat and reaction features more than their cameras or microphones. Facilitators actively sought ways to better engage students in the first few lessons, including encouraging (but not requiring) students to turn their cameras on, asking students to elaborate on their feedback in the chat, and reacting to other students’ responses to create more space for participation. Facilitators noted that student engagement in virtual sessions improved as the program went on, perhaps because students grew more comfortable with their classmates or with the program itself. For example, one facilitator wrote in their fidelity log for Lesson 7: “The kids were very engaged in this lesson. It was challenging to have this important conversation over Zoom because the kids are around their family sometimes but given the challenges people participated well.”

When implementation returned to in person, facilitators reported that students seemed more engaged, but noted that this impression could partly reflect how much easier it is to assess engagement when everyone is in the same room. Facilitators shared that in-person participants were quicker to respond during group discussions, appeared interested, asked questions, and seemed excited to be back in person. The program manager reported, “Program facilitators noted a stark improvement from virtual delivery to this in-person group in participation… Besides facilitators observing increased participation, there was a noticeable increase in use of the question drop-box use from participants.” Although attendance at lunchtime sessions was low at times, the students who attended were highly engaged. The program manager shared, “By all accounts from facilitators, engagement and participation by students is high. Participants attending the groups are interested, interactive and responsive to activities and questions during session.”

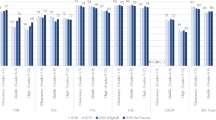

Despite differences in how students engaged with the curriculum, observers’ ratings of overall student engagement were similar across cohorts. On a scale from 1 (less than 25% of participants) to 5 (75–100% of participants), mean ratings indicated that most students actively participated in discussions and activities (4.0 for virtual vs. 4.6 for in-person) and appeared interested (4.0 for virtual vs. 4.6 for in-person). Observers also rated facilitators’ enthusiasm, rapport, and communication with participants similarly, from above average to near excellent, regardless of implementation setting (see Table 5). Additionally, although student participation was challenging in virtual settings, students in both groups rated the program and facilitators highly at post-test. The majority of virtual (88.9%; see Table 6) and in-person (92.1%) students rated the program as excellent or very good. More than nine in 10 students liked the facilitators (96.6% virtual and 96.0% in-person). Most virtual (68.8%) and in-person (80.0%) students also reported that they learned a lot. A higher percentage of in-person (89.3%) than virtual participants (77.8%) reported that discussions helped them learn; however, this difference was only marginally significant (p = 0.065) and did not reach statistical significance at the 0.05 level. Although student engagement was challenging in both virtual and in-person implementation, student experience and program quality were comparable across modalities.

Discussion

This paper expands previous research by comparing the successes and challenges of in-person and virtual implementation of the El Camino sexual health promotion program. Using data from student surveys, facilitator and observer logs, and facilitator and program reports, we found higher attendance during in-person than virtual programming, but similarly high observer quality ratings and student ratings of the program and facilitators in both implementation modes. We also identified challenges with recruitment and engagement in both implementation settings.

Maintaining high recruitment and attendance can be challenging for voluntary student programming held outside of regular classes (Afterschool Alliance, 2009). Overall, attendance was lower in virtual than in-person classes, consistent with other studies (Goldstein et al., 2020; Meeter et al., 2020; Weijers et al., 2022). However, the implementation team noted pandemic-specific challenges with recruitment and attendance in both virtual and in-person implementation. The high levels of psychological distress in our sample echoed national reports on student mental health during the pandemic (CDC, 2022) and may have reduced student engagement in programming. For in-person programming, program staff had limited access to schools for recruitment, and many schools limited after-school activities. For virtual programming, students also faced Zoom fatigue (Peper et al., 2021) after participating in a full day of virtual school.

Previous research has highlighted relationship-building between facilitators and students as key to student recruitment, attendance, and engagement (Luo et al., 2017). The differences in recruitment and attendance between schools where the facilitators already had a presence (Wellness Center schools) and other schools were striking, and Wellness Center schools had especially high attendance relative to other schools during in-person implementation. This finding supports the value of having a physical presence in the school beyond the specific program being delivered.

While facilitators described difficulty engaging students virtually in early lessons, high student and observer ratings of the program and facilitators suggest that a well-developed virtual adaptation of a sexual health curriculum can be feasible and high quality.[6] Recent research has described best practices for transitioning to virtual implementation, such as using interactive activities; using a platform that allows participants to raise their hands virtually; having two facilitators, one monitoring platform logistics and the other focusing on program content and engagement; holding live sessions to encourage interaction between students and facilitators; and offering a variety of ways for students to engage in virtual programming (such as the chat or reactions) to allow more voices in group discussions (Caprara & Caprara, 2022; Domina et al., 2021; Ogletree & Bey, 2021; Parekh et al., 2021; Sweetman, 2020). Beyond the pandemic, virtual programming could reach students in states that do not offer such programs or students who are unable to attend programs locally. Some of these alternative ways of participating may also benefit in-person programming, especially for students who are introverted or uncomfortable speaking in class (Callahan, 2021; Tuovinen et al., 2020; Yu, 2021).

High observer ratings of student engagement in virtual programming may reflect differences in how observers assessed engagement across the two implementation modes. Facilitators and observers noted that most students kept their cameras off during virtual implementation, possibly because they shared space with family members; this practice could reduce the ability to assess student-facilitator and student-peer engagement, which is key in in-person implementation (Parekh et al., 2021). Program staff specifically reported higher enthusiasm and greater engagement of students and facilitators in in-person programming, which aligns with other research reporting lower engagement in virtual programming (Domina et al., 2021).

Limitations

There were several limitations to this research, due in part to changes in school policies in response to the pandemic. During the Spring 2021 cohort, students were given the option to return to in-person learning midway through implementation (MCPS, n.d.); many students returned to school in person, which affected program attendance. For the Fall 2021 cohort, a surge in COVID-19 cases in December 2021 forced all extracurricular activities online, including El Camino, so attendance was lower than expected for the last 2–3 lessons. The team also had difficulty gathering student input through focus groups about their experiences participating in the program during the pandemic, so student input comes only from post-test surveys. Because we analyzed only participants who received the El Camino curriculum during the pandemic, our sample size is relatively small, which limits our ability to detect differences. Finally, we did not build a comparison of implementation modality into the evaluation study design and instead conducted ad hoc analyses of data collected during the pandemic. However, these limitations were offset by the rich information available to compare virtual and in-person implementation.

Conclusion

Conducting an evaluation during the COVID-19 pandemic allowed us to compare virtual and in-person implementation. While attendance was lower in virtual than in-person implementation, we found high fidelity and positive observer and student ratings of the program and facilitators in both implementation modes, highlighting the strength of both in-person and virtual implementation. In future research, we plan to compare the impacts of virtual and in-person implementation of El Camino. Additional evaluations should assess the efficacy of virtual sexual health program implementation after the pandemic, which could ultimately expand the types of programming available to youth living in low-resource areas.

Notes

1. Facilitators are the people who implement the El Camino program. Facilitators either work for or are contracted by Identity, Inc., our implementation partner.

2. Core components of the program are summarized in Child Trends (2021a, 2021b); see El Camino: A goal-setting sexual health promotion curriculum. Key background information for implementing El Camino. https://www.childtrends.org/wp-content/uploads/2021/04/ElCaminoFrontMatterAppendices_ChildTrends_June2021.pdf.

3. Eight schools (3 Wellness Center, 5 non-Wellness Center) participated in Spring 2021; one school (Wellness Center) participated in Summer 2021; and 8 schools (3 Wellness Center, 5 non-Wellness Center) participated in Fall 2021. One of the non-Wellness Center schools that participated in the Spring 2021 cohort was replaced with a different school in Fall 2021. Thus, a total of nine schools were engaged throughout the study period, with a maximum of eight schools represented in each cohort.

4. Because the shorter implementation period of the Summer 2021 cohort could have affected curriculum experience and quality, we conducted a sensitivity analysis excluding the Summer 2021 cohort. Findings were remarkably consistent.

5. Sensitivity analyses found no significant demographic or sexual behavior differences between virtual and in-person attendees in Wellness Center schools.

6. Based on our lessons learned, we have published strategies to help facilitators engage students virtually. This resource is available in Child Trends (2021b), El Camino: A goal-setting sexual health promotion curriculum. Key background information for implementing El Camino. https://www.childtrends.org/wp-content/uploads/2021/04/ElCaminoFrontMatterAppendices_ChildTrends_June2021.pdf

References

Afterschool Alliance. (2009). Afterschool: The challenges of recruiting and retaining older youth (Issue Brief No. 37). Retrieved May 5, 2022, from http://afterschoolalliance.org/documents/issue_briefs/RecruitmentandRetention%20Brief%2037%20FINAL.pdf

Callahan, K. (2021). Valuing and supporting introverted students in the virtual design classroom. International Journal of Art & Design Education, 40(4), 714–722. https://doi.org/10.1111/jade.12383

Caprara, L., & Caprara, C. (2022). Effects of virtual learning environments: A scoping review of literature. Education and Information Technologies, 27(3), 3683–3722. https://doi.org/10.1007/s10639-021-10768-w

Centers for Disease Control and Prevention. (2022). Adolescent behaviors and experiences survey – United States, January-June 2021. MMWR Supplement, 71(3). Retrieved May 5, 2022, from https://www.cdc.gov/mmwr/volumes/71/su/pdfs/su7103a1-a5-h.pdf

Child Trends. (2021a). El Camino: A goal-setting sexual health promotion program. Retrieved May 5, 2022, from https://www.childtrends.org/publications/el-camino-a-goal-setting-sexual-health-promotion-program

Child Trends. (2021b). El Camino: A goal-setting sexual health promotion curriculum—Key background information for implementing El Camino. Retrieved May 5, 2022, from https://www.childtrends.org/wp-content/uploads/2021/04/ElCaminoFrontMatterAppendices_ChildTrends_June2021.pdf

Child Trends. (2022). El Camino: Helping teens set life goals and promote sexual health. Retrieved May 5, 2022, from https://www.childtrends.org/publications/el-camino-helping-teens-set-life-goals-and-promote-sexual-health

Cockerham, D., Lin, L., Ndolo, S., & Schwartz, M. (2021). Voices of the students: Adolescent well-being and social interactions during the emergent shift to online learning environments. Education and Information Technologies, 26(6), 7523–7541. https://doi.org/10.1007/s10639-021-10601-4

Domina, T., Renzulli, L., Murray, B., Garza, A. N., & Perez, L. (2021). Remote or removed: Predicting successful engagement with online learning during COVID-19. Socius, 7. https://doi.org/10.1177/2378023120988200

Fernandez, C. S. P., Green, M. A., Noble, C. C., Brandert, K., Donnald, K., Walker, M. R., Henry, E., Rosenberg, A., Dave, G., & Corbie-Smith, G. (2021). Training “pivots” from the pandemic: Lessons learned transitioning from in-person to virtual synchronous training in the clinical scholars leadership program. Journal of Healthcare Leadership, 13, 63–75. https://doi.org/10.2147/jhl.s282881

Goldstein, D., Popescu, A., & Hannah-Jones, N. (2020). As school moves online, many students stay logged out. The New York Times. https://www.nytimes.com/2020/04/06/us/coronavirus-schools-attendance-absent.html

Harris, P. A., Taylor, R., Thielke, R., Payne, J., Gonzalez, N., & Conde, J. G. (2009). Research electronic data capture (REDCap)—A metadata-driven methodology and workflow process for providing translational research informatics support. Journal of Biomedical Informatics, 42(2), 377–381. https://doi.org/10.1016/j.jbi.2008.08.010

Harris, P. A., Taylor, R., Minor, B. L., Elliott, V., Fernandez, M., O’Neal, L., McLeod, L., Delacqua, G., Delacqua, F., Kirby, J., Duda, S. N., & REDCap Consortium. (2019). The REDCap consortium: Building an international community of software platform partners. Journal of Biomedical Informatics, 95, 103208. https://doi.org/10.1016/j.jbi.2019.103208

Kessler, R. C., Andrews, G., Colpe, L. J., Hiripi, E., Mroczek, D. K., Normand, S. L., Walters, E. E., & Zaslavsky, A. M. (2002). Short screening scales to monitor population prevalences and trends in non-specific psychological distress. Psychological Medicine, 32(6), 959–976. https://doi.org/10.1017/s0033291702006074

Lewin, A., & Roy, K. (2020). Securing educational equity: Learning from the lived experiences of Black, Latino, and low-income families during the COVID-19 pandemic and beyond. The Black and Brown Coalition for Educational Equity and Excellence and University of Maryland School of Public Health, Department of Family Science. Retrieved May 5, 2022, from https://identity-youth.org/wp-content/uploads/2020/10/FINAL-Securing-Educational-Equity-Report-Black-and-Brown-Coalition-10-6-20-.pdf

Luo, N., Zhang, M., & Qi, D. (2017). Effects of different interactions on students’ sense of community in e-learning environment. Computers & Education, 115, 153–160. https://doi.org/10.1016/J.COMPEDU.2017.08.006

Maguire, M., & Delahunt, B. (2017). Doing a thematic analysis: A practical, step-by-step guide for learning and teaching scholars. All Ireland Journal of Teaching and Learning in Higher Education (AISHE-J), 9(3), 3351–3354. http://ojs.aishe.org/index.php/aishe-j/article/view/335

Meeter, M., Bele, T., den Hartogh, C., Bakker, T., de Vries, R. E., & Plak, S. (2020). College students’ motivation and study results after COVID-19 stay-at-home orders. PsyArXiv. https://doi.org/10.31234/osf.io/kn6v9

Mewton, L., Kessler, R. C., Slade, T., Hobbs, M. J., Brownhill, L., Birrell, L., Tonks, Z., Teesson, M., Newton, N., Chapman, C., Allsop, S., Hides, L., McBride, N., & Andrews, G. (2016). The psychometric properties of the Kessler Psychological Distress Scale (K6) in a general population sample of adolescents. Psychological Assessment, 28(10), 1232–1242. https://doi.org/10.1037/pas0000239

Montgomery County Public Schools. (n.d.). MCPS spring 2021: Recovery of education. Retrieved June 2, 2022, from https://www.montgomeryschoolsmd.org/reopening/#INSTRUCTIONAL

Office for Civil Rights, U.S. Department of Education. (2021). Education in a pandemic: The disparate impacts of COVID-19 on America’s students. Retrieved May 5, 2022, from https://www2.ed.gov/about/offices/list/ocr/docs/20210608-impacts-of-covid19.pdf

Ogletree, S. L., & Bey, Y. (2021). Academy for future teachers: Transitioning to virtual delivery. School-University Partnerships, 14(3), 191–215. https://www.researchgate.net/publication/359270183

Padilla, C. M., & Thomson, D. (2021). More than one in four Latino and Black households with children are experiencing three or more hardships during COVID-19. Child Trends. Retrieved May 5, 2022, from https://www.childtrends.org/publications/more-than-one-in-four-latino-and-black-households-with-children-are-experiencing-three-or-more-hardships-during-covid-19

Parekh, J., McClay, A., Faccio, B., Gates, C., Garcia, J., Coryell, A., & Manlove, J. (2021). Adapting an in-person sexual health program for a virtual setting. Child Trends. https://doi.org/10.56417/5085e1747r

Patel, H., Masten, K., Chambers, R., Edwards, A., Fleszar, L., Harvey, B., Dunn, J., Nelson, D., Goldtooth, T., James, J., Huskon, R., Tsosie, A., Richards, J., & Tingey, L. (2022). Feasibility and acceptability of virtual implementation of a sexual reproductive health teen pregnancy prevention program for Native youth. American Indian and Alaska Native Mental Health Research, 29(2), 63–84. https://doi.org/10.5820/aian.2902.2022.63

Peiper, N., Clayton, R., Wilson, R., & Illback, R. (2015). The performance of the K6 Scale in a large school sample. Psychological Assessment, 27(1), 228–238. https://doi.org/10.1037/pas0000025

Peper, E., Wilson, V., Martin, M., Rosegard, E., & Harvey, R. (2021). Avoid Zoom fatigue, be present and learn. NeuroRegulation, 8(1), 47–56. https://doi.org/10.15540/NR.8.1.47

St. George, D. (2020, December 3). Failing grades double and triple—some rising sixfold—amid pandemic learning. The Washington Post. https://www.washingtonpost.com/local/education/montgomery-county-failing-grades/2020/12/03/913affd0-34fb-11eb-8d38-6aea1adb3839_story.html

Sweetman, D. S. (2020). Making virtual learning engaging and interactive. FASEB BioAdvances, 3(1), 11–19. https://doi.org/10.1096/fba.2020-00084

Tuovinen, S., Tang, X., & Salmela-Aro, K. (2020). Introversion and social engagement: Scale validation, their interaction, and positive association with self-esteem. Frontiers in Psychology, 11, 590748. https://doi.org/10.3389/fpsyg.2020.590748

Weijers, R. J., Ganushchak, L., Ouwehand, K., & de Koning, B. B. (2022). “I’ll be there”: Improving online class attendance with a commitment nudge during COVID-19. Basic and Applied Social Psychology, 44(1), 12–24. https://doi.org/10.1080/01973533.2021.2023534

Yu, Z. (2021). The effects of gender, educational level, and personality on online learning outcomes during the COVID-19 pandemic. International Journal of Educational Technology in Higher Education, 18, Article 14. https://doi.org/10.1186/s41239-021-00252-3

Acknowledgements

The data presented in this study are not publicly available due to privacy restrictions. The authors would like to thank Kristin A. Moore at Child Trends for her review of this article. We would like to acknowledge the incredible efforts and work of the facilitators at Identity, Inc., who implemented the program during the COVID-19 pandemic, including Olivia Delaplaine, Lesly Alvarado, Jennifer Gonzalez, and Addison Coryell, among others. We would also like to thank Amy Lewin, Ph.D., Martha Yumiseva, Sahra Ibrahimi, and Salwa Shan from the University of Maryland for their work as the independent evaluators.

Funding

This research was made possible with grant funding from the HHS Office of Population Affairs, Phase 2 Rigorous Evaluation of Promising TPP Interventions (TPP20 Tier 2 Phase 2) – 2020–2023 (FAIN# TP2AH000077). Contents are solely the responsibility of the authors and do not necessarily represent the official views of the Department of Health and Human Services or the Office of Population Affairs.

Ethics declarations

Ethics Approval

All procedures performed in studies involving human participants were in accordance with the ethical standards of the institutional and/or national research committee and the 1964 Declaration of Helsinki and its later amendments or comparable ethical standards. This study was reviewed and approved by the Child Trends Institutional Review Board (FWA00005835).

Consent to Participate

Informed consent was obtained from participants’ parents or, for participants aged 18 or older, from the participants themselves. Written informed assent was obtained from all participants in the study.

Conflict of Interest

Bianca Faccio and Jennifer Manlove were involved in the development of the El Camino program. There are no other conflicts of interest to report.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Faccio, B., McClay, A., McConnell, K. et al. Comparing Virtual and In-Person Implementation of a School-Based Sexual Health Promotion Program in High Schools with Large Latino Populations. Prev Sci 24 (Suppl 2), 251–261 (2023). https://doi.org/10.1007/s11121-023-01526-0

DOI: https://doi.org/10.1007/s11121-023-01526-0