Abstract

Uncertainty often plays an important role in dynamic flow problems. In this paper, we consider both a stationary and a dynamic flow model with uncertain boundary data on networks. We introduce two different ways to compute the probability for random boundary data to be feasible and discuss their advantages and disadvantages. In this context, feasible means that the flow corresponding to the random boundary data meets some box constraints at the network junctions. The first method is the spheric radial decomposition and the second method is a kernel density estimation. In both settings, we consider certain optimization problems and we compute derivatives of the probabilistic constraint using the kernel density estimator. Moreover, we derive necessary optimality conditions for an approximated problem for the stationary and the dynamic case. Throughout the paper, we use numerical examples to illustrate our results by comparing them with a classical Monte Carlo approach to compute the desired probability.


1 Introduction and motivation

In this paper, we present a method for dealing with uncertain loads in the context of flow networks. The modeling and simulation of flow networks, such as gas flow, water flow and the diffusion of harmful substances inside flow networks, are becoming more and more important. We therefore analyze the gas flow through a pipeline network in a stationary setting and the contamination of water in a dynamic setting. The aim of this paper is to solve probabilistic constrained optimization problems and to derive necessary optimality conditions for them in the context of flow networks.

Gas transport and, more generally, flow problems have been the subject of many studies. In general, such a flow problem is modeled as a system of hyperbolic balance laws based on e.g. the isothermal Euler equations (for gas flow, see e.g. Banda et al. 2006a, b; Gotzes et al. 2016; Gugat et al. 2015, 2018; Gugat and Schuster 2018) or the shallow water equations (for water flow, see e.g. Bastin et al. 2009; Coron 2002; Gugat and Leugering 2003; Gugat et al. 2004; Leugering and Schmidt 2002). The model in the stationary setting in this paper is based on the stationary isothermal Euler equations for modeling the gas flow through a pipeline network. Domschke et al. (2017) and Koch et al. (2015) give a comprehensive overview of gas transport, existing models, and network elements. The existence of a unique stationary state is shown in Gugat et al. (2015), the stationary states for real gas are analyzed in Gugat et al. (2018) and Gugat and Wintergerst (2018). The existence of solutions for the dynamic case has been analyzed in Gugat and Ulbrich (2017, 2018). Optimal control problems in gas networks have been studied e.g. in Bermúdez et al. (2015), Colombo et al. (2009) and Gugat and Herty (2009). For the problem of contamination of water by harmful substances, we use a linear scalar hyperbolic balance law. This has also been analyzed in Fügenschuh et al. (2007) and Gugat (2012).

An important aspect of this paper is that we consider random boundary data. In the context of gas transport, that means that the loads (i.e., the gas demand) are random. In the context of water contamination, that means that the contaminant injection is random. This leads to optimization problems with probabilistic constraints (see e.g. Prékopa 1995; Shapiro et al. 2009). We also assume box constraints for the solution of the balance law at the network nodes and we define the set of feasible loads M as all loads for which the solution of the balance law meets these box constraints. Our aim is to compute the probability for a random load vector to be feasible, i.e., we want to compute the probability for a random vector to be in a certain set M. We therefore identify the load vector with some random vector \(\xi\) on an appropriate probability space \((\varOmega , {\mathcal {A}}, {\mathbb {P}})\) and we want to compute the probability

\[ {\mathbb {P}}\left( \{ \omega \in \varOmega \, \vert \, \xi (\omega ) \in M \} \right) , \]

which we write as

\[ {\mathbb {P}}( \xi \in M ). \]
A direct approach to compute this probability is to integrate the probability density function of the balance law solution over the box constraints. However, this density function may not be known. We use a kernel density estimator (see e.g. Nadaraya 1965; Parzen 1962; Scott and Terrell 1992) to obtain an approximation of this function. Duller (2018) and Härdle et al. (2004) give a good overview of the area of nonparametric statistics. The authors in Gotzes et al. (2016) and Gugat and Schuster (2018) use the spheric radial decomposition (see e.g. van Ackooij et al. 2018; van Ackooij and Henrion 2014; Farshbaf-Shaker et al. 2018; Gotzes et al. 2016; Gradón et al. 2016) for a similar gas flow problem to compute the desired probability.

Theorem 1

(Spheric radial decomposition, see Gotzes et al. 2016, Theorem 2) Let \(\xi \sim {\mathcal {N}}(0, R)\) be an n-dimensional Gaussian random vector with zero mean and positive definite correlation matrix R. Then, for any Borel measurable subset \(M \subseteq {\mathbb {R}}^n\) it holds that

\[ {\mathbb {P}}(\xi \in M) = \int _{{\mathbb {S}}^{n-1}} \mu _\chi \left( \{ r \ge 0 \, \vert \, r L v \in M \} \right) \, d\mu _\eta (v), \]
where \({\mathbb {S}}^{n-1}\) is the \((n-1)\)-dimensional sphere in \({\mathbb {R}}^n\), \(\mu _\eta\) is the uniform distribution on \({\mathbb {S}}^{n-1}\), \(\mu _\chi\) denotes the \(\chi\)-distribution with n degrees of freedom and L is such that \(R = L L^\top\) (e.g., Cholesky decomposition).

This result can be applied to general Gaussian distributions easily: For \(\xi \sim {\mathcal {N}}(\mu , \varSigma )\), set \(\xi ^* = D^{-1} (\xi - \mu ) \sim {\mathcal {N}}(0,R)\) with \(D = {\text {diag}}\left( \sqrt{ \varSigma _{ii} } \right)\) and \(R = D^{-1} \varSigma D^{-1}\). Then it follows \({\mathbb {P}}(\xi \in M) = {\mathbb {P}}(\xi ^* \in D^{-1} (M - \mu ))\). An algorithmic formulation of the spheric radial decomposition is given in Gotzes et al. (2016) and Gugat and Schuster (2018).
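To make the decomposition concrete, the following Python sketch estimates \({\mathbb {P}}(\xi \in M)\) for a general Gaussian vector when M is an axis-aligned box, so that the radial set \(\{ r \ge 0 \, \vert \, \mu + r L v \in M \}\) is a single interval. It is only an illustration under stated assumptions: the function name `srd_box_probability`, the box-shaped set M and all numerical values are hypothetical, and the Cholesky factor of \(\varSigma\) is used directly together with the shift by \(\mu\), which is equivalent to the normalization described above.

```python
import numpy as np
from scipy.stats import chi, norm

def srd_box_probability(mu, Sigma, lower, upper, n_dirs=20000, seed=0):
    """Estimate P(xi in [lower, upper]) for xi ~ N(mu, Sigma) by SRD."""
    rng = np.random.default_rng(seed)
    n = len(mu)
    L = np.linalg.cholesky(Sigma)                 # Sigma = L L^T
    # Uniform directions on S^{n-1}: normalised standard Gaussian vectors.
    v = rng.standard_normal((n_dirs, n))
    v /= np.linalg.norm(v, axis=1, keepdims=True)
    d = v @ L.T                                   # ray directions L v
    # For each coordinate, find the r-range with lower <= mu + r*d <= upper.
    with np.errstate(divide="ignore", invalid="ignore"):
        t1 = (lower - mu) / d
        t2 = (upper - mu) / d
    r_in = np.maximum(np.max(np.minimum(t1, t2), axis=1), 0.0)
    r_out = np.maximum(np.min(np.maximum(t1, t2), axis=1), r_in)
    # chi-measure of the radial interval, averaged over all directions.
    return np.mean(chi.cdf(r_out, df=n) - chi.cdf(r_in, df=n))

# Hypothetical test case with independent components, where the exact
# probability is a product of one-dimensional Gaussian probabilities.
mu = np.array([1.0, 2.0])
Sigma = np.diag([1.0, 4.0])
lower, upper = np.array([0.0, 0.0]), np.array([3.0, 5.0])
std = np.sqrt(np.diag(Sigma))
exact = np.prod(norm.cdf((upper - mu) / std) - norm.cdf((lower - mu) / std))
estimate = srd_box_probability(mu, Sigma, lower, upper)
```

For sets M whose radial sections are unions of several intervals, the last line of the function would sum the \(\chi\)-measures of all intervals instead of a single difference.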

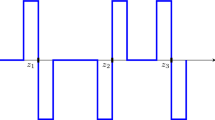

From now on we use SRD instead of spheric radial decomposition and KDE instead of kernel density estimator. The difference between the two methods is shown in Fig. 1.

An advantage of the SRD is that we exploit almost all information we can get from the model. Thus the only numerical error occurs while approximating the spherical integral. The big disadvantage of the SRD is that we need to know an analytical solution of our model which we cannot always guarantee. Therefore we introduce a KDE, which estimates the probability density function of a random variable by using a sampling set of the variable.

Definition 1

(Kernel density estimator, see Gramacki (2018)) Let y be an n-dimensional real-valued random variable with an absolutely continuous distribution and probability density function \(\varrho\) with respect to the Lebesgue measure. Moreover, let \({\mathcal {Y}} = \{ y^{S,1}, \ldots , y^{S,N} \}\) be an independent and identically distributed sample of y. Then, the kernel density estimator \(\varrho _N:{\mathbb {R}}^n \rightarrow {\mathbb {R}}_{\ge 0}\) is defined as

\[ \varrho _N(z) = \frac{1}{N \det (H)^{1/2}} \sum _{i=1}^N K\left( H^{-1/2} ( z - y^{S,i} ) \right) \]

with a symmetric positive definite bandwidth matrix \(H \in {\mathbb {R}}^{n \times n}\) and an n-variate density \(K:{\mathbb {R}}^n \rightarrow {\mathbb {R}}_{\ge 0}\) called kernel.
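As a small illustration of Definition 1, the following Python sketch evaluates a kernel density estimator with a full bandwidth matrix H at a single point. It assumes the standard Gaussian kernel and the usual normalization \(\frac{1}{N \det (H)^{1/2}} \sum _i K(H^{-1/2}(z - y^{S,i}))\); the function name `gaussian_kde_density` and the sample data are hypothetical.

```python
import numpy as np

def gaussian_kde_density(z, samples, H):
    """Evaluate a Gaussian-kernel KDE with bandwidth matrix H at the point z."""
    samples = np.atleast_2d(samples)              # shape (N, n)
    N, n = samples.shape
    C = np.linalg.cholesky(H)                     # H = C C^T
    # For the Gaussian kernel only |H^{-1/2} u|^2 = u^T H^{-1} u = |C^{-1} u|^2 matters.
    u = np.linalg.solve(C, (np.asarray(z) - samples).T)   # shape (n, N)
    sq_norm = np.sum(u**2, axis=0)
    kernel_vals = np.exp(-0.5 * sq_norm) / (2.0 * np.pi) ** (n / 2)
    return kernel_vals.sum() / (N * np.sqrt(np.linalg.det(H)))

# Hypothetical sample and bandwidth matrix.
rng = np.random.default_rng(0)
Y = rng.standard_normal((50, 2))
H = np.array([[0.5, 0.1], [0.1, 0.3]])
density_at_z = gaussian_kde_density(np.array([0.2, -0.1]), Y, H)
```

The estimator is the average of N Gaussian densities with covariance H centered at the sample points, which is what the sketch computes.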

We apply the kernel density estimation to the balance law solution. If an analytical solution of the model is not known, we can compute the solution numerically and use a sampling set of approximated solutions. Then the desired probability can be computed by integrating the kernel density estimator over the box constraints. This introduces an approximation error, but it allows us to work analytically with numerical solutions of our model. A KDE approach was used in Caillau et al. (2018) for solving chance constrained optimal control problems with ODE constraints. However, it has been used neither in optimal control problems with PDE constraints and random boundary data nor in the context of continuous optimization with hyperbolic balance laws on networks. Throughout this paper, we illustrate the idea of the KDE in both the stationary and the dynamic case, such that we can state necessary optimality conditions for optimization problems with probabilistic constraints. This paper is structured as follows:

In the next section, we consider stationary gas networks, similar to Gotzes et al. (2016) and Gugat and Schuster (2018). We first consider a simple model on a graph with only one edge to explain the ideas of the SRD and the KDE. We compare both results in a numerical computation with a classical Monte Carlo method (all numerical tests were performed with MATLAB\(^{\text{\textregistered}}\), version 2015a). Next we use both methods, the SRD and the KDE, to solve a model on a general tree-structured graph and again we compare both methods with a classical Monte Carlo method. Finally, we state necessary optimality conditions for probabilistic constrained optimization problems related to our stationary model. At the end of this section, we solve a probabilistic constrained optimization problem on a realistic gas network setting.

In Sect. 3, we consider a dynamic water network, in which the contaminant injection occurs at the boundaries. We consider a general linear hyperbolic balance law, which models the diffusion of harmful substances on a linear graph, in order to discuss probabilistic constraints in the time-dependent case and whether the SRD can be extended to this case. We also use the KDE for this model. Finally, we state necessary optimality conditions for probabilistic constrained optimization problems related to our dynamic model and solve a probabilistic constrained optimization problem for a realistic water contamination network setting.

2 Gas networks in a stationary setting

In this section, we consider stationary states in gas networks. As mentioned before, the model here is similar to the model in Gotzes et al. (2016) and Gugat and Schuster (2018). The main difference is that we fix an inlet pressure for our model, which the authors in Gotzes et al. (2016) and Gugat and Schuster (2018) did not. The network is described by a connected, directed, tree-structured graph \(G = ({\mathcal {V}}, {\mathcal {E}})\) (i.e., the graph does not contain cycles) with the vertex set \({\mathcal {V}} = \{v_0, \ldots , v_n \}\) and the set of edges \({\mathcal {E}} = \{e_1, \ldots ,e_n \} \subseteq {\mathcal {V}} \times {\mathcal {V}}\). We assume that the graph has only one inflow node \(v_0\) and that the other nodes are outflow nodes. Let node \(v_0\) be the root of the graph, with all edges oriented away from the root. The graph is numbered from the root \(v_0\) using breadth-first search or depth-first search. Every edge \(e \in {\mathcal {E}}\) represents a pipe with positive length \(L^e\). For \(x \in [0,L^e]\) we consider the stationary semi-linear isothermal Euler equations for horizontal pipes and ideal gases

With \(p^e = p\left| _{e}\right.\) we represent the restriction of the pressure defined over the network to a single edge e and \(p^e_x\) resp. \(q^e_x\) is the derivative of \(p^e\) resp. \(q^e\) w.r.t. x. Here, \(q^e\) is the flow along edge e and \(c^e, \lambda ^e, D^e \in {\mathbb {R}}_{>0}\) denote the sound speed, the friction coefficient and the pipe diameter. The parameters \(R_S\) and T denote the specific gas constant of natural gas and the (constant) temperature. Note that \(q^e\) is constant on every edge. With \(q^e \ge 0\) we denote that gas flows along the orientation of edge e and with \(q^e \le 0\) we denote that gas flows against the orientation of edge e.

We consider conservation of mass for the flow at the nodes (cf. Kirchhoff’s first law). Let \({\mathcal {E}}_+(v)\) resp. \({\mathcal {E}}_-(v)\) be the set of all outgoing resp. ingoing edges at node \(v \in {\mathcal {V}}\). Let \(b^v \in {\mathbb {R}}\) be the load at node \(v \in {\mathcal {V}}\). With \(b^v \ge 0\) we denote that gas leaves the network at node v (exit node) and with \(b^v \le 0\) that gas enters the network at node v (entry node). The equation for mass conservation for every node \(v \in {\mathcal {V}}\) is given by

Let \(p_i\) denote the pressure at the node \(v_i\) for \(i = 0, \ldots , n\). We assume continuity in pressure at every node, i.e., for all \(v_i \in {\mathcal {V}}\) it holds

Therefore the pressure \(p_i\) is defined by the pressure \(p^e(L^e)\) resp. \(p^e(0)\) with ingoing resp. outgoing edge e at node \(v_i\). We consider (positive) box constraints for the pressures at all outflow nodes \(v_1, \ldots , v_n\), s.t.

In addition, we impose pressure \(p_0\) at node \(v_0\). So for the full graph, we consider the following model:

Our aim is now to find loads \(b = (b^v)_{v \in {\mathcal {V}} \backslash \{ v_0\}} \in {\mathbb {R}}^n_{\ge 0}\) (corresponding to the outflow nodes \(v_1, \ldots , v_n\)), s.t. the model (6) has a solution. We do not consider the load \(b^{v_0}\) at the inflow node \(v_0\), because Eq. (3) provides the relation \(b^{v_0} = - \sum _{v \in {\mathcal {V}} \backslash \{ v_0\}} b^v\). We define

as the set of feasible loads. To go one step further we assume that b is random. This is motivated by reality. Because of the liberalization of the gas market,\(^{1}\) the gas network is independent of the consumers and the gas companies. That means a gas network company must guarantee that the required gas can be transported through the network, but the company does not know the exact amount of gas a priori, so it can be seen as random. We assume

\[ b \sim {\mathcal {N}}(\mu , \varSigma ) \]

with mean value \(\mu \in {\mathbb {R}}_+^n\) and positive definite covariance matrix \(\varSigma \in {\mathbb {R}}^{n \times n}\) on an appropriate probability space \((\varOmega , {\mathcal {A}}, {\mathbb {P}})\). Motivated by the application, we assume \(\mu\) and \(\varSigma\) are chosen s.t. the probability that b takes positive values is almost 1. This assumption would not be needed if the gas demand were modeled e.g. by a truncated Gaussian distribution, but we focus on the non-truncated case in this work. In Gotzes et al. (2016) and in Koch et al. (2015), Chapter 13, the authors explain why a multivariate Gaussian distribution is a good choice for the random load vector. So we want to know the probability that for a Gaussian distributed load vector b, the model (6) has a solution, i.e.,

\[ {\mathbb {P}}( b \in M ). \]
In the next subsections we will use two different approaches for computing this probability. First, to explain the ideas of our approaches we consider a graph with only one edge and later we use the introduced tree-structured graph with one input.

2.1 Uncertain load on a single edge

As mentioned before, we will give two different ways to compute the probability for a random load value to be feasible. Here, we consider a single edge as graph. For the boundary conditions \(p(0) = p_0\) and \(q(L) = b\) model (2) has an analytical solution. Therefore our problem simplifies to verifying the following inequalities:

In fact, that means a random value \(b \in {\mathbb {R}}_{\ge 0}\) is feasible, if the pressure at the end satisfies the box constraints, i.e.

with \(\phi = \frac{\lambda }{c^2 D} (R_S T)^2 L\). We can rewrite the box constraints for the pressure as inequalities. That means \(p_1 \in \left[ p^{\min }, p^{\max } \right]\) iff

Now we can use the SRD (introduced in Sect. 1) in an algorithmic way. We consider \(b \sim {\mathcal {N}}(\mu , \sigma ^2)\) with mean value \(\mu \in {\mathbb {R}}_+\) and standard deviation \(\sigma \in {\mathbb {R}}_+\). Because the unit sphere in this example is given by \(\{ -1, 1 \}\), we do not need to sample N points; we can use the unit sphere itself as the sample. For \(s \in {\mathbb {S}}^0\), we set

Note that in the multivariate Gaussian distribution, the covariance matrix \(\varSigma\) is given, which contains the variances of the random variables on its diagonal. In the one-dimensional case, the standard deviation is given, which plays the role of the Cholesky factor of the variance.

To guarantee that \(b_s(\hat{r})\) is positive, we define the regular range

Thus we have

We insert \(b_s(\hat{r})\) in the inequalities in (8). Since \(b_s(\hat{r}) \ge 0\) on \(R_{s,\text {reg}}\), we can write \(b_s^2\) instead of \(b_s \vert b_s \vert\). Thus we have quadratic inequalities in r:

So we can write the set \(M_s\) as a union of \(\kappa \in \mathbb {N}\) disjoint intervals, i.e.

with intervals \(I_{s,j} = [ \underline{a}_{s,j}, \overline{a}_{s,j} ]\) and interval bounds \(\underline{a}_{s,j}, \overline{a}_{s,j} \in {\mathbb {R}}\), \(\underline{a}_{s,j} \le \overline{a}_{s,j}\) (\(j = 1, \ldots , \kappa\)). Since the unit sphere contains only two values and

where \(\mathcal {F}_{\chi }(\cdot )\) is the cumulative distribution of the \(\chi\)-distribution, we can compute the probability for a random vector to be feasible. As mentioned before, the SRD gives us an efficient way to compute this probability, but we need to know the analytical solution of our system (6).
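The one-dimensional SRD can be sketched in a few lines of Python. The sketch rests on two assumptions that are not spelled out in the text above: the pressure at the end of the pipe is taken as \(p_1 = \sqrt{p_0^2 - \phi \, b \vert b \vert }\), which is consistent with the definition of \(\phi\), and the ray is parametrized as \(b_s(r) = \mu + r \sigma s\). The function names and all parameter values are hypothetical and are not the values of Table 1.

```python
import numpy as np
from scipy.stats import chi, norm

def feasible_load_interval(p0, p_min, p_max, phi):
    """Loads b >= 0 with p_min <= sqrt(p0^2 - phi*b^2) <= p_max (assumed pressure law)."""
    lo_sq = max((p0**2 - p_max**2) / phi, 0.0)
    hi_sq = (p0**2 - p_min**2) / phi
    if hi_sq < lo_sq:
        return None                                # no feasible load
    return np.sqrt(lo_sq), np.sqrt(hi_sq)

def srd_probability_1d(mu, sigma, interval):
    """SRD on S^0 = {-1, 1}: both directions carry weight 1/2."""
    if interval is None:
        return 0.0
    a, c = interval
    prob = 0.0
    for s in (-1.0, 1.0):
        # Radii r >= 0 with a <= mu + r*sigma*s <= c form one interval.
        r1, r2 = (a - mu) / (sigma * s), (c - mu) / (sigma * s)
        r_lo = max(min(r1, r2), 0.0)
        r_hi = max(max(r1, r2), 0.0)
        prob += 0.5 * (chi.cdf(r_hi, df=1) - chi.cdf(r_lo, df=1))
    return prob

# Hypothetical data: feasible loads form the interval [0, sqrt(20)].
interval = feasible_load_interval(p0=60.0, p_min=40.0, p_max=60.0, phi=100.0)
mu, sigma = 3.0, 1.5
prob_srd = srd_probability_1d(mu, sigma, interval)
# Direct evaluation with the Gaussian distribution function for comparison.
prob_direct = norm.cdf((interval[1] - mu) / sigma) - norm.cdf((interval[0] - mu) / sigma)
```

Because the sphere consists of only two points, no sampling error occurs and the SRD value agrees with the direct evaluation up to floating point accuracy.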

Another way to compute the probability for a random load vector to be feasible is the kernel density estimator. It is more general and does not require the analytical solution of our model. We consider the stochastic equation corresponding to (2) with random boundary condition b and coupling conditions (3), (4), which also has a solution \({\mathbb {P}}\)-almost surely. Hence the pressure at node \(v_1\) is a random variable, which we denote by \(p_1\). The probability that a random load is feasible is equal to the probability that the pressure \(p_1\) is in the interval \(\left[ p^{\min }, p^{\max } \right]\). We assume that the variance of \(p_1\) is positive and that the distribution of \(p_1\) is absolutely continuous with probability density function \(\varrho _p\). Now, the probability \({\mathbb {P}}(p_1 \in \left[ p^{\min }, p^{\max } \right] )\) can be computed by integrating the probability density function \(\varrho _p\) over the pressure bounds, so we get

If the exact probability density function \(\varrho _p\) is not known, we can approximate the function by a kernel density estimator. Then we integrate this estimator over the interval \([ p^{\min }, p^{\max } ]\) to get an approximation of the probability \({{\mathbb {P}}(b \in M) }\).

As before, we consider

\[ b \sim {\mathcal {N}}(\mu , \sigma ^2) \]

with mean value \(\mu \in {\mathbb {R}}_+\) and standard deviation \(\sigma \in {\mathbb {R}}_{+}\).

Let \(\mathcal {B} = \{b^{\mathcal {S},1}, \ldots , b^{\mathcal {S},N}\} \subseteq {\mathbb {R}}_{\ge 0}\) be independent and identically distributed samples of the random variable b. Let \(\mathcal {P}_{\mathcal {B}} = \{p_1(b^{\mathcal {S},1}), \ldots , p_1(b^{\mathcal {S},N}) \} \subseteq {\mathbb {R}}\) be the pressures at the end of the edge for the different loads \(b^{\mathcal {S},i} \in \mathcal {B}\) (\(i = 1, \ldots , N\)), which are also independent and identically distributed. We use the KDE in one dimension with bandwidth \(h \in {\mathbb {R}}_+\) and with a Gaussian kernel (see e.g. Duller 2018; Scott and Terrell 1992) to get an approximation of the density function \(\varrho _p\):

\[ \varrho _{p,N}(z) = \frac{1}{N h} \sum _{i=1}^N \frac{1}{\sqrt{2 \pi }} \exp \left( - \frac{1}{2} \left( \frac{z - p_1(b^{\mathcal {S},i})}{h} \right) ^2 \right) \]
Remark 1

The choice of the bandwidth h is a separate topic. We refer to Duller (2018), Chapter 8 and Härdle et al. (2004), Chapter 3 for studies about optimal bandwidths for KDEs. Here we use the heuristic formula for the bandwidth given by

where \(\sigma _N\) is the standard deviation of the sampling and N is the number of samples. The idea is that we compute the bandwidth depending on the variance of the sampling. This is stated and explained e.g. in Gramacki (2018), Chapter 4.2 and in Turlach (1993).

For the previous bandwidth it holds \((h + (Nh)^{-1}) \rightarrow 0\) \({\mathbb {P}}\)-almost surely for \({ N \rightarrow \infty }\). Therefore, Devroye and Gyorfi (1985) (Chapter 6, Theorem 1) provides the L\(^1\)-convergence of the estimator:

Thus, for an appropriate choice of the bandwidth h, we can use the KDE as an approximation for the exact probability density function of the pressure. From Scheffé’s lemma (see Devroye and Gyorfi 1985), it follows

So with (11) we can approximate the probability for a random load vector to be feasible as follows:

In this example, we can compute the sampling set \(\mathcal {P}_{\mathcal {B}}\) analytically, because the analytical solution of model (2) is known. If this is not the case, e.g., for more complex systems like the stationary full Euler equations or Navier-Stokes equations (see Domschke et al. 2017), one can use numerical methods to solve the PDE and to get an approximated sampling set \(\mathcal {P}_{\mathcal {B}}\).
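The KDE approach on a single edge can be sketched as follows in Python. With a Gaussian kernel, the integral of the estimator over \([p^{\min }, p^{\max }]\) has a closed form in terms of the standard normal distribution function, so no numerical quadrature is needed. The sketch makes several assumptions: the pressure law \(p_1 = \sqrt{p_0^2 - \phi \, b \vert b \vert }\), a normal reference rule \(h = (4/(3N))^{1/5} \sigma _N\) as a stand-in for the heuristic bandwidth, and hypothetical parameter values that are not taken from Table 1.

```python
import numpy as np
from scipy.stats import norm

def end_pressure(b, p0, phi):
    """Assumed closed-form pressure at the pipe end; clipped at zero to avoid NaNs."""
    return np.sqrt(np.maximum(p0**2 - phi * b * np.abs(b), 0.0))

def kde_interval_probability(samples, lo, hi, h):
    """Integral of the 1D Gaussian KDE over [lo, hi], evaluated in closed form."""
    return np.mean(norm.cdf((hi - samples) / h) - norm.cdf((lo - samples) / h))

rng = np.random.default_rng(1)
p0, p_min, p_max, phi = 60.0, 40.0, 60.0, 100.0    # hypothetical pipe data
mu, sigma, N = 4.0, 0.5, 50_000                     # hypothetical load distribution

b = rng.normal(mu, sigma, N)                        # sample of random loads
p1 = end_pressure(b, p0, phi)                       # corresponding pressure sample

# Normal reference rule of thumb (an assumption, not necessarily formula (12)).
h = (4.0 / (3.0 * N)) ** 0.2 * p1.std(ddof=1)

prob_kde = kde_interval_probability(p1, p_min, p_max, h)
prob_mc = np.mean((p1 >= p_min) & (p1 <= p_max))    # Monte Carlo on the same sample
```

If no analytical solution is available, only `end_pressure` has to be replaced by a numerical solver; the remaining steps are unchanged.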

Example 1

We give an example to illustrate that the results of the KDE and the SRD are similar. To this end, we use the values (without units) from Table 1. With the inequalities (8) and the values given in Table 1 we can see that b is feasible iff \(b \in [0, \sqrt{20} ]\). For comparison, we use a classical Monte Carlo (MC) method, in which we compute the percentage of sample points inside \([0, \sqrt{20}]\). For both the MC method and the KDE approach, we use the same sampling of \(5 \cdot 10^4\) points. The bandwidth for the KDE is given by (12). The result for 8 tests is shown in Table 2.

The sphere of the SRD in this example is finite (\({\mathbb {S}}^0 = \{-1,1\}\)), so the SRD always gives the exact probability (except for numerical errors due to quadrature) of \(82.75\%\). The probabilities computed by MC and the KDE are also quite similar. The mean probability in MC resp. KDE is \(82.87\%\) resp. \(82.40\%\) and the variances are 0.0238 resp. 0.0218, so both methods are very close to the (exact) result of the SRD. Further, we provide confidence intervals with confidence level \(95\%\) for the results stated in Table 2. The confidence level of \(95\%\) is quite common in statistics. An introduction to confidence intervals can be found in (Linde 2016, Chapter 8). The confidence interval for the MC probabilities is \([82.74\%, 82.99\%]\) and the confidence interval for the KDE probability is \([82.58\%, 82.83\%]\). One can see that the (exact) probability (computed via SRD) is contained in both intervals. Thus, the KDE with the heuristic bandwidth (12) provides a suitable method to compute the desired probability.

A remaining question is how to choose the sample size s.t. the result is sufficiently accurate. In general, the larger the sample size, the more accurate the solution. A good sample size can be found by observing the sample error. In Roache (1998) and Schwer (2007) the authors suggest comparing the numerical result with two other numerical results for larger sample sizes. This mesh-to-mesh comparison is often used in numerics to determine the rate of convergence of the solution. With this approach an appropriate sample size can be chosen for the desired accuracy.
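The confidence intervals reported in Example 1 can be related to the mean and variance of the 8 test runs. The text does not state how the intervals were computed; the following Python sketch assumes a two-sided Student t interval with 7 degrees of freedom and starts from the reported summary statistics, since Table 2 is not reproduced here. The function name `t_confidence_interval` is hypothetical.

```python
import numpy as np
from scipy.stats import t

def t_confidence_interval(mean, variance, n_runs, level=0.95):
    """Two-sided Student t confidence interval for the mean of n_runs results."""
    quantile = t.ppf(0.5 + level / 2.0, df=n_runs - 1)
    half_width = quantile * np.sqrt(variance / n_runs)
    return mean - half_width, mean + half_width

# Reported MC statistics of Example 1 (in percent): mean 82.87, variance 0.0238.
mc_lower, mc_upper = t_confidence_interval(82.87, 0.0238, n_runs=8)
```

Under this assumption, the resulting interval is close to the reported MC interval \([82.74\%, 82.99\%]\); small deviations in the last digit are to be expected because the inputs are rounded.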

2.2 Uncertain loads on tree-structured graphs

For this subsection, we consider a tree-structured graph (i.e., the graph does not contain cycles) \(G = ({\mathcal {V}}, {\mathcal {E}})\) with one entry \(v_0\), as introduced in the beginning of Sect. 2. Let the graph be numbered from the root \(v_0\) by breadth-first search or depth-first search and let the model (6) hold on every edge.

First we rewrite the system (6) using the solution of the isothermal Euler equations. We use the incidence matrix \(A^+ \in {\mathbb {R}}^{(n+1) \times n}\) of the graph. For an edge \(e_\ell \in {\mathcal {E}}\), which connects the nodes \(v_i\) and \(v_j\) starting from node \(v_i\), we have

A formal definition of the incidence matrix can be found e.g., in Gotzes et al. (2016), Gugat and Schuster (2018) and Gugat et al. (2020). We set \(A \in {\mathbb {R}}^{n \times n}\) as \(A^+\) without the first row (which corresponds to the root resp. the only entry node of the graph). The equation for mass conservation (3) can equally be written as

where \(q_j\) is the (constant) flow on the edge \(e_j\) and \(b_i\) is the load at node \(v_i\) (\(i,j = 1, \ldots , n\)). Due to the tree structure of the graph, A is a square matrix with full rank and thus invertible. Numbering the graph by breadth-first search or depth-first search implies that the matrix A is upper triangular, just like its inverse. Further, in (Gotzes et al. 2016, Section 3.2), the authors mention that \(A^{-1}_{i,j}\) is one if and only if the edge \(e_j\) is on the (unique) path from the root to node \(v_i\), otherwise \(A^{-1}_{i,j}\) is zero. Motivated by (7) we define the function

where \(\varPhi \in {\mathbb {R}}^{n \times n}\) is a diagonal matrix with the values \(\phi ^e= \frac{\lambda ^e}{(c^e)^2 D^e} (R_S T)^2 L^e\) (\(e \in {\mathcal {E}}\)) at its diagonal. The product on the right has to be understood componentwise. The i-th component of this function states the pressure loss from the root \(v_0\) to node \(v_i\). The term \((A^{-1} b)\) comes from the equation for mass conservation (16) and contains the (constant) flows at the edges. With this function we get the following equivalence for feasible loads:

Lemma 1

A load vector \(b \in {\mathbb {R}}_{\ge 0}^n\) is feasible, i.e., there exists a solution of (6), iff the following system of inequalities holds for all \(k = 1, \ldots , n\):

Proof

The result follows from (Gotzes et al. 2016, Corollary 1). In their setting, the inlet pressure \(p_0\) is not given explicitly; it is only required to lie in a range \([p_0^{\min }, p_0^{\max }]\). Then Corollary 1 in Gotzes et al. (2016) states that a load vector \(b \in {\mathbb {R}}_{\ge 0}^n\) is feasible iff the following system of inequalities holds:

In our setting the inlet pressure \(p_0\) is explicitly given, which means \(p_0^{\min } = p_0^{\max }\). Then the third inequality directly follows from the first one and the second one and thus, our lemma is a special case of Corollary 1 in Gotzes et al. (2016). \(\square\)
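The feasibility test behind Lemma 1 can be illustrated with a short Python sketch that computes the flows from the mass conservation \(A q = b\), accumulates the squared-pressure loss along the path from the root, and checks the box constraints at the nodes directly. The sketch is based on assumptions: the incidence matrix carries \(-1\) at the tail and \(+1\) at the head of each edge, and the squared pressure at node \(v_i\) is \(p_0^2\) minus the sum of \(\phi ^e q^e \vert q^e \vert\) over the edges on the path from \(v_0\) to \(v_i\). The graph and all numerical values are hypothetical.

```python
import numpy as np

def incidence_matrix(edges, n_nodes):
    """A^+ with -1 at the tail and +1 at the head of each edge (assumed convention)."""
    A_plus = np.zeros((n_nodes, len(edges)))
    for col, (tail, head) in enumerate(edges):
        A_plus[tail, col] = -1.0
        A_plus[head, col] = 1.0
    return A_plus

def squared_node_pressures(b, edges, phi, p0):
    """Flows q and squared pressures at v_1, ..., v_n for the load vector b."""
    n = len(edges)
    A = incidence_matrix(edges, n + 1)[1:, :]          # drop the row of the root v_0
    q = np.linalg.solve(A, b)                          # mass conservation A q = b
    loss = np.linalg.solve(A.T, phi * q * np.abs(q))   # loss summed along root paths
    return q, p0**2 - loss

def is_feasible(b, edges, phi, p0, p_min, p_max):
    """Check b >= 0 and the pressure box constraints at all outflow nodes."""
    if np.any(b < 0.0):
        return False
    _, p_sq = squared_node_pressures(b, edges, phi, p0)
    return bool(np.all(p_sq >= p_min**2) and np.all(p_sq <= p_max**2))

# Hypothetical linear graph v_0 -> v_1 -> v_2.
edges = [(0, 1), (1, 2)]
phi = np.array([100.0, 50.0])
p0, p_min, p_max = 60.0, np.array([40.0, 40.0]), np.array([60.0, 60.0])

q, p_sq = squared_node_pressures(np.array([1.0, 1.0]), edges, phi, p0)
small_load_ok = is_feasible(np.array([1.0, 1.0]), edges, phi, p0, p_min, p_max)
large_load_ok = is_feasible(np.array([3.0, 3.0]), edges, phi, p0, p_min, p_max)
```

Working with squared pressures avoids taking square roots of negative numbers for loads that are too large to admit a solution.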

Lemma 2

If \(p_i^{\min } \le p_0 \le p_j^{\max }\) for all \(i,j = 1, \ldots , n\) , then the set of feasible loads M is convex.

Proof

The proof is identical to the proof of Theorem 11 in Gugat et al. (2020) for linear graphs, i.e., graphs without junctions. Here, the proof also works for tree-structured networks, because the pressure at the inflow node is explicitly given. Thus, the inequality where convexity for tree-structured networks breaks in Gugat et al. (2020) is redundant here (see Lemma 1). \(\square\)

Now we use the SRD to compute the probability for a random load vector to be feasible. For this setting, the SRD approach is explained in detail in Gotzes et al. (2016) and Gugat and Schuster (2018). Let \(b \sim {\mathcal {N}}(\mu , \varSigma )\) with mean value \(\mu \in {\mathbb {R}}_+^n\) and positive definite covariance matrix \(\varSigma \in {\mathbb {R}}^{n \times n}\) for an appropriate probability space \((\varOmega , {\mathcal {A}}, {\mathbb {P}})\) be given. The next steps are similar to the case of one edge. For a point s of a sample \(\mathcal {S} := \{s_1, \ldots , s_N \} \subseteq {\mathbb {S}}^{n-1}\) of \(N \in \mathbb {N}\) uniformly distributed points at the unit sphere \({\mathbb {S}}^{n-1}\), we set

where \(\mathcal {L}\) is s.t. \(\mathcal {L} \mathcal {L}^\top = \varSigma\). Because we only consider outflows, \(b_s(\hat{r})\) must be positive. We define the regular range

From this, it follows that \(b_s(\hat{r}) \in R_{s, \text {reg}}\) is feasible, iff \(b_s(\hat{r})\) satisfies the inequalities in Lemma 1. The inequalities are quadratic in the variable r and we can write the sets \(M_s\) as unions of disjoint intervals. Thus, (9) gives us the probability for a random load vector to be feasible.
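A simplified variant of this procedure can be sketched in Python. Instead of solving the quadratic inequalities in r exactly, the sketch approximates the radial set on each ray by checking feasibility on a fine grid of radii and summing the \(\chi\)-mass of the feasible cells; this grid-based shortcut is a substitute for the interval computation described above, not the method of the cited references. The graph, the sign convention of A, the pressure law and all numerical values are assumptions chosen for illustration.

```python
import numpy as np
from scipy.stats import chi

def feasible(b, A_inv, phi, p0, p_min, p_max):
    """Vectorised feasibility check for loads b of shape (..., n)."""
    b = np.asarray(b, dtype=float)
    q = b @ A_inv.T                                # flows q = A^{-1} b
    loss = (phi * q * np.abs(q)) @ A_inv           # squared-pressure loss per node
    p_sq = p0**2 - loss
    return (np.all(b >= 0.0, axis=-1)
            & np.all(p_sq >= p_min**2, axis=-1)
            & np.all(p_sq <= p_max**2, axis=-1))

def srd_grid_probability(mu, Sigma, A_inv, phi, p0, p_min, p_max,
                         n_dirs=2000, n_grid=400, seed=0):
    """SRD estimate with a grid approximation of the radial sets."""
    rng = np.random.default_rng(seed)
    n = len(mu)
    L = np.linalg.cholesky(Sigma)                  # Sigma = L L^T
    s = rng.standard_normal((n_dirs, n))
    s /= np.linalg.norm(s, axis=1, keepdims=True)  # uniform points on S^{n-1}
    r_edges = np.linspace(0.0, chi.ppf(1.0 - 1e-9, df=n), n_grid + 1)
    r_mid = 0.5 * (r_edges[:-1] + r_edges[1:])
    weights = np.diff(chi.cdf(r_edges, df=n))      # chi-mass of each radial cell
    b = mu + r_mid[None, :, None] * (s @ L.T)[:, None, :]
    mask = feasible(b, A_inv, phi, p0, p_min, p_max)
    return np.mean(mask.astype(float) @ weights)

# Hypothetical linear graph v_0 -> v_1 -> v_2 with A q = b.
A_inv = np.linalg.inv(np.array([[1.0, -1.0], [0.0, 1.0]]))
phi = np.array([100.0, 50.0])
p0, p_min, p_max = 60.0, 40.0, 60.0
mu = np.array([1.5, 1.5])
Sigma = np.array([[0.25, 0.05], [0.05, 0.25]])

prob_srd = srd_grid_probability(mu, Sigma, A_inv, phi, p0, p_min, p_max)
```

The grid introduces an additional discretization error that the exact interval computation avoids, so the sketch trades accuracy for brevity.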

As in the previous subsection, we consider the stochastic equation corresponding to (2) with random load b and coupling conditions (3), (4). The n-dimensional random vector p denotes the pressure at the nodes \(v_1, \ldots , v_n\). The probability that b is feasible is equal to the probability that the pressure p at the nodes is in the pressure bounds, which we denote by \({\mathbb {P}}(p \in P^{\max }_{\min })\) with \(P^{\max }_{\min } := \bigotimes _{i=1}^n [p_i^{\min }, p_i^{\max }]\). We assume that the covariance matrix of the random vector p is positive definite and that its distribution is absolutely continuous with probability density function \(\varrho _p\). Now, it holds

However, the exact probability density function \(\varrho _p\) is not known, but we can approximate it using a multidimensional kernel density estimation.

Let \(\mathcal {B} = \{b^{\mathcal {S},1}, \ldots , b^{\mathcal {S},N}\}\) be independent and identically distributed nonnegative samples of the random load vector \(b \sim {\mathcal {N}}(\mu , \varSigma )\). Then, let \(\mathcal {P}_{\mathcal {B}} = \{ p(b^{\mathcal {S},1}), \ldots , p(b^{\mathcal {S},N}) \} \subseteq {\mathbb {R}}^n\) be the pressures at the nodes \(v_1, \ldots , v_n\) for the different loads \(b^{\mathcal {S},i} \in \mathcal {B}\) \((i = 1,\ldots ,N)\). These samples are also independent and identically distributed. Note that \(p_j(b^{\mathcal {S},i})\) (\(i \in \{1, \ldots , N\}\), \(j \in \{1, \ldots , n\}\)) is the pressure at node \(v_j\) and the j-th component of the pressure vector \(p(b^{\mathcal {S},i})\). We introduce the general n-dimensional multivariate kernel density estimator (see e.g. Gramacki 2018):

with a symmetric positive definite bandwidth matrix \(H \in {\mathbb {R}}^{n \times n}\) and an n-variate density function \(K:{\mathbb {R}}^n \rightarrow {\mathbb {R}}_{\ge 0}\) called kernel. We choose the standard multivariate normal density function as kernel. This kernel can be written as the product \(K(x) = \prod _{i=1}^n \mathcal {K}(x_i)\), where the univariate kernel \({ \mathcal {K}:{\mathbb {R}} \rightarrow {\mathbb {R}} }\) is the standard univariate normal density function. Let \(\sigma _{N,i}^2\) denote the positive sample variance of the i-th variable. Moreover, let \(V_N\) denote the diagonal matrix \(V_N={\text {diag}}(\sigma _{N,1}^2, \ldots , \sigma _{N,n}^2)\). As suggested in Gramacki (2018), we use the bandwidth matrix

This choice simplifies the estimator to the following form

As mentioned in Scott (2015) and Wand and Jones (1993), such product kernels are recommended and adequate in practice. However, in some situations diagonal bandwidth matrices may be insufficient, and general full bandwidth matrices are needed, e.g., depending on the sample covariance matrix.

In order to show the convergence of the multivariate KDE (19), we transform the random vector p via \(y = V_N^{-1/2} p\). Thus we get the transformed sampling set

For the approximation of the probability density function of the transformed variable we use the multivariate KDE with the previous settings adapted to the data \({\mathcal {Y}}_B\). Thus we get the bandwidth matrix \(H = h_y^2 I_{n \times n}\), because the transformed data have unit variance. This leads to a KDE with one single bandwidth \(h_y\) given by

Due to \((h_y + (N h_y^n)^{-1} ) \xrightarrow {N \rightarrow \infty } 0\) \({\mathbb {P}}\text {-almost surely}\), we can apply Chapter 6, Theorem 1 in Devroye and Gyorfi (1985) to the estimator \(\varrho _{y,N}\). Thus, it holds

where \(\varrho _y\) is the exact probability density function of the random variable y. This density function is given by \(\varrho _y(z) = \varrho _p ( V_N^{1/2} z ) \vert \det (V_N) \vert ^{1/2}\) according to the transformation. There is also a similar relation between the estimators: \(\varrho _{y,N}(z) = \varrho _{p,N}( V_N^{1/2} z) \det (V_N)^{1/2}\). Using the previous relations the \(L^1\)-convergence for the KDE \(\varrho _{p,N}\) follows:

Applying Scheffé’s lemma (see Devroye and Gyorfi 1985) yields

Thus, the integral of the estimator over the pressure bounds converges \({\mathbb {P}}\text {-almost}\) surely to the probability \({\mathbb {P}}(p \in P^{\max }_{\min })\). Now, we can use this integral as an approximation for the probability \({\mathbb {P}}( b \in M)\) in (17):

This multidimensional integral has some useful properties, which we will need when we derive the necessary optimality conditions. We want to mention again, that we can compute the sampling set \(\mathcal {P}_{\mathcal {B}}\) analytically, because we know the solution of the stationary isothermal Euler equations.
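For a Gaussian product kernel, the integral of the estimator over a box can be evaluated in closed form with the error function. The following minimal sketch illustrates this and compares the value with a plain Monte Carlo count. It rests on assumptions that are not taken from the text: the bandwidth rule in `scott_bandwidths` is a common rule of thumb and not necessarily the bandwidth matrix used above, the pressure samples are synthetic instead of solutions of the stationary Euler equations, and all function and variable names are illustrative.

```python
import math
import numpy as np

_erf = np.vectorize(math.erf)

def scott_bandwidths(samples):
    """Per-coordinate bandwidths h_j = sigma_j * N**(-1/(n+4)) (assumed rule of thumb)."""
    n_samples, dim = samples.shape
    return samples.std(axis=0, ddof=1) * n_samples ** (-1.0 / (dim + 4))

def kde_box_probability(samples, lower, upper, bandwidths):
    """Integrate a Gaussian product-kernel KDE over the box [lower, upper]."""
    scale = math.sqrt(2.0) * bandwidths
    upper_arg = (upper - samples) / scale   # shape (N, n)
    lower_arg = (lower - samples) / scale
    per_coordinate = 0.5 * (_erf(upper_arg) - _erf(lower_arg))
    # Product over coordinates, mean over the N kernel centers.
    return float(np.mean(np.prod(per_coordinate, axis=1)))

# Synthetic stand-in for the pressure sample set (assumption, not GasLib data).
rng = np.random.default_rng(0)
pressures = rng.normal(loc=[50.0, 45.0], scale=[2.0, 3.0], size=(20_000, 2))
p_min = np.array([46.0, 40.0])
p_max = np.array([53.0, 49.0])

p_kde = kde_box_probability(pressures, p_min, p_max, scott_bandwidths(pressures))
p_mc = float(np.mean(np.all((pressures >= p_min) & (pressures <= p_max), axis=1)))
print(f"KDE: {p_kde:.4f}  MC: {p_mc:.4f}")
```

Because the kernel smooths the sample, the KDE value is expected to differ slightly from the Monte Carlo count; the gap shrinks as the sample size grows and the bandwidths tend to zero.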

Example 2

This short example should illustrate the results of both approaches applied to the tree-structured graph with three nodes and two edges shown in Fig. 2. The values (without units) are given in Table 3.

From the inequalities in Lemma 1 we get

As in the last example, we compare both methods, the SRD and the KDE, with a classical Monte Carlo (MC) method. For the MC method and the KDE approach, we use the same sampling of \(1 \cdot 10^5\) points. The result for 8 tests is shown in Table 4.

The sampling of the SRD consists of \(1 \cdot 10^4\) points uniformly distributed on the sphere \({\mathbb {S}}^1\). Thus the SRD always gives the same (good) result of \(74.95\%\), when rounded to 4 digits. Again, the MC method and the KDE approach are quite close. The mean probability in MC resp. KDE is \(75.10\%\) resp. \(74.79\%\) and the variance is 0.0235 resp. 0.0215. As in the example with one edge we provide the confidence intervals for confidence level \(95\%\) here. For the MC probability the confidence interval is \([74.97\%, 75.23\%]\) and for the KDE probability it is \([74.66\%, 74.91\%]\). The (good) result of the SRD is close to both intervals. Thus, also in the two-dimensional case, the KDE approach is quite good for computing the desired probability. The computing time in every test is quite reasonable. The MC method and the KDE approach need less than one second, while the SRD needs almost two seconds, but the focus of the implementation was on correctness, not on efficiency. So the computing time of the implementation can of course be improved.
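The reported intervals can be checked from the summary statistics alone. The sketch below assumes, since this is not stated explicitly, that the variance is the sample variance of the 8 test results in squared percentage points and that a two-sided Student-t interval with 7 degrees of freedom is used. The quantile is hard-coded and the function name is illustrative.

```python
import math

# Two-sided 97.5% quantile of Student's t with 7 degrees of freedom (8 tests).
T_QUANTILE_7DOF = 2.3646

def t_confidence_interval(mean, variance, n_tests, quantile):
    """Return (lower, upper) of mean +/- quantile * sqrt(variance / n_tests)."""
    half_width = quantile * math.sqrt(variance / n_tests)
    return mean - half_width, mean + half_width

mc_interval = t_confidence_interval(75.10, 0.0235, 8, T_QUANTILE_7DOF)
kde_interval = t_confidence_interval(74.79, 0.0215, 8, T_QUANTILE_7DOF)
print(mc_interval, kde_interval)
```

Under these assumptions both intervals agree with the reported ones up to the rounding of the inputs.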

2.3 Stochastic optimization on stationary gas networks

In this subsection, we formulate necessary conditions for optimization problems with approximated probabilistic constraints. Both, MC and SRD, give algorithmic ways to compute the probability for a random load vector to be feasible. With a KDE approach, which provides a sufficiently good approximation of the probability (if the sample size is sufficiently large), we can get necessary optimality conditions for certain optimization problems with approximated probabilistic constraints. Define the set

and let a function

be given. For a probability level \(\alpha \in (0,1)\) consider the optimization problems

and

Normally, \(\alpha\) is chosen large, s.t. \(\alpha\) is almost 1. As mentioned before, our aim here is to write down the necessary optimality conditions in an appropriate way. In Sect. 2.1 and Sect. 2.2 we stated the \({\mathbb {P}}\)-almost sure convergence of the KDE to the exact probability density. Furthermore, the numerical examples provide good and accurate results. Therefore, we formulate the optimality conditions for the approximated optimization problems

and

We mention again that due to the convergence results stated before, the approximated probabilistic constraint converges \({\mathbb {P}}\)-almost surely to the exact probabilistic constraint for \(N \rightarrow \infty\). We define

Our aim is now to integrate the kernel density estimator of the pressure at the nodes \(v_1, \ldots , v_n\) over the pressure bounds. Since the following computations hold for the probability in (26) as well as in (27), we neglect the argument of M from here on. We have

with \(\varrho _{p,N}\) as in (19). Since \(P^{\max }_{\min }\) is an n-dimensional cuboid and \(\varrho _{p,N}(z)\) is continuous, we can use Fubini’s Theorem. Thus we have

and as the density estimation of the pressure is a product of an exponential function in every dimension, we can exchange the integral and the product. It follows

We define

and we set \(\tau _{i,j} := \varphi _{i,j}(z_j)\) and use integration by substitution. Then with \(\varphi '_{i,j}(x) = (\sqrt{2}\ h_j)^{-1}\) we get

This formula contains the Gauss error function (see e.g. Andrews 1998):

\[ \text {erf}(x) = \frac{2}{\sqrt{\pi }} \int _0^x e^{-t^2} \, dt. \qquad (28) \]

We insert the Gauss error function in the previous integral term and we obtain

Now we consider problem (26). For \(\alpha \in (0,1)\) we define

Thus we have

We compute the partial derivatives of \(g_\alpha\). For \(k \in \{1, \ldots , n\}\) we have

and with the Gauss error function (28) it follows

Then, the k-th component of the gradient \(\nabla g_\alpha (p^{\max }) \in {\mathbb {R}}^n\) is given by (30). Note that for \(b^{S,1}, \ldots , b^{S,N} \in {\mathbb {R}}^n\) (\(N > 1\)) and \(p_i^{\max } > p_i^{\min }\) (\(i = 1,\ldots ,n\)), the partial derivatives in (30) are negative for all \(p^{\max } \in {\mathbb {R}}^n\).
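The gradient with respect to the upper bounds can be checked numerically. The sketch below assumes the sign convention \(g_\alpha = \alpha - {\mathbb {P}}_N\), where \({\mathbb {P}}_N\) denotes the KDE box probability; this convention is inferred from the negativity of the partial derivatives noted above and is not quoted from the text. The samples are synthetic, the bandwidth rule is an assumed rule of thumb, and the function names are illustrative.

```python
import math
import numpy as np

_erf = np.vectorize(math.erf)

def box_factors(samples, lower, upper, bandwidths):
    """Per-sample, per-coordinate factors 0.5*(erf(.) - erf(.)) of the KDE box integral."""
    scale = math.sqrt(2.0) * bandwidths
    return 0.5 * (_erf((upper - samples) / scale) - _erf((lower - samples) / scale))

def g_alpha(samples, lower, upper, bandwidths, alpha):
    """Assumed sign convention: g_alpha = alpha - (KDE box probability)."""
    factors = box_factors(samples, lower, upper, bandwidths)
    return alpha - float(np.mean(np.prod(factors, axis=1)))

def grad_g_alpha(samples, lower, upper, bandwidths):
    """Gradient of g_alpha with respect to the upper bounds."""
    factors = box_factors(samples, lower, upper, bandwidths)
    # d/dp of 0.5*erf((p - x)/(sqrt(2) h)) is the normal density with std h at p - x.
    z = (upper - samples) / bandwidths
    density = np.exp(-0.5 * z**2) / (math.sqrt(2.0 * math.pi) * bandwidths)
    grad = np.empty(samples.shape[1])
    for k in range(samples.shape[1]):
        others = np.prod(np.delete(factors, k, axis=1), axis=1)
        grad[k] = -np.mean(density[:, k] * others)
    return grad

rng = np.random.default_rng(1)
samples = rng.normal([50.0, 45.0], [2.0, 3.0], size=(2_000, 2))  # synthetic pressures
h = samples.std(axis=0, ddof=1) * len(samples) ** (-1.0 / 6.0)   # assumed bandwidth rule
p_min, p_max = np.array([46.0, 40.0]), np.array([53.0, 49.0])

grad = grad_g_alpha(samples, p_min, p_max, h)
eps = 1e-5
finite_diff = np.array([
    (g_alpha(samples, p_min, p_max + eps * e, h, 0.75)
     - g_alpha(samples, p_min, p_max - eps * e, h, 0.75)) / (2.0 * eps)
    for e in np.eye(2)
])
print(grad, finite_diff)
```

The central finite differences serve only as a consistency check for the closed-form gradient; an optimizer would use `grad_g_alpha` directly.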

Remark 2

Since (26) has only one constraint, the linear independence constraint qualification (LICQ) holds for every \(\tilde{p}^{\max } > p^{\min }\) (componentwise) with \(g_\alpha (\tilde{p}^{\max }) = 0\).

Now we can state necessary optimality conditions for the optimization problem (26):

Corollary 1

Let \(p^{*,\max } \in {\mathbb {R}}^n\) be a (local) optimal solution of (26). Since the LICQ holds in \(p^{*,\max }\), there exists a multiplier \(\mu ^* \ge 0\), s.t.

Thus, \((p^{*,\max }, \mu ^*) \in {\mathbb {R}}^{n+1}\) is a Karush–Kuhn–Tucker point.

Now we consider problem (27). We slightly change the notation to add the explicit dependence on \(p_0\), so we write \(p(b^{\mathcal {S},i},p_0)\) (\(i = 1, \ldots , N\)) instead of \(p(b^{\mathcal {S},i})\) for the samples in the set \(\mathcal {P}_{\mathcal {B}}\). We redefine the function \(\varphi _{i,j}\) (\(i = 1, \ldots , N\), \(j = 1, \ldots , n\)) as

and we define the constraint of (27) as

Thus we have

For the derivative with respect to \(p_0\), it follows

Due to the dominated convergence theorem we can exchange the integral and the derivative, thus we have

We define \(\tau _{i,j} := \varphi _{i,j}(z_j, p_0)\) and since \(p_k(b_i, p_0)\) is independent of \(z_k\), it follows

The second integral can be solved analytically and yields:

Hence, we have

In the setting of the stationary gas networks it is true that

Corollary 2

Let \(p^*_0 \in {\mathbb {R}}\) be a (local) optimal solution of (27). Since the LICQ holds in \(p^*_0\) (cf. Remark 2), there exists a multiplier \(\mu ^* \ge 0\), s.t.

Thus the point \((p^*_0, \mu ^*) \in {\mathbb {R}}^2\) is a Karush–Kuhn–Tucker point.

If the objective function f is strictly convex and the feasible set is convex, then all necessary conditions stated here are sufficient. In this case, Corollary 1 and Corollary 2 give a characterization of the (unique) optimal solution of the approximated problems (26) and (27).

Remark 3

The question whether the solutions of the approximated problems (26) and (27) converge to the solutions of (24) and (25) is beyond the scope of this work, but all numerical results and tests suggest convergence as the sample size goes to infinity.

2.4 Application to a realistic gas network

The GasLib promotes research on gas networks by providing realistic benchmark instances. We use the GasLib-11 as a meaningful example. A scheme of the GasLib-11 is shown in Fig. 3 and more information can be found at http://gaslib.zib.de/testData.html.

The GasLib-11 consists of 11 nodes and 11 edges. Two of the edges represent compressor stations and one edge represents a valve. Compressor stations counteract the pressure loss caused by friction in the pipes. Here the compressor stations are modeled as frictionless pipes that satisfy the equation

as it is done in Gugat and Schuster (2018). This model for compressor stations is also suggested in Koch et al. (2015), where one gets an excellent overview about the details on how to model a compressor station. For our system we assume that the compressor at edge \(e_2\) is switched off, i.e., \(u_{e_2} = 1\) (so this edge can be modeled as frictionless pipe) and that the compressor station at edge \(e_8\) increases the pressure by \(20\%\), i.e., \(u_{e_8} = 1.2\). The valve is also modeled as a frictionless pipe in which gas can be transported if the valve is opened and which cannot be used for gas transportation if the valve is closed. We assume that the valve is closed, so this edge vanishes in our implementation. For the remaining edges (\(e_1, e_3, e_4, e_5, e_6, e_7, e_9, e_{10}\)) we assume \(\phi _{e_i} = 1\).

Further gas enters the network at the nodes \(v_0\), \(v_1\), \(v_5\) and is transported through the network to the nodes \(v_6\), \(v_9\) and \(v_{10}\). The values for the inlet pressure \(p_0 = [p_{v_0}, p_{v_1}, p_{v_5}]\), the lower pressure bound \(p^{\text {min}} = [p^{\text {min}}_{v_6}, p^{\text {min}}_{v_9}, p^{\text {min}}_{v_{10}}]\) and the probability distribution at the exit nodes \(\mu = [\mu _{v_6}, \mu _{v_9}, \mu _{v_{10}}]\) and \(\varSigma\) with \({\text {diag}}(\varSigma ) = [\sigma ^2_{v_6}, \sigma ^2_{v_9}, \sigma ^2_{v_{10}}]\) are given in Table 5.

Consider the linear function

with \(c = \mathbb {1}_3\). We first solve the deterministic problem

where the load vector b is given by the mean value \(\mu\). We use the default setting of the MATLAB\(^{\text{\textregistered}}\)-routine fmincon to solve (31), which is an interior-point algorithm. It returns

as optimal deterministic solution, i.e., as the lowest upper pressure bound for the nodes \(v_6\), \(v_9\) and \(v_{10}\). Now we consider the uncertain outflow at the nodes \(v_6\), \(v_9\) and \(v_{10}\). We compute the probability for a random load vector to be feasible with respect to the optimal deterministic pressure bounds by using (29). The probability \({\mathbb {P}}(b \in M(p^{\max }_{\text {det}}))\) for 8 tests (each with \(1 \cdot 10^5\) samples) is shown in Table 6. The probabilities for the deterministic optimal pressure bounds are unsatisfactory. The mean MC probability is 35.77% and the mean KDE probability is 35.40%. For a confidence level of 95% the confidence interval for the MC probability is \([35.56\%, 35.98\%]\) and the confidence interval for the KDE probability is \([35.16\%, 35.64\%]\). So if the boundary data (i.e., the gas demand) is uncertain, the optimal deterministic pressure bounds are unserviceable in the sense that these bounds do not provide a good operating gas network for uncertain gas demand.

Next we consider the probabilistic constrained optimization problem (26). We set

For arbitrary starting points the MATLAB\(^{\text{\textregistered}}\)-routine fmincon sometimes struggles with finding a solution of (26) but the optimal deterministic solution appears to be a good choice for the starting point of the routine. The results of 8 tests with \(1 \cdot 10^5\) sampling points, i.e., the optimal upper pressure bounds \(p^{\text {max}}\) at the nodes \(v_6\), \(v_9\) and \(v_{10}\), are shown in Table 7. In 8 more tests we solve (26) by using Corollary 1. The points that satisfy the necessary optimality conditions are always good candidates for the optimal solution. To be more precise on that, we solve the following optimization problem using again fmincon:

Here, we get values that are almost equal to the optimal solution stated in Table 7; they vary in a range of \(1 \cdot 10^{-6}\). From this fact one could expect that the necessary optimality conditions stated in Corollary 1 are sufficient, but we do not analyze this here.

One can see that all results are almost equal. The optimal upper pressure bounds of the stochastic optimization problem (26) are slightly larger than the optimal upper pressure bounds of the deterministic optimization problem (31) but the probability for a random load vector to be feasible is 75%, as it is required in the probabilistic constraint. The computation time for a single test was about 20 min, where the optimization time was much less than a second. The 20 min were almost entirely needed to solve the GasLib-11 \(1 \cdot 10^5\) times. The solution of the necessary optimality conditions needed a little more time than the direct solution using fmincon and (29), but solving the necessary optimality conditions leads to a solution more often even if the starting point of fmincon is badly chosen.

3 Dynamic flow networks

In this section, we extend the methods introduced in Sect. 2 to dynamic systems. We first discuss probabilistic constraints in a dynamic setting and time dependent random boundary data. Then we consider a model which we can solve analytically, in order to use the idea of the SRD for dynamic systems and compare it with the idea of the KDE. Finally, we formulate necessary optimality conditions for optimization problems with probabilistic constraints in a dynamic setting.

3.1 Time dependent probabilistic constraints and random boundary data

In Sect. 2, we computed the probability for a random vector to be feasible. So if we fix a point in time \(t^* \in [0,T]\), we can use a similar procedure. But if we do not fix a point in time, we need to specify how the probabilistic constraint has to be understood for a time period. For a time dependent uncertain boundary function b(t) and a (time dependent) feasible set M(t), a possible formulation (the one that we will use later) for the probabilistic constraint is

That means, we want to guarantee that a percentage \(\alpha\) of all possible random boundary functions (in an appropriate probability space \((\varOmega , {\mathcal {A}}, {\mathbb {P}})\)) is feasible at every point in time \(t \in [0,T]\). This is a very strong condition. In fact, (32) is a so-called probust constraint, which means it is a mix between a probabilistic constraint and a robust constraint. This class of constraints has been developed recently and is currently of great interest in research (see e.g. van Ackooij et al. 2020; Adelhütte et al. 2020). Another possibility is

which means, that a random boundary function must be feasible with a percentage of \(\alpha\) in every time point \(t \in [0,T]\). For our applications, this might not make sense, because we want to guarantee that problems for a gas consumer only occur in worst case scenarios. This probabilistic constraint only states, that even in these worst case scenarios, the problems for a consumer stay small, but these small problems can occur in every point in time. Probabilistic constraints of this type have been discussed in van Ackooij et al. (2016). A third possibility for the time dependent probabilistic constraint is an ergodic formulation:

That means the ergodic probability during the time period [0, T] must be large enough. This formulation might make sense in other applications, but not for the flow problems which are considered here (with the same argument as before). Thus we use the formulation (32) for time dependent probabilistic constraints.
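The difference between the three formulations can be made concrete on sampled boundary functions. The following sketch estimates all three probability levels empirically on a time grid. The random paths are hypothetical and chosen only for illustration, and the ergodic level is interpreted here as the time average of the single-time probabilities, which is an assumption about the third formulation.

```python
import numpy as np

def constraint_levels(paths, lower, upper):
    """Empirical probability levels for paths of shape (n_samples, n_times)."""
    feasible = (paths >= lower) & (paths <= upper)
    joint = feasible.all(axis=1).mean()       # feasible at every grid time (probust type)
    pointwise = feasible.mean(axis=0).min()   # smallest single-time probability
    time_averaged = feasible.mean()           # time average of the single-time probabilities
    return float(joint), float(pointwise), float(time_averaged)

rng = np.random.default_rng(0)
t = np.linspace(0.0, 1.0, 101)
amplitudes = rng.normal(1.0, 0.2, size=(5_000, 1))   # hypothetical random scaling
paths = amplitudes * np.sin(np.pi * t)               # hypothetical boundary functions b(t)

joint, pointwise, time_averaged = constraint_levels(paths, lower=0.0, upper=1.2)
print(joint, pointwise, time_averaged)
```

For any sample the three empirical levels are ordered as joint, pointwise, time-averaged from smallest to largest, which reflects that the formulation used in the sequel is the most restrictive of the three.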

Next we discuss the uncertain boundary data. For the random boundary data, we use a representation as Fourier series as it is done in Farshbaf-Shaker et al. (2020). So for a deterministic boundary function \(b_D: [0,T] \rightarrow {\mathbb {R}}\) with \(b_D(0) = 0\) and for \(m = 0, 1, 2, \ldots\), we define the orthonormal series

and the coefficients

Then we can write the boundary function \(b_D(t)\) in a series representation

Now for \(m \in \mathbb {N}_0\), we consider the Gaussian distributed random variables \(a_m \sim {\mathcal {N}} (1, \sigma ^2)\) for a mean value 1 and a standard deviation \(\sigma \in {\mathbb {R}}_+\) on an appropriate probability space \((\varOmega ,{\mathcal {A}},{\mathbb {P}})\). Then we consider the random boundary data

Since the random variables \(a_m\) are all independent and identically distributed, we can use the fact that for \(b_D \in L^2(0,T)\), we have also \(b \in L^2(0,T)\) \({\mathbb {P}}\)-almost surely. In Marcus and Pisier (1981), Hill (2012) and Farshbaf-Shaker et al. (2020) the authors state that this approach even guarantees better regularity and it also holds for a larger class of random variables.

Remark 4

For the numerical tests, we truncate the Fourier series after \(N_F \in \mathbb {N}\) terms. Thus we use

instead of (35) for the implementation of \(b_D\). Because it holds

this truncated Fourier series is a sufficiently good expression for \(b_D(t)\) for \(N_F\) large enough. The question how to choose \(N_F\) strongly depends on the data \(b_D\) and on the desired accuracy of the Fourier series. In general one has to guarantee that the truncation error is small. One criterion for finding a sufficiently large number \(N_F\) is to state a bound for the \(L^2\)-truncation error. For \(\vartheta \in (0,1)\) we require that \(N_F\) is chosen large enough, s.t.

Due to the convergence of the Fourier series it is always possible to find \(N_F\) large enough, s.t. the \(L^2\)-error bound is satisfied for any given \(\vartheta \in (0,1)\). Another criterion for a sufficiently large number \(N_F\) is a bound for the \(L^\infty\)-truncation error. For \(\vartheta \in (0,1)\) we require that \(N_F\) is chosen large enough s.t.

The Gibbs phenomenon might cause problems regarding the \(L^\infty\)-error if \(b_D\) contains discontinuities (see e.g. Thompson 1992), so in this case the \(L^2\)-error is the better choice. For continuous functions \(b_D\) both estimates can be used to find a sufficiently large number \(N_F\). Usually \(\vartheta\) is chosen small, even close to zero, e.g., \(\vartheta = 1\%\) or \(\vartheta = 0.1\%\), but this choice depends on the operator. Similarly we use

with \({\mathcal {N}}(1, \sigma ^2)\)-distributed random variables \(a_0, \ldots , a_{N_F}\) instead of (36) as random boundary data for the implementation.

This representation of a random boundary function as Fourier series requires \(b_D(0) = 0\). If this is not given, i.e., if \(b_D(0) \ne 0\), one can shift \(b_D\) by \(b_D(0)\), get the representation as Fourier series and shift this Fourier representation back by \(b_D(0)\), as we do later in Example 3.
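The truncation and the random perturbation of the coefficients can be sketched as follows. The basis in `basis` is an assumption: it is one orthonormal family of \(L^2(0,T)\) whose elements vanish at \(t = 0\), and it is not necessarily identical to the family defined in (33). The deterministic function, the truncation index and the relative form of the \(L^2\)-error are likewise chosen for illustration only.

```python
import numpy as np

def basis(m, t, T):
    """Assumed orthonormal family on [0, T] with psi_m(0) = 0."""
    return np.sqrt(2.0 / T) * np.sin((0.5 + m) * np.pi * t / T)

def trapezoid(values, t):
    """Composite trapezoidal rule on the grid t."""
    return float(np.sum(0.5 * (values[1:] + values[:-1]) * np.diff(t)))

def fourier_coefficients(b_det, t, T, n_terms):
    """Coefficients a_m^0 = int_0^T b_D(t) psi_m(t) dt for m = 0, ..., n_terms."""
    return np.array([trapezoid(b_det * basis(m, t, T), t) for m in range(n_terms + 1)])

def truncated_series(coeffs, weights, t, T):
    """Evaluate sum_m weights[m] * coeffs[m] * psi_m(t) on the grid t."""
    return sum(w * c * basis(m, t, T) for m, (w, c) in enumerate(zip(weights, coeffs)))

T, n_terms, sigma = 1.0, 20, 0.1
t = np.linspace(0.0, T, 2001)
b_det = t * (2.0 - t)                      # hypothetical b_D with b_D(0) = 0
coeffs = fourier_coefficients(b_det, t, T, n_terms)

# Relative L2 truncation error of the deterministic series (all weights equal to 1).
approx = truncated_series(coeffs, np.ones(n_terms + 1), t, T)
rel_l2_error = np.sqrt(trapezoid((b_det - approx) ** 2, t) / trapezoid(b_det ** 2, t))

# One realization of the random boundary data with a_m ~ N(1, sigma^2).
rng = np.random.default_rng(0)
a = rng.normal(1.0, sigma, size=n_terms + 1)
b_random = truncated_series(coeffs, a, t, T)
print(rel_l2_error)
```

In this setting one would increase `n_terms` until `rel_l2_error` falls below the chosen tolerance \(\vartheta\).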

3.2 Deterministic loads for a scalar PDE

For \((t,x) \in [0,T] \times [0,L]\) and constants \(d < 0\), \(m \le 0\), we consider the deterministic scalar linear PDE with initial condition and boundary condition

Here, r is the concentration of the contamination. The term \(d r_x\) describes the transport of the contamination according to the water flow and the term mr describes the decay of the contamination. This equation models the flow of contamination in water along a pipe or in a network (see Gugat 2012; Fügenschuh et al. 2007). Assume \(C^0\)-compatibility between the initial and the boundary condition, which is \(r_0(L) = b(0)\). We will specify the boundary condition later. We state \(b(t) \ge 0\), if the water gets polluted and \(b(t) < 0\) if the water gets cleaned.
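On a single pipe the equation can be solved along characteristics. The sketch below assumes that (37) has the form \(r_t + d\, r_x = m\, r\) with initial state \(r(0,x) = r_0(x)\) and boundary data \(r(t,L) = b(t)\); this form is inferred from the description of the terms and may differ from the exact statement of (37) in sign conventions. The data \(r_0\) and b are hypothetical, and the finite difference residual is only a plausibility check.

```python
import math

def edge_solution(t, x, d, m, length, r0, b):
    """Solve r_t + d r_x = m r on [0, length] for d < 0 along characteristics.

    r0 is the initial state, b the boundary data prescribed at x = length.
    """
    foot = x - d * t                       # position of the characteristic at time 0
    if foot <= length:
        return math.exp(m * t) * r0(foot)  # information comes from the initial state
    travel = (x - length) / d              # time since the characteristic left x = length
    return math.exp(m * travel) * b(t - travel)

d, m, length = -2.0, -0.5, 1.0
r0 = lambda x: 1.0 + x                     # hypothetical initial state
b = lambda t: 2.0 + math.sin(t)            # hypothetical boundary data with b(0) = r0(length)

def pde_residual(t, x, eps=1e-5):
    """Central finite difference residual r_t + d r_x - m r at (t, x)."""
    r = lambda s, y: edge_solution(s, y, d, m, length, r0, b)
    r_t = (r(t + eps, x) - r(t - eps, x)) / (2.0 * eps)
    r_x = (r(t, x + eps) - r(t, x - eps)) / (2.0 * eps)
    return r_t + d * r_x - m * r(t, x)

print(pde_residual(0.1, 0.2), pde_residual(0.8, 0.3))
```

The first evaluation point lies in the region governed by the initial state, the second in the region governed by the boundary data.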

For initial data \(r_0 \in L^2(0,L)\) and boundary data \(b \in L^2(0,T)\), a solution of (37) is in \(C([0,T], L^2(0,L))\) and it is analytically given by

Now, we consider a linear graph \(G = ({\mathcal {V}}, {\mathcal {E}})\) with vertex set \({\mathcal {V}} := \{v_0, \ldots , v_n \}\) and the set of edges \({\mathcal {E}} = \{e_1, \ldots , e_n\} \subseteq {\mathcal {V}} \times {\mathcal {V}}\). Every edge \(e_i \in {\mathcal {E}}\) has a positive length \(L_i\). Linear means here, that every node has at most one outgoing edge (see Fig. 4). For a formal definition see Gugat et al. (2020).

Equation (37) holds on every edge. We assume conservation of the flow at the nodes, i.e.

where \(r_i\) denotes the contamination concentration on edge \(e_i\) and \(b_i\) denotes the boundary data at node \(v_i\).

For constants \(d_k < 0\), \(m_k \le 0\), the full model can be written as follows (with \((t,x) \in [0,T] \times [0,L_k]\) on the k-th edge and \(k = 1, \ldots , n\)):

The model can be interpreted as follows: The graph represents a water network, where the water is contaminated at the nodes \(v_i\) (\(i = 1, \ldots , n\)). This contamination is transported through the graph in the negative direction (due to \(d_k < 0\)). We want to know the contamination rate at node \(v_0\). Later, we assume the contamination rate at the nodes to be Gaussian distributed. Then for a time \(t^* \in [0,T]\), we want to compute the probability for the contamination rate at node \(v_0\) to fulfill box constraints using both the SRD and the KDE. In both cases, we also consider the general time dependent chance constraints discussed before. The next theorem states an analytical solution for the model (38).

Theorem 2

Let initial states \(r_{k,0} \in H^1(0,L_k)\) and boundary conditions \(b_k \in H^1(0,T)\) for \(k = 1, \ldots , n\) be given. Then the solution of the k-th edge of (38) is in \(C^1([0,T], H^1(0,L_k))\) and it is analytically given for \(x \ge d_k t + d_k \sum _{j=k}^n \frac{L_j}{d_j}\) by

For the case \(x < d_k t + d_k \sum _{j=k}^n \frac{L_j}{d_j}\) the solution is given by

for \(d_k t + d_k \sum _{j=k}^{\ell -1} \frac{L_j}{d_j} \le x < d_k t + d_k \sum _{j=k}^{\ell } \frac{L_j}{d_j}\) and \(\ell \in \{k, \ldots , n\}\) (with \(d_k < 0\) for \(k = 1, \ldots , n\)).

Remark 5

We set \(t^* := \sum _{j=1}^n \frac{L_j}{\vert d_j \vert }\). For points in time \(t \le t^*\) the solution can depend explicitly on the initial condition. If we assume that \(\vert d_i \vert\) are absolute velocities and \(L_i\) are lengths, the information from the right boundary needs \(\frac{L_n}{ \vert d_n \vert }\) seconds to travel along the n-th edge. Then after \(\frac{L_n}{\vert d_n \vert }\) seconds, the solution of the n-th edge only depends on the boundary data, but the solution of edge \(n-1\) can still depend on the initial condition of the n-th edge. A scheme of characteristics for a graph with 4 edges is shown in Fig. 5.
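The travel times in this remark are simple cumulative sums. The sketch below assumes that node \(v_0\) sits at the left end of edge \(e_1\) and that node \(v_k\) sits at the right end of edge \(e_k\); the lengths and velocities are hypothetical.

```python
def boundary_arrival_times(lengths, velocities):
    """Time after which node v_0 is reached by the boundary data of node v_k, k = 1..n.

    lengths[k-1] = L_k and velocities[k-1] = d_k (negative), edges ordered from v_0 outwards.
    """
    times, elapsed = [], 0.0
    for length, velocity in zip(lengths, velocities):
        elapsed += length / abs(velocity)
        times.append(elapsed)
    return times

# Hypothetical graph with 4 edges.
arrival = boundary_arrival_times([1.0, 2.0, 1.0, 3.0], [-1.0, -2.0, -0.5, -3.0])
t_star = arrival[-1]   # after t_star the state at v_0 no longer depends on the initial data
print(arrival, t_star)
```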

Fig. 5 Characteristics of (38) on a graph with 4 edges

Remark 6

In the analytical solution of (38) we only distinguish if the solution depends on the initial or the boundary condition, depending on the time and the location in the pipe. So for the solution of edge 4 in Fig. 5, we distinguish between

That means, at the beginning of edge 4 (for \(x = 0\)), for \(0 \le t \le -\frac{L_4}{d_4}\), the solution of edge 4 depends on the initial condition of edge 4. From this, it follows, that the solution of edge 3 in Fig. 5 depends only on the initial condition of edge 3 for

It depends on the boundary conditions of edge 3 and the initial condition of edge 4 (due to the coupling condition) for

and it depends on the boundary conditions of edge 3 and edge 4 for

This leads to the differentiation in Theorem 2 in the case \(x < d_k t + d_k \sum _{j=k}^n \frac{L_j}{d_j}\) (\(k = 1, \ldots , n\)).

Remark 7

With the result of Theorem 2 one can also derive analytical solutions of (38) for tree-structured graphs, but one has to take into account that the flow at the end of an edge (due to coupling conditions) can depend on more than one outgoing edge. That means the solution on a tree-structured graph is basically the sum over all paths of the solution stated in Theorem 2.

Proof of Theorem 2

We define the following functions:

We consider the k-th edge in a linear graph with n edges (\(k \in \{1, \ldots , n\}\)).

Step 1: The PDE in (38) holds

For \(x \ge d_k t + d_k \sum _{j=k}^n \frac{L_j}{d_j}\) we have

and

Thus it follows

So the PDE in the system (38) holds in the marked area in Fig. 6a. For \(x < d_k t + d_k \sum _{j=k}^n \frac{L_j}{d_j}\) and \(\ell \in \{k, \ldots , n\}\), we have

and

It follows

and the PDE in system (38) also holds in the marked area in Fig. 6b.

Fig. 6 Areas in which the PDE of system (38) holds

Step 2: The initial conditions in (38) hold

Next we show, that the initial conditions hold. For \(x < d_k t + d_k \sum _{j=k}^n \frac{L_j}{d_j}\) and \(\ell = k\), we have

Since sums from k to \(k-1\) are equal to 0, this leads to \(\gamma _{k,k}(0,x) = 0\) and \(\delta _{k,k}(0,x) = x\). Thus the initial conditions are fulfilled (see Fig. 7a).

Step 3: The boundary conditions in (38) hold

To check the boundary condition we consider the last edge (i.e. \(k = n\) and \(x \ge d_n t + L_n\)). We have

since \(\alpha _{n,n}(L_n) = 1\) and \(\beta _{n,n}(t,L_n) = t\) (see Fig. 7b).

Fig. 7 Areas in which the initial and the boundary conditions of (38) hold

Step 4: The coupling conditions in (38) hold

Finally, we have to check the coupling conditions. For \(k = 1, \ldots , n-1\) and \(x \ge d_k t + d_k \sum _{j=k}^n \frac{L_j}{d_j}\) it is \(\alpha _{k,i}(L_k) = \alpha _{k+1,i}(0)\) and \(\beta _{k,i}(t,L_k) = \beta _{k+1,i}(t,0)\). Thus we have

and the coupling conditions are fulfilled (see Fig. 8a). For \(k = 1, \ldots , n-1\), \(x < d_k t + d_k \sum _{j=k}^n \frac{L_j}{d_j}\) and \(\ell \in \{k+1, \ldots , n\}\) we have \(\gamma _{k,\ell }(t,L_k) = \gamma _{k+1,\ell }(t,0)\) and \(\delta _{k,\ell }(t,L_k) = \delta _{k+1,\ell }(t,0)\). It follows

So the coupling conditions also hold in this case (see Fig. 8b) and the theorem is proven. \(\square\)

Fig. 8 Areas in which the coupling conditions of (38) hold

In the next section, we consider model (38) with uncertain boundary data.

3.3 Stochastic loads for a scalar PDE

The model (38) describes the distribution of the water contamination in a network which is contaminated by the consumers at the nodes except \(v_0\). At the end of the network (at node \(v_0\)), there are restrictions on the contamination rate. But because the contamination rate at the nodes cannot be known a priori, it can be seen as random. Of course one can expect a certain value from statistics or measurements, but this value is never exact. Therefore we use the random boundary data in a Fourier series representation, which was introduced before. In this section we first fix a point in time \(t^* \in [0,T]\) and compute the probability that the solution of (38) with random boundary data satisfies box constraints at this single point in time. Then we generalize this approach and compute the probability that the box constraints are satisfied for all times \(t \in [0,T]\) (cf. (32)).

For \(m \in \mathbb {N}_0\), we consider the Gaussian distributed random variables \(a_m \sim {\mathcal {N}}(\mathbb {1}_n, \varSigma )\) with mean value \(\mathbb {1}_n \in {\mathbb {R}}_+^n\) and positive definite covariance matrix \(\varSigma \in {\mathbb {R}}^{n \times n}\) on an appropriate probability space \((\varOmega , {\mathcal {A}}, {\mathbb {P}})\). Then the random boundary data at node \(v_k\) (for \(k \in \{1, \ldots , n\}\)) is given by

with coefficients

and \(\psi _m\) defined in (33). Note again that for the implementation, we cut the series after \(N_F \in \mathbb {N}\) terms, which is a good approximation of b for \(N_F\) large enough (see Remark 4) and as mentioned before, if \((b_D)_k \in L^2(0,T)\), then \(b_k \in L^2(0,T)\) \({\mathbb {P}}\)-almost surely. Because water cannot get cleaned at the nodes, we are only interested in positive boundary values (cf. Sect. 2). Therefore, we assume that \(b_D \in L^2(0,T)\) with \(b_D \ge 0\) and that the parameter \(\varSigma\) of the distribution of \(a_m\) is chosen s.t. the probability that \(b \ge 0\) is almost 1. In practice this can be done as follows: Let \(\gamma _k^* := \text {argmin}_{t \in [0,T]} b_k^D(t)\) and let \(\mathfrak {I}_{k,+}\) resp. \(\mathfrak {I}_{k,-}\) be the set of indices where \(a_{m,k}^0 \psi _m(\gamma _k^*) \ge 0\) resp. where \(a_{m,k}^0 \psi _m(\gamma _k^*) < 0\). We split the Fourier series into positive and negative terms and obtain

Note that the \(a_m\) are all identically distributed and \(a_{m,k}\) has the variance \(\sigma ^2_k\). We use the fact that a Gaussian random number \(a_{m,k}(\omega )\) is in \([1 - 3\sigma _k, 1 + 3\sigma _k]\) with probability \(99.73\%\). The worst case for a random scenario with random numbers \(a_{m,k}(\omega ) \in [1 - 3\sigma _k, 1 + 3\sigma _k]\) would be that the positive terms get smaller and the negative terms get larger, i.e.,

From this it follows, that \(b_k(\gamma _k^*) \ge 0\), if

For the implementation this is a quite cheap task since the terms of the Fourier series have to be computed anyway. If we used a truncated Gaussian distribution for the \(a_{m,k}\) bounded from below by \(1 - 3 \sigma _k\) and bounded from above by \(1 + 3 \sigma _k\), then we could guarantee that \(b_k(t, \omega )\) is nonnegative on [0, T]. As mentioned before, we want the solution at a time \(t^* \in [0,T]\) at the node \(v_0\) to satisfy box constraints, s.t.

So the full model in this subsection is given in (38). For this model, we define the set of feasible loads as

Our aim in this subsection is, for a time \(t^* \in [0,T]\), to compute the probability

which is the probability, that for a random boundary function \(b \in L^2(0,T)\), the solution of the linear system (38) satisfies the box constraints (39) at a point in time \(t^* \in [0,T]\). From Theorem 2 we know that

where \(b^\omega\) denotes the realization \(b(\omega )\) of the random boundary data for \(\omega \in \varOmega\). For \(i = 1, \ldots , n\), we define the (time dependent) values

and

Then, b is feasible at time \(t^* \in [0,T]\), iff

for \(t^* \ge - \sum _{j=1}^n \frac{L_j}{d_j}\) and it is feasible, iff

for \(-\sum _{j=k}^{\ell -1} \frac{L_j}{d_j} \le t^* < - \sum _{j=1}^n \frac{L_j}{d_j}\) (\(\ell \in \{1, \ldots , n\}\)). Due to the distribution of the random values \(a_m\) (\(m = 0, 1, \ldots\)), we have

with \(\mu _b(\cdot ) \in {\mathbb {R}}_+^n\) and \(\varSigma _b(\cdot ) \in {\mathbb {R}}^{n \times n}\) positive definite. To compute the desired probability for a point in time \(t^* \in [0,T]\), we use the idea of the SRD. For a point \(s \in {\mathbb {S}}^{n-1}\) at the unit sphere, we set

with \(\pi _b(t) = \mathcal {L}_b(t) s\) and \(\mathcal {L}_b(t)\), s.t. \(\mathcal {L}_b(t) \mathcal {L}_b^\top (t) = \varSigma _b(t)\). Because we are only interested in positive boundary values, we define the regular range as

Thus, similar to the stationary case, the (time dependent) one-dimensional sets

at a point in time \(t^*\) can be computed by intersecting the regular range with the inequality (41) resp. (42). If \(t^* \ge - \sum _{j=1}^n \frac{L_j}{d_j}\), then from (41) it follows

Define the values

and

Then we have

If \(t^* < - \sum _{j=1}^n \frac{L_j}{d_j}\) (\(\ell = 1, \ldots , n\)), then from (42) it follows

Define the values

and

Then we have

Thus, for every point in time \(t^* \in [0,T]\), the set \(M_s(t^*)\) can be represented as a union of disjoint intervals, which we can use to compute the probability for the random boundary function b to be feasible, like in (9).
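The idea of the radial decomposition can be illustrated in a simplified two-dimensional setting. The sketch below does not use the feasible set \(M(t^*)\) of the model; it assumes instead that the feasible set is a box in the load space, so that every ray meets it in at most one interval. In two dimensions the radial law is the chi distribution with two degrees of freedom, whose distribution function is available in closed form. All names and numbers are illustrative.

```python
import math
import numpy as np

def srd_box_probability_2d(mu, cov, lower, upper, n_dirs=10_000, seed=0):
    """Estimate P(lower <= xi <= upper) for xi ~ N(mu, cov) in R^2 by radial decomposition."""
    rng = np.random.default_rng(seed)
    angles = rng.uniform(0.0, 2.0 * np.pi, n_dirs)
    directions = np.column_stack([np.cos(angles), np.sin(angles)])  # uniform on S^1
    rays = directions @ np.linalg.cholesky(cov).T                   # rows are L s
    # Along each ray mu + r * (L s), find the interval of radii inside the box.
    with np.errstate(divide="ignore", invalid="ignore"):
        hit_lower = (lower - mu) / rays
        hit_upper = (upper - mu) / rays
    r_enter = np.maximum(np.minimum(hit_lower, hit_upper).max(axis=1), 0.0)
    r_exit = np.maximum(hit_lower, hit_upper).min(axis=1)
    # Radial law in two dimensions: chi distribution with 2 degrees of freedom.
    chi_cdf = lambda r: 1.0 - np.exp(-0.5 * r**2)
    mass = np.where(r_exit > r_enter, chi_cdf(r_exit) - chi_cdf(r_enter), 0.0)
    return float(mass.mean())

mu = np.array([1.0, 0.5])
cov = np.array([[1.0, 0.3], [0.3, 0.5]])
lower, upper = np.array([0.0, 0.0]), np.array([2.0, 2.0])

p_srd = srd_box_probability_2d(mu, cov, lower, upper)
draws = np.random.default_rng(1).multivariate_normal(mu, cov, size=200_000)
p_mc = float(np.mean(np.all((draws >= lower) & (draws <= upper), axis=1)))
print(f"SRD: {p_srd:.4f}  MC: {p_mc:.4f}")
```

For sets that are unions of several intervals along a ray, the single difference of distribution function values would be replaced by a sum over the intervals.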

Next, we approximate the probability \({\mathbb {P}}( b \in M(t^*))\) by using the KDE approach introduced in Sect. 2.1. We consider the stochastic equation corresponding to (38) with random boundary data. Note that this equation has also a solution \({\mathbb {P}}\)-almost surely. We assume that the distribution of the random variable \(r_1(t^*,0)\) is absolutely continuous with probability density function \(\varrho _{r,t^*}\) for \(t^* \in [0,T]\). Thus the point in time \(t^*\) has to be large enough, so that \(r_1(t^*,0)\) depends on the random boundary data as it is explained in Remark 5. Similar to Sect. 2.1, it holds

In order to approximate the unknown probability density function by the KDE, we need a sampling set for the random variable \(r_1(t^*,0)\). Therefore, let

for \(m=1,\ldots ,N_F\) be independent and identically distributed sampling sets of \(a_m \sim {\mathcal {N}}(\mu , \varSigma )\) (with mean value \(\mu \in {\mathbb {R}}_+^n\) and \(\varSigma \in {\mathbb {R}}^{n \times n}\) positive definite). Let

with \(b^{S,i} = \sum _{m=0}^{N_F} a_m^{S,i} a_m^0 \psi _m\) be the corresponding sampling of the random boundary function, where \(b^{S,i} \in L^2(0,T)\) with \(b^{S,i} \ge 0\) (\(i = 1, \ldots , N\)) \({\mathbb {P}}\)-almost surely. With this and Theorem 2 (or an appropriate numerical method for solving the system (38)), we define the sample

where \(r_1(t^*,0,b^{\mathcal {S},i})\) (\(i = 1, \ldots , N\)) is the solution of (38) at node \(v_0\) at time \(t^* \in [0,T]\) with boundary function \(b^{S,i} \in \mathcal {B}_{{\mathcal {A}}}\). The samplings \(\mathcal {B}_{{\mathcal {A}}}\) and \(\mathcal {R}_*\) are also independent and identically distributed. Then for a bandwidth \(h \in {\mathbb {R}}_+\), the probability density function \(\varrho _{r,t^*}\) is approximately given by

We choose the bandwidth according to (12). Therefore, we get the same convergence results for the KDE resp. the approximated probability as in (13) resp. (14). So we can approximate the probability for a random boundary function b to be feasible at time \(t^* \in [0,T]\) by

So far, the computation of the desired probability in this subsection was only for box constraints at a certain point in time \(t^* \in [0,T]\), e.g., the end time \(t^* = T\). As mentioned before, we are interested in box constraints for the full time period, which leads to a probabilistic constraint given in (32). The idea is that \(r_1(t,0)\) satisfies the box constraints for all \(t \in [0,T]\) if and only if the maximum and the minimum value of \(r_1(t,0)\) in [0, T] satisfy the box constraints:

We define the values

and

For the SRD we use a similar procedure as above. We have

That means we need to intersect the regular range with two inequalities of the form (43) resp. (44) to get the set \(M_s(\underline{t}) \cap M_s(\overline{t})\) and to compute the desired probability. This is only possible if one computes \(\underline{t}\) and \(\overline{t}\) for the deterministic boundary function \(b_D(t)\). Otherwise, due to the randomness of the boundary functions b(t), the argmin and the argmax can be shifted, and thus \(\underline{t}\) and \(\overline{t}\) depend on this uncertainty. So general \(\underline{t}\) and \(\overline{t}\) do not exist, and it is not clear where to evaluate the mean and the variance in (43) resp. (44). But if we use the deterministic boundary function, \(\underline{t}\) and \(\overline{t}\) do not coincide with the points at which the random boundary functions attain their minimal and maximal values, and thus the result may not be meaningful.

The KDE uses only the solution of the model for estimating an analytical probability density function, which we can use later for the optimization. To extend the KDE to probabilistic constraints like (32), we consider the minimal and maximal contamination concentration at node \(v_0\) in the time period [0, T]. We assume that the distribution of the random vector \(R:= \left( \min _{t \in [0,T]}r_1(t,0), \max _{t \in [0,T]} r_1(t,0) \right) ^T\) is absolutely continuous with probability density function \(\varrho _R\). Using this time-independent variable, we get

We now use a two-dimensional KDE as in the stationary case for tree-structured graphs. For the set \(\mathcal {B}_{{\mathcal {A}}}\) we define the sampling set of the random variable R by

with

and

This sampling is also independent and identically distributed. Using the KDE given in (19) with bandwidth (18), the probability density function of the minimal and maximal contamination rate at node \(v_0\) in the time period [0, T] is approximately given by

where \((\sigma _{N,1}^{\min })^2\) and \((\sigma _{N,1}^{\max })^2\) are the variances of \(\underline{r}_1^{S,i}\) and \(\overline{r}_1^{S,i}\) (\(i = 1, \ldots , N\)). For this estimator we get the same convergence results for the KDE resp. the approximated probability as in (21) and (22). Thus we approximate the desired probability as follows:

Remark 8

If \(\underline{r}_1^{S,i}\) or \(\overline{r}_1^{S,i}\) is attained in the time period in which the solution depends on the initial data, then the distribution function of the minimal resp. maximal contamination rate contains a discontinuity. Thus, a probability density function in the classical sense does not even exist. So one has to guarantee that \(\underline{r}_1^{S,i}\) and \(\overline{r}_1^{S,i}\) are not attained at the beginning of the time period, e.g., by excluding this part from the probabilistic constraint. As mentioned in Remark 5, for times \(t \ge t^*\) with

the solution does not depend on the initial condition anymore. So instead of solving (32) one can solve

From the point of view of the application, this makes sense, since the initial state is either given a priori or can be chosen a priori s.t. all bounds are satisfied for small times.

Example 3

Consider the graph with one edge shown in Fig. 9.

For the computation, we use \(r_0(x) = 5 \exp \left( \frac{m}{d} (x-L) \right)\) as initial function, \(b_D(t) = -2 \sin (2t) + 5\) and \(b(t,\omega ) = \sum _{m=0}^{\infty } a_m(\omega ) a^0_m \psi _m(t) + 5\) as random boundary function. The coefficients \(a^0_m\) for the shifted function \(b_D(t) - 5\) are given by (34). The initial function is chosen s.t. the solution is constant at \(x = 0\) for small times, so we guarantee that all minimal values are below this constant value and all maximal values are above this constant value (see Remark 8). The other values are given in Table 8.

Further, we use 101 points for the time discretization and we cut the Fourier series of the random boundary data after 30 terms. A sampling of 10 random boundary functions and the corresponding solutions are shown in Fig. 10.

For the MATLAB\(^{\text{\textregistered}}\) implementation, we use a sampling of \(1 \cdot 10^5\) boundary functions in terms of Fourier series. We compare the probabilities of the KDE again with a classical Monte Carlo method (MC). For each random boundary function, the MC method checks whether the bounds are satisfied at every point of the time discretization. If this is not the case, the boundary function is not feasible. The results of the tests are shown in Table 9.
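The Monte Carlo check described above can be sketched in a few lines. The following Python snippet is only an illustration and not the implementation used for Table 9: the function name `mc_feasibility_probability` is hypothetical, and the trajectories are synthetic stand-ins rather than solutions of (38).

```python
import numpy as np

def mc_feasibility_probability(solutions, r_min, r_max):
    """Fraction of sampled trajectories that stay inside [r_min, r_max].

    solutions: array of shape (N, n_t); row i holds the node values
    r_1(t_j, 0) for the i-th sampled boundary function (assumed to be
    computed beforehand by some solver for the model).
    """
    solutions = np.asarray(solutions, dtype=float)
    # A sample is feasible only if the bounds hold at every time point.
    feasible = np.all((solutions >= r_min) & (solutions <= r_max), axis=1)
    return float(feasible.mean())

# Synthetic stand-in trajectories (NOT output of the model in the paper).
rng = np.random.default_rng(0)
t = np.linspace(0.0, 10.0, 101)          # 101 time points, as in Example 3
amplitude = rng.normal(2.0, 0.3, size=(1000, 1))
trajectories = 5.0 - amplitude * np.sin(2.0 * t)

print(mc_feasibility_probability(trajectories, 2.5, 7.5))
```

Checking the bounds at every grid point is equivalent to checking only the minimum and the maximum of each trajectory over the grid, which is the quantity the two-dimensional KDE works with.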

One can see that the results of MC and the KDE are almost equal. The mean probability in MC resp. KDE is \(74.38\%\) resp. \(74.37\%\) and the variance is 0.0141 resp. 0.0142. For a confidence level of \(95\%\) the confidence interval for the MC probability is \([74.26\%, 74.42\%]\), which is also the confidence interval of the KDE probability. Both methods are still quite fast. MATLAB\(^{\text{\textregistered}}\) needs much more time (\(\sim 1\) minute) for sampling and computing the random boundary data than for computing the probabilities, which takes less than one second.

In this subsection, we have considered the SRD and the KDE in a dynamic setting based on the results from Sect. 2. First, the box constraints were required to hold only at a certain point in time \(t^* \in [0,T]\), and then for the full time period [0, T].
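As an illustration of the two-dimensional KDE on the minimal and maximal values, the following Python sketch evaluates the estimated probability in closed form. It rests on several assumptions that are not taken from the paper: a Gaussian product kernel, a Scott-type bandwidth \(h_k = \sigma_k N^{-1/6}\) as a stand-in for (18), integration of the estimated density over \([r^{\min}, r^{\max}]^2\), and synthetic trajectories. The function names are hypothetical.

```python
import numpy as np
from math import erf, sqrt

def normal_cdf(z):
    """Standard normal CDF, evaluated elementwise via the error function."""
    z = np.asarray(z, dtype=float)
    return 0.5 * (1.0 + np.vectorize(erf)(z / sqrt(2.0)))

def kde_min_max_probability(trajectories, r_min, r_max):
    """KDE estimate of P(r_min <= min_t r_1 and max_t r_1 <= r_max)."""
    traj = np.asarray(trajectories, dtype=float)
    lows, highs = traj.min(axis=1), traj.max(axis=1)   # one sample pair per row
    n = lows.size
    # ASSUMPTION: Scott-type bandwidths for a 2-D product kernel.
    h_low = lows.std(ddof=1) * n ** (-1.0 / 6.0)
    h_high = highs.std(ddof=1) * n ** (-1.0 / 6.0)
    # Integrating a Gaussian kernel over [r_min, r_max] gives a CDF difference.
    mass_low = normal_cdf((r_max - lows) / h_low) - normal_cdf((r_min - lows) / h_low)
    mass_high = normal_cdf((r_max - highs) / h_high) - normal_cdf((r_min - highs) / h_high)
    return float(np.mean(mass_low * mass_high))

# Synthetic stand-in trajectories (NOT output of the model in the paper).
rng = np.random.default_rng(0)
t = np.linspace(0.0, 10.0, 101)
amplitude = rng.normal(2.0, 0.3, size=(1000, 1))
trajectories = 5.0 - amplitude * np.sin(2.0 * t)

print(kde_min_max_probability(trajectories, 2.5, 7.5))
```

Because the kernel integrals are available in closed form, the estimate is a smooth function of the bounds, which is what makes it usable inside an optimization problem.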

3.4 Stochastic optimization on dynamic flow networks

In this subsection, we formulate necessary optimality conditions for the dynamic hyperbolic system introduced before. In the previous subsection, we introduced different ways to compute the probability for a random boundary function to be feasible. Using the KDE gives us a good approximation of this probability. Define the set

and the function

For a probability level \(\alpha \in (0,1)\), consider the optimization problem with the approximated probabilistic constraints

Similar to the stationary case, for \(k = 1, \ldots , n\) and \(i = 1, \ldots , N\), we define

where

with samples \(b^{\mathcal {S},i} \in \mathcal {B}_{{\mathcal {A}}}\). Then we can rewrite the computation of the desired probability using the error function as

We define the function

The difference from the stationary case is that the terms in both dimensions depend on the same upper bound, so we have to use the product rule to compute the derivative. It follows

As mentioned in Remark 2, the LICQ is always fulfilled and we can state the necessary optimality conditions for the approximated problem (46):

Corollary 3

Let \(r_0^{*,\max } \in {\mathbb {R}}\) be a (local) optimal solution of (46). Since the LICQ holds in \(r_0^{*,\max }\), there exists a multiplier \(\mu ^* \ge 0\), s.t.

Thus, \((r_0^{*,\max },\mu ^*) \in {\mathbb {R}}^2\) is a Karush–Kuhn–Tucker point.
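The product-rule structure of the derivative with respect to the upper bound can be checked numerically. The Python sketch below is not the paper's formula verbatim: it assumes a Gaussian product kernel, fixed bandwidths of Scott type, and synthetic samples of the minimal and maximal values. All function names are hypothetical. It compares the analytic derivative with a central finite difference.

```python
import numpy as np
from math import erf, sqrt, pi

# Standard normal CDF and PDF, applied elementwise.
_cdf = np.vectorize(lambda z: 0.5 * (1.0 + erf(z / sqrt(2.0))))

def _pdf(z):
    return np.exp(-0.5 * z ** 2) / sqrt(2.0 * pi)

def _masses(r_max, r_min, lows, highs, h_low, h_high):
    """Kernel mass inside [r_min, r_max] for each sample, per dimension."""
    a = _cdf((r_max - lows) / h_low) - _cdf((r_min - lows) / h_low)
    b = _cdf((r_max - highs) / h_high) - _cdf((r_min - highs) / h_high)
    return a, b

def kde_probability(r_max, r_min, lows, highs, h_low, h_high):
    a, b = _masses(r_max, r_min, lows, highs, h_low, h_high)
    return float(np.mean(a * b))

def kde_probability_derivative(r_max, r_min, lows, highs, h_low, h_high):
    """d/d r_max of kde_probability; both factors depend on r_max."""
    a, b = _masses(r_max, r_min, lows, highs, h_low, h_high)
    da = _pdf((r_max - lows) / h_low) / h_low
    db = _pdf((r_max - highs) / h_high) / h_high
    return float(np.mean(da * b + a * db))   # product rule

# Synthetic samples of minimal and maximal values (NOT data from the paper).
rng = np.random.default_rng(1)
lows = rng.normal(3.0, 0.3, 500)
highs = rng.normal(7.0, 0.3, 500)
h_low = lows.std(ddof=1) * lows.size ** (-1.0 / 6.0)     # assumed bandwidth rule
h_high = highs.std(ddof=1) * highs.size ** (-1.0 / 6.0)

r_min, r_max, eps = 2.5, 7.2, 1e-6
args = (r_min, lows, highs, h_low, h_high)
analytic = kde_probability_derivative(r_max, *args)
finite_diff = (kde_probability(r_max + eps, *args)
               - kde_probability(r_max - eps, *args)) / (2.0 * eps)
print(analytic, finite_diff)
```

Under these assumptions the derivative is positive, since enlarging the upper bound can only increase the kernel mass in both dimensions.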

Remark 9

In the n-dimensional case, in which we have bounds at n nodes, the computation of the desired probability is the following:

Note that this is a 2n-dimensional KDE since every boundary function provides two samples, one for the minimal values and one for the maximal values. The partial derivatives with respect to \(r_j^{\max }\) are given by

where \((\sigma _{N,k}^{\max })^2\) resp. \((\sigma _{N,k}^{\min })^2\) are the variances of the sampling of the maximal values resp. of the minimal values.

3.5 Application to a realistic network

For this section we essentially use the graph of GasLib-11, but since this graph was designed for gas transportation, we modify it slightly. First, we assume that the compressor edges are normal edges. As in Sect. 2.4, we assume that the valve is closed, s.t. the edge between node \(v_2\) and \(v_4\) vanishes. The water is contaminated at the nodes \(v_6\), \(v_9\) and \(v_{10}\) and the pollution distributes in the graph. We assume that the pollution distributes equally at node \(v_7\), i.e., half of the pollution enters edge \(e_6\) and the other half enters \(e_7\). We define pollution bounds \(r_0^{\min }, r_0^{\max } \in {\mathbb {R}}_{\ge 0}\) for node \(v_0\), \(r_1^{\min }, r_1^{\max } \in {\mathbb {R}}_{\ge 0}\) for node \(v_1\) and \(r_5^{\min }, r_5^{\max } \in {\mathbb {R}}_{\ge 0}\) for node \(v_5\). We want these bounds to be satisfied. A scheme of this network is shown in Fig. 11. For simplicity we assume that \(m = -0.1\), \(d = -1\) and \(L = 1\) for every edge.

The boundary functions are given by

The initial conditions are given by

The initial conditions are chosen s.t. the solution at the nodes is constant for as long as the information from the boundary nodes needs to reach the nodes, and s.t. they satisfy the \(C^0\)-compatibility with the boundary conditions, which is

The boundary functions and the solution at the nodes \(v_0\), \(v_1\) and \(v_5\) are shown in Fig. 12. Since the boundary functions do not satisfy \(b_i(0) = 0\) (\(i \in \{6,9,10\}\)), we compute the Fourier series for the functions \((b_i(t)-b_i(0))\), randomize them by multiplying every summand of the series by a Gaussian distributed random number (with \(\mu = 1\) and \(\sigma ^2 = 0.1\)), and then add the constants \(b_i(0)\) to the random Fourier series. For the implementation we use the first 30 terms of the Fourier series, i.e., \(N_F = 30\). Some random scenarios for the boundary functions are shown in Fig. 13.
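The randomization procedure just described can be sketched as follows. The Python snippet is a minimal illustration under stated assumptions: the orthonormal basis \(\psi_m(t) = \sqrt{2/T}\,\sin ((m+\tfrac{1}{2})\pi t/T)\), which vanishes at \(t = 0\), is an assumption and may differ from the basis behind (34); the coefficients are computed by a trapezoidal rule; and the boundary function \(b_D(t) = -2\sin (2t) + 5\) from Example 3 is used because the functions in (47) are not reproduced here. The function name is hypothetical.

```python
import numpy as np

def sample_boundary_functions(b_det, T, n_terms=30, n_samples=10,
                              n_grid=2001, mean=1.0, var=0.1, seed=0):
    """Random boundary functions from a randomized, truncated Fourier series."""
    rng = np.random.default_rng(seed)
    t = np.linspace(0.0, T, n_grid)
    b0 = b_det(0.0)
    shifted = b_det(t) - b0                      # vanishes at t = 0
    m = np.arange(n_terms)
    # ASSUMED basis: orthonormal in L^2(0, T) with psi_m(0) = 0.
    psi = np.sqrt(2.0 / T) * np.sin((m[:, None] + 0.5) * np.pi * t[None, :] / T)
    # Trapezoidal quadrature weights for the coefficients a_m^0.
    w = np.full(n_grid, t[1] - t[0])
    w[0] *= 0.5
    w[-1] *= 0.5
    coeff = psi @ (w * shifted)
    # One Gaussian factor per summand and per sample.
    factors = rng.normal(mean, np.sqrt(var), size=(n_samples, n_terms))
    samples = (factors * coeff) @ psi + b0       # add the constant back
    return t, samples

def b_det(t):
    return -2.0 * np.sin(2.0 * t) + 5.0          # b_D from Example 3

t, samples = sample_boundary_functions(b_det, T=10.0)
print(samples.shape)
```

Since every basis function vanishes at \(t = 0\), all sampled boundary functions start at the deterministic value \(b_D(0)\), so the compatibility with the initial condition at \(t = 0\) is not affected by the randomization.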

The lower pollution bounds are given by

Consider the linear function

with \(c = \mathbb {1}_3\). We first solve the deterministic problem

with \(T = 10\) and the boundary functions given in (47). As in the stationary case we use the default setting of the MATLAB\(^{\text{\textregistered}}\)-routine fmincon to solve (48), which is an interior-point algorithm. It returns

as optimal deterministic solution, i.e., as the lowest upper pollution bound for the nodes \(v_0\), \(v_1\) and \(v_5\). Now we consider uncertain water contamination at the nodes \(v_6\), \(v_9\) and \(v_{10}\). We compute the probability that the random contamination satisfies the bounds \([r^{\min }, r^{\max }_{\text {det}}]\) at the nodes \(v_0\), \(v_1\) and \(v_5\), which is \({\mathbb {P}}(b(t) \in M(r^{\max }_{\text {det}})\ \forall t \in [0,T])\). This is shown for 8 tests (each with \(1 \cdot 10^5\) samples) in Table 10.

The mean MC probability is \(37.78\%\) and the mean KDE probability is \(37.63\%\). For a confidence level of \(95\%\) the confidence interval for the MC probability is \([37.65\%, 37.90\%]\) and the confidence interval for the KDE probability is \([37.51\%, 37.76\%]\). Just like in the stationary case, the deterministic upper bound is unsatisfactory, so we consider the probabilistic constrained optimization problem (46) with \(\alpha := 0.75\). The problems described in Sect. 2.4 also occur here, but fmincon returns a solution if we choose the optimal deterministic solution as starting point. The results of 8 tests with \(1 \cdot 10^5\) scenarios are shown in Table 11. This time we show 3 decimal places because otherwise the solutions would be equal. In 8 more tests we solve (46) by using Corollary 3. The results differ from the optimal solutions computed by fmincon by at most about \(1 \cdot 10^{-7}\). So here one could also expect that the necessary optimality conditions are sufficient, but we do not analyze this here.

One can see that all results are almost equal. The optimal upper pollution bounds of the probabilistic constrained optimization problem (46) are larger than the optimal upper pollution bounds of the deterministic optimization problem (48). The computation time is quite similar to the stationary case, with the difference that solving the necessary optimality conditions needed much more time here (about 2 min per test).

4 Conclusion

In this paper, we have shown two different ways to evaluate probabilistic constraints in the context of hyperbolic balance laws on graphs for both a stationary and a dynamic setting with box constraints for the solution.

The spheric radial decomposition provides a good method for computing the probabilities for random boundary data to be feasible in the stationary case and in the dynamic case for box constraints for a certain point in time. Because the spheric radial decomposition is explicitly based on the analytical solution, it leads to good results. But as soon as the analytical solution is not given, the inequalities cannot be derived and so the spheric radial decomposition becomes an almost purely numerical method.

A kernel density estimator does not need the analytical solution; a numerically computed solution is sufficient. It provides an estimated but explicit representation of the probability density function, which can be used for computing the probabilistic constraint and for deriving necessary optimality conditions for probabilistic constrained optimization problems. In addition, the kernel density estimator is smooth even if the exact probability density function is nonsmooth. The examples showed that the kernel density estimator provides results almost as good as the results from the spheric radial decomposition and a classical Monte Carlo approach. Of course, the Monte Carlo approach is faster and easier to use, but it cannot be used to obtain analytical results such as the necessary optimality conditions.