Abstract

A nonlinear MPC framework is presented that is suitable for dynamical systems with sampling times in the (sub)millisecond range and that allows for an efficient implementation on embedded hardware. The algorithm is based on an augmented Lagrangian formulation with a tailored gradient method for the inner minimization problem. The algorithm is implemented in the software framework GRAMPC and is a fundamental revision of an earlier version. Detailed performance results are presented for a test set of benchmark problems and in comparison to other nonlinear MPC packages. In addition, runtime results and memory requirements for GRAMPC on ECU level demonstrate its applicability on embedded hardware.

1 Introduction

Model predictive control (MPC) is one of the most popular advanced control methods due to its ability to handle linear and nonlinear systems with constraints and multiple inputs. The well-known challenge of MPC is the numerical effort that is required to solve the underlying optimal control problem (OCP) online. In the recent past, however, the methodological as well as algorithmic development of MPC for linear and nonlinear systems has matured to a point that MPC nowadays can be applied to highly dynamical problems in real-time. Most approaches of real-time MPC either rely on suboptimal solution strategies (Scokaert et al. 1999; Diehl et al. 2004; Graichen and Kugi 2010) and/or use tailored optimization algorithms to optimize the computational efficiency.

Particularly for linear systems, the MPC problem can be reduced to a quadratic problem, for which the optimal control over the admissible polyhedral set can be precomputed. This results in an explicit MPC strategy with minimal computational effort for the online implementation (Bemporad et al. 2002a, b), though this approach is typically limited to a small number of state and control variables. An alternative to explicit MPC is the online active set method (Ferreau et al. 2008) that takes advantage of the receding horizon property of MPC in the sense that typically only a small number of constraints becomes active or inactive from one MPC step to the next.

In contrast to active set strategies, interior point methods relax the complementarity conditions for the constraints and therefore solve a relaxed set of optimality conditions in the interior of the admissible constraint set. The MPC software packages FORCES (PRO) (Domahidi et al. 2012) and fast_mpc (Wang and Boyd 2010) employ interior point methods for linear MPC problems. An alternative to active set and interior point methods are accelerated gradient methods (Richter et al. 2012) that originally go back to Nesterov’s fast gradient method for convex problems (Nesterov 2003). A corresponding software package for linear MPC is FiOrdOs (Jones et al. 2012). An augmented Lagrangian framework for convex quadratic problems as they arise in linear MPC is implemented in the toolbox DuQuad (Necoara and Kvamme 2015) with an analysis of the computational complexity in Nedelcu et al. (2014).

For nonlinear systems, one of the first real-time MPC algorithms was the continuation/GMRES method (Ohtsuka 2004) that solves the optimality conditions of the underlying optimal control problem based on a continuation strategy. The well-known ACADO Toolkit (Houska et al. 2011) uses the above-mentioned active set strategy in combination with a real-time iteration scheme to efficiently solve nonlinear MPC problems. Another recently presented MPC toolkit is VIATOC (Kalmari et al. 2015) that employs a projected gradient method to solve the time-discretized, linearized MPC problem.

Besides the real-time aspect of MPC, a current focus of research is on embedded MPC, i.e. the neat integration of MPC on embedded hardware with limited resources. This might be field programmable gate arrays (FPGA) (Ling et al. 2008; Käpernick et al. 2014; Hartley and Maciejowski 2015), programmable logic controllers (PLC) in standard automation systems (Kufoalor et al. 2014; Käpernick and Graichen 2014b) or electronic control units (ECU) in automotive applications (Mesmer et al. 2018). For embedded MPC, several challenges arise in addition to the real-time demand. For instance, numerical robustness even at low computational accuracy and tolerance against infeasibility are important aspects as well as the ability to provide fast, possibly suboptimal iterates with minimal computational demand. In this regard, it is also desirable to satisfy the system dynamics in the single MPC iterations in order to maintain dynamically consistent iterates. Low code complexity for portability and a small memory footprint are further important aspects to allow for an efficient implementation of MPC on embedded hardware. The latter aspects, in particular, require the usage of streamlined, self-contained code rather than highly complex MPC algorithms. To meet these challenges, gradient-based algorithms have become popular choices due to their general simplicity and low computational complexity (Giselsson 2014; Kouzoupis et al. 2015; Necoara 2015). A comprehensive overview of the literature on first-order methods for embedded optimization can be found in the survey papers (Ferreau et al. 2017; Findeisen et al. 2018) as well as in the aforementioned references (Nedelcu et al. 2014; Necoara and Kvamme 2015).

Following this motivation, this paper presents a software framework for nonlinear MPC that can be efficiently used for embedded control of nonlinear and highly dynamical systems with sampling times in the (sub)millisecond range. The presented framework is a fundamental revision of the MPC toolbox GRAMPC (Käpernick and Graichen 2014a) (Gradient-Based MPC – [græmp'si:]) that was originally developed for nonlinear systems with control constraints. The revised algorithm of GRAMPC presented in this paper significantly extends the applicability of the initial GRAMPC version by accounting for general nonlinear equality and inequality constraints as well as terminal constraints. Besides “classical” MPC, GRAMPC can now be used for MPC on shrinking horizon, general optimal control problems, moving horizon estimation and parameter optimization problems including free end time problems. The new algorithm employs an augmented Lagrangian formulation in connection with a real-time gradient method and tailored line search and multiplier update strategies that are optimized for a time and memory efficient implementation on embedded hardware. The performance and effectiveness of augmented Lagrangian methods for embedded nonlinear MPC were recently demonstrated for various application examples on rapid prototyping and ECU hardware level (Harder et al. 2017; Mesmer et al. 2018; Englert and Graichen 2018). Besides the presentation of the augmented Lagrangian algorithm and the general usage of GRAMPC, the paper compares its performance to the nonlinear MPC toolkits ACADO and VIATOC for different benchmark problems. Moreover, runtime results are presented for GRAMPC on dSPACE and ECU level including its memory footprint to demonstrate its applicability on embedded hardware.

The paper is organized as follows. Section 2 presents the general problem formulation and illustrates its application to model predictive control and moving horizon estimation. Section 3 describes the augmented Lagrangian framework in combination with a gradient method for the inner minimization problem. Section 4 gives an overview of the structure and usage of GRAMPC. Section 5 evaluates the performance of GRAMPC for different benchmark problems and in comparison to ACADO and VIATOC, before Sect. 6 closes the paper.

Several norms are used in the paper, in particular in Sect. 3. The Euclidean norm of a vector \(\varvec{x}\in {\mathbb{R}}^n\) is denoted by \(\Vert \varvec{x}\Vert _2\), the weighted quadratic norm by \(\Vert \varvec{x}\Vert _{\varvec{Q}}=(\varvec{x}^{\mathsf{T}}\varvec{Q}\varvec{x})^{1/2}\) for some positive definite matrix \(\varvec{Q}\), and the scalar product of two vectors \(\varvec{x},\varvec{y}\in {\mathbb{R}}^n\) is defined as \(\langle \varvec{x},\varvec{y}\rangle =\varvec{x}^{\mathsf{T}}\varvec{y}\). For a time function \(\varvec{x}(t)\), \(t\in [0,T]\) with \(T<\infty\), the vector-valued \(L^2\)-norm is defined by \(\Vert \varvec{x}\Vert _{L_2}=\big (\sum _{i=1}^n \Vert x_i \Vert _{L_2}^2\big )^{1/2}\) with \(\Vert x_i \Vert _{L_2}=\big (\int _0^T x_i^2(t)\,{\mathrm{d}}t\big )^{1/2}\). The supremum-norm is defined componentwise in the sense of \(\Vert \varvec{x}\Vert _{L_\infty }=\big [\Vert x_1\Vert _{L_\infty } \ldots \Vert x_n\Vert _{L_\infty } \big ]^{\mathsf{T}}\) with \(\Vert x_i\Vert _{L_\infty }=\sup _{t\in [0,T]} |x_i(t)|\). The inner product of two time functions is denoted by \(\langle \varvec{x},\varvec{y}\rangle =\int _0^T \varvec{x}^{\mathsf{T}}(t)\varvec{y}(t)\,{\mathrm{d}}t\) using the same (overloaded) \(\langle \,\rangle\)-notation as in the vector case. Moreover, function arguments (such as time t) might be omitted in the text for the sake of readability.

2 Problem formulation

This section describes the class of optimal control problems that can be solved by GRAMPC. The framework is especially suitable for model predictive control and moving horizon estimation, as the numerical solution method is tailored to embedded applications. Nevertheless, GRAMPC can be used to solve general optimal control problems or parameter optimization problems as well.

2.1 Optimal control problem

GRAMPC solves nonlinear constrained optimal control problems with fixed or free end time and potentially unknown parameters. Consequently, the most generic problem formulation that can be addressed by GRAMPC is given by

with state \(\varvec{x}\in {\mathbb{R}}^{N_{\varvec{x}}}\), control \(\varvec{u} \in {\mathbb{R}}^{N_{\varvec{u}}}\), parameters \(\varvec{p}\in {\mathbb{R}}^{N_{\varvec{p}}}\) and end time \(T\in {\mathbb{R}}\). The cost to be minimized (1a) consists of the terminal and integral cost functions \(V:{\mathbb{R}}^{N_{\varvec{x}}}\times {\mathbb{R}}^{N_{\varvec{p}}}\times {\mathbb{R}} \rightarrow {\mathbb{R}}\) and \(l:{\mathbb{R}}^{N_{\varvec{x}}}\times {\mathbb{R}}^{N_{\varvec{u}}}\times {\mathbb{R}}^{N_{\varvec{p}}}\times {\mathbb{R}} \rightarrow {\mathbb{R}}\), respectively. The dynamics (1b) are given in semi-implicit form with the (constant) mass matrix \(\varvec{M}\), the nonlinear system function \(\varvec{f}:{\mathbb{R}}^{N_{\varvec{x}}}\times {\mathbb{R}}^{N_{\varvec{u}}}\times {\mathbb{R}}^{N_{\varvec{p}}}\times {\mathbb{R}} \rightarrow {\mathbb{R}}^{N_{\varvec{x}}}\), and the initial state \(\varvec{x}_0\). The system class (1b) includes standard ordinary differential equations for \(\varvec{M}=\varvec{I}\) as well as (index-1) differential-algebraic equations with singular mass matrix \(\varvec{M}\). In addition, (1c) and (1d) account for equality and inequality constraints \(\varvec{g}:{\mathbb{R}}^{N_{\varvec{x}}}\times {\mathbb{R}}^{N_{\varvec{u}}}\times {\mathbb{R}}^{N_{\varvec{p}}}\times {\mathbb{R}} \rightarrow {\mathbb{R}}^{N_{\varvec{g}}}\) and \(\varvec{h}:{\mathbb{R}}^{N_{\varvec{x}}}\times {\mathbb{R}}^{N_{\varvec{u}}}\times {\mathbb{R}}^{N_{\varvec{p}}}\times {\mathbb{R}} \rightarrow {\mathbb{R}}^{N_{\varvec{h}}}\) as well as for the corresponding terminal constraints \(\varvec{g}_T:{\mathbb{R}}^{N_{\varvec{x}}}\times {\mathbb{R}}^{N_{\varvec{p}}}\times {\mathbb{R}} \rightarrow {\mathbb{R}}^{N_{\varvec{g}_T}}\) and \(\varvec{h}_T:{\mathbb{R}}^{N_{\varvec{x}}}\times {\mathbb{R}}^{N_{\varvec{p}}}\times {\mathbb{R}} \rightarrow {\mathbb{R}}^{N_{\varvec{h}_T}}\), respectively.
Finally, (1e) and (1f) represent box constraints for the optimization variables \(\varvec{u}=\varvec{u}(t)\), \(\varvec{p}\) and T (if applicable).
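For orientation, a form of problem (1) that is consistent with the description above can be sketched as follows. The bound symbols \(\varvec{u}_{\min }\), \(\varvec{u}_{\max }\), \(\varvec{p}_{\min }\), \(\varvec{p}_{\max }\), \(T_{\min }\), \(T_{\max }\) are notation assumed here for the box constraints, and the grouping into (1a)–(1f) follows the references made in the text.

\[
\begin{aligned}
\min _{\varvec{u},\varvec{p},T}\quad & J(\varvec{u},\varvec{p},T;\varvec{x}_0) = V(\varvec{x}(T),\varvec{p},T) + \int _0^T l(\varvec{x}(t),\varvec{u}(t),\varvec{p},t)\,{\mathrm{d}}t && \text{(1a)}\\
\text{s.t.}\quad & \varvec{M}\varvec{\dot{x}}(t) = \varvec{f}(\varvec{x}(t),\varvec{u}(t),\varvec{p},t),\quad \varvec{x}(0)=\varvec{x}_0 && \text{(1b)}\\
& \varvec{g}(\varvec{x}(t),\varvec{u}(t),\varvec{p},t) = \varvec{0},\quad \varvec{g}_T(\varvec{x}(T),\varvec{p},T) = \varvec{0} && \text{(1c)}\\
& \varvec{h}(\varvec{x}(t),\varvec{u}(t),\varvec{p},t) \le \varvec{0},\quad \varvec{h}_T(\varvec{x}(T),\varvec{p},T) \le \varvec{0} && \text{(1d)}\\
& \varvec{u}(t) \in [\varvec{u}_{\min },\varvec{u}_{\max }] && \text{(1e)}\\
& \varvec{p} \in [\varvec{p}_{\min },\varvec{p}_{\max }],\quad T \in [T_{\min },T_{\max }] && \text{(1f)}
\end{aligned}
\]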

In comparison to the previous version of the GRAMPC toolbox (Käpernick and Graichen 2014a), the problem formulation (1) supports optimization with respect to parameters, a free end time, general state-dependent equality and inequality constraints, as well as terminal constraints. While the original GRAMPC version was based on a real-time gradient method, the new algorithm presented in this paper employs an augmented Lagrangian framework to account for the aforementioned general constraints. Furthermore, semi-implicit dynamics with a constant mass matrix \(\varvec{M}\) can be handled using the Rosenbrock solver RODAS (Hairer and Wanner 1996) as numerical integrator. This extends the range of possible applications besides MPC to general optimal control, parameter optimization, and moving horizon estimation. However, the primary target is embedded model predictive control of nonlinear systems, as the numerical solution algorithm is optimized for time and memory efficiency.

2.2 Application to model predictive control

Model predictive control relies on the iterative solution of an optimal control problem of the form

with the MPC-internal time coordinate \(\tau \in [0,T]\) over the prediction horizon T. The initial state value \(\varvec{x}_k\) is the measured or estimated system state at the current sampling instant \(t_k=t_0+k \varDelta t\), \(k \in {\mathbb{N}}\) with sampling time \(0<\varDelta t\le T\). The first part of the computed control trajectory \(\varvec{u}(\tau )\), \(\tau \in [0,\varDelta t)\) is used as control input for the actual plant over the time interval \(t\in [t_k,t_{k+1})\), before OCP (2) is solved again with the new initial state \(\varvec{x}_{k+1}\).
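The receding horizon principle described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not GRAMPC code: the function names `solve_ocp_stub`, `plant_step` and `run_mpc` are hypothetical, the plant is an assumed integrator \(\dot{x}=u\), and the "solver" is a stand-in that rolls out \(u=-x\) on the model instead of solving an OCP.

```python
def solve_ocp_stub(x_k, n_steps, dt):
    """Stand-in for the OCP solver (hypothetical, NOT an optimizer).

    Returns a control sequence over the prediction horizon by rolling
    out u = -x on the assumed model x' = u, starting from x_k.
    """
    u_traj, x = [], x_k
    for _ in range(n_steps):
        u = -x
        u_traj.append(u)
        x = x + dt * u
    return u_traj


def plant_step(x, u, dt):
    """Assumed plant x' = u, advanced by one explicit Euler step."""
    return x + dt * u


def run_mpc(x0, dt=0.1, horizon=1.0, n_mpc_steps=50):
    """Receding horizon loop: re-solve at every sampling instant t_k."""
    n_steps = round(horizon / dt)
    x, history = x0, [x0]
    for _ in range(n_mpc_steps):
        u_traj = solve_ocp_stub(x, n_steps, dt)  # OCP with initial state x_k
        x = plant_step(x, u_traj[0], dt)         # apply only the first part
        history.append(x)                        # x_{k+1} seeds the next solve
    return history
```

Only the first element of each computed control trajectory reaches the plant; the remainder is discarded or, in a real-time setting, reused as a warm start.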

A popular choice of the cost functional (2b) is the quadratic form

with the desired setpoint \((\varvec{x}_{\text {des}},\varvec{u}_{\text {des}})\) and the positive (semi-)definite matrices \(\varvec{P}\), \(\varvec{Q}\), \(\varvec{R}\). Stability is often ensured in MPC by imposing a terminal constraint \(\varvec{x}(T)\in \varOmega _\beta\), where the set \(\varOmega _\beta =\{\varvec{x}\in {\mathbb{R}}^{N_{\varvec{x}}}\,|\, V(\varvec{x})\le \beta \}\) for some \(\beta >0\) is defined in terms of the terminal cost \(V(\varvec{x})\) that can be computed from solving a Lyapunov or Riccati equation that renders the set \(\varOmega _\beta\) invariant under a local feedback law (Chen and Allgöwer 1998; Mayne et al. 2000). In view of the OCP formulation (1), the terminal region as well as general box constraints on the state as given in (2c) can be expressed as

Note, however, that terminal constraints are often omitted in embedded or real-time MPC in order to minimize the computational effort (Limon et al. 2006; Graichen et al. 2010; Grüne 2013). In particular, real-time feasibility is typically achieved by limiting the number of iterations per sampling step and using the current solution for warm starting in the next MPC step, in order to incrementally reduce the suboptimality over the runtime of the MPC (Diehl et al. 2004; Graichen et al. 2010; Graichen 2012).

An alternative to the “classical” MPC formulation (2) is shrinking horizon MPC, see e.g. Diehl et al. (2005), Skaf et al. (2010) and Grüne and Palma (2014), where the horizon length T is shortened over the MPC steps. This can be achieved by formulating the underlying OCP (2) as a free end time problem with a terminal constraint \(\varvec{g}_T(\varvec{x}(T))=\varvec{x}(T)-\varvec{x}_{\text {des}}=\varvec{0}\) to ensure that a desired setpoint \(\varvec{x}_{\text {des}}\) is reached in finite time instead of the asymptotic behavior of fixed-horizon MPC.

2.3 Application to moving horizon estimation

Moving horizon estimation (MHE) can be seen as the dual of MPC for state estimation problems. Similar to MPC, MHE relies on the online solution of a dynamic optimization problem of the form

that depends on the history of the control \(\varvec{u}(t)\) and measured output \(\varvec{y}(t)\) over the past time window \([t_k-T, t_k]\). The solution of (5) yields the estimate \(\varvec{\hat{x}}_k\) of the current state \(\varvec{x}_k\) such that the estimated output function (5c) best matches the measured output \(\varvec{y}(t)\) over the past horizon T. Further constraints can be added to the formulation of (5) to incorporate a priori knowledge.

GRAMPC can be used for moving horizon estimation by handling the system state at the beginning of the estimation horizon as optimization variables, i.e. \(\varvec{p}=\varvec{{\hat{x}}} (t_k - T)\). In addition, a time transformation is required to map \(t \in [t_k-T, t_k]\) to the new time coordinate \(\tau \in [0, T]\) along with the corresponding coordinate transformation

with the initial condition \(\varvec{{\tilde{x}}}(0) = \varvec{0}\). The optimization problem (5) then becomes

The solution \(\varvec{p}=\varvec{{\hat{x}}} (t_k - T)\) of (7) and the coordinate transformation (6) are used to compute the current state estimate with

where \(\varvec{{\tilde{x}}}(T)\) is the end point of the state trajectory returned by GRAMPC. In the next sampling step, the parameters can be re-initialized with the predicted estimate \(\varvec{\hat{x}}(t_k-T+\varDelta t) = \varvec{p} + \varvec{{\tilde{x}}}(\varDelta t)\).
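Consistent with the initial condition \(\varvec{{\tilde{x}}}(0)=\varvec{0}\) and the re-initialization rule above, the coordinate transformation and the resulting state estimate can be written as follows. This is a reconstruction from the surrounding definitions and should be read as a sketch rather than a verbatim formula.

\[
\varvec{{\tilde{x}}}(\tau ) = \varvec{{\hat{x}}}(t_k - T + \tau ) - \varvec{{\hat{x}}}(t_k - T), \qquad \varvec{{\hat{x}}}_k = \varvec{{\hat{x}}}(t_k) = \varvec{p} + \varvec{{\tilde{x}}}(T).
\]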

Note that the above time transformation can alternatively be reversed in order to directly estimate the current state \(\varvec{p}=\varvec{{\hat{x}}}(t_k)\). This, however, requires the reverse time integration of the dynamics, which is numerically unstable if the system is stable in forward time. Vice versa, a reverse time transformation is to be preferred for MHE of an unstable process.

3 Optimization algorithm

The optimization algorithm underlying GRAMPC uses an augmented Lagrangian formulation in combination with a real-time projected gradient method. Though SQP or interior point methods are typically superior in terms of convergence speed and accuracy, the augmented Lagrangian framework is able to rapidly provide a suboptimal solution at low computational costs, which is important in view of real-time applications and embedded optimization. In the following, the augmented Lagrangian formulation and the corresponding optimization algorithm are described for solving OCP (1). The algorithm follows a first-optimize-then-discretize approach in order to maintain the dynamical system structure in the optimality conditions, before numerical integration is applied.

3.1 Augmented Lagrangian formulation

The basic idea of augmented Lagrangian methods is to replace the original optimization problem by its dual problem, see for example Bertsekas (1996), Nocedal and Wright (2006) and Boyd and Vandenberghe (2004) as well as Fortin and Glowinski (1983), Ito and Kunisch (1990), Bergounioux (1997), de Aguiar et al. (2016) for corresponding approaches in optimal control and function space settings.

The augmented Lagrangian formulation adjoins the constraints (1c), (1d) to the cost functional (1a) by means of multipliers \(\varvec{\bar{\mu }}=(\varvec{\mu }_{\varvec{g}}, \varvec{\mu }_{\varvec{h}}, \varvec{\mu }_{\varvec{g}_T}, \varvec{\mu }_{\varvec{h}_T})\) and additional quadratic penalty terms with the penalty parameters \(\varvec{\bar{c}}=(\varvec{c}_{\varvec{g}}, \varvec{c}_{\varvec{h}}, \varvec{c}_{\varvec{g}_T}, \varvec{c}_{\varvec{h}_T})\). A standard approach in augmented Lagrangian theory is to transform the inequalities (1d) into equality constraints by means of slack variables, which can be analytically solved for (Bertsekas 1996). This leads to the overall set of equality constraints (see Appendix 1 for details)

with the transformed inequalities

and the diagonal matrix syntax \(\varvec{C}={\mathrm{diag}}(\varvec{c})\). The vector-valued \(\varvec{\max }\)-function is to be understood component-wise. The equalities (9a) are adjoined to the cost functional
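As an illustration of the component-wise \(\varvec{\max }\)-function, the following sketch evaluates a transformed inequality of the form \(\max \{h_j, -\mu _j/c_j\}\), which is the expression that typically results from eliminating the slack variables analytically (Bertsekas 1996). Both the exact form and the function name `transformed_inequality` are assumptions made here for illustration.

```python
def transformed_inequality(h, mu_h, c_h):
    """Component-wise max(h_j, -mu_j / c_j) for penalties c_j > 0.

    Assumed form of the transformed inequality after analytic
    elimination of the slack variables; C = diag(c_h) is diagonal, so
    its inverse acts as an element-wise division.
    """
    return [max(hj, -mj / cj) for hj, mj, cj in zip(h, mu_h, c_h)]


# Violated constraint (h > 0), clearly inactive one, and a borderline case.
h_bar = transformed_inequality([0.5, -2.0, -0.1], [0.0, 1.0, 1.0], [10.0, 10.0, 10.0])
```

For a violated constraint the value of \(h_j\) is passed through, whereas a clearly inactive constraint is replaced by \(-\mu _j/c_j\), which drives the associated multiplier towards zero in a subsequent ascent step.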

with the augmented terminal and integral cost terms

and the stacked penalty and multiplier vectors \(\varvec{\mu }_T = [\varvec{\mu }_{\varvec{g}_T}^{\mathsf{T}},\varvec{\mu }_{\varvec{h}_T}^{\mathsf{T}}]^{\mathsf{T}}\), \(\varvec{c}_T = [\varvec{c}_{\varvec{g}_T}^{\mathsf{T}},\varvec{c}_{\varvec{h}_T}^{\mathsf{T}}]^{\mathsf{T}}\), and \(\varvec{\mu }= [\varvec{\mu }_{\varvec{g}}^{\mathsf{T}},\varvec{\mu }_{\varvec{h}}^{\mathsf{T}}]^{\mathsf{T}}\), \(\varvec{c}= [\varvec{c}_{\varvec{g}}^{\mathsf{T}},\varvec{c}_{\varvec{h}}^{\mathsf{T}}]^{\mathsf{T}}\), respectively.

The augmented cost functional (11) allows one to formulate the max–min-problem

Note that the multipliers \(\varvec{\mu }= [\varvec{\mu }_{\varvec{g}}^{\mathsf{T}},\varvec{\mu }_{\varvec{h}}^{\mathsf{T}}]^{\mathsf{T}}\) corresponding to the constraints (1d), respectively (10), are functions of time t. In the implementation of GRAMPC, the corresponding penalties \(\varvec{c}_{\varvec{g}}\) and \(\varvec{c}_{\varvec{h}}\) are handled time-dependently as well.

If strong duality holds and \((\varvec{u}^*,\varvec{p}^*,T^*)\) and \(\varvec{\bar{\mu }}^*\) are primal and dual optimal points, they form a saddle-point in the sense of

On the other hand, if (14) is satisfied, then \((\varvec{u}^*,\varvec{p}^*,T^*)\) and \(\varvec{\bar{\mu }}^*\) are primal and dual optimal and strong duality holds (Boyd and Vandenberghe 2004; Allaire 2007). Moreover, if the saddle-point condition is satisfied for the unaugmented Lagrangian, i.e. for \(\varvec{\bar{c}}=\varvec{0}\), then it holds for all \(\varvec{\bar{c}}>\varvec{0}\) and vice versa (Fortin and Glowinski 1983).

Strong duality typically relies on convexity, which is difficult to investigate for general constrained nonlinear optimization problems. However, the augmented Lagrangian formulation is favorable in this regard, as the duality gap that may occur for unpenalized, nonconvex Lagrangian formulations can potentially be closed by the augmented Lagrangian formulation (Rockafellar 1974).

The motivation behind the algorithm presented in the following lines is to solve the dual problem (13) instead of the original one (1) by approaching the saddle-point (14) from both sides. In essence, the max–min-problem (13) is solved in an alternating manner by performing the inner minimization with a projected gradient method and the outer maximization via a steepest ascent approach. Note that the dynamics (13b) are captured inside the minimization problem instead of treating the dynamics as equality constraints of the form \(\varvec{M} \varvec{\dot{x}} - \varvec{f}(\varvec{x}, \varvec{u}, \varvec{p}, t) = \varvec{0}\) in the augmented Lagrangian (Hager 1990). This ensures the dynamical consistency of the computed trajectories in each iteration of the algorithm, which is important for an embedded, possibly suboptimal implementation.
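The alternating structure can be illustrated on a static toy problem, which is an assumption made here for brevity and not an optimal control problem: minimize \((u-2)^2\) subject to \(g(u)=u-1=0\) and \(u\in [-5,5]\). The sketch below uses a fixed gradient step size and an undamped multiplier update; all names and numerical values are hypothetical.

```python
def clip(v, lo, hi):
    """Projection of a scalar onto the interval [lo, hi]."""
    return min(max(v, lo), hi)


def augmented_lagrangian_toy(c=10.0, outer_iters=20, inner_iters=50, step=0.05):
    """Alternate inner projected gradient descent and outer multiplier ascent.

    Toy problem (assumed): min (u - 2)^2  s.t.  g(u) = u - 1 = 0, u in [-5, 5].
    Augmented cost: (u - 2)^2 + mu * g(u) + 0.5 * c * g(u)^2.
    """
    u, mu = 0.0, 0.0
    for _ in range(outer_iters):
        # Inner minimization over u for fixed multiplier and penalty.
        for _ in range(inner_iters):
            grad = 2.0 * (u - 2.0) + mu + c * (u - 1.0)
            u = clip(u - step * grad, -5.0, 5.0)
        # Outer maximization: steepest ascent along the constraint residual.
        mu += c * (u - 1.0)
    return u, mu


u_opt, mu_opt = augmented_lagrangian_toy()
```

The iterates approach the saddle point \(u^*=1\), \(\mu ^*=2\), where the stationarity condition \(2(u^*-2)+\mu ^*=0\) holds and the penalty term vanishes.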

3.2 Structure of the augmented Lagrangian algorithm

The basic iteration structure of the augmented Lagrangian algorithm is summarized in Algorithm 1 and will be detailed in Sects. 3.3–3.5. The initialization of the algorithm concerns the multipliers \(\varvec{\bar{\mu }}^1\) and penalties \(\varvec{\bar{c}}^1\) as well as the definition of several tolerance values that are used for the convergence check (Sect. 3.4) and the update of the multipliers and penalties in (17) and (18), respectively.

In the current augmented Lagrangian iteration i, the inner minimization is carried out by solving the OCP (15) for the current set of multipliers \(\varvec{\bar{\mu }}^i\) and penalties \(\varvec{\bar{c}}^i\). Since the only remaining constraints within (15) are box constraints on the optimization variables (15c) and (15d), the problem can be efficiently solved by the projected gradient method described in Sect. 3.3. The solution of the minimization step consists of the control vector \(\varvec{u}^{i}\) and of the parameters \(\varvec{p}^{i}\) and free end time \(T^{i}\), if these are specified in the problem at hand. The subsequent convergence check in Algorithm 1 rates the constraint violation as well as convergence behavior of the previous minimization step and is detailed in Sect. 3.4.

If convergence is not reached yet, the multipliers and penalties are updated for the next iteration of the algorithm, as detailed in Sect. 3.5. Note that the penalty update in (18) relies on the last two iterates of the constraint functions (16). In the initial iteration \(i=1\) and if GRAMPC is used within an MPC setting, the constraint functions \(\varvec{g}^0\), \(\varvec{h}^{0}\), \(\varvec{g}_T^{0}\), \(\varvec{h}_T^{0}\) are warm-started by the corresponding last iterations of the previous MPC run. Otherwise, the penalty update is started in iteration \(i=2\).

Note that if the OCP or MPC problem to be solved is defined without the nonlinear constraints (1c) and (1d), the overall augmented Lagrangian algorithm reduces to the projected gradient method for solving the minimization problem (15), for which linear convergence results exist under the assumption of convexity (Dunn 1996).

If the end time T is treated as optimization variable as shown in Algorithm 1, the evaluation of (18) would formally require redefining the constraint functions \(\varvec{g}^{i-1}(t)\) and \(\varvec{h}^{i-1}(t)\), \(t\in [0,T^{i-1}]\) from the previous iterations on the new horizon length \(T^{i}\) by either shrinkage or extension. This redefinition is not explicitly stated in Algorithm 1, since the actual implementation of GRAMPC stores the trajectories in discretized form, which implies that only the discretized time vector must be recomputed once the end time \(T^i\) is updated.

3.3 Gradient algorithm for inner minimization problem

The OCP (15) inside the augmented Lagrangian algorithm corresponds to the inner minimization problem of the max–min-formulation (13) for the current iterates of the multipliers \(\varvec{\bar{\mu }}^i\) and penalties \(\varvec{\bar{c}}^i\). A projected gradient method is used to solve OCP (15) to a desired accuracy or for a fixed number of iterations.

The gradient algorithm relies on the solution of the first-order optimality conditions defined in terms of the Hamiltonian

with the adjoint states \(\varvec{\lambda }\in {\mathbb{R}}^{N_{\varvec{x}}}\). In particular, the gradient algorithm iteratively solves the canonical equations, see e.g. Cao et al. (2003),

consisting of the original dynamics (20a) and the adjoint dynamics (20b) in forward and backward time and computes a gradient update for the control in order to minimize the Hamiltonian in correspondence with Pontryagin’s Maximum Principle (Kirk 1970; Berkovitz 1974), i.e.

If parameters \(\varvec{p}\) and/or the end time T are additional optimization variables, the corresponding gradients have to be computed as well. Algorithm 2 lists the overall projected gradient algorithm for the full optimization case, i.e. for the optimization variables \((\varvec{u},\varvec{p},T)\), for the sake of completeness.

The gradient algorithm is initialized with an initial control \(\varvec{u}^{i|1}(t)\) and initial parameters \(\varvec{p}^{i|1}\) and time length \(T^{i|1}\). In case of MPC, these initial values are taken from the last sampling step using a warmstart strategy with an optional time shift in order to compensate for the horizon shift by the sampling time \(\varDelta t\).

The algorithm starts in iteration \(j=1\) with computing the corresponding state trajectory \(\varvec{x}^{i|j}(t)\) as well as the adjoint state trajectory \(\varvec{\lambda }^{i|j}(t)\) by integrating the adjoint dynamics (22) in reverse time. More detailed information on the integration schemes can be found in the GRAMPC manual. In the next step, the gradients (23) are computed and the step size \(\alpha ^{i|j}>0\) is determined from the line search problem (24). The projection functions \(\varvec{\psi }_{\varvec{u}}(\varvec{u})\), \(\varvec{\psi }_{\varvec{p}}(\varvec{p})\), and \(\psi _{T}(T)\) project the inputs, the parameters, and the end time onto the feasible sets (15c) and (15d). For instance, \(\varvec{\psi }_{\varvec{u}}(\varvec{u})=[\psi _{\varvec{u},1}(u_1) \ldots \psi _{\varvec{u},N_{\varvec{u}}}(u_{N_{\varvec{u}}})]^{\mathsf{T}}\) is defined by

The next steps in Algorithm 2 are the updates (25) of the control \(\varvec{u}^{i|j+1}\), parameters \(\varvec{p}^{i|j+1}\), and end time \(T^{i|j+1}\) as well as the update of the state trajectory \(\varvec{x}^{i|j+1}\) in (26).
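The sequence of forward integration, backward adjoint integration, gradient evaluation and projected update can be sketched for a small example. The following code is an illustration under stated assumptions and not the GRAMPC implementation: it uses the assumed scalar system \(\dot{x}=u\) with integral cost \(\tfrac{1}{2}(x^2+u^2)\), no terminal cost, explicit Euler integration, a fixed step size instead of a line search, and hypothetical function names. For this example the Hamiltonian is \(H=\tfrac{1}{2}(x^2+u^2)+\lambda u\), so that \(\dot{\lambda }=-x\), \(\lambda (T)=0\) and \(\partial H/\partial u=u+\lambda\).

```python
def clip(v, lo, hi):
    """Component projection onto the box [lo, hi]."""
    return min(max(v, lo), hi)


def simulate(u, x0, dt):
    """Forward explicit Euler integration of the assumed dynamics x' = u."""
    x = [x0]
    for uk in u:
        x.append(x[-1] + dt * uk)
    return x


def cost(x, u, dt):
    """Rectangle-rule approximation of the integral of 0.5 * (x^2 + u^2)."""
    return sum(0.5 * (xk * xk + uk * uk) * dt for xk, uk in zip(x, u))


def adjoint(x, dt):
    """Explicit Euler in reverse time for lambda' = -x with lambda(T) = 0."""
    lam = [0.0] * len(x)
    for k in range(len(x) - 2, -1, -1):
        lam[k] = lam[k + 1] + dt * x[k + 1]
    return lam


def projected_gradient(x0=1.0, T=2.0, n=100, alpha=0.3, iters=50,
                       u_min=-0.5, u_max=0.5):
    """Projected gradient iterations with a fixed step size alpha."""
    dt = T / n
    u = [0.0] * n
    x = simulate(u, x0, dt)
    costs = [cost(x, u, dt)]
    for _ in range(iters):
        lam = adjoint(x, dt)                              # backward sweep
        grad = [uk + lam[k] for k, uk in enumerate(u)]    # dH/du = u + lambda
        u = [clip(uk - alpha * gk, u_min, u_max) for uk, gk in zip(u, grad)]
        x = simulate(u, x0, dt)                           # updated state
        costs.append(cost(x, u, dt))
    return u, costs
```

Because the state is re-integrated after every control update, each iterate satisfies the (discretized) dynamics, which is the dynamical consistency property emphasized in Sect. 3.1.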

The convergence measure \(\eta ^{i|j+1}\) in (27) rates the relative gradient changes of \(\varvec{u}\), \(\varvec{p}\), and T. If the gradient scheme converges in the sense of \(\eta ^{i|j+1} \le \varepsilon _{\text {rel,c}}\) with threshold \(\varepsilon _{\text {rel,c}}>0\) or if the maximum number of iterations \(j_{\text {max}}\) is reached, the algorithm terminates and returns the last solution to Algorithm 1. Otherwise, j is incremented and the gradient iteration continues.

An important component of Algorithm 2 is the line search problem (24), which is performed in all search directions simultaneously. The scaling factors \(\gamma _{\varvec{p}}\) and \(\gamma _{T}\) can be used to scale the step sizes relative to each other, if they are not of the same order of magnitude or if the parameter or end time optimization is highly sensitive. GRAMPC implements two different line search strategies, an adaptive and an explicit one, in order to solve (24) in an accurate and robust manner without involving too much computational load.

The adaptive strategy evaluates the cost functional (24) for three different step sizes, i.e. \((\alpha _i,{\bar{J}}_i)\), \(i=1,2,3\) with \(\alpha _1<\alpha _2<\alpha _3\), in order to compute a polynomial fitting function \(\Phi (\alpha )\) of the form

where the constants \(p_i\) are computed from the test points \((\alpha _i,{\bar{J}}_i)\), \(i=1,2,3\). The approximate step size can then be analytically derived by solving

The interval \([\alpha _1,\alpha _3]\) is adapted in the next gradient iteration, if the step size \(\alpha ^{j}\) is close to the interval’s borders, see Käpernick and Graichen (2014a) for more details.
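A minimal sketch of the polynomial fit is given below, assuming a quadratic fitting function \(\Phi (\alpha )=p_0+p_1\alpha +p_2\alpha ^2\) through the three test points. The function name `quadratic_fit_step` is hypothetical, and the fallback for a non-convex fit as well as the clipping to \([\alpha _1,\alpha _3]\) are illustrative choices rather than the documented GRAMPC behavior.

```python
def quadratic_fit_step(alphas, costs):
    """Step size minimizing a quadratic fitted through three test points.

    alphas: (a1, a2, a3) with a1 < a2 < a3; costs: matching cost values.
    """
    (a1, a2, a3), (j1, j2, j3) = alphas, costs
    # Newton divided differences give the coefficients p2 and p1.
    d12 = (j2 - j1) / (a2 - a1)
    d13 = (j3 - j1) / (a3 - a1)
    p2 = (d13 - d12) / (a3 - a2)
    p1 = d12 - p2 * (a1 + a2)
    if p2 <= 0.0:
        # Assumed fallback: no interior minimum, take the best sample.
        return min(zip(costs, alphas))[1]
    alpha = -p1 / (2.0 * p2)          # stationary point of the parabola
    return min(max(alpha, a1), a3)    # keep the step inside [a1, a3]
```

For a cost that is exactly quadratic along the search direction, the fit recovers the exact minimizer; three cost evaluations per gradient iteration are the price of this strategy.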

Depending on the OCP or MPC problem at hand, the adaptive line search method may not be suited for time-critical applications, since the approximation of the cost function (29) requires integrating the system dynamics (15b) and the cost integral (15a) three times. This computational load can be reduced by an explicit line search strategy, originally discussed in Barzilai and Borwein (1988) and adapted to the optimal control case in Käpernick and Graichen (2013). Motivated by the secant equation in quasi-Newton methods (Barzilai and Borwein 1988), this strategy minimizes the difference between two updates of the optimization variables \((\varvec{u},\varvec{p},T)\) for the same step size and without considering the corresponding box constraints (15c) and (15d). The explicit method solves the problem

where \(\varDelta\) denotes the difference between the last and current iterate, e.g. \(\varDelta \varvec{u}^{i|j}=\varvec{u}^{i|j}-\varvec{u}^{i|j-1}\). The analytic solution is given by

Alternatively, the minimization

can be carried out, similar to (31), leading to the corresponding solution

Both explicit formulas (32) and (33) are implemented in GRAMPC as an alternative to the adaptive line search strategy.
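The two explicit step sizes can be sketched for a discretized control vector as follows. This is an illustration of the Barzilai–Borwein formulas only: the contributions of \(\varvec{p}\) and T, the scaling factors \(\gamma _{\varvec{p}}\) and \(\gamma _{T}\), and any safeguards against vanishing denominators are omitted, and the function names are hypothetical.

```python
def dot(a, b):
    """Euclidean inner product of two equally long sequences."""
    return sum(ai * bi for ai, bi in zip(a, b))


def bb_step_sizes(u_prev, u_curr, d_prev, d_curr):
    """Both Barzilai-Borwein step sizes from the last two iterates.

    u_*: control iterates, d_*: corresponding gradients.
    Assumes nonzero denominators (no safeguard in this sketch).
    """
    du = [c - p for c, p in zip(u_curr, u_prev)]
    dd = [c - p for c, p in zip(d_curr, d_prev)]
    alpha_a = dot(du, dd) / dot(dd, dd)  # minimizes ||du - alpha * dd||^2
    alpha_b = dot(du, du) / dot(du, dd)  # minimizes ||du / alpha - dd||^2
    return alpha_a, alpha_b


# Gradient d = 4 * u of the quadratic 2 * u^2: both steps equal 1/4.
steps = bb_step_sizes([1.0, 2.0], [0.5, 1.0], [4.0, 8.0], [2.0, 4.0])
```

In contrast to the adaptive strategy, no additional integration of the dynamics is needed, since only quantities from the last two gradient iterations enter the formulas.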

3.4 Convergence criterion

The gradient scheme in Algorithm 2 solves the inner minimization problem (15) of the augmented Lagrangian algorithm and returns the solution \((\varvec{u}^i,\varvec{p}^i,T^i)\) as well as the maximum relative gradient \(\eta ^i\) that is computed in (27) and used to check convergence inside the gradient algorithm. The outer augmented Lagrangian iteration in Algorithm 1 also uses this criterion along with the convergence check of the constraints, i.e.

The thresholds \(\varvec{\varepsilon _{\varvec{g}}},\varvec{\varepsilon _{\varvec{g_T}}}, \varvec{\varepsilon _{\varvec{h}}}, \varvec{\varepsilon _{\varvec{h_T}}}\) are vector-valued to rate each constraint individually. If the maximum number of augmented Lagrangian iterations is reached, i.e. \(i=i_{\text {max}}\), the algorithm terminates in order to ensure real-time feasibility. Otherwise, i is incremented and the next minimization of problem (15) is carried out.

Note that the convergence criterion is typically deactivated in MPC applications and a fixed iteration count is used to ensure real-time feasibility. The last solution is then used as warm-start in the next MPC step. The incremental improvement of this suboptimal solution strategy in a real-time MPC setting is investigated e.g. in Diehl et al. (2004) and Graichen and Kugi (2010). In particular, it is shown in Graichen and Kugi (2010) that incremental improvement and asymptotic stability are ensured for a sufficient number of iterations provided that the underlying optimization is linearly convergent.
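The combination of a fixed iteration count and warm-starting can be sketched for a toy problem. The example below is a simplified stand-in, not GRAMPC: it uses a discrete-time scalar integrator, a quadratic cost, a projected gradient step with constant step size, and no augmented Lagrangian loop. All names and numerical values are assumptions chosen for illustration.

```python
def cost_gradient(x0, u, dt, r):
    """Gradient of J = sum_k dt*(x_k^2 + r*u_k^2) + x_N^2 for x_{k+1} = x_k + dt*u_k."""
    n = len(u)
    x = [x0]
    for k in range(n):                    # forward pass: predicted states
        x.append(x[k] + dt * u[k])
    lam = 2.0 * x[n]                      # adjoint at the end of the horizon
    grad = [0.0] * n
    for k in reversed(range(n)):          # backward pass: adjoint and gradient
        grad[k] = dt * (2.0 * r * u[k] + lam)
        lam += 2.0 * dt * x[k]
    return grad


def mpc_step(x0, u, dt, r, j_max, alpha, u_max):
    """Run a fixed number of projected gradient iterations from the warm start u."""
    for _ in range(j_max):
        g = cost_gradient(x0, u, dt, r)
        u = [min(u_max, max(-u_max, uk - alpha * gk)) for uk, gk in zip(u, g)]
    return u


def closed_loop(x0=1.0, steps=200, dt=0.05, n_hor=20):
    """Simulate the plant with the suboptimal MPC and return the state history."""
    x, u = x0, [0.0] * n_hor
    history = [x]
    for _ in range(steps):
        u = mpc_step(x, u, dt, r=0.1, j_max=2, alpha=5.0, u_max=1.0)  # warm start
        x = x + dt * u[0]                 # apply only the first control value
        history.append(x)
    return history


history = closed_loop()
```

Each MPC step performs only two gradient iterations, so the individual solutions are suboptimal, but reusing the previous control trajectory lets the iterates improve from step to step while the state is driven towards the origin.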

If the convergence criterion is replaced by a fixed number of iterations, convergence of the augmented Lagrangian algorithm cannot be guaranteed in general, since the saddle-point condition (14) is based on the optimal solution of the inner problem. To remedy this issue, the multiplier update is adapted accordingly, which is explained in detail in the next section.

3.5 Update of multipliers and penalties

The multiplier update (17) in Algorithm 1 is carried out via a steepest ascent approach, whereas the penalty update (18) uses an adaptation strategy that rates the progress of the last two iterates. In more detail, the multiplier update function for a single equality constraint \(g^i:=g(\varvec{x}^i,\varvec{u}^i,\varvec{p}^i,t)\) is defined by

The steepest ascent direction is the residual of the constraint \(g^i\) with the penalty \(c_g^i\) serving as step size parameter. The additional damping factor \(0\le \rho \le 1\) is introduced to increase the robustness of the multiplier update in the augmented Lagrangian scheme. In the GRAMPC implementation, the multipliers are additionally limited by an upper bound \(\mu _{\text {max}}\), in order to avoid unlimited growth and numerical stability issues. The update (35) is skipped if the constraint is satisfied within the tolerance \(\varepsilon _g\) or if the gradient method is not sufficiently converged, which is checked by the maximum relative gradient \(\eta ^i\), cf. (27), and the update threshold \(\varepsilon _{\text {rel,u}}>0\). GRAMPC uses a larger value for \(\varepsilon _{\text {rel,u}}\) than the convergence threshold \(\varepsilon _{\text {rel,c}}\) of the gradient scheme in Algorithm 2. This accounts for the case that the gradient algorithm might not have converged to the desired tolerance before the maximum number of iterations \(j_{\text {max}}\) is reached, e.g. in real-time MPC applications, where only one or two gradient iterations might be applied. In this case, \(\varepsilon _{\text {rel,u}}\gg \varepsilon _{\text {rel,c}}\) ensures that the multiplier update is still performed provided that at least “some” convergence was reached by the inner minimization.
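The logic of the multiplier update described above can be summarized in a short sketch. It assumes that the damping enters as the factor \((1-\rho )\) on the step \(c_g^i g^i\) and that the bound \(\mu _{\text {max}}\) is applied symmetrically; both are assumptions of this illustration, and the function is not taken from the GRAMPC source.

```python
def update_multiplier_eq(mu, c, g, eta, eps_g, eps_rel_u, rho, mu_max):
    """Damped steepest-ascent update of a single equality multiplier."""
    # Skip the update if the constraint is already satisfied within tolerance
    # or if the inner gradient method has not converged sufficiently.
    if abs(g) <= eps_g or eta > eps_rel_u:
        return mu
    mu_new = mu + (1.0 - rho) * c * g     # residual g as ascent direction
    # Assumed symmetric limitation to avoid unbounded multiplier growth.
    return max(-mu_max, min(mu_max, mu_new))


mu_updated = update_multiplier_eq(0.0, 10.0, 0.2, eta=0.05, eps_g=1e-3,
                                  eps_rel_u=0.1, rho=0.5, mu_max=100.0)
mu_skipped = update_multiplier_eq(0.0, 10.0, 0.2, eta=0.5, eps_g=1e-3,
                                  eps_rel_u=0.1, rho=0.5, mu_max=100.0)
```

In the second call the relative gradient exceeds the update threshold, so the multiplier is left unchanged.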

The penalty \(c_g^i\) corresponding to the equality constraint \(g^i\) is updated according to the heuristic update function

that is basically motivated by the LANCELOT package (Conn et al. 1992; Nocedal and Wright 2006). The penalty \(c_g^i\) is increased by the factor \(\beta _{\text {in}}\ge 1\), if the last gradient scheme converged, i.e. \(\eta ^{i}\le \varepsilon _{\text {rel,u}}\), but insufficient progress (rated by \(\gamma _{\text {in}}>0\)) was made by the constraint violation compared to the previous iteration \(i-1\). The penalty is decreased by the factor \(\beta _{\text {de}}\le 1\) if the constraint \(g^i\) is sufficiently satisfied within its tolerance with \(0<\gamma _{\text {de}}<1\). The setting \(\beta _{\text {de}}=\beta _{\text {in}}=1\) can be used to keep \(c_g^i\) constant. Similar to the multiplier update (35), GRAMPC restricts the penalty to upper and lower bounds \(c_{\text {min}}\le c_g^i \le c_{\text {max}}\), in order to avoid negligible values as well as unlimited growth of \(c_g^i\). The lower penalty bound \(c_{\text {min}}\) is particularly relevant in case of MPC applications, where typically only a few iterations are performed in each MPC step. GRAMPC provides a support function that computes an estimate of \(c_{\text {min}}\) for the MPC problem at hand.
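A possible reading of this heuristic is sketched below. The exact conditions are assumptions: the penalty is increased if the gradient scheme converged, the constraint is still violated and \(|g^i|\ge \gamma _{\text {in}}|g^{i-1}|\), and it is decreased if \(|g^i|\le \gamma _{\text {de}}\,\varepsilon _g\). The parameter values in the example calls are illustrative and are not the GRAMPC defaults.

```python
def update_penalty_eq(c, g, g_prev, eta, eps_g, eps_rel_u,
                      beta_in, beta_de, gamma_in, gamma_de, c_min, c_max):
    """Heuristic penalty adaptation for a single equality constraint."""
    if eta <= eps_rel_u and abs(g) > eps_g and abs(g) >= gamma_in * abs(g_prev):
        c_new = beta_in * c               # insufficient progress: increase
    elif abs(g) <= gamma_de * eps_g:
        c_new = beta_de * c               # well within tolerance: decrease
    else:
        c_new = c                         # otherwise keep the penalty
    return max(c_min, min(c_max, c_new))  # restrict to [c_min, c_max]


common = dict(eta=0.05, eps_g=1e-3, eps_rel_u=0.1, beta_in=2.0, beta_de=0.5,
              gamma_in=0.5, gamma_de=0.1, c_min=1.0, c_max=1e6)
c_increased = update_penalty_eq(100.0, 0.09, 0.1, **common)
c_decreased = update_penalty_eq(100.0, 5e-5, 0.1, **common)
c_kept = update_penalty_eq(100.0, 0.01, 0.1, **common)
```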

The updates (35) and (36) define the vector functions \(\varvec{\zeta }_{\varvec{g}}\), \(\varvec{\zeta }_{\varvec{g}_T}\) and \(\varvec{\xi }_{\varvec{g}}\), \(\varvec{\xi }_{\varvec{g}_T}\) in (17a) and (18a) with \(N_{\varvec{g}}\) and \(N_{\varvec{g}_T}\) components, corresponding to the number of equality and terminal equality constraints. Note that the multipliers for the equality and inequality constraints are time-dependent, i.e. \(\varvec{\mu }_{\varvec{g}}(t)\) and \(\varvec{\mu }_{\varvec{h}}(t)\), which implies that the functions \(\varvec{\zeta }_{\varvec{g}}\) and \(\varvec{\xi }_{\varvec{g}}\), resp. (35) and (36), are evaluated pointwise in time.

The inequality constrained case is handled in a similar spirit. For a single inequality constraint \({\bar{h}}^i:={\bar{h}}(\varvec{x}^i,\varvec{u}^i,\varvec{p}^i,t,\mu _h,c_h)\), cf. (10a), the multiplier and penalty updates are defined by

and

and constitute the vector functions \(\varvec{\zeta }_{\varvec{h}}\), \(\varvec{\zeta }_{\varvec{h}_T}\) and \(\varvec{\xi }_{\varvec{h}}\), \(\varvec{\xi }_{\varvec{h}_T}\) in (17b) and (18b) with \(N_{\varvec{h}}\) and \(N_{\varvec{h}_T}\) components. The condition \({\bar{h}}^i < 0\) in (37) ensures that the Lagrangian multiplier \(\mu _h^i\) is reduced for inactive constraints, which corresponds to either \(h^i < 0\) or \(-\frac{\mu _h}{c_h}\) in view of the transformation (10).
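The effect of the condition \({\bar{h}}^i<0\) can be made concrete with a small sketch. It assumes that the transformation (10) has the form \({\bar{h}}=\max \{h,-\mu _h/c_h\}\) and that the multiplier step is damped by \((1-\rho )\) as in the equality constrained case; both are assumptions of this illustration rather than a reproduction of (37).

```python
def transformed_constraint(h, mu, c):
    """Assumed form of the transformation (10): h_bar = max(h, -mu / c)."""
    return max(h, -mu / c)


def update_multiplier_ineq(mu, c, h, eta, eps_h, eps_rel_u, rho, mu_max):
    """Multiplier update for one inequality constraint h <= 0."""
    h_bar = transformed_constraint(h, mu, c)
    # Increase mu for a violated constraint if the inner problem converged,
    # and always reduce it when the transformed constraint is negative.
    if (h_bar > eps_h and eta <= eps_rel_u) or h_bar < 0.0:
        mu = mu + (1.0 - rho) * c * h_bar
    # h_bar >= -mu / c already keeps mu nonnegative; the clip is a safeguard.
    return min(mu_max, max(0.0, mu))


mu_inactive = update_multiplier_ineq(2.0, 10.0, -1.0, eta=0.5, eps_h=1e-3,
                                     eps_rel_u=0.1, rho=0.5, mu_max=100.0)
mu_violated = update_multiplier_ineq(0.0, 10.0, 0.3, eta=0.05, eps_h=1e-3,
                                     eps_rel_u=0.1, rho=0.5, mu_max=100.0)
```

For the inactive constraint the transformed value is \(-\mu _h/c_h=-0.2\), so the multiplier is halved from 2 to 1 even though the gradient method has not converged.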

4 Structure and usage of GRAMPC

This section describes the framework of GRAMPC and illustrates its general usage. GRAMPC is designed to be portable and executable on different operating systems and hardware without the use of external libraries. The code is implemented in plain C with a user-friendly interface to C++, Matlab/Simulink, and dSpace. The following lines give an overview of GRAMPC and demonstrate how to implement and solve a problem.

4.1 General structure

Figure 1 shows the general structure of GRAMPC and the steps that are necessary to compile an executable GRAMPC project. The first step in creating a new project is to define the problem using the provided C function templates, which is detailed in Sect. 4.2. The user can set problem-specific parameters and algorithmic options concerning the numerical integrations in the gradient algorithm, the line search strategy as well as further preferences, also see Sect. 4.3.

A specific problem can be parameterized and numerically solved using C/C++, Matlab/Simulink, or dSpace. A closer look at this functionality is given in Fig. 2. The workspace of a GRAMPC project as well as algorithmic options and parameters are stored by the structure variable grampc. Several parameter settings are problem-specific and need to be provided, whereas other values are set to default values. A generic interface allows one to manipulate the grampc structure in order to set algorithmic options or parameters for the problem at hand. The functionalities of GRAMPC can be manipulated from Matlab/Simulink by means of mex routines that are wrappers for the corresponding C functions. This allows one to run a GRAMPC project with different parameters and options without recompilation.

4.2 Problem definition

The problem formulation in GRAMPC follows a generic structure. The essential steps for a problem definition are illustrated for the following MPC example

with \(\varDelta \varvec{x}=\varvec{x}-\varvec{x}_{\text {des}}\), \(\varDelta u=u-u_{\text {des}}\), and the weights

The dynamics (39b) are a simplified linear model of a single axis of a ball-on-plate system (Richter 2012) that is also included in the testbench of GRAMPC (see Sect. 5). The horizon length and the sampling time are set to \(T=0.3\) s and \(\varDelta t=10\,\)ms, respectively.

The problem formulation (39) is provided in the user template probfct.c. The following listing gives an impression of the function structure and the problem implementation for the ball-on-plate example (39).

The naming of the functions follows the nomenclature of the general OCP formulation (1), except for the function ocp_dim, which defines the dimensions of the optimization problem. Problem specific parameters can be used inside the single functions via the pointer userparam. For convenience, the desired setpoint (xdes,udes) to be stabilized by the MPC is provided to the cost functions separately and therefore does not need to be passed via userparam.

In addition to the single OCP functions, GRAMPC requires the derivatives w.r.t. state \(\varvec{x}\), control \(\varvec{u}\), and if applicable w.r.t. the optimization parameters \(\varvec{p}\) and end time T, in order to evaluate the optimality conditions in Algorithm 2. Jacobians that occur in multiplied form, see e.g. \(\big (\frac{\partial \varvec{f}}{\partial \varvec{x}}\big )^{\mathsf{T}}\varvec{\lambda }\) in the adjoint dynamics (22), have to be provided in this representation. This helps to avoid unnecessary zero multiplications in case of sparse Jacobians. The following listing shows an excerpt of the corresponding gradient functions.
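Independently of the GRAMPC templates, the benefit of supplying Jacobians in multiplied form can be illustrated with a generic double integrator \(\dot{x}_1=x_2\), \(\dot{x}_2=u\). The Python sketch below is not the probfct.c interface; the function names are hypothetical and merely mirror the idea of returning the product \(\big (\frac{\partial \varvec{f}}{\partial \varvec{x}}\big )^{\mathsf{T}}\varvec{\lambda }\) directly.

```python
def dfdx_vec(x, u, lam):
    """(df/dx)^T * lam written out by hand; only the nonzero entry is used."""
    return [0.0, lam[0]]


def dfdu_vec(x, u, lam):
    """(df/du)^T * lam for the scalar control."""
    return [lam[1]]


def dense_transpose_product(jac, lam):
    """Reference: form J^T * lam from a dense Jacobian, zeros included."""
    rows, cols = len(jac), len(jac[0])
    return [sum(jac[i][j] * lam[i] for i in range(rows)) for j in range(cols)]


dfdx = [[0.0, 1.0], [0.0, 0.0]]   # dense Jacobian of f = [x2, u] w.r.t. x
dfdu = [[0.0], [1.0]]             # dense Jacobian of f w.r.t. u
lam = [3.0, -2.0]
x, u = [0.5, -0.1], [0.2]
hand_coded = dfdx_vec(x, u, lam)
reference = dense_transpose_product(dfdx, lam)
```

The hand-coded version needs no multiplication at all, whereas the dense product performs four, three of them with zeros. For larger sparse systems this difference grows with the square of the state dimension.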

4.3 Calling procedure and options

GRAMPC provides several key functions that are required for initializing and calling the MPC solver. As shown in Fig. 2, there exist mex wrapper functions that ensure that the interface for interacting with GRAMPC is largely the same under C/C++ and Matlab.

The following listing gives an idea of how to initialize GRAMPC and how to run a simple MPC loop for the ball-on-plate example under Matlab.

The listing also shows some of the algorithmic settings, e.g. the number of discretization points Nhor for the horizon [0, T], the maximum number of iterations \((i_{\text {max}},j_{\text {max}})\) for Algorithms 1 and 2, or the choice of integration scheme for solving the canonical equations (22), (26). Currently implemented integration methods are (modified) Euler, Heun, 4th order Runge–Kutta as well as the solver RODAS (Hairer and Wanner 1996) that implements a 4th order Rosenbrock method for solving semi-implicit differential-algebraic equations with possibly singular and sparse mass matrix \(\varvec{M}\), cf. the problem definition in (1). The Euler and Heun methods use a fixed step size depending on the number of discretization points (Nhor), whereas RODAS and Runge–Kutta use adaptive step size control. The choice of integrator therefore has a significant impact on the computation time and allows one to optimize the algorithm in terms of accuracy and computational efficiency. Further options not shown in the listing are e.g. the settings (xScale,xOffset) and (uScale,uOffset) in order to scale the input and state variables of the optimization problem.
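The trade-off between the fixed step size schemes can be illustrated with a scalar test equation. The sketch below is generic textbook code, not the GRAMPC integrators; the choice of 31 grid points on a horizon of 0.3 (step size 0.01) and the test dynamics \(\dot{x}=-x\) are assumptions for illustration.

```python
import math


def integrate_fixed_step(f, x0, t_end, n_hor, method):
    """Integrate x' = f(x) on n_hor equidistant grid points over [0, t_end]."""
    h = t_end / (n_hor - 1)               # step size fixed by the grid
    x = x0
    for _ in range(n_hor - 1):
        k1 = f(x)
        if method == "euler":
            x = x + h * k1                # explicit Euler, first order
        elif method == "heun":
            k2 = f(x + h * k1)            # predictor slope at the end point
            x = x + 0.5 * h * (k1 + k2)   # Heun, second order
        else:
            raise ValueError("unknown method: " + method)
    return x


def decay(x):
    """Test dynamics x' = -x with exact solution exp(-t)."""
    return -x


exact = math.exp(-0.3)
err_euler = abs(integrate_fixed_step(decay, 1.0, 0.3, 31, "euler") - exact)
err_heun = abs(integrate_fixed_step(decay, 1.0, 0.3, 31, "heun") - exact)
```

On the same grid, Heun requires two function evaluations per step instead of one but reduces the error by more than two orders of magnitude in this example, which is the kind of accuracy versus effort trade-off governed by the integrator choice and Nhor.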

The initialization and calling procedure for GRAMPC is largely the same under C/C++ and Matlab. One exception is the handling of user parameters in userparam. Under C, userparam can be an arbitrary structure, whereas the Matlab interface restricts userparam to be of type array (of arbitrary length). Moreover, the Matlab call of grampc_run_Cmex returns an updated copy of the grampc structure as output argument in order to comply with the Matlab policy to not manipulate input arguments.

5 Performance evaluation

The intention of this section is to evaluate the performance of GRAMPC under realistic conditions and for meaningful problem settings. To this end, an MPC testbench suite is defined to evaluate the computational performance in comparison to other state-of-the-art MPC solvers and to demonstrate the portability of GRAMPC to real-time and embedded hardware. The remainder of this section demonstrates the handling of typical problems from the field of MPC, moving horizon estimation and optimal control.

5.1 General MPC evaluation

The MPC performance of GRAMPC is evaluated for a testbench that covers a wide range of meaningful MPC applications. For the sake of comparison, the two MPC toolboxes ACADO and VIATOC are included in the evaluation, although it is not the intention of this section to rigorously rate the performance against other solvers, as such a comparison is difficult to carry out objectively. The evaluation should rather give a general impression about the performance and properties of GRAMPC. In addition, implementation details are presented for running the MPC testbench examples with GRAMPC on dSpace and ECU level.

5.1.1 Benchmarks

Table 1 gives an overview of the considered MPC benchmark problems in terms of the system dimension, the type of constraints (control/state/general nonlinear constraints), the dynamics (linear/nonlinear and explicit/semi-implicit) as well as the respective references. The MPC examples are evaluated with GRAMPC as well as with ACADO Toolkit (Houska et al. 2011) and VIATOC (Kalmari et al. 2015).

The testbench includes three linear problems (mass-spring-damper, helicopter, ball-on-plate) and one semi-implicit reactor example, where the mass matrix \(\varvec{M}\) in the semi-implicit form (1b) is computed from the original partial differential equation (PDE) using finite elements, also see Sect. 5.2.3. The nonlinear chain problem is a scalable MPC benchmark with m masses. Three further MPC examples are defined with nonlinear constraints. The permanent magnet synchronous machine (PMSM) possesses spherical voltage and current constraints in dq-coordinates, whereas the crane example with three degrees of freedom (DOF) and the vehicle problem include a nonlinear constraint to simulate a geometric restriction that must be bypassed (also see Sect. 5.2.1 for the crane example). Three of the problems (PMSM, 2D-crane, vehicle) are not evaluated with VIATOC, as nonlinear constraints cannot be handled by VIATOC at this stage.

For the GRAMPC implementation, most options are set to their default values. The only adapted parameters concern the horizon length T, the number of supporting points for the integration scheme and the integrator itself as well as the number of augmented Lagrangian and gradient iterations, \(i_{\text {max}}\) and \(j_{\text {max}}\), respectively. Default settings are used for the multiplier and penalty updates for the sake of consistency, see Algorithm 1 as well as Sect. 3.5. Note, however, that the performance and computation time of GRAMPC can be further optimized by tuning the parameters related to the penalty update to a specific problem. All benchmark problems listed in Table 1 are available as example implementations in GRAMPC.

ACADO and VIATOC are individually tuned for each MPC problem by varying the number of shooting intervals and iterations in order to either achieve minimum computation time (setting “speed”) or optimal performance in terms of minimal cost at reasonable computational load (setting “optimal”). The solution of the quadratic programs of ACADO was done with qpOASES (Ferreau et al. 2014).

The single MPC projects are integrated in a closed-loop simulation environment with a fourth-order Runge–Kutta integrator with adaptive step size to ensure an accurate system integration regardless of the integration schemes used internally by the MPC toolboxes. The MPC is initialized with the stationary solution at the start of the simulation scenario. The evaluation was carried out on a Windows 10 machine with Intel(R) Core(TM) i5-5300U CPU running at \(2.3 \hbox {GHz}\) using the Microsoft Visual C++ 2013 Professional (C) compiler. Each simulation was run multiple times to obtain a reliable average computation time.

5.1.2 Evaluation results

Table 2 shows the evaluation results for the benchmark problems in terms of computation time and cost value integrated over the whole time interval of the simulation scenario. The cost values are normalized to the best one of each benchmark problem. The results for ACADO and VIATOC are listed for the settings “speed” and “optimal”, as mentioned above. The depicted computation times are the mean computation times, averaged over the complete time horizon of the simulation scenario. The best values for computation time and performance (minimal cost) for each benchmark problem are highlighted in bold font.

The linear MPC problems (mass-spring-damper, helicopter, ball-on-plate) with quadratic cost functions can be tackled well by VIATOC and ACADO due to their underlying linearization techniques. The PDE reactor problem contains stiff system dynamics in semi-implicit form. ACADO can handle such problems well using its efficient integration schemes, whereas VIATOC relies on fixed step size integrators and therefore requires a relatively large number of discretization points. While GRAMPC achieves the fastest computation time, the cost value of both ACADO settings as well as the VIATOC optimal setting is lower. A similar cost value, however, can be achieved by GRAMPC when deviating from the default parameters.

In case of the state constrained 2D-crane problem, the computation time is higher for ACADO than for GRAMPC. This appears to be due to the fact that almost over the complete simulation time a nonlinear constraint of a geometric object to be bypassed is active. A closer look at this problem is taken in Sect. 5.2.1.

The CSTR reactor example possesses state and control variables in different orders of magnitude and therefore benefits from scaling. Since GRAMPC supports scaling natively, the computation is faster than with VIATOC, where the scaling would have to be done manually. Due to the Hessian approximation used by ACADO, it is far less affected by the different scales in the states and controls. Figure 3 shows the state and control trajectories of the three different solvers for the CSTR example. As indicated by the values of the cost function in Table 2, the computed trajectories are quite similar. Only for the first hour, small differences can be observed for the speed settings of ACADO and VIATOC. This can also be seen in Fig. 4, where the cost function value in each MPC step is shown. Slightly different values can be observed, but after about 0.4 h all solvers reach the same stationary value.

A large difference in the cost values occurs for the VTOL example (Vertical Take-Off and Landing Aircraft). Due to the nonlinear dynamics and the corresponding coupling of the control variables, it seems that the gradient method underlying the minimization steps of GRAMPC is more accurate and robust when starting in an equilibrium position than the iterative linearization steps undertaken by ACADO and VIATOC.

The scaling behavior of the MPC schemes w.r.t. the problem dimension is investigated for the nonlinear chain in Table 2. Four different numbers of masses are considered, corresponding to 21–57 state variables and three controls. Although the algorithmic complexity of the augmented Lagrangian/gradient projection algorithm of GRAMPC grows linearly with the state dimension, this is not exactly the case for the nonlinear chain, as the stiffness of the dynamics increases for a larger number of masses, which leads to smaller step sizes of the adaptive Runge–Kutta integrator that was used in GRAMPC for this problem. ACADO shows a more significant increase in computation time for larger values of m, which was to be expected in view of the SQP algorithm underlying ACADO. The computation time for VIATOC is worse for this example, since only fixed step size integrators are available in the current release, which requires increasing the number of discretization points manually. Figure 5 shows a logarithmic plot of the computation time for all three MPC solvers plotted over the number of masses of the nonlinear chain.

Fig. 5 Computation time for the nonlinear chain example (also see Table 2)

The computation times shown in Table 2 are average values and therefore give no direct insight into the real-time feasibility of the MPC solvers and the variation of the computational load over the single sampling steps. To this end, Fig. 6 shows accumulation plots of the computation time per MPC step for three selected problems of the testbench. The computation times were evaluated after 30 successive runs to obtain reliable results. The plots show that the computation time of GRAMPC is almost constant for each MPC iteration, which is important for embedded control applications and to give tight upper bounds on the computation time for real-time guarantees. ACADO and VIATOC show a larger variation of the computation time over the iterations, which is mainly due to the active set strategy that both solvers follow and the varying number of QP iterations in each real-time iteration of ACADO, cf. Houska et al. (2011).

In conclusion, it can be said that GRAMPC has overall fast and real-time feasible computation times for all benchmark problems, in particular for nonlinear systems and in connection with (nonlinear) constraints. Furthermore, GRAMPC scales well with increasing system dimension.

5.1.3 Embedded realization

In addition to the general MPC evaluation, this section evaluates the computation time and memory requirements of GRAMPC for the benchmark problems on real-time and embedded hardware. GRAMPC was implemented on a dSpace MicroLabbox (DS1202) with a \(2\,\hbox {GHz}\) Freescale QorIQ processor as well as on the microcontroller RH850/P1M from Renesas with a CPU frequency of \(160\,\hbox {MHz}\), \(2\,\hbox {MB}\) program flash and \(128\,\hbox {kB}\) RAM. This processor is typically used in electronic control units (ECU) in the automotive industry. The GRAMPC implementation on this microcontroller therefore can be seen as a prototypical ECU realization. As is commonly done in embedded systems, GRAMPC was implemented using single-precision floating point arithmetic on both systems due to the floating point units of the processors.

Table 3 lists the computation time and RAM memory footprint of GRAMPC on both hardware platforms for the testbench problems in Tables 1 and 2. The settings of GRAMPC are the same as in the previous section, except for the floating point precision. Due to the compilation size limit of the ECU compiler (\(<10\) kB), the nonlinear chain examples as well as the PDE reactor could not be compiled on the ECU.

The computation times on the dSpace hardware are below the sampling time for all example problems. The same holds for the ECU implementation, except for the 2D-crane, the PMSM, and the VTOL example. However, as mentioned before, tuning of the algorithm can further reduce the runtime, as most of the multiplier and penalty update parameters are taken as default. Note that there is no constant scaling factor between the computation times on dSpace and ECU level, which is probably due to the different realization of the math functions by the respective floating point unit/compiler on the different hardware.

The required memory is below 9 kB for all examples except the nonlinear chain and the PDE reactor, i.e. less than 7% of the available RAM on the considered ECU. Although the nonlinear chain and the PDE reactor could not be compiled on the ECU as mentioned above, the memory usage as well as the computation time increase only linearly with the size of the system (using the same GRAMPC settings). Overall, the computational speed and the small memory footprint demonstrate the applicability of GRAMPC for embedded systems.

5.2 Application examples

This section discusses some application examples, including a more detailed view on two selected problems from the testbench collection (state constrained and semi-implicit problem), a shrinking horizon MPC application, an equality constrained OCP as well as a moving horizon estimation problem.

5.2.1 Nonlinear constrained model predictive control

The 2D-crane example in Tables 1 and 2 is a particularly challenging one, as it is subject to a nonlinear constraint that models the collision avoidance of an object or obstacle. A schematic representation of the overhead crane is given in Fig. 7. The crane has three degrees-of-freedom and the nonlinear dynamics read as (Käpernick and Graichen 2013)

with the gravitational constant g. The system state \(\varvec{x}=[s_{\text {C}},\dot{s}_{\text {C}}, s_{\text {R}},\dot{s}_{\text {R}},\phi ,\dot{\phi }]^{\mathsf{T}}\in {\mathbb{R}}^6\) comprises the cart position \(s_{\text {C}}\), the rope length \(s_{\text {R}}\), the angular deflection \(\phi\) and the corresponding velocities. The two controls \(\varvec{u} \in {\mathbb{R}}^2\) are the cart acceleration \(u_1\) and the rope acceleration \(u_2\), respectively.

The cost functional (2b) consists of the integral part

which penalizes the deviation from the desired setpoints \(\varvec{x}_{\mathrm{des}} \in {\mathbb{R}}^6\) and \(\varvec{u}_{\mathrm{des}} \in {\mathbb{R}}^2\), respectively. The weight matrices are set to \(\varvec{Q}={\mathrm{diag}}(1,2,2,1,1,4)\) and \(\varvec{R} ={\mathrm{diag}}(0.05,0.05)\) (omitting units). The controls and angular velocity are subject to the box constraints \(|u_i| \le 2 \,{\text{m\,s}^{-2}},\,i\in \{1,2\}\) and \(|\dot{\phi }| \le 0.3\, \hbox {rad\,s}^{-1}\). In addition, the nonlinear inequality constraint

is imposed, which represents a geometric security constraint, e.g. for trucks, over which the load has to be lifted, see Fig. 8 (right).

The prediction horizon and sampling time for the crane problem are set to \(T = 2 \,\text {s}\) and \(\varDelta t = 2\,\hbox {ms}\), respectively. The number of augmented Lagrangian steps and inner gradient iterations of GRAMPC are set to \((i_{\text {max}}, j_{\text {max}}) = (1,2)\). These settings correspond to the computational results in Tables 2 and 3.

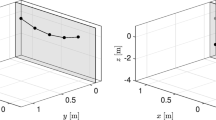

The right part of Fig. 7 illustrates the movement of the overhead crane from the initial state \(\varvec{x}_0 = \left[ -2 \,{\text{m}},0, 2\,{\text{m}},0,0,0 \right] ^{\mathsf{T}}\) to the desired setpoint \(\varvec{x}_{\text {des}} = \left[ 2\,{\text{m}},0, 2 \,{\text{m}},0,0,0 \right] ^{\mathsf{T}}\). Figure 8 shows the corresponding trajectories of the states \(\varvec{x}(t)\) and controls \(\varvec{u}(t)\) as well as the nonlinear constraint (41) plotted as time function \(h(\varvec{x}(t))\). This transition problem is quite challenging, since the nonlinear constraint (41) is active for more than half of the simulation time. A slight initial violation of the constraint \(h(\varvec{x})\) is visible, which should be negligible in practical applications. Nevertheless, one can satisfy the constraint to a higher accuracy by increasing the number of iterations \((i_{\text {max}}, j_{\text {max}})\), in particular of the augmented Lagrangian iterations. This behaviour is pictured in Fig. 9, where the cost function as well as the necessary computation time are shown with regard to the corresponding iteration count. It can be observed that the gradient algorithm quickly converges to a good solution and that the optimality of the solution improves further with increasing computation time (meaning increased iteration count, cf. the middle plot). The right plot of Fig. 9 shows the relative deviation from the optimal cost, i.e. \((J-J_{\mathrm{opt}})/J_{\mathrm{opt}}\), in relation to the necessary computation time. For each computation time there exists an optimal iteration combination, shown by the Pareto front (dashed black line) in the right plot of Fig. 9.
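How such a Pareto front can be extracted from measured pairs of computation time and relative cost deviation is sketched below. The sample values are invented purely to exercise the code and are not results from the paper; the tuple layout (time, deviation, \((i_{\text {max}},j_{\text {max}})\)) is likewise an assumption.

```python
def pareto_front(points):
    """Return the points not dominated in (time, deviation); smaller is better."""
    front = []
    best_dev = float("inf")
    # After sorting by time, a point is Pareto optimal exactly if it improves
    # on the smallest deviation reached by all faster settings.
    for time, dev, label in sorted(points):
        if dev < best_dev:
            front.append((time, dev, label))
            best_dev = dev
    return front


# Hypothetical measurements: (time in ms, (J - J_opt) / J_opt, (i_max, j_max))
samples = [
    (0.02, 0.30, (1, 2)),
    (0.04, 0.12, (1, 4)),
    (0.05, 0.20, (2, 2)),
    (0.09, 0.05, (2, 4)),
    (0.12, 0.08, (3, 3)),
]
front = pareto_front(samples)
```

In this made-up data set the settings (2, 2) and (3, 3) are dominated, since another setting is both faster and closer to the optimal cost.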

5.2.2 MPC on shrinking horizon

“Classical” MPC with a constant horizon length typically acts as an asymptotic controller in the sense that a desired setpoint is only reached asymptotically. MPC on a shrinking horizon instead reduces the horizon time T in each sampling step in order to reach the desired setpoint in finite time. In particular, if the desired setpoint is incorporated into a terminal constraint and the prediction horizon T is optimized in each MPC step, then T will be automatically reduced over the runtime due to the principle of optimality.

Shrinking horizon MPC with GRAMPC is illustrated for the following double integrator problem

with the state \(\varvec{ x}=[ x_1, x_2]^{\mathsf{T}}\) and control u subject to the box constraint (42d). The weight \(r>0\) in the cost functional (42a) allows a trade-off between energy optimality and time optimality of the MPC. The desired setpoint \(\varvec{x}_{\text {des}}\) is added as terminal constraint (42e), i.e. \(\varvec{g}_T(\varvec{ x}(T)) := \varvec{ x}(T) - \varvec{x}_{\text {des}} = \varvec{0}\) in view of (1c), and the prediction horizon T is treated as optimization variable in addition to the control \(u(\tau )\), \(\tau \in [0,T]\).

For the simulations, the weight in the cost functional is set to \(r = 0.01\) and the initial value of the horizon length is chosen as \(T=6\). The iteration numbers for GRAMPC are set to \((i_{\text {max}},j_{\text {max}})=(1,2)\) in conjunction with a sampling time of \(\varDelta t=0.001\) in order to resemble a real-time implementation. Figure 10 shows the simulation results for the double integrator problem with the desired setpoint \(\varvec{x}_{\text {des}}=\varvec{0}\) and the initial state \(\varvec{x}(0)=[-1,-1]^{\mathsf{T}}\). The state trajectories reach the origin in finite time, corresponding to the anticipated behavior of the shrinking horizon MPC scheme. The lower right plot of Fig. 10 shows the temporal evolution of the horizon length T over the runtime t. The initial end time of \(T=6\) is marked as a red circle. In the first MPC steps, the optimization quickly decreases the end time to approximately \(T=3.5\). In this short time interval, GRAMPC produces a suboptimal solution due to the strict limitation of the iterations \((i_{\text {max}},j_{\text {max}})\). Afterwards, however, the prediction horizon declines linearly, which concurs with the principle of optimality and shows the optimality of the computed trajectories after the initialization phase. In the simulated example, this knowledge is incorporated in the MPC implementation by subtracting the sampling time \(\varDelta t\) from the last solution of T for warm-starting the next MPC step. The simulation is stopped as soon as the horizon length reaches its minimum value \(T_{\mathrm{min}} = \varDelta t\).
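The warm-start rule for the horizon length can be written in a few lines. The sketch omits the optimization of T within each MPC step and only shows the shift by one sampling interval and the stopping condition; the function names are hypothetical, and the numerical values are chosen to be exactly representable in binary floating point, not taken from the simulation above.

```python
def warm_start_horizon(t_hor, dt, t_min):
    """Shift the last horizon length by one sampling step, bounded by t_min."""
    return max(t_hor - dt, t_min)


def steps_until_stop(t_hor, dt, t_min):
    """Count MPC steps until the horizon reaches its minimum value."""
    steps = 0
    while t_hor > t_min:
        t_hor = warm_start_horizon(t_hor, dt, t_min)
        steps += 1
    return steps


n_steps = steps_until_stop(3.5, 0.125, 0.125)
```

Once the optimized horizon declines linearly, as in the phase after initialization, this shift already provides the optimal end time for the next step, so the optimizer only has to correct small deviations.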

Fig. 10 MPC trajectories with shrinking horizon for the double integrator problem (42)

5.2.3 Semi-implicit problems

The system formulations that can be handled with GRAMPC include DAE systems with singular mass matrix \(\varvec{M}\) as well as general semi-implicit systems. An application example is the discretization of spatially distributed systems by means of finite elements. This is illustrated for a quasi-linear diffusion–convection–reaction system, which is also implemented in the testbench (PDE reactor example). The thermal behavior of the reactor is described on the one-dimensional spatial domain \(z\in (0,1)\) using the PDE formulation (Utz et al. 2010)

with the boundary and initial conditions

for the temperature \(\theta =\theta (z,t)\). The process is controlled by the boundary control \(u=u(t)\). Diffusive and convective processes of the reactor are modeled by the nonlinear heat equation (43a) with the parameters \(q_1= {2}\), \(q_2= {-0.05}\), and \(\nu = {1}\), respectively. Reaction effects are included using the parameters \(r_0= {1}\) and \(r_1= {0.2}\). The Neumann boundary condition (43b), the Robin boundary condition (43c), and the initial condition (43d) complete the mathematical description of the system dynamics. Both spatial and time domain are normalized for the sake of simplicity. A more detailed description of the system dynamics can be found in Utz et al. (2010).

The PDE system (43) is approximated by an ODE system of the form (16a) by applying a finite element discretization technique (Zienkiewicz and Morgan 1983), whereby the new state variables \(\varvec{x}\in {\mathbb{R}}^{N_{\varvec{x}}}\) approximate the temperature \(\theta\) on the discretized spatial domain z with \(N_{\varvec{x}}\) spatial grid points. The finite element discretization eventually leads to a finite-dimensional system dynamics of the form \(\varvec{M}\varvec{\dot{x}} = \varvec{f}(\varvec{x},u)\) with the mass matrix \(\varvec{M}\in {\mathbb{R}}^{N_{\varvec{x}}\times N_{\varvec{x}}}\) and the nonlinear system function \(\varvec{f}(\varvec{x}, u)\). In particular, \(N_{\varvec{x}}=11\) grid points are chosen for the GRAMPC simulation of the reactor (43). The upper right plot of Fig. 11 shows the sparsity structure of the mass matrix \(\varvec{M}\in {\mathbb{R}}^{11\times 11}\).
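Why a finite element discretization produces a sparse, banded mass matrix can be seen from a small assembly sketch. It assumes piecewise linear (hat) basis functions on a uniform grid, which the text does not specify, and it only assembles the standard mass matrix rather than the full reactor model (43).

```python
def linear_fe_mass_matrix(n_nodes, length=1.0):
    """Assemble the mass matrix for linear elements on a uniform 1D grid."""
    h = length / (n_nodes - 1)                 # element length
    m = [[0.0] * n_nodes for _ in range(n_nodes)]
    for e in range(n_nodes - 1):
        # Element mass matrix h/6 * [[2, 1], [1, 2]] added to nodes e and e + 1
        m[e][e] += 2.0 * h / 6.0
        m[e][e + 1] += h / 6.0
        m[e + 1][e] += h / 6.0
        m[e + 1][e + 1] += 2.0 * h / 6.0
    return m


n = 11
mass = linear_fe_mass_matrix(n)
bandwidth = max(abs(i - j) for i in range(n) for j in range(n) if mass[i][j] != 0.0)
nonzeros = sum(1 for row in mass for entry in row if entry != 0.0)
```

Under these assumptions the matrix for 11 grid points is tridiagonal with 31 nonzero entries out of 121, since each basis function overlaps only with its direct neighbors.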

The control task for the reactor is the stabilization of a stationary profile \(\varvec{x}_{\text {des}}\) which is accounted for in the quadratic MPC cost functional

The desired setpoint \((\varvec{x}_{\text {des}},u_{\text {des}})\) as well as the initial values \((\varvec{x}_0,u_0)\) are numerically determined from the stationary differential equation

with the corresponding boundary conditions

The prediction horizon and sampling time of the MPC scheme are set to \(T= {0.2}\) and \(\varDelta t= {0.005}\). The number of iterations is limited by \((i_{\text {max}},j_{\text {max}})=(1,2)\). The box constraints for the control are chosen as \(|u(t)| \le 2\).

The numerical integration in GRAMPC is carried out using the solver RODAS (Hairer and Wanner 1996). The sparse numerics of RODAS allow one to cope with the banded structure of the matrices in a computationally efficient manner. Figure 11 shows the setpoint transition from the initial temperature profile \(\varvec{x}_0\) to the desired temperature \(\varvec{x}_{\mathrm{des}}=[2.00, 1.99, 1.97, 1.93, 1.88, 1.81, 1.73, 1.63, 1.51, 1.38, 1.23]^{\mathsf{T}}\) and desired control \(u_{\text {des}}=-1.57\).

Fig. 11 Simulated MPC trajectories for the PDE reactor (43)

5.2.4 OCP with equality constraints

An illustrative example of an optimal control problem with equality constraints is a dual arm robot with closed kinematics, e.g. to handle or carry work pieces with both arms. For simplicity, a planar dual arm robot with the joint angles \((x_1, x_2, x_3)\) and \((x_4, x_5, x_6)\) for the left and right arm is considered. The dynamics are given by simple integrators

with the joint velocities as control input \(\varvec{u}\). Given the link lengths \(\varvec{a} = [a_1, a_2, a_3, a_4, a_5, a_6]\), the forward kinematics of the left and right arm are computed by

and

The closed kinematic chain is enforced by the equality constraint

A point-to-point motion from \(\varvec{x}_0 = [ \frac{\pi }{2}, -\frac{\pi }{2}, 0, -\frac{\pi }{2}, \frac{\pi }{2}, 0 ]^{\mathsf{T}}\) to \(\varvec{x}_f = [ -\frac{\pi }{2}, \frac{\pi }{2}, 0, \frac{\pi }{2}, -\frac{\pi }{2}, 0 ]^{\mathsf{T}}\) is considered as control task, which is formulated as the optimal control problem

with the fixed end time \(T = 10 \, \text {s}\) and the control constraints \(-\varvec{u}_{\text {min}} = \varvec{u}_{\text {max}} = [1,1,1,1,1,1]^{\mathsf{T}}\text {s}^{-1}\), which limit the angular speeds of the robot arms. The cost functional minimizes the squared joint velocities with \(\varvec{R} = \varvec{I}_{6}\).
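The role of the forward kinematics and of the closure constraint can be sketched as follows. The sketch does not reproduce the equations of this section: the angle convention (absolute link orientation as cumulative sum of joint angles), the unit link lengths, the base positions, and the base orientation of the right arm (rotated by \(\pi\) so that the arms face each other) are all assumptions, chosen here only so that the start and end configurations \(\varvec{x}_0\) and \(\varvec{x}_f\) given above satisfy the closure. The names `planar_fk` and `closure_residual` are hypothetical, and only the end-effector positions are compared.

```python
import numpy as np

A = np.ones(6)                      # assumed unit link lengths a_1..a_6
BASE_LEFT = (0.0, 0.0)              # assumed base position of the left arm
BASE_RIGHT = (4.0, 0.0)             # assumed base position of the right arm

def planar_fk(q, a, base_xy, base_angle=0.0):
    """End-effector position of a planar serial arm (assumed convention)."""
    phi = base_angle + np.cumsum(q)                 # absolute orientation of each link
    x = base_xy[0] + np.sum(a * np.cos(phi))
    y = base_xy[1] + np.sum(a * np.sin(phi))
    return np.array([x, y])

def closure_residual(x):
    """Position mismatch between both end effectors; zero for a closed chain."""
    left = planar_fk(x[:3], A[:3], BASE_LEFT, 0.0)
    right = planar_fk(x[3:], A[3:], BASE_RIGHT, np.pi)
    return left - right

x0 = np.array([np.pi / 2, -np.pi / 2, 0.0, -np.pi / 2, np.pi / 2, 0.0])
xf = -x0
res_start = closure_residual(x0)
res_end = closure_residual(xf)
res_open = closure_residual(np.zeros(6))            # both arms stretched out: chain is open
```

In an OCP formulation, a residual of this kind would enter as the equality constraint that must vanish along the whole trajectory, not only at the two end points.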

Table 4 shows the computation results of GRAMPC for solving OCP (49a) with increasingly restrictive values of the gradient tolerance \(\varepsilon _{\text {rel,c}}\) and constraint tolerance \(\varvec{\varepsilon }_{\varvec{g}}\) that are used for checking convergence of Algorithms 1 and 2. The table lists the required computation time \(t_{\text {CPU}}\) as well as the average number of (inner) gradient iterations and the number of (outer) augmented Lagrangian iterations. The successive reduction of the tolerances \(\varepsilon _{\text {rel,c}}\) and \(\varvec{\varepsilon }_{\varvec{g}}\) highlights that the augmented Lagrangian framework is able to quickly compute a solution with moderate accuracy. When the tolerances are tightened further, both the computation time and the required number of iterations increase markedly. This is to be expected, as augmented Lagrangian and gradient algorithms are linearly convergent, as opposed to the super-linear or quadratic convergence of, e.g., SQP or interior point methods. However, as the main application of GRAMPC is model predictive control, the ability to quickly compute suboptimal solutions that are improved over time is more important than the numerical solution for very small tolerances.
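The interplay of inner gradient iterations, outer multiplier updates, and the two tolerances can be illustrated on a toy problem. The following sketch is not the GRAMPC algorithm: it minimizes \(\tfrac{1}{2}\Vert \varvec{u}\Vert ^2\) subject to \(u_1+u_2-1=0\) with a fixed step size, a fixed penalty parameter, and an absolute gradient tolerance, all of which are simplifying assumptions. The function name and its arguments are hypothetical.

```python
import numpy as np

def augmented_lagrangian(eps_grad, eps_g, c=10.0, max_outer=50, max_inner=2000):
    """Toy augmented Lagrangian loop: min 0.5*||u||^2 s.t. u1 + u2 - 1 = 0."""
    u = np.array([2.0, -1.0])           # arbitrary initial guess
    mu = 0.0                            # Lagrange multiplier estimate
    step = 1.0 / (1.0 + 2.0 * c)        # 1 / largest Hessian eigenvalue of the inner problem
    total_inner = 0
    for outer in range(1, max_outer + 1):
        for _ in range(max_inner):      # inner loop: gradient descent on the augmented Lagrangian
            g = u.sum() - 1.0
            grad = u + (mu + c * g) * np.ones(2)
            if np.linalg.norm(grad) <= eps_grad:
                break
            u = u - step * grad
            total_inner += 1
        g = u.sum() - 1.0
        if abs(g) <= eps_g:             # constraint tolerance reached
            break
        mu += c * g                     # outer loop: multiplier update
    return u, mu, outer, total_inner

u_loose, mu_loose, outer_loose, inner_loose = augmented_lagrangian(1e-2, 1e-2)
u_tight, mu_tight, outer_tight, inner_tight = augmented_lagrangian(1e-8, 1e-8)
```

For this toy problem the exact solution is \(\varvec{u}=[0.5, 0.5]^{\mathsf{T}}\) with multiplier \(-0.5\). Comparing the iteration counts of the two calls shows the same qualitative trend as discussed above: a moderately accurate solution is found with few iterations, whereas tight tolerances require noticeably more.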

The resulting trajectory of the planar robot is depicted in Fig. 12 and shows that the solution of the optimal control problem (49a) involves moving through singular configurations of the left and right arm, which makes this problem quite challenging.

5.2.5 Moving horizon estimation

Another application domain for GRAMPC is moving horizon estimation (MHE), which takes advantage of the parameter optimization functionality. This is illustrated for the CSTR reactor model listed in the MPC testbench in Table 1. The system dynamics of the reactor are given by (Rothfuss et al. 1996)

with the state vector \(\varvec{x}=[c_{\mathrm{A}}, c_{\mathrm{B}},T, T_{\mathrm{C}}]^{\mathsf{T}}\) consisting of the monomer and product concentrations \(c_{\mathrm{A}}\), \(c_{\mathrm{B}}\) and the reactor and cooling temperatures T and \(T_{\mathrm{C}}\). The control variables \(\varvec{u}=[u_1,u_2]^{\mathsf{T}}\) are the normalized flow rate and cooling power. The measured quantities are the temperatures \(y_1 = T\) and \(y_2 = T_{\mathrm{C}}\). The parameter values and a more detailed description of the system are given in Rothfuss et al. (1996).

The following scenario considers the interconnection of the MHE with the MPC from the testbench, i.e. the state \(\varvec{{\hat{x}}}_k\) at the sampling time \(t_k\) is estimated and provided to the MPC. The cost functional of the MPC is designed according to

where \(\varDelta \varvec{x} = \varvec{x} - \varvec{x}_{\mathrm{des}}\) and \(\varDelta \varvec{u} = \varvec{u} - \varvec{u}_{\mathrm{des}}\) penalize the deviation of the state and control from the desired setpoint \((\varvec{x}_{\mathrm{des}},\varvec{u}_{\mathrm{des}})\). The MHE uses the cost functional defined in Sect. 2.3, cf. (5a). The sampling time for both MPC and MHE is set to \(\varDelta t=1 \,\text{s}\). The MPC runs with a prediction horizon of \(T=20\) min and 40 discretization points, while the MHE horizon is set to \(T_{\mathrm{MHE}}=10 \,\text{s}\) with 10 discretization points. The GRAMPC implementation of the MHE uses only one gradient iteration per sampling step, i.e. \((i_{\text {max}},j_{\text {max}})=(1,1)\), while the implementation of the MPC uses three gradient iterations, i.e. \((i_{\text {max}},j_{\text {max}}) =(1,3)\).

The simulated scenario in Fig. 13 consists of alternating setpoint changes between two stationary points. The two measured temperatures are subject to Gaussian measurement noise with a standard deviation of \(4\,^\circ\hbox {C}\). The initial guess for the state vector \(\varvec{x}\) of the MHE differs from the true initial values by 100 kmol m\(^{-3}\) for both concentrations \(c_{\text {A}}\) and \(c_{\text {B}}\) and by \(5\,^\circ\hbox {C}\) and \(-7\,^\circ\hbox {C}\) for the reactor and cooling temperature, respectively. This initial disturbance vanishes within a few iterations and the MHE tracks the ground truth (i.e. the simulated state values) closely, with an average error of \(\delta \varvec{{\hat{x}}} =\) [7.12 kmol m\(^{-3}\), 6.24 kmol m\(^{-3}\), 0.10 \(^\circ \hbox {C}\), 0.09 \(^\circ \hbox {C}]^{\mathsf{T}}\). This corresponds to a relative error of less than \(0.1\%\) for each state variable, when normalized to the respective maximum value. One iteration of the MHE requires a computation time of \(t_{\mathrm{CPU}}=11\,\upmu \text{s}\) to calculate a new estimate of the state vector \(\varvec{x}\), which is about one third of the time necessary for one MPC iteration.

6 Conclusions

The augmented Lagrangian algorithm presented in this paper is implemented in the nonlinear model predictive control (MPC) toolbox GRAMPC and extends its original version that was published in 2014 in several significant aspects. The system class that can be handled by GRAMPC comprises general nonlinear systems described by explicit or semi-implicit differential equations or differential-algebraic equations (DAE) of index 1. Besides input constraints, the algorithm accounts for nonlinear state and/or input dependent equality and inequality constraints as well as for unknown parameters and a possibly free end time as further optimization variables, which is relevant, for instance, for moving horizon estimation or MPC on a shrinking horizon. The computational efficiency of GRAMPC is illustrated for a testbench of representative MPC problems and in comparison with two state-of-the-art nonlinear MPC toolboxes. In particular, the augmented Lagrangian algorithm implemented in GRAMPC is tailored to embedded MPC with very low memory requirements. This is demonstrated in terms of runtime results on dSPACE and ECU hardware that is typically used in automotive applications. GRAMPC is available at http://sourceforge.net/projects/grampc and is licensed under the GNU Lesser General Public License (version 3), which is suitable for both academic and industrial/commercial purposes.

Notes

For example, software or hardware realization of sine or cosine functions.

References

Allaire G (2007) Numerical analysis and optimization. Oxford University Press, Oxford

Barzilai J, Borwein JM (1988) Two-point step size gradient methods. IMA J Numer Anal 8(1):141–148

Bemporad A, Borrelli F, Morari M (2002a) Model predictive control based on linear programming—the explicit solution. IEEE Trans Autom Control 47(12):1974–1985

Bemporad A, Morari M, Dua V, Pistikopoulos E (2002b) The explicit linear quadratic regulator for constrained systems. Automatica 38(1):3–20

Bergounioux M (1997) Use of augmented Lagrangian methods for the optimal control of obstacle problems. J Optim Theory Appl 95(1):101–126

Berkovitz LD (1974) Optimal control theory. Springer, New York

Bertsekas DP (1996) Constrained optimization and Lagrange multiplier methods. Academic Press, Belmont

Boyd S, Vandenberghe L (2004) Convex optimization. Cambridge University Press, Cambridge

Cao Y, Li S, Petzold L, Serban R (2003) Adjoint sensitivity analysis for differential-algebraic equations: the adjoint DAE system and its numerical solution. SIAM J Sci Comput 24(3):1076–1089

Chen H, Allgöwer F (1998) A quasi-infinite horizon nonlinear model predictive control scheme with guaranteed stability. Automatica 34(10):1205–1217

Conn AR, Gould NIM, Toint PL (1992) LANCELOT: a Fortran package for large-scale nonlinear optimization (release A). Springer, Berlin

de Aguiar M, Camponogara E, Foss B (2016) An augmented Lagrangian method for optimal control of continuous time DAE systems. In: Proceedings of the IEEE conference on control applications (CCA), Buenos Aires, pp 1185–1190

Diehl M, Findeisen R, Allgöwer F, Bock H, Schlöder J (2004) Nominal stability of real-time iteration scheme for nonlinear model predictive control. IEE Proc 152(3):296–308

Diehl M, Bock H, Schlöder J (2005) A real-time iteration scheme for nonlinear optimization in optimal feedback control. SIAM J Control Optim 43(5):1714–1736

Domahidi A, Zgraggen A, Zeilinger M, Morari M, Jones C (2012) Efficient interior point methods for multistage problems arising in receding horizon control. In: Proceedings of the IEEE conference on decision and control (CDC), Maui, pp 668–674

Dunn JC (1996) On \(L^2\) sufficient conditions and the gradient projection method for optimal control problems. SIAM J Control Optim 34(4):1270–1290

Englert T, Graichen K (2018) Nonlinear model predictive torque control of PMSMs for high performance applications. Control Eng Pract 81:43–54

Ferreau H, Bock H, Diehl M (2008) An online active set strategy to overcome the limitations of explicit MPC. Int J Robust Nonlinear Control 18(8):816–830

Ferreau H, Kirches C, Potschka A, Bock H, Diehl M (2014) qpOASES: a parametric active-set algorithm for quadratic programming. Math Program Comput 6(4):327–363

Ferreau H, Almér S, Verschueren R, Diehl M, Frick D, Domahidi A, Jerez J, Stathopoulos G, Jones C (2017) Embedded optimization methods for industrial automatic control. In: Proceedings of 20th IFAC world congress, pp 13736–13751

Findeisen R, Graichen K, Mönnigmann M (2018) Embedded optimization in control: an introduction, opportunities, and challenges. Automatisierungstechnik 66(11):877–902 (in German)

Fortin M, Glowinski R (1983) Augmented Lagrangian methods: applications to the solution of boundary-value problems. North-Holland, Amsterdam

Giselsson P (2014) Improved fast dual gradient methods for embedded model predictive control. In: Proceedings of the 19th IFAC world congress, Cape Town, pp 2303–2309

Graichen K (2012) A fixed-point iteration scheme for real-time model predictive control. Automatica 48(7):1300–1305

Graichen K, Kugi A (2010) Stability and incremental improvement of suboptimal MPC without terminal constraints. IEEE Trans Autom Control 55(11):2576–2580

Graichen K, Egretzberger M, Kugi A (2010) A suboptimal approach to real-time model predictive control of nonlinear systems. Automatisierungstechnik 58(8):447–456

Grüne L (2013) Economic receding horizon control without terminal constraints. Automatica 49(3):725–734

Grüne L, Palma V (2014) On the benefit of re-optimization in optimal control under perturbations. In: Proceedings of the 21st international symposium on mathematical theory of networks and systems (MTNS), Groningen, pp 439–446

Hager W (1990) Multiplier methods for nonlinear optimal control. SIAM J Numer Anal 27(4):1061–1080

Hairer E, Wanner G (1996) Solving ordinary differential equations: stiff and differential-algebraic problems. Springer, Heidelberg

Harder K, Buchholz M, Niemeyer J, Remele J, Graichen K (2017) Nonlinear MPC with emission control for a real-world heavy-duty diesel engine. In: Proceedings of the IEEE international conference on advanced intelligent mechatronics (AIM), Munich, pp 1768–1773

Hartley E, Maciejowski J (2015) Field programmable gate array based predictive control system for spacecraft rendezvous in elliptical orbits. Optim Control Appl Methods 36(5):585–607

Houska B, Ferreau H, Diehl M (2011) ACADO toolkit—an open source framework for automatic control and dynamic optimization. Optim Control Appl Methods 32(3):298–312

Ito K, Kunisch K (1990) The augmented Lagrangian method for equality and inequality constraints in Hilbert spaces. Math Program 46:341–360

Jones C, Domahidi A, Morari M, Richter S, Ullmann F, Zeilinger M (2012) Fast predictive control: real-time computation and certification. In: Proceedings of the 4th IFAC nonlinear predictive control conference (NMPC), Leeuwenhorst, pp 94–98

Kalmari J, Backman J, Visala A (2015) A toolkit for nonlinear model predictive control using gradient projection and code generation. Control Eng Pract 39:56–66