Abstract

Modern engineering design optimization often relies on computer simulations to evaluate candidate designs, a setting that amounts to optimizing a computationally expensive black-box function. In such problems, some candidate designs often cause the simulation to fail, which can degrade the effectiveness of the optimization. To address this issue, this paper proposes a new optimization algorithm which incorporates classifiers into the optimization search. The classifiers predict which candidate designs are expected to cause the simulation to fail, and their prediction is used to bias the search towards valid designs, namely, those for which the simulation is expected to succeed. However, the effectiveness of this approach strongly depends on the type of metamodels and classifiers being used, and due to the high cost of evaluating the simulation-based objective function it may be impractical to identify the most suitable types of each by numerical experiments. To address these issues, the proposed algorithm offers two main contributions: (a) it uses ensembles of both metamodels and classifiers to benefit from the diversity of their predictions, and (b) to improve the search effectiveness, it continuously adapts the ensembles’ topology during the search.
Performance analysis of the proposed algorithm using an engineering problem of airfoil shape optimization shows that: (a) incorporating classifiers into the search was an effective approach to handling simulation failures, (b) using ensembles of metamodels and classifiers, and updating their topology during the search, improved the search effectiveness in comparison to using a single metamodel and classifier, and (c) it is beneficial to update the topology of the metamodel ensemble in all problem types, and to update the classifier ensemble topology in problems where simulation failures are prevalent.

References

Abramson MA, Asaki TJ, Dennis JE, Magallanez R, Sottile M (2012) An efficient class of direct search surrogate methods for solving expensive optimization problems with CPU-time-related functions. Struct Optim 45(1):53–64

Acar E (2010) Various approaches for constructing an ensemble of metamodels using local measures. Struct Multidisc Optim 42(6):879–896

Alexandrov NM, Dennis JE, Lewis RM, Torczon V (1997) A trust region framework for managing the use of approximation models in optimization. Tech. Rep. NASA/CR-201745, ICASE Report No. 97-50, ICASE

Balabanov V, Young S, Hambric S, Simpson TW (2008) Axisymmetric vehicle nose shape optimization. In: Proceedings of the 12th AIAA/ISSMO multidisciplinary analysis and optimization conference, American Institute of Aeronautics and Astronautics, Reston, Virginia

Bishop CM (1995) Neural networks for pattern recognition. Oxford University Press, New York

Booker AJ, Dennis JE, Frank PD, Serafini DB, Torczon V, Trosset MW (1999) A rigorous framework for optimization of expensive functions by surrogates. Struct Optim 17(1):1–13

Büche D, Schraudolph NN, Koumoutsakos P (2005) Accelerating evolutionary algorithms with Gaussian process fitness function models. IEEE Trans Syst Man Cybern 35(2):183–194

Chipperfield A, Fleming P, Pohlheim H, Fonseca C (1994) Genetic algorithm TOOLBOX for use with MATLAB, version 1.2. Department of Automatic Control and Systems Engineering, University of Sheffield, Sheffield

Conn AR, Scheinberg K, Toint PL (1997) On the convergence of derivative-free methods for unconstrained optimization. In: Iserles A, Buhmann MD (eds) Approximation theory and optimization: tributes to M.J.D. Powell. Cambridge University Press, Cambridge, pp 83–108

Conn AR, Scheinberg K, Toint PL (1998) A derivative free optimization algorithm in practice. In: Proceedings of the seventh AIAA/USAF/NASA/ISSMO symposium on multidisciplinary analysis and optimization, American Institute of Aeronautics and Astronautics, Reston, Virginia, AIAA paper number AIAA-1998-4718

Conn AR, Scheinberg K, Vicente LN (2009) Introduction to derivative-free optimization. MPS-SIAM series on optimization. SIAM, Philadelphia

Dingding C, Zhong A, Gano J, Hamid S, De Jesus O, Stephenson S (2007) Construction of surrogate model ensembles with sparse data. In: Proceedings of the 2007 IEEE conference on evolutionary computation-CEC 2007. IEEE, Piscataway, New Jersey, pp 244–251

Drela M, Youngren H (2001) XFOIL 6.9 User Primer. Department of Aeronautics and Astronautics, Massachusetts Institute of Technology, Cambridge, MA

Emmerich MTM, Giotis A, Özdemir M, Bäck T, Giannakoglou KC (2002) Metamodel-assisted evolution strategies. In: Merelo Guervós JJ (ed) The 7th international conference on parallel problem solving from nature-PPSN VII, Springer, Berlin, no. 2439 in Lecture Notes in Computer Science, pp 361–370

Filippone A (2006) Flight performance of fixed and rotary wing aircraft, 1st edn. Elsevier, Amsterdam

Forrester AIJ, Keane AJ (2008) Recent advances in surrogate-based optimization. Prog Aerosp Sci 45(1–3):50–79

Goel T, Haftka RT, Shyy W, Queipo NV (2007) Ensembles of surrogates. Struct Multidisc Optim 33:199–216

Golberg MA, Chen CS, Karur SR (1996) Improved multiquadric approximation for partial differential equations. Eng Anal Boundary Elem 18:9–17

Handoko S, Kwoh CK, Ong YS (2010) Feasibility structure modeling: an effective chaperon for constrained memetic algorithms. IEEE Trans Evol Comput 14(5):740–758

Hansen N, Ostermeier A (2001) Completely derandomized self-adaptation in evolution strategies. Evol Comput 9(2):159–195

Hicks RM, Henne PA (1978) Wing design by numerical optimization. J Aircr 15(7):407–412

Jin Y, Sendhoff B (2004) Reducing fitness evaluations using clustering techniques and neural network ensembles. In: Deb K et al (eds) Proceedings of the genetic and evolutionary computation conference-GECCO 2004. Springer, Berlin, pp 688–699

Jin Y, Olhofer M, Sendhoff B (2002) A framework for evolutionary optimization with approximate fitness functions. IEEE Trans Evol Comput 6(5):481–494

Jones DR, Schonlau M, Welch WJ (1998) Efficient global optimization of expensive black-box functions. J Global Optim 13:455–492

Koehler JR, Owen AB (1996) Computer experiments. In: Ghosh S, Rao CR, Krishnaiah PR (eds) Handbook of statistics. Elsevier, Amsterdam, pp 261–308

Lim D, Ong YS, Jin Y (2007) A study on metamodeling techniques, ensembles and multi-surrogates in evolutionary computation. In: Thierens D (ed) Proceedings of the genetic and evolutionary computation conference-GECCO 2007. ACM Press, New York, pp 1288–1295

Madych WR (1992) Miscellaneous error bounds for multiquadric and related interpolators. Comput Math Appl 24(12):121–138

McKay MD, Beckman RJ, Conover WJ (1979) A comparison of three methods for selecting values of input variables in the analysis of output from a computer code. Technometrics 21(2):239–245

Neri F, Kotilainen N, Vapa M (2008) A memetic-neural approach to discover resources in P2P networks. In: Cotta C, van Hemert J (eds) Recent advances in evolutionary computation for combinatorial optimization, studies in computational intelligence, vol. 153/2008. Springer, Berlin, pp 113–129

Okabe T (2007) Stabilizing parallel computation for evolutionary algorithms on real-world applications. In: Proceedings of the 7th international conference on optimization techniques and applications-ICOTA 7. Universal Academy Press, Tokyo, pp 131–132

Poloni C, Giurgevich A, Onesti L, Pediroda V (2000) Hybridization of a multi-objective genetic algorithm, a neural network and a classical optimizer for a complex design problem in fluid dynamics. Comput Methods Appl Mech Eng 186(2–4):403–420

Queipo NV, Haftka RT, Shyy W, Goel T, Vaidyanathan R, Tucker KP (2005) Surrogate-based analysis and optimization. Prog Aerosp Sci 41:1–28

Rasheed K, Hirsh H, Gelsey A (1997) A genetic algorithm for continuous design space search. Artif Intell Eng 11:295–305

Ratle A (1999) Optimal sampling strategies for learning a fitness model. In: The 1999 IEEE congress on evolutionary computation-CEC 1999. IEEE, Piscataway, New Jersey, pp 2078–2085

Sheskin DJ (2007) Handbook of parametric and nonparametric statistical procedures, 4th edn. Chapman and Hall, Boca Raton

Simpson TW, Lin DKJ, Chen W (2001a) Sampling strategies for computer experiments: design and analysis. Int J Reliab Appl 2(3):209–240

Simpson TW, Poplinski JD, Koch PN, Allen JK (2001b) Metamodels for computer-based engineering design: survey and recommendations. Eng Comput 17:129–150

Tenne Y, Armfield SW (2008) A versatile surrogate-assisted memetic algorithm for optimization of computationally expensive functions and its engineering applications. In: Yang A, Shan Y, Thu Bui L (eds) Success in evolutionary computation, studies in computational intelligence, vol 92. Springer, Berlin, pp 43–72

Tenne Y, Goh CK (eds) (2010) Computational intelligence in expensive optimization problems. Evolutionary learning and optimization, vol 2. Springer, Berlin

Tenne Y, Izui K, Nishiwaki S (2010) Handling undefined vectors in expensive optimization problems. In: Di Chio C (ed) Proceedings of the 2010 EvoStar conference, Springer, Berlin, Lecture Notes in Computer Science, vol. 6024/2010, pp 582–591

Wu HY, Yang S, Liu F, Tsai HM (2003) Comparison of three geometric representations of airfoils for aerodynamic optimization. In: Proceedings of the 16th AIAA computational fluid dynamics conference, American Institute of Aeronautics and Astronautics, Reston, Virginia, AIAA 2003–4095

Wu X, Kumar V, Quinlan RJ, Ghosh J, Yang Q, Motoda H, McLachlan GJ, Ng A, Liu B, Yu PS, Zhou ZH, Steinbach M, Hand DJ, Steinberg D (2008) Top 10 algorithms in data mining. Knowl Inf Syst 14:1–37

Zahara E, Kao YT (2009) Hybrid Nelder–Mead simplex search and particle swarm optimization for constrained engineering design problems. Expert Syst Appl 36:3880–3886

Zhou X, Ma Y, Cheng Z, Liu L, Wang J (2010) Ensemble of metamodels with recursive arithmetic average. In: Luo Q (ed) Proceedings of the international conference on industrial mechatronics and automation-ICIMA 2010. IEEE, Piscataway, NJ, pp 178–182

Appendices

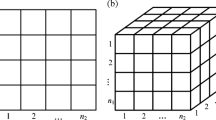

Appendix 1: Ensemble metamodels

Metamodels serve as computationally cheaper mathematical approximations of the true expensive objective function, and are typically interpolants which have been trained using previously evaluated vectors. In this study, the proposed algorithm used a metamodel ensemble comprising two metamodel types (Simpson et al. 2001b):

- Radial Basis Functions (RBF): The metamodel approximates the objective function by a superposition of basis functions of the form

$$\begin{aligned} \phi _{ i } ( {\varvec{x}}) = \phi ( ||{\varvec{x}}- {\varvec{x}}_{ i } ||_2 ) \,, \end{aligned} \tag{18}$$

where \({\varvec{x}}_i\) is an interpolation point. Given the training data \({\varvec{x}}_i \in \mathbb { R }^d,\) \(i = 1 \ldots n,\) and corresponding objective values \({f}( {\varvec{x}}_i), \) the RBF metamodel is then given by

$$\begin{aligned} {m}( {\varvec{x}}) = \sum _{i = 1}^{n} \alpha _i \phi _i( {\varvec{x}}) + c \,, \end{aligned} \tag{19}$$

where \(\alpha _i\) and \(c\) are coefficients determined from the interpolation conditions

$$\begin{aligned} {m}( {\varvec{x}}_i ) = {f}( {\varvec{x}}_i ) , \quad i = 1 \ldots n \,, \end{aligned} \tag{20a}$$

$$\begin{aligned} \sum _{i = 1 }^{ n } \alpha _i = 0 \, . \end{aligned} \tag{20b}$$

A common choice is the Gaussian basis function (Koehler and Owen 1996), defined as

$$\begin{aligned} \phi _i( {\varvec{x}}) = \exp \left( -\frac{ ||{\varvec{x}}- {\varvec{x}}_i ||_2^2 }{ \tau } \right) \,, \end{aligned} \tag{21}$$

where \(\tau \) controls the width of the Gaussians and is determined by cross-validation (CV) (Golberg et al. 1996). The set of interpolation equations (20) is often ill-conditioned, and so the proposed algorithm solves them using the singular value decomposition (SVD) procedure (Simpson et al. 2001b).

- Radial Basis Functions Network (RBFN): The metamodel aims to mimic the operation of a biological neural network. It uses RBFs as its ‘neurons’ or ‘processing units’, but in contrast to the RBF metamodel, it typically uses fewer RBFs than the number of sample points. This is done to improve the metamodel’s generalization ability, and therefore its prediction accuracy. Given a set of sample points, \({\varvec{x}}_i,\) \(i = 1 \ldots n,\) and their respective objective values, \({f}( {\varvec{x}}_i ),\) the RBFN metamodel is given by

$$\begin{aligned} {m}( {\varvec{x}}) = \sum _{i = 1}^{p} \alpha _i \phi _i( {\varvec{x}}) \,, \end{aligned} \tag{22}$$

where the processing units \(\phi _i( {\varvec{x}})\) are

$$\begin{aligned} \phi _i( {\varvec{x}}) = \exp \left( -\frac{ ||{\varvec{x}}- \hat{ {\varvec{x}}}_i ||_2^2 }{ \tau } \right) \,, \end{aligned} \tag{23}$$

and \(p \le n.\) The neurons’ centres, \(\hat{ {\varvec{x}}}_i,\) \(i = 1 \ldots p,\) need to be determined such that they provide a good representation of the sample points, and an established approach is to determine them by clustering (Bishop 1995). Accordingly, the proposed algorithm uses the k-means clustering algorithm to determine the centres. The parameter \(\tau ,\) which determines the width of the Gaussian functions of the neurons, is selected by cross-validation (Golberg et al. 1996). The coefficients \(\varvec{ \alpha }\) are determined as the least-squares solution of the overdetermined linear system \(\varPhi \varvec{ \alpha } = {\varvec{ f }},\) that is, from the normal equations

$$\begin{aligned} \varPhi ^T \varPhi \varvec{ \alpha } = \varPhi ^T {\varvec{ f }} \,, \end{aligned} \tag{24}$$

with

$$\begin{aligned} \varPhi _{ i, j } = \phi _j( {\varvec{x}}_i ) \,, \end{aligned} \tag{25}$$

where \({\varvec{x}}_i,\) \(i = 1 \ldots n,\) are the sample points, and \({\varvec{ f }}\) is the vector of corresponding objective function values. As mentioned in Sect. 3, the proposed algorithm used two RBFNs, one with \(p = 0.8n\) and another with \(p = 0.6n,\) which correspond to two different RBFN layouts, and therefore, two different approximations of the objective function.
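The two metamodel types described in this appendix can be sketched in a few lines of NumPy. This is a minimal illustration under stated assumptions, not the implementation used in the study: the function names (`gaussian_kernel`, `fit_rbf`, `kmeans`, `fit_rbfn`), the fixed value of τ (which the paper selects by cross-validation), the plain Lloyd-style k-means, and the quadratic stand-in objective are all hypothetical. `np.linalg.lstsq` is used as an SVD-based solver for both the interpolation conditions (20) and the least-squares problem behind (24).

```python
import numpy as np


def gaussian_kernel(X, centres, tau):
    """Matrix of Gaussian basis values exp(-||x - c||_2^2 / tau)."""
    sq_dist = ((X[:, None, :] - centres[None, :, :]) ** 2).sum(axis=2)
    return np.exp(-sq_dist / tau)


def fit_rbf(X, f, tau):
    """Interpolating RBF metamodel satisfying conditions (20a) and (20b)."""
    n = X.shape[0]
    A = np.zeros((n + 1, n + 1))
    A[:n, :n] = gaussian_kernel(X, X, tau)
    A[:n, n] = 1.0  # column for the constant term c in (19)
    A[n, :n] = 1.0  # row enforcing sum(alpha) = 0
    rhs = np.append(f, 0.0)
    # SVD-based solve, which tolerates an ill-conditioned system
    coef, *_ = np.linalg.lstsq(A, rhs, rcond=None)
    alpha, c = coef[:n], coef[n]
    return lambda Xq: gaussian_kernel(Xq, X, tau) @ alpha + c


def kmeans(X, p, iters=50, seed=0):
    """Plain Lloyd iterations; returns p cluster centres (illustrative only)."""
    rng = np.random.default_rng(seed)
    centres = X[rng.choice(X.shape[0], size=p, replace=False)].copy()
    for _ in range(iters):
        sq_dist = ((X[:, None, :] - centres[None, :, :]) ** 2).sum(axis=2)
        nearest = sq_dist.argmin(axis=1)
        for j in range(p):
            members = X[nearest == j]
            if len(members) > 0:  # keep the old centre if a cluster empties
                centres[j] = members.mean(axis=0)
    return centres


def fit_rbfn(X, f, tau, frac=0.8):
    """RBFN metamodel with p = frac * n neurons centred by k-means."""
    p = max(1, int(round(frac * X.shape[0])))
    centres = kmeans(X, p)
    Phi = gaussian_kernel(X, centres, tau)  # n x p design matrix of (25)
    # Least-squares solution, equivalent to the normal equations (24)
    alpha, *_ = np.linalg.lstsq(Phi, f, rcond=None)
    return lambda Xq: gaussian_kernel(Xq, centres, tau) @ alpha


# Hypothetical stand-in for the expensive objective: a cheap quadratic
rng = np.random.default_rng(1)
X = rng.uniform(-1.0, 1.0, size=(20, 2))
f = (X ** 2).sum(axis=1)

rbf = fit_rbf(X, f, tau=0.1)
rbfn_08 = fit_rbfn(X, f, tau=0.1, frac=0.8)
rbfn_06 = fit_rbfn(X, f, tau=0.1, frac=0.6)
```

The RBF metamodel reproduces the training values at the sample points, whereas the two RBFNs, having fewer neurons than sample points, only fit them in the least-squares sense.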

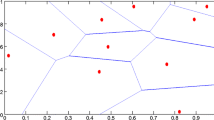

Appendix 2: Ensemble classifiers

Classifiers originated in the domain of machine learning, and their goal is to predict the class of new vectors, that is, given a set of vectors where each is associated with a group, a classifier associates a new input vector with one of these groups. Mathematically, given a set of vectors \({\varvec{x}}_i \in \mathbb { R }^d,\) \( i = 1 \ldots n,\) which are grouped into several classes such that each vector has a corresponding class label \({F}( {\varvec{x}}_i ) \in \mathbb{I} ,\) for example, \(\mathbb{I} = \lbrace -1, +1 \rbrace \) in a two-class problem, a classifier performs the mapping

$$\begin{aligned} {c}( {\varvec{x}}) : \mathbb { R }^d \rightarrow \mathbb{I} \,, \end{aligned} \tag{26}$$

where \({c}( {\varvec{x}})\) is the class assigned by the classifier.

In this study, the proposed algorithm employs a classifier ensemble comprising three established variants (Wu et al. 2008):

- k Nearest Neighbours (KNN): The classifier assigns the new vector the same class as its closest training vector, namely:

$$\begin{aligned} {c}( {\varvec{x}}) = {F}( {\varvec{x}}_{\mathrm { NN} } ):d( {\varvec{x}}, {\varvec{x}}_{\mathrm { NN }} ) = \min \, d \bigl ( {\varvec{x}}, {\varvec{x}}_i \bigr ) , \; i = 1 \ldots n \, , \end{aligned} \tag{27}$$

where \(d({\varvec{x}}, {\varvec{y}} )\) is a distance measure such as the \(l_2\) norm. An extension of this technique is to assign the class most frequent among the \(k>1\) nearest neighbours (KNN). In this study the classifier used \(k=3.\)

- Linear Discriminant Analysis (LDA): In a two-class problem, where the class labels are \(F ( {\varvec{x}}_i ) \in \mathbb{I} = \lbrace -1, +1 \rbrace, \) the classifier attempts to model the conditional probability density functions of a vector belonging to each class, where the latter functions are assumed to be normally distributed. The classifier considers the separation between classes as the ratio of: (a) the variance between classes, and (b) the variance within the classes, and obtains a vector \({\varvec{w}}\) which maximizes this ratio. The vector \({\varvec{w}}\) is orthogonal to the hyperplane separating the two classes. A new vector \({\varvec{x}}\) is classified based on its projection with respect to the separating hyperplane, that is,

$$\begin{aligned} {c}( {\varvec{x}}) = {{\mathrm{sign}}}{ \left( {\varvec{w}} \cdot {\varvec{x}} \right) } \, . \end{aligned} \tag{28}$$

- Support Vector Machines (SVM): The classifier projects the data into a high-dimensional space where it can be more easily separated into disjoint classes. In a two-class problem, and assuming class labels \(F ( {\varvec{x}}_i ) \in \mathbb{I} = \lbrace -1, +1 \rbrace, \) an SVM classifier tries to find the best classification function for the training data. For a linearly separable training set, a linear classification function is the separating hyperplane passing midway between the two classes. Once this hyperplane has been fixed, new vectors are classified based on their position relative to this hyperplane, that is, whether they are “above” or “below” it. Since there are many possible separating hyperplanes, an SVM classifier adds the condition that the hyperplane should maximize its distance to its nearest vectors from each class. This is accomplished by minimizing, with respect to \({{\varvec{w}} }\) and \(b,\) the Lagrangian

$$\begin{aligned} L_P = \frac{1}{2} ||{{\varvec{w}} } ||^2 - \sum _{ i = 1 }^{ n } \alpha _i {F}( {\varvec{x}}_i ) ( {{\varvec{w}} } \cdot {{\varvec{x}} }_i + b ) + \sum _{ i = 1 }^{ n } \alpha _i \, , \end{aligned} \tag{29}$$

where \(n\) is the number of samples (training vectors), \({F}( {\varvec{x}}_i )\) is the class of the \(i\)th training vector, and \(\alpha _i \geqslant 0,\) \(i = 1 \ldots n,\) are the Lagrange multipliers, such that the derivatives of \(L_P\) with respect to \(\alpha _i\) are zero. The vector \({{\varvec{w}} }\) and scalar \(b\) define the hyperplane.
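To make the first two classifiers concrete, the following NumPy sketch implements a KNN vote with \(k=3\) and a two-class Fisher-type LDA. It is an illustration under assumptions rather than the code used in the study: the function names, the synthetic two-cluster data, and the convention that \(+1\) marks a valid design and \(-1\) a failed one are hypothetical. The offset `b`, which places the decision threshold midway between the class means, is also an assumption, since Eq. (28) is written without an offset.

```python
import numpy as np


def knn_classify(x, X_train, labels, k=3):
    """Majority vote among the k nearest training vectors (l2 distance)."""
    dist = np.linalg.norm(X_train - x, axis=1)
    nearest = np.argsort(dist)[:k]
    # Labels are -1/+1, so for odd k the sign of their sum is the majority class
    return int(np.sign(labels[nearest].sum()))


def fit_lda(X_train, labels):
    """Direction w maximizing between-class over within-class variance."""
    pos = X_train[labels == 1]
    neg = X_train[labels == -1]
    mu_pos, mu_neg = pos.mean(axis=0), neg.mean(axis=0)
    # Pooled within-class scatter matrix
    S_w = (pos - mu_pos).T @ (pos - mu_pos) + (neg - mu_neg).T @ (neg - mu_neg)
    w = np.linalg.solve(S_w, mu_pos - mu_neg)
    # Assumption: threshold halfway between the projected class means
    b = -0.5 * w @ (mu_pos + mu_neg)
    return w, b


def lda_classify(x, w, b):
    """Sign of the projection onto w, shifted by the assumed offset b."""
    return int(np.sign(w @ x + b))


# Hypothetical training data: +1 = simulation succeeded, -1 = simulation failed
rng = np.random.default_rng(0)
valid = rng.normal(loc=1.0, scale=0.3, size=(15, 2))
failed = rng.normal(loc=-1.0, scale=0.3, size=(15, 2))
X_train = np.vstack([valid, failed])
labels = np.array([1] * 15 + [-1] * 15)

w, b = fit_lda(X_train, labels)
x_new = np.array([1.0, 1.0])
print(knn_classify(x_new, X_train, labels), lda_classify(x_new, w, b))
```

In an optimization search, predictions of this kind would be queried before the expensive simulation is run, so that candidate designs predicted to fail can be penalized or skipped.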

Cite this article

Tenne, Y. An adaptive-topology ensemble algorithm for engineering optimization problems. Optim Eng 16, 303–334 (2015). https://doi.org/10.1007/s11081-014-9260-z
