Abstract

The deepening integration of socio-technical systems creates immensely complex environments and increasingly uncertain and unpredictable circumstances. Given this context, policymakers have been encouraged to draw on complexity science-informed approaches in policymaking to help grapple with and manage the mounting complexity of the world. For nearly eighty years, complexity-informed approaches have been promising to change how our complex systems are understood and managed, ultimately assisting in better policymaking. Despite the potential of complexity science, in practice, its use often remains limited to a few specialised domains and has not become part and parcel of the mainstream policy debate. To understand why this might be the case, we question why complexity science remains nascent and not integrated into the core of policymaking. Specifically, we ask what non-technical challenges and barriers are preventing the adoption of complexity science into policymaking. To address this question, we conducted an extensive literature review. We collected the scattered fragments of text that discussed the non-technical challenges related to the use of complexity science in policymaking and stitched these fragments into a structured framework by synthesising our findings. Our framework consists of three thematic groupings of the non-technical challenges: (a) management, cost, and adoption challenges; (b) limited trust, communication, and acceptance; and (c) ethical barriers. For each broad challenge identified, we propose a mitigation strategy to facilitate the adoption of complexity science into policymaking. We conclude with a call for action to further integrate complexity science into policymaking.

Introduction

As the reliance on technology grows, our socio-technical systems become increasingly interconnected and complex. The resultant complexity makes behaviours of these systems harder to predict and possibly more vulnerable to unforeseen events. To counterbalance these challenges, for nearly eight decades, decision makers have been encouraged to make use of complexity science-informed approaches (including systems thinking, systems theory, cybernetics, and complexity theory) to understand and manage these complex and dynamic settings (Cairney & Geyer, 2015; Colander & Kupers, 2014; Gerrits, 2012; Haynes et al., 2020; Morçöl, 2012; Nel et al., 2018; Room, 2011; Taeihagh et al., 2009).

Numerous research groups worldwide increasingly recognise the promise of complexity science, which is reflected in the frequent use of ‘complex systems’ or ‘complexity’ as a descriptive keyword (Li Vigni, 2021). Despite the overall increased uptake of complexity science in policymaking (Barbrook-Johnson et al., 2019), it is yet to be fully integrated into the policy debate and the everyday decision-making process or toolbox of policymakers (Eppel, 2017). This point is noted by Harrison and Geyer (2021a: 47), who state that “[t]o our knowledge, there are no ‘complexity units’ at the heart of major governments. Although there are some complexity-inspired policy documents, they are not the norm. At present, evidence-based approaches continue to dominate.” Several authors express the sentiment that, despite its potential, the adoption of complexity science into policymaking remains limited (Astbury et al., 2023; Barbrook-Johnson et al., 2020; Eppel, 2017; Kwamie et al., 2021; Nguyen et al., 2023). Often, complexity science is confined to the domain of analysis, rather than offering solutions to problems (Head & Alford, 2015). Furthermore, where complexity-informed approaches are adopted more widely, their use is primarily restricted to a few specialised applications, such as traffic management and epidemiology, while maintaining a limited scope (Wilkinson et al., 2013). Thus, this article aims to question why policy debate has not fully integrated complexity science and to explore actions that could facilitate its deeper integration into policy.

Many compelling works, including but not limited to those by Colander and Kupers (2014), Gerrits et al. (2021), Geyer and Cairney (2015), Morçöl (2012, 2023), Room (2011, 2016), and Taeihagh et al. (2013), actively advocate for the necessity of using complexity science in policymaking and planning. However, some suggest that complexity science may not yet be ready for widespread use in policymaking. From a conceptual perspective, critics have suggested that complexity science is often too vague in its definitions and rife with ambiguities to be effectively used within policy (Finegood, 2021; Harrison & Geyer, 2021a; Haynes et al., 2020; Stewart & Ayres, 2001). Methodologically, others have suggested that some of the dominant methods of complexity are not yet ready for full-fledged adoption into policy and decision support. For example, Axtell and Shaheen (2021) caution that agent-based models (ABM) are not yet mature enough to be effectively used in policymaking (despite the approach being around for nearly 30 years). This sentiment is also reflected by Loomis et al. (2008: 45–46), who state that “to some, ABM is not yet ready to become a full-fledged decision support tool at this time. Perhaps in another decade as modellers, other scientists and decision makers gain more experience with ABM, it will become a well-accepted decision support tool”, underscoring that ABMs still require much more work before they can be reliably used for decision making. However, it has been 16 years since this statement, and ABM, like many other complexity science-informed approaches, is still not widely used or accepted within policymaking and decision support.

This reality raises the question: is complexity science at risk of facing similar scepticism and doubts as nuclear fusion, with predictions of its widespread use and availability constantly being a decade or two away? Our view is that this need not be the case, as there is evidence that complexity science is beginning to be taken more seriously and adopted more frequently within policymaking (Bicket et al., 2020; Gerrits et al., 2021; Rhodes et al., 2021). However, the question remains: why does complexity science (in one form or another) remain nascent and not used extensively as part and parcel of mainstream policymaking? What are the challenges, limits, or barriers to adopting complexity science in and for policymaking? What actions can we take to address these challenges?

We undertook a detailed review of the complexity-policy literature to address our research aims. In doing so, we note that the existing literature tends to focus on promoting the adoption of complexity science in policymaking while providing little critique or guidance on its implementation (Kwamie et al., 2021). It does so by generally taking on more of an optimistic view of complexity science and pointing out the limits of current approaches while highlighting what complexity science can offer as a remedy. While we fully support these efforts to promote complexity science, we also note that the existing literature offers a limited and mostly scattered discussion on the current barriers to implementing complexity science in policymaking. Furthermore, when discussions explicitly address the barriers to adopting complexity science, they tend to do so to a limited extent (Harrison & Geyer, 2021a; San Miguel et al., 2012; Stewart & Ayres, 2001). Alternatively, they frequently limit themselves to a single domain, like systems thinking (Haynes et al., 2020; Loosemore & Cheung, 2015; Nguyen et al., 2023) or agent-based models (Axtell & Shaheen, 2021; Elsawah et al., 2019; Levy et al., 2016), or confine themselves to specific fields like critical infrastructure (Ouyang, 2014) or non-communicable disease prevention (Astbury et al., 2023).

Thus, this article is our attempt to collect the scattered fragments of the literature discussing the challenges and barriers to adopting complexity science in policymaking and to stitch them together into a structured framework. Our goal is for the proposed framework to highlight the current shortcomings within complexity science and in how it is used and communicated within policy. We hope that by doing so, we might direct future research efforts and ease the pain of adopting complexity science while speeding up the integration and use of the powerful methods and applications that complexity science has to offer.

Given the scope and detail of the reviewed literature and the limited space, we have selected to limit our findings and discussion within this article to the non-technical issues identified. We define non-technical issues as challenges and barriers that pertain to issues not directly related to the technical aspects of complexity science (modelling, data constraints, hardware, or software), such as the lack of awareness, resistance to change, cultural barriers, and communication gaps. Non-technical challenges involve human and organisational factors that can impede the successful integration of complexity science into policymaking. Furthermore, we exclude theoretical and methodological challenges from our definition of non-technical challenges. The challenges associated with technical aspects, theory and methods tend to be more abstract or academic and are often aspects that policymakers are less concerned about (Hamill, 2010). We make this distinction as our evaluation of the literature points out that many of the technical, methodological, and theoretical issues have been previously identified and discussed in detail (Axtell & Farmer, 2022; Elsawah et al., 2019; Heath et al., 2009), with many scholars working hard to overcome some of the existing technical, methodological, and theoretical barriers. While we do not diminish the immense challenges and work still needed to overcome these aspects of complexity science, the non-technical facets, such as management and institutional barriers; utility and trust; communication and reporting; and ethical considerations, appear to be underrepresented, under-reported, and scattered within the literature, despite the apparent hurdles they pose. As such, we focus our attention on the non-technical aspects to address this literature gap.

The structure of the remaining article is as follows: Sect. 2 provides a brief overview of complexity science. Section 3 details the scoping review-informed method we employed to identify challenges to using complexity science in policymaking. Section 4 offers an overview of the literature review results. We then draw from these results in Sect. 5 to propose a framework for non-technical challenges. Section 6 explores possible solutions to mitigate these identified hurdles. Finally, Sect. 7 concludes by emphasising the contributions of our study and advocating for further research to overcome barriers to adopting complexity science in policymaking.

Complexity science

In this section, we present a concise overview of complexity science. Proponents of complexity science consider it to be a paradigm shift in worldviews (Ackoff, 1994; Dent, 1999) and a new scientific method (Mitchell, 2009). Complexity science challenges the reductionist worldview by focusing on holistic perspectives, studying relationships, and understanding non-linear interactions within systems. Scholars in this field explore how interactions generate novel and emergent behaviours and patterns beyond individual parts' actions (Geyer & Harrison, 2021). Despite this shared view, a definition of complexity science remains elusive (Mazzocchi, 2016; O’Sullivan, 2004). For example, Turner and Baker (2019) identify 30 definitions of complex adaptive systems (CAS), a prominent branch within complexity studies. This ambiguity arises from complexity science being an amalgamation of theories, frameworks, logics, mathematics, and methods rather than a unified discipline (Harrison & Geyer, 2021a).

The science of complexity encompasses diverse approaches, classified here as ‘complexity science’ or ‘complexity-informed approaches.’ This umbrella term includes, among others, complexity theory, systems theory, cybernetics, network science, game theory, and chaos theory (see the ‘Map of the Complexity Science’ by Castellani and Gerrits (2021) for a visual overview). While the approaches have many commonalities, clear distinctions exist, notably between systems theory and complexity theory.

Systems theory, forming the theoretical foundation for complexity science, studies systems from the top down. Systems theory seeks to understand systems' larger structure, interactions, and behaviours. Systems thinking, originating from systems theory but distinct from it (Richmond, 1994), approaches problems holistically, emphasising interconnections and feedback loops. Complexity theory, in contrast, takes a bottom-up approach, focusing on individual components and their interactions to explore emergent properties and unexpected phenomena (Mitchell, 2009). It prioritises understanding the emergence of order from disorder and the self-organisation of complex systems (Geyer & Harrison, 2021). Both systems and complexity theories reject reductionism and embrace holism, appreciating the complex interactions within systems (Manson, 2001).

Despite widespread discussion of complexity science in governance and policymaking (Cairney et al., 2019; Colander & Kupers, 2014; Geyer and Cairney, 2015; Geyer & Harrison, 2021; Innes & Booher, 2018; Morçöl, 2023; Room, 2011, 2016; Taeihagh et al., 2013; Taeihagh, 2017b), its full embrace remains elusive (Barbrook-Johnson et al., 2020; Eppel, 2017). The remainder of this article delves into the non-technical barriers hindering the broader adoption of complexity science in policymaking.

Method

To understand the non-technical challenges, barriers, and constraints preventing complexity science’s greater adoption into policymaking, we must undertake a detailed review of the relevant literature. However, undertaking a literature review on complexity science is no easy task. As noted above, complexity science is a collection of approaches (Harrison & Geyer, 2021a). As a result, the field and its related literature are fragmented into a multitude of disciplines and journals, with each a “continued evolution of the intuitive logics tradition and still emerging nature of complexity science” (Wilkinson et al., 2013: 701). While our aim is not to present a review article, we leverage the research design associated with scoping reviews, as they provide a systematic and structured procedure to identify and assess the existing knowledge base related to our research aims (Grant & Booth, 2009). Unlike systematic reviews, scoping reviews focus on examining evidence on a given topic's extent, range, and nature. As such, the methodological quality or risk of bias of individual sources is typically not appraised or deemed optional (Tricco et al., 2018). Below is a brief overview of the method used to identify and select the relevant literature and how the important aspects were extracted and synthesised. We comprehensively describe the method in the supplemental material, Sect. 1, titled Additional details on the method.

Literature inclusion and exclusion criteria

Using a scoping review approach, we developed a protocol to structure our inquiry. The first step in the protocol began with using our research aims to guide the development of a set of inclusion and exclusion criteria that we applied consistently to the literature identified through the search process (for details on the inclusion and exclusion criteria, see supplemental material, Sect. 1.1. Inclusion and exclusion criteria). The inclusion criteria included journal or review articles published in English; that use or discuss complexity science (such as systems thinking, complexity theory, agent-based models, and network theory); that discuss policy or have policy implications; and specifically discuss some form of challenge or barrier to the use of complexity science. We excluded articles that did not show sufficient engagement with the terms and concepts related to complexity science, that simply made superficial reference to the concepts, or only recommended the use of complexity science but did not discuss the challenges to its use in policy. Our inclusion and exclusion criteria were applied during the screening phase to consistently identify the most relevant studies while excluding those unrelated to our research aims.

The search strategy and data sources

The second step in the protocol involved developing a search string to query the SCOPUS database for relevant literature (see supplemental material, Sect. 1.2. Search strategy and data sources). Our search string was developed collaboratively through multiple rounds of discussion, brainstorming sessions, and workshops among the authors. We also consulted with library specialists before we finalised the search string. Our search string consists of three themes that aim to capture the relevant literature at the intersection of policy and complexity science while also identifying articles that discuss potential barriers. The first search theme consisted of 54 search terms and captured the concepts related to complexity science. In many ways, the number of terms required to capture the scope of complexity science reflects its broad and scattered nature and the challenges and ambiguities of defining it. The second search theme relates to policy and policymaking and comprises 62 search terms. The final search theme comprised 72 search terms and covered keywords related to challenges and issues. This search theme aimed to capture the breadth of the possible keywords to describe challenges, issues, or barriers. After applying the search string within the SCOPUS database, the identified studies’ bibliographic information was downloaded (including the study title, abstract, and authors). The bibliographic information was loaded into PICO Portal (2023), a literature review platform that leverages artificial intelligence (AI) to aid in the structured review and screening of the articles.
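As an illustration only, the following minimal Python sketch shows one common way a three-theme search string can be assembled. It assumes that terms within a theme are joined with OR and that the three themes are joined with AND; the source does not state the operators or search fields used. The terms shown are hypothetical placeholders rather than the actual 54, 62, and 72 terms, which are listed in the supplemental material, and the use of the Scopus `TITLE-ABS-KEY` field code is likewise an assumption.

```python
# Hypothetical placeholder terms; the real term lists are in the supplemental material.
complexity_terms = ["complexity science", "systems thinking", "agent-based model*"]
policy_terms = ["policy", "policymaking", "governance"]
challenge_terms = ["challenge*", "barrier*", "limitation*"]


def or_group(terms):
    """Join the terms of one theme with OR, quoting multi-word phrases."""
    quoted = [f'"{term}"' if " " in term else term for term in terms]
    return "(" + " OR ".join(quoted) + ")"


def build_query(*themes):
    """AND the themes together (assumed) and wrap them in a title/abstract/keyword search."""
    return "TITLE-ABS-KEY(" + " AND ".join(or_group(theme) for theme in themes) + ")"


query = build_query(complexity_terms, policy_terms, challenge_terms)
print(query)
```

Under these assumptions, a record is returned only if it matches at least one term from each of the three themes, which is what places the results at the intersection of complexity science, policy, and reported challenges.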

Data collection, extraction, and analysis

The third step in the protocol involved screening the titles and abstracts of the identified studies and applying our inclusion and exclusion criteria (details available in supplemental material, Sect. 1.3. Search strategy and data sources). The first author screened all abstracts, while the second independently audited 10%. Any discrepancies were discussed and resolved before moving on. With the abstracts filtered, we sourced full-text articles for the selected titles and uploaded them to PICO Portal. The first author then screened these full texts against the inclusion and exclusion criteria. The second author audited 10% of the full-text articles. The research team held bi-weekly meetings throughout the screening process to discuss any uncertainties or conflicts in the full-text screening. For the protocol's fourth step (Sect. 1.3. Data collection, extraction, and analysis of supplemental material), we jointly developed and deployed a data extraction framework to obtain the relevant information from the selected studies. Our framework included basic information on the article, such as author(s), article title, publication year, and the type of challenge or issue mentioned within the article. The first author conducted the data extraction, and the second author audited 10% of the extracted texts. In the fifth step, the first author analysed the extracted data through exploratory data analysis, visualisation, and thematic synthesis, identifying key themes and trends. The second author reviewed these themes. We resolved any discrepancies collaboratively. Finally, we combined our findings to solidify the core themes.

Limitations

Despite our efforts to capture the vast and fragmented literature on challenges associated with applying complexity science in policymaking, certain limitations require acknowledgement. The primary limitation stems from using a scoping review design. This approach restricts identified articles to those containing specific keywords and meeting our predefined inclusion criteria. This means that studies that do not explicitly use our search terms get missed during the initial search phase (Arksey & O’Malley, 2005). To minimise this factor, we utilised a diverse range of terms within our search strategy.

Similarly, during abstract and title screening, articles lacking an explicit mention of a challenge related to using complexity science were excluded from the final selection. This process of eliminating articles without reviewing their full text represents a limitation inherent to any structured review, particularly for research exploring abstract or unconventional areas. However, given the sheer volume of relevant research and manpower limitations, no readily available alternative to a structured review process exists, at least not until artificial intelligence applications can reliably screen articles and extract key information. Furthermore, focusing on non-technical aspects precluded us from delving into the technical, theoretical, and methodological aspects that might influence the adoption of complexity science in policy settings. Future research can explore these facets in greater depth and assess their impact on utilising complexity science for and within policymaking.

Results

Overview of the dataset and key characteristics

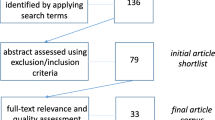

Applying our search string within SCOPUS resulted in the identification of 9,943 studies. Next, using the PICO Portal platform to aid in the screening process and leveraging its machine learning algorithm to order the abstracts from most to least relevant, we screened 5,670 studies (57% of total studies identified in the search process). We halted the abstract screening process after deciding that a saturation point in the screening had been reached, meaning that we found no new relevant studies. Our decision was also validated by the PICO Portal platform, which estimated that we had identified 98% of relevant studies. This aligns with Agai and Qureshi (2023) and Qureshi et al. (2023), who studied machine learning-assisted screening with PICO Portal and found its algorithms capable of identifying over 90% of relevant articles after screening just 55–60% of abstracts. Indeed, other research has shown that similar AI-assisted screening methods can speed up the identification of relevant articles by more than 30% (Dijk et al., 2023; Hempel et al., 2012). Given the supporting evidence, the authors agreed that identifying the remaining 2% of relevant studies in the remaining 4,273 abstracts would likely not benefit the study. Using our inclusion and exclusion criteria on the 5,670 articles screened, we identified 1,086 articles relevant to this study at the title and abstract level. At the full-text screening stage, we excluded 750 studies while retaining 336 for final data extraction. Figure 1 summarises the screening process and reasons for excluding studies.
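The screening figures reported above can be cross-checked with a few lines of arithmetic. The short Python sketch below is an illustrative check rather than part of the original analysis; the variable names are our own, and the input numbers are taken directly from the text.

```python
# Counts as reported in the text.
identified = 9_943            # records returned by the SCOPUS search
screened = 5_670              # abstracts screened before stopping
relevant_at_abstract = 1_086  # records kept after title/abstract screening
excluded_full_text = 750      # records excluded at the full-text stage

unscreened = identified - screened
share_screened = screened / identified
retained = relevant_at_abstract - excluded_full_text

print(f"Abstracts left unscreened: {unscreened:,}")         # 4,273
print(f"Share of records screened: {share_screened:.0%}")   # 57%
print(f"Articles retained for extraction: {retained}")      # 336
```

The three derived values match the 4,273 unscreened abstracts, the 57% screening share, and the 336 retained articles stated in the text.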

All 336 articles we identified cited some form of obstacle or limitation to applying complexity science in a policy context. These issues encompass but are not limited to theoretical and methodological perspectives, social, institutional, and political roadblocks, as well as technical factors related to, but not limited to, methods, tools, approaches, or frameworks. In addition to the primary data extraction, we selected 56 articles out of the 336 for detailed data extraction. These articles contained more detailed and nuanced descriptions of the obstacles and challenges related to applying complexity science within policymaking that would be lost if the information was only captured within the primary data extraction framework.

Overview of the challenges to applying complexity science in policy

As noted previously, this article focuses on the non-technical barriers related to the use of complexity science in and for policymaking. However, we provide a brief overview of all potential impediments identified. We distinguished 141 unique challenges and barriers related to applying complexity science in and for policymaking from the literature. Out of the 141 different challenges identified, 44% (62 issues) can be considered technical, typically related to data issues or aspects of modelling complex systems. As defined in this study, non-technical challenges comprised 41.1% (58) of the total 141 challenges. The remaining 14.9% (21) of the challenges were theoretical or methodological, including challenges such as conceptualising system boundaries or the theory of complexity. Given the nature of theoretical and methodological issues, which can be very abstract or closely linked to technical challenges, attempting to describe these challenges in sufficient detail would require a dedicated article to do the topic justice.
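For readers who wish to verify the breakdown, the following Python sketch recomputes the three shares from the reported counts. It is an illustrative check only; the dictionary keys are shorthand labels of our own choosing.

```python
# Number of unique challenges per category, as reported in the text.
counts = {
    "technical": 62,
    "non-technical": 58,
    "theoretical or methodological": 21,
}

total = sum(counts.values())
shares = {name: round(100 * n / total, 1) for name, n in counts.items()}

print(total)   # 141
for name, share in shares.items():
    print(f"{name}: {share}%")
```

The counts sum to 141, and the rounded shares (44.0%, 41.1%, and 14.9%) agree with the percentages given above.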

To better understand the prevalence of the various challenges identified across the 336 studies, we also analysed the frequency with which the challenges appear. To ensure a fair and accurate representation of the relative importance of each challenge, we counted each challenge only once per study, regardless of how often it was mentioned within that study. This approach prevents overcounting common challenges and allows for a more balanced view of the landscape. From our survey, the 141 challenges were reported 1,651 times across the 336 studies, with an average of 4.9 challenges per article. To better synthesise the challenges, we grouped the 141 challenges into 16 main challenges that better reflect their overall nature and present the frequency of their occurrence within the text (for details, see the supplemental material Sect. 1.3.1. Data extraction and synthesis).
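The once-per-study counting rule can be sketched as follows. This is a minimal Python illustration under stated assumptions: the extraction records are represented as (study, challenge) pairs, and the study identifiers and example records are hypothetical and do not come from the reviewed dataset.

```python
from collections import Counter

# Hypothetical extraction records: one (study, challenge) pair per mention.
mentions = [
    ("study_a", "modelling issues"),
    ("study_a", "modelling issues"),  # repeated mention within the same study
    ("study_a", "costs"),
    ("study_b", "modelling issues"),
    ("study_b", "utility and trust"),
]

# Collapsing to a set keeps each challenge at most once per study.
unique_pairs = set(mentions)
frequency = Counter(challenge for _, challenge in unique_pairs)
studies = {study for study, _ in unique_pairs}
mean_per_study = len(unique_pairs) / len(studies)

print(frequency["modelling issues"])  # 2, not 3: the repeat is ignored
print(mean_per_study)                 # 2.0

# The same ratio for the figures reported in the text.
print(round(1651 / 336, 1))           # 4.9
```

Deduplicating before counting means a study that discusses one challenge at length carries the same weight as a study that mentions it once, which is the balance the counting rule is intended to achieve.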

The challenges most frequently reported (out of 1,651) include modelling issues (24.4%), conceptual and methodological challenges (12.5%), data-related issues (9.6%), utility and trust (9.3%), and management and institutional difficulties (8.2%). While not as prevalent, other notable challenges included difficulties related to communication and reporting of complexity science (6.6%) and various barriers associated with costs (6.1%). We also note that some aspects, such as ethical considerations (0.4%), were not reported widely in the reviewed literature despite arguably being significant in policymaking.

In addition to the collective number of issues identified in the literature, we assessed the changes in challenges reported over time, as shown in Fig. 2. Specifically, focusing on the non-technical challenges and including theoretical and methodological factors in the assessment, conceptual and methodological considerations (frequency: 207) are the most frequently discussed challenges in the literature over time. This is an unsurprising result given the nature of complexity science in policymaking and that it is a relatively young approach (compared to established approaches to policymaking), rife with conceptual and methodological quandaries (Harrison & Geyer, 2021a). Since 2014, there has been an increase in articles reporting challenges related to the management and institutional aspects (frequency: 136) of the use of complexity science for policy, suggesting that complexity science has begun to be explored more within the policy sphere over the past decade.

Additionally, concerns about communicating and reporting complexity science concepts and results (frequency: 109) have become more prevalent since 2003. Conversely, our results show that theoretical challenges (frequency: 77) associated with complexity science have been reported less frequently since around 2010, possibly indicating that these challenges are being addressed or are simply being discussed less in the literature. Similarly, concerns about the utility, trust, and cost of complexity science (frequency: 153) have also declined in frequency since 2008.

A framework of non-technical barriers to complexity science’s use in policymaking

To interpret our findings coherently, we organised and consolidated the 141 unique challenges into a structured framework of 16 groups. To develop this framework, the first author conducted several rounds of thematic synthesis of the unique challenges by identifying, extracting, and grouping relevant challenges. The second author independently reviewed the groupings after each round. After several iterations and extensive internal discussions, a consensus was reached about the groupings, which captured the thematic challenges related to using complexity science in policymaking. In this article, we report exclusively on the non-technical challenges and barriers hindering the application of complexity science in policymaking. Our thematic synthesis identified three overarching groups of non-technical challenges hindering the use of complexity science in policymaking, outlined in Table 1. These groups are management, cost, and adoption challenges (frequency: 295); limited trust, communication, and acceptance (frequency: 279); and ethical barriers (frequency: 6). The text to follow delves deeper into each group, providing detailed descriptions of the specific and underlying challenges within each of the primary groupings.

Management, cost, and adoption challenges

This thematic group consists of challenges related to using complexity science within management and institutional settings (frequency: 136). Political and legal barriers are also highlighted throughout the discussion. We also draw attention to other challenges, such as the wide range of potential costs (frequency: 100) required for adopting complexity-informed approaches. We conclude this section by summarising some of the barriers to adopting and using complexity-based applications (frequency: 59).
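The group-level frequency follows directly from the three sub-frequencies given above. The brief Python sketch below is an illustrative consistency check; the labels are our own shorthand, and the total of 1,651 reports is the figure stated in the results overview.

```python
# Sub-frequencies for the management, cost, and adoption group, as reported in the text.
subgroups = {
    "management and institutional": 136,
    "costs": 100,
    "adoption and use": 59,
}

group_total = sum(subgroups.values())
total_reports = 1_651  # all challenge reports across the 336 studies
group_share = group_total / total_reports

print(group_total)            # 295
print(f"{group_share:.1%}")   # share of all reported challenges
```

The sum reproduces the group frequency of 295 reported in the framework overview.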

Management and institutional barriers

The literature we surveyed suggests that a range of management and institutional barriers has hindered the integration of complexity science into policymaking. Among the most dominant of these is a concern that there is limited interest in or understanding of complexity science and its related concepts and tools among policymakers and practitioners (El-Jardali et al., 2014; Finegood, 2021; Otto, 2008). Compounding this challenge is the difficulty of embedding complexity science into a field or institution, as this would require a paradigm shift and a drastic departure from the conventional way of dealing with problems (De Greene, 1994b). Without this change in thinking, it becomes increasingly difficult for policymakers to understand, accept and use complexity science within their work (Bale et al., 2015; Collins et al., 2007).

Further exacerbating the above challenges is that complexity science has faced critiques for being too ‘conceptual for policy’ and ill-suited for practical policy applications (Kwamie et al., 2021). Here, critics suggest that the language and concepts of complexity science are unclear, ambiguous, and ill-suited for decision-makers and practical policy applications (Bale et al., 2015; Loosemore & Cheung, 2015). This sentiment is reflected by Stewart and Ayres (2001: 82), who observed that “the language and perspectives of these [systems thinking] approaches has never been harmonised with that of the policy-maker.” Stewart and Ayres argue that complexity science’s counterintuitive nature can be difficult to grasp and can lead to ‘uncomfortable conclusions’ about the limitations of policymakers to intervene within the complex systems they are tasked to govern. Authors such as Cairney (2012) suggest that translating complexity science and its concepts to the policy domain is incomplete, a sentiment echoed by Morçöl (2014, 2023). Harrison and Geyer (2021a) suggest that among the reasons for this difficulty is that complexity is a relatively young field in policy studies and, therefore, still lacks a unifying conceptualisation and language.

The interplay between the knowledge gaps, limited interest, and misalignment between the language of complexity and the policy domain creates a self-reinforcing cycle, further discouraging the adoption of complexity-informed policies due to the constrained enthusiasm for building capacity, allocating resources, and creating opportunities to apply complexity science (Bale et al., 2015; Haynes et al., 2020; Summers et al., 2015).

Conversely, concerns also arise when complexity-informed approaches are actually used in policymaking. As Levin et al. (2015) highlight, using complexity science for policy development can lead to more holistic proposals that ultimately prove unattainable. Such proposals may exceed or fall outside of the implementing body's institutional, political, or financial purview or capability, resulting in implementation failure. Such issues can be further compounded by bureaucracies' tendency to incentivise efficiency, standardisation, linearity, compliance, and uniformity, further impeding complexity-informed approaches that embrace change and uncertainty (Kwamie et al., 2021; Young, 2017).

While not discussed as frequently as other challenges in the studies we reviewed, some authors have indicated that political considerations can be considerable hindrances to the adoption of complexity science into policymaking (Hartman, 2016). Currie et al. (2018) indicate that one such barrier is the incompatibility between the time needed to build a model or develop a complexity-informed proposal and the timelines of politicians and public decision-making. Currie et al. suggest that such incompatibilities result in rushed and oversimplified proposals and models, hindering their effectiveness. Additionally, Currie et al. emphasise that issues may arise from the conflict between the goals of the political process, which focuses on short-term outcomes (Barbrook-Johnson et al., 2019), and those of complexity approaches and modelling, which seek long-term and holistic solutions.

This dissonance is exacerbated further by the inherent incompatibility between complexity science and the traditional, reductionist worldview of policymakers, who often seek to simplify and control complex systems by eliminating complexity rather than embracing it (Wilkinson et al., 2013). Harrison and Geyer (2021a) echo this sentiment but further highlight that embracing complexity would require governments (and politicians, by extension) to acknowledge their limited control and influence over the policy processes and outcomes within the systems they govern. However, Harrison and Geyer also observe that embracing a complexity approach and acknowledging limited control might reduce elected officials' accountability. Loosemore and Cheung (2015) echo a similar sentiment by suggesting the potential for complexity-informed approaches to diffuse the allocation of risk and responsibility, thereby reducing accountability when issues arise. They note that this is particularly relevant in high-risk, high-responsibility domains, such as construction. To address this concern, Harrison and Geyer (2021a) advocate for a balanced approach, stating that “we need to combine a governmental acknowledgement of the limits to its powers with a societal recognition of the complexity of the governance process and the need to still hold elected policy actors to account in a meaningful way” (Harrison & Geyer, 2021a: 50).

Similarly, other studies have highlighted that most complexity-informed approaches fail to account for power dynamics or the impact of human values in their frameworks (Houchin & MacLean, 2005; Levy et al., 2016). For example, Lane and Oliva (1998) note a lack of social-political theory within system dynamics, a sub-branch of complexity science. Haynes (2018) further suggests that one reason for neglecting values in complexity-informed approaches within social and political science is the historical importation of complexity science from the natural sciences, which typically disregard social norms and values.

The literature also identifies potential barriers to integrating complexity science into policymaking arising from institutional or legal considerations (Currie et al., 2018; Shepherd, 1997). Frohlich et al. (2018), for instance, describe how laws can sometimes impose legal restrictions on adaptive management practices, an approach informed by complexity science. They provide an example of legislation primarily focused on safeguarding an individual species instead of protecting and managing the larger system. They also cite several examples of legislation limiting adaptive management approaches, including regulatory fragmentation, spatial boundaries of jurisdiction, and the mismatch between legal land ownership boundaries and ecosystem boundaries.

Linked to institutional challenges, the interdisciplinary nature of complexity science presents significant challenges to its adoption in the policymaking process. Several authors have reported a lack of collaboration between researchers, institutional departments, and disciplines, hindering the effective implementation of complexity-informed approaches (Schimel et al., 2015; Schlüter et al., 2014). Such challenges highlight the crucial need for fostering interdisciplinary collaboration to bridge the gaps between disciplines and facilitate the effective integration of complexity science into policymaking.

Cost barriers

Adopting, using, or implementing any proposal, approach, or tool carries costs. Because of its nature, complexity science might be more vulnerable to, and disproportionately affected by, these costs than more established approaches. From the literature studied, we identified a wide range of potential costs associated with, among other things, the development of any tool or model, particularly those using complexity science. The costs identified include general financial costs and funding limitations (Druckenmiller et al., 2007; Lindkvist et al., 2020); the financial cost of data collection, creation, or purchasing (Burgess et al., 2020; Summers et al., 2015); the time needed to develop a model, collect data, or train users (Terzi et al., 2019); manpower and expertise requirements (Astbury et al., 2023; Balajthy, 1988; Zhuo & Han, 2020); and the computational costs of developing and running models (Pan et al., 2022; Wen & Li, 2021), which are compounded by computational complexity (Nguyen et al., 2021; Taeihagh et al., 2014).

Adoption and usability barriers

From a more practical perspective, the literature points to several challenges related to the limited adoption, utilisation, and deployment of complexity science and its related applications within organisations (Ligmann-Zielinska, 2009; Sharma-Wallace et al., 2018). One reason for the limited uptake of complexity science is that many complexity-informed applications are too domain-specific and cannot be used in broader contexts (Moallemi et al., 2021; Taeihagh et al., 2014). While complexity-informed approaches are not widely used in general policymaking, some limited exceptions exist where complexity-based approaches are the mainstream, such as traffic simulations and epidemiology (Wilkinson et al., 2013). Furthermore, Torrens et al. (2013) note that reductionist tools are easier to work with and understand than complexity-informed tools, even though they do not accurately represent reality. The availability of existing tools likely reinforces this reluctance, as users and policymakers are less willing to switch to different and potentially more complex tools (Cockerill et al., 2007; Rich, 2020). This is especially true as many traditional tools (using statistical analysis) can produce predictions with confidence intervals, encouraging additional trust in the results (Maglio et al., 2014).

We also highlight a few other reasons for the limited uptake of complexity-informed approaches or tools identified from the literature. These challenges include usability issues of applications (Druckenmiller et al., 2007); the time, effort, and data required to develop a tool or model (Ecem Yildiz et al., 2020); mismatches between a model's intended purpose (teaching) and the way the model is used (decision support) in practice (Allison et al., 2018); and the lack of a champion within an institution for complexity science or an application (Shepherd, 1997). We also noted issues of trust in complexity-informed tools and methods, which are linked to the challenges discussed here and are discussed in more detail in the next section. Table 2 provides a synopsis of the salient challenges identified under the broader thematic group of management, cost, and adoption challenges.

Trust, communication, and acceptance of complexity science

Within this thematic group, we highlight the barriers associated with trust, communication and acceptance of complexity science and applications. We begin by discussing the challenges of building trust in complexity science and its methods and applications (frequency: 153). Linked to trust, we then discuss the barriers limiting the acceptance of complexity science (frequency: 17). We conclude this section by highlighting the considerations related to the challenges of communicating complexity science concepts and the results from applications (frequency: 109).

Limited trust in complexity science and its methods and applications

Of the non-technical issues we identified from the literature, challenges related to trust in complexity science and its applications and methods were among those cited most frequently. Trust in an approach, method, or application is essential, especially for policymakers (Lacey et al., 2018), who must make decisions that are often long-lasting and non-reversible and that can impact many people. Thus, building and maintaining trust in complexity science is essential before those making decisions are willing to embrace it as an alternative approach.

Trust in complexity-informed approaches can be undermined in numerous ways. For example, Ibrahim Shire et al. (2020) highlight, within the context of health care, that the participants not involved in a model building exercise (notably the managers) were less convinced of the model's validity and were unwilling to claim ownership compared to participants involved from the beginning of the process. Vermeulen and Pyka (2016) reflect a similar sentiment by suggesting that the lack of policymakers’ involvement in the modelling process erodes trust and understanding of the process and its outputs. As noted by Levy et al. (2016), the challenge of model validation, particularly in agent-based modelling (ABM), also contributes to the lack of trust in complexity-informed models. As a distinct issue related to trust, Currie et al. (2018) identify that the problem may not lie with the complexity science application itself but rather with how the application is used. Their study implies that working in a multidisciplinary team would likely be most effective in some cases, such as group system dynamics modelling.

Several authors (Kwamie et al., 2021; Wainwright & Millington, 2010) highlight the scarcity of successful deployment of complexity-informed policies as a reason for the hesitancy to adopt complexity into policymaking. Decision-makers are reluctant to put their trust in unproven systems or approaches and seek tangible examples of the efficacy of modelling or an approach (Haynes et al., 2020; Maglio et al., 2014). To gain acceptance, complexity and its modelling applications must showcase their value through successful real-world applications (Haynes, 2018). However, Lorscheid et al. (2019) highlight a compounding issue to this requirement: complexity applications, like Agent-Based Modelling (ABM), need time to mature. Lorscheid et al. cite the time required for calculus to be accepted as a historical parallel. From this point of view, could it be that complexity science is not yet ready for widespread use despite being around for nearly 80 years? Lorscheid et al., however, also indicate that approaches such as ABM are becoming more accepted within ecology and that there are indications that the ABM method is starting to mature. Axtell and Shaheen (2021), while optimistic about the future of ABMs, also caution that methods like ABMs are not yet ready to be dependable tools for policymaking due to the technical challenges that still need to be addressed (see Axtell and Shaheen (2021) and Levy et al. (2016) for a discussion of the challenges related to ABM).

Beyond examples of success, Wainwright and Millington (2010) argue that models like ABM need to demonstrate explanatory power before they will be accepted. However, the literature often cites concerns regarding the limited predictability and reliability of model results (Allison et al., 2018; Axtell & Shaheen, 2021; Burgess et al., 2020). While a technical issue, the limited predictability of the models primarily stems from sensitivity to initial conditions, path dependencies, and stochasticity (Bale et al., 2015; Levy et al., 2016). Additionally, as Whitfield (2013) notes, the increasing complexity and limited reliability of predictions, especially for complex models like those associated with climate modelling, hinder non-experts from understanding the models and results. The resulting erosion of trust and acceptance leaves such models more open to politically motivated challenges, such as those increasingly raised by climate change deniers. Consequently, the outcomes of such models become more difficult to use in informing climate policy. Astbury et al. (2023) highlight several additional challenges related to modelling: a lack of trust in models as evidence, model complexity that can alienate stakeholders, and stakeholder struggles with interpreting complex results. Notably, they identify the "tension around model complexity," where models must be complex enough to represent the system adequately while remaining interpretable.

Mercure et al. (2016) and Banozic-Tang and Taeihagh (2022) suggest that rather than focusing solely on the complexity or simplicity of models, greater emphasis needs to be placed on the importance of the research-policy interface in relaying the results of models and scientific research findings to policy circles, highlighting the challenges of communication and reporting (discussed in Sect. 5.2.3). These findings underscore the critical need to bridge the gap between the research community and policymakers. Researchers must effectively communicate complexity science's value and limitations, while policymakers must be open to embracing uncertainty and holistic perspectives to address complex societal challenges.

A different aspect of trust discussed across several studies was the lack of transparency in models (Lindkvist et al., 2020; Taeihagh et al., 2014). For example, Torrens et al. (2013) point out that ABMs have been critiqued for being ‘black-box’ models because the simulation does not explicitly show the mechanisms generating emergent behaviours. This lack of transparency can lead policymakers to question the internal validity of the model and doubt its value and related policy recommendations (Vermeulen & Pyka, 2016). Ligmann-Zielinska (2009) underscores the necessity for the transparency and availability of a model’s code to address these concerns. However, Iwanaga (2021) contends that mere access to a model's code does not equate to transparency. Additional contextual information about the model is needed to assess its suitability regarding function and purpose (Burgess et al., 2020; Schlüter et al., 2019). While transparency is a legitimate concern, decision-makers must also consider security issues (Dorri et al., 2018). For example, Luck et al. (2004) describe trust concerns about the safety of multi-agent systems because of agents' self-adaptive nature.

In stark contrast to the challenges discussed above, some studies suggest that policymakers might readily accept the results of models without critical reflection on their purpose, reliability, assumptions, or value (Whitfield, 2013). Allison et al. (2018) and Mercure et al. (2016) note that some models initially designed to enhance social learning or understanding of a system, or built with unreliable data, are used as decision-support systems to inform policy. In these cases, the models are used outside of the modeller’s intention, with factors such as the model limitations, reliability or predictability not considered. While the authors are not aware of any research that has explicitly addressed the topic, the reliance on models not built for that purpose might also impact the long-term acceptance of complexity science and its applications.

Understanding and acceptance of complexity science

Understanding and accepting complexity science is arguably one of the most critical factors for its wider adoption within policymaking. However, as De Greene (1994b: 445) states,

“the basic challenge facing systems thinkers – and those policymakers, decision-makers, educators, and others who might benefit from systems advice – is not more data, more information systems, more computers, more money, and so on. The challenge is rather for all of us to restructure our very way of thinking.”

Despite its significance, the literature we reviewed seldom discusses the challenge of understanding or accepting complexity science, particularly within the context of policy and decision support (only 17 instances were identified within the text studied). One possible reason is that complexity challenges the ontological and epistemological assumptions of traditional ways of thinking (Morçöl, 2014). Another is that altering beliefs and paradigms towards a more systemic approach is likely one of the most daunting barriers to the widespread adoption of complexity science in policymaking (Mann & Sherren, 2018). Similarly, Cockerill et al. (2007: 39) indicate that if a model's output challenges a person’s beliefs, that output is more likely to be ignored than accepted. This suggests, as noted above, that it might be best to include those who are sceptical of complexity-informed approaches in the process from the beginning to build trust and understanding in the process and outcomes.

Other studies discussing the challenge of improving the understanding and acceptance of complexity science have typically done so in the context of university teaching (York & Orgill, 2020) or training settings (Haynes et al., 2020). These studies highlight the lack of teaching material related to complexity science as a significant issue (Flynn et al., 2019). Additionally, educating students to use complexity science effectively requires support from educators, who themselves require training in complexity thinking before being able to teach students (Schultz et al., 2021). Communicating complexity science concepts to stakeholders and policymakers presents further challenges, similar to those faced in education (Collins et al., 2007; Ibrahim Shire et al., 2020). Stakeholders and policymakers involved in modelling processes can find the concepts or processes difficult and overly complex, leading to information overload (Morais et al., 2021; Weeks et al., 2022). Even well-educated professionals struggle to grasp certain complexity science concepts, such as accumulation (Cronin et al., 2009; Sterman et al., 2015).

A significant challenge to the broader adoption and application of complexity science lies in the inability of policymakers to understand what complexity is and what exactly it offers. While some may point the finger solely at policymakers for this lack of understanding, the blame cannot be laid entirely at their feet. Several authors have critiqued complexity science for its inherent ambiguity and for inconsistencies in its terminology and conceptual framework. This ambiguity manifests in several ways, hindering both comprehension and collaboration within the field, ultimately limiting its reach and impact. Firstly, the absence of a unifying definition and theoretical framework within complexity science creates a conceptual vacuum, leaving policymakers with little to grasp onto. This issue, highlighted by Kok et al. (2021) and O’Sullivan (2004), is further compounded by the relative novelty of the field. Scholars are still grappling with its core concepts and methodologies, as Cairney (2012: 352) observes: "the first difficulty with complexity theory is that it is difficult to pin down when we move from conceptual to empirical analysis." Secondly, the terminology employed within complexity science is often plagued by vagueness and inconsistency. As Finegood (2021) and Haynes et al. (2020) emphasise, this lack of standardisation creates significant obstacles for researchers at all levels, hindering their ability to engage effectively with the field. Houchin and MacLean (2005) further echo this concern, critiquing the "variety of definitions, the doubts expressed as to whether it is a theory, theories or a framework, and the different meanings given to the terminology associated with complexity" as detrimental to the field's coherence and credibility. Finally, the terminology's ambiguity extends beyond individual words, impacting the overall conceptual landscape of complexity science. As Teixeira de Melo et al. (2019) point out, different approaches within the field can ascribe different meanings to the same concepts, leading to misinterpretations and hindering effective communication and collaboration between researchers from diverse backgrounds and disciplines. This lack of a shared language, as Harrison and Geyer (2021a: 47) emphasise, further exacerbates the challenges, as "[complexity] is not a unified field with a unifying interdisciplinary language." Given these arguments, addressing the issues of ambiguity and inconsistency is crucial to unlocking the potential of complexity science in policymaking.

The literature has also noted additional barriers to adopting complexity into policymaking. These include policymakers' lack of acceptance and willingness to use complexity-informed models (Levy et al., 2016). Cockerill et al. (2007) suggest that policymakers' limited willingness to use models is based on ‘intentional ignorance’, explaining that in cases where models address controversial topics and provide meaningful insights, decision-makers might ignore the results and fail to address the issue, opting to maintain the status quo, particularly if they already have existing models or tools (duelling models). Policymakers are, therefore, unwilling to adopt new applications, especially if existing applications suggest some form of certainty or predictability, which is harder to achieve with dynamic and systems models (Cockerill et al., 2007).

Communication and reporting of complexity science

Science communication is a significant challenge for many domains of study (Bucchi, 2019), with complexity science being no exception. However, complexity science faces a greater challenge than many other fields, as it can be difficult to understand and can produce unexpected or counter-intuitive results (Stewart & Ayres, 2001). As noted previously, ambiguous language and terminology impact the understanding of complexity science. The same linguistic and conceptual inconsistencies, as well as the technical and complex nature of the concepts (Loosemore & Cheung, 2015), also hinder the ability of those using complexity to communicate the concepts and the results effectively (Bale et al., 2015).

Furthermore, Steger et al. (2021) and Taeihagh et al. (2013) highlight that without adequate tools and visualisations, non-technical stakeholders and participants might be overwhelmed by the amount of information required to use complexity-informed approaches. Therefore, as Šucha (2017: 23) states, “there is a job to do in helping policy makers and politicians to develop simple messages to persuade the public of the merits of the solutions arrived at using complex science”, as even the best and most accurate models are of no use if the results cannot be effectively communicated (Lehuta et al., 2016). However, the results of complexity science approaches can be challenging to communicate effectively. For example, describing how initial assumptions in a process can result in specific model outcomes is challenging, particularly as models become more complex (Burgess et al., 2020). Additionally, temporal disturbances, random perturbations, path dependencies, agent learning, model initialisation, and various types of uncertainty add to the burden of communication (Bale et al., 2015; Taeihagh, 2015; Vermeulen & Pyka, 2016).

Linked to the challenge of communication, some authors (Lehuta et al., 2016; Levy et al., 2016) have noted the lack of standards for the evaluation, benchmarking, or implementation of various complexity science models, which hinders trust in the approach and makes communication of a model and its results more challenging. Similarly, the lack of clear guidance on actually applying complexity science-informed approaches in policymaking is also an issue (Currie et al., 2018; Mora et al., 2012) and can produce messy or confusing implementation of an application (Zukowski et al., 2019). For example, many complexity-informed models allow policymakers to test various policy interventions. However, as Amagoh (2016: 3) states, such a “model gives little guidance as to which aspects of the system should be manipulated to achieve policy objectives. In other words, it fails to provide a way forward when constituents of a system are in conflict with each other”. For complexity-informed methodologies to be taken seriously and utilised effectively in policymaking, they must move beyond simply describing a system and its potential future outcomes. There is a critical need to develop methods that provide concrete guidance on how and where to intervene within a system to achieve desired policy goals while minimising unintended consequences and negative externalities.

Table 3 summarises the key sticking points related to the communication, implementation, and acceptance of complexity science in policymaking identified in the literature. Addressing these challenges is crucial to unlocking the transformative potential of this field and fostering its widespread application in policy development and implementation.

Ethical barriers

Ethical considerations reflect the moral implications of using complexity science and its related applications. They were the least frequently reported barriers in the literature studied (identified only 6 times). The limited discussion on ethical considerations related to complexity science might be because, as Fenwick (2009: 110) notes, complexity science “does not indicate what is desirable beyond the survival of the system in some form”. Indeed, complexity science is a descriptive approach to understanding the interactions and processes within complex systems. It focuses on explaining how these systems work and change rather than prescribing specific, normative outcomes.

However, we note that a limited number of studies did report on ethical and moral matters related to complexity. These studies discussed the need for moral decision-making when using complexity science with decision-support systems (Partanen, 2010). For example, Midgley (1992) suggested that researchers and modellers must take moral responsibility when developing a model, including in the vision and use of the model or decision-support tool. Additionally, it is the moral responsibility of the user or decision-maker not to accept the results of a decision-support tool or model without question (De Greene, 1994a).

Choi and Park (2021) highlight a few additional ethical considerations relevant to this debate. They indicate that careful consideration must be taken when modelling society with artificial agents based on biased real-world data. The potential for prejudice also needs to be considered to avoid generating stereotypes of particular groups when modelling social groups, as the results from such models might have real-world consequences. Choi and Park emphasise that this is especially true for models created to control human behaviour, a sentiment echoed by Anzola et al. (2022). Similarly, Leslie (2023) notes several ethical challenges. These include data privacy and protection; the management of user and modeller assumptions; erroneous data and the need to manage and mitigate bias, such as sampling bias within data (social media data, for example), along with the misleading results generated from such data; and the lack of transparency in models and applications, which, as noted previously, also raises concerns about trust (Lindkvist et al., 2020; Taeihagh et al., 2014). This lack of transparency raises ethical concerns since it prevents evaluating bias and assumptions within the model's inner workings (Leslie, 2023). Table 4 provides a summary of the main ethical barriers and considerations.

Discussion: Paths for overcoming non-technical challenges

Most studies examined fail to offer definitive solutions for the problems they highlight. Those that do provide solutions tend either to promote mixed-methods approaches (Alderete Peralta et al., 2022; Nikas et al., 2019) or to concentrate predominantly on resolving technical difficulties rather than tackling non-technical matters. While technical challenges pose distinct barriers, solutions for such issues have already been discussed at length by many authors (Millington et al., 2017; Pan et al., 2021; Rand & Stummer, 2021). To explore potential pathways for overcoming the non-technical impediments identified in this study, we draw on the existing literature for solutions where possible. We also draw from our experience working with complexity science to suggest possible means to address or mitigate the barriers to using complexity science in and for policymaking.

Addressing management, cost, and adoption barriers

Among the most formidable challenges to address are those related to management, institutional capacity, and cost. These significant barriers intersect with and exacerbate many other potential obstacles, including technical hurdles, trust issues, communication difficulties, and the general acceptance of complexity science.

The limited use of complexity-informed approaches in institutional settings, coupled with concerns related to the limited understanding of complexity science and its application (Finegood, 2021; Otto, 2008), underscores the importance of promoting the general science of complexity at all levels. While fostering this understanding should start at the school and university level, it must primarily focus on institutional and government settings (King et al., 2012; York & Orgill, 2020). Additionally, demonstrating the tangible benefits of using complexity science while simultaneously acknowledging the limits of existing tools can build both trust and acceptance of complexity science within the policy community (Cosens et al., 2021).

Addressing the challenges posed by limited capacity, skills, and knowledge necessitates investment in training and capacity-building initiatives tailored to policymakers and decision-makers. As Zukowski et al. (2019) note, practitioners and policymakers should engage with a range of different complexity-informed methods, learn from experienced practitioners, and identify and distribute successful case studies, demonstrating the value of using complexity-informed approaches. To achieve these outcomes, sustained funding and support for the research community are essential, enabling researchers to develop materials and conduct case study research. Additionally, the KISS (keep it simple, stupid) principle, which argues that models should be kept as simple as possible, should be applied wherever possible (Johnson, 2015).

Policymakers should work closely with modellers when developing an application to inform policy. Doing so helps to build a deeper understanding of the model and build trust in the modelling process, thereby reducing policymakers' scepticism about the model (Balint et al., 2017). Furthermore, as indicated by Vermeulen and Pyka (2016), the modellers should be involved with the policy process as early as possible and not at the end to validate the policy. Conversely, policymakers should assist modellers by making time and resources available. Moreover, all assumptions, parameters, and processes should be well-documented and maintained (Vermeulen & Pyka, 2016).

Gathering successful examples of similar applications can further convince policymakers of the value of complexity-informed approaches and provide guidance on how complexity science can be applied practically in policymaking and decision support (Finegood, 2021; Loosemore & Cheung, 2015). Furthering policymakers' acceptance of complexity science can also be aided by clarifying confusing and ambiguous terminology (Yang, 2021) and better aligning the language of systems thinking and complexity science with that of policy (Stewart & Ayres, 2001). By clarifying the language and making complexity science more accessible for non-experts, critiques that ‘complexity is too vague or conceptual for policy’ (Kwamie et al., 2021) might be addressed.

Applying complexity-informed approaches might lead to politically uncomfortable conclusions (Stewart & Ayres, 2001) or unattainable goals given political or institutional structures and limits (Levin et al., 2015). While collaborative work with policymakers can help navigate politically sensitive outcomes, legal and institutional barriers present a more formidable challenge. Legislative changes can be complex and time-consuming, and institutional cultures often resist change (Čolić et al., 2022; Olsen, 2009). Consequently, implementing a complexity-informed intervention may necessitate planning and working within legal boundaries from the beginning. However, depending on the problem and the solution identified, policymakers might be convinced to pursue legal and legislative changes to facilitate system-level interventions that would otherwise be impossible. Alternatively, where it is infeasible to change legislation or work within spatial or institutional boundaries, inter-governmental and inter-institutional collaboration across multiple levels of government can go a long way to overcome the limits of a single institution and achieve goals that require system-wide interventions (Finegood, 2021; Haynes et al., 2020; Morçöl, 2023; Sharma-Wallace et al., 2018). Such collaboration can also promote adaptive governance (Cosens et al., 2021; Sharma-Wallace et al., 2018; Young, 2017).

While models are valuable tools for testing potential policy outcomes, other options exist. Regulatory sandboxes, instruments allowing experimentation and testing of policies with increased tolerance for error (Tan et al., 2023: 12), offer a promising alternative, particularly when used with a complexity-informed approach. Although commonly used to test new technology or services, their application can be expanded to pilot other policies. The resulting data can be compared to model outputs or used to update models, further refining their accuracy.

Policymakers are also encouraged to provide investment in research and training to alleviate the time and cost burden associated with developing and implementing complexity-based approaches. Additionally, crowdsourcing data can be one means of collecting data at a high volume and lower cost (Taeihagh, 2017a). At the same time, machine learning and natural language processing can aid in collecting and processing information, speeding up the process of model formulation. Similarly, standardised modelling protocols and platforms can reduce the time and financial cost required to build models.

Addressing barriers to communication, reporting, and acceptance of complexity science

Despite the broad scope and multifaceted nature of challenges surrounding communication, reporting, and acceptance of complexity science, steps can be taken to address or mitigate some of the identified issues. Several authors have identified methods to facilitate trust in complexity-informed methods. Beyond investing in stakeholder and policymaker training and education (Flynn et al., 2019; York & Orgill, 2020), actively engaging stakeholders and policymakers in the application's development and deployment from the outset can be highly effective. This participatory approach builds trust in, and ownership of, the application (Ibrahim Shire et al., 2020; Vermeulen & Pyka, 2016). Additionally, the collaborative development process can demonstrate the application's practical value, further bolstering support, particularly if the application has undergone rigorous validation (Kolkman et al., 2016). Trust and acceptance can also be enhanced by showing examples of successful applications to policymakers (Maglio et al., 2014).

From an internal validity perspective, leveraging transparency and openness can foster trust in complexity-informed applications. To further enhance trust, the code of a model can be made open and available (Ligmann-Zielinska, 2009), and the model can be clearly documented using protocols such as the Overview, Design Concepts and Details (ODD) protocol (Grimm et al., 2006, 2020) or the expanded version, ODD + D, which includes decision-making in the protocol (Müller et al., 2013). Misuse of tools can significantly erode trust in complexity science and its applications. Enhancing transparency and documentation serves not only to build trust but also to limit misuse (Iwanaga et al., 2021). Developers nevertheless remain responsible, to the best of their ability, for ensuring their work is used for its intended purpose.

Finally, there is a need to develop better means of communicating the concepts of complexity science and the results of complexity-informed applications. Effective science communication and promotion are crucial for gaining acceptance in the field. However, without adequate means of communicating the results, users and participants can be easily overwhelmed by the amount of information. Therefore, the ongoing development of communication tools and visualisation methods specifically tailored to complexity science is essential (Steger et al., 2021; Taeihagh, 2017b). These methods should aim to clearly communicate aspects such as uncertainties, model assumptions and rules, and their impact on outcomes. Calenbuhr (2020) suggests that qualitative tools, visualisations, and metaphors associated with complexity science, such as fitness landscapes, can be effective tools for communication. Additionally, Taeihagh (2017b) indicates that network visualisations and metrics can be used for visualising and understanding policy interactions and supporting policy design.

Addressing ethical barriers

Ethical concerns in complexity science for policymaking can manifest in many ways. Researchers and modellers must take moral responsibility when developing an application, including in the vision and use of the application (Goodman, 2016). Clearly defining the application's goals and vision beforehand is crucial, including assessing how it will be used. A thorough ethical evaluation is required if the application is intended to influence people, for example by encouraging certain behaviours. Additionally, the application must rely on reliable data that has been meticulously checked for errors and has not been manipulated in any way. Simultaneously, ensuring data privacy, confidentiality, and protection is paramount (Leslie, 2023). Notably, Choi and Park (2021) highlight that real-world data is often biased, necessitating active measures to ensure that the data used is fair and balanced.

Leslie (2023) emphasises that stakeholder engagement fosters transparency in both the process and the outcome. Transparency is amplified further when the assumptions, algorithms, and processes are clearly documented and accessible. Such transparency minimises the possibility of unethical aspects being incorporated into the final product. These efforts can be further strengthened by adopting ‘design for values’ (Helbing et al., 2021) or ‘ethically aligned design’ (Van den Hoven et al., 2015) approaches, which aim to align the development and use of applications with ethical considerations. These are critical considerations, given that policy decisions can impact a vast number of people and have lasting consequences. Table 5 summarises mitigation strategies for addressing non-technical challenges associated with the use of complexity science in policymaking.

Conclusion

Our investigation initially sought to answer a fundamental question: why does complexity science remain largely absent from mainstream policymaking? Utilising a comprehensive literature review, we set out to uncover the challenges and barriers hindering complexity science’s wider adoption. Through our review and synthesis of the literature, our investigation yielded 141 unique challenges, which we subsequently consolidated into three overarching themes: management, cost, and adoption challenges; trust, communication, and acceptance of complexity science; and ethical barriers. Our exploration revealed a complex interplay between these themes, highlighting their interconnected and interdependent nature. For instance, issues of trust and acceptance are frequently intertwined with the need for data transparency, model validation, and reliable outcomes, which fall under the umbrella of technical challenges.

While the technical barriers are undoubtedly significant, our analysis underscores the importance of addressing non-technical issues. Communication, trust, and understanding are crucial for fostering acceptance and utilisation of complexity-informed approaches in policymaking. Neglecting these non-technical aspects can confine the application of complexity science to specialised domains and generate scepticism among non-experts. In turn, this can exacerbate existing challenges related to management, institutional capacity, and cost. We also note that much of the discussion related to trust in complexity science and its applications touches on the need for reliability, validity, and predictability of applications, which are inherently technical challenges but have consequences for the acceptance of complexity science. As such, there is an evident tension between technical challenges and the continued persistence of some non-technical challenges, such as trust and acceptance of complexity science. However, even with this relationship, we argue that overcoming the technical barriers would not guarantee that the non-technical challenges are also addressed, as issues like trust are essential in technology adoption (Bahmanziari et al., 2003) and potentially have more of a long-term impact on the ease of adoption of complexity science in and for policymaking. Therefore, if considerations such as trust, understanding, and communication are not addressed, the utilisation of complexity science will likely remain limited to specialised and highly technical domains while generating scepticism among non-experts, as seen in the climate debate (Whitfield, 2013). These factors highlight the interdependent nature of challenges like trust, as other non-technical issues, such as those associated with management and institutional barriers, are also linked to trust and the utility of complexity science.

Our research makes several key contributions to the body of knowledge. First, it offers a comprehensive and systematic analysis of the non-technical challenges hindering the adoption of complexity science in policymaking, drawing upon a broader range of literature than previous studies. Second, our study highlights the interconnected nature of technical and non-technical challenges, emphasising the need for a multi-pronged approach to address them. Third, this study identifies several gaps in the current literature, such as the limited focus on the non-technical aspects of application and the ethical implications of utilising complexity science for policymaking. While the ethical and moral concerns related to policy are explored elsewhere (Brall et al., 2019; Marshall, 2017), there has been little discussion on the broader ethical considerations related to the use of complexity science in and for policymaking. This research gap must be addressed if complexity science is to gain trust and be used effectively in policymaking.

This research also paves the way for addressing these challenges and promoting the broader utilisation of complexity science in policymaking. We suggest a range of potential solutions, encompassing strategies for improved communication, enhanced training and education, collaborative model development, and the adoption of ‘design for values’ approaches. Enhanced understanding equips policymakers to utilise the tools better when they are more readily available. Similarly, improved communication of concepts and results to policymakers and the public is crucial for building trust in the methods and outcomes (Banozic-Tang & Taeihagh, 2022; Whitfield, 2013). Moreover, we emphasise the need for institutional and governance changes (Morçöl, 2023) to facilitate cross-disciplinary collaboration and the implementation of system-level solutions (Cosens et al., 2021; Young, 2017).