Abstract

Consider the problem of solving systems of linear algebraic equations \(\varvec{A}\varvec{x}=\varvec{b}\) with a real symmetric positive definite matrix \(\varvec{A}\) using the conjugate gradient (CG) method. To stop the algorithm at the appropriate moment, it is important to monitor the quality of the approximate solution. One of the most relevant quantities for measuring the quality of the approximate solution is the \(\varvec{A}\)-norm of the error. This quantity cannot be easily computed; however, it can be estimated. In this paper we discuss and analyze the behavior of the Gauss-Radau upper bound on the \(\varvec{A}\)-norm of the error, based on viewing CG as a procedure for approximating a certain Riemann-Stieltjes integral. This upper bound depends on a prescribed underestimate \(\varvec{\mu }\) of the smallest eigenvalue of \(\varvec{A}\). We concentrate on explaining a phenomenon observed during computations: in later CG iterations, the upper bound loses its accuracy and becomes almost independent of \(\varvec{\mu }\). We construct a model problem that is used to demonstrate and study the behavior of the upper bound as a function of \(\varvec{\mu }\), and develop formulas that are helpful in understanding this behavior. We show that the above-mentioned phenomenon is closely related to the convergence of the smallest Ritz value to the smallest eigenvalue of \(\varvec{A}\). It occurs when the smallest Ritz value is a better approximation to the smallest eigenvalue than the prescribed underestimate \(\varvec{\mu }\). We also suggest an adaptive strategy for improving the accuracy of the upper bounds in the previous iterations.

1 Introduction

Our aim in this paper is to explain the origin of the problems that have been noticed [1] when computing Gauss-Radau quadrature upper bounds of the A-norm of the error in the Conjugate Gradient (CG) algorithm for solving linear systems \(Ax=b\) with a symmetric positive definite matrix of order N.

The connection between CG and Gauss quadrature has been known since the seminal paper of Hestenes and Stiefel [2] in 1952. This link has been exploited by Gene H. Golub and his collaborators to bound or estimate the A-norm of the error in CG during the iterations; see [1, 3,4,5,6,7,8,9,10,11,12,13].

Using a fixed node \(\mu \) smaller than the smallest eigenvalue of A and the Gauss-Radau quadrature rule, an upper bound for the A-norm of the error can be easily computed. Note that an upper bound on the error norm is useful for deciding when to stop the CG iterations. In theory, the closer \(\mu \) is to the smallest eigenvalue, the closer the bound is to the norm.

Concerning the approximation properties of the upper bound, we observed in many examples that in earlier iterations the bound approximates the A-norm of the error quite well, and that the quality of the approximation improves as the iterations proceed. However, in later CG iterations, the bound suddenly becomes worse: it is delayed, almost independent of \(\mu \), and no longer represents a good approximation to the A-norm of the error. Such behavior of the upper bound can also be observed in exact arithmetic. Therefore, the loss of accuracy of the upper bound in later iterations is not directly linked to rounding errors and has to be explained.

The Gauss quadrature bounds of the error norm were obtained by using the connection of CG to the Lanczos algorithm and modifications of the tridiagonal matrix which is generated by this algorithm and implicitly by CG. This is why we start in Section 2 with the Lanczos algorithm. In Section 3 we discuss the relation with CG and how the Gauss-Radau upper bound is computed. A model problem showing the problems arising with the Gauss-Radau bound in “exact” arithmetic is constructed in Section 4. In Sections 5 to 7 we give an analysis that explains that the problems start when the distance of the smallest Ritz value to the smallest eigenvalue becomes smaller than the distance of \(\mu \) to the smallest eigenvalue. We also explain why the Gauss-Radau upper bound becomes almost independent of \(\mu \). In Section 8 we present an algorithm for improving the upper bounds in previous CG iterations such that the relative accuracy of the upper bounds is guaranteed to be smaller than a prescribed tolerance. Conclusions are given in Section 9.

2 The Lanczos algorithm

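Given a starting vector v and a symmetric matrix \(A\in \mathbb {R}^{N\times N}\), one can consider a sequence of nested Krylov subspaces

$$\begin{aligned} \mathcal {K}_{k}(A,v)\equiv \textrm{span}\{v,Av,\dots ,A^{k-1}v\},\qquad k=1,2,\dots \end{aligned}$$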

The dimension of these subspaces can increase up to an index n called the grade of v with respect to A, at which the maximal dimension is attained, and \(\mathcal {K}_{n}(A,v)\) is invariant under multiplication with A.

Lanczos algorithm.

Assuming that \(k<n\), the Lanczos algorithm (Algorithm 1) computes an orthonormal basis \(v_{1},\dots ,v_{k+1}\) of the Krylov subspace \(\mathcal {K}_{k+1}(A,v)\). The basis vectors \(v_{j}\) satisfy the matrix relation

$$\begin{aligned} AV_{k}=V_{k}T_{k}+\beta _{k}v_{k+1}e_{k}^{T}, \end{aligned}$$

where \(e_{k}\) is the last column of the identity matrix of order k, \(V_{k}=[v_{1}\cdots v_{k}]\) and \(T_{k}\) is the \(k\times k\) symmetric tridiagonal matrix of the recurrence coefficients computed in Algorithm 1:

Since the coefficients \(\beta _{j}\) are positive, \(T_{k}\) is a so-called Jacobi matrix. If A is positive definite, then \(T_{k}\) is positive definite as well. In the following we will assume for simplicity that the eigenvalues of A are simple and sorted such that

$$\begin{aligned} \lambda _{1}<\lambda _{2}<\dots <\lambda _{N}. \end{aligned}$$
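Since Algorithm 1 is not reproduced in this text, the following is a minimal sketch of the Lanczos recurrence described above, not the authors' listing. The function names `lanczos` and `jacobi_matrix`, the random test matrix, and the full reorthogonalization step (added only to mimic exact arithmetic in floating point) are assumptions made for illustration.

```python
import numpy as np


def lanczos(A, v, k):
    """Run k Lanczos steps; return V (N x (k+1)) and the coefficients alpha, beta."""
    N = A.shape[0]
    V = np.zeros((N, k + 1))
    alpha = np.zeros(k)
    beta = np.zeros(k)
    V[:, 0] = v / np.linalg.norm(v)
    for j in range(k):
        w = A @ V[:, j]
        if j > 0:
            w = w - beta[j - 1] * V[:, j - 1]
        alpha[j] = V[:, j] @ w
        w = w - alpha[j] * V[:, j]
        # Assumption: reorthogonalize against all previous vectors so that the
        # computed basis stays orthonormal to working precision.
        w = w - V[:, : j + 1] @ (V[:, : j + 1].T @ w)
        beta[j] = np.linalg.norm(w)
        V[:, j + 1] = w / beta[j]
    return V, alpha, beta


def jacobi_matrix(alpha, beta):
    """Assemble the k x k symmetric tridiagonal matrix T_k."""
    off = beta[: len(alpha) - 1]
    return np.diag(alpha) + np.diag(off, 1) + np.diag(off, -1)


# Illustrative symmetric positive definite test matrix (hypothetical data).
rng = np.random.default_rng(0)
N, k = 20, 6
Q, _ = np.linalg.qr(rng.standard_normal((N, N)))
A = Q @ np.diag(np.linspace(0.1, 10.0, N)) @ Q.T
A = (A + A.T) / 2.0
v = rng.standard_normal(N)

V, alpha, beta = lanczos(A, v, k)
T_k = jacobi_matrix(alpha, beta)
V_k = V[:, :k]
e_k = np.zeros(k)
e_k[-1] = 1.0

# Defect of the relation A V_k = V_k T_k + beta_k v_{k+1} e_k^T.
relation_error = np.linalg.norm(
    A @ V_k - V_k @ T_k - beta[-1] * np.outer(V[:, k], e_k)
)
orthogonality_error = np.linalg.norm(V.T @ V - np.eye(k + 1))
print(relation_error, orthogonality_error)
```

Both printed quantities should be at the level of rounding errors, and all entries of `beta` are positive, so `T_k` is a Jacobi matrix in the sense used above.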

2.1 Approximation of the eigenvalues

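The eigenvalues of \(T_{k}\) (Ritz values) are usually used as approximations to the eigenvalues of A. The quality of the approximation can be measured using \(\beta _{k}\) and the last components of the normalized eigenvectors of \(T_{k}\). In more detail, consider the spectral decomposition of \(T_{k}\) in the form

$$\begin{aligned} T_{k}=S_{k}\Theta _{k}S_{k}^{T},\qquad \Theta _{k}=\textrm{diag}\left( \theta _{1}^{(k)},\dots ,\theta _{k}^{(k)}\right) ,\qquad S_{k}^{T}S_{k}=S_{k}S_{k}^{T}=I_{k}, \end{aligned}$$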

where \(I_k\) is the identity matrix of order k, and assume that the Ritz values are sorted such that

$$\begin{aligned} \theta _{1}^{(k)}<\theta _{2}^{(k)}<\dots <\theta _{k}^{(k)}. \end{aligned}$$

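Denote by \(s_{i,j}^{(k)}\) the entries and by \(s_{:,j}^{(k)}\) the columns of \(S_{k}\). Then it holds that

$$\begin{aligned} \min _{i=1,\dots ,N}\left| \lambda _{i}-\theta _{j}^{(k)}\right| \le \left\| A\left( V_{k}s_{:,j}^{(k)}\right) -\theta _{j}^{(k)}\left( V_{k}s_{:,j}^{(k)}\right) \right\| =\beta _{k}\left| s_{k,j}^{(k)}\right| , \end{aligned}$$(1)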

\(j=1,\dots ,k\). Since the Ritz values \(\theta _{j}^{(k)}\) can be seen as Rayleigh quotients, one can improve the bound (1) using the gap theorem; see [14, p. 244] or [15, p. 206]. In particular, let \(\lambda _{\ell }\) be an eigenvalue of A closest to \(\theta _{j}^{(k)}\). Then

In the following we will be interested in the situation when the smallest Ritz value \(\theta _{1}^{(k)}\) closely approximates the smallest eigenvalue of A. If \(\lambda _{1}\) is the eigenvalue of A closest to \(\theta _{1}^{(k)}>\lambda _{1}\), then using the gap theorem and [14, Corollary 11.7.1 on p. 246],

giving the bounds

It is known (see, for instance, [16]) that the squares of the last components of the eigenvectors are given by

where \(\chi _{1,\ell }\) is the characteristic polynomial of \(T_{\ell }\) and \(\chi _{1,\ell }^{'}\) denotes its derivative, i.e.,

The right-hand side is positive due to the interlacing property of the Ritz values for symmetric tridiagonal matrices. In particular,

When the smallest Ritz value \(\theta _{1}^{(k)}\) converges to \(\lambda _{1}\), this last component squared converges to zero; see also (3).
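The residual-based measure of Ritz value quality discussed in this subsection can be checked numerically. The sketch below assumes the standard form of the bound, namely that the distance of the Ritz value \(\theta _{j}^{(k)}\) to the nearest eigenvalue of A is at most \(\beta _{k}|s_{k,j}^{(k)}|\). The helper name `lanczos_jacobi` and the diagonal test matrix are hypothetical.

```python
import numpy as np


def lanczos_jacobi(A, v, k):
    """Return the Jacobi matrix T_k and beta_k (Lanczos with full orthogonalization)."""
    N = A.shape[0]
    V = np.zeros((N, k + 1))
    alpha, beta = np.zeros(k), np.zeros(k)
    V[:, 0] = v / np.linalg.norm(v)
    for j in range(k):
        w = A @ V[:, j]
        alpha[j] = V[:, j] @ w
        # Orthogonalizing against all previous vectors removes the alpha and
        # beta terms of the three-term recurrence as well.
        w = w - V[:, : j + 1] @ (V[:, : j + 1].T @ w)
        beta[j] = np.linalg.norm(w)
        V[:, j + 1] = w / beta[j]
    T = np.diag(alpha) + np.diag(beta[:-1], 1) + np.diag(beta[:-1], -1)
    return T, beta[-1]


eigs = np.linspace(0.1, 10.0, 20)  # hypothetical spectrum
A = np.diag(eigs)
v = np.ones(20)

results = []
for k in (4, 8, 12):
    T, beta_k = lanczos_jacobi(A, v, k)
    theta, S = np.linalg.eigh(T)  # Ritz values in ascending order
    bound = beta_k * np.abs(S[-1, :])  # beta_k times last eigenvector components
    dist = np.min(np.abs(eigs[:, None] - theta[None, :]), axis=0)
    results.append((k, dist, bound))
    print(k, dist[0], bound[0])  # smallest Ritz value: true distance vs. bound
```

For each k, every entry of `dist` should not exceed the corresponding entry of `bound` (up to rounding), which illustrates why a small last eigenvector component signals a converged Ritz value.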

2.2 Modification of the tridiagonal matrix

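Given \(\mu < \theta _{1}^{(k)}\), let us consider the problem of finding the coefficient \(\alpha _{k+1}^{(\mu )}\) such that the modified matrix

$$\begin{aligned} T_{k+1}^{(\mu )}=\left[ \begin{array}{cc} T_{k} &{} \beta _{k}e_{k}\\ \beta _{k}e_{k}^{T} &{} \alpha _{k+1}^{(\mu )} \end{array}\right] \end{aligned}$$(5)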

has the prescribed \(\mu \) as an eigenvalue. The connection of this problem to the Gauss-Radau quadrature rule will be explained in Section 3.

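In [17, pp. 331-334] it has been shown that at iteration \(k+1\)

$$\begin{aligned} \alpha _{k+1}^{(\mu )}=\mu +\zeta _{k}^{(\mu )}, \end{aligned}$$

where \(\zeta _{k}^{(\mu )}\) is the last component of the vector y, the solution of the linear system

$$\begin{aligned} (T_{k}-\mu I)y=\beta _{k}^{2}e_{k}. \end{aligned}$$(6)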

From [10, Section 3.4], the modified coefficients \(\alpha _{k+1}^{(\mu )}\) can be computed recursively using

Using the spectral factorization of \(T_{k}\), we can now prove the following lemma.

Lemma 1

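Let \(\mu <\theta _{1}^{(k)}\). Then it holds that

$$\begin{aligned} \alpha _{k+1}^{(\mu )}=\mu +\sum _{i=1}^{k}\eta _{i,k}^{(\mu )},\qquad \eta _{i,k}^{(\mu )}\equiv \frac{\left( \beta _{k}s_{k,i}^{(k)}\right) ^{2}}{\theta _{i}^{(k)}-\mu }. \end{aligned}$$(8)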

If \(\mu<\lambda <\theta _{1}^{(k)}\), then \(\alpha _{k+1}^{(\mu )}<\alpha _{k+1}^{(\lambda )}\). Consequently, if \(\mu <\theta _{1}^{(k+1)},\) then \(\alpha _{k+1}^{(\mu )}<\alpha _{k+1}\).

Proof

Since \(\mu <\theta _{1}^{(k)}\) the matrix \(T_{k}-\mu I\) in (6) is positive definite and, therefore, nonsingular. Hence,

so that (8) holds. From (8) it is obvious that if \(\mu<\lambda <\theta _{1}^{(k)}\), then \(\alpha _{k+1}^{(\mu )}<\alpha _{k+1}^{(\lambda )}\).

Finally, taking \(\lambda =\theta _{1}^{(k+1)}<\theta _{1}^{(k)}\) (because of the interlacing of the Ritz values) we obtain \(\alpha _{k+1}^{(\lambda )}=\alpha _{k+1}\) by construction. \(\square \)
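The statements of Lemma 1 can be illustrated on a small example. The sketch below computes the modified coefficient in two ways: from the last component of the solution of the shifted system with right-hand side \(\beta _{k}^{2}e_{k}\), and from the spectral decomposition of \(T_{k}\). The function names and the test matrix (the tridiagonal matrix with diagonal 2 and off-diagonals 1, with \(k=4\) and \(\alpha _{5}=2\), \(\beta _{4}=1\)) are assumptions chosen for illustration.

```python
import numpy as np


def alpha_mu_solve(T, beta_k, mu):
    """mu plus the last component of y, where (T_k - mu I) y = beta_k^2 e_k."""
    k = T.shape[0]
    rhs = np.zeros(k)
    rhs[-1] = beta_k**2
    y = np.linalg.solve(T - mu * np.eye(k), rhs)
    return mu + y[-1]


def alpha_mu_spectral(T, beta_k, mu):
    """mu plus the sum of (beta_k s_{k,i})^2 / (theta_i - mu) over all Ritz values."""
    theta, S = np.linalg.eigh(T)
    eta = (beta_k * S[-1, :]) ** 2 / (theta - mu)
    return mu + eta.sum()


# Hypothetical Jacobi matrix: T_5 = tridiag(1, 2, 1), so T_4 is its leading block.
k = 4
T_k = 2.0 * np.eye(k) + np.diag(np.ones(k - 1), 1) + np.diag(np.ones(k - 1), -1)
beta_k, alpha_next = 1.0, 2.0

mu, lam = 0.1, 0.2  # both below the smallest eigenvalue of T_5 (about 0.268)
a_mu = alpha_mu_solve(T_k, beta_k, mu)
a_lam = alpha_mu_solve(T_k, beta_k, lam)
a_mu_spec = alpha_mu_spectral(T_k, beta_k, mu)

# Assemble the modified matrix and check that mu is its smallest eigenvalue.
T_mod = np.zeros((k + 1, k + 1))
T_mod[:k, :k] = T_k
T_mod[k, k - 1] = T_mod[k - 1, k] = beta_k
T_mod[k, k] = a_mu
smallest = np.linalg.eigvalsh(T_mod)[0]

print(a_mu, a_lam, alpha_next, smallest)
```

The printed coefficients should be increasing, in agreement with the monotonicity stated in the lemma, and `smallest` should reproduce the prescribed `mu`.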

3 CG and error norm estimation

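When solving a linear system \(Ax=b\) with a symmetric and positive definite matrix A, the CG method (Algorithm 2) is the method of choice. In exact arithmetic, the CG iterates \(x_{k}\) minimize the A-norm of the error over the manifold \(x_{0}+\mathcal {K}_{k}(A,r_{0})\),

$$\begin{aligned} \Vert x-x_{k}\Vert _{A}=\min _{y\in x_{0}+\mathcal {K}_{k}(A,r_{0})}\Vert x-y\Vert _{A}, \end{aligned}$$

and the residual vectors \(r_{k}=b-Ax_{k}\) are proportional to the Lanczos vectors \(v_{j}\),

$$\begin{aligned} v_{j+1}=(-1)^{j}\frac{r_{j}}{\Vert r_{j}\Vert },\qquad j=0,\dots ,k. \end{aligned}$$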

Conjugate gradient algorithm.

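Thanks to this close relationship between the CG and Lanczos algorithms, it can be shown (see, for instance, [16]) that the recurrence coefficients computed in both algorithms are connected via \(\alpha _{1}=\gamma _{0}^{-1}\) and

$$\begin{aligned} \beta _{k}=\frac{\sqrt{\delta _{k}}}{\gamma _{k-1}},\qquad \alpha _{k+1}=\frac{1}{\gamma _{k}}+\frac{\delta _{k}}{\gamma _{k-1}},\qquad k=1,2,\dots , \end{aligned}$$(10)

where \(\gamma _{k-1}\) and \(\delta _{k}\) denote the CG coefficients used to update the iterate and the direction vector, respectively.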

Writing (10) in matrix form, we find out that CG computes implicitly the \(LDL^{T}\) factorization \(T_{k}=L_{k}D_{k}L_{k}^{T}\), where

Hence the matrix \(T_{k}\) is known implicitly in CG.
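To make the implicit availability of \(T_{k}\) concrete, the following sketch runs a textbook CG iteration and assembles \(T_{k}\) from the CG coefficients. Algorithm 2 is not reproduced in this text, so the naming is an assumption: `gamma` denotes the step length \(\Vert r\Vert ^{2}/p^{T}Ap\) and `delta` the ratio of consecutive squared residual norms. The factor \(L_{k}\) is taken to be unit lower bidiagonal with subdiagonal entries \(\sqrt{\delta _{j}}\), and \(D_{k}\) diagonal with entries \(\gamma _{j-1}^{-1}\). The result is compared with the projection of A onto the normalized residuals.

```python
import numpy as np


def cg(A, b, k):
    """k steps of CG from x_0 = 0; returns gammas, deltas and residuals r_0..r_{k-1}."""
    x = np.zeros_like(b)
    r = b.copy()
    p = r.copy()
    gammas, deltas, residuals = [], [], []
    for _ in range(k):
        residuals.append(r.copy())
        Ap = A @ p
        rr = r @ r
        gamma = rr / (p @ Ap)
        x = x + gamma * p
        r = r - gamma * Ap
        delta = (r @ r) / rr
        p = r + delta * p
        gammas.append(gamma)
        deltas.append(delta)
    return np.array(gammas), np.array(deltas), residuals


def jacobi_from_cg(gammas, deltas):
    """Assemble T_k = L_k D_k L_k^T from the CG coefficients."""
    k = len(gammas)
    L = np.eye(k) + np.diag(np.sqrt(deltas[: k - 1]), -1)
    D = np.diag(1.0 / gammas)
    return L @ D @ L.T


# Hypothetical test data.
rng = np.random.default_rng(1)
N, k = 20, 5
Q, _ = np.linalg.qr(rng.standard_normal((N, N)))
A = Q @ np.diag(np.linspace(0.1, 10.0, N)) @ Q.T
A = (A + A.T) / 2.0
b = np.ones(N)

gammas, deltas, residuals = cg(A, b, k)
T_cg = jacobi_from_cg(gammas, deltas)

# Lanczos vectors obtained from the residuals: v_{j+1} = (-1)^j r_j / ||r_j||.
V = np.column_stack(
    [(-1) ** j * r / np.linalg.norm(r) for j, r in enumerate(residuals)]
)
T_ref = V.T @ A @ V
print(np.max(np.abs(T_cg - T_ref)))
```

The printed difference should be at the level of rounding errors for this small, well-conditioned example; for long runs the loss of orthogonality among the residuals would make the comparison less clean.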

3.1 Modification of the factorization of \(T_{k+1}\)

As in Section 2.2, we can ask how to modify the Cholesky factorization of \(T_{k+1}\), which is available in CG, such that the resulting matrix \(T_{k+1}^{(\mu )}\), given implicitly in factorized form, has the prescribed eigenvalue \(\mu \). In more detail, we look for a coefficient \(\gamma _{k}^{(\mu )}\) such that

This problem was solved in [10] leading to an updating formula for computing the modified coefficients

Moreover, \(\gamma _{k}^{(\mu )}\) can be obtained directly from the modified coefficient \(\alpha _{k+1}^{(\mu )}\),

and vice-versa, see [10, p. 173 and 181].

3.2 Quadrature-based bounds in CG

We now briefly summarize the idea of deriving the quadrature-based bounds used in this paper. For a more detailed description, see, e.g., [5,6,7, 10,11,12,13].

Let \(A=Q\Lambda Q^T\) be the spectral decomposition of A, with \(Q=[q_1,\dots ,q_N]\) orthonormal and \(\Lambda =\textrm{diag}(\lambda _1,\dots ,\lambda _N)\). As we said above, for simplicity of notation, we assume that the eigenvalues of A are distinct and ordered as \(\lambda _1< \lambda _2< \dots < \lambda _N\). Let us define the weights \(\omega _{i}\) by

and the (nondecreasing) stepwise constant distribution function \(\omega (\lambda )\) with a finite number of points of increase \(\lambda _{1},\lambda _{2},\dots ,\lambda _{N}\),

Having the distribution function \(\omega (\lambda )\) and an interval \(\langle \eta ,\xi \rangle \) such that \(\eta<\lambda _{1}<\lambda _{2}<\dots<\lambda _{N}<\xi \), for any continuous function f, one can define the Riemann-Stieltjes integral (see, for instance, [18])

For \(f(\lambda )=\lambda ^{-1}\), we obtain the integral representation of \(\Vert x-x_0\Vert _A^2\),

Using the optimality of CG it can be shown that CG implicitly determines nodes and weights of the k-point Gauss quadrature approximation to the Riemann-Stieltjes integral (14). The nodes are given by the eigenvalues of \(T_k\), and the weights by the squared first components of the normalized eigenvectors of \(T_k\). The corresponding Gauss quadrature rule can be written in the form

where \((T_k^{-1})_{1,1}\) represents the Gauss quadrature approximation, and the remainder is nothing but the scaled and squared A-norm of the kth error, i.e., the quantity of our interest.

To approximate the integral (14), one can also apply a modified quadrature rule. In this paper we consider the Gauss-Radau quadrature rule consisting in prescribing a node \(0<\mu \le \lambda _1\) and choosing the other nodes and weights to maximize the degree of exactness of the quadrature rule. We can write the corresponding Gauss-Radau quadrature rule in the form

where the remainder \({\mathcal {R}}_k^{(\mu )}\) is negative, and \({T}_k^{(\mu )}\) is given by (5).

The idea of deriving (basic) quadrature-based bounds in CG is to consider the Gauss quadrature rule (15) at iteration k, and a (possibly modified) quadrature rule at iteration \(k+1\),

where \(\widehat{T}_{k+1}=T_{k+1}\) when using the Gauss rule and \(\widehat{T}_{k+1}={T}_{k+1}^{(\mu )} \) in the case of using the Gauss-Radau rule. From the equations (15) and (16) we get

The term in square brackets represents either a lower bound on \(\Vert x-x_{k}\Vert _{A}^{2}\) if \(\widehat{T}_{k+1}=T_{k+1}\) (because of the positive remainder), or an upper bound if \(\widehat{T}_{k+1} = {T}_{k+1}^{(\mu )}\) (because of the negative remainder). In both cases, the term in square brackets can easily be evaluated using the available CG related quantities. In particular, the lower bound is given by \(\gamma _k \Vert r_k \Vert ^2\), and the upper bound by \(\gamma _{k}^{(\mu )}\Vert r_{k}\Vert ^{2}\), where \(\gamma _{k}^{(\mu )}\) can be updated using (12).
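A minimal sketch of how the two bounds can be evaluated alongside CG is given below. It is not the CGQ algorithm of [10] verbatim. The update of the modified coefficient is written here as \(\gamma _{k+1}^{(\mu )}=(\gamma _{k}^{(\mu )}-\gamma _{k})/(\mu (\gamma _{k}^{(\mu )}-\gamma _{k})+\delta _{k+1})\) with \(\gamma _{0}^{(\mu )}=1/\mu \), which is an assumption of this example: it follows from requiring that \(\mu \) be an eigenvalue of the modified tridiagonal matrix. The function name `cg_with_bounds` and the test data are hypothetical.

```python
import numpy as np


def cg_with_bounds(A, b, mu, iters):
    """CG from x_0 = 0 with Gauss lower and Gauss-Radau upper bounds.

    Entry k of the returned arrays bounds ||x - x_k||_A^2.
    """
    x = np.zeros_like(b)
    r = b.copy()
    p = r.copy()
    gamma_mu = 1.0 / mu  # gamma_0^(mu)
    iterates, lower, upper = [], [], []
    for _ in range(iters):
        Ap = A @ p
        rr = r @ r
        gamma = rr / (p @ Ap)
        iterates.append(x.copy())
        lower.append(gamma * rr)  # Gauss lower bound
        upper.append(gamma_mu * rr)  # Gauss-Radau upper bound
        x = x + gamma * p
        r = r - gamma * Ap
        delta = (r @ r) / rr
        p = r + delta * p
        # Assumed update of the modified coefficient (see the lead-in).
        gamma_mu = (gamma_mu - gamma) / (mu * (gamma_mu - gamma) + delta)
    return iterates, np.array(lower), np.array(upper)


# Hypothetical test problem with smallest eigenvalue 0.1.
eigs = np.linspace(0.1, 10.0, 20)
A = np.diag(eigs)
b = np.ones(20)
mu = 0.09  # underestimate of the smallest eigenvalue

iterates, lower, upper = cg_with_bounds(A, b, mu, iters=8)
x_exact = np.linalg.solve(A, b)
err2 = np.array([(x_exact - xk) @ A @ (x_exact - xk) for xk in iterates])

for k in range(len(err2)):
    print(k, lower[k], err2[k], upper[k])
```

In each printed row the squared A-norm of the error should lie between the lower and the upper bound. The exact solution is computed here only to verify the bounds; in practice it is of course unavailable.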

To summarize results of [5, 7, 12], and [1, 10, 11] related to the Gauss and Gauss-Radau quadrature bounds for the A-norm of the error in CG, it has been shown that

for \(k<n-1\), and \(\mu \) such that \(0<\mu \le \lambda _{1}\). Note that in the special case \(k=n-1\) it holds that \(\Vert x-x_{n-1}\Vert _{A}^{2}=\gamma _{n-1}\Vert r_{n-1}\Vert ^{2}\). If the initial residual \(r_{0}\) has a nontrivial component in the eigenvector corresponding to \(\lambda _{1}\), then \(\lambda _{1}\) is an eigenvalue of \(T_{n}\). If in addition \(\mu \) is chosen such that \(\mu =\lambda _{1}\), then \(\gamma _{n-1}=\gamma _{n-1}^{(\mu )}\) and the second inequality in (18) changes to equality. The last inequality is strict also for \(k=n-1\).

The rightmost bound in (18), that will be called the simple upper bound in the following, was derived in [1]. The norm \(\Vert p_k\Vert \) is not available in CG, but the ratio

can be computed efficiently using

Note that at an iteration \(\ell \le k\) we can obtain a more accurate bound using

by applying the basic bounds (18) to the last term in (20); see [1] for details on the construction of more accurate bounds. In practice, however, one runs the CG algorithm, and estimates the error in a backward way, i.e., \(k-\ell \) iterations back. The adaptive choice of the delay \(k-\ell \) when using the Gauss quadrature lower bound was discussed recently in [19].

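In the following we will concentrate on the analysis of the behavior of the basic Gauss-Radau upper bound

$$\begin{aligned} \Vert x-x_{k}\Vert _{A}^{2}<\gamma _{k}^{(\mu )}\Vert r_{k}\Vert ^{2} \end{aligned}$$(21)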

in dependence of the choice of \(\mu \). As already mentioned, we observed in many examples that in earlier iterations, the bound is approximating the squared A-norm of the error quite well, but in later iterations it becomes worse, it is delayed and almost independent of \(\mu \). We observed that this phenomenon is related to the convergence of the smallest Ritz value to the smallest eigenvalue \(\lambda _1\). In particular, the bound is getting worse if the smallest Ritz value approximates \(\lambda _1\) better than \(\mu \). This often happens during finite precision computations when convergence of CG is delayed because of rounding errors and there are clusters of Ritz values approximating individual eigenvalues of A. Usually, such clusters arise around the largest eigenvalues. At some iteration, each eigenvalue of A can be approximated by a Ritz value, while the A-norm of the error still does not reach the required level of accuracy, and the process will continue and place more and more Ritz values in the clusters. In this situation, it can happen that \(\lambda _1\) is tightly (that is, to a high relative accuracy) approximated by a Ritz value while the CG process still continues. Note that if A has well separated eigenvalues and we run the experiment in exact arithmetic, then \(\lambda _1\) is usually tightly approximated by a Ritz value only in the last iterations. The above observation is key for constructing a motivating example, in which we can readily observe the studied phenomenon also in exact arithmetic, and which will motivate our analysis.

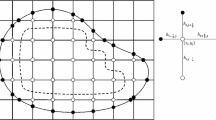

4 The model problem and a numerical experiment

In the construction of the motivating example we use results presented in [16, 20,21,22,23]. Based on the work by Chris Paige [24], Anne Greenbaum [20] proved that the results of finite precision CG computations can be interpreted (up to some small inaccuracies) as the results of the exact CG algorithm applied to a larger system with the system matrix having many eigenvalues distributed throughout “tiny” intervals around the eigenvalues of the original matrix. The experiments show that “tiny” means of a size comparable to \(\textbf{u}\Vert A\Vert \), where \(\textbf{u}\) is the roundoff unit. This result was used in [21] to predict the behavior of finite precision CG. Inspired by [20,21,22] we will construct a linear system \(Ax=b\) with properties similar to those of the system suggested by Greenbaum [20]. However, we want to emphasize and visualize some phenomena concerning the behavior of the basic Gauss-Radau upper bound (21). Therefore, we choose the size of the intervals around the original eigenvalues larger than \(\textbf{u}\Vert A\Vert \).

We start with the test problem \(\Lambda y=w\) from [23], where \(w=m^{-1/2}(1,\dots ,1)^{T}\) and \(\Lambda =\textrm{diag}(\hat{\lambda }_{1},\dots ,\hat{\lambda }_{m})\),

The diagonal matrix \(\Lambda \) and the vector w determine the stepwise distribution function \(\omega (\lambda )\) with points of increase \(\hat{\lambda }_{i}\) and the individual jumps (weights) \(\omega _{j}=m^{-1}\),

We construct a blurred distribution function \(\widetilde{\omega }(\lambda )\) having clusters of points of increase around the original eigenvalues \(\hat{\lambda }_{i}\). We consider each cluster to have the same radius \(\delta \), and let the number \(c_i\) of points in the ith cluster grow linearly from 1 to p,

The blurred eigenvalues

are uniformly distributed in \([\hat{\lambda }_{i}-\delta ,\hat{\lambda }_{i}+\delta ]\), with the corresponding weights given by

i.e., the weights that correspond to the ith cluster are equal, and their sum is \(\omega _{i}\). Having defined the blurred distribution function \(\widetilde{\omega }(\lambda )\) we can construct the corresponding Jacobi matrix \(T\in {\mathbb R}^{N\times N}\) in a numerically stable way using the Gragg and Harrod rkpw algorithm [25]. Note that the mapping from the nodes and weights of the computed quadrature to the recurrence coefficients is generally well-conditioned [26, p. 59]. To construct the above-mentioned Jacobi matrix T we used Matlab’s vpa arithmetic with 128 digits. Finally, we define the double precision data A and b that will be used for experimenting as

where \(e_{1}\in {\mathbb R}^{N}\) is the first column of the identity matrix. We decided to use double precision input data since we can easily compare results of our computations performed in Matlab’s vpa arithmetic with results obtained using double precision arithmetic for the same input data.

In our experiment we choose \(m=12\), \(\hat{\lambda }_{1}=10^{-6}\), \(\hat{\lambda }_{m}=1\), \(\rho =0.8\), \(\delta =10^{-10}\), and \(p=4\), resulting in \(N=30.\) Let us run the “exact” CGQ algorithm of [10] on the model problem (24) constructed above, where exact arithmetic is simulated using Matlab’s variable precision with digits=128. Let \(\lambda _{1}\) be the exact smallest eigenvalue of A. We use four different values of \(\mu \) for the computation of the Gauss-Radau upper bound (21): \(\mu _{3}=(1-10^{-3})\lambda _{1}\), \(\mu _{8}=(1-10^{-8})\lambda _{1}\), \(\mu _{16}\) which denotes the double precision number closest to \(\lambda _{1}\) such that \(\mu _{16}\le \lambda _{1}\), and \(\mu _{50}=(1-10^{-50})\lambda _{1}\) which is almost like the exact value. Note that \(\gamma _{k}^{(\mu )}\) is updated using (12).
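The nodes and weights of the blurred distribution function can be generated as in the sketch below. Several details are assumptions, because the corresponding formulas are not reproduced in this text: the original eigenvalues are taken as \(\hat{\lambda }_{i}=\hat{\lambda }_{1}+\frac{i-1}{m-1}(\hat{\lambda }_{m}-\hat{\lambda }_{1})\rho ^{m-i}\), which is the usual form of the test problem from [23]; the cluster sizes are obtained by rounding a linear ramp from 1 to p; and a cluster with a single point is placed at its center. With the parameters of the experiment, these choices reproduce \(N=30\). The function name `blurred_distribution` is hypothetical, and the construction of the Jacobi matrix itself (done in the paper in high precision arithmetic) is not attempted here.

```python
import numpy as np


def blurred_distribution(m=12, lam1=1e-6, lam_m=1.0, rho=0.8, delta=1e-10, p=4):
    """Nodes and weights of the blurred distribution function (assumed formulas)."""
    i = np.arange(1, m + 1)
    # Assumed form of the original eigenvalues (standard test problem).
    lam_hat = lam1 + (i - 1) / (m - 1) * (lam_m - lam1) * rho ** (m - i)
    # Assumed rounding rule: cluster sizes grow linearly from 1 to p.
    counts = np.rint(1 + (p - 1) * (i - 1) / (m - 1)).astype(int)
    nodes, weights = [], []
    for lam, c in zip(lam_hat, counts):
        if c == 1:
            pts = np.array([lam])  # single point at the cluster center
        else:
            pts = np.linspace(lam - delta, lam + delta, c)
        nodes.extend(pts)
        # Equal weights within a cluster, summing to the original weight 1/m.
        weights.extend([1.0 / (m * c)] * c)
    return np.array(nodes), np.array(weights), counts


nodes, weights, counts = blurred_distribution()
print(len(nodes), counts.tolist(), weights.sum())
```

Note that the spacing inside a cluster is of order \(10^{-10}\), so the nodes are representable in double precision, but a Jacobi matrix built from them needs a numerically stable algorithm such as the one cited above.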

Figure 1 shows the A-norm of the error \(\Vert x-x_{k-1}\Vert _{A}\) (solid curve), the upper bounds for the considered values of \(\mu _{i}\), \(i=3,8,16,50\) (dotted solid curves), and the rightmost bound in (18) (the simple upper bound) for \(\mu _{50}\) (dashed curve). The dots represent the values \(\theta _{1}^{(k)}-\lambda _{1}\), i.e., the distances of the smallest Ritz values \(\theta _{1}^{(k)}\) to \(\lambda _{1}\). The horizontal dotted lines correspond to the values of \(\lambda _{1}-\mu _{i}\), \(i=3,8,16\).

The Gauss-Radau upper bounds in Fig. 1 first overestimate, and then closely approximate \(\Vert x-x_{k-1}\Vert _{A}\) (starting from iteration 5). However, at some point, the Gauss-Radau upper bounds start to differ significantly from \(\Vert x-x_{k-1}\Vert _{A}\) and represent worse approximations, except for \(\mu _{50}\). We observe that for a given \(\mu _{i}\), \(i=3,8,16\), the upper bounds are delayed when the distance of \(\theta _{1}^{(k)}\) to \(\lambda _{1}\) becomes smaller than the distance of \(\mu _{i}\) to \(\lambda _{1}\), i.e., when

$$\begin{aligned} \theta _{1}^{(k)}-\lambda _{1}<\lambda _{1}-\mu _{i}. \end{aligned}$$(25)

If (25) holds, then the smallest Ritz value \(\theta _{1}^{(k)}\) is a better approximation to \(\lambda _{1}\) than \(\mu _{i}\). This moment is emphasized using vertical dashed lines that connect the value \(\theta _{1}^{(k)}-\lambda _{1}\) with \(\Vert x-x_{k-1}\Vert _{A}\) in the first iteration k such that (25) holds. Moreover, below a certain level, the upper bounds become almost independent of \(\mu _{i}\), \(i=3,8,16\), and visually coincide with the simple upper bound. The closer \(\mu \) is to \(\lambda _1\), the later this phenomenon occurs.

Depending on the validity of (25), we distinguish between phase 1 and phase 2 of convergence. If the inequality (25) does not hold, i.e., if \(\mu \) is a better approximation to \(\lambda _{1}\) than the smallest Ritz value, then we say we are in phase 1. If (25) holds, then the smallest Ritz value is closer to \(\lambda _{1}\) than \(\mu \) and we are in phase 2.

Obviously, the beginning of phase 2 depends on the choice of \(\mu \) and on the convergence of the smallest Ritz value to the smallest eigenvalue. Note that for \(\mu =\mu _{50}\) we are always in phase 1 before we stop the iterations.
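The distinction between the two phases can be illustrated by tracking the smallest Ritz value during the Lanczos process and testing whether it is closer to \(\lambda _{1}\) than \(\mu \) is. The sketch below uses a small diagonal matrix in double precision instead of the model problem of this section; the spectrum, the helper names and the full orthogonalization are assumptions for illustration, and \(\lambda _{1}\) is assumed known, which is not the case in practice.

```python
import numpy as np


def smallest_ritz_values(eigs, v, kmax):
    """theta_1^(k) for k = 1..kmax from Lanczos applied to diag(eigs)."""
    A = np.diag(eigs)
    N = len(eigs)
    V = np.zeros((N, kmax + 1))
    V[:, 0] = v / np.linalg.norm(v)
    alpha, beta, theta1 = [], [], []
    for j in range(kmax):
        w = A @ V[:, j]
        alpha.append(V[:, j] @ w)
        # Full orthogonalization (assumption, to mimic exact arithmetic).
        w = w - V[:, : j + 1] @ (V[:, : j + 1].T @ w)
        T = np.diag(alpha) + np.diag(beta, 1) + np.diag(beta, -1)
        theta1.append(np.linalg.eigvalsh(T)[0])
        b = np.linalg.norm(w)
        if b <= 1e-12 * np.max(np.abs(eigs)):
            break  # invariant subspace reached
        beta.append(b)
        V[:, j + 1] = w / b
    return np.array(theta1)


def phase(theta1, lam1, mu):
    """Phase 2 if the smallest Ritz value is closer to lam1 than mu is."""
    return 2 if theta1 - lam1 < lam1 - mu else 1


eigs = np.linspace(0.1, 10.0, 12)  # hypothetical spectrum
lam1 = eigs[0]
mu = (1 - 1e-3) * lam1
theta = smallest_ritz_values(eigs, np.ones(12), kmax=12)
phases = [phase(t, lam1, mu) for t in theta]
first_phase2 = next((k + 1 for k, ph in enumerate(phases) if ph == 2), None)
print(phases, first_phase2)
```

Choosing `mu` closer to `lam1` moves the first phase 2 iteration later, in line with the observation made above for the model problem.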

In the given experiment as well as in many practical problems, the delay of the upper bounds is not large (just a few iterations), and the bounds can still provide useful information for stopping the algorithm. However, we have also encountered examples where the delay of the Gauss-Radau upper bound was about 200 iterations; see, e.g., [1, Fig. 10] or [19, Fig. 2] concerning the matrix s3dkt3m2. Hence, we believe that this phenomenon deserves attention and explanation.

5 Analysis

The upper bounds are computed from the modified tridiagonal matrices (5) discussed in Section 2.2, that differ only in one coefficient at the position \((k+1,k+1)\). Therefore, the first step of the analysis is to understand how the choice of \(\mu \) and the validity of the condition (25) influences the value of the modified coefficient

see (8). We will compare its value to a modified coefficient for which phase 2 does not occur; see Fig. 1 for \(\mu _{50}\).

Based on that understanding we will then address further important questions. First, our aim is to explain the behavior of the basic Gauss-Radau upper bound (21) in phase 2, in particular, its closeness to the simple upper bound (18). Second, for practical reasons, without knowing \(\lambda _{1}\), we would like to be able to detect phase 2, i.e., the first iteration k for which the inequality (25) starts to hold. Finally, we address the problem of how to improve the accuracy of the basic Gauss-Radau upper bound (21) in phase 2.

We first analyze the relation between two modified coefficients \(\alpha _{k+1}^{(\mu )}\) and \(\alpha _{k+1}^{(\lambda )}\) where \(0<\mu<\lambda <\theta _{1}^{(k)}.\)

Lemma 2

Let \(0<\mu<\lambda <\theta _{1}^{(k)}\). Then

and

where

satisfies \(E_{k}^{(\lambda ,\mu )}=E_{k}^{(\mu ,\lambda )}.\)

Proof

From the definition of \(\eta _{i,k}^{(\mu )}\) and \(\eta _{i,k}^{(\lambda )}\), it follows immediately

which implies \(E_{k}^{(\lambda ,\mu )}=E_{k}^{(\mu ,\lambda )}\) and (27).

Note that \(0<\eta _{i,k}^{(\mu )}<\eta _{i,k}^{(\lambda )}\). Using (27), the difference of the coefficients \(\alpha \)’s is

which implies (28). \(\square \)
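The relations used in the proof can be checked numerically. The sketch below assumes the definition \(\eta _{i,k}^{(\mu )}=(\beta _{k}s_{k,i}^{(k)})^{2}/(\theta _{i}^{(k)}-\mu )\), from which the ratio \(\eta _{i,k}^{(\lambda )}/\eta _{i,k}^{(\mu )}=(\theta _{i}^{(k)}-\mu )/(\theta _{i}^{(k)}-\lambda )\) and the difference \(\alpha _{k+1}^{(\lambda )}-\alpha _{k+1}^{(\mu )}=(\lambda -\mu )(1+\sum _{i}(\beta _{k}s_{k,i}^{(k)})^{2}/((\theta _{i}^{(k)}-\lambda )(\theta _{i}^{(k)}-\mu )))\) follow by elementary algebra. The variable `coupling_sum` is a name introduced here and may be normalized differently from the quantity \(E_{k}^{(\lambda ,\mu )}\) of the lemma; the test matrix is hypothetical.

```python
import numpy as np


def eta_terms(T, beta_k, shift):
    """The terms (beta_k s_{k,i})^2 / (theta_i - shift), plus theta and the numerators."""
    theta, S = np.linalg.eigh(T)
    numerators = (beta_k * S[-1, :]) ** 2
    return numerators / (theta - shift), theta, numerators


# Hypothetical Jacobi matrix T_4 = tridiag(1, 2, 1) with beta_4 = 1.
k = 4
T_k = 2.0 * np.eye(k) + np.diag(np.ones(k - 1), 1) + np.diag(np.ones(k - 1), -1)
beta_k = 1.0
mu, lam = 0.1, 0.2  # 0 < mu < lam < smallest Ritz value (about 0.382)

eta_mu, theta, numerators = eta_terms(T_k, beta_k, mu)
eta_lam, _, _ = eta_terms(T_k, beta_k, lam)

alpha_mu = mu + eta_mu.sum()
alpha_lam = lam + eta_lam.sum()

# Ratio of the individual terms.
ratio_lhs = eta_lam / eta_mu
ratio_rhs = (theta - mu) / (theta - lam)

# Symmetric sum appearing in the difference of the coefficients.
coupling_sum = np.sum(numerators / ((theta - lam) * (theta - mu)))
difference_rhs = (lam - mu) * (1.0 + coupling_sum)

print(alpha_lam - alpha_mu, difference_rhs)
```

The two printed numbers should agree to rounding accuracy, and `coupling_sum` is unchanged when `mu` and `lam` are swapped, which mirrors the symmetry stated in the lemma.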

5.1 Assumptions

Let us describe in more detail the situation we are interested in. In the analysis that follows we will assume implicitly the following.

1. \(\lambda _{1}\) is well separated from \(\lambda _{2}\) so that we can use the gap theorem mentioned in Section 2.1, in particular relation (3) bounding \(\eta _{1,k}^{(\lambda _1)}\).

2. \(\mu \) is a tight underestimate to \(\lambda _{1}\) such that

$$\begin{aligned} \lambda _{1}-\mu \ll \lambda _{2}-\lambda _{1}. \end{aligned}$$(30)

3. The smallest Ritz value \(\theta _1^{(k)}\) converges to \(\lambda _1\) with increasing k so that there is an iteration index k from which

$$\begin{aligned} \theta _1^{(k)}-\lambda _1 \ll \lambda _1-\mu . \end{aligned}$$

Let us briefly comment on these assumptions. The assumption that \(\lambda _{1}\) is well separated from \(\lambda _{2}\) is used later to prove that \(\eta _{1,k}^{(\lambda _1)}\) is bounded away from zero; see (33). If there is a cluster of eigenvalues around \(\lambda _1\), one can still observe the discussed phenomenon of loss of accuracy of the upper bound, but a theoretical analysis would be much more complicated. Note that the first assumption is also often satisfied for a system matrix \(\hat{A}\) that models finite precision CG behavior, if the original matrix A has well separated eigenvalues \(\lambda _1\) and \(\lambda _2\). Using results of Greenbaum [20] we know that \(\hat{A}\) can have many eigenvalues distributed throughout tiny intervals around the eigenvalues of A. We have constructed the model matrix \(\hat{A}\) in many numerical experiments, using the procedure suggested in [20]. We found out that the constructed \(\hat{A}\) has usually clusters of eigenvalues around the larger eigenvalues of A while a smaller eigenvalue of A is usually approximated by just one eigenvalue of \(\hat{A}\). Therefore, the analysis presented below can then be applied to the matrix \(\hat{A}\) that models the finite precision CG behavior.

If \(\mu \) is not a tight underestimate, then the Gauss-Radau upper bound is usually not a very good approximation of the A-norm of the error. Then the condition (25) can hold from the beginning and phase 1 need not happen.

Finally, in theory, the smallest Ritz value need not converge to \(\lambda _1\) until the last iteration [27]. In that case, however, the Gauss-Radau upper bound does not suffer from the loss of accuracy discussed here. In practical computations, we very often observe the convergence of \(\theta _1^{(k)}\) to \(\lambda _1\). In particular, for matrices \(\hat{A}\) with clustered eigenvalues that model the finite precision behavior of CG, \(\theta _1^{(k)}\) usually approximates \(\lambda _1\) to a high relative accuracy before the A-norm of the error reaches the ultimate level of accuracy.

5.2 The modified coefficient \(\alpha _{k+1}^{(\mu )}\)

Below we would like to compare \(\alpha _{k+1}^{(\lambda _{1})}\), for which phase 2 does not occur, with \(\alpha _{k+1}^{(\mu )}\), for which phase 2 occurs; see Fig. 1. Using (27) and (30), we are able to compare the individual \(\eta \)-terms. In particular, for \(i>1\) we get

where we have used \(\theta _i^{(k)} > \lambda _2\) for \(i>1\). Therefore,

Hence, \(\alpha _{k+1}^{(\mu )}\) can significantly differ from \(\alpha _{k+1}^{(\lambda _{1})}\) only in the first term of the sum in (26) for which

If \(\theta _{1}^{(k)}\) is a better approximation to \(\lambda _{1}\) than \(\mu \) in the sense of (25), then (31) shows that \(\eta _{1,k}^{(\lambda _{1})}\) can be much larger than \(\eta _{1,k}^{(\mu )}\). As a consequence, \(\alpha _{k+1}^{(\lambda _{1})}\) can differ significantly from \(\alpha _{k+1}^{(\mu )}\). On the other hand, if \(\mu \) is chosen such that

for all k we are interested in, then phase 2 will not occur, and

since \(\mu \) is assumed to be a tight approximation to \(\lambda _1\) and \(\eta _{i,k}^{(\lambda _1)}\approx \eta _{i,k}^{(\mu )}\) for all i.

In the following we discuss phase 1 and phase 2 in more detail.

In phase 1,

and, therefore, all components \(\eta _{i,k}^{(\mu )}\) (including \(\eta _{1,k}^{(\mu )}\)) are not sensitive to small changes of \(\mu \); see (27). In other words, the coefficients \(\alpha _{k+1}^{(\mu )}\) are approximately the same for various choices of \(\mu \).

Let us denote

In fact, we can write \(\theta _{1}^{(k)}-\mu =\theta _{1}^{(k)}-\lambda _{1}+\lambda _{1}-\mu \) and use the Taylor expansion of \(1/(1+{h_k})\). It yields

Obviously, \(h_k\) is an increasing function of the iteration number k; the numerator is constant while the denominator is decreasing in absolute value. The size of \(h_k\) depends also on how well \(\mu \) approximates \(\lambda _1\). If \(\mu \) is a tight approximation to \(\lambda _1\), then, at the beginning of the CG iterations, the denominator of \(h_k\) can be large compared to the numerator, \(h_k\) is small and the right-hand side of \(1/(\theta _{1}^{(k)}-\mu )\) is almost given by \(1/(\theta _{1}^{(k)}-\lambda _{1})\), independent of \(\mu \). We observed that the first term of the sum of the \(\eta _{i,k}^{(\mu )}\) is then usually the largest one.
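The expansion referred to above can be written out as follows. The definition of \(h_k\) used here is inferred from the description in the text (a constant numerator and a denominator that decreases with k) and should be read as an assumption rather than as the authors' exact formula.

```latex
\[
  h_k \equiv \frac{\lambda_1-\mu}{\theta_1^{(k)}-\lambda_1},
  \qquad
  \frac{1}{\theta_1^{(k)}-\mu}
  = \frac{1}{\bigl(\theta_1^{(k)}-\lambda_1\bigr)\,(1+h_k)}
  = \frac{1}{\theta_1^{(k)}-\lambda_1}
    \left(1 - h_k + h_k^{2} - \cdots\right),
  \qquad 0 < h_k < 1 .
\]
```

The condition \(h_k<1\) is exactly the statement that \(\mu \) is closer to \(\lambda _{1}\) than the smallest Ritz value, i.e., that we are in phase 1. When \(h_k\ll 1\), the first term of the expansion dominates, which is why \(\eta _{1,k}^{(\mu )}\) is then almost independent of \(\mu \).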

Let us now discuss phase 2. First recall that for any \(0<\mu <\lambda _{1}\) it holds that

As before, suppose that \(\lambda _{1}\) is well separated from \(\lambda _{2}\) and that (30) holds. Phase 2 begins when \(\theta _{1}^{(k)}\) is a better approximation to \(\lambda _{1}\) than \(\mu \), i.e., when (25) holds. Since \(\theta _{1}^{(k)}\) is a tight approximation to \(\lambda _{1}\) in phase 2, (3) and (25) imply that

Therefore, using (30), \(\eta _{1,k}^{(\lambda _{1})}\) is bounded away from zero. On the other hand, (3) also implies that

and as \(\theta _{1}^{(k)}\) converges to \(\lambda _{1}\), \(\eta _{1,k}^{(\mu )}\) goes to zero. Therefore,

and the sum on the right-hand side is almost independent of \(\mu \). Note that having two values \(0<\mu<\lambda <\lambda _{1}\) such that

then one can expect that

because \(\eta _{1,k}^{(\mu )}\) and \(\eta _{1,k}^{(\lambda )}\) converge to zero and \(\eta _{i,k}^{(\mu )}\approx \eta _{i,k}^{(\lambda )}\) for \(i>1\) due to

where we have used (27) and the assumption (34). Therefore, \(\alpha _{k+1}^{(\mu )}\) is relatively insensitive to small changes of \(\mu \) and the same is true for the upper bound (21).

5.3 The coefficient \(\alpha _{k+1}\)

The coefficient \(\alpha _{k+1}\) can also be written as

and the results of Lemmas 1 and 2 are still valid, even though, in practice, \(\mu \) must be smaller than \(\lambda _{1}\). Using (28), the differences between the coefficients can be expressed as

If the smallest Ritz value \(\theta _{1}^{(k+1)}\) is close to \(\lambda _{1}\), then the second term of the right-hand side in (36) will be negligible in comparison to the first one, since

see (29), and since \(\eta _{1,k}^{(\lambda _{1})}\) is bounded away from zero; see (33). Therefore, one can expect that

The size of the term on the right-hand side is related to the speed of convergence of the smallest Ritz value \(\theta _{1}^{(k)}\) to \(\lambda _{1}\). Denoting

we obtain

For example, if the convergence of \(\theta _{1}^{(k)}\) to \(\lambda _{1}\) is superlinear, i.e., if \(\rho _{k}\rightarrow 0\), then \(\alpha _{k+1}\) and \(\alpha _{k+1}^{(\lambda _{1})}\) are close.

5.4 Numerical experiments

Let us demonstrate numerically the theoretical results described in the previous sections using our model problem. To compute the following results, we again use Matlab’s vpa arithmetic with 128 decimal digits.

We first consider \(\mu =\mu _{3}=(1-10^{-3})\lambda _{1}\) for which we have \(\lambda _{1}-\mu =10^{-9}\). The switch from phase 1 to phase 2 occurs at iteration 13. Figure 2 displays the first term \(\eta _{1,k}^{(\mu )}\) and the maximum term \(\eta _{i,k}^{(\mu )}\) as well as the sum \(\zeta _{k}^{(\mu )}\) defined by (9), see Lemma 1, as a function of the iteration number k. In phase 1 the first term \(\eta _{1,k}^{(\mu )}\) is the largest one. As predicted, after the start of phase 2, the first term is decreasing quite fast.

Let us now use \(\mu =\mu _{8}=(1-10^{-8})\lambda _{1}\) for which we have \(\lambda _{1}-\mu =10^{-14}\). The switch from phase 1 to phase 2 occurs at iteration 15; see Fig. 3. The conclusions are the same as for \(\mu _{3}\).

The behavior of the first term is completely different for \(\mu =\mu _{50}=(1-10^{-50})\lambda _{1}\), which almost corresponds to using the exact smallest eigenvalue \(\lambda _{1}\).

The maximum term of the sum is then almost always the first one; see Fig. 4. Remember that, for this value of \(\mu \), we are always in phase 1.

Finally, in Fig. 5 we present a comparison of the sums \(\zeta _{k}^{(\mu )}\) for \(\mu _{3}\), \(\mu _{8}\), and \(\mu _{50}\). We observe that from the beginning up to iteration 12, all sums visually coincide. Starting from iteration 13 we enter phase 2 for \(\mu =\mu _{3}\) and the sum \(\zeta _{k}^{(\mu _{3})}\) starts to differ significantly from the other sums, in particular from the “reference” term \(\zeta _{k}^{(\mu _{50})}\). Similarly, for \(k=15\) we enter phase 2 for \(\mu =\mu _{8}\) and \(\zeta _{k}^{(\mu _{8})}\) and \(\zeta _{k}^{(\mu _{50})}\) start to differ. We can also observe that \(\zeta _{k}^{(\mu _{3})}\) and \(\zeta _{k}^{(\mu _{8})}\) significantly differ only in iterations 13, 14, and 15, i.e., when we are in phase 2 for \(\mu =\mu _{3}\) but in phase 1 for \(\mu =\mu _{8}\). In all other iterations, \(\zeta _{k}^{(\mu _{3})}\) and \(\zeta _{k}^{(\mu _{8})}\) visually coincide.

In Fig. 6 we plot the coefficients \(\alpha _{k}^{(\mu _{3})}\), \(\alpha _{k}^{(\mu _{8})}\), \(\alpha _{k}^{(\lambda _{1})}\) and \(\alpha _{k}\), so that we can compare the observed behavior with the predicted one. Phase 2 starts for \(\mu _{3}\) at iteration 13, and for \(\mu _{8}\) at iteration 15; see also Fig. 1. For \(k\le 13\) we observe that

as explained in Section 5.2 and \(\alpha _k\) is larger. For \(k\ge 16\), the first terms \(\eta _{1,k-1}^{(\mu _{3})}\) and \(\eta _{1,k-1}^{(\mu _{8})}\) are close to zero, and, as explained in Section 5.2,

For \(k=14\) and \(k=15\), \(\alpha _{k}^{(\mu _{3})}\) and \(\alpha _{k}^{(\mu _{8})}\) can differ significantly because \(\alpha _{k}^{(\mu _{3})}\) is already in phase 2 while \(\alpha _{k}^{(\mu _{8})}\) is still in phase 1.

We can also observe that \(\alpha _{k}\) can be very close to \(\alpha _{k}^{(\lambda _{1})}\) when the smallest Ritz value \(\theta _{1}^{(k)}\) is a tight approximation to \(\lambda _{1}\), i.e., in later iterations. We know that the closeness of \(\alpha _{k}\) to \(\alpha _{k}^{(\lambda _{1})}\) depends on the speed of convergence of the smallest Ritz value to \(\lambda _{1}\); see (37) and the corresponding discussion.

6 The Gauss-Radau bound in phase 2

Our aim in this section is to investigate the relation between the basic Gauss-Radau upper bound (21) and the simple upper bound; see (18). Recall the notation

see (19). In particular, we would like to explain why the two bounds almost coincide in phase 2. Note that using (13) we obtain

and from (8) it follows

Therefore,

In the following lemma we give another expression for \(e_{k}^{T}\left( T_{k}-\mu I\right) ^{-1}e_{k}\).

Lemma 3

Let \(0<\mu <\theta _{1}^{(k)}\). Then it holds that

Proof

Since \(\left\| \mu T_{k}^{-1}\right\| <1\), we obtain using a Neumann series

so that

We now express the terms on the right-hand side using the CG coefficients and the quantities from the spectral factorization of \(T_{k}\). Using \(T_{k}=L_{k}D_{k}L_{k}^{T}\) we obtain \(e_{k}^{T}T_{k}^{-1}e_{k}=\gamma _{k-1}\). After some algebraic manipulation, see, e.g., [28, p. 1369], we get

so that

Finally,

where the diagonal entries of the diagonal matrix

have the form

Hence,

\(\square \)
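The Neumann series step used in the proof can be checked numerically. The sketch below assumes the expansion \((T_{k}-\mu I)^{-1}=\sum _{j\ge 0}\mu ^{j}T_{k}^{-(j+1)}\), valid for \(0<\mu <\theta _{1}^{(k)}\), and compares its partial sums for the entry \(e_{k}^{T}(T_{k}-\mu I)^{-1}e_{k}\) with a direct solve. The test matrix and the function names are illustrative assumptions.

```python
def solve_tridiagonal(diag, off, rhs):
    """Thomas algorithm for a symmetric positive definite tridiagonal system."""
    n = len(diag)
    c = [0.0] * n  # modified superdiagonal
    d = [0.0] * n  # modified right-hand side
    c[0] = off[0] / diag[0] if n > 1 else 0.0
    d[0] = rhs[0] / diag[0]
    for i in range(1, n):
        pivot = diag[i] - off[i - 1] * c[i - 1]
        if i < n - 1:
            c[i] = off[i] / pivot
        d[i] = (rhs[i] - off[i - 1] * d[i - 1]) / pivot
    x = [0.0] * n
    x[n - 1] = d[n - 1]
    for i in range(n - 2, -1, -1):
        x[i] = d[i] - c[i] * x[i + 1]
    return x


def shifted_inverse_entry(diag, off, mu):
    """Direct evaluation of e_k^T (T_k - mu I)^{-1} e_k."""
    n = len(diag)
    e_k = [0.0] * (n - 1) + [1.0]
    return solve_tridiagonal([a - mu for a in diag], off, e_k)[-1]


def neumann_partial_sum(diag, off, mu, terms):
    """Sum of mu^j * e_k^T T_k^{-(j+1)} e_k for j = 0, ..., terms - 1."""
    n = len(diag)
    v = [0.0] * (n - 1) + [1.0]  # v = T_k^{-j} e_k, starting with j = 0
    total = 0.0
    for j in range(terms):
        v = solve_tridiagonal(diag, off, v)  # now v = T_k^{-(j+1)} e_k
        total += mu ** j * v[-1]
    return total


# Illustrative data (an assumption): T_4 = tridiag(1, 2, 1), mu = 0.1,
# which lies below the smallest eigenvalue of T_4.
diag, off, mu = [2.0] * 4, [1.0] * 3, 0.1
direct = shifted_inverse_entry(diag, off, mu)
for terms in (1, 5, 20, 80):
    print(terms, neumann_partial_sum(diag, off, mu, terms) - direct)
```

The first term of the series is \(e_{k}^{T}T_{k}^{-1}e_{k}\), which the proof identifies with \(\gamma _{k-1}\); the remaining terms are positive and decay geometrically at a rate governed by \(\mu /\theta _{1}^{(k)}\).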

Based on the previous lemma we can now express the coefficient \(\gamma _{k}^{(\mu )}\).

Theorem 1

Let \(0<\mu <\theta _{1}^{(k)}\). Then it holds that

Proof

We start with (39). Using the previous lemma

where we have used relation (19). \(\square \)

Obviously, using (41), the basic Gauss-Radau upper bound (21) and the simple upper bound in (18) are close to each other if and only if

which can also be written as

Under the assumptions formulated in Section 5.1, in particular that \(\lambda _{1}\) is well separated from \(\lambda _{2}\), and that \(\mu \) is a tight underestimate to \(\lambda _{1}\) in the sense of (30), the sum of terms on the left-hand side of (43) can be replaced by its tight upper bound

which simplifies the explanation of the dependence of the sum in (43) on \(\mu \).

The second term in (44) is independent of \(\mu \) and its size depends only on the behavior of the underlying Lanczos process. Here

can be seen as the relative accuracy to which the ith Ritz value approximates an eigenvalue, and the size of the term

depends on the position of \(\theta _{i}^{(k)}\) relative to the smallest eigenvalue. In particular, one can expect that the term (46) can be of size \(\mathcal {O}(1)\) if \(\theta _{i}^{(k)}\) approximates one of the smallest eigenvalues, and that it is small if \(\theta _{i}^{(k)}\) approximates one of the largest eigenvalues.

Using the previous simplifications and assuming phase 2, the basic Gauss-Radau upper bound (21) and the rightmost upper bound in (18) are close to each other if and only if

From Section 5.2 we know that \(\eta _{1,k}^{(\mu )}\) goes to zero in phase 2. Hence, if

which will happen for k sufficiently large, then the first term in (47) is smaller than the term on the right-hand side.

As already mentioned, the sum of positive terms in (47) depends only on the approximation properties of the underlying Lanczos process, which are not easy to predict in general. Inspired by our model problem described in Section 4, we can only give an intuitive explanation of why the sum could be small in phase 2.

Phase 2 occurs in later CG iterations and it is related to the convergence of the smallest Ritz value to the smallest eigenvalue. If the smallest eigenvalue is well approximated by the smallest Ritz value (to a high relative accuracy), then one can expect that many eigenvalues of A are relatively well approximated by Ritz values. If the eigenvalue \(\lambda _{j}\) of A is well separated from the other eigenvalues and if it is well approximated by a Ritz value, then the corresponding term (45), which measures the relative accuracy to which \(\lambda _{j}\) is approximated, is going to be small.

In particular, in our model problem, the smallest eigenvalues are well separated from each other, and in phase 2 they are well approximated by Ritz values. Therefore, the corresponding terms (45) are small. Hence, the Ritz values that have not yet converged in phase 2 are going to approximate eigenvalues in clusters which do not correspond to the smallest eigenvalues, i.e., for which the terms (46) are small; see also Figs. 2 and 3. In our model problem, the sum of positive terms in (47) is small in phase 2 because either (45) or (46) is small. Therefore, one can expect that the validity of (47) will mainly depend on the size of the first term in (47); see Fig. 7.

The size of the sum of positive terms in (47) obviously depends on the clustering and the distribution of the eigenvalues, and we cannot guarantee in general that it will be small in phase 2. For example, it need not be small if the smallest eigenvalues of A are clustered.

7 Detection of phase 2

For our model problem it is not hard to detect phase 2 from the coefficients that are available during the computations. We first observe, see Fig. 7, that the coefficients

and the corresponding bounds (21) and (18) visually coincide from the beginning up to some iteration \(\ell _{1}\). From iteration \(\ell _{1}+1\), the Gauss-Radau upper bound (21) starts to be a much better approximation to the squared A-norm of the error than the simple upper bound (18). When phase 2 occurs, the Gauss-Radau upper bound (21) loses its accuracy and, starting from iteration \(\ell _{2}\) (approximately when (48) holds), it will again visually coincide with the simple upper bound (18). We observe that phase 2 occurs at some iteration k where the two coefficients (49) significantly differ, i.e., for \(\ell _{1}<k<\ell _{2}.\) To measure the agreement between the coefficients (49), we can use the easily computable relative distance

We will consider this relative distance to be small if it is smaller than 0.5.

Fig. 8 The behavior of the relative distance in (50) for various values of \(\mu \)

The behavior of the term in (50) for various values of \(\mu \) is shown in Fig. 8. The index \(\ell _{1}=12\) is the same for all considered values of \(\mu \). For \(\mu _{3}\) we get \(\ell _{2}=15\) (red circle), for \(\mu _{8}\) we get \(\ell _{2}=18\) (magenta circle), for \(\mu _{16}\) \(\ell _{2}=25\) (blue circle), and finally, for \(\mu _{50}\) there is no index \(\ell _{2}\).

As explained in the previous section, in more complicated cases we cannot guarantee in general that the relative distance (50) behaves as in our model problem. For example, in many practical problems we sometimes observe a staircase behavior of the A-norm of the error, where a few iterations of stagnation are followed by a few iterations of rapid convergence. In such cases, the quantity (50) can oscillate several times and it can be impossible to use it for detecting phase 2. Therefore, in general, we are not able to detect the beginning of phase 2 reliably using (50). Nevertheless, in particular cases, the formulas (41) and (50) can be helpful.

8 Upper bounds with a guaranteed accuracy

In some applications it might be of interest to obtain upper bounds on the A-norm of the error that are sufficiently accurate. From the previous sections we know that the basic Gauss-Radau upper bound at iteration k can be delayed, and, therefore, it can overestimate the quantity of interest significantly. Nevertheless, going back in the convergence history, we can easily find an iteration index \(\ell \le k\) such that for all \(0\le i \le \ell \), a sufficiently accurate upper bound can be found. To find such \(\ell \), we will use the ideas described in [12] and [19].

For integers \(k\ge j \ge \ell \ge 0\), let us denote

Denoting \(\varepsilon _{j} \equiv \Vert x - x_j \Vert _A^2\), the relation (20) takes the form

A more accurate bound at iteration \(\ell \) is obtained by replacing the last term in (51) with the basic lower or upper bound on \(\varepsilon _{k}\). In particular, the improved Gauss-Radau upper bound at iteration \(\ell \) can be defined as

and the improved Gauss lower bound is given by \(\Delta _{\ell :k}\).

To guarantee the relative accuracy of the improved Gauss-Radau upper bound, we would like to find the largest iteration index \(\ell \le k\) in the convergence history such that

where \(\tau \) is a prescribed tolerance, say, \(\tau =0.25\). Since

we can require \(\ell \le k\) to be the largest integer such that

If (54) holds, then (53) also holds. The adaptive strategy just described, which finds an index \(\ell \) giving a sufficiently accurate upper bound, is summarized in Algorithm 3.

Algorithm 3 CG with the improved Gauss-Radau upper bound.

Note that

i.e., if (54) holds, then \(\tau \) also represents an upper bound on the sum of the relative errors of the improved lower and upper bounds. In other words, if \(\ell \) is such that (54) is satisfied, then both the improved Gauss-Radau upper bound and the improved Gauss lower bound are sufficiently accurate. For a heuristic strategy focused on improving the accuracy of the Gauss lower bound, see [19].
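The selection of \(\ell \) can be sketched in a few lines. The code below is a minimal illustration, not the authors' Algorithm 3. It assumes that the Gauss terms \(\gamma _{j}\Vert r_{j}\Vert ^{2}\), \(j=0,\dots ,k\), and the basic Gauss-Radau bound on \(\varepsilon _{k}\) are already available from the CG run, that the improved lower bound on \(\varepsilon _{\ell }\) is the sum of the Gauss terms from \(\ell \) to k, and that the improved upper bound replaces the last Gauss term by the basic Gauss-Radau bound. The function name, argument names, and indexing are assumptions made for this example.

```python
def select_accurate_index(gauss_terms, radau_k, tau=0.25):
    """Find the largest ell <= k whose improved bounds are within tolerance tau.

    gauss_terms[j] is assumed to hold gamma_j * ||r_j||^2 for j = 0, ..., k.
    radau_k is assumed to be the basic Gauss-Radau upper bound on eps_k.

    Returns (ell, lower, upper), where lower and upper are the improved
    bounds on eps_ell, or None if no index satisfies the criterion.
    """
    k = len(gauss_terms) - 1
    # Upper minus lower bound; this gap is the same for every ell.
    gap = radau_k - gauss_terms[k]
    lower = 0.0
    # Walk back through the convergence history. The lower bound grows
    # as ell decreases, so the first index that passes is the largest one.
    for ell in range(k, -1, -1):
        lower += gauss_terms[ell]
        if gap <= tau * lower:
            return ell, lower, lower + gap
    return None


# Illustrative data (an assumption): geometrically decaying Gauss terms
# and a basic Gauss-Radau bound that overestimates the last term.
terms = [1.0, 0.5, 0.25, 0.125, 0.0625]
print(select_accurate_index(terms, radau_k=0.125, tau=0.25))
```

Because the gap between the improved upper and lower bounds does not depend on \(\ell \) in this formulation, the criterion only requires a running sum of the Gauss terms, so the search adds negligible cost per iteration.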

In the previous sections we have seen that the basic Gauss-Radau upper bound is delayed, in particular in phase 2. The delay of the basic Gauss-Radau upper bound can be defined as the smallest nonnegative integer j such that

Having sufficiently accurate lower and upper bounds (e.g., if (54) is satisfied), we can approximately determine the delay of the basic Gauss-Radau upper bound as the smallest j satisfying (55) where \(\varepsilon _{\ell }\) in (55) is replaced by its tight lower bound \(\Delta _{\ell :k}\).

9 Conclusions

In this paper we discussed and analyzed the behavior of the Gauss-Radau upper bound on the A-norm of the error in CG. In particular, we concentrated on the phenomenon observed during computations showing that, in later CG iterations, the upper bound loses its accuracy, it is almost independent of \(\mu \), and visually coincides with the simple upper bound. We explained that this phenomenon is closely related to the convergence of the smallest Ritz value to the smallest eigenvalue of A. It occurs when the smallest Ritz value is a better approximation to the smallest eigenvalue than the prescribed underestimate \(\mu \). We developed formulas that can be helpful in understanding this behavior. Note that the loss of accuracy of the Gauss-Radau upper bound is not directly linked to rounding errors in computations of the bound, but it is related to the finite precision behavior of the underlying Lanczos process. In more detail, the phenomenon can occur when solving linear systems with clustered eigenvalues. However, the results of finite precision CG computations can be seen (up to some small inaccuracies) as the results of the exact CG algorithm applied to a larger system with the system matrix having clustered eigenvalues. Therefore, one can expect that the discussed phenomenon can occur in practical computations not only when A has clustered eigenvalues, but also whenever orthogonality is lost in the CG algorithm.

Data Availability

All data generated or analyzed during this study are available from the corresponding author on reasonable request.

References

1. Meurant, G., Tichý, P.: Approximating the extreme Ritz values and upper bounds for the A-norm of the error in CG. Numer. Algorithms 82(3), 937–968 (2019)

2. Hestenes, M.R., Stiefel, E.: Methods of conjugate gradients for solving linear systems. J. Research Nat. Bur. Standards 49, 409–436 (1952)

3. Dahlquist, G., Eisenstat, S.C., Golub, G.H.: Bounds for the error of linear systems of equations using the theory of moments. J. Math. Anal. Appl. 37, 151–166 (1972)

4. Dahlquist, G., Golub, G.H., Nash, S.G.: Bounds for the error in linear systems. In: Semi-infinite Programming (Proc. Workshop, Bad Honnef, 1978). Lecture Notes in Control and Information Sci., vol. 15, pp. 154–172. Springer, Berlin (1979)

5. Golub, G.H., Meurant, G.: Matrices, moments and quadrature. In: Numerical Analysis 1993 (Dundee, 1993). Pitman Res. Notes Math. Ser., vol. 303, pp. 105–156. Longman Sci. Tech., Harlow (1994)

6. Golub, G.H., Meurant, G.: Matrices, moments and quadrature. II. How to compute the norm of the error in iterative methods. BIT 37(3), 687–705 (1997)

7. Golub, G.H., Strakoš, Z.: Estimates in quadratic formulas. Numer. Algorithms 8(2–4), 241–268 (1994)

8. Meurant, G.: The computation of bounds for the norm of the error in the conjugate gradient algorithm. Numer. Algorithms 16(1), 77–87 (1998)

9. Meurant, G.: Numerical experiments in computing bounds for the norm of the error in the preconditioned conjugate gradient algorithm. Numer. Algorithms 22(3–4), 353–365 (1999)

10. Meurant, G., Tichý, P.: On computing quadrature-based bounds for the \(A\)-norm of the error in conjugate gradients. Numer. Algorithms 62(2), 163–191 (2013)

11. Meurant, G., Tichý, P.: Erratum to: On computing quadrature-based bounds for the A-norm of the error in conjugate gradients. Numer. Algorithms 66(3), 679–680 (2014)

12. Strakoš, Z., Tichý, P.: On error estimation in the conjugate gradient method and why it works in finite precision computations. Electron. Trans. Numer. Anal. 13, 56–80 (2002)

13. Strakoš, Z., Tichý, P.: Error estimation in preconditioned conjugate gradients. BIT 45(4), 789–817 (2005)

14. Parlett, B.N.: The Symmetric Eigenvalue Problem. Classics in Applied Mathematics, vol. 20, p. 398. Society for Industrial and Applied Mathematics (SIAM), Philadelphia, PA (1998). Corrected reprint of the 1980 original

15. Demmel, J.W.: Applied Numerical Linear Algebra, p. 419. Society for Industrial and Applied Mathematics (SIAM), Philadelphia, PA (1997)

16. Meurant, G.: The Lanczos and Conjugate Gradient Algorithms. Software, Environments, and Tools, vol. 19, p. 365. Society for Industrial and Applied Mathematics (SIAM), Philadelphia, PA (2006)

17. Golub, G.H.: Some modified matrix eigenvalue problems. SIAM Rev. 15, 318–334 (1973)

18. Golub, G.H., Meurant, G.: Matrices, Moments and Quadrature with Applications, p. 698. Princeton University Press, USA (2010)

19. Meurant, G., Papež, J., Tichý, P.: Accurate error estimation in CG. Numer. Algorithms 88(3), 1337–1359 (2021)

20. Greenbaum, A.: Behavior of slightly perturbed Lanczos and conjugate-gradient recurrences. Linear Algebra Appl. 113, 7–63 (1989)

21. Greenbaum, A., Strakoš, Z.: Predicting the behavior of finite precision Lanczos and conjugate gradient computations. SIAM J. Matrix Anal. Appl. 13(1), 121–137 (1992)

22. O’Leary, D.P., Strakoš, Z., Tichý, P.: On sensitivity of Gauss-Christoffel quadrature. Numer. Math. 107(1), 147–174 (2007)

23. Strakoš, Z.: On the real convergence rate of the conjugate gradient method. Linear Algebra Appl. 154–156, 535–549 (1991)

24. Paige, C.C.: Accuracy and effectiveness of the Lanczos algorithm for the symmetric eigenproblem. Linear Algebra Appl. 34, 235–258 (1980)

25. Gragg, W.B., Harrod, W.J.: The numerically stable reconstruction of Jacobi matrices from spectral data. Numer. Math. 44(3), 317–335 (1984)

26. Gautschi, W.: Orthogonal Polynomials: Computation and Approximation. Oxford University Press, UK (2004)

27. Scott, D.S.: How to make the Lanczos algorithm converge slowly. Math. Comp. 33(145), 239–247 (1979)

28. Meurant, G.: On prescribing the convergence behavior of the conjugate gradient algorithm. Numer. Algorithms 84(4), 1353–1380 (2020)

Acknowledgements

The authors thank an anonymous referee for very helpful comments.

Funding

Open access publishing supported by the National Technical Library in Prague. The work of Petr Tichý was supported by the Grant Agency of the Czech Republic under grant no. 20-01074S.

Author information

Contributions

These authors contributed equally to this work.

Ethics declarations

Ethics approval and consent to participate

The authors have read the Springer journal policies on authors responsibilities (including Ethical responsibilities of authors) and submit this manuscript in accordance with those policies.

Consent for publication

We have read and understood the publishing policy, and submit this manuscript in accordance with this policy.

Competing interests

The authors declare no competing interests.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Meurant, G., Tichý, P. The behavior of the Gauss-Radau upper bound of the error norm in CG. Numer Algor 94, 847–876 (2023). https://doi.org/10.1007/s11075-023-01522-z

DOI: https://doi.org/10.1007/s11075-023-01522-z