Abstract

With the recent realization of exascale performance by Oak Ridge National Laboratory’s Frontier supercomputer, reducing communication in kernels like QR factorization has become even more imperative. Low-synchronization Gram-Schmidt methods, first introduced in Świrydowicz et al. (Numer. Lin. Alg. Appl. 28(2):e2343, 2020), have been shown to improve the scalability of the Arnoldi method in high-performance distributed computing. Block versions of low-synchronization Gram-Schmidt show further potential for speeding up algorithms, as column-batching allows for maximizing cache usage with matrix-matrix operations. In this work, low-synchronization block Gram-Schmidt variants from Carson et al. (Linear Algebra Appl. 638:150–195, 2022) are transformed into block Arnoldi variants for use in block full orthogonalization methods (BFOM) and block generalized minimal residual methods (BGMRES). An adaptive restarting heuristic is developed to handle instabilities that arise with the increasing condition number of the Krylov basis. The performance, accuracy, and stability of these methods are assessed via a flexible benchmarking tool written in MATLAB. The modularity of the tool additionally permits generalized block inner products, like the global inner product.



1 Introduction and motivation

Oak Ridge National Laboratory reported in May 2022 that its Frontier supercomputer is the first machine to have achieved true exascale performance (Footnote 1). That is, for the first time ever, a supercomputer performed more than 1 exaflop (i.e., \(10^{18}\) double-precision floating-point operations) in a single second. This astounding development is clear motivation for our work. Exascale computing is no longer a next-generation dream; it is reality, and the need for highly parallelized algorithms that take full advantage of exaflop computational potential while reducing global communication between nodes is urgent.

To this end we build on the low-synchronization (“low-sync”) Gram-Schmidt methods of Barlow [1], Świrydowicz et al. [2], Yamazaki et al. [3], Thomas et al. [4], and Bielich et al. [5], as well as our own earlier work with block versions of these methods [6, 7]. Gram-Schmidt methods are an essential backbone in orthogonalization routines like QR factorization and in iterative methods like Krylov subspace methods for linear systems, matrix functions, and matrix equations [8,9,10]. Block Krylov subspace methods in particular make better use of L3 cache via matrix-matrix operations and often feature in communication-avoiding Krylov subspace methods, such as s-step [11, 12], enlarged [13], and randomized [14] methods.

As in most realms of life, there is no such thing as a free lunch here. While low-sync variations have the potential to speed up highly parallelized implementations of Gram-Schmidt [3], they introduce new floating-point errors and thus potential instability, due to the reformulation of inner products and normalizations. Instability surfaces in the loss of orthogonality between basis vectors and can lead to breakdowns or wildly inaccurate approximations in downstream applications [15, 16]. Stability bounds for some low-sync variants have been established, but it often takes much longer to carry out a rigorous stability analysis than to derive and deploy new methods [1, 4, 6, 7]. It can also happen that a backward error bound is established and later challenged by an obscure edge case [17, 18]. With this tension in mind, we have not only extended low-sync variants of block Gram-Schmidt to block Arnoldi but also developed a benchmarking tool for the community to explore the efficiency, stability, and accuracy of these new algorithms, in a similar vein to the BlockStab comparison tool (Footnote 2) developed in tandem with a recent block Gram-Schmidt survey [7]. We refer to this new tool as LowSyncBlockArnoldi (Footnote 3) and encourage the reader to explore the tool in parallel with the text.

Established in this earlier work is the fact that block variants of low-sync Gram-Schmidt are less stable than their column-wise counterparts. However, when these skeletons are transferred to block Arnoldi and used to solve linear systems, we gain the option to restart the process. Restarting can be effective at mitigating stability issues in communication-avoiding algorithms [19, 20]. As long as each node redundantly computes residual or error estimates and checks the stability via local quantities, restarting does not introduce additional synchronization points. Furthermore, adaptive restarting allows for robustness, as we can use basic look-ahead heuristics to foresee a breakdown and salvage progress without giving up completely at the first sign of trouble.

Given the modularity of our framework, we are also able to treat generalized block inner products, as described in [21, 22]. We focus in particular on the classical and global inner products.

The paper is organized as follows. In Section 2 we summarize terms, definitions, and concepts from high-performance computing (HPC), generalized block inner products, block Gram-Schmidt algorithms, and block Krylov subspace methods with static restarting. We present new low-synchronization block Arnoldi skeletons in Section 3, and derive an adaptive restarting heuristic in Section 4. Section 5 features a more in-depth discussion of the LowSyncBlockArnoldi benchmarking tool as well as examples demonstrating how to compare different block Arnoldi variants. We summarize our findings in Section 6.

2 Background

This work is a combination of the generalized inner product framework of Frommer, Lund, and Szyld [21, 22] and the skeleton-muscle framework for block Gram-Schmidt (BGS) by Carson, Lund, Rozložník, and Thomas [6, 7]. Throughout the text, we focus on solving a linear system with multiple right-hand sides

\[ A \boldsymbol{X} = \boldsymbol{B}, \qquad (1) \]

where \(A \in {\mathbb {C}}^{n \times n}\) is large and sparse (i.e., with \(\mathcal {O}\left (n\right )\) nonzero entries) and \(\boldsymbol {B} \in {\mathbb {C}}^{n \times s}\) is a tall-skinny (i.e., s ≪ n) matrix.

We employ standard numerical linear algebra notation throughout. In particular, A∗ denotes the Hermitian transpose of A, \(\left \|\cdot \right \|\) refers to the Euclidean 2-norm, unless otherwise specified, and \({\widehat {e}_{k}}\) denotes the k th standard unit vector with the k th entry equal to 1 and all others 0.

In the following subsections, we define key concepts in HPC, block Gram-Schmidt methods, and block Krylov subspace methods.

2.1 Communication in high-performance computing

As floating-point operations have become faster and less energy-intensive, communication—the memory operations between levels of cache on a node or between parallelized processors on a network—has become a bottleneck in distributed computing. How expensive a memory operation is depends on the physical aspects of a specific system, specifically the latency, or the amount of time needed to pack and transmit a message, and the bandwidth, or how much information can be transmitted at a time. To improve algorithm performance in bandwidth-limited algorithms like Krylov subspace methods, it is therefore advantageous to increase the computational intensity, or the ratio between floating-point and memory operations [23]. We pay particular attention to synchronization points (“sync points”), i.e., the steps in an algorithm that initiate a broadcast or reduce pattern to synchronize a quantity on all processors. Reducing calls to kernels with sync points is a straightforward way to improve computational intensity [24].

Sync points in Krylov subspace methods arise primarily in the orthonormalization procedure, such as Arnoldi or Lanczos, both of which are reformulations of the Gram-Schmidt method, a standard method for orthonormalizing a basis one (block) vector at a time. For large n, vectors are typically partitioned row-wise and distributed among processors, meaning that any time an operation like an inner product or normalization is performed—which is at least once per (block) vector in Gram-Schmidt—a sync point is inevitable.

Other possibly communication-intensive kernels include applications of the operator A (Footnote 4) and applications of \(\boldsymbol {\mathcal {V}}_{m}\), an n × ms Krylov basis matrix. In LowSyncBlockArnoldi, we count each of these operations separately from sync points (block inner products and vector norms); see Section 5.

2.2 Generalized block inner products

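A block vector is a tall-skinny matrix \(\boldsymbol {X} \in {\mathbb {C}}^{n \times s}\), and a block matrix is a matrix of s × s matrices, e.g.,

\[ {\mathscr{H}} = \begin{bmatrix} H_{1,1} & \cdots & H_{1,m} \\ \vdots & \ddots & \vdots \\ H_{m,1} & \cdots & H_{m,m} \end{bmatrix} \in {\mathbb{C}}^{ms \times ms}, \qquad H_{j,k} \in {\mathbb{C}}^{s \times s}. \]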

We use a mixture of Matlab- and block-indexing notation to handle block objects. In particular, we write \(\boldsymbol {\mathcal {V}}_{k}\) to denote the first k block vectors of the block-partitioned matrix \(\boldsymbol {\mathcal {V}} = \begin {bmatrix} \boldsymbol {V}_{1} & \boldsymbol {V}_{2} & {\cdots } & \boldsymbol {V}_{m} \end {bmatrix}\) instead of \(\boldsymbol {\mathcal {V}}_{:,1:ks}\) (i.e., the first ks columns). In a similar vein, s × s block entries of \({\mathscr{H}}\) are denoted as \(H_{j,k}\) instead of as \({\mathscr{H}}_{(j-1)s+1:js,\,(k-1)s+1:ks}\). We denote block generalizations of the standard unit vectors \({\widehat {e}_{k}}\) as \(\widehat {\boldsymbol {E}}_{k} := {\widehat {e}_{k}} \otimes I_{s}\), where ⊗ is the Kronecker product and \(I_s\) is the identity matrix of size s.
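To make these indexing conventions concrete, here is a minimal NumPy sketch of our own (not code from LowSyncBlockArnoldi; the helper names `block`, `first_blocks`, and `block_unit` are hypothetical). It translates the 1-based block indices of the text into 0-based, half-open Python slices and builds \(\widehat{\boldsymbol{E}}_k\) with a Kronecker product.

```python
import numpy as np

def block(M, j, k, s):
    """s-by-s block entry M_{j,k} (1-based j, k), i.e. the Matlab slice
    M((j-1)*s+1:j*s, (k-1)*s+1:k*s) written with 0-based, half-open slices."""
    return M[(j - 1) * s : j * s, (k - 1) * s : k * s]

def first_blocks(V, k, s):
    """First k block vectors of V, i.e. its first k*s columns."""
    return V[:, : k * s]

def block_unit(k, m, s):
    """Block unit vector E_k = e_k (Kronecker) I_s, of size (m*s)-by-s."""
    e = np.zeros((m, 1))
    e[k - 1, 0] = 1.0
    return np.kron(e, np.eye(s))

H = np.arange(36.0).reshape(6, 6)      # a 3-by-3 block matrix with s = 2
print(block(H, 2, 3, 2))               # block (2, 3): rows 3-4, columns 5-6 (1-based)
print(block_unit(2, 3, 2).T @ H)       # E_2^T H extracts the second block row
```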

Blocking is a batching technique that can reduce the number of calls to the operator A applied to individual column vectors, maximize computational intensity by filling up the local cache with BLAS3 operations, and reduce the total number of sync points by performing inner products and normalization en masse [25, 26]. In the context of Krylov subspaces, blocking can also lead to enriched subspaces by sharing information across column vectors instead of treating each right-hand side as an isolated problem. How much information is shared across columns depends on the choice of block inner product.
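The gain in computational intensity from blocking can be seen from a back-of-the-envelope count. The Python sketch below is an idealized model of our own (it ignores any cache reuse a BLAS1 implementation might achieve and counts one multiply plus one add per term): computing all \(s^2\) column inner products \(x_i^* y_j\) one at a time moves about \(2ns^2\) words for \(2ns^2\) flops, whereas one block inner product \(\boldsymbol{X}^*\boldsymbol{Y}\) performs the same flops while reading each block vector only once, and needs a single reduction instead of \(s^2\).

```python
def dot_costs(n, s):
    """All s*s column inner products x_i^* y_j, each computed separately
    (BLAS1-style): every dot reads 2n words, does ~2n flops, and reduces once."""
    return {"flops": 2 * n * s * s, "words": 2 * n * s * s, "reductions": s * s}

def block_costs(n, s):
    """One block inner product X^* Y (BLAS3-style): X and Y are read once,
    the s-by-s result is written once, and a single reduction suffices."""
    return {"flops": 2 * n * s * s, "words": 2 * n * s + s * s, "reductions": 1}

def intensity(costs):
    """Computational intensity: flops per word moved."""
    return costs["flops"] / costs["words"]

n, s = 10**6, 8
print(intensity(dot_costs(n, s)), intensity(block_costs(n, s)))
print(dot_costs(n, s)["reductions"], block_costs(n, s)["reductions"])
```

For \(n = 10^6\) and \(s = 8\), this model gives 1 flop per word for the column-wise approach versus just under 8 for the blocked one, and 64 reductions versus 1.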

Let \({{\mathbb {S}}}\) be a ∗-subalgebra of \({{\mathbb {C}}}^{s \times s}\) with identity; i.e., \(I \in {{\mathbb {S}}}\) and when \( S,T \in {\mathbb {S}}\), \(\alpha \in {\mathbb {C}}\), then \(\alpha S +T, ST, S^{*} \in {\mathbb {S}}\).

Definition 1

A mapping 〈〈⋅,⋅〉〉 from \({\mathbb {C}}^{n\times s} \times {\mathbb {C}}^{n \times s}\) to \({\mathbb {S}}\) is called a block inner product onto \({\mathbb {S}}\) if it satisfies the following conditions for all \(\boldsymbol {X},\boldsymbol {Y},\boldsymbol {Z} \in {\mathbb {C}}^{n \times s}\) and \(C \in {\mathbb {S}}\):

(i) \({\mathbb {S}}\)-linearity: \(\langle\langle \boldsymbol{X}+\boldsymbol{Y}, \boldsymbol{Z}C \rangle\rangle_{\mathbb{S}} = \langle\langle \boldsymbol{X}, \boldsymbol{Z} \rangle\rangle_{\mathbb{S}} C + \langle\langle \boldsymbol{Y}, \boldsymbol{Z} \rangle\rangle_{\mathbb{S}} C\);

(ii) symmetry: \(\langle\langle \boldsymbol{X}, \boldsymbol{Y} \rangle\rangle_{\mathbb{S}} = \langle\langle \boldsymbol{Y}, \boldsymbol{X} \rangle\rangle_{\mathbb{S}}^{*}\);

(iii) definiteness: \(\langle\langle \boldsymbol{X}, \boldsymbol{X} \rangle\rangle_{\mathbb{S}}\) is positive definite if \(\boldsymbol{X}\) has full rank, and \(\langle\langle \boldsymbol{X}, \boldsymbol{X} \rangle\rangle_{\mathbb{S}} = 0\) if and only if \(\boldsymbol{X} = 0\).

Definition 2

A mapping N which maps all \(\boldsymbol {X} \in {\mathbb {C}}^{n \times s}\) with full rank to a matrix \(N(\boldsymbol {X}) \in {\mathbb {S}}\) is called a scaling quotient if for all such X, there exists \(\boldsymbol {Y} \in {\mathbb {C}}^{n \times s}\) such that X = YN(X) and \({\langle \langle \boldsymbol {Y},\boldsymbol {Y} \rangle \rangle }_{{\mathbb {S}}} = I_{s}\).

The scaling quotient is closely related to the intraorthogonalization routine discussed in Section 2.3. Block notions of orthogonality and normalization arise organically from Definitions 1 and 2.
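For the two paradigms used later, the block inner products and scaling quotients fit in a few lines. The NumPy sketch below uses the standard classical and global definitions from the generalized block inner product framework [21]; we assume these agree with the entries of Table 1, which is not reproduced here, and the function names are hypothetical. In the classical case we take \(N(\boldsymbol{X})\) to be the R factor of a thin QR factorization, so the normalized block is the Q factor.

```python
import numpy as np

def ip_classical(X, Y):
    """Classical block inner product <<X, Y>> = X^* Y, with S = C^{s x s}."""
    return X.conj().T @ Y

def ip_global(X, Y):
    """Global block inner product <<X, Y>> = trace(X^* Y) / s * I_s, with S = C I_s."""
    s = X.shape[1]
    return np.trace(X.conj().T @ Y) / s * np.eye(s)

def sq_classical(X):
    """Classical scaling quotient: X = Y N(X) with N(X) = R from a thin QR."""
    Y, N = np.linalg.qr(X)
    return Y, N

def sq_global(X):
    """Global scaling quotient: N(X) = ||X||_F / sqrt(s) * I_s (scaled Frobenius norm)."""
    s = X.shape[1]
    nu = np.linalg.norm(X, "fro") / np.sqrt(s)
    return X / nu, nu * np.eye(s)

rng = np.random.default_rng(0)
X = rng.standard_normal((50, 4))
for ip, sq in [(ip_classical, sq_classical), (ip_global, sq_global)]:
    Y, N = sq(X)
    # both checks of Definition 2: X = Y N(X) and <<Y, Y>> = I_s
    print(np.allclose(Y @ N, X), np.allclose(ip(Y, Y), np.eye(4)))
```

In the global case, \(\boldsymbol{Y}\) is merely a rescaled \(\boldsymbol{X}\); its columns are not orthogonalized against one another, consistent with the remark in Section 2.3 that no intraorthogonalization occurs in the global paradigm.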

Definition 3

Let \(\boldsymbol {X}, \boldsymbol {Y} \in {\mathbb {C}}^{n \times s}\) and \(\{\boldsymbol {X}_{j} \}_{j=1}^{m} \subset {\mathbb {C}}^{n \times s}\).

(i) \(\boldsymbol{X}, \boldsymbol{Y}\) are block orthogonal if \({\langle \langle \boldsymbol {X},\boldsymbol {Y} \rangle \rangle }_{{\mathbb {S}}} = 0_{s}\);

(ii) \(\boldsymbol{X}\) is block normalized if \(N(\boldsymbol{X}) = I_{s}\);

(iii) \(\boldsymbol{X}_1, \ldots, \boldsymbol{X}_m\) are block orthonormal if \({\langle \langle \boldsymbol {X}_{i},\boldsymbol {X}_{j} \rangle \rangle }_{{\mathbb {S}}} = \delta _{ij} I_{s}\).

A set of vectors \(\{\boldsymbol {X}_{j}\}_{j=1}^{m} \subset {\mathbb {C}}^{n \times s}\) block spans a space \({\mathscr{K}} \subseteq {\mathbb {C}}^{n \times s}\), and we write \({\mathscr{K}} = \text {span}^{\mathbb {S}}\{\boldsymbol {X}_{j}\}_{j=1}^{m}\) if

\[ {\mathscr{K}} = \left\{ \sum_{j=1}^{m} \boldsymbol{X}_j C_j : C_j \in {\mathbb{S}} \right\}. \]

The set \(\{\boldsymbol {X}_{j}\}_{j=1}^{m}\) constitutes a block orthonormal basis for \({\mathscr{K}} = \text {span}^{\mathbb {S}}\{\boldsymbol {X}_{j}\}_{j=1}^{m}\) if it is block orthonormal.

In this work, we consider only the classical and global block paradigms, described in Table 1. These paradigms represent the two extremes of information-sharing, with the classical approach maximizing information shared among columns and the global approach minimizing it; see, e.g., [22, Theorem 3.3]. Moreover, the global paradigm leads to a lower complexity per iteration in Krylov subspace methods, because what are matrix-matrix products in the classical paradigm get reduced to scaling operations in the global one. Many other paradigms are also possible; see, e.g., [27, 28].

2.3 Block Gram-Schmidt

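Block Gram-Schmidt (BGS) is a routine for orthonormalizing a set of block vectors \(\{\boldsymbol {X}_{j} \}_{j=1}^{m} \subset {\mathbb {C}}^{n \times s}\). Writing

\[ \boldsymbol{\mathcal{X}} = \begin{bmatrix} \boldsymbol{X}_1 & \boldsymbol{X}_2 & \cdots & \boldsymbol{X}_m \end{bmatrix} \in {\mathbb{C}}^{n \times ms}, \]

we define a BGS method as one that returns a block orthonormal \(\boldsymbol {\mathcal {Q}} \in {\mathbb {C}}^{n \times ms}\) and a block upper triangular \(\mathcal {R} \in {\mathbb {C}}^{ms \times ms}\) such that \(\boldsymbol{\mathcal {X}} = \boldsymbol{\mathcal {Q}} \mathcal {R}\). Important measures in the analysis of BGS methods are the condition number of \(\boldsymbol {\mathcal {X}}\),

\[ \kappa(\boldsymbol{\mathcal{X}}) := \frac{\sigma_{\max}(\boldsymbol{\mathcal{X}})}{\sigma_{\min}(\boldsymbol{\mathcal{X}})}, \qquad (2) \]

i.e., the ratio between the largest and smallest singular values of \(\boldsymbol {\mathcal {X}}\), and the loss of orthogonality (LOO),

\[ \left\| I_{ms} - \langle\langle \boldsymbol{\mathcal{Q}}, \boldsymbol{\mathcal{Q}} \rangle\rangle_{\mathbb{S}} \right\|, \qquad (3) \]

where \({\langle \langle {\cdot ,\cdot } \rangle \rangle _{{\mathbb {S}}}}\) is a generalized block inner product as described in Section 2.2, applied block-wise so that the \((i,j)\) block of \(\langle\langle \boldsymbol{\mathcal{Q}}, \boldsymbol{\mathcal{Q}} \rangle\rangle_{\mathbb{S}}\) is \(\langle\langle \boldsymbol{Q}_i, \boldsymbol{Q}_j \rangle\rangle_{\mathbb{S}}\).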
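Both measures are easy to evaluate on small test problems. The following NumPy sketch assumes real arithmetic and the classical and global inner products of Section 2.2; the helper names are hypothetical. It computes \(\kappa\) from the singular values and the LOO by assembling the block Gram matrix one \(s \times s\) block at a time and measuring its distance to the identity in the 2-norm.

```python
import numpy as np

def ip_classical(X, Y):
    return X.T @ Y

def ip_global(X, Y):
    return np.trace(X.T @ Y) / X.shape[1] * np.eye(X.shape[1])

def cond(X):
    """kappa(X): ratio of the largest to the smallest singular value."""
    sv = np.linalg.svd(X, compute_uv=False)    # returned in descending order
    return sv[0] / sv[-1]

def loo(Q, s, ip):
    """Loss of orthogonality ||I - <<Q, Q>>|| (2-norm), where the (i, j)
    s-by-s block of <<Q, Q>> is <<Q_i, Q_j>>."""
    m = Q.shape[1] // s
    G = np.zeros((m * s, m * s))
    for i in range(m):
        for j in range(m):
            G[i*s:(i+1)*s, j*s:(j+1)*s] = ip(Q[:, i*s:(i+1)*s], Q[:, j*s:(j+1)*s])
    return np.linalg.norm(np.eye(m * s) - G, 2)

rng = np.random.default_rng(2)
Q, _ = np.linalg.qr(rng.standard_normal((100, 6)))
print(loo(Q, 2, ip_classical), loo(Q, 2, ip_global), cond(np.diag([1.0, 10.0, 100.0])))
```

The double loop is quadratic in the number of blocks and is meant for diagnostics only, not for use inside a solver.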

When we discuss the stability of BGS methods, we refer to bounds on the loss of orthogonality in terms of machine precision, ε. We assume IEEE double precision here, so \(\varepsilon = \mathcal {O}\left (10^{-16}\right )\).

For categorizing BGS variants, we recycle the skeleton-muscle notation from [7, 12], where skeleton refers to the inter orthogonalization routine between block vectors, and the muscle refers to the intra orthogonalization routine between the columns of a single block vector. As a prototype, consider the Block Modified Gram-Schmidt (BMGS) skeleton, given by Algorithm 1. Here, IntraOrtho denotes a generic muscle that takes \(\boldsymbol {X} \in {\mathbb {C}}^{n \times s}\) and returns \(\boldsymbol {Q} \in {\mathbb {C}}^{n \times s}\) and \(R \in {\mathbb {C}}^{s \times s}\) such that \({\langle \langle \boldsymbol {Q},\boldsymbol {Q} \rangle \rangle } _{{\mathbb {S}}} = I_{s}\) and X = QR. For the classical paradigm, this could be any implementation of a QR factorization: a column-wise Gram-Schmidt routine, Householder QR (HouseQR), Cholesky QR (CholQR), etc. As for the global paradigm, there is only one possible muscle, given by the global scaling quotient, which effectively reduces to normalizing block vectors with a scaled Frobenius norm. Consequently, intraorthogonalization does not actually occur in the global paradigm, as the columns of block vectors are not orthogonalized with respect to one another at all.
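As a reference point for the low-sync variants later on, a BMGS skeleton with an interchangeable muscle can be sketched as follows. This is a NumPy illustration in the spirit of Algorithm 1 (which is not reproduced here), assuming real arithmetic; `bmgs`, `ip_classical`, and `house_qr` are hypothetical names, and `numpy.linalg.qr` (which wraps LAPACK's Householder QR) stands in for HouseQR. In a distributed setting, each inner product in the inner loop and each muscle call would be one sync point.

```python
import numpy as np

def bmgs(X, s, ip, intra_ortho):
    """Block Modified Gram-Schmidt skeleton.

    X: n-by-(m*s) array of m block vectors; ip(X, Y) returns an s-by-s block
    inner product; intra_ortho(W) returns (Q, R) with W = Q R and <<Q, Q>> = I.
    Returns a block orthonormal Q and block upper triangular R with X = Q R.
    """
    n, ms = X.shape
    Q = np.zeros_like(X)
    R = np.zeros((ms, ms), dtype=X.dtype)
    for k in range(ms // s):
        W = X[:, k*s:(k+1)*s].copy()
        for j in range(k):                     # one inner product per previous block
            Qj = Q[:, j*s:(j+1)*s]
            Rjk = ip(Qj, W)
            R[j*s:(j+1)*s, k*s:(k+1)*s] = Rjk
            W = W - Qj @ Rjk                   # subtract the projection immediately (MGS)
        Qk, Rkk = intra_ortho(W)               # the muscle: one more sync point
        Q[:, k*s:(k+1)*s] = Qk
        R[k*s:(k+1)*s, k*s:(k+1)*s] = Rkk
    return Q, R

def ip_classical(X, Y):
    return X.T @ Y

def house_qr(W):
    """Householder QR muscle via numpy.linalg.qr (reduced mode)."""
    return np.linalg.qr(W)

rng = np.random.default_rng(3)
X = rng.standard_normal((60, 12))
Q, R = bmgs(X, 3, ip_classical, house_qr)
print(np.allclose(Q @ R, X), np.linalg.norm(np.eye(12) - Q.T @ Q, 2))
```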

We regard a single call to either \({\langle \langle {\cdot , \cdot }\rangle \rangle _{\mathbb {S}}}\) or IntraOrtho as one sync point. For IntraOrtho, this is only realistic if single-reduce algorithms like CholQR [29] or TSQR/AllReduceQR [30, 31] are employed.
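A CholQR muscle shows why a single reduction per IntraOrtho call is attainable: the only global operation is forming the \(s \times s\) Gram matrix, and everything else is local. The NumPy sketch below (hypothetical name `chol_qr`, classical inner product, real arithmetic) also returns a failure flag. Unlike Matlab's `[R, p] = chol(G)`, `numpy.linalg.cholesky` raises `LinAlgError` instead of returning a flag, so the sketch catches the exception; a flag of this kind is what Section 4 calls the NaN-flag.

```python
import numpy as np

def chol_qr(W):
    """Cholesky QR: W = Q R with Q^T Q = I in exact arithmetic.

    Returns (Q, R, flag); flag is True if the Gram matrix is not numerically
    positive definite, in which case Q and R are None.
    """
    G = W.T @ W                          # the only global reduction
    try:
        L = np.linalg.cholesky(G)        # G = L L^T with L lower triangular
    except np.linalg.LinAlgError:
        return None, None, True
    R = L.T                              # upper triangular factor
    Q = np.linalg.solve(L, W.T).T        # Q = W R^{-1} = W L^{-T}, computed locally
    return Q, R, False

W = np.random.default_rng(4).standard_normal((100, 4))
Q, R, flag = chol_qr(W)
print(flag, np.allclose(Q @ R, W))
print(chol_qr(np.zeros((10, 2)))[2])     # zero block: singular Gram matrix raises the flag
```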

2.4 Block Krylov subspace methods

The m th block Krylov subspace for A andB (with respect to \({\mathbb {S}}\)) is defined as

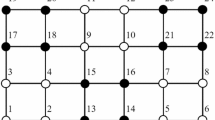

Block Arnoldi is often used to compute a basis for \({\mathscr{K}}^{{\mathbb {S}}}_{m}(A, \boldsymbol {B})\), and it is typically implemented with BMGS as the skeleton; see Algorithm 2. BMGS-Arnoldi accrues a high number of sync points due to the inner for-loop, where an increasing number of inner products is performed per block column.

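Performing m steps of a block Arnoldi routine returns the block Arnoldi relation

\[ \boldsymbol{B} = \boldsymbol{V}_1 B, \qquad A \boldsymbol{\mathcal{V}}_m = \boldsymbol{\mathcal{V}}_{m+1} {\mathscr{H}}_{m+1,m} = \boldsymbol{\mathcal{V}}_m {\mathscr{H}}_m + \boldsymbol{V}_{m+1} H_{m+1,m} \widehat{\boldsymbol{E}}_m^*, \qquad (5) \]

where \(\boldsymbol {\mathcal {V}}_{m}\) \({\mathbb {S}}\)-spans \({\mathscr{K}}^{{\mathbb {S}}}_{m}(A, \boldsymbol {B})\) and \({\mathscr{H}}_{m}\) denotes the ms × ms principal submatrix of \({\mathscr{H}}_{m+1,m}\).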
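Algorithm 2 itself is not reproduced in this text, but a BMGS-based block Arnoldi process with the classical inner product can be sketched in NumPy as follows (real arithmetic; `numpy.linalg.qr` as a Householder QR muscle; the function name is hypothetical). The usage lines check both parts of the block Arnoldi relation on a 1D Laplacian.

```python
import numpy as np

def bmgs_arnoldi(A, B, m):
    """Block Arnoldi with a BMGS skeleton and Householder QR muscle.

    Returns V (n x (m+1)s), block upper Hessenberg H ((m+1)s x ms), and the
    s-by-s factor Bfac with B = V_1 Bfac.
    """
    n, s = B.shape
    V = np.zeros((n, (m + 1) * s))
    H = np.zeros(((m + 1) * s, m * s))
    V[:, :s], Bfac = np.linalg.qr(B)
    for k in range(m):
        W = A @ V[:, k*s:(k+1)*s]
        for j in range(k + 1):                  # k+1 inner products in step k
            Vj = V[:, j*s:(j+1)*s]
            Hjk = Vj.T @ W
            H[j*s:(j+1)*s, k*s:(k+1)*s] = Hjk
            W = W - Vj @ Hjk
        # normalize the new block; its R factor is the subdiagonal block H_{k+1,k}
        V[:, (k+1)*s:(k+2)*s], H[(k+1)*s:(k+2)*s, k*s:(k+1)*s] = np.linalg.qr(W)
    return V, H, Bfac

n, s, m = 100, 2, 5
A = 2 * np.eye(n) - np.eye(n, k=1) - np.eye(n, k=-1)   # 1D Laplacian (tridiagonal)
B = np.random.default_rng(5).standard_normal((n, s))
V, H, Bfac = bmgs_arnoldi(A, B, m)
print(np.allclose(A @ V[:, : m * s], V @ H))            # A V_m = V_{m+1} H_{m+1,m}
print(np.allclose(V[:, :s] @ Bfac, B))                  # B = V_1 B
```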

2.4.1 Block full orthogonalization methods with low-rank modifications

We define

\[ \boldsymbol{X}_m := \boldsymbol{\mathcal{V}}_m \big({\mathscr{H}}_m + {\mathscr{M}}\big)^{-1} \widehat{\boldsymbol{E}}_1 B, \qquad (6) \]

where \(\widehat {\boldsymbol {E}}_{1} = \widehat {\boldsymbol {e}}_{1} \otimes I_{s}\) is a standard block unit vector, as the (modified) block full orthogonalization method (BFOM) for approximating (1). When \({\mathscr{M}} = 0\), we recover BFOM, which minimizes the error in the A-weighted \({\mathbb {S}}\)-norm for A Hermitian positive definite [21]. There are infinitely many choices for \({\mathscr{M}}\), but perhaps only a few useful ones, some of which are discussed in [22]. We will concern ourselves here with just \({\mathscr{M}} = {\mathscr{H}}_{m}^{{-*}} \big (\widehat {\boldsymbol {E}}_{m} H_{m+1,m}^{*} H_{m+1,m}\big ) \widehat {\boldsymbol {E}}_{m}^{*}\), which gives rise to a block generalized minimal residual method (BGMRES) [32,33,34]. As in [22], we implement BGMRES as a modified BFOM here, with an eye towards downstream applications like f(A)B where the BFOM form is explicitly needed. In practice, there may be computational savings with a less modular implementation; see, e.g., [35,36,37].
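The equivalence between this modification and BGMRES can be checked numerically. Since \({\mathscr{H}}_{m+1,m}\) stacks \({\mathscr{H}}_m\) on top of \(H_{m+1,m}\widehat{\boldsymbol{E}}_m^*\), one has \({\mathscr{H}}_m + {\mathscr{M}} = {\mathscr{H}}_m^{-*} {\mathscr{H}}_{m+1,m}^* {\mathscr{H}}_{m+1,m}\), so \(({\mathscr{H}}_m + {\mathscr{M}})^{-1}\widehat{\boldsymbol{E}}_1 B\) solves the normal equations of the least-squares problem \(\min_{\boldsymbol{Y}} \| \widehat{\boldsymbol{E}}_1 B - {\mathscr{H}}_{m+1,m}\boldsymbol{Y}\|_F\) (with \(\widehat{\boldsymbol{E}}_1\) padded to \((m+1)s\) rows). The NumPy sketch below (real arithmetic, classical paradigm, hypothetical name `coeffs`) forms \({\mathscr{M}}\) explicitly, which is fine for illustration but not how one would implement it at scale.

```python
import numpy as np

def coeffs(H, Bfac, s, harmonic=False):
    """Block coefficient vector Xi_m = (H_m + M)^{-1} E_1 B.

    harmonic=False: M = 0 (BFOM).
    harmonic=True:  M = H_m^{-*} E_m H_{m+1,m}^* H_{m+1,m} E_m^* (BGMRES).
    H is the (m+1)s-by-ms block upper Hessenberg matrix (real arithmetic).
    """
    ms = H.shape[1]
    Hm, Hsub = H[:ms], H[ms:, ms - s:]          # H_m and H_{m+1,m}
    E1B = np.zeros((ms, s)); E1B[:s] = Bfac
    M = np.zeros((ms, ms))
    if harmonic:
        Em = np.zeros((ms, s)); Em[ms - s:] = np.eye(s)
        M = np.linalg.solve(Hm.T, Em @ Hsub.T @ Hsub) @ Em.T
    return np.linalg.solve(Hm + M, E1B)

rng = np.random.default_rng(6)
m, s = 4, 2
# random block upper Hessenberg matrix (zero below the s-th subdiagonal),
# shifted so that H_m is safely nonsingular
H = np.triu(rng.standard_normal(((m + 1) * s, m * s)), -s) + 5 * np.eye((m + 1) * s, m * s)
Bfac = rng.standard_normal((s, s))
rhs = np.zeros(((m + 1) * s, s)); rhs[:s] = Bfac
Y_gmres = coeffs(H, Bfac, s, harmonic=True)
Y_ls = np.linalg.lstsq(H, rhs, rcond=None)[0]
print(np.allclose(Y_gmres, Y_ls))     # modified BFOM coefficients = least-squares solution
```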

2.4.2 Static restarting and cospatial factors

Restarting is a well-established technique for reconciling a growing basis with memory limitations. Define the residual of (6) as

\[ \boldsymbol{R}_m := \boldsymbol{B} - A \boldsymbol{X}_m. \qquad (7) \]

The basic idea of restarts is to use \(\boldsymbol{R}_m\) to build a new Krylov subspace, which we then use to approximate the error \(\boldsymbol {E}_{m} := A^{{-1}} \boldsymbol {B} - \boldsymbol {X}_{m}\), which solves \(A\boldsymbol{E} = \boldsymbol{R}_m\) in exact arithmetic. Building a new Krylov subspace from \(\boldsymbol{R}_m\) computed directly via (7) is undesirable, because it would require an extra application of A. Furthermore, we need a cheap, accurate, and ideally locally computable way to approximate \(\left \|\boldsymbol {R}_{m}\right \|\) from one cycle to the next in order to monitor convergence. In [22] a static restarting method for low-rank modified BFOM is introduced that satisfies these requirements. By “static,” we mean the basis size m is fixed from one restart cycle to the next, in contrast to adaptive or dynamic restart cycle lengths. We restate [22, Theorem 4.1], which enables an efficient residual approximation and restarting procedure.

Theorem 2.1

Suppose \({\mathscr{M}} = \boldsymbol {M} \widehat {\boldsymbol {E}}_{m}^{*}\), where \(\boldsymbol {M} \in {\mathbb {C}}^{ms \times s}\) and \(\widehat {\boldsymbol {E}}_{m} = \widehat {\boldsymbol {e}}_{m} \otimes I_{s}\). Define \(\boldsymbol {U}_{m} := \boldsymbol {\mathcal {V}}_{m+1} \begin {bmatrix} \boldsymbol {M} \\ -H_{m+1,m} \end {bmatrix}\) and let \(\boldsymbol {\Xi }_{m} := ({\mathscr{H}}_{m} + {\mathscr{M}})^{{-1}} \widehat {\boldsymbol {E}}_{1} B\) be the block coefficient vector for the approximation \(\boldsymbol {X}_{m} = \boldsymbol {\mathcal {V}}_{m} \boldsymbol {\Xi }_{m}\) (6) of the system (1). With \(\boldsymbol{R}_m\) as in (7), it then holds that

\[ \boldsymbol{R}_m = \boldsymbol{U}_m \widehat{\boldsymbol{E}}_m^* \boldsymbol{\Xi}_m. \qquad (8) \]

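We refer to the s × s matrix \(\widehat {\boldsymbol {E}}_{m}^{*} \boldsymbol {\Xi }_{m}\) as a cospatial factor, and to (8) as the cospatial residual relation. The term cospatial refers to the fact that the columns of \(\boldsymbol{R}_m\) and those of \(\boldsymbol{U}_m\) span the same space. Moreover, since \(\boldsymbol{\mathcal{V}}_{m+1}\) is block orthonormal, in exact arithmetic it is not hard to see that

\[ \left\| \boldsymbol{R}_m \right\|_F = \left\| \begin{bmatrix} \boldsymbol{M} \\ -H_{m+1,m} \end{bmatrix} \widehat{\boldsymbol{E}}_m^* \boldsymbol{\Xi}_m \right\|_F, \]

and the right-hand term can be computed locally (and possibly redundantly on each processor) for m ≪ n.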
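The cospatial residual relation and the cheap residual-norm formula can be verified on a small example. The self-contained NumPy sketch below (real arithmetic, classical inner product, hypothetical helper names) runs a BMGS-based block Arnoldi, forms \(\boldsymbol{\Xi}_m\) for BFOM or BGMRES, and compares the true residual \(\boldsymbol{B} - A\boldsymbol{X}_m\), which costs an extra product with \(A\), against \(\boldsymbol{U}_m \widehat{\boldsymbol{E}}_m^* \boldsymbol{\Xi}_m\) and against a norm computed from \((m+1)s \times s\) quantities only.

```python
import numpy as np

def arnoldi(A, B, m):
    """Classical block Arnoldi with a BMGS skeleton and Householder QR muscle."""
    n, s = B.shape
    V = np.zeros((n, (m + 1) * s)); H = np.zeros(((m + 1) * s, m * s))
    V[:, :s], Bfac = np.linalg.qr(B)
    for k in range(m):
        W = A @ V[:, k*s:(k+1)*s]
        for j in range(k + 1):
            Hjk = V[:, j*s:(j+1)*s].T @ W
            H[j*s:(j+1)*s, k*s:(k+1)*s] = Hjk
            W = W - V[:, j*s:(j+1)*s] @ Hjk
        V[:, (k+1)*s:(k+2)*s], H[(k+1)*s:(k+2)*s, k*s:(k+1)*s] = np.linalg.qr(W)
    return V, H, Bfac

def modification_block(H, s, harmonic):
    """The ms-by-s matrix M with modification M E_m^* (zero for BFOM)."""
    ms = H.shape[1]
    if not harmonic:
        return np.zeros((ms, s))
    Hm, Hsub = H[:ms], H[ms:, ms - s:]
    Em = np.zeros((ms, s)); Em[ms - s:] = np.eye(s)
    return np.linalg.solve(Hm.T, Em @ Hsub.T @ Hsub)    # H_m^{-*} E_m H^* H

def cospatial_check(A, B, m, harmonic=False):
    s = B.shape[1]
    V, H, Bfac = arnoldi(A, B, m)
    ms = m * s
    Mblk = modification_block(H, s, harmonic)
    E1B = np.zeros((ms, s)); E1B[:s] = Bfac
    Xi = np.linalg.solve(H[:ms] + Mblk @ np.eye(ms)[ms - s:], E1B)   # (H_m + M E_m^*)^{-1} E_1 B
    R = B - A @ (V[:, :ms] @ Xi)                  # true residual
    small = np.vstack([Mblk, -H[ms:, ms - s:]])   # [M; -H_{m+1,m}]
    cosp = Xi[ms - s:]                            # cospatial factor E_m^* Xi_m
    return R, (V @ small) @ cosp, np.linalg.norm(small @ cosp, "fro")

n, m = 80, 6
A = 2 * np.eye(n) - np.eye(n, k=1) - np.eye(n, k=-1)
B = np.random.default_rng(7).standard_normal((n, 2))
for harmonic in (False, True):
    R, R_cosp, res_small = cospatial_check(A, B, m, harmonic)
    print(np.allclose(R, R_cosp), np.isclose(np.linalg.norm(R, "fro"), res_small))
```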

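If the approximate residual norm does not meet the desired tolerance, then we can compute the Arnoldi relation for \({\mathscr{K}}^{\mathbb{S}}_{m}(A, \boldsymbol {U}_{m})\) to obtain \(\boldsymbol {\mathcal {V}}_{m+1}^{(2)}\), \({\mathscr{H}}_{m}^{(2)}\), \(H_{m+1,m}^{(2)}\), and \(B^{(2)}\), where the superscript here and later denotes association to the restarted Krylov subspace; \({\mathscr{M}}^{(2)}\) denotes the corresponding modification. We then approximate \(\boldsymbol{E}_m\) as

\[ \boldsymbol{E}_m \approx \widetilde{\boldsymbol{E}}_m := \boldsymbol{\mathcal{V}}_m^{(2)} \big({\mathscr{H}}_m^{(2)} + {\mathscr{M}}^{(2)}\big)^{-1} \widehat{\boldsymbol{E}}_1 B^{(2)} \, \widehat{\boldsymbol{E}}_m^* \boldsymbol{\Xi}_m \]

and update \(\boldsymbol{X}_m\) as

\[ \boldsymbol{X}_m^{(2)} := \boldsymbol{X}_m + \widetilde{\boldsymbol{E}}_m. \]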

The process is repeated, applying Theorem 2.1 iteratively, until the desired residual tolerance is reached.
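Putting the pieces together, a statically restarted BFOM loop only needs to carry the current \(\boldsymbol{U}\) and an accumulated cospatial factor \(F\) from one cycle to the next. The sketch below is our own minimal NumPy illustration (classical inner product, \({\mathscr{M}} = 0\), real arithmetic, hypothetical names), not the LowSyncBlockArnoldi driver. In each cycle, Arnoldi runs on the current \(\boldsymbol{U}\), the correction \(\boldsymbol{\mathcal{V}}_m \boldsymbol{\Xi}_m F\) is added to \(\boldsymbol{X}\), and \(F \leftarrow \widehat{\boldsymbol{E}}_m^* \boldsymbol{\Xi}_m F\), so that by (8) the residual stays equal to \(\boldsymbol{U} F\) in exact arithmetic.

```python
import numpy as np

def arnoldi(A, B, m):
    """Classical block Arnoldi with a BMGS skeleton and Householder QR muscle."""
    n, s = B.shape
    V = np.zeros((n, (m + 1) * s)); H = np.zeros(((m + 1) * s, m * s))
    V[:, :s], Bfac = np.linalg.qr(B)
    for k in range(m):
        W = A @ V[:, k*s:(k+1)*s]
        for j in range(k + 1):
            Hjk = V[:, j*s:(j+1)*s].T @ W
            H[j*s:(j+1)*s, k*s:(k+1)*s] = Hjk
            W = W - V[:, j*s:(j+1)*s] @ Hjk
        V[:, (k+1)*s:(k+2)*s], H[(k+1)*s:(k+2)*s, k*s:(k+1)*s] = np.linalg.qr(W)
    return V, H, Bfac

def restarted_bfom(A, B, m, tol=1e-8, max_cycles=50):
    """Statically restarted BFOM (M = 0) driven by the cospatial residual relation.

    Invariant (exact arithmetic): the current residual B - A X equals U @ F.
    Returns X, the number of cycles used, and the final residual-norm estimate.
    """
    s = B.shape[1]
    ms = m * s
    X = np.zeros_like(B)
    U, F = B.copy(), np.eye(s)
    normB = np.linalg.norm(B, "fro")
    for cycle in range(1, max_cycles + 1):
        V, H, Bfac = arnoldi(A, U, m)             # Arnoldi on K_m(A, U)
        E1B = np.zeros((ms, s)); E1B[:s] = Bfac
        Xi = np.linalg.solve(H[:ms], E1B)         # BFOM coefficients
        X = X + V[:, :ms] @ (Xi @ F)              # add the approximate error
        Hsub = H[ms:, ms - s:]                    # H_{m+1,m}
        F = Xi[ms - s:] @ F                       # updated cospatial factor
        U = -V[:, ms:] @ Hsub                     # U_m for M = 0
        est = np.linalg.norm(Hsub @ F, "fro")     # ||U F||_F, computed locally
        if est <= tol * normB:
            break
    return X, cycle, est

n = 100
A = 4 * np.eye(n) - np.eye(n, k=1) - np.eye(n, k=-1)
B = np.random.default_rng(8).standard_normal((n, 2))
X, cycles, est = restarted_bfom(A, B, m=5)
print(cycles, est, np.linalg.norm(B - A @ X, "fro"))
```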

Remark 1

The analysis in [21, 22] is carried out in exact arithmetic. Therefore, when we replace Algorithm 2 with low-sync versions in Section 3, all the results summarized in this section still hold, because all block Gram-Schmidt variants generate the same QR factorization in exact arithmetic.

3 Low-synchronization variants of block Arnoldi

To distinguish between block Arnoldi variants, we default to the name of the underlying block Gram-Schmidt skeleton. We specify a configuration as ip-skel∘(musc): inner product, skeleton, and muscle, respectively. This naturally leads to a bit of an “alphabet soup,” for which we ask the reader’s patience, as it is crucial to precisely define algorithmic configurations for benchmarking. Please refer often to Table 2, which summarizes acronyms for all the Gram-Schmidt skeletons we consider in this text. Note that low-sync methods are often described by the number of sync points per iteration, i.e., the coefficient of m in the number of sync points per cycle; e.g., BCGS-PIP is a “one-sync” method, while BMGS-SVL is a “three-sync” method.

Remark 2

The methods presented here are closely related to but not quite the same as the block methods used by Yamazaki et al. in [3], where BMGS, BCGS-PIP, and BCGSI+LS are employed as Gram-Schmidt skeletons in s-step Arnoldi (also known as communication-avoiding Arnoldi) [11, 12, 23], which is used to solve a linear system with a single right-hand side. Recall that we are solving (1), i.e., multiple right-hand sides simultaneously.

Remark 3

In the pseudocode for each algorithm, intermediate quantities like W and U are defined explicitly each iteration for readability. In general, we purposefully avoid redefining quantities in a given iteration and instead only set an output (i.e., entries in B, \(\boldsymbol {\mathcal {V}}_{m}\), or \({\mathscr{H}}_{m+1,m}\)) once all computations pertaining to that value are complete. This approach simplifies mathematical analysis. Exceptions include Algorithms 1 and 2, where W is redefined inside the for-loop as projected components are subtracted away from it. In practice, it is preferable to save storage by overwriting block vectors of \(\boldsymbol {\mathcal {V}}_{m}\) instead of allocating separate memory for W and U, for which there may not be space anyway.

3.1 BCGS-PIP and BCGS-PIO

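A simple idea for reducing the number of sync points in BMGS is to condense the for-loop in lines 4–7 of Algorithm 2 into a single inner product and subtraction,

\[ {\mathscr{H}}_{1:k,k} = \langle\langle \boldsymbol{\mathcal{V}}_k, \boldsymbol{W} \rangle\rangle_{\mathbb{S}}, \qquad \boldsymbol{W} \leftarrow \boldsymbol{W} - \boldsymbol{\mathcal{V}}_k {\mathscr{H}}_{1:k,k}. \]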

This exchange gives rise to what is commonly referred to as the block classical Gram-Schmidt (BCGS) method. It is, however, rather unstable, with a loss of orthogonality worse than \(\mathcal {O}\left (\varepsilon \right ) \kappa ^{2}([\boldsymbol {B} A\boldsymbol {\mathcal {V}}_{m}])\) [6]. By making a correction based on the block Pythagorean theorem (as derived in, e.g., [6, Section 2.1]), we can guarantee a loss of orthogonality bounded by \(\mathcal {O}\left (\varepsilon \right ) \kappa ^{2}([\boldsymbol {B} A\boldsymbol {\mathcal {V}}_{m}])\), as long as \(\mathcal {O}\left (\sqrt {\varepsilon }\right ) \kappa ([\boldsymbol {B} A\boldsymbol {\mathcal {V}}_{m}]) \leq 1\).
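The Pythagorean correction can be illustrated for plain block Gram-Schmidt, before it is transferred to Arnoldi. Assuming the previously computed blocks \(\boldsymbol{\mathcal{Q}}\) are orthonormal and \(\boldsymbol{S} = \boldsymbol{\mathcal{Q}}^*\boldsymbol{W}\), one has \(\boldsymbol{W}^*(I - \boldsymbol{\mathcal{Q}}\boldsymbol{\mathcal{Q}}^*)\boldsymbol{W} = \boldsymbol{W}^*\boldsymbol{W} - \boldsymbol{S}^*\boldsymbol{S}\), so \(\boldsymbol{S}\) and \(\boldsymbol{W}^*\boldsymbol{W}\) can come from one reduction and the diagonal factor follows from a Cholesky factorization. The NumPy sketch below is our own illustration of that idea for the classical inner product in real arithmetic (the name `bcgs_pip` is hypothetical, and it is not a transcription of Algorithm 3); a failed Cholesky factorization is reported as a flag.

```python
import numpy as np

def bcgs_pip(X, s):
    """Block classical Gram-Schmidt with the Pythagorean inner-product correction.

    Returns (Q, R, flag). If the Cholesky factorization fails for some block,
    flag is True and only the blocks computed so far are returned.
    """
    n, ms = X.shape
    Q = np.zeros_like(X)
    R = np.zeros((ms, ms))
    for k in range(ms // s):
        W = X[:, k*s:(k+1)*s]
        Qk = Q[:, :k*s]                               # previously computed blocks
        P = np.hstack([Qk, W]).T @ W                  # one reduction: [Qk^T W; W^T W]
        S, Omega = P[:k*s], P[k*s:]
        try:
            # Pythagorean step: W^T (I - Qk Qk^T) W = Omega - S^T S
            Rkk = np.linalg.cholesky(Omega - S.T @ S).T   # upper triangular
        except np.linalg.LinAlgError:
            return Q[:, :k*s], R[:k*s, :k*s], True
        R[:k*s, k*s:(k+1)*s] = S
        R[k*s:(k+1)*s, k*s:(k+1)*s] = Rkk
        Q[:, k*s:(k+1)*s] = np.linalg.solve(Rkk.T, (W - Qk @ S).T).T   # (W - Qk S) Rkk^{-1}
    return Q, R, False

rng = np.random.default_rng(9)
X = rng.standard_normal((200, 12))
Q, R, flag = bcgs_pip(X, 3)
print(flag, np.allclose(Q @ R, X), np.linalg.norm(np.eye(12) - Q.T @ Q, 2))
```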

One version of the corrected algorithm is given as Algorithm 3. The acronym “PIP” stands for “Pythagorean (variant) with Inner Product,” due to how the factor \(H_{k+1,k}\) is computed. An alternative formulation based on BCGS-PIO (where “PIO” stands for “Pythagorean with IntraOrthogonalization”) is also possible and is given as Algorithm 4. Note that in line 5, we use \(\sim \) to denote that a full block vector need not be computed or stored here, just the 2s × 2s scaling quotient Ω. For subtle reasons, BCGS-PIO appears to be less reliable in practice (see Section 4).

3.2 BMGS-SVL and BMGS-LTS

Barlow developed and analyzed one of the first stabilized low-sync Gram-Schmidt methods by using the Schreiber-Van Loan representation of products of Householder transformations [1, 38]. Under modest conditions, this method, which we denote here as BMGS-SVL, has a loss of orthogonality comparable to that of BMGS. Its success depends on tracking the loss of orthogonality via an auxiliary matrix \(\mathcal {T}\) (as defined in lines 1, 2, 6, and 9 of Algorithm 5) and using this matrix to make corrections each iteration. A closely related method is BMGS-LTS, which is identical to BMGS-SVL except that the \(\mathcal {T}\) matrix is formed via lower triangular solves instead of matrix products. A column version of BMGS-LTS was first developed by Świrydowicz et al. [2] and generalized to blocks by Carson et al. [7]. Although BMGS-LTS appears to behave identically to BMGS-SVL in practice, a formal analysis for the former remains open. We present Arnoldi versions of BMGS-SVL and BMGS-LTS as Algorithm 5, with different colors highlighting the small differences between the methods. In both methods, the main inner product in line 4 is performed as in BCGS. Meanwhile, \(\mathcal {T}\) acts as a kind of buffer, storing the loss of orthogonality per iteration, which is used in successive iterations to make small corrections to the computation in line 4. Balabanov and Grigori use a similar technique to stabilize randomized sketches of inner products, where instead of explicitly computing and storing \(\mathcal {T}\), they solve least squares problems to compute \({\mathscr{H}}_{1:k,k}\) [14, 39].
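The algebra behind forming \(\mathcal{T}\) via triangular solves can be checked in isolation. For any blocks \(\boldsymbol{Q}_1, \ldots, \boldsymbol{Q}_k\), orthonormal or not, induction on \(k\) shows that \((I - \boldsymbol{Q}_k\boldsymbol{Q}_k^*) \cdots (I - \boldsymbol{Q}_1\boldsymbol{Q}_1^*) = I - \boldsymbol{\mathcal{Q}} \mathcal{T} \boldsymbol{\mathcal{Q}}^*\) with \(\mathcal{T}^{-1} = I + \mathcal{L}\), where \(\mathcal{L}\) is the strictly block lower triangular part of \(\boldsymbol{\mathcal{Q}}^*\boldsymbol{\mathcal{Q}}\) (the inverse compact WY form). The BMGS projection, with one inner product per block, can therefore be replaced by one block inner product \(\boldsymbol{\mathcal{Q}}^*\boldsymbol{W}\) plus a local lower triangular solve. The NumPy sketch below (real arithmetic, hypothetical names) compares the two. For clarity it forms \(\boldsymbol{\mathcal{Q}}^*\boldsymbol{\mathcal{Q}}\) explicitly, whereas low-sync algorithms accumulate it incrementally and batch it with other inner products; it is not a transcription of Algorithm 5.

```python
import numpy as np

def bmgs_project(Q, W, s):
    """(I - Q_k Q_k^T) ... (I - Q_1 Q_1^T) W, one block at a time (k inner products)."""
    for j in range(Q.shape[1] // s):
        Qj = Q[:, j*s:(j+1)*s]
        W = W - Qj @ (Qj.T @ W)
    return W

def icwy_project(Q, W, s):
    """Same projection via the inverse compact WY form: W - Q T (Q^T W),
    with T^{-1} = I + (strictly block lower triangular part of Q^T Q)."""
    ks = Q.shape[1]
    blk = np.arange(ks) // s                          # block index of each column
    strictly_lower = blk[:, None] > blk[None, :]      # entries below the diagonal blocks
    Tinv = np.eye(ks) + np.where(strictly_lower, Q.T @ Q, 0.0)
    # Tinv is unit lower triangular; a dedicated triangular solver could exploit this
    return W - Q @ np.linalg.solve(Tinv, Q.T @ W)

rng = np.random.default_rng(10)
n, s, k = 60, 3, 4
Q0, _ = np.linalg.qr(rng.standard_normal((n, k * s)))
Q = Q0 + 1e-3 * rng.standard_normal((n, k * s))      # deliberately not orthonormal
W = rng.standard_normal((n, s))
print(np.allclose(bmgs_project(Q, W, s), icwy_project(Q, W, s)))
```

Replacing the triangular solve by a plain \(\boldsymbol{W} - \boldsymbol{\mathcal{Q}}\boldsymbol{\mathcal{Q}}^*\boldsymbol{W}\) (that is, BCGS) gives a visibly different result when \(\boldsymbol{\mathcal{Q}}\) has lost orthogonality, which is exactly the discrepancy that \(\mathcal{T}\) corrects.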

3.3 BMGS-CWY/BMGS-ICWY

A column-wise version of this algorithm was first presented by Świrydowicz et al. as [2, Algorithm 8]. To the best of our knowledge, we are the first to develop a block-wise formulation, which we refer to here as BMGS-CWY-Arnoldi, where CWY stands for “compact WY,” the representation of products of Householder transformations originally used to derive this algorithm. A related Arnoldi algorithm, not treated in either [2] or [4], is based on the inverse CWY (ICWY) form and is given together with BMGS-CWY in Algorithm 6.

It is important to note that BMGS-CWY-Arnoldi would not reduce to [2, Algorithm 8] or [4, Algorithm 6] for s = 1, because it has only one sync point per iteration, owing to the lack of a reorthonormalization step for \(\boldsymbol{V}_k\). Algorithm 6 was largely derived by transforming BMGS-CWY and BMGS-ICWY from [7] into a block Arnoldi routine. The most challenging part is tracking how the \(\mathcal {R}\) factor in the Gram-Schmidt formulation maps to \({\mathscr{H}}_{m+1,m}\) and determining where to scale by the off-diagonal entry \(H_{k,k-1}\) each iteration. It is also possible to compute only with \(\mathcal {R}\) and reconstruct \({\mathscr{H}}_{m+1,m}\) after \(\boldsymbol {\mathcal {V}}_{m+1}\) is finished; this approach proved to be much less stable in practice, however, due to the growing condition number of \(\mathcal {R}\).

3.4 BCGSI+LS

One of the most intriguing of all the low-sync algorithms is DCGS2 [5], referred to as CGSI+LS in [7]. This algorithm is a reformulation of reorthogonalized CGS with a single sync point per iteration, derived by “delaying” normalization to the next iteration, where operations are batched in a kind of s-step approach with two steps. The column-wise version exhibits \(\mathcal {O}({\varepsilon })\) loss of orthogonality; a rigorous proof of the backward stability bounds, however, remains open. The block version, BCGSI+LS, does not exhibit perfect \(\mathcal {O}({\varepsilon })\) LOO; see numerical results in [7].

Bielich et al. present a column-wise Arnoldi based on DCGS2 as Algorithm 4 in [5]. Our Algorithm 7 is a direct block generalization of this algorithm with slight reformulations to match the aesthetics of Algorithm 6 and the principles of Remark 3. Note that, as in Algorithm 6, we are able to compute \({\mathscr{H}}_{m}\) directly, but we must track an auxiliary matrix J and scale several quantities by \(H_{k-1,k-2}\). An alternative version of Algorithm 7 based more directly on BCGSI+LS from [7, Algorithm 7] is included in the code but not described here.

4 Adaptive restarting

Reproducibility and stability are closely intertwined. This realization is precisely the motivation for an adaptive restarting routine and can be demonstrated by a simple example.

Consider the tridiag test case from Section 5.1 with n = 100. Notably, both A and B are deterministic quantities; neither is defined with random elements. In Matlab, it is possible to specify the number of threads on which a script is executed via the built-in maxNumCompThreads function (Footnote 5). We solve AX = B with Algorithms 3 and 4 while varying the multithreading setting from 1 to 16 on a standard node of the Mechthild cluster; see the beginning of Section 5 for more details about the cluster. For both algorithms, we employ a variant of Matlab's Cholesky routine chol, which stores a flag when chol determines that a matrix is not numerically positive definite. This flag is fed to the linear solver driver of LowSyncBlockArnoldi (bfom), which halts the process when the flag is true. Throughout the following discussion, we refer to this flag as the “NaN-flag,” because ignoring it leads to computations with ill-defined quantities.

Figure 1 displays the loss of orthogonality (3) and \(\kappa ([\boldsymbol {B} A \boldsymbol {\mathcal {V}}_{k}])\) for different thread counts. The condition numbers are hardly affected by the thread count for either method, except for some slight deviation for BCGS-PIP with 16 threads. The LOO plots are more telling: for both methods, changing the thread count directly affects the LOO and how many iterations the method can compute before encountering a NaN-flag. We allowed for a maximum basis size of m = 50, but no run gets that far. BCGS-PIO with 8 threads gives up first, at 16 iterations; BCGS-PIP with 1 and 4 threads makes it all the way to 35 iterations. Among the BCGS-PIO runs, the attained LOO differs by orders of magnitude.

This situation is perplexing on the surface: the problem is static, and the same code has been run every time. The only variable is the thread count.

There are two subtle issues that affect reproducibility in this case: 1) the configuration of math kernel libraries according to the parameters of the operating system and hardware (Footnote 6), and 2) guaranteed stability bounds. As for stability bounds, it is important to note that both BCGS-PIO and BCGS-PIP have a complete backward stability analysis [6]. Both methods have \(\mathcal {O}\left (\varepsilon \right ) \kappa ^{2}([\boldsymbol {B} A \boldsymbol {\mathcal {V}}_{k}])\) loss of orthogonality, as long as \(\kappa ([\boldsymbol {B} A \boldsymbol {\mathcal {V}}_{k}]) \leq \mathcal {O}\left (\frac {1}{\sqrt {\varepsilon }}\right ) = \mathcal {O}\left (10^{8}\right )\) and as long as the IntraOrtho for BCGS-PIO behaves no worse than CholQR. (For this test, we used HouseQR, Matlab's built-in qr routine, which is unconditionally stable and therefore behaves better than CholQR [15].) For both methods, \(\kappa ([\boldsymbol {B} A \boldsymbol {\mathcal {V}}_{k}])\) exceeds \(\mathcal {O}\left (10^{8}\right )\) around iteration 15. At that point, the assumptions for the LOO bounds are no longer satisfied. The fact that either algorithm continues to compute something useful after that point is a lucky accident.

Computing \(\kappa ([\boldsymbol {B} A \boldsymbol {\mathcal {V}}_{k}])\) every iteration to check whether the LOO bounds are satisfied is not practical. We therefore propose a simple adaptive restarting regime based on whether chol raises a NaN-flag, which happens whenever chol is fed a numerically non-positive definite matrix. When a NaN-flag is raised, we give up computing a new basis vector and go back to the last safely computed basis vector, which is then used to restart. Simultaneously, the maximum basis size m is also reduced. It is possible that an algorithm exhausts its maximum allowed restarts and basis size before converging; indeed, we have observed this often for BCGS-PIP in examples not reported here. At the same time, there are many scenarios in which restarting is an adequate band-aid, thus allowing computationally cheap, one-sync algorithms like BCGS-PIP to salvage progress and converge, oftentimes faster than competitors. See Section 5 for demonstrations.
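The heuristic can be sketched as follows. This is our own minimal NumPy illustration, not the LowSyncBlockArnoldi `bfom` driver: it uses a BCGS-PIP-style Arnoldi step (classical inner product, real arithmetic), treats a `LinAlgError` from `numpy.linalg.cholesky` as the NaN-flag, keeps the \(k\) steps completed before the flag was raised, and restarts from the corresponding \(\boldsymbol{U}\) via the cospatial relation with \({\mathscr{M}} = 0\). The rule for shrinking the maximum basis size (here, setting it to \(k\)) is a hypothetical placeholder, since the text does not specify it, and the first block is normalized with Householder QR for simplicity.

```python
import numpy as np

def pip_arnoldi(A, B, m):
    """Block Arnoldi with a BCGS-PIP-style step. Returns V, H, Bfac, k, where
    k <= m is the number of steps completed before a NaN-flag (if any)."""
    n, s = B.shape
    V = np.zeros((n, (m + 1) * s)); H = np.zeros(((m + 1) * s, m * s))
    V[:, :s], Bfac = np.linalg.qr(B)
    for k in range(m):
        W = A @ V[:, k*s:(k+1)*s]
        Vk = V[:, :(k+1)*s]
        P = np.hstack([Vk, W]).T @ W               # the single sync point
        S, Omega = P[:(k+1)*s], P[(k+1)*s:]
        try:
            Hsub = np.linalg.cholesky(Omega - S.T @ S).T
        except np.linalg.LinAlgError:              # NaN-flag: keep the k safe steps
            return V, H, Bfac, k
        H[:(k+1)*s, k*s:(k+1)*s] = S
        H[(k+1)*s:(k+2)*s, k*s:(k+1)*s] = Hsub
        V[:, (k+1)*s:(k+2)*s] = np.linalg.solve(Hsub.T, (W - Vk @ S).T).T
    return V, H, Bfac, m

def adaptive_bfom(A, B, m, tol=1e-8, max_restarts=50):
    """Restarted BFOM (M = 0) whose cycle length adapts to NaN-flags."""
    s = B.shape[1]
    X = np.zeros_like(B)
    U, F = B.copy(), np.eye(s)                     # residual = U @ F
    normB = np.linalg.norm(B, "fro")
    for cycle in range(1, max_restarts + 1):
        V, H, Bfac, k = pip_arnoldi(A, U, m)
        if k == 0:                                 # no safe step at all: give up
            return X, cycle, False
        m = k                                      # hypothetical shrinking rule
        ks = k * s
        E1B = np.zeros((ks, s)); E1B[:s] = Bfac
        Xi = np.linalg.solve(H[:ks, :ks], E1B)     # BFOM on the k safe steps
        X = X + V[:, :ks] @ (Xi @ F)
        Hsub = H[ks:ks + s, ks - s:ks]             # H_{k+1,k}
        F = Xi[ks - s:] @ F                        # cospatial factor
        U = -V[:, ks:ks + s] @ Hsub                # restart block
        if np.linalg.norm(Hsub @ F, "fro") <= tol * normB:
            return X, cycle, True
    return X, max_restarts, False

n = 100
A = 4 * np.eye(n) - np.eye(n, k=1) - np.eye(n, k=-1)
B = np.random.default_rng(11).standard_normal((n, 2))
X, cycles, converged = adaptive_bfom(A, B, m=8)
print(converged, cycles, np.linalg.norm(B - A @ X, "fro"))
```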

Remark 4

The restarted framework outlined in Section 2.4.2 does not change fundamentally with adaptive cycle lengths; only the notation becomes more complicated. We omit the details here.

5 Numerical benchmarks

Our treatment of BGS and block Krylov methods is hardly exhaustive. It is not our goal to determine the optimal block Arnoldi configuration at this stage, but rather to demonstrate the functionality of a benchmarking tool for the fair comparison of possible configurations on different problems. To this end, we restrict ourselves to the options below:

- inner products: cl (classical), gl (global)

- skeletons: Table 2

- muscles: CholQR, which has \(\mathcal {O}\left (\varepsilon \right ) \kappa ^{2}\) loss of orthogonality guaranteed only for \(\mathcal {O}\left (\varepsilon \right ) \kappa ^{2} < 1\), but is a simple, single-reduce algorithm. In practice, we would recommend TSQR/AllReduceQR [30, 31], which has \(\mathcal {O}({\varepsilon })\) loss of orthogonality and the same number of sync points, but is difficult to program in Matlab due to limited parallelization and message-passing features. Other low-sync muscles are programmed in LowSyncBlockArnoldi as well, and the user can easily integrate their own. Note that BCGS-PIP does not require a muscle, and BMGS-CWY, BMGS-ICWY, and BCGSI+LS only call a muscle once, in the first iteration of a new basis. BMGS-SVL and BMGS-LTS are forced to use their column-wise counterparts MGS-SVL and MGS-LTS (both 3-sync), respectively, and global methods are forced to use the global muscle (i.e., normalization without intraorthogonalization via the scaled Frobenius norm).

- modification: none (FOM), harmonic (GMRES)
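To make the two kinds of muscle concrete, here is a minimal pure-Python sketch of CholQR and of global normalization. It is not the LowSyncBlockArnoldi code, and block vectors are stored as lists of n rows of length s. CholQR needs exactly one reduction, the Gram matrix \(X^{T}X\), followed by purely local work. The global muscle divides by \(\|X\|_F/\sqrt{s}\). We assume this scaling from the usual definition of the global block inner product; it gives \(\operatorname{trace}(Q^{T}Q)/s = 1\).

```python
import math


def gram(X):
    """G = X^T X; in a distributed setting this is the single reduction."""
    s = len(X[0])
    return [[sum(row[i] * row[j] for row in X) for j in range(s)] for i in range(s)]


def chol_upper(G):
    """Upper-triangular R with G = R^T R; raises if G is not numerically SPD."""
    s = len(G)
    R = [[0.0] * s for _ in range(s)]
    for j in range(s):
        d = G[j][j] - sum(R[k][j] ** 2 for k in range(j))
        if not d > 0.0:
            raise ValueError(f"Gram matrix not positive definite at pivot {j + 1}")
        R[j][j] = math.sqrt(d)
        for i in range(j + 1, s):
            R[j][i] = (G[j][i] - sum(R[k][j] * R[k][i] for k in range(j))) / R[j][j]
    return R


def cholqr(X):
    """CholQR: R = chol(X^T X), then Q = X R^{-1}, computed row by row (local work)."""
    R = chol_upper(gram(X))
    s = len(R)
    Q = []
    for x in X:
        q = [0.0] * s
        for j in range(s):  # forward substitution for the row equation q R = x
            q[j] = (x[j] - sum(q[k] * R[k][j] for k in range(j))) / R[j][j]
        Q.append(q)
    return Q, R


def global_normalize(X):
    """Global muscle: scale X by beta = ||X||_F / sqrt(s); no intraorthogonalization."""
    s = len(X[0])
    beta = math.sqrt(sum(v * v for row in X for v in row) / s)
    return [[v / beta for v in row] for row in X], beta
```

For example, cholqr([[1.0, 0.0], [1.0, 1.0], [0.0, 1.0]]) returns a Q whose Gram matrix equals the 2×2 identity up to rounding. global_normalize applied to the same block only rescales it, so its columns remain non-orthogonal.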

All results are generated by the LowSyncBlockArnoldi Matlab package. A single script (paper_script.m) comprises all the calls for generating the results in this manuscript. LowSyncBlockArnoldi is written as modularly as possible, to facilitate the exchange of inner products, skeletons, muscles, and modifications. While the timings reported certainly do not reflect the optimal performance for any of the methods, they do reflect a fair comparison across implementations and provide insights for possible speed-ups when these methods are ported to more complex architectures. The code is also written so that sync points (inner_prod and intra_ortho) and other potentially communication-intensive operations (matvec and basis_eval) are separate functions that can be tuned individually.

Every test script (including the example from Section 4) has been executed in Matlab R2019b on 16 threads of a single, standard node of Linux Cluster Mechthild at the Max Planck Institute for Dynamics of Complex Technical Systems in Magdeburg, Germany.Footnote 7 A standard node comprises 2 Intel Xeon Silver 4110 (Skylake) CPUs with 8 cores each (64KB L1 cache, 1024KB L2 cache), a clock rate of 2.1 GHz (3.0 GHz max), and 12MB shared L3 cache each. We further focus on small problems that easily fit in the L3 cache, which is easy to guarantee with sparse A, \(n \leq 10^{4}\), and \(s \leq 10\). Given that the latency between CPUs on a single node is small relative to that on exascale machines, we expect small improvements observed in these test cases to translate to bigger gains in a more complex setting.

For the timings, we measure the total time spent to reach a specified error tolerance. We run each test 5 times and average over the timings. We also calculate several intermediate measures, namely counts for A-calls, applications of \(\boldsymbol {\mathcal {V}}_{k}\), and sync points. In addition, we plot the convergence history in terms of the following quantities per iteration: relative residual, relative error, \(\kappa ([\boldsymbol {B} A \boldsymbol {\mathcal {V}}_{k}])\), and loss of orthogonality (LOO) (3). When a ground truth solution X∗ is provided, the error is calculated as

For all our examples, X∗ is computed by Matlab's built-in backslash operator. The residual is approximated by (9) and is scaled by \(\left \|{\boldsymbol {B}}\right \|_{F}\). A summary of the parameters for all benchmarks can be found in Table 3. Except for tridiag and lapl_2d, all examples are taken from the SuiteSparse Matrix Collection [40]. Via the suite_sparse.m script, it is possible to run tests on any benchmark from this collection.
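Three of the monitored quantities can be computed outside Matlab as in the following Python sketch, which stores blocks as lists of rows. The relative residual is scaled by \(\|\boldsymbol{B}\|_F\), as stated above. Measuring the relative error in the Frobenius norm and using \(\|I - V^{T}V\|_F\) for the classical LOO are our assumptions. We chose them as common conventions, and they may differ in detail from the paper's error formula and from (3).

```python
import math


def fro(M):
    """Frobenius norm of a matrix stored as a list of rows."""
    return math.sqrt(sum(v * v for row in M for v in row))


def relative_error(X, X_star):
    """||X - X_star||_F / ||X_star||_F (the Frobenius-norm choice is an assumption)."""
    diff = [[a - b for a, b in zip(r, t)] for r, t in zip(X, X_star)]
    return fro(diff) / fro(X_star)


def scaled_residual(R, B):
    """Residual block R (e.g., B - A X or an estimate of it) scaled by ||B||_F."""
    return fro(R) / fro(B)


def loss_of_orthogonality(V):
    """||I - V^T V||_F for a basis V with n rows and p columns (assumed classical LOO)."""
    p = len(V[0])
    return math.sqrt(sum(
        ((1.0 if i == j else 0.0) - sum(row[i] * row[j] for row in V)) ** 2
        for i in range(p) for j in range(p)))


print(loss_of_orthogonality([[1.0, 1.0], [0.0, 0.0]]))  # 1.4142135623730951
```

The final line shows the extreme case of two identical basis vectors, for which the LOO measure is of order 1.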

5.1 tridiag

The operator A is defined as a sparse, tridiagonal matrix with 1 on the off-diagonals and \(-1, -2, \ldots, -n\) on the diagonal, where n is also the size of A. Clearly, A is symmetric. The right-hand side B has two columns: the first has identical entries \(\frac {1}{\sqrt {n}}\), and the second is \(1, 2, \ldots, n\). This example is parameterized, in the sense that a user can choose any desired n. At the same time, a larger n necessarily leads to a worse condition number.
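The tridiag problem is easy to regenerate. The following Python sketch returns a matrix-free operator and the two-column right-hand side B, stored as n rows of length 2. The function names tridiag_problem and apply_to_block are ours, not the package's. For clarity we apply A one column at a time. A real implementation would use a sparse matrix and apply A to the whole block at once.

```python
import math


def tridiag_problem(n):
    """Operator A (as a function) and right-hand side B for the tridiag example.

    A has 1 on the sub- and superdiagonals and -1, -2, ..., -n on the diagonal;
    B[:, 0] has identical entries 1/sqrt(n) and B[:, 1] = 1, 2, ..., n.
    """
    diag = [-(i + 1.0) for i in range(n)]

    def apply_A(x):
        y = [diag[i] * x[i] for i in range(n)]
        for i in range(n - 1):
            y[i] += x[i + 1]  # superdiagonal entry 1
            y[i + 1] += x[i]  # subdiagonal entry 1
        return y

    B = [[1.0 / math.sqrt(n), float(i + 1)] for i in range(n)]
    return apply_A, B


def apply_to_block(apply_A, X):
    """Apply A to each column of a block X stored as a list of rows."""
    cols = [apply_A([row[j] for row in X]) for j in range(len(X[0]))]
    return [list(r) for r in zip(*cols)]


apply_A, B = tridiag_problem(3)
print(apply_A([1.0, 1.0, 1.0]))  # [0.0, 0.0, -2.0]
```

Because the diagonal grows linearly while the off-diagonals stay at 1, the spread of eigenvalues, and with it the condition number, grows with n.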

Figure 2 presents the total run time per configuration as well as operator counts as a bar chart; see Table 4 in the Appendix for more details. The fastest methods are the stabilized low-sync variants. Despite being the computationally cheapest classical method per iteration, cl-BCGS-PIP is notably slower than cl-BMGS, because its inherent instability requires restarting 3 times (and therefore additional applications of A and \(\boldsymbol {\mathcal {V}}_{k}\)) before converging. The method with the fewest \(\boldsymbol {\mathcal {V}}_{k}\) evaluations is cl-BMGS, which is to be expected, since the basis is split up and applied one block column at a time in the innermost loop; see Algorithm 1.

The fastest global method, gl-BCGS-PIP, is significantly slower than even the slowest classical method. In fact, all global methods require over 6 times as many total iterations as the fastest classical method to converge; this is in line with the theory of Section 2.4. In this particular case, the floating-point savings per iteration do not outweigh the sheer amount of time needed for all the extra A-calls. Nevertheless, the one-sync global methods (gl-BCGS-PIP, gl-BMGS-CWY, gl-BMGS-ICWY, and gl-BCGSI+LS) have relatively low sync counts, even compared to cl-BMGS.
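The per-iteration floating-point savings of global methods come from their inner product. The Python sketch below is our illustration. It uses the scaled-trace form of the global block inner product, which we assume from the global framework referred to above. The classical block inner product of two \(n \times s\) blocks forms all \(s^{2}\) column products, roughly \(2ns^{2}\) flops. The global one needs a single trace, roughly \(2ns\) flops, and yields a scalar multiple of the identity.

```python
def classical_ip(X, Y):
    """Classical block inner product X^T Y: an s-by-s matrix (~2 n s^2 flops)."""
    s = len(X[0])
    return [[sum(x[i] * y[j] for x, y in zip(X, Y)) for j in range(s)] for i in range(s)]


def global_ip(X, Y):
    """Global block inner product (trace(X^T Y) / s) * I_s (~2 n s flops).

    Only the scalar trace(X^T Y), the sum of entrywise products, must be reduced.
    """
    s = len(X[0])
    t = sum(a * b for x, y in zip(X, Y) for a, b in zip(x, y)) / s
    return [[t if i == j else 0.0 for j in range(s)] for i in range(s)]


X = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]
Y = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
print(classical_ip(X, Y))  # [[6.0, 8.0], [8.0, 10.0]]
print(global_ip(X, Y))     # [[8.0, 0.0], [0.0, 8.0]]
```

The global result keeps only the averaged diagonal of the classical one. This is why it is cheaper per iteration, and also why it extracts less information from each block, which is consistent with the extra iterations reported above.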

Figures 3 and 4 display convergence histories for a subset of the methods in Table 4 in the Appendix. The convergence histories for all global BMGS variants are very similar; we omit BMGS-SVL and BMGS-CWY, as they are visually identical to BMGS-LTS and BMGS-ICWY, respectively. BMGS is identical to BMGS-SVL and BMGS-LTS and is therefore also omitted.

Both the classical and global variants of BCGS-PIP show the adaptive restarting procedure in action and demonstrate its robustness. In the global case, the LOO exceeds \(\mathcal {O}\left (10^{-10}\right )\), and in cl-BCGS-PIP it reaches \(\mathcal {O}\left (1\right )\). Despite the loss of orthogonality, restarting allows the methods to recover and eventually converge. All other low-sync variants remain stable, only restarting once the basis size limit of m = 70 has been reached. Although hardly perceptible, BMGS-ICWY does have a slightly worse LOO than BMGS-LTS, which can be seen by zooming in on the last few iterations of the global plots in Fig. 3 or of the classical plots in Fig. 4.

We also note that the residual estimate (7) for all methods follows the same qualitative trend as the error. In the worst case, cl-BCGS-PIP, the residual is nearly 3 orders of magnitude lower than the error in some places, which could lead to premature termination. For all other methods, the difference is between 1 and 2 orders of magnitude. We would thus recommend setting the residual tolerance a couple of orders of magnitude lower in practice, to ensure that the true error is accurate enough.

5.2 1138_bus

Now we turn to a slightly more complicated matrix. The matrix A comes from a power network problem and is real and symmetric positive definite, while the entries of B are drawn randomly from the uniform distribution. Moreover, we apply an incomplete LU (ILU) preconditioner with no fill, using Matlab's built-in ilu.
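In ILU with no fill, commonly called ILU(0), the incomplete factors may be nonzero only where A is nonzero. Any fill-in that exact Gaussian elimination would create is therefore dropped. The following Python sketch is the textbook IKJ variant of ILU(0) on a small dense array, together with the two triangular solves that apply the preconditioner. It only illustrates the idea. It is not Matlab's ilu, which works on sparse storage.

```python
def ilu0(A):
    """ILU(0) in IKJ order.

    Returns one array holding U on and above the diagonal and the strictly lower
    part of the unit lower-triangular L below it. Entries outside the nonzero
    pattern of A are never updated, so no fill-in is created.
    """
    n = len(A)
    LU = [list(map(float, row)) for row in A]
    nz = [[A[i][j] != 0 for j in range(n)] for i in range(n)]
    for i in range(1, n):
        for k in range(i):
            if not nz[i][k]:
                continue
            LU[i][k] /= LU[k][k]
            for j in range(k + 1, n):
                if nz[i][j]:
                    LU[i][j] -= LU[i][k] * LU[k][j]
    return LU


def ilu0_solve(LU, r):
    """Apply the preconditioner: solve L y = r (unit diagonal), then U z = y."""
    n = len(LU)
    y = list(r)
    for i in range(n):
        y[i] -= sum(LU[i][k] * y[k] for k in range(i))
    z = y[:]
    for i in reversed(range(n)):
        z[i] = (y[i] - sum(LU[i][k] * z[k] for k in range(i + 1, n))) / LU[i][i]
    return z


A2 = [[4.0, 1.0, 1.0], [1.0, 4.0, 0.0], [1.0, 0.0, 4.0]]
print(ilu0(A2)[1][2])  # 0.0: exact LU would put -0.25 here; ILU(0) drops it
```

For a tridiagonal matrix no fill occurs, so ILU(0) coincides with the exact LU factorization. The approximation only matters for matrices with a richer sparsity pattern, such as the SuiteSparse examples here.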

Even with the preconditioner, none of the global methods converges. We adjusted the thread count to see if it would aid convergence, to no avail. This is perhaps an extreme case of [22, Theorem 3.3], wherein the global method is much less accurate than the classical method in the first cycle and cannot manage to catch up even after restarting. A preconditioner better attuned to the structure of the problem may alleviate stagnation for global methods, but we do not explore this here.

In Fig. 5 we see the performance results for the convergent classical methods; more details can be found in Table 5 in the Appendix. Most notably, the one-sync methods BMGS-CWY, BMGS-ICWY, and BCGSI+LS improve over BMGS only slightly in terms of timings. BCGS-PIP is much slower, due to a quick loss of orthogonality and the resulting need to restart more often. However, it is clear that the sync counts for all one-sync methods are drastically reduced compared to that of BMGS.

We examine the convergence histories of cl-BCGS-PIP and cl-BMGS-ICWY more closely in Fig. 6. Although not discernible on the graph, we found that cl-BCGS-PIP actually restarts every 28 iterations, meaning in the first cycle it encountered a NaN-flag and reduced the maximum basis size to m = 28 for all subsequent cycles. Instability in the first cycle thus hinders cl-BCGS-PIP greatly. On the other hand, BMGS-ICWY (as well as the other variants) is stable enough to exhaust the entire basis size allowance, which allows for further error reduction in the first cycle.

5.3 circuit_2

The next example comes from a circuit simulation problem. The matrix A is real but not symmetric or positive definite. We again apply an ILU preconditioner with no fill.

All the one-sync classical and global methods converge; their performance data are presented in Fig. 7, with further details in Table 6 in the Appendix. In fact, some global methods, like gl-BCGS-PIP, are even faster than some classical methods, because they require the same number of iterations to converge and therefore fewer floating-point operations overall.

Figure 8 demonstrates how close in accuracy the global and classical BCGS-PIP variants are for this problem. The global method even has a slightly better LOO, but it should be noted that global LOO is measured according to a different inner product than classical LOO; see Section 2.2 and (3).

5.4 rajat03

Another circuit simulation problem highlights slightly different behavior. In this case, A is again real but neither symmetric nor positive definite, and we again use an ILU preconditioner with no fill.

Figure 9 summarizes the performance results, with details given in Table 7 in the Appendix. It should be noted right away that cl-BCGS-PIP fails to converge for this problem, whereas gl-BCGS-PIP converges and takes second place in terms of the timings. More specifically, cl-BCGS-PIP encounters a NaN-flag it cannot resolve: every time it reduces the basis size, it runs into a NaN-flag again. Global methods, however, do not use Cholesky at all, so non-positive definite factors do not pose a problem; global normalization only fails if the relevant trace is numerically zero, which occurs with very low probability. Among the remaining methods, cl-BMGS-CWY shows a small improvement over cl-BMGS.
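The contrast shows up clearly on an extreme toy block with two identical columns. In this self-contained Python sketch, which is our illustration, the Cholesky factorization of the Gram matrix needed by CholQR-type muscles breaks down at the second pivot. Global normalization, by contrast, only needs \(\|X\|_F > 0\), i.e., a nonzero trace of \(X^{T}X\).

```python
import math


def first_bad_pivot(G):
    """Index p >= 1 of the first non-positive Cholesky pivot of G, or 0 if none."""
    s = len(G)
    R = [[0.0] * s for _ in range(s)]
    for j in range(s):
        d = G[j][j] - sum(R[k][j] ** 2 for k in range(j))
        if not d > 0.0:
            return j + 1
        R[j][j] = math.sqrt(d)
        for i in range(j + 1, s):
            R[j][i] = (G[j][i] - sum(R[k][j] * R[k][i] for k in range(j))) / R[j][j]
    return 0


X = [[1.0, 1.0]] * 4  # two identical columns, so X^T X = [[4, 4], [4, 4]] is singular
G = [[sum(r[i] * r[j] for r in X) for j in range(2)] for i in range(2)]
print(first_bad_pivot(G))  # 2: a Cholesky-based muscle cannot proceed

trace = G[0][0] + G[1][1]   # = ||X||_F^2 = 8, the only quantity the global muscle needs
beta = math.sqrt(trace / 2)  # scaled Frobenius norm with s = 2
print(beta)  # 2.0: global normalization still works
```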

Table 7 in the Appendix confirms that none of the methods requires restarting, however high the condition number becomes in later iterations; see also Fig. 10. It is again interesting to see how close the error and residual plots are between the global and classical methods. In fact, the residual for the global method underestimates convergence by a couple of orders of magnitude.

5.5 Kaufhold

This example treats a nearly numerically singular matrix with an extremely high condition number. Notably, the norm of A is nearly \(\mathcal {O}\left (10^{15}\right )\). The matrix is real, but neither symmetric nor positive definite, and it was designed to trigger a bug in Gaussian elimination in a 2002 version of Matlab. We again apply an ILU preconditioner with no fill.

Figure 11 shows cl-BCGS-PIP to be the fastest of the classical one-sync methods, but the improvement over cl-BMGS is small. The global methods are all much slower. A look at the convergence histories in Fig. 12 shows a stubborn error curve despite significant progress in the initial iterations. For both BCGS-PIP methods the LOO is moderately high in the first cycle, matching the high condition numbers, but the situation is not bad enough to trigger a NaN-flag, and the LOO drops after restarting.

5.6 t2d_q9

We now examine a nonlinear diffusion problem, specifically a biquadratic mesh of a temperature field. The matrix A is real but not symmetric or positive definite, and we again use an ILU preconditioner with no fill.

Figure 13 shows that both BCGS-PIP variants are the fastest overall, with cl-BMGS in second-to-last place; see Table 9 in the Appendix for more details. Interestingly, even gl-BMGS is faster than cl-BMGS in this scenario.

Both BCGSI+LS variants are rather slow in this example. Despite having just one sync per iteration, BCGSI+LS does generally have a higher complexity than its one-sync counterparts, which manifests here as a disadvantage.

The convergence behavior for the BCGS-PIP variants is given in Fig. 14. Here we see that although the global condition number varies much more than that of the classical method, the global LOO is much lower overall. This phenomenon is not unique to this example; it just happens to be more noticeable here.

5.7 lapl_2d

Our last problem is taken directly from [21, Section 5.4], a discretized two-dimensional Laplacian matrix. A is thus banded, real, and symmetric positive definite. We do not apply a preconditioner and look at all skeletons considered in the text.

Figure 15 shows the performance results; more details can be found in Table 4 in the Appendix. All one-sync classical methods except for cl-BCGSI+LS beat cl-BMGS, as do a number of global methods. The slowest classical methods are the three-sync ones, and some one-sync global methods follow behind. The fastest method, cl-BCGS-PIP, also happens to have the highest A count and the most applications of \(\boldsymbol {\mathcal {V}}_{k}\), due to its high number of restarts. Both cl-BMGS-CWY and cl-BMGS-ICWY, however, have lower sync counts, A counts, and \(\boldsymbol {\mathcal {V}}_{k}\) counts, and are very close in terms of timings.

The methods with the highest sync counts are cl-BMGS-SVL and cl-BMGS-LTS. The reason is that they cannot use CholQR as a muscle,Footnote 8 and this problem requires many iterations to converge. LowSyncBlockArnoldi is written to count sync points within the muscles as well, and with MGS-SVL and MGS-LTS each contributing 1 + 3s per call, the total number of sync points eventually passes that of cl-BMGS, which can use a communication-light muscle like CholQR.
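For a sense of scale, take a hypothetical block size \(s = 4\) (the actual parameters for lapl_2d are listed in Table 3) and count only the syncs inside the muscle. Over K muscle calls, the two 3-sync column-wise muscles then accumulate \(3sK\) more sync points than single-reduce CholQR:

```latex
\underbrace{1 + 3s}_{\text{MGS-SVL, MGS-LTS}}\Big|_{s=4} = 13
\quad\text{vs.}\quad
\underbrace{1}_{\text{CholQR}},
\qquad
K\,(1 + 3s) - K\cdot 1 = 3sK \,\Big|_{s=4} = 12K .
```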

6 Conclusions and outlook

Stability bounds and floating-point analysis are challenging to work out rigorously, and it is therefore simultaneously important to search for counterexamples and edge cases while trying to prove conjectured bounds. In general, rigorous loss of orthogonality and backward error bounds for all these methods could lead to new insights and improvements in the quest for a reliable, scalable Krylov subspace solver. Our flexible benchmarking tool can aid in that process, and it can easily be extended to accommodate new algorithm configurations, test cases, and measures.

At the same time, low-sync block Arnoldi algorithms with adaptive restarting are clearly already useful and robust enough for a wide variety of problems, especially where A is reasonably conditioned and memory limitations cap basis sizes. In every benchmark, we have observed that at least one low-sync method outperformed both the classical and global BMGS-based Arnoldi methods. More research is needed to determine which low-sync skeletons are best for which problems and architectures, particularly computational models that account not only for operation counts but also for performance variations relative to block size [26, 41, 42]. Most likely the best configuration allows for switching between skeletons and muscles depending on convergence behavior.

For scenarios where the basic adaptive restarting procedure is not sufficient to rescue convergence, it might be possible to improve the heuristics with a cheap estimate of the loss of orthogonality computed via, e.g., a randomized sketched inner product [39]. With such a cheap estimate, we could not only decrease the basis size when there are problems, but also increase it again in later cycles. Randomized algorithms themselves are known to reduce communication, and a thorough comparison and combination of the methods proposed here and in [39] could lead to powerful Krylov subspace methods well suited for exascale architectures.
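Below is a minimal sketch of the kind of cheap estimate meant here. It rests on our own assumptions: a dense Gaussian sketch S with k rows and entries of variance 1/k. It is not the algorithm of [39]. Since \(\langle Sx, Sy\rangle \approx \langle x, y\rangle\), the quantity \(\|I - (SV)^{T}(SV)\|_F\) tracks the LOO of V, but only up to a sketch distortion on the order of \(1/\sqrt{k}\) per entry. It can therefore flag a severe LOO, which is what a restarting heuristic needs, but it cannot resolve values such as \(10^{-10}\).

```python
import math
import random


def gaussian_sketch(k, n, rng):
    """Dense k-by-n sketch with i.i.d. N(0, 1/k) entries, so that E[S^T S] = I."""
    scale = 1.0 / math.sqrt(k)
    return [[rng.gauss(0.0, scale) for _ in range(n)] for _ in range(k)]


def sketched_loo(S, V):
    """Estimate ||I - V^T V||_F by ||I - (S V)^T (S V)||_F.

    V is stored as n rows of length p; S V is only k-by-p, so the final
    p-by-p Gram matrix is cheap to form and to reduce.
    """
    n, p = len(V), len(V[0])
    SV = [[sum(srow[t] * V[t][j] for t in range(n)) for j in range(p)] for srow in S]
    total = 0.0
    for i in range(p):
        for j in range(p):
            g = sum(row[i] * row[j] for row in SV)
            total += ((1.0 if i == j else 0.0) - g) ** 2
    return math.sqrt(total)


rng = random.Random(0)
n, k = 200, 100
S = gaussian_sketch(k, n, rng)
orthonormal = [[1.0 if t == j else 0.0 for j in range(2)] for t in range(n)]  # e1, e2
dependent = [[1.0 if t == 0 else 0.0] * 2 for t in range(n)]                   # e1, e1
print(sketched_loo(S, orthonormal))  # small: only the sketch distortion (true LOO is 0)
print(sketched_loo(S, dependent))    # of order 1: true LOO is sqrt(2)
```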

Global methods are unfortunately less promising. They are almost always slower than even the slowest classical method, due to requiring more cycles, and thus operator calls and sync points, to converge. However, the benchmarks do suggest that, in cases with a good preconditioner known to guarantee convergence in a few iterations, global methods may become competitive again, especially in single-node or “laptop” applications, where their reduced computational intensity per iteration is favorable.

Notes

http://www.top500.org/news/ornls-frontier-first-to-break-the-exaflop-ceiling/. Accessed 8 August 2022.

The term matvec is often used to refer to the multiplication of A with a vector. Because we will be focusing on block vectors, we refrain from this term to avoid confusion.

https://mathworks.com/help/matlab/ref/maxnumcompthreads.html. Accessed 8 August 2022.

https://www.mpi-magdeburg.mpg.de/cluster/mechthild. Accessed 8 August 2022.

References

Barlow, J.L.: Block modified Gram-Schmidt algorithms and their analysis. SIAM J. Matrix Anal. Appl. 40(4), 1257–1290 (2019). https://doi.org/10.1137/18M1197400

Świrydowicz, K., Langou, J., Ananthan, S., Yang, U., Thomas, S.: Low synchronization Gram-Schmidt and generalized minimum residual algorithms. Numer. Lin. Alg. Appl. 28(2), e2343 (2020). https://doi.org/10.1002/nla.2343

Yamazaki, I., Thomas, S., Hoemmen, M., Boman, E.G., Świrydowicz, K., Elliott, J.J.: Low-synchronization orthogonalization schemes for s-step and pipelined Krylov solvers in Trilinos. In: Proceedings of the 2020 SIAM Conference on Parallel Processing for Scientific Computing (PP), pp. 118–128 (2020). https://doi.org/10.1137/1.9781611976137.11

Thomas, S., Carson, E., Rozložník, M., Carr, A., Świrydowicz, K.: Iterated Gauss-Seidel GMRES. arXiv:2205.07805v2 (2022)

Bielich, D., Langou, J., Thomas, S., Świrydowicz, K., Yamazaki, I., Boman, E.G.: Low-synch Gram–Schmidt with delayed reorthogonalization for Krylov solvers. Parallel Comput. 112, 102940 (2022). https://doi.org/10.1016/j.parco.2022.102940

Carson, E., Lund, K., Rozložník, M.: The stability of block variants of classical Gram-Schmidt. SIAM J. Matrix Anal. Appl. 42(3), 1365–1380 (2021). https://doi.org/10.1137/21M1394424

Carson, E., Lund, K., Rozložník, M., Thomas, S.: Block Gram-Schmidt algorithms and their stability properties. Linear Algebra Appl. 638(20), 150–195 (2022). https://doi.org/10.1016/j.laa.2021.12.017

Saad, Y.: Iterative methods for sparse linear systems, 2nd edn., p. 528. SIAM (2003). https://doi.org/10.1137/1.9780898718003

Güttel, S.: Rational Krylov approximation of matrix functions: numerical methods and optimal pole selection. GAMM-Mitteilungen 36(1), 8–31 (2013). https://doi.org/10.1002/gamm.201310002

Simoncini, V.: Analysis of the rational Krylov subspace projection method for large-scale algebraic Riccati equations. SIAM J. Matrix Anal. Appl. 37(4), 1655–1674 (2016). https://doi.org/10.1137/16M1059382

Carson, E.: Communication-avoiding Krylov subspace methods in theory and practice. Ph.D. Thesis, Department of Computer Science, University of California, Berkeley (2015). http://escholarship.org/uc/item/6r91c407

Hoemmen, M.: Communication-avoiding Krylov subspace methods. Ph.D. Thesis, Department of Computer Science, University of California, Berkeley (2010). http://www2.eecs.berkeley.edu/Pubs/TechRpts/2010/EECS-2010-37.pdf

Grigori, L., Moufawad, S., Nataf, F.: Enlarged Krylov subspace conjugate gradient methods for reducing communication. SIAM J. Matrix Anal. Appl. 37(2), 744–773 (2016). https://doi.org/10.1137/140989492

Balabanov, O., Grigori, L.: Randomized block Gram-Schmidt process for solution of linear systems and eigenvalue problems. arXiv:2111.14641 (2021)

Higham, N.J.: Accuracy and stability of numerical algorithms, 2nd edn. Appl. Math., p. 663. SIAM Publications (2002). https://doi.org/10.1137/1.9780898718027

Huckle, T., Neckel, T.: Bits and bugs: a scientific and historical review of software failures in computational science. Softw. Environ. Tools, vol. 29. SIAM Publications (2019). https://doi.org/10.1137/1.9781611975567

Giraud, L., Langou, J., Rozložník, M., Van Den Eshof, J.: Rounding error analysis of the classical Gram-Schmidt orthogonalization process. Numer. Math. 101, 87–100 (2005). https://doi.org/10.1007/s00211-005-0615-4

Smoktunowicz, A., Barlow, J.L., Langou, J.: A note on the error analysis of classical Gram-Schmidt. Numer. Math. 105(2), 299–313 (2006). https://doi.org/10.1007/s00211-006-0042-1

Carson, E.: The adaptive s-step conjugate gradient method. SIAM J. Matrix Anal. Appl. 39(3), 1318–1338 (2018). https://doi.org/10.1137/16M1107942

Carson, E.C.: An adaptive s-step conjugate gradient algorithm with dynamic basis updating. Appl. Math. 65, 123–151 (2020). https://doi.org/10.21136/AM.2020.0136-19

Frommer, A., Lund, K., Szyld, D.B.: Block Krylov subspace methods for functions of matrices. Electron. Trans. Numer. Anal. 47, 100–126 (2017)

Frommer, A., Lund, K., Szyld, D.B.: Block Krylov subspace methods for functions of matrices II: modified block FOM. SIAM J. Matrix Anal. Appl. 41(2), 804–837 (2020). https://doi.org/10.1137/19M1255847

Ballard, G., Carson, E., Demmel, J.W., Hoemmen, M., Knight, N., Schwartz, O.: Communication lower bounds and optimal algorithms for numerical linear algebra. Acta Numer. 23, 1–155 (2014). https://doi.org/10.1017/S0962492914000038

Anzt, H., Boman, E.G., Falgout, R., Ghysels, P., Heroux, M., Li, X., Curfman McInnes, L., Mills, R.T., Rajamanickam, S., Rupp, K., Smith, B., Yamazaki, I., Yang, U.M.: Preparing sparse solvers for exascale computing. Philos. Trans. Royal Soc. A 378(2166), 20190053 (2020). https://doi.org/10.1098/rsta.2019.0053

Baker, A.H., Dennis, J.M., Jessup, E.R.: On improving linear solver performance: a block variant of GMRES. SIAM J. Sci. Comput. 27(5), 1608–1626 (2006). https://doi.org/10.1137/040608088

Birk, S.: Deflated shifted block Krylov subspace methods for Hermitian positive definite matrices. Ph.D. Thesis, Fakultät für Mathematik und Naturwissenschaften, Bergische Universität Wuppertal (2015). http://elpub.bib.uni-wuppertal.de/servlets/DocumentServlet?id=4880

Dreier, N.-A.: Hardware-oriented Krylov methods for high-performance computing. Ph.D. Thesis, Fachbereich Mathematik und Informatik der Mathematisch-Naturwissenschaftlichen Fakultät der Westfälische Wilhelms-Universität Münster (2020). https://www.proquest.com/docview/2607316034/abstract/A334B3B058D24AF2PQ/1

Dreier, N.-A., Engwer, C.: Strategies for the vectorized block conjugate gradients method. In: Vermolen, F.J., Vuik, C. (eds.) Numerical mathematics and advanced applications ENUMATH 2019. Lecture notes in computational science and engineering, vol. 139, pp. 381–388. Springer (2020). https://doi.org/10.1007/978-3-030-55874-1_37

Yamamoto, Y., Nakatsukasa, Y., Yanagisawa, Y., Fukaya, T.: Roundoff error analysis of the Cholesky QR2 algorithm. Electron. Trans. Numer. Anal. 44, 306–326 (2015)

Demmel, J., Grigori, L., Hoemmen, M., Langou, J.: Communication-optimal parallel and sequential QR and LU factorizations. SIAM J. Sci. Comput. 34(1), 206–239 (2012). https://doi.org/10.1137/080731992

Mori, D., Yamamoto, Y., Zhang, S.L.: Backward error analysis of the AllReduce algorithm for householder QR decomposition. Jpn. J. Ind. Appl. Math. 29(1), 111–130 (2012). https://doi.org/10.1007/s13160-011-0053-x

Simoncini, V.: Ritz and Pseudo-Ritz values using matrix polynomials. Linear Algebra Appl. 241-243, 787–801 (1996). https://doi.org/10.1016/0024-3795(95)00682-6

Simoncini, V., Gallopoulos, E.: Convergence properties of block GMRES and matrix polynomials. Linear Algebra Appl. 247, 97–119 (1996). https://doi.org/10.1016/0024-3795(95)00093-3

Simoncini, V., Gallopoulos, E.: A hybrid block GMRES method for nonsymmetric systems with multiple right-hand sides. J. Comput. Appl. Math. 66, 457–469 (1996). https://doi.org/10.1016/0377-0427(95)00198-0

Gutknecht, M.H.: Block Krylov space methods for linear systems with multiple right-hand sides: an introduction. In: Siddiqi, A.H., Duff, I.S., Christensen, O. (eds.) Mod. math. model. methods algorithms real world syst, pp. 420–447. Anamaya, New Delhi (2007)

Gutknecht, M.H., Schmelzer, T.: Updating the QR decomposition of block tridiagonal and block Hessenberg matrices. Appl. Numer. Math. 58(6), 871–883 (2008). https://doi.org/10.1016/j.apnum.2007.04.010

Gutknecht, M.H., Schmelzer, T.: The block grade of a block Krylov space. Linear Algebra Appl. 430, 174–185 (2009). https://doi.org/10.1016/j.laa.2008.07.008

Schreiber, R., Van Loan, C.: A storage-efficient WY representation for products of householder transformations. SIAM J. Sci. Statist. Comput. 10(1), 53–57 (1989). https://doi.org/10.1137/0910005

Balabanov, O., Grigori, L.: Randomized Gram–Schmidt process with application to GMRES. SIAM J. Sci. Comput. 44(3), 1450–1474 (2022). https://doi.org/10.1137/20M138870X

Davis, T.A., Hu, Y.: The University of Florida sparse matrix collection. ACM Trans. Math. Softw. 38(1), 1–25 (2011). https://doi.org/10.1145/2049662.2049663

Boman, E.G., Higgins, A.J., Szyld, D.B.: Optimal size of the block in block GMRES on GPUs: computational model and experiments. e-print 22-04-30, department of mathematics, Temple University, Philadelphia, PA. https://www.math.temple.edu/szyld/reports/BGMRES_GPU_rev.report.pdf (2022)

Parks, M. L., Soodhalter, K. M., Szyld, D. B.: A block recycled GMRES method with investigations into aspects of solver performance. arXiv:1604.01713v1 (2016)

Acknowledgements

The author is indebted to Stéphane Gaudreault, Teodor Nikolov, and Erin Carson for stimulating discussions that inspired this work. The author is also grateful to Jens Saak and Martin Köhler for answering questions about the Mechthild cluster and multithreading in MATLAB and to two anonymous reviewers for their constructive feedback.

Funding

Open Access funding enabled and organized by Projekt DEAL. K. Lund is a contracted employee of Max Planck Institute for Dynamics of Complex Technical Systems and did not receive any additional funding to support this project.

Ethics declarations

Ethics approval and consent to participate

The author certifies that this manuscript has been submitted to only one journal at this time, that the work is original, and that the results are not fabricated or skewed. The work is entirely the author’s own, and to the best of the author’s ability, the work is complete in its own right and without error or misappropriation.

Consent for publication

As the sole author, K. Lund provides consent for publication.

Competing interests

The author declares no competing interests.

Additional information

Author contribution

K. Lund is the sole author of the manuscript and associated code.

Availability of supporting data

All code and scripts to reproduce plots can be found at https://gitlab.mpi-magdeburg.mpg.de/lund/low-sync-block-arnoldi.

Human and animal ethics

Not applicable

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendix. Raw data from tests

A subset of raw data corresponding to the performance plots in Section 5 is provided below. Many headers are abbreviated for space reasons: “Accel.” refers to “acceleration” or “speed-up”; “Ct.” refers to “Count”; and “Iter.” refers to “Iteration”.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Lund, K. Adaptively restarted block Krylov subspace methods with low-synchronization skeletons. Numer Algor 93, 731–764 (2023). https://doi.org/10.1007/s11075-022-01437-1

DOI: https://doi.org/10.1007/s11075-022-01437-1

Keywords

- Gram-Schmidt

- Krylov subspace methods

- Arnoldi method

- Block methods

- Stability

- Loss of orthogonality

- Low-synchronization methods

- High-performance computing

- Communication-avoiding methods