Abstract

In this paper, we present modifications of the iterative hard thresholding (IHT) method for recovery of jointly row-sparse and low-rank matrices. In particular, a Riemannian version of IHT is considered which significantly reduces computational cost of the gradient projection in the case of rank-one measurement operators, which have concrete applications in blind deconvolution. Experimental results are reported that show near-optimal recovery for Gaussian and rank-one measurements, and that adaptive stepsizes give crucial improvement. A Riemannian proximal gradient method is derived for the special case of unknown sparsity.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Since the seminal works on compressive sensing by Candès, Romberg, and Tao [7] and by Donoho [9], the question of recovering structured signals from subsampled random measurements has received significant attention. Two structural models of fundamental importance in applications are sparse signals and low-rank matrices. A sparse signal is one that can be well approximated by a linear combination of just a few elements in a given basis or dictionary, and has proven to be appropriate for example in magnetic resonance imaging or remote sensing. Low-rank matrix models have been successful, e.g., for recommender systems and in applications related to phase retrieval and wireless communication. In these last two areas, the low-rank model arises from lifting, that is, a quadratic or bilinear measurement is equivalently expressed as a linear function acting on the rank-one matrix formed from the outer product of the two inputs. Consequently, combined with a sparsity assumption for the underlying signal (or signals), this entails that the matrix to be recovered is simultaneously of low rank and row and/or column sparse.

In this paper, we focus on the low rank and row sparse scenario. Such a model arises for example in wireless communication as follows. When an encoded message is transmitted via an unknown channel, the received signal can be modeled as the convolution of the encoded message vector with a channel vector. For this vector, sparsity can be assumed when only few transmission paths are active. The goal is then to estimate both the message and the sparse channel vector from the received signal. This problem of blind deconvolution can be recast into a recovery problem for a row-sparse rank-one matrix from linear measurements (see Section 4.2). For a subspace model instead of a sparsity model (that is, when the active transmission paths are assumed to be known), a number of recent works have discussed solution strategies, including lifting [3, 21] and nonconvex methods [23]. Subsequently, these methods have been generalized to the more difficult case of multiple simultaneous transmissions [18, 25, 26], but again only for subspace models.

To make our model precise, we consider the space \(\mathbb {R}^{M \times N}\) of \(M \times N\) matrices and denote by \(\Vert X \Vert _{0}\) the number of nonzero rows of X. If \(\Vert X \Vert _{0} \le s\), we say that X is row s-sparse. The set of row s-sparse matrices is denoted by

The set of matrices of rank at most k is denoted by

In this paper, we focus on the intersection of these sets,

Throughout, we assume that

since otherwise the low-rank constraint is void. The problem we then consider is to recover a given matrix \(X \in \mathcal M_{k,s}\) from m linear measurements

where \(A_{1},\dots ,A_{m} \in \mathbb R^{M \times N}\), and \(\langle \cdot , \cdot \rangle _{F}\) is the usual Frobenius inner product. With a corresponding linear operator \({\mathbf {A}} : \mathbb {R}^{M \times N} \rightarrow \mathbb {R}^{m}\), this can be formulated as solving the problem

for a given \(y \in \mathbb {R}^{m}\).

The two simultaneous structural constraints defining \(\mathcal M_{k,s}\) significantly reduce the degrees of freedom and allow for injectivity of \(\mathbf {A}\) on \(\mathcal M_{k,s}\) given a considerably smaller number of measurements m as compared to constraining only on one of the two sets \(\mathcal M_{k}\) or \(\mathcal N_{s}\). Injectivity properties have been shown to require only \(O(k(s+N))\) measurements in various scenarios [10, 24]; for generic measurement operators, precise conditions on the number of measurements are known [19].

At the same time, the simultaneous objectives make it harder to practically recover at near-minimal sampling complexity. In particular, while both the low-rank and the sparsity objective on its own admit tractable convex relaxations with recovery guarantees under random measurements, it has been shown that no linear combination of these two objectives allows for comparable guarantees for the joint objective [29], see also [20]. Greedy-type methods are also difficult to generalize to the joint minimization problem. A typical key step in these methods is a projection onto the set of admissible signals. For sparsity and low-rank models, this projection can be efficiently implemented by restricting to the largest coefficients or the largest principal components, respectively. For the joint low-rank and (bi-)sparse model, however, this projection is an instance of the sparse principal component analysis problem, which is known to be NP hard in general [27].

For very special measurements, certain two-stage procedures can allow for guaranteed recovery. For phase retrieval, this works when measurements of the form \(|b_{i}^{*} \Phi x|^{2}\), i.e., \(A_{p}= b_{p}^{*}\Phi \Phi ^{*}b_{p}\) and \(X=xx^{*}\) in terms of the representation (1.1), are considered with \(\Phi\) representing a linear dimension reduction, and the number of measurements is larger than the embedding dimension of \(\Phi\) by at least a constant factor [17]. Namely, for \(\Phi \in \mathbb {R}^{m\times N}, m\gtrsim s\log \tfrac{N}{s}\), and \(b_{i}\) both chosen with i.i.d. Gaussian entries, such measurements allow the recovery of \(y=\Phi x\) via standard phase retrieval techniques, from which one can then infer x via compressive sensing. Similar nested measurements can also be constructed in the framework of bilinear problems [4]. While arguably such very special measurements cannot be assumed in many scenarios of interest, these observations show that solving sparse bilinear problems is not an intrinsically hard problem in all cases.

That said, some recent progress has been made also for more generic classes of measurements. A number of works have established local recovery guarantees for a near-optimal number of measurements, that is, convergence to the true solution is guaranteed from all starting points in a suitable neighborhood. For sparse phase retrieval, such guarantees have been established for gradient descent [32] and hard thresholding pursuit [6]. For unstructured Gaussian measurements, local guarantees are available for the alternating algorithms sparse power factorization [22] and alternating Tikhonov regularization and Lasso [11]. Suitable initialization procedures to complement these methods by constructing a starting point in a small enough neighborhood of the solution, however, are known only for certain special classes of signals such as signals with few dominant entries [14, 22]. In [28], a model of low-rank recovery with essentially sparse nonorthogonal factors is considered, for which a robust injectivity property for several types of measurements is established. We also mention the work [15] in which a rank-adaptive algorithm for finding global minima of nonconvex formulations of structured low-rank problems is presented.

Despite the recent progress, it remains largely an open problem whether and how joint (bi-)sparse and low-rank signals can be efficiently recovered from a near-minimal number of measurements when no such initialization is provided. For an in-depth discussion of what makes the problem difficult and some initial ideas regarding how to solve it, we refer the reader to [12].

1.1 Contribution and outline

In this paper, we consider a class of non-convex iterative methods based on modification of iterative hard thresholding (IHT) as proposed in the recent work [12]. In principle, under suitable RIP assumptions for the operator \(\mathbf {A}\), the standard IHT method could be used to approximate the solution of (1.1) at an exponential rate. The main obstacle is that the exact projections on the set \(\mathcal M_{k,s}\) are NP hard to compute as mentioned above. It is, however, possible to compute quasi-optimal projections on \(\mathcal M_{k,s}\) by simply using the successive projections on \(\mathcal M_{k}\) and \(\mathcal N_{s}\), or vice versa. This approach is taken in Section where we first derive quasi-optimality constants for such projections. These results complement some of the investigations in [12] on the bisparse case. We then consider a practical version of IHT that uses these quasi-optimal projections in combination with line search, and present a local convergence result for such a method.

The main contribution of this paper is a further modification of the IHT algorithm that makes use of the manifold properties (of the smooth part) of the set \(\mathcal M_{k}\) by applying a tangent space projection to the search direction. This idea is inspired by Riemannian low-rank optimization, which has been shown to be efficient in several applications, including matrix completion and matrix equations; see [33] for an overview. We demonstrate that in the important case of rank-one measurements, which includes problems of blind deconvolution, the additional tangent space projection allows for a significant reduction of computational cost since the projection of the gradient onto the tangent space can be efficiently realized even for large low-rank matrices. This observation does not specifically rely on the sparsity structure and should therefore be of interest for other low-rank recovery problems with rank-one measurements as well. The proposed Riemannian version of IHT is presented in Section 3.1, with a detailed discussion for the case of rank-one measurements in Section 3.2.

Lastly, we also consider the scenario that the sparsity parameter s is unknown. One can then replace the hard-thresholding operator for the rows with a soft-thresholding operator. As we show in Section 3.3, such a modification admits a natural interpretation as a manifold proximal gradient method on \(\mathcal M_{k}\) with the (1, 2)-norm as a penalty. Notably, in contrast to other recent generalizations of the proximal gradient method to manifolds [8, 16], the structural constraints considered in this work allow for a closed-form expression of the proximal step via soft-thresholding.

Finally, in Section 4, we present several numerical experiments. We test the three algorithms proposed in this work in scenarios with random measurements as well as rank-one measurements. This also includes a numerical experiment on blind deconvolution with Fourier measurements.

The main outcome of our results is that in practice, and in the noiseless case, the proposed variants of IHT are capable of recovering row-sparse low-rank matrices with a near optimal number of measurements, up to a constant oversampling factor. The theoretical guarantees are currently restricted to local convergence results, and will be subject to future research.

2 Review of iterative hard thresholding approaches

The sparse low-rank recovery problem (1.1) can be recast into the optimization problem

where \(\Vert \cdot \Vert _{2}\) denotes the Euclidean norm in \(\mathbb {R}^{m}\). Noting that

an intuitive approach to the sparse low-rank recovery is the iterative hard thresholding method, which takes the form

where \(\mathbf {P}_{\mathcal M_{k,s}}\) is a metric projection on \(\mathcal M_{k,s}\), which is characterized by the best approximation property

By the standard arguments, one can show that under a suitable RIP assumption this method is globally convergent to the solution \(X^{*}\).

A main obstacle is that the projection on the set \(\mathcal M_{k,s}\) is usually prohibitively expensive to compute, essentially at the cost of checking almost all possible subsets of s rows of X. For a subset \(S \subseteq \{ 1,\dots ,M\}\), we denote by \(X_{S}\) the projection of X where all rows not in S have been set to zero. We then have the following result, which has already been noted in [12] for the case \(k=1\).

Proposition 2.1

For given X, \(\mathbf {P}_{\mathcal M_{k,s}}(X)\) is given as a best rank k approximation of \(X_{S^{\prime }}\), where the submatrix \(X_{S^{\prime }}\) maximizes \({\sigma _{1}^{2}}(X_{S}) + \dots + {\sigma _{k}^{2}}(X_{S})\) (sum of squares of largest singular values) among all submatrices \(X_{S}\) of X with \({\left|S \right|} = s\).

Proof

For any row support set S with \({\left|S \right|} \le s\), the optimal closest point in \(\mathcal M_{k,s}\) with this support is obviously a best rank k approximation \(\mathbf {T}_{k}(X_{S})\) of \(X_{S}\) (which has the same row support). It has the squared distance

where \(\bar{S}\) is the complement of S. This shows that the minimum is achieved when \(\Vert \mathbf {T}_{k}(X_{S}) \Vert _{F}^{2}={\sigma _{1}^{2}}(X_{S}) + \dots + {\sigma _{k}^{2}}(X_{S})\) is maximal among all S with \({\left|S \right|} \le s\). However, since this quantity does not decrease when adding rows to a matrix, it suffices to take the maximum over \({\left|S \right|} = s\). \(\square\)

By the above proposition, the projection \(\mathbf {P}_{\mathcal M_{k,s}}\) is in principle available by computing the k largest singular vectors of all possible submatrices with s rows, which has combinatorial complexity. Even if a smaller set of candidates for the rows, say 2s of them, could be identified beforehand, the complexity remains exponential in s, not even counting the cost for computing the singular vectors. The computation of \(\mathbf {P}_{\mathcal M_{k,s}}\) should therefore be in general infeasible, which also makes it infeasible to compute (2.2).

2.1 Quasi-optimal projections

Feasible variants of IHT can be obtained by employing projections on \(\mathcal M_{k,s}\) that are only quasi-optimal, an idea already suggested in [12]. Such variants are derived from the fact that \(\mathcal M_{k,s}\) is the intersection of the two cones \(\mathcal N_{s}\) (row s-sparse matrices) and \(\mathcal M_{k}\) (rank-k matrices), and for both sets the metric projections are explicitly available. For \(\mathcal N_{s}\), it is given as

where \(S \subseteq \{1,\dots ,M\}\) contains indices of s rows of X with largest norm. For \(\mathcal M_{k}\), the best rank-k approximation of X can be computed from the dominant singular vectors as usual and is denoted by \(\mathbf {T}_{k}(X)\). Both \(\mathbf {H}_{s}\) and \(\mathbf {T}_{k}\) are nonlinear maps. They are possibly set-valued, in which case we assume that some specific selection rule is applied. Since computing a best rank-k approximation does not increase the row support of a matrix, the composition

always maps into the cone \(\mathcal M_{k,s}\). Similarly, projecting onto the largest s rows does not increase the rank, hence the map

also maps into \(\mathcal M_{k,s}\).

Computationally, \(\mathbf {P}_{k,s}(X)\) is obtained from X by first restricting to the submatrix consisting to the s rows of largest norm, and then computing a best rank-k approximation of that submatrix. Since this submatrix has only s rows, this reduces the cost of the SVD. In contrast, \({\hat{\mathbf {P}}}_{k,s}(X)\) requires first a truncated SVD \(U\Sigma V^{T}\) of X, which is in general more expensive. However, there is a potential scenario when \({\hat{\mathbf {P}}}_{k,s}(X)\) is applied to tangent vectors of the fixed rank-k manifold, where this step is cheap. Also note that for finding the largest s rows it is then sufficient to determine the largest rows of the matrix \(U \Sigma\), which has only k columns, so this step becomes slightly cheaper too.

The following proposition shows that both \(\mathbf {P}_{k,s}\) and \(\hat{\mathbf {P}}_{k,s}\) are quasi-optimal projections. This has already been shown in [12, Prop. 12]. We include a proof below, since the setting with only row sparsity that we consider in this paper allows to exploit a certain commutativity relation that is not available in the general bisparse case and leads to an improved quasi-optimality constant compared to the result in [12].

Proposition 2.2

For any \(X \in \mathbb {R}^{M \times N}\), the projections \(\mathbf {P}_{k,s}\) and \(\hat{\mathbf {P}}_{k,s}\) map into \(\mathcal M_{k,s}\) and are quasi-optimal in the sense that

Proof

We first observe that both nonlinear mappings \(\mathbf {H}_{s}\) and \(\mathbf {T}_{k}\) for every input X in fact act as linear orthogonal projections in the space \(\mathbb {R}^{M \times N}\). Indeed, for given X, we can write

where \(D_{X}\) is a binary diagonal matrix that selects s rows supporting the s largest (in norm) rows of X, and \(V_{X}\) consists of the leading k right singular vectors of X. In the rest of the proof, we write D instead of \(D_{X}\) and V instead of \(V_{X}\). We first consider the map \(X \mapsto D X V V^{\top }\) and show that it provides an (alternative) quasi-optimal projection. Note that

Since \(\mathcal M_{k,s} \subseteq \mathcal M_{k}\), we have

By an analogous argument, since \(\mathcal M_{k,s} \subseteq \mathcal N_{s}\), we also have \(\Vert X - DX \Vert _{F} \le \Vert X - \mathbf {P}_{\mathcal M_{k,s}} (X) \Vert _{F}\). Therefore, we obtain

To conclude the proof, it remains to show that

Since \(\mathbf {P}_{k,s}(X) = \mathbf {T}_{k}(DX)\) is supported in the same rows as DX, we have the orthogonal decomposition

The second term on the right can be estimated as

since \(DX VV^{\top }\) is a rank-k matrix. It thus follows that

Similarily, since \(\hat{\mathbf {P}}_{k,s} (X)= \mathbf {H}_{s}(X VV^{\top })\), we have that

The second term on the right is not larger than \(\Vert XVV^{\top } - D X VV^{\top } \Vert _{F}^{2}\), which likewise shows

as desired.

Remark 2.3

It is is interesting to note that for \(\mathbf {P}_{k,s}\) the constant \(\sqrt{2}\) is not attained for matrices X where \(\mathbf {H}_{s}(X)\) is single valued. If it were attained, then the proof shows that we have \(\Vert X - DX \Vert _{F} = \Vert X - \mathbf {P}_{\mathcal M_{k,s}} (X) \Vert _{F}\), that is \(\min _{Y \in \mathcal N_{s}} \Vert X - Y \Vert _{F}= \min _{Y \in \mathcal M_{k,s}} \Vert X - Y \Vert _{F}\). Then, however, \(\mathbf {P}_{k,s}(X) = \mathbf {H}_{s}(X)\) is an optimal projection. Similarly, the constant \(\sqrt{2}\) is not attained for \(\hat{\mathbf {P}}_{k,s}\) when \(\mathbf {T}_{k}\) is single valued.

2.2 IHT with adaptive stepsize

Using the quasi-optimal projector \(\mathbf {P}_{k,s}\), one obtains a modified version of IHT shown in Algorithm 1, in which we additionally include a step size control. In principle, one could use the projector \(\hat{\mathbf {P}}_{k,s}\) instead, but as noted above it should usually be more expensive to compute unless further structure can be exploited. In principle, any starting point \(X_{0} \in \mathcal M_{k,s}\) could be used, but we noted that in our experiments the proposed choice \(X_{0} = 0\) works well.

Algorithm 1 IHT with quasi-optimal projection.

Possible step sizes in Algorithm 1 are either \(\alpha _{\ell } = 1\) (as in classical IHT) or \(\alpha _{\ell } = \frac{ \Vert {\mathbf {A}}^{*}({\mathbf {A}}(X_{\ell }) - y)) \Vert ^{2}}{\Vert {\mathbf {A}}( {\mathbf {A}}^{*}({\mathbf {A}}(X_{\ell }) - y))) \Vert ^{2} },\) which yields the optimal step size without projection. In our experiments, this did however not significantly improve the success or speed of convergence. Instead, we found that an adaptive line search works well. We implemented an Armijo backtracking where \(\alpha _{\ell } = \beta ^{p}\) and p is the smallest nonnegative integer that fulfills

for parameters \(\beta \in (0,1), \gamma > 0\). We choose \(\beta = 0.5\) and \(\gamma = 10^{-4}\) in our experiments. Note that the projection is included in the Armijo condition, but the search direction is not guaranteed to be a descent direction for \(f\circ \mathbf {T}_{k} \circ \mathbf {H}_{s}\). In cases where the Armijo condition cannot be fulfilled, we resort to the regular stepsize rule \(\alpha _{\ell } = 1\).

The most costly steps in Algorithm 1 are the computation \(\mathbf {A}^{*}(\mathbf {A}(X)-y)\) and the quasi-optimal projections. We consider this in more detail in Section 3.2.

2.3 Convergence

To our knowledge, for general measurements, no global convergence result is currently available for Algorithm 1, nor for any other algorithm in a near-minimal parameter regime. However, in the noiseless case, and with constant step size \(\alpha _{\ell } = 1\), it is easy to state a qualitative local convergence result under a RIP assumption. We say that \(\mathbf {A}\) satisfies a \(\delta _{k,s}\)-RIP on \(\mathcal {M}_{k,s}\) if

One can show that Gaussian measurements will satisfy a \(\delta _{k,s}\)-RIP with high probability if the number of measurements is at least of order \(\delta ^{-2}_{k,s}k(s + N) \ln (MN)\), cf. [22, Theorem 2].

The local convergence proof is based on the simple observation that in a sufficiently small neighborhood of a matrix with s nonzero rows the quasi-optimal projection \(\mathbf {P}_{k,s}= \mathbf {T}_{k} \circ \mathbf {H}_{s}\) is indeed the optimal projection on \(\mathcal M_{k,s}\), since the correct rows are selected.

Lemma 2.4

Let \(X^{*}\in \mathcal {M}_{k,s}\) have exactly s nonzero rows and let \(\mu\) be the smallest norm among the nonzero rows of \(X^{*}\). If \(Y\in \mathbb {R}^{M\times N}\) satisfies \(\Vert Y-X^{*}\Vert _{F} < \frac{\mu }{2}\), then \((\mathbf {T}_{k}\circ \mathbf {H}_{s})(Y)=\mathbf {P}_{\mathcal {M}_{k,s}}(Y)\).

Proof

Let S be the row support of \(X^{*}\). Obviously the largest s rows of Y are supported in S and \((\mathbf {T}_{k}\circ \mathbf {H}_{s})(Y)\) provides the best approximation with respect to this row support. We therefore need to show that the best approximation \(\mathbf {P}_{\mathcal {M}_{k,s}}(Y)\) also has this row support. Indeed, let Z be any matrix with a different support of size at most s and let y be any row of Y not in the row support of Z (but supported in S). Then, \(\Vert Y-Z\Vert _{F}\ge \Vert y\Vert \ge \frac{\mu }{2} > \Vert Y-X^{*}\Vert _{F} \ge (\mathbf {T}_{k} \circ \mathbf {H}_{s}) (Y)\). This implies that \(\mathbf {P}_{\mathcal {M}_{k,s}}(Y)\) needs to be supported in S. \(\square\)

Corollary 2.5

Let \(\delta _{3k,3s}<0.5\) be the RIP constant of \({\mathbf {A}}\) for the set \(\mathcal {M}_{3k,3s}\). Let \(X^{*}\) be the (unique) solution of (1.1) with exactly s nonzero rows, and assume \(\Vert X_{0}-X^{*}\Vert _{F}\le \frac{\mu }{2\Vert {\mathbf {I}}-{\mathbf {A}}^{*}{\mathbf {A}}\Vert _{}}\), where \(\mu\) is the smallest norm among the nonzero rows of \(X^{*}\). Then, the sequence generated by Algorithm 1 with a fixed step-size \(\alpha _{\ell } = 1\) satisfies

Proof

The proof is adapted from [13, Thm. 6.15]. Let V be a linear subset of \(\mathcal M_{k,s} + \mathcal M_{k,s} +\mathcal M_{k,s}\subset \mathcal M_{3k,3s}\). The RIP implies the spectral bounds

for all \(X \in V\). Hence, the restricted operator \(\mathbf {A}_{V}\) satisfies the estimate

for the operator norm. This replaces the use of [13, Lem. 6.16] in the proof of [13, Thm. 6.15]. Lemma 2.4 implies, that for \(\Vert X_{\ell }-X^{*}\Vert _{F}\le \frac{\mu }{2\Vert {\mathbf {I}}-{\mathbf {A}}^{*}{\mathbf {A}}\Vert _{}}\), the quasi-optimal projection is indeed optimal. Hence, the proof technique of [13, Thm. 6.15] can be applied.

The asymptotic rate of convergence however is faster than suggested by Corollary 2.5. To see this, let \(\mathcal {M}_{k,S^{*}}\) denote the variety of matrices with rank at most k and a fixed row support \(S^{*}\subseteq \{1,\dots ,L\}\) with \({\left|S^{*} \right|} = s\) such that \(X^{*}\in \mathcal {M}_{k,S^{*}}\). If \(\text {rank}(X^{*}) = \min (k,s)\), then \(\mathcal {M}_{k,S^{*}}\) is a smooth manifold around \(X^{*}\) and the asymptotic rate depends on a RIP constant of the tangent space of \(T_{X^{*}}\mathcal {M}_{k,S^{*}}\).

Proposition 2.6

Let \(X^{*}\) be a solution of (1.1) with exactly s nonzero rows and assume \(\text {rank}(X^{*}) = \min (k,s)\). Assume the spectral norm of \(\mathbf {I}-\mathbf {A}^{*}\mathbf {A}\) on \(T_{X^{*}}\mathcal {M}_{k,S^{*}}\) is \(\delta < 1\). There exists an \(\epsilon >0\) such that if the the sequence \((X_{\ell })\) generated by Algorithm 1 with stepsize \(\alpha _{\ell }=1\) satisfies \(\Vert X_{\ell }-X^{*}\Vert _{F}\le \epsilon\) for some \(\ell\), then \(X_{\ell }\) converges to \(X^{*}\) and \(\limsup _{\ell \rightarrow \infty }\frac{\Vert X_{\ell +1}-X^{*}\Vert _{F}}{\Vert X_{\ell }-X^{*}\Vert _{F}}\le \delta\).

Note that \(T_{X^{*}}\mathcal {M}_{k,S^{*}} \subseteq \mathcal {M}_{2k,s}\) and hence \(\delta \le \delta _{2k,s}\) as for linear spaces the RIP constant and the spectral norm of \(\mathbf {I}-\mathbf {A}^{*}\mathbf {A}\) coincide. In fact, for Gaussian measurements, the embedding dimension needed to obtain a spectral norm bounded by \(\delta\) with high probability does not require a logarithmic factor, which is why one can generally expect \(\delta\) to be smaller than \(\delta _{2k,s}\) by a square root \(\log\) factor.

Proof

Lemma 2.4 implies that in proximity to the solution \(X^{*}\) the quasi-optimal projection \(\mathbf {T}_{k} \circ \mathbf {H}_{s}\) equals the best approximation \(\mathbf {P}_{\mathcal M_{k,S^{*}}}\) in Frobenius norm onto the manifold \({\mathcal M_{k,S^{*}}}\). For \(X_{\ell }\) close enough to \(X^{*}\), we then get

by linearizing the projection \(\mathbf {P}_{\mathcal M_{k,S^{*}}}\), see [2, Lemma 4]. Next, we exploit that \(X^{*} \in T_{X^{*}}\mathcal {M}_{k,S^{*}}\) and \((\mathbf {I}-\mathbf {P}_{T_{X^{*}}\mathcal {M}_{k,S^{*}}})(X_{\ell }-X^{*}) = o(\Vert X_{\ell }-X^{*}\Vert _{F})\) (see, e.g., [35, Lemma 4.1]) to get

By assumption, \(\Vert \mathbf {P}_{T_{X^{*}}\mathcal {M}_{k,S^{*}}}(\mathbf {I}-\mathbf {A}^{*}\mathbf {A} )\mathbf {P}_{T_{X^{*}}\mathcal {M}_{k,S^{*}}}\Vert _{F}=\delta <1\). This allows to prove to assertion by induction.

3 Riemannian optimization approaches

It is possible to exploit the structure of the set \(\mathcal M_{k,s}\) as an intersection of \(\mathcal {M}_{k}\) and \(\mathcal {N}_{s}\). Since the smooth part of the set \(\mathcal {M}_{k}\) (matrices of rank equal to k) is a connected manifold, it is reasonable to replace the negative gradient \(- \nabla f(X_{\ell }) = -{\mathbf {A}}^{*}({\mathbf {A}}(X_{\ell }) - y)\) by a Riemannian gradient, that is, by its projection on a tangent space. When using the Riemannian metric inherited from the embedding into Euclidean space, the Riemannian gradient is simply given as the orthogonal projection of the Euclidean gradient onto the tangent space of \(\mathcal M_{k}\) at \(X_{\ell }\). In this way, we obtain modifications of IHT with tangential search directions.

3.1 Riemannian IHT

Given the SVD \(X_{\ell }=U^{}_{\ell }\Sigma ^{}_{\ell } V_{\ell }^{\top }\), and assuming \(\text {rank} (X_{\ell }) = k\), the orthogonal projection onto the tangent space is the linear map

see, e.g., [34]. Note that if k is small, then the computation of the projection requires multiplication by tall matrices only. For the cost function (2.1), the projected gradient is

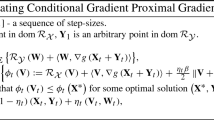

Replacing the gradient in IHT with this projected gradient results in the scheme displayed in Algorithm 2.

Algorithm 2 Riemannian IHT with quasi-optimal projection.

Possible step size rules are again constant steps \(\alpha _{\ell }=1\) or an Armijo-like condition

Without further structure, the tangent space projection has a cost of \(O((M+N)k^{2})\) flops. An advantage of this approach is that application of the quasi-optimal projection \(\mathbf {T}_{k} \circ \mathbf {H}_{s}\) then becomes somewhat cheaper. Indeed, since \(\mathbf {P}_{\ell }(X_{\ell }) = X_{\ell }\), and since elements in the tangent space are of rank at most 2k, a careful implementation of the tangent space projection (see [33, 34]) yields a decomposition

where \(\hat{U} \in {\mathbb R}^{M \times 2k}\) and \(\hat{V} \in {\mathbb R}^{N \times 2k}\) both have pairwise orthonormal columns. To apply \(\mathbf {H}_{s}\), one hence needs to find the s largest rows of \(\hat{U} K\), which has complexity \(O((s+k^{2})M)\) (since \(k \le s\)), as opposed to \(O((s+N)M)\) in Algorithm 1. Since \(\hat{V}\) is already orthogonal, the subsequent computation of a best rank-k approximation requires only an SVD of the resulting \(s \times 2k\) matrix (cost \(O(k^{2}s)\)), as opposed to an \(s \times N\) matrix (cost \(O(s^{2} N)\) if \(s \le N\)). A comparison including the cost of forming \(\mathbf {A}^{*}(\mathbf {A}(X_{\ell }) - y)\) is made in Section 3.2.

Remark 3.1

Formally Algorithm 2 is well defined only as long as the iterates remain of full rank k. In the special case where \(X_{\ell }\) has rank lower than k, we can slightly abuse the above notation and let \(\mathbf{P}_{\ell }\) denote the projection on the tangent cone, which is given, e.g., in [31]. Since the tangent cone is symmetric in 0, it indeed holds \(- \mathbf {P}_{\ell } \nabla f(X) = \mathbf {P}_{\ell } (-\nabla f(X))\). In practice, the rank usually never drops and this issue can be ignored, except for the starting point in zero, which for this reason we have stated explicitly as \(X_{1}= (\mathbf {T}_{k} \circ \mathbf {H}_{s}) (\alpha _{0} \mathbf {A}^{*} y)\). Here, the initial step size is calculated using the Armijo-rule (3.2) with \(X_{0} = 0\).

Remark 3.2

It would also make sense to use the quasi-projection \(\mathbf {H}_{s} \circ \mathbf {T}_{k}\) instead of \(\mathbf {T}_{k} \circ \mathbf {H}_{s}\), that is,

This can be interpreted as Riemannian gradient method on \(\mathcal M_{k}\) with retraction \(\mathbf {T}_{k}\), see [1, 2], but with additional thresholding by \(\mathbf {H}_{s}\). Depending on s and k, this order of projections can be implemented even more efficiently in many cases. On the other hand, our experiments have shown that the improvement is negligible unless \(s \gg 2k\), in particular taking the more costly gradient computation into account. For the choice of initial point \(X_{1}\), however, it appears to be very important to truncate the \(M-s\) smallest rows of \(\mathbf {A}^{*}(y)\) before the rank-k truncation as in Algorithm 2, and not the other way round, as this greatly improves the success rate. After that initialization, we did not observe a significant difference of the two orderings and therefore kept it consistent with Algorithm 1.

We now present a local convergence result for Algorithm 2 with constant stepsize \(\alpha _{\ell }=1\) and under similar RIP conditions as for Algorithm 1. Note that the statement is the same as in Proposition 2.6.

Proposition 3.3

Let \(X^{*}\) be a solution of (1.1) with exactly s nonzero rows and assume \(\text {rank}(X^{*}) = \min (k,s)\). Assume the spectral norm of \(\mathbf {I}-\mathbf {A}^{*}\mathbf {A}\) on \(T_{X^{*}}\mathcal {M}_{k,S^{*}}\) is \(\delta < 1\). There exists an \(\epsilon >0\) such that if the the sequence \((X_{\ell })\) generated by Algorithm 2 with stepsize \(\alpha _{\ell }=1\) satisfies \(\Vert X_{\ell }-X^{*}\Vert _{F}\le \epsilon\) for some \(\ell\), then \(X_{\ell }\) converges to \(X^{*}\) and \(\limsup _{\ell \rightarrow \infty }\frac{\Vert X_{\ell +1}-X^{*}\Vert _{F}}{\Vert X_{\ell }-X^{*}\Vert _{F}}\le \delta\).

Proof

The proof is similar to the one of Proposition 2.6. We first note that in a neighborhood of \(X^{*}\) the projection \(\mathbf {H}_{s}\) equals the projection \(D_{S^{*}}\) onto the row support of \(X^{*}\), which is a linear operator represented by a diagonal matrix. Next, we also linearize the projection \(\mathbf {T}_{k}\) at the point \(X^{*}\), and approximate the tangent space projection \(P_{T_{X_{\ell }}\mathcal M_{k}}\) by \(\mathbf {P}_{T_{X^{*}}\mathcal M_{K}}\) using \(\Vert \mathbf {P}_{T_{X_{\ell }}\mathcal M_{K}}- \mathbf {P}_{T_{X^{*}}\mathcal M_{K}}\Vert \le c\Vert X_{\ell }-X^{*}\Vert _{F}\) in spectral norm for some \(c > 0\) (for instance \(c = \frac{1}{\sigma _{k}(X^{*})}\), see, e.g., [35, Lemma 4.2]). We get

where for the last equality we have used \(X^{*} \in T_{X^{*}} \mathcal M_{k}\). Let now \(X^{*}=U\Sigma V^{\top }\) be a singular value decomposition. Since U has row support \(S^{*}\), we have

for any Z. Recalling the formula (3.1), this shows that the projections \(D_{S^{*}}\) and \(\mathbf {P}_{T_{X^{*}}\mathcal {M}_{k}}\) commute. Therefore, \(D_{S^{*}} \mathbf {P}_{T_{X^{*}}\mathcal {M}_{k}} = \mathbf {P}_{T_{X^{*}} \mathcal M_{k}} D_{S^{*}} = \mathbf {P}_{T_{X^{*}}\mathcal M_{k,S^{*}}}\). As in the proof of Proposition 2.6, we arrive at

which for any \(\varepsilon > 0\) can be bounded by \((\delta + \varepsilon )\Vert X_{\ell }-X^{*}\Vert _{F}\) for \(X_{\ell }\) close enough to \(X^{*}\). This implies the local convergence at the asserted asymptotic rate.

3.2 Improved numerical complexity for rank-one measurements

In practice, Algorithms 1 and 2 often perform equally well. The main difference is that Algoritm 2 uses the projected gradient on the tangent space of (the smooth part of) \(\mathcal M_{k}\). Thus, a potential performance gain is tied to the question whether the low dimensionality of these tangent spaces can be exploited to achieve a lower computational complexity. It turns out that this is the case in the important scenario of rank-one measurements, which occurs frequently in the literature.

The main bottleneck in both algorithms is forming \(\mathbf {A}^{*}(\mathbf {A}(X)-y)\) or its projected version. Rank-one measurements take the form

In this case, forming \({\mathbf {A}}(X_{\ell }) - y\) for an \(X\in \mathcal M_{k,s}\) that is already in the form \(X^{}_{\ell } = U^{}_{\ell } \Sigma ^{}_{\ell } V_{\ell }^{\top }\) with \(\Vert U_{\ell } \Vert _{0} \le s\) needs only \(O(k(s+N)m)\) flops. In the Riemannian version, the application of the dual operator and projection to the tangent space can be combined in the following way:

The cost for this is \(O(k(N+M)m)\) since \(U_{\ell }\) and \(V_{\ell }\) as well as the matrices in the sums are \(M\times k\) and \(N\times k\) matrices. Note that in a careful implementation, only the terms in the brackets need to be computed to represent the tangent vector. From this representation, it is possible to apply the projections \(\mathbf {H}_{s}\) and \(\mathbf {T}_{k}\) efficiently as mentioned above.

In the non-Riemannian version in Algorithm 1, the tangent space projection is not applied. For rank-one measurements, \({\mathbf {A^{*}}}(\mathbf {A}(X) - y)\) is a sum of m rank-one matrices, but this does not help since usually \(m \ge N\). The cost remains O(mNM).

We conclude that in the case of rank-one measurements, if k is much smaller than N, the Riemannian method should be computationally beneficial. This is confirmed by our numerical experiment in Section 4.2. Table 1 contains the complexities for the main steps in both algorithms. Note that unlike for Gaussian measurements, we usually cannot expect an RIP to hold for rank-one measurements and therefore even the local convergence result in Proposition 3.3 might not be applicable. It would be interesting to study under which conditions the contractivity of \(\mathbf {I }-\mathbf {A}^{*}\mathbf {A}\) on the tangent space \(T_{X^{*}}\mathcal {M}_{k,S^{*}}\) as required in this proposition can be guaranteed for rank-one measurements, but we do not pursue this here.

3.3 Soft-thresholding as a Riemannian proximal gradient method

In the following, we consider the case where the rank k is known but the sparsity parameter s is not. Our main application of blind deconvolution falls exactly into this category for the special case \(k=1\). Both methods proposed above can be made adaptive with respect to s by selecting in every step a threshold on the row norm to decide which rows to keep. A well-established approach is soft thresholding. Here we show that such an approach can be interpreted as a Riemannian proximal gradient method on the manifold \(\mathcal M_{k}\). We remark that soft thresholding could in principle also be applied to the rank if it is unknown but this case is not considered.

The method is derived as follows. For unknown s, to promote a row-sparse solution, it is common to use the (1, 2)-norm

as a convex penalty. Here, X(i, : ) denotes the i-th row of X. The task is then to minimize the function \(f(x) + \mu g(x)\) with a penalty parameter \(\mu > 0\). Note that g is not differentiable in points X having zero rows. Since both the function f and g are convex on \(\mathbb {R}^{M \times N}\), an intuitive approach would be to consider methods like the proximal gradient descent as presented, e.g., in [5, 30]. These methods consist in applying the so-called prox operator to \(\mu g\) after the gradient step for f. However, prox operators are usually defined on convex domains. In our case, we consider a non-convex definition on the manifold \(\mathcal M_{k}\) instead:

For general g, we cannot evaluate such an operator easily, as it technically involves optimization of a local Lipschitz function on a manifold. However, as it turns out, for the particular choice of the (1, 2)-norm, and for inputs \(Y \in \mathcal M_{k}\), the prox operator simply coincides with the prox operator on the full space \(\mathbb {R}^{M \times N}\), since the latter does not increase the rank. Its closed form solution is given via soft thresholding of rows. For completeness, we provide a proof of this observation.

Proposition 3.4

For given \(Y \in \mathbb {R}^{M \times N}\) and \(\mu > 0\), the prox operator for the function \(\mu g\) on \(\mathbb {R}^{M \times N}\) has the closed form

where for each row \(\mathbf{y}_{i} \in \mathbb {R}^{N}\) of Y, \(\mathcal S_{1,2}^{\mu }(Y)\) is the soft thresholding operator

In particular, if \(Y \in \mathcal M_{k}\), then also \(\text {prox}_{\mu g}^{\mathcal M_{k}}(Y) = \mathbf {S}_{1,2}^{\mu }(Y)\).

Proof

Since the function g is convex in the ambient space, there exists exactly one solution

The equivalent optimality condition is \(0 \in \partial g(X^{*}) + \frac{1}{\mu }(X^{*} - Y)\). For each nonzero row \(\mathbf{x}_{\ell }^{*} \ne 0\) of \(X^{*}\), this means

Due to \(\mu > 0\), this is only possible if \(\Vert \mathbf{y}\Vert > \mu\), in which case we must have \(\mathbf{x}_{\ell }^{*} = \frac{\Vert \mathbf{y}_{i} \Vert - \mu }{\Vert \mathbf{y}_{i} \Vert }\). This shows (3.6). For the second statement, we first note that \(\mathbf {S}_{1,2}(Y)\) acts on Y by multiplication of a diagonal matrix, and therefore does not increase the rank. Since the argmin in (3.4) is taken over a subset of \(\mathbb {R}^{M \times N}\), we must have \(\text {prox}_{\mu g} (Y) = \text {prox}^{\mathcal M_{k}}_{\mu g} (Y)\) if \(Y \in \mathcal M_{k}\).

By analogy to proximal gradient methods, we combine the prox operation on \(\mathcal M_{k}\) with a Riemannian gradient descent for minimizing f on \(\mathcal M_{k}\). Using again the inherited Euclidean metric on \(\mathcal M_{k}\) and \(\mathbf {T}_{k}\) as a retraction, this results in the following iteration

which can be regarded as a Riemannian version of proximal gradient descent. Note that this formulation differs from other possible generalizations of proximal gradient methods on manifolds [8, 16] which are based on minimization of quadratic models on the tangent spaces for finding an appropriate search direction. In our formulation above, while only applicable in this specific setup, the closed form solution of the prox operator on the manifold is available, which makes it a very intuitive alteration of the original algorithm. The full scheme is displayed in Algorithm 3.

Algorithm 3 A Riemannian proximal gradient method.

In the proposed algorithm, the thresholding parameter \(\mu\) is reduced by a factor \(\tau\) in each iteration. Different values of \(\tau\) can be used depending on the problem. Other heuristics for selecting \(\mu\) are possible as well. We comment on our implementation of the algorithm in the experiment section.

Again, we suggest to initialize the algorithm with \((\mathbf {T}_{k} \circ \mathbf {H}_{s_{0}})(\alpha _{0} \mathbf {A}^{*} y)\), where \(s_{0}\) is a guess for the a priori unknown sparsity s of the solution and \(\alpha _{0}\) is the Armijo step size. As we have already emphasized above, the choice of the starting point has proven to be an important step and the success of the Riemannian proximal gradient method will be somewhat limited by the missing knowledge of s. In the experiments, we picked \(s_{0} = \min (M,(m + k(k - N))/k)\), which is the maximal row sparsity that can in theory be detected with a given number of measurements m from the degrees of freedom, but there was no significant improvement compared to a non-sparse starting point.

For the soft thresholding parameter \(\mu\), we propose the rule \(\mu =\tau ^{\ell } \max _{k}(\Vert \mathbf {T}_{k}(X_{\ell } - \alpha _{\ell } \mathbf {P}_{\ell } \mathbf {A}^{*}(\mathbf {A}(X_{\ell }) - y))_{i} \Vert : i = 1,\ldots ,M )\), i.e., the norm of the k-th largest row of the current iterate. For sufficiently large \(\tau\), this will set all but k of the rows of \(X_{2}\) to zero but ensures that the ones with largest norms remain active.

For determining the step sizes \(\alpha _{\ell }\), we again propose to use a line search method based on gradient step only, that is,

Remark 3.5

We remark that Algorithm 3 can be formally derived from the Riemannian IHT method in Algorithm 2 by switching to the quasi-optimal projection \({\hat{\mathbf {P}}}_{k,s} = \mathbf {H}_{s} \circ \mathbf {T}_{k}\) (cf. Remark 3.2), and then replacing the hard thresholding operator \(\mathbf {H}_{s}\) with the operator \(\mathbf {S}^{\mu }_{1,2}\). For soft thresholding, this order of first truncating the rank before selecting the rows indeed is convenient due to Proposition 3.4. The potential alternative of applying first soft thresholding and then rank truncation caused inconsistent behavior in the Armijo line search in our experiments.

4 Numerical experiments

In this section, we present some results of numerical experiments with the proposed algorithms. In the first set of results, we consider recovery of synthetic data using Gaussian measurements. In the second, we use random rank-one measurements and also test the algorithms for a blind deconvolution problem.

4.1 Recovery with Gaussian measurements

For the recovery problem (1.1), we compare the success rates of Algorithms 1 and 2 for different row sparsity levels s and different column sizes N when using random Gaussian measurements. Specifically, we generate a random matrix \(X^{*} \in \mathcal M_{k,s}\) and take m measurements \(y_{p} = \langle A_{p}, X^{*} \rangle _{F}\) with normally distributed \(A_{p} \sim \mathcal N(0,\frac{1}{\sqrt{m}})\). We fix the row dimension \(M = 1000\) and the rank \(k = 3\).

Figure 1 shows a phase transition plot for different numbers of measurements (\(m = \mathrm {round}(1.2^{j}), j = 18,\ldots ,36\)) on the y-axis and different row-sparsity (\(s = \mathrm {round}(1.2^{j}), j = 6,\ldots ,15\)) on the x-axis. The values for m and s were empirically chosen because they yielded the most expressive results. The grayscale denotes the success rate for parameter setting, where white means no success and black means \(100\%\) success. Both algorithms were tested with a fixed stepsize \(\alpha _{\ell } = 1\) (on the left) and with an adaptive stepsize using an Armijo linesearch (on the right). The column size is always taken to be equal to the sparsity, that is, \(N = s\), and we performed each experiment 10 times. The initial points were taken as \(X_{1} = (\mathbf {T}_{k} \circ \mathbf {H}_{s})(\mathbf {A}^{*} y)\), which we found to be crucial for the overall performance.

Success rate of IHT (upper left), adaptive IHT (upper right), Riemannian IHT (lower left), and Riemannian adaptive IHT (lower right) out of 10 tries on a loglog scale. The row-sparsity s is on the x-axis and equals the column size N, and the number of measurements m is on the y-axis. The rank is \(k = 3\) and row size \(M = 1000\). The red line has slope one, indicating linear dependence on s and N

We can see that the algorithms with adaptive stepsize are in general more often successful. Note that the plots are provided on a loglog scale. Therefore, since we have set \(N= s\), a line with slope one (depicted in red) indicates linear dependence on \(s+N\) (as opposed to, e.g., linear dependence on sN, which would have slope two). The right plots therefore indeed suggest such a linear dependence \(m = O(s+N)\) for successful recovery when adaptive stepsizes are used. Recall that for a fixed rank k such a scaling is optimal. In the left plots with fixed stepsize, it is a bit more difficult to recognize the slope of the transition line, which could be slightly larger than one.

Success rate of IHT (upper left), adaptive IHT (upper right), Riemannian IHT (lower left), and Riemannian adaptive IHT (lower right) out of 10 tries. The row-sparsity s is on the x-axis, and the column size N is on the y-axis. The number of measurements is \(m = 300\), the rank is \(k = 3\), and the row size is \(M = 1000\)

In a second experiment, we fixed the number of Gaussian measurements \(m = 300\) and varied the row-sparsity s and the column size N independently. The row size was again \(M = 1000\) and rank \(k = 3\). The results are given in Fig. 2. Note that the axes have a linear scale in this experiment. The algorithms with adaptive stepsize perform clearly better. The precise relation between s and N for the transition curve is, however, difficult to assess from these plots.

We can also compare the convergence speed of each algorithm in terms of iteration numbers. Figure 3 shows the relative errors to the exact solution for two different parameter settings, one that was borderline in the previous experiments (on the left) and another one for which all algorithms find the solution with ease (on the right). The observed behavior, however, was actually almost the same for other cases. We can see that the methods with adaptive stepsize converge faster, perhaps even superlinearly, although they are of course more costly. For fixed stepsize, the Riemannian method outperforms its classical counterpart but the rate of convergence is the same.

Left: success rate of the Riemannian proximal gradient method out of 10 tries on a loglog scale. The sparsity s is equal to the matrix dimension N on the x-axis, and the number of measurements m is on the y-axis. The rank is \(k = 3\) and \(M = 1000\). The red line has again slope 1 and corresponds to linear dependence on s and N. Right: number of iterations against relative error. The number of measurements is \(m = 520\), the sparsity \(s = 20\), and the dimension \(N = 10\). The rank is \(k = 3\) and \(M = 1000\)

Finally, we present a proof of concept for the Riemannian proximal gradient method in Algorithm 3, see Fig. 4. Specifically, we tested this method in the same setting as the first experiment. The factor \(\tau\) that decreases the thresholding parameter \(\mu\) in each step was set to \(\tau = 0.99\), that is, \(\mu\) is decreased by 1% per step. We used adaptive stepsizes with linesearch and the initial guess \(X_{1} = (\mathbf {T}_{k} \circ \mathbf {H}_{s_{0}})(\alpha _{0} \mathbf {A}^{*} y)\), as discussed in Section 3.3. As can be seen, the success rate of this method is lower than for the previous algorithm, which is natural since the sparsity parameter s is unknown here. The deviation from the red line with slope 1 could be due to the effect of the different initialization, which is more prominent for small sparsity. Yet, for larger s, the dependence of the required measurements m on s and N seems linear and hence optimal as well. We also repeated the borderline case from Fig. 3 and observe slow but linear convergence.

4.2 Rank-one measurements and blind deconvolution

We now examine the case of rank-one measurements, where

As discussed in Section 3.2, using rank-one measurements enables a more efficient evaluation of gradients and tangent space projections. In particular, when implemented accordingly, we expect the Riemannian version of IHT to be faster than the standard version.

We consider two experiments with rank-one measurements. In the first, we take random rank-one measurements on synthetic data. Specifically we choose \(a_{p} \sim \mathcal N(0,1)\) and \(b_{p} \sim \mathcal N(0,\frac{1}{\sqrt{m}})\) to closely match the setting of random gaussian measurements (that is, with the correct scaling).

In the second experiment, we use deterministic rank-one measurements based on the discrete Fourier transform. This setting can be motivated from applications in blind deconvolution. Consider the convolution

of two real vectors of length m where the indices are to be considered modulo m. The inverse operation, where both w and z are reconstructed from their convolution \(w*z\), is called blind deconvolution. In general, this is of course an ill-posed problem. A common assumption that renders a recovery possible is that w and z lie in some known subspaces, that is, \(w= Bu\) and \(z=Cv\) for some \(B \in \mathbb {R}^{m \times M}\) and \(C \in \mathbb {R}^{m \times N}\). As suggested in [3], one can then recast the problem as a linear recovery task for a rank-one matrix. More precisely, one can diagonalize the action of \(*\) using the (unitary) discrete Fourier transform \(F = [\frac{1}{\sqrt{m}}\exp (- \frac{i 2 \pi (k-1)(j-1)}{ m})]_{jk}\) which yields

Here, the last equality implicitly defines the linear operator \(\mathbf {A}:\mathbb {R}^{M\times N}\rightarrow \mathbb {R}^{m}\). This is possible since every bilinear map in (u, v) can be lifted to a linear map acting on \(uv^{\top }\). In certain applications, the vector u can also be assumed to be sparse. We therefore obtain an instance of our problem (1.1) with \(k=1\). For further references, see, e.g., [18].

To see that the operator \(\mathbf {A}\) defined in this way performs rank-one measurements, one verifies that

\((FB)_{p,:}\) and \((FC)_{p:}\) denote the p-th row of FB and FC respectively. Indeed, after a suitable reshape, the operator \(\mathbf {A}\) effectively becomes the (row-wise) Khatri-Rao product of FB and FC, that is,

This representation allows us to show that \(\mathbf {A}^{*} \mathbf {A}\) is a real operator, since

Since B and C are real matrices, it suffices to show that the last sum is real. But this holds since for \(p,k,\ell \in \{1,\ldots ,m\}\), by elementary manipulations,

and the rows and columns of F are unitary. Therefore, while \(y = \mathbf {A}(uv^{\top })\) is a complex vector, the problem itself as well as all steps in the algorithm remain real. For the efficient implementation of the action of \(\mathbf {A}\) and \(\mathbf {A}^{*}\) as in (3.3), however, some obvious modifications are required.

Success rate of adaptive IHT (left), Riemannian adaptive IHT (middle), and Riemannian proximal gradient method (right) out of 20 tries using for random rank-one measurements (top) and the discrete Fourier transform (bottom). The matrix dimensions are \(M = 150\) and \(N = 50\). The sparsity s is on the x-axis, and the number of measurements m is on the y-axis. The rank is \(k = 1\)

In both experiments, we set \(M = 150\) and \(N = 50\). An exact solution \(X^{*} = u v^{\top }\) of rank \(k=1\) is generated by picking a random matrix \(\hat{X} \sim \mathcal N(0,1)\) of size \(s \times N\), computing its best rank-one approximation, and randomly distributing the resulting rows in a matrix of size \(M \times N\). We then run the three algorithms with input \(y = \mathbf {A}(X^{*})\) for different values m of rank-one measurements. For the Riemannian proximal gradient method, we chose the decrease of the thresholding to be \(\tau = 0.999\), that is, a 0.1% decrease in each step.

In Fig. 5, we show the phase transition plot for the two settings and the algorithms with adaptive stepsize, which performed better in the general setting and for the Riemannian proximal gradient method with unknown sparsity s. Again, the grayscale denotes the success rate for the different parameters m and s. We performed 20 tries for each setting as this yielded a sharper outline of the success rate. In Table 2, we report computational times and iteration numbers for both settings and the three algorithms in terms of the relative error. Here, the number of measurements was \(m = 200\) and the row sparsity \(s = 3\). Note that for \(m \sim M + N = 200\), one expects convergence even without the sparsity constraint; however, we have observed that this is true only up to a large constant. We implemented the three methods in a comparable fashion, exploiting the structure of the rank-one measurements as discussed in Section 3.2. The computing time was measured on an Intel Core i7-10510U with 16 GB memory.

We can see that the adaptive IHT and the adaptive Riemannian IHT perform well in these experiments, especially for the Fourier measurements. The Riemannian method can be slightly better in terms of recovery, and significantly faster than the adaptive standard IHT method (\(\sim 40\%\) improvement for random measurements and \(50-60\%\) for Fourier measurements).

The Riemannian proximal gradient method is capable of detecting the row sparsity but it has a lower success rate, and is also quite slow. We have found that this is almost entirely due to the choice of the starting point that can be chosen without the knowledge of the sparsity parameter s. Therefore, this algorithm can clearly be improved upon with some extra work on the start point. In any case, the relatively good success rate makes this a promising approach for further research in cases where the sparsity is not known a priori.

Data availability

Data sharing not applicable to this article as no datasets were generated or analyzed during the current study.

References

Absil, P.-A., Mahony, R., Sepulchre, R.: Optimization algorithms on matrix manifolds. Princeton University Press, Princeton (2008)

Absil, P.-A., Malick, J.: Projection-like retractions on matrix manifolds. SIAM J. Optim. 22(1), 135–158 (2012)

Ahmed, A., Recht, B., Romberg, J.: Blind deconvolution using convex programming. IEEE Trans. Inform. Theory 60(3), 1711–1732 (2014)

Bahmani, S., Romberg, J.: Near-optimal estimation of simultaneously sparse and low-rank matrices from nested linear measurements. Inf. Inference 5(3), 331–351 (2016)

Beck, A.: First-order methods in optimization. PA; Mathematical Optimization Society, Philadelphia, PA, Society for Industrial and Applied Mathematics (SIAM), Philadelphia (2017)

Cai, J.-F., Li, J., Lu, X., You, J.: Sparse signal recovery from phaseless measurements via hard thresholding pursuit. Appl. Comput. Harmon. Anal. 56, 367–390 (2022)

Candès, E.J., Romberg, J., Tao, T.: Robust uncertainty principles: exact signal reconstruction from highly incomplete frequency information. IEEE Trans. Inform. Theory 52(2), 489–509 (2006)

Chen, S., Ma, S., So, A.M.-C., Zhang, T.: Proximal gradient method for nonsmooth optimization over the Stiefel manifold. SIAM J. Optim. 30(1), 210–239 (2020)

Donoho, D.L.: Compressed sensing. IEEE Trans. Inform. Theory 52(4), 1289–1306 (2006)

Eldar, Y.C., Mendelson, S.: Phase retrieval: stability and recovery guarantees. Appl. Comput. Harmon. Anal. 36(3), 473–494 (2014)

Fornasier, M., Maly, J., Naumova, V.: Robust recovery of low-rank matrices with non-orthogonal sparse decomposition from incomplete measurements. Appl. Math. Comput. 392, 125702 (2021)

Foucart, S., Gribonval, R., Jacques, L., Rauhut, H.: Jointly low-rank and bisparse recovery: questions and partial answers. Anal. Appl. (Singap.) 18(1), 25–48 (2020)

Foucart, S., Rauhut, H.: A mathematical introduction to compressive sensing. Birkhäuser/Springer, New York (2013)

Geppert, J., Krahmer, F., Stöger, D.: Sparse power factorization: balancing peakiness and sample complexity. Adv. Comput. Math. 45(3), 1711–1728 (2019)

Haeffele, B.D., Vidal, R.: Structured low-rank matrix factorization: global optimality, algorithms, and applications. IEEE Trans. Pattern Anal. Mach. Intell. 42(6), 1468–1482 (2020)

Huang, W., Wei, K.: Riemannian proximal gradient methods. Math. Program. 194(1-2, Ser. A), 371–413 (2022)

Iwen, M., Viswanathan, A., Wang, Y.: Robust sparse phase retrieval made easy. Appl. Comput. Harmon. Anal. 42(1), 135–142 (2017)

Jung, P., Krahmer, F., Stöger, D.: Blind demixing and deconvolution at near-optimal rate. IEEE Trans. Inform. Theory 64(2), 704–727 (2018)

Kech, M., Krahmer, F.: Optimal injectivity conditions for bilinear inverse problems with applications to identifiability of deconvolution problems. SIAM J. Appl. Algebra Geom. 1(1), 20–37 (2017)

Kliesch, M., Szarek, S.J., Jung, P.: Simultaneous structures in convex signal recovery – revisiting the convex combination of norms. Front. Appl. Math. Stat. 5 (2019)

Krahmer, F., Stöger, D.: On the convex geometry of blind deconvolution and matrix completion. Comm. Pure Appl. Math. 74(4), 790–832 (2021)

Lee, K., Wu, Y., Bresler, Y.: Near-optimal compressed sensing of a class of sparse low-rank matrices via sparse power factorization. IEEE Trans. Inform. Theory 64(3), 1666–1698 (2018)

Li, X., Ling, S., Strohmer, T., Wei, K.: Rapid, robust, and reliable blind deconvolution via nonconvex optimization. Appl. Comput. Harmon. Anal. 47(3), 893–934 (2019)

Li, Y., Lee, K., Bresler, Y.: Identifiability in blind deconvolution with subspace or sparsity constraints. IEEE Trans. Inform. Theory 62(7), 4266–4275 (2016)

Ling, S., Strohmer, T.: Blind deconvolution meets blind demixing: algorithms and performance bounds. IEEE Trans. Inform. Theory 63(7), 4497–4520 (2017)

Ling, S., Strohmer, T.: Regularized gradient descent: a non-convex recipe for fast joint blind deconvolution and demixing. Inf. Inference 8(1), 1–49 (2019)

Magdon-Ismail, M.: NP-hardness and inapproximability of sparse PCA. Inform. Process. Lett. 126, 35–38 (2017)

Maly, J.: Robust sensing of low-rank matrices with non-orthogonal sparse decomposition. arXiv:2103.05523 (2021)

Oymak, S., Jalali, A., Fazel, M., Eldar, Y.C., Hassibi, B.: Simultaneously structured models with application to sparse and low-rank matrices. IEEE Trans. Inform. Theory 61(5), 2886–2908 (2015)

Parikh, N., Boyd, S.: Proximal algorithms. Foundations and Trends in Optimization 1(3), 127–239 (2014)

Schneider, R., Uschmajew, A.: Convergence results for projected line-search methods on varieties of low-rank matrices via Łojasiewicz inequality. SIAM J. Optim. 25(1), 622–646 (2015)

Soltanolkotabi, M.: Structured signal recovery from quadratic measurements: breaking sample complexity barriers via nonconvex optimization. IEEE Trans. Inform. Theory 65(4), 2374–2400 (2019)

Uschmajew, A., Vandereycken, B.: Geometric methods on low-rank matrix and tensor manifolds. In: Grohs, P., Holler, M., Weinmann, A. (eds.) Handbook of Variational Methods for Nonlinear Geometric Data, pp. 261–313. Springer, Cham (2020)

Vandereycken, B.: Low-rank matrix completion by Riemannian optimization. SIAM J. Optim. 23(2), 1214–1236 (2013)

Wei, K., Cai, J.-F., Chan, T.F., Leung, S.: Guarantees of Riemannian optimization for low rank matrix recovery. SIAM J. Matrix Anal. Appl. 37(3), 1198–1222 (2016)

Funding

Open Access funding enabled and organized by Projekt DEAL. F.K. was partially funded by the Deutsche Forschungsgemeinschaft (DFG, German Research Foundation), project KR 4512/2-2, in the context of the priority program 1798; M.P. was partially funded by the Deutsche Forschungsgemeinschaft (DFG, German Research Foundation) – Projektnummern 448293816; 211504053 - SFB 1060.

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

Conflict of interest

The authors declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Eisenmann, H., Krahmer, F., Pfeffer, M. et al. Riemannian thresholding methods for row-sparse and low-rank matrix recovery. Numer Algor 93, 669–693 (2023). https://doi.org/10.1007/s11075-022-01433-5

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s11075-022-01433-5