Abstract

We extend results known for the randomized Gauss-Seidel and the Gauss-Southwell methods for the case of a Hermitian and positive definite matrix to certain classes of non-Hermitian matrices. We obtain convergence results for a whole range of parameters describing the probabilities in the randomized method or the greedy choice strategy in the Gauss-Southwell-type methods. We identify those choices which make our convergence bounds best possible. Our main tool is to use weighted ℓ1-norms to measure the residuals. A major result is that the best convergence bounds that we obtain for the expected values in the randomized algorithm are as good as the best for the deterministic, but more costly algorithms of Gauss-Southwell type. Numerical experiments illustrate the convergence of the method and the bounds obtained. Comparisons with the randomized Kaczmarz method are also presented.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Classical stationary iterations such as Jacobi and Gauss-Seidel (see, e.g., [7, 26, 34]) to solve a square linear system

nowadays are found to be useful in many situations, such as smoothers for multigrid methods (see, e.g., [25, 33]), in high-performance computing (see, e.g., [36]) and in particular as scaffolding for methods for discretized PDEs based on domain decomposition; see, e.g., [10, 21, 28].

In recent years, randomized algorithms have gained a lot of attention in numerical computation; see, e.g., the surveys [18] and [22]. Many different randomized methods and algorithms have in particular been suggested and analyzed for the solution of consistent and inconsistent, square and non-square linear systems; see, e.g., [11]. These methods are attractive in situations which typically arise in an HPC or a data science context when matrix products are considerably expensive or when the matrix is so large that it does not fit in main memory; see, e.g., the discussion in the recent paper [12].

For linear systems, the emphasis has been so far on randomized coordinate descent-type algorithms. These methods aim at finding the minimizer of a convex functional \(f: \mathbb {C} \to \mathbb {C}\), the minimizer being the solution of the linear system. The methods perform a sequence of relaxations, where in each relaxation a coordinate i is chosen at random and the current iterate x is modified to become x + tei,ei the i-th unit vector and t such that f(x + tei) is minimal. Many convergence results on randomized coordinate descent methods are known, see [11, 24], e.g., and the typical assumption is that f is at least differentiable.

If \(A \in \mathbb {C}^{n \times n}\) in (1) is Hermitian and positive definite (hpd), and we take f(x) = x∗Ax − 2x∗b, minimizing in coordinate i is equivalent to solving equation i of (1) with respect to xi. The resulting randomized coordinate descent method is thus a randomized version of the Gauss-Seidel method, and an analysis of this randomized method was given in [19] and also, in the more general context of randomized Schwarz methods in Hilbert spaces, in [13]. See also [1].

If A in (1) is not hpd, the typical approach to obtain a randomized algorithm is to consider randomized Kaczmarz methods, i.e., coordinate descent for one of the normal equations A∗Ax = A∗b or AA∗y = b with corresponding convex and differentiable functionals f1(x) = x∗A∗Ax − 2x∗A∗b and f2(y) = y∗AA∗y − 2y∗b, respectively. This approach can also be pursued when A is non-square, and the system may be consistent or inconsistent. Note that the original Kaczmarz method [17] corresponds to coordinate descent for f2 with an integrated back-transformation from the iterate y to x = A∗y. We emphasize that while coordinate descent for f1 is often also termed “Gauss-Seidel,” it is different from classical Gauss-Seidel directly applied to (1), which is what we focus on in this paper. There is a tremendous amount of literature dealing with randomized Kaczmarz-type algorithms which we cannot cite exhaustively here. Recent publications include [2,3,4,5,6, 8, 12, 14,15,16, 20, 29, 30, 35, 37].

Now, when A is square and nonsingular, considering a randomized version of the Gauss-Seidel method applied to the linear system (1) directly is an attractive alternative to the randomized Kaczmarz-type approaches, since under appropriate conditions on A it converges more rapidly and requires less work per iteration. This is known to be the case when A is hpd; see the papers [13] and [19] mentioned earlier. The main new contribution of the present work is a convergence analysis for randomized Gauss-Seidel also for the case when A is generalized diagonally dominant. An important methodological aspect of our work is that for A generalized diagonally dominant we do not directly relate Gauss-Seidel for (1) to an equivalent coordinate descent method for an appropriate convex functional. Our technique of proof will, nevertheless, rely on showing that the iterates x reduce—but, as opposed to gradient descent, do not necessarily minimize—a weighted ℓ1-norm of the residual b − Ax. Note that ℓ1-norms are not differentiable and that weighted ℓ1 (and \(\ell _{\infty }\)) -norms arise canonically in the context of generalized diagonal dominance; see, e.g., [7, 34]. As a “by-product” of our analysis, we will also obtain convergence results of greedy choice algorithms of Gauss-Southwell type, see below.

In this paper, we consider general methods based on a splitting A = M − N so that with H = M− 1N and c = M− 1b one obtains the (affine) fixed point iteration

as an iterative solution method for Ax = b. In particular, the solution x∗ of (1) is a fixed point of (2). Usually, the matrix H is never formed. Instead, a linear system with the coefficient matrix M is solved at each iteration m. In this general splitting framework, the Gauss-Seidel method is characterized by M being the lower triangular part of A, and the method is equivalent to relaxing one row at a time in the natural order 1,2,…,n. In other terms, if we write the classical Jacobi splitting A = D − B, D being the diagonal of A, then, with H = D− 1B = I − D− 1A, we have the following rendition of the Gauss-Seidel algorithm, using a “global” index k for each single relaxation.

Sequential relaxation for (2) (“Gauss-Seidel” if H = D− 1B).

Each update from k to k + 1 is termed one relaxation, and n such relaxations, since they are done one after the other, correspond to one iteration m in (2) with H = (D − L)− 1U, where − L and − U denote the lower and upper triangular part of A, respectively (and B = L + U). So, n successive relaxations of a “Jacobi-type,” i.e., with H = I − D− 1B, performed in the natural order 1,2,…,n, are identical to one iteration of Gauss-Seidel.

Gauss in fact proposed another method, later popularized by Southwell, and known either as Southwell method or as Gauss-Southwell method; see, e.g., the historic review of these developments in [27]. We describe this method in Algorithm 1 for a general splitting based method where H = M− 1N with A = M − N. The Gauss-Southwell method arises for H = D− 1B. The original Gauss-Southwell method selects the component i to be relaxed as the one at which the residual rk = b − Axk is largest, i.e., it takes i for which

As a consequence, we are not updating components in a prescribed order, but rather choose the row to relax to be the one for which the current residual has its largest component. This can be considered a greedy pick strategy, and we formulate Algorithm 2 in a manner to allow for general greedy pick rules.

Greedy relaxation for (2) (“Gauss-Southwell” if H = D− 1B).

A generalization of the greedy pick rule (3) is to fix weights βi > 0 and choose i such that

cf. [13], and we will see later that for appropriate choices of the βi we can prove better convergence bounds than for the standard greedy pick rule (3).

Both greedy pick rules (3) and (4) require an update of the residual after each relaxation, which represents extra work. Moreover, additional work is required for computing the maximum. Using the “preconditioned” residual \(\hat {r}^{k} = M^{-1}r^{k} = c - (I-H)x^{k}\) can to some extent reduce this overhead: Once \(\hat r^{k}\) is computed, the next relaxation \(x_{i}^{k+1} = {\sum }_{j=1}^{n} h_{ij}{x_{j}^{k}} + c_{i}\) can be obtained easily as

We might therefore want to use a greedy pick rule based on the preconditioned residuals, which, using fixed weights βi as before, can be formulated as

It has been demonstrated that Gauss-Southwell can indeed converge in fewer relaxations than Gauss-Seidel, but the total computational time is often higher, due to the computation of the maximum, and, in a parallel setting, the added cost of communication [36].

In this paper, we discuss the greedy relaxation scheme of Algorithm 2 as well as a randomized version of Algorithm 1, which for H = D− 1B is usually called randomized Gauss-Seidel. We give bounds on the expected value of the residual norm which match analogous convergence bounds for the greedy algorithm.

The randomized iteration derived from (2) fixes probabilities pi ∈ (0,1),

i = 1,…,n, with \({\sum }_{i=1}^{n} p_{i} = 1\) and proceeds as follows.

Randomized iteration for (2) (“randomized Gauss-Seidel” if H = D− 1B).

In the randomized Algorithm 3, the order of the relaxation does not follow a prescribed order, as in Gauss-Seidel, nor a greedy order depending on the entries in the current residual vector, as in Gauss-Southwell, but instead, each row i to relax is chosen at random with a fixed positive probability pi.

The paper is organized as follows. We first repeat the convergence results from [13, 19] for matrices which are hpd in Section 2, and then, in the rest of the paper, present new results for non-Hermitian matrices. As a byproduct of our investigation, we also show that some greedy choices other than (3) produce methods for which we obtain better bounds on the rate of convergence.

Our results are theoretical in nature and illustrated with numerical experiments. We are aware that other methods may be more efficient than those discussed here. But we believe that our results represent an interesting contribution to randomized and greedy relaxation algorithms since they show that we can deviate from the slowly converging Kaczmarz-type approaches not only when A is hpd but also when A is (generalized) diagonally dominant. We thus trust that on one hand, the results are interesting in and on themselves, and on the other they may form the basis for the analysis of other practical methods. Asynchronous iterative methods [9], for example, can be interpreted in terms of randomized iterations; see, e.g., [1, 31]. We expect that the theoretical tools developed here can also serve as a foundation for the analysis of such asynchronous methods, as well as for randomized block methods and randomized Schwarz methods for nonsingular linear systems; cf. [13].

For future use, we recall that rk = A(x∗− xk) where x∗ is the solution of the linear system (1) and x∗− xk is the error at the relaxation (or iteration) k.

2 The Hermitian and positive definite case

Consider the particular case that H in Algorithms 2 and 3 arises from the (relaxed) Jacobi splitting

where \(\omega \in \mathbb {R}\) is a relaxation parameter, i.e.,

Then, the fixed point iteration (2) is just the relaxed Jacobi iteration, which reduces to standard Jacobi if ω = 1, and the associated randomized iteration from Algorithm 3 is the randomized relaxed Gauss-Seidel method whereas the associated greedy Algorithm 2 is known as the relaxed Gauss-Southwell method if we take the greedy pick rule (3). Using the residual rk, the update in the third lines of Algorithms 2 and 3 for Hω can alternatively be formulated as

where ei denotes the i th canonical unit vector in \(\mathbb {C}^{n}\).

Now assume that A is hpd. The Jacobi iteration then does not converge unconditionally, a sufficient condition for convergenc being that with A = D − B the matrix D + B is hpd as well. The relaxed Gauss-Seidel iteration, on the other hand, is unconditionally convergent provided ω ∈ (0,2); see, e.g., [34].

For the randomized Gauss-Seidel and the Gauss-Southwell iterations, the following results are essentially known.

In fact, most of Theorem 2 is a special case of what was shown in [13] and [19] in the fairly more general context of (relaxed) randomized multiplicative Schwarz methods. For the sake of completeness, and to set the stage for our new results, we repeat the essentials of the proofs in [13] and [19] here.

We use the A-inner product and the A-energy norm which, for A hpd, are defined as

with 〈⋅,⋅〉 the standard inner product on \(\mathbb {C}^{n}\). Before we state the main theorem, we formulate the following useful result relating the harmonic and the arithmetic means of a sequence and the extrema of the product sequence.

Lemma 1

Let \(a_{i}, \gamma _{i} \in \mathbb {R}, i=1,\ldots , n\) with ai > 0,γi ≥ 0, i = 1,…,n. Then,

where

are the harmonic mean and the arithmetic mean, respectively.

Proof 1

Take the special convex combination of the ai with coefficients \(\hat {\gamma }_{i} = \frac {\alpha /n}{a_{i}}\). Then, \(a_{i} \hat {\gamma _{i}} = \alpha /n\), and for the convex combination of the ai with coefficients \(\tilde {\gamma }_{i} = \frac {\gamma _{i}}{n\gamma }\) there is at least one index, say j0, for which \(\tilde {\gamma }_{j_{0}} \geq \hat {\gamma }_{j_{0}}\), since otherwise \(\tilde {\gamma }_{i} < \hat {\gamma }_{i}\) for all i and thus \({\sum }_{i=1}^{n} \tilde {\gamma }_{i} < {\sum }_{i=1}^{n}\hat {\gamma }_{i} = 1\). This proves \(\max \limits _{i=1}^{n} \gamma _{i} a_{i} \geq \alpha /n \cdot n\gamma = \alpha \gamma \). The inequality for the minimum follows in a similar manner. □

Theorem 2

Let A be hpd and denote \(\lambda _{\min \limits } > 0\) its smallest eigenvalue.

-

In randomized relaxed Gauss-Seidel (Algorithm 3 with H = Hω = (1 − ω)I + ωD− 1B), the expected values for the squares of the norms of the errors ek = xk − x∗ satisfy

$$ \mathbb{E}\left( \|x^{k}-x^{*}\|_{A}^{2}\right) \leq (1- \alpha^{\text{rGS}})^{k} \| x^{0}-x^{*}\|_{A}^{2} $$(9)with

$$ \alpha^{\text{rGS}} = \omega(2-\omega)\lambda_{\min} \min\limits_{i=1}^{n} \frac{p_{i}}{a_{ii}} \cdot $$Herein, 1 − αrGS becomes smallest if we take pi = aii/tr(A) for all i, in which case (9) holds with

$$ \alpha^{\text{rGS}} = \alpha_{\text{opt}} = \omega(2-\omega)\frac{\lambda_{\min}}{\text{tr}(A)} ~\cdot $$(10) -

In relaxed Gauss-Southwell (Algorithm 2 with H = Hω = (1 − ω)I + ωD− 1B) and the greedy pick (4), we have

$$ \|x^{k}-x^{*}\|_{A}^{2} \leq (1- \alpha^{\text{GSW}} )^{k} \|x^{0}-x^{*}\|_{A}^{2} $$(11)with

$$\alpha^{\text{GSW}} = \omega(2-\omega)\lambda_{\min} \min\limits_{i=1}^{n} \frac{\pi_{i}}{a_{ii}}, \enspace \pi_{i} = \frac{1/{\beta_{i}^{2}}} {{\sum}_{j=1}^{n} 1/{\beta_{j}^{2}}} ~\cdot $$Herein, 1 − αGSW becomes smallest if we take \(\beta _{i} = {1}/{\sqrt {a_{ii}}}\) for all i in the greedy pick rule, i.e., we choose i such that

$$ \frac{|{r_{i}^{k}}|^{2}}{a_{ii}} = \max_{j=1}^{n} \frac{|{r_{j}^{k}}|^{2}}{a_{jj}}, $$(12)in which case (11) holds with αGSW the same optimal value as in (i), i.e., αGSW = αopt from (10).

Proof 2

If in relaxation k we choose to update component i, then using (8),

Therefore, in randomized Gauss-Seidel, the expected value for \(\| x^{k+1}-x^{*}\|_{A}^{2}\), conditioned to the given value for xk, is

with the last inequality holding due to \(\langle x^{k}-x^{*},x^{k}-x^{*} \rangle _{A} = \langle r^{k}, A^{-1}r^{k} \rangle \leq \frac {1}{\lambda _{\min \limits }} \langle r^{k}, r^{k} \rangle \). This gives (9). If we have pi = aii/tr(A), then \(\min \limits _{i=1}^{n} ({p_{i}}/{a_{ii}}) = {1}/{\text {tr}(A)}\), and this is larger or equal than \(\min \limits _{i=1}^{n} ({p_{i}}/{a_{ii}})\) for any choice of the probabilities pi by Lemma 1. This gives the second statement in part (i).

To prove part (ii), we observe that from the greedy pick rule \(\beta _{i} |{r_{i}^{k}}| \geq \max \limits _{j=1}^{n} \beta _{j} |{r_{j}^{k}}|\) we have \({\beta _{i}^{2}} |{r^{k}_{i}}|^{2}/\|r^{k}\|^{2} \geq {\beta _{j}^{2}} |{r^{k}_{j}}|^{2}/\|r^{k}\|^{2}\) for all j which, using Lemma 1 (with \(\gamma _{j} = |{r_{j}^{k}}|^{2}\)), gives

from which we deduce

So (14) this time yields

which results in (11). Finally, using Lemma 1 (with γi = πi), we obtain

and for the choice \(\beta _{i} = {1}/{\sqrt {a_{ii}}}\) we have πi = aii/tr(A) and thus

□

We note that if in randomized Gauss-Seidel we choose all probabilities to be equal, pi = 1/n for all i, then

in (9), which is smaller than αopt unless all diagonal elements aii are equal. We have a completely analogous situation for Gauss-Southwell: If we take the unweighted greedy pick rule (3), we obtain a value for αGSW which, interestingly, is the same than αrGS for randomized Gauss-Seidel with uniform probabilities. And this value is smaller than the value αopt that we obtain for the weighted greedy pick rule (12), a value which is, interestingly again, equal to what we obtain as the maximum value for αrGS in the randomized method (with the weighted probabilities pi = aii/tr(A)).

Note also that if one scales the hpd matrix A symmetrically so that it has unit diagonal, then the greedy pick (12) in Theorem 2 (ii) reduces to the standard Gauss-Southwell pick (3): Let G = D− 1/2AD− 1/2, then, the system (1) is equivalent to Gy = D− 1/2b with the change of variables x = D− 1/2y. Running Algorithm 2 for A and b in the variables x with the greedy pick (4) with \(\beta _{i} = 1/\sqrt {a_{ii}}\) is equivalent to running the same algorithm for G and D− 1/2b in the variables y with the standard greedy pick (3). One can then express the bounds of the theorem in the scaled variables in the appropriate energy norm, since we have ∥yk − y∗∥G = ∥D− 1/2yk − D− 1/2y∗∥A = ∥xk − x∗∥A; cf. [1, Section 3.1].

2.1 Numerical example

Throughout this paper, we give illustrative numerical examples based on the convection-diffusion equation for a concentration \(c = c(x,y,t): [0,1] \times [0,1] \times [0,T] \to \mathbb {C}\)

The positive diffusion coefficients α and β are allowed to depend on x and y, α = α(x,y),β = β(x,y), and this also holds for the velocity field (ν,μ) = (ν(x,y),μ(x,y)). We discretize in space using standard finite differences with N interior equispaced grid points in each direction. This leaves us with the semi-discretized system

where now c = c(t) is a two-dimensional array, each component corresponding to one grid point. Using the implicit Euler rule as a symplectic integrator means that at a given time t and a stepsize τ we have to solve

for c(t + 1). We illustrate the convergence behavior of the Gauss-Seidel variants considered in this paper when solving the system (16) for appropriate choices of α,β,μ and ν.

Since Theorem 2 deals with the hpd case, we now assume that there is no convection, μ = ν = 0. Then, B is the discretization of the diffusive term using central finite differences and as such it is an irreducible diagonally dominant M-matrix and thus hpd; see, e.g., [7]. Accordingly, \(A = I + \frac {\tau }{2}B\) is hpd as well. We took N = 100 which gives a spacing of \(h=\frac {1}{N+1}\), and τ = 0.5h2 and we consider two cases: constant diffusion coefficients

which gives a constant diagonal in A, and non-constant diffusion coefficients

which makes the entries on the diagonal of \(A = I + \frac {\tau }{2}B\) vary between \(1+\frac {4\tau }{2h^{2}}\) and \(1+\frac {38\tau }{2h^{2}}\), i.e., between 2 and 9.5.

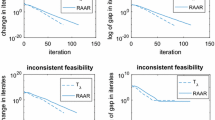

Figure 1 reports the numerical results. We chose the right-hand side \(\frac {\tau }{2} B c(t)\) in (16) as Az, where z is the discretized evaluation of the function xy(1 − x)(1 − y). So we know the exact solution, which allows us to report A-norms of the error, which is what we provided bounds for in Theorem 2. The figure displays these A-norms only after every n relaxations, which we treat as one “iteration”, since n relaxations indeed make make up one iteration in standard Gauss-Seidel.

The top row of Fig. 1 gives results for the constant diffusion case (17). The left diagram shows the relative A-norm of the errors for randomized Gauss-Seidel with uniform probabilities pi = 1/n, standard (“cyclic”) Gauss-Seidel and Gauss-Southwell with the greedy pick rule (3). For randomized Gauss-Seidel, we actually give here—as in all other experiments—the averages for ten runs which we regard as an approximation to the expected values. The plot to the right shows that the convergence behavior of these ten different runs exhibits only mild deviations. The plot on the left also contains the bound (1 − αopt)k/2 of Theorem 2. We see that randomized Gauss-Seidel converges approximately half as fast as cyclic Gauss-Seidel, that Gauss-Southwell converges somewhat faster than cyclic Gauss-Seidel, and that the theoretical bounds are not very tight.

The bottom row of Fig. 1 shows results for variable diffusion according to (18). Convergence is slower than in the constant diffusion case. The left plot has the results for the “optimal” probabilities \(p_{i} = \frac {a_{ii}}{\text {tr}(A)}\) and the “optimal” greedy pick (12), for which the bounds of Theorem 2 hold again and are also reported, whereas the right plot shows the results for the uniform probabilities pi = 1/n and the greedy pick rule (3). In this case, the bound of Theorem 2 holds with \(\alpha ^{\text {rGS}} = \alpha ^{\text {GSW}} = (\lambda _{\min \limits }/n) \min \limits _{i=1} 1/a_{ii}\), and this bound is also plotted. Interestingly, the two plots are virtually indistinguishable, except for a tiny improvement of randomized Gauss-Seidel when using “optimal” probabilities. We conclude that the choice of probabilities or the greedy pick rule has only a very marginal effect in this example. The plots also show that the proven bounds can be pessimistic in the sense that the actual convergence is significantly faster. This is not uncommon when dealing with randomized algorithms, and we will address this further when discussing the numerical results illustrating the new convergence theorems for diagonally dominant matrices in Section 3.

Although not being further addressed in this paper, we now shortly present basic numerical results on the performance of the various relaxation methods when used as a smoother in a multigrid method. This was mentioned as a possible application in the introduction. We consider a V-cycle multigrid method for the standard discrete Laplacian on a N × N grid (with N+ 1 a power of 2) with Dirichlet boundary conditions. Restriction and prolongation are done via the usual linear interpolation, doubling the grid spacing from one level to the next and going down to a minimum grid size of 7 × 7; see [33]. For each smoother, on each level ℓ with a grid size of Nℓ × Nℓ, we perform a constant number of s post- and s pre-smoothing “iterations” amounting to sNℓ relaxations. For standard Gauss-Seidel and Gauss-Southwell, we use s = 1, whereas for randomized Gauss-Seidel we tested s = 1,s = 1.5, and s = 2.

Figure 2 indicates that randomized Gauss-Seidel has its potential for being used as a smoother in multigrid, provided that its slower convergence can be outweighed by a more efficient implementation, as it might be possible in a parallel environment. The left plot gives convergence plots for N = 127. The right plot reports the number of V-cycles required to reduce the initial residual by a factor of 10− 6 for various grid sizes N. We see that the convergence speed with randomized Gauss-Seidel and Gauss-Southwell smoothing is independent of the grid size just as with standard Gauss-Seidel smoothing, thus preserving one of the most important properties of the multigrid approach. An interesting feature that random Gauss-Seidel shares with the Gauss-Southwell method is that we can prescribe a fractional number of smoothing iterations and thus adapt the computational work on a finer scale than with standard Gauss-Seidel. The figure also shows that for this example Gauss-Southwell yields faster convergence than standard Gauss-Seidel for the same number of relaxations.

Different relaxation schemes as smoothers in multigrid. Randomized GS 1, 2, and 3 correspond to s = 1, s = 1.5, and s = 2 smoothing “iterations” in randomized Gauss-Seidel. Left: relative residual norms for N = 127. Right: no. of V-cycles to reduce the initial residual by a factor of 10− 6 for different grid sizes

3 Results for non-Hermitian matrices

We now present several theorems which are counterparts to Theorem 2 for classes of not necessarily Hermitian matrices, and the iteration matrix H = M− 1N in (2) may arise from a general splitting A = M − N other than the (relaxed) Jacobi splitting. In place of the A-norm, we will now use weighted ℓ1-norms.

Definition 3

For a given vector \(u \in \mathbb {R}^{n}\) with positive components ui > 0, i = 1,…,n, the weighted ℓ1-norm on \(\mathbb {C}^{n}\) is defined as

Clearly, the standard ℓ1-norm is obtained for u = (1,…,1)T. It is easy to see that the associated operator norm for \(A \in \mathbb {C}^{n \times n}\) is the weighted column sum-norm

In the theorems to follow, we will state results in terms of the preconditioned residual

and we denote K the preconditioned matrix K = M− 1A = I − H.

Our first theorem assumes ∥H∥u,1 < 1 and gives bounds on the weighted ℓ1-norms of the preconditioned residuals in Algorithms 2 and 3 similar in nature to those in Theorem 2.

Theorem 4

Consider the weighted column sums

and assume that \(\|H\|_{1,u} = \max \limits _{j=1}^{n} \rho _{j} < 1\). Set γj := (1 − ρj)− 1,j = 1,…,n.

-

In randomized relaxation (Algorithm 3), putting

$$ \alpha^{\text{ra}} = \min_{j=1}^{n} \frac{p_{j}}{\gamma_{j}} , $$(19)the expected values for the weighted ℓ1-norm of the preconditioned residuals \(\hat r^{k}= M^{-1}r^{k} = c-Kx^{k}\) of the iterates xk satisfy

$$ \mathbb{E}(\|\hat r^{k}\|_{1,u}) \leq \left( 1-\alpha^{\text{ra}} \right)^{k} \| \hat r^{0} \|_{1,u}. $$(20)The quantity αra in (19) is maximized if one takes

$$ p_{i} = \gamma_{i}/{\sum}_{j=1}^{n} \gamma_{j}, \enspace j=1,\ldots,n; $$(21)its value then is \(\alpha ^{\text {ra}} = \alpha _{\text {opt}} := 1 / {\sum }_{j=1}^{n} \gamma _{j}\).

-

In greedy relaxation (Algorithm 2), with the greedy pick rule (5) based on the preconditioned residual, putting

$$ \alpha^{\text{gr}} = \min_{j=1}^{n} \frac{\pi_{j}}{\gamma_{j}}, \text{ where } \pi_{j} = \frac{u_{j}/\beta_{j}}{{\sum}_{\ell=1}^{n} u_{\ell}/\beta_{\ell}}, $$the weighted ℓ1-norms of the preconditioned residuals \(\hat r^{k}= M^{-1}r^{k} = c-Kx^{k}\) of the iterates xk satisfy

$$ \|\hat r^{k}\|_{1,u} \leq \left( 1-\alpha^{\text{gr}} \right)^{k} \| \hat r^{0} \|_{1,u}. $$(22)Moreover, αgr is maximized if we take

$$ \beta_{j} = u_{j}/\gamma_{j}, \enspace j=1,\ldots,n; $$(23)its maximal value is identical to αopt from part (i).

Proof 3

If i is the index chosen at iteration k, we have

which gives

We therefore have

To prove part (i), we use (24) to see that the expected value of the norm of the residual \(\hat r^{k+1}\), conditioned to the given value for \(\hat r^{k}\), satisfies

from which we get (20). Moreover, the minimum \(\alpha ^{\text {ra}} = \min \limits _{j=1}^{n} p_{j}/\gamma _{j}\) is not larger than the convex combination \({\sum }_{j=1}^{n} \left (\gamma _{j}/{\sum }_{\ell =1}^{n} \gamma _{\ell }\right ) \cdot p_{j}/\gamma _{j} = 1/ {\sum }_{\ell =1}^{n} \gamma _{\ell }\), and this value is attained for αra if we choose \(p_{i} = \gamma _{i}/{\sum }_{\ell =1}^{n} \gamma _{\ell }\).

To prove part (ii), we observe that due to the greedy pick rule (5) we have

which, using Lemma 1, gives

Together with (24) this gives (22). Finally, using Lemma 1 again, we obtain

which gives

And αopt is attained as value for αgr if we take βj = uj/γj for j = 1,…,n. □

The convergence results of Theorem 4 are given in terms of the weighted ℓ1-norm, since it is this norm for which we can prove a decrease in every relaxation due to the assumption ∥H∥u,1 < 1. As we will soon see, for randomized Gauss-Seidel and Gauss-Southwell, this assumption is equivalent to a (generalized) diagonal dominance assumption on A, a condition which is often fulfilled in applications and which can be checked easily, at least when the weights are all 1. In this context, it is worth mentioning that results like the bound (22) yield a bound on the R1 convergence factor of the sequence xk − x∗, the standard measure of the convergence rate for a linearly zero-convergent sequence defined as

see, e.g., [23]. The R1-factor is independent of the norm ∥⋅∥, and results like (22) may be interpreted in a norm-independent manner by saying that

From Theorem 4, we see that with the optimal choices for the probabilities pi or the weights βi, the proven bounds for randomized relaxation and greedy relaxation are identical. So from the point of view of the established theory we cannot conclude that randomized would outperform greedy or vice versa. In all our practical experiments, though, the greedy approach exposed faster convergence than the randomized approach.

Also note that if we just take pi = 1/n for all i in randomized relaxation, then

and the same value is attained for αgr in greedy relaxation if we take βi = ui for all i. The optimal value αopt is attained for the greedy pick rule (23). If we take the standard greedy pick rule (3), i.e., βi = 1 for all i, we have

which, depending on the values of uj, can be smaller or larger than \(\frac {1}{n}(1-\max \limits _{j=1}^{n} \rho _{j})\) but is certainly never larger than αopt obtained with the pick rule (23).

In Theorem 4, we need to know the weights ui and with them the weighted column sums ρi in order to be able to choose the probabilities pi or the greedy pick for which we get the strongest convergence bound, i.e., the largest value for αra and αgr. For example, it might be that we can take u = (1,…,1), such that ∥⋅∥1,u reduces to the standard ℓ1-norm. However, it might also be that we know that ∥H∥1,u < 1 for some u > 0 without knowing u explicitly. Theorem 4 tells us that we still have convergence for any choice of probabilities pi in randomized relaxation or weights βi in greedy relaxation, but the proven convergence bounds are weaker than for the “optimal” probabilities (21) or weights (23).

In light of this discussion, it is interesting that for a particular vector of weights u we can somehow reverse the situation, at least for the randomized iteration: We know how to choose the corresponding optimal values for the probabilities while we do not need to know u explicitly.

In order to prepare this result, we recall the following left eigenvector version of the Perron-Frobenius theorem; see, e.g., [34]. Note that a square matrix H is called irreducible if there is no permutation matrix P such that PTAP has a 2 × 2 block structure with a zero off-diagonal block. We also use the notation H ≥ 0 (“H is nonnegative”) if all entries hij of H are nonnegative. Similarly, a vector \(w \in \mathbb {R}^{n}\) is called nonnegative (w ≥ 0) or positive (w > 0), if all its components are nonnegative or positive, respectively.

Theorem 5

Let \(H \in \mathbb {R}^{n \times n}\), H ≥ 0, be irreducible. Then, there exists a positive vector \(w \in \mathbb {R}^{n}\), the “left Perron vector” of H, such that wTH = ρ(H)wT, where ρ = ρ(H) > 0 is the spectral radius of H. Moreover, w is unique up to scaling with a positive scalar.

A direct consequence of Theorem 5 is that for H ≥ 0 irreducible we have

and thus ∥H∥w,1 = ρ.

If H ≥ 0 is not irreducible, a positive left Perron vector needs not necessarily exist. However, we have the following approximation result which we state as a lemma for future reference.

Lemma 6

Assume that \(H \in \mathbb {R}^{n \times n}\) is nonnegative. Then, for any 𝜖 > 0, there exists a positive vector w𝜖 > 0 such that \(w_{\epsilon }^{T} H \leq (\rho +\epsilon )w_{\epsilon }^{T}\).

Proof 4

For given 𝜖 > 0, due to the continuity of the spectral radius, we can choose δ > 0 small enough such that the spectral radius of the irreducible matrix Hδ = H + δE, E the matrix of all ones, is less or equal than ρ + 𝜖. Now take w𝜖 as the left Perron vector of Hδ. □

We are now ready to prove the following theorem where we use the notation |H| for the matrix resulting from H when replacing each entry by its absolute value. Interestingly, the theorem establishes a situation where we know how to choose optimal probabilities (in the sense of the proven bounds) without explicit knowledge of the weights.

Theorem 7

Assume that ρ = ρ(|H|) < 1 and consider randomized relaxation (Algorithm 3).

-

If H is irreducible, then there exists a positive vector of weights w such that the weighted ℓ1-norm of the preconditioned residuals \(\hat r^{k}= M^{-1}r^{k}\) of the iterates xk satisfies

$$ \mathbb{E}(\|\hat r^{k}\|_{1,w}) \leq \left( 1-\alpha \right)^{k} \| \hat r^{0} \|_{1,w}, \text{ where } \alpha = (1-\rho)\min_{j=1}^{n} p_{j}. $$Moreover, α is maximized if one takes pj = 1/n for j = 1,…,n; its value then is αopt = (1 − ρ)/n.

-

If H is not irreducible, then for every 𝜖 > 0 such that ρ + 𝜖 < 1 there exists a positive vector of weights w𝜖 such that the weighted ℓ1-norm of the preconditioned residuals \(\hat r^{k}= M^{-1}r^{k}\) of the iterates xk satisfies

$$ \mathbb{E}(\|\hat r^{k}\|_{1,w_{\epsilon}}) \leq \left( 1-\alpha^{\epsilon} \right)^{k} \| \hat r^{0} \|_{1,w_{\epsilon}} \text{ where } \alpha^{\epsilon} = (1-(\rho+\epsilon))\min_{j=1}^{n} p_{j} $$Moreover, α𝜖 is maximized if one takes pj = 1/n for j = 1,…,n; its value then is \(\alpha ^{\epsilon }_{\text {opt}} = (1-\rho -\epsilon )/{n}\).

Proof 5

Part (i) follows immediately from Theorem 4 by taking w as the left Perron vector of |H|, noting that with this vector we have ρj = ρ for j = 1,…,n. Part (ii) follows from Theorem 4, too, now taking w𝜖 as the vector from Lemma 6, observing that for this vector we have ρj ≤ ρ + 𝜖 for j = 1,…,n for the weighted column sums

□

Interestingly, Theorem 7 cannot be transferred to greedy relaxation, at least not with the techniques used there. Indeed, in order to obtain a bound \(\| \hat r^{k+1}\|_{1,w} \leq (1-\alpha _{\text {opt}})^{k} \| \hat r^{k}\|_{1,w}\) when H is irreducible, e.g., the bounds given in Theorem 4 tell us that we would have to use the greedy pick rule

which requires the knowledge of w.

4 Randomized Gauss-Seidel and Gauss-Southwell for H-matrices

Building on Theorem 4, we now derive convergence results for the randomized Gauss-Seidel and the Gauss-Southwell method when A is an H-matrix.

Definition 8 (See, e.g., 7)

-

A matrix \(A =(a_{ij}) \in \mathbb {R}^{n \times n}\) is called an M-matrix if aij ≤ 0 for i≠j and it is nonsingular with A− 1 ≥ 0.

-

A matrix \(A \in \mathbb {C}^{n \times n}\) is called an H-matrix, if its comparison matrix 〈A〉 with

$$ \langle A \rangle_{ij} = \left\{ \begin{array}{rl} |a_{ii}| & \text{if $i = j$} \\ -|a_{ij}| & \text{if $i \neq j$} \end{array} \right. $$is an M-matrix.

Clearly, an M-matrix is also an H-matrix. For our purposes, it is important that H-matrices can equivalently be characterized as being generalized diagonally dominant.

Lemma 9

Let \(A \in \mathbb {C}^{n \times n}\) be an H-matrix. Then,

-

There exists a positive vector \(v\in \mathbb {R}^{n}\) such that A is generalized diagonally dominant by rows, i.e.,

$$ |a_{ii}|v_{i} > {\sum}_{j =1, j\neq i}^{n} |a_{ij}|v_{j} \text{ for } i = 1,\ldots,n. $$ -

There exists a positive vector u > 0 such that A is generalized diagonally dominant by columns, i.e.,

$$ u_{j} |a_{jj}| > {\sum}_{i =1, i\neq j}^{n} u_{i} |a_{ij}| \text{ for } j = 1,\ldots,n. $$

Proof 6

Part (i) can be found in many text books; one can take v = 〈A〉− 1e, with e = (1,…,1)T. Part (ii) follows similarly by taking uT as the row vector eT〈A〉− 1. □

The lemma implies the following immediate corollary.

Corollary 10

Let A be an H-matrix and let A = D − B be its Jacobi splitting with D the diagonal part of A. Then, the iteration matrix |D− 1B| belonging to the Jacobi splitting 〈A〉 = |D|−|B| of 〈A〉 satisfies ∥D− 1B|∥1,u < 1 with u the vector from Lemma 9(ii).

With these preparations, we easily obtain the following first theorem on the (unrelaxed) randomized Gauss-Seidel and Gauss-Southwell methods. We formulate it using the residuals b − Axk of the original equation.

Theorem 11

Let A be an H-matrix and let u be a positive vector such that uT〈A〉 > 0. Let A = D − B be the Jacobi splitting of A and put H = D− 1B. Moreover, let w = (w,…,wn) with wj = uj/|ajj|,j = 1,…,n. Then,

-

All weighted column sums

$$ \rho_{j} = \frac{1}{u_{j}}{\sum}_{i=1}^{n} |h_{ij}| u_{i} $$(25)are less than 1.

-

In the randomized Gauss-Seidel method, i.e., Algorithm 3 for H = D− 1B, the expected values of the w-weighted ℓ1-norm ∥rk∥1,w of the original residuals satisfy

$$ \mathbb{E}(\|r^{k}\|_{1,w}) \leq \left( 1-\alpha^{\text{rGS}} \right)^{k} \| r^{0} \|_{1,w}, $$where \(\alpha ^{\text {rGS}} = \min \limits _{j=1}^{n} (p_{j}/\gamma _{j}) >0\) and γj = (1 − ρj)− 1 for j = 1,…,n. The value of αrGS is maximized for the choice \(p_{i} = \gamma _{i} / {\sum }_{\ell =1}^{n} \gamma _{\ell }\), and the resulting value for αrGS is \(\alpha _{\text {opt}} = 1/{\sum }_{\ell =1}^{n} \gamma _{\ell }\).

-

In the Gauss-Southwell method (Algorithm 2 with H = D− 1B), using the greedy pick rule (5) based on the preconditioned residual, the w-weighted ℓ1-norm of the original residuals of the iterates xk satisfies

$$ \| r^{k}\|_{1,w} \leq \left( 1-\alpha^{\text{gr}} \right)^{k} \| r^{0} \|_{1,w}, $$with

$$ \alpha^{\text{gr}} = \min_{j=1}^{n} \frac{\pi_{j}}{\gamma_{j}}, \text{ where } \pi_{j} = \frac{u_{j}/\beta_{j}}{{\sum}_{\ell=1}^{n} u_{\ell}/\beta_{\ell}}. $$Moreover, αgr is maximized if we take

$$ \beta_{j} = u_{j}/\gamma_{j}, \enspace j=1,\ldots,n; $$its maximal value is then αopt from (i).

Proof 7

For (i), observe that we have \(|a_{jj}|u_{j} > {\sum }_{i=1, i\neq j}^{n} |a_{ij}| u_{i}\) for j = 1,…,n and thus, since hij = aij/aii for i≠j and hjj = 0,

Parts (ii) and (iii) now follow directly from Theorem 4, observing that for the residual \(\hat r^{k} = D^{-1}r^{k}\) we have \(\| \hat r^{k} \|_{1,u} = \| r^{k} \|_{1,w}\) . □

Note that for Gauss-Southwell the greedy pick rule (5) with weights βi based on the preconditioned residual is equivalent to the greedy pick rule (4) based on the original residual with weights βi/aii.

Instead of changing the weights from u to w, it is also possible to obtain a bound for the u-weighted ℓ1-norm, where, in addition, the same optimal choice for the pj in the randomized Gauss-Seidel iteration yields the same αopt as that of Theorem 11, and similarly for the Gauss-Southwell iteration.

Theorem 12

Let A be an H-matrix and let u be a positive vector such that uT〈A〉 > 0. Then,

-

In the randomized Gauss-Seidel method, the expected values of the u-weighted ℓ1-norm ∥rk∥1,u of the residuals satisfy

$$ \mathbb{E}(\|r^{k}\|_{1,u}) \leq \left( 1-\alpha \right)^{k} \| r^{0} \|_{1,u}, $$where \(\alpha = \min \limits _{j=1}^{n} ({p_{j}}/{\gamma _{j}}) >0\) and γj = (1 − ρj)− 1, ρj from (25), for j = 1,…,n. The value of α is maximized for the choice

$$ p_{i} = \gamma_{i} / {\sum}_{\ell=1}^{n} \gamma_{\ell}, $$(26)and the resulting value for α is \(\alpha _{\text {opt}} = 1/{\sum }_{\ell =1}^{n} \gamma _{\ell }\).

-

In the Gauss-Southwell method, if we take the same greedy pick as in Theorem 11, i.e.,

$$ {(1-\rho_{i})}\frac{u_{i}}{|a_{ii}|}{|{r^{k}_{i}}|} = \max_{j=1}^{n} {(1-\rho_{j})}\frac{u_{j}}{|a_{jj}|}{|{r^{k}_{j}}|}, $$(27)then

$$ \|r^{k}\|_{1,u} \leq \left( 1- \alpha_{\text{opt}} \right)^{k} \| r^{0} \|_{1,u}. $$

Proof 8

If i is the index chosen in iteration k in randomized Gauss-Seidel or Gauss-Southwell, we have from (8)

This gives

This is exactly the same relation as (24), but now for the original residuals rather than the preconditioned ones. Parts (i) and (ii) therefore follow exactly in the same manner as in the proof of Theorem 4. □

Let us mention that, if A and thus |H| = |D− 1B| is irreducible, the left Perron vector u of |H| is a vector with uT〈A〉 > 0. As was discussed after Theorem 4, this means that with respect to the weights from this vector we know the optimal probabilities in randomized Gauss-Seidel to be pi = 1/n,i = 1,…,n, i.e., we do not need to know u explicitly. According to Lemma 6, a similar observation holds in an approximate sense with arbitrary precision when A is not irreducible.

We also remark that the preconditioned residual \(\hat r^{k} = D^{-1}r^{k}\) satisfies \(\|\hat r^{k}\|_{1,u} = \| r^{k} \|_{1,w}\) with u,w from Theorems 11 and 12. So with these two theorems we have obtained identical convergence bounds for the u-weighted ℓ1-norm of the unpreconditioned and the preconditioned residuals.

Theorem 11 can be extended to relaxed randomized Gauss-Seidel iterations. We formulate the results only for the case where the weight vector is the left Perron vector. While this is not mandatory as long as the relaxation parameter ω satisfies ω ∈ (0,1], it is crucial for the part which extends the range of ω to values larger than 1.

Theorem 13

Let A be an H-matrix and let A = D − B be its Jacobi splitting. Put H = D− 1B and ρ = ρ(|H|) < 1. Assume that \(\omega \in (0,\frac {2}{1+\rho })\) and define ρω = ωρ + |1 − ω|∈ (0,1). Consider the relaxed randomized Gauss-Seidel iteration, i.e., Algorithm 3 with the matrix Hω from (7). Then,

-

If A and thus |H| is irreducible, then with u the left Perron vector of |H| and w the positive vector with components wi = ui/|aii|, the expected values for the w-weighted ℓ1-norm of the residuals satisfy

$$ \mathbb{E}(\|r^{k}\|_{1,w}) \leq \left( 1- \alpha_{\omega} \right)^{k} \| r^{0} \|_{1,w}, $$where \(\alpha _{\omega } = \min \limits _{j=1}^{n} p_{j}/\gamma _{\omega } >0\), γω = (1 − ρω)− 1. The value of αω is maximized for the choice pi = 1/n, and the resulting value for αω is \(\alpha _{\omega }^{\text {opt}} = (1-\rho _{\omega })/{n}\).

-

If A is not irreducible, then for every 𝜖 > 0 such that ρω + 𝜖 < 1 there exists a positive vector w𝜖 such that the expected values for the weighted ℓ1-norm of the residuals satisfy

$$ \mathbb{E}(\|r^{k}\|_{1,w_{\epsilon}}) \leq \left( 1- \alpha_{\omega}(\epsilon) \right)^{k} \| r^{0} \|_{1,w_{\epsilon}}, $$where \(\alpha _{\omega }(\epsilon ) = \min \limits _{j=1}^{n} p_{j}/\gamma _{\omega }(\epsilon ) >0\), γω(𝜖) = (1 − ρω − 𝜖)− 1. The value of αω(𝜖) is maximized for the choice pi = 1/n, and the resulting value for α is \(\alpha _{\omega }^{\text {opt}}(\epsilon ) = (1-\rho _{\omega } - \epsilon )/{n}\).

Proof 9

We indeed have ρ < 1 since by Corollary 10 the operator norm ∥|H|∥1,u is less than 1 for some vector u > 0. Assume first that H is irreducible and let u > 0 be the left Perron vector of |H|. Then, we have for all j = 1,…,n

which for the weighted column sums of the matrix Hω = (1 − ω)I + ωH belonging to the relaxed iteration gives

Since ρ < 1, we have that ρω < 1 for \(\omega \in (0,\frac {2}{1+\rho })\). The result now follows applying Theorem 4 with the weight vector u, using the facts that all weighted column sums ρj are now equal to ρω and that \(|\hat {r}_{i}^{k}| = \frac {1}{|a_{ii}|} | {r^{k}_{i}}|\), which gives the weights wi = ui/|aii| in (i).

If H is not irreducible, the proof proceeds in exactly the same manner, choosing u𝜖 > 0 as a vector for which |H|u𝜖 ≤ (ρ + 𝜖)u𝜖; see the discussion after Theorem 5. □

For the same reasons as those explained after Theorem 7, it is not possible to expand Theorem 13 to Gauss-Southwell unless we know the Perron vector u and include it into the greedy pick rule. We do not state this as a separate theorem.

4.1 Numerical example

We consider again the implicit Euler rule for the convection-diffusion (15), now with a non-vanishing and non-constant velocity field describing a re-circulating flow,

We will consider the two choices σ = 1 (weak convection) and σ = 400 (strong convection); the diffusion coefficients α and β are constant and equal to 1. With N = 100 and \(\tau = 0.5h^{2}, h = \frac {1}{N+1}\), as in the example in Section 2, the matrix \(A=I+\frac {\tau }{2}B\) from (16) is diagonally dominant for both σ = 1 and σ = 400. So we take the weight vector u to have all components equal to 1 and we report results on the ℓ1-norm of the residuals for randomized Gauss-Seidel and Gauss-Southwell as an illustration of Theorem 12. Since this time we are interested in the residuals rather than in the errors, it is not mandatory to fix the right-hand side such that we know the solution, but to stay in line with the earlier experiments we actually did so with the solution being again the discretization of xy(1 − x)(1 − y).

The top row of Fig. 3 displays, as before, these ℓ1-norms only after every n relaxations, considered as one iteration. For both values of σ, we take the probabilities pi from (26) in randomized Gauss-Seidel and the greedy pick rule (27) for Gauss-Southwell, so that Theorem 12 applies, and the plots also report the bounds for the ℓ1-norm of the residual given in that theorem.

The plots in the top row show a similar behavior of the different relaxation methods as in the hpd case, with Gauss-Southwell being fastest, especially for strong convection, and randomized Gauss-Seidel converging roughly half as fast as cyclic Gauss-Seidel—and this for both values of σ. As opposed to the hpd case, the theoretical bounds are now much closer to the actually observed convergence behavior of the randomized relaxations. For σ = 1, the bounds are actually that close that in the graph they are hidden behind the reported residual norms. For σ = 400, the bounds can be distinguished from the residual norms in the plots, but they are still remarkably close.

The bottom row of Fig. 3 contains results for the cyclic and the randomized Kaczmarz methods. With \(a_{i}^{*}\) denoting the i th row of A, and ai the corresponding column vector, relaxing component i in Kaczmarz amounts to the update

We report these results since they allow for a comparison, randomized Kaczmarz being one of the most prominent randomized system solvers for nonsymmetric systems.

The plots report the 2-norm of the error for which we know a bound given by the right-hand side in the inequality

where \(\sigma _{\min \limits }(A)\) is the smallest non-zero singular value of A. This bound holds if “optimal” probabilities pi are chosen, see [32], as \( p_{i} = \|a_{i}^{*}|_{2}^{2}/\|A\|_{F}^{2}\), which is what we did for these experiments.

We see that as with Gauss-Seidel, the cyclic algorithms converge approximately twice as fast than the randomized algorithm. The theoretical bounds are less sharp than for the Gauss-Seidel methods. For both matrices, the cyclic and randomized Kaczmarz methods converge significantly slower than their Gauss-Seidel counterparts while, moreover, one relaxation in Kaczmarz needs approximately twice as many operations than in Gauss-Seidel. Since the plots on the top row report ℓ1-norms rather than 2-norms of the error, let us just mention that in the weak convection case, σ = 1, the relative 2-norm of the residual in the randomized Gauss-Seidel run from the top row of Fig. 3 is 1.22 ⋅ 10− 6 at iteration 41, and in the strong convection case, σ = 400, it is 1.65 ⋅ 10− 6 at iteration 60. On the other hand, randomized Kaczmarz failed to converge to the desired tolerance of 10− 6 within 100 iterations.

5 Conclusion

We developed theoretical convergence bounds for both randomized and greedy pick relaxations for nonsingular linear systems. While we mainly reviewed results for the Gauss-Seidel relaxations in the case of a Hermitian positive definite matrix A, we presented several new convergence results for nonsymmetric matrices in the case where the iteration matrix has a weighted ℓ1-norm less than 1. From this, we could deduce several convergence results for randomized Gauss-Seidel and Gauss-Southwell relaxations for H-matrices. We also presented results which show how to choose the probability distributions (in the case of randomized relaxations) or the greedy pick rule (in the case of greedy iterations) which minimize our convergence bounds. Numerical experiments illustrate our theoretical results and also show that the methods analyzed are faster than Kaczmarz for square matrices.

References

Avron, H., Druinsky, A., Gupta, A.: Revisiting asynchronous linear solvers: provable convergence rate through randomization. J. ACM. 62(Article 51), 27 (2015)

Bai, Z.-Z., Wu, W.-T.: On convergence rate of the randomized Kaczmarz method. Linear Algebra Appl. 553, 252–269 (2018)

Bai, Z.-Z., Wu, W.-T.: On relaxed greedy randomized Kaczmarz methods for solving large sparse linear systems. Appl. Math. Lett. 83, 21–26 (2018)

Bai, Z.-Z., Wu, W.-T.: On greedy randomized augmented Kaczmarz method for solving large sparse inconsistent linear systems. SIAM J. Sci. Comput. 43, A3892–A3911 (2021)

Bai, Z.-Z., Wang, L., Wu, W.-T.: On convergence rate of the randomized Gauss-Seidel method. Linear Algebra Appl. 611, 237–252 (2021)

Bai, Z.-Z., Wang, L., Muratova, G.V.: On relaxed greedy randomized augmented Kaczmarz methods for solving large sparse inconsistent linear systems. East Asian J. Appl. Math. 12, 323–332 (2022)

Berman, A., Plemmons, R.J.: Nonnegative Matrices in the Mathematical Sciences, Volume 9 of Classics in Applied Mathematics. SIAM, Philadelphia (1994)

Du, K.: Tight upper bounds for the convergence of the randomized extended Kaczmarz and Gauss-Seidel algorithms. Numer. Linear Algebra Appl. 26, 14 (2019)

Frommer, A., Szyld, D.B.: On asynchronous iterations. J. Comput. Appl. Math. 123, 201–216 (2000)

Glusa, C., Boman, E.G., Chow, E., Rajamanickam, S., Szyld, D.B.: Scalable asynchronous domain decomposition solvers. SIAM J. Sci. Comput. 42, C384–C409 (2020)

Gower, R.M., Richtárk, P.: Randomized iterative methods for linear systems. SIAM J. Matrix Anal. Appl. 36, 1660–1690 (2015)

Gower, R.M., Molitor, D., Moorman, J., Needell, D.: On adaptive sketch-and-project for solving linear systems. SIAM J. Matrix Anal. Appl. 42, 954–989 (2021)

Griebel, M., Oswald, P.: Greedy and randomized versions of the multiplicative Schwarz method. Linear Algebra Appl. 437, 1596–1610 (2012)

Guan, Y.-J., Li, W.-G., Xing, L.-L., Qiao, T.-T.: A note on convergence rate of randomized Kaczmarz method. Calcolo. 57(Paper No. 26), 11 (2020)

Guo, J.H., Li, W.G.: The randomized Kaczmarz method with a new random selection rule. Numer. Math. J. Chin. Univ. Gaodeng Xuexiao Jisuan Shuxue Xuebao. 40, 65–75 (2018)

Haddock, J., Ma, A.: Greed works: an improved analysis of sampling Kaczmarz-Motzkin. SIAM J. Math. Data Sci. 3, 342–368 (2021)

Kaczmarz, S.: Angenäherte Auflösung von Systemen linearer Gleichungen. Bull. Int. Acad. Pol. Sci. Lettres. Cl. Sci. Math. Nat. A. 35, 355–357 (1937)

Kannan, R., Vempala, S.: Randomized algorithms in numerical linear algebra. Acta Numerica., 95–135 (2017)

Leventhal, D., Lewis, A.S.: Randomized methods for linear constraints: convergence rates and conditioning. Math. Oper. Res. 35, 641–654 (2010)

Ma, A., Needell, D., Ramdas, A.: Convergence properties of the randomized randomized extended Gauss-Seidel and Kaczmarz algorithms. SIAM J. Matrix Anal. Appl. 36, 1590–1604 (2015)

Magoulès, F., Szyld, D.B., Venet, C.: Asynchronous optimized Schwarz methods with and without overlap. Numer. Math. 137, 199–227 (2017)

Martinsson, P.-G., Tropp, J.A.: Randomized numerical linear algebra: foundations and algorithms. Acta Numerica., 403–572 (2020)

Ortega, J. M., Rheinboldt, W. C.: Iterative Solution of Nonlinear Equations in Several Variables, volume 30 of Classics in Applied Mathematics. Society for Industrial and Applied Mathematics (SIAM), Philadelphia (2000). Reprint of the 1970 original

Richtárik, P., Takáč, M.: Stochastic reformulations of linear systems: algorithms and convergence theory. SIAM J. Matrix Anal. Appl. 41, 487–524 (2020)

Rüde, U.: Mathematical and Computational Techniques for Multigrid Adaptive Methods. SIAM, Philadelphia (1993)

Saad, Y.: Iterative Methods for Sparse Linear Systems. PWS Publishing Co. Boston, (1966). Second edition, pp.701–702. SIAM, Philadelphia (2003)

Saad, Y.: Iterative methods for linear systems of equations: a brief historical journey. In: Brenner, S.C., Shparlinski, I., Shu, C.-W., Szyld, D.B. (eds.) Mathematics of Computation 75 Years. American Mathematical Society, Providence (2020)

Smith, B.F., Bjørstad, P.E., Gropp, W.: Domain Decomposition: Parallel Multilevel Methods for Elliptic Partial Differential Equations. Cambridge University Press (1996)

Steinerberger, S.: A weighted randomized Kaczmarz method for solving linear systems. Math. Comput. 90, 2815–2826 (2021)

Steinerberger, S.: Randomized Kaczmarz converges along small singular vectors. SIAM J. Matrix Anal. Appl. 42, 608–615 (2021)

Strikwerda, J.C.: A probabilistic analysis of asynchronous iteration. Linear Algebra Appl. 349, 125–154 (2002)

Strohmer, T., Vershynin, R.: A randomized Kaczmarz algorithm with exponential convergence. J. Fourier Anal. Appl. 15(2), 262–278 (2009). https://doi.org/10.1007/s00041-008-9030-4

Trottenberg, U., Oosterlee, C., Schuller, A.: Multigrid. Academic Press, New York (2000)

Varga, R.S.: Matrix Iterative Analysis. Prentice-Hall, Englewood Cliffs (1962). Second Edition, revised and expanded, Springer, Berlin (2000)

Wang, F., Li, W., Bao, W., Liu, L.: Greedy randomized and maximal weighted residual Kaczmarz methods with oblique projection. Electron. Res. Arch. 30, 1158–1186 (2022)

Wolfson-Pou, J., Chow, E.: Distributed Southwell: an iterative method with low communication costs. In: International Conference for High Performance Computing, Networking, Storage, and Analysis (SC17), (13 pages). Association for Computing Machinery, Denver (2017)

Yang, X.: A geometric probability randomized Kaczmarz method for large scale linear systems. Appl. Numer. Math. 164, 139–160 (2021)

Acknowledgements

We want to thank Karsten Kahl from the University of Wuppertal for sharing his Matlab implementations constructing convection-diffusion matrices with us. We also thank Vahid Mahzoon from Temple University for some preliminary experiments which helped guide our thinking. We express our gratitude to the two reviewers for their comments and questions, which helped improve our presentation.

Funding

Open Access funding enabled and organized by Projekt DEAL.

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

Conflict of interest

The authors declare no competing interests.

Additional information

Data availability

Data sharing not applicable to this article as no datasets were generated or analyzed during the current study

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Frommer, A., Szyld, D.B. On the convergence of randomized and greedy relaxation schemes for solving nonsingular linear systems of equations. Numer Algor 92, 639–664 (2023). https://doi.org/10.1007/s11075-022-01431-7

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s11075-022-01431-7

Keywords

- Randomized Gauss-Seidel

- Convergence bounds

- Greedy algorithms

- Gauss-Southwell algorithm

- Randomized smoother