Abstract

In this work, we consider symmetric positive definite pencils depending on two parameters. That is, we are concerned with the generalized eigenvalue problem \(\left (A(x)-\lambda B(x)\right )v=0\), where A and B are symmetric matrix valued functions in \(\mathbb {R}^{n\times n}\), smoothly depending on parameters \(x\in {\Omega }\subset \mathbb {R}^2\); furthermore, B is also positive definite. In general, the eigenvalues of this multiparameter problem will not be smooth, the lack of smoothness resulting from eigenvalues being equal at some parameter values (conical intersections). Our main goal is precisely that of locating parameter values where eigenvalues are equal. We first give general theoretical results for the present generalized eigenvalue problem, and then introduce and implement numerical methods apt at detecting conical intersections. Finally, we perform a numerical study of the statistical properties of coalescing eigenvalues for pencils where A and B are either full or banded, for several bandwidths.


1 Introduction

In this work, we consider the eigenvalue problem for a pencil (A(x),B(x)), that is, the generalized eigenvalue problem

$$\left (A(x)-\lambda B(x)\right )v=0\ ,$$(1)

where \(x\in \mathbb {R}^2\) represents parameters varying in an open and connected subset Ω of \(\mathbb {R}^2\). The functions A and B are symmetric and take values in \(\mathbb {R}^{n\times n}\); B is also positive definite, a fact that we will indicate with \(B\succ 0\). Moreover, we will assume that A and B (and \(B^{-1}\)) are continuous and bounded functions of the parameters with k ≥ 0 continuous derivatives. We write \(M\in \mathcal {C}^k({\Omega },\mathbb {R}^{n\times n})\) to indicate an n × n continuous real valued matrix function M, depending on parameters in Ω, and having k ≥ 0 continuous derivatives; we write \(M\in \mathcal {C}\) if M is just continuous (i.e., k = 0), and \(M\in \mathcal {C}^{\omega }\) if M is real analytic.

We recall that the eigenvalues of (1) are roots of the characteristic polynomial

$$\det \left (A(x)-\lambda B(x)\right )\ ,$$

and since \(\det (B)\ne 0\), there are n eigenvalues of (1) and it is well known (see below) that they are all real. Furthermore, since the coefficients of the polynomial \(\det (A-\lambda B)\) are as smooth as the entries of A and B, and the roots of a polynomial of (exact) degree n depend continuously on its coefficients, the n eigenvalues of (1) can be labeled so as to be continuous functions of the parameter x, e.g., in decreasing order:

$$\lambda _1(x)\ge \lambda _2(x)\ge {\dots } \ge \lambda _n(x)\ .$$

A main interest of ours is to locate parameter values where the eigenvalues of (1) coalesce: these are called conical intersections (or “CIs” for short), and it is well understood (and see below) that at these values one should not expect any smoothness for the eigenvalues (nor for the eigenvectors, of course). To locate CIs, our method will consist of the following steps.

Our task in this work is to validate Algorithm 1.1, both theoretically and computationally. In Section 3, we will validate “Step 1,” namely that for 2-parameter problems the restriction to a closed loop generically gives distinct eigenvalues. In Section 2, we will validate “Step 2” and derive differential equations for V and Λ along the loop. Finally, in Section 3, we will validate “Step 3” by our main result, Theorem 3.13, which gives sufficient conditions for the existence inside Γ of parameter values where eigenvalues of the pencil coalesce, and identifies which eigenvalues do so. Besides our theoretical developments, we will also give and implement new algorithms for carrying out the above steps, and use these to perform a statistical study of the number of CIs for 2-parameter problems, for the cases of full and banded pencils; for banded problems, our study will show meaningful and unexpected differences with respect to the simpler case of the symmetric eigenproblem, i.e., B(x) = I in (1).

Remark 1.1

Since B is positive definite, it induces an inner product, and hence a concept of orthogonality. As a consequence, one may expect that results similar to the standard case (symmetric eigenproblem and Euclidean inner product) should follow. This is indeed correct, as we will see below. Yet, the required “modifications” are new, and of theoretical interest. Furthermore, the algorithmic developments for the generalized eigenproblem are quite a bit different from those of the standard eigenproblem, and this produces substantially more efficient techniques than those resulting from a conversion to a symmetric eigenproblem. See below.

Eigenproblems depending on parameters have received increasing interest in recent years. Besides our own work on the subject, chiefly related to the symmetric/Hermitian eigenproblem (see references at the end), Mehl et al. in [20] consider rank one perturbations of structured pencils, Jarlebring et al. in [13] and Kalinina in [14] focus on double eigenvalues of linear combinations A + μB, with arbitrary matrices \(A,B\in \mathbb {C}^{n\times n}\), Berkolaiko et al. in [1] consider the real symmetric eigenproblem from a numerical perspective, and Uhlig in [25] considers the same problem but focuses on coalescence of eigenvalues of 1-parameter families of matrices.

Remark 1.2

An important source of pencils like (1) arises from the second order problem

where the matrices \(M,\ D,\ C\ \in \mathbb {R}^{n\times n}\) are all symmetric and positive definite and typically arise from finite element discretization of beams’ structures and the like. For n ≫ 1, to obtain a computationally viable approach, a widely adopted technique consists in projecting (2) onto a subspace spanned by a restricted set of eigenvectors v of the generalized (frictionless) eigenproblem

For example, in [23] the projection is taken with respect to the m eigenvectors \(v_1,\dots , v_m\), associated to the m smallest eigenvalues of (3). Writing \(V=[{v_1 {\dots } v_m}]\in \mathbb {R}^{n\times m}\), instead of (2) one considers

where y = V z, \(z\in \mathbb {R}^m\), and \(\tilde M=V^TMV\), \(\tilde D =V^TDV\), \(\tilde C=V^TCV\), and \(\tilde M\) and \(\tilde C\) are diagonal (see [8]). Alas, in general, the projection is well defined only if there is a gap between the eigenvalues associated to the eigenspace onto which we are projecting, and the other eigenvalues. By finding parameter values where a pair of eigenvalues coalesce, our present work gives a precise indication of this spectral gap, among all possible sets of eigenvalues, in different parametric regions.

A plan of our paper follows. In Section 2, after reviewing some basic results on theory and techniques for the generalized eigenproblem when the given matrices do not depend on parameters, we give smoothness and periodicity results for the parameter dependent case. In particular, we give smoothness results about square roots and Cholesky factors when the matrices are smooth functions of several parameters, and specialized results when they are analytic functions of one real parameter. We give a general block-diagonalization result, and, for the 1-parameter case, we derive novel differential equations for the smooth eigenfactors (see Theorem 2.11), and finally give relevant periodicity results. All of these results are needed for the development in Section 3, where we first discuss the codimension of having equal eigenvalues (which justifies, given our emphasis on locating CIs, why we consider pencils that depend on two parameters). Then, we give the key theoretical results about detection of parameter values where the eigenvalues of the generalized eigenproblem coalesce, validating the approach outlined in Steps 1-2-3 of Algorithm 1.1. In Section 4, we discuss algorithmic development for detection of coalescing eigenvalues. Finally, in Section 5, we give numerical results on locating conical intersections of random function ensembles for pencils (A, B) that depend on two parameters and are either full or banded, and give evidence on the power law distribution of CIs in terms of the size of the problem.

2 Smoothness and periodicity results

Numerical methods for (1) when \(A,B\in \mathbb {R}^{n\times n}\), \(A=A^T\), \(B=B^T\succ 0\), are given (constant) matrices are quite well developed (see [24] for a review). In essence, the standard techniques rely on either the square root of B or its Cholesky factorization, the latter being the most common choice in the numerical community (e.g., it is the method implemented in MATLAB). For convenience, we review these below for the problem

$$(A-\lambda B)v=0\ ,\quad A=A^T\ ,\ B=B^T\succ 0\ .$$(5)

(a) Square root. It is always possible to reduce the problem (5) to a standard symmetric eigenvalue problem:

$$(A-{\lambda} B)v=0\iff B^{1/2}\bigl(B^{-1/2}AB^{-1/2}-\lambda I\bigr) B^{1/2}v=0 \iff (\tilde A-{\lambda} I)w=0\ ,\quad w=B^{1/2}v\ ,$$

where \(B^{1/2}\) is the unique symmetric positive definite square root of B, and \(\tilde A=B^{-1/2}AB^{-1/2}\). Clearly, from the eigenvalues/eigenvectors of this last problem, we can get those of (5). Since \(\tilde A=\tilde A^T\), we note that the eigenvalues of (5) (and those of (1) for any given value of x) are real, as previously stated.

(b) Cholesky. Similarly, since B is positive definite, it admits a Cholesky factorization

$$ B=LL^T\ , L\quad \text{lower triangular} \ , $$(6)

from which it is immediate to obtain

$$(A-{\lambda} B)v=0\iff L \bigl(L^{-1}AL^{-T}-\lambda I\bigr) L^T v=0 \iff (\tilde A-{\lambda} I)w=0\ ,\quad \tilde A=L^{-1}AL^{-T}\ ,\quad w=L^{T}v\ ,$$

and again from the eigenvalues/eigenvectors of this last problem, we can get those of (5). We note that the Cholesky factor is not unique, but it can be made unique by fixing the signs of \(L_{ii}\), the standard choice being \(L_{ii}>0\). In this work, we will always restrict to this choice.
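The two reductions in (a) and (b) can be sketched numerically as follows. This is only an illustrative sketch in Python with NumPy: the function names `reduce_by_cholesky` and `reduce_by_sqrt` and the random test matrices are assumptions made here, not part of the original work. Both routes should return the same eigenvalues, and the Cholesky route yields eigenvectors with \(V^TBV=I\).

```python
import numpy as np

def reduce_by_cholesky(A, B):
    """Eigenpairs of (A - lam*B)v = 0 via B = L L^T (L lower, L_ii > 0)."""
    L = np.linalg.cholesky(B)
    # A_tilde = L^{-1} A L^{-T}, built from triangular solves (A symmetric).
    A_tilde = np.linalg.solve(L, np.linalg.solve(L, A).T)
    A_tilde = 0.5 * (A_tilde + A_tilde.T)      # remove rounding asymmetry
    lam, W = np.linalg.eigh(A_tilde)           # ascending eigenvalues
    V = np.linalg.solve(L.T, W)                # v = L^{-T} w
    return lam, V

def reduce_by_sqrt(A, B):
    """Eigenvalues of the pencil via the symmetric square root of B."""
    d, Q = np.linalg.eigh(B)
    B_inv_half = (Q / np.sqrt(d)) @ Q.T        # B^{-1/2}
    A_tilde = B_inv_half @ A @ B_inv_half
    return np.linalg.eigvalsh(0.5 * (A_tilde + A_tilde.T))

# Hypothetical test data: a random symmetric A and a random SPD B.
rng = np.random.default_rng(0)
M = rng.standard_normal((4, 4))
N = rng.standard_normal((4, 4))
A = M + M.T
B = N @ N.T + 4.0 * np.eye(4)

lam_chol, V = reduce_by_cholesky(A, B)
lam_sqrt = reduce_by_sqrt(A, B)
residual = np.linalg.norm(A @ V - (B @ V) * lam_chol)
```

Here `residual` measures how well the computed pairs satisfy \(AV=BV\Lambda\); the columns of `V` are B-orthonormal by construction.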

We conclude this review of the non-parametric case with the following simple (and well known) result, which says that the eigenvectors’ matrix V can be taken to be orthogonal with respect to the inner product induced by B. This property is called B-orthogonality.

Lemma 2.1

For (5), the eigenvector matrix \(V\in \mathbb {R}^{n\times n}\), \(V=[v_1,\dots , v_n]\), can be chosen so as to satisfy the relation

$$V^TBV=I\ .$$(7)

If the eigenvalues are distinct, then, for a given ordering of the eigenvalues, the matrix V in (7) is unique up to the sign of its columns.

Proof

Regardless of having used the square root of B or its Cholesky factor, we saw that \((A-\lambda B)v=0\iff (\tilde A-\lambda I)w=0\), with \(\tilde A= B^{-1/2}AB^{-1/2}\) or \(\tilde A= L^{-1}AL^{-T}\). Since \(\tilde A=\tilde A^T\), then \(\tilde A\) has an orthogonal matrix of eigenvectors W: \(W^TW=I\), and thus \(V=B^{-1/2}W\), or \(V=L^{-T}W\), satisfies \(V^TBV=I\). In case the eigenvalues are distinct, then it is well understood that, for a given ordering of the eigenvalues, the orthogonal matrix W is unique up to the sign of its columns; that is, if \(W_1\) and \(W_0\) are two orthogonal matrices giving the same ordered eigendecomposition of \(\tilde A\), we must have \(W_1=W_0D\) with D = diag(± 1,…,± 1). Therefore, we will also have \(V_1=V_0D\). □

Corollary 2.2

With the notation of the proof of Lemma 2.1, we also have

$$V_1^TBV_0=D\iff V_1=V_0D\ .$$

Proof

(⇐) Since \(V_0^TBV_0=I\), then obviously \(DV_0^TBV_0=D\), that is, \(V_1^TBV_0=D\) when \(V_1=V_0D\). (⇒) From \(V_0^TBV_0=I\) and \(V_1^TBV_0=D\), given invertibility of \(V_0\) and B, we get \(V_1=V_0D\) at once. □

Next, we give several results for the generalized eigenvalue problem (1), extending results from the standard eigenvalue problem, that is, (1) with B = I:

$$\left (A(x)-\lambda I\right )v=0\ ,\quad A(x)=A(x)^T\ .$$(8)

It is well known (e.g., see [3, 6, 15]) that even for this standard eigenvalue problem the eigenvalues/eigenvectors cannot be expected to inherit the smoothness of A, unless eigenvalues are distinct. In the case of 2 parameters, in general, there is a total loss of smoothness when the eigenvalues coalesce (e.g., take \(A=\left [\begin {array}{cc}x_1 & x_2 \\ x_2 & -x_1 \end {array}\right ]\)), and even in the 1 parameter case there is a potential loss of smoothness of the eigenvectors when eigenvalues coalesce (see [3]).
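As a small numerical illustration of the 2 × 2 example above: the eigenvalues of \(\left [\begin {array}{cc}x_1 & x_2 \\ x_2 & -x_1 \end {array}\right ]\) are \(\pm \sqrt {x_1^2+x_2^2}\), a double cone with vertex at the origin. The sketch below (Python with NumPy; the helper name `cone_eigenvalues` is introduced here only for illustration) checks this, and shows that along the line \(x_2=0\) the largest eigenvalue is \(|x_1|\), which is not differentiable at the coalescing point.

```python
import numpy as np

def cone_eigenvalues(x1, x2):
    """Eigenvalues (ascending) of the symmetric matrix [[x1, x2], [x2, -x1]]."""
    A = np.array([[x1, x2], [x2, -x1]], dtype=float)
    return np.linalg.eigvalsh(A)

# Along x2 = 0, the largest eigenvalue equals |x1|: a kink at the origin.
s = np.linspace(-1.0, 1.0, 9)
top = np.array([cone_eigenvalues(v, 0.0)[1] for v in s])

# At a generic point, the eigenvalues are -r and +r with r = sqrt(x1^2 + x2^2).
pair = cone_eigenvalues(0.3, 0.4)
```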

Below, we show that, in reducing the pencil (1) to a symmetric eigenproblem like (8), smoothness is kept.

2.1 Square root

Before proceeding, we recall the following simple but important result (see [17, Theorem 1.5.3] and the discussion therein).

Lemma 2.3

Let \(a\in \mathcal {C}^k({\Omega }, \mathbb {R})\), k ≥ 0 an integer, be a strictly positive function of p real parameters x ∈Ω, where Ω is an open, bounded, and connected subset of \(\mathbb {R}^p\), and let a be continuous and uniformly bounded in \(\bar {\Omega }\). Then, the function \(\sqrt {a(x)}\), where \(\sqrt {a(x)}\) is the unique positive square root of a, is also a \(\mathcal {C}^k\) function of x. Furthermore, if \(a\in \mathcal {C}^{\omega }(J, \mathbb {R})\) is analytic in the parameter x ∈ J, where J is an open and bounded interval of the real line, then so is its square root.

We first observe that the Cholesky factor of B is as smooth as B itself (for functions of one parameter, see [2]).

Theorem 2.4

Let \(B\in \mathcal {C}^k({\Omega },\mathbb {R}^{n\times n})\) be symmetric positive definite for all \(x\in {\Omega }\subset \mathbb {R}^p\). Then, its Cholesky factor L in (6) with \(L_{ii}>0\) is also a \(\mathcal {C}^k\) function for x ∈ Ω. Furthermore, if \(B\in \mathcal {C}^{\omega }(J, \mathbb {R}^{n\times n})\) is analytic in the parameter x ∈ J, where J is an open and bounded interval of the real line, then so is the Cholesky factor.

Proof

The proof is immediate. Write \(B=\left [\begin {array}{cc}b_{11} & c^T \\ c & \hat B \end {array}\right ]\) and let \(L_1=\left [\begin {array}{cc}\sqrt {b_{11}} & 0 \\ c/\sqrt {b_{11}} & I \end {array}\right ]\), so that \(L_1^{-1}BL_1^{-T}=\left [\begin {array}{cc}1 & 0 \\ 0 & B_1 \end {array}\right ]\), where \(B_1=\hat B - cc^T/b_{11}\). Obviously, \(B_1\) is symmetric, positive definite, and as smooth as B, and the result follows by repeating the argument on \(B_1\) (that is, by induction on n) and using Lemma 2.3. The analytic case also follows in the same way since in this case \(B_1\) and \(\sqrt {b_{11}}\) are analytic. □
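The elimination step used in the proof can be turned into a short routine. The following is a minimal sketch (Python with NumPy; the function name `cholesky_by_elimination` and the random test matrix are assumptions made for illustration): at each step it takes the square root of the current pivot \(b_{11}\), scales the column \(c/\sqrt {b_{11}}\), and replaces the trailing block by \(B_1=\hat B-cc^T/b_{11}\). Only square roots of positive quantities, divisions by them, and sums and products of entries appear, which is why the factor inherits the smoothness of B.

```python
import numpy as np

def cholesky_by_elimination(B):
    """Lower triangular L with L_ii > 0 and B = L L^T, built as in the proof."""
    B = np.array(B, dtype=float)            # work on a copy
    n = B.shape[0]
    L = np.zeros((n, n))
    for k in range(n):
        pivot = np.sqrt(B[k, k])            # sqrt(b_11) of the current block
        L[k, k] = pivot
        col = B[k + 1:, k] / pivot          # c / sqrt(b_11)
        L[k + 1:, k] = col
        # Trailing block becomes B_1 = B_hat - c c^T / b_11.
        B[k + 1:, k + 1:] -= np.outer(col, col)
    return L

# Hypothetical SPD test matrix.
rng = np.random.default_rng(1)
N = rng.standard_normal((5, 5))
B_test = N @ N.T + 5.0 * np.eye(5)
L_test = cholesky_by_elimination(B_test)
```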

Next, we show that the square root \(B^{1/2}\) is also a \(\mathcal {C}^k\) function. First, we point out that a symmetric positive definite matrix function B, continuously depending on parameters \(x\in \mathbb {R}^p\), has a unique symmetric positive definite square root S which depends continuously on x. We will write \(B^{1/2}\) for this square root of B. Both uniqueness and continuity can be inferred from results in [10, Chapter 6]; in particular, continuity follows from Theorem 6.2 therein, which in turn cites the work [9]; therefore, the proof of the next lemma is omitted.

Lemma 2.5

Let \(B\in \mathcal {C}({\Omega },\mathbb {R}^{n\times n})\), where Ω is an open subset of \(\mathbb {R}^p\), p ≥ 1. Furthermore, let B(x) be symmetric positive definite for all x ∈ Ω. Then, for any x ∈ Ω there exists a unique symmetric positive definite square root \(B^{1/2}(x)\) of B(x). Moreover, \(B^{1/2}(x)\) is a continuous function of x.

Using Lemma 2.5, we can get the result on smoothness.

Theorem 2.6

With the same notation as in Lemma 2.5, let B be a \(\mathcal {C}^k\) function, k ≥ 1. Then, the unique positive definite square root \(B^{1/2}\) of B is also a \(\mathcal {C}^k\) function.

Proof

Let \(S=B^{1/2}\), so that \(S^2=B\). We know that S(x) is continuous, and that B(x) is smooth. Next, we define the first partial derivatives by formally differentiating the relation \(S^2=B\). That is, consider the following equations in the unknowns \(X_i\):

$$SX_i+X_iS=B_{x_i}\ ,\quad i=1,\dots , p\ .$$(9)

The linear systems given by the Lyapunov equations in (9) are uniquely solvable, since S is positive definite, so that S and −S have no eigenvalue in common. Now, the unique solution of an invertible linear system Cz = b, with C and b continuously depending on parameters, is itself a continuous function of the parameters, from which we conclude that the unique solutions \(X_i\) of (9) are continuous functions of the parameters x. Finally, we observe that \(S_{x_i}=X_i\), \(i=1,\dots , p\).

At this point, we can look at higher derivatives. We see the situation for the second derivatives, from which the general argument will be evident.

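Rewrite (9) as

$$SS_{x_i}+S_{x_i}S=B_{x_i}\ ,$$

and consider the second partial derivatives from formally differentiating this relation with respect to \(x_j\). Writing \(X_{ij}\) for the unknown second derivative, we get:

$$S_{x_j}S_{x_i}+SX_{ij}+X_{ij}S+S_{x_i}S_{x_j}=B_{x_ix_j}\ .$$(10)

Rearranging terms in (10), we obtain

$$SX_{ij}+X_{ij}S=B_{x_ix_j}-S_{x_j}S_{x_i}-S_{x_i}S_{x_j}\ ,$$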

which is again uniquely solvable and gives a continuous solution \(X_{ij}\), and we observe that \(S_{x_ix_j}=X_{ij}\). Finally, observe that, from the left-hand-side of (10), we get that \(X_{ij}=X_{ji}\), that is, \(S_{x_ix_j}= S_{x_jx_i}\), and thus the order of differentiation of the second partial derivatives does not matter.

Continuing to formally differentiate, we obtain continuous higher derivatives and the result follows. □
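The first step of this argument can be checked numerically in the one-parameter case: the derivative of \(S=B^{1/2}\) is the solution X of the Lyapunov equation \(SX+XS=\dot B\), obtained by differentiating \(S^2=B\). The sketch below (Python with NumPy) is only an illustration: the matrix function `B` and the helper names are hypothetical choices made here, and the Lyapunov equation is solved in the eigenbasis of S, where it decouples entrywise.

```python
import numpy as np

def sqrt_spd(B):
    """Unique symmetric positive definite square root of an SPD matrix."""
    d, Q = np.linalg.eigh(B)
    return (Q * np.sqrt(d)) @ Q.T

def solve_lyapunov(S, C):
    """Solve S X + X S = C for SPD S, using S = Q diag(s) Q^T."""
    s, Q = np.linalg.eigh(S)
    C_hat = Q.T @ C @ Q
    X_hat = C_hat / (s[:, None] + s[None, :])   # s_i + s_j > 0 for SPD S
    return Q @ X_hat @ Q.T

# Hypothetical smooth SPD function of one parameter and its derivative.
def B(t):
    return np.array([[2.0 + np.cos(t), 0.5 * np.sin(t)],
                     [0.5 * np.sin(t), 3.0 + np.sin(t)]])

def dB(t):
    return np.array([[-np.sin(t), 0.5 * np.cos(t)],
                     [0.5 * np.cos(t), np.cos(t)]])

t0, h = 0.7, 1e-5
S = sqrt_spd(B(t0))
X = solve_lyapunov(S, dB(t0))                    # candidate for dS/dt at t0
S_fd = (sqrt_spd(B(t0 + h)) - sqrt_spd(B(t0 - h))) / (2.0 * h)
```

The central finite difference `S_fd` should agree with `X` up to discretization and rounding errors.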

For completeness, we specialize Theorem 2.6 to the case of B real analytic.

Theorem 2.7

Let \(B\in \mathcal {C}^{\omega }(\mathbb {R},\mathbb {R}^{n\times n})\), symmetric and positive definite for all x. Then, the unique positive definite square root \(B^{1/2}\) is analytic in x as well.

Proof

The proof rests on a fundamental theorem (see Rellich [21], or Kato [15]), whereby an analytic Hermitian function of one real parameter admits an analytic eigendecomposition. Thus, we can write \(B(x)=W(x)D(x)W^T(x)\) where W and D are analytic, W is orthogonal, and D is diagonal with \(D_{ii}(x)>0\) (we note that the eigenvalues in D are not necessarily ordered). Then, we have \(B^{1/2}(x)=W(x)D^{1/2}(x)W^T(x)\), where \(D^{1/2}(x)=\text {diag}\left (\sqrt {D_{ii}(x)}\ , \ i=1,\dots , n\right )\). The result now follows from Lemma 2.3. □

Next, we look at a general block-diagonalization result for the parameter dependent generalized eigenproblem, specializing a result given by Hsieh-Sibuya and Gingold (see [12] and [7]) for the standard eigenvalue case. Then, we give more refined results for the case of one parameter, and further specialize some results to the case of periodic pencils. All of these results will form the justification for our algorithms to locate conical intersections (see Sections 3 and 4).

2.2 General block diagonalization results

In order to simplify the problem we consider, the following result is quite useful. It highlights that the correct transformations for the pencil under study are “inertia transformations.”

Since our interest is for the case where A and B depend on two (real) parameters, this is the case on which we focus in the theorem below.

Theorem 2.8 (Block-Diagonalization)

Let R be a closed rectangular region in \(\mathbb {R}^2\). Let \(A, B\in \mathcal {C}^k(R,\mathbb {R}^{n\times n})\), k ≥ 0, with \(A=A^T\) and \(B=B^T\succ 0\), and suppose that the eigenvalues of the pencil (A, B) can be labeled so that they belong to two disjoint sets for all x ∈ R: \(\lambda _1(x),\dots ,\lambda _j(x)\) in \({\Lambda }_1(x)\) and \(\lambda _{j+1}(x),\dots , \lambda _n(x)\) in \({\Lambda }_2(x)\), with \({\Lambda }_1(x)\cap {\Lambda }_2(x)=\emptyset \) for all x ∈ R. Then, there exists \(V\in \mathcal {C}^k(R,\mathbb {R}^{n\times n})\), invertible, such that

$$V^TAV=\left [\begin {array}{cc}A_1 & 0 \\ 0 & A_2 \end {array}\right ]\ ,\quad V^TBV=\left [\begin {array}{cc}B_1 & 0 \\ 0 & B_2 \end {array}\right ]\ ,$$

where \(A_1, B_1\in \mathcal {C}^k(R,\mathbb {R}^{j\times j})\), with \(A_1=A_1^T\) and \(B_1=B_1^T\succ 0\), and \(A_2, B_2\in \mathcal {C}^k\left (R,\mathbb {R}^{(n-j)\times (n-j)}\right )\), with \(A_2=A_2^T\) and \(B_2=B_2^T\succ 0\), so that the eigenvalues of the pencil \((A_1,B_1)\) are those in \({\Lambda }_1\) and the eigenvalues of the pencil \((A_2,B_2)\) are those in \({\Lambda }_2\), for all x ∈ R. Furthermore, the function V can be chosen to be B-orthogonal: \(V^TBV=I\) for all x.

Proof

We show directly that the transformation V can be chosen so that \(V^TBV=I\), from which the general result will follow.

One way to proceed is by using the unique smooth positive definite square root of B, \(B^{1/2}\), so that the eigenvalues of the pencil are the same as those of the standard eigenvalue problem with function \(\tilde A=B^{-1/2}AB^{-1/2}\). Because of Theorem 2.6, the function \(\tilde A\) is as smooth as A and B, and it is clearly symmetric. Therefore, from the cited results in [7, 12], we have that there exists smooth, orthogonal, W such that \(W^T\tilde AW=\left [\begin {array}{cc}A_1 & 0 \\ 0 & A_2 \end {array}\right ]\), with the eigenvalues of \(A_i\) being those in \({\Lambda }_i\), and \(A_i=A_i^T\), i = 1,2. Now we just take \(V=B^{-1/2}W\). □

We notice that the function V of Theorem 2.8 is clearly not unique, not even if we select one for which \(V^TBV=I\).

The block diagonalization result Theorem 2.8 can easily be extended to several blocks. In the case of n distinct eigenvalues, one ends up with a full smooth diagonalization. Because of its relevance in what follows, we give this fact as a separate result.

Corollary 2.9

With the same notation as in Theorem 2.8, assume that the eigenvalues of the pencil (A(x),B(x)) are distinct for all x. Then, the eigenvalues can be labeled so as to be \(\mathcal {C}^k\) functions of x. Moreover, we can also choose the corresponding eigenvector function V to be a \(\mathcal {C}^k\) function of x and to satisfy the relations \(V^T(x)A(x)V(x)={\Lambda }(x)=\text {diag}(\lambda _1(x), \dots , \lambda _n(x))\), and \(V^T(x)B(x)V(x)=I\), for all x.

Similarly, let \(A, B\in \mathcal {C}^k(\mathbb {R},\mathbb {R}^{n\times n})\), k ≥ 0, with \(A=A^T\) and \(B=B^T\succ 0\), and let the eigenvalues of the pencil (A(t),B(t)) be distinct for all \(t\in \mathbb {R}\). Then, they can be labeled so as to be \(\mathcal {C}^k\) functions of t. Moreover, we can also choose the corresponding eigenvector function V to be a \(\mathcal {C}^k\) function of t and to satisfy the relation \(V^T(t)B(t)V(t)=I\) for all t.

2.3 One parameter case: smoothness

First of all, from Corollary 2.9 we have that the 1-parameter smooth pencil (A(t),B(t)), \(t\in \mathbb {R}\), can be smoothly diagonalized. This is a fact that, for the standard case (B = I) and for 1-parameter functions, is well known since the work of Lancaster and Rellich [18, 21]. Secondly, we have the following simple but useful result on the uniqueness of V.

Corollary 2.10

Consider the 1-parameter case of Corollary 2.9, and call V a smooth function of eigenvectors of the pencil (A(t),B(t)) satisfying \(V^T(t)B(t)V(t)=I\), and rendering a certain ordering for the diagonal of Λ. Such V is unique up to the signs of its columns: any other possible (smooth) function of eigenvectors yielding the same ordering of eigenvalues is obtained from V by sign changes of V’s columns.

Proof

Since the eigenvalues are distinct, the eigenvectors are uniquely determined up to scaling. In other words, the only freedom in specifying V is given by V → V S where \(S=\text {diag}(s_i, i=1,\dots , n)\) with \(s_i\ne 0\). By requiring that \(V^TBV=I\), we get that we must have \(S^2=I\), that is, \(s_i^2=1\), \(i=1,\dots , n\), as claimed. □

We are now ready for the most important result of this section, a derivation of the differential equations satisfied by the smooth factors V and Λ, under the assumption of distinct eigenvalues. So doing, we will generalize known results in [3] for the standard eigenproblem (i.e., when B = I). As it turns out, the generalization is not immediate.

2.3.1 Differential Equations for the factors

Consider (1), with \(A, B\in \mathcal {C}^k(\mathbb {R}^+,\mathbb {R}^{n\times n})\), \(A=A^T\) and \(B=B^T\succ 0\), and assume that the eigenvalues of the pencil (A(t),B(t)) are distinct for all t. As seen above, we can choose V and \({\Lambda }=\text {diag}(\lambda _1,\dots , \lambda _n)\) smooth as well, satisfying \(A(t)V(t)=B(t)V(t){\Lambda }(t)\) and \(V^T(t)B(t)V(t)=I\), for all t.

As seen in Corollary 2.10, we must fix a choice for V. So, suppose we have an eigendecomposition at t = 0, that is, we have \(V_0\) and \({\Lambda }_0\) so that

$$A(0)V_0=B(0)V_0{\Lambda }_0\ ,\quad V_0^TB(0)V_0=I\ .$$

We want to obtain differential equations satisfied by the factors V and Λ for all t ≥ 0, with initial conditions \(V(0)=V_0\) and \({\Lambda }(0)={\Lambda }_0\).

Since the factors are smooth, we can formally differentiate the two relations

$$\text {(a)}\ \ V^TAV={\Lambda }\ ,\qquad \text {(b)}\ \ V^TBV=I\ .$$(11)

Differentiation of (11)-(a) gives

from which, using \(AV=BV{\Lambda }\), and hence \(V^TA={\Lambda }V^TB\), we obtain

Now, using the structure of Λ (diagonal) we observe that relatively to the diagonal entries we have (using that the diagonals of \(V^TB\dot V\) and of \((V^TB\dot V)^T\) are the same):

that is, the eigenvalues in general satisfy a linear non-homogeneous differential equation.

Next, differentiating (11)-(b), we obtain \(\dot V^TBV+V^T\dot B V+V^TB\dot V=0 \) from which we get

hence, we can obtain an expression for the symmetric part of \((V^TB\dot V)\), and in particular in (13) we can use

What we are missing is an expression for the anti-symmetric part of \((V^TB\dot V)\). To arrive at this, we use (12) relative to the off-diagonal entries. This gives the following for the (i, j) and (j, i) entries:

and

where we have used symmetry of \(V^T\dot A V\) and the fact that the (i, j)th entry of a matrix is the (j, i)th entry of its transpose. Now, adding the last two expressions in (15) and (16), we obtain

and thus we can obtain an expression for the antisymmetric part of \((V^TB\dot V)\), upon using (14) for its symmetric part.

So, finally, using (14) and (17), we can obtain a formula for the term \(V^TB\dot V\) which depends on \(B,\dot B, {\Lambda }\) and V. Let us formally set \(C=V^TB\dot V\), and summarize the sought differential equations for V and Λ:

where the coefficients for C are given by

We summarize in the following Theorem.

Theorem 2.11 (Differential Equations for the Generalized Eigenvalue Problem)

Let \(A, B\in \mathcal {C}^k(\mathbb {R}^+,\mathbb {R}^{n\times n})\), k ≥ 1, \(A=A^T\) and \(B=B^T\succ 0\), and assume that the eigenvalues of the pencil (A(t),B(t)) are distinct for all t ≥ 0. Let \(V_0\) and \({\Lambda }_0\) be such that \(A(0)V_0=B(0)V_0{\Lambda }_0\) and \(V_0^TB(0)V_0=I\). Then, we have \(A(t)V(t)=B(t)V(t){\Lambda }(t)\), and \(V^T(t)B(t)V(t)=I\), for all t ≥ 0, where the \(\mathcal {C}^k\) factors Λ and V satisfy the differential equations (18)–(19), subject to the initial conditions \(V(0)=V_0\) and \({\Lambda }(0)={\Lambda }_0\).
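The following is a minimal numerical sketch of these differential equations, under explicit assumptions. The formulas coded below were re-derived here from the relations \(V^TAV={\Lambda }\) and \(V^TBV=I\) and are assumed, not verified, to coincide with (18)–(19): \(\dot V=VC\) with \(C=V^TB\dot V\), \(C_{ii}=-\frac {1}{2}(V^T\dot BV)_{ii}\), \(C_{ij}=\bigl ((V^T\dot AV)_{ij}-\lambda _j(V^T\dot BV)_{ij}\bigr )/(\lambda _j-\lambda _i)\) for \(i\ne j\), and \(\dot \lambda _i=(V^T\dot AV)_{ii}-\lambda _i(V^T\dot BV)_{ii}\). The pencil, the fixed-step Runge-Kutta integrator, and all function names are hypothetical choices made for illustration (Python with NumPy).

```python
import numpy as np

# Hypothetical smooth pencil: small perturbations of diag(-2, 0, 2) and of I,
# small enough that B(t) stays SPD and the eigenvalues stay distinct.
D0 = np.diag([-2.0, 0.0, 2.0])
P = np.array([[0.0, 1.0, 0.0], [1.0, 0.0, 1.0], [0.0, 1.0, 0.0]])
Q = np.diag([1.0, -1.0, 1.0])

def A(t):  return D0 + 0.1 * (np.cos(t) * P + np.sin(t) * Q)
def dA(t): return 0.1 * (-np.sin(t) * P + np.cos(t) * Q)
def B(t):  return np.eye(3) + 0.1 * (np.sin(t) * P + np.cos(t) * Q)
def dB(t): return 0.1 * (np.cos(t) * P - np.sin(t) * Q)

def pencil_eig(Amat, Bmat):
    """B-orthonormal eigendecomposition via Cholesky (ascending eigenvalues)."""
    L = np.linalg.cholesky(Bmat)
    At = np.linalg.solve(L, np.linalg.solve(L, Amat).T)
    lam, W = np.linalg.eigh(0.5 * (At + At.T))
    return lam, np.linalg.solve(L.T, W)

def rhs(t, V, lam):
    """Right-hand sides (dV/dt, dlam/dt) of the assumed differential equations."""
    VA = V.T @ dA(t) @ V
    VB = V.T @ dB(t) @ V
    dlam = np.diag(VA) - lam * np.diag(VB)
    gap = lam[None, :] - lam[:, None]        # gap[i, j] = lam_j - lam_i
    np.fill_diagonal(gap, 1.0)               # placeholder, diagonal set below
    C = (VA - VB * lam[None, :]) / gap
    np.fill_diagonal(C, -0.5 * np.diag(VB))
    return V @ C, dlam

def rk4_step(t, h, V, lam):
    k1V, k1l = rhs(t, V, lam)
    k2V, k2l = rhs(t + h / 2, V + h / 2 * k1V, lam + h / 2 * k1l)
    k3V, k3l = rhs(t + h / 2, V + h / 2 * k2V, lam + h / 2 * k2l)
    k4V, k4l = rhs(t + h, V + h * k3V, lam + h * k3l)
    V_new = V + h / 6 * (k1V + 2 * k2V + 2 * k3V + k4V)
    lam_new = lam + h / 6 * (k1l + 2 * k2l + 2 * k3l + k4l)
    return V_new, lam_new

def integrate(t_end=1.0, steps=200):
    lam, V = pencil_eig(A(0.0), B(0.0))      # initial conditions V_0, Lambda_0
    h = t_end / steps
    for k in range(steps):
        V, lam = rk4_step(k * h, h, V, lam)
    return V, lam

V1, lam1 = integrate()
lam_ref, _ = pencil_eig(A(1.0), B(1.0))      # direct computation at t = 1
```

If the assumed formulas are right, the integrated factors at t = 1 should reproduce the directly computed eigenvalues and remain B-orthonormal, up to the integration error.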

Example 2.12 (Standard Eigenproblem)

A most important special case of the previous analysis is of course the case where B = I, the standard eigenproblem. In this case, since \(\dot B=0\), we obtain major simplifications. For one thing, (13) is a simple integral not a linear differential equation for the eigenvalues:

Furthermore, from (14), we observe that \(V^T\dot V\) must be anti-symmetric, and thus we have that \(C=V^T\dot V\) is such that \(C^T=-C\). Hence, (19) simplifies to read

Formulas (20) and (21) of course match those derived for the standard eigenproblem in [3].

2.4 Periodicity

Our algorithms to locate conical intersections rely on being able to smoothly compute the eigenvalues of the pencil (under the assumption that the eigenvalues are distinct) along closed loops in parameter space (see Step 2 of Algorithm 1.1). For this reason, we next give some results on periodicity for the square root and the Cholesky factors of a positive definite periodic function, as well as some general results on periodicity.

Definition 2.13

A function \(f\in \mathcal {C}^k(\mathbb {R},\mathbb {R})\) (k ≥ 0) is called periodic of period 1, or simply 1-periodic, if f(t + 1) = f(t), for all t. Moreover, we say that 1 is the minimal period of f if there is no τ with 0 < τ < 1 for which f(t + τ) = f(t), for all t. In the same way, we say that the pencil (A, B) is periodic of period 1 if A(t + 1) = A(t) and B(t + 1) = B(t), and further of minimal period 1 if either A or B is such.

The Cholesky factor and the positive definite square root of a 1-periodic positive definite function are also 1-periodic. This is the content of the next Theorem.

Theorem 2.14

Let the function \(A\in \mathcal {C}^k(\mathbb {R},\mathbb {R}^{n\times n})\), k ≥ 0, be symmetric positive definite and of minimal period 1.

(a) Let L be the unique Cholesky factor of A: \(A(t)=L(t)L^T(t)\), where L is lower triangular with positive diagonal, for all t. Then, L also has minimal period 1.

(b) Let \(S=A^{1/2}\) be the unique positive definite square root of A: \(S=S^T\succ 0\), \(S^2=A\). Then, S also has minimal period 1.

Proof

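The result is a straightforward consequence of the uniqueness of L and S: since A(t + 1) = A(t), uniqueness gives L(t + 1) = L(t) and S(t + 1) = S(t); conversely, any period of L or of S is also a period of \(A=LL^T=S^2\), so neither can have a period smaller than 1. □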

The next result is a corollary to Theorem 2.8.

Corollary 2.15

Let \(V\in \mathcal {C}^k(R,\mathbb {R}^{n\times n})\) be the function given by Theorem 2.8. Let Γ be a simple closed curve in R, parametrized as a \(\mathcal {C}^{\ell }\) (ℓ ≥ 0) function γ in the variable t, so that the function \(\gamma :\ t\in \mathbb {R} \to R\) is \(\mathcal {C}^{\ell }\) and of (minimal) period 1. Let \(m=\min \limits (k,\ell )\), and let \(V_{\gamma }\) be the function V(γ(t)), \(t\in \mathbb {R}\). Then, \(V_{\gamma }\) is \(\mathcal {C}^m\) and 1-periodic.

Proof

The result is immediate upon considering the composite function \(V_{\gamma }\) and using the stated smoothness and periodicity results. □

Remark 2.16

In case the eigenvalues of the pencil (A, B) are distinct in R, the B-orthogonal function V diagonalizes (A, B). For a given ordering of the eigenvalues, as we already remarked, V is essentially unique: the degree of non-uniqueness is given only by the signs of the columns of V. Naturally, in this case, Corollary 2.15 will give that a smooth \(V_{\gamma }\) will be a 1-periodic function.

The last result we give is a generalization of [6, Lemma 1.7] and it essentially states that if the pencil (A, B) has minimal period 1, then there cannot coexist continuous eigendecompositions of minimal periods 1 and 2. It is a simple yet important result that, together with Theorem 3.13, forms the theoretical backing of our algorithms in Section 4.

Lemma 2.17

Let the functions \(A, B\in \mathcal {C}^k(\mathbb {R},\mathbb {R}^{n\times n})\), k ≥ 0, with \(A=A^T\) and \(B=B^T\succ 0\), be of minimal period 1 and let the pencil (A(t),B(t)) have distinct eigenvalues for all t. Suppose that there exist V = V(t) continuous and invertible, and Λ diagonal such that

$$A(t)V(t)=B(t)V(t){\Lambda }(t)\ ,\quad \forall t\in \mathbb{R}\ ,$$

with:

(i) \({\Lambda }\in \mathcal {C}(\mathbb {R},\mathbb {R}^{n\times n})\) diagonal with distinct diagonal entries, and s.t. Λ(t + 1) = Λ(t);

(ii) \(V\in \mathcal {C}(\mathbb {R},\mathbb {R}^{n\times n})\) invertible, with

$$V(t+1)=V(t)\ D\ , \forall t\in \mathbb{R}\ ,$$

where D is diagonal with \(D_{ii}=\pm 1\) for all i, but \(D\ne I_n\).

Then, there cannot exist an invertible continuous matrix function T diagonalizing the pencil and of period 1.

Proof

By contradiction, suppose that there exists continuous T of period 1 such that \(A(t)T^{-1}(t)=B(t)T^{-1}(t){\Lambda }(t)\), for all \(t\in \mathbb {R}\). Therefore, we must have \(A=BT^{-1}{\Lambda }T\) and \(A=BV{\Lambda }V^{-1}\), from which \({\Lambda }(TV)=(TV){\Lambda }\). But, Λ(t) has distinct diagonal entries for all \(t\in \mathbb {R}\), so that T(t)V(t) must be diagonal for all \(t\in \mathbb {R}\). Denote its diagonal entries by \(c_1(t),\dots ,c_n(t)\), and so (since TV is invertible) \(c_i(t)\ne 0\), for all t. But, since T has period 1, T(t + 1)V(t + 1) = T(t)V(t)D, for all \(t\in \mathbb {R}\), hence there must exist an index i for which \(c_i(t+1)=-c_i(t)\). This is a contradiction: \(c_i\) is continuous and changes sign between t and t + 1, so by the intermediate value theorem it would have to vanish somewhere, while the functions \(c_i\) are nonzero for all \(t\in \mathbb {R}\). □
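Hypothesis (ii) of Lemma 2.17 can be observed numerically. The sketch below (Python with NumPy) is an illustration under assumptions made here: it uses the 2 × 2 function \(A(x)=\left [\begin {array}{cc}x_1 & x_2 \\ x_2 & -x_1 \end {array}\right ]\) from Section 2 together with a hypothetical constant positive definite B, so that the only coalescing point is the origin, and it follows the B-orthonormal eigenvectors continuously along a circle by matching signs between consecutive points (in the spirit of Corollary 2.2). The function names and the number of steps are arbitrary choices.

```python
import numpy as np

B = np.array([[2.0, 0.5], [0.5, 1.0]])       # hypothetical constant SPD matrix
L = np.linalg.cholesky(B)

def A(x1, x2):
    return np.array([[x1, x2], [x2, -x1]], dtype=float)

def b_orthonormal_eigvecs(Amat):
    """Eigenvectors V of (Amat, B) with V^T B V = I, eigenvalues ascending."""
    At = np.linalg.solve(L, np.linalg.solve(L, Amat).T)
    _, W = np.linalg.eigh(0.5 * (At + At.T))
    return np.linalg.solve(L.T, W)

def follow_loop(center, radius, steps=400):
    """Continue V along a circle; return the diagonal of D in V(1) = V(0) D."""
    ts = np.linspace(0.0, 1.0, steps + 1)
    V0 = b_orthonormal_eigvecs(A(center[0] + radius, center[1]))
    V = V0.copy()
    for t in ts[1:]:
        x1 = center[0] + radius * np.cos(2.0 * np.pi * t)
        x2 = center[1] + radius * np.sin(2.0 * np.pi * t)
        V_new = b_orthonormal_eigvecs(A(x1, x2))
        # Choose column signs so that V_prev^T B V_new has positive diagonal.
        signs = np.sign(np.diag(V.T @ B @ V_new))
        V = V_new * signs
    return np.diag(V0.T @ B @ V)

D_enclosing = follow_loop(center=(0.0, 0.0), radius=1.0)   # loop around the CI
D_outside = follow_loop(center=(3.0, 0.0), radius=1.0)     # loop missing the CI
```

For the loop around the origin, both eigenvector columns change sign after one turn (D = −I), so the continuous eigendecomposition has minimal period 2; for the loop that does not enclose the coalescing point, D = I.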

3 Coalescing eigenvalues for two parameter pencils

In this section, we study the occurrence of equal eigenvalues for (1) when A and B depend on two (real) parameters.

First, we consider the case of a single pair of eigenvalues coalescing, then generalize to several pairs coalescing at the same parameter values. To begin with, we show that having a pair of coalescing eigenvalues is a codimension 2 property.

3.1 One generic coalescing in Ω

First, consider the 2 × 2 case. The following simple result is the key to relate a generic coalescing to the transversal intersection of two curves.

Theorem 3.1

Let \(A=A^T\in \mathcal {C}^k({\Omega }, \mathbb {R}^{2\times 2})\) and \(B=B^T\succ 0 \in \mathcal {C}^k({\Omega }, \mathbb {R}^{2\times 2})\), k ≥ 1. Write \(A(x)=\left [\begin {array}{cc}a & b \\ b & c \end {array}\right ]\) and \(B(x)=\left [\begin {array}{cc}\alpha & \beta \\ \beta & \gamma \end {array}\right ]\). Then, the generalized eigenproblem

$$\left (A(x)-\lambda B(x)\right )v=0$$

has identical eigenvalues at x if and only if

$$a\gamma -\alpha c=0\quad \text {and}\quad b\gamma -\beta c=0\ ,$$

where all entries are evaluated at x.

Proof

The problem \(\left (\left [\begin {array}{cc} a & b \\ b & c \end {array}\right ]-\lambda \left [\begin {array}{cc} \alpha & \beta \\ \beta & \gamma \end {array}\right ]\right )v=0\) can be rewritten as \(\left (\left [\begin {array}{cc}\tilde a & \tilde b \\ \tilde b & \tilde c \end {array}\right ]-\right .\) \(\left .\lambda \left [\begin {array}{cc} 1 & \tilde d \\ \tilde d &1 \end {array}\right ]\right )w=0\), where \(\tilde a=a/\alpha \), \(\tilde b=b/\sqrt {\alpha \gamma }\), \(\tilde c=c/\gamma \) and \(\tilde d=\beta /\sqrt {\alpha \gamma }\), and \(w=\left [\begin {array}{cc}v_1\sqrt {\alpha } \\ v_2\sqrt {\gamma } \end {array}\right ]\). We observe that the sign of the entries of v and of w is the same, and we also note that \(\tilde d^2<1\) (since B is positive definite).

We further have the following chain of equalities:

where

(Note that we have reduced the problem to one for which A has 0-trace.) Now, an explicit computation gives

We have identical eigenvalues μ1 = μ2 (hence λ1 = λ2) if and only if

Rephrasing in terms of the original entries, this is precisely what we wanted to verify. □
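The intermediate formulas of this proof can be sketched as follows. This is a reconstruction under one assumption: that the reduction to a trace-free problem is obtained through the shift \(\lambda =\mu +\frac 12(\tilde a+\tilde c)\). The symbols \(\hat a\), \(\hat b\) below are defined accordingly, and the authors' own displays may use a different but equivalent normalization.

$$
\begin{array}{l}
\hat a=\frac 12(\tilde a-\tilde c)\ , \qquad \hat b=\tilde b-\frac 12\tilde d\,(\tilde a+\tilde c)\ , \\[4pt]
0=\det \left (\left [\begin{array}{cc}\hat a & \hat b \\ \hat b & -\hat a \end{array}\right ]-\mu \left [\begin{array}{cc}1 & \tilde d \\ \tilde d & 1 \end{array}\right ]\right )=(1-\tilde d^{2})\mu ^{2}+2\hat b\tilde d\,\mu -(\hat a^{2}+\hat b^{2})\ , \\[4pt]
\mu _{1,2}=\dfrac {-\hat b\tilde d\pm \sqrt {\hat b^{2}+(1-\tilde d^{2})\hat a^{2}}}{1-\tilde d^{2}}\ .
\end{array}
$$

Since \(1-\tilde d^{2}>0\), the two roots coincide exactly when \(\hat a=0\) and \(\hat b=0\). The first condition reads a/α = c/γ, that is aγ − αc = 0; given this, the second reduces to \(\tilde b=\tilde d\tilde c\), that is bγ − βc = 0.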

The next result is about periodicity of the eigenvectors of a 2 × 2 pencil along a loop that encircles a generic coalescing point, and under specified “generic assumptions.” The proof is similar to what we did in [6, Theorem 2.2] for the symmetric eigenproblem, with the necessary changes due to dealing with the generalized eigenproblem and also fixing some imprecisions we had in there.

Theorem 3.2 (2 × 2 case)

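Consider \(A,B\in \mathcal {C}^k({\Omega }, \mathbb {R}^{2\times 2})\), k ≥ 1, with A = AT and B = BT ≻ 0. For all x ∈Ω, write

$$A(x)=\left [\begin{array}{cc}a(x) & b(x) \\ b(x) & c(x) \end{array}\right ]\ , \quad B(x)=\left [\begin{array}{cc}\alpha (x) & \beta (x) \\ \beta (x) & \gamma (x) \end{array}\right ]\ ,$$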

and let λ1 and λ2 be the two continuous eigenvalues of the pencil (A, B), labeled so that λ1(x) ≥ λ2(x) for all x in Ω. Assume that there exists a unique point ξ0 ∈Ω where the eigenvalues coincide: λ1(ξ0) = λ2(ξ0). Consider the \(\mathcal {C}^k\) function \(F:\ {\Omega } \to \mathbb {R}^2\) given by

$$F(x)=\left [\begin{array}{c} a(x)\gamma (x)-\alpha (x)c(x) \\ b(x)\gamma (x)-\beta (x)c(x) \end{array}\right ]\ ,$$

and assume that 0 is a regular value for both functions aγ − αc and bγ − βc. Footnote 1 Then, consider the two \(\mathcal {C}^k\) curves Γ1 and Γ2 through ξ0, given by the zero-set of the components of F: Γ1 = {x ∈Ω : a(x)γ(x) − α(x)c(x) = 0}, Γ2 = {x ∈Ω : b(x)γ(x) − β(x)c(x) = 0}. Assume that Γ1 and Γ2 intersect transversally at ξ0. Footnote 2

Let Γ be a simple closed curve enclosing the point ξ0, and let it be parametrized as a \(\mathcal {C}^{\ell }\) (ℓ ≥ 0) function γ in the variable t, so that the function \(\gamma :\ t\in \mathbb {R} \to {\Omega }\) is \(\mathcal {C}^{\ell }\) and 1-periodic. Let \(m=\min \limits (k,\ell )\), and let Aγ, Bγ be the \(\mathcal {C}^m\) functions A(γ(t)), B(γ(t)), for all \(t\in \mathbb {R}\). Then, for all \(t\in \mathbb {R}\), the pencil (Aγ,Bγ) has the eigendecomposition

such that:


(i) \({\Lambda }_{\gamma }\in \mathcal {C}^m(\mathbb {R},\mathbb {R}^{2\times 2})\) and diagonal: \({\Lambda }_{\gamma }(t)=\left [\begin {array}{cc}\lambda _1(\gamma (t)) & 0 \\ 0 & \lambda _2(\gamma (t)) \end {array}\right ]\), and Λγ(t + 1) = Λγ(t) for all \(t\in \mathbb {R}\);


(ii) \(V_{\gamma }\in \mathcal {C}^m(\mathbb {R},\mathbb {R}^{2\times 2})\), Vγ(t + 1) = −Vγ(t) for all \(t\in \mathbb {R}\), and Vγ is Bγ-orthogonal: Vγ(t)TBγ(t)Vγ(t) = I, for all \(t\in \mathbb {R}\).

Proof

Because of Theorem 3.1,

and, by hypothesis, ξ0 is the unique root of F(x) in Ω. Moreover, under the assumption of ξ0 being the only root of F in Ω, just like in the proof of Theorem 3.1 (see (24)), we can also rewrite the problem in the simpler form

Furthermore, 0 is a regular value for both functions \(\hat a\) and \(\hat b\), and therefore G(x) = 0 continues to define smooth curves intersecting transversally at ξ0, call them \(\hat {\Gamma }_1\) and \(\hat {\Gamma }_2\) (these are just rescalings and shifts of the curves Γ1 and Γ2). Moreover, we let \((\hat A, \hat B)\) be the pencil associated to these simpler functions:

At this point, we will prove the asserted result for \(\hat {\Gamma }_1\) and \(\hat {\Gamma }_2\) by first showing that it holds true along a small circle C around ξ0, and then show that the same results hold when we continuously deform C into Γ.

Since \(\hat {\Gamma }_1\) and \(\hat {\Gamma }_2\) intersect transversally at ξ0, we let C be a circle centered at ξ0, of radius small enough so that the circle goes through each of \(\hat {\Gamma }_1\) and \(\hat {\Gamma }_2\) at exactly two distinct points (see Fig. 1).

Furthermore, let C be parametrized by a continuous 1-periodic function ρ, ρ(t + 1) = ρ(t), for all \(t\in \mathbb {R}\).

Consider the pencil \((\hat A(\rho (t)), \hat B(\rho (t)))\), \(t\in \mathbb {R}\), which is thus a smooth (and 1-periodic) pencil, with distinct eigenvalues, so that its smooth eigenvalues μ1,2 in (25) (where all functions \(\hat a, \hat b, \tilde d\) are evaluated along C) will necessarily satisfy μj(t + 1) = μj(t), j = 1,2. The smooth eigenvectors of \((\hat A(\rho (t)), \hat B(\rho (t)))\), call them W(t), are uniquely determined (for each t) up to sign. Call \(\left [\begin {array}{cc}u_{1}\\ u_{2} \end {array}\right ]\) the eigenvector relative to μ2, so that

From this, a direct computation shows that (recall that, presently, all functions are computed along C)

Therefore, from these it follows that u1 (respectively, u2) changes sign if and only if \(\hat b\) goes through zero and \(\hat a>0\) (respectively, \(\hat a < 0\)). Therefore, each of the two functions u1 and u2 changes sign only once over any interval of length 1, and since no continuous function of period 1 can change sign only once over one period, it follows that u1 and u2 must be 2-periodic functions and the periodicity assertions of the theorem follow relatively to the curve ρ(t) for the eigenvector function W. That is, along C we have that W has period 2. Finally, we note that the eigenvector function V has columns whose entries have the same sign as those of W (see the third line in the proof of Theorem 3.1), so that the periodicity assertion holds for V.

Finally, the extension from the circle C to the curve Γ enclosing the point ξ0 follows by a homotopy argument similar to what was done in [6] (see the final part of the proof of Theorem 2.2 and Remark 2.5 in [6]). One takes a continuous homotopy h(s, t), (s, t) ∈ [0,1] × [0,1], such that h(0,t) = ρ(t), h(1,t) = γ(t), for all t ∈ [0,1], h(s,0) = h(s,1) for any s ∈ [0,1], and h continuously (in s) deforms ρ(⋅) into γ(⋅). Then, consider the restrictions A(h(s, t)) and B(h(s, t)), (s, t) ∈ [0,1] × [0,1]. Using the block diagonalization result Theorem 2.8, we get that there are continuous V (s, t) and Λ(s, t) such that VT(s, t)A(h(s, t))V (s, t) = Λ(s, t) where Λ is diagonal, and V is B-orthogonal: VT(s, t)B(h(s, t))V (s, t) = I. Next, take the functions \(f_{k}(s) = {v^{T}_{k}}(s,0)B(h(s,0))v_{k}(s,1)\) for k = 1,2 and recall that B(h(s,0)) = B(h(s,1)) since h(s,0) = h(s,1) for all s ∈ [0,1]. So, we have that f1 and f2 take values in {− 1,1}. But they are continuous, and so they have to be constant. Therefore, we must have f1(0) = f1(1) = − 1 and f2(0) = f2(1) = − 1, from which Vγ(1) = −Vγ(0) and the asserted periodicity of Vγ follows. □

The assumption of transversality for the curves Γ1 and Γ2 at ξ0 is generic within the class of smooth curves intersecting at a point. Accordingly, we say that ξ0 is a generic coalescing point of eigenvalues of (22) when Γ1 and Γ2 intersect transversally at ξ0. As a consequence, within the class of \(\mathcal {C}^{k}\) functions A, B, generically we will need two parameters to observe coalescing of the eigenvalues of (22); such coalescings will occur at isolated points in parameter space and will persist under generic perturbation (as a phenomenon: the parameter value at which the coalescing occurs will typically change).

Example 3.3

Take \(A(x,y)= \left [\begin {array}{cc}4x+3y & 5y \\ 5y & -4x+3y \end {array}\right ]\), \(B(x,y)=\left [\begin {array}{cc}5 & 3 \\ 3 & 5 \end {array}\right ]\). Then, the eigenvalues satisfy the relation \(\lambda _{1,2}=\pm \sqrt {x^{2}+y^{2}}\), (23) gives the solution x = y = 0, and the eigenvalues are not differentiable there. If we perturb the data as \(A\to A+\epsilon \left [\begin {array}{cc}1 & 1 \\ 1 & -1 \end {array}\right ]\), then the solution of (23) is x = −𝜖/4, y = − 5𝜖/16.
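The claims of Example 3.3 are easy to check numerically. The sketch below is only an illustration: it uses SciPy's `scipy.linalg.eigh(A, B)` as a stand-in for a generalized symmetric-definite eigensolver, and the helper name `pencil` and the sample values of x, y and 𝜖 are our own choices.

```python
import numpy as np
from scipy.linalg import eigh

def pencil(x, y, eps=0.0):
    """Matrices A(x, y) and B of Example 3.3, with the optional perturbation eps."""
    A = np.array([[4*x + 3*y, 5*y], [5*y, -4*x + 3*y]], dtype=float)
    A = A + eps * np.array([[1.0, 1.0], [1.0, -1.0]])
    B = np.array([[5.0, 3.0], [3.0, 5.0]])
    return A, B

# Unperturbed pencil: the generalized eigenvalues should be -r and +r,
# with r = sqrt(x^2 + y^2).
x, y = 0.3, -0.4
lam = eigh(*pencil(x, y), eigvals_only=True)   # returned in ascending order
r = np.hypot(x, y)
print(lam, r)

# Perturbed pencil: the eigenvalues should coincide at (-eps/4, -5*eps/16).
eps = 0.1
lam_eps = eigh(*pencil(-eps/4, -5*eps/16, eps), eigvals_only=True)
gap = lam_eps[1] - lam_eps[0]
print(gap)
```

At the perturbed coalescing point one finds A = −(3𝜖/16)B, so the printed gap should vanish up to rounding.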

Combining Theorem 3.2 with Theorem 2.8, we can characterize the case of a symmetric-definite pencil in \(\mathbb {R}^{n\times n}\) whose eigenvalues coalesce uniquely at a point ξ0.

First, we define what we mean by generic coalescing point for the eigenvalues of an n-dimensional pencil when there is only one such point.

Definition 3.4

Let \(A,B\in \mathcal {C}^{k}({\Omega },\mathbb {R}^{n\times n})\), k ≥ 1, with A = AT and B = BT ≻ 0. Let λ1(x),…,λn(x), x ∈Ω, be the continuous eigenvalues of the pencil (A, B), ordered so that

and

Let R be a rectangular region \(R\subseteq {\Omega }\) containing ξ0 in its interior. Moreover, let


(1) \(V\in \mathcal {C}^{k}(R,\mathbb {R}^{n\times n})\) be a B-orthogonal function achieving the reduction guaranteed by Theorem 2.8:

$$ \begin{array}{@{}rcl@{}} && V^{T}(x)A(x)V(x)=\left[\begin{array}{ccc}{\Lambda}_{1}(x) & 0 & 0 \\ 0 & {A_{2}(x)} & 0 \\ 0 & 0 & {\Lambda}_{3}(x) \end{array}\right]\ , \text{and} \\ && V^{T}(x)B(x)V(x)=\left[\begin{array}{ccc}I_{j-1} & 0 & 0 \\ 0 & {B_{2}(x)} & 0 \\ 0 & 0 & I_{n-j-1} \end{array}\right]\ , \ \forall x\in R\ , \end{array} $$

where \({\Lambda }_{1}\in \mathcal {C}^{k}\left (R,\mathbb {R}^{(j-1) \times (j-1)}\right )\) and \({\Lambda }_{3}\in \mathcal {C}^{k}\left (R,\mathbb {R}^{(n-j-1) \times (n-j-1)}\right )\), such that, for all x ∈ R, Λ1(x) = diag(λ1(x),…,λj− 1(x)), and Λ3(x) = diag(λj+ 2(x),…,λn(x)). Moreover, \(A_{2}={A_{2}^{T}}\in \mathcal {C}^{k}\left (R,\mathbb {R}^{2\times 2}\right )\), \(B_{2}={B_{2}^{T}}\succ 0 \in \mathcal {C}^{k}\left (R,\mathbb {R}^{2\times 2}\right )\) and the pencil (A2,B2) has eigenvalues λj(x),λj+ 1(x) for each x ∈ R;


(2) for all x ∈ R, write \(A_{2}(x)=\left [\begin {array}{cc}a(x) & b(x) \\ b(x) & c(x) \end {array}\right ]\), \(B_{2}(x)=\left [\begin {array}{cc}\alpha (x) & \beta (x) \\ \beta (x) & \gamma (x) \end {array}\right ]\). Assume that 0 is a regular value for the functions aγ − αc and bγ − βc, and define the function F and the curves Γ1 and Γ2 as in Theorem 3.2.

Then, we call ξ0 a generic coalescing point of eigenvalues in Ω if the curves Γ1 and Γ2 intersect transversally at ξ0.

Remark 3.5

Arguing in a similar way to [6, Theorem 2.7], it is a lengthy but simple computation to verify that Definition 3.4 is independent of the transformation V used to bring the pencil (A, B) to block-diagonal form.

Corollary 3.6

Coalescence of one pair of eigenvalues for a pencil (A, B) where A = AT and B = BT ≻ 0 is a codimension 2 phenomenon.

Proof

This is because coalescence is expressed by the two relations in (23), or –as seen in the proof of Theorem 3.1– by the two relations \(\left \{\begin {array}{l} \hat a = 0 \\ \hat b = 0 \end {array}\right .\). This, coupled with Definition 3.4, gives the claim. □

As a consequence of its definition, and of Corollary 3.6, it is generic for coalescing points of two-parameter symmetric-definite pencils to be generic coalescing points.

Remark 3.7

Corollary 3.6 is the reason why in this work we focus on pencils depending on two parameters. Since coalescence of eigenvalues is a codimension 2 phenomenon, one typically needs to vary two parameters in order to observe it at isolated points in parameter space; 1-parameter pencils, instead, are generally expected to have distinct eigenvalues.

As already exemplified by Example 3.3, at a point where eigenvalues of the pencil coalesce, there is a complete loss of smoothness of the eigenvalues. In fact, the situation of Example 3.3 is fully general, as the next example shows.

Example 3.8

Without loss of generality (see the proof of Theorem 3.1), take the symmetric-definite pencil (A, B) with

and let ξ0 be such that a(ξ0) = b(ξ0) = 0, and (because of transversality) we also have that the Jacobian \(\left [\begin {array}{cc}\nabla a \\ \nabla b \end {array}\right ]_{\xi _{0}}= \left [\begin {array}{cc}a_{x} & a_{y} \\ b_{x} & b_{y} \end {array}\right ]_{\xi _{0}}\) is invertible, that is, axby − aybx≠ 0. Now, the eigenvalues μ1,2 of the pencil are given by (25): \(\mu _{1,2}(x)=\frac {-b d\pm \sqrt {h(x)}}{1-d^{2}}\), with h(x) = b2 + (1 − d2)a2. Expanding the function h(x) at ξ0, we get

and a simple computation gives h(ξ0) = 0, ∇h(ξ0) = 0, and

so that at ξ0: H11 > 0, H22 > 0, and \(\det (H(\xi _{0}))=(1-d^{2})(b_{x}a_{y}-a_{x}b_{y})^{2}\) is positive, because of the previously remarked transversality. Therefore, H(ξ0) is positive definite, and in the vicinity of ξ0 the eigenvalues have the form \(\mu _{1,2} = \frac {-b d\pm \sqrt {\|z\|^{2}_{2}+O(\|x-\xi _{0}\|^{4}_{2})}}{1-d^{2}}\) where z = H1/2(ξ0) (x − ξ0). As a consequence, the eigenvalue surfaces have a double cone structure at the coalescing point. This justifies calling the coalescing point a conical intersection, or CI for short. In particular, the eigenvalues are not differentiable at a conical intersection.
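The "simple computation" mentioned in Example 3.8 can be spelled out as follows. This is a sketch under two assumptions of ours: that a, b, d are at least \(\mathcal {C}^{2}\) near ξ0, and that H denotes one half of the Hessian of h, which is the normalization consistent with the determinant stated in the example. Using a(ξ0) = b(ξ0) = 0,

$$
\begin{array}{l}
\nabla h=2b\,\nabla b+2(1-d^{2})\,a\,\nabla a-2d\,a^{2}\,\nabla d \ \ \Longrightarrow \ \ \nabla h(\xi _{0})=0\ , \\[4pt]
H(\xi _{0})=\left [\nabla b\,\nabla b^{T}+(1-d^{2})\,\nabla a\,\nabla a^{T}\right ]_{\xi _{0}}
=\left [\begin{array}{cc} b_{x}^{2}+(1-d^{2})a_{x}^{2} & b_{x}b_{y}+(1-d^{2})a_{x}a_{y} \\ b_{x}b_{y}+(1-d^{2})a_{x}a_{y} & b_{y}^{2}+(1-d^{2})a_{y}^{2} \end{array}\right ]_{\xi _{0}}\ , \\[4pt]
\det H(\xi _{0})=(1-d^{2})\left (b_{x}^{2}a_{y}^{2}+a_{x}^{2}b_{y}^{2}-2a_{x}a_{y}b_{x}b_{y}\right )=(1-d^{2})(b_{x}a_{y}-a_{x}b_{y})^{2}\ .
\end{array}
$$

With this normalization, \(h(x)=(x-\xi _{0})^{T}H(\xi _{0})(x-\xi _{0})\) plus higher-order terms. The diagonal entries are positive because the invertibility of the Jacobian excludes ax = bx = 0 and ay = by = 0.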

In the next theorem, we extend Theorem 3.2 to the case of an n × n pencil, with a single generic coalescing of eigenvalues in Ω.

Theorem 3.9

Let \(A, B\in \mathcal {C}^{k}({\Omega },\mathbb {R}^{n\times n})\), k ≥ 1, with A = AT and B = BT ≻ 0. Let λ1(x),…,λn(x), x ∈Ω, be the continuous eigenvalues of the pencil (A, B). Assume that

and

where ξ0 is a generic coalescing point.

Let Γ be a simple closed curve in Ω enclosing the point ξ0, and let it be parametrized as a \(\mathcal {C}^{\ell }\) (ℓ ≥ 0) function γ in the variable t, so that the function \(\gamma :\ t\in \mathbb {R} \to {\Omega }\) is \(\mathcal {C}^{\ell }\) and 1-periodic. Let \(m=\min \limits (k,\ell )\), and let Aγ, Bγ be the \(\mathcal {C}^{m}\) restrictions of A, B, to γ(t), \(t\in \mathbb {R}\).

Then, for all \(t\in \mathbb {R}\), the pencil (Aγ,Bγ) admits the diagonalization Aγ(t)Vγ(t) = Bγ(t)Vγ(t)Λ(t), where


(i) \({\Lambda }\in \mathcal {C}^{m}(\mathbb {R},\mathbb {R}^{n\times n})\), Λ(t + 1) = Λ(t), and Λ(t) = diag(λ1(t),…,λn(t)), \(\forall t\in \mathbb {R}\);


(ii) \(V_{\gamma }\in \mathcal {C}^{m}(\mathbb {R},\mathbb {R}^{n\times n})\) is B-orthogonal, and

$$V_{\gamma}(t+1)=V_{\gamma}(t) D\ , \ D=\left[\begin{array}{ccc}I_{j-1} & 0 & 0\\ 0 & -I_{2} & 0\\ 0 & 0 & I_{n-j-1} \end{array}\right]\ .$$

Proof

The proof combines the block-diagonalization result, Theorem 2.8, with the 2 × 2 case, Theorem 3.2. It follows closely the proof of [6, Theorem 2.8], and is therefore omitted. □

It is worth emphasizing that for the eigenvectors associated to eigenvalues which do not coalesce inside Ω, we have vγ(t + 1) = vγ(t). In other words, a continuous eigendecomposition V along a simple curve Γ not containing coalescing points inside (or on) it, satisfies V (t + 1) = V (t). This consideration, coupled with the uniqueness up to sign of a B-orthogonal function eigendecomposing a pencil with distinct eigenvalues, gives the following.

Corollary 3.10

Let (A, B) be a \(\mathcal {C}^{k}\) symmetric-positive definite pencil for all x ∈Ω. Let Γ be a simple closed curve in Ω, parametrized by the \(\mathcal {C}^{\ell }\) and 1-periodic function γ. Let \(m=\min \limits (k,\ell )\), and let (Aγ,Bγ) be the smooth pencil restricted to Γ. If there are no coalescing points inside Γ (nor on it), then any \(\mathcal {C}^{m}\) eigendecomposition V of the pencil (Aγ,Bγ) satisfies V (t + 1) = V (t).

3.2 Several generic coalescing points in Ω

Here, we consider the case when several eigenvalues of the pencil coalesce inside a closed curve Γ. In line with our previous analysis of generic cases, we only consider the case when coalescing points are isolated and generic, as characterized next.

Definition 3.11

Consider the pencil (A, B), with \(A, B\in \mathcal {C}^{k}({\Omega }, \mathbb {R}^{n\times n})\), k ≥ 1, A = AT and B = BT ≻ 0. A parameter value ξ0 ∈Ω is called a generic coalescing point of eigenvalues if there is a pair of equal eigenvalues at ξ0, no other pair of eigenvalues coalesce inside an open simply connected region \({\Omega }_{0}\subseteq {\Omega }\), and ξ0 is a generic coalescing point of eigenvalues in Ω0.

In these cases, we have the following result which elucidates how each of several coalescing points contributes to the sign changes of the eigenvectors around a loop that encircles the points.

Theorem 3.12

Consider the pencil (A, B), where \(A, B\in \mathcal {C}^{k}({\Omega },\mathbb {R}^{n\times n})\), k ≥ 1, A = AT and B = BT ≻ 0. Let λ1(x) ≥… ≥ λn(x) be its continuous eigenvalues. Assume that for every i = 1,…,n − 1,

at di distinct generic coalescing points in Ω, so that there are \({\sum }_{i=1}^{n-1}d_{i}\) such points Footnote 3. Let Γ be a simple closed curve in Ω enclosing all of these distinct generic coalescing points of eigenvalues, and let it be parametrized as a \(\mathcal {C}^{\ell }\) (ℓ ≥ 0) function γ in the variable t, so that the function \(\gamma :\ t\in \mathbb {R} \to {\Omega }\) is \(\mathcal {C}^{\ell }\) and 1-periodic. Let \(m=\min \limits (k,\ell )\) and let Aγ and Bγ be the \(\mathcal {C}^{m}\) restrictions of A and B to γ(t). Then, for all \(t\in \mathbb {R}\), there exists \(V\in \mathcal {C}^{m}(\mathbb {R},\mathbb {R}^{n\times n})\) diagonalizing the pencil (Aγ,Bγ): AγV = BγV Λ, where


(i) \({\Lambda }\in \mathcal {C}^{m}(\mathbb {R},\mathbb {R}^{n\times n})\) is diagonal: \({\Lambda }=\text {diag}\bigl (\lambda _{1}(t), \dots , \lambda _{n}(t)\bigr )\), for all \(t\in \mathbb {R}\), and Λ(t + 1) = Λ(t);


(ii) V is Bγ-orthogonal, with

$$V(t+1)=V(t)\ D\ , \forall t\in \mathbb{R}\ ,$$

where D is a diagonal matrix of ± 1 given as follows:

$$D_{11}=(-1)^{d_{1}},\ \ D_{ii}=(-1)^{d_{i-1}+d_{i}}\ \text{for}\ i=2,\ldots,n-1,\ \ D_{nn}=(-1)^{d_{n-1}}\ .$$

In particular, if D = I, then V is 1-periodic, otherwise it is 2-periodic with minimal period 2.

Proof

Since the eigenvalues are distinct on Γ, we know that there is a \(\mathcal {C}^{m}\) eigendecomposition V of the pencil (Aγ,Bγ), and that V is Bγ-orthogonal. The issue is to establish the periodicity of V. Our proof is by induction on the number of coalescing points.

Because of Theorem 3.9, we know that the result is true for 1 coalescing point. So, we assume that the result holds for N − 1 distinct generic coalescing points, and we will show it for N distinct generic coalescing points; note that \(N={\sum }_{i=1}^{n-1}d_{i}\).

Since the coalescing points are distinct, we can always separate one of them, call it ξN, from the other N − 1 points, with a curve α not containing coalescing points, and which stays inside the region bounded by Γ, joining two distinct points on Γ, y0 = γ(t0) and y1 = γ(t1), with t0,t1 ∈ [0,1), so that α leaves ξN and all other coalescing points ξi’s on opposite sides (see Fig. 2). Let j, 1 ≤ j ≤ n − 1, be the index for which λj(ξN) = λj+ 1(ξN).

Now, consider the following construction. Take a smooth eigendecomposition of (Aγ,Bγ) along Γ, starting at y0 and returning to it; the loop is done once, and to fix ideas, we will traverse it in the counterclockwise direction. Denote the continuous matrix of eigenvectors of (Aγ,Bγ) at the beginning of this loop as V0 and that at the end of the loop as V1.

Since the curve α does not contain any coalescing point, the matrix V1 would be the same as if, instead of following the curve Γ, we were to follow Γ0 from y0 to y1, then go from y1 to y0 along α, back from y0 to y1 along α in opposite direction and then from y1 to y0 along Γ1: (Γ0 ∪ α) ∪ ((−α) ∪Γ1). Denote the matrix of eigenvectors of (Aγ,Bγ) at the end of the first loop (Γ0 ∪ α) by \(V_{\frac 12}\). Using the induction hypothesis along the closed curve Γ0 ∪ α, we have

where \(\hat D \) is a diagonal matrix \(\hat D=\text {diag}(\hat D_{11},{\dots } \hat D_{nn})\), with

and \(\hat d_{i}=d_{i}\), for all i≠j, and \(\hat d_{j}=d_{j}-1\). Now, by looking at what happens on the second loop, by virtue of Theorem 3.9, we have that all columns of \(V_{\frac 12}\) coincide with those of V1, except for the j th and (j + 1)st ones which have changed in sign. Putting everything together, we have V0 = V1D with D as given in the statement of the Theorem. □
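The sign pattern in Theorem 3.12 is a simple parity count, which the following sketch makes explicit. The function name `sign_matrix_diagonal` and the convention d0 = dn = 0 (used to treat the first and last diagonal entries uniformly) are our own; the formula itself is the one in the statement of the theorem.

```python
def sign_matrix_diagonal(d):
    """Diagonal of D in Theorem 3.12, given d = [d_1, ..., d_{n-1}].

    d[i-1] is the number of generic coalescing points of the pair
    (lambda_i, lambda_{i+1}) enclosed by the loop.
    """
    n = len(d) + 1
    padded = [0] + list(d) + [0]      # padded[i] = d_i, with d_0 = d_n = 0
    return [(-1) ** (padded[i - 1] + padded[i]) for i in range(1, n + 1)]

# n = 4: one coalescing of (lambda_1, lambda_2), none of (lambda_2, lambda_3),
# two of (lambda_3, lambda_4).
print(sign_matrix_diagonal([1, 0, 2]))
```

For this choice of d the first two eigenvectors change sign around the loop, while the two coalescings of (λ3,λ4) cancel each other and leave the last two columns unchanged.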

We do not study nongeneric coalescings, since they are not robust under perturbation; see [6] for considerations on these cases, for the symmetric eigenproblem. With this in mind, in the final result we give below, we should think of all coalescing points as being generic.

The following Theorem, which is the main result of this work, yields a sufficient condition for the existence of (generic) conical intersections inside a certain region. In a nutshell, it says that a change of sign of an eigenvector of a pencil around a loop betrays the existence of a coalescing point for the corresponding eigenvalue inside the loop. This is, together with Lemma 2.17, the foundation on which we base our numerical algorithm to detect CIs.

Theorem 3.13

Consider the pencil (A, B), where \(A,B\in \mathcal {C}^{k}({\Omega },\mathbb {R}^{n\times n})\), A = AT and B = BT ≻ 0. Let λ1(x) ≥… ≥ λn(x) be its continuous eigenvalues. Let Γ be a simple closed curve in Ω with no coalescing point for the eigenvalues on it, and let it be parametrized as a \(\mathcal {C}^{\ell }\) (ℓ ≥ 0) function γ in the variable t, so that the function \(\gamma :\ t\in \mathbb {R} \to {\Omega }\) is \(\mathcal {C}^{\ell }\) and 1-periodic. Let \(m=\min \limits (k,\ell )\) and let Aγ and Bγ be the \(\mathcal {C}^{m}\) restrictions of A and B to γ(t), and let V be \(\mathcal {C}^{m}\) and Bγ-orthogonal diagonalizing the pencil (Aγ,Bγ). Let V0 = V (0) and V1 = V (1), and define D such that V0D = V1.

Next, let i1 < i2 < ⋯ < i2q be the 2q indices Footnote 4 i for which Dii = − 1. Let us group these indices in pairs \((i_{1},i_{2}),\dots ,(i_{2q-1},i_{2q})\). Then, λν and λν+ 1 coalesced at least once inside the region encircled by Γ if i2j− 1 ≤ ν < i2j for some j = 1,…,q.
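The pairing rule of Theorem 3.13 can be written as a few lines of code. In this sketch the function name `coalescing_pairs` is hypothetical, indices are 1-based as in the text, and raising an error when the number of − 1 entries is odd is our own defensive choice rather than part of the theorem.

```python
def coalescing_pairs(D_diag):
    """Indices nu (1-based) for which Theorem 3.13 guarantees that lambda_nu and
    lambda_{nu+1} coalesce somewhere inside the loop, given the diagonal of D."""
    flipped = [i + 1 for i, s in enumerate(D_diag) if s < 0]   # i_1 < ... < i_2q
    if len(flipped) % 2:
        raise ValueError("expected an even number of -1 entries")
    pairs = []
    for lo, hi in zip(flipped[0::2], flipped[1::2]):           # (i_1, i_2), (i_3, i_4), ...
        pairs.extend(range(lo, hi))                            # all nu with lo <= nu < hi
    return pairs

print(coalescing_pairs([-1, 1, -1, 1, 1]))
```

With D11 = D33 = − 1 and all other entries equal to 1, the function returns the indices 1 and 2, that is, the pairs (λ1,λ2) and (λ2,λ3).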

Remark 3.14

Some comments are in order. As always, we implicitly assume all CIs to be generic.


(i) To exemplify, suppose that, with the notation of Theorem 3.13, we have D11 = D33 = − 1, all other Dii’s being 1. Then, inside the region encircled by Γ, the pairs (λ1,λ2) and (λ2,λ3) have coalesced at an odd number of points.


(ii) Theorem 3.13 yields a sufficient but not necessary condition for coalescence of eigenvalues. It is a topological result, similar in spirit to the Intermediate Value Theorem, and cannot distinguish whether, inside Γ, some pair of eigenvalues have coalesced an even number of times or not at all.
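To see the topological test at work, one can follow the eigenvectors of the pencil of Example 3.3 around two circles, one enclosing the coalescing point at the origin and one not. The sketch below is a deliberately crude illustration and not the adaptive algorithm of Section 4: it uses a fixed number of steps, takes the previous eigenvector matrix as predictor, and relies on `scipy.linalg.eigh(A, B)`, which returns B-orthonormal eigenvectors. The names `pencil`, `loop_signs` and `circle` are hypothetical.

```python
import numpy as np
from scipy.linalg import eigh

def pencil(x, y):
    """The 2 x 2 pencil of Example 3.3; its only coalescing point is the origin."""
    A = np.array([[4*x + 3*y, 5*y], [5*y, -4*x + 3*y]])
    B = np.array([[5.0, 3.0], [3.0, 5.0]])
    return A, B

def loop_signs(gamma, steps=400):
    """Diagonal of D, where V(1) = V(0) D, for a sign-continued eigendecomposition
    along the closed curve gamma(t), t in [0, 1]. Assumes distinct eigenvalues on it."""
    A, B = pencil(*gamma(0.0))
    _, V0 = eigh(A, B)
    V = V0
    for t in np.linspace(0.0, 1.0, steps + 1)[1:]:
        A, B = pencil(*gamma(t))
        _, Vnew = eigh(A, B)
        s = np.sign(np.diag(Vnew.T @ B @ V))   # align each column with the previous step
        V = Vnew * s
    return np.rint(np.diag(V0.T @ B @ V)).astype(int)

def circle(cx, cy, r=1.0):
    return lambda t: (cx + r*np.cos(2*np.pi*t), cy + r*np.sin(2*np.pi*t))

print(loop_signs(circle(0.0, 0.0)))   # loop around the coalescing point
print(loop_signs(circle(3.0, 0.0)))   # loop that does not enclose it
```

In line with Theorems 3.2 and 3.13, both columns should change sign along the first circle and neither along the second.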

4 Algorithms to locate coalescing eigenvalues

The procedure we implemented to locate coalescing generalized eigenvalues is based on Theorem 3.13, and on the smooth generalized eigendecomposition A(t)V (t) = B(t)V (t)Λ(t) along 1-d paths, as stated in Theorem 2.9. Our goal is to obtain a sampling of these smooth V and Λ at some values of t. Given a 1-parameter pencil (A(t),B(t)), for t ∈ [0,1], with \(A, B\in \mathcal {C}^{k}([0,1],\mathbb {R}^{n\times n})\), A = AT and B = BT ≻ 0, we can assume that the eigenvalues are distinct for all t ∈ [0,1], and λ1(t) > λ2(t) > … > λn(t).

To compute Λ = diag(λ1,λ2,…,λn) and V we used a continuation procedure of predictor-corrector type, similar to the one developed in [5] to obtain a sampling of the smooth ordered Schur decomposition for symmetric 1-d functions. For completeness, we briefly describe here the step from a point tκ to the point tκ+ 1 of the new procedure, further remarking on the differences between the present procedure and the one in [5], to which we refer for a discussion of some algorithmic choices.

Given an ordered decomposition at tκ: A(tκ)V (tκ) = B(tκ)V (tκ)Λ(tκ) and a stepsize h, we want the decomposition at tκ+ 1 = tκ + h: A(tκ+ 1)V (tκ+ 1) = B(tκ+ 1)V (tκ+ 1)Λ(tκ+ 1), where the factors V (tκ+ 1) and Λ(tκ+ 1) lie along the smooth path from tκ to tκ+ 1. Computing Λ(tκ+ 1) is easy with standard software, such as eig in MATLAB: since the eigenvalues are distinct, it suffices to keep them ordered. Furthermore, a B-orthogonal matrix Vκ+ 1 such that A(tκ+ 1)Vκ+ 1 = B(tκ+ 1)Vκ+ 1Λ(tκ+ 1) can also be obtained with standard linear algebra software, such as the eig MATLAB command, followed by re-ordering. Then, recalling Corollary 2.10, we know that V (tκ+ 1) = Vκ+ 1S, where S is a sign matrix, S = diag(s1,…,sn), si = ± 1,i = 1,…,n, that is, V (tκ+ 1) can be recovered by correcting the signs of the columns of Vκ+ 1. Specifically, by enforcing minimum variation with respect to a suitably predicted factor Vpred, we set S equal to the sign matrix which minimizes \(\left \| SV_{\kappa +1}^{T}B(t_{\kappa +1})V^{pred}-I\right \|_{F}\).
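The sign correction has a closed form. Writing \(M=V_{\kappa +1}^{T}B(t_{\kappa +1})V^{pred}\), one has \(\|SM-I\|_{F}^{2}=\|M\|_{F}^{2}-2{\sum }_{i}s_{i}M_{ii}+n\), so the minimizer is si = sign(Mii). The sketch below illustrates this in Python; `scipy.linalg.eigh` stands in for MATLAB's eig, and the function name `corrected_eigenvectors`, the random test pencil and the size of the perturbation of the predictor are assumptions made for the illustration.

```python
import numpy as np
from scipy.linalg import eigh

def corrected_eigenvectors(A_new, B_new, V_pred):
    """B-orthogonal eigenvectors at the new point, with column signs chosen to
    minimize || S V^T B V_pred - I ||_F over all sign matrices S."""
    lam, V = eigh(A_new, B_new)                 # ascending eigenvalues, V^T B V = I
    lam, V = lam[::-1], V[:, ::-1]              # reorder so that lam_1 > ... > lam_n
    M = V.T @ B_new @ V_pred
    s = np.where(np.diag(M) >= 0, 1.0, -1.0)    # s_i = sign(M_ii)
    return lam, V * s                           # V * s rescales the columns of V

# Small demonstration on a random symmetric-definite pencil.
rng = np.random.default_rng(0)
n = 4
A = rng.standard_normal((n, n)); A = A + A.T
C = rng.standard_normal((n, n)); B = C @ C.T + n * np.eye(n)
_, V_ref = eigh(A, B)
V_ref = V_ref[:, ::-1] * np.array([1.0, -1.0, -1.0, 1.0])   # a reference choice of signs
V_pred = V_ref + 1e-3 * rng.standard_normal((n, n))         # a slightly inaccurate prediction
lam, V = corrected_eigenvectors(A, B, V_pred)
print(np.allclose(V, V_ref))
```

The correction only succeeds when the predictor is close enough to the smooth factor that every diagonal entry of M is well away from zero, which is the reason for the stepsize control discussed next.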

Despite the overall simplicity of the basic step we just described, if the stepsize h is too large with respect to the variation of the factors, predicting the correct signs of the eigenvectors to follow the smooth path may be a hard task. This difficulty is typically encountered when there is a pair of close eigenvalues, as happens in the presence of a veering phenomenon Footnote 5. In this case, smoothness could be maintained only by using very small stepsizes, since the variation of the eigenvectors is inversely proportional to the difference between eigenvalues (see the differential equations (18)). Therefore, we proceed in two different ways, depending on the distance between consecutive eigenvalues. We say that a pair of eigenvalues (λi, λi+ 1) is close to veering at tκ + h if the following condition holds:

otherwise the eigenvalues are considered well separated Footnote 6. At the starting point tκ, eigenvalues are assumed to be well separated. This is by far the most frequent case. Yet, to be able to compute the sought smooth generalized eigendecomposition, it is essential to consider the possibility of having close eigenvalues; to handle this case, we have adopted the strategy detailed below.

Case 1. A pair of eigenvalues is close to veering at tκ + h.

In practice, during a veering, close eigenvalues may become numerically indistinguishable, and the corresponding B-orthogonal eigenvectors change very rapidly within a very small interval, outside of which the eigenvalues are again well separated. To overcome this critical veering zone, we proceed by computing a smooth block-diagonal eigendecomposition (see Theorem 2.8):

where close eigenvalues are grouped into one block, so that the eigenvalues of each Λi are well separated from the others. We do not expect, nor consider, the nongeneric case of three or more close eigenvalues. Hence, each Λi(t) is either an eigenvalue or a 2 × 2 block. Using the Cholesky factorization of B = LLT, we first re-write (26) as follows:

Then, to compute the smooth orthogonal transformation WB and block-diagonal ΛB, we use a procedure for the continuation of invariant subspaces, which is based on Riccati transformations (see [4] and [5] for details of this technique). Starting at tκ, we continue with this standard block eigendecomposition until all eigenvalues are again well separated; this happens at some value tf, and we set tκ+ 1 = tf. Then, VB(tκ+ 1) = L−T(tκ+ 1)WB(tκ+ 1). A key issue is how to recover the complete smooth eigendecomposition at tκ+ 1. Indeed, Theorem 2.8 guarantees the existence of decomposition (26) but not its uniqueness, as can be easily verified by rotating the columns of VB (or WB) corresponding to a 2 × 2 diagonal block. In [5], to which we refer for the details, we show how these subspaces can be rotated to obtain an accurate predicted factor Vpred, which allows us to correct the signs of the columns of Vκ+ 1 and to continue the complete smooth eigendecomposition at tκ+ 1 + h.

Case 2. All eigenvalues are well separated.

In this case, through our predictor-corrector strategy the stepsize is adapted based on the variations of both eigenvalues and eigenvectors. The following parameters

are used both to update the stepsize and to accept or reject a step (see Steps 4 and 5 in Algorithm 4.1 below). Accurate predictors are hence mandatory for the efficiency of the overall procedure. We obtain them by taking an Euler step:

in the differential equations (18), where the derivatives \(\dot {\Lambda }(t_{\kappa })\simeq \dot {\Lambda }_{\kappa }\) and \(\dot V(t_{\kappa })\simeq \dot V_{\kappa }\) are approximated by replacing \({\dot A}(t_{\kappa })\) with (A(tκ+ 1) − A(tκ))/h and \({\dot B}(t_{\kappa })\) with (B(tκ+ 1) − B(tκ))/h. Setting AV = V (tκ)TA(tκ+ 1)V (tκ) and BV = V (tκ)TB(tκ+ 1)V (tκ) we have

where P = (I − BV)/2, and H is the skew-symmetric matrix such that

We remark that ρV, Λpred and Vpred reduce to the corresponding quantities we used in [5] for the standard symmetric eigenvalue problem, when B = I and V is orthogonal.

Furthermore, after we reached tκ+ 1 with a successful step, we compute the following predicted eigenvalues of secant type:

Step 7 in Algorithm 4.1 uses these secant predictions, and its rationale is that if \(\lambda ^{sec}_{i}<\lambda ^{sec}_{i+1}\) for some i (recall that we have to obtain λi > λi+ 1), then the new step tκ+ 1 + h is likely to fail, and therefore h will be safely reduced as in (29).

Remark 4.1

Observe that smooth factors Λ and V could be obtained also via a smooth Schur decomposition \(\tilde A(t)=Q(t){\Lambda }(t) Q^{T}(t)\) of the symmetric matrix \(\tilde A=L^{-1}AL^{-T}\), with B = LLT, by using the procedure developed in [5] and setting V = L−TQ. However, Algorithm 4.1, which is tailored to the original generalized eigenproblem, turns out to be much more efficient; for general A and B, in our experiments in MATLAB the main cost is given by step 2 of the algorithm, which we resolve with a call to eig, and using eig(A, B) typically costs less than half of the execution time required to form \(\tilde A\) and use eig\((\tilde A)\).
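The equivalence of the two routes described in Remark 4.1 can be checked on a random pencil. The following sketch uses SciPy in place of MATLAB (`scipy.linalg.eigh(A, B)` for the pencil, Cholesky plus `numpy.linalg.eigh` for the reduced problem); the test matrices are arbitrary and the snippet makes no attempt to reproduce the timings reported in the remark.

```python
import numpy as np
from scipy.linalg import eigh, cholesky, solve_triangular

rng = np.random.default_rng(1)
n = 5
A = rng.standard_normal((n, n)); A = A + A.T                   # symmetric A
C = rng.standard_normal((n, n)); B = C @ C.T + n * np.eye(n)   # symmetric positive definite B

# Route 1: work directly with the pencil.
lam, V = eigh(A, B)

# Route 2: reduce to a standard problem with B = L L^T, A_tilde = L^{-1} A L^{-T}.
L = cholesky(B, lower=True)
X = solve_triangular(L, A, lower=True)               # X = L^{-1} A
A_tilde = solve_triangular(L, X.T, lower=True).T     # A_tilde = X L^{-T}
mu, Q = np.linalg.eigh(A_tilde)
V2 = solve_triangular(L.T, Q, lower=False)           # V2 = L^{-T} Q

print(np.allclose(lam, mu))                          # same eigenvalues
print(np.allclose(V2.T @ B @ V2, np.eye(n)))         # V2 is B-orthogonal
print(np.allclose(A @ V2, B @ V2 @ np.diag(mu)))     # V2 diagonalizes the pencil
```

The columns of V and V2 agree only up to sign, which is exactly the freedom that the sign correction of Section 4 resolves.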

5 Random matrix ensemble and experiments

Making use of the algorithms presented in Section 4, we have performed a numerical study on coalescence of eigenvalues for parametric eigenproblems and in this Section we report on these experiments.

Remark 5.1

Before describing our results, we emphasize several computational advantages of working directly with the pencil (A, B) rather than converting it to a standard eigenproblem prior to computing its eigenvalues.

- To begin with, it is much less costly (see Remark 4.1). This is not surprising, since for nearly 50 years the numerical linear algebra community has invested in methods tailor-made for the generalized eigenproblem.

- Working with the pencil allows one to retain its structure. For example, sparsity is generally lost after conversion to a standard eigenproblem.

- Furthermore, consider the case of pencils with banded A, B. In this case, the differential equations (18) used in the prediction phase of Algorithm 4.1 respect the banded structure of our problem, and this fact is exploited to obtain less costly predictors than those that would be obtained after conversion to a standard eigenproblem.

Next, we consider pencils A − λB whose matrices belong to a random matrix ensemble called “SG+.” This ensemble was introduced in [23] for modelling uncertainties in computational mechanics. Matrices in this ensemble are characterized by a property called dispersion, which is controlled by a dispersion parameter δ that must satisfy \(0<\delta <\sqrt {(n+1)(n+5)^{-1}}\). Moreover, we also considered banded SG+ matrices, i.e., matrices in SG+ “truncated” so as to have bandwidth b = 1,…,n − 1, where b = 1 means tridiagonal and b = n − 1 means “full.” Our goal is to investigate the effect of bandwidth and dispersion on how the number of conical intersections varies as we increase the dimension of the matrices.

We now illustrate in detail how our random matrix functions are defined. First, given integers n ≥ 2 and b = 1,…,n − 1, and dispersion parameter \(0<\delta <\sqrt {(n+1)(n+5)^{-1}}\), we construct the following n × n matrices (only the non-zero entries are explicitly defined):

where N(0,1) is the normal distribution with zero mean and variance 1, while Γ(ai,1) is the gamma distribution with shape ai and rate 1. Then, for all (x, y) in \(\mathbb {R}^{2}\), we define the following matrix functions:

We point out that:

a) all matrices \(L_{A,k}\) and \(L_{B,k}\) are strictly lower triangular and have bandwidth b, while \(D_{A}\) and \(D_{B}\) are diagonal; therefore, both A(x, y) and B(x, y) have bandwidth b;

b) \(A(x,y)=A(x,y)^{T}\) and \(B(x,y)=B(x,y)^{T}\) are positive definite for all (x, y);

c) the nonzero entries of all matrices \(L_{A,k}\), \(L_{B,k}\), \(D_{A}\) and \(D_{B}\) are independent random variables; more precisely: A(x, y) ∈ SG+ for all (x, y), and the same is true for B(x, y);

d) the probability density function of each diagonal entry of \(D_{A}\) and \(D_{B}\) depends on its position along the diagonal.
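As an illustration of properties a), b) and d), the following is a minimal sketch of how one banded, symmetric positive definite random matrix of SG+ type could be sampled. It is not the construction of A(x, y) and B(x, y) used in the experiments: it assumes the standard parametrization of the SG+ ensemble from [23] (a triangular factor with Gaussian off-diagonal entries and gamma-distributed squared diagonal entries), it truncates the factor to bandwidth b, and it ignores the dependence on (x, y) entirely. The function name `sample_banded_sgplus` and all variable names are hypothetical.

```python
import numpy as np

def sample_banded_sgplus(n, b, delta, rng):
    """Sample G = L L^T, with L lower triangular of bandwidth b.

    Assumed parametrization (standard SG+ ensemble, not taken from this text):
      off-diagonal entries of L: sigma * N(0, 1)
      diagonal entries of L:     sigma * sqrt(2 * Gamma(a_j, 1))
      sigma = delta / sqrt(n + 1),  a_j = (n + 1) / (2 delta^2) + (1 - j) / 2
    """
    if not 0.0 < delta < np.sqrt((n + 1) / (n + 5)):
        raise ValueError("need 0 < delta < sqrt((n+1)/(n+5))")
    if not 1 <= b <= n - 1:
        raise ValueError("need 1 <= b <= n-1")
    sigma = delta / np.sqrt(n + 1)
    # Strictly lower triangular Gaussian part ...
    L = np.tril(sigma * rng.standard_normal((n, n)), k=-1)
    # ... truncated so that only entries with i - j <= b survive.
    L = np.triu(L, k=-b)
    # Diagonal entries: their distribution depends on the position j.
    j = np.arange(1, n + 1)
    shape = (n + 1) / (2.0 * delta**2) + (1 - j) / 2.0
    L[np.diag_indices(n)] = sigma * np.sqrt(2.0 * rng.gamma(shape, 1.0))
    return L @ L.T

rng = np.random.default_rng(0)
G = sample_banded_sgplus(n=8, b=2, delta=0.5, rng=rng)
```

Since the diagonal of the factor is positive, the product is symmetric positive definite, and a factor of bandwidth b yields a product of bandwidth b.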

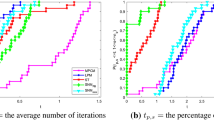

In our experiments, we have fixed five values of the dispersion parameter: δ = 0.05, 0.25, 0.45, 0.65, 0.85, and considered four possible bandwidths b = 3,4,5,full. For each combination of δ and b, and for dimensions n = 50,60,…,120, we have constructed 10 realizations of matrix pencils A(x, y) − λB(x, y) and performed a search for conical intersections for the pencil over the domain Ω = [0,π] × [0,2π]. The detection strategy consisted of subdividing the domain Ω into 64 × 128 square boxes and computing a smooth generalized eigendecomposition of the pencil around the perimeter of each box using the algorithm described in Section 4. The presence of conical intersections inside each box is betrayed by sign changes of the columns of the smooth B-orthogonal matrix that diagonalizes the pencil, see Theorem 3.13 and the subsequent remarks.
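The box-by-box test described above can be illustrated with a simplified sketch. It does not reproduce the predictor-corrector algorithm of Section 4: instead, it recomputes the generalized eigendecomposition at equally spaced points along the perimeter of a box and fixes the sign of each eigenvector so that it stays aligned (in the B-inner product) with the one at the previous point. This assumes that the eigenvalues remain distinct along the perimeter and that the points are close enough for the alignment to be unambiguous. The names `box_perimeter` and `sign_flips_around_loop`, and the 2 × 2 toy pencil, are hypothetical.

```python
import numpy as np
from scipy.linalg import eigh

def box_perimeter(x_lo, y_lo, h, m=50):
    """Closed loop of points around the square [x_lo, x_lo+h] x [y_lo, y_lo+h]."""
    t = np.linspace(0.0, 1.0, m, endpoint=False)
    bottom = [(x_lo + h * s, y_lo) for s in t]
    right = [(x_lo + h, y_lo + h * s) for s in t]
    top = [(x_lo + h - h * s, y_lo + h) for s in t]
    left = [(x_lo, y_lo + h - h * s) for s in t]
    return bottom + right + top + left + [(x_lo, y_lo)]

def sign_flips_around_loop(A, B, loop):
    """Entry j is True if the j-th eigenvector changes sign along the loop."""
    x0 = loop[0]
    _, V0 = eigh(A(*x0), B(*x0))  # columns are B-orthonormal
    V_prev = V0.copy()
    for x in loop[1:]:
        Bx = B(*x)
        _, V = eigh(A(*x), Bx)
        # B-inner product of each new column with the previous one
        s = np.sign(np.sum(V_prev * (Bx @ V), axis=0))
        s[s == 0] = 1.0
        V_prev = V * s  # enforce continuity of the signs
    overlap = np.sum(V0 * (B(*x0) @ V_prev), axis=0)
    return overlap < 0

# Toy pencil (an assumption for illustration): eigenvalues coalesce only at (0, 0).
A = lambda x, y: np.array([[x, y], [y, -x]], dtype=float)
B = lambda x, y: np.array([[2.0, 0.5], [0.5, 1.0]])

flips_inside = sign_flips_around_loop(A, B, box_perimeter(-0.5, -0.5, 1.0))
flips_outside = sign_flips_around_loop(A, B, box_perimeter(1.0, 1.0, 1.0))
```

For this toy pencil, the box containing the origin should report a sign change in both columns, while the box away from the origin should report none. A fixed number of points per side is a simplification; near a conical intersection the eigenvectors vary rapidly and an adaptive stepsize is needed.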

Our purpose is to fit the data with a power law

\(\#\,\text{conical intersections} \approx c\, n^{p}\) (30)

averaging the number of conical intersections over the 10 realizations. The outcome of the experiments is condensed in Fig. 3, which shows the superposition of the 20 linear regression lines obtained by computing a least squares best fit over the logarithm of the data, so that p and log c, with p and c as in (30), represent, respectively, the slope and intercept of the lines. The figure reveals 4 groups of 5 lines each, where lines in the same group share the value of the bandwidth. It is evident that the dispersion parameter δ has a negligible effect on the exponent p, and for the most part also on the factor c (with the exception of the full bandwidth case). In contrast, bandwidth has a significant effect on the exponent p, which increases from p ≈ 2 in the full bandwidth case to p ≈ 2.6 in the heptadiagonal case. A similar study was conducted in [5] for the GOE (Gaussian Orthogonal Ensemble) model. For convenience, below we report the values of the exponent p obtained for the two models (for the SG+ model we average over all values of δ, since variations for different values of δ are negligible):

Interestingly, the table above shows similar (quadratic) power laws for both models in the “full” case; but, as the bandwidth decreases, the SG+ model displays a slower growth of the exponent p than the GOE model.
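The least squares fit over the logarithm of the data mentioned above amounts to a linear regression of the logarithm of the averaged counts against log n. A minimal sketch follows; the counts used here are synthetic values generated from a made-up power law purely to exercise the code, and are not the data of the experiments. The function name `fit_power_law` is hypothetical.

```python
import numpy as np

def fit_power_law(n_values, counts):
    """Fit counts ~ c * n**p by least squares on log(counts) vs log(n).

    Returns (p, c): p is the slope of the regression line and
    log(c) is its intercept.
    """
    p, log_c = np.polyfit(np.log(n_values), np.log(counts), 1)
    return p, np.exp(log_c)

n_values = np.arange(50, 121, 10)           # n = 50, 60, ..., 120
synthetic_counts = 0.4 * n_values**2.5      # made-up data, for illustration only
p, c = fit_power_law(n_values, synthetic_counts)
```

On exact power-law data such as this, the fit recovers the exponent and the factor up to rounding errors; with real counts, each value would first be averaged over the available realizations.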

Finally, let us give a brief account of the computational time required by our experiments. All computations were performed on the “Partnership for an Advanced Computing Environment” (PACE), the high-performance computing infrastructure at the Georgia Institute of Technology, Atlanta, GA, USA. On this computing environment, the computation that required the longest time was the heptadiagonal case with the largest dimension n = 120, which took about 12.5 h. By contrast, the fastest computation corresponded to the full bandwidth case with the smallest dimension n = 50, which took about 15 min. This is consistent with the computational effort growing with the number of conical intersections, which in turn grows faster with n the larger the exponent p is. This comes as no surprise: in the vicinity of each conical intersection, the eigenvectors exhibit rapid variations that require severe restrictions on the stepsize for our continuation algorithm.

Remark 5.2

We were not able to perform experiments for the tridiagonal and pentadiagonal cases. This is due to the fact that, as the bandwidth gets small and the dimension large, a significant number of sharp variations of the eigenvectors occur within intervals of size smaller than machine precision, ruling out our (and, actually, any) numerical continuation solver. These difficulties were already encountered (and explained) in [5] for the tridiagonal case of the standard eigenproblem (see Remark 3.1 therein). Because of these numerical difficulties, in [5, Section 2.3] we devised ad hoc techniques for the tridiagonal case, based upon the fact that for a symmetric tridiagonal matrix \(A=\left [\begin {array}{cccc}a_{1} & b_{2} & &\\ b_{2} & {\ddots } & {\ddots } & \\ & {\ddots } & {\ddots } & b_{n} \\ & & b_{n} & a_{n} \end {array}\right ]\), a necessary condition to have repeated eigenvalues is that \(b_{i}=0\) for some i. Unfortunately, in the case of a tridiagonal pencil (A, B), there is no such simple necessary condition for having repeated eigenvalues. For these reasons, the case of A and B tridiagonal is left open for future study, and our results in the present work do not include this case.
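The necessary condition for the standard tridiagonal case, and the reason it does not carry over to pencils, can be seen from a standard rank argument, sketched below. The symbols \(\beta _{i}\), denoting the off-diagonal entries of a tridiagonal B, are introduced here only for illustration.

```latex
% A symmetric tridiagonal with off-diagonal entries b_2, ..., b_n.
% Deleting the first row and the last column of A - \lambda I leaves an
% (n-1) x (n-1) upper triangular block with diagonal b_2, ..., b_n:
\det\big[(A-\lambda I)_{2:n,\;1:n-1}\big] \;=\; \prod_{i=2}^{n} b_{i} .
% Hence, if b_i \neq 0 for all i, then
\operatorname{rank}(A-\lambda I)\;\ge\; n-1 \quad \text{for all } \lambda ,
% and every eigenvalue is simple (A is symmetric, so geometric and
% algebraic multiplicities coincide).
%
% For a tridiagonal pencil, the same block of A - \lambda B has diagonal
% entries b_i - \lambda \beta_i, so that
\det\big[(A-\lambda B)_{2:n,\;1:n-1}\big] \;=\; \prod_{i=2}^{n} \big(b_{i}-\lambda \beta _{i}\big) ,
% which can vanish at an eigenvalue \lambda even if all b_i and \beta_i
% are nonzero.
```

In other words, for the pencil the rank bound can fail at particular values of λ without any off-diagonal entry of A or B being zero, which is why no condition as simple as \(b_{i}=0\) is available.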

6 Conclusions

In this work, we have considered symmetric positive definite pencils, A(x) − λB(x), where A and B are symmetric matrix valued functions in \(\mathbb {R}^{n\times n}\), smoothly depending on parameters x, and B is also positive definite. We gave general smoothness results for the one-parameter and two-parameter cases, and gave results to characterize the (generic) case of coalescing eigenvalues in the two-parameter case, the so-called conical intersections. In the one-parameter case, we derived novel differential equations for the smooth factors. We further presented, justified, and implemented new algorithms of predictor-corrector type to locate parameter values where there are conical intersections. Our prediction was based on an Euler step relative to the underlying differential equations. These algorithms were used to perform a statistical study of the number of conical intersections for pencils of several bandwidths.

Several issues still require a more ad hoc study. For example, the case of both A and B tridiagonal (e.g., see [26]) is still not resolved in a satisfactory way for the generalized eigenvalue problem, and perhaps the techniques of [19] or [16] can be adapted to the parameter dependent case examined by us. Other problems also remain to be examined, especially from the algorithmic point of view, like the case of a large number of equal eigenvalues seen in some engineering works (e.g., see [22]).

Data availability

Data sharing not applicable to this article.

Notes

This implies that the zero set of these functions is actually a \(\mathcal {C}^k\) curve (or collection of \(\mathcal {C}^k\) curves). For background on these concepts, see [11].

Transversal intersection means that the two tangents to the curves at ξ0 are not parallel to each other.

Of course, some di’s may be 0.

The reason for the number of indices being even is that Aγ(t)V(t) = Bγ(t)V(t)Λ(t), and V(t + 1) = V(t)D. Since V is continuous and invertible, its determinant is either always positive or always negative. But, since V(1) = V(0)D, we must have \(\det (D)=1\).

Eigenvalue veering is a typical phenomenon for eigenvalues of parameter dependent problems. It occurs when two curves of eigenvalues seem to be about to coalesce but suddenly “veer away” from each other.

In our experiments, we have used the value \(10^{6}\,\mathtt {eps}\approx 10^{-10}\), where \(\mathtt {eps}\) denotes the machine precision.

References

Berkolaiko, G., Parulekar, A.: Locating conical degeneracies in the spectra of parametric self-adjoint matrices. SIAM J. Matrix Anal. Appl. 42(1), 224–242 (2021)

Chern, J.L., Dieci, L.: Smoothness and periodicity of some matrix decompositions. SIAM J. Matrix Anal. Appl. 22, 772–792 (2001)

Dieci, L., Eirola, T.: On smooth decomposition of matrices. SIAM J. Matrix Anal. Appl. 20, 800–819 (1999)

Dieci, L., Papini, A.: Continuation of eigendecompositions. Futur. Gener. Comput. Syst. 19, 1125–1137 (2003)

Dieci, L., Papini, A., Pugliese, A.: Coalescing points for eigenvalues of banded matrices depending on parameters with application to banded random matrix functions. Numer. Algorithms 80, 1241–1266 (2019)

Dieci, L., Pugliese, A.: Two-parameter SVD: Coalescing singular values and periodicity. SIAM J. Matrix Anal. Appl. 31, 375–403 (2009)

Gingold, H.: A method of global blockdiagonalization for matrix-valued functions. SIAM J. Math. Anal. 9(6), 1076–1082 (1978)

Golub, G.H., Van Loan, C.F.: Matrix Computations. Johns Hopkins University Press (1996)

van Hemmen, J.L., Ando, T.: An inequality for trace ideals. Commun. Math. Phys. 76, 143–148 (1980)

Higham, N.J.: Functions of Matrices. Theory and Computation. SIAM, Philadelphia (2008)

Hirsch, M.W.: Differential Topology. Springer-Verlag, New York (1976)

Hsieh, P.F., Sibuya, Y.: A global analysis of matrices of functions of several variables. J. Math. Anal. Appl. 14, 332–340 (1966)

Jarlebring, E., Kvaal, S., Michiels, W.: Computing all pairs (λ, μ) such that λ is a double eigenvalue of A + μB. SIAM J. Matrix Anal. Appl. 32(3), 902–927 (2011)

Kalinina, E.A.: On multiple eigenvalues of a matrix dependent on a parameter. In: Computer Algebra in Scientific Computing, pp. 305–314. Springer International Publishing (2016)

Kato, T.: Perturbation Theory for Linear Operators, 2nd edn. Springer-Verlag, Berlin (1976)

Kaufman, L.: An algorithm for the banded symmetric generalized matrix eigenvalue problem. SIAM J. Matrix Anal. Appl. 14(2), 372–389 (1993)

Krantz, S.G., Parks, H.R.: A Primer of Real Analytic Functions. Birkhäuser, Basel (2002)

Lancaster, P.: On eigenvalues of matrices dependent on a parameter. Numer. Math. 6, 377–387 (1964)

Li, K., Li, T.-Y., Zeng, Z.: An algorithm for the generalized symmetric tridiagonal eigenvalue problem. Numer. Algorithms 8, 269–291 (1994)

Mehl, C., Mehrmann, V., Wojtylak, M.: Parameter-dependent rank-one perturbations of singular Hermitian or symmetric pencils. SIAM J. Matrix Anal. Appl. 38(1), 72–95 (2017)

Rellich, F.: Perturbation theory of eigenvalue problems, Courant Institute of Mathematical Sciences. New York University, New York (1954)

Srikantha Phani, A., Woodhouse, J., Fleck, N.A.: Wave propagation in two-dimensional periodic lattices. J. Acoust. Soc. Am. 119, 1995–2005 (2006)

Soize, C.: Random matrix models and nonparametric method for uncertainty quantification. In: Ghanem, R., Higdon, D., Owhadi, H. (eds.) Handbook for Uncertainty Quantification, vol. 1, pp. 219–287. Springer International Publishing, Switzerland (2017)

Tisseur, F., Meerbergen, K.: The quadratic eigenvalue problem. SIAM Rev. 43(2), 235–286 (2001)

Uhlig, F.: Coalescing eigenvalues and crossing eigencurves of 1-parameter matrix flows. SIAM J. Matrix Anal. Appl. 41(4), 1528–1545 (2020)

Wilkinson, J.H.: Algebraic eigenvalue problem. Clarendon Press. Oxford University Press, Oxford (1988)

Funding

Open access funding provided by Università degli Studi di Bari Aldo Moro within the CRUI-CARE Agreement. This work has been partially supported by the GNCS-Indam group and the PRIN2017 research grant.

Ethics declarations

Conflict of interest

The authors declare no competing interests.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Dieci, L., Papini, A. & Pugliese, A. Decompositions and coalescing eigenvalues of symmetric definite pencils depending on parameters. Numer Algor 91, 1879–1910 (2022). https://doi.org/10.1007/s11075-022-01326-7

DOI: https://doi.org/10.1007/s11075-022-01326-7