Abstract

The computation of n-point Gaussian quadrature rules for symmetric weight functions is considered in this paper. It is shown that the nodes and the weights of the Gaussian quadrature rule can be retrieved from the singular value decomposition of a bidiagonal matrix of size n/2. The proposed numerical method allows one to compute the nodes with high relative accuracy and a computational complexity of \( \mathcal {O} (n^{2}). \) We also describe an algorithm for computing the weights of a generic Gaussian quadrature rule with high relative accuracy. Numerical examples show the effectiveness of the proposed approach.


1 Introduction

The Golub and Welsch algorithm [12] is the classical way to compute the nodes λi and the weights ωi of an n-point Gaussian quadrature rule (GQR), commonly used to approximate integrals of the following kind,

\[ \int_{-\tau}^{\tau} f(x)\,\omega(x)\,dx, \]

where ω(x) ≥ 0 is a weight function on the interval [−τ,τ], with τ > 0, and f(x) is a continuous function on the same interval. The Golub and Welsch algorithm computes the nodes, also called knots, as the eigenvalues of a symmetric tridiagonal matrix of order n, called the Jacobi matrix, while the corresponding weights are easily obtained from the first components of the associated eigenvectors (see [12] for more details). The nonzero entries of the Jacobi matrix are computed from the coefficients of the three-term recurrence relation of the orthogonal polynomials associated with the weight function ω(x).

The Matlab function gauss is a straightforward implementation of the Golub and Welsch algorithm [12], included in the package “OPQ: A Matlab suite of programs for generating orthogonal polynomials and related quadrature rules” by Walter Gautschi, and it is available at https://www.cs.purdue.edu/archives/2002/wxg/codes/OPQ.html.

In [19] it is shown that, when the weight function ω(x) is symmetric with respect to the origin, the positive nodes of the n-point GQR are the eigenvalues of a symmetric tridiagonal matrix, of order \( \frac {n}{2} \) if n is even and \( \frac {n+1}{2} \) if n is odd, obtained by eliminating half of the unknowns from the original eigenvalue problem. Moreover, the Cholesky factor of the latter tridiagonal matrix of reduced order is a nonnegative lower bidiagonal matrix of dimensions \( \frac {n}{2}\times \frac {n}{2}, \) if n is even, and \( \frac {n+1}{2} \times \frac {n-1}{2}, \) if n is odd, whose entries in its main diagonal and lower subdiagonal are respectively the odd and the even elements of the first subdiagonal of the original Jacobi matrix.

In the same paper, the authors propose modifications of the Golub and Welsch algorithm for computing the nodes and weights of a symmetric GQR (SGQR), by means of a three-term recurrence relation corresponding to the orthogonal polynomials associated with the modified weight function. This resulted in faster Matlab functions than gauss.

Inspired by [19], in this paper we propose to compute the positive nodes of the n-point SGQR as the singular values of a nonnegative bidiagonal matrix. These can be computed in \( \mathcal {O} (n^{2}) \) floating point operations and with high relative accuracy by the algorithm described in [5, 6]. Moreover, the weights of the SGQR can be obtained from the first row of the right singular vector matrix. The stability of the three-term recurrence relation arising in computing the weights of a nonsymmetric n-point GQR is also analyzed and a novel numerical method for computing the weights with high relative accuracy is proposed.

A different approach for computing the nodes and weights of a GQR in \( \mathcal {O}(n)\) operations has been introduced in [10] and is implemented in the Matlab package Chebfun [15]. This method is based on approximations of the nodes and weights that appear to be relatively accurate at most nodes, although no proof of such a result is given. For a very large number of nodes, this method is currently the most efficient one [14, 25].

The paper is organized as follows. The basic notation and definitions used in the paper are listed in Section 2. In Section 3, the main features of the Golub and Welsch algorithm are described. In Section 4, the proposed algorithm for computing the nodes of an n-point SGQR as the singular values of a bidiagonal matrix is described. Different techniques for computing the weights of an n-point GQR are described in Section 5. In Section 6, we show the elementwise relative accuracy of the weights computed by our new method, and in Section 7 we give a number of numerical examples confirming our stability results. We end with a section of concluding remarks.

2 Notation and definitions

Matrices are denoted by upper-case letters X,Y,…,Λ,Θ,…; vectors with bold lower-case letters x,y,…,λ,𝜃,…; scalars with lower-case letters x,y,…,λ,𝜃,…. The element i,j of a matrix A is denoted by aij and the i th element of a vector x is denoted by xi.

Submatrices are denoted by the colon notation of Matlab: A(i : j,k : l) denotes the submatrix of A formed by the intersection of rows i to j and columns k to l, and A(i : j,:) and A(:,k : l) denote the rows of A from i to j and the columns of A from k to l, respectively. Sometimes, A(i : j,k : l) is also denoted by Ai:j,k:l and x(i : j) by xi:j.

The identity matrix of order n is denoted by In, and its i th column, i = 1,…,n, i.e., the i th vector of the canonical basis of \( {\mathbb {R}}^{n}, \) is denoted by ei. The notation ⌊y⌋ stands for the largest integer not exceeding \( y \in \mathbb {R}_{+}.\)

Given a vector \(\boldsymbol {x} \in {\mathbb {R}}^{n}, \) diag(x) is the diagonal matrix of order n with the elements of x on its main diagonal. Given a matrix \( A \in {\mathbb {R}}^{m \times n},\) diag(A) is the column vector of length \( {\min \limits } (m,n),\) whose entries are those of the main diagonal of A.

3 Computing the zeros of orthogonal polynomials

Let \(p_{\ell }(x) = k_{\ell }x^{\ell } + {\sum }_{j=0}^{\ell -1} c_{j} x^{j}, \ell =0, 1, \ldots , \) be the sequence of orthonormal polynomials with respect to a positive weight function ω(x) in the interval [−τ,τ],τ > 0, i.e.,

where kℓ is chosen to be positive. The polynomials pℓ(x) satisfy the following three-term recurrence relation [23]

where

and

Using (1), we can write the n-step recurrence relation

where

The matrix J is called the Jacobi matrix [7]. The following theorem was shown in [7, Th. 1.31].

Theorem 1

Let J = QΛQT be the spectral decomposition of J, where \({\Lambda } \in {\mathbb {R}}^{n \times n} \) is a diagonal matrix and

and \( Q \in {\mathbb {R}}^{n \times n} \) is an orthogonal matrix, i.e., QTQ = In. Then pn(λi) = 0,i = 1,…,n, and Q = V D, with

and

The eigenvalues λi are the nodes of the GQR and the corresponding weights ωi are defined as (see [23])

Hence, the weights ωi of the GQR can be determined by (4) once pℓ(λi), ℓ = 0,1,…,n − 1, i = 1,…,n, have been computed, either by means of the three-term recurrence relation (1), as done in [19], or by computing the whole eigenvector matrix Q of J in (3). Both approaches are described in Section 5, together with an analysis of their stability.

As shown in [26], the weights can also be obtained from the first row of Q as

The Golub and Welsch algorithm [12], relying on a modification of the QR-method [4], yields the nodes and the weights of the GQR by computing only the eigenvalues of the Jacobi matrix and the first row of the associated eigenvector matrix.
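As a minimal sketch of the Golub and Welsch idea (not the code of gauss or of [12]), the Python/NumPy example below assembles the Jacobi matrix from given recurrence coefficients, computes its eigendecomposition with numpy.linalg.eigh and takes the weights from the first row of the eigenvector matrix. It assumes that the first-row formula above has the standard form \( \omega_i = \mu_0 q_{1i}^2 \), where \( \mu_0 = \int_{-\tau}^{\tau}\omega(x)\,dx \), and it uses the orthonormal Legendre coefficients \( \theta_\ell = 0,\ \gamma_\ell = \ell/\sqrt{4\ell^2-1},\ \mu_0 = 2 \) as a test case. The function names golub_welsch and legendre_coeffs are ours.

```python
import numpy as np

def golub_welsch(theta, gamma, mu0):
    """Nodes and weights of an n-point GQR from the Jacobi matrix (dense sketch).

    theta: diagonal theta_1..theta_n; gamma: subdiagonal gamma_1..gamma_{n-1};
    mu0: zeroth moment of the weight function (assumed form w_i = mu0 * q_{1i}^2).
    """
    J = np.diag(theta) + np.diag(gamma, 1) + np.diag(gamma, -1)
    lam, Q = np.linalg.eigh(J)          # ascending eigenvalues, orthonormal eigenvectors
    weights = mu0 * Q[0, :] ** 2        # weights from the first row of Q
    return lam, weights

def legendre_coeffs(n):
    """Recurrence coefficients of the orthonormal Legendre polynomials on [-1, 1]."""
    k = np.arange(1, n)
    return np.zeros(n), k / np.sqrt(4.0 * k**2 - 1.0), 2.0

nodes, weights = golub_welsch(*legendre_coeffs(5))
# A 5-point rule is exact up to degree 9, so the integral of x**4 over [-1, 1] is 2/5.
approx = np.sum(weights * nodes**4)
```

Unlike the original algorithm, which only needs the first row of Q, this sketch forms all eigenvectors; it shows the structure of the method rather than its \( \mathcal{O}(n^2) \) cost.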

3.1 Computing the zeros of orthogonal polynomials for a symmetric weight function

For a weight function ω(x), symmetric with respect to the origin, the diagonal elements 𝜃ℓ,ℓ = 1,…,n, in (2) become zero since, as shown in [7, Th. 1.17],

and, thus, \( x {p}^{2}_{\ell }(x) \) is an odd function in [−τ,τ]. Therefore, the Jacobi matrix in (2) becomes

Furthermore, by (6), if λi,i = 1,…,n, is a zero of pℓ,ℓ ≥ 0, i.e., pℓ(λi) = 0, then λn−i+ 1 = −λi is also a zero of pℓ, since

As a consequence,

Therefore, for weight functions symmetric with respect to the origin, it is sufficient to compute only the positive nodes and the corresponding weights.

Without loss of generality, in the sequel we will consider the following reordering of λ,Λ and V, i.e.,

where j is the following permutation of the index set i = [1,…,n]

Hence, the first \(\lfloor \frac {n}{2}\rfloor \) entries of λ are the strictly positive eigenvalues of J in a decreasing order, the second \(\lfloor \frac {n}{2}\rfloor \) entries of λ are the negative eigenvalues of J in an increasing order, and, if n is odd, the last entry of λ is the zero eigenvalue.
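The reordering described above can be sketched as follows, assuming that the eigenvalues are first obtained in ascending order, as numpy.linalg.eigh returns them. The helper reorder_symmetric is hypothetical, and the Hermite coefficients \( \theta_\ell = 0,\ \gamma_\ell = \sqrt{\ell/2} \) serve only as an example of a weight symmetric with respect to the origin.

```python
import numpy as np

def reorder_symmetric(lam, V):
    """Reorder eigenpairs (lam ascending) as: positive eigenvalues decreasing,
    negative eigenvalues increasing, then the zero eigenvalue if n is odd."""
    n = lam.size
    m = n // 2
    pos = np.arange(n - 1, n - m - 1, -1)   # m largest eigenvalues, largest first
    neg = np.arange(m)                       # m smallest eigenvalues, most negative first
    idx = np.concatenate([pos, neg])
    if n % 2 == 1:
        idx = np.append(idx, m)              # middle eigenvalue, zero by symmetry
    return lam[idx], V[:, idx]

n = 7
k = np.arange(1, n)
J = np.diag(np.sqrt(k / 2.0), 1) + np.diag(np.sqrt(k / 2.0), -1)   # Hermite, theta = 0
lam, V = np.linalg.eigh(J)
lam_r, V_r = reorder_symmetric(lam, V)
```

With this ordering, entry \( \lfloor n/2\rfloor + i \) of the reordered vector is the negative of entry i, which reflects the symmetry \( \lambda_{n-i+1} = -\lambda_i \).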

4 Computation of the nodes and the weights of a symmetric Gaussian quadrature rule

In this section we propose an alternative method to compute the positive nodes and corresponding weights of a SGQR. We will need the following Lemma [11].

Lemma 1

Let \( A \in {\mathbb {R}}^{m \times n}, m \le n,\) and

Let A = UΣVT be the singular value decomposition of A, with \( U \in {\mathbb {R}}^{m \times m}, V \in {\mathbb {R}}^{n \times n} \) orthogonal, and

where σ1 ≥ σ2 ≥⋯ ≥ σm ≥ 0. Then

Moreover, if

then

with

and

The following Corollary holds.

Corollary 1

Let Bn be the bidiagonal matrix

with singular value decomposition

where U and V are orthogonal, \(\boldsymbol {\sigma }= \left [\begin {array}{cccc} \sigma _{1}, &\sigma _{2},& \ldots , &\sigma _{\lfloor \frac {n}{2}\rfloor } \end {array}\right ]^{T}\), and

and, if n is odd, \(V=\left [\begin {array}{c|c} V_{1} &\boldsymbol {v}_{2} \end {array}\right ], V_{1} \in {\mathbb {R}}^{\frac {n+1}{2} \times \frac {n-1}{2}}, \boldsymbol {v}_{2} \in {\mathbb {R}}^{\frac {n+1}{2}}.\) Then

and \(\hat {\Lambda } \) is the square diagonal matrix

where \(P:= I_{n}(\tilde {\boldsymbol {\jmath }},:)\) is the even-odd permutation matrix corresponding to the even-odd permutation of the index set i = [1,…,n], i.e.,

Proof

Obviously, we have

and (8) follows then straightforwardly from Lemma 1. □

Taking the even-odd structure of P into account, we have \(\textbf {e}_{1}^{T} P^{T}=\textbf {e}_{\lfloor \frac {n}{2}\rfloor +1}^{T}\) and thus the first row of Q is given by

and, hence, by (4) and (5), the weights ωi are given by

and, for n odd,

Remark 1

Transforming the problem of computing the eigenvalues of J into the problem of computing the singular values of the nonnegative bidiagonal matrix B allows us to compute the nodes of a SGQR with high relative accuracy by using the algorithm described in [5, 6] and implemented in LAPACK (subroutine dlasq1.f) [1]. Note that dlasq1.f computes the singular values of square bidiagonal matrices, whereas for the n-point SGQR with odd n the bidiagonal matrix B is rectangular of size \(\lfloor \frac {n}{2}\rfloor \times \lfloor \frac {n+1}{2}\rfloor . \) However, one step of the QR iteration with zero shift, implemented as described in [5], is sufficient to transform B, to high relative accuracy, into \( \left [\begin {array}{c|c} \hat {B} & \textbf {0} \end {array} \right ], \) with \( \hat {B}\) square bidiagonal of order \(\lfloor \frac {n}{2}\rfloor . \)

On the other hand, the componentwise stability of computing ωi, i = 1,…,n, from the first row of either Q or V is not ensured if these matrices are computed by the QR algorithm. Indeed, these orthogonal matrices are computed in a normwise backward stable manner, but not necessarily in a componentwise stable manner [27, p. 236], [16]. In Section 7, the example of Fig. 2 shows that, when the eigenvalue decomposition of J is used to compute the sequence (10), the small weights are not computed in an elementwise stable manner, even though the eigenvectors are computed in a normwise backward stable manner [16]. For the sake of completeness, we note that this behavior is not observed for other classes of orthogonal polynomials, such as the Jacobi polynomials, whose weights do not span such a large range of values; for such weights, the GQR computed by the function gauss is as accurate as the one computed by the method proposed in this paper.
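The following Python/NumPy sketch illustrates Corollary 1 and the subsequent weight formulas for the Legendre weight. Since the corresponding displays are not reproduced here, two assumptions are made. First, B is taken as the \( \lfloor n/2\rfloor \times \lceil n/2\rceil \) upper bidiagonal matrix with \( \gamma_1, \gamma_3, \ldots \) on its diagonal and \( \gamma_2, \gamma_4, \ldots \) on its superdiagonal, which is the off-diagonal block of \( P J P^T \) under the even-odd permutation. Second, the weights are taken as \( \mu_0 v_{1i}^2/2 \) for each of \( \pm\sigma_i \), and, for odd n, as \( \mu_0 \) times the squared first entry of the null vector of B for the zero node; this follows from the block eigenvectors of Lemma 1 together with \( \textbf{e}_1^T P^T = \textbf{e}_{\lfloor n/2\rfloor+1}^T \) and the assumed form \( \omega_i = \mu_0 q_{1i}^2 \). Note that numpy.linalg.svd calls a dense LAPACK SVD driver, not dlasq1, so this sketch does not deliver the high relative accuracy discussed in Remark 1. The function names are ours.

```python
import numpy as np

def legendre_bidiagonal(n):
    """Assumed layout: upper bidiagonal B of size floor(n/2) x ceil(n/2) with
    gamma_1, gamma_3, ... on the diagonal and gamma_2, gamma_4, ... above it."""
    k = np.arange(1, n)
    gamma = k / np.sqrt(4.0 * k**2 - 1.0)       # gamma[j] = gamma_{j+1}, Legendre case
    m, mc = n // 2, (n + 1) // 2
    B = np.zeros((m, mc))
    for r in range(m):
        B[r, r] = gamma[2 * r]                   # odd-indexed gamma_{2r+1}
        if r + 1 < mc:
            B[r, r + 1] = gamma[2 * r + 1]       # even-indexed gamma_{2r+2}
    return B

def sgqr_from_svd(B, mu0, n):
    """Nodes and weights of the n-point SGQR from a dense SVD of B."""
    m = n // 2
    _, s, Vh = np.linalg.svd(B)                  # s descending; rows of Vh = right singular vectors
    v1 = Vh[:, 0]                                # first components of the right singular vectors
    half = mu0 * v1[:m] ** 2 / 2
    nodes = np.concatenate([s, -s])
    weights = np.concatenate([half, half])
    if n % 2 == 1:                               # zero node: last row of Vh spans ker(B)
        nodes = np.append(nodes, 0.0)
        weights = np.append(weights, mu0 * v1[m] ** 2)
    return nodes, weights

n = 9
nodes, weights = sgqr_from_svd(legendre_bidiagonal(n), 2.0, n)
```

Only a matrix of order about n/2 is decomposed, and the weights come from the first components of the right singular vectors, as stated in the Introduction.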

5 Computation of the weights of the Gaussian quadrature rules

In this section we consider different techniques for computing the weights ωi, i = 1,…,n, of an n-point GQR for nonsymmetric weight functions, relying only on the knowledge of the corresponding nodes λi. At the end of the section we briefly describe how these techniques can be adapted to symmetric weight functions. As pointed out in Section 3, the nodes of the n-point Gaussian rule associated with a weight function ω(x) are the zeros λi, i = 1,…,n, of the orthogonal polynomial pn(x) of degree n, and the corresponding weights are

The following methods have been proposed in the literature for computing the sequence

1. the eigenvalue decomposition [11];
2. the forward three-term recurrence relation (FTTR) [8];
3. the backward three-term recurrence relation (BTTR) [20, 24].

The computation of the weights by means of the FTTR was considered in [19] without providing any stability analysis.

Given \(\tilde {p}_{0}(\bar {\lambda })\) and a zero \( \bar {\lambda }\) of pn(λ), we denote by \(\tilde {p}_{1}(\bar {\lambda }), \) \( \tilde {p}_{2}(\bar {\lambda }), \) \( \ldots , \tilde {p}_{n-1}(\bar {\lambda }),\) the sequence computed by means of (1) in a forward manner, i.e., by FTTR. Analogously, since \({p}_{n}(\bar {\lambda })=0,\) we can set an arbitrary value for \(\hat {p}_{n-1}(\bar {\lambda }), \) and denote by \(\hat {p}_{n-2}(\bar {\lambda }), \hat {p}_{n-3}(\bar {\lambda }), {\ldots } , \hat {p}_{1}(\bar {\lambda }), \hat {p}_{0}(\bar {\lambda }), \) the sequence computed using (1) in a backward fashion, i.e., by BTTR. The latter procedure is often referred to in the literature as Miller’s backward recurrence algorithm [3, 8, 20]. It was originally proposed by J.C.P. Miller for the computation of tables of the modified Bessel function [3, page xvii].
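A minimal sketch of FTTR and BTTR follows. It assumes that (1) has the standard orthonormal form \( x\,p_\ell(x) = \gamma_\ell p_{\ell-1}(x) + \theta_{\ell+1} p_\ell(x) + \gamma_{\ell+1} p_{\ell+1}(x) \) with \( p_{-1} \equiv 0 \) and \( \tilde p_0 = 1/\sqrt{\mu_0} \), and that the weight is \( 1/\sum_\ell p_\ell^2(\bar\lambda) \), the form used in Section 6. The backward sequence starts from \( p_n = 0 \) and \( \hat p_{n-1} = 1 \) and is rescaled at the end so that its first entry equals \( 1/\sqrt{\mu_0} \). The function names and the 0-based layout (theta[l] stores \( \theta_{l+1} \), gamma[l] stores \( \gamma_{l+1} \)) are our own; the Legendre case below is only a smoke test and does not reproduce the instabilities discussed next.

```python
import numpy as np

def fttr(lam, theta, gamma, mu0):
    """Forward recurrence: p_0(lam), ..., p_{n-1}(lam), with p_0 = 1/sqrt(mu0).

    Assumed form of (1): lam*p_l = gamma_l*p_{l-1} + theta_{l+1}*p_l + gamma_{l+1}*p_{l+1}.
    """
    n = theta.size
    p = np.zeros(n)
    p[0] = 1.0 / np.sqrt(mu0)
    for l in range(n - 1):
        back = gamma[l - 1] * p[l - 1] if l > 0 else 0.0
        p[l + 1] = ((lam - theta[l]) * p[l] - back) / gamma[l]
    return p

def bttr(lam, theta, gamma, mu0):
    """Backward (Miller) recurrence from p_n = 0 and an arbitrary p_{n-1},
    rescaled afterwards so that p_0 = 1/sqrt(mu0)."""
    n = theta.size
    p = np.zeros(n)
    p[n - 1] = 1.0                                        # arbitrary starting value
    for l in range(n - 1, 0, -1):
        ahead = gamma[l] * p[l + 1] if l < n - 1 else 0.0  # gamma_n * p_n = 0
        p[l - 1] = ((lam - theta[l]) * p[l] - ahead) / gamma[l - 1]
    return p * (1.0 / np.sqrt(mu0)) / p[0]

def christoffel_weight(p):
    """Weight 1 / sum_l p_l(lam)^2 for a sequence normalized with p_0 = 1/sqrt(mu0)."""
    return 1.0 / np.dot(p, p)

# Smoke test on the Legendre weight (theta = 0, gamma_l = l/sqrt(4l^2-1), mu0 = 2).
n = 8
k = np.arange(1, n)
theta, gamma, mu0 = np.zeros(n), k / np.sqrt(4.0 * k**2 - 1.0), 2.0
nodes, w_ref = np.polynomial.legendre.leggauss(n)
w_fwd = np.array([christoffel_weight(fttr(x, theta, gamma, mu0)) for x in nodes])
w_bwd = np.array([christoffel_weight(bttr(x, theta, gamma, mu0)) for x in nodes])
```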

The stability of the generic FTTR and BTTR, i.e., of sequences generated by three-term recurrence relations not linked to orthogonal polynomials, was analyzed in [8, 20, 24], and these papers favor BTTR for stability reasons.

For the weight functions depending on the parameters α and β listed in Table 1, we carried out extensive tests with many different values of α and β, computing the sequences pj(λi), j = 0,1,…,n − 1, by means of FTTR and BTTR. FTTR is accurate for some classes of weights, whereas BTTR is accurate for other classes.

Table 1 Results obtained by FTTR and BTTR when computing the sequence of orthogonal polynomials associated with the weight ω, for many different values of α and β

The results of these experiments are displayed in Table 1, in which “Ok” indicates that the method computes the sequence accurately for all the considered values of α and β, and a failure mark indicates that the method fails to compute the sequence accurately for some values of α and β. In Fig. 1 (left) we show, on a logarithmic scale, the results obtained for the Hermite polynomials of degree j, j = 0,1,…,127, evaluated at the largest zero of the Hermite polynomial of degree 128, computed with FTTR in extended precision (128 digits), with FTTR in double precision and with BTTR in double precision. While the Hermite polynomials are accurately computed with FTTR, the values obtained by BTTR diverge from the actual values after some steps. In this case we report an “Ok” in column FTTR and a failure mark in column BTTR in Table 1. In Fig. 1 (right) we show the results for the Hahn polynomials of degree j = 0,1,2,…,127, evaluated at the fourth smallest zero of the Hahn polynomial of degree 128, computed in double precision with FTTR and BTTR, for α = β = −0.5. In this case, FTTR does not compute the sequence of Hahn polynomials accurately, while BTTR does. We thus report a failure mark in column FTTR and an “Ok” in column BTTR in Table 1.

Fig. 1 Left: plot of the absolute values of the Hermite polynomials of degree j, j = 0,1,…,127, evaluated at the largest zero of the Hermite polynomial of degree 128, and computed by FTTR in double precision, by BTTR in double precision and by gauss with extended precision (128 digits) (denoted by “∘”). Right: plot of the absolute values of the Hahn polynomials of degree j, j = 0,1,…,127, evaluated at the fourth smallest zero of the Hahn polynomial of degree 128, and computed by FTTR in double precision, by BTTR in double precision and by gauss with extended precision (128 digits) (denoted by “∘”)

The Hahn polynomials in Table 1 are discrete orthogonal polynomials on the discrete set of n points {0,1,2,...,n − 1} with respect to the weight \(\binom {\alpha +k}{k} \binom { \beta +n-1-k}{ n-1-k}, \alpha , \beta >-1, k=0,1,2,...,n-1.\)

Since the experiments do not indicate which of FTTR and BTTR should be chosen in general, we describe an algorithm, called LMV, that combines the FTTR and BTTR methods in order to compute the sequence (10) with high relative accuracy.

Let \(\bar {\lambda }\) be a zero of pn(x) and let us denote by \(\tilde {\textbf {p}}\) and by \(\hat {\textbf {p}}\) the following vectors, computed by FTTR and BTTR, respectively:

The following theorem emphasizes the relationship between the sequence \( \tilde {p}_{j}(\bar {\lambda }), \) j = 1,…,n − 1, computed by (1) and the columns of the \(\tilde Q\) factor of the QR factorization of \(J - \bar {\lambda }I_{n}. \)

Theorem 2

Let \( \bar {\lambda } \) be a zero of pn(x), and let \( \tilde G_{1}, \tilde G_{2}, \ldots , \tilde G_{n-1} \) be the sequence of Givens rotations

such that

with \( R \in {\mathbb {R}}^{n\times n}\) upper triangular. Let \( \tilde {p}_{0}(\bar {\lambda }), \tilde {p}_{1}(\bar {\lambda }), \ldots , \tilde {p}_{n-2}(\bar {\lambda }), \tilde {p}_{n-1}(\bar {\lambda }) \) be the sequence of orthogonal polynomials evaluated in \( \bar {\lambda }\) by means of the three-term recurrence relation (1). Then

with \(\tilde {\nu }_{i} \in {\mathbb {R}}, \tilde {\nu }_{i} \ne 0.\)

Proof

We prove (12) by induction. Let \( \tilde {p}_{0}(\bar {\lambda })= 1/\sqrt {\mu _{0}}. \) Then, by (1),

On the other hand,

Therefore,

with

Let us suppose that (12) holds for i, 1 ≤ i < n and let us prove (12) for i + 1.

Observe that

with \(\upsilon _{i}= -\tilde s_{i} \tilde c_{i-1}\gamma _{i} +\tilde c_{i} (\theta _{i+1} - \bar {\lambda }).\) Moreover, by the induction hypothesis (12),

By (1) and the induction hypothesis (12),

On the other hand, from (13),

with \(\xi _{i+1}=\sqrt { (-\tilde s_{i} \tilde c_{i-1}\gamma _{i} + \tilde c_{i} (\theta _{i+1} - \bar {\lambda }))^{2}+\gamma _{i+1}^{2}}.\) Hence,

Therefore,

with \( \tilde {\nu }_{i+1}=-\tilde s_{i+1} \tilde {\nu }_{i}. \)□

Remark 2

The matrix \(\tilde Q=\tilde {G_{1}^{T}} {\cdots } \tilde G_{n-2}^{T} \tilde G_{n-1}^{T} \) is the orthogonal upper Hessenberg matrix

since \(J - \bar {\lambda }I_{n} \) is a tridiagonal matrix. Therefore, by Theorem 2, the subvector formed by the first i entries of \(\textbf {p} (\bar {\lambda })\) is parallel to the subvector formed by the first i entries of column j of \(\tilde Q,\) for every j ≥ i.
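Remark 2 can be checked numerically for the last column. The sketch below computes a QR factorization of \( J - \bar\lambda I_n \) with numpy.linalg.qr, which uses Householder reflections rather than the Givens rotations of Theorem 2, and measures the angle between its last column and the FTTR vector. Because the first n − 1 columns of the unreduced tridiagonal matrix \( J - \bar\lambda I_n \) are linearly independent, the Q factor is unique up to the signs of its columns, so the two factors agree up to signs. The recurrence (1) is assumed to have the standard orthonormal form \( x\,p_\ell = \gamma_\ell p_{\ell-1} + \theta_{\ell+1} p_\ell + \gamma_{\ell+1} p_{\ell+1} \); the function name is hypothetical.

```python
import numpy as np

def qr_last_column_check(theta, gamma, mu0, lam):
    """Return (|cos| of the angle between the last column of Q and p, Q), where
    J - lam*I = QR and p is the FTTR vector at lam. Remark 2 predicts |cos| = 1."""
    n = theta.size
    J = np.diag(theta) + np.diag(gamma, 1) + np.diag(gamma, -1)
    Q, _ = np.linalg.qr(J - lam * np.eye(n))     # Householder QR, Q agrees up to column signs
    p = np.zeros(n)
    p[0] = 1.0 / np.sqrt(mu0)
    for l in range(n - 1):                       # FTTR under the assumed form of (1)
        back = gamma[l - 1] * p[l - 1] if l > 0 else 0.0
        p[l + 1] = ((lam - theta[l]) * p[l] - back) / gamma[l]
    cos = abs(Q[:, -1] @ p) / np.linalg.norm(p)  # Q[:, -1] has unit norm
    return cos, Q

n = 8
k = np.arange(1, n)
theta, gamma = np.zeros(n), k / np.sqrt(4.0 * k**2 - 1.0)    # Legendre coefficients
lam_bar = np.polynomial.legendre.leggauss(n)[0][-1]           # largest zero of p_n
cos, Q = qr_last_column_check(theta, gamma, 2.0, lam_bar)
```

The returned Q can also be inspected to confirm that it is upper Hessenberg.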

Theorem 3 shows the relationship between the sequence \( \{ \hat {p}_{j}(\bar {\lambda })\}_{j=0}^{n-1}\) and the columns of the factor \( \hat {Q}\) of the QL factorization of \( J - \bar {\lambda } I_{n}. \) For the sake of brevity, we omit the proof since it is very similar to that of Theorem 2.

Theorem 3

Let \( \bar {\lambda } \) be a zero of pn(x), and let \( \hat {G}_{1}, \hat {G}_{2}, \ldots , \hat {G}_{n-1} \) be the sequence of Givens rotations

such that

with \( L \in {\mathbb {R}}^{n\times n}\) lower triangular. Let \( \hat {p}_{n-1}(\bar {\lambda }), \hat {p}_{n-2}(\bar {\lambda }), {\ldots } , \hat {p}_{1}(\bar {\lambda }), \hat {p}_{0}(\bar {\lambda }), \) be the sequence evaluated in \( \bar {\lambda }\) by the three-term recurrence relation (1) in a backward fashion, with \(\hat {p}_{n-1}(\bar {\lambda }) \) fixed. Then

with \(\hat {\nu }_{i} \in {\mathbb {R}}, \hat {\nu }_{i} \ne 0.\)

Theorem 2 shows that the vector \( \tilde {\textbf {p}}, \) computed using FTTR, can also be obtained either by applying one step of the implicit QR algorithm with shift \( \bar {\lambda } \) to J or by computing the QR factorization of \( J - \bar {\lambda } I_{n}, \) since the orthogonal matrices generated by both methods are the same. The last column of these orthogonal matrices is parallel to \( \tilde {\textbf {p}}. \) Therefore, forward instability can occur in the computation of \( \tilde {\textbf {p}} \) if premature convergence occurs in one step of the forward implicit QR (FIQR) method with shift \( \bar {\lambda } \) applied to J [17, 21].

On the other hand, Theorem 3 shows that the vector \( \hat {\textbf {p}}, \) computed by BTTR, can also be obtained either by applying one step of the backward implicit QL algorithm with shift \( \bar {\lambda } \) to J or by computing the QL factorization of \( J - \bar {\lambda } I_{n}. \) The first column of these orthogonal matrices is parallel to \( \hat {\textbf {p}}. \) Therefore, forward instability can occur in the computation of \( \hat {\textbf {p}} \) if premature convergence occurs in one step of the backward implicit QL (BIQL) method with shift \( \bar {\lambda } \) applied to J [17, 21].

The premature convergence of the implicit QR method with shift \(\bar {\lambda } \) depends on the distance between the eigenvalues of the consecutive matrices J1:i,1:i and J1:i+ 1,1:i+ 1,i = 1,2,…,n − 1. Hence, if \( \lambda _{j}^{(i)}, j=1,\ldots ,i,\) and \( \lambda _{j}^{(i+1)}, j=1,\ldots ,i+1,\) are the eigenvalues of J1:i,1:i and J1:i+ 1,1:i+ 1, respectively, then, by the Cauchy interlacing Theorem [22],

If \( \mid \lambda _{j}^{(i+1)}- \lambda _{j}^{(i)}\mid \) and \(\mid \lambda _{j}^{(i)}- \lambda _{j+1}^{(i+1)}\mid \), j = 1,…,i, i = 1,…,n − 1, are sufficiently large, then premature convergence does not occur in one step of the forward implicit QR algorithm with shift \( \bar {\lambda } \) and, hence, FTTR computes the sequence (10) accurately. This explains why FTTR computes the sequence (10) accurately for the Chebyshev polynomials Tj(x) of the first kind, i.e., the Jacobi polynomials associated with the weight \(\frac {1}{\sqrt {1-x^{2}}} \) on the interval [− 1,1], since the distance between the zeros of two consecutive polynomials Tj(x) and Tj+1(x) is at least of order \( \mathcal {O} \left (\frac {1}{j^{2}}\right ).\)
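The interlacing distances used above can be inspected with the following sketch for the Chebyshev weight of the first kind, whose orthonormal recurrence coefficients are \( \theta_\ell = 0 \), \( \gamma_1 = 1/\sqrt{2} \) and \( \gamma_\ell = 1/2 \) for \( \ell \ge 2 \). It computes the eigenvalues of consecutive leading principal submatrices, checks strict interlacing and reports the smallest distance between interlaced eigenvalues. The function names are ours, and the check only reports the distances for one value of n; it does not establish any asymptotic rate.

```python
import numpy as np

def chebyshev_t_jacobi(n):
    """Jacobi matrix (n >= 2) of the orthonormal Chebyshev polynomials of the first kind."""
    gamma = np.full(n - 1, 0.5)
    gamma[0] = 1.0 / np.sqrt(2.0)
    return np.diag(gamma, 1) + np.diag(gamma, -1)   # theta = 0 on the diagonal

def interlacing_report(J):
    """Check strict interlacing between the eigenvalues of J[:i, :i] and J[:i+1, :i+1],
    i = 1, ..., n-1, and return (all_strict, smallest interlacing distance)."""
    n = J.shape[0]
    all_strict, gap = True, np.inf
    for i in range(1, n):
        a = np.linalg.eigvalsh(J[:i, :i])            # i eigenvalues, ascending
        b = np.linalg.eigvalsh(J[:i + 1, :i + 1])    # i + 1 eigenvalues, ascending
        lower, upper = a - b[:-1], b[1:] - a         # distances to interlacing neighbours
        all_strict = all_strict and bool(np.all(lower > 0) and np.all(upper > 0))
        gap = min(gap, lower.min(), upper.min())
    return all_strict, gap

n = 12
J = chebyshev_t_jacobi(n)
strict, gap = interlacing_report(J)
```

The eigenvalues of J are the zeros \( \cos\frac{(2k-1)\pi}{2n} \) of \( T_n \), which gives an independent check of the coefficients.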

In [17, 18], an algorithm combining one step of FIQR and one step of BIQL with shift \(\bar {\lambda } \) is described in order to compute the corresponding eigenvector. More precisely, the eigenvector associated with \(\bar {\lambda } \) is computed by combining the first \( \bar {\jmath }-1\) Givens rotations of FIQR with shift \(\bar {\lambda } \) and the first \(n-\bar {\jmath } \) rotations of BIQL with shift \(\bar {\lambda }.\) It is proven there that each eigenvector is computed accurately with \(\mathcal {O} (n) \) floating point operations. Once the eigenvector is computed, the weights are obtained by applying (9).

Following [17, 18], we now describe a recursive procedure to determine an interval in which the index \(\bar {\jmath } \) lies. Let us consider the sequence of Givens rotations \( \tilde G_{i} \in {\mathbb {R}}^{n \times n}, i=1,\ldots ,n-1, \) defined in (11). It turns out that

Then we compute the sequence of normalized vectors \( \tilde {\textbf {v}}_{i} \in {\mathbb {R}}^{i}, i=1,\ldots ,n, \) in the following way,

and the Rayleigh quotients \(\tilde {\lambda }^{(i)}=\tilde {\textbf {v}}_{i}^{T} J_{1:i,1:i} \tilde {\textbf {v}}_{i}, \) for i = 2,…,n, as follows,

with \( \tilde c_{0}=1, \tilde {\lambda }^{(1)}=\theta _{1}.\)

If premature convergence occurs at step \(\tilde {\jmath }\) of FIQR with shift \(\bar {\lambda }, \) then \( \tilde {\textbf {v}}_{\tilde {\jmath }} \) is the eigenvector of \(J_{1:\tilde {\jmath },1:\tilde {\jmath }}\) associated with the eigenvalue \(\bar {\lambda } \approx \tilde {\lambda }^{(\tilde {\jmath })}=\tilde {\textbf {v}}_{\tilde {\jmath }}^{T} J_{1:\tilde {\jmath },1:\tilde {\jmath }} \tilde {\textbf {v}}_{\tilde {\jmath }}.\) It then follows that \(\bar {\jmath } \le \tilde {\jmath } \) (see [17, 18]).

Similarly, let \(\hat {G}_{i} \in {\mathbb {R}}^{n \times n}, i=1,\ldots , n-1, \) be the sequence of Givens rotations defined in Theorem 3. Then

We construct the sequence of vectors \( \hat {\textbf {v}}_{i} \in {\mathbb {R}}^{i}, i=1,\ldots , n, \) as follows,

where \(\hat {\textbf {w}}_{i} =\hat {G}_{1} \hat {G}_{2} {\cdots } \hat {G}_{i-1} \textbf {e}_{n-i+1},\) and \(\hat {\textbf {v}}_{1}\equiv 1, \) and the Rayleigh quotients \(\hat {\lambda }^{(i)}=\hat {\textbf {v}}_{i}^{T} J_{n-i+1:n,n-i+1:n} \hat {\textbf {v}}_{i}, \) as,

with \( \hat {\lambda }^{(1)}=\theta _{n} \) and \( \hat {c}_{0}=1. \)

It turns out that if premature convergence occurs at step \( \hat {\jmath }, 1\le \hat {\jmath } \le n-1, \) of BIQL with shift \( \bar {\lambda },\) then \( \hat {\textbf {v}}_{\hat {\jmath }} \) is the eigenvector of \( J_{n-\hat {\jmath }+1:n,n-\hat {\jmath }+1:n} \) associated with the eigenvalue \(\bar {\lambda } \approx \hat {\lambda }^{(\hat {\jmath })}=\hat {\textbf {v}}_{\hat {\jmath }}^{T} J_{n-\hat {\jmath }+1:n,n-\hat {\jmath }+1:n} \hat {\textbf {v}}_{\hat {\jmath }}.\)

Since J is an irreducible tridiagonal matrix, it was shown in [17, 18, 21] that if premature convergence occurs at step \(\tilde {\jmath }\) of FIQR with shift \( \bar {\lambda }\), with \( 1 \le \tilde {\jmath } \le n-1,\) then premature convergence can only occur at a step \( \hat {\jmath }\) of BIQL with shift \( \bar {\lambda }\) with \(1 \le \hat {\jmath }\le \tilde {\jmath }. \)

This suggests Algorithm 1, written in Matlab style, to compute the interval \( [\hat {\jmath }, \tilde {\jmath }]\) in which \( \bar {\jmath } \) lies.

Once the interval \( [\hat {\jmath }, \tilde {\jmath }] \) is determined, the index \( \bar {\jmath } \) is chosen as the index with the maximum element in absolute value in the subvector \( \tilde {\textbf {p}}_{\hat {\jmath }: \tilde {\jmath }} \) [17, 18].
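The way the forward and backward sequences are combined can be sketched as follows, using the gluing rule made explicit in Section 6: the forward entries are kept up to the splitting index, the backward entries are scaled by \( \tilde p_{\bar\jmath}/\hat p_{\bar\jmath} \) from there on, and the normalization \( p_0 = 1/\sqrt{\mu_0} \) is inherited from the forward part. This is a simplification, not the LMV algorithm itself: Algorithm 1 is not reproduced, so the search interval is passed through the hypothetical arguments lo and hi (defaulting to the whole vector), and (1) is assumed to have the standard orthonormal form \( x\,p_\ell = \gamma_\ell p_{\ell-1} + \theta_{\ell+1} p_\ell + \gamma_{\ell+1} p_{\ell+1} \). All function names are ours.

```python
import numpy as np

def forward_seq(lam, theta, gamma, mu0):
    # FTTR with p_0 = 1/sqrt(mu0); theta[l] = theta_{l+1}, gamma[l] = gamma_{l+1}
    n = theta.size
    p = np.zeros(n)
    p[0] = 1.0 / np.sqrt(mu0)
    for l in range(n - 1):
        back = gamma[l - 1] * p[l - 1] if l > 0 else 0.0
        p[l + 1] = ((lam - theta[l]) * p[l] - back) / gamma[l]
    return p

def backward_seq(lam, theta, gamma):
    # BTTR from p_n = 0 and the arbitrary start p_{n-1} = 1 (left unnormalized)
    n = theta.size
    p = np.zeros(n)
    p[n - 1] = 1.0
    for l in range(n - 1, 0, -1):
        ahead = gamma[l] * p[l + 1] if l < n - 1 else 0.0
        p[l - 1] = ((lam - theta[l]) * p[l] - ahead) / gamma[l - 1]
    return p

def glued_sequence(p_fwd, p_bwd, lo=0, hi=None):
    """Keep p_fwd up to jbar and the rescaled p_bwd from jbar on.

    lo, hi: hypothetical bounds standing in for the interval of Algorithm 1;
    jbar is the index of the largest |p_fwd| entry inside [lo, hi].
    """
    hi = p_fwd.size - 1 if hi is None else hi
    jbar = lo + int(np.argmax(np.abs(p_fwd[lo:hi + 1])))
    p = p_fwd.copy()
    p[jbar:] = p_bwd[jbar:] * (p_fwd[jbar] / p_bwd[jbar])   # beta = p_fwd[jbar] / p_bwd[jbar]
    return p, jbar

n = 20
k = np.arange(1, n)
theta, gamma, mu0 = np.zeros(n), k / np.sqrt(4.0 * k**2 - 1.0), 2.0   # Legendre
nodes, w_ref = np.polynomial.legendre.leggauss(n)
w_glued = []
for x in nodes:
    p, jbar = glued_sequence(forward_seq(x, theta, gamma, mu0), backward_seq(x, theta, gamma))
    w_glued.append(1.0 / np.dot(p, p))
w_glued = np.array(w_glued)
```

Choosing the splitting index at a large entry keeps the division by \( \hat p_{\bar\jmath} \) well away from zero.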

Remark 3

Given \(\bar {\lambda } \), a similar recursion can be used to detect whether premature convergence occurs when computing the corresponding singular value of the bidiagonal matrix B.

Remark 4

We have described in Section 3 that the sequence pℓ(λi), ℓ = 0,1,…,n − 1, can be retrieved from the eigenvector of J associated with λi. Since the eigenvalue decomposition of J can be obtained from the singular value decomposition of B, these sequences can be retrieved in a similar way from the left and right singular vectors associated with the singular value λi of the corresponding bidiagonal matrix. For the sake of brevity, we omit the details.

6 Stability of the eigenvectors and weights

In this section we analyze the sensitivity of the calculation of the eigenvector of the tridiagonal matrix J and the sensitivity of the corresponding weight of the GQR, for a particular eigenvalue λj. Let us define the shifted matrix as T := J − λjIn, where T is tridiagonal and unreduced, let p be the corresponding vector of orthogonal polynomials evaluated at λj, i.e., Tp = 0, and let ω be the corresponding weight, i.e., ω := 1/(pTp). Let us now consider any nonzero element pi≠ 0 of p. We then partition the rows of the matrix T as follows:

implying that T1:0,: and Tn+1:n,: are empty matrices. Then the equation \(\textbf {p}^{T} T = 0\), together with the fact that pi ≠ 0, implies that the row vector ti lies in the row space of the remaining rows of T. If we then construct the matrix

then the two systems of equations Tp = 0 and T(i)p = 0 have the same one-dimensional set of solutions, i.e., their kernels are the same:

We now compare their sensitivities with respect to perturbations of the data. Let us denote the normalized vector p/∥p∥2 by q, which is thus the normalized eigenvector of J. It follows that qi≠ 0 and

This then yields the following theorem.

Theorem 4

Let

then for any qi≠ 0, we have

and for any \(\mid q_{i}\mid =\| \textbf {q} \|_{\infty }\), or, equivalently, \(\mid p_{i}\mid =\| \textbf {p} \|_{\infty }\), we have

Proof

The Cauchy inequalities for singular values yield σn−1(T(i)) ≤ σn−1(T), since T(i) is obtained by deleting one row of T. Let \(Q \in \mathbb {R}^{n\times (n-1)}\) be a matrix whose orthonormal columns span the orthogonal complement of q, i.e., \(Q^{T}Q = I_{n-1}\) and \(Q^{T}\textbf {q} = 0\). Then

Let \(\textbf {v}\in {\mathbb {R}}^{n-1}\) be such that ∥v∥2 = 1, and \(\|MTQ\textbf {v}\|_{2}=\sigma _{\min \limits }(MTQ)=\sigma _{\min \limits }(T_{(i)}Q)\). We then have \(\|MTQ\textbf {v}\|_{2}\ge \sigma _{\min \limits }(M) \|TQv\|_{2} \ge \sigma _{\min \limits }(M)\sigma _{\min \limits }(TQ)\), which implies

Moreover,

and it has been shown in [2, Thm 3.6] that

Putting this together yields (16). The inequalities (17) for \(\mid ~q_{i}\mid =\| \textbf {q} \|_{\infty }\) then follow from \(\mid q_{i}\mid \ge 1/\sqrt {n}\). □
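The facts used in the proof of Theorem 4 can be checked numerically. The sketch below takes T = J − λjIn for a Hermite Jacobi matrix (an arbitrary example), deletes the row i at which |qi| is maximal, and returns the alignment between the kernel of T(i) and q, the two singular values \( \sigma_{n-1}(T_{(i)}) \) and \( \sigma_{n-1}(T) \) compared by the Cauchy inequality, and \( |q_i|\sqrt{n} \), which must be at least 1. The function name is ours.

```python
import numpy as np

def row_deletion_checks(J, j):
    """For T = J - lam_j I, delete the row i where |q_i| is maximal and return
    (|cos| between ker T_(i) and q, sigma_{n-1}(T_(i)), sigma_{n-1}(T), |q_i|*sqrt(n))."""
    n = J.shape[0]
    lam, Q = np.linalg.eigh(J)
    q = Q[:, j]                                   # normalized eigenvector for lam_j
    T = J - lam[j] * np.eye(n)
    i = int(np.argmax(np.abs(q)))
    T_i = np.delete(T, i, axis=0)                 # (n-1) x n matrix T_(i)
    _, s_i, Vh = np.linalg.svd(T_i)               # full Vh is n x n
    kernel_cos = abs(Vh[-1] @ q)                  # last row of Vh spans ker T_(i)
    s_T = np.linalg.svd(T, compute_uv=False)      # descending; s_T[-1] is about 0
    return kernel_cos, s_i[-1], s_T[-2], abs(q[i]) * np.sqrt(n)

n = 10
k = np.arange(1, n)
J = np.diag(np.sqrt(k / 2.0), 1) + np.diag(np.sqrt(k / 2.0), -1)   # Hermite Jacobi matrix
results = [row_deletion_checks(J, j) for j in range(n)]
```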

Let us denote by pfl the approximation of the vector p computed by LMV. We now look at how pfl is constructed from the matrix equation T(i)p = 0 and show that this construction is backward stable, in the sense that there exists a perturbation Δ such that the computed vector pfl exactly satisfies the equation

where 𝜖M is the machine precision of the computer used. Once the index i has been chosen, the LMV method computes the vector shared by the kernels of T1:i−1,: and Ti+1:n,:. Basis vectors for these kernels are, respectively, given by

where \(\tilde {\textbf {x}}\) and \(\hat {\textbf {x}}\) are arbitrary. In order to construct a common vector in the two kernels, we impose \(\beta = \alpha \tilde p_{i}/\hat {p}_{i} \), and then choose α such that the common vector corresponds to the initialization \(\tilde p_{0}(\lambda _{j})=1/\sqrt {\mu _{0}}\). The subvectors

are computed by a forward and a backward recurrence using the three-term recurrence of the tridiagonal matrix T. Once the starting values of these recurrences are fixed, each of them can be interpreted as a back substitution for a triangular system of equations. This was analyzed in depth in [16, Ch. 8], from which it follows that the computed vectors exactly satisfy

where ζn := nu/(1 − nu) and \(\tilde {\Delta }\) and \(\hat {\Delta }\) satisfy the elementwise bounds

Moreover, these bounds are independent of the scaling factors α and β. Putting this together shows that the proposed method constructs a computed vector \(\textbf{p}_{fl}\) that exactly satisfies the perturbed system of equations

This finally leads to the following theorem.

Theorem 5

The computed quantities \(\textbf{p}_{fl}\) and \(\omega_{fl}:=fl(1/\textbf{p}_{fl}^{T}\textbf{p}_{fl})\), obtained by the LMV algorithm with \(|p_{i}|=\|\textbf{p}\|_{\infty}\), satisfy the bounds

and

which implies normwise forward stability for p and forward stability for the corresponding weight ω.

Proof

It follows from the compatible equations \(T_{(i)}\textbf{p} = 0\) and \((T_{(i)} + \Delta)\textbf{p}_{fl} = 0\) that

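Since Δ is tridiagonal and elementwise bounded by \(\zeta_{2}\|T\|_{2}\), each of its rows and columns contains at most three nonzero entries. Hence \(\|\Delta\|_{2}\le\sqrt{\|\Delta\|_{1}\|\Delta\|_{\infty}}\le 3\zeta_{2}\|T\|_{2}\). Using this bound together with (17) then yields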

To bound the relative error in ω, we use the relative perturbation theory of norms and inverses developed in [16, Ch. 3]:

which shows that both functions are forward stable in a relative sense. Combining this with the bound (20) yields a similar bound for ω. □

We point out that the stability result for the eigenvector is similar to what one obtains for the eigendecomposition of the matrix J. In both cases the error bound is inversely proportional to the smallest nonzero singular value of T, i.e., to the smallest gap between \(\lambda_{j}\) and the remaining eigenvalues. The sensitivity of each weight contains the same inverse factor. Apart from this factor, however, the forward error of each weight is small in a relative sense. This strong property is not shared by the eigenvalue method.
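The gluing step described above can be sketched as follows. This is a simplified NumPy illustration and not the authors' LMV code. Our assumptions are:

- the function name `glued_weight` and the 0-based indexing;
- the convention that `b[k]` couples entries k and k+1;
- the index i is passed in explicitly, rather than located as in [17].

The forward loop solves rows 0, …, i−1 of (J − λI)p = 0 and the backward loop solves rows i+1, …, n−1. Row i is skipped, which amounts to working with T_(i). The backward part is rescaled so that both pieces agree at entry i, which plays the role of β = α p̃_i/p̂_i. The whole vector is then scaled so that its first entry is 1/√μ0, and the weight is taken as 1/(pᵀp).

```python
import numpy as np

def glued_weight(a, b, lam, i, mu0):
    """Weight at node lam from a vector in ker(T_(i)), glued at index i.

    a, b : diagonal (length n) and positive subdiagonal (length n-1) of J,
           with b[k] = J[k, k+1]
    lam  : eigenvalue (node) of J
    i    : 0-based gluing index; the analysis above takes |p_i| = ||p||_inf
    mu0  : zeroth moment of the weight function
    """
    n = len(a)
    # Forward recurrence on rows 0..i-1, started from x[0] = 1.
    x = np.empty(i + 1)
    x[0] = 1.0
    for k in range(i):
        prev = b[k - 1] * x[k - 1] if k > 0 else 0.0
        x[k + 1] = ((lam - a[k]) * x[k] - prev) / b[k]
    # Backward recurrence on rows n-1..i+1, started from y[n-1] = 1.
    y = np.empty(n)
    y[n - 1] = 1.0
    for k in range(n - 1, i, -1):
        nxt = b[k] * y[k + 1] if k < n - 1 else 0.0
        y[k - 1] = ((lam - a[k]) * y[k] - nxt) / b[k - 1]
    # Glue: rescale the backward part so that both pieces agree at entry i.
    p = np.concatenate([x[:i], y[i:] * (x[i] / y[i])])
    # Scale so that p[0] = 1/sqrt(mu0); the weight is then 1/(p^T p).
    p *= 1.0 / (np.sqrt(mu0) * p[0])
    return 1.0 / (p @ p), p
```

As a small check, consider the Chebyshev weight of the first kind, with a = 0, b_1 = 1/√2, b_k = 1/2 for k ≥ 2 and μ0 = π. For n = 8, every choice of i returns ω ≈ π/n at the nodes cos((2j−1)π/(2n)). For larger or less benign problems, the choice of i at the largest entry is what the stability analysis above relies on.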

7 Numerical examples

In this section we compare the nodes and weights of n-point GQRs computed by the proposed method, called LMV, with those computed by gauss, the Matlab function available in [9], and by the methods proposed in [19]. All experiments were performed in Matlab R2020b. In [19], the authors show that the positive nodes and the corresponding weights of a GQR associated with a symmetric weight function can be retrieved from the eigendecomposition of a tridiagonal matrix \(J_{\lfloor \frac{n}{2}\rfloor}\) of order \(\lfloor \frac{n}{2}\rfloor\). They also provide the Matlab functions listed in Table 2.

In the first and second examples, these methods are used to compute n-point GQRs for the Chebyshev weights of the first and second kind, respectively, whose nodes and weights are known in closed form. In the third example, the proposed method is compared with the function gauss in computing an integral on the whole real line.
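For reference, the Golub and Welsch approach implemented by gauss can be sketched in a few lines of NumPy. This is our illustration, not the OPQ code, and the function name and argument convention are assumptions. The nodes are the eigenvalues of the Jacobi matrix, and the weights are μ0 times the squared first components of the normalized eigenvectors.

```python
import numpy as np

def golub_welsch(a, b, mu0):
    """Nodes and weights of the n-point GQR from the Jacobi matrix.

    a   : diagonal of J (length n)
    b   : subdiagonal of J (length n-1)
    mu0 : zeroth moment, i.e. the integral of the weight function
    """
    J = np.diag(a) + np.diag(b, 1) + np.diag(b, -1)
    nodes, V = np.linalg.eigh(J)      # ascending eigenvalues, unit eigenvectors
    weights = mu0 * V[0, :] ** 2      # squared first components times mu0
    return nodes, weights
```

Take a = 0, b_k = 1/2 and μ0 = π/2, which corresponds to the Chebyshev weight of the second kind. With these inputs and small n, the sketch reproduces the closed-form nodes cos(jπ/(n+1)) and weights (1 − x_j²)π/(n+1) to high accuracy. Because the weights come from eigenvector components, they are accurate only in a normwise sense; Example 3 illustrates this limitation for tiny weights.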

Example 1

The nodes of the n-point GQR associated with the Chebyshev weight of the first kind, \(\omega(x) = 1/\sqrt{1-x^{2}}\) on [−1,1],

are \(x_{j}= \cos(\frac{(2j-1)\pi}{2n}),\ j=1,\ldots,n,\) the zeros of \(\mathcal{T}_{n}(x)\), the Chebyshev polynomial of the first kind of degree n. Moreover, the weights are \(w_{j} = \pi/n,\ j = 1,\ldots,n\). The maxima of the relative errors of the nodes computed by the considered numerical methods

are reported in Table 3, while the maxima of the relative errors of the computed weights

are reported in Table 4.

In all cases, the relative errors of the nodes and weights computed by the proposed method are comparable to those obtained with the algorithms proposed in [19].

Example 2

The nodes of the n-point GQR associated with the Chebyshev weight of the second kind, \(\omega(x) = \sqrt{1-x^{2}}\) on [−1,1],

are \(x_{j}= \cos(\frac{j\pi}{n+1}),\ j=1,\ldots,n,\) the zeros of \(\mathcal{U}_{n}(x)\), the Chebyshev polynomial of the second kind of degree n. Moreover, the weights are \(w_{j} = (1-x_{j}^{2})\frac{\pi}{n+1},\ j=1,\ldots,n.\) The maxima of the relative errors of the nodes computed by the considered numerical methods are reported in Table 5, while the maxima of the relative errors of the computed weights are reported in Table 6.

In all cases, the relative errors of the nodes and weights computed by the proposed method are smaller than those obtained with the algorithms proposed in [19].
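The closed-form nodes and weights that serve as reference values in Examples 1 and 2 can be checked independently of any eigenvalue solver. An n-point GQR must integrate every polynomial of degree at most 2n − 1 exactly. For these two even weights, the substitution t = x² gives the moments

- \(\int_{-1}^{1} x^{2k}(1-x^{2})^{-1/2}\,dx = B(k+\tfrac12,\tfrac12)\) for the first kind;
- \(\int_{-1}^{1} x^{2k}(1-x^{2})^{1/2}\,dx = B(k+\tfrac12,\tfrac32)\) for the second kind.

The sketch below builds both rules and returns the largest relative error on the even moments of degree 0, 2, …, 2n − 2; the odd moments vanish by symmetry. The function names are ours.

```python
import math
import numpy as np

def chebyshev_rules(n):
    """Closed-form n-point Gauss-Chebyshev rules of the first and second kind."""
    j = np.arange(1, n + 1)
    x1 = np.cos((2 * j - 1) * np.pi / (2 * n))   # zeros of T_n
    w1 = np.full(n, np.pi / n)
    x2 = np.cos(j * np.pi / (n + 1))             # zeros of U_n
    w2 = (1 - x2**2) * np.pi / (n + 1)
    return (x1, w1), (x2, w2)

def beta(p, q):
    """Euler Beta function B(p, q) via the Gamma function (moderate arguments)."""
    return math.gamma(p) * math.gamma(q) / math.gamma(p + q)

def max_moment_error(n):
    """Largest relative error of both rules on x^0, x^2, ..., x^(2n-2)."""
    (x1, w1), (x2, w2) = chebyshev_rules(n)
    err = 0.0
    for k in range(n):
        exact1 = beta(k + 0.5, 0.5)   # integral of x^(2k) / sqrt(1 - x^2)
        exact2 = beta(k + 0.5, 1.5)   # integral of x^(2k) * sqrt(1 - x^2)
        err = max(err,
                  abs(w1 @ x1 ** (2 * k) - exact1) / exact1,
                  abs(w2 @ x2 ** (2 * k) - exact2) / exact2)
    return err
```

For moderate n, such as n = 10 or n = 20, the returned error is at the level of rounding errors, far below 1e-12. Because math.gamma overflows for large arguments, the check is meant only for moderate n.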

Example 3

In this example we consider a GQR for integrals on the whole real line with the Hermite weight \( \omega (x)= e^{-x^{2}} \).

We computed the Hermite weights, for different values of n, with the following three different methods:

- the function gauss [9], computed in double precision;
- the function gauss [9], computed in variable precision with 128 digits; these values can therefore be considered as exact values for the weights;
- the proposed method, called LMV.

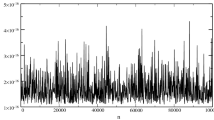

In Fig. 2 (top) we plot on a logarithmic scale the absolute values of the Hermite polynomials of degree j = 0,1,…,127, evaluated at the largest zeros of the Hermite polynomial of degree 128. These values are computed by the function gauss in double and extended precision (128 digits) and by the proposed method in double precision. In Fig. 2 (bottom), we show the componentwise relative errors of each weight, \(e_{rel}(\omega_{j}^{g}):=\frac{|\omega_{j}^{g}-\omega_{j}^{ex}|}{|\omega_{j}^{ex}|}\) for gauss and \(e_{rel}(\omega^{LMV}_{j}):=\frac{|\omega_{j}^{LMV}-\omega_{j}^{ex}|}{|\omega_{j}^{ex}|}\) for the new method LMV. We also show the corresponding relative error estimate of each weight, \(e_{est}(\omega_{j}^{LMV}):=\frac{\epsilon_{M}\max_{i}|\lambda_{i}|}{\min_{i\neq j}|\lambda_{i}-\lambda_{j}|}\) (in “∘”).

The Hermite weights corresponding to large nodes are very tiny. Gauss, which is based on the QR method, computes them only with normwise backward accuracy, so their elementwise relative forward error can be very large. On the other hand, LMV computes them with a componentwise relative forward error of the order of the machine precision, as predicted by our analysis in Section 6, and the estimated bounds of Theorem 5 are quite accurate.

Fig. 2 Top: Plot of the Hermite weights for n = 128, computed with the function gauss in double precision and in extended precision with 128 digits (denoted by “∘”), and computed by the function LMV. Bottom: Plot of the componentwise relative errors \(e_{rel}(\omega_{j}^{g})\) of the weights computed with gauss and \(e_{rel}(\omega_{j}^{LMV})\) of the weights computed with the new method LMV. The corresponding relative error estimate \(e_{est}(\omega_{j}^{LMV})\) for each weight computed with LMV is also shown (in “∘”).

Hence, integrals cannot be approximated accurately with the nodes and weights provided by gauss if \( f(x) \sim e^{cx^{2}}\) with \(1-\varepsilon \le c <1\) and ε > 0 small enough.
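The setting of this example can be explored with a small NumPy experiment. The sketch is an illustration under our own assumptions, not the LMV algorithm:

- it uses the Jacobi matrix of the Hermite weight, with zero diagonal, subdiagonal √(k/2) and μ0 = √π;
- it takes the nodes from `numpy.linalg.eigh`;
- it computes each weight twice: once as μ0 times the squared first eigenvector component (as gauss does), and once from the Christoffel formula ω_j = 1/Σ_k p̃_k(λ_j)², with the orthonormal polynomials p̃_k evaluated by the forward three-term recurrence only.

At the largest node, all values p̃_k, k < n, are positive, since that node lies to the right of the zeros of every p̃_k. We expect this to make the forward-only recurrence benign there. This simplification is ours; the gluing of forward and backward recurrences analyzed in Section 6 is what the paper uses in general.

```python
import numpy as np

def hermite_weights_two_ways(n):
    """Gauss-Hermite weights via eigenvectors and via the Christoffel formula."""
    mu0 = np.sqrt(np.pi)                       # integral of exp(-x^2)
    b = np.sqrt(np.arange(1, n) / 2.0)         # subdiagonal of J
    J = np.diag(b, 1) + np.diag(b, -1)         # zero diagonal (symmetric weight)
    nodes, V = np.linalg.eigh(J)
    w_gw = mu0 * V[0, :] ** 2                  # Golub-Welsch weights
    # Christoffel weights: p_{k+1} = (x p_k - b_{k-1} p_{k-1}) / b_k,
    # started at p_0 = 1/sqrt(mu0); w_j = 1 / sum_k p_k(x_j)^2.
    w_cf = np.empty(n)
    for j, x in enumerate(nodes):
        p_prev, p = 0.0, 1.0 / np.sqrt(mu0)
        s = p * p
        for k in range(n - 1):
            coupling = b[k - 1] * p_prev if k > 0 else 0.0
            p_prev, p = p, (x * p - coupling) / b[k]
            s += p * p
        w_cf[j] = 1.0 / s
    return nodes, w_gw, w_cf
```

Both sets of weights sum to √π to high accuracy, and they agree for the large central weights. To see the effect discussed above, compare `w_gw[-1]` with `w_cf[-1]`, for instance for n = 128. We do not reproduce the exact values of Fig. 2 here.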

Let us now consider the integral [13]

that can be rewritten as

If a is close to −1, the n-point GQR computed with gauss blows up as n increases. Table 7 reports, for a = −0.8 and b = 20, the approximations of the integral obtained with the GQRs computed by the proposed method and by the function gauss. The correct digits are highlighted in boldface.

8 Conclusions

The Golub and Welsch algorithm computes the nodes and the weights of n-point Gaussian quadrature rules from the eigenvalue decomposition of a tridiagonal matrix of order n. When the weight function is symmetric, Meurant and Sommariva [19] showed that the same information can be obtained from a tridiagonal matrix of order \(\lfloor \frac{n}{2}\rfloor\), and proposed several algorithms based on this reduction.

In this paper we have shown that, for symmetric weight functions, the positive nodes and the corresponding weights can be computed from a bidiagonal matrix with positive entries of size \(\lfloor \frac{n}{2}\rfloor \times \lfloor \frac{n+1}{2}\rfloor\). Therefore, the nodes can be computed with high relative accuracy by the algorithm of Demmel and Kahan [5]. Moreover, we have analyzed the stability of different methods for computing the weights and proposed an algorithm that computes them with a relative accuracy of the order of the machine precision. The numerical experiments confirm the effectiveness of the proposed approach.
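The reduction behind this result can be sketched for a symmetric weight, whose Jacobi matrix has a zero diagonal. Place the odd-indexed subdiagonal entries b_1, b_3, … on the main diagonal and the even-indexed entries b_2, b_4, … on the subdiagonal. This gives a lower bidiagonal matrix B of size ⌊(n+1)/2⌋ × ⌊n/2⌋, the transpose of the shape stated above, with the same singular values; these singular values are the positive nodes.

The NumPy sketch below (function name ours) calls `numpy.linalg.svd`. That routine is not the bidiagonal algorithm of Demmel and Kahan, so it does not by itself guarantee high relative accuracy. For that purpose, LAPACK's bidiagonal SVD routine xBDSQR would be the appropriate choice.

```python
import numpy as np

def positive_nodes_bidiagonal(b):
    """Positive nodes of a symmetric GQR as singular values of a bidiagonal B.

    b : subdiagonal b_1, ..., b_{n-1} of the Jacobi matrix (zero diagonal),
        stored 0-based so that b[k] = b_{k+1}.
    """
    n = len(b) + 1
    rows, cols = (n + 1) // 2, n // 2   # odd and even positions of J
    B = np.zeros((rows, cols))
    for r in range(rows):
        if r < cols:
            B[r, r] = b[2 * r]          # b_1, b_3, b_5, ... on the diagonal
        if r > 0:
            B[r, r - 1] = b[2 * r - 1]  # b_2, b_4, ... on the subdiagonal
    # For odd n the zero node is not returned: B has only (n-1)/2 columns.
    return np.sort(np.linalg.svd(B, compute_uv=False))
```

Consider the Chebyshev weight of the second kind, with b_k = 1/2. For n = 10, the sketch returns the five positive nodes cos(jπ/11), j = 1, …, 5, in ascending order.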

Notes

The quantity \(\zeta_{n}\) is of the order of the unit roundoff \(u:=\frac{1}{2}\epsilon_{M}\) and accounts for n rounding errors in single scalar operations; this follows from the fact that the triangular systems are banded.

References

[1] Anderson, E., Bai, Z., Bischof, C., Blackford, S., Demmel, J., Dongarra, J., Du Croz, J., Greenbaum, A., Hammarling, S., McKenney, A., Sorensen, D.: LAPACK Users’ Guide, 3rd edn. Society for Industrial and Applied Mathematics, Philadelphia (1999)

[2] Bart, H., Gohberg, I., Kaashoek, M., Van Dooren, P.: Factorization of transfer functions. SIAM J. Contr. 18(6), 675–696 (1980)

[3] Bickley, W.G., Comrie, L.J., Sadler, D.H., Miller, J.C.P., Thompson, A.J.: British Association for the Advancement of Science Mathematical Tables: Volume 10, Bessel Functions, Part 2. Functions of Positive Integer Order. Cambridge University Press (1952)

[4] Bowdler, H., Martin, R.S., Reinsch, C., Wilkinson, J.H.: The QR and QL algorithms for symmetric matrices. Numer. Math. 11, 293–306 (1968)

[5] Demmel, J., Kahan, W.: Accurate singular values of bidiagonal matrices. SIAM J. Sci. Stat. Comput. 11(5), 873–912 (1990)

[6] Fernando, K.V., Parlett, B.N.: Accurate singular values and differential qd algorithms. Numer. Math. 67(2), 191–230 (1994)

[7] Gautschi, W.: Orthogonal Polynomials: Computation and Approximation. Oxford University Press, Oxford (2004)

[8] Gautschi, W.: Computational aspects of three-term recurrence relations. SIAM Rev. 9, 24–82 (1967)

[9] Gautschi, W.: Orthogonal polynomials (in Matlab). J. Comput. Appl. Math. 178, 215–234 (2005)

[10] Glaser, A., Liu, X., Rokhlin, V.: A fast algorithm for the calculation of the roots of special functions. SIAM J. Sci. Comput. 29, 1420–1438 (2007)

[11] Golub, G.H., Van Loan, C.F.: Matrix Computations, 4th edn. Johns Hopkins University Press, Baltimore (2013)

[12] Golub, G.H., Welsch, J.H.: Calculation of Gauss quadrature rules. Math. Comput. 23(106), 221–230 (1969)

[13] Gradshteyn, I.S., Ryzhik, I.M.: Table of Integrals, Series, and Products, 7th edn. Academic Press, Boston (2007)

[14] Hale, N., Townsend, A.: Fast and accurate computation of Gauss-Legendre and Gauss-Jacobi nodes and weights. SIAM J. Sci. Comput. 35, A652–A674 (2013)

[15] Hale, N., Trefethen, L.N.: Chebfun and numerical quadrature. Sci. China Math. 55(9), 1749–1760 (2012)

[16] Higham, N.J.: Accuracy and Stability of Numerical Algorithms, 2nd edn. Society for Industrial and Applied Mathematics, Philadelphia (2002)

[17] Laudadio, T., Mastronardi, N., Van Dooren, P.: Computing the eigenvectors of nonsymmetric tridiagonal matrices. Comput. Math. Math. Phys. 61, 733–749 (2021)

[18] Mastronardi, N., Taeter, H., Van Dooren, P.: On Computing Eigenvectors of Symmetric Tridiagonal Matrices. In: Bini, D., Di Benedetto, F., Tyrtyshnikov, E., Van Barel, M. (eds.), vol. 30, pp. 181–195. Springer INdAM Series, Cham (2019)

[19] Meurant, G., Sommariva, A.: Fast variants of the Golub and Welsch algorithm for symmetric weight functions in Matlab. Numer. Algor. 67(3), 491–506 (2014)

[20] Olver, F.W.J.: Error analysis of Miller’s recurrence equations. Math. Comput. 18, 65–74 (1964)

[21] Parlett, B.N., Le, J.: Forward instability of tridiagonal QR. SIAM J. Matrix Anal. Appl. 14, 279–316 (1993)

[22] Parlett, B.N.: The Symmetric Eigenvalue Problem. Prentice-Hall, Englewood Cliffs (1980)

[23] Szegö, G.: Orthogonal Polynomials, 4th edn. American Mathematical Society, Providence (1975)

[24] Tait, R.: Error analysis of recurrence equations. Math. Comput. 21, 629–638 (1967)

[25] Townsend, A., Trogdon, T., Olver, S.: Fast computation of Gauss quadrature nodes and weights on the whole real line. IMA J. Numer. Anal. 36, 337–358 (2016)

[26] Wilf, H.S.: Mathematics for the Physical Sciences. Wiley, New York (1962)

[27] Wilkinson, J.H.: The Algebraic Eigenvalue Problem. Clarendon Press, Oxford (1965)

Acknowledgements

This work was supported partly by the Short Term Mobility program 2021 of Consiglio Nazionale delle Ricerche and partly by Gruppo Nazionale Calcolo Scientifico (GNCS) of Istituto Nazionale di Alta Matematica (INdAM).

The authors thank Gerard Meurant for the helpful suggestions and the anonymous referees for their constructive remarks.


Ethics declarations

Conflict of interest

The authors declare no competing interests.

Additional information

Data availability

Data sharing not applicable to this article as no datasets were generated or analyzed during the current study.

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Teresa Laudadio, Nicola Mastronardi and Paul Van Dooren contributed equally to this work.

Dedicated to Claude Brezinski on the occasion of his 80th birthday.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Laudadio, T., Mastronardi, N. & Van Dooren, P. Computing Gaussian quadrature rules with high relative accuracy. Numer Algor 92, 767–793 (2023). https://doi.org/10.1007/s11075-022-01297-9
