Abstract

In this paper, we introduce a new column selection strategy, named here “Deviation Maximization”, and apply it to compute rank-revealing QR factorizations as an alternative to the well-known block version of the QR factorization with column pivoting, called QP3 and currently implemented in LAPACK’s xgeqp3 routine. We show that the resulting algorithm, named QRDM, has rank-revealing properties similar to those of QP3 and better execution times. We present experimental results on a large data set of numerically singular matrices, which has become a reference in the recent literature.

1 Introduction

The Rank-Revealing QR (RRQR) factorization was introduced in [16] and is nowadays a classic topic in numerical linear algebra; for example, [17] introduce the RRQR factorization for least squares problems whose matrix does not have full column rank: in such a case, a plain QR computation may produce an R factor in which the number of nonzeros on the diagonal does not equal the rank, and the matrix Q reveals neither the range nor the null space of the original matrix. Here, the SVD is the safest but also the most expensive solution method, while approaches based on a modified QR factorization can be seen as cheaper alternatives. Since the QR factorization is essentially unique once the column ordering is fixed, these techniques all amount to finding an appropriate column permutation. The first such algorithm was proposed in [7] and is referred to as QR factorization with column pivoting (QRP). Notice that if the matrix of the least squares problem does not have full column rank, then there are infinitely many solutions, and we must resort to rank-revealing techniques that identify a particular solution as “special”. QR with column pivoting identifies a particular basic solution (with at most r nonzero entries, where r is the rank of the matrix), while biorthogonalization methods [17] identify the minimum ℓ2-norm solution. Rank-revealing decompositions can be used in a number of other applications [20]. QR factorization with column pivoting works well in practice, even though there are examples in which it fails, see, e.g., the Kahan matrix [23]. Further improvements are possible, see, e.g., [8] and [15]: the idea there is to identify and remove small singular values one by one. Gu and Eisenstat [19] introduced the strong RRQR factorization, a stable algorithm for computing an RRQR factorization with a good approximation of the null space, which is not guaranteed by QR factorization with column pivoting.
Both approaches can be used as optional improvements to the QR factorization with column pivoting. Rank-revealing QR factorizations are also treated in [9, 18, 22].

Column pivoting makes it more difficult to achieve high performance in QR computation, see [3,4,5,6, 27]. The state-of-the-art algorithm for computing an RRQR factorization, named QP3, is a block version [27] of the standard column pivoting and is currently implemented in LAPACK [1]. Other recent high-performance approaches are tournament pivoting [10] and randomized pivoting [14, 25, 31]. In this paper we present a technique based on correlation analysis, which we call “Deviation Maximization”, that selects a subset of sufficiently linearly independent columns. Deviation maximization may be adopted as a block pivoting strategy in more complex applications that require subset selection. We successfully apply it to the problem of finding a rank-revealing QR decomposition; the authors have also experimented with a preliminary version of this procedure in the context of active set methods, see [11, 12]. The rest of this paper is organized as follows. In Section 2 we motivate and describe this novel column selection technique. In Section 3 we define the rank-revealing factorization, review the QRP algorithm, and then introduce a block algorithm for RRQR by means of deviation maximization; furthermore, we give theoretical worst-case bounds for the smallest singular value of the R factor of the RRQR factorizations obtained with these two methods. In Section 4 we discuss the algorithm QRDM and some fundamental issues regarding its implementation. Section 5 compares QP3 and QRDM on a relevant database of singular matrices, and Section 6 concludes the paper.

1.1 Notation

For any matrix A of size m × n, we denote by \([A]_{I,J}\) the submatrix of A obtained by considering the entries with row and column indices ranging in the sets I and J, respectively. We make use of the so-called colon notation, that is, we denote by \([A]_{k:l,p:q}\) the submatrix of A obtained by considering the entries with row indices k ≤ i ≤ l and column indices p ≤ j ≤ q. When using colon notation, we write \([A]_{:,p:q}\) (\([A]_{k:l,:}\)) as a shorthand for \([A]_{1:m,p:q}\) (\([A]_{k:l,1:n}\)). We also denote the (i,j)-th entry as \(a_{ij}\) or \([A]_{ij}\). The singular values of a matrix A are denoted as \(\sigma_{\max}(A) = \sigma_{1}(A) \geq \sigma_{2}(A) \geq \dots \geq \sigma_{\min(m,n)}(A) = \sigma_{\min}(A) \geq 0\).

Given the vector norm \(\Vert x \Vert _{p} = (|x_{1}|^{p} + \dots + |x_{n}|^{p})^{1/p}\), p ≥ 1, we denote the family of induced matrix p-norms as \(\Vert A \Vert_{p} = \max_{x \neq 0} \Vert Ax \Vert_{p} / \Vert x \Vert_{p}\).

We denote the operator norm by \(\Vert A \Vert _{2} = \sigma _{\max \limits }(A)\). When the context allows it, we drop the subscript on the 2-norm. With a little abuse of notation, we define the max-norm of A as \(\Vert A \Vert _{\max \limits } = \max \limits _{i,j} |a_{ij}|\). Recall that the max-norm is not a matrix norm (it is not submultiplicative), and it should not be confused with the \(\infty \)-norm \(\Vert A \Vert _{\infty } = \max \limits _{i} {\sum }_{j} |a_{ij}|\).
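For readers who want to check the notation, here is a minimal pure-Python sketch (the helpers `max_norm`, `inf_norm` and `matmul` are our own names) contrasting the max-norm with the ∞-norm on the all-ones 2 × 2 matrix, for which \(\Vert A^{2} \Vert_{\max} = 2 > \Vert A \Vert_{\max}^{2} = 1\), so the max-norm is not submultiplicative.

```python
def max_norm(A):
    """||A||_max = max_{i,j} |a_ij| (not submultiplicative, hence not a matrix norm)."""
    return max(abs(x) for row in A for x in row)

def inf_norm(A):
    """||A||_inf = max_i sum_j |a_ij| (maximum absolute row sum)."""
    return max(sum(abs(x) for x in row) for row in A)

def matmul(A, B):
    """Plain matrix product of two lists of rows."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]

A = [[1.0, 1.0],
     [1.0, 1.0]]
print(max_norm(A), inf_norm(A))   # 1.0 2.0
print(max_norm(matmul(A, A)))     # 2.0, larger than max_norm(A) ** 2 = 1.0
```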

2 Column selection by deviation maximization

Consider an m × n matrix A that does not have full column rank, that is, rank(A) = r < n, and consider the problem of finding a subset of well-conditioned columns of A. Before presenting a strategy to solve this problem, let us recall that for an m × k matrix \(C = (\mathbf {c}_{1}\ {\dots } \ \mathbf {c}_{k})\) whose columns \(\mathbf{c}_{j}\) are nonzero, the correlation matrix Θ has entries \(\theta_{ij} = \mathbf{c}_{i}^{T}\mathbf{c}_{j} / (\Vert \mathbf{c}_{i}\Vert \, \Vert \mathbf{c}_{j}\Vert)\), 1 ≤ i, j ≤ k.

In particular, we have \({\varTheta } = \left (C D^{-1} \right )^{T} C D^{-1} = D^{-1} C^{T} C D^{-1}\), where D is the diagonal matrix with entries \(d_{i} = \Vert \mathbf{c}_{i}\Vert\), 1 ≤ i ≤ k. It is immediate to see that Θ is symmetric positive semidefinite, has only ones on the diagonal, and its entries range from −1 to 1. Notice that \(\theta_{ij}\) is the cosine of \(\alpha_{ij} = \alpha(\mathbf{c}_{i},\mathbf{c}_{j}) \in [0,\pi)\), the angle (modulo π) between \(\mathbf{c}_{i}\) and \(\mathbf{c}_{j}\). In order to emphasize this geometric interpretation, from now on we refer to Θ as the cosine matrix.
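A short pure-Python sketch of the cosine matrix, assuming the columns are passed as a list of nonzero vectors (`cosine_matrix` is a hypothetical helper, not a routine from the paper's software). It forms \(D^{-1}C^{T}CD^{-1}\) entrywise and recovers the angle between two columns with `acos`.

```python
import math

def cosine_matrix(cols):
    """Theta = D^{-1} C^T C D^{-1}; `cols` holds the (nonzero) columns of C."""
    d = [math.sqrt(sum(x * x for x in c)) for c in cols]    # d_i = ||c_i||
    k = len(cols)
    return [[sum(x * y for x, y in zip(cols[i], cols[j])) / (d[i] * d[j])
             for j in range(k)] for i in range(k)]

C = [[1.0, 0.0, 0.0],    # c_1
     [1.0, 1.0, 0.0],    # c_2, at 45 degrees from c_1
     [0.0, 0.0, 2.0]]    # c_3, orthogonal to c_1 and c_2
Theta = cosine_matrix(C)
angle_12 = math.degrees(math.acos(Theta[0][1]))   # angle between c_1 and c_2
print(round(Theta[0][1], 6), round(angle_12, 6), Theta[0][2])
```

Every entry lies in [−1, 1] and the diagonal is exactly one up to rounding, as stated above.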

Let us first recall a few definitions taken from [2]. A square matrix A = Δ + N, where Δ is diagonal and N has a zero diagonal, is said to be τ-diagonally dominant with respect to a norm ∥⋅∥ if \(\Vert N \Vert \leq \tau \min \limits _{i} |{\varDelta }_{ii}|\) for some 0 ≤ τ < 1. A matrix A = D1(Δ + N)D2, where Δ, D1, D2 are diagonal and N has a zero diagonal, is said to be τ-scaled diagonally dominant with respect to a norm ∥⋅∥ if Δ + N is τ-diagonally dominant with respect to the same norm, for some 0 ≤ τ < 1. If A is symmetric, then Δ + N is symmetric and we have D := D1 = D2, with diagonal entries \(d_{i} = |A_{ii}|^{1/2}\). The main idea behind the deviation maximization is based on the following result.

Lemma 1

Let \(C = (\mathbf {c}_{1} \ {\dots } \ \mathbf {c}_{k})\) be an m × k matrix such that \(\Vert \mathbf {c}_{1}\Vert = \max \limits _{j} \Vert \mathbf {c}_{j}\Vert >0\). Suppose there exists 0 < τ < 1 such that ∥cj∥ ≥ τ∥c1∥ for all j, and that \(C^{T}C\) is a τ-scaled diagonally dominant matrix with respect to the \({\infty }\)-norm. Then

Proof

Let us write \(A = C^{T}C = D\varTheta D\), where D = diag(dj), with dj = ∥cj∥, and the cosine matrix decomposes as Θ = I + N, where N has a zero diagonal.

We first prove (2). Let us show that A is diagonally dominant in the classic sense, that is, for all i we have

For all 1 ≤ i ≤ k, we have

and hence

Since |di| > 0 for all i, and ∥c1∥ > 0, the right-hand side is positive if and only if

that is true by assumption since the cosine matrix Θ is τ-diagonally dominant with respect to the \({\infty }\)-norm. Moreover, we have

For any strictly diagonally dominant matrix A with \(\alpha = \min \limits _{i}\left \{ |a_{ii}| - \underset {j\neq i}{\sum } | a_{ij}| \right \}>0\), we have (see [30])

Then

Let us now prove (3). First notice that the cosine matrix Θ = I + N is symmetric, hence \(\Vert N \Vert _{\infty } = \Vert N \Vert _{1}\). In particular, Θ is τ-diagonally dominant also with respect to the 2-norm, since by Hölder’s inequality we get

Moreover, assume without loss of generality that \(d_{1}\geq d_{2} \geq {\dots } \geq d_{k}\), where di = ∥ci∥. Recall the variational characterization (Courant-Fischer Theorem) of the eigenvalues \(\lambda _{1} \geq {\dots } \geq \lambda _{k}\) of a symmetric matrix A of order k

Let \(S_{i-1} \subseteq \mathbb {R}^{k}\) be the subspace spanned by the first i − 1 elements of the canonical basis. Its orthogonal complement \(S_{i-1}^{\perp }\) is then the subspace spanned by the last k − i + 1 elements of the canonical basis. We have

where \(\hat {\mathbf {x}} \in \mathbb {R}^{k-i+1}\) is the vector obtained by deleting the first i − 1 entries of x, and \(A_{i-1}\) is the square submatrix of order k − i + 1 obtained by deleting the first i − 1 rows and columns of A. Consider the eigenvalues \(\lambda_{i}\) of the symmetric matrix \(A = C^{T}C\). Take \(\mathbf {x}\in S_{i-1}^{\perp }\) with ∥x∥ = 1; then, by the Cauchy-Schwarz inequality,

and thus \(\lambda _{i} \leq (1+\tau ) \Vert \mathbf {c}_{i} \Vert ^{2} \leq (1+\tau ) \Vert \mathbf {c}_{1} \Vert ^{2}\). Considering −A instead, we get

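and thus \(\lambda_{i} \geq (1-\tau)\Vert \mathbf{c}_{i}\Vert^{2}\). Since \(\lambda _{i} = {\sigma _{i}^{2}}\), we have \(\sqrt{1-\tau}\,\Vert \mathbf{c}_{i}\Vert \leq \sigma_{i}(C) \leq \sqrt{1+\tau}\,\Vert \mathbf{c}_{i}\Vert\), 1 ≤ i ≤ k.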

□
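The two-sided estimate \(\sqrt{1-\tau}\,\Vert \mathbf{c}_{i}\Vert \leq \sigma_{i}(C) \leq \sqrt{1+\tau}\,\Vert \mathbf{c}_{i}\Vert\) derived in the proof (columns ordered by decreasing norm) can be checked numerically. The sketch below reflects our own choices: it takes τ as the ∞-norm of the off-diagonal part N of the cosine matrix, which also bounds \(\Vert N\Vert_{2}\) because N is symmetric, and it obtains the eigenvalues of \(C^{T}C\) from a small cyclic Jacobi routine so that no external library is needed. Function names are hypothetical.

```python
import math

def sym_eigvals(S, sweeps=50):
    """Eigenvalues (descending) of a small symmetric matrix by cyclic Jacobi rotations."""
    n = len(S)
    A = [row[:] for row in S]
    for _ in range(sweeps):
        if sum(A[i][j] ** 2 for i in range(n) for j in range(n) if i != j) < 1e-30:
            break
        for p in range(n - 1):
            for q in range(p + 1, n):
                if A[p][q] == 0.0:
                    continue
                # rotation angle chosen so that the (p, q) entry is annihilated
                theta = (A[q][q] - A[p][p]) / (2.0 * A[p][q])
                t = math.copysign(1.0, theta) / (abs(theta) + math.sqrt(theta * theta + 1.0))
                c = 1.0 / math.sqrt(t * t + 1.0)
                s = t * c
                for k in range(n):          # A <- A J (columns p and q)
                    akp, akq = A[k][p], A[k][q]
                    A[k][p], A[k][q] = c * akp - s * akq, s * akp + c * akq
                for k in range(n):          # A <- J^T A (rows p and q)
                    apk, aqk = A[p][k], A[q][k]
                    A[p][k], A[q][k] = c * apk - s * aqk, s * apk + c * aqk
    return sorted((A[i][i] for i in range(n)), reverse=True)

def lemma1_check(cols):
    """Return tau = ||N||_inf of the cosine matrix, sorted column norms, sigma_i(C)."""
    k = len(cols)
    G = [[sum(x * y for x, y in zip(ci, cj)) for cj in cols] for ci in cols]    # C^T C
    d = [math.sqrt(G[i][i]) for i in range(k)]                                  # ||c_i||
    theta = [[G[i][j] / (d[i] * d[j]) for j in range(k)] for i in range(k)]     # cosine matrix
    tau = max(sum(abs(theta[i][j]) for j in range(k) if j != i) for i in range(k))
    sigma = [math.sqrt(max(lam, 0.0)) for lam in sym_eigvals(G)]               # descending
    return tau, sorted(d, reverse=True), sigma

cols = [[3.0, 0.0, 0.0], [0.3, 2.0, 0.0], [0.2, 0.1, 1.5]]   # columns of C
tau, d, sigma = lemma1_check(cols)
for di, si in zip(d, sigma):
    print(f"{math.sqrt(1 - tau) * di:.4f} <= {si:.4f} <= {math.sqrt(1 + tau) * di:.4f}")
```

Here τ ≈ 0.28, and each singular value falls inside its interval, as the lemma predicts.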

The proof of the bound (3) is mainly based on results contained in [2]. Inequalities (2)–(3) show clearly that the bound on the smallest singular value of C depends on the norms of the column vectors and on the angles between each pair of such columns. This suggests choosing k columns of A, namely those with indices \({J} = \left \{ j_{1},\dots ,j_{k} \right \}\), k ≤ r, such that the submatrix C = [A]:,J has columns with large Euclidean norms, i.e., larger than a threshold defined by a parameter τ > 0, and with large deviations, meaning that each pair of columns forms an angle whose cosine in absolute value is bounded by a parameter δ ≥ 0. For these reasons, the overall procedure is called deviation maximization, and it is presented in Algorithm 1.

Let us detail the above procedure. Define the vector u containing the column norms of A, namely u = (ui) = (∥ai∥), for \(i=1,\dots ,n\). At step 1, the set J of column indices is initialized with the index of a column of maximum norm, namely \({J} = \{ j_{1} \}\), with \(u_{j_{1}} = \max_{1 \leq i \leq n} u_{i}\).

At step 2, a set I of “candidate” column indices to be added to J is identified by selecting those columns with a large norm with respect to the parameter τ, that is \({I} = \{ i : u_{i} \geq \tau\, u_{j_{1}} \}\) (4),

and then the cosine matrix Θ associated with the corresponding submatrix [A]:,I is computed at step 4. With a loop over the indices of the candidate set I, an index i ∈ I is inserted in J only if the i-th column has a large deviation from all the columns whose indices are already in J. In formulae, we ask

i.e., the columns ai and aj are orthogonal up to the factor δ. At the end of the iterations, we have \({J} = \left \{j_{1},\dots ,j_{k} \right \}\), with 1 ≤ k ≤ kmax, where \(k_{\max \limits }\) is the cardinality of the candidate set I, and we set C = [A]:,J. The following static choice of the parameter δ, namely

yields a submatrix C = [A]:,J that satisfies the hypotheses of Lemma 1 for a fixed choice of parameters δ and τ. Indeed, for every j ∈ J, this choice ensures

and hence the cosine matrix Θ is τ-diagonally dominant. Other strategies are possible: for example, at each iteration, an index i can be added to J if

for all j ∈ J, suggesting that the value δ can be updated dynamically as follows

In both (5) and (6), we have 0 ≤ δ < τ < 1. In practice, the value of δ can be chosen independently of τ, as we detail in Section 4, and this is why it is kept as an input parameter.
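The following pure-Python sketch mirrors the steps of Algorithm 1 as described above. Two details are our assumptions rather than the paper's exact formulas: candidates are the columns with \(u_{i} \geq \tau u_{j_{1}}\), visited by decreasing norm, and a candidate is accepted when \(|\theta_{ij}| \leq \delta\) for every \(j \in J\). As in the text, δ is an input parameter; the function name is hypothetical.

```python
import math

def deviation_maximization(cols, tau, delta):
    """Sketch of Algorithm 1: return the list J of selected column indices.

    cols  : columns of A, each a list of floats (not all zero)
    tau   : norm threshold, 0 < tau < 1
    delta : bound on |cos| between any two selected columns
    """
    u = [math.sqrt(sum(x * x for x in c)) for c in cols]         # column norms
    j1 = max(range(len(cols)), key=lambda i: u[i])                # step 1
    # step 2 (assumed form): candidates with u_i >= tau * u_j1, by decreasing norm
    cand = sorted((i for i in range(len(cols)) if u[i] >= tau * u[j1]),
                  key=lambda i: -u[i])
    # step 4: cosine matrix of the candidate columns
    theta = {(a, b): sum(x * y for x, y in zip(cols[a], cols[b])) / (u[a] * u[b])
             for a in cand for b in cand}
    J = [j1]
    for i in cand:                                                # selection loop
        if i != j1 and all(abs(theta[i, j]) <= delta for j in J):
            J.append(i)
    return J

cols = [[4.0, 0.0, 0.0],   # largest norm: selected first
        [3.9, 0.5, 0.0],   # almost parallel to the first column
        [0.0, 3.0, 0.0],   # orthogonal to the first column
        [0.0, 0.0, 1.0]]   # norm below tau * 4 for tau = 0.5
print(deviation_maximization(cols, tau=0.5, delta=0.3))   # [0, 2]
```

Lowering τ enlarges the candidate set (with τ = 0.2 the last column is also admitted), while raising δ towards 1 lets nearly parallel columns through, which is why a later filtering step is needed when δ and τ are decoupled.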

2.1 Computing the cosine matrix

Let us focus on some details of the implementation of the deviation maximization presented in Algorithm 1. First, the candidate set I defined in (4) can be computed with a fast sorting algorithm, e.g., quicksort, applied to the array of column norms. The most expensive operation in Algorithm 1 is the computation of the cosine matrix Θ at step 4. The cosine matrix of the columns indexed in the candidate set I is given by \({\varTheta} = D^{-1} [A]_{:,I}^{T} [A]_{:,I} D^{-1}\) (7),

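where D = diag(∥aj∥), with j ∈ I. The matrix Θ is symmetric, thus we only need its upper (lower) triangular part. This can be computed as

\(U_{1} = [A]_{:,I} D^{-1}, \quad {\varTheta} = U_{1}^{T} U_{1},\) (8)

or

\(U_{2} = [A]_{:,I}^{T} [A]_{:,I}, \quad {\varTheta} = D^{-1} U_{2} D^{-1}.\) (9)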

The former approach requires \(m k_{\max}\) additional memory locations to store U1, \(m k_{\max}\) flops to compute U1, and \((2m-1)k_{\max}(k_{\max}-1)/2\) flops for the upper triangular part of \({U_{1}^{T}} U_{1}\). The latter does not require additional memory, since the matrix U2 can be stored in the memory space used for the cosine matrix Θ, and it requires \((2m-1)k_{\max}(k_{\max}-1)/2\) flops for the upper triangular part of U2 and \(k_{\max}(k_{\max}-1)\) flops for the upper triangular part of \(D^{-1}U_{2}D^{-1}\). The cheapest strategy is therefore to compute Θ according to (9), even though it requires an ad hoc low-level routine that is not available in the BLAS library. Note that both (8) and (9) involve a symmetric matrix–matrix multiplication, which can be computed efficiently with the BLAS subroutine xsyrk.
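The two evaluation orders can be compared with a small sketch (function names are hypothetical). Both return only the strict upper triangle of Θ, since its diagonal is identically one: the first builds the scaled copy \(U_{1}\), the second computes the Gram entries \(U_{2}\) and scales them in place. An optimized code would form the products with xsyrk, which this plain-Python version does not attempt to mimic.

```python
import math

def cosine_upper_8(cols):
    """(8): scale first (extra storage for U1 = A_I D^{-1}), then upper part of U1^T U1."""
    k = len(cols)
    d = [math.sqrt(sum(x * x for x in c)) for c in cols]
    U1 = [[x / d[j] for x in cols[j]] for j in range(k)]         # columns of U1
    return {(i, j): sum(x * y for x, y in zip(U1[i], U1[j]))
            for i in range(k) for j in range(i + 1, k)}

def cosine_upper_9(cols):
    """(9): Gram entries first (U2 = A_I^T A_I), then divide each by d_i d_j in place."""
    k = len(cols)
    d = [math.sqrt(sum(x * x for x in c)) for c in cols]
    theta = {(i, j): sum(x * y for x, y in zip(cols[i], cols[j]))
             for i in range(k) for j in range(i + 1, k)}           # strict upper part of U2
    for (i, j) in theta:                                           # 2 flops per entry
        theta[i, j] /= d[i] * d[j]
    return theta

cols = [[1.0, 2.0, 0.0], [0.5, -1.0, 3.0], [2.0, 0.0, 1.0]]       # columns of [A]_{:,I}
print(cosine_upper_8(cols))
print(cosine_upper_9(cols))
```

The two dictionaries agree up to rounding; what differs is only the extra storage and the scaling cost discussed above.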

In order to limit the cost and the amount of additional memory of Algorithm 1, we propose a restricted version of the deviation maximization pivoting. If the candidate set is given by \({I} = \{j_{l}: l = 1,{\dots } , {k_{\max \limits }}\}\), we limit its cardinality to be smaller than or equal to a machine-dependent parameter kDM, that is

We refer to the value kDM as the block size, and we discuss its value in terms of achieved performance in Section 5.
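One possible way to build the restricted candidate set, assuming (as in the description of Algorithm 1) that candidates are the columns whose norm is at least τ times the largest one, and that only the kDM largest are kept when there are more. Python's built-in sort stands in for the quicksort mentioned above, and `restricted_candidates` is a hypothetical name.

```python
def restricted_candidates(u, tau, k_dm):
    """Candidate indices with u_i >= tau * max(u), largest first, capped at k_dm.

    u    : column norms of the trailing matrix (non-empty list)
    tau  : norm threshold in (0, 1)
    k_dm : block size, i.e. the maximum cardinality of the candidate set
    """
    order = sorted(range(len(u)), key=lambda i: u[i], reverse=True)   # decreasing norms
    threshold = tau * u[order[0]]
    cand = [i for i in order if u[i] >= threshold]
    return cand[:k_dm]

print(restricted_candidates([1.0, 5.0, 4.5, 0.2, 3.0], tau=0.5, k_dm=2))   # [1, 2]
```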

3 Rank-revealing QR decompositions

In exact arithmetic, we say that an m × n matrix A is rank-deficient if 0 = σr+1(A) < σr(A), where \(r < \min \limits (m,n)\) is its rank. However, rank determination is nontrivial in the presence of errors in the matrix entries. Golub and Van Loan [17] define the ε-rank of a matrix A as \(\text{rank}(A,\varepsilon) = \min_{\Vert A - B \Vert \leq \varepsilon} \text{rank}(B)\) (11),

for some small ε > 0. Thus, if the input data have an initial uncertainty of known order η, then it makes sense to look at rank(A,η). Similarly, for a floating point matrix A it is reasonable to regard A as numerically rank-deficient if \(\text {rank}(A,\varepsilon ) < \min \limits (m,n)\), where ε = u∥A∥ and u is the unit roundoff. This issue is discussed in more detail in Section 3.4.
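Assuming the singular values are already available (e.g., from an SVD), the ε-rank in the 2-norm is simply the number of singular values larger than ε, by the Eckart-Young theorem. The sketch below uses IEEE double precision, whose unit roundoff is \(u = 2^{-53}\), together with \(\Vert A\Vert_{2} = \sigma_{\max}(A)\); the function names are ours.

```python
import sys

def eps_rank(singular_values, eps):
    """rank(A, eps) in the 2-norm: number of singular values larger than eps."""
    return sum(1 for s in singular_values if s > eps)

def numerical_rank(singular_values):
    """Numerical rank with eps = u * ||A||_2 and u the unit roundoff of IEEE double."""
    u = sys.float_info.epsilon / 2          # 2**-53
    return eps_rank(singular_values, u * max(singular_values))

sv = [10.0, 1.0, 1e-3, 1e-17]
print(numerical_rank(sv))    # 3
print(eps_rank(sv, 1e-2))    # 2: data known only up to eta = 1e-2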

Let us now introduce the mathematical formulation of the problem of finding a rank-revealing decomposition of a matrix A of size m × n with numerical rank r, defined up to a certain tolerance ε as discussed above. Let π denote a permutation matrix of size n; then we can compute \(A\pi = QR = (Q_{1}\ Q_{2}) \begin{pmatrix} R_{11} & R_{12} \\ 0 & R_{22} \end{pmatrix}\) (12),

where Q is an orthogonal matrix of order m, \(Q_{1} \in \mathbb{R}^{m \times r}\) and \(Q_{2} \in \mathbb{R}^{m \times (m-r)}\), R11 is upper triangular of order r, \(R_{12} \in \mathbb{R}^{r \times (n-r)}\) and \(R_{22} \in \mathbb{R}^{(m-r) \times (n-r)}\). The QR factorization above is called rank-revealing if

or

or both conditions hold. Notice that if \(\sigma _{\min \limits }(R_{11})\gg \varepsilon \) and ∥R22∥ is small, then the matrix A has numerical rank r, but the converse is not true. In other words, even if A has \((\min \limits (m,n)-r)\) small singular values, it does not follow that an arbitrary permutation π yields a small ∥R22∥, although there exist strategies that ensure a small value of ∥R22∥ by identifying and removing small singular values, see, e.g., [8, 15]. It is easy to show that for any factorization like (12) the following relations hold: \(\sigma_{i}(R_{11}) \leq \sigma_{i}(A)\), 1 ≤ i ≤ r, and \(\sigma_{j}(R_{22}) \geq \sigma_{r+j}(A)\), \(1 \leq j \leq \min(m,n) - r\); in particular, \(\sigma_{\min}(R_{11}) \leq \sigma_{r}(A)\) and \(\Vert R_{22}\Vert \geq \sigma_{r+1}(A)\).

The proof is an easy application of the interlacing inequalities for singular values [29], namely \(\sigma_{i}(A) \geq \sigma_{i}(B) \geq \sigma_{i+s+r}(A)\),

which hold for any (m − s) × (n − r) submatrix B of A. In fact we have

Ideally, the best rank-revealing QR decomposition is obtained by the column permutation π which solves

for a fixed rank r. Recall that the volume of a rectangular real matrix A is defined as \(\sqrt {\det (A^{T}A)}\) or \(\sqrt {\det (AA^{T})}\), depending on the shape of A [26], that is, the volume of A equals the product of the singular values of A. It is not difficult to show that problem (15) is equivalent to the problem of selecting r columns such that the volume of the corresponding submatrix [Aπ]:,1:r is maximal. Problem (15) clearly has a combinatorial nature; thus algorithms that compute an RRQR factorization usually provide (see, e.g., [9, 22]) at least one of the following bounds: \(\sigma_{\min}(R_{11}) \geq \sigma_{r}(A)/p(n)\), \(\Vert R_{22}\Vert \leq q(n)\,\sigma_{r+1}(A)\) (16),

where p(n) and q(n) are low degree polynomials in n. These are worst case bounds and are usually not sharp. We provide a bound of type (16) in Section 3.3.
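To make the volume formulation concrete, the sketch below computes \(\sqrt{\det(C^{T}C)}\) for a tall matrix C and finds the r columns of maximal volume by exhaustive search. It only illustrates the combinatorial nature of the problem (the search visits all n-choose-r subsets) and is not one of the RRQR algorithms discussed here; all names are hypothetical.

```python
import math
from itertools import combinations

def det(M):
    """Determinant by Gaussian elimination with partial pivoting."""
    M = [row[:] for row in M]
    n, sign, prod = len(M), 1.0, 1.0
    for k in range(n):
        p = max(range(k, n), key=lambda i: abs(M[i][k]))
        if M[p][k] == 0.0:
            return 0.0
        if p != k:
            M[k], M[p] = M[p], M[k]
            sign = -sign
        prod *= M[k][k]
        for i in range(k + 1, n):
            f = M[i][k] / M[k][k]
            for j in range(k, n):
                M[i][j] -= f * M[k][j]
    return sign * prod

def volume(cols):
    """vol(C) = sqrt(det(C^T C)) = product of the singular values of a tall C."""
    G = [[sum(x * y for x, y in zip(a, b)) for b in cols] for a in cols]
    return math.sqrt(max(det(G), 0.0))

def max_volume_subset(cols, r):
    """Exhaustive search over all r-subsets of columns (small examples only)."""
    return max(combinations(range(len(cols)), r),
               key=lambda J: volume([cols[j] for j in J]))

cols = [[1.0, 0.0, 0.0], [1.0, 0.1, 0.0], [0.0, 0.0, 1.0]]   # columns of A
print(max_volume_subset(cols, 2))   # (1, 2)
```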

3.1 The standard column pivoting

Let us introduce the QR factorization with column pivoting proposed in [7], which can be seen as a greedy approach to the combinatorial optimization problem (15). Suppose that at the s-th algorithmic step we have already selected s < r well-conditioned columns of A, which are moved to the leading positions by the permutation matrix \(\pi^{(s)}\) as follows: \(A\pi^{(s)} = Q^{(s)} R^{(s)} = Q^{(s)} \begin{pmatrix} R_{11}^{(s)} & R_{12}^{(s)} \\ 0 & R_{22}^{(s)} \end{pmatrix}\),

where \(R_{11}^{(s)}\) is an upper triangular block of order s, and the blocks \( R_{12}^{(s)}\) and \( R_{22}^{(s)}\) have size s × (n − s) and (m − s) × (n − s), respectively. The block \(R_{22}^{(s)}\) is what is left to be processed, and it is often called the “trailing matrix”. Let us introduce the following column partitions for \(R_{12}^{(s)}\), \(R_{22}^{(s)}\) respectively

We aim at selecting, among the n − s remaining columns, the column for which the smallest singular value of the next block \(R_{11}^{(s+1)}\) is as large as possible. Formally, we would like to solve

Using the following fact

which is a simple consequence of the invariance of singular values under left multiplication by orthogonal matrices and the insertion of null rows, and using the bound

which holds for any nonsingular matrix A, we can approximate up to a factor \(\sqrt {s+1}\) the smallest singular value as

where eh is the h-th element of the canonical basis of \(\mathbb {R}^{s+1}\). Using this result, as argued in [9], the maximization problem (19) can be solved approximately by solving

The resulting procedure is referred to as QR factorization with column pivoting, and it is presented in Algorithm 2. This algorithm can be implemented efficiently since the column norms of the trailing matrix can be updated at each iteration instead of being recomputed from scratch. This can be done [17] by exploiting the following property

which holds for any orthogonal matrix Q and any vector a of order m. Therefore, once we define the vector \(u^{(s)}\) whose entry \(u^{(s)}_{j}\) is the squared j-th partial column norm of \(A\pi^{(s)}\), that is, the squared norm of the subcolumn with row indices ranging from s to m, and initialize \(u^{(1)}_{j} = \Vert \mathbf {a}_{j} \Vert ^{2}\), 1 ≤ j ≤ n, we can perform the following update: \(u_{j}^{(s+1)} = u_{j}^{(s)} - r_{sj}^{2}\), s < j ≤ n (21),

where rij is the entry of indices (i,j) in \(R^{(s)}\), 1 ≤ i ≤ m, 1 ≤ j ≤ n. The partial column norm update reduces the operation count from \({\mathscr{O}}(mn^{2})\) to \({\mathscr{O}}(mn)\). Actually, formula (21) cannot be applied as it is because of numerical cancellation, and it needs to be modified, see, e.g., [13] for a robust implementation. The pivoting strategy just presented produces a factor R that satisfies [21] \(r_{kk}^{2} \geq \sum_{i=k}^{j} r_{ij}^{2}\), k < j ≤ n (22),

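and, in particular, \(|r_{11}| \geq |r_{22}| \geq \dots \geq |r_{pp}|\), with p = min(m,n) (23), and \(|r_{kk}| \geq |r_{kj}|\), k < j ≤ n (24).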

A block version of Algorithm 2 has been proposed [27], and it is currently implemented in LAPACK’s xgeqp3 routine, that we will use in the numerical section for comparison.
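A minimal pure-Python sketch of Algorithm 2 as described above: at step s the column with the largest squared partial norm is swapped into position s, a Householder reflector is applied to the trailing rows, and the squared partial norms are downdated by \(u_{j} \leftarrow u_{j} - r_{sj}^{2}\). The recomputation test is a simple heuristic of our own (recompute when the downdated value drops below \(\sqrt{\varepsilon}\) times its last exactly computed value); it is neither the robust scheme of [13] nor LAPACK's xgeqp3. Q is not formed, and `qrp` is a hypothetical name.

```python
import math
import sys

def qrp(A):
    """Householder QR with column pivoting (sketch of Algorithm 2).

    A is an m x n list of rows.  Returns (R, perm) with A[:, perm] = Q R.
    """
    m, n = len(A), len(A[0])
    R = [row[:] for row in A]
    perm = list(range(n))
    u = [sum(R[i][j] ** 2 for i in range(m)) for j in range(n)]   # squared norms
    u_ref = u[:]                        # value at the last exact (re)computation
    tol = math.sqrt(sys.float_info.epsilon)
    for s in range(min(m, n)):
        p = max(range(s, n), key=lambda j: u[j])    # largest partial norm
        if p != s:                                  # swap columns s and p
            for row in R:
                row[s], row[p] = row[p], row[s]
            for vec in (perm, u, u_ref):
                vec[s], vec[p] = vec[p], vec[s]
        x = [R[i][s] for i in range(s, m)]
        normx = math.sqrt(sum(t * t for t in x))
        if normx > 0.0:
            # reflector I - 2 v v^T / (v^T v) mapping x to alpha * e_1
            alpha = -math.copysign(normx, x[0])
            v = x[:]
            v[0] -= alpha
            vv = sum(t * t for t in v)
            for j in range(s, n):
                f = 2.0 * sum(v[i - s] * R[i][j] for i in range(s, m)) / vv
                for i in range(s, m):
                    R[i][j] -= f * v[i - s]
            for i in range(s + 1, m):
                R[i][s] = 0.0
        for j in range(s + 1, n):                   # downdate the partial norms
            u[j] -= R[s][j] ** 2
            if u[j] <= tol * u_ref[j]:              # heavy cancellation: recompute
                u[j] = sum(R[i][j] ** 2 for i in range(s + 1, m))
                u_ref[j] = u[j]
    return R, perm

A = [[1.0, 2.0, 0.0],
     [1.0, 2.0, 1.0],
     [0.0, 0.0, 1.0],
     [1.0, 2.0, 0.0]]   # column 0 is half of column 1: rank 2
R, perm = qrp(A)
print(perm)              # [1, 2, 0]
```

Since Q is orthogonal, the result can be validated without forming Q by checking that \(R^{T}R = (A\pi)^{T}(A\pi)\); on this example the dependent column is pivoted last and \(|r_{33}|\) is at roundoff level.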

Remark 1

Geometric interpretation: Introduce the following block column partitioning \(R^{(s)} = (R_{1}\ R_{2})\) and \(Q^{(s)} = (Q_{1}\ Q_{2})\), where R1 and Q1 have s columns. By the properties of the QR decomposition, we have

where \({\mathscr{R}}(B)\) denotes the subspace spanned by the columns of a matrix B. Every unprocessed column of A rewrites as

where \(Q_{1}\mathbf{b}_{j-s}\) and \(Q_{2}\mathbf{c}_{j-s}\) are the orthogonal projections of aj onto the subspace \({\mathscr{R}}\left (Q_{1}\right )\) and onto its orthogonal complement \({\mathscr{R}}\left (Q_{2}\right )\), respectively. The column ai that is most linearly independent of the already processed columns (those associated with R1) can be seen as the one with the largest orthogonal projection onto the orthogonal complement of the subspace spanned by such columns, namely

However, the matrix Q(s) is never directly available unless it is explicitly computed. We then settle for the index j such that

3.2 The deviation maximization pivoting

Consider the partial factorization in (18), and now suppose that at the s-th algorithmic step we have already selected ns well-conditioned columns of A, with s ≤ ns < r, so that \(R_{11}^{(s)}\) has size ns × ns, while the blocks \(R_{12}^{(s)}\) and \(R_{22}^{(s)}\) have size ns × (n − ns) and (m − ns) × (n − ns), respectively. The idea is to pick ks linearly independent and well-conditioned columns, with \(n_{s+1} = n_{s} + k_{s} \leq r\), from the remaining n − ns columns of A, which are also sufficiently linearly independent of the ns columns already selected, in order to keep the smallest singular value of the R11 block as large as possible. We aim at selecting the columns with indices \(j_{1}, \dots , j_{k_{s}}\) that solve

Of course, this maximization problem has the same combinatorial nature as problem (15), so we solve it only approximately. We propose to approximate the indices \(\left \{ {j_{1}}, \dots , {j_{k_{s}}} \right \} \) that solve problem (25) with the indices selected by the deviation maximization procedure presented in Algorithm 1, applied to the trailing matrix \(R_{22}^{(s)}\). For the moment, let τ > 0 and δ be fixed according to (5) or (6); more efficient choices are discussed in detail in Section 5. For the sake of brevity, we denote by \(B = (\mathbf {b}_{j_{1}} \dots \mathbf {b}_{j_{k_{s}}})\) and \(C = (\mathbf {c}_{j_{1}} {\dots } \mathbf {c}_{j_{k_{s}}})\) the matrices made up of the selected columns, and by \(\bar {B}\) and \(\bar {C}\) the matrices made up of the remaining columns. The rest of the block update, which we detail below, proceeds in a way similar to the recursive block QR. Let \( \tilde {Q}^{(s+1)}\) be an orthogonal matrix of order (m − ns) such that

where T is an upper triangular matrix of order ks. The matrix \(\tilde {Q}^{(s+1)}\) is obtained as a product of ks Householder reflectors, which we represent by means of the so-called compact WY form [28] as \(\tilde{Q}^{(s+1)} = I - Y^{(s)} W^{(s)} \left(Y^{(s)}\right)^{T}\),

where \(Y^{(s)}\) is lower trapezoidal with ks columns and \(W^{(s)}\) is upper triangular of order ks. This allows us to carry out the update of the rest of the trailing matrix, that is

by means of BLAS-3 kernels, for performance efficiency. Denoting by \(\tilde {{\varPi }}^{(s)}\) a permutation matrix that moves columns with indices \(j_{1},\dots ,j_{k_{s}}\) to the current leading positions, we set \({\varPi }^{(s+1)} = {\varPi }^{(s)}\tilde {{\varPi }}^{(s)}\) and

then the overall factorization of Aπ(s+ 1) takes the form

where, for the successive iteration, we set

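with \(n_{s+1} = n_{s} + k_{s}\). The resulting procedure is presented in Algorithm 3. Finally, we point out that the partial column norms can also be updated at each iteration in this case, with some straightforward changes to (21), namely \(u_{j}^{(s+1)} = u_{j}^{(s)} - \sum_{i=n_{s}+1}^{n_{s+1}} r_{ij}^{2}\), \(n_{s+1} < j \leq n\).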

The above formula cannot be applied as it is because of numerical cancellation, like (21). Thus, we apply the safety switch from [13] for a robust implementation.
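The block update can be sketched in plain Python as follows; the names `householder`, `block_update` and `downdate_block` are hypothetical. The k selected columns, assumed already moved to the front, are triangularized with LAPACK-style reflectors \(I - \tau v v^{T}\) (v with unit first entry); the upper triangular factor W is built with the standard forward recurrence so that \(H_{1}\cdots H_{k} = I - YWY^{T}\); the remaining columns are then updated through products with Y and \(W^{T}\), which is where BLAS-3 kernels would be used. Finally, the squared partial norms are downdated by subtracting the squares of the k new rows of R, without the cancellation safeguard of [13].

```python
import math

def householder(x):
    """Return (v, tau, beta) with (I - tau v v^T) x = beta e_1 and v[0] = 1."""
    normx = math.sqrt(sum(t * t for t in x))
    if normx == 0.0:
        return [1.0] + [0.0] * (len(x) - 1), 0.0, 0.0
    beta = -math.copysign(normx, x[0])
    v = [1.0] + [t / (x[0] - beta) for t in x[1:]]
    return v, (beta - x[0]) / beta, beta

def block_update(B, k):
    """Triangularize the first k columns of B (list of rows, modified in place) and
    apply Q^T = I - Y W^T Y^T to the others, where Q = H_1 ... H_k = I - Y W Y^T."""
    m, n = len(B), len(B[0])
    Y = [[0.0] * k for _ in range(m)]             # lower trapezoidal, unit diagonal
    W = [[0.0] * k for _ in range(k)]             # upper triangular
    for j in range(k):
        v, tau, beta = householder([B[i][j] for i in range(j, m)])
        for i in range(j, m):
            Y[i][j] = v[i - j]
        for c in range(j + 1, k):                 # rest of the panel only
            f = tau * sum(v[i - j] * B[i][c] for i in range(j, m))
            for i in range(j, m):
                B[i][c] -= f * v[i - j]
        B[j][j] = beta
        for i in range(j + 1, m):
            B[i][j] = 0.0
        # forward recurrence: W[:j, j] = -tau * W[:j, :j] (Y[:, :j]^T v_j), W[j, j] = tau
        yv = [sum(Y[i][l] * Y[i][j] for i in range(m)) for l in range(j)]
        for l in range(j):
            W[l][j] = -tau * sum(W[l][p] * yv[p] for p in range(l, j))
        W[j][j] = tau
    for c in range(k, n):                         # trailing columns
        z = [sum(Y[i][l] * B[i][c] for i in range(m)) for l in range(k)]    # Y^T b
        w = [sum(W[p][l] * z[p] for p in range(l + 1)) for l in range(k)]   # W^T z
        for i in range(m):
            B[i][c] -= sum(Y[i][l] * w[l] for l in range(k))
    return Y, W

def downdate_block(u, B, k):
    """Squared partial norms of the trailing columns after the k new rows of R."""
    return [u[c - k] - sum(B[i][c] ** 2 for i in range(k)) for c in range(k, len(B[0]))]

B = [[2.0, 1.0, 1.0, 0.5], [1.0, 3.0, 0.0, 1.0], [0.0, 1.0, 2.0, 1.0], [1.0, 0.0, 1.0, 2.0]]
u = [sum(row[c] ** 2 for row in B) for c in (2, 3)]   # squared norms before the update
Y, W = block_update(B, 2)
print(downdate_block(u, B, 2))
```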

Algorithm 2 has the particular feature that the diagonal elements of the final upper triangular factor R are monotonically non-increasing in modulus, i.e., they satisfy (23). This is a consequence of (22), which also implies that each diagonal element is larger in modulus than any off-diagonal entry in its row, see (24). As for Algorithm 3, an analogue of (22) does not hold in general. Suppose that Algorithm 3 terminates in S ≥ 1 steps for a given matrix A and parameters τ, δ, and that the order of the \(R_{11}^{(s)}\) factor at the s-th algorithmic step is ns, so that \(0=n_{0}<n_{1}<\dots <n_{S}=n\). It is easy to show that a weaker version of (22) holds, namely we have

which essentially establishes diagonal dominance only for the first pivot of each block, while the standard pivoting ensures it for all pivots (22). In particular, we can only ensure that

Thus Algorithm 3 does not ensure that the factor R has a monotonically non-increasing diagonal, a property that other recently proposed pivoting strategies [10] do not guarantee either.

Remark 2

Geometric interpretation: We pointed out in Remark 1 that the standard pivoting can be seen as an approximate procedure to compute, at each iteration, the column that is most linearly independent of the columns already processed. Following this line, Algorithm 3 is an approximate procedure to compute, at each iteration, a set of linearly independent columns that are the most linearly independent of the columns already processed. In fact, the first task is achieved by selecting column vectors \(\{\mathbf {c}_{j_{1}}, \dots , \mathbf {c}_{j_{k}}\}\) that are pairwise orthogonal up to a factor δ, i.e.,

for all 1 ≤ i,l ≤ k, i≠l. The second task is achieved by selecting columns with the largest norm up to a factor τ, i.e.,

for all 1 ≤ l ≤ k.

3.3 Worst-case bound on the smallest singular value

Let us denote by \(\bar {\sigma }^{(s)}\) the smallest singular value of the computed \(R_{11}^{(s)}\) block at step s, that is

Let us first report from [9] an estimate of \(\bar {\sigma }^{(s+1)}\) for QRP.

Theorem 1

Let \(R_{11}^{(s)}\) be the upper triangular factor of order s computed by Algorithm 2. We have

Before coming to the main result, we introduce the following auxiliary Lemma.

Lemma 2

With reference to the notation used for introducing the block partition in (28), we have

Proof

Consider the following column partitions \(T = (\mathbf {t}_{1} {\dots } \mathbf {t}_{k})\), \(\bar {T} = (\mathbf {t}_{k+1} {\dots } \mathbf {t}_{n-n_{s}})\), \(R_{22}^{(s+1)} = (\mathbf {r}_{k+1}\dots \mathbf {r}_{n-n_{s}})\), and set \(\mathbf{r}_{j} = \mathbf{0}\), for 1 ≤ j ≤ k. Moreover, let \(T = (t_{i,j})\), with 1 ≤ i ≤ j ≤ k, and \(\bar {T}=(t_{i,j})\) with 1 ≤ i ≤ k, 1 ≤ j ≤ n − ns. First, notice that by (14) we have

We have

Since \(t_{k,j}^{2} \leq \Vert \mathbf {t}_{j} \Vert ^{2}\), for all 1 ≤ j ≤ n − ns, and computing the maximum on a larger set of indices we have

From equations (26–27), for all 1 ≤ j ≤ n − ns, we have

and, finally, since \(\Vert \mathbf {t}_{1}\Vert ^{2} = \Vert \mathbf {c}_{1}\Vert ^{2} = \max \limits _{j} \Vert \mathbf {c}_{j}\Vert ^{2}\) and by using Lemma 1, we get

We can conclude by noticing that \(\sigma _{\min \limits }(T)=\sigma _{\min \limits }(C)\), since the two matrices differ by a left multiplication by an orthogonal matrix. □

By the interlacing property of singular values, we have

thus lower bounds on \(\bar {\sigma }^{(s)}\) and \(\sigma _{\min \limits }(T)\) are not, by themselves, sufficient to bound \(\bar{\sigma}^{(s+1)}\) from below. Let us introduce the following result, which provides a bound of type (16) for Algorithm 3.

Theorem 2

Let \(R_{11}^{(s)}\) be the upper triangular factor of order ns computed by Algorithm 3. We have

Proof

Let us drop the subscript and the superscript on \(R_{11}^{(s)}\) and on its inverse \(\left (R_{11}^{(s)}\right )^{-1}\), which will be denoted as R and \(R^{-1}\), respectively. Then, the inverse of the matrix \(R_{11}^{(s+1)}\) is given by

Let us introduce the following partitions into rows

The idea is to use (20), that is

to estimate the minimum singular value up to a factor \(\sqrt {n_{s+1}}\). For 1 ≤ h ≤ ns+ 1 we have

We can bound ∥gh∥ using (20) again, which gives

In particular, for every 1 ≤ h ≤ ns, we get

and thus we have

Similarly, we can bound \( \Vert \mathbf {h}_{h-n_{s}}\Vert \) by \(\Vert T^{-1}\Vert\). Let us now concentrate on bounding \(\Vert \mathbf{f}_{h}\Vert\). We have

where we use the following well-known inequalities ∥x∥2 ≤∥x∥1, \(\left \Vert A \right \Vert _{\max \limits } \leq \left \Vert A \right \Vert \). Moreover, we can write

where, in the last inequality, we used the interlacing property and the invariance under matrix transposition of the singular values. In fact

Hence, we get

If \(\bar {\sigma }^{(s)}\) is a good approximation of \(\sigma _{n_{s}}(A)\), we can suppose that \(\bar {\sigma }^{(s)}/\sigma _{n_{s}}(A) \approx 1\), and we can write

Finally, using Lemma 2, we get

which is the desired bound. □

This shows that even if the leading ns columns have been carefully selected, so that \(\bar {\sigma }^{(s)}\) is an accurate approximation of \(\sigma _{n_{s}}(A)\), there could be a potentially dramatic loss of accuracy in the estimation of the next block of singular values, namely \(\sigma _{n_{s}+1}(A),\dots ,\sigma _{n_{s+1}}(A)\), just as for the standard column pivoting. Indeed, it is well known that the QRP algorithm may fail (one such example is the Kahan matrix [23]), as may other greedy algorithms, although failure is very unlikely in practice.
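The proofs of Theorems 1 and 2 rely on a row-norm estimate (invoked as (20) in the proof of Theorem 2). For a nonsingular matrix X of order n, each row of \(X^{-1}\) has norm at most \(\Vert X^{-1}\Vert_{2}\), while \(\Vert X^{-1}\Vert_{2} \leq \Vert X^{-1}\Vert_{F} \leq \sqrt{n}\,\max_{h}\Vert e_{h}^{T}X^{-1}\Vert\); hence \(1/\max_{h}\Vert e_{h}^{T}X^{-1}\Vert\) brackets \(\sigma_{\min}(X)\) up to a factor \(\sqrt{n}\). A small pure-Python sketch for an upper triangular X, with hypothetical helper names:

```python
import math

def inverse_upper(R):
    """Inverse of a nonsingular upper triangular matrix by back substitution."""
    n = len(R)
    X = [[0.0] * n for _ in range(n)]
    for j in range(n):                    # solve R x = e_j; x_i = 0 for i > j
        for i in range(j, -1, -1):
            rhs = (1.0 if i == j else 0.0) - sum(R[i][l] * X[l][j] for l in range(i + 1, j + 1))
            X[i][j] = rhs / R[i][i]
    return X

def sigma_min_bracket(R):
    """Interval [1/(sqrt(n) rho), 1/rho] containing sigma_min(R), rho = max row norm of R^{-1}."""
    n = len(R)
    rho = max(math.sqrt(sum(x * x for x in row)) for row in inverse_upper(R))
    return 1.0 / (math.sqrt(n) * rho), 1.0 / rho

print(sigma_min_bracket([[2.0, 1.0], [0.0, 0.5]]))
```

For this 2 × 2 example the exact value \(\sigma_{\min} \approx 0.445\) lies inside the computed interval \([0.354, 0.5]\).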

3.4 Termination criteria

In principle, both Algorithms 2 and 3 reveal the rank of a matrix. In finite-precision arithmetic we have

where \(\hat {R}^{(s)}_{ij}\) is the block \(R^{(s)}_{ij}\) computed in finite representation, for i = 1,2,j = 2. If the block \( \hat {R}^{(s)}_{22} \) is small in norm, then it is reasonable to say that the matrix A has rank ns, where ns is the order of the upper triangular block \(\hat {R}_{11}^{(s)}\). [17] propose the following termination criterion

where ε is the machine precision and f(n) is a modestly growing function of the number n of columns. Notice that even if a block \(\hat {R}_{22}^{(s)}\) with small norm implies numerical rank-deficiency, the converse is not true in general: an example is the Kahan matrix [23], discussed in Section 5.1. Let us write the column partition \( \hat {R}^{(s)}_{22} = (\hat {\mathbf {c}}_{1}\ {\dots } \ \hat {\mathbf {c}}_{n-n_{s}})\). We have

Therefore, the stopping criterion (35) holds if

but the converse is not true in general. Suppose now that the input data have an initial uncertainty of known order η in A. In this case, the numerical rank may be defined up to a perturbation of order η, see (11), and the stopping criterion (36) is replaced as follows

where η is a user-defined input parameter. We do not investigate this case further; however, it is left as an option in the software. In Section 5 we test the practical stopping criterion (36) and discuss the following two choices:

4 The QR with deviation maximization algorithm

In this section we introduce the QR with deviation maximization (QRDM) algorithm and discuss some crucial aspects related to its implementation.

The deviation maximization procedure exploits diagonal dominance in order to ensure linear independence. Diagonal dominance is sufficient but obviously not necessary, and it often turns out to be too strong a condition to be satisfied in practice. Let us briefly comment on the choice of the parameter τ: on the one hand, its value should be small in order to get a large candidate set I; on the other hand, a small value of τ implies a small value of δ < τ if (5) or (6) is applied, likely yielding fewer selected columns. Notice that when δ is close to zero, only pairwise nearly orthogonal columns are selected, and such columns are unlikely to be found in real-world problems. However, the value of δ can be chosen independently of τ, as we now detail. Suppose we give up the constraint δ < τ and settle for any values of τ and δ in the interval (0,1). Then the deviation maximization may identify a set of numerically linearly dependent columns. To overcome this issue, we incorporate a filtering procedure in the Householder triangularization. The selected columns \(\left \{ j_{1},\dots ,j_{k_{s}} \right \}\) at the s-th algorithmic step satisfy

before being reduced to triangular form. If a partial column norm becomes too small during the Householder triangularization, then that column is not sufficiently linearly independent of the columns already processed, and the procedure is interrupted. In general, the converse is not true. Specifically, in order to compute the related Householder reflectors we require that the partial column norms \(\Vert [A]_{n_{s}+l:m,n_{s}+l} \Vert \) do not become smaller than the parameter εs defined above.

The QR computation obtained in this way is called QRDM, and it is presented in Algorithm 4, where the filtering procedure on the partial column norms appears at step 9. Other values of \(\varepsilon_{s}\) are possible, e.g., a small constant threshold; however, numerical tests show that the choice (40) works well in practice. Whenever the filter is triggered, the Householder reduction to triangular form terminates with l < k Householder reflectors, and the algorithm continues with the computation and application of the compact WY representation of these l reflectors. At the next iteration, the pivoting strategy moves the rejected column away from the leading position, if necessary. As we show in Section 5, this break mechanism enables us to set τ and δ independently, and thus to obtain the best execution times.
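The filtering step can be illustrated with an unblocked Householder triangularization that stops as soon as a partial column norm falls below a threshold. In this minimal sketch, the argument `eps` stands in for \(\varepsilon_{s}\), whose definition (40) is not reproduced here, the matrix is a plain list of rows, and each reflector is applied immediately to the trailing columns rather than accumulated in a compact WY representation as QRDM does; the function name is hypothetical.

```python
import math


def householder_with_filter(A, k, eps):
    """Reduce the first k columns of A (a list of rows) to upper triangular
    form in place, stopping when a partial column norm falls below eps.

    Returns l, the number of Householder reflectors actually computed; if
    l < k, column l was rejected as numerically dependent on columns 0..l-1.
    """
    m, n = len(A), len(A[0])
    for l in range(min(k, m)):
        x = [A[i][l] for i in range(l, m)]          # partial column l:m
        alpha = math.sqrt(sum(t * t for t in x))    # partial column norm
        if alpha < eps or alpha == 0.0:
            return l                                # filter: stop here
        # v = x + sign(x_0) ||x|| e_1 avoids cancellation in v_0
        v = x[:]
        v[0] += math.copysign(alpha, x[0])
        vv = sum(t * t for t in v)
        # apply H = I - 2 v v^T / (v^T v) to columns l..n-1
        for j in range(l, n):
            f = 2.0 * sum(v[i - l] * A[i][j] for i in range(l, m)) / vv
            for i in range(l, m):
                A[i][j] -= f * v[i - l]
        for i in range(l + 1, m):
            A[i][l] = 0.0                           # exact zeros below diagonal
    return min(k, m)


A = [[3.0, 6.0, 0.0],
     [4.0, 8.0, 0.0],
     [0.0, 0.0, 1.0]]
num_reflectors = householder_with_filter(A, k=3, eps=1e-8)
print(num_reflectors)  # 1: column 1 is a multiple of column 0
```

In the example the second column is a multiple of the first, so its partial norm vanishes after the first reflector and only one reflector is computed, mirroring the case l < k described above.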

4.1 Minimizing memory communication

The performance of an algorithm is strongly affected by the amount of communication performed during its execution, as explicitly pointed out in the literature, see, e.g., [10]; here, communication refers to data movement within the memory hierarchy of a processor or between different processors of a parallel computer. In this context, the goal of this section is to design a pivoting strategy that is effective in revealing the rank of a matrix but also minimizes communication. Each time step 5 in Algorithm 3 is reached, deviation maximization selects \(k_{s}\) columns \(\left \{ j_{1}, \dots , j_{k_{s}}\right \}\) to be moved to the leading positions \(\left \{ n_{s}+1, \dots , n_{s}+{k_{s}}\right \}\). However, if one or more of these columns already lie within the leading positions, it is not necessary to move them: the columns \(\left \{ j_{1}, \dots , j_{k_{s}}\right \}\) still occupy the leading positions, only in a different order, and since a column permutation does not change singular values, the smallest singular value \(\bar {\sigma }^{(s)}\) of the \(R_{11}^{(s)}\) factor is unchanged, i.e., Theorem 2 still holds. This change in the pivoting strategy considerably reduces data movement without affecting the rank-revealing properties of the resulting decomposition. This saving does not come for free: we lose any monotonically decreasing trend in the magnitude of the diagonal elements of the \(R_{11}\) factor, and the weak diagonal dominance established in (30)–(32) no longer holds. Let us briefly describe the structure of the permutations employed. For every \(i = 1,\dots ,k_{s}\), the column \(j_{i}\) is not moved if it is within the \(k_{s}\) leading positions. Otherwise, it is moved to the first free spot within the \(k_{s}\) leading positions, namely we swap the columns \(j_{i}\) and \(n_{s}+l\), where l is the minimum integer \(1 \le l \le k_{s}\) such that \(n_{s}+l \notin \left \{ j_{1},\dots , j_{k_{s}} \right \}\) and position \(n_{s}+l\) has not already received a previously moved column.
In this way, memory communication is minimized, and the pivoting strategy requires only m additional memory slots. Let us stress that this communication-avoiding pivoting strategy is possible only when multiple columns are selected at once, and hence it cannot be extended to the QR decomposition with standard column pivoting.
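The swap rule can be sketched on a permutation vector. This is a minimal illustration with 0-based positions (the text uses 1-based indices), in which `perm` maps each position to an original column label and `selected` holds the positions \(j_{1},\dots,j_{k_{s}}\) returned by deviation maximization; the function name is hypothetical. Each recorded swap corresponds to exchanging two columns of length m, which needs a single buffer of m entries.

```python
def ca_permutation(perm, ns, selected):
    """Move the selected positions into the leading block ns..ns+ks-1.

    Columns already in the leading block stay where they are; every other
    selected column is swapped with the first leading position that holds a
    non-selected column and has not been filled yet. perm is updated in
    place and the list of swaps (p, q) is returned.
    """
    ks = len(selected)
    chosen = set(selected)
    # leading positions currently holding non-selected columns, in order
    free = [p for p in range(ns, ns + ks) if p not in chosen]
    swaps = []
    for j in selected:
        if ns <= j < ns + ks:
            continue                      # already leading: no data movement
        p = free.pop(0)                   # first free spot
        perm[p], perm[j] = perm[j], perm[p]
        swaps.append((p, j))
    return swaps


perm = list(range(8))
print(ca_permutation(perm, ns=2, selected=[3, 2, 7]))  # [(4, 7)]
print(perm)  # [0, 1, 2, 3, 7, 5, 6, 4]
```

Here columns 3 and 2 already lie in the leading block, so only column 7 is moved; an ordering that placed \(j_{i}\) exactly at position \(n_{s}+i\) would also have to exchange columns 2 and 3.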

5 Numerical experiments

In this section we compare the numerical accuracy of QRDM with that of the SVD and of the block QRP algorithm, called QP3 [27]. We report experimental results comparing the double precision codes dgeqrf and dgeqp3 from LAPACK and dgeqrdm, a double precision C implementation of our block algorithm QRDM available online at the URL: https://github.com/mdessole/qrdm. All tests are carried out on a computer with an Intel(R) Core(TM) i7-2700K processor and 8 GB of system memory, employing CBLAS and LAPACKE, the C reference interfaces to the BLAS and LAPACK implementations on Netlib, respectively. All codes have been compiled with the GNU C or GNU Fortran compilers on a Linux system. The BLAS and LAPACK libraries have been installed from the package libatlas-base-dev for Linux Ubuntu, derived from the well-known ATLAS project (Automatically Tuned Linear Algebra Software), http://math-atlas.sourceforge.net/. It must be pointed out that the absolute timings of the algorithms discussed here are sensitive to the particular optimization adopted for the BLAS library, but this does not change the significance of the results presented here. Indeed, a better-optimized BLAS yields more efficient BLAS-3 operations, which increases the speedup of QRDM with respect to QP3.

Particular importance is given to the values on the diagonal of the upper triangular factor R of the RRQR factorization, which are compared with the singular values of the \(R_{11}\) block and with the singular values of the input matrix A. The tests are carried out on several instances of the Kahan matrix [23] and on a subset of matrices from the San Jose State University Singular matrix database, which have been used in previous papers on the topic, see, e.g., [10, 19].

5.1 Kahan matrix

We first discuss the Kahan matrix [24, p. 31], which is defined as follows

where \({\varsigma }^{2} + \varphi ^{2} = 1\) and, in general, we have

For φ = 0, the singular values are all equal to one. An increasing gap between the last two singular values is obtained when the value of φ is increased. The QRP algorithm does not perform any pivoting on these matrices, producing an RRQR factorization in which the Q factor is the identity matrix and thus leaving them unchanged [17]. This implies that \(\left \Vert R_{22}^{(s)}\right \Vert \geq {\varsigma }^{n-1}\), for 1 ≤ s ≤ n − 1. For example, the matrix K(300,0.99) has no particularly small trailing matrix, since \({\varsigma }^{299} \approx 0.5\). In such a case, \(\sigma _{\min \limits }(R_{11}^{(n-1)})\) can be much smaller than \(\sigma_{n-1}(K(n,\varphi))\) [19]. It is not difficult to see that QRDM does not perform any pivoting on these matrices either. Let us show this fact by induction on the algorithmic step s. Let \((\mathbf {k}_{1} {\dots } \mathbf {k}_{n})\) be the column partition of K(n,φ). It is easy to see that all columns of the Kahan matrix have unit norm. Moreover, for i < j we have

that is, the cosine of the angle \(\alpha_{ij} \in [0,\pi)\) between \(\mathbf{k}_{i}\) and \(\mathbf{k}_{j}\) does not depend on j. In other words, the column \(\mathbf{k}_{i}\) forms the same angle (modulo π) with all columns \(\mathbf{k}_{j}\) with j > i; thus no column permutations are necessary in the first iteration of QRDM. Suppose that no permutations are necessary in the first s iterations; then (42) allows us to write

At the s-th algorithmic step the trailing matrix is then \(R_{22}^{(s)} = {\varsigma }^{n_{s}} K(n-n_{s},\varphi )\), whose columns all have the same norm, equal to \({\varsigma }^{n_{s}}\). Moreover, the column \(\mathbf{c}_{i}\) forms the same angle (modulo π) with all subsequent columns \(\mathbf{c}_{j}\), for \(1 \le i < j \le n-n_{s}\), hence no permutations are necessary. However, the matrix K(n,φ) may not be in rank-revealed form, in which case QRDM shows poor rank-revealing properties, similarly to QRP. It is well known that rounding errors due to finite precision may cause nontrivial permutations, in which case the QRP algorithm may reveal the rank. Following [10], in order to avoid this issue we consider instead the matrix

where 0 < ξ < 1. In other words, the j-th column of the matrix \(\hat {K}(n,\varphi ,\xi )\) is the j-th column of K(n,φ) scaled by \((1-\xi)^{j}\), for \(j=1,\dots ,n\). Tables 1 and 2 show results for scaled Kahan matrices \(\hat {K}(n,\varphi ,\xi )\) of size n = 128, for several values of φ. For each test case, we show the last two singular values \(\sigma_{n-1}\), \(\sigma_{n}\) and the last two diagonal entries \(k_{n-1,n-1}\), \(k_{n,n}\) of the current instance of \(\hat {K}(n,\varphi ,\xi )\). For both algorithms QRP and QRDM, we report the (n − 1)-th singular value \(\bar {\sigma }_{n-1}\) of the \(R_{11}\) block of order n − 1 and the absolute values of the last two diagonal entries \(d_{n-1}\), \(d_{n}\) of the factor R. The singular values reported here are computed with LAPACK’s xgejsv subroutine. We use the hyperparameter setting τ = 0.15 and δ = 0.9, whose choice is motivated in Section 5.2. When ξ is small (see Table 1), e.g., \(\xi = 10^{-15}\), both algorithms reveal the rank for some values of φ. However, when the parameter ξ is increased (see Table 2), e.g., \(\xi = 10^{-7}\), the algorithms do not perform any pivoting for any value of φ, thus resulting in poor rank revealing, in agreement with the results in [10]. This fact can be deduced by comparing the diagonal values \(d_{n-1}\), \(d_{n}\) of the R factor with the corresponding singular values \(\sigma_{n-1}\), \(\sigma_{n}\), and the singular value \(\bar {\sigma }_{n-1}\) of the computed \(R_{11}\) block with the corresponding singular value \(\sigma_{n-1}\).
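The column properties used in the induction argument above can be checked numerically. The sketch below assumes the standard definition of the Kahan matrix, \(K(n,\varphi) = \operatorname{diag}(1,\varsigma,\dots,\varsigma^{n-1})\,T\) with T unit upper triangular and all entries above the diagonal equal to −φ (the displayed definition is not reproduced in this section). Under this definition a direct computation gives, for i < j, \(\cos\alpha_{ij} = 1-(1+\varphi)\,{\varsigma}^{2(i-1)}\), which indeed does not depend on j. The scaled variant multiplies the j-th column by \((1-\xi)^{j}\), which leaves all cosines unchanged but makes the column norms strictly decreasing. Function names are illustrative.

```python
import math


def kahan(n, phi):
    """Kahan matrix: row i (0-based) is s^i * (0, ..., 0, 1, -phi, ..., -phi),
    with the 1 on the diagonal and s = sqrt(1 - phi^2)."""
    s = math.sqrt(1.0 - phi * phi)
    return [[s ** i * (1.0 if j == i else (-phi if j > i else 0.0))
             for j in range(n)] for i in range(n)]


def scaled_kahan(n, phi, xi):
    """Multiply the j-th column (1-based) of K(n, phi) by (1 - xi)^j."""
    K = kahan(n, phi)
    return [[K[i][j] * (1.0 - xi) ** (j + 1) for j in range(n)]
            for i in range(n)]


def column_dot(A, i, j):
    """Inner product of columns i and j of A (a list of rows)."""
    return sum(row[i] * row[j] for row in A)


n, phi = 8, 0.3
s = math.sqrt(1.0 - phi * phi)
K = kahan(n, phi)
for i in range(n):
    # every column has unit norm
    assert abs(column_dot(K, i, i) - 1.0) < 1e-12
    for j in range(i + 1, n):
        # cosine with any later column: 1 - (1 + phi) s^(2i), 0-based i
        assert abs(column_dot(K, i, j) - (1 - (1 + phi) * s ** (2 * i))) < 1e-12
print("unit norms and j-independent cosines confirmed")
```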

5.2 SJSU matrices

We now discuss results coming from two subsets of the San Jose State University Singular matrix database, which we call:

1. “small matrices”: it consists of the 261 matrices with m ≤ 1024, 32 < n ≤ 2048, sorted in ascending order with respect to the number of columns n;
2. “big matrices”: it consists of the first 247 matrices with m > 1024, n > 2048, sorted in ascending order with respect to the number of columns n.

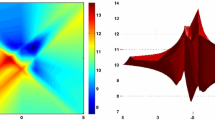

These datasets consist of “tall” (m > n), “fat” (m < n) and square matrices; the results presented hereafter do not depend on this characteristic. For each matrix A, we denote by \(\sigma_{i}\) the i-th singular value of A computed with LAPACK’s xgejsv subroutine, and by r the numerical rank computed with the option JOBA='A': in this case, small singular values are comparable with roundoff noise and the matrix is treated as numerically rank deficient. Deviation maximization does not guarantee that the diagonal values of the factor R are monotonically decreasing in modulus; therefore, we do not sort the diagonal entries, and we denote by \(d_{i}\) the absolute value of the i-th diagonal entry. As an example, Fig. 1 shows the singular values \(\sigma_{i}\) and the diagonal values \(d_{i}\) computed with both QP3 and QRDM for instance no. 3 of the set “small matrices”. Figure 1a shows that the diagonal values \(d_{i}\) computed with QP3 are monotonically decreasing, while those computed with QRDM are not ordered. However, as highlighted in Fig. 1b, the order of magnitude of \(\sigma_{i}\) is well approximated by that of the corresponding \(d_{i}\) for both methods.

Let us first discuss the results provided by QP3. Figure 2 compares the positive diagonal entry \(d_{i}\) with the corresponding singular value \(\sigma_{i}\) for each matrix in the two collections, through the maximum and minimum values of the ratios \(d_{i}/\sigma_{i}\). The results show that the positive diagonal value \(d_{i}\) approximates the corresponding singular value \(\sigma_{i}\) up to a factor 10, for \(i = 1,\dots ,r\). Moreover, Fig. 3 compares \(\sigma_{i}(R_{11})\), that is, the i-th singular value of \(R_{11} = [R]_{1:r,1:r}\) computed by LAPACK’s xgejsv, with \(\sigma_{i}\) for each matrix in the two collections, through the ratios \(\sigma_{i}(R_{11})/\sigma_{i}\). These results confirm that QP3 provides an approximation of the singular value \(\sigma_{r}\) up to a factor 10.
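The accuracy measure behind Figs. 2 and 3 can be written down explicitly. The sketch below reads "up to a factor 10" as \(10^{-1} \le d_{i}/\sigma_{i} \le 10\) for \(i = 1,\dots,r\), which is an assumption about the exact criterion; the function names are hypothetical and the numbers in the example are toy values, not experimental data.

```python
def ratio_range(d, sigma, r):
    """Min and max of d[i] / sigma[i] over the first r indices.

    d: absolute values of the diagonal entries of R, in factorization order
       (not sorted); sigma: singular values of A in decreasing order.
    """
    ratios = [d[i] / sigma[i] for i in range(r)]
    return min(ratios), max(ratios)


def within_factor(d, sigma, r, factor=10.0):
    """True if every ratio d_i / sigma_i lies in [1/factor, factor]."""
    lo, hi = ratio_range(d, sigma, r)
    return 1.0 / factor <= lo and hi <= factor


# Toy values for illustration only (not experimental data).
d = [5.0, 0.5, 2e-3]
sigma = [4.0, 1.0, 1e-3]
print(ratio_range(d, sigma, 3))    # (0.5, 2.0)
print(within_factor(d, sigma, 3))  # True
```

The same functions apply to the ratios \(\sigma_{i}(R_{11})/\sigma_{i}\) by passing the singular values of \(R_{11}\) in place of d.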

Before providing similar results for QRDM, let us discuss the sensitivity of the rank-revealing property (16) to the parameters τ and δ. To this aim, we set a grid \({\mathscr{G}}\) of values \({\mathscr{G}}(i,j) = (\delta _{i},\tau _{j}) = (0.05\ i,0.05\ j)\), with \(i,j=0,\dots ,20\), and we consider the R factor obtained by QRDM. Figure 4a shows the order of magnitude of

where A ranges over the collection “small matrices”, for each grid point \((\delta_{i},\tau_{j})\). We see that the positive diagonal elements provide an approximation of the singular values up to a factor 10 for a wide range of parameters, corresponding to the light gray region of the grid, which we call the stability region. In practice, any choice of 0 < τ ≤ 1 and 0 ≤ δ < 1 leads to a rank-revealing QR decomposition.

Therefore, to choose the parameters, we look at execution times. Figure 4b shows the cumulative execution times (in seconds) \({\sum }_{A} t_{QRDM}(A)\), where A ranges over the “small matrices” collection and \(t_{QRDM}(A)\) is the execution time of QRDM, for each grid point \((\delta_{i},\tau_{j})\) in the stability region. The best performance is obtained toward the bottom-right corner, in the dark gray region. Hence, we set τ = 0.15 and δ = 0.9, which are the optimal values for the validation set considered here.

We can now analyze the quality of the RRQR factorization obtained by QRDM with the parameter choice just discussed. Figure 5 shows that the positive diagonal entries approximate the singular values up to a factor 10, and Fig. 6 shows that the singular values of \(R_{11}\) provide an approximation up to a factor \(10^{2}\), losing one order of magnitude with respect to QP3 in very few cases.

Let us now consider QRDM with a stopping criterion. We show the accuracy in the determination of the numerical rank, and the benefits in terms of execution times, when the matrix rank is much smaller than its number of columns. We consider the stopping criterion in (36)–(38): the numerical rank in this case is given by the number of columns processed by QRDM, and we denote it by \(\tilde {r}\). The matrix

denotes the corresponding rank-\(\tilde {r}\) approximation of A. Figure 7 shows the ratios \(\sigma _{\tilde {r}+1}/\Vert A\Vert \) (in red) and \(\Vert A - A_{\tilde {r}}\Vert /\Vert A\Vert \) (in blue) for all matrices in the “small matrices” (Fig. 7a) and “big matrices” (Fig. 7b) collections. Whenever the i-th matrix has full rank, so that the singular value \(\sigma _{\tilde {r}+1}\) does not exist, we replace its value with \(\varepsilon = 10^{-16}\). We also considered the stopping criterion in (36) with the choice (39), which turned out to be less accurate; for this reason, we omit those results.
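The two curves can be related as follows, assuming that \(A_{\tilde r}\) is the truncated factorization obtained by discarding the trailing block \(R_{22}\) of \(A\Pi = QR\) when QRDM stops after \(\tilde r\) columns (its defining display is not reproduced in this section) and that the norm is the 2-norm. Because Q and Π are orthogonal, the approximation error equals the norm of \(R_{22}\), and the Eckart–Young theorem bounds \(\sigma_{\tilde r+1}\) by it:

```latex
\begin{aligned}
A\Pi &= Q\begin{pmatrix} R_{11} & R_{12} \\ 0 & R_{22} \end{pmatrix},
\qquad
A_{\tilde r} = Q\begin{pmatrix} R_{11} & R_{12} \\ 0 & 0 \end{pmatrix}\Pi^{T},
\qquad R_{11} \in \mathbb{R}^{\tilde r \times \tilde r},\\
\Vert A - A_{\tilde r} \Vert
 &= \left\Vert Q\begin{pmatrix} 0 & 0 \\ 0 & R_{22} \end{pmatrix}\Pi^{T} \right\Vert
  = \Vert R_{22} \Vert,\\
\sigma_{\tilde r+1}(A) &= \min_{\operatorname{rank}(B) \le \tilde r} \Vert A - B \Vert_{2}
 \le \Vert A - A_{\tilde r} \Vert_{2} = \Vert R_{22} \Vert_{2}.
\end{aligned}
```

Under these assumptions the blue ratio can never fall below the red one, and a small gap between them indicates that the truncated factorization is close to the best rank-\(\tilde r\) approximation of A.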

Finally, we compare the execution times of the QR computations for the matrices of the set “big matrices”. Here, the instances have been ordered according to the total number of entries mn. Figure 8 shows the speedup of QRDM (Fig. 8a) and of QRDM with stopping criterion (Fig. 8b) over QP3, namely the ratio \(t_{QP3}/t_{QRDM}\), where \(t_{QP3}\) and \(t_{QRDM}\) are the execution times (in seconds) of QP3 and QRDM, respectively. QRDM achieves an average speedup of 2.1×, as a consequence of the smaller amount of memory communication required by the pivoting, as detailed in Section 4.1. If a stopping criterion is adopted, the average speedup is 2.5×. A comparison with an implementation of QP3 with a termination criterion may also be of interest, but it is beyond the scope of the present work.

Figure 9 compares QP3 and QRDM with the QR factorization without pivoting, called QR for short, implemented by LAPACK’s dgeqrf subroutine. We display the ratios \(t_{QRDM}/t_{QR}\) and \(t_{QP3}/t_{QR}\), where \(t_{QR}\) denotes the execution times (in seconds) of QR. The standard QR is indeed faster, since it does not involve any column permutation: on average it is 3× faster than QP3, but only 1.3× faster than QRDM. This result is obtained thanks to the permutation strategy described in Section 4.1.

Lastly, let us briefly discuss the effect of the block size \(k_{DM}\), introduced to limit the cardinality of the candidate set in (10). This parameter depends on the specific architecture, mainly in terms of cache-memory size, and typical values are \(k_{DM} = 32, 64, 128\). We observed that there is an optimal value of \(k_{DM}\), in the sense that it gives the smallest execution time for a fixed experimental setting; determining it is similar to the well-known practice of tuning the BLAS block size, which is beyond the scope of this paper. For the sake of clarity, in our test environment we observed the optimal value \(k_{DM} = 64\), but other choices, e.g., \(k_{DM} = 32\), exhibit a similar behavior.

6 Conclusions

In this work we have presented a new subset selection strategy, which we called “Deviation Maximization”. Our method relies on correlation analysis in order to select a subset of sufficiently linearly independent vectors. Although this strategy is not sufficient by itself to identify a maximal subset of linearly independent columns of a given numerically rank-deficient matrix, it can be adopted as a column pivoting strategy. We introduced the rank-revealing QR factorization with Deviation Maximization pivoting, called QRDM for short, and we compared it with the rank-revealing QR factorization with standard column pivoting, QRP for short. We have provided a theoretical worst-case bound on the smallest singular value for QRDM and we have shown that it is similar to available results for QRP. Extensive numerical experiments confirmed that QRDM reveals the rank similarly to QRP and provides a good approximation of the singular values obtained with LAPACK’s xgejsv routine. Moreover, we have shown that QRDM has better execution times than QP3, as implemented in LAPACK’s double precision dgeqp3 routine, for a large number of test cases, thanks to the lower amount of memory communication involved. The software implementation of QRDM used in this article is available at the URL: https://github.com/mdessole/qrdm.

Our future work will focus on applying deviation maximization as a pivoting strategy to other problems that require column selection, e.g., constrained optimization problems; the authors have already successfully tested a preliminary version in the context of active set methods for NonNegative Least Squares problems, see [11, 12].

Change history

19 July 2022

Missing Open Access funding information has been added in the Funding Note.

References

Anderson, E., Bai, Z., Bischof, C., Blackford, S., Demmel, J., Dongarra, J., Du Croz, J., Greenbaum, A., Hammarling, S., McKenney, A., Sorensen, D.: LAPACK Users’ Guide. Society for Industrial and Applied Mathematics, Philadelphia, PA, 3rd edn. ISBN 0-89871-447-8 (paperback) (1999)

Barlow, J., Demmel, J.: Computing accurate eigensystems of scaled diagonally dominant matrices. SIAM J. Numer. Anal. 27, 11 (1990). https://doi.org/10.1137/0727045

Bischof, C., Hansen, P.: A block algorithm for computing rank-revealing QR factorizations. Numer. Algo. 2, 371–391 (1992). https://doi.org/10.1007/BF02139475

Bischof, C., Quintana-Ortí, G.: Computing rank-revealing QR factorizations of dense matrices. ACM Trans. Math. Softw. 24, 226–253 (1998a). https://doi.org/10.1145/290200.287637

Bischof, C., Quintana-Ortí, G.: Algorithm 782: codes for Rank-Revealing QR factorizations of dense matrices. ACM Trans. Math. Softw. 24, 254–257 (1998b). https://doi.org/10.1145/290200.287638

Bischof, J.R.: A block QR factorization algorithm using restricted pivoting. In: Supercomputing ’89: Proceedings of the 1989 ACM/IEEE Conference on Supercomputing, pp. 248–256 (1989). https://doi.org/10.1145/76263.76290

Businger, P., Golub, G.H.: Linear Least Squares Solutions by Householder Transformations. Numer. Math. 7(3), 269–276 (1965). ISSN 0029-599X. https://doi.org/10.1007/BF01436084

Chan, T.F.: Rank revealing QR factorizations. Linear Algebra Appl. 88-89, 67–82 (1987). ISSN 0024-3795. https://doi.org/10.1016/0024-3795(87)90103-0. http://www.sciencedirect.com/science/article/pii/0024379587901030

Chandrasekaran, S., Ipsen, I.C.F.: On Rank-Revealing factorisations. SIAM J. Matrix Anal. Appl. 15(2), 592–622 (1994). https://doi.org/10.1137/S0895479891223781

Demmel, J., Grigori, L., Gu, M., Xiang, H.: Communication avoiding rank revealing QR factorization with column pivoting. SIAM J. Matrix Anal. Appl. 36, 55–89 (2015). https://doi.org/10.1137/13092157X

Dessole, M., Marcuzzi, F., Vianello, M.: Accelerating the Lawson-Hanson NNLS solver for large-scale Tchakaloff regression designs. Dolomites Research Notes on Approximation 13, 20–29 (2020a). ISSN 2035-6803. https://doi.org/10.14658/PUPJ-DRNA-2020-1-3. https://drna.padovauniversitypress.it/2020/1/3

Dessole, M., Marcuzzi, F., Vianello, M.: DCATCH—a numerical package for d-variate near g-optimal Tchakaloff regression via fast NNLS. Mathematics 8, 7 (2020b). https://doi.org/10.3390/math8071122

Drmač, Z., Bujanović, Z.: On the Failure of Rank-Revealing QR Factorization Software – A Case Study. ACM Trans. Math. Softw. 35(2) (2008). ISSN 0098-3500. https://doi.org/10.1145/1377612.1377616

Duersch, J.A., Gu, M.: Randomized QR with column pivoting. SIAM J. Sci. Comput. 39(4), C263–C291 (2017). https://doi.org/10.1137/15M1044680

Foster, L.V.: Rank and null space calculations using matrix decomposition without column interchanges. Linear Algebra Appl. 74, 47–71 (1986). ISSN 0024-3795. https://doi.org/10.1016/0024-3795(86)90115-1. https://www.sciencedirect.com/science/article/pii/0024379586901151

Golub, G.: Numerical methods for solving linear least squares problems. Numer. Math. 7(3), 206–216 (1965). ISSN 0029-599X. https://doi.org/10.1007/BF01436075

Golub, G., Van Loan, C.: Matrix Computations (4th ed.). Johns Hopkins Studies in the Mathematical Sciences. Johns Hopkins University Press, Baltimore (2013). ISBN 9781421407944

Golub, G., Klema, V., Stewart, G.W.: Rank degeneracy and least squares problems. Technical Report STAN-CS-76-559. Department of Computer Science Stanford University, Stanford (1976)

Gu, M., Eisenstat, S. C.: Efficient algorithms for computing a strong Rank-Revealing QR factorization. SIAM J. Sci. Comput. 17(4), 848–869 (1996). https://doi.org/10.1137/0917055

Hansen, P.C.: Rank-Deficient and Discrete Ill-Posed Problems: Numerical Aspects of Linear Inversion. Society for Industrial and Applied Mathematics, USA (1999). ISBN 0898714036

Higham, N.J.: A survey of condition number estimation for triangular matrices. SIAM Rev. 29(4), 575–596 (1987). ISSN 0036-1445. https://doi.org/10.1137/1029112

Hong, Y.P., Pan, C.-T.: Rank-revealing QR factorizations and the singular value decomposition. Math. Comput. 58(197), 213–232 (1992). ISSN 00255718, 10886842. http://www.jstor.org/stable/2153029

Kahan, W.: Numerical linear algebra. Can. Math. Bull. 9, 757–801 (1966)

Lawson, C. L., Hanson, R.J.: Solving least squares problems, vol. 15. SIAM, Philadelphia (1995)

Martinsson, P.G.: Blocked rank-revealing QR factorizations: How randomized sampling can be used to avoid single-vector pivoting. Report. arXiv:1505.08115 (2015)

Mikhalev, A., Oseledets, I.: Rectangular maximum-volume submatrices and their applications. Linear Algebra Appl. 538, 187–211 (2018). ISSN 0024-3795. https://doi.org/10.1016/j.laa.2017.10.014. https://www.sciencedirect.com/science/article/pii/S0024379517305931

Quintana-Ortí, G., Sun, X., Bischof, C. H.: A BLAS-3 version of the QR factorization with column pivoting. SIAM J. Sci. Comput. 19(5), 1486–1494 (1998). https://doi.org/10.1137/S1064827595296732

Schreiber, R., VanLoan, C.: A Storage-Efficient WY representation for products of householder transformations. SIAM J. Sci. Stat. Comput. 10, 02 (1989). https://doi.org/10.1137/0910005

Thompson, R.: Principal submatrices IX: Interlacing inequalities for singular values of submatrices. Linear Algebra Appl. 5(1), 1–12 (1972). ISSN 0024-3795. https://doi.org/10.1016/0024-3795(72)90013-4. https://www.sciencedirect.com/science/article/pii/0024379572900134

Varah, J.: A lower bound for the smallest singular value of a matrix. Linear Algebra Appl. 11(1), 3–5 (1975). ISSN 0024-3795. https://doi.org/10.1016/0024-3795(75)90112-3. http://www.sciencedirect.com/science/article/pii/0024379575901123

Xiao, J., Gu, M., Langou, J.: Fast Parallel Randomized QR with Column Pivoting Algorithms for Reliable Low-Rank Matrix Approximations. In: 2017 IEEE 24th International Conference on High Performance Computing (HiPC), pp. 233–242 (2017). https://doi.org/10.1109/HiPC.2017.00035

Acknowledgements

The authors sincerely thank the anonymous referees for their constructive suggestions that improved the quality of the paper.

Funding

Open access funding provided by Università degli Studi di Padova within the CRUI-CARE Agreement. M. Dessole gratefully acknowledges the company beanTech S.r.l. for funding the doctoral grant “GPU computing for modeling, nonlinear optimization and machine learning”. This work was partially supported by the Project BIRD192932 of the University of Padova.


Ethics declarations

Conflict of interest

The authors declare no competing interests.

Additional information

Data availability

The data that support the findings of this study are available from https://github.com/mdessole/qrdm; the datasets analyzed are available from http://www.math.sjsu.edu/singular/matrices.

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Dessole, M., Marcuzzi, F. Deviation maximization for rank-revealing QR factorizations. Numer Algor 91, 1047–1079 (2022). https://doi.org/10.1007/s11075-022-01291-1


DOI: https://doi.org/10.1007/s11075-022-01291-1