Abstract

Numerical continuation tools are nowadays standard methods for the bifurcation analysis of dynamical systems. Unfortunately, the full power of these methods is still unavailable in experiments, in particular if no underlying mathematical model is at hand. Here we aim to narrow this gap by providing control-based continuation of periodic states which can ultimately be implemented in real-world experimental set-ups. Taking inspiration from atomic force microscopy, we develop experimentally relevant control and tracking tools for time-periodic solutions in driven nonlinear oscillator systems based on stroboscopic maps.

1 Introduction and experimental context

Tools to analyse the phase space structure of dynamical systems are probably at the heart of most subjects in science and engineering. Approaches which exploit linear dynamical properties have a well established tradition, which to some extent dates back more than a century. Linear tools still provide some of the most powerful methods to analyse dynamical behaviour, as most vividly illustrated by the success of linear response theory, which underpins, among others, large branches of physics and chemistry (see, for example, textbooks such as [1]). Within the context of data science, one should probably also mention the seminal idea of [2], which planted the seed for a whole universe of successful data analysis tools exploiting the concept of linearity.

With the advent of nonlinear dynamics and chaotic behaviour about four decades ago, the need to supplement these approaches by suitable nonlinear techniques became apparent (see, for example, [3]). From a plain theoretical perspective, bifurcations as well as unstable solutions are key to understanding the dynamics in systems with a finite number of degrees of freedom. The importance of unstable solutions is also emphasised by the fact that these orbits may provide a skeleton for an otherwise observable stable chaotic behaviour [4]. Powerful numerical tools have been developed to explore the bifurcation and phase space structure of low dimensional dynamical systems, whenever the mathematical equations of motion are accessible [5]. We ultimately aim at taking this idea to the next level and making bifurcation analysis and tracking of unstable solutions available in an experimental context, when no underlying equations of motion are at hand. Equation-free analysis can be viewed as a first step in such a direction since the ideas proposed in [6] have the potential to reduce a microscopic model with a huge number of degrees of freedom to an effective low dimensional macroscopic dynamics where bifurcation analysis becomes a meaningful approach. But, in contrast to a theoretical analysis, the detection and tracking of bifurcations in an experimental setting becomes a challenge as unstable states are not directly accessible. For this reason, one ultimately relies on control techniques which on the one hand are able to stabilise unknown periodic states and which on the other hand have no impact on the target state so that the control force finally vanishes.
We ultimately aim at proposing a true model-free approach by developing concepts for phase space reductions and bifurcation analysis to analyse real-world experiments for which no underlying mathematical model is available (see, for example, [7] for a recent application in the context of crowd management), and which cannot be tackled by state of the art methods. In this context, experimental control techniques are a key for stabilising and tracking experimentally unstable states, and these techniques can then even be considered as a kind of novel spectroscopic tool for systems analysis.

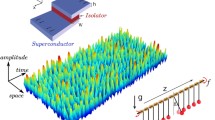

Fig. 1 a Experimental time series (voltage at the photodiode vs. time) for an atomic force microscope micro-cantilever in dynamic mode with a driving frequency of \(53.7\,\text{kHz}\). b Nonlinear resonance curve (amplitude of the oscillation vs. driving frequency) for an atomic force microscope in dynamic mode. Experimental frequency sweep (shark fin) under ambient conditions, in the vicinity of a Si surface (attractive interaction), exposing a clearly visible jump (left arrow) from a high-amplitude branch to a low-amplitude branch when sweeping from high to low frequencies (cross, bronze). A jump, though much smaller in size (right arrow), from the low-amplitude to the high-amplitude branch appears as well when sweeping from low to high frequencies (circle, blue). The amplitude has been estimated from the Fourier coefficient of the first harmonic (cf. panel a)

There exists a considerable number of non-invasive feedback control methods in the literature which are able to stabilise unstable equilibria or unstable periodic orbits and where in principle no a priori knowledge of the unstable state is required. These methods may be suitable for stabilising physical systems and to implement a model-free approach by identifying effective phase space structures based on plain observations. The class of washout filter-aided feedback control methods introduced in [8,9,10] can be understood as a proportional-plus-integral control to stabilise unstable stationary points. Linear stability theory can be utilised to prove linear stabilisation of equilibria or periodic orbits of the closed-loop system by washout filter-aided feedback control under certain conditions [10]. The seminal work of Ott, Grebogi and Yorke [11] was based on a similar reasoning where, however, some a priori knowledge of the Poincare map is required. These essential details, such as an estimate of the target state, can be obtained from data, normally by a suitable preprocessing of experimental time series, and [12] presents generalisations of these ideas covering stabilisation and tracking of unstable orbits as well as a successful application of the method in a model of atomic force microscopy. Stabilisation of unstable periodic orbits with a feedback control using a time-delayed signal has been introduced in [13] and applications can be found, for example, in [14]. In the same spirit, Sieber and Krauskopf [15] proposed a method based on time-delayed feedback control to stabilise periodic orbits. The authors used pseudo-arclength continuation for tracking of periodic orbits and this method succeeds in experiments even when fold bifurcations occur. An experimental application of this approach for pendula in the vicinity of saddle-node bifurcations is presented in [16]. Pyragas et al. 
suggested the simplest implementation of a state observer and proportional feedback to deal with the stabilisation of unknown equilibria in experiments [17, 18]. The method has much in common with the previously mentioned washout filter-aided feedback control [9, 19]. These methods have the limitation that tracking around a fold bifurcation fails at the bifurcation point. A numerical implementation of an equation-free approach where a bifurcation diagram at a macroscopic scale is obtained by applying pseudo-arclength continuation with a feedback control law to equations on a microscopic scale can be found in [20]. Application of feedback control with a suitable prediction of target states has been proposed in [21] to explore bifurcation diagrams for laboratory experiments. Along similar lines, an implementation of an experimental continuation scheme based on local proportional–derivative control for forced nonlinear oscillators is presented in [22,23,24,25].

While our subsequent analysis will be entirely theoretical, our approach is inspired by real-world experiments on fast time scales where a model-free approach has so far never been put to a test. As a paradigmatic experimental set-up, we consider atomic force microscopy which is a quite versatile method to inspect surfaces and attached structures or objects thereon, down to the nanoscale [26, 27]. The sensor element is a bending micro-cantilever of crystalline silicon or amorphous Si\(_3\)N\(_4\) with a tip at one end. Most popular is the dynamic mode, where the cantilever is driven into oscillation via a dither or shaker piezo. A registration laser spot is reflected from the top end of the metal-coated cantilever onto a segmented photodiode. The photocurrents are dynamic, and they serve as a measure of the vertical and lateral displacement of the tip upon cantilever bending or torsion. Many attempts have been undertaken to model force microscopy, using driven, damped harmonic oscillator models with more or less complicated tip–sample contact models. A theory-reality gap persists, mainly due to poorly controllable effects such as dissipation, capillary forces, patch charges, soft crashes, and others. Nonlinear effects are already caused by anharmonicities of the bending cantilever. In frequency scans of dynamic force microscopy close to a surface, a low- and a high-amplitude branch exist, with an unstable branch at intermediate amplitude (cf. Fig. 1b). Jumping between the two stable branches during acquisition of topography is a common distortion, particularly with mobile molecular species, requiring manual intervention. Particularly beyond the resonance frequency of the cantilever, a rather broad range of tip–sample separations is prone to such imaging instabilities. In [28], stabilisation of a cantilever oscillation has been achieved, leading to reduced noise.
For suspended two-dimensional materials, the analysis of nonlinear dynamics enabled determination of elastic and heat transport properties [29, 30]. In dynamic force microscopy, two bistabilities can be accessed, one in the attractive (soft interaction) and another in the repulsive (strong interaction) regime [31]. In experiments with control electronics, the unstable branches cannot be reproduced, and the resonance curves take on shark fin signatures, see Fig. 1b. In addition, chaotic motion of micro-cantilevers has been reported in [32,33,34,35].

Atomic force microscopes may therefore serve as a paradigmatic model system to test a model-free approach properly for systems at the nanoscale and on the \(\text{ kHz }\) frequency scale. The device clearly displays instabilities and bifurcations known from dynamical systems theory. Hence, it looks promising to apply suitable control techniques to track unstable periodic orbits and to continue related unstable branches and bifurcation points. We aim at proposing a control scheme which can stabilise unknown periodic states and which does not require any kind of data processing, i.e. the control is based on the measurement of a single data point per period. In addition, the scheme needs to be non-invasive, i.e. control forces have to vanish asymptotically so that the final state is a periodic solution of the system without control. These constraints rule out various of the control techniques mentioned previously; for instance, no time-consuming data processing can be done, e.g. to reconstruct potential target states from observed data. We want to stress again that we see here atomic force microscopy just as a motivation. We do not claim that the control scheme developed here can be instantly implemented in experiments. Nevertheless, we consider the theoretical challenge of designing a control scheme within the limitations described above to be of interest from the plain theoretical point of view. Of course that does not rule out that our ideas may ultimately find an experimental realisation within atomic force microscopy.

We introduce in Sect. 2 a simple driven oscillator model which will be used to illustrate our control design, and we set up the required notation for the formal analysis of the Poincaré map. Linear proportional full-state feedback on a system parameter in the Poincaré map will be used to achieve stabilisation of unstable periodic orbits, and the details are outlined in Sect. 3. The selection of optimal control gains and the analytic procedure for tracking unstable branches are introduced in Sect. 4 where we also cover the limitations of the control design which are determined by controllability conditions known from classical control theory. Various generalisations of the concept, such as the impact of a ceiling function for the control feedback, or the use of delayed observations in cases where full-state feedback is not feasible, are covered in Sect. 5. Finally, we also discuss the impact of our theoretical considerations for the real-world application outlined above.

2 Stroboscopic map and periodic states

As a motivation to develop the theoretical concepts for tracking unstable states with a view to perform a data-based experimental bifurcation analysis, let us consider the one-dimensional motion of a particle in an external potential subjected to damping and a periodic harmonic drive with amplitude h

For the purpose of a numerical illustration, we will choose later a Toda oscillator [36] in non-dimensional units with

and parameter values

Equation (1) is an example of a two-dimensional non-autonomous differential equation with T-periodic time dependence which can, in general, be written as

Here, the two-dimensional vector \(\varvec{z}\) describes the state of the system, \(\varvec{f}_\mu \) the right-hand sides, and the subscript \(\mu \) takes the dependence of the system on a static real-valued external parameter into account. We will base our theoretical considerations on the fairly general class of equations given by Eq. (4), and that clearly covers the concrete physical example Eq. (1).

As we are finally interested in stabilising and tracking time-periodic solutions of Eq. (4), it will turn out to be convenient to capture the dynamics in terms of a stroboscopic map. If \(\varvec{z}(t)=\varvec{\zeta }_\mu (t,\varvec{z}_0)\) denotes the solution of the system Eq. (4) with initial condition \(\varvec{z}(0)=\varvec{z}_0\), that means

the stroboscopic map \(\varvec{F}_\mu \) is given by

Here, \(\varvec{z}_n=\varvec{z}(T_n)\) denotes the solution at times \(T_n\), where \(T_n\) stands for discrete time points with fixed phase of the driving field, e.g. \(T_n=n T\) is the end of the n-th period of the drive. In experimental terms, a stroboscopic map is easily realised by recording the output at integer multiples of the period only. While it is obvious how to obtain the stroboscopic map, Eq. (6), based on numerical integration over one period, we finally aim at a scheme which does not require the precise form of the map \(\varvec{F}_\mu \) and thus can be implemented as well in experiments. For the time being, let us assume that we have access to the dynamics and to the stroboscopic map, say via Eq. (5).
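The numerical route to the stroboscopic map mentioned above can be sketched in a few lines. This is a minimal illustration under stated assumptions, not the implementation behind the figures: it assumes a fixed-step Runge-Kutta integrator, a Toda-type restoring force \(e^x-1\), a cosine drive, and made-up values for damping and drive amplitude. The names `rk4_step`, `stroboscopic_map`, `driven_oscillator`, `gamma` and `h` are hypothetical.

```python
import math

def rk4_step(f, t, z, dt):
    """One classical Runge-Kutta step for dz/dt = f(t, z); z is a list of floats."""
    k1 = f(t, z)
    k2 = f(t + dt / 2, [zi + dt / 2 * ki for zi, ki in zip(z, k1)])
    k3 = f(t + dt / 2, [zi + dt / 2 * ki for zi, ki in zip(z, k2)])
    k4 = f(t + dt, [zi + dt * ki for zi, ki in zip(z, k3)])
    return [zi + dt / 6 * (a + 2 * b + 2 * c + d)
            for zi, a, b, c, d in zip(z, k1, k2, k3, k4)]

def stroboscopic_map(f, z0, period, steps=2000):
    """Integrate from t = 0 to t = period and return the state at the end."""
    dt = period / steps
    z = list(z0)
    for i in range(steps):
        z = rk4_step(f, i * dt, z, dt)
    return z

def driven_oscillator(omega, gamma=0.1, h=0.2):
    """Assumed model: x'' + gamma x' + (exp(x) - 1) = h cos(omega t).
    gamma and h are illustrative values, not the parameters of the text."""
    def f(t, z):
        x, v = z
        return [v, -gamma * v - (math.exp(x) - 1.0) + h * math.cos(omega * t)]
    return f

# Iterate the map: one sample of (x, v) per drive period.
omega = 1.0
F = lambda z: stroboscopic_map(driven_oscillator(omega), z, 2 * math.pi / omega)
z = [0.0, 0.0]
for n in range(5):
    z = F(z)
```

In an experiment the integration step is replaced by the physical system itself; only the sampling once per period carries over.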

A T-periodic solution of Eq. (4) turns out to correspond to a fixed point of the stroboscopic map, i.e.

with \(\varvec{z}(t)=\varvec{\zeta }_\mu (t,\varvec{z}_*)=\varvec{\zeta }_\mu (t+T,\varvec{z}_*)\) being the periodic time-dependent state. The condition Eq. (7) determines the fixed point manifold \(\varvec{z}_*=\varvec{z}_*(\mu )\) in dependence on the system parameter. Using \(\varvec{z}_n=\varvec{z}_*+\varvec{\delta z}_n\), a linear stability analysis of Eq. (6) yields the so-called variational equation

where \(\text {D}\varvec{F}_\mu (\varvec{z}_*)\) denotes the Jacobian matrix at the fixed point. Stability requires all eigenvalues of the Jacobian to lie inside the unit circle. The so-called Jury criterion [37] gives necessary and sufficient conditions for stability in discrete-time dynamical systems. For a two-dimensional map \(\varvec{F}_\mu (\varvec{z})\), these conditions can be expressed in terms of the trace and the determinant of the Jacobian matrix \(\text {D}\varvec{F}_\mu (\varvec{z}_*)\) evaluated at the fixed point \(\varvec{z}_*\). The stability criteria read
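For a real \(2\times 2\) matrix, a standard way to state these conditions is \(|\text{tr}| < 1 + \det\) together with \(\det < 1\), which amounts to three linear inequalities in trace and determinant. The following check is a minimal sketch of that textbook form; the function names `trace_det` and `is_stable_2d` are hypothetical.

```python
def trace_det(m):
    """Trace and determinant of a 2x2 matrix given as nested lists."""
    (a, b), (c, d) = m
    return a + d, a * d - b * c

def is_stable_2d(trace, det):
    """True if both eigenvalues lie strictly inside the unit circle.

    Equivalent to the three linear inequalities
    1 - trace + det > 0, 1 + trace + det > 0, 1 - det > 0.
    """
    return abs(trace) < 1.0 + det and det < 1.0

# A contracting matrix passes, a saddle with eigenvalues 2 and 0.5 fails.
stable = is_stable_2d(*trace_det([[0.5, 0.1], [0.0, -0.3]]))
saddle = is_stable_2d(*trace_det([[2.0, 0.0], [0.0, 0.5]]))
```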

To illustrate the considerations so far, we resort to the example defined in Eqs. (1–3). The state vector is given by \(\varvec{z}=(x,v)\), and we chose the frequency \(\omega \) as our external static parameter \(\mu \). Integration over a period simply gives the stroboscopic map, and iteration gives a discrete time series \((\varvec{z}_0,\varvec{z}_1,\ldots )\) which contains position and velocity at discrete times \(T_n=n T\). Numerical simulations demonstrate that this series ultimately may converge to a fixed point \(\varvec{z}_*=(x_*,v_*)\). Skipping the transient we record the fixed point, while we change the parameter \(\mu \) in a quasi-stationary way. While we could simply record each component of the state vector \(\varvec{z}_*=(x_*,v_*)\), we chose here the combination \(r^2_*= x_*^2 + v_*^2/\omega ^2\) as this quantity would give the actual amplitude of the oscillation if the solution were a plain harmonic function. The result displayed in Fig. 2 clearly shows the usual tilted nonlinear resonance curve with a region of bistability which contains as well an invisible unstable state. This unstable state normally determines the basin boundaries of the two coexisting stable branches via a separatrix. We aim to complete this bifurcation scenario by supplementing the unstable state with a method which can then also be implemented in experiments.

Fig. 2 Nonlinear resonance in the driven Toda oscillator, Eqs. (1) and (2), with parameters as in Eq. (3). Stable fixed points of the stroboscopic map, \(r_*^2=x_*^2+v_*^2/\omega ^2\), in dependence on the driving frequency, \(\omega \). Circle (blue): adiabatic parameter downsweep, cross (bronze): adiabatic parameter upsweep

3 Instantaneous full-state feedback

A bifurcation analysis and the corresponding numerical continuation tools can easily supplement the data shown in Fig. 2 with the unstable branch. To achieve such a goal in experiments, one needs to design control tools to stabilise and track unstable states based on observations. In addition, such a control has to be non-invasive as the control should not alter the position of the final unstable state. As we deal here with time periodic solutions of time continuous dynamical systems, we base our approach on the stroboscopic map, Eq. (6), since just the stabilisation of unstable fixed points is at stake. We chose the parameter \(\mu \) as our control input, so that \(\mu \) becomes a dynamical quantity \(\mu _n\). The simplest way to install a closed-loop control is by linear state feedback. That means on each time step we update the parameter \(\mu \) by a linear function of the current state, so that

Here, \(\varvec{\alpha }^T =(\alpha _1,\alpha _2)\) are the two control gains and \(\mu _R\) and \(\varvec{z}_R\) denote reference values which result in a single offset. The closed-loop dynamics then reads

The fixed point of Eq. (11) is given by (see as well Eq. (10))

Hence, the fixed point \(\varvec{z}_*\) and the corresponding parameter value \(\mu _*\) are determined by the intersection of the fixed point manifold given in Eq. (7) and a hyperplane which is determined by the reference values \((\mu _R,\varvec{z}_R)\). By making the system parameter \(\mu \) a dynamical quantity, we aim at stabilising fixed points which have been previously unstable, by a suitable choice of the control gains \(\varvec{\alpha }^T\). Stability of the fixed point is governed again by the variational equation of Eq. (11) which reads

where the \(2\times 2\) square matrix \(\varvec{A}\) is given by

Fig. 3 Successful control of the unstable state in the driven Toda oscillator, Eqs. (1) and (2), with parameter setting according to Eq. (3), see Fig. 2 for data without control. The frequency \(\omega \) has been used as control input \(\mu \). Reference values in the control law Eq. (10) are \(\omega _R=0.97\), \(x_R=(\varvec{z}_R)_1=-0.28\), \(v_R=(\varvec{z}_R)_2=-0.54\), while gains have been chosen as \(\alpha _1=4.9\) and \(\alpha _2=8.5\). Time series of the stroboscopic map with control, Eq. (11), and initial condition \(x(0)=x_R\), \(v(0)=v_R\) (blue, open symbols, lines are a guide to the eye); a frequency value \(\omega _n\), b amplitude estimate \(r^2_n=x_n^2+v_n^2/\omega _n^2\). For comparison, the corresponding time series for optimal control gains, see Sect. 4, are shown as well (bronze, full symbols)

and the contribution caused by the control \(\partial \varvec{F}_* \otimes \varvec{\alpha }^T\) is the dyadic product of the control gains and the coupling of the control action to the dynamical system. Here, and in what follows, we use the shorthand notation

to abbreviate the Jacobian matrix and the parameter derivative of the system at the fixed point. Stability of the fixed point subjected to control is again determined by the Jury criterion for the matrix \(\varvec{A}\), cf. Eq. (9). To evaluate these conditions, we need the trace and the determinant of the expression Eq. (14). They are simply given by

Here, \(\text{ adj }(\text {D}\varvec{F}_*)\) denotes the adjugate matrix, which has the property that the matrix product \(\text {D}\varvec{F}_* \text{ adj }(\text {D}\varvec{F}_*)\) equals the determinant \(\det (\text {D}\varvec{F}_*)\) times the identity matrix. That means the adjugate is a kind of scaled inverse matrix. Even if the Jacobian \(\text {D}\varvec{F}_*\) becomes singular, the adjugate matrix is still well defined.
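The dependence of trace and determinant on the gains can be made explicit. As a sketch, assuming the closed-loop matrix has the rank-one form \(\varvec{A}=\text {D}\varvec{F}_* + \partial \varvec{F}_* \otimes \varvec{\alpha }^T\) described in the text, the standard identities for a rank-one update (the second is the matrix determinant lemma written with the adjugate) give

\[
\text{tr}(\varvec{A}) = \text{tr}(\text {D}\varvec{F}_*) + \varvec{\alpha }^T \partial \varvec{F}_*, \qquad
\det (\varvec{A}) = \det (\text {D}\varvec{F}_*) + \varvec{\alpha }^T\, \text{adj}(\text {D}\varvec{F}_*)\, \partial \varvec{F}_* .
\]

Both expressions are affine in \(\varvec{\alpha }\), so every stability condition formulated in terms of trace and determinant becomes a linear inequality in the two gains.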

The Jury criterion for the matrix \(\varvec{A}\) (see Eq. (9)) gives three linear inequalities for the control gains \(\varvec{\alpha }^T\). These inequalities determine a simplex, that means a triangle, in the parameter space of control gains. As long as the vectors \(\partial \varvec{F}_*\) and \(\text{ adj }(\text {D}\varvec{F}_*) \partial \varvec{F}_*\) are linearly independent, the two equations Eq. (16) can be solved for the control gains \(\varvec{\alpha }^T\) for any values of \(\text{ tr }(\varvec{A})\) and \(\text{ det }(\varvec{A})\). That means we can always find control gains so that the matrix \(\varvec{A}\) has eigenvalues which guarantee linear stability, i.e. so-called pole placement is possible. Thus, the region for successful control in the parameter plane of control gains becomes a nonempty triangle. To perform pole placement and to ensure a stable state, one needs to solve Eq. (16) for given left-hand side for the control gains \(\varvec{\alpha }^T\). That requires the coefficient matrix to be non-singular, i.e. that \(\{\partial \varvec{F}_*, \text{ adj }(\text {D}\varvec{F}_*) \partial \varvec{F}_* \}\) is a set of linearly independent vectors. This condition of linear independence is equivalent to the linear independence of \(\{\partial \varvec{F}_*, \text {D}\varvec{F}_* \partial \varvec{F}_* \}\), i.e. to the classical condition of controllability. The equivalence is obvious for a non-singular matrix \(\text {D}\varvec{F}_*\) but it can also be shown to be valid for singular matrices with a little bit of linear algebra. Hence, our condition for successful control coincides, of course, with the classical engineering controllability condition for the matrix defined in Eq. (14).
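Pole placement as described above reduces to a \(2\times 2\) linear system for the gains. The sketch below assumes the rank-one closed-loop form \(\varvec{A}=\text {D}\varvec{F}_* + \partial \varvec{F}_* \otimes \varvec{\alpha }^T\) and solves for \(\varvec{\alpha }\) by Cramer's rule; the function names and the numerical test matrix are hypothetical and serve only as an illustration.

```python
def adjugate(m):
    """Adjugate of a 2x2 matrix: m * adjugate(m) = det(m) * identity."""
    (a, b), (c, d) = m
    return [[d, -b], [-c, a]]

def matvec(m, v):
    return [m[0][0] * v[0] + m[0][1] * v[1],
            m[1][0] * v[0] + m[1][1] * v[1]]

def place_gains(DF, dF, tr_target=0.0, det_target=0.0):
    """Gains alpha such that A = DF + dF (x) alpha^T has the given trace/det.

    Solves  alpha . dF          = tr_target  - tr(DF)
            alpha . adj(DF) dF  = det_target - det(DF).
    The defaults place both eigenvalues at zero.
    """
    w = matvec(adjugate(DF), dF)
    r1 = tr_target - (DF[0][0] + DF[1][1])
    r2 = det_target - (DF[0][0] * DF[1][1] - DF[0][1] * DF[1][0])
    denom = dF[0] * w[1] - dF[1] * w[0]   # zero if dF and adj(DF) dF are parallel
    if abs(denom) < 1e-12:
        raise ValueError("controllability condition violated")
    return [(r1 * w[1] - r2 * dF[1]) / denom,
            (r2 * dF[0] - r1 * w[0]) / denom]

def closed_loop_matrix(DF, dF, alpha):
    return [[DF[i][j] + dF[i] * alpha[j] for j in range(2)] for i in range(2)]

# Illustrative saddle (eigenvalues 2 and 0.5) with coupling vector (0, 1).
DF = [[2.0, 1.0], [0.0, 0.5]]
dF = [0.0, 1.0]
alpha = place_gains(DF, dF)
A = closed_loop_matrix(DF, dF, alpha)
```

The vanishing of `denom` is exactly the loss of linear independence discussed above, which is why the gains grow without bound as that point is approached.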

Even if the controllability condition is violated, that means if the set \(\{\partial \varvec{F}_*, \text {D}\varvec{F}_* \partial \varvec{F}_* \}\) is linearly dependent so that \(\partial \varvec{F}_*\) is an eigenvector of \(\text {D}\varvec{F}_*\), one can show by a short calculation, using for instance a coordinate system where \(\text {D}\varvec{F}_*\) is diagonal, that one can still find control gains so that the system becomes stable, as long as the eigenvector of \(\text {D}\varvec{F}_*\) which differs from \(\partial \varvec{F}_*\) corresponds to a stable direction of the system without control. This case corresponds in the following sense to the seminal control algorithm proposed by Ott, Grebogi and Yorke [11] more than three decades ago. The original OGY control algorithm has been essentially motivated by a geometric construction whereby the control force in phase space is applied in the direction of the unstable manifold to push the phase space point on the stable manifold in phase space. The internal dynamics of the system then moves the phase space point towards the desired target state at a rate determined by the stable eigenvalue of the target state. Formally, this successful algorithm violates the condition of controllability. The vector which determines how the control force couples to the system, \(\partial \varvec{F}_*\), is an eigenvector of the Jacobian matrix of the system, \(\text {D}\varvec{F}_*\). Thus, the two expressions \(\partial \varvec{F}_*\) and \(\text {D}\varvec{F}_* \partial \varvec{F}_*\) are linearly dependent and the formal condition of controllability is violated. Nevertheless stabilisation works. Controllability is in fact a stronger condition as controllability ensures that by proper control gains eigenvalues of the system with control can be placed at will, a feature which the original OGY algorithm does not share (see as well [38]). 
Thus, violation of controllability does not necessarily mean that stabilisation fails, as briefly outlined above.

To demonstrate the feasibility of the proposed approach, we illustrate the control again for the driven Toda oscillator, Eqs. (1) and (2). For the control input, we use the parameter \(\omega \), i.e. the frequency. This quantity is often easily accessible and adjustable in experiments, but the dynamics normally depends in a very sensitive way on the frequency of the drive. At the end of each period, we record the state of the system given by the values of \(x(T_n)\) and \(v(T_n)\). One then uses the linear law, Eq. (10), to determine a new value for the frequency which is then kept constant during the following period. This process is repeated after completion of each period, so we apply the control in a stroboscopic way. The time \(T_n\) for the end of the n-th period is now not any longer an integer multiple of a base period. For the control law, Eq. (10), we made up reference values \(\mu _R=\omega _R\) and \(\varvec{z}_R^T=(x_R,v_R)\) as well as control gains \(\varvec{\alpha }^T\) out of thin air. We demonstrate successful control of the unstable state in the region of bistability, see Fig. 2, simply by time traces, see Fig. 3. Frequency \(\omega _n\) and amplitude \(r_n^2\) converge to values which are on the unstable branch of the nonlinear resonance curve, cf. Fig. 2. We should, however, remark that control may fail if initial conditions are set far away from the unstable target state, i.e. the scheme so far has occasionally bad global properties.

The basic idea of the stroboscopic control algorithm can be summarised in non-technical terms in the following way. One adjusts an external parameter at the beginning of each period to a new value so that the parameter becomes a time-dependent piecewise constant quantity. The control gains are adjusted in such a way that the time-dependent parameter converges to a constant value, while at the same time the state of the system converges to a periodic solution. By design, this periodic solution is an unstable state of the original dynamics, with a parameter value which is given by the limit obtained during the control process. The offset in the control law enables one to tune this limit value and to select the target state. Above all, the scheme is able to stabilise an unknown periodic state without the need of any data-based estimation or reconstruction in phase space, and the scheme is non-invasive, even though there is no quantity in the control loop which tends to zero.
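The loop summarised in the preceding paragraph can be illustrated on a toy problem. The sketch below is not the driven oscillator of the text: it assumes a hypothetical affine map \(\varvec{F}_\mu (\varvec{z})=\varvec{M}\varvec{z}+\varvec{b}\mu \) with a saddle-type fixed point, a linear feedback of the form \(\mu _n=\mu _R+\varvec{\alpha }^T(\varvec{z}_n-\varvec{z}_R)\), and gains picked for this toy map so that the closed-loop eigenvalues are 0.2 and 0.3. All names and numbers are illustrative.

```python
M = [[2.0, 1.0], [0.0, 0.5]]   # eigenvalues 2 and 0.5: unstable without control
B = [0.0, 1.0]                 # how the parameter couples to the state

def F(mu, z):
    """Toy stroboscopic map; its fixed points are z = (-2 mu, 2 mu)."""
    return [M[0][0] * z[0] + M[0][1] * z[1] + B[0] * mu,
            M[1][0] * z[0] + M[1][1] * z[1] + B[1] * mu]

def control_law(z, mu_ref, z_ref, alpha):
    """Linear state feedback: one parameter update per period."""
    return (mu_ref + alpha[0] * (z[0] - z_ref[0])
                   + alpha[1] * (z[1] - z_ref[1]))

def run_control(z0, mu_ref, z_ref, alpha, n_periods):
    z = list(z0)
    history = []
    for _ in range(n_periods):
        mu = control_law(z, mu_ref, z_ref, alpha)  # measure state, set parameter
        history.append((mu, z))
        z = F(mu, z)                               # parameter held for one period
    return history

# Reference values close to, but not on, the curve of fixed points.
history = run_control(z0=[-1.98, 1.99], mu_ref=1.0, z_ref=[-1.98, 1.99],
                      alpha=[-3.06, -2.0], n_periods=60)
mu_end, z_end = history[-1]
residual = [a - b for a, b in zip(F(mu_end, z_end), z_end)]
```

At the end of the run the parameter has settled to a constant and `residual` is numerically zero, i.e. the final state is a fixed point of the uncontrolled map at the final parameter value, even though no term in the loop is designed to vanish.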

4 Optimal control gains and tracking

From a linear perspective optimal control gains result in transients as short as possible, i.e. these gains cause the fixed point to become superstable with both eigenvalues of \(\varvec{A}\) vanishing (see Fig. 3 for an illustration). Solving Eqs. (16) with \(\text{ tr }(\varvec{A})=\text{ det }(\varvec{A})=0\) for \(\varvec{\alpha }^T\) gives

where

are vectors orthogonal to the elements of the set \(\{\partial \varvec{F}_*, \text{adj}(\text {D}\varvec{F}_*) \partial \varvec{F}_*\}\). The analytic computation of these optimal control gains requires the knowledge of \(\text {D}\varvec{F}_*\) and \(\partial \varvec{F}_*\). It is not surprising that without any knowledge about the underlying dynamics an a priori determination of optimal control gains cannot be achieved. It is in fact already quite remarkable that we were able to show that for a generic dynamical system there exists a range of gains where stabilisation of the target state is possible. Based on this observation, it is then possible to find such gains in experiments and optimise their values when looking, e.g. at the transient dynamics or at the spectral width of residual signals, as the latter gives an estimate of the eigenvalues of the system subjected to control (see, for example, [39] for an application of such a general idea in a different control setting). Hence, maximising such linewidths by tuning the gains will result in optimal control gains as well.

Let us here study in more detail the analytic impact of Eq. (17). At least in numerical simulations the required derivatives can be quite easily computed from the variational equation of Eq. (5), along the lines of the stroboscopic map. In fact, the Jacobian matrix

follows from the derivative of the time-dependent solution with respect to the initial condition and thus results in the so-called fundamental matrix \(\varvec{X}_\mu \). Taking the derivative of Eq. (5) with respect to the initial condition, we obtain

Similarly, we have for the derivative of the stroboscopic map with respect to the parameter

where the derivative \(\partial _\mu \varvec{\zeta }_\mu \) of the solution \(\varvec{\zeta }_\mu \) with respect to the parameter obeys (see Eq. (5))

If we integrate Eqs. (20) and (22) together with Eq. (5), we obtain the stroboscopic map, Eq. (6), and the relevant derivatives, Eqs. (19) and (21). Hence, the optimal control gains, Eq. (17), are easily accessible.
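The joint integration of the flow and its two variational equations can be sketched as follows. This is a minimal illustration that assumes a period independent of the parameter \(\mu \) (if the drive frequency is the parameter, the period changes with \(\mu \) and this sketch would need, for example, a rescaled time), user-supplied derivatives `jac` and `dfdmu`, and a fixed-step Runge-Kutta scheme. All function names are hypothetical, and the test system is a harmonic oscillator with constant force \(\mu \), chosen only because its solution is known in closed form.

```python
import math

def rk4(f, y, t0, t1, steps):
    """Fixed-step classical Runge-Kutta integration of dy/dt = f(t, y)."""
    dt = (t1 - t0) / steps
    t = t0
    for _ in range(steps):
        k1 = f(t, y)
        k2 = f(t + dt / 2, [a + dt / 2 * b for a, b in zip(y, k1)])
        k3 = f(t + dt / 2, [a + dt / 2 * b for a, b in zip(y, k2)])
        k4 = f(t + dt, [a + dt * b for a, b in zip(y, k3)])
        y = [a + dt / 6 * (p + 2 * q + 2 * r + s)
             for a, p, q, r, s in zip(y, k1, k2, k3, k4)]
        t += dt
    return y

def map_with_derivatives(f, jac, dfdmu, z0, mu, period, steps=2000):
    """Return F(z0), the Jacobian DF(z0) and the parameter derivative dF/dmu.

    State layout: z (2 entries), fundamental matrix X row-major (4 entries),
    p = d(zeta)/d(mu) (2 entries).  X' = J X with X(0) = identity,
    p' = J p + df/dmu with p(0) = 0.
    """
    def rhs(t, y):
        z, X, p = y[0:2], y[2:6], y[6:8]
        J = jac(t, z, mu)
        g = dfdmu(t, z, mu)
        dX = [J[0][0] * X[0] + J[0][1] * X[2], J[0][0] * X[1] + J[0][1] * X[3],
              J[1][0] * X[0] + J[1][1] * X[2], J[1][0] * X[1] + J[1][1] * X[3]]
        dp = [J[0][0] * p[0] + J[0][1] * p[1] + g[0],
              J[1][0] * p[0] + J[1][1] * p[1] + g[1]]
        return list(f(t, z, mu)) + dX + dp
    y0 = list(z0) + [1.0, 0.0, 0.0, 1.0] + [0.0, 0.0]
    y = rk4(rhs, y0, 0.0, period, steps)
    return y[0:2], [y[2:4], y[4:6]], y[6:8]

# Test system: x' = v, v' = -x + mu, integrated over half an oscillation.
f = lambda t, z, mu: [z[1], -z[0] + mu]
jac = lambda t, z, mu: [[0.0, 1.0], [-1.0, 0.0]]
dfdmu = lambda t, z, mu: [0.0, 1.0]
zT, DF, dF = map_with_derivatives(f, jac, dfdmu, [0.3, -0.2], 0.5, math.pi)
```

For this test system the exact results are \(\varvec{z}(\pi )=(2\mu -x_0,-v_0)\), a Jacobian equal to minus the identity, and a parameter derivative \((2,0)\), which the numerical values reproduce to integration accuracy.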

The reference values \((\mu _R,\varvec{z}_R)\) in Eq. (10) determine the actual fixed point \(\varvec{z}_*\) to be stabilised and the corresponding parameter value \(\mu _*\). By a slow quasi-stationary change of the reference values, it is possible to track unstable states. While not required, it is suitable to set reference values close to the desired target state \((\mu _*,\varvec{z}_*)\). When control is achieved, one may then increase the reference values \((\mu _R,\varvec{z}_R)\) in the direction of the curve of fixed points. Suitable increments can thus be obtained from the tangent vector of this manifold, which is given in terms of the differential of the fixed point condition \(\varvec{z}_*=\varvec{F}_{\mu _*}(\varvec{z}_*)\), that means

Hence,

so that the vector of increments is parallel to a vector determined by the Jacobian matrix and derivatives of the stroboscopic map. The increments can be computed explicitly with the quantities that have been already used to determine the optimal control gains, Eq. (17). Thus, no additional input is needed to implement a quasi-stationary change of the reference values, \((\mu _R,\varvec{z}_R)=(\mu _*+d \mu , \varvec{z}_*+d \varvec{z})\), which allows one to perform a control-based tracking of unstable states.
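The tangent direction can be written out explicitly. As a sketch, starting from the fixed point condition \(\varvec{z}_*=\varvec{F}_{\mu _*}(\varvec{z}_*)\) and using the shorthand \(\text {D}\varvec{F}_*\) and \(\partial \varvec{F}_*\) introduced earlier, with \(\varvec{I}\) denoting the \(2\times 2\) identity matrix, the differential reads

\[
d\varvec{z}_* = \text {D}\varvec{F}_*\, d\varvec{z}_* + \partial \varvec{F}_*\, d\mu _*
\quad \Longrightarrow \quad
(\varvec{I}-\text {D}\varvec{F}_*)\, d\varvec{z}_* = \partial \varvec{F}_*\, d\mu _* .
\]

Multiplying by the adjugate of \(\varvec{I}-\text {D}\varvec{F}_*\) gives one possible form of the tangent vector that stays regular at a fold, where \(\det (\varvec{I}-\text {D}\varvec{F}_*)=0\):

\[
\begin{pmatrix} d\mu _* \\ d\varvec{z}_* \end{pmatrix} \parallel
\begin{pmatrix} \det (\varvec{I}-\text {D}\varvec{F}_*) \\ \text{adj}(\varvec{I}-\text {D}\varvec{F}_*)\, \partial \varvec{F}_* \end{pmatrix} .
\]

At a fold the parameter increment vanishes while the state increment remains finite, which is the geometric content of the turning point.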

Again we illustrate the tracking with the driven Toda oscillator, Eqs. (1) and (2), with parameter setting as in Eq. (3). We implement control, Eq. (10), with the frequency \(\omega \) being the control input \(\mu \). The control gains are adjusted to their linear optimal values, Eq. (17), where the relevant quantities can be obtained, as mentioned, from Eqs. (20) and (22), when control has been successful. The reference values are changed by the tracking procedure based on the tangent of the fixed point manifold, Eq. (24), as described above. Results obtained from a manifold upsweep and downsweep are shown in Fig. 4a. We clearly recover the full resonance line including the unstable branch (cf. Fig. 2 for the data without control). Near the tip of the resonance curve the tracking fails. Here, control gains start to diverge as the controllability condition is violated. That feature is reflected by a vanishing denominator in Eq. (17), see Fig. 4b for the value of the denominator along the parameter upsweep and downsweep.

Fig. 4 Control-based tracking of the driven Toda oscillator, Eqs. (1) and (2), with parameter setting specified in Eq. (3). For the control input, the frequency is used and control gains have been adjusted to their optimal values, Eq. (17). Tracking is done by incrementing the reference values in the control law, Eq. (10), along the tangent of the control manifold, see Eq. (24). a Stationary amplitude \(r_*^2=x_*^2+v_*^2/\omega ^2\) as a function of the frequency (see Fig. 2 for the data without control), b value of the denominator of the optimal control gains, see Eq. (17). The denominator vanishes near the tip of the resonance curve. Red (circles): manifold downsweep, cyan (crosses): manifold upsweep

5 Practical challenges and extensions

The linear full-state feedback along the lines of Eq. (10) is just a special case of a more general instantaneous law defined by a function h

where \(\nu _R\) stands for some reference value used, e.g. for selecting fixed points or tracking. The fixed point is now determined by the intersection of two manifolds

As far as linear stability is concerned the previous analysis by and large still stands as the variational equation of Eq. (25) reads

Hence, stability is now governed by some renormalised control gains \(\partial _{\varvec{z}_*} h(\varvec{z}_*,\varvec{\alpha },\nu _R)\) but otherwise the analysis remains unchanged. In particular, the constraint caused by controllability still stands, even for the fairly general scheme of Eq. (25). The original linear scheme, Eq. (10), may have bad global properties, e.g. with regard to the size of the basin of attraction or the structural stability of the control loop. One may aim at optimising some of those features by suitable choices of the feedback h.

5.1 Nonlinear observation and ceiling function

It is worth considering a few special cases of Eq. (25) more explicitly. If one observes the state of the system through two observables \(g_1(\varvec{z})\) and \(g_2(\varvec{z})\) instead of the state vector, a corresponding linear feedback reads

where \(\varvec{g}_R\) are some reference values. The variational equation

tells us that stability is governed by rescaled control gains \(\varvec{\alpha }^T D \varvec{g}(\varvec{z}_*)\) which are still independent quantities as long as the Jacobian \(D \varvec{g}(\varvec{z}_*)\) is not singular (i.e. the two observables are in this sense independent).
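As a small illustration of this rescaling, the following sketch converts a desired pair of effective gains (for instance gains designed for full-state feedback) into gains acting on the observables by inverting the relation \(\varvec{\alpha }_{\text {eff}}^T=\varvec{\alpha }^T D \varvec{g}(\varvec{z}_*)\). The function name `observable_gains` and the singularity threshold are assumptions made for this example only.

```python
def observable_gains(alpha_eff, Dg, tol=1e-12):
    """Solve alpha^T Dg = alpha_eff^T for the gains alpha on the observables.

    alpha_eff : desired effective gains (e1, e2) acting on the state deviation
    Dg        : 2x2 Jacobian of the observables at the fixed point
    tol       : hypothetical threshold below which Dg is treated as singular
    """
    (g11, g12), (g21, g22) = Dg
    det = g11 * g22 - g12 * g21
    if abs(det) < tol:
        # The two observables are not independent at the fixed point.
        raise ValueError("Jacobian of the observables is singular")
    e1, e2 = alpha_eff
    # Row vector times inverse matrix: alpha^T = alpha_eff^T Dg^{-1}.
    a1 = (e1 * g22 - e2 * g21) / det
    a2 = (-e1 * g12 + e2 * g11) / det
    return a1, a2
```

If the Jacobian is singular, the two effective gains can no longer be chosen independently, which is the case excluded in the text.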

The system may depend very sensitively on the parameter \(\mu \). That is in particular the case if the frequency of the drive is used as control input. Then, the control tends to overshoot, in particular for the linear law Eq. (10) which is defined by an unbounded function. One can try to cure this problem by using a ceiling function. A simple version where the control feedback stays bounded is given by

where \(\eta >0\) determines the cut-off of the control feedback. By this choice, we ensure on the one hand that the parameter stays in a neighbourhood of the reference value \(\mu _R\), while on the other hand the linear stability is unaffected as the variational equation coincides with the case of linear feedback. It may, however, happen that the system settles on coexisting stable branches as for large deviations from the reference value the control feedback along the lines of Eq. (30) becomes constant.
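One possible realisation of such a bounded feedback is a hard clipping of the linear term, sketched below. The clipping form, the sign convention \(\mu _n=\mu _R+\varvec{\alpha }^T(\varvec{z}_n-\varvec{z}_R)\) for the linear law, and the name `bounded_feedback` are assumptions of this sketch and need not coincide with the expression used in Eq. (30).

```python
def bounded_feedback(z, z_ref, mu_ref, alpha, eta):
    """Parameter value for the next period with a clipped linear feedback.

    z, z_ref : current stroboscopic state and reference state, (x, v)
    mu_ref   : reference parameter value
    alpha    : control gains (alpha_1, alpha_2)
    eta      : cut-off; the returned value stays within [mu_ref - eta, mu_ref + eta]
    """
    if eta <= 0.0:
        raise ValueError("eta must be positive")
    # Linear full-state term (assumed sign convention).
    u = alpha[0] * (z[0] - z_ref[0]) + alpha[1] * (z[1] - z_ref[1])
    # Inside the cut-off the law is exactly linear, so the variational
    # equation is unchanged; outside it the feedback is constant.
    u = max(-eta, min(eta, u))
    return mu_ref + u
```

With this choice, the feedback is identical to the linear law as long as the linear term stays below the cut-off, and it becomes constant for large deviations, which is where the system may settle on a coexisting stable branch.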

5.2 Incomplete information and time-delayed observation

An important variant of the scheme outlined in Eq. (28) occurs if instead of the full-state vector \(\varvec{z}\) only partial information, say one component, is accessible. To analyse this case in slightly more detail, let us use the notation \(\varvec{z}=(x,v)\) for the two components, and assume that the first component x(t) is accessible. The stroboscopic measurement at times \(T_n\), i.e. at the end of the n-th period of the driving field, gives us \(x_n=x(T_n)\). Since we assume the second component to be inaccessible, we are left with the option to base our feedback on x(t) evaluated at a different time during the period of the drive. Following, for instance, the reasoning of delay embedding [40], it is instructive to choose the value of \(\xi _n=x(T_n-\tau )\) as our second observation, where \(\tau \) denotes a fixed time delay. For a theoretical analysis of a scheme which uses such a delayed observation, we recall that \(\xi _n\) can be expressed in terms of the solution of the equations of motion, Eq. (5), as

since \(\mu _{n-1}\) is the actual value of the control input during the period when the delayed observation is performed. In some sense, we are aiming at a control feedback along the lines of Eq. (28) with the choice \(g_1(\varvec{z})=x\) and \(g_2(\varvec{z})=h_{\mu _{n-1}}(\varvec{z})\). Since the delayed observation already depends on the parameter value \(\mu _{n-1}\) of the past period, it looks consistent to take that also explicitly into account for the feedback law of \(\mu _n\). Thus, we arrive at

with some fixed reference values \(\mu _R\), \(x_R\), and \(\xi _R\). The theoretical analysis now closely parallels the steps of the previous sections. The fixed point of the closed-loop dynamics, Eqs. (11) and (32) is determined by

while the variational equation with \(\varvec{z}_n=\varvec{z}_*+\varvec{\delta z}_n\), \(\mu _n=\mu _*+\delta \mu _n\) (cf. as well Eq. (13))

provides the details about the stability of the fixed point. Here,

abbreviate the gradient of the delayed observation and its derivative with respect to the external parameter at the fixed point. Introducing rescaled gains by

Fig. 5 Control based on measurements of the position in the driven Toda oscillator, Eqs. (1) and (2), with parameter setting according to Eq. (3), see Fig. 2 for data without control. The frequency \(\omega \) has been used as control input \(\mu \) in the control law Eq. (32) with delay time \(\tau =1.5\). Reference values in the control law are \(\omega _R=0.98\), \(x_R=-0.3\), \(g_R=0.527\), while gains have been chosen as \(\alpha _1=5.0\), \(\alpha _2=-8.0\), and \(\beta =0.1\). Time series of the stroboscopic map with control, Eq. (11), and initial condition \(x(0)=x_R\), \(v(0)=-0.53\). a Frequency value \(\omega _n\), b position \(x_n\)

the variational equation, Eq. (34) can be conveniently written as

with

It is quite easy to check that, as expected, the conditions for controllability of the matrix \(\varvec{B}\) coincide with the simpler case of matrix \(\varvec{A}\) discussed in Sect. 3, as long as \(\text {D}\varvec{F}_*\) is non-singular. The same statement essentially holds in the singular case, as we will see shortly. To assess the stability properties, let us focus on the characteristic polynomial of the matrix \(\varvec{B}\), Eq. (38), which evaluates as

The Jury criterion [37] again gives necessary and sufficient conditions for stability, but the criterion is slightly involved for a polynomial of degree three, see, for example, [41] for a simple application to delayed feedback control. Here, we resort to the simpler concept of pole placement, which requires finding gains \(\varvec{\hat{\alpha }}^T\) and \(\hat{\beta }\) so that the subleading coefficients in Eq. (39) can take any prescribed numerical values. Thanks to the linear dependence of the coefficients on the control gains, that is indeed the case if \(\text{ det } (\text {D}\varvec{F}_*) \ne 0\) and if the set \(\{\partial \varvec{F}_*, \text{ adj }(\text {D}\varvec{F}_*) \partial \varvec{F}_*\}\) is linearly independent. The last condition coincides in fact with the controllability condition encountered for full-state feedback discussed in Sect. 3. Even if \(\text {D}\varvec{F}_*\) becomes singular, the condition of linear independence still guarantees successful control since the third eigenvalue of the matrix Eq. (38) just vanishes. As for the optimal control gains, i.e. for control gains so that the stabilised orbit becomes superstable, all nontrivial coefficients in Eq. (39) have to vanish. That means these optimal control gains are given by \(\hat{\beta }=0\), while \(\varvec{\hat{\alpha }}^T\) obeys the conditions of optimality discussed in Sect. 4, see Eqs. (16) and (17). In particular, the inclusion of the damping term \(\beta \mu _{n-1}\) in the control law Eq. (32) turns out to be crucial, as otherwise stabilisation or optimal gains could be inaccessible, see Eq. (36). Finally, we need to ensure that the rescaled gains \(\varvec{\hat{\alpha }}^T\) and \(\hat{\beta }\) translate into actual control gains \(\varvec{\alpha }^T\) and \(\beta \) via Eq. (36). That requires \((d h_*)_2\) to be nonzero, a condition which means that the second unobserved component of the state vector has a finite impact on the delayed observation, see Eq. (31). Such a constraint can thus be considered as a simple instance of what is called observability in the engineering context.
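For readers who prefer to test a given set of gains directly, the Jury conditions for a monic cubic can be written down in a few lines. The sketch below takes generic coefficients of \(\lambda ^3+a_2\lambda ^2+a_1\lambda +a_0\) as input; how these coefficients depend on the gains is fixed by Eq. (39) and is not reproduced here. The function name `jury_stable_cubic` is an illustrative choice.

```python
def jury_stable_cubic(a2, a1, a0):
    """Check whether all roots of l^3 + a2 l^2 + a1 l + a0 satisfy |l| < 1.

    Implements the four Jury conditions for a real monic polynomial
    of degree three.
    """
    p_plus = 1.0 + a2 + a1 + a0      # p(1), must be positive
    p_minus = -1.0 + a2 - a1 + a0    # p(-1), must be negative for odd degree
    return (
        p_plus > 0.0
        and p_minus < 0.0
        and abs(a0) < 1.0
        and abs(a1 - a0 * a2) < 1.0 - a0 * a0
    )
```

The superstable case, where all nontrivial coefficients vanish, corresponds to `jury_stable_cubic(0.0, 0.0, 0.0)` and trivially satisfies all four conditions.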

In summary, we conclude that control with incomplete state information is possible when delayed observations are employed. If one has access to the underlying equations of motion, the required control gains can be computed in the same way as before, since Eq. (31) and the required derivatives, Eq. (35), can be obtained again from the solution of Eqs. (5), (20), and (22). For the experimental context, our considerations tell us that a control law along the lines of Eq. (32) will be successful for a suitable set of control gains.

For illustration, we again resort to the Toda oscillator with the parameter setting already employed in Fig. 3. Now, however, we base the control on the observation of the position x(t) only, Eq. (32), with the frequency being the control input, \(\mu _n=\omega _n\). Measuring the position at an intermediate time, \(\xi _n=x(T_n-\tau )\), and at the end of the period, \(x_n=x(T_n)\), we update the frequency after each period. For the choice of a suitable time delay \(\tau \), we recall that for harmonic solutions the observation of the position with a delay of a quarter of the period results in a value which is proportional to the velocity. Therefore, we take \(\tau =1.5\). While optimal control gains can be computed as outlined above, here we simply choose some suitable values by hand. Figure 5 shows time traces of successful stabilisation of an unstable state.
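The quarter-period argument can be checked numerically for a purely harmonic signal. The sketch assumes a drive period of \(2\pi /\omega \) and a signal \(x(t)=A\cos (\omega t+\varphi )\); the function names and the test values for \(A\) and \(\varphi \) are illustrative only.

```python
import math

def quarter_period(omega):
    """Quarter of the period 2*pi/omega of a harmonic signal."""
    return 0.5 * math.pi / omega

def harmonic(t, amp, omega, phase):
    """Position and velocity of x(t) = amp * cos(omega * t + phase)."""
    x = amp * math.cos(omega * t + phase)
    v = -amp * omega * math.sin(omega * t + phase)
    return x, v

# Delaying the position by a quarter period gives -v(t)/omega.
omega, amp, phase, t = 0.98, 0.7, 0.3, 2.0
x_delayed, _ = harmonic(t - quarter_period(omega), amp, omega, phase)
_, v_now = harmonic(t, amp, omega, phase)
mismatch = abs(x_delayed + v_now / omega)
```

For \(\omega =0.98\), the quarter period evaluates to about 1.60, so the delay \(\tau =1.5\) used here is close to, but not exactly, a quarter of the drive period under this assumption.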

6 Conclusion

We have introduced a design for the non-invasive control of an unknown periodic orbit. Our approach is based on a stroboscopic observation of a time series and an implementation of a full-state proportional feedback for a parameter of the system. During the stabilisation, the parameter and the time-dependent state simultaneously adjust in such a way that a proper periodic orbit of the plain system at a target parameter value is obtained. Our approach relies on simple measurements of the state of the system and does not require any further data processing. Our design is thus able to deal with systems on fast time scales, like the example of amplitude modulation dynamic force microscopy described as a motivation in the introduction, and where no accurate mathematical model is available.

We have evaluated the performance of the control in terms of linear stability analysis. That enabled us to determine optimal control gains by and large by analytic means, to implement a tracking algorithm for unstable branches, and to clarify the role and the limitations caused by controllability conditions, which are well established in the engineering context. By definition, a linear stability approach cannot give detailed information about the global performance of control, such as the basin of attraction of the stabilised state, or the invariant manifolds which cause basin boundaries. Numerical findings indicate that the basin is occasionally quite small, and any analytic estimate turns out to be quite challenging. We will address this important issue elsewhere.

In typical situations, information about the full state of the system is hardly available as one often just relies on the measurement of a single scalar time series. Even in such cases our design can succeed by employing delayed observations, as shown in the example above. With access to the underlying dynamics, one can even determine suitable control gains a priori, and essentially by analytic means. In addition, it is worth exploring whether the control performance of periodic orbits can be improved by using multiple delayed observations for a larger set of time delays and for a larger number of control gains, following in some sense the spirit of extended time-delayed feedback control [42].

While the implementation of the control design outlined above is based on the stroboscopic observation of an experimental time series, we have used some properties of the underlying equations of motion for the theoretical analysis. In numerical simulations, one has full access to the details of the dynamical system, so that the optimal control gains, Eq. (17), can be evaluated. Such information is hardly available in actual real-world experiments, since an accurate data-based estimate of the required derivatives normally turns out to be a delicate problem. Finding suitable control gains in experimental set-ups may therefore be a considerable challenge. It is still a highly nontrivial task to design a feedback, Eq. (25), in fast real-world experiments so that stability estimates and good global properties can be obtained a priori if only a scalar experimental time series is at hand.

Our analysis has been based on linear stability and thus cannot address the questions mentioned above in full detail. In fact, numerical simulations with successful control may show basins in phase space which are small, even if we employ dead beat control with vanishing eigenvalues. The mechanism of stroboscopic control and the conditions for controllability do not rely on any time scale separation. Control is successful even if the internal dynamics is fast compared to the period of the orbit, provided one discounts the well-known impact of control loop latency. Eigenvalues of the target states involved do not have to be a small perturbation of unity, so that one can deal with situations where one is either far from or in the vicinity of a bifurcation point. While all these aspects may turn out to be useful in applications, they make an intuitive understanding of the control performance quite challenging, if not impossible. To make some progress, it could be sensible to take a step back and to focus on models and situations where the dynamics is a perturbation of harmonic motion, and where time scale separation and averaging can be applied. In such cases, one would be able to gain analytic and intuitive insight into global properties of the control mechanism such as basins of attraction or the impact of the parameter that is used to couple the control force. In experiments, the frequency and the amplitude of the drive are usually easily accessible for control input. It would be interesting to figure out which of these variables is better suited to optimising the global control performance, for example by enlarging basins of attraction or removing obstacles caused by controllability. In that respect, looking at the seemingly simple case of weakly nonlinear systems would be a promising next step, even from the point of view of a potential application, and details about the corresponding global analysis will be published elsewhere.

Data availability

No data sets were generated or analysed during the current study.

References

Kubo, R., Toda, M., Hashitsume, N.: Statistical Physics II: Nonequilibrium Statistical Mechanics. Springer, Berlin (1991)

Karhunen, K.: Über lineare Methoden in der Wahrscheinlichkeitsrechnung. Ann. Acad. Sci. Fennicae Ser. A. I. Math.-Phys. 37, (1947)

Kantz, H., Schreiber, T.: Nonlinear Time Series Analysis. Cambridge University Press, Cambridge (1997)

Artuso, R., Aurell, E., Cvitanović, P.: Recycling of strange sets. Nonlinearity 3, 325 (1990)

Krauskopf, B., Osinga, H., Galán-Vioque, J.: Numerical Continuation Methods for Dynamical Systems. Springer, Dordrecht (2007)

Kevrekidis, I., Gear, C., Hyman, J., Kevrekidis, P., Runborg, O., Theodoropoulos, C.: Equation-free, coarse-grained multiscale computation: enabling microscopic simulators to perform system-level tasks. Comm. Math. Sci. 1, 715 (2003)

Panagiotopoulos, I., Starke, J., Just, W.: Control of collective human behaviour: social dynamics beyond modeling. Phys. Rev. Res. 4, 043190 (2022)

Lee, H.-C., Abed, E.H.: Washout filters in the bifurcation control of high alpha flight dynamics. In: 1991 American Control Conference (American Automatic Control Council, Evanston), pp. 206–211

Abed, E.H., Wang, H.O., Chen, R.: Stabilization of period doubling bifurcations and implications for control of chaos. Physica D 70, 154 (1994)

Hassouneh, M.A., Lee, H.-C., Abed, E.H.: Washout filters in feedback control: Benefits, limitations and extensions. In: Proceedings of the 2004 American Control Conference, vol. 5, pp. 3950–3955 (2004)

Ott, E., Grebogi, C., Yorke, J.A.: Controlling chaos. Phys. Rev. Lett. 64, 1196 (1990)

Misra, S., Dankowicz, H., Paul, M.R.: Event-driven feedback tracking and control of tapping-mode atomic force microscopy. Proceed. Royal Soc. A: Math. Phys. Eng. Sci. 464, 2113 (2008)

Pyragas, K.: Continuous control of chaos by self-controlling feedback. Phys. Lett. A 170, 421 (1992)

Kiss, I.Z., Kazsu, Z., Gáspár, V.: Tracking unstable steady states and periodic orbits of oscillatory and chaotic electrochemical systems using delayed feedback control. Chaos 16, 033109 (2006)

Sieber, J., Krauskopf, B.: Control based bifurcation analysis for experiments. Nonlinear Dynam. 51, 365 (2008)

Sieber, J., Gonzalez-Buelga, A., Neild, S., Wagg, D., Krauskopf, B.: Experimental continuation of periodic orbits through a fold. Phys. Rev. Lett. 100, 244101 (2008)

Pyragas, K., Pyragas, V., Kiss, I.Z., Hudson, J.L.: Stabilizing and tracking unknown steady states of dynamical systems. Phys. Rev. Lett. 89, (2002)

Pyragas, K., Pyragas, V., Kiss, I.Z., Hudson, J.L.: Adaptive control of unknown unstable steady states of dynamical systems. Phys. Rev. E 70, 026215 (2004)

Wang, H.O., Abed, E.H.: Bifurcation control of a chaotic system. Automatica 31, 1213 (1995)

Siettos, C.I., Kevrekidis, I.G., Maroudas, D.: Coarse bifurcation diagrams via microscopic simulators: a state-feedback control-based approach. Int. J. Bifurcations and Chaos 14, 207 (2004)

Barton, D.A.W., Sieber, J.: Systematic experimental exploration of bifurcations with noninvasive control. Phys. Rev. E 87, 052916 (2013)

Bureau, E., Schilder, F., Santos, I.F., Thomsen, J.J., Starke, J.: Experimental bifurcation analysis of an impact oscillator - Tuning a non-invasive control scheme. J. Sound Vib. 332, 5883 (2013)

Bureau, E., Schilder, F., Elmegård, M., Santos, I.F., Thomsen, J.J., Starke, J.: Experimental bifurcation analysis of an impact oscillator - Determining stability. J. Sound Vib. 333, 5465 (2014)

Schilder, F., Bureau, E., Santos, I.F., Thomsen, J.J., Starke, J.: Experimental bifurcation analysis - Continuation for noise-contaminated zero problems. J. Sound Vib. 358, 251 (2015)

Dittus, A., Kruse, N., Barke, I., Speller, S., Starke, J.: Detecting stability and bifurcation points in control-based continuation for a physical experiment of the Zeeman catastrophe machine. SIAM J. Appl. Dyn. Sys. 22, 1275 (2023)

Binnig, G., Quate, C., Gerber, C.: Atomic Force Microscope. Phys. Rev. Lett. 56, 930 (1986)

Voigtländer, B.: Atomic Force Microscopy. Springer, Cham (2019)

Yamasue, K., Kobayashi, K., Yamada, H., Matsushige, K., Hikihara, T.: Controlling chaos in dynamic-mode atomic force microscope. Phys. Lett. A 373, 3140 (2009)

Davidovikj, D., Alijani, F., Cartamil-Bueno, S., van der Zant, H., Amabili, M., Steeneken, P.: Nonlinear dynamic characterization of two-dimensional materials. Nature Comm. 8, 1253 (2017)

Dolleman, R.J., Houri, S., Davidovikj, D., Cartamil-Bueno, S.J., Blanter, Y.M., van der Zant, H.S.J., Steeneken, P.G.: Optomechanics for thermal characterization of suspended graphene. Phys. Rev. B 96, 165421 (2017)

Hölscher, H., Schwarz, U.D.: Theory of amplitude modulation atomic force microscopy with and without Q-Control. Internat. J. of Non-Linear Mech. 42, 608 (2007)

Hu, S., Raman, A.: Chaos in Atomic Force Microscopy. Phys. Rev. Lett. 96, 036107 (2006)

Jamitzky, F., Stark, M., Bunk, W., Heckl, W., Stark, R.W.: Chaos in dynamic atomic force microscopy. Nanotechnology 17, S213 (2006)

Stark, R.W.: Bistability, higher harmonics, and chaos in AFM. Mater. Today 13, 24 (2010)

Rega, G., Settimi, V.: Bifurcation, response scenarios and dynamic integrity in a single-mode model of noncontact atomic force microscopy. Nonlin. Dyn. 73, 101 (2013)

Toda, M.: Studies of a non-linear lattice. Phys. Rep. 18, 1 (1975)

Jury, E.I.: A simplified stability criterion for linear discrete systems. Proc. IRE 50, 1493 (1962)

Romeiras, F., Grebogi, C., Ott, E., Dayawansa, W.: Controlling chaotic dynamical systems. Physica D 58, 165 (1992)

Just, W., Reibold, E., Kacperski, K., Fronczak, P., Hołyst, J., Benner, H.: Influence of stable Floquet exponents on time-delayed feedback control. Phys. Rev. E 61, 5045 (2000)

Packard, N.H., Crutchfield, J.P., Farmer, J.D., Shaw, R.S.: Geometry from a time series. Phys. Rev. Lett. 45, 712 (1980)

Fichtner, A., Just, W., Radons, G.: Analytical investigation of modulated time-delayed feedback control. J. Phys. A 37, 3385 (2004)

Socolar, J.E.S., Sukow, D.W., Gauthier, D.J.: Stabilizing unstable periodic orbits in fast dynamical systems. Phys. Rev. E 50, 3245 (1994)

Acknowledgements

This study was funded by the Deutsche Forschungsgemeinschaft (DFG, German Research Foundation) - CRC 1270 “Electrically Active Implants”, Grant/Award Number SFB 1270/2-299150580 - SFB 1477 “Light-Matter Interactions at Interfaces”, project number 441234705.

Funding

Open Access funding enabled and organized by Projekt DEAL.

Ethics declarations

Conflict of interest

The authors have no relevant financial or non-financial interests to disclose.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Dittus, A., Kruse, N., Wallner, H. et al. Stroboscopic control and tracking of periodic states. Nonlinear Dyn 112, 1261–1274 (2024). https://doi.org/10.1007/s11071-023-09105-2

DOI: https://doi.org/10.1007/s11071-023-09105-2

Keywords

- Nonlinear dynamics

- Control

- Experimental bifurcation analysis

- Equation-free analysis

- Atomic force microscopy