Abstract

In functional time series analysis, the functional autocorrelation function (fACF) plays an important role in revealing the temporal dependence structures underlying the dynamics and identifying the lags at which substantial correlation exists. However, akin to its counterpart in the univariate case, the fACF is restricted by linear structure and can be misleading in reflecting nonlinear temporal dependence. This paper proposes a nonlinear alternative to the fACF for analyzing the temporal dependence in functional time series. We consider linear and nonlinear data generating processes: a functional autoregressive process and a functional generalized autoregressive conditional heteroskedasticity process. We demonstrate that when the process exhibits linear temporal structures, the inference obtained from our proposed nonlinear fACF is consistent with that from the fACF. When the underlying process exhibits nonlinear temporal dependence, our nonlinear fACF has a superior capability in uncovering the nonlinear structure that the fACF misleads. An empirical data analysis highlights its applications in unveiling nonlinear temporal structures in the daily curves of the intraday volatility dynamics of the foreign exchange rate.

Similar content being viewed by others

Explore related subjects

Find the latest articles, discoveries, and news in related topics.Avoid common mistakes on your manuscript.

1 Introduction

Many real-world data can be treated as functions observed sequentially over time. For instance, intraday pollution level, financial return and volatility can be viewed as functions \(\left[ \mathcal {X}_{1}(u),\mathcal {X}_{2}(u),\ldots ,\mathcal {X}_{N}(u)\right] \) defined for u taking the interval \([u_{1},u_{p}]\), where \(\mathcal {X}_{t}(u)\) represents the observations at time u in day \(t=1,2,\ldots ,N\). The collection of functions in sequential form is called the functional time series. For a more formal definition, a functional time series is assumed to be a realization of length N of a given functional stochastic process \(\{\mathcal {X}_{t}:t\in \mathbb {Z}\}\) , where each random variable \(\mathcal {X}_{t}\) is a square-integrable function \(\{\mathcal {X}_{t}(u);u\in [u_{1},u_{p}]\}.\)

Empirical functional time series can normally be classified into two categories. First is segmenting a univariate time series into (sliced) functional time series. For instance, [1] and [2] sliced intraday volatility to form functional time series. The other category is when the continuum is not a time variable, such as age [3] or wavelength in spectroscopy [4].

Over the past two decades, functional time series, or more broadly, functional data analysis, has developed rapidly. Among others, [5, 6] and [7] provide comprehensive overviews of the major advances and the fundamental concepts and techniques used in this field. The main motivations that drive the development of functional data analysis are threefold. First, compared to the conventional approaches, functional representation of data accommodates particular well with data collected at ultra-high-frequency and can potentially alleviate the burden of the parameter estimation commonly arises in modeling a large volume of observations. Second, as by-products, functional derivatives can provide additional insight into the data under investigation [see, e.g., 8, 9]. Lastly, some data are more natural to be considered functions that behave like smooth curves rather than separated discrete observations.

Functional time series analysis is a branch under functional data analysis. The most important feature of functional time series that differentiates it from the more general functional data is that each functional time series observation cannot be treated as independent. Just like its scalar counterpart, there exists a temporal structure in the process of \(\{\mathcal {X}_{t}(u):t\in \mathbb {Z}\}\). Take an empirical example; the function representing the volatility in a given day will be affected on previous days, so the curves are dependent. Kokoszka [10] proposed functional autocorrelation function (fACF) to quantify linear serial correlation in a functional time series. The coefficient at lag \(\tau \) of the functional time series \(\{\mathcal {X}_{t}:t\in \mathbb {Z}\}\) with \(E\Vert \mathcal {X}_{t} \Vert ^{4}<\infty \) is defined as

where \(C_{\tau }(u,v)=\text {cov}[\mathcal {X}_{1}(u),\mathcal {X}_{1+\tau }(v)]=E\{[\mathcal {X}_{1}(u) -E\mathcal {X}_{1}(u)][\mathcal {X}_{1+\tau }(v)-E\mathcal {X}_{1+\tau }(v)]\}\). The estimated functional coefficient at lag \(\tau \) is thus defined as

where \(\widehat{Q}_{N,\tau }=\int ^{u_{p}}_{u_{1}} \int ^{u_{p}}_{u_{1}} N\widehat{C}^{2}_{\tau }(u,v)du dv\). Under the assumption that the functional time series \(\{\mathcal {X}_{t}(u):t\in \mathbb {Z}\}\) is independent and identically distributed (iid),

as \(N\rightarrow \infty \). The \(\lambda _{i}\)’s are the eigenvalues of the covariance operator \(C_{0}(u,v)\) and \(\{\chi ^{2}_{j,l}(1), j,l\in \mathbb {Z}\}\) are independent random variables following a chi-squared distribution \(\chi ^{2}(1)\). The detailed proof is given in [5].

Accompanied by the development of analytical tools for functional time series, many models have been proposed to capture the functional time series dynamic. Among them, the most popular model is the functional autoregressive (FAR) process. Following the definition given in [11], let \(\mathcal {H}\) be a separable Hilbert space with norm \(\Vert \cdot \Vert \) and scalar product \(\langle \cdot ,\cdot \rangle \), A sequence \(\{\mathcal {X}_{1}(u),\ldots ,\mathcal {X}_{N}(u)\}\) of \(\mathcal {H}\) random variables is called an FAR process of order 1 associated with \((\mu ,\epsilon ,\rho )\) if it is stationary and such that

where the linear operator \(\rho \) (\(||\rho ||<1\)) is compact, and the error term \(\{\epsilon _{1}(u), \epsilon _{2}(u), \ldots , \epsilon _{N}(u)\}\) is a set of independent random variables with a distribution that satisfy \(E\left[ \epsilon _{t}(u)\right] =0\), \(0<E\left[ \epsilon ^{2}_{t}(u)\right] <\infty \), and \(E\left[ \epsilon _{t}(u)\epsilon _{s}(u)\right] =\sigma ^{2}\delta _{t,s}\), for \(t,s\in \mathbb {Z}\) (\(\delta _{t,s}=1\) if \(t=s\); \(\delta _{t,s}=0\) if \(t\ne s\)). Eq. (2) can be easily extended to define a FAR(p) process by including additional terms of the form \(\rho _{k}[\mathcal {X}_{t-k}(u)-\mu (u)]\).

In addition to the FAR model, many functional time series models are extended from the FAR model. They include the autoregressive Hilbertian model with exogenous variables (ARHX) model [12], the Hilbert moving average model [13], the functional autoregressive moving average (fARMA) model [14], the seasonal functional autoregressive model [15], and the seasonal autoregressive moving average Hilbertian model with exogenous variables (SARMAHX) model [16]. All models mentioned above assume linear serial correlation within the functional time series process, and some incorporate the exogenous explanatory variables and seasonality into the dynamic. Recently, increasing attention has shifted into proposing and investigating nonlinear functional time series models, for instance, the functional autoregressive conditional heteroskedasticity (fARCH) model [17] and functional generalized autoregressive conditional heteroskedasticity (fGARCH) model [18].

The fACF has shown to be a great aid in identifying the lags at which substantial correlation exists and selecting orders of the FAR process [19]. However, despite its successful applications in many studies [20], the measure is restricted by linear relations. We will provide an example in the simulation section of our paper that the fACF can be insensitive to certain nonlinear serial correlations. Since the mainstream models in the literature for functional time series are linear models. Also, many existing measures/tools that study the structure underlying the observed functional time series, including the fACF, are based on the autocovariance and/or autocorrelation [19, 21, 22]. They only capture the linear temporal structure. There is little research to study the nonlinear temporal structures within the functional time series. The increasing popularity of nonlinear functional models and the prevalence of nonlinear temporal structures underlying real-world dynamics calls for a new measure of temporal structures of functional time series with the capacity to capture nonlinearity. This paper proposes a nonlinear alternative to fACF for functional time series analysis.

The newly proposed nonlinear fACF measures the strength of temporal dependence (both linear and nonlinear) within a functional time series. Akin to univariate time series, for functional time series, the process governing \(\{\mathcal {X}_{t}(u);u\in [u_{1},u_{p}]\}\) is said to be linear if functions \(\mathcal {X}_{t}(u)\) are connected via linear forms, namely \(\mathcal {X}_{t}(u)\) can be written in the form of

where the linear operators \(\rho _{i}\) (\(||\rho _{i}||<1\)), \(\theta _{i}\) (\(||\theta _{i}||<1\)) are compact, and the error term \(\left\{ \epsilon _{1}(u), \epsilon _{2}(u), \ldots ,\epsilon _{N}(u)\right\} \) is a set of independent random variables. Any violation of structures between \(\mathcal {X}_{t}(u)\) and its lags defined in the above form is termed ‘nonlinear temporal structures.’

The new measure uses the rank of the observed curves to reflect the level of temporal dependence underlying the functional time series process. Using rank to detect nonlinear correlation is very common in the univariate time series analysis. Spearman and Kendall’s Tau correlations are famous examples of employing ranks to capture the nonlinear correlation between two univariate time series. Additionally, permutation entropy (PE) proposed by Bandt and Pompe [23] is another instance of employing ordinal patterns. It has been shown that using ordinal patterns rather than the actual observed values provide PE with many desirable features. To name a few, using an ordinal pattern enables PE to deal with complex systems with high nonlinearity [24]. Additionally, such design makes PE require minimal prior assumptions and knowledge of the underlying dynamics of the process and is robust to outliers and both dynamic and stochastic noise [23, 25,26,27]. The specification of the nonlinear fACF inherits similar concepts and rationale from the PE. But we modified it to be adaptive for the analysis of the functional time series.

The rest of this paper is structured as follows. Section 2 provides the specification and notations of the newly proposed nonlinear fACF of functional time series. In Sect. 3, we present a number of simulation studies to compare the nonlinear fACF and fACF on simulated linear and nonlinear functional time series processes. In Sect. 4, the nonlinear autocorrelation function (ACF) is applied on empirical daily curves of several exchange rates intraday squared returns and realized volatilities to demonstrate its superiority over fACF in a practical context. Conclusions are given in Sect. 5, along with some ideas on how the methodology presented here can be further extended.

2 Nonlinear functional autocorrelation function

The nonlinear fACF measures the strength of temporal dependence relations within a functional time series. The computation of nonlinear fACF consists of two steps: (1) assign a scalar value to each observation in the observed functional time series to indicate their ranks; (2) compute a statistic based on the converted scalar time series to reflect the strength of the temporal dependence of the underlying process.

2.1 Rank of the functional time series

To record the relative position of each observed curve, we first employ the functional principal component analysis (FPCA) to obtain the functional principal component (FPC) scores. These scores allow us to rank the observed functional time series.

The FPCA is very similar to the principal component analysis for multivariate data, except in the FPCA, the variables of interest and the weight coefficients are functions. Given a set of functional observations \(\varvec{\mathcal {X}}(u)=\{\mathcal {X}_{t}(u);u\in [u_{1},u_{p}]\}\), the first step of the FPCA is to find the weight coefficient function \(\beta _{1}(u)\) that maximum the functional principal component (FPC) scores

subject to \(\Vert \beta _{1}^{2}(u)\Vert =\int ^{u_{p}}_{u_{1}}\beta _{1}^{2}(u) du=1\). Then, the successive functional principal component can be obtained iteratively by subtracting the previous derived principal component functions. The initial function starts from \(\varvec{\mathcal {X}}^{0}(u)=\varvec{\mathcal {X}}(u)\) and

The \((k+1)\)th functional principal component is found by maximizing

subject to

It is worth noting that the FPCA generally requires the decentralization of the observed functional data before the steps above.

Through the FPCA, we can obtain a FPC score matrix \(\varvec{F}\) containing the 1st to kth principal component sores associated with \(\varvec{\mathcal {X}}(u)\), i.e.,

where \(\varvec{f}_{t}=(f_{t,1},f_{t,2},\ldots ,f_{t,K})\) and \(f_{t,k}\) denotes to the kth FPC score of the tth observed functional time series \(\mathcal {X}_{t}(u)\). The number of total principal components K could potentially affect the rank of the functional time series. To retain the maximum information about the functional observations, K is determined by the number of FPCs that explain 90% of the covariance function unless K exceeds \(N-2\), where N represents the number of functional observations. Alternatively, K can also be selected by the integer minimizing the ratio of two adjacent empirical eigenvalues [28].

The FPC score matrix \(\varvec{F}\) forms a \(N \times K\) matrix and can be treated as a K-dimensional multivariate time series. Then, we operate a \(R^{k}\rightarrow R\) transformation to convert the K-dimensional multivariate time series into a scalar one. We want the converted scalar time series to preserve the maximum ordinal predictability present in the original data. To retain the ordinal structures in F, we first identify the pair of the \(\varvec{f}_{t}\)s that has the furthest distance within all \(\varvec{f}_{t}\)s measured by the Euclidean distance, i.e.,

\(\mathcal {X}_{i^{1}}(u)\) denotes the observational curve that occurs earlier in the identified pair, and \(\mathcal {X}_{i^{2}}(u)\) denotes the one that occurs later. We select one of \(\mathcal {X}_{i^{1}}(u)\), \(\mathcal {X}_{i^{2}}(u)\) as the target \(\mathcal {X}_{i^{*}}(u)\). In this paper, we choose \(\mathcal {X}_{i^{1}}(u)\). Then a scalar value \(K(\varvec{f}_{t})\) is assigned to the rest of observations based its distance to \(\mathcal {X}_{i^{1}}(u)\).

The \(R^{k}\rightarrow R\) mapping of each multivariate observation in \(\varvec{F}\) is through pairwise distance

Since only the rank within the observed curves matters, there is no need to employ smoothing kernel functions such as the Gaussian kernel in the similarity measure. Any monotonic transformation yields identical results. Using an ordinal pattern relieves the need to choose from several candidate similarity measures, which often arise in many parametric measures.

2.2 Temporal dependence measure

We propose a new measure \(\rho ^{\text {nonlinear}}_{\tau }\) to measure the temporal dependence (both linear and nonlinear) of the converted scalar series \(\{K(\varvec{f}_{t});t=1,...,N\}\) at a given lag \(\tau \). By plotting \(\rho ^{\text {nonlinear}}_{\tau }\) against \(\tau \), one can track the evolvement of the temporal structure of a process as the lag increases, just like the ACF.

\(\rho ^{\text {nonlinear}}_{\tau }\) is constructed by modifying the specification of the permutation entropy (PE). The computation steps of PE is given in [23]. We modify the PE because the value of PE is affected by a composite of temporal dependence over multiple lags [29]. The lack of the one-to-one correspondence between PE and the temporal dependence at the selected lag motivates us to develop a new measure.

In a nutshell, \(\rho ^{\text {nonlinear}}_{\tau }\) reveals the level the temporal dependence in a univariate time series by measuring the deviation of the ordinal pattern distribution formed by the partitions of the observed time series from that if there is no temporal structure over lag \(\tau \). We first compute the frequencies of partitions that follow each ordinal pattern of the observed time series and then compute the expected frequencies if no temporal structure exists over the lag \(\tau \). \(\rho ^{\text {nonlinear}}_{\tau }\) compares their difference based on \(\chi ^2\) test statistics.

\(\rho ^{\text {nonlinear}}_{\tau }\) is defined as

where \((\pi _{i}:i=1,2,\ldots ,D!)\) represents the collection of D! possible distinct ordinal patterns of length D,

and

where \(\{r_{t};t=1,2,\ldots ,N\}\) is an iid process and is generated by employing the kernel function to replicating the empirical marginal distribution of \(\{x_{t};t=1,2,\ldots ,N\}\).

To illustrate the concept of ordinal pattern, we provide the six distinct ordinal patterns associated with segment length \(D=3\) in Table 1. We ignore the circumstances that the equal entries might appear in the segment because the probability of two identical curves in an observed functional time series is negligible.

It is worth noting that the way that the vector \(s_{t,\tau }^{D}\) is constructed in PD is not the same as that in PE. The observations in the constructed vectors in PE are \(\tau \) intervals apart, whereas in PD, the first \(D-1\) observations in \(s_{t,\tau }^{D}\) are consecutive, only the last entry is \(\tau \)-step-ahead. Modifying the constructed vectors in PD enhances its sensitivity in detecting temporal structures. The temporal structure between the consecutive entries is often the strongest in most real-world time series. In such process, the regularity of ordinal pattern in \(\left( x_{t},x_{t+1},\ldots ,x_{t+D-2},x_{t+D-2+\tau } \right) \) would be greater than that in \(\left[ x_{t},x_{t+\tau },\ldots ,x_{t+\left( D-2\right) \tau },x_{t+\left( D-1\right) \tau }\right] \).

The domain of the measure \(\rho ^{\text {nonlinear}}_{\tau }\) is bounded in [0, 1], where 0 indicates a completely stochastic process, and 1 indicates a completely predictable process. The greater its value, the stronger dependence it indicates.

If \(\{x_{t};t=1,2,\ldots ,N\}\) lacks of temporal dependence structure, the ordinal patterns in \(s_{t,\tau }^{D}\) and \(s_{t,\tau }^{D,\text {rand}}\) are expected to follow the same distribution. Therefore, \(O(\pi _{i})=E(\pi _{i})\) for \(i=1,2,\ldots ,D!\), thus \(\rho ^{\text {nonlinear}}_{\tau }=0\). On the other hand, if \(\{x_{t};t=1,2,\ldots ,N\}\) is perfectly predictable, in which case all constructed segments \(s_{t,\tau }^{D}\) follow the same ordinal pattern, i.e.,

the ordinal pattern distribution in the simulated segments \(s_{t,\tau }^{D,\text {rand}}\) is expected to be

The ordinal pattern \(\pi _{b}\) and \(\pi _{a}\) have the same ordinal pattern in the first \(D-1\) entries, except the last entry in \(\pi _{b}\) is in a different position as in \(\pi _{a}\) relative to the first \(D-1\) entries. Consequently, \(\rho ^{\text {nonlinear}}_{\tau }=1\).

According to the chi-square test, under the condition that \(\{x_{t};t=1,2,\ldots ,N\}\) has no temporal structure,

as \(N\rightarrow \infty \). The degree of freedom is determined by the number of free variables among the frequencies of D! ordinal patterns. It is easy to derive that among D! ordinal patterns, with the first \(D-1\) entries restricted in a given pattern, there are \(D!-(D-1)!-1\) number of free variables associated with the \(\chi ^{2}\) distributed statistic under independence.

To illustrate how \(\rho ^{\text {nonlinear}}_{\tau }\) compensates the weakness of PE, Fig. 1 provides a comparison between \(\rho ^{\text {nonlinear}}_{\tau }\) and \(1-\text {PE}\) as a function of increasing lag on a simulated ARMA(1,1) process:

The simulated ARMA(1,1) process exhibits gradually diminished linear serial correlations for increasing lags. However, 1-PE shows an insignificant and steady value regardless of the increasing lags. The insignificant 1-PE is caused by the fact that the value of PE reflects the temporal dependence at multiple delays. Bandt and Shiha [29] showed that, for a stationary Gaussian process, the value of \(1-\text {PE}\) with segment length \(D=3\) at lag \(\tau \) is positively related to the ACF at lag \(\tau \), but also negatively related to the ACF at lag \(2\tau \). As a result, when the two contributing components offset each other, PE losses the capacity to detect the temporal structure that is diminishing at a slow rate. The design of \(\rho ^{\text {nonlinear}}_{\tau }\) eliminates the impact from the temporal structures at lags other than the selected lag \(\tau \). From Fig. 1c, \(\rho ^{\text {nonlinear}}_{\tau }\) successfully unveils the gradually diminishing serial correlations underlying the simulated time series. Additionally, the plot of \(\rho ^{\text {nonlinear}}_{\tau }\) is in close resemblance to the theoretical ACF of the simulated process.

To measure the temporal dependence within the observed functional time series \(\varvec{\mathcal {X}}(u)\), one simply replace the univariate time series \(\{x_{t};t=1,2,\ldots ,N\}\) in the specification of \(\rho ^{\text {nonlinear}}_{\tau }\) with \(\{K(\varvec{f}_{t});t=1,2,\ldots ,N\}\) defined in Sect. 2.1.

The newly proposed temporal measure \(\rho ^{\text {nonlinear}}_{\tau }\) requires minimal prior knowledge and assumptions about the underlying dynamics of the observed data, but a pre-chosen segment length parameter D. Following the guideline of choosing D in the computation of PE, D is commonly chosen to be between 3 and 7. The choice of D needs to be subject to the restriction \(N-\left( D-1\right) \tau \gg D!\) [30, 31]. For simplicity and computational efficiency, we choose \(D=3\) as the default segment length in the subsequent analysis.

3 Simulation studies

We compare the fACF (\(\rho _{\tau }\)) and the nonlinear fACF (\(\rho ^{\text {nonlinear}}_{\tau }\)) on both simulated linear and nonlinear functional time series processes to examine their consistency and showcase their respective strength and weakness.

3.1 Data generating process from a FAR(1) model

The linear functional time series process we choose for the simulation study is the FAR(1) process. The FAR(1) process specification is given in Eq. (2). We use the function simul.far in the

package ‘far’ [32] to generate the FAR(1) process. To compare the behavior of the fACF and the nonlinear fACF in the process of different serial correlations, we generate four FAR(1) models with different linear operators \(\rho \), i.e., \(\rho =0.7\), 0.45, 0.2, and 0. For each simulated model, 1000 sample paths are generated. The generated functional time series in each path contains 100 discretization equal-spaced points within (0,1) and 1000 observations. The error terms \(\epsilon _{t}(u)\) are strong white noise, and five equally spaced sinusoidal bases are used to construct the FAR(1) model.

Figure 2 plots the 10 observations in a simulated FAR(1) process with \(\rho =0.7\) and the corresponding scalar value \(K(\varvec{f}_{t})\) assigned to each observed curves. This plot illustrates how a sequence of observed curves is transformed into a scalar time series. The value of \(K(\varvec{f}_{t})\) is indicative of the relative position of each curve within all observations.

Ten simulated function variables of FAR(1) process with \(\rho =0.7\) and the scalar value \(K(\varvec{f}_{t})\) (purple dots) assigned to each observed curve. The red dashed curve denotes \(\mathcal {X}_{i^{1}}(u)\) and the yellow dashed curve denotes \(\mathcal {X}_{i^{2}}(u)\) defined in Eq. (4). They are the furthest distanced observations within the ten simulated function variables

The red-dashed and yellow-dashed curves are the furthest distanced pair among the plotted ten simulated curves. We select the curve that occurs earlier, \(\mathcal {X}_{i^{1}}(u)\) (the red-dashed curve), as the target \(\mathcal {X}_{i^{*}}(u)\). Consequently, \(K(\varvec{f}_{i^{*}})\) is equal to zero since the distance between the target and itself is zero. Therefore, the target curve has the lowest corresponding scalar value. The yellow-dashed curve has the highest scalar value. Any other curve is assigned a scalar value in between. Their scalar values depend on their distance to \(\mathcal {X}_{i^{*}}(u)\).

It is worth noting we also test the case when selecting \(\mathcal {X}_{i^{2}}(u)\) (the yellow-dashed curve) as the target in the Appendix. In Appendix, Figs. 9 and 10 depict the comparison when \(\mathcal {X}_{i^{1}}(u)\) is selected as \(\mathcal {X}_{i^{*}}(u)\) and when \(\mathcal {X}_{i^{2}}(u)\) is selected as \(\mathcal {X}_{i^{*}}(u)\). The values of nonlinear fACF are very close in both cases, suggesting choosing either of them as the target curve has little effect in computing the nonlinear fACF.

To examine the behavior of the newly proposed nonlinear fACF on linear functional time series process, we compute the \(\rho ^{\text {nonlinear}}_{\tau }\) on each path of the four FAR(1) processes and plot their averages in Fig. 3. As a comparison, we also plot the fACFs on the same simulated process below. The fACF is calculated through the

package ‘fdaACF’ [19]. We also provide the 95% confidence intervals of \(\rho ^{\text {nonlinear}}_{\tau }\) and \(\rho _{\tau }\) under the assumption of independence in Fig. 3 to indicate their significance. Their 95% confidence intervals under independence are computed following Eqs. (1) and (5), respectively.

Comparing the fACF and the nonlinear fACF demonstrates their consistency in linear processes. The simulated FAR(1) process with stronger serial correlation is associated with greater values of \(\rho ^{\text {nonlinear}}_{\tau }\) and \(\rho _{\tau }\) for all considered delays. The \(\rho ^{\text {nonlinear}}_{\tau }\) is perfectly aligned with the strength of the serial correlation proposed by different FAR(1) processes, as well as the \(\rho _{\tau }\). However, despite their alignment in revealing various serial correlations, their magnitudes and the slopes of decay for increasing delays differ significantly for the same process. For instance, in the FAR(1) process with \(\rho =0.7\), \(\rho _{\tau }\) displays an average value of 0.55 at delay one and diminishes around 30% to 0.4 at delay two. For the same process, \(\rho ^{\text {nonlinear}}_{\tau }\) only gives a value of 0.12 at delay one and diminishes at a much faster rate of 66% to 0.04 at delay two.

The considerable discrepancy between the two measures is that their values have different implications. For instance: A value of one in the fACF indicates the process has perfect collinearity. However, a value of one in the nonlinear fACF suggests the process is perfectly predictable. A perfect collinear process can be stochastic as long as an innovation term is present in the dynamics. A perfectly predictable process can also violate perfect collinear, as it can be governed by nonlinear deterministic relation. Therefore, we need to be careful when interpenetrating the implication behind the value of each measure.

Besides comparing the average value of the fACF and the nonlinear fACF on the simulated FAR(1) process of various strengths of serial correlation, we also provide the 2.5% and the 97.5% of the two measures on each specified process. The plots of the percentile of the fACF and the nonlinear fACF are given in Fig. 4. The percentile bands of each measure at various delays are indicative of the variance associated with \(\widehat{\rho }_{\tau }\) and \(\widehat{\rho }^{\text {nonlinear}}_{\tau }\). Figure 4 suggests that the variance of \(\widehat{\rho }_{\tau }\) remains at a steady level despite its magnitude. However, the variance associated with \(\widehat{\rho }^{\text {nonlinear}}_{\tau }\) tends to increase with its magnitude. In other words, the greater the \(\widehat{\rho }^{\text {nonlinear}}_{\tau }\) is, the more uncertainty the nonlinear fACF involves.

3.2 Data generating process from a functional GARCH model

The nonlinear functional time series process we choose in the simulation study is the functional GARCH process proposed by [18]. A sequence of random functions \((\mathcal {X}_{t}:t\in \mathbb {Z})\) is called a functional GARCH process of order (1,1), abbreviated by fGARCH(1,1), if it satisfied the equations

where \(\delta \) is a nonnegative function, the operators \(\alpha \) and \(\beta \) map nonnegative functions to nonnegative functions and the innovations \(\varepsilon _{t}\) are iid random functions. Our simulated fGARCH processes inherit the format of the simulated fGARCH process in [18]. We set

and the integral operators \(\alpha \) and \(\beta \) to be

where C is constant. The innovations \((\varepsilon _{t}:t\in \mathbb {Z})\) are defined as

where \((B_{t}:t\in \mathbb {Z})\) are iid standard Brownian motions.

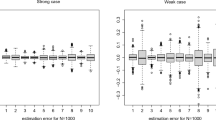

We simulated 1000 random paths of the specified fGARCH process with \(C=14\) and \(C=15\) in Eq. (9) to compare the behavior of \(\rho ^{\text {nonlinear}}_{\tau }\) and \(\rho _{\tau }\) on nonlinear functional time series process. Each simulated path contains 1000 observational curves with 100 discretization equally spaced points within a unit interval. Figure 5 plots the mean, the 2.5%, and the 97.5% of the computed fACF, and the nonlinear fACF on the two specified fGARCH processes. Figure 5a and b indicates that the fACF is insensitive to the form of the temporal dependence postulated by the simulated fGARCH models. The fACF exhibits marginally significant values at the considered delays and displays minimal difference for the simulated nonlinear process with varied strength of temporal dependence. On the contrary, the nonlinear fACF produces a distinctively significant value relative to its 95% confidence intervals. Further, the nonlinear fACF displays a much wider gap between the simulated fGARCH process with \(C=14\) and \(C=15\), demonstrating the superior ability of \(\rho ^{\text {nonlinear}}_{\tau }\) in differentiating various levels of nonlinear temporal structures.

4 Foreign exchange rate modeling

We compute the nonlinear fACF on six empirical functional time series. The functional time series are the daily curves of 10-minute EUR/USD, JPY/USD, and AUD/USD squared returns and the daily curves of one-hour realized volatilities recorded for the same currency pairs in the same investigation period.

We choose to investigate these functional time series because we expect the daily curves of intraday volatility series to exhibit a certain level of long-memory nonlinear temporal structures. Our expectation comes from the consensus in the literature that conditional heteroskedasticity is a widely documented feature of financial time series (see, e.g., [33, 34]). The GARCH model is a popular univariate time series model to address the heteroskedasticity in the financial return and volatility series. More recently, the functional GARCH model has been proposed by [18] and is applied to the curves of intraday volatility to model the temporal structures between the volatility functions recorded daily. Suppose the functional GARCH process is the appropriate model for capturing the temporal structures within the daily volatility curves. In that case, we expect our newly proposed \(\rho ^{\text {nonlinear}}_{\tau }\) to reveal the nonlinear temporal structures underlying our investigated data that \(\rho _{\tau }\) might overlook.

Let \(P=\{p_{i}; i=1,...,N+1\}\) denote the close bid rate of EUR/USD, the ln return series \(\ R=\{r_{i};i=1,...,N\}\) is computed through

and the squared returns are denoted by \(R^{2}=\{r_{i}^{2};i=1,...,N\}\). The one-hour realized volatility is obtained using

The close bid price of the foreign exchange rate is provided by Thomson Reuters Tick History (https://www.refinitiv.com/en/financial-data/market-data/tick-history). The price data are recorded from 21:00 GMT 16/06/2013 to 20:50 GMT 21/06/2019, with the weekend entries removed (from 21:00 GMT Friday to 20:50 GMT Sunday inclusive), constituting 1570 weekdays. Consequently, the empirical functional time series under investigation contains 1570 functional observations. Each daily curve of 10-minute squared returns consists of 144 discretization points within each day. Each daily curve of one-hour aggregated volatilities consists of 24 discretization points.

Intraday periodicity in volatility is a commonly observed feature in high-frequency financial time series [35]. The periodicity in intraday foreign exchange rate returns is a 24-hour pattern mainly attributed to the differences in trading times in the global foreign exchange markets. To remove the intraday periodicity, we employ Andersen and Bollerslev’s[36]’s flexible Fourier transform method to deseasonalize the intraday squared returns.

Figures 6, 7 and 8 provide the plots of \(\rho _{\tau }\) and \(\rho ^{\text {nonlinear}}_{\tau }\) on the six Forex volatility functional time series. The upper two subplots in these figures show the behavior of the standard fACF versus various lags, and the bottom two plots correspond to the newly proposed nonlinear alternative. From the provided figures, \(\rho _{\tau }\) displays marginal or insignificant values for most of the considered delays except at several non-consecutive lags. Such observation indicates the prevalent lack or weak linear serial correlation between the daily Forex volatility functional time series. However, the distinct peaks in the observed non-consecutive lags, such as at \(\tau =5\) in Fig. 6a and b, at \(\tau =38\) in Figs. 7a, 8a and b, could be caused by seasonality that is not captured by our intraday seasonal filter.

Unlike the fACF plots that exhibit sharp peaks at a few non-consecutive lags, the nonlinear fACF declines gradually over increasing lags. Further, the value of \(\rho ^{\text {nonlinear}}_{\tau }\) remains significant up to around lag 50 for the functional time series formed by one-hour volatilities. The significant \(\rho ^{\text {nonlinear}}_{\tau }\) suggests that daily curves of intraday volatilities exhibit persistent temporal dependence. Moreover, the significant \(\rho ^{\text {nonlinear}}_{\tau }\) but insignificant \(\rho _{\tau }\) at many short-term lags unveil an important finding. Even though daily curves of intraday volatilities are not correlated in a simple linear format for adjacent days, their connection is through a certain nonlinear structure.

The nonlinear fACF also differentiates the varied level of dependence for the volatility time series formed by different frequency data. The nonlinear fACF suggests the daily curves formed by the one-hour aggregated volatility exhibit a much stronger temporal structure than those formed by 10-minute squared returns. The standard fACF cannot reveal such a difference as it fluctuates at similar levels for the 10-minute squared return and one-hour aggregated volatility functional time series. However, the nonlinear fACF displays distinctively higher values at all considered delays for the daily curves of one-hour realized volatilities than that formed by 10-minute squared returns.

Moreover, the empirical analysis also provides some insight into the volatility dynamics for different Forex rate markets. For the three considered USD-based Forex rates, the nonlinear fACF suggests that the EUR/USD exhibits the strongest temporal dependence and then the JPY/USD. The daily curves of AUD/USD intraday volatilities have the weakest temporal structures. The rank of the temporal structure strength seems to coincide with the popularity and the volume traded for the considered currency pairs.

The empirical study comparing the fACF and the nonlinear fACF on Forex volatility functional time series explicitly demonstrates the merits of introducing a temporal dependence measure to capture the nonlinear structures. The nonlinear fACF shows its superiority to the standard fACF. It confirms nonlinear temporal structures within the daily volatility curves of forex rate returns and reflects the added strength of temporal structures when longer interval aggregated volatilities form the curves. These conclusions cannot be achieved by employing the standard fACF alone.

5 Conclusion

We proposed a new nonparametric measure based on the ordinal pattern to measure the functional time series’s temporal structure (both linear and nonlinear). Compared to the functional ACF (fACF), our measure is shown to have the capacity to reveal the nonlinear structure that fACF overlooks. The significance of our research is illustrated in Sects. 3.2 and 4, where we applied our measure to a simulated fGARCH process and empirical daily curves of forex volatility. It is shown that fACF is insensitive to the form of temporal structures specified in the fGARCH process and present in the observed daily curves of forex volatility. Without our tool, practitioners might be misled by the insignificant or marginal significant value of fACF that the underlying processes do not have strong temporal dependence, thus no modeling or prediction potentials. But our tool indicates the opposite inferences. Additionally, it reveals whether the temporal dependence is linear or nonlinear; and if employing a linear model, such as the FAR or fARMA model or linear analysis (such as tools based on autocorrelation/autocovariance) is sufficient. Such inference calls for more research on developing nonlinear models and prediction approaches for functional time series, where currently, the researches in this area are still scarce.

While our measure demonstrates a capacity to reveal nonlinear structures, when the process is linear, the simulation study suggests it is also consistent with the fACF. Therefore, the nonlinear fACF can complement the fACF when one studies the temporal dependence of a functional time series.

The advantages of the nonlinear fACF reside in its minimal requirement of prior assumption and knowledge about the underlying dynamics of the investigated data and its non-restriction by any form of temporal structure. Because of those advantages, the nonlinear fACF is an excellent tool for testing independence within the functional time series. We showed in our simulation studies that when the functional time series process is iid, the value of the \(\rho ^{\text {nonlinear}}_{\tau }\) is well below its 95% confidence intervals derived from its asymptotic distribution. Because of the nature of the nonlinear fACF, the independence hypothesis test based on it can be considered a portmanteau test that tests against various possible deviations from independence.

The major limitation of the current study is that the tool we developed can only detect the existence of temporal structures (both linear and nonlinear) present in the observed functional time series. However, it cannot indicate the form of the detected structures. As a consequence, practitioners have no guidance as to what kind of models or prediction approaches are more promising than others. Additionally, the nonlinear fACF employs a dimension reduction technique to convert the observed functions into multivariate time series. The dimension reduction technique would inevitably lead to the loss of information on the functional observations. One could consider using fully functional approaches, such as with the aid of depth [37], to rank the observed curves. The simulation studies suggest that the variance associated with our nonlinear fACF is generally greater than that of the fACF relative to their respective magnitudes. The variance could be reduced without dimensional reduction, thereby fewer uncertainties for the nonlinear fACF measure.

Our study is the first proposal of a temporal dependence measure of functional time series, focusing on nonlinear structures to the best of our knowledge. Despite its imperfection, we hope that our work will spur further research into the dependence structure of functional time series, particularly in analyzing, modeling, and forecasting nonlinear functional time series.

Data availability

The datasets generated during and/or analyzed during the current study are available from the corresponding author on reasonable request.

References

Rice, G., Wirjanto, T., Zhao, Y.: Exploring volatility of crude oil intra-day return curves: a functional GARCH-X model. MPRA working paper 109231, University of Waterloo, (2021)

Kearney, F., Shang, H. L., Zhao, Y.: Intraday foreign exchange rate volatility forecasting: univariate and multilevel functional GARCH models. Working paper, Queen’s University Belfast, (2022)

Shang, H. L., Haberman, S., Xu, R.: Multi-population modelling and forecasting life-table death counts. Insur.: Math. Econ. 106, 239–253, (2022a)

Shang, H. L., Cao, J., Sang, P.: Stopping time detection of wood panel compression: a functional time-series approach. J. R. Stat. Soc.: Ser. C, in press, (2022b)

Kokoszka, P., Reimherr, M.: Introduction to Functional Data Analysis. Chapman and Hall/CRC, Boca Raton (2017)

Ramsay, J.O., Silverman, B.W.: Applied Functional Data Analysis: Methods and Case Studies, vol. 77. Springer, New York (2002)

Ramsay, J.O., Silverman, B.W.: Functional Data Analysis. Springer, New York (2005)

Hooker, G., Shang, H.: Selecting the derivative of a functional covariate in scalar-on-function regression. Stat. Comput. 32(3), 35 (2022)

Shang, H.L.: Visualizing rate of change: an application to age-specific fertility rates. J. R. Stat. Soc.: Ser. A (Stat. Soc.) 182(1), 249–262 (2019)

Kokoszka, P., Rice, G., Shang, H.L.: Inference for the autocovariance of a functional time series under conditional heteroscedasticity. J. Multivar. Anal. 162, 32–50 (2017)

Bosq, D.: Linear Processes in Function Spaces: Theory and Applications, vol. 149. Springer Science & Business Media, New York (2000)

Damon, J., Guillas, S.: The inclusion of exogenous variables in functional autoregressive ozone forecasting. Environmetrics 13(7), 759–774 (2002)

Turbillon, C., Marion, J.-M., Pumo, B.: Estimation of the moving-average operator in a Hilbert space. In: Recent advances in stochastic modeling and data analysis, pp. 597–604. World Scientific, (2007)

Klepsch, J., Klüppelberg, C., Wei, T.: Prediction of functional ARMA processes with an application to traffic data. Econ. Stat. 1, 128–149 (2017)

Zamani, A., Haghbin, H., Hashemi, M., Hyndman, R.J.: Seasonal functional autoregressive models. J. Time Ser. Anal. 43(2), 197–218 (2022)

González, J.P., San Roque, A.M.S.M., Perez, E.A.: Forecasting functional time series with a new Hilbertian ARMAX model: application to electricity price forecasting. IEEE Trans. Power Syst. 33(1), 545–556 (2017)

Hörmann, S., Horváth, L., Reeder, R.: A functional version of the ARCH model. Economet. Theor. 29(2), 267–288 (2013)

Aue, A., Horváth, L., Pellatt, D.F.: Functional generalized autoregressive conditional heteroskedasticity. J. Time Ser. Anal. 38(1), 3–21 (2017)

Mestre, G., Portela, J., Rice, G., Roque, A.M.S., Alonso, E.: Functional time series model identification and diagnosis by means of auto-and partial autocorrelation analysis. Comput. Stat. Data Anal. 155, 107108 (2021)

Canale, A., Vantini, S.: Constrained functional time series: applications to the italian gas market. Int. J. Forecast. 32(4), 1340–1351 (2016)

Horváth, L., Hušková, M., Rice, G.: Test of independence for functional data. J. Multivar. Anal. 117, 100–119 (2013)

Zhang, X.: White noise testing and model diagnostic checking for functional time series. J. Econ. 194(1), 76–95 (2016)

Bandt, C., Pompe, B.: Permutation entropy: a natural complexity measure for time series. Phys. Rev. Lett. 88(17), 174102 (2002)

Zunino, L., Soriano, M.C., Fischer, I., Rosso, O.A., Mirasso, C.R.: Permutation-information-theory approach to unveil delay dynamics from time-series analysis. Phys. Rev. E 82(4), 046212 (2010)

Parlitz, U., Berg, S., Luther, S., Schirdewan, A., Kurths, J., Wessel, N.: Classifying cardiac biosignals using ordinal pattern statistics and symbolic dynamics. Comput. Biol. Med. 42(3), 319–327 (2012)

Bandt, C.: Ordinal time series analysis. Ecol. Model. 182(3–4), 229–238 (2005)

Groth, A.: Visualization of coupling in time series by order recurrence plots. Phys. Rev. E 72(4), 046220 (2005)

Li, D., Robinson, P.M., Shang, H.L.: Long-range dependent curve time series. J. Am. Stat. Assoc.: Theor. Methods 115(530), 957–971 (2020)

Bandt, C., Shiha, F.: Order patterns in time series. J. Time Ser. Anal. 28(5), 646–665 (2007)

Rosso, O.A., Larrondo, H.A., Martin, M.T., Plastino, A., Fuentes, M.A.: Distinguishing noise from chaos. Phys. Rev. Lett. 99(15), 154102 (2007)

Kowalski, A.M., Martín, M.T., Plastino, A., Rosso, O.A.: Bandt-Pompe approach to the classical-quantum transition. Phys. D 233(1), 21–31 (2007)

Serge, D.J.G.: far: Modelization for Functional AutoRegressive Processes, (2022). R package version 0.6-6. URL: https://CRAN.R-project.org/package=far

Bollerslev, T.: Generalized autoregressive conditional heteroskedasticity. J. Economet. 31(3), 307–327 (1986)

Engle, R.F.: Autoregressive conditional heteroscedasticity with estimates of the variance of United Kingdom inflation. Econ.: J. Econ. Soc. 50(4), 987–1007 (1982)

Martens, M., Chang, Y.-C., Taylor, S.J.: A comparison of seasonal adjustment methods when forecasting intraday volatility. J. Fin. Res. 25(2), 283–299 (2002)

Andersen, T.G., Bollerslev, T.: Deutsche mark-dollar volatility: intraday activity patterns, macroeconomic announcements, and longer run dependencies. J. Financ. 53(1), 219–265 (1998)

López-Pintado, S., Romo, J.: On the concept of depth for functional data. J. Am. Stat. Assoc.: Theor. Methods 104(486), 718–734 (2009)

Acknowledgements

The authors are grateful for insightful comments and suggestions from three reviewers. We thank the generous provision of financial data from the Securities Industry Research Centre of Asia-Pacific (SIRCA).

Funding

Open Access funding enabled and organized by CAUL and its Member Institutions. The first author acknowledges the financial support of an International Macquarie Research Excellence Scholarship from Macquarie University for the research of this article.

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

Conflict of interest

The authors declare that there is no conflict of interest.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendix

Appendix

Additional comparison between selecting the earlier occurring curve, \(\mathcal {X}_{i^{1}}(u)\), and the later occurring curve, \(\mathcal {X}_{i^{2}}(u)\), in the furthest distanced curves as the target in the computation of nonlinear fACF in the FAR(1) and fGARCH simulation studies, described in Sect. 3.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Huang, X., Shang, H.L. Nonlinear autocorrelation function of functional time series. Nonlinear Dyn 111, 2537–2554 (2023). https://doi.org/10.1007/s11071-022-07927-0

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s11071-022-07927-0