Abstract

Conventional neural networks are universal function approximators, but they may need impractically large amounts of training data to approximate nonlinear dynamics. Recently introduced Hamiltonian neural networks can efficiently learn and forecast dynamical systems that conserve energy, but they require special inputs called canonical coordinates, which may be hard to infer from data. Here, we prepend a conventional neural network to a Hamiltonian neural network and show that the combination accurately forecasts Hamiltonian dynamics from generalised noncanonical coordinates. Examples include a predator–prey competition model where the canonical coordinates are nonlinear functions of the predator and prey populations, an elastic pendulum characterised by nontrivial coupling of radial and angular motion, a double pendulum whose canonical momenta are intricate nonlinear combinations of angular positions and velocities, and real-world video of a compound pendulum clock.

Data Availability Statement

Our code and data are available at GitHub [29].

References

Cybenko, G.: Approximation by superpositions of a sigmoidal function. Math. Control Signals Syst. (MCSS) 2(4), 303 (1989). https://doi.org/10.1007/BF02551274

Hornik, K.: Approximation capabilities of multilayer feedforward networks. Neural Netw. 4(2), 251 (1991). https://doi.org/10.1016/0893-6080(91)90009-T

Lusch, B., Kutz, J.N., Brunton, S.L.: Deep learning for universal linear embeddings of nonlinear dynamics. Nat. Commun. 9(1), 4950 (2018). https://doi.org/10.1038/s41467-018-07210-0

Jaeger, H., Haas, H.: Harnessing nonlinearity: predicting chaotic systems and saving energy in wireless communication. Science 304(5667), 78 (2004)

Pathak, J., Hunt, B., Girvan, M., Lu, Z., Ott, E.: Model-free prediction of large spatiotemporally chaotic systems from data: a reservoir computing approach. Phys. Rev. Lett. 120, 024102 (2018)

Carroll, T.L.: Network structure effects in reservoir computers. Chaos Interdiscip. J. Nonlinear Sci. 29(8), 083130 (2019)

Iten, R., Metger, T., Wilming, H., del Rio, L., Renner, R.: Discovering physical concepts with neural networks. Phys. Rev. Lett. 124, 010508 (2019)

Udrescu, S.M., Tegmark, M.: AI Feynman: A Physics-Inspired Method for Symbolic Regression. arXiv:1905.11481 (2019)

Wu, T., Tegmark, M.: Toward an artificial intelligence physicist for unsupervised learning. Phys. Rev. E 100, 033311 (2019). https://doi.org/10.1103/PhysRevE.100.033311

Silver, D., Hubert, T., Schrittwieser, J., Antonoglou, I., Lai, M., Guez, A., Lanctot, M., Sifre, L., Kumaran, D., Graepel, T., Lillicrap, T., Simonyan, K., Hassabis, D.: A general reinforcement learning algorithm that masters chess, shogi, and Go through self-play. Science 362(6419), 1140 (2018). https://doi.org/10.1126/science.aar6404

Greydanus, S., Dzamba, M., Yosinski, J.: Hamiltonian Neural Networks. arXiv:1906.01563 (2019)

Toth, P., Rezende, D.J., Jaegle, A., Racanière, S., Botev, A., Higgins, I.: Hamiltonian Generative Networks. arXiv:1909.13789 (2019)

Mattheakis, M., Protopapas, P., Sondak, D., Giovanni, M.D., Kaxiras, E.: Physical Symmetries Embedded in Neural Networks. arXiv:1904.08991 (2019)

Bertalan, T., Dietrich, F., Mezić, I., Kevrekidis, I.G.: On learning Hamiltonian systems from data. Chaos Interdiscip. J. Nonlinear Sci. 29(12), 121107 (2019)

Bondesan, R., Lamacraft, A.: Learning Symmetries of Classical Integrable Systems. arXiv:1906.04645 (2019)

Choudhary, A., Lindner, J.F., Holliday, E.G., Miller, S.T., Sinha, S., Ditto, W.L.: Physics-enhanced neural networks learn order and chaos. Phys. Rev. E 101, 062207 (2020). https://doi.org/10.1103/PhysRevE.101.062207

Miller, S.T., Lindner, J.F., Choudhary, A., Sinha, S., Ditto, W.L.: Mastering high-dimensional dynamics with Hamiltonian neural networks. Chaos Solitons Fractals: X 5, 100046 (2020)

Miller, S.T., Lindner, J.F., Choudhary, A., Sinha, S., Ditto, W.L.: Negotiating the separatrix with machine learning. In: Nonlinear Theory and Its Applications, vol. 2, TBA (2020)

Lotka, A.J.: Contribution to the theory of periodic reactions. J. Phys. Chem. 14(3), 271 (1910). https://doi.org/10.1021/j150111a004

Haykin, S.O.: Neural Networks and Learning Machines, Third edn. Pearson, London (2008)

Baydin, A.G., Pearlmutter, B.A., Radul, A.A., Siskind, J.M.: Automatic differentiation in machine learning: a survey. J. Mach. Learn. Res. 18(153), 1 (2018)

Plank, M.: Hamiltonian structures for the \(n\)-dimensional Lotka–Volterra equations. J. Math. Phys. 36, 3520 (1995). https://doi.org/10.1063/1.530978

O’Dwyer, J.P.: Whence Lotka–Volterra? Conservation laws and integrable systems in ecology. Theor. Ecol. 11, 441 (2018)

Savitzky, A., Golay, M.J.E.: Smoothing and differentiation of data by simplified least squares procedures. Anal. Chem. 36(8), 1627 (1964). https://doi.org/10.1021/ac60214a047

Chartrand, R.: Numerical differentiation of noisy, nonsmooth data. ISRN Appl. Math. 2011, 164564 (2011). https://doi.org/10.5402/2011/164564

Liang, Q., Mendel, J.M.: Interval type-2 fuzzy logic systems: theory and design. IEEE Trans. Fuzzy Syst. 8(5), 535 (2000)

Mohammadzadeh, A., Kaynak, O.: A novel general type-2 fuzzy controller for fractional-order multi-agent systems under unknown time-varying topology. J. Frankl. Inst. 356(10), 5151 (2019)

Mohammadzadeh, A., Kayacan, E.: A non-singleton type-2 fuzzy neural network with adaptive secondary membership for high dimensional applications. Neurocomputing 338, 63 (2019)

Choudhary, A.: Forecasting Hamiltonian Dynamics Without Canonical Coordinates. https://github.com/anshu957/gHNN (2020)

Funding

This research was supported by ONR Grant N00014-16-1-3066, a gift from United Therapeutics, and support from Aeris Rising, LLC. J.F.L. thanks The College of Wooster for making possible his sabbatical at NCSU. S.S. acknowledges support from the J.C. Bose National Fellowship (Grant No. SB/S2/JCB-013/2015).

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendix

Implementation details

1.1 Architecture

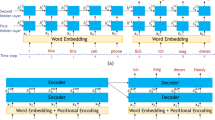

In our three simulated examples, with phase-space dimension \(d=2\) or \(d=4\), NN has \(d\) inputs, 2 hidden layers of 50 neurons each, and \(d\) outputs, giving a d:50:50:d architecture. HNN has \(d\) inputs, 2 hidden layers of 200 neurons each, and 1 output, giving a d:200:200:1 architecture. gHNN is the concatenation of NN and HNN, giving a d:50:50:d:200:200:1 architecture. For the wooden pendulum example, the NN preprocessor and the HNN use 2:20:20:2 and 2:100:100:1 architectures, respectively. All neurons use hyperbolic-tangent sigmoids as the activation in Eq. 1. The neural networks run on desktop computers and are implemented using the PyTorch library.
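
The layer sizes above can be sketched in PyTorch as follows. This is a minimal illustration of the d:50:50:d:200:200:1 layout, not the released code: the names `mlp` and `GHNN` are hypothetical, and leaving the last layer of each sub-network without an activation is an assumption made here for simplicity.

```python
import torch
from torch import nn


def mlp(sizes):
    """Fully connected network with tanh after every hidden layer.

    The final layer is left linear; that choice is an assumption of this sketch.
    """
    layers = []
    for i in range(len(sizes) - 1):
        layers.append(nn.Linear(sizes[i], sizes[i + 1]))
        if i < len(sizes) - 2:
            layers.append(nn.Tanh())
    return nn.Sequential(*layers)


class GHNN(nn.Module):
    """NN preprocessor (d:50:50:d) feeding an HNN (d:200:200:1)."""

    def __init__(self, d, nn_width=50, hnn_width=200):
        super().__init__()
        self.preprocessor = mlp([d, nn_width, nn_width, d])
        self.hamiltonian = mlp([d, hnn_width, hnn_width, 1])

    def forward(self, x):
        z = self.preprocessor(x)    # learned coordinates passed to the HNN
        return self.hamiltonian(z)  # one scalar output per sample


model = GHNN(d=4)
batch = torch.randn(8, 4)           # 8 samples of 4 generalised coordinates
output = model(batch)               # shape (8, 1)
n_params = sum(p.numel() for p in model.parameters())
```

For the wooden pendulum sizes quoted above, the same sketch would be instantiated as `GHNN(d=2, nn_width=20, hnn_width=100)`.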

1.2 Hyperparameters

Just as we use stochastic gradient descent to optimise our weights and biases, we also vary the initial weights and biases and our training hyperparameters to seek the deepest loss minimum in the very high-dimensional landscape of possibilities. One strategy is to repeat the computation multiple times from different starting points, disregard the outliers and the occasional algorithmic errors (such as not-a-number (NaN) results or singular value decomposition failures, which can occur when inverting the Jacobian of Eq. 22), and average the remaining results [17].
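
The repeat, discard, and average strategy might look like the sketch below. The text does not say how outliers are identified, so the median-absolute-deviation rule, the threshold `k`, the caught exception types, and the names `robust_average` and `toy_run` are all assumptions introduced for illustration; `toy_run` merely stands in for a full training run that returns a scalar result such as a forecast error.

```python
import math
import statistics


def robust_average(train_once, n_runs=10, k=3.0):
    """Call train_once(seed) for several seeds, drop bad runs, average the rest."""
    results = []
    for seed in range(n_runs):
        try:
            value = train_once(seed)
        except (ArithmeticError, ValueError, RuntimeError):
            continue  # treat a crashed run (e.g. a failed inversion) as discarded
        if math.isfinite(value):  # drops NaN and infinite results
            results.append(value)
    if not results:
        raise RuntimeError("every run failed")
    # Assumed outlier rule: keep runs within k median absolute deviations.
    med = statistics.median(results)
    mad = statistics.median([abs(r - med) for r in results])
    kept = [r for r in results if mad == 0 or abs(r - med) <= k * mad]
    return statistics.fmean(kept), len(kept)


def toy_run(seed):
    """Hypothetical stand-in for one training run."""
    if seed == 3:
        return float("nan")  # simulated NaN failure
    if seed == 7:
        return 100.0         # simulated outlier
    return 1.0 + 0.01 * (seed % 3)


mean_result, n_kept = robust_average(toy_run)
```

Here the NaN run and the outlying run are dropped and the remaining eight runs are averaged.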

Cite this article

Choudhary, A., Lindner, J.F., Holliday, E.G. et al. Forecasting Hamiltonian dynamics without canonical coordinates. Nonlinear Dyn 103, 1553–1562 (2021). https://doi.org/10.1007/s11071-020-06185-2

DOI: https://doi.org/10.1007/s11071-020-06185-2