Abstract

The proton exchange membrane (PEM) fuel cell is a nonlinear dynamic system which cannot be precisely described and controlled using a linear model. This work has two objectives: (a) it discusses model selection for the PEM and (b) it develops two nonlinear computationally efficient model predictive control (MPC) algorithms for the PEM. Three Wiener model types of different orders of dynamics and complexity of the nonlinear steady-state block are compared. The model consisting of three dynamic blocks and a neural network with five hidden nodes is chosen. To obtain simple MPC quadratic optimization problems, a linear approximation of the model or a linear approximation of the predicted trajectory is repeatedly found. The first MPC scheme gives very good control accuracy, whereas the second MPC scheme leads to the same trajectories as those possible in the “ideal” MPC scheme with full online nonlinear optimization.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

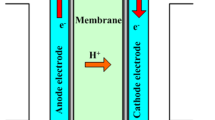

Currently, the transport sector relies on the combustion engine which uses fossil fuels. Alas, it results in emission of greenhouse gases, which leads to serious environmental problems, i.e., air pollution, global warming, climate changes and destruction of the ozone layer. Zero-emission electric vehicles are available, and their popularity grows. The majority of electric vehicles use batteries for energy storage. An interesting alternative is to use a fuel cell for energy generation. Fuel cells are electrochemical devices that convert chemical energy stored in hydrocarbon fuels (usually hydrogen) directly into electrical energy [1]. They have many important advantages: high electrical efficiency, very low emission and quiet operation. Moreover, since fuel cells do not have moving parts, their life cycle is very long. Fuel cells may be produced in different scales: from microwatts to megawatts, which makes them useful in numerous applications. Lastly, hydrogen necessary for fuel cells may be easily produced from different sources (i.e., biomass, coal or natural gas) so dependence on imported oil may be significantly reduced.

Among existing types of fuel cells [1], the proton exchange membrane (PEM) fuel cells are preferred not only for mobile and vehicle applications, including cars, scooters, bicycles, boats and underwater vessels [2, 3], but also for stationary ones. This is because of low operation temperature (usually between 60 and 80 \(^\circ {\mathrm {C}}\)) which gives a fast start-up, simple and compact design as well as reliable operation. Since solid electrolyte is used, no electrolyte leakage is possible. The PEM fuel cells are considered to be very promising power sources, and they are expected to become sound alternatives to conventional power generation methods.

It is necessary to point out that control of PEM fuel cells is a challenging task. Although there are examples of classical control methods applied to the PEM fuel cell, e.g., a linear state feedback controller [4] or a sliding mode controller (SMC) [5], the process is inherently nonlinear and the linear controllers may give control accuracy below expectations. Hence, a number of nonlinear control strategies have been applied to the PEM fuel cell process: an adaptive proportional–integral–derivative (PID) algorithm whose parameters are tuned online by a fuzzy logic system [6, 7] or by a neural network [8], an adaptive PID algorithm with a fuzzy logic feedforward compensator [9], a nonlinear state feedback controller [10], a fuzzy controller [11] and a lookup table [12]. Fractional complex-order controllers may be also used [13, 14]. Recently, model predictive control (MPC) algorithms [15,16,17] have been applied for the PEM fuel cell. In the literature, it is possible to find two categories of MPC:

-

1.

The fully fledged nonlinear MPC (for prediction a nonlinear model is used) which requires solving a nonlinear optimization problem at each sampling instant online [18,19,20,21]. Such an approach may be very computationally demanding, and its practical application may be impossible.

-

2.

The classical MPC algorithm based on a fixed (parameter constant) linear model [22, 23]. In this approach, online calculations in MPC are not demanding (quadratic optimization is used), but the resulting control quality may be not satisfactory because the process is nonlinear and the linear model used in MPC is only a very rough approximation of the process.

The contribution of this work is twofold:

-

1.

Two nonlinear MPC algorithms for the PEM fuel cell are described. In contrast to the algorithms presented in [18,19,20,21], in both algorithms computationally simple quadratic optimization is used, and full nonlinear optimization is not necessary. It is possible because a linear approximation of the model or a linear approximation of the predicted trajectory is found online. The discussed algorithms are compared to the MPC scheme with nonlinear optimization in terms of control accuracy and computational time, and inefficiency of the linear MPC scheme is shown.

-

2.

Both described MPC algorithms use Wiener models of the PEM process, and effectiveness of three structures is compared. The choice of the Wiener model, composed of a linear dynamic block connected in series with a nonlinear steady-state one [24], is motivated by two factors. Firstly, the Wiener model is a natural representation of the PEM process. Secondly, the Wiener model is used in a simple way in the described MPC algorithms; the first of them is motivated by the serial structure of the Wiener model.

A neural network [25] is used in the steady-state part of the Wiener model. Neural networks are very efficient for modeling of dynamic systems, e.g., [26], and control. There are many different control methods based on neural networks: e.g., adaptive PID control [8], adaptive state control [27], adaptive sliding mode control [28], robust feedback control [29], the zeroing dynamics method [30, 31] and MPC [26]. Neural networks may be also used for online matrix calculations [32] and optimization [33].

This paper is structured as follows. Section 2 describes the PEM process and its fundamental model. Next, in Sect. 3 three Wiener structures are characterized. Section 4 details two nonlinear MPC algorithms for the PEM process. Section 5 discusses identification of different types of models and MPC of the PEM process. Section 6 concludes the paper.

2 Proton exchange membrane fuel cell

In the general case, the model of the PEM fuel cell is quite complicated [4]. Hence, the tendency is to use simpler model for development of a control system [34,35,36,37,38,39].

2.1 PEM fuel cell system description

In this work, the PEM fuel cell model introduced in [39] and further discussed in [40,41,42] is considered. The PEM process has one manipulated variable (the input of the process) which is the input methanol flow rate q (\({\mathrm {mol \ s^{-1}}}\)), one disturbance (the uncontrolled input) I which is the external current load (A) and one controlled variable (the output of the process) which is the stack output voltage V (V). The partial pressures of hydrogen, oxygen and water are denoted by \(p_{{\mathrm {H}}_{2}}\), \(p_{{\mathrm {O}}_{2}}\) and \(p_{{\mathrm {H}}_{2}{\mathrm {O}}}\), respectively (atm). The input hydrogen flow, the hydrogen reacted flow and the oxygen input flow are denoted by \(q_{{\mathrm {H}}_{2}}^{{\mathrm {in}}}\), \(q_{{\mathrm {H}}_{2}}^{{\mathrm {r}}}\) and \(q_{{\mathrm {O}}_{2}}^{{\mathrm {in}}}\), respectively (\(\mathrm {mol \ s^{-1}}\)).

2.2 PEM fuel cell continuous-time fundamental model

The fundamental continuous-time model of the PEM system is defined by the following continuous-time equations. The pressure of hydrogen is

where \(K_{{{\mathrm {H}}}_2}\) and \(\tau _{{{\mathrm {H}}}_2}\) denote the valve molar constant for hydrogen and the response time of hydrogen flow, respectively. The input hydrogen flow obtained from the reformer is

where q is the methane flow rate, CV, \(\tau _1\) and \(\tau _2\) are constants. Hence, from Eqs. (1) and (2), the pressure of hydrogen is

The pressure of oxygen is

where \(K_{{{\mathrm {O}}}_2}\) and \(\tau _{{{\mathrm {O}}}_2}\) denote the valve molar constant for oxygen and the response time of oxygen flow, respectively. The input flow rate of oxygen is

where \(\tau _{{\mathrm {H-O}}}\) is the ration of hydrogen to oxygen. Using Eq. (2), the pressure of oxygen is

The pressure of water is

where \(K_{{{\mathrm {H}}}_2{\mathrm {O}}}\) and \(\tau _{{{\mathrm {H}}}_2{{\mathrm {O}}}}\) denote the valve molar constant for water and the response time of water flow, respectively. The hydrogen flow that reacts is

Hence, from Eqs. (7) and (8), the pressure of water is

Finally, the stack output voltage is

From the Nernst’s equation

where \(N_0\), \(E_0\), \(R_0\), \(T_0\) and \(F_0\) denote the number of cells in series in the stack, the ideal standard potential, the universal gas constant, the absolute temperature and the Faraday’s constant, respectively. The activation loses are defined by

where B and C are constants. The ohmic losses are

where \(R^{{\mathrm {int}}}\) is the internal resistance.

The continuous-time fundamental model consists of Eqs. (2), (3), (6), (8)–(13). The values of parameters are given in Table 1. Table 2 gives the values of process variables for the initial operating point. The values of process input and disturbance signals are constrained

3 Wiener models of PEM fuel cell

It can be noted that the continuous-time fundamental model of the discussed PEM fuel cell is characterized by linear dynamic transfer functions (Eqs. 3, 6, 9), but the stack voltage is defined by the nonlinear steady-state Nernst’s equation (11). This means that the outputs of the linear dynamic part of the model are inputs of the nonlinear steady-state one. Hence, it is straightforward to use the Wiener structure as an empirical model of the considered PEM fuel cell.

For model identification, the manipulated variable of the process, q, the disturbance, I, and the output, V, are scaled

where \(\bar{q}\), \(\bar{I}\) and \(\overline{V}\) denote values of process variables at the initial operating point (Table 2).

3.1 Wiener model: structure A

Figure 1 depicts the first structure of the Wiener model (structure A). It consists of a linear dynamic block connected in series with a nonlinear steady-state one. The linear block has two inputs (u, h) and one output, v, which is an auxiliary variable. The linear block is characterized by the equation

The integers \(n_{{\mathrm {A}}}\) and \(n_{{\mathrm {B}}}^j\), \(j=1,2\) define the order of the model dynamics. The constant parameters of the linear dynamic blocks are denoted by the real numbers \(a_i\) (\(i=1,\ldots ,n_{{\mathrm {A}}}\)), \(b_i^1\) (\(i=1,\ldots ,n_{{\mathrm {B}}}^1\)) and \(b_i^2\) (\(i=0,\ldots ,n_{{\mathrm {B}}}^1\)). It is important to note that the signal v(k) depends directly on the signal h(k) since the current, I, has an immediate impact on the voltage, V. The output signal of the linear dynamic block is taken as the input of the steady-state one. The nonlinear steady-state part of the model is described by the general equation

As the differentiable function \(g :{\mathbb {R}} \rightarrow {\mathbb {R}}\) a neural network with one input, one hidden layer with K units and one output is used [25]. The model output is

where \(\varphi :{\mathbb {R}} \longrightarrow {\mathbb {R}}\) is a nonlinear transfer function (e.g., hyperbolic tangent). Weights of the network are denoted by \(w_{l,m}^{1}\), \(l=1,\ldots ,K\), \(m=0,1\) and \(w_{l}^{2}\), \(l=0,\ldots ,K\), for the first and the second layers, respectively. The total number of weights is \(3K+1\). All parameters of the Wiener model, i.e., the parameters of the dynamic part and weights of the neural network, are determined from an identification procedure. During identification, the model error defined by

is minimized, where \(y_{{\mathrm {mod}}}(k)\) and y(k) denote the model output and the training data, respectively, \(n_{{\mathrm {samples}}}\) denotes the number of data samples. Since the model is nonlinear, optimization of the model parameters is a nonlinear optimization task which is solved off-line. For this purpose, the sequential quadratic programming (SQP) algorithm is used [43], which makes it possible to take into account constraints during optimization. To enforce stability of the Wiener model, the poles of linear dynamic block are optimized subject to stability constraints. (All poles must belong to the unit circle.) Next, from the optimized poles the model coefficients \(a_i\) (Eq. 17) are calculated. The values of \(b_i^j\), \(w_{l,m}^{1}\) and \(w_{l}^{2}\) are directly calculated (optimized) with no constraints.

3.2 Wiener model: structure B

Figure 2 depicts the second structure of the Wiener model (structure B). It consists of two linear dynamic blocks and a nonlinear steady-state one, but unlike structure A, it has two inputs. The outputs of the linear blocks are characterized by the equations

The integers \(n_{{\mathrm {A}}}^j\), \(n_{{\mathrm {B}}}^j\), \(j=1,2\) define the order of the model dynamics. The constant parameters of the linear dynamic blocks are denoted by the real numbers \(a_i^1\) (\(i=1,\ldots ,n_{{\mathrm {A}}}^1\)), \(a_i^2\) (\(i=1,\ldots ,n_{{\mathrm {A}}}^2\)), \(b_i^1\) (\(i=1,\ldots ,n_{{\mathrm {B}}}^1\)) and \(b_i^2\) (\(i=0,\ldots ,n_{{\mathrm {B}}}^1\)). The signal \(v_2(k)\) depends on the signal h(k) since the current, I, has an immediate impact on the voltage, V. The nonlinear steady-state block is described by the general equation

A neural network with two inputs, one hidden layer with K units and one output is used. The model output is

The weights of the network are denoted by \(w_{l,j}^{1}\), \(l=1,\ldots ,K\), \(j=0,1,2\) and \(w_{l}^{2}\), \(l=0,\ldots ,K\), for the first and the second layers, respectively. The overall number of weights is \(4K+1\). The parameters of the second type of the Wiener model are also obtained by minimization of the model error function (20) subject to the constraints to enforce model stability (the poles of Eqs. 21 and 22 must belong to the unit circle).

3.3 Wiener model: structure C

Figure 3 depicts the third structure of the Wiener model (structure C). It has three linear dynamic blocks. They are characterized by the equations

The integers \(n_{{\mathrm {A}}}^j\) for \(j=1,2,3\), \(n_{{\mathrm {B}}}^{ij}\) for \(i=1,2\), \(j=1,2\) and \(n_{{\mathrm {B}}}^3\) define the order of the model dynamics. The constant parameters of the linear dynamic blocks are denoted by the real numbers \(a_i^j\) (\(i=1,\ldots ,n_{{\mathrm {A}}}^j\), \(j=1,2,3\)), \(b_i^{jl}\) (\(i=1,\ldots ,n_{{\mathrm {B}}}^j\), \(j=1,2\), \(l=1,2\)) and \(b_i^{3}\) (\(i=1,\ldots ,n_{{\mathrm {B}}}^3\)). Unlike two previously discussed model structures, in structure C the steady-state block has an additional input which is the value of the disturbance signal, h, measured at the current sampling instant, k. The nonlinear steady-state block is described by the general equation

A neural network with four inputs, one hidden layer with K units and one output is used. The model output is

Weights of the network are denoted by \(w_{l,j}^{1}\), \(l=1,\ldots ,K\), \(j=0,\ldots ,4\) and \(w_{l}^{2}\), \(l=0,\ldots ,K\), for the first and the second layers, respectively. The overall number of weights is \(6K+1\). In this case, model parameters are also obtained by minimization of the model error function (20) subject to the constraints to enforce model stability (the poles of Eqs. 25–27 must belong to the unit circle).

4 Nonlinear model predictive control algorithms of PEM fuel cell

4.1 MPC problem formulation

MPC is an advanced control technique in which a dynamic model is used repeatedly online to predict the future behavior of the controlled process and an optimization procedure finds the best possible control policy [15,16,17]. The MPC approach has important advantages: It offers good control quality and takes into account all existing constraints imposed on process variables. As a result, MPC algorithms have been applied to numerous technological processes, mainly in industrial process control [44], e.g., chemical reactors [45], but also for control: computer networks [46], unmanned vehicles [28], antilock brake systems in automobiles [47], unmanned helicopters [27], tractor–trailer vehicles [48], overhead cranes [49], underwater vehicles [50], active steering systems in cars [29] and even chaotic systems [51].

Let u denote the scaled input (manipulated) variable of the process and y denote the scaled output (controlled) variable (Eq. 16). In MPC algorithms [15,16,17] at each consecutive sampling instant k, a future control policy for a control horizon, \(N_{{\mathrm {u}}}\), is calculated. Typically, increments of the manipulated variable are found

where \(\triangle u(k|k)=u(k|k)-u(k-1)\), \(\triangle u(k+p|k)=u(k+p|k)-u(k+p-1|k)\) for \(p=1,\ldots ,N_{{\mathrm {u}}}-1\). It is assumed that \(\triangle u(k+p|k)=0\) for \(p\ge N_{{\mathrm {u}}}\), i.e., \(u(k+p|k)=u(k+N_{{\mathrm {u}}}-1|k)\) for \(p\ge N_{{\mathrm {u}}}\). The control increments (30) are calculated at each sampling instant from an optimization problem in which the predicted control errors are minimized. They are defined as the differences between the set-point trajectory, \(y^{{\mathrm {sp}}}(k+p|k)\), and the predicted trajectory, \(\hat{y}(k+p|k)\), over the prediction horizon \(N\ge N_{{\mathrm {u}}}\). The MPC cost function is

A dynamic model of the controlled process is used to calculate online the predicted values of the process output variable. The second part of the MPC cost function is a penalty term used to limit increments of the manipulated variable. An advantage of MPC algorithms is the fact that all existing constraints may be taken into account in an optimization task solved at each sampling instant online to find the optimal increments of the manipulated variable (30). Assuming that there are constraints imposed on the value and the rate of change of the manipulated variables as well as on the predicted values of process output, the rudimentary MPC optimization problem is

The minimal and maximal possible values of the manipulated variable are denoted by \(u^{\min }\) and \(u^{\max }\), respectively, the maximal allowed increment of that variable is denoted by \(\triangle u^{\max }\), and the minimal and maximal possible values of the predicted controlled variable are denoted by \(y^{\min }\) and \(y^{\max }\), respectively. At each sampling instant, the whole sequence of control increments (30), of length \(N_{{\mathrm {u}}}\), is calculated, but only the first element is applied to the process, i.e., \(u(k)=\triangle u(k|k)+u(k-1)\). At the next sampling instant, \(k+1\), the whole procedure is repeated.

For further transformations, the MPC optimization problem (32) is expressed in a convenient vector–matrix notation

where the norms are \(\left\| \varvec{x}\right\| ^{2}=\varvec{x}^{{\mathrm {T}}}\varvec{x}\), \(\left\| \varvec{x}\right\| ^{2}_{\varvec{A}}=\varvec{x}^{{\mathrm {T}}}\varvec{A}\varvec{x}\), the predicted trajectory of the output variable over the prediction horizon and the set-point trajectory are vectors of length N

The vectors of length \(N_{{\mathrm {u}}}\) are: \(\varvec{u}^{\min }=\left[ u^{\min }\ldots u^{\min }\right] ^{{\mathrm {T}}}\), \(\varvec{u}^{\max }=\left[ u^{\max }\ldots u^{\max }\right] ^{{\mathrm {T}}}\), \(\triangle \varvec{u}^{\max }=\Big [\triangle u^{\max }\ldots \triangle u^{\max }\Big ]^{{\mathrm {T}}}\), \(\varvec{u}(k-1)=\left[ u(k-1)\ldots u(k-1)\right] ^{{\mathrm {T}}}\); the vectors of length N are: \(\varvec{y}^{\min }=\left[ y^{\min }\ldots y^{\min }\right] ^{{\mathrm {T}}}\), \(\varvec{y}^{\max }=\left[ y^{\max }\ldots y^{\max }\right] ^{{\mathrm {T}}}\). The matrix \(\varvec{\Lambda }={\mathrm {diag}}(\lambda ,\ldots ,\lambda )\) and the lower ones matrix \(\varvec{J}\) (its diagonal and below diagonal entries are equal to 1, and the entries over the diagonal are equal to 0) are of dimensionality \(N_{{\mathrm {u}}}\times N_{{\mathrm {u}}}\).

The Wiener models of the PEM fuel cell are nonlinear, so the predicted values of the controlled variable are nonlinear functions of the currently calculated sequence of increments of the manipulated variable, Eq. (30). Hence, the general MPC optimization problem (32) becomes a nonlinear task which must be solved at each sampling instant online. To reduce the computational complexity, two alternatives are discussed in the following part of the article.

4.2 Nonlinear MPC algorithm with nonlinear prediction and simplified model linearization (MPC-NPSL)

In the first approach regarding computationally efficient MPC of the PEM fuel cell, a linear approximation of the Wiener model is successively calculated online at each sampling instant k and next used for finding the predicted trajectory of the process output [26]. The model is linearized for the current operating conditions. During linearization, the serial structure of the Wiener model is used. Although all three model types may be used, the MPC-NPSL algorithm for the most complex Wiener structure C shown in Fig. 3 is detailed. The algorithm for structures A and B may be easily derived from the given description.

Online linearization is performed in a simple way: The time-varying gains of the nonlinear steady-state blocks of the model are calculated for the current operating point, and next, taking into account the serial structure of the model, the gain of the whole model is updated. From Eq. (29), the time-varying gains of the \(v_i\) to y channels (\(i=1,2,3\)) of the nonlinear block can be obtained as

where \(z_l(k)=w_{l,0}^{1}+\sum _{j=1}^3 w_{l,j}^{1}v_j(k)+w_{l,4}^{1}h(k)\). If the hyperbolic tangent is used as the activation function of the hidden layer of the neural network, i.e., \(\varphi =\tanh (\cdot )\), it results in

Taking into account the serial structure of Wiener model C shown in Fig. 3, a linear approximation of the model output signal is

Remembering that the dynamic blocks are linear with constant parameters (Eqs. 25–27), the model used in the MPC-NPSL algorithm (Eq. 38) is linear, but time-varying. In all MPC algorithms based on constant linear models, the predicted trajectory of the output (Eq. 34) is a linear combination of the decision variables of MPC [17]. Using the concept of linear MPC and taking into account the time-varying linear approximation of Wiener structure C defined by Eq. (38), the prediction equation is obtained

The predicted output trajectory, \(\hat{\varvec{y}}(k)\), is a sum of two parts: the forced trajectory, \((K_1(k)\varvec{G}_1(k)+K_2(k)\varvec{G}_2(k))\triangle \varvec{u}(k)\), and the free trajectory (a vector of length N)

The first trajectory depends on the currently calculated future control increments, whereas the second one depends only on the past. It is straightforward to notice from Eqs. (25)–(27) that the manipulated variable, u, influences only the first two intermediate model variables, \(v_1\) and \(v_2\). Hence, only the channels \(u-v_1-y\) and \(u-v_2-y\) are considered in the forced trajectory. The constant matrices of dimensionality \(N\times N_{{\mathrm {u}}}\)

comprise step response matrices of the channels \(u-v_1\) (\(n=1\)) and \(u-v_2\) (\(n=2\)), respectively. They are calculated off-line in the classical way [17]. For this purpose, the first two dynamic blocks (Eqs. 25, 26) are taken into account without any influence of the disturbance signal, h.

In the MPC-NPSL algorithm, it is also necessary to find the free trajectory, \(\varvec{y}^{0}(k)\), (Eq. 40). It is calculated at each sampling instant not from the simplified linearized model (38), but from the full nonlinear Wiener model. From Eq. (29), one has

The free trajectories of the variables \(v_1\), \(v_2\) and \(v_3\) are denoted by \(v_1^0\), \(v_2^0\) and \(v_3^0\), respectively. The free trajectory of the variable \(v_1\) is obtained from Eq. (25)

where \(I_{{\mathrm {uf}}}^1(p)=\min (p,n_{\mathrm {B}}^{11})\), \(I_{{\mathrm {vf}}}^1(p)=\min (p-1,n_{\mathrm {A}}^1)\). The free trajectory of the variable \(v_2\) is obtained from Eq. (26)

where \(I_{{\mathrm {uf}}}^2(p)=\min (p,n_{\mathrm {B}}^{21})\), \(I_{{\mathrm {vf}}}^2(p)=\min (p-1,n_{\mathrm {A}}^2)\). The free trajectory of the variable \(v_3\) is obtained from Eq. (27)

where \(I_{{\mathrm {vf}}}^3(p)=\min (p-1,n_{\mathrm {A}}^3)\). The measured value of the disturbance is typically known up to the current sampling instant, i.e.,

The unmeasured disturbance acting on the process output, d(k), used in the free trajectory (Eq. 42), is calculated as difference between the value of the output signal measured at the current sampling instant, y(k), and process output estimated from the model. Using Eq. (29), one obtains

Because in the MPC-NPSL algorithm for prediction of the predicted output trajectory (Eq. (39)) a linear function of the future control increments \(\triangle \varvec{u}(k)\) is used, the general MPC optimization problem (33) is transformed to the MPC-NPSL quadratic programming task

4.3 Nonlinear MPC algorithm with nonlinear prediction and linearization along the trajectory(MPC-NPLT)

In the second approach to computationally efficient MPC of the PEM fuel cell, a linear approximation of the predicted trajectory of the process output is directly calculated online, at each sampling instant k [26]. It should be noted that in the MPC-NPSL algorithm a linear approximation of the model is successively found online and next used for calculation of the predicted trajectory of the controlled variable. This approach uses a constant linearized model over the whole prediction horizon, which is a disadvantage.

In the MPC-NPLT algorithm, linearization of the output trajectory is performed for an assumed future trajectory of the input variable \(\varvec{u}^{{\mathrm {traj}}}(k)=\left[ u^{{\mathrm {traj}}}(k|k) \ldots u^{{\mathrm {traj}}}(k+N_{{\mathrm {u}}}-1|k)\right] ^{{\mathrm {T}}}\). In this work, that trajectory is defined as the last \(N_{{\mathrm {u}}}-1\) elements of the optimal trajectory calculated at the previous instant and not applied to the process

The last element is repeated twice since the control increment for the sampling instant \(k+N_{{\mathrm {u}}}-1\) is not calculated in the previous sampling instant. Using Taylor’s series expansion method, a linear approximation of the nonlinear output trajectory \(\hat{\varvec{y}}(k)\) (Eq. 34) along the input trajectory \(\varvec{u}^{{\mathrm {traj}}}(k)\), i.e., linearization of the function \(\hat{\varvec{y}}(\varvec{u}(k)) :{\mathbb {R}}^{N_{{\mathrm {u}}}}\rightarrow {\mathbb {R}}^{N}\) is

where \(\varvec{u}(k)=\left[ u(k|k) \ldots u(k+N_{{\mathrm {u}}}-1|k)\right] ^{{\mathrm {T}}}\) is the future trajectory of the manipulated variable corresponding to the calculated increments, \(\triangle \varvec{u}(k)\), (Eq. 30). The predicted trajectory of the controlled variable, \(\hat{\varvec{y}}^{{\mathrm {traj}}}(k)\), corresponds to the assumed trajectory of the manipulated variable, \(\varvec{u}^{{\mathrm {traj}}}(k)\). The \(N\times N_{{\mathrm {u}}}\) matrix of the derivatives of the predicted trajectory of the controlled variable with respect of the assumed trajectory of the manipulated variable has the structure

The linear approximation of the predicted process trajectory (50) may be expressed as a function of the future trajectory of the increments of the manipulated variable, which is repeatedly calculated in MPC at each sampling instant

Using the linear approximation of the predicted trajectory of the controlled variable (Eq. 52), from the rudimentary MPC optimization problem (33) the following MPC-NPLT quadratic optimization task is obtained

The predicted trajectory \(\hat{\varvec{y}}^{{\mathrm {traj}}}(k)\) and the matrix \(\varvec{H}(k)\) are calculated directly from the full nonlinear model of the process, without any simplification. Although all three model types may be used, the MPC-NPLT algorithm for the most complex Wiener structure C shown in Fig. 3 is detailed. From Eq. (29), the predicted trajectory is

From Eq. (25), the predicted trajectory of the variable \(v_1\) is

From Eq. (26), the predicted trajectory of the variable \(v_2\) is

From Eq. (27), the predicted trajectory of the variable \(v_3\) is

The unmeasured disturbance is assessed in the same way as in the MPC-NPSL algorithm (Eq. 47).

The entries of the matrix \(\varvec{H}(k)\) are determined differentiating Eq. (54) which leads to

for all \(p=1,\ldots ,N\), \(r=0,\ldots ,N_{{\mathrm {u}}}-1\), where \(z_l(k+p|k)=w_{l,0}^{1}+\sum _{j=1}^3 w_{l,j}^{1}v_j(k+p|k)+w_{l,4}^{1}h_{{\mathrm {meas}}}(k)\). The first derivative in Eq. (58) depends on the transfer function used in the neural network, for the hyperbolic tangent the general Eq. (37) is used. The partial derivatives are calculated differentiating Eqs. (55) and (56). In general, for \(j=1,2\), one has

The first partial derivative in the right side of Eq. (59) is

and the second one is calculated recurrently for the consecutive values of the indices p and r.

5 Simulation results

5.1 Model identification of PEM fuel cell

The objective of this subsection is to find precise black box models of the PEM fuel cell. A linear model and three discussed Wiener structures (A, B and C) are considered. All models are assessed in terms of the SSE error (Eq. 20) and the number of model parameters. During model identification two data sets are used: the training data set and the validation one. The first of them is used only to find parameters of models, whereas the second one is used only to assess generalization ability of models, i.e., how the model reacts when it is excited by a different data set than that used for identification. To obtain those two sets of data, the continuous-time fundamental model of the PEM process (defined by Eqs. 2, 3, 6, 8–13) is simulated. The model system of differential equations is solved by Runge–Kutta method of order 45. As the process input and disturbance signals random sequences from the range characterized by Eqs. (14) and (15) are used. The process signals (i.e., the manipulated variable, q, the disturbance, I, and the controlled variable, V) are sampled with the sampling period equal to 1 s. The training and validation data sets are shown in Fig. 4, both sets consist of 3000 samples of the process manipulated variable, the disturbance and the output. Since identification of nonlinear Wiener models is a nonlinear optimization problem, training is repeated as many as 10 times for each model configuration and the results presented next are the best obtained.

At first linear models of the process are considered. They have the following structure

Table 3 compares training and validation errors of linear models of the first, the second, the third and the fourth order (the order is defined as an integer number \(n_{\mathrm {B}}^1=n_{\mathrm {B}}^2=n_{\mathrm {A}}\)). As a compromise between model accuracy and complexity, the third-order model is chosen. Figure 5 compares the validation data set versus the output of the chosen linear model. The model is stable, but not precise since there are significant differences between the model output and the data.

Next, Wiener structure A is considered. Table 4 presents training and validation errors for models of different orders (the order is defined as an integer number \(n_{\mathrm {B}}^1=n_{\mathrm {B}}^2=n_{\mathrm {A}}\)) and different numbers of hidden nodes, K. All compared models are of very low quality, only slightly better than the linear models (Table 3). Model complexity (defined by the order of dynamics and the number of hidden nodes) has practically no influence on model accuracy. For further comparison, the third-order model containing five hidden nodes is chosen. The top part of Fig. 6 compares the validation data set versus the output of Wiener model A. Slightly better results in comparison with the linear structure (Fig. 5) can be obtained, but still significant differences between model output and data are present.

The training and validation errors of Wiener structure B are given in Table 5 for models of different orders (the order is defined as an integer number \(n_{\mathrm {B}}^1=n_{\mathrm {B}}^2=n_{\mathrm {A}}^1=n_{\mathrm {A}}^2\)) and different numbers of hidden nodes, K. In comparison with the linear model (Table 3) and Wiener structure A (Table 4), Wiener structure B has significantly lower errors. For further comparisons, the third-order model containing five hidden nodes is chosen. The middle part of Fig. 6 compares the validation data set versus the output of Wiener model B. Unlike structure A, the model output signal is similar to that of the validation data, the differences are small.

Finally, Wiener structure C is considered. Training and validation errors of the model are given in Table 6 for models of different orders (where the order is defined as an integer number \(n_{\mathrm {B}}^{ij}=n_{\mathrm {A}}^i=n_{\mathrm {B}}^{3}=n_{\mathrm {A}}^{3}\) for \(i=1,2\), \(j=1,2\)) and different numbers of hidden nodes, K. In comparison with Wiener structures A and B (Tables 4, 5), structure C has significantly lower errors. Furthermore, there is a direct influence of the number of hidden nodes on model accuracy (the more hidden nodes, the lower the errors). It is interesting to notice that the third-order models are characterized by very similar errors as the fourth-order ones, whereas the first- and second-order structures are significantly worse. As a compromise between accuracy and complexity the third-order model containing five hidden nodes is chosen. The bottom part of Fig. 6 compares the validation data set versus the output of Wiener model C. It can be checked that in this case it is practically impossible to seen any differences between the validation data and the model output (which are present in the case of structures A and B).

The accuracy of the considered third-order linear model and all Wiener structures (with five hidden nodes) is compactly presented in Fig. 7 which depicts the relation between the validation data versus the model outputs. The linear model and Wiener structure A are imprecise, Wiener structure B gives much better results, whereas Wiener structure C is excellent. (The relation between the data and the model output forms a line the slope of which is 45 degrees.)

5.2 Model predictive control of PEM fuel cell

The objective of this subsection is to compare performance of MPC algorithms based on the models found in the previous subsection. The following MPC algorithms are compared:

-

1.

The classical generalized predictive control (GPC) MPC algorithm [52]. The third-order linear model is used for prediction.

-

2.

The fully fledged MPC-NO algorithm with nonlinear optimization repeated at each sampling instant. The Wiener models (A, B and C) are used for prediction.

-

3.

The computationally efficient MPC schemes: the MPC-NPSL algorithm with simplified linearization and the MPC-NPLT one with trajectory linearization. Wiener model C is used in both MPC approaches. Both algorithms need solving online a quadratic optimization problem at each sampling instant.

All models (linear and nonlinear) used in all MPC algorithms are of the third order. As the simulated process the continuous-time fundamental model consists of Eqs. (2), (3), (6), (8)–(13) is used. In all simulations, the horizons of all compared MPC algorithms are the same: \(N=10\) and \(N_{{\mathrm {u}}}=3\). The objective of all MPC algorithms is to control the process in such a way that the output, V, is close to the constant set point \(V^{{\mathrm {sp}}}=\bar{V}\) irrespective of the changes of the disturbance, I. The scenario of disturbance changes is

The magnitude of the manipulated variable is constrained: \(u^{\min }=0.1\), \(u^{\max }=2\).

At first, the GPC algorithm based on the linear model is considered. Simulation results for different values of the penalty factor \(\lambda \) are depicted in Fig. 8. Unfortunately, for the smallest value of that coefficient, i.e., for \(\lambda =1\), there are very strong oscillations of the process input and output variables. When the penalty coefficient is increased, for \(\lambda =25\) and \(\lambda =50\), the oscillations are damped, but the trajectory of the process output is slow. When \(\lambda =100\), no oscillations combined with a long rise time are observed. It means that the GPC algorithm is unable to compensate fast for changes of the disturbance.

Due to the underlying linear model, the GPC algorithm gives poor control results when applied for prediction. It seems to be straightforward to consider a nonlinear model in MPC. At first, Wiener structure A is used in the fully fledged MPC-NO algorithm. Although the MPC-NO algorithm is computationally too demanding to be used in practice, in simulations it shows whether or not the model may be used for long-range prediction in MPC. Figure 9 depicts simulation results of the MPC-NO algorithm based on Wiener model A for different values of the penalty factor \(\lambda \). (The same values are used as in the case of the GPC algorithm.) Since Wiener model A is imprecise (Table 4 and Fig. 6), the obtained control quality is poor. For the smallest value \(\lambda =1\), there are some damped oscillations which may be eliminated when the penalty coefficient is increased. Unfortunately, it results in very slow trajectories, as those in the case of the GPC strategy. One may conclude that Wiener model A is not precise enough to be used in MPC.

Next, Wiener models B and C are discussed to be used in MPC. Simulations results of the MPC-NO algorithm based on these models are shown in Fig. 10. It is possible to formulate two observations. First of all, unlike the GPC algorithm and the MPC-NO strategy based on Wiener model A, when applied for prediction in MPC, both B and C Wiener models result in good control, i.e., it is possible to compensate fast for changes of the disturbance. Secondly, it should be noticed that the MPC-NO algorithm gives slightly better, but noticeable, results when Wiener model C is used. In this case, overshoot is smaller and the required set point is achieved faster. This results from the use of the more precise model C (instead of B) (Tables 5, 6).

Taking into account simulation results presented in Figs. 8, 9 and 10, it can be concluded that Wiener model C results in strongly improved control quality when used in the MPC-NO algorithm. It should be noted that the MPC-NO algorithm requires solving a nonlinear optimization problem at each sampling instant online. In order to reduce computational complexity, two alternatives are considered: the MPC-NPLT algorithm with trajectory linearization and the MPC-NPSL algorithm with simplified linearization. Both algorithms result in quadratic optimization problems; nonlinear optimization is not necessary. Figure 11 compares trajectories of the MPC-NO algorithm with those obtained in the MPC-NPLT and MPC-NPSL strategies. Two observations may be discussed. Firstly, the MPC-NPLT algorithm with trajectory linearization gives practically the same process trajectories as the “ideal” MPC-NO strategy; it is impossible to see any differences. It is a beneficial feature of the MPC-NPLT algorithm, since it is significantly less computationally demanding, but leads to the same control performance as the MPC-NO strategy. Secondly, the MPC-NPSL algorithm is stable, and it only gives slightly larger overshoot than the MPC-NO and MPC-NPLT algorithms. It should be noted that the MPC-NPSL algorithm uses for prediction a linear approximation of the model which is obtained in a simple way, and quite complicated trajectory linearization is not necessary.

Figure 12 depicts simulation results of the three compared nonlinear MPC algorithms based on Wiener model C (MPC-NO, MPC-NPLT and MPC-NPSL), but now the increments of the manipulated variable are constrained, \(\triangle u^{\max }=0.1\). Due to the additional constraints, the manipulated variable does not change as quickly as in Fig. 11, but the trajectories of the process output are slower. In this case, the observations of algorithms’ performance are the same as before, i.e., the MPC-NPLT algorithm gives the trajectories practically the same as the MPC-NO one, whereas the MPC-NPSL algorithm gives only slightly larger overshoot.

To further compare MPC algorithms whose trajectories are depicted in Figs. 11 and 12, two performance indices are calculated after completing simulations. The first one

measures the sum of squared differences between the required set point, \(V^{{\mathrm {sp}}}(k)\), and the actual process output, V(k), for the whole simulation horizon. The second one

measures the sum of squared differences between the process output controlled by the “ideal” MPC-NO algorithm, \(V^{{\mathrm {MPC-NO}}}(k)\), and the process output controlled by a compared MPC algorithm, V(k). The obtained numerical values of the performance indices \(E_1\) and \(E_2\) are given in Table 7. In general, the values of \(E_1\) for the MPC-NO and MPC-NPLT algorithms are the same, which indicates that the measure \(E_2\) for the MPC-NPLT algorithm is low, close to 0. When the MPC-NO algorithm is compared with the MPC-NPSL one, there are much noticeable differences, but still leading to control accuracy very similar to that possible when the MPC-NO and MPC-NPLT strategies are used. Two cases are considered (corresponding to Figs. 11, 12): when the rate of change of the manipulated variable is constrained or not. When the rate constraints are present, all trajectories (of the process input and output) are slower. Table 7 additionally specifies calculation time (scaled) of the MPC algorithms. Two general observations may be made. Firstly, the MPC-NPSL and MPC-NPLT algorithms are many times computationally efficient in comparison with the MPC-NO one. The MPC-NPSL scheme is somehow less demanding than the MPC-NPLT one, but this difference is not big since computational complexity is mostly influenced by the quadratic optimization subroutine. Secondly, introduction of the additional constraints imposed on the rate of change of the manipulated variable “helps” the optimization routine to slightly faster find the solution.

6 Conclusions

The PEM fuel cell is a nonlinear dynamic system. A linear model cannot describe process behavior precisely. Moreover, when such a model is used in MPC, obtained control accuracy is not acceptable.

In this work, effectiveness of three Wiener models of the PEM fuel cell is discussed. In all models, the nonlinear steady-state block is represented by a neural network, whereas the linear dynamic part is different. The model consisting of three dynamic blocks and a neural network with five hidden nodes is chosen for control.

The second contribution of this work is the development of two computationally efficient MPC algorithms for the PEM process. In both MPC algorithms, computationally not complicated quadratic optimization is used online, and nonlinear optimization is not necessary. In the first MPC algorithm, a time-varying linear approximation of the model is used for prediction, whereas in the second one a linear approximation of the predicted process trajectory is calculated repeatedly online. The MPC-NPSL algorithm with online simple model linearization gives very good results, very similar to those possible in the complex algorithm using trajectory linearization and computationally demanding MPC with online nonlinear optimization. It is important to note the fact that model linearization in MPC is performed online in a simple way, which is possible because of the specialized serial structure of the Wiener model. Moreover, as a nonlinear block a neural network is used which leads to good approximation accuracy and easiness of model utilization in MPC. The MPC-NPLT algorithm with trajectory linearization gives practically the same control accuracy as that possible in the MPC-NO approach with nonlinear optimization, but requires more demanding calculations. (Trajectory linearization is more demanding than model linearization.)

Although the Wiener models and the MPC algorithms are developed for a particular type of the PEM, one may consider alternative ones, e.g., [4, 34,35,36,37,38]. The described MPC algorithms may be used in complex power management optimization systems for the PEM fuel cell [53].

References

Larminie, J., Dicks, A.: Fuel Cell Systems Explained. Wiley, Hoboken (2000)

Barbir, F.: PEM Fuel Cells: Theory and Practice. Academic Press, London (2013)

Özbek, M.: Modeling, Simulation, and Concept Studies of a Fuel Cell Hybrid Electric Vehicle Powertrain. Ph.D. Thesis, University of Duisburg-Essen, Duisburg (2010)

Pukrushpan, J.T., Stefanopoulou, A.G., Peng, H.: Control of Fuel Cell Power Systems: Principles, Modeling. Analysis and Feedback Design. Springer, London (2004)

Kunusch, C., Puleston, P., Mayosky, M.: Sliding-Mode Control of PEM Fuel Cells. Springer, London (2012)

Baroud, Z., Benmiloud, M., Benalia, A., Ocampo-Martinez, C.: Novel hybrid fuzzy-PID control scheme for air supply in PEM fuel-cell-based systems. Int. J. Hydrogen Energy 42, 10435–10447 (2017)

Ou, K., Wang, Y.-X., Li, Z.-Z., Shen, Y.-D., Xuan, D.-J.: Feedforward fuzzy-PID control for air flow regulation of PEM fuel cell system. Int. J. Hydrogen Energy 40, 11686–11695 (2015)

Damoura, C., Benne, M., Lebreton, C., Deseure, J., Grondin-Perez, B.: Real-time implementation of a neural model-based self-tuning PID strategy for oxygen stoichiometry control in PEM fuel cell. Int. J. Hydrogen Energy 39, 12819–12825 (2014)

Beirami, H., Shabestari, A.Z., Zerafat, M.M.: Optimal PID plus fuzzy controller design for a PEM fuel cell air feed system using the self-adaptive differential evolution algorithm. Int. J. Hydrogen Energy 40, 9422–9434 (2015)

Hong, L., Chen, J., Liu, Z., Huang, L., Wu, Z.: A nonlinear control strategy for fuel delivery in PEM fuel cells considering nitrogen permeation. Int. J. Hydrogen Energy 42, 1565–1576 (2017)

Meidanshahi, V., Karimi, G.: Dynamic modeling, optimization and control of power density in a PEM fuel cell. Appl. Energy 93, 98–105 (2012)

Özbek, M., Wang, S., Marx, M., Söffker, D.: Modeling and control of a PEM fuel cell system: a practical study based on experimental defined component behavior. J. Process Control 23, 282–293 (2013)

Shahiri, M., Ranjbar, A., Karami, M.R., Ghaderi, R.: New tuning design schemes of fractional complex-order PI controller. Nonlinear Dyn. 84, 1813–1835 (2016)

Shahiri, M., Ranjbar, A., Karami, M.R., Ghaderi, R.: Robust control of nonlinear PEMFC against uncertainty using fractional complex order control. Nonlinear Dyn. 80, 1785–1800 (2015)

Camacho, E.F., Bordons, C.: Model Predictive Control. Springer, London (1999)

Maciejowski, J.M.: Predictive Control with Constraints. Prentice Hall, Englewood Cliffs (2002)

Tatjewski, P.: Advanced Control of Industrial Processes, Structures and Algorithms. Springer, London (2007)

Hähnel, C., Aul, V., Horn, J.: Power control for efficient operation of a PEM fuel cell system by nonlinear model predictive control. IFAC-PapersOnLine 48, 174–179 (2015)

Rosanas-Boeta, N., Ocampo-Martinez, C., Kunusch, C.: On the anode pressure and humidity regulation in PEM fuel cells: a nonlinear predictive control approach. IFAC-PapersOnLine 48, 434–439 (2015)

Schultze, M., Horn, J.: Modeling, state estimation and nonlinear model predictive control of cathode exhaust gas mass flow for PEM fuel cells. Control Eng. Pract. 43, 76–86 (2016)

Ziogou, C., Papadopoulou, S., Georgiadis, M.C., Voutetakis, S.: On-line nonlinear model predictive control of a PEM fuel cell system. J. Process Control 23, 483–492 (2013)

Barzegari, M.M., Alizadeh, E., Pahnabi, A.H.: Grey-box modeling and model predictive control for cascade-type PEMFC. Energy 127, 611–622 (2017)

Panos, C., Kouramas, K.I., Georgiadis, M.C., Pistikopoulos, E.N.: Modelling and explicit model predictive control for PEM fuel cell systems. Chem. Eng. Sci. 67, 15–25 (2012)

Janczak, A.: Identification of Nonlinear Systems Using Neural Networks and Polynomial Models. A Block-Oriented Approach. Lecture Notes in Control and Information Sciences, vol. 310. Springer, Berlin (2004)

Haykin, S.: Neural Networks-A Comprehensive Foundation. Prentice Hall, Upper Saddle River (2008)

Ławryńczuk, M.: Computationally Efficient Model Predictive Control Algorithms: A Neural Network Approach. Studies in Systems, Decision and Control, vol. 3. Springer, Cham (2014)

Zhu, B.: Nonlinear adaptive neural network control for a model-scaled unmanned helicopter. Nonlinear Dyn. 78, 1695–1708 (2014)

Guo, J., Luo, Y., Li, K.: Adaptive neural-network sliding mode cascade architecture of longitudinal tracking control for unmanned vehicles. Nonlinear Dyn. 87, 2497–2510 (2017)

Eski, İ., Temürlenk, A.: Design of neural network-based control systems for active steering system. Nonlinear Dyn. 73, 1443–1454 (2013)

Chen, D., Zhang, Y., Li, S.: Tracking control of robot manipulators with unknown models: a Jacobian-matrix-adaption method. IEEE Trans. Ind. Inform. 14, 3044–3053 (2018)

Chen, D., Zhang, Y.: Robust zeroing neural-dynamics and its time-varying disturbances suppression model applied to mobile robot manipulators. IEEE Trans. Neural Netw. Learing Syst. 29, 4385–4397 (2018)

Zhang, Y., Chen, K., Tan, H.-Z.: Performance analysis of gradient neural network exploited for online time-varying matrix inversion. IEEE Trans. Autom. Control 54, 1940–1945 (2009)

Yan, Z., Wang, J.: Nonlinear model predictive control based on collective neurodynamic optimization. IEEE Trans. Neural Netw. Learing Syst. 26, 840–850 (2015)

Benchouia, N.E., Derghal, A., Mahmah, B., Madi, B., Khochemane, L., Aoul, L.H.: An adaptive fuzzy logic controller (AFLC) for PEMFC fuel cell. Int. J. Hydrog. Energy 40, 13806–13819 (2015)

Barzegari, M.M., Dardel, M., Alizadeh, E., Ramiar, A.: Reduced-order model of cascade-type PEM fuel cell stack with integrated humidifiers and water separators. Energy 113, 683–692 (2016)

Hatziadoniu, C.J., Lobo, A.A., Pourboghrat, F., Daneshdoost, M.: A simplified dynamic model of grid-connected fuel-cell generators. IEEE Trans. Power Deliv. 17, 467–473 (2002)

Suh, K.W.: Modeling, Analysis and control of fuel cell hybrid power systems. Ph.D. Thesis, University of Michigan, Ann Arbor (2016)

Talj, R.J., Hissel, D., Ortega, R., Becherif, M., Hilairet, M.: Experimental validation of a PEM fuel-cell reduced-order model and a moto-compressor higher order sliding-mode control. IEEE Trans. Ind. Electron. 57, 1906–1913 (2010)

Uzunoglu, M., Alam, M.S.: Dynamic modeling, design and simulation of a combined PEM fuel cell and ultracapacitor system for stand-alone residential applications. IEEE Trans. Energy Conv. 21, 767–775 (2006)

Uzunoglu, M., Alam, M.S.: Dynamic modeling, design and simulation of a PEM fuel cell/ultra-capacitor hybrid system for vehicular applications. Energy Conv. Manag. 48, 1544–1553 (2009)

Erdinc, O., Vural, B., Uzunoglu, M., Ates, Y.: Modeling and analysis of an FC/UC hybrid vehicular power system using a wavelet-fuzzy logic based load sharing and control algorithm. Int. J. Hydrog. Energy 34, 5223–5233 (2009)

Kisacikoglu, M.C., Uzunoglu, M., Alam, M.S.: Load sharing using fuzzy logic control in a fuel cell/ultracapacitor hybrid vehicle. Int. J. Hydrog. Energy 34, 1497–1507 (2009)

Nocedal, J., Wright, S.J.: Numerical Optimization. Springer, Berlin (2006)

Qin, S.J., Badgwell, T.A.: A survey of industrial model predictive control technology. Control Eng. Pract. 11, 733–764 (2003)

Zhang, J., Chin, K.-S., Ławryńczuk, M.: Nonlinear model predictive control based on piecewise linear Hammerstein models. Nonlinear Dyn. 92, 1001–1021 (2018)

Marami, B., Haeri, M.: Implementation of MPC as an AQM controller. Comput. Commun. 33, 227–239 (2010)

Sardarmehni, T., Rahmani, R., Menhaj, M.B.: Robust control of wheel slip in anti-lock brake system of automobiles. Nonlinear Dyn. 76, 125–138 (2014)

Yue, M., Hou, X., Gao, R., Chen, J.: Trajectory tracking control for tractor-trailer vehicles: a coordinated control approach. Nonlinear Dyn. 91, 1061–1074 (2018)

Wu, Z., Xia, X., Zhu, B.: Model predictive control for improving operational efficiency of overhead cranes. Nonlinear Dyn. 79, 2639–2657 (2015)

Gao, J., Puguo, W., Li, T., Proctor, A.: Optimization-based model reference adaptive control for dynamic positioning of a fully actuated underwater vehicle. Nonlinear Dyn. 87, 2611–2623 (2017)

Longge, Z., Xiangjie, L.: The synchronization between two discrete-time chaotic systems using active robust model predictive control. Nonlinear Dyn. 74, 905–910 (2013)

Clarke, D.W., Mohtadi, C.: Properties of generalized predictive control. Automatica 25, 859–875 (1989)

Moulik, B., Söffker, D.: Optimal rule-based power management for online, real-time applications in HEVs with multiple sources and objectives: a review. Energies 8, 9049–9063 (2015)

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Additional information

Financial support received by the first author from the German Academic Exchange Service (DAAD) is acknowledged.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Ławryńczuk, M., Söffker, D. Wiener structures for modeling and nonlinear predictive control of proton exchange membrane fuel cell. Nonlinear Dyn 95, 1639–1660 (2019). https://doi.org/10.1007/s11071-018-4650-y

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s11071-018-4650-y