Abstract

Collective behavior in the resource allocation systems has attracted much attention, where the efficiency of the system is intimately depended on the self-organized processes of the multiple agents that composed the system. Nowadays, as artificial intelligence (AI) is adopted ubiquitously in decision making in various scenes, it becomes crucial and unavoidable to understand what would emerge in an multi-agent AI systems for resource allocation and how can we intervene the collective behavior there in the future, as we have experience of the possible unexpected outcomes that are induced by collective behavior. Here, we introduce the reinforcement learning (RL) algorithm into minority game (MG) dynamics, in which agents have learning ability based on one typical RL scheme, Q-learning. We investigate the dynamical behaviors of the system numerically and analytically for a different game setting, with combination of two different types of agents which mimic the diversified situations. It is found that through short-term training, the multi-agent AI system adopting Q-learning algorithm relaxes to the optimal solution of the game. Moreover, one striking phenomenon is the transition of interaction mechanism from self-organized optimization to game through tuning the fraction of RL agents \(\eta _{q}\). The critical curve for transition between the two mechanisms in phase diagram is obtained analytically. The adaptability of the AI agents population against the time-variable environment is also discussed. To gain further understanding of these phenomena, a theoretical framework with mean-field approximation is also developed. Our findings from the simplified multi-agent AI system may give new enlightenment to how the reconciliation and optimization can be breed in the coming era of AI.

Similar content being viewed by others

References

Kauffman, S.A.: The Origins of Order: Self-Organization and Selection in Evolution. Oxford University Press, Oxford (1993)

Levin, S.A.: Ecosystems and the biosphere as complex adaptive systems. Ecosystems 1(5), 431–436 (1998)

Brian Arthur, W., Durlauf, S.N., Lane, D.A.: The Economy as an Evolving Complex System II, vol. 28. Addison-Wesley, Reading (1997)

Nowak, M.A., Page, K.M., Sigmund, K.: Fairness versus reason in the ultimatum game. Science 289(5485), 1773–1775 (2000)

Roca, C.P., Cuesta, J.A., Sánchez, A.: Effect of spatial structure on the evolution of cooperation. Phys. Rev. E 80(4), 046106 (2009)

Press, W.H., Dyson, F.J.: Iterated prisoner dilemma contains strategies that dominate any evolutionary opponent. Proc. Natl. Acad. Sci. (UDA) 109(26), 10409–10413 (2012)

Challet, D., Zhang, Y.-C.: Emergence of cooperation and organization in an evolutionary game. arXiv preprint adap-org/9708006, (1997)

Arthur, W.B.: Inductive reasoning and bounded rationality. Am. Econ. Rev. 84(2), 406–411 (1994)

Challet, D., Marsili, M.: Phase transition and symmetry breaking in the minority game. Phys. Rev. E 60(6), R6271 (1999)

Savit, R., Manuca, R., Riolo, R.: Adaptive competition, market efficiency, and phase transitions. Phys. Rev. Lett. 82(10), 2203 (1999)

Johnson, N.F., Hart, M., Hui, P.M.: Crowd effects and volatility in markets with competing agents. Physica A 269(1), 1–8 (1999)

Kalinowski, T., Schulz, H.-J., Birese, M.: Cooperation in the minority game with local information. Physica A 277, 502 (2000)

Paczuski, M., Bassler, K.E., Corral, Á.: Self-organized networks of competing boolean agents. Phys. Rev. Lett. 84(14), 3185 (2000)

Eguiluz, V.M., Zimmermann, M.G.: Transmission of information and herd behavior: an application to financial markets. Phys. Rev. Lett. 85(26), 5659 (2000)

Slanina, F.: Harms and benefits from social imitation. Physica A 299, 334 (2001)

Hart, M., Jefferies, P., Johnson, N.F., Hui, P.M.: Crowd-anticrowd theory of the minority game. Physica A 298(3), 537–544 (2001)

Marsili, M.: Market mechanism and expectations in minority and majority games. Physica A 299(1), 93–103 (2001)

Galstyan, A., Lerman, K.: Adaptive boolean networks and minority games with time-dependent capacities. Phys. Rev. E 66, 015103 (2002)

De Martino, A., Marsili, M., Mulet, R.: Adaptive drivers in a model of urban traffic. Europhys. Lett. 65(2), 283 (2004)

Anghel, M., Toroczkai, Z., Bassler, K.E., Korniss, G.: Competition-driven network dynamics: emergence of a scale-free leadership structure and collective efficiency. Phys. Rev. Lett. 92, 058701 (2004)

Lo, T.S., Chan, H.Y., Hui, P.M., Johnson, N.F.: Theory of networked minority games based on strategy pattern dynamics. Phys. Rev. E 70, 056102 (2004)

Moro, E.: Advances in Condensed Matter and Statistical Physics, Chapter the Minority Games: An Introductory Guide. Nova Science Publishers, New York (2004)

Xie, Y.B., Hu, C.-K., Wang, B.H., Zhou, T.: Global optimization of minority game by intelligent agents. Eur. Phys. J. B 47, 587 (2005)

Zhong, L.-X., Zheng, D.-F., Zheng, B., Hui, P.M.: Effects of contrarians in the minority game. Phys. Rev. E 72, 026134 (2005)

Zhou, T., Wang, B.-H., Zhou, P.-L., Yang, C.-X., Liu, J.: Self-organized boolean game on networks. Phys. Rev. E 72(4), 046139 (2005)

Challet, D., Marsili, M., Zhang, Y.-C.: Minority Games. Oxford Finance, Oxford University Press, Oxford (2005)

Lo, T.S., Chan, K.P., Hui, P.M., Johnson, N.F.: Theory of enhanced performance emerging in a sparsely connected competitive population. Phys. Rev. E 71, 050101 (2005)

Borghesi, C., Marsili, M., Miccichè, S.: Emergence of time-horizon invariant correlation structure in financial returns by subtraction of the market mode. Phys. Rev. E 76, 026104 (2007)

Challet, D., De Martino, A., Marsili, M.: Dynamical instabilities in a simple minority game with discounting. J. Stat. Mech. Theory Exp 2008(4), L04004 (2008)

Yeung, C.H., Zhang, Y.C.: Minority games. In: Meyers, R.A. (ed.) Encyclopedia of Complexity and Systems Science, pp. 5588–5604. Springer, New York (2009)

Bianconi, G., De Martino, A., Ferreira, F.F., Marsili, M.: Multi-asset minority games. Quant. Finance 8(3), 225–231 (2008)

Huang, Z.-G., Zhang, J.-Q., Dong, J.-Q., Huang, L., Lai, Y.-C.: Emergence of grouping in multi-resource minority game dynamics. Sci. Rep. 2, 703 (2012)

Zhang, J.-Q., Huang, Z.-G., Dong, J.-Q., Huang, L., Lai, Y.-C.: Controlling collective dynamics in complex minority-game resource-allocation systems. Phys. Rev. E 87, 052808 (2013)

Dong, J.-Q., Huang, Z.-G., Huang, L., Lai, Y.-C.: Triple grouping and period-three oscillations in minority-game dynamics. Phys. Rev. E 90(6), 062917 (2014)

Zhang, J.-Q., Huang, Z.-G., Wu, Z.-X., Su, R.-Q., Lai, Y.-C.: Controlling herding in minority game systems. Sci. Rep. 6, 20925 (2016)

Das, R., Wales, D.J.: Energy landscapes for a machine-learning prediction of patient discharge. Phys. Rev. E 93, 063310 (2016)

Kim, B.-J., Kim, S.-H.: Prediction of inherited genomic susceptibility to 20 common cancer types by a supervised machine-learning method. Proc. Natl. Acad. Sci. (UDA) 115(6), 1322–1327 (2018)

Singh, S., Okun, A., Jackson, A.: Artificial intelligence: learning to play go from scratch. Nature 550(2), 336–337 (2017)

Murray Campbell, A., Joseph Hoane, A., Hsu, F.H.: Deep blue. Artif. Intell. 134(1), 57–83 (2002)

Gebru, T., Krause, J., Wang, Y., Chen, D., Deng, J., Aiden, E.L., Li, F.-F.: Using deep learning and Google Street View to estimate the demographic makeup of neighborhoods across the United States. Proc. Natl. Acad. Sci. (UDA) 114(50), 13108–13113 (2017)

Naik, N., Kominers, S.D., Raskar, R., Glaeser, E.L., Hidalgo, C.A.: Computer vision uncovers predictors of physical urban change. Proc. Natl. Acad. Sci. (UDA) 114(29), 7571–7576 (2017)

Blumenstock, J., Cadamuro, G., On, R.: Predicting poverty and wealth from mobile phone metadata. Science 350(6264), 1073–1076 (2015)

Dia, H., Panwai, S.: Modelling drivers’ compliance and route choice behaviour in response to travel information. Nonlinear Dynam 49(4), 493–509 (2007)

Li, D.-J., Tang, L., Liu, Y.-J.: Adaptive intelligence learning for nonlinear chaotic systems. Nonlinear Dyn. 73(4), 2103–2109 (2013)

Kianercy, A., Galstyan, A.: Coevolutionary networks of reinforcement-learning agents. Phys. Rev. E 88, 012815 (2013)

Zhang, S.-P., Dong, J.Q., Liu, L., Huang, Z.-G., Huang, L., Lai. Y.-C.: Artificial intelligence meets minority game: toward optimal resource allocation. ArXiv e-prints, (2018)

Barto, A.G., Sutton, R.S.: Reinforcement Learning: An Introduction, vol. 21. The MIT press, Cambridge (1998)

Bellman, R.E.: Dynamic Programing. Princeton University Press, Princeton (1957)

Sutton, R.S.: Learning top redict by the methods of temporal difference. Mach. Learn. 3, 9–44 (1998)

Watkins, C.J.C.: Learning from delayed rewards. Ph.D. thesis Cambridge University, (1989)

Watkins, C.J.C.H., Dayan, P.: Q-learning. Mach. Learn. 8, 279–292 (1992)

Potapov, A., Ali, M.K.: Convergence of reinforcement learning algorithms and acceleration of learning. Phys. Rev. E 67, 026706 (2003)

Sato, Y., Crutchfield, J.P.: Coupled replicator equations for the dynamics of learning in multiagent systems. Phys. Rev. E 67, 015206 (2003)

Kianercy, A., Galstyan, A.: Dynamics of boltzmann \(q\) learning in two-player two-action games. Phys. Rev. E 85, 041145 (2012)

Acknowledgements

We thank Prof. Ying-Cheng Lai, Richong Zhang, Liang Huang and Dr. Xu-sheng Liu for helpful discussions. This work was supported by NSFC Nos. 11275003, 11575072, 61431012 and 11475074, the Science and Technology Coordination Innovation Project of Shaanxi Province (2016KTCQ01-45), and the Fundamental Research Funds for the Central Universities No. lzujbky-2016-123. ZGH gratefully acknowledges the support of K. C. Wong Education Foundation.

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

Conflicts of interest

The authors declare that they have no conflict of interest.

Appendix

Appendix

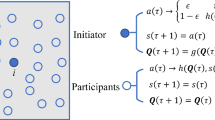

The critical curve of interaction transition. There are two types of agents (RL agents and DD agents) as we have set in the model and the corresponding decision rules in a resource allocation system. Their density is \(\eta _{q}\) and \(\eta _{d}\), respectively, in system and satisfies \(\eta _{q}+\eta _{d}=1\). There are only two kinds of resources \(+\) and −, and the capacity of each resource which can accommodate agents is \(C_r=1/2\). Moreover, the preference probability is p or \(1-p\) for resource \(+\) or − in a period T for DD agents. Therefore, the optimization resource allocation in the system needs to satisfy:

Then, we can solve Eq. 11\(p=\frac{C_r-1+\eta _{q}}{\eta _{d}}\). In the right area of the curve, there is not interaction mechanism between RL agents, but only exists the interaction between RL agent and DD agent, because the minority resource is determined by the density of DD agent. In the left area of the curve, these two interaction mechanisms determined the evolution behavior of the system simultaneously.

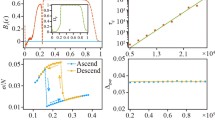

Transient state behavior of \(\rho _+^{q}\) for RL agents at \(t = 2000\). a A small system size (cyan dot line) and a large system size (magenta dot), but the RL agent density \(\eta _{q}\) is fixed. b Reports three exploration rates \(\epsilon =0.04\) (bottle green), 0.03 (yellow dot) and 0.02 (magenta dot). The else parameters \(p = 0.2, \eta _{q} = 0.02, \alpha =0.9, \gamma =0.9, T =2000\). (Color figure online)

Next, we calculate the target value of RL agents \(\rho ^{*}\), namely the magenta line position as shown in Fig. 1. It is not difficult to understand that \(\rho ^{*}\) is 0 or 1 in the right area of the curve in the p and \(\eta _d\) parameter space, because the interaction between RL agents is eliminated, and they converge to the minority resource independently by oneself. Namely, the target resource is minority resource. In the left area of the curve, the target value of RL agents \(\rho ^{*}\) satisfies as follows relationship \(\rho ^{*}\eta _{q}=C_r-\eta _d\) in the p and \(\eta _d\) parameter space, namely \(\rho ^{*}=\frac{(C_r-\eta _dp)}{\eta _{q}}\). Therefore, the target value is \(\min (\frac{(C_r-\eta _dp)}{\eta _{q}},1)\) for RL agents in entire p and \(\eta _d\) parameter space. It gives the target that RL agent can learn.

We investigate evolution behavior of the deviation \(\kappa =\sqrt{\langle (0.5-\rho _+^{q})^2\rangle }\) with the exploration rate \(\epsilon \) in Section 3.2 in this paper. Then, in this section, we investigate the impact of system size and the exploration rate \(\epsilon \) on the transient state when environment is changing. Figure 9a shows the transient behavior of \(\rho ^{q}_+\) under different sizes of system, but the RL agents density \(\eta _{q}\) is identical, a small system size (cyan dot line) and a big system size (magenta dot) at \(t = 2000\). The parameters p and \(\eta _{q}\) are still located on the right region of the critical curve in p and \(\eta _{d}\) space. We find the two oscillatory transient curves of \(\rho ^{q}_+\) are not different qualitatively, only become more smooth for the bigger system size with the same \(\eta _{q}\). In fact, it is gradually revealing statistical effect of reinforcement learning agents when the number of RL agents increases for random exploration \(\epsilon \). That is to say, the dynamics mechanism of the system is not changed. The oscillatory convergent character of \(\rho ^{q}_+\) is not affected by the size of system.

Figure 9b shows the transient state behavior of \(\rho ^{q}_+\) with three different exploration rates for reinforcement learning agents. The transient state time decreases prominently with the exploration rate rising slightly. The higher the exploratory rate for RL agents, the easier to discover the useful route; therefore, the convergence speed of \(\rho ^{q}_+\) is faster. However, the larger \(\epsilon \) is advantageous to adjust strategy for their payoff maximization when the environment occurs changing, but it is not useful to hold state in a stable environment for the large fluctuation from the exploration rate \(\epsilon \).

Rights and permissions

About this article

Cite this article

Zhang, SP., Zhang, JQ., Huang, ZG. et al. Collective behavior of artificial intelligence population: transition from optimization to game. Nonlinear Dyn 95, 1627–1637 (2019). https://doi.org/10.1007/s11071-018-4649-4

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s11071-018-4649-4