Abstract

We consider reduction of dimension for nonlinear dynamical systems. We demonstrate that in some cases, one can reduce a nonlinear system of equations into a single equation for one of the state variables, and this can be useful for computing the solution when using a variety of analytical approaches. In the case where this reduction is possible, we employ differential elimination to obtain the reduced system. While analytical, the approach is algorithmic and is implemented in symbolic software such as MAPLE or SageMath. In other cases, the reduction cannot be performed strictly in terms of differential operators, and one obtains integro-differential operators, which may still be useful. In either case, one can use the reduced equation to both approximate solutions for the state variables and perform chaos diagnostics more efficiently than could be done for the original higher-dimensional system, as well as to construct Lyapunov functions which help in the large-time study of the state variables. A number of chaotic and hyperchaotic dynamical systems are used as examples in order to motivate the approach.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

Nonlinear dynamical systems are ubiquitous in mathematics, engineering, and the sciences, with many real-world phenomenon governed by such nonlinear processes. In particular, nonequilibrium and chaotic dynamics are a continuing area of active research for applied mathematicians, as approximating such dynamics accurately and efficiently can be quite challenging. In the present paper, we shall consider reduction of dimensionFootnote 1 for nonlinear dynamical systems. This approach has previously been employed in the literature in order to enable the construction of Lyapunov functions [1] and equilibrium dynamics [2], as well as to allow one to more easily approximate chaotic attractors analytically [3,4,5]. One method for reduction of dimension is differential elimination, in which one algorithmically reduces the nonlinear dynamical system into a single ordinary differential equation (ODE) for one of the state variables. However, this is possible only when the system reduces to an ODE; if the reduction is instead to an integro-differential equation, the process is not algorithmic and specific cases must be handled with more individual care. Our focus shall be on dynamical systems giving chaotic dynamics, but the approach can certainly be applied for non-chaotic ODE systems. We give an overview of reduction of dimension, after which we demonstrate in several ways why one might wish to apply this technique.

With the wide range of numerical methods available for solving nonlinear first-order ODE systems of high order, one may wonder why it might be advantageous to convert such systems into a single higher-order ODE. We shall mention several situations in which the differential elimination, and more generally reduction of dimension, may prove useful. We then outline the paper.

Often times, if one is trying to approximate the solution to a nonlinear system through some sort of analytical approximation, via series, perturbation, or more complicated approaches, one quickly finds that the coupled equations require balancing many terms coming from the expansion for each of the state variables. In the case of a single state variable governed by a higher-order ODE, one needs only track terms in a single asymptotic expansion. This approach has been applied when using Taylor series, approximate Fourier series, and asymptotic expansions in other types of basis functions to the solution of a system of nonlinear ODE. In hybrid analytic–numeric methods, such as the homotopy analysis method [6], such reductions of a system to a single equation also simplify the optimization problem which is solved to obtain the error-minimizing solution (see, for instance, [2], where the present approach is used in such a capacity). Therefore, the reduction of dimension can greatly reduce the complexity of analytical calculations under several frameworks.

Contraction maps or Lyapunov functions are useful tools for discussing the convergence of solutions to nonlinear dynamical systems to large-time steady or quasi-steady dynamics. In situations where contraction maps or Lyapunov functions are known for a given dynamical system, the state variable governed by a single higher-order ODE necessarily results in a contraction map in the single state variable. However, as is well known to those studying stability of nonlinear systems, it is not often easy to obtain contraction maps for complicated systems. As we shall show here, it is possible to use the reduction of a system to a single higher-order ODE in order to construct a contraction map for the state variable governed by the aforementioned higher-order ODE. The existence of such a map can then be used to deduce the large-time dynamics of the state variable, as well as for the other state variables in the original system. One example of this is given in [1], and other examples are provided in Sect. 5.

Related to both the topic of analytical approximations and Lyapunov functions would be long-time dynamics and equilibrium behavior of nonlinear dynamical systems. Indeed, in order to study the equilibrium structure of a high-order system of ODEs, one must solve a coupled system of nonlinear algebraic equations in order to recover the fixed points for the state variables. First reducing the system to a single ODE allows one to obtain a single nonlinear algebraic equation for the fixed point of a single state variable, which can then be used to recover the fixed points of the other state variables. Therefore, when such a reduction to a single ODE is possible, the need to solve a nonlinear algebraic system for all of the fixed points simultaneously is eliminated, resulting in what is often a far less computationally demanding problem.

Another topic is great recent interest in nonlinear science has been both the synchronization of chaos [7] and the control of chaos. In situations where one is interested in mitigating the possibility of emergent chaos, one can couple a chaotic system to various control terms, or indeed to additional dynamical systems, which may lend a degree to stability. Under such approaches, one often increases the complexity or even the dimension of the dynamical system being solved. As such, methods to reduce the dimension of such systems could improve compatibility. Furthermore, since the control of chaos is often linked to a control term which itself is determined by a Lyapunov function, the construction of contraction maps through the reduction approach outlined here could be of great use.

As stated before [8], the competitive modes analysis gives an interesting link between the geometry of phase space possibly yielding chaotic trajectories (recall that the competitive modes requirements appear to be a necessary, albeit not sufficient, condition for chaos [9,10,11,12,13,14]). Conversely, the differential elimination may cast light into the geometry of solutions in the space of derivations. Since this result of the differential elimination is a single higher-order ODE, and since any chaos emergent from the nonlinear system should be encoded in the single higher-order ODE, the differential algebraic structure of such equations may cast light into practical geometric tools by which one may study systems in which chaos is observed. In particular, through this reduction approach, the calculation of mode frequencies in the standard competitive modes analysis becomes much simpler.

This paper is outlined as follows. In Sect. 2, we provide an algorithmic approach, to differential elimination for nonlinear dynamical systems based upon differential algebra. First laying out the general theory, we then give specific MAPLE code for performing the differential elimination in a systematic manner. The algorithmic approach is useful in the case where the dynamical system can be reduced to a single ODE in terms of only one of the state variables. In Sect. 3, we implement the approach in order to reduce a variety of chaotic and hyperchaotic systems, finding that the form of the nonlinearity in the dynamical system will strongly influence the reducibility properties.Footnote 2 However, in cases where the dynamical system is not reducible using differential elimination, one may still obtain more complicated reductions, for instance in terms of integrals, resulting in more complicated integro-differential equations for the reduced state variable. The possible results are illustrated through concrete examples for the Rössler system (which is completely reducible), the Lorenz system (which is partially reducible—that is, reducible in some but not all state variables), and the Qi–Chen–Du–Chen–Yuan (which is irreducible under differential elimination, but which can be reduced to an integro-differential equation). We give summarizing observations regarding the reducibility of dynamical systems in Sect. 4.

The remainder of the paper is devoted to applications of reduction of dimension for dynamical systems. In Sect. 5, we demonstrate that reduction of dimension can be useful for obtaining contraction maps and Lyapunov functions, which in turn may be used to determine asymptotic stability of dynamical systems and also to control chaos in such systems. In Sect. 6, we demonstrate that reduction of dimension can be used to simplify calculations involved in certain techniques for studying the solutions of nonlinear dynamical systems. Indeed, when applicable, we find that the approach greatly reduces the number of nonlinear algebraic equations required to be solved when constructing trajectories in state space via undetermined coefficient methods by a factor of 1 / n, where n is the dimension of the dynamical system, meaning that the number of equations needing to be solved will not increase with the size of the system. Furthermore, when applying the competitive modes analysis (which is a type of diagnostic criteria for finding chaotic trajectories in nonlinear dynamical systems), we find that only one binary comparison is needed if one first reduces the dimension of the dynamical system so that there is a single equation for one state variable. In contrast, there are normally of order \(2^{n-1}\) comparisons needed for an n-dimensional dynamical system. In Sect. 7, we provide a discussion and possible avenues for future work.

2 Algebraic approach to differential elimination

Systems of differential equations are ubiquitous and widely studied. Ritt [15] and Kolchin [16] pioneered the field of differential algebra, an algebraic theory for studying solutions of ordinary and partial differential equations. We are particularly interested in differential elimination, an algorithmic subtheory that can simplify systems of parameterized algebraic differential equations. This permits one to reduce the dimension of a dynamical system so that one is left with a single ODE in the state variable.

2.1 Algebra preliminaries

Here we briefly review concepts from algebra and differential algebra. For reference books in differential algebra, see [15, 16]. If I is a subset of a ring R, then (I) is the (algebraic) ideal generated by I. Let I be an ideal of R. Then \(\sqrt{I}\) denotes the radical of I. A derivation over a ring R is a map \( R \mapsto R\) which satisfies (we write \(\dot{a}\) is the derivative of a), for every \(a,b \in R\), \(\dot{(a+b)}=\dot{a} + \dot{b}\) and \(\dot{(a~b)} = (\dot{a})b+a(\dot{b}).\) The field of differential algebra is based on the concept of a differential ring (resp. field), which is a ring (resp. field) R endowed with a set of derivations that commutes pairwise. A differential ideal [I] of a differential ring R is an ideal of R stable under the action of derivation.

Differential algebra is more similar to commutative algebra than analysis. In commutative algebra, Buchberger solved the membership problem (tests whether a given polynomial is contained in a given ideal) through the theory of Gröbner bases [25]. From algebraic geometry, we know the set of polynomials which vanish over the solutions of a given polynomial system form an ideal and even a radical ideal [26]. In the case of differential equations, the set of differential polynomials which vanish over the analytic solutions of a given system of differential polynomial equations form a differential ideal and even a radical differential ideal [15]. Ritt solved the theoretical problem (of membership for radical differential ideals) and developed algorithmic tools to solve systems of polynomial ODE and PDEs; however, Ritt’s algorithm requires factorization.

Due to the complexity of factorization, Boulier and co-authors avoided it by developing the Rosenfeld–Gröbner algorithm, based on the work of Seidenberg and Rosenfeld, and incorporating Gröbner bases [19, 20, 22]. Since then, the algorithm has been improved both theoretically and practically [17, 18, 21] and it no longer requires Gröbner bases. It is available in the DifferentialAlgebra package in MAPLE [17] and SageMath as an interface for the BLAD and BMI libraries [27, 28].

Algorithmically, differential elimination involves manipulation of finite subsets of a differential polynomial ring \(R = K\{U\}\) where K is the differential field of coefficients (i.e., \(K = \mathbb {Q}\)), and U is a finite set of dependent variables. The elements of R are differential polynomials, which are polynomials built over the infinite set of all derivatives \(\varTheta U\), of the dependent variables. Considering a system \(\varSigma \) of polynomial differential equations, here, we consider the Lorenz system of three ordinary differential equations:

The Lorenz system can be rewritten as:

The Rosenfeld–Gröbner algorithm takes as an input a differential system \(\varSigma \) and a ranking. A ranking > is any total ordering over the set \(\varTheta U\) of all derivatives of the elements of U which satisfies the following axioms: \(a<\dot{a}\) and \(a<b \Rightarrow \dot{a}<\dot{b} \) for all \(a,b \in \varTheta U\). The Rosenfeld–Gröbner algorithm transforms \(\varSigma \) into finitely many systems called regular differential systems, which reduces differential problems to purely algebraic ones that are triangular. The next step is purely algebraic and transforms the regular differential system into finitely many characteristic presentations, \(C_1, \ldots C_r\). Rosenfeld–Gröbner outputs this finite family \(C_1,\ldots C_r\) of finite subsets of \(K\{U\} \setminus K\), where each \(C_i\) defines a differential ideal \([C_i]\). The radical \(\sqrt{ [\varSigma ]}\) of the differential ideal generated by \(\varSigma \) is the intersection presented by characteristic sets:

Note differential ideals \([C_i]\) do not need to be prime; however by Lazard’s lemma, they are necessarily radical. Differential algebra elimination has proven useful for parameter estimation, identifiability, and model reduction of biological and chemical systems [23, 24].

2.2 Computational method

We demonstrate reduction in dimension via differential elimination algorithm RosenfeldGroebner in the DifferentialAlgebra package implemented in MAPLE. For the sake of using a concrete example, we choose the Lorenz system. First, we call the package:

Next we input the Lorenz system:

Next we form our differential ring, embedding the rank of dependent variables in blocks and independent variables in derivations. Since we are considering ordinary differential equations, derivations are set to one ordering, time t. We remark that the DifferentialAlgebra package enables differential elimination of PDEs by including additional inputs for the derivations (e.g., derivations=[u,x,t]. Note, sys is assumed to have coefficients in the field \(\mathbb {Q}[x_1,x_2,x_3]\) obtained by adjoining the independent variables to the field of rationals, and symbolic parameters a, b, c are considered arbitrary in the coefficient field. To form the differential ring, we input:

Note that \(x_1\) stands to the rightmost place on the list which identifies that we are attempting to reduce the differential equation to only one variable, i.e., \(x_1(t)\). This ranking eliminates \(x_3\) with respect to \(x_2\), and then eliminates \(x_2\) with respect to \(x_1\). We now call the Rosenfeld–Gröbner algorithm for our system and differential polynomial ring:

This will return the characteristic presentation (which should be understood as an intersection), with the equations given by the ranking, with the final equation a single ODE for x1(t), provided that it exists and can be computed by the algorithm. In some cases, the algorithm will keep running and therefore should eventually be terminated by the user. For such cases, it is unlikely that a reduction of the specified form exists. However, as we shall consider in the next section, when the reduction is to an integro-differential equation, rather than an ODE, the approach will not identify the reduced equation.

3 Reduction of dimension: applications

Here we apply the method of differential elimination to several nonlinear dynamical systems known to give chaos, in order to see if these equations can be reduced. We first apply the algorithmic approach outlined in Sect. 2, finding that the approach gives a complete reduction (all state variables can be isolated and expressed as the solution to single uncoupled ODEs), a partial reduction (one or more, but not all, state variables can be isolated and expressed as the solution to single uncoupled ODEs), or returns no reduction (the algorithm does not complete in a fixed amount of time), in which case none of the state variables can be expressed as a solution to a single ODE reducible from the original system. For simplicity, we shall only consider autonomous systems.

We consider a number of examples of chaotic systems in Table 1, with the results of the differential elimination algorithm given. We also give a summary of the dynamics of the example equations selected. Since the form of these equations may vary through the literature, we give a list of the specific form of the equations considered, in “Appendix 1”. Note that we have considered the differential elimination algorithm for the arbitrary parameter values listed in “Appendix 1”. Table 1 demonstrates that the structure of the dynamical system tends to play a strong role in whether the system can be reduced. Indeed, equations with a single nonlinearity tend to be completely or partially reducible; hence, at least one state variable can be solved for via a single nonlinear ODE. On the other hand, the equations with many nonlinear terms or higher-order degree of nonlinearity (we consider only equations with polynomial nonlinearities) tend more often to be irreducible using the approach. Of the listed equations, note that the Rössler system is one of the few completely reducible systems, lending validity to the belief that it is indeed one of the simplest possible continuous-time dynamical systems giving chaos. Meanwhile, the commonly studied Lorenz system is only partially reducible under the approach. More complicated systems tend to be irreducible under the algorithm, and many of these give more complicated dynamics such as multiple scroll attractors.

Note that the algorithm returns a ‘No’ if a reduction is not obtained within a given time interval. For cases where the algorithm found a reduction, the computation time was fairly quick. We are therefore comfortable in assuming that a reduction to an ODE does not exist in cases where the algorithm times out. For such cases, the system may still admit a reduction, but not strictly in derivatives of one of the state variables. One such example would be a system which is reducible to an integro-differential equation in one of the state variables, but never to simply an ODE.

We next consider hyperchaotic systems (chaotic systems giving two or more positive Lyapunov exponents) in Table 2. Again, we find that the more complicated the functional form of the nonlinearities, the less likely a system seems to be reducible. Furthermore, hyperchaotic generalizations of known chaotic systems appear to maintain their reducibility properties, since often a simple additional equation is added to make a chaotic system hyperchaotic. The hyperchaotic Rössler system is completely reducible, as was the related chaotic Rössler system, again suggesting that the chaotic and hyperchaotic Rössler systems are some of the simplest systems which still exhibit chaos and hyperchaos, respectively.

The results indicate that completely reducible systems are perhaps the simplest systems giving chaos or hyperchaos. Again, this would support the qualitative and topological claims that the Rössler systems are some of the simplest possible equations permitting chaos [56], as they each involve only a single quadratic nonlinearity. On the other hand, systems with stronger polynomial nonlinearities, or systems with many nonlinear terms, appear to often be irreducible under differential elimination. Note that for cases where the reduction might involve integrals, resulting in a type of integro-differential equation, the differential elimination algorithm would miss such a reduction, even though it exists. This is due to the fact that the differential elimination algorithm is working over the ring of derivations, which does not include integrals. Indeed, since integral operators are fairly cumbersome to introduce compared to their differential operator counterparts (we discuss this later in Sect. 7), obtaining an algorithmic approach including integrals would be challenging. Therefore, the differential elimination algorithm outlined in Sect. 2 appears to be a very useful tool for reducing the dimension of dynamical systems, provided that a reduction to a single ODE exists. For the more complicated models, we find the need to proceed on a case-by-case basis with manual manipulations due to any integration needed.

We demonstrate reduction of dimension for chaotic systems into single higher-order ODEs in the next three subsections. We pick a case where all state variables can be isolated (the Rössler system), a case where one of the state variables can be isolated in terms of a differential equation (the Lorenz system), and finally a case where none of the state variables can be isolated in terms of a differential equation (the Qi–Chen–Du–Chen–Yuan system) so that any reduction would necessarily involve integrals. For all cases considered, we let \(x,y,z\in C^n(\mathbb {R})\) where n is the dimension of the relevant dynamical system, and we take \(a,b,c \in \mathbb {R}\) to be parameters.

3.1 Rössler system

The Rössler equation [34] reads

We first obtain the ODE for y(t). Note from the second equation that \(x=\dot{y}-ay\), so that \(\dot{y}=\ddot{y}-a\dot{y}\) and hence from the first equation we have \(z=-\ddot{y}+a\dot{y}-y\). Placing these into the third equation, and performing algebraic manipulations, we obtain

Note that this equation is third order, and therefore the information of the three-dimensional system (3) can be encoded in this single ODE. By similar manipulations, one may arrive at an equation for x(t),

and an equation for z(t),

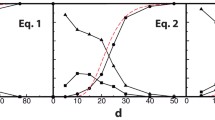

In Fig. 1, we plot a numerical simulation of the chaotic attractor for the Rössler system, while in Fig. 2, we give the time series for the numerical solution y(t) to (4), which was the equation for y(t) obtained via reduction of dimension. The function y(t) from (4) encodes all of the information from the chaotic attractor.

Time series plot of the function y(t) in (4) when \(a=0.2\), \(b=0.2\), \(c=5.7\). This function encodes all of the information for the chaotic attractor in the Rössler system corresponding to \(a=0.2\), \(b=0.2\), \(c=5.7\)

3.2 Lorenz system

The Lorenz system [29] is given by

Observing from the first two equations that

and

the third equation can be used to obtain a single ODE for the state variable x(t), viz.,

This agrees with what one obtains from the differential elimination. On the other hand, we observe that the algorithmic approach to differential elimination is useful for situations in which there is no obvious route to reduce a system into a single equation (through eliminations and substitutions). A good example of this is found when trying to obtain a differential equation for the state variable z(t) alone. Using the differential elimination, we arrive at a rather complicated equation of the form

where \(P_1\) and \(P_2\) are complicated polynomials that we do not list for the sake of brevity. Interestingly, this is a fully nonlinear equation, since the highest order derivative enters into the equation nonlinearly. In contrast, the equation obtained for the state variable x(t) is quasi-linear, since it is linear in the highest derivative. One could differentiate the equation for z(t) in order to isolate the highest derivative, but by doing so one would increase the differential order of the system, thereby decreasing the regularity of the system. This is particularly important in cases where the solution z(t) may only be \(C^3(\mathbb {R})\).

When a system is nonlinear, there may of course be forms of the nonlinearity which do not permit one to obtain an equation for a single state variable in terms of that state variable and its derivatives. A good example of this is the state variable y(t) in the Lorenz system. The algorithmic differential elimination finds no closed differential equation for y(t). As it turns out, the reason for this is that any equation governing y(t) alone will necessarily involve integral terms which cannot be eliminated (due to the nonlinearity of the equation). To see this, note that if we consider the first equation in the Lorenz system, which may be written in the form \((e^{at}x)' = ae^{at}y\), we find

Here \(x_0\) is the initial value of the state x(t), that is \(x(0)=x_0\). Yet, from the second equation in the Lorenz system, we have \(z = b - (\dot{y}+y)/x\), which yields

Placing the representations for x(t) and z(t) into the third equation of the Lorenz system, and performing algebraic manipulations to simplify the resulting expression, we obtain

Note that the equation both involves an integral and is nonautonomous.

Time series plot of the function x(t) in (10) when \(a=10\), \(b=28\), \(c=8/3\). This function encodes all of the information for the chaotic attractor in the Lorenz system corresponding to \(a=10\), \(b=28\), \(c=8/3\)

In Fig. 3, we plot a the numerical solution to the chaotic attractor for the Lorenz system, while in Fig. 4, we give the time series for the numerical solution for the function x(t) governed by (10), which was obtained via reduction of dimension. The function x(t) from (10) encodes all of the information from the chaotic attractor.

3.3 Qi–Chen–Du–Chen–Yuan system

We now consider the Qi–Chen–Du–Chen–Yuan (QCDCY) system [40], which is given by

The differential elimination algorithm indicates there is no reduction to a single ODE in any of the three state variables. This system has a quadratic nonlinearity in each equation, and this added complication is behind the difficulties in obtaining such a reduction. However, we may still obtain an equation for a single state variable, if we are willing to include integral terms. Due to the complexity in obtaining such an equation, we shall restrict our attention to finding a single equation for the state variable z(t), noting that similar approaches can be used to find a single equation for either of the other two state variables, x(t) or y(t).

Let us begin by noting that the second equation in the QCDCY system implies \((\mathrm{e}^t y)' = \mathrm{e}^t (b-z)x\), while placing this into the third equation in the QCDCY system gives \((a+z)(\dot{z}+cz)=x\mathrm{e}^{-at}(\mathrm{e}^{at}x)' = x(\dot{x}+ax)\). This, in turn, implies that state variables x(t) and z(t) satisfy

where \(x(0)=x_0\). The first equation in the QCDCY system has not been used, and we place this relation into that equation to obtain a single equation for the state variable z(t). After several algebraic and differential manipulations, we arrive at the single equation

where we have defined the integral operator

Similar results can be obtained for the other state variables. The fact that the obtained equations involve an integral operator which cannot simply be differentiated away demonstrates why the differential elimination algorithm was not useful for this case. Still, performing the manipulations by hand, we have reduced the fairly complicated QCDCY system into a single integro-differential equation, thereby reducing the dimension of the original system.

Time series plot of the function z(t) in (17) when \(a=35\), \(b=80\), \(c=3\). This function encodes all of the information for the chaotic attractor in the QCDCY system corresponding to \(a=35\), \(b=80\), \(c=3\)

In Fig. 5, we plot the numerical solution for a chaotic attractor arising from the QCDCY system, while in Fig. 6, we give the time series for the numerical solution z(t) to (17), which was obtained via reduction of dimension. The function z(t) from (17) encodes all of the information from the chaotic attractor.

4 Reductions of n-dimensional dynamical systems

We now give some summarizing remarks based on what we have seen in the previous sections. We shall assume that each system is coupled through at least one state variable (otherwise the state variables naturally separate into distinct lower-dimension equations, and the approach is not needed).

4.1 Linear systems

For first-order linear systems of dimension n, there is always a reduction into a single higher-order ODE. This follows from the process of Gaussian elimination. In the case that the matrix of coefficients for such a first-order system is full rank, the resulting higher-order ODE will be of order n. If the matrix of coefficients is singular, then the resulting higher-order ODE will be of order less than n.

4.2 Reducible nonlinear systems

For first-order nonlinear systems of dimension n, there are multiple possibilities, owing to the structure of the nonlinearity.

In cases where the system permits the complete differential elimination (an example being the Rossler equation), all state variables in a first-order nonlinear system can be expressed in terms of a higher-order ODE. Note, however, that it is possible for the order of the single ODE to be different from the dimension n of the first-order system. As an example of this point, consider the system

Clearly, differentiation of the first equation gives \(\ddot{x}= \dot{x} - \dot{y} -\dot{z} = \dot{x} - x^2 +x -x^3\). So, we obtain

which is a second-order equation for the state variable x(t), even though the original system was first order. A similar example can be found in [1], where a fourth-order nonlinear dynamical system was reduced to a single second-order nonlinear ODE.

It is possible for a system to be reduced to a single equation, which is not an ODE. This was evident even for the Lorenz equation, where an equation for one of the state variables involves an integral term in addition to derivative terms. Note that the equation was not closed under any number of differentiations, due to the form of the integral terms. As such, the single reduced equation for the state variable could never be expressed strictly as an ODE of any finite order. Note that this can occur for one of the state variables, while a different state variable might satisfy a finite order ODE. For such cases, the nonlinearity in the system results in their being certain favored state variables with which to perform the reduction to a single ODE.

4.3 Differentially irreducible nonlinear systems

We have observed that for more complicated nonlinear dynamical systems, there is no reduction to a single ODE in one state variable. While it may be the case that differential elimination does not pick up an ODE that does exist, it seems as though the failure of differential elimination is a sign that integrations will be needed in order to reduce the dimension of such systems. Indeed, when integrations of this kind are called for, the manipulations are no longer confined to the specified differential ring, and the differential elimination cannot be performed. While one can attempt these integrations manually, as opposed to algorithmically, obviously it would be desirable to have some kind of algorithmic approach. Perhaps one may adjoin integral operators to the differential ring, in order to perform reductions for more complicated nonlinear systems. This would likely work in cases like the Lorenz system, for which there is partial reducibility under differential elimination. For instance, if one was to define a new variable \(Y(t) = \int _0^t e^{as}y(s)ds\), then one would obtain a nonautonomous ODE for Y(t) from equation (14). Therefore, this fairly simple integral transformation, in addition to differential operators can reduce the dimension of the Lorenz system with respect to the state variable y(t). However, in cases like that of the QCDCY system, note that the form of the integral operator given in (18) is rather complicated, depending nonlinearly on both the state variable z(t) and its derivative \(\dot{z}(t)\). For such cases, there is no combination of elementary integral transforms that can be adjoined to the differential ring which would permit reduction of dimension to a single ODE. As such, it appears as though reduction of dimension for certain more complicated systems will result in reductions to integro-differential equations, rather than ODEs, for some fundamental reason related to how complicated the original dynamical system is. Therefore, the study of possible algorithmic methods for the reduction of dimension for dynamical systems into single scalar integro-differential equations would be an interesting and potentially very useful area of future work.

5 Contraction maps and Lyapunov functions

Turning out attention now to practical applications for reduction of dimension, recall that contraction maps and Lyapunov functions are useful tools for studying the asymptotic stability of nonlinear dynamical systems. In this section, we use the three examples worked explicitly in Sect. 3 in order to demonstrate the utility of reduction of dimension for finding Lyapunov functions. Using these results, we can recover stability results for these dynamical systems which were obtained through other approaches, and which agree with existing results in the literature.

5.1 Rössler system

The Rössler system has two equilibrium values, \(\pm y^*\), for y(t), and the constant \(y^*\) must satisfy the quadratic equation

In order to discover a Lyapunov function for the Rössler system, it is tempting to assume a bowl-shaped map of the form \(\alpha x^2 + \beta y^2 + \gamma z^2\), or minor variations on this theme involving higher power polynomials of even order, but the approach evidently proves fruitless. Therefore, we shall use one of the three equations obtained for the isolated state variables of the Rössler system.

Consider Eq. (4) for the Rössler system (3). Let us write \(Y(t) = y(t)-y^*\) in the neighborhood of either equilibrium value \(y^*\). This transformation will prove useful, as the Lyapunov function needs to vanish at the equilibrium value selected. (There is therefore the need to construct such a function in a neighborhood of each equilibrium point.) Under this transformation, (4) is put into the form

Let us define a function \(m=\ddot{Y} - a\dot{Y}+Y\) so that (22) is put into the form

Observe that (23) can be written as

From this, we recover

where \(m_0\) is a constant of integration. As we are interested in recovering information about the asymptotic stability of the Rössler system, let us pick the initial condition \(Y(0)=\epsilon \). This corresponds to setting the initial condition such that it is contained within a neighborhood of the equilibrium value. Let us also restrict \(|a|<2\) (this will simplify the mathematics and is consistent with the physics of the Rössler system). Then, we obtain

where C is a constant that will depend on the initial value of \(\dot{Y}(0)\) (the value of which will not impact our analysis) and K(t, s) is the kernel

Observe that for \(-2< a < 0\) the map is a contraction. Given arbitrarily small \(\epsilon >0\), for large enough time \(\tilde{t}(\epsilon )>0\), the solution Y(t) will lie in a neighborhood \(-\epsilon< Y(t) < \epsilon \) for all \(t>\tilde{t}(\epsilon )\). Therefore, \(Y\rightarrow 0\) as \(t\rightarrow \infty \). Yet, by definition of Y(t), this implies \(y \rightarrow y^*\) as \(t\rightarrow \infty \). Using this, one may shown that \(x\rightarrow -ay^*\) and \(z\rightarrow -y^*\) as \(t\rightarrow \infty \). Hence, we have shown that \(a<0\) gives a stable solution, which was already known from different work. The nice thing about this approach is that it allows us to bypass a linear stability analysis involving the calculation of eigenvalues at the algebraic solution to \(y^*\) found from (21). Indeed, we did not even need to calculate the equilibrium value \(y^*\) for the present analysis, as the analysis holds for an arbitrary equilibrium value satisfying (21).

5.2 Lorenz system

In order to find a Lyapunov function for the Lorenz system in a neighborhood of the zero equilibrium \((x,y,z)=(0,0,0)\), let us assume a bowl-type function of the form

where \(\alpha >0\), \(\beta >0\), and \(\gamma >0\) are constant parameters to be selected. Recall that physically interesting model parameters a, b, and c are positive. Then, the time derivative of V is given by

Clearly, we should take \(\gamma = \beta b\). Note that

Then,

hence \(\dot{V} \le 0\) provided that \(\beta b < 2\sqrt{\alpha \beta a} - \alpha a\) (since this would imply \(-\alpha a x^2 - \beta y^2 + (\alpha a + \beta b)xy <0\)). Let us pick \(\beta = \alpha a\). Then, the condition reduces to \(b<1\). As \(\alpha >0\) was arbitrary, we set \(\alpha = \frac{1}{2}\). This means that whenever \(a>0\), \(0<b<1\), and \(c>0\), there exists a Lyapunov function

since \(V(0,0,0)=0\), \(|V|\rightarrow \infty \) as \(|(x,y,z)|\rightarrow \infty \) (radially unbounded), and \(\dot{V} <0\) for \((x,y,z)\ne (0,0,0)\). Interestingly, the condition \(<b<1\) is exactly the stability condition known in the literature [30]. Therefore, parameters implying the existence of this contraction map correspond to known stable parameters.

Now, if we were to seek such a map for only one of the state variables, then using what we have obtained in Sect. 3, we find that there exists a contraction map

for the state variable x(t). Then, one may verify \(\dot{\hat{V}}<0\) away from the equilibrium \(x=0\). One can obtain similar contraction maps in either of the other two state variables.

5.3 Qi–Chen–Du–Chen–Yuan system

In order to find a contraction map for the Qi–Chen–Du–Chen–Yuan (QCDCY) system, we begin with the bowl-shaped assumption for a Lyapunov function about the equilibrium \((x,y,z)=(0,0,0)\),

where \(\alpha >0\), \(\beta >0\), and \(\gamma >0\) are constant parameters to be selected. Differentiating with respect to t and using the three constituent equations of the QCDCY system, we have

Since we need \(\alpha >0\), \(\beta >0\), and \(\gamma >0\), we should consider model parameters satisfying \(a>0\) and \(c>0\). To remove the first term, which is hyperbolic in nature, we should choose \(\beta = \alpha + \gamma \). Meanwhile, by the removal of the second term, which is also hyperbolic, we should set \(\beta = -\frac{a}{b}\alpha \) for nonzero b. Since all other parameters are positive, we must require \(b<0\). Then, \(\beta = \frac{a}{|b|}\alpha \), and placing this into \(\beta = \alpha + \gamma \) gives \(\gamma = \frac{a-|b|}{|b|}\). As we need \(\gamma >0\), this gives the added restriction \(a > |b|\). The parameter \(\alpha \) is arbitrary, so we take \(\alpha = \frac{1}{2}\). We therefore obtain

and this candidate function is indeed a contraction map satisfying \(V(0,0,0)=0\), \(\dot{V}<0\) for \((x,y,z)\ne (0,0,0)\), and \(|V|\rightarrow \infty \) as \(|(x,y,z)|\rightarrow \infty \), provided that the parameter restrictions \(a>|b|\), \(b<0\), and \(c>0\) hold. Therefore, under these parameter restrictions, the zero equilibrium is asymptotically stable for the QCDCY system.

When we obtain a single equation for a state variable, even one containing integrals, we may similarly obtain a contraction map. Since we have obtained an equation for the state variable z(t) in the QCDCY system in Sect. 3, we shall choose to construct a contraction map for that state variable here. Doing so, we find that

satisfies \(\dot{\hat{V}}<0\) for all \(z\ne 0\), given that \(a>|b|\), \(b<0\), and \(c>0\). Hence, \(\hat{V}(z,\dot{z})\) is a contraction map for the state variable z(t) when \(a>|b|\), \(b<0\), and \(c>0\). With this, we have determined the stability of the zero equilibrium for the QCDCY system.

6 Computational considerations for chaotic trajectories

There are a variety of methods available for trying to find chaotic trajectories in nonlinear dynamical systems, and the approach highlighted in this paper does not add to collection of tools, explicitly. However, the reduction of dimension approach outlined in Sect. 2 can be used to make finding chaos in dynamical systems more efficient. To demonstrate this, we shall consider two rather distinct approaches, namely, the undetermined coefficients method for obtaining chaotic trajectories and the competitive modes analysis for identification of chaotic parameter regimes. For each of these approaches, we show that an application of reduction of dimension results in a simplification of each test for chaos.

6.1 Calculation of trajectories via undetermined coefficients

When attempting to analytically calculate chaotic trajectories, even in an approximate sense, one often reduces the dimension of the governing equations. The reason for this lies in the fact that it is easier to consider an expansion for one state variable, rather than multiple state variables. To best illustrate this point, let us return to the Rössler equation (3).

One popular method for approximating trajectories of chaotic systems analytically is the undetermined coefficient method [3,4,5]. Since Taylor series expansions for nonlinear systems often have a finite region of convergence centered at the origin, yet the chaotic dynamics remain bounded in space, one often considers non-polynomial base functions. One popular choice would be a function of the form

In this expression, the \(A_j \in \mathbb {R}\) and the parameter \(\alpha >0\) are undetermined parameters which one typically will obtain in an iterative manner. Assuming such an expansion in time, it makes sense to consider a solution the Rössler system (3) of the form \(x(t) = S(t;\left\{ A_j \right\} _{j=-\infty }^{j = \infty },\alpha )\), \(y(t)=S(t;\left\{ B_j \right\} _{j=-\infty }^{j = \infty },\alpha )\), and \(z(t)=S(t;\left\{ C_j \right\} _{j=-\infty }^{j = \infty },\alpha )\). Placing these equations into (3), one would obtain an infinite system of nonlinear algebraic equations for all of the coefficients and the temporal scaling \(\alpha >0\). In practice, one would truncate these expansions, taking the sum over \(-J \le j \le J\) for some \(J>0\). As the solutions may converge slowly—if they converge at all (owing to the nonlinearity), one would need to solve \(6J+1\) nonlinear algebraic equations.

Assume, instead, that we wish to solve (4) by the approach described above. We would then insert the expansion for y(t) into (4). Assuming that we can solve the resulting nonlinear algebraic equations for the constants \(\left\{ B_j \right\} _{j=-\infty }^{j = \infty }\) and \(\alpha \), we can then recover x(t) and z(t) by recalling \(x = \dot{y}-ay\) and \(z = -\ddot{y}+a\dot{y}-y\). From these expressions, it is simple to show \(A_n = -(\alpha |n|+a)B_n\) and \(C_n = -(\alpha ^2 n^2 +a \alpha |n| +1)B_n\) for all \(n\in \mathbb {Z}\). If we were to truncate the expansion for y(t), in the manner described above, we would need to solve \(2J+1\) nonlinear algebraic equations for \(\left\{ B_j \right\} _{j=-J}^{j = J}\) and \(\alpha \), while the coefficients for x(t) and z(t) are immediately found once we know these parameters. This means that by first reducing the dimension of the ODE system, we would be able to reduce the computational complexity of the problem by a factor of three. For higher-dimensional system, the reduction in computational complexity will scale as the dimension of the system itself. In other words, a solution in term of the undetermined coefficient method will not depend on the size of the dynamical system provided that the dynamical system can be reduced in dimension to a single equation governing one state variable. See Table 3 for an example comparing the number of algebraic equations needing to be solved before and after reduction of dimension.

6.2 Competitive modes analysis: a check for chaos

The method of competitive modes involves recasting a dynamical system as a coupled system of oscillators [8,9,10,11,12,13]. Consider the general nonlinear autonomous system of dimension n given by

Differentiation of (39) once gives a coupled system of second-order equations,

When a \(g_i\) is positive, its respective ith equation behaves like an oscillator. The following conjecture is posed in [9]:

Competitive Modes Requirements: The conditions for dynamical systems to be chaotic are given by:

-

(A)

there exist at least two modes, labeled \(g_i\) in the system;

-

(B)

at least two g’s are competitive or nearly competitive, that is, for some i and j, \(g_i \approx g_j >0\) at some t;

-

(C)

at least one of the g’s is a function of evolution variables such as t; and

-

(D)

at least one of the h’s is a function of system variables.

The requirements (A)–(D) essentially tell us that a condition for chaos is that two or more equations in (40) behave as oscillators (\(g_i>0\)), and that two of these oscillators lock frequencies at one or more times. In practice, we find that the frequencies agree at a countably infinite collection of time values [8, 13]. The frequencies should be functions of time (i.e., we have nonlinear frequencies), and there should be at least one forcing function which depends on a state variable.

In order to consider all possible chaotic dynamics, one would have to compare each pair \(g_i = g_j\), \(i\ne j\), \(i,j = 1,2,3,\dots ,n\). Accounting for symmetry, this gives \(2^{n-1} -1\) matchings to consider. For high-dimensional dynamical systems, this number becomes rather large. As an example, in the case of a ten-dimensional system, there will be 511 possible matchings to be checked in order to ensure one has determined the possible chaotic regimes. For such situations, the approach is not particularly efficient, and a competitive modes analysis is often considered for systems of dimension three or four in the literature.

Let us consider dynamical systems (39) which can be put into the form of a single equation for one state variable. As such an equation will encode the dynamics of the complete system, it is sufficient to consider a competitive modes analysis for the resulting equation. Suppose that the resulting equation has maximal derivative of order \(p>0\) (where p need not be equal to n, as we have seen in earlier sections). Then, associating y(t) to this single state variable, we have

Since the competitive modes analysis relies on us obtaining a system of oscillator equations, let take \(y=y_1\) and write the equation (41) as the system

Differentiation of (42) results in the system of second-order equations given by

The right- hand side of the first \(p-2\) second-order equations do not depend on the state variable for each respective equation, so \(g_1=\cdots = g_{p-2} =0\). Hence, these equations are never oscillators. Meanwhile, we can decompose the right-hand sides of the latter two equations, so that

and

Note that there are now exactly two mode frequencies, \(g_{p-1}\) and \(g_p\), and there is always an \(h_i\) depending on state variables. In order to determine whether the system (42) satisfies the competitiveness conditions (A)–(D) (and therefore if the original system satisfies these competitiveness conditions), it is sufficient to check whether \(g_{p-1}=g_p >0\) for some collection of time values. This is only one condition to check, rather than \(2^{n-1}-1\) conditions to check from the original system. Therefore, while the conversion of the system (39) to the equivalent system (42) may seem somewhat roundabout, doing so greatly simplifies the search for possible chaotic dynamics under the competitive modes framework. See Table 4 for examples of the number of comparisons needed when applying the competitive modes analysis both before and after reduction of dimension.

7 Discussion

The construction of Lyapunov functions for nonlinear dynamical systems is often either simple, or quite challenging, with little room in between. Aside from choosing some standard forms (such as the common bowl shape centered about an equilibrium value), there is more an art to the selection of such function. However, as we have demonstrated for the Rössler system, it is possible to use differential elimination to obtain a contraction map in a single state variable, which can then be used to obtain a stability result for all state variables. A similar approach was also employed in [1] to study Michaelis–Menten enzymatic reactions, and after using a reduced equation, the proof of global asymptotic stability for a positive steady state was rather simple. Differential elimination, and reduction of dimension more generally, can therefore be useful in helping one determine asymptotic properties of solutions to nonlinear dynamical systems. On the other hand, it is now known that there are autonomous chaotic systems which lack any equilibrium [57]. The approach outlined here could still be used to construct maps which demonstrate the boundedness of trajectories in time for such systems, even if such trajectories do not approach any fixed points.

While useful for obtaining contraction maps, reduction of dimension is also a promising tool for finding and studying chaotic trajectories in nonlinear dynamical systems. We were able to show that reduction of dimension can be used to simplify calculations involved in using undetermined coefficient methods [3,4,5] by a factor of 1 / n, where n is the dimension of the dynamical system, meaning that the number of equations needing to be solved will not increase with the size of the system, i.e., the computational complexity of the approach will not scale with the size of the system but rather will remain fixed. This means that one may approximate chaotic trajectories through such approaches in systems of rather large dimension, without the computational problem becoming unwieldy, provided that the system can be reduced to a single ODE governing only one state variable.

While chaos is often studied numerically, recently analytical approaches have been employed to construct trajectories approximating chaotic orbits. When employing these methods, it can be beneficial to consider a single equation rather than a system of equations, even if the single equation is more complicated. This is true for series and perturbation approaches, as the reduction of dimension requires one to track fewer functions, which is particularly useful when dealing with messy equations. Furthermore, analytical approaches permitting the control of error, such as the optimal homotopy analysis method, rely on assigning an error control parameter to each state variable. Reduction of dimension can allow one to minimize error via a single control parameter [2], rather than over multiple parameters (as done in, for instance, [58]), which is computationally less demanding.

The reduction of dimension can also be useful for diagnostic tests for chaos. When applying the competitive modes analysis, a type of diagnostic criteria for finding chaotic in nonlinear dynamical systems, one performs binary comparisons between the mode frequencies of an oscillator corresponding to each equation. However, if one first applies reduction of dimension and reduces the system of a single ODE for one state variable, we prove that only one comparison would needed. This is particularly beneficial when studying large dimensional systems, since the number of naive comparisons needed scales like \(2^{n-1}\) in dimension n.

In the future, it would be interesting to consider an algorithm that considered elimination not only elements \(\partial ^n\) (\(\partial = \frac{d}{dt}\)) of a differential ring \(R[[\partial ]]\), but also integral operators \(\partial ^{-n}\) (where \(\partial ^{-n}\) satisfies \(\partial ^{-n}\partial ^{n} = \partial ^0\) and hence is the inversion of the operator \(\partial ^n\)). Indeed, in cases where the differential elimination algorithm failed to give a reduction of the system to a single ODE, we found by manual substitutions that one can arrive at an integro-differential equation. While more complicated, such integro-differential equations can still cast light on the behavior of solutions and can prove useful in obtaining Lyapunov functions. More generally than for dynamical systems, these inverse operators \(\partial ^{-n}\) play a role in the study of operators and integrable hierarchies arising in nonlinear evolution PDEs [59,60,61,62]. Therefore, the extension of the algorithm to the ring of formal Laurent series in \(\partial \) would be a fruitful area for future work, not only for dynamical systems, but also for integrable partial differential equations.

8 Conclusions

From our study, we find that reduction of dimension permits one certain computational benefits when used in conjunction with analytic methods. Let \(\dim (S)\) denote the dimension of a nonlinear system S. When used in conjunction with series or perturbation approaches to approximate solution trajectories, reduction of dimension can reduce the number of unknown series or perturbation expansions by a factor equal to \(\dim (S)\). Therefore, when such an approach is applicable, the number of series or perturbation expansions needed becomes independent of the original dimension of the system. When using competitive modes in order to determine likely candidates for chaos, the results are even more promising, as instead of needing \(2^{\dim (S)- 1}\) comparisons, one needs only to perform one comparison if first one successfully employs reduction of dimension. This is incredibly useful when \(\dim (S)\) is large. Additionally, there is an art to obtaining Lyapunov functions or contraction maps for nonlinear systems. However, applying reduction of dimension first, one only has to search for a Lyapunov function for a single equation. While this may still not be an easy task, it is often more intuitive to consider a single scalar equation. Therefore, we find that reduction of dimension can improve various aspects of analytical methods, making those methods more tractable or even more appealing to apply. This is particularly important, as most results in this area are obtained numerically, and hence, any analytical verification of such numerical results is of great utility.

Notes

We refer to the method as reduction of dimension, rather than reduction of order, as in many cases the differential order is unchanged. Rather, we are eliminating scalar functions, and hence, the number of unknown scalar functions is reduced. This method could also be referred to as reduction of scalar dimension.

Note that we also provide a long list of systems with nondimensional parameters in the Appendices, which could prove a useful resource for those wishing to have a unified list of chaotic and hyperchaotic systems and references for each system.

References

Mallory, K., Van Gorder, R.A.: A transformed time-dependent Michaelis–Menten enzymatic reaction model and its asymptotic stability. J. Math. Chem. 52, 222–230 (2014)

Li, R., Van Gorder, R.A., Mallory, K., Vajravelu, K.: Solution method for the transformed time-dependent Michaelis–Menten enzymatic reaction model. J. Math. Chem. 52, 2494–2506 (2014)

Zhou, T., Chen, G., Yang, Q.: Constructing a new chaotic system based on the Silnikov criterion. Chaos, Solitons, Fractals 19, 985–993 (2004)

Jiang, Y., Sun, J.: Si’lnikov homoclinic orbits in a new chaotic system. Chaos, Solitons, Fractals 32, 150–159 (2007)

Van Gorder, R.A., Choudhury, S.R.: Shil’nikov analysis of homoclinic and heteroclinic orbits of the T System. J. Comput. Nonlinear Dyn. 6, 021013 (2011)

Liao, S.J.: Beyond perturbation: introduction to the homotopy analysis method. CRC Press, Boca Raton (2003)

Ahn, C.K.: Neural network H\(^\infty \) chaos synchronization. Nonlinear Dyn. 60, 295–302 (2010)

Choudhury, S.R., Van Gorder, R.A.: Competitive modes as reliable predictors of chaos versus hyperchaos and as geometric mappings accurately delimiting attractors. Nonlinear Dyn. 69, 2255–2267 (2012)

Yao, W., Yu, P., Essex, C.: Estimation of chaotic parameter regimes via generalized competitive mode approach. Commun. Nonlinear Sci. Numer. Simul. 7, 197–205 (2002)

Yu, P., Yao, W., Chen, G.: Analysis on topological properties of the Lorenz and the Chen attractors using GCM. Int. J. Bifurc. Chaos 17, 2791–2796 (2007)

Chen, Z., Wu, Z.Q., Yu, P.: The critical phenomena in a hysteretic model due to the interaction between hysteretic damping and external force. J. Sound Vib. 284, 783–803 (2005)

Yao, W., Yu, P., Essex, C., Davison, M.: Competitive modes and their application. Int. J. Bifurc. Chaos 16, 497–522 (2006)

Van Gorder, R.A., Choudhury, S.R.: Classification of chaotic regimes in the T system by use of competitive modes. Int. J. Bifurc. Chaos 20, 3785–3793 (2010)

Mallory, K., Van Gorder, R.A.: Competitive modes for the detection of chaotic parameter regimes in the general chaotic bilinear system of Lorenz type. Int. J. Bifurc. Chaos 25, 1530012 (2015)

Ritt, J.F.: Differential algebra. Dover Publications Inc, New York (1950)

Kolchin, E.R.: Differential algebra and algebraic groups. Academic Press, New York (1973)

Boulier, F., Lazard, D., Ollivier, F., Petitot, M.: Computing representations for radicals of finitely generated differential ideals. AAECC 20(1), 73–121 (2009)

Boulier, F., Lemaire, F.: Computing canonical representatives of regular differential ideals. In :Proceedings of the 2000 international symposium on Symbolic and algebraic computation. pp. 38–47 (2000)

Boulier, F., Lazard, D., Ollivier, F., Petitot M.: Representation for the radical of a finitely generated differential ideal. In: Proceedings of the 1995 international symposium on Symbolic and algebraic computation pp. 158–166 (1995)

Rosenfeld, A.: Specializations in differential algebra. Trans. Am. Math. Soc. 90, 394–407 (1959)

Hashemi, A., Touraji, Z.: An Improvement of Rosenfeld-Gröbner Algorithm. In: Hong, H., Yap, C., (eds), ICMS Springer-Verlag, Berlin. LNCS 8592, pp. 466–471 (2014)

Seidenberg, A.: An elimination theory for differential algebra. University of California Publishing Mathematical (New Series) (1956)

Boulier, F.: Differential elimination and biological modelling. M. Rosenkranz and D. Wang. Workshop D2.2 of the Special Semester on Gröbner Bases and Related Methods, May 2006, Hagenberg, Austria. de Gruyter, Radon Series Comp. Appl Math 2, 111–139 (2007)

Meshkat, N., Anderson, C., DiStefano, J.J.I.I.I.: Alternative to Ritt’s pseudo division for finding the input-output equations of multi-output models. Math. Biosci. 239, 117–123 (2012)

Buchberger, B.: An Algorithm for Finding a Basis for the Residue Class Ring of a Zero-Dimensional Polynomial Ideal (German). PhD thesis, Math. Inst. Univ of Innsbruck, Austria (1965)

Zariski O, Samuel P (1958) Commutative Algebra. Graduate Texts in Mathematics, vol 28, Springer Verlag, New York

Boulier, F.: The BLAD libraries. http://www.lifl.fr/boulier/BLAD (2004)

Stein, WA., et al.: Sage Mathematics Software. The Sage Development Team. http://www.sagemath.org (2015)

Lorenz, E.N.: Deterministic nonperiodic flow. J. Atmos. Sci. 20(2), 130–141 (1963)

Hirsch, M.W., Smale, S., Devaney, R.: Differential equations, dynamical systems, and an introduction to chaos, 2nd edn. Academic Press, Boston (2003). ISBN 978-0-12-349703-1

Chua, L.O., Matsumoto, T., Komuro, M.: The double scroll. IEEE Trans. Circuits Syst. CAS 32(8), 798–818 (1985)

Chen, H.K., Lee, C.I.: Anti-control of chaos in rigid body motion. Chaos, Solitons, Fractals 21, 957–965 (2004)

Rabinovich, M.I., Fabrikant, A.L.: On the stochastic self-modulation of waves in nonequilibrium media. Sov. Phys. JETP 77, 617–629 (1979)

Rössler, O.E.: An equation for continuous chaos. Phys. Lett. A 57(5), 397–398 (1976)

Chen, G., Ueta, T.: Yet another chaotic attractor. Int. J. Bifurc. Chaos 9, 1465–1466 (1999)

Lü, J., Chen, G.: A new chaotic attractor coined. Int. J. Bifurc. Chaos 12(03), 659–661 (2002)

Tigan, G.: Analysis of a dynamical system derived from the Lorenz system. Sci. Bull. Politehnica. Univ. Timisoara 50, 61–72 (2005)

Van Gorder, R.A., Choudhury, S.R.: Analytical Hopf bifurcation and stability analysis of T system. Commun. Theor. Phys. 55(4), 609–616 (2011)

Qi, G., Du, S., Chen, G., Chen, Z., Yuan, Z.: On a four-dimensional chaotic system. Chaos, Solitons, Fractals 23(5), 1671–1682 (2005)

Qi, G., Chen, G., Du, S., Chen, Z., Yuan, Z.: Analysis of a new chaotic system. Phys. A Stat. Mech. Appl. 352(2), 295–308 (2005)

Celikovsky, S., Chen, G.: On a generalized Lorenz canonical form of chaotic systems. Int. J. Bifurc. Chaos 12(08), 1789–1812 (2002)

Van Gorder, R.A.: Emergence of chaotic regimes in the generalized Lorenz canonical form: a competitive modes analysis. Nonlinear Dyn. 66, 153–160 (2011)

Turaev, D.V., Shilnikov, L.P.: Blue sky catastrophes. Dokl. Math. 51, 404–407 (1995)

Van Gorder, R.A.: Triple mode alignment in a canonical model of the blue-sky catastrophe. Nonlinear Dyn. 73, 397–403 (2013)

Stenflo, L.: Generalized Lorenz equations for acoustic-gravity waves in the atmosphere. Phys. Scr. 53(1), 83–84 (1996)

Van Gorder, R.A.: Shilnikov chaos in the 4D Lorenz–Stenflo system modeling the time evolution of nonlinear acoustic-gravity waves in a rotating atmosphere. Nonlinear Dyn. 72(4), 837–851 (2013)

Genesio, R., Tesi, A.: Harmonic balance methods for the analysis of chaotic dynamics in nonlinear systems. Automatica 28(3), 531–548 (1992)

Arneodo, A., Coullet, P., Tresser, C.: Possible new strange attractors with spiral structure. Commun. Math. Phys. 79, 573–579 (1981)

Rössler, O.E.: An equation for hyperchaos. Phys. Lett. A 71, 155–157 (1979)

Gao, T.G., Chen, Z.Q., Chen, G.: A hyperchaos generated from Chen’s system. Int. J. Mod. Phys. C 17, 471–478 (2006)

Chen, A., Lu, J., Lü, J., Yu, S.: Generating hyperchaotic Lü attractor via state feedback control. Phys. A 64, 103–110 (2006)

Wang, G., Zhang, X., Zheng, Y., Li, Y.: A new modified hyperchaotic Lü system. Phys. A 371, 260–272 (2006)

Wang, F.-Q., Liu, C.-X.: Hyperchaos evolved from the Liu chaotic system. Chinese Phys. 15(5), 963–968 (2006)

Jia, Q.: Hyperchaos generated from the Lorenz chaotic system and its control. Phys. Lett. A 366(3), 217–222 (2007)

Qi, G., van Wyk, M.A., van Wyk, B.J., Chen, G.: On a new hyperchaotic system. Phys. Lett. A 372(2), 124–136 (2008)

Letellier, C., Roulin, E., Rössler, O.E.: Inequivalent topologies of chaos in simple equations. Chaos, Solitons, Fractals 28, 337–360 (2006)

Jafari, S., Sprott, J.C., Hashemi-Golpayegani, M.R.: Elementary quadratic chaotic flows with no equilibria. Phys. Lett. A 377(9), 699–702 (2013)

Van Gorder, R.A.: Analytical method for the construction of solutions to the Föppl–von Karman equations governing deflections of a thin flat plate. Int. J. Non Linear Mech. 47, 1–6 (2012)

Baxter, M., Choudhury, S.R., Van Gorder, R.A.: Zero curvature representation, bi-Hamiltonian structure, and an integrable hierarchy for the Zakharov-Ito system. J. Math. Phys. 56, 063503 (2015)

Ma, W.X., Chen, M.: Hamiltonian and quasi-Hamiltonian structures associated with semi-direct sums of Lie algebras. J. Phys. A Math. Gen. 39, 10787–10801 (2006)

Robertz, D.: Formal algorithmic elimination for PDEs, vol. 2121. Springer, Cham (2014)

Paramonov, S.V.: Checking existence of solutions of partial differential equations in the fields of Laurent series. Program. Compu. Softw. 40(2), 58–62 (2014)

Acknowledgements

HAH gratefully acknowledges the support of EPSRC Fellowship EP/K041096/1. The authors thank I. Kevrekidis and P. Kevrekidis for discussions.

Author information

Authors and Affiliations

Corresponding author

Appendices

Appendix 1: List of chaotic systems

When testing our approach, we considered a variety of specific chaotic systems. Results for these are listed in Table 1. As the form and scaling of such equations can vary in the literature, we list the specific form of these equations used in our work.

Let \(x,y,z,w\in C^n(\mathbb {R})\) where n is the dimension of the relevant dynamical system, and let \(a,b,c,d \in \mathbb {R}\) be parameters.

Modified Chua’s circuit [31]:

Chen–Lee system [32]:

Rabinovich–Fabrikant equations [33]:

Rössler system [34]:

Chen system [35]:

Lü system [36]:

4D Qi–Chen–Du–Chen–Yuan system [39]:

Qi–Chen–Du–Chen–Yuan system [40]:

Generalized Lorenz canonical form [41, 42]:

Two-parameter model for the blue-sky catastrophe [43, 44]:

4D Lorenz–Stenflo system [45, 46]:

Genesio–Tesi system [47]:

Arneodo–Coullet–Tresser system [48]:

Appendix 2: List of hyperchaotic systems

When testing our approach, we considered a variety of specific hyperchaotic systems. Results for these are listed in Table 2. As the form and scaling of such equations can vary in the literature, we list the specific form of these equations used in our work.

Let \(x,y,z,w\in C^4(\mathbb {R})\) and let \(a,b,c,d,e,f \in \mathbb {R}\) be parameters.

4D Rössler flow [49]:

Hyperchaotic Chen system [50]:

Hyperchaotic Lü system [51]:

Modified hyperchaotic Lü system [52]:

Hyperchaotic Wang–Liu system [53]:

Hyperchaotic Jia system [54]:

Hyperchaotic Qi–van Wyk–van Wyk–Chen system [55]:

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Harrington, H.A., Van Gorder, R.A. Reduction of dimension for nonlinear dynamical systems. Nonlinear Dyn 88, 715–734 (2017). https://doi.org/10.1007/s11071-016-3272-5

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s11071-016-3272-5