Abstract

Interdependent critical infrastructures in coastal regions, including transportation, the electrical grid, and emergency services, are continually threatened by storm-induced flooding. This has been demonstrated repeatedly by hurricanes such as Katrina, Sandy, Harvey, and Maria. Protecting these infrastructures with robust mechanisms is critical for our continued existence along the world’s coastlines. Planning these protections is non-trivial given the rare-event nature of strong storms and the sea level rise that accompanies climate change. This article proposes a methodological framework that combines multiple computational models, stakeholder interviews, and optimization to find an optimal protective strategy over time for critical coastal infrastructure, subject to budgetary constraints.



1 Introduction

The nation’s and the world’s infrastructure is threatened by climate change. Nowhere is this threat more direct than along the coastlines, where interdependent critical infrastructure (ICI) provides lifelines not only to those living in coastal regions but also to those far into the interior of the nation, as coastal communities are critical conduits for goods and services traveling inland. Storm surge, the flooding caused by a storm pushing water against the coastline, is a major threat in many coastal areas throughout the world and can cause widespread damage across large swaths of coastline, as recently demonstrated by Hurricane Sandy (2012), which caused $67.6 billion in direct damage (NCEI 2018). Today and in the future, these disastrous events may become more common due to the impacts of climate change. Global mean sea level, predicted to rise by at least 1 meter by the end of the century (Parris et al. 2012; Stocker et al. 2013), is expected to turn the storm surges of previously moderate coastal storms into disasters. New York City illustrates this in Fig. 1, where the predicted floodplain expands over time with the associated sea level rise. The result is increased vulnerability of critical infrastructure, as observed during Hurricane Sandy, which caused significant interruptions to transportation, power, and emergency services in New York. Finding ways to prevent these interruptions is central to ensuring the safety and longevity of our coastal areas and of the broader communities that depend on them.

Coastal protection strategies take a variety of forms and often the optimal strategy requires the combination of a number of different approaches. Finding the optimal strategy, however, is extremely difficult and the true breadth of possibilities is rarely explored, especially when considering storms that are outside the historical record. The methodological framework proposed here combines computational models and social science to find “optimal” coastal protection strategies for infrastructure. It should be noted that in this article, “optimal” solution means “most effective and preferred” solution, given a number of prescribed constraints. Consequently, there are certain limitations in the definition of optimality, as future storms and sea level rise are highly uncertain events. The social science component includes interviews of local and regional stakeholders who have technical and first-hand knowledge about the risks to critical infrastructure and about priorities in the area of interest. Stakeholders’ knowledge and perspectives are integrated into the computational component of the methodology in order to identify strategies that can successfully mitigate risks while also meeting local needs. In the end, the goal is to develop tools for determining optimal coastal protection of ICI accounting for climate change in ways that include the physical, financial, cultural, and social factors that are critical components for a successful adaptation (Adger et al. 2005).

In the following, the focus will be on New York City (NYC), although the proposed methodology is general enough to be applied to any coastal area around the world. The NYC region offers several advantages as a test bed for the proposed methodological framework: the authors’ prior research was conducted in the area, where they established valuable connections with important stakeholders. In addition, the complexity of the ICI in New York City makes it an ideal test bed for any methodology claiming to address such complexity. Finally, Hurricane Sandy’s relatively recent landfall provides an excellent validation test case for the proposed models, as well as a test case that resonates with stakeholders. This test case will then be generalized to other communities with additional stakeholder input, with a focus on the interdisciplinary aspect of the approach.

In summary, this paper develops and presents a new methodological framework for determining the optimal coastal protection strategy for ICI subjected to the combination of storm surge and sea level rise, including the identification of the models and data sets required, and then demonstrates the modeling and data integration using a simple, illustrative example. The full implementation of the method with its optimization component is currently in progress and will be presented in future work.

2 Risk to the interdependent critical infrastructures (ICI) of New York City

When storm surge damages critical infrastructures, important interdependencies among infrastructure components may extend and exacerbate impacts well beyond any initial infrastructure failures. One example is the failure of critical arterial roadways, which prevents emergency services from reaching certain areas. Disruptions to the power grid, especially prolonged ones, can affect both transportation networks and medical services through the loss of consistent power. Examples include the loss of the signaling system in mass transit and the loss of a consistent power supply to hospitals, both experienced during Hurricane Sandy. New York City’s experiences during Sandy demonstrated these and other failures due to interdependencies that must be addressed to ensure a more resilient city. We will focus on three components of New York City’s ICI, namely the transportation infrastructure, the power infrastructure, and the emergency services infrastructure, in relation to their risk from storm surge and from climate change through sea level rise.

2.1 Transportation

The transportation infrastructure includes both above and below ground components, but due to its susceptibility to storm-induced flooding and sea level rise, more emphasis will be placed on the underground component (subway or train tunnels and stations, car tunnels, etc.). The above ground component is also considered, especially in low-lying areas/flood zones (e.g., roads, bus depots, subway car parking areas, etc.).

During Hurricane Sandy, there was extensive damage to the transportation infrastructure of New York City. The underground transportation infrastructure in New York City is essentially open to the air: water entered subway and rail tunnels through low-lying stations and ventilation grilles and openings. After the massive damage that occurred, the Metropolitan Transportation Authority of New York City has been installing watertight hatches, doors, and coverings at locations that flooded during Sandy. In addition, water can sometimes enter tunnels through the basements of nearby buildings. Car tunnels were also flooded through their entrances and ventilation mechanisms. Once the underground transportation infrastructure is flooded, restoring it to its prior level of serviceability can take a long time, as the water has to be pumped out first and the affected electrical systems then have to be checked and repaired as necessary.

New York City’s transportation infrastructure is particularly complex. For the purposes of this work, emphasis will be placed on (1) the subway system, (2) the rail system, (3) all car tunnels, (4) streets and roadways in low-lying areas, with particular emphasis on those providing access to critical medical facilities, and (5) low-lying bus depots and subway/train car parking areas in flood-prone zones. The subway system will be fully accounted for, with particular emphasis on the underground part of the network and the above ground part located in low-lying areas. The rail system, including Amtrak, the Long Island Rail Road, the PATH train, and Metro-North, is heavily interconnected with the subway system (e.g., at Pennsylvania Station, Grand Central Station, and the World Trade Center Station), and this will be taken into account.

2.2 Power grid

The power grid in New York City is another critical piece of its infrastructure that experienced significant failures during Hurricane Sandy. Most of the grid’s power lines are located underground and this serves as a safety feature for certain weather-related hazards such as strong wind and ice storms. However, and most critically, this feature also exposes the grid to an increasingly important weather-related hazard: flooding. The power grid also includes above ground features, such as power stations that are vulnerable to storm-induced flooding, as illustrated by the power outage that occurred in Lower Manhattan during Hurricane Sandy.

New York City’s power grid is a distribution system, which provides power within a relatively narrow geographical area. Transmission systems, in contrast, transport power over long distances at high voltages, while distribution systems operate at lower voltages. Perhaps uniquely among major metropolitan areas, New York City generates a comparatively large fraction of its power, approximately 60%. The remaining 40% shortfall is nevertheless large enough that, if little or no power reaches the city from outside sources during severe weather, the intra-city power grid would collapse or deliver only a small fraction of the 60% it normally generates.

Along these lines, it is important to have an intuitive understanding of the process by which a grid “fails.” The process starts with the failure of some components, such as a power line (e.g., disabled by wind or a short circuit) or a transformer (e.g., disabled by flooding). Following such an event, power is redistributed according to the laws of physics. This redistribution may overload other equipment, such as other power lines. Overloaded power lines “trip” (stop operating) when local circuit breakers indicate that their temperature has reached a critical point. Thus, a set of secondary line interruptions may be observed, which may cause loss of power to customers or may cascade into a larger systemic failure. Such a cascade may lead to a complete system collapse when the available power sources can only provide a relatively small fraction of the overall power needed at the time. “Smart-grid” protective measures that intelligently reduce demand in real time to mitigate such a cascade are still in the research stage.
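The cascade mechanism described above can be illustrated with a deliberately simplified toy model. The sketch below is not a power-flow calculation: it assumes, hypothetically, that the flow of every tripped line is shared equally by all surviving lines (an equal-load-sharing, fiber-bundle-style rule), whereas real redistribution follows the network physics. The function name and all flows and thresholds are illustrative assumptions.

```python
def simulate_cascade(flows, capacities, initial_failures):
    """Toy cascade: a tripped line's flow is shared equally by all surviving lines.

    flows, capacities: per-line flow and trip threshold (same arbitrary units).
    initial_failures: indices of lines disabled by the initiating event.
    Returns the set of failed line indices once no further lines trip.
    """
    flows = list(flows)
    failed = set(initial_failures)
    newly_failed = set(initial_failures)
    while newly_failed:
        alive = [i for i in range(len(flows)) if i not in failed]
        if not alive:
            break  # total collapse: no line is left to carry the load
        # Redistribute the flow of the lines that tripped in the last round
        shed = sum(flows[i] for i in newly_failed)
        for i in newly_failed:
            flows[i] = 0.0
        for i in alive:
            flows[i] += shed / len(alive)
        # Surviving lines pushed above their threshold trip in the next round
        newly_failed = {i for i in alive if flows[i] > capacities[i]}
        failed |= newly_failed
    return failed
```

With four lines carrying 50 units each, losing one line is absorbed when the others can carry 70 units (each ends up near 67), but with thresholds of 60 units on three of them the same initiating failure overloads those lines and then the last one, collapsing the whole toy system.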

2.3 Emergency services

The third component of the infrastructure system that will be considered is emergency services, which are especially critical during any catastrophic event. The components considered include police and fire stations, hospitals and other treatment centers, and the delivery of these services via the transportation infrastructure. The infrastructure needed to coordinate emergency services will also be considered, as well as any other services identified by the relevant stakeholders. The most important objective for hospitals during a major flooding event is to continue operating on emergency power generators. If the area surrounding a hospital becomes flooded, it can become impossible for emergency response vehicles to reach the hospital. The interconnectivity of emergency medical services and the transportation infrastructure is therefore critical and will be considered as part of this study.

All hospitals and treatment centers in New York City will be accounted for. They will be considered as nodes of a network connected through the transportation infrastructure. The risk of failure of emergency power generators will be considered in our analysis.
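Treating hospitals as nodes of a transportation network suggests a simple reachability check: remove the road segments that are flooded and ask which hospitals can still be reached from, for example, an ambulance depot. The sketch below runs a breadth-first search on a hypothetical toy road graph; the node names and the all-or-nothing treatment of flooded segments are illustrative assumptions, not part of the authors’ GIS.

```python
from collections import deque

def reachable_hospitals(road_edges, flooded_edges, start, hospitals):
    """Return the hospitals reachable from `start` over roads that are not flooded."""
    blocked = {frozenset(e) for e in flooded_edges}  # undirected road segments
    graph = {}
    for a, b in road_edges:
        if frozenset((a, b)) in blocked:
            continue  # a flooded segment is treated as impassable
        graph.setdefault(a, []).append(b)
        graph.setdefault(b, []).append(a)
    seen = {start}
    queue = deque([start])
    while queue:  # breadth-first search over passable roads
        node = queue.popleft()
        for nxt in graph.get(node, []):
            if nxt not in seen:
                seen.add(nxt)
                queue.append(nxt)
    return {h for h in hospitals if h in seen}
```

For roads depot–A, A–H1, A–B, and B–H2, flooding the A–B segment leaves only `{"H1"}` reachable from the depot, whereas with no flooding both hospitals are reachable.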

2.4 Interdependencies

The aforementioned infrastructures in New York City can be heavily dependent on one another. For example, during Hurricane Sandy, a loss of power due to explosions at an electric utility facility close to the East River, followed by the flood-induced failure of the hospital’s own power generators, triggered the evacuation of the New York University Hospital Center/Bellevue Hospital. A breakdown in transportation (flooded roads and subways) prevented emergency services from reaching areas in critical need and impeded access to medical facilities. Such interdependencies among the various infrastructures will be considered as part of this work.

2.5 Data availability

To evaluate the proposed methodology, databases from the authors’ past research on the transportation system, the power grid, and critical emergency services are used. These databases are combined into a highly detailed Geographical Information System (GIS) that includes the following:

1. Underground transportation system of New York City: A detailed description of NYC’s subway system, train system, and all car tunnels is currently available from the Metropolitan Transportation Authority of New York City (MTA), the Port Authority of New York and New Jersey (PANYNJ), and Amtrak. This database includes the critical elevations of all openings of the underground infrastructure, such as station entrances and ventilation openings, the size of all these openings, and an exact mapping of all stations and tunnels, including their location, dimensions, and overall volume.

2. Above ground transportation system: The location and critical elevations of all streets and roads in New York City, as well as of selected low-lying bus depots, subway car parking areas, airports, etc., are also available.

3. Critical facilities: The location and critical elevations of all critical facilities, including hospitals, fire departments, police departments, military installations, and gas and electric utilities, are available, as depicted in Fig. 2.

4. Complete building information in New York City: The location, critical elevations, building usage, and asset data are available for every building in the city.

3 Framework of the proposed methodology

The proposed methodology aims at determining optimal protective strategies based on parameterizations of various protective measures, physics-based storm surge models that will evaluate these strategies, damage estimates due to storm-induced flooding, and quantification of the success of a protective strategy, integrated with input from professional stakeholders and members of at-risk communities for determining a realistic optimal solution.

The conceptual layout of the proposed methodological framework is depicted in Fig. 3. Its four basic components are:

1. New protective strategy: Each iteration starts with the formulation of a new protective strategy, based on the evaluation of previous protective strategies as well as on random perturbations. A protective strategy may consist of multiple protective measures implemented at different geographic (spatial) locations and at different times.

2. Simulated flooding: The extent of the storm-induced flooding over the area under consideration is estimated, accounting for the protective measures of the corresponding protective strategy. This is accomplished using the available simulation tools (the computationally inexpensive GIS-based model or the more accurate GeoClaw model).

3. Flooding damage assessment: Using the extent of the storm-induced flooding over the area under consideration, the resulting damage/loss is estimated.

4. Suitability of protective strategy: Using the estimated damage/loss for the current iteration and the corresponding damage/loss of previous iterations, the relative suitability or effectiveness of the current protective strategy with respect to those of previous iterations is assessed. The cost of implementing the protective strategies and stakeholder feedback are taken into account.

Key aspects of the proposed methodological framework are described in further detail in the following.

3.1 Parameterization of protective strategies

Each protective strategy at a specific iteration will include a set of different protective measures that will be appropriately parameterized. There are various possible protective measures, such as large-scale storm barriers, seawalls, levees, artificial islands and reefs, restoration of wetlands, sand dunes, sealing of individual components of the infrastructures (e.g., installing watertight doors/hatches at subway station entrances and ventilation openings), raising individual components of the infrastructure to higher elevations, and relocating individual components of the infrastructure, or entire communities, to higher ground, etc.

For example, for the case of a seawall, the corresponding parameterization includes (1) the location and length of the seawall and (2) its height. The parameterization allows the location, length, and height of the seawalls to vary spatially and temporally. The temporal dependence allows the seawall to be built at a certain height at a certain time and to be raised at a later time if needed. The construction cost, including labor wages, will be provided by stakeholders and informed by previous similar projects. A critical consideration when building the original wall will be to design it so that it can carry the additional weight resulting from a future increase in its height. Parameterizations can be developed similarly for all the other protective mechanisms considered.
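One way to encode this spatially and temporally varying seawall parameterization is a small record holding the location, the length, and a schedule of (year, height) pairs. The class and field names below are hypothetical; construction cost is deliberately left out because, as noted above, it is to be supplied by stakeholders.

```python
from dataclasses import dataclass, field

@dataclass
class Seawall:
    """Hypothetical parameterization of one seawall in a protective strategy."""
    location: str        # identifier of the shoreline segment (illustrative)
    length_m: float      # wall length in meters
    # (year, crest height in meters): built at the first year, raised at later ones
    height_schedule: list = field(default_factory=list)

    def height_in(self, year):
        """Crest height in effect in `year`; 0.0 before the wall is built."""
        height = 0.0
        for start_year, h in sorted(self.height_schedule):
            if year >= start_year:
                height = h
        return height

    def max_height(self):
        """Largest planned height, i.e. the load the original wall must be designed for."""
        return max((h for _, h in self.height_schedule), default=0.0)
```

A wall built to 3.0 m in 2030 and raised to 4.5 m in 2060 reports 0.0 m in 2025, 3.0 m in 2045, and 4.5 m afterwards, and `max_height()` returns the 4.5 m that the original structure has to anticipate.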

3.2 Storm-induced flood models

Previous approaches to calculating storm-induced flood damage have addressed the problem using either a computationally inexpensive model capable of performing a large number of calculations with limited accuracy (lacking a robust and complete description of the flood), or a computationally expensive, highly accurate model that can only perform a limited number of calculations because of its high computational cost. One of the main innovations of the proposed methodology is the combination of both types of models: the computationally inexpensive model narrows down the parameter space before the more accurate but more computationally expensive model is used to determine the final solution.

In the proposed methodological framework, the computationally inexpensive model is a GIS-based flood model (GIS-based Subdivision-Redistribution Simulation, GISSR; Miura et al. 2021) described in Sect. 3.2.2. The computationally expensive but more accurate model is GeoClaw, which solves the shallow water equations with high accuracy and provides a dynamic simulation of a body of water’s response to a storm. It is described in Sect. 3.2.3.
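The two-model strategy amounts to a screening step followed by a refinement step. In the sketch below, `cheap_model` and `accurate_model` are hypothetical callables standing in for GISSR and GeoClaw runs (each returning an estimated loss, lower being better), and `keep` is an assumed shortlist size; the point is that the expensive model is evaluated only `keep` times instead of once per candidate.

```python
def two_stage_search(candidates, cheap_model, accurate_model, keep=3):
    """Screen every candidate with a cheap model, then re-rank the best few accurately.

    cheap_model / accurate_model: callables mapping a candidate strategy to an
    estimated loss (lower is better); placeholders for GIS-based and
    shallow-water simulations respectively.
    """
    # Stage 1: inexpensive screening of the full candidate set
    shortlist = sorted(candidates, key=cheap_model)[:keep]
    # Stage 2: expensive evaluation restricted to the shortlist
    return min(shortlist, key=accurate_model)
```

The design choice is the usual one for surrogate screening: the cheap model only has to rank candidates roughly correctly, because the final decision among the shortlisted strategies is made with the accurate model.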

3.2.1 Future storms and sea level rise

Both models require a series of inputs. The first input is an ensemble of storms over a prescribed time frame against which each protective strategy will be tested. One major challenge in analyzing the susceptibility of New York City’s ICI to a weather-related exogenous event is that few such events have taken place. The ensemble of storms is therefore defined probabilistically from the record of historical storms that have hit the New York City area, supplemented by a synthetic ensemble of physically realizable storms, as was done in Lin et al. (2012). A specific maximum storm surge height can be simulated for each storm using a probabilistic model based on the historical data. The simulation for the proposed optimization methodology uses a modified beta distribution function for the future peak storm surge estimations in Lower Manhattan, NY. The details of this modified beta distribution model will be provided in an upcoming paper. The uncertainties in the occurrence and intensity of future storms over the prescribed period of time are addressed by simulating a large number of storm sequences over that period. Each storm sequence contains a different number of storms, with different occurrence times and corresponding intensities. Finally, a histogram of the overall damage/loss over the prescribed period of time can be established.

The second input is sea level rise. Given a time frame for evaluation defined at the outset of the optimization process, the amount of sea level rise at a specific time in the future will be determined using a probabilistic model. This sea level rise will be combined with the height of a storm surge. For example, the sea level rise projections for New York City shown in Table 1 (Horton et al. 2015; Gornitz et al. 2019) can be added to the generated storm surge data. It should be noted that sea level rise may also alter the coastal configuration and the storm surges themselves, but this is beyond our current modeling capabilities.
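A minimal sketch of combining a probabilistic sea level rise with a sampled surge follows. It assumes, purely for illustration, that the rise in a given year is normally distributed, with a mean and standard deviation interpolated linearly between a few anchor years; the anchor values in `SLR_PROJECTIONS` are placeholders, not the Table 1 projections, and would have to be replaced by those before any real use.

```python
import random

# PLACEHOLDER anchors: year -> (median rise, standard deviation), in meters.
# These are NOT the Table 1 projections; replace them before any real use.
SLR_PROJECTIONS = {2020: (0.0, 0.0), 2050: (0.3, 0.1), 2100: (0.9, 0.3)}

def sample_sea_level_rise(year, rng, anchors=SLR_PROJECTIONS):
    """Draw a sea level rise (m) for `year`, interpolating between anchor years."""
    years = sorted(anchors)
    year = min(max(year, years[0]), years[-1])  # clamp to the anchor range
    for y0, y1 in zip(years, years[1:]):
        if y0 <= year <= y1:
            w = (year - y0) / (y1 - y0)
            mean = (1 - w) * anchors[y0][0] + w * anchors[y1][0]
            sd = (1 - w) * anchors[y0][1] + w * anchors[y1][1]
            return max(0.0, rng.gauss(mean, sd))  # no negative rise, by assumption
    return anchors[years[0]][0]  # only reached if a single anchor is given

def total_water_level(surge, year, rng):
    """Peak storm surge (m) plus the sea level rise sampled for that year."""
    return surge + sample_sea_level_rise(year, rng)
```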

3.2.2 GIS-based dynamic flood level models

The initial iterations in the optimization process in Fig. 3 will use the GIS-based system GISSR developed by the authors (Miura et al. 2021; Jacob et al. 2011). The GISSR system includes a very detailed digital elevation model (DEM) with a resolution of 1 ft, building footprints with essential building information [including height, area, value (asset), usage, and basement data] in New York City, complete description of transportation systems (above and below ground), etc. Layering these features together with flood height data will enable us to determine the overall height of water at the location of each and every infrastructure component in the city.

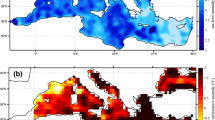

The simulation only needs topographical data, surge data (time history of water level along the coastline), and protective measures, if any, as inputs. The simulation can estimate the amount of flooding when the surge exceeds the height of the protective measures. Representative results from two different GIS systems regarding the flooding of the infrastructure systems above and below ground in New York City are shown in Figs. 4 and 5 (Jacob et al. 2011; Miura et al. 2021).

Fig. 4 Representative results of GISSR (Miura et al. 2021) in Lower Manhattan, NY. Light blue indicates the inundation area from Hurricane Sandy; darker blue indicates Sandy’s inundation area with the added middle estimate of sea level rise in 2050; the darkest blue indicates Sandy’s inundation area with the added middle estimate of sea level rise in 2100

Fig. 5 Representative results of the GIS system from Jacob et al. (2011). Heavy blue lines indicate fully flooded subway tunnels from the 100-year flood in New York City
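The overtopping logic mentioned above can be illustrated with a much simpler "bathtub" model than GISSR: a grid cell floods if it is connected to the sea through cells whose ground elevation plus barrier height lies below the water level. The sketch below is that simplification only; unlike GISSR it ignores the time history and finite volume of the surge, so it tends to overestimate inundation. The grid, the elevations, and the function name are illustrative assumptions.

```python
from collections import deque

def bathtub_flood(ground, walls, water_level, sea_cells):
    """Connected 'bathtub' flood map on a grid (a simplification, not GISSR).

    ground: 2D list of ground elevations (m); walls: 2D list of barrier heights
    (m, 0 where there is none); sea_cells: (row, col) cells open to the sea.
    A cell floods if water reaches it from the sea through neighbouring cells
    whose ground plus barrier height lies below `water_level`.
    """
    rows, cols = len(ground), len(ground[0])
    flooded = [[False] * cols for _ in range(rows)]
    queue = deque()
    for r, c in sea_cells:
        flooded[r][c] = True
        queue.append((r, c))
    while queue:
        r, c = queue.popleft()
        for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
            nr, nc = r + dr, c + dc
            if 0 <= nr < rows and 0 <= nc < cols and not flooded[nr][nc]:
                # Water passes only where it overtops ground plus any barrier
                if ground[nr][nc] + walls[nr][nc] < water_level:
                    flooded[nr][nc] = True
                    queue.append((nr, nc))
    return flooded
```

On a one-row strip with ground elevations of 0, 1, 1, and 1 m and a 2 m water level, every cell floods; adding a 2 m wall on the second cell (crest at 3 m) keeps the landward cells dry.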

3.2.3 The GeoClaw model

Once the optimization process in Fig. 3 has sufficiently narrowed down the space of protective strategies using the fast GIS-based model GISSR, the GeoClaw model will be used to calculate time-dependent, storm-induced flooding with high accuracy. GeoClaw implements the finite volume, wave-propagation numerical method described in LeVeque (2002) and is part of Clawpack (Mandli et al. 2016). An example of GeoClaw’s capabilities is shown in Fig. 6.

Fig. 6 Left: A snapshot of a GeoClaw storm surge simulation of Hurricane Ike at landfall. Right: Tide gauge data computed from GeoClaw along with observed data at the same location. The wall clock time was 2 h and the CPU time 8 h (Mandli and Dawson 2014)
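To give a flavor of what a finite volume solver of the shallow water equations does, the sketch below advances the one-dimensional equations for depth h and momentum hu with a first-order scheme and solid walls at both ends. It substitutes the simple Rusanov (local Lax-Friedrichs) flux for GeoClaw’s Riemann-solver-based wave-propagation algorithm and omits bathymetry, friction, wind and pressure forcing, wetting and drying, and adaptive mesh refinement, so it illustrates the conservative update only and is not a stand-in for GeoClaw.

```python
import math

G = 9.81  # gravitational acceleration (m/s^2)

def physical_flux(h, hu):
    """Flux F(q) of the 1D shallow water equations for q = (h, hu)."""
    return hu, hu * hu / h + 0.5 * G * h * h

def rusanov_flux(hl, hul, hr, hur):
    """Rusanov (local Lax-Friedrichs) numerical flux at one cell interface."""
    fl = physical_flux(hl, hul)
    fr = physical_flux(hr, hur)
    # Largest local wave speed |u| + sqrt(g h) on either side of the interface
    a = max(abs(hul / hl) + math.sqrt(G * hl), abs(hur / hr) + math.sqrt(G * hr))
    return (0.5 * (fl[0] + fr[0]) - 0.5 * a * (hr - hl),
            0.5 * (fl[1] + fr[1]) - 0.5 * a * (hur - hul))

def step(h, hu, dx, cfl=0.9):
    """One explicit finite volume update with reflective (wall) boundaries."""
    n = len(h)
    # Ghost cells copy the edge depth and reverse the edge momentum
    hg = [h[0]] + h + [h[-1]]
    hug = [-hu[0]] + hu + [-hu[-1]]
    smax = max(abs(hug[j] / hg[j]) + math.sqrt(G * hg[j]) for j in range(n + 2))
    dt = cfl * dx / smax  # CFL-limited time step
    # flux[j] is the flux between ghost-padded cells j and j + 1
    flux = [rusanov_flux(hg[j], hug[j], hg[j + 1], hug[j + 1]) for j in range(n + 1)]
    new_h = [h[i] - dt / dx * (flux[i + 1][0] - flux[i][0]) for i in range(n)]
    new_hu = [hu[i] - dt / dx * (flux[i + 1][1] - flux[i][1]) for i in range(n)]
    return new_h, new_hu, dt

# Dam-break example: 2 m of water on the left half, 1 m on the right, at rest
n, length = 200, 10.0
dx = length / n
h = [2.0 if i < n // 2 else 1.0 for i in range(n)]
hu = [0.0] * n
initial_volume = sum(h) * dx
t = 0.0
while t < 0.5:  # simulate roughly half a second
    h, hu, dt = step(h, hu, dx)
    t += dt
```

Because the wall fluxes of water volume vanish and the interior fluxes cancel in pairs, the total volume `sum(h) * dx` is conserved to rounding error, which is the property that makes finite volume methods attractive for inundation problems.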

3.2.4 The overall simulation model

The GISSR model and the GeoClaw model can each calculate the extent of flooding from a specific storm with varying levels of accuracy and computational efficiency. However, the overall methodological framework and the associated optimization scheme depicted in Fig. 3 do not depend on the outcome of a single specific storm. Instead, they depend on the outcome of the entirety of possible storms over a prescribed period of time. This is accomplished in the following way.

Assuming that the prescribed period of time under consideration is N years, one simulation consists of determining the number and occurrence times of all storms within these N years (a Poisson model can be used for this purpose). Then, a specific maximum storm surge height is simulated for each and every storm in this period of N years using the modified beta distribution model mentioned earlier. This simulation process over the period of N years is repeated a large number of times M (e.g., \(M=1000\)). For each of these M times, the number and specific times of occurrence of the storms within the N years will be different. The corresponding maximum storm surge heights will also be different. Eventually, statistics will be derived from the M simulations performed over the prescribed period of N years. For example, statistics of the overall damage/loss over the N years can be established (mean, standard deviation, and even a rough estimate of its PDF if M is large enough).

The following section describes how to compute the damage/loss for a specific storm event. Adding the damage/loss from all storms within the N years of one of the M simulations provides the corresponding overall damage/loss. This is repeated M times to obtain M values of the overall damage/loss over N years, from which the corresponding statistics are computed in a straightforward manner. The resulting very large number of generated storms helps capture rare events with very large storm surges.
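The N-year, M-realization procedure can be sketched as follows. Storm occurrence times are drawn from a Poisson process via exponential inter-arrival times, and each peak surge is drawn from a standard beta distribution rescaled to an assumed range, standing in for the modified beta model, whose details are not given here. The rate, the surge range, the beta shape parameters, and the per-storm `damage_of_storm` callable are all hypothetical placeholders.

```python
import random
import statistics

def simulate_storms(n_years, rate, rng, surge_range=(0.5, 5.0), shape=(2.0, 5.0)):
    """One realization: (time in years, peak surge in m) for all storms within n_years."""
    lo, hi = surge_range
    storms = []
    t = rng.expovariate(rate)  # time of the first storm
    while t < n_years:
        surge = lo + (hi - lo) * rng.betavariate(*shape)  # rescaled beta draw
        storms.append((t, surge))
        t += rng.expovariate(rate)  # exponential gaps give a Poisson process
    return storms

def loss_statistics(n_years, m_runs, rate, damage_of_storm, seed=0):
    """Total N-year loss for each of M realizations, plus its mean and std."""
    rng = random.Random(seed)
    totals = []
    for _ in range(m_runs):
        storms = simulate_storms(n_years, rate, rng)
        # Sum the per-storm damage/loss within this N-year sequence
        totals.append(sum(damage_of_storm(t, surge) for t, surge in storms))
    return statistics.mean(totals), statistics.stdev(totals), totals
```

Passing the storm time to `damage_of_storm` leaves room to add the sea level rise expected in that year before evaluating the damage, and the returned `totals` list is what a histogram of the N-year loss would be built from.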

3.3 Flooding damage assessment

Using appropriate fragilities (e.g., Hazus 2018), the loss for every component of the infrastructure (including structural damage, damage to contents, and loss of use) can be computed for a given storm with known water height at any location within the geographical area considered (using GISSR or GeoClaw). The losses are then added for all infrastructure components inside the geographical area considered to establish the overall loss from this specific event. The interconnections of different infrastructures will be considered. For example, a power failure can trigger interruptions in subway service, street traffic lights, communications (especially wireless), water supply to tall buildings, and many other areas. The interconnections can continue beyond this level. For example, loss of street traffic lights can lead to traffic chaos that prevents emergency vehicles from reaching their assigned destinations. Loss of wireless communications can negatively affect the coordination of emergency services, and so on.
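The chains of interconnections described above can be traced with a simple propagation over a dependency map. The sketch assumes, pessimistically and purely for illustration, that a service fails as soon as any service it depends on fails; real dependencies are often partial (backup generators, alternative routes), and the service names are hypothetical labels taken from the examples in the text.

```python
from collections import deque

def propagate_failures(depends_on, initial_failures):
    """Return every service that fails once the initial failures propagate.

    depends_on maps each service to the services it needs; a service is assumed
    to fail if ANY of its dependencies has failed (a deliberately pessimistic rule).
    """
    # Invert the map: which services are affected when a given service fails
    dependents = {}
    for service, needs in depends_on.items():
        for need in needs:
            dependents.setdefault(need, []).append(service)
    failed = set(initial_failures)
    queue = deque(initial_failures)
    while queue:  # breadth-first propagation through the dependency graph
        current = queue.popleft()
        for affected in dependents.get(current, []):
            if affected not in failed:
                failed.add(affected)
                queue.append(affected)
    return failed
```

With power feeding the subway, traffic lights, wireless service, and tall-building water supply, and with emergency access depending on traffic lights and emergency coordination on wireless service, a power failure propagates to all of them, while a wireless outage alone only affects emergency coordination.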

This damage assessment procedure requires a detailed description of all components of the infrastructure in the geographic area under consideration. This information has to include the following for each component of the infrastructure: exact geographic location, critical elevations (elevations of openings from where water can enter and flood the structure), description of structure (materials, form, use, etc.), fragility of structure as a function of the height of water at its location, value of structure and of its contents, per diem cost resulting from loss of use of the structure, etc. Note that here, cost is associated with the loss and subsequent repair of the infrastructure, not the cost of the protective measures that are accounted for separately. It should be pointed out that both the above ground and below ground infrastructures are accounted for.

3.3.1 Damage functions for physical loss

The first step in flooding damage assessment translates water height to percent loss of a structure using damage functions/fragilities. Water heights at the location of every structure are computed using GISSR or GeoClaw. This step will also be informed by the stakeholder interviews so that first-hand knowledge of weaknesses, strengths, and interconnections of the infrastructure is included as accurately as possible.

Figure 7 shows typical damage functions providing the damage percentage as a function of flood height for different types of structures. They are provided by Hazus, which was developed by the Federal Emergency Management Agency (FEMA) of the Department of Homeland Security. Damage functions are available for a variety of building classes, including various residential and commercial building types, utilities, factories, theaters, hospitals, nursing homes, churches, etc. It should be mentioned here that the Hazus damage functions/fragilities were developed for static flooding resulting from rainfall. The storm surge flooding considered in this study is somewhat different, as it involves wave action close to the coastline. This is accounted for through appropriate modifications of the Hazus damage functions. Another unique characteristic of this study centered on New York City is the prevalence of tall and very tall buildings. Appropriate damage functions will be developed for such buildings, which usually sustain flood damage amounting to only a small percentage of their overall value. Finally, the authors have developed damage functions/fragilities for the underground transportation system in New York City, including direct damage and loss of use. The total damage/loss \(C_{dmg}\) in a target area related to physical loss is computed from:

\[ C_{dmg} = \sum_{i=1}^{N} a_i\, D_i(h_i) \tag{1} \]

where N is the total number of buildings in the area, \(a_i\) is the total value (asset) of building i, and \(D_i\) is the percentage of the total replacement cost, expressed as a fraction, associated with the flood height \(h_i\) observed at the location of building i. The flood height \(h_i\) at each location is computed by subtracting the critical elevation of the building from the water surface elevation at that location.

Fig. 7 Hazus (2018) damage functions related to physical loss for various types of structures as a function of flood height. The damage functions for police and fire departments and emergency operation centers are the same. The damage function for electric utilities is available only in the 0 to 3 m range
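The physical-loss sum can be evaluated by interpolating a depth-damage curve at each building’s flood height. In the sketch below, the curve points are hypothetical placeholders rather than Hazus values, damage is expressed as a fraction between 0 and 1, each building is reduced to an (asset value, critical elevation) pair, and flood heights outside the tabulated range are clamped to the end points.

```python
from bisect import bisect_right

def damage_fraction(depth, curve):
    """Linear interpolation in a depth-damage curve [(depth_m, fraction), ...]."""
    depths = [d for d, _ in curve]
    if depth <= depths[0]:
        return curve[0][1]
    if depth >= depths[-1]:
        return curve[-1][1]
    j = bisect_right(depths, depth)  # first tabulated depth above `depth`
    (d0, f0), (d1, f1) = curve[j - 1], curve[j]
    return f0 + (f1 - f0) * (depth - d0) / (d1 - d0)

def physical_loss(buildings, water_elevation, curve):
    """C_dmg = sum_i a_i * D_i(h_i), with h_i = water elevation - critical elevation."""
    total = 0.0
    for asset_value, critical_elevation in buildings:
        h_i = water_elevation - critical_elevation  # flood height at building i
        total += asset_value * damage_fraction(h_i, curve)
    return total
```

For a curve through (0 m, 0), (1 m, 0.2), and (3 m, 0.5), a water surface at 3.0 m gives a 2.0 m flood height and a damage fraction of 0.35 for a building with a 1.0 m critical elevation, while a building whose critical elevation is 3.0 m sees no loss.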

3.3.2 Economic loss assessment

Damage/loss due to suspended business operations during the restoration period will be considered if the building has commercial areas and did not collapse (buildings with over 50% damage will be considered collapsed). Hazus (2018) provides damage functions for two types of economic loss: inventory loss and income loss. The total inventory loss \(C_{inv}\) is computed as:

\[ C_{inv} = \sum_{i=1}^{N_{inv}} A_i\, S_i\, B_i \tag{2} \]

where \(N_{inv}\) is the total number of commercial/industrial buildings/occupancies dealing with inventories, \(A_i\) is the floor area at and below the flood height \(h_i\), \(S_i\) denotes the annual gross sales for occupancy i, and \(B_i\) is the business inventory, expressed as a percentage of gross annual sales. This applies to retail trade, wholesale trade, and industrial facilities. The total income loss \(C_{inc}\) is computed from:

\[ C_{inc} = \sum_{i=1}^{N_{com}} (1 - f_i)\, I_i\, d_i \tag{3} \]

where \(N_{com}\) is the total number of buildings/occupancies with commercial areas, \(f_i\) is the income recapture factor for occupancy i, \(I_i\) is the income per day for occupancy i, and \(d_i\) is loss of function time for the business in days. When a storm destroys more than 50% of a building, the building is considered as collapsed and will be demolished (not repaired).
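The inventory- and income-loss sums, together with the 50% collapse rule, translate directly into sums over occupancies. The dictionary keys below are hypothetical field names; the sketch assumes the sales figure is expressed per unit floor area, so that the product of floor area, sales, and inventory share is a monetary amount, and it simply excludes collapsed buildings from the income loss, as stated in the text.

```python
def inventory_loss(occupancies):
    """C_inv = sum_i A_i * S_i * B_i over occupancies that hold inventory."""
    return sum(o["flooded_area"] * o["sales_per_area"] * o["inventory_share"]
               for o in occupancies)

def income_loss(occupancies, collapse_threshold=0.5):
    """C_inc = sum_i (1 - f_i) * I_i * d_i over commercial occupancies.

    Buildings whose damage fraction exceeds the threshold are treated as
    collapsed (demolished, not repaired) and are excluded here.
    """
    return sum((1 - o["recapture"]) * o["income_per_day"] * o["days_lost"]
               for o in occupancies
               if o["damage_fraction"] <= collapse_threshold)
```

With an income recapture factor of 0.8, an income of 1000 per day, and 30 days of lost function, a building with 20% damage contributes a 6000 income loss, whereas a building with 70% damage is treated as collapsed and contributes nothing.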

3.3.3 Overall damage assessment

Following the damage assessment methods described in Sects. 3.3.1 and 3.3.2, overall damage cost estimates can be computed as a function of flood height. The overall cost estimates include physical damage loss [Eq. (1)], inventory loss [Eq. (2)], and income loss [Eq. (3)]. For example, Fig. 8 displays representative damage cost estimates in Lower Manhattan for different levels of flood height using Eqs. (1) to (3).

3.4 Assessment of suitability of a specific protective strategy

The iterative process depicted in Fig. 3 is based on the assumption of a prescribed time horizon of N years (N can be 20, 50, 100 years or any other number). The first step is to calculate the overall losses over the N years from all possible storms during this period, without any protective strategy implemented. We denote these losses by \(L_{no}\) (losses are considered in a statistical sense in this section as \(L_{no}\) is computed M times from M different simulations over the period of N years).

The basic requirement for any protective strategy is that the sum of its implementation (construction) cost \(L_{co}\) and the overall losses \(L_{ps}\) with the strategy in place be less than \(L_{no}\):

\[ L_{co} + L_{ps} < L_{no} \qquad (4) \]

If Eq. (4) is not satisfied for a specific protective strategy, then this strategy is unacceptable (since doing nothing has a lower overall cost).
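The acceptance test of Eq. (4) can be written as a small check. Since the losses are statistical, this sketch compares sample means over the M simulated periods. Using means, rather than, say, a high quantile, is an assumption, and the function names are hypothetical.

```python
import statistics
from typing import Sequence


def net_benefit(l_co: float, l_ps_samples: Sequence[float],
                l_no_samples: Sequence[float]) -> float:
    """Mean of L_no minus (L_co + mean of L_ps), over the M simulated N-year periods."""
    return statistics.mean(l_no_samples) - (l_co + statistics.mean(l_ps_samples))


def is_acceptable(l_co: float, l_ps_samples: Sequence[float],
                  l_no_samples: Sequence[float]) -> bool:
    """Eq. (4) with the statistical losses taken as sample means (an assumption)."""
    return net_benefit(l_co, l_ps_samples, l_no_samples) > 0.0
```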

During the iterative optimization process shown in Fig. 3, a large number of different protective strategies are considered (a new strategy at every iteration). Suppose the sum of the implementation cost and overall losses of the protective strategy at iteration (i) is less than that of the temporary optimum carried over from iteration (\(i-1\)). In that case, the strategy at iteration (i) becomes the new temporary optimum. Otherwise, the temporary optimum from iteration (\(i-1\)) is retained and a new protective strategy is tested against it. This procedure is expressed as:

\[
\text{temporary optimum after iteration } i =
\begin{cases}
\text{strategy } (i), & \text{if } \left(L_{co} + L_{ps}\right)^{(i)} < \left(L_{co} + L_{ps}\right)^{(i-1)} \\
\text{temporary optimum after iteration } (i-1), & \text{otherwise}
\end{cases}
\qquad (5)
\]

The iterations continue until \((L_{co} + L_{ps})\) stabilizes, i.e., until subsequent iterations achieve no further reduction. As with \(L_{no}\), \(L_{ps}\) is considered in a statistical sense.
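The incumbent-replacement logic described above can be sketched as a generic loop. The sketch rests on three assumptions:

- The `total_cost` callback stands in for \(L_{co} + L_{ps}\).
- "Stabilizes" is interpreted as `patience` consecutive candidates without improvement.
- The generation of new strategies is left to a user-supplied `propose` function. Sect. 3.6 discusses that component.

```python
import random
from typing import Callable, Optional, Tuple, TypeVar

S = TypeVar("S")


def iterative_search(propose: Callable[[random.Random, Optional[S]], S],
                     total_cost: Callable[[S], float],
                     max_iter: int = 1000, patience: int = 50,
                     seed: int = 0) -> Tuple[S, float]:
    """Keep a temporary optimum; replace it only when a candidate has lower L_co + L_ps.

    Stops after `patience` consecutive non-improving candidates (a stand-in for
    "stabilizes") or after `max_iter` candidates, whichever comes first.
    """
    rng = random.Random(seed)
    best = propose(rng, None)  # initial strategy
    best_cost = total_cost(best)
    stale = 0
    for _ in range(max_iter):
        candidate = propose(rng, best)  # new strategy tested against the temporary optimum
        cost = total_cost(candidate)
        if cost < best_cost:
            best, best_cost, stale = candidate, cost, 0
        else:
            stale += 1
            if stale >= patience:
                break
    return best, best_cost
```

As a toy usage example, take these hypothetical inputs:

- Strategies are integer wall heights from 0 to 10.
- The cost is \((h - 7)^2\).
- New heights are proposed by stepping one unit up or down from the current optimum.

With these inputs, the loop settles on a height of 7.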

Stakeholder identification of pertinent metrics will be incorporated into the assessment through the social science component of the method. Knowledge from stakeholder interviews and community meetings will be included by adjusting the weighting of various components or adding new criteria as appropriate. For example, stakeholders might identify critical areas that should not flood and therefore should be weighted more heavily. Considering multiple time horizons will allow the evaluation of the long-term success of each protective strategy (Hancilar et al. 2014; Lopeman et al. 2015).
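One way stakeholder input could enter the assessment is as area weights and extra penalty terms on the loss. The sketch below is hypothetical, and the area names, weights, and penalty are illustrative. Areas without a specified weight count once. Each extra criterion is a function of the per-area losses, such as a large penalty whenever an area that stakeholders identify as critical floods.

```python
from typing import Callable, Dict, Iterable

Criterion = Callable[[Dict[str, float]], float]  # extra stakeholder-derived penalty term


def weighted_loss(losses_by_area: Dict[str, float],
                  weights: Dict[str, float],
                  extra_criteria: Iterable[Criterion] = ()) -> float:
    """Weighted sum of per-area losses plus any additional criteria.

    Areas missing from `weights` get weight 1.0.
    """
    base = sum(loss * weights.get(area, 1.0) for area, loss in losses_by_area.items())
    return base + sum(criterion(losses_by_area) for criterion in extra_criteria)
```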

Finally, it is important to mention that the methodology includes an upper limit for the implementation cost of the optimal protective strategy:

3.5 Stakeholder interactions and feedback

As part of developing this method, local stakeholders with technical and first-hand empirical knowledge of ICI in New York City have been interviewed. The interviews elicited their understanding and perceptions of how storm surges impact critical infrastructure and how infrastructure interdependencies amplify these impacts. Findings from these interviews provide information beyond the strictly technical understanding that usually informs protective strategy planning. By engaging and integrating stakeholders’ knowledge, adaptation options to protect coastal infrastructure can be attuned to the particular, contextual risks that storm surge hazards pose to transportation, the power grid, emergency services, and other infrastructure components. Moreover, examining from multiple perspectives how storm surge intersects with infrastructure, and how interdependencies arise within complex infrastructure systems, yields a deeper understanding of this complexity than any single perspective could. In this way, the optimization framework relies not only on GISSR and GeoClaw modeling but also on the wealth of experiential knowledge about infrastructure interdependencies held by local stakeholders. This knowledge draws particularly on their experiences with Hurricane Sandy, which devastated communities and the infrastructure systems on which they rely.

Complementing the stakeholder interviews, the method also includes participation in community meetings with local groups that are actively pursuing coastal community resilience activities. These meetings will be an opportunity to share results of the optimization framework with selected communities and to assess those results against community needs and priorities.

3.5.1 Stakeholder interviews

Stakeholder interviews were conducted in the initial stages of the project so that their results could inform the optimization framework from the early stages of development. Interviewees were selected based on their relevance to the project and their connection to NYC’s ICI. Additional stakeholders were identified through recommendations from the initial interviewees, following a purposive snowball sampling technique (Burawoy 1998). Following standard social science practice, interviews were recorded and transcribed for analysis. To ensure that the interviews yielded insight relevant to the operation and goals of the optimization framework, the research team collaborated iteratively to create the interview protocol and the coding scheme used to guide analysis. This collaboration involved the computer modeling and infrastructure experts in the social science methodology.

Our current implementation of the method includes interviews conducted in two phases. In phase one, at the beginning of the project, ten stakeholders were interviewed to elicit their mental models of storm surge impacts on ICI in New York City. Mental models are causal beliefs about how the world works, including complex relationships within specific processes (Morgan et al. 2002; Jones et al. 2011; de Bruin and Bostrom 2013; Lazrus et al. 2016). To characterize stakeholders’ mental models of storm surge impacts and critical infrastructure interdependencies, interviewers followed Lazrus et al. (2016) and Morss et al. (2015). Stakeholders were first asked to describe storm surge risks to infrastructure in general. The questioning was then progressively narrowed to follow up on, and home in on, specific elements of their mental models. Interviewees also viewed a sample of initial GeoClaw model simulations and provided feedback on their understanding of, trust in, and potential use of the model information. Phase two began once initial modeling results incorporating input from the phase-one interviews became available. The same set of stakeholders was then re-interviewed to assess how well the optimization framework captures their perspectives and to identify key areas for improving the optimization methodology. The authors who developed the physical models also joined the second set of interviews and discussed the models and simulations directly with the stakeholders to obtain their feedback.

Initial findings from the interviews have already informed the development of the optimization framework and will continue to do so as the modeling and optimization are performed. First, they are informing which types of critical infrastructure and infrastructure interdependencies are included in the modeling. Second, familiarity with storm surge models varies widely among the stakeholders interviewed, so some are much more fluent in interpreting the model simulations than others. We have adjusted how the model is presented with this in mind. For example, to make the simulations more legible to this audience, we have noted the importance of increasing the geographic resolution of the model so that stakeholders can more easily identify key features of the New York area. One such feature is the Rockaway Peninsula, a key part of the New York landscape that must be resolved for stakeholders to orient themselves in the simulations.

3.5.2 Community meetings

Another component of the method is participation in community meetings in the final phases of the project. The meetings are an opportunity to share results of the optimization framework, learn where it may need to be adjusted to conform to community values, and explore social acceptability. For example, the optimization framework may point to coastal solutions that could hinder crucial subsistence, recreational, or other cultural activities. In that case, the research team will learn where flexibility and options need to be built into the framework. The communities in which these meetings will be held will be identified using the initial results of the optimization framework itself: those where the risks of sea level rise are greatest and existing protective/adaptation measures are inadequate to address them. Conversations during the community meetings will be carefully guided to manage expectations based on the optimization framework results and to empower local community members to make adaptive decisions that meet economic, social, and cultural priorities.

3.6 Optimization component

The optimization component of the iterative scheme shown in Fig. 3 involves selecting the new protective strategy to be evaluated in the next iteration, as described in Sect. 3.4. This is a significant challenge, due in part to the scale and scope of the underlying problem but primarily to deep nonlinearities in the model and to uncertain, noisy data. A large number of iterations is expected to be necessary to identify the optimal protective strategy. In the beginning, and for the majority of iterations, the computationally efficient GISSR model will be used. The GeoClaw model will be used at the end to provide high accuracy, at a higher computational cost.
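The text specifies only that GISSR is used for most iterations and GeoClaw at the end. One common way to combine a fast and an accurate model is to rank all candidates with the cheap model and re-evaluate only a short list with the expensive one. This is shown here as an illustrative substitute, not the authors' scheme. `cheap_cost`, `expensive_cost`, and `top_k` are hypothetical names.

```python
from typing import Callable, Sequence, TypeVar

S = TypeVar("S")


def two_fidelity_select(candidates: Sequence[S],
                        cheap_cost: Callable[[S], float],
                        expensive_cost: Callable[[S], float],
                        top_k: int = 3) -> S:
    """Rank all candidates with the cheap model, re-evaluate the best `top_k`
    with the expensive model, and return the best under the expensive model."""
    shortlist = sorted(candidates, key=cheap_cost)[:top_k]
    return min(shortlist, key=expensive_cost)
```

The expensive model is called only `top_k` times, which is the point of the design. The trade-off is that a candidate ranked poorly by the cheap model is never checked with the accurate one.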

As discussed above, the optimization scheme includes a constraint: an upper limit on the budget available to implement the optimal protective strategy, as indicated in Eq. (6). The optimal protective strategy will therefore differ under different budgetary constraints.
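The budget constraint can be illustrated with a small selection function. It filters strategies by implementation cost and by Eq. (4), then takes the lowest \(L_{co} + L_{ps}\). The `Strategy` record and the toy numbers in the usage example are hypothetical; they only show how the optimum shifts as the budget changes.

```python
from typing import NamedTuple, Optional, Sequence


class Strategy(NamedTuple):
    name: str
    l_co: float  # implementation (construction) cost
    l_ps: float  # statistical overall losses with the strategy in place


def best_within_budget(strategies: Sequence[Strategy], budget: float,
                       l_no: float) -> Optional[Strategy]:
    """Lowest L_co + L_ps among strategies with L_co <= budget and L_co + L_ps < L_no."""
    feasible = [s for s in strategies
                if s.l_co <= budget and s.l_co + s.l_ps < l_no]
    if not feasible:
        return None  # nothing affordable beats doing nothing
    return min(feasible, key=lambda s: s.l_co + s.l_ps)
```

Take a toy levee (cost 3, residual losses 4), a toy seawall (cost 6, residual losses 0.5), and \(L_{no} = 10\). The selected strategy then depends on the budget:

- A budget of 5 selects the levee.
- A budget of 10 selects the seawall.
- A budget of 2 leaves no acceptable strategy.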

4 Example: fast damage assessment with GISSR model

The authors have developed a GISSR-based flood risk assessment system. It determines the total loss to the infrastructure as a function of flood height, which is assumed to be constant along the coastline. This section provides an example damage assessment scenario that considers losses only to above-ground infrastructure. A full description of the underground transportation infrastructure exists and will be used later in this project.

4.1 3.44 m flood height scenario

The Lower Manhattan area below 34th Street has been selected for this demonstration because the region experienced massive damage during Hurricane Sandy due to inundation and power outages. Eventually, all of New York City will be considered in this project. The physical and economic losses are computed from the damage functions [Eqs. (1) to (3)] and complete building information for Lower Manhattan. The computation assumes a 3.44 m flood height (relative to Mean Sea Level) over the entire targeted region. For demonstration purposes, no protective measures or strategies are considered. The targeted area is shown in Fig. 9. The height of 3.44 m is the peak flood height at the Battery, NY, during Hurricane Sandy.

Fig. 9 Target area: Lower Manhattan below 34th Street, where buildings’ critical elevations are lower than in other parts of the city and extensive power outages occurred during Hurricane Sandy. Subway stations are marked and subway lines are colored following the MTA standard (they are not considered in this example).

Figure 10 shows the area inundated by the 3.44 m flood; many buildings lie within the flooded area.
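When the flood height is constant over the area, the simplest way to find affected buildings is an elevation threshold (a "bathtub" approximation). The sketch below is only that. It is not the GISSR algorithm, and it ignores hydraulic connectivity and flow dynamics. The building IDs and elevations (relative to Mean Sea Level) are hypothetical inputs.

```python
from typing import Dict


def flooded_buildings(ground_elev_m: Dict[str, float],
                      flood_height_m: float) -> Dict[str, float]:
    """Flood depth (m) at each building whose elevation lies below the flood height.

    Simple 'bathtub' threshold: ignores hydraulic connectivity, barriers, and
    flow dynamics.
    """
    return {bid: flood_height_m - z
            for bid, z in ground_elev_m.items() if z < flood_height_m}
```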

4.2 Total damage assessment

There are 14,443 buildings in the targeted area shown in Fig. 9. Of these, 1014 would be flooded to some degree and would consequently experience some level of damage from the 3.44 m flood. Total losses and corresponding damage percentages for each building are provided in Figs. 11 and 12. Applying the damage function in Eq. (1), the total physical damage loss to these buildings would be $678 million. The corresponding total inventory loss for commercial and industrial facilities is $4.3 million [Eq. (2)], and the total income loss is $328 million [Eq. (3)]. The total (overall) loss of $1.0 billion is a reasonable estimate: only buildings in Lower Manhattan were considered here, whereas Hurricane Sandy caused a total loss of $19 billion across the entire city. The highest damage cost for a single structure in this region is $137 million. This structure is a utility facility in the Midtown East area, colored red in Fig. 11, and it sustained heavy damage during Sandy. Three hospital buildings in the Midtown East area, also colored red in Fig. 11, have an average damage cost of $71 million. This gives a total damage cost of $213 million for the entire hospital complex. These hospital facilities sustained severe damage during Sandy.
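As a quick arithmetic check of the reported figures (in millions of dollars), the three loss components add up to the stated total. The hospital complex total is three times the per-building average. The two ratios on the second line are derived here and not stated in the text: the share of flooded buildings, and this estimate relative to the citywide Sandy loss.

\[
\underbrace{678}_{\text{physical}} + \underbrace{4.3}_{\text{inventory}} + \underbrace{328}_{\text{income}} = 1010.3 \approx 1.0 \times 10^{3},
\qquad 3 \times 71 = 213
\]

\[
\frac{1014}{14{,}443} \approx 7.0\%,
\qquad \frac{1.0 \times 10^{3}}{19 \times 10^{3}} \approx 5.3\%
\]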

This example assumes a constant 3.44 m flood height over the entire targeted area. The results would be more accurate with the flood height at each building location taken from the GeoClaw model. Following Hazus (2018), structures whose damage percentage exceeds 50% are classified as collapsed. No collapsed buildings were found in the simulation (Fig. 12), which is consistent with what actually occurred when Sandy made landfall.

5 Conclusions

This paper presented the basic methodological framework for determining the “optimal” protective strategy over a prescribed multi-year period for coastal infrastructure subjected to storm surge-induced flooding and sea level rise. Optimality, as defined in this paper, has certain limitations due to the uncertainties associated with future storm events and sea level rise. The resulting optimal protective strategy is constrained by budgetary considerations. The methodology combines multiple computational models, optimization techniques, and stakeholders’ feedback and empirical knowledge.

Availability of data and material

1. Department of Information Technology & Telecommunications (2018). “Building footprints.” Online; Accessed December 2020, https://www.data.cityofnewyork.us/Housing-Development/Building-Footprints/nqwf-w8eh
2. Department of Environmental Protection & the Department of Information Technology and Telecommunications (2018). “1 foot digital elevation model (DEM).” Online; Accessed December 2020, https://www.data.cityofnewyork.us/City-Government/1-foot-Digital-Elevation-Model-DEM-/dpc8-z3jc
3. Department of Homeland Security, Federal Emergency Management Agency (2018). “Hazus.” Online; Accessed December 2020, https://www.fema.gov/hazus
4. National Oceanic and Atmospheric Administration (2012). “Water level - observed water level at 8518750, the Battery, NY from 2012/10/29 00:00 GMT to 2012/10/30 23:59 GMT.” Online; Accessed December 2020, https://www.tidesandcurrents.noaa.gov/waterlevels.html?id=8518750&units=metric&bdate=20121029&edate=20121030&timezone=GMT&datum=MSL&interval=6&action=
5. Department of City Planning (DCP) (2018). “Borough Boundaries.” Online; Accessed December 2020, https://www.data.cityofnewyork.us/City-Government/Borough-Boundaries/qefp-jxjk
6. New York City Department of City Planning (2018). “MapPLUTO.” Online; Accessed December 2020, https://www1.nyc.gov/site/planning/data-maps/open-data/dwn-pluto-mappluto.page
7. Mayor’s Office of Sustainability (2017). “Sea level rise maps (2020s 100-year floodplain).” Online; Accessed January 2021, https://www.data.cityofnewyork.us/Environment/Sea-Level-Rise-Maps-2020s-100-year-Floodplain-/ezfn-5dsb/data
8. Mayor’s Office of Sustainability (2017). “Sea level rise maps (2050s 100-year floodplain).” Online; Accessed January 2021, https://www.data.cityofnewyork.us/Environment/Sea-Level-Rise-Maps-2050s-100-year-Floodplain-/hbw8-2bah/data

References

Adger WN, Arnell NW, Tompkins EL (2005) Successful adaptation to climate change across scales. Glob Environ Change 15(2):77–86

Burawoy M (1998) The extended case method. Sociol Theory 16(1):4–33

de Bruin WB, Bostrom A (2013) Assessing what to address in science communication. Proc Natl Acad Sci 110(Supplement 3):14062–14068. https://doi.org/10.1073/pnas.1212729110

Gornitz V, Oppenheimer M, Kopp R, Orton P, Buchanan M, Lin N, Horton R, Bader D (2019) New York City Panel on Climate Change 2019 report chapter 3: sea level rise. Ann N Y Acad Sci 1439(1):71–94

Hancilar U, Çaktı E, Erdik M, Franco GE, Deodatis G (2014) Earthquake vulnerability of school buildings: probabilistic structural fragility analyses. Soil Dyn Earthq Eng 67:169–178

Hazus (2018) Department of Homeland Security, Federal Emergency Management Agency. https://www.fema.gov/hazus, online. Accessed July 2018

Horton R, Little C, Gornitz V, Bader D, Oppenheimer M (2015) New York City Panel on Climate Change 2015 report chapter 2: sea level rise and coastal storms. Ann N Y Acad Sci 1336(1):36–44

Jacob K, Deodatis G, Atlas J, Whitcomb M, Lopeman M, Markogiannaki O, Kennett Z, Morla A, Leichenko R, Vancura P (2011) Responding to climate change in New York State: the ClimAID integrated assessment for effective climate change adaptation in New York State: transportation. New York State Energy Research and Development Authority

Jones N, Ross H, Lynam T, Perez P, Leitch A (2011) Mental models: an interdisciplinary synthesis of theory and methods. Ecol Soc 16(1):art46

Lazrus H, Morss RE, Demuth JL, Lazo JK, Bostrom A (2016) Know what to do if you encounter a flash flood: mental models analysis for improving flash flood risk communication and public decision making. Risk Anal 36(2):411–427

LeVeque R (2002) Finite volume methods for hyperbolic problems. Cambridge Texts in Applied Mathematics. Cambridge University Press

Lin N, Emanuel K, Oppenheimer M, Vanmarcke E (2012) Physically based assessment of hurricane surge threat under climate change. Nat Clim Change 2(6):462–467

Lopeman M, Deodatis G, Franco G (2015) Extreme storm surge hazard estimation in lower Manhattan. Nat Hazards 78(1):355–391

Mandli KT, Dawson CN (2014) Adaptive mesh refinement for storm surge. Ocean Model 75:36–50

Mandli KT, Ahmadia AJ, Berger M, Calhoun D, George DL, Hadjimichael Y, Ketcheson DI, Lemoine GI, LeVeque RJ (2016) Clawpack: building an open source ecosystem for solving hyperbolic PDEs. PeerJ Comput Sci 2(3):e68

Mayor’s Office of Sustainability (2017) Sea level rise maps (2020s 100-year floodplain). https://www.data.cityofnewyork.us/Environment/Sea-Level-Rise-Maps-2020s-100-year-Floodplain-/ezfn-5dsb/data, online. Accessed July 2017

Mayor’s Office of Sustainability (2017) Sea level rise maps (2050s 100-year floodplain). https://www.data.cityofnewyork.us/Environment/Sea-Level-Rise-Maps-2050s-100-year-Floodplain-/hbw8-2bah/data, online. Accessed July 2017

Miura Y, Mandli KT, Deodatis G (2021) High-speed GIS based simulation of storm surge induced flooding accounting for sea level rise. Nat Hazards Rev Forthcom. https://doi.org/10.1061/(ASCE)NH.1527-6996.0000465

Morgan MG, Fischhoff B, Bostrom A, Atman CJ (2002) Risk communication: a mental models approach. Cambridge University Press

Morss RE, Demuth JL, Bostrom A, Lazo JK, Lazrus H (2015) Flash flood risks and warning decisions: a mental models study of forecasters, public officials, and media broadcasters in Boulder, Colorado. Risk Anal 35(11):2009–2028

NCEI (2018) U.S. billion-dollar weather and climate disasters

Parris A, Bromirski P, Burkett V, Cayan D, Culver M, Hall J, Horton R, Knuuti K, Moss R (2012) Global sea level rise scenarios for the United States National Climate Assessment. NOAA, Technical report

Stocker T, Qin D, Plattner GK, Tignor M, Allen SK, Boschung J, Nauels A, Xia Y, Bex V, Midgley PM (2013) Climate change 2013: the physical science basis. Working Group I contribution to the fifth assessment report of the IPCC. Technical report

Acknowledgements

This project is supported by the National Science Foundation under Grant Nos. DMS-1720288 and OAC-1735609. The National Center for Atmospheric Research is sponsored by the National Science Foundation.



Ethics declarations

Conflict of interest

The authors declare no conflicts of interest or competing interests.

Code availability

Miura, Y. (2020). “High-speed GIS Flood Simulation (GISSR). GitHub repository” Online; Accessed December 2020, https://www.github.com/ym2540/GIS_FloodSimulation.git DOI: 10.5281/zenodo.3971004.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Miura, Y., Qureshi, H., Ryoo, C. et al. A methodological framework for determining an optimal coastal protection strategy against storm surges and sea level rise. Nat Hazards 107, 1821–1843 (2021). https://doi.org/10.1007/s11069-021-04661-5


DOI: https://doi.org/10.1007/s11069-021-04661-5