Abstract

Flood risk assessment is generally studied using flood simulation models; however, flood risk managers often simplify the computational process; this is called a “simplification strategy”. This study investigates the appropriateness of the “simplification strategy” when used as a flood risk assessment tool for areas prone to flash flooding. The 2004 Boscastle, UK, flash flood was selected as a case study. Three different model structures were considered in this study, including: (1) a shock-capturing model, (2) a regular ADI-type flood model and (3) a diffusion wave model, i.e. a zero-inertia approach. The key findings from this paper strongly suggest that applying the “simplification strategy” is only appropriate for flood simulations with a mild slope and over relatively smooth terrains, whereas in areas susceptible to flash flooding (i.e. steep catchments), following this strategy can lead to significantly erroneous predictions of the main parameters—particularly the peak water levels and the inundation extent. For flood risk assessment of urban areas, where the emergence of flash flooding is possible, it is shown to be necessary to incorporate shock-capturing algorithms in the solution procedure, since these algorithms prevent the formation of spurious oscillations and provide a more realistic simulation of the flood levels.

1 Introduction

Flooding is one of the most frequently occurring natural hazards worldwide. According to Opolot (2013), nearly 99 million people worldwide were affected annually by floods during the period 2000–2008. Climate change will have a key role in intensifying and accelerating the hydrological cycle (Christensen and Christensen 2007; Kundzewicz 2008) and is expected to result in an increase in the frequency and intensity of extreme rainfall events, which in turn is expected to increase the magnitude and frequency of future floods (Allan and Soden 2008; Pall et al. 2011; Rojas et al. 2013). Those affected by future flooding are expected to increase in number as extreme weather events, brought on by climate change, become more frequent. Furthermore, a global increase in the world’s population from the current level of around 7 billion to over 10 billion by 2050, together with increased urbanisation, will further increase the number of citizens affected globally by flooding.

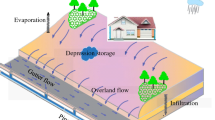

Although rainfall is usually considered to be the most important factor affecting flood forecasting, for extreme flash flood events a number of other parameters are also important, including: (1) soil influences, such as soil moisture, permeability and profile, (2) basin characteristics, such as basin size, shape, slope, surface roughness, stream density and (3) land use, cover and changes; with these other parameters sometimes being of greater significance than rainfall itself (University Corporation for Atmospheric Research 2010). Flash floods are characterised by specific combinations of meteorological conditions (i.e. short, local high-intensity rainfall events), combined with topographical and hydrological characteristics, leading to rapid run-off production processes (Marchi et al. 2010). The nature of flash floods makes them particularly difficult to predict; moreover, their sudden occurrence provides very limited opportunity for warnings to be prepared and issued effectively (Xia et al. 2011a; Creutin et al. 2013). This, coupled with the higher discharges and increased flow velocities associated with flash floods, poses a higher risk of increased human casualties, including fatalities. For example, during the period 1950–2006, 40 % of the flood-related casualties occurring in Europe were due to flash flooding (Barredo 2007).

Even though it is not possible to prevent the occurrence of the natural conditions, which lead to flash floods, flood damage and human casualties can be minimised by adopting suitable structural and non-structural measures. Nowadays, potential flood damage is generally estimated using flood simulation models. Even though developments in the field of computer science have enabled the generation and application of high-resolution flood prediction models, difficulties still remain in recreating actual extreme flood events, which in turn has a direct impact on model predictions of flood elevations, inundation extent and hazard risk. This difference between the computer-predicted flood characteristics and the actual properties is often a consequence of what is called a “simplification strategy”. In an effort to minimise simulation time and optimise limited resources, it is common practice among flood risk assessment practitioners to simplify the computational process by leaving out governing terms, such as the advective acceleration or not including shock-capturing features for trans- or supercritical flows. For example, in modelling trans- or supercritical flows, numerical oscillations generally occur in conventional 1D or 2D models and the advection terms are often removed or an artificially high bed resistance or eddy diffusion term is included to dampen out the oscillations (de Almeida et al. 2012); for such conditions, it is not then possible to evaluate how much of the increased dissipation is being used in physically dissipating the energy of the flow and how much is being used in numerically damping out the oscillations. Such flood simulation results in potential extreme flood events can lead to time-consuming analysis or an increase in the complexity of the problem that practitioners are dealing with (Leskens et al. 2014). 
This “simplification strategy” can lead to misleading and incorrect predictions of flood elevations, inundation extent and hazard risk, particularly in urban settings, where the impact of a flash flood is greater than in rural settings, where the population is much smaller and hence the built infrastructure is less extensive.

Flash floods usually occur in short and steep basins. The steeper the slope, the less time the peak flow has to be dissipated as the hydrograph propagates down the river basin. This maintenance of the peak hydrograph (both in terms of elevations and discharges) frequently leads to near-critical (trans-critical) or supercritical flows. Most numerical models used for flood simulation by flood control management practitioners are based either on the ADI (alternating direction implicit) algorithm or a form of an explicit central difference scheme. Such models are generally very accurate (numerically) in modelling flood events over mild slope, or nearly horizontal, flow conditions. However, in the presence of discontinuities, such as the emergence of a hydraulic jump, or steep hydraulic gradients, as often occur for flash floods or short steep catchments, such models are prone to generate spurious numerical oscillations close to the sharp gradients in the solution (Liang et al. 2007a). This numerical solution procedure, without the inclusion of artificial dissipation, can lead to highly erroneous results (Liang et al. 2006a). Numerical oscillations mostly occur in the region of discontinuities, or high elevation or velocity gradients. While solving the hydrodynamic governing equations, the numerical schemes try to fit the solution with a function. In the presence of discontinuities, this function approximation leads to discontinuous solutions, which in turn lead to oscillations analogous to the Gibbs phenomenon (Lax 2006). A common technique used to reduce these oscillations is to bound the solution while preserving the conservation properties of the flow; this is referred to as “shock-capturing”. Even though there are numerous shock-capturing models that can deal with these discontinuities (Caleffi et al. 2003; Liang et al. 2006a; Kazolea and Delis 2013), the high computational cost required by some of the shock-capturing models persuades practitioners in flood risk assessment to adopt the “simplification strategy”; to save time and resources, they revert to the ADI-type models, which provide incorrect flood level predictions for rapidly changing flows (Liang et al. 2006a).

Flash floods are mostly limited to specific regions (Marchi et al. 2010; Borga et al. 2011). For example, the terrain across much of Wales (UK) is complex, with many short steep catchments across the country being highly prone to flash flooding (Carter 2009; Davies 2010; Hough 2012; BBC 2013a, b; Lowe 2013). The St Asaph flood event, which occurred in November 2012, highlights some of the problems associated with current flood risk assessment tools. St Asaph, located in north Wales, UK, was devastated by flash flooding during this event. The post-flood investigation revealed that the flood defences offered protection against a 1 in 30-year flood in some parts of the city and against a 1 in 75-year flood in others, whereas it was previously believed that the flood defences would protect against a 1 in 100-year flood (Denbighshire County Council 2013). Findings from this report clearly indicate limitations in the current flood risk assessment methodologies adopted in the UK, particularly for river basins with short steep catchments. The use of standard flood risk tools for such river basins does not adequately represent the complex hydrodynamic processes associated with trans- and supercritical flow. Simplifications in the modelling algorithms lead to the emergence of numerical oscillations, which need to be smoothed out. In doing so, the model has a tendency to underpredict peak flood discharges, elevations and hence flood inundation extent.

This paper investigates the appropriateness of the “simplification strategy” when used as a flood risk assessment tool for areas susceptible to flash flooding and extreme flood events. The results strongly suggest that the application of the “simplification strategy” leads to incorrect flood level predictions in the flood simulation analysis of such flash flood events. The findings in this paper contribute to ongoing research in the field of applying environmental modelling in practical flood risk assessment and are also relevant to the entire engineering community, planning authorities and the wider public. To study these effects in more detail, emphasis has been put on modelling an extreme flow event that occurred in Boscastle in 2004; a well-documented natural hazard in the UK, which occurred in a short and steep catchment and led to devastating damage to infrastructure and the small-town community.

2 Study area

The 2004 Boscastle flash flood was selected as a case study in this paper. This flood event is one of the best recorded flash floods in the UK, and therefore there is a relatively large base of reasonable data available for verifying numerical model-predicted results.

On 16 August 2004, the coastal village of Boscastle, in north Cornwall, UK (Fig. 1), was devastated by a flash flood. The short, steep valleys of north Cornwall, and especially Boscastle (see Fig. 1), are particularly vulnerable to localised high rainfall events. They collect water efficiently from the surrounding moors and channel it rapidly into the main stream, where the flow propagates to the nearby sea in a matter of a few hours. The extreme flood event in Boscastle was caused by the combination of heavy rain, saturation of the soil due to the previous 2 weeks receiving well above average rainfall, and the steep nature of the river basin and catchment. Over 200 mm of rainfall fell in a period of approximately 5 h into a small catchment (area of 20 km²), which resulted in a 1 in 400-year flood event. The total peak discharge of the flood was estimated to be 180 m³/s (Roca and Davison 2010).

Topographical data for the study domain were collected after the flood using the LiDAR (Light Detection and Ranging) mapping technique (Killinger 2014). The study domain considered herein is 665 m long and 235 m wide, and was modelled using a high-resolution grid divided into 156,275 square cells, with each cell having an area of 1 m². The extent of the computational domain around the village, as used in this case study, is shown in Fig. 2.

The River Valency enters the study domain from the east (see Fig. 2). The eastern boundary of the domain was set as an inflow boundary for the River Valency, i.e. the peak discharge value was specified as an upstream boundary. The downstream boundary was set downstream of Boscastle, where the River Valency is governed by the tides in the coastal region. According to HRW (2005), the actual tide level at the peak of the flood was approximately 0.8 m AOD, whereas the highest tide level of around 3.5 m AOD was measured approximately 1 h after the peak of the flood had passed through the domain. Neither the actual tide level at the peak of the flood nor the high tide level had any effect on the actual flood levels in the centre of the village (HRW 2005); therefore, there was no need to include a time-varying tide in the model. Thus, a prescribed water level, which was held fixed during the numerical computation, was specified as the downstream boundary.

Roughness coefficients for the flooded area were estimated in the post-flood survey. Recommended values of Manning’s coefficients from the post-flood survey are presented in Table 1.

The village of Boscastle is situated in a narrow, steep valley (see Fig. 1). Therefore, the model domain was defined to cover just the car park, the River Valency channel (through the centre of the village) and downstream to the harbour, located just downstream of the B3263 bridge (see Fig. 2). The surrounding terrain was too high to be affected by flooding. Since the computational domain was relatively small and had a short river reach, friction variation was predicted not to have any significant effect on the flood level predictions; hence, a Manning’s coefficient of 0.040 was applied across the entire domain (i.e. an average value was calculated from Table 1). According to eyewitness accounts, both bridges were blocked during the early stages of the flood event (HRW 2005). To account for this, both bridges were modelled as being completely blocked, i.e. no flood flow was permitted under either bridge. In reality, there would still have been some flow under the bridges, but the amount of flow propagating through them would have been relatively small.

3 Numerical models

The flood simulations presented in this paper were conducted using the DIVAST and DIVAST-TVD numerical models. The DIVAST model is a widely used open-source ADI-type flood model, developed by Falconer (1986), while the DIVAST-TVD model is a shock-capturing flood model introduced by Liang et al. (2006a).

3.1 DIVAST model

Depth Integrated Velocities and Solute Transport (DIVAST) is a two-dimensional, depth-integrated, time-variant model, which has primarily been developed for predicting the hydrodynamics in estuaries and coastal waters. It is suitable for water bodies that are dominated by near horizontal, unsteady flows and do not display significant vertical stratification. The model simulates two-dimensional currents, water surface elevations and various water quality parameters within the modelling domain as functions of time, taking into account the hydrodynamic characteristics governed by the bed topography and boundary conditions.

DIVAST has been developed in order to simulate the hydrodynamic, solute and sediment transport processes in rivers, estuaries and coastal waters. The hydrodynamic module solves the Reynolds-averaged, depth-integrated, Navier–Stokes equations. The governing equations for the hydrodynamic processes include the continuity equation:

and two momentum equations, given for the x and y directions as:

where η is the water surface elevation above datum, q_m is the source discharge per unit horizontal area (m³/s/m²), p and q are the discharges per unit width in the x and y directions, U and V are the depth-averaged velocity components in the x and y directions, H is the total water depth, h is the water depth between bed level and datum, β is the momentum correction factor for a non-uniform vertical velocity profile, f is the Coriolis parameter, ρ_a is the air density, ρ is the density of the fluid, W_x and W_y are the wind velocities in the x and y directions, C is the Chezy roughness coefficient, C_w is the air/fluid resistance coefficient, and ε is the depth-averaged turbulent eddy viscosity.
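A form of Eqs. (1)–(3) that is commonly quoted for depth-integrated models of this type, and that uses the symbols defined above, is sketched below. It should be read as a reconstruction under stated assumptions rather than as the authors' exact formulation: the gravitational acceleration g, the relation H = h + η, the equation numbering and the precise arrangement of the turbulence terms are all assumptions.

```latex
% Assumed continuity equation, Eq. (1)
\frac{\partial \eta}{\partial t} + \frac{\partial p}{\partial x} + \frac{\partial q}{\partial y} = q_m

% Assumed x-direction momentum equation, Eq. (2)
\frac{\partial p}{\partial t} + \frac{\partial (\beta p U)}{\partial x} + \frac{\partial (\beta p V)}{\partial y}
  = f q - g H \frac{\partial \eta}{\partial x}
  + \frac{\rho_a}{\rho} C_w W_x \sqrt{W_x^2 + W_y^2}
  - \frac{g\, p \sqrt{p^2 + q^2}}{H^2 C^2}
  + \varepsilon \left[ 2 \frac{\partial^2 p}{\partial x^2} + \frac{\partial^2 p}{\partial y^2}
  + \frac{\partial^2 q}{\partial x\, \partial y} \right]

% Assumed y-direction momentum equation, Eq. (3)
\frac{\partial q}{\partial t} + \frac{\partial (\beta q U)}{\partial x} + \frac{\partial (\beta q V)}{\partial y}
  = -f p - g H \frac{\partial \eta}{\partial y}
  + \frac{\rho_a}{\rho} C_w W_y \sqrt{W_x^2 + W_y^2}
  - \frac{g\, q \sqrt{p^2 + q^2}}{H^2 C^2}
  + \varepsilon \left[ \frac{\partial^2 q}{\partial x^2} + 2 \frac{\partial^2 q}{\partial y^2}
  + \frac{\partial^2 p}{\partial x\, \partial y} \right]
```

In this form, the second and third terms on the left-hand side of each momentum equation are the advective accelerations, i.e. the terms that a zero-inertia (diffusion wave) approach leaves out.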

In the DIVAST model, an alternating direction implicit (ADI) method has been adopted for solving the hydrodynamic equations. Adopting the finite difference method (FDM) and a space-staggered grid, the governing equations are split into two sets of one-dimensional equations, which are then solved at two half time steps. The system of equations to be solved in each half time step is tri-diagonal and is solved efficiently using a simplified form of Gaussian elimination, i.e. the Thomas algorithm. The x-direction system of equations is solved in the first half time step, while the y-direction system of equations is solved in the second half time step. This means that the solution of a full two-dimensional matrix is not required, and a one-dimensional set of equations is solved implicitly for each half time step. The numerical scheme for the hydrodynamics is basically second-order accurate, both in time and space, with no stability constraints due to the time-centred implicit character of the ADI technique. However, it has been recognised that the time step needs to be restricted so that a reasonable computational accuracy can be achieved. Therefore, a Courant number restriction for accuracy of the hydrodynamic module has been implemented in the DIVAST model.
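The tri-diagonal solve performed in each half time step can be illustrated with a generic Thomas algorithm. The following is a minimal sketch, not the DIVAST source code: the function name `thomas_solve`, the argument layout and the example system are assumptions chosen for illustration, and no pivoting is performed, so the matrix is assumed to be diagonally dominant.

```python
def thomas_solve(lower, diag, upper, rhs):
    """Solve a tridiagonal system by forward elimination and back substitution.

    lower[i] multiplies x[i-1] (lower[0] is unused),
    diag[i]  multiplies x[i],
    upper[i] multiplies x[i+1] (upper[-1] is unused).
    Hypothetical helper for illustration; assumes no zero pivots occur.
    """
    n = len(diag)
    c_prime = [0.0] * n
    d_prime = [0.0] * n

    # Forward sweep: eliminate the sub-diagonal.
    c_prime[0] = upper[0] / diag[0]
    d_prime[0] = rhs[0] / diag[0]
    for i in range(1, n):
        denom = diag[i] - lower[i] * c_prime[i - 1]
        c_prime[i] = upper[i] / denom if i < n - 1 else 0.0
        d_prime[i] = (rhs[i] - lower[i] * d_prime[i - 1]) / denom

    # Back substitution.
    x = [0.0] * n
    x[-1] = d_prime[-1]
    for i in range(n - 2, -1, -1):
        x[i] = d_prime[i] - c_prime[i] * x[i + 1]
    return x


# Example: a diagonally dominant system whose exact solution is [1, 2, 3, 4].
lower = [0.0, -1.0, -1.0, -1.0]
diag = [4.0, 4.0, 4.0, 4.0]
upper = [-1.0, -1.0, -1.0, 0.0]
rhs = [2.0, 4.0, 6.0, 13.0]
solution = thomas_solve(lower, diag, upper, rhs)
```

The cost grows linearly with the number of unknowns along a grid line, which is one reason ADI-type models run quickly compared with schemes that require more work per cell.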

The DIVAST model has been acquired by consulting companies and government organisations for application to over 100 hydro-environmental impact assessment studies worldwide. The DIVAST model has also been extensively calibrated and verified against laboratory and field data, with details of model refinements and verification tests being well documented in the literature (Falconer and Lin 2003; Bockelmann et al. 2004; Falconer et al. 2005; Liang et al. 2006b; Hunter et al. 2008; Gao et al. 2011; Ahmadian et al. 2012; Ahmadian and Falconer 2012; Sparks et al. 2013; Wang and Lin 2013).

3.2 DIVAST-TVD model

The DIVAST-TVD model is a variation of the DIVAST model, which includes a shock-capturing algorithm for maintaining sharp gradients—a feature particularly relevant for modelling flood propagation through short steep catchments. The DIVAST-TVD model combines a symmetric total variation diminishing (TVD) term with the standard MacCormack scheme. The MacCormack scheme is a numerical method ideally suited to solving the time-dependent compressible Navier–Stokes equations (MacCormack 1969), and is a variation of the Lax–Wendroff scheme (Lax and Wendroff 1964). The MacCormack scheme is a predictor–corrector scheme, defined as (Mingham et al. 2001):

Predictor step:

Corrector step:

where u is “provisional” value, n is time level, Δt is time step, and Δx is spatial step.
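For a one-dimensional conservation law of the form ∂u/∂t + ∂f/∂x = 0, the two steps are usually written as sketched below. This is the textbook form of the scheme, given under assumptions: the flux symbol f, the grid index i, the overbar notation for the provisional value and the equation numbers are not taken from the text.

```latex
% Predictor step (forward difference), assumed Eq. (4)
\bar{u}_i = u_i^{n} - \frac{\Delta t}{\Delta x} \left( f_{i+1}^{n} - f_i^{n} \right)

% Corrector step (backward difference), assumed Eq. (5)
u_i^{n+1} = \frac{1}{2} \left( u_i^{n} + \bar{u}_i \right)
          - \frac{\Delta t}{2 \Delta x} \left( \bar{f}_i - \bar{f}_{i-1} \right)
```

Here \( \bar{f}_i \) denotes the flux evaluated from the provisional value \( \bar{u}_i \).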

The first step (the predictor step) calculates a rough approximation of the desired variable(s), whereas the second step (the corrector step) refines the initial approximation. Equation (4) shows that forward difference is employed in the predictor step, while Eq. (5) shows that backward difference is employed in the corrector step. There is also an alternate version of the MacCormack scheme, where backward difference is employed in the predictor step and forward difference is employed in the corrector step (Pletcher et al. 2012). An implicit MacCormack scheme can also be derived from the explicit MacCormack scheme (Fürst and Furmánek 2011).

The standard MacCormack scheme has second-order accuracy (MacCormack 1969). According to Godunov’s theorem (Godunov 1959), all linear schemes with an order of accuracy greater than one will generate spurious oscillations in the vicinity of large gradients. The most common approach for resolving these discontinuities is the shock-capturing method. There are two major groups of shock-capturing schemes in use in computational fluid dynamics. One group of schemes is based on the Godunov method, which solves the Riemann problems at the interfaces of grid cells (Kerger et al. 2011; LeFloch and Thanh 2011; Urbano and Nasuti 2013), while the other group is based on an arithmetical combination method of the first- and second-order upwind schemes (Lin et al. 2003; Tseng 2004; Ouyang et al. 2013). The DIVAST-TVD model belongs to the second group.

To prevent non-physical oscillations, a symmetric five-point TVD term is added to the corrector step of the MacCormack scheme. The TVD term implemented in the DIVAST-TVD model was first presented by Davis (1984), and deployed in solving shallow water equations by Louaked and Hanich (1998) and Mingham et al. (2001). Davis (1984) proposed a total variation diminishing scheme, where a symmetric five-point TVD term is appended to the Lax–Wendroff predictor–corrector scheme. The symmetric five-point TVD term is used to adjust the introduced numerical diffusion: the second-order accurate MacCormack scheme is deployed where the solution is smooth, whereas the first-order accurate upwind scheme is deployed near steep gradients to avoid spurious numerical oscillations (Liang et al. 2006a). The accuracy analyses of this symmetric five-point TVD term can be found in Davis (1984) and Mingham et al. (2001).
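The effect of the TVD term can be illustrated on a much simpler problem than the shallow water equations. The sketch below advects a square pulse with the scalar equation ∂u/∂t + a ∂u/∂x = 0 (a > 0) on a periodic grid, using the MacCormack scheme with and without a symmetric Davis-type limiter term. It is an illustration under assumptions, not the DIVAST-TVD implementation: the limiter form G(r) = 0.5 C (1 − φ(r)) with φ(r) = max(0, min(2r, 1)) is the form usually attributed to Davis (1984), and all function names, the grid size and the Courant number are hypothetical.

```python
def limiter_g(r, courant):
    """Assumed Davis-type limiter: G(r) = 0.5 * C * (1 - phi(r))."""
    phi = max(0.0, min(2.0 * r, 1.0))
    c = courant * (1.0 - courant) if courant <= 0.5 else 0.25
    return 0.5 * c * (1.0 - phi)


def maccormack_step(u, courant, tvd=True):
    """One periodic time step for linear advection; courant = a*dt/dx, a > 0."""
    n = len(u)
    # Predictor: forward difference.
    up = [u[i] - courant * (u[(i + 1) % n] - u[i]) for i in range(n)]
    # Corrector: backward difference on the provisional values.
    new = [0.5 * (u[i] + up[i]) - 0.5 * courant * (up[i] - up[i - 1])
           for i in range(n)]
    if not tvd:
        return new

    # du[i] is the jump u[i+1] - u[i] across interface i+1/2 at the old time level.
    du = [u[(i + 1) % n] - u[i] for i in range(n)]

    def ratio(num, den):
        # A zero denominator always multiplies a zero jump, so 0.0 is harmless.
        return num / den if den != 0.0 else 0.0

    r_plus = [ratio(du[i - 1], du[i]) for i in range(n)]
    r_minus = [ratio(du[i], du[i - 1]) for i in range(n)]

    # Symmetric five-point term added to the corrector result.
    for i in range(n):
        right = (limiter_g(r_plus[i], courant)
                 + limiter_g(r_minus[(i + 1) % n], courant)) * du[i]
        left = (limiter_g(r_plus[i - 1], courant)
                + limiter_g(r_minus[i], courant)) * du[i - 1]
        new[i] += right - left
    return new


def total_variation(u):
    """Sum of absolute jumps between neighbouring cells (periodic)."""
    return sum(abs(u[(i + 1) % len(u)] - u[i]) for i in range(len(u)))


def advect(u, courant, steps, tvd=True):
    for _ in range(steps):
        u = maccormack_step(u, courant, tvd)
    return u


# Square pulse: the plain scheme overshoots, the limited scheme stays bounded.
initial = [1.0 if 10 <= i < 25 else 0.0 for i in range(50)]
plain = advect(initial, courant=0.5, steps=40, tvd=False)
limited = advect(initial, courant=0.5, steps=40, tvd=True)
```

Comparing `max(plain)` with `max(limited)`, or the two total variations, shows the overshoot of the unlimited scheme at the edges of the pulse and its absence when the limiter term is active, at the price of some additional smearing of the front.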

The DIVAST-TVD model is a useful tool for analysing storm surges, dam-break flows, flash floods and other flow scenarios that could involve rapid changes in the hydrodynamic conditions. Detailed information about model development, model structure, and extensive and quantitative verifications can be found in the literature (Liang et al. 2006a, c, 2007a, b, 2010).

4 Methodology

The well-documented Boscastle flash flood event of 2004 was simulated using three different flood prediction model structures, including:

1. the shock-capturing flood model (i.e. the TVD simulation case)

2. the regular ADI-type flood model (i.e. the ADI simulation case)

3. the regular ADI-type flood model with no inertia terms (i.e. the NI simulation case)

For the TVD case, the full shock-capturing ability of the DIVAST-TVD model was included. For the ADI case, the flood simulations were undertaken using the DIVAST model, a regular ADI-type flood prediction model. For the NI case, the advection terms were excluded from the DIVAST model, which therefore worked on the principle of the diffusion wave concept (Wang et al. 2011), i.e. similar to many rapid assessment commercial models. The objective of the comparisons was therefore to establish the main differences between these three modelling approaches when simulating flood events where abrupt changes of flow occur, such as for the case of flash flooding or dam-break scenarios. In order to compare the computational ability of these three different modelling approaches, all test cases were run under the same conditions, i.e. the same boundary conditions, the same Manning’s coefficient, the same model time step, etc. The initial assumption was made that only the TVD test case would generate numerically accurate results, whereas the ADI and NI cases were likely to give less numerically accurate flood level and inundation extent predictions. These two cases do not have appropriate mechanisms to absorb the numerical oscillations that occur for rapidly varying flows (Liang et al. 2006a, c; Moussa and Bocquillon 2009), and therefore produce highly erroneous results.

Simulation results were compared to actual post-flood field measurements and observations. Namely, following the extreme Boscastle flood event, a survey of flood marks was undertaken (HRW 2005). The peak water levels during the flood are frequently marked by a collection of floating branches or rubbish that were carried by the flow; these marks are referred to as wrack marks. Although there are some reservations regarding the accuracy of the wrack marks (e.g. wrack marks may not always correspond to the highest water levels), the wrack marks still provide the best indication of actual flood levels (HRW 2005), and were therefore used to validate the performance of the three different flood model structures.

After validation of the performance of the different flood modelling approaches, attempts have been made herein to improve on the simulation results for the ADI case. One of the most obvious ways to improve the final results, and that most widely used by practitioners engaged in flood risk assessment, is to increase the value of the Manning’s coefficient. By increasing the roughness coefficient, the flow velocity generally decreases and correspondingly the water level generally rises. To test this assumption, more than 30 additional simulations of the ADI case were carried out, using different values of the Manning’s coefficient for each simulation. Only the relevant simulations are presented herein.
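The expected trend (higher roughness, lower velocity, greater depth) can be illustrated with Manning's equation for uniform flow in a wide rectangular channel, q = (1/n) h^(5/3) S^(1/2), where q is the discharge per unit width, h the depth and S the bed slope. The sketch below is a back-of-the-envelope illustration only: the unit discharge and slope are hypothetical values, not Boscastle data, and uniform flow is a much cruder assumption than the two-dimensional models used in this study.

```python
import math


def normal_depth_wide_channel(unit_discharge, manning_n, bed_slope):
    """Uniform-flow depth (m) from Manning's equation for a wide channel.

    Rearranged from q = (1/n) * h**(5/3) * sqrt(S), i.e. h = (n*q/sqrt(S))**(3/5).
    """
    return (manning_n * unit_discharge / math.sqrt(bed_slope)) ** 0.6


def mean_velocity(unit_discharge, depth):
    """Depth-averaged velocity (m/s) for a given unit discharge and depth."""
    return unit_discharge / depth


# Hypothetical inputs chosen only to show the trend.
UNIT_DISCHARGE = 5.0   # m^2/s, assumed
BED_SLOPE = 0.02       # dimensionless, assumed

manning_values = [0.04, 0.1, 0.2, 0.3, 0.4, 0.5, 0.6]
depths = [normal_depth_wide_channel(UNIT_DISCHARGE, n, BED_SLOPE)
          for n in manning_values]
velocities = [mean_velocity(UNIT_DISCHARGE, h) for h in depths]

for n, h, v in zip(manning_values, depths, velocities):
    print(f"n = {n:4.2f}: depth = {h:5.2f} m, velocity = {v:5.2f} m/s")
```

Because the depth scales with n to the power 3/5 for a fixed discharge, a large increase in n is needed to raise the water level appreciably, and the velocity falls by the same factor.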

5 Results and discussion

As stated earlier, all three model cases were run for the same conditions (i.e. with the same upstream and downstream boundary conditions, a Manning’s coefficient of 0.040 across the entire study domain and the same model time step). The differences between these three model structures can be readily identified by a direct comparison of the flood level predictions with the observed wrack marks, obtained from a post-flood survey of flood marks (HRW 2005), with the collected data being reproduced in Table 2. Figure 3 shows the position of the surveyed wrack marks, while Fig. 4 shows a comparison between the observed flood levels (i.e. wrack marks) and the predicted flood levels for the three different model configurations, i.e. the TVD, ADI and NI simulation cases.

Fig. 3 Locations of the post-flood surveyed wrack marks (HRW 2005)

Fig. 4 Comparison between predicted flood levels for the TVD, ADI and NI simulation cases and post-flood surveyed wrack marks: (a) comparisons of the marking positions on the western side of the flood pathway (marks 1–11); (b) comparisons on the eastern side of the flood pathway (marks 12–30)

In Fig. 4, it can be seen that results for the TVD simulation case generally fit the post-flood surveyed wrack marks. The results from the TVD test case differ more obviously at marked positions 4, 19, 23 and 27. At marking positions 4 and 27, the TVD simulation case slightly overpredicts the flood levels, but according to the comments made by observers (Table 2) the maximum flood levels at marked positions 4 and 27 were probably higher than those recorded, which suggests that at these two marked positions the results from the TVD simulation case do not differ too significantly from the actual flood levels occurring during the event. At the marked positions 19 and 23, the TVD simulation case overpredicts the flood level by nearly 1 m. However, this big difference in the flood level predictions is most likely due to the too simple modelling approach for this particular section. The marked positions 19 and 23 were selected between buildings where the space was very limited. To get more accurate results for such a small space, a more densely distributed grid would be necessary to represent these areas more accurately, or buildings around the marking positions should be modelled as solid blocks, which would then allow the model to propagate the flood wave more realistically in this area. Overall, the TVD simulation case results appear to reproduce the wrack marks most accurately.

Figure 4 also shows that the NI simulation case generally underpredicted the peak flood levels. The inaccuracy in this approach was as expected, since the diffusion wave concept is too basic for simulating extreme flood events. The inertia terms can be neglected without any major influence on the predicted water levels if the Froude number is well below 0.5, whereas for flows with higher Froude numbers discontinuities and errors start to appear (Katopodes and Schamber 1983). Diffusion wave models can be used to simulate extreme flooding to a certain extent, but the zero-inertia approach is particularly erroneous where the depth and velocity changes are very rapid, as is the case for flash flooding (Moussa and Bocquillon 2009). Overall, the NI simulation case results are numerically inaccurate.
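The Froude number criterion can be checked cell by cell from the depth-averaged velocity and the water depth, using Fr = |U| / sqrt(gH). The sketch below is a simple illustration; the function names, the tolerance used to label near-critical flow and the sample values are assumptions, and the 0.5 threshold is the indicative value quoted above rather than a sharp limit.

```python
import math

GRAVITY = 9.81  # m/s^2


def froude_number(velocity, depth, gravity=GRAVITY):
    """Froude number for depth-averaged velocity (m/s) and water depth (m)."""
    if depth <= 0.0:
        raise ValueError("depth must be positive")
    return abs(velocity) / math.sqrt(gravity * depth)


def flow_regime(froude, tolerance=0.05):
    """Label the regime; the tolerance around Fr = 1 is an arbitrary choice."""
    if abs(froude - 1.0) <= tolerance:
        return "critical"
    return "subcritical" if froude < 1.0 else "supercritical"


def zero_inertia_plausible(froude, threshold=0.5):
    """True when Fr is below the indicative threshold for neglecting inertia."""
    return froude < threshold


# Hypothetical sample cells: (velocity in m/s, depth in m).
samples = [(0.5, 2.0), (2.0, 0.5), (5.0, 1.0)]
report = [(froude_number(v, h),
           flow_regime(froude_number(v, h)),
           zero_inertia_plausible(froude_number(v, h)))
          for v, h in samples]
```

For the three hypothetical cells, only the slow, deep cell falls below the threshold; the fast, shallow cells are of the kind that arise in steep catchments.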

Figure 4 further shows that the ADI simulation case also generally underpredicted the peak flood levels. The inaccuracy in this approach was as expected, since the presence of discontinuities leads to the generation of spurious numerical oscillations for such numerical schemes, with the oscillations being particularly pronounced where sharp gradients exist in the true solution (Liang et al. 2007a). These spurious solutions lead to erroneous results, which can only be overcome by artificially raising the roughness coefficient to an unrealistically high level (Liang et al. 2006a). Hence, it can be concluded that, for such a case study, the ADI simulation results are numerically inaccurate and lead to erroneous flood inundation maps.

The difference between the simulation results becomes more obvious when the water depths from all three test cases are compared. Figures 5a (i.e. NI case), b (i.e. ADI case) and c (i.e. TVD case) show the predicted maximum water depth for each model case (flood depths are expressed in metres). Comparing the predicted water depths with those obtained in other studies, such as HRW (2005), Roca and Davison (2010) and Xia et al. (2011b), shows that the TVD case predicted the flood depths more accurately, whereas the NI and ADI simulation cases predicted them inaccurately. As stated earlier, the ADI-type models inaccurately predicted the main hydrodynamic parameters due to the severe spurious numerical oscillations predicted for this site. These numerical oscillations, caused by abrupt changes in the flow regime, swamped the flood wave prediction and therefore resulted in erroneous flood level predictions. These results again confirm the initial assumption that ADI-type models are inappropriate for simulating flash flood scenarios.

The Nash–Sutcliffe model efficiency coefficient (NSE) was used to measure the predictive capability of the TVD, ADI and NI test cases (i.e. different configurations of the DIVAST-TVD model). The NSE was presented by Nash and Sutcliffe (1970) and is based on the following equation:

\[ \mathrm{NSE} = 1 - \frac{\sum_{i} (O_i - S_i)^2}{\sum_{i} (O_i - \bar{O})^2} \]

where \( O_i \) is the observed data, \( S_i \) is the simulated data, and \( \bar{O} \) is the mean of the observed data. Nash–Sutcliffe efficiencies can range from −∞ to 1. An efficiency of 1 (i.e. NSE = 1) corresponds to a perfect match between the predicted data and the observed data. An efficiency of 0 (NSE = 0) indicates that the model predictions are as accurate as the mean of the observed data. An efficiency of less than zero (NSE < 0) indicates that the mean of the observed data is a better predictor than the model. In short, the closer the model efficiency is to 1, the more accurate the model.
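The efficiency of Nash and Sutcliffe (1970), i.e. one minus the ratio of the sum of squared prediction errors to the sum of squared deviations of the observations from their mean, can be computed in a few lines. The sketch below is illustrative: the function name is an assumption and the sample levels are hypothetical numbers, not the surveyed wrack marks.

```python
def nash_sutcliffe(observed, simulated):
    """Nash-Sutcliffe efficiency for paired observed and simulated values."""
    if len(observed) != len(simulated) or not observed:
        raise ValueError("observed and simulated must be non-empty and equal in length")
    mean_observed = sum(observed) / len(observed)
    squared_error = sum((o - s) ** 2 for o, s in zip(observed, simulated))
    observed_spread = sum((o - mean_observed) ** 2 for o in observed)
    if observed_spread == 0.0:
        raise ValueError("NSE is undefined when all observations are identical")
    return 1.0 - squared_error / observed_spread


# Hypothetical water levels (m), used only to exercise the function.
observed_levels = [10.0, 12.0, 14.0]
simulated_levels = [10.5, 12.0, 13.5]

nse_example = nash_sutcliffe(observed_levels, simulated_levels)
nse_perfect = nash_sutcliffe(observed_levels, observed_levels)
nse_mean_only = nash_sutcliffe(observed_levels, [12.0, 12.0, 12.0])
```

The two limiting cases reproduce the interpretation given above: identical series give an efficiency of 1, and predicting the observed mean everywhere gives an efficiency of 0.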

The NSE for the different configurations of the DIVAST-TVD model was calculated using 30 pairs of observed and simulated values. Calculation of the NSE coefficients revealed that the TVD configuration had an efficiency of 0.9863, the ADI configuration had an efficiency of 0.8530, while the NI configuration had an efficiency of 0.8684. These coefficient values show that the TVD configuration outperformed the other two models in predicting the peak flood levels, with the NSE coefficient being nearly equal to 1 and thereby indicating that the TVD predictions almost matched the observed data perfectly, whereas the ADI and NI simulation cases were less numerically accurate.

Although the results presented herein highlight that NI and ADI-type models are not appropriate for flood simulations of extreme flood events, these models (especially the ADI-type) are still the models of choice for most practitioners working in this field, since they execute the simulations much more quickly than the more numerically accurate shock-capturing models (Liang et al. 2006a). For example, all three simulation cases were completed on a personal computer (processor Intel® Core™ i5-3210M CPU 2.50 GHz, 6 GB RAM). The TVD simulation case was completed in 8 h 52 min, whereas the ADI and the NI simulation cases were completed in less than an hour. The differences in computational times are quite substantial even for a small domain, such as the one used in this study. Therefore, it is reasonable to assume that the differences in the computational times would be even greater for flood simulations of much larger urban areas.

Even though the ADI simulation case was completed much faster than the TVD simulation case, the above results have highlighted that the ADI simulation results can be completely misleading in terms of determining the extent of the flood inundation for extreme flood events. However, during flood risk assessment, oscillatory results are often improved by increasing the Manning’s coefficient, with the additional friction then smoothing out the numerical oscillations. Therefore, additional flood simulations with the ADI-type model (i.e. the ADI simulation case) were performed to test the appropriateness of this methodology for flash flood modelling in steep terrains. In each ADI simulation, the value of the Manning’s coefficient was gradually increased until the modelled water depths were similar to those obtained using the TVD case (the case which simulated the most accurate flood levels).

As stated earlier, more than 30 additional simulations of the ADI configuration were carried out, with the value of the Manning’s coefficient being gradually increased for each simulation run. It was noted that higher, but still reasonable, values of Manning’s coefficient (e.g. values of up to around 0.15, a realistically high value for Manning’s n) did not significantly improve the simulation results. This suggested that for this extreme site, the ADI-type models were not capable of accurately predicting the main parameters for rapidly varying flows, even when higher roughness coefficients were applied. Therefore, unrealistically high values of Manning’s coefficient were then used to bring the ADI-type model simulation results to an acceptable level.
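The roughness sweep described above can be sketched generically. This is a sketch under stated assumptions, not the procedure used with DIVAST-TVD: the matching criterion (root-mean-square difference against the reference depths) and the tolerance are assumptions, since the text does not state how similarity was judged, and `run_model` is a hypothetical callable standing in for one complete model run. The toy model at the end exists only to make the example runnable.

```python
import math
from typing import Callable, Optional, Sequence


def rmse(a: Sequence[float], b: Sequence[float]) -> float:
    """Root-mean-square difference between two equally long depth series."""
    if len(a) != len(b) or len(a) == 0:
        raise ValueError("series must be non-empty and equal in length")
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)) / len(a))


def sweep_manning_n(run_model: Callable[[float], Sequence[float]],
                    reference_depths: Sequence[float],
                    n_values: Sequence[float],
                    tolerance: float) -> Optional[float]:
    """Return the first Manning's n whose depths match the reference, else None.

    run_model is a hypothetical stand-in for a full flood simulation that
    returns maximum water depths at the comparison points for a given n.
    """
    for n in n_values:
        depths = run_model(n)
        if rmse(depths, reference_depths) <= tolerance:
            return n
    return None


# Toy stand-in for a flood model (NOT a hydraulic model): depths simply
# grow with n until they reach the reference values at n = 0.6.
reference = [1.0, 2.0, 3.0]


def toy_model(n: float) -> Sequence[float]:
    return [d * min(n / 0.6, 1.0) for d in reference]


found = sweep_manning_n(toy_model, reference,
                        [0.1, 0.2, 0.3, 0.4, 0.5, 0.6], tolerance=0.05)
```

A sweep of this kind only reports which roughness value reproduces the reference depths; it says nothing about whether that value is physically defensible.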

Figure 6 shows the predicted maximum water depths for the ADI simulation cases with Manning’s coefficients of 0.1, 0.2, 0.3, 0.4, 0.5 and 0.6. The results from these simulations were then compared to the TVD simulation case, which employed a more realistic value of Manning’s coefficient (i.e. 0.040). It can be seen that a higher value of the Manning’s coefficient leads to greater water depths, and that with a value of 0.6 the ADI simulation case produced results similar to those of the TVD simulation case. It should be added here that such high values of Manning’s coefficient also result in unrealistically low flood velocities for an extreme flood event, such as a flash flood. However, as there are no records of actual flood velocities for the 2004 Boscastle flood, it is not possible to validate the model-predicted flood velocities; this will be the focus of future studies.
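The effect on velocity noted above can be illustrated with Manning’s formula for uniform flow. This is only an order-of-magnitude sketch: the symbols \( V \) (mean velocity), \( R \) (hydraulic radius) and \( S \) (friction slope) are introduced here for illustration, and \( R \) and \( S \) are assumed to be held fixed, which is not the case in the simulations, where the depth rises with the roughness.

```latex
\[
  V = \frac{1}{n}\, R^{2/3} S^{1/2}
\]
\[
  \frac{V_{n=0.6}}{V_{n=0.040}} = \frac{0.040}{0.6} \approx 0.067
\]
```

Under these assumptions, raising Manning’s n from 0.040 to 0.6 reduces the velocity to roughly one fifteenth of its original value. The greater depths predicted with higher roughness would offset part of this reduction, so the ratio should be read as indicative only.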

Even though the ADI simulation case with a Manning’s value of 0.6 generally matched the TVD simulation case, this should not be considered as proof of the appropriateness of ADI-type models for simulating extreme flood events. There is no known procedure for determining the value of the Manning’s coefficient that will improve the accuracy of the simulation results to an acceptable level. If flood risk assessment practitioners improve their final flood elevation results by increasing the value of the Manning’s coefficient, that improvement is based on mere speculation, especially given that the maximum values of Manning’s coefficient for open channels are about 0.20 (Chow 1959). When the Manning’s coefficient is set as high as 0.6, or thereabouts, the corresponding flood simulation results have no scientific or engineering basis, as such large values can rarely be justified on technical grounds. Therefore, these results again strongly confirm the initial assumption: that is, that the “simplification strategy”, in any form, is not appropriate for flood risk assessment of urban areas where the emergence of flash flooding is possible.

6 Conclusions

In this paper, we have investigated the appropriateness of the “simplification strategy”, frequently used in flood risk assessment, for areas where the emergence of flash flooding (or extreme flood events) is possible. The 2004 Boscastle flash flood was selected as a case study, and three different model structures were considered, including: (1) a shock-capturing model (i.e. the TVD case), (2) a regular ADI-type flood model (i.e. the ADI case) and (3) a diffusion wave model, i.e. a zero-inertia approach (the NI case). Simulation results from these three model structures were compared to post-flood measurements, based mainly on observed wrack marks. Direct comparisons between the predicted flood levels and observed wrack marks, and direct comparisons with predicted water depth marks, showed that the TVD model predicted the flash flood peak elevations significantly more accurately than the two other models considered herein.

For the ADI simulation case, the results could be improved by increasing the Manning’s coefficient. However, the improvements relied on an artificially high Manning’s coefficient to give an acceptable level of accuracy. As only unrealistically high roughness coefficients improved the simulation results to an acceptable level, these improvements should not stand as proof of the appropriateness of ADI-type models for simulating flash flood scenarios. Instead, they should stand as a caution against this undesirable practice, since blindly setting unrealistically high values of Manning’s coefficient to improve the results could have devastating consequences for predicting the real extreme flood elevations and inundation extent, particularly when designing flood defence structures.

The lack of data for flash flood events creates a challenge for flood modellers, and will do so for the foreseeable future due to practical difficulties in collecting data in emergency situations. Therefore, there is an urgent need to improve our knowledge of flash flood processes and drivers, as well as to raise awareness of the importance of using appropriate models. This being the case, further extensive research is needed to determine the precise hydrological and topographical conditions under which shock-capturing algorithms are needed. This should focus on determining the topographical and hydrological indexes in the areas prone to flash flooding, which would stand as a guide as to when to use regular flood modelling or extreme flood event modelling. Determination of these indexes, coupled with the correct flood modelling scheme, will lead to more realistic flood modelling in flashy catchments. This, in turn, will better equip flood risk practitioners in their planning and decision-making. Although the storm event of the 2004 Boscastle flood could not have been prevented, the devastating consequences of the ensuing flash floods could be mitigated in the future, particularly with the use of the most appropriate models.

References

Ahmadian R, Falconer RA (2012) Assessment of array shape of tidal stream turbines on hydro-environmental impacts and power output. Renew Energy 44:318–327

Ahmadian R, Falconer R, Bockelmann-Evans B (2012) Far-field modelling of the hydro-environmental impact of tidal stream turbines. Renew Energy 38:107–116

Allan RP, Soden BJ (2008) Atmospheric warming and the amplification of precipitation extremes. Science 321:1481–1484

Barredo J (2007) Major flood disasters in Europe: 1950–2005. Nat Hazards 42:125–148

BBC (2013a) Cardiff hit by flash flooding chaos on Saturday afternoon. http://www.bbc.co.uk/news/uk-wales-south-east-wales-24595500

BBC (2013b) Flash flooding hits homes after rain across Wales. http://www.bbc.co.uk/news/uk-wales-23576219

Bockelmann BN, Fenrich EK, Lin B, Falconer RA (2004) Development of an ecohydraulics model for stream and river restoration. Ecol Eng 22:227–235

Borga M, Anagnostou EN, Blöschl G, Creutin JD (2011) Flash flood forecasting, warning and risk management: the HYDRATE project. Environ Sci Policy 14:834–844

Caleffi V, Valiani A, Zanni A (2003) Finite volume method for simulating extreme flood events in natural channels. J Hydraul Res 41:167–177

Carter H (2009) Northern England and Wales mop up after flash floods. http://www.theguardian.com/uk/2009/jun/11/flash-floods-northern-england-wales

Chow VT (1959) Open-channel hydraulics. McGraw-Hill, New York

Christensen JH, Christensen OB (2007) A summary of the PRUDENCE model projections of changes in European climate by the end of this century. Clim Change 81:7–30

Creutin JD, Borga M, Gruntfest E, Lutoff C, Zoccatelli D, Ruin I (2013) A space and time framework for analyzing human anticipation of flash floods. J Hydrol 482:14–24

Davies D (2010) Heavy rain sparks flash floods in Wales. http://www.independent.co.uk/environment/nature/heavy-rain-sparks-flash-floods-in-wales-2057955.html

Davis SF (1984) TVD finite difference schemes and artificial viscosity. NASA CR-172373, ICASE report, no. 84–20, National Aeronautics and Space Administration, Langley Research Center, Hampton

de Almeida GAM, Bates P, Freer JE, Souvignet M (2012) Improving the stability of a simple formulation of the shallow water equations for 2-D flood modeling. Water Resour Res 48:W05528

Denbighshire County Council (2013) Investigation into the November 2012 Floods: Flood Investigation Report—Part 1

Falconer RA (1986) Water quality simulation study of a natural harbor. J Waterw Port Coast Ocean Eng 112:15–34

Falconer RA, Lin B (2003) Hydro-environmental modelling of riverine basins using dynamic rate and partitioning coefficients. Int J River Basin Manag 1:81–89

Falconer RA, Lin B, Kashefipour S (2005) Modelling water quality processes in estuaries. Wiley, Hoboken

Fürst J, Furmánek P (2011) An implicit MacCormack scheme for unsteady flow calculations. Comput Fluids 46:231–236

Gao G, Falconer RA, Lin B (2011) Numerical modelling of sediment-bacteria interaction processes in surface waters. Water Res 45:1951–1960

Godunov S (1959) A difference scheme for numerical solution of discontinuous solution of hydrodynamic equations. Matematicheskii Sbornik 47:271–306

Hough A (2012) Welsh village evacuated as flash floods strike Britain. http://www.telegraph.co.uk/topics/weather/9322889/Weather-Welsh-village-evacuated-as-flash-floods-strike-Britain.html

HRW (2005) Flooding in Boscastle and North Cornwall, August 2004 (Phase 2 Studies Report). Report EX 5160. Wallingford

Hunter NM, Bates PD, Neelz S, Pender G, Villanueva I, Wright NG, Liang D, Falconer RA, Lin B, Waller S, Crossley AJ, Mason DC (2008) Benchmarking 2D hydraulic models for urban flooding. Proc Inst Civil Eng Water Manag 161:13–30

Katopodes ND, Schamber DR (1983) Applicability of dam-break flood wave models. J Hydraul Eng ASCE 109:702–721

Kazolea M, Delis AI (2013) A well-balanced shock-capturing hybrid finite volume–finite difference numerical scheme for extended 1D Boussinesq models. Appl Numer Math 67:167–186

Kerger F, Archambeau P, Erpicum S, Dewals BJ, Pirotton M (2011) An exact Riemann solver and a Godunov scheme for simulating highly transient mixed flows. J Comput Appl Math 235:2030–2040

Killinger DK (2014) Lidar (light detection and ranging). In: Baudelet M (ed) Laser spectroscopy for sensing. Woodhead Publishing, Sawston

Kundzewicz ZW (2008) Climate change impacts on the hydrological cycle. Ecohydrol Hydrobiol 8:195–203

Lax P (2006) Gibbs phenomena. J Sci Comput 28:445–449

Lax PD, Wendroff B (1964) Difference schemes for hyperbolic equations with high order of accuracy. Commun Pure Appl Math 17:381–398

Lefloch PG, Thanh MD (2011) A Godunov-type method for the shallow water equations with discontinuous topography in the resonant regime. J Comput Phys 230:7631–7660

Leskens JG, Brugnach M, Hoekstra AY, Schuurmans W (2014) Why are decisions in flood disaster management so poorly supported by information from flood models? Environ Model Softw 53:53–61

Liang D, Falconer RA, Lin B (2006a) Comparison between TVD-MacCormack and ADI-type solvers of the shallow water equations. Adv Water Resour 29:1833–1845

Liang D, Falconer RA, Lin B (2006b) Improved numerical modelling of estuarine flows. Proc Inst Civil Eng Marit Eng 159:25–35

Liang D, Lin B, Falconer RA (2006c) Simulation of rapidly varying flow using an efficient TVD-MacCormack scheme. Int J Numer Methods Fluids 53:811–826

Liang D, Lin B, Falconer RA (2007a) A boundary-fitted numerical model for flood routing with shock-capturing capability. J Hydrol 332:477–486

Liang D, Lin B, Falconer RA (2007b) Simulation of rapidly varying flow using an efficient TVD-MacCormack scheme. Int J Numer Methods Fluids 53:811–826

Liang D, Wang X, Falconer RA, Bockelmann-Evans BN (2010) Solving the depth-integrated solute transport equation with a TVD-MacCormack scheme. Environ Model Softw 25:1619–1629

Lin G-F, Lai J-S, Guo W-D (2003) Finite-volume component-wise TVD schemes for 2D shallow water equations. Adv Water Resour 26:861–873

Louaked M, Hanich L (1998) TVD scheme for the shallow water equations. J Hydraul Res 36:363–378

Lowe C (2013) Homes evacuated in Treorchy after flash flooding. http://www.walesonline.co.uk/news/wales-news/homes-evacuated-treorchy-after-flash-6407007

MacCormack R (1969) The effect of viscosity in hypervelocity impact cratering. AIAA Paper 69-354

Marchi L, Borga M, Preciso E, Gaume E (2010) Characterisation of selected extreme flash floods in Europe and implications for flood risk management. J Hydrol 394:118–133

Mingham CG, Causon DM, Ingram D (2001) A TVD MacCormack scheme for transcritical flow. Proc Inst Civil Eng Water Marit Eng 148:167–175

Moussa R, Bocquillon C (2009) On the use of the diffusive wave for modelling extreme flood events with overbank flow in the floodplain. J Hydrol 374:116–135

Nash JE, Sutcliffe JV (1970) River flow forecasting through conceptual models part I: a discussion of principles. J Hydrol 10:282–290

Opolot E (2013) Application of remote sensing and geographical information systems in flood management: a review. Res J Appl Sci Eng Technol 6:1884–1894

Ouyang C, He S, Xu Q, Luo Y, Zhang W (2013) A MacCormack-TVD finite difference method to simulate the mass flow in mountainous terrain with variable computational domain. Comput Geosci 52:1–10

Pall P, Aina T, Stone DA, Stott PA, Nozawa T, Hilberts AGJ, Lohmann D, Allen MR (2011) Anthropogenic greenhouse gas contribution to flood risk in England and Wales in autumn 2000. Nature 470:382–385

Pletcher RH, Tannehill JC, Anderson D (2012) Computational fluid mechanics and heat transfer. CRC Press, Boca Raton

Roca M, Davison M (2010) Two dimensional model analysis of flash-flood processes: application to the Boscastle event. J Flood Risk Manag 3:63–71

Rojas R, Feyen L, Watkiss P (2013) Climate change and river floods in the European Union: socio-economic consequences and the costs and benefits of adaptation. Global Environ Change 23:1737–1751

Sparks TD, Bockelmann-Evans BN, Falconer RA (2013) Development and analytical verification of an integrated 2-D surface water–groundwater model. Water Resour Manag 27:2989–3004

Tseng M-H (2004) Improved treatment of source terms in TVD scheme for shallow water equations. Adv Water Resour 27:617–629

University Corporation for Atmospheric Research (2010) Flash Flood Early Warning System Reference Guide 2010

Urbano A, Nasuti F (2013) An approximate Riemann solver for real gas parabolized Navier-Stokes equations. J Comput Phys 233:574–591

Wang F, Lin B (2013) Modelling habitat suitability for fish in the fluvial and lacustrine regions of a new Eco-City. Ecol Model 267:115–126

Wang Y, Liang Q, Kesserwani G, Hall JW (2011) A positivity-preserving zero-inertia model for flood simulation. Comput Fluids 46:505–511

Xia J, Falconer RA, Lin B, Tan G (2011a) Modelling flash flood risk in urban areas. Proc Inst Civil Eng Water Manag 164:267–282

Xia J, Falconer RA, Lin B, Tan G (2011b) Numerical assessment of flood hazard risk to people and vehicles in flash floods. Environ Model Softw 26:987–998

Acknowledgments

This research project was funded by High Performance Computing (HPC) Wales and Fujitsu, and part funded by the European Regional Development Fund and European Social Fund. The bathymetric data for the Boscastle study were provided by the Environment Agency, while the post-flood surveys were undertaken by CH2M. The contribution of all of these organisations is gratefully acknowledged.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Kvočka, D., Falconer, R.A. & Bray, M. Appropriate model use for predicting elevations and inundation extent for extreme flood events. Nat Hazards 79, 1791–1808 (2015). https://doi.org/10.1007/s11069-015-1926-0

DOI: https://doi.org/10.1007/s11069-015-1926-0