Abstract

Multimodal sentiment analysis is a subtask of sentiment analysis that currently receives considerable attention. Previous work in multimodal sentiment analysis has focused on the representation and fusion of modalities, capturing the underlying semantic relationships between modalities by considering contextual information. While this approach is feasible for comments with simple context, more complex comments require the integration of external knowledge to obtain more accurate sentiment information. However, incorporating external knowledge into sentiment analysis to enhance information complementarity has not been thoroughly investigated. To address this, we propose a multichannel cross-modal feedback interaction model that incorporates a knowledge graph into multimodal sentiment analysis. The proposed model consists of two main components: a cross-modal feedback recurrent interaction module and an external knowledge module that captures latent information. The cross-modal interaction module employs a self-feedback mechanism during training: it extracts a feature representation of each modality and uses these representations to mask the sensory inputs, so that the model performs feedback-based feature masking. The external knowledge module captures latent semantic information in the textual data through knowledge graph embedding. Finally, a global feature fusion module integrates the multichannel multimodal information. On two publicly available datasets, our method achieves strong accuracy and F1 scores compared with state-of-the-art models and several baselines.

1 Introduction

Sentiment Analysis (SA) is a widely studied topic in the field of Natural Language Processing (NLP). It aims to automatically reveal people's underlying attitudes towards an entity. With the advent of the intelligent era, sentiment analysis has become increasingly important, with wide-ranging potential applications in human–computer interaction, personalized services, smart home appliances, and more. Currently, text-based sentiment analysis relies on the construction of sentiment lexicons and on machine learning models that learn emotions from large text corpora; it is widely used in customer satisfaction assessment and brand perception analysis, among other applications. With the popularity of social media, Multimodal Sentiment Analysis (MSA) has gained increasing attention in recent years. Compared with single-modal text sentiment analysis, multimodal models are more robust in handling social media data and have shown significant improvements. At the same time, the availability of complementary data streams brings new opportunities for multimodal sentiment analysis.

Several methods have been proposed to effectively utilize multimodal information for multimodal sentiment analysis. They can be categorized into three types: 1. Methods that independently learn modalities and fuse the output into modality-specific representations. 2. Methods that jointly learn the interaction between two or three modalities. 3. Methods that explicitly learn contributions from single-modal and cross-modal cues, often using attention-based techniques.

However, in research on multimodal sentiment analysis, capturing latent semantic information between modalities in complex contexts remains an open question. Most existing methods either perform fusion at different granularities or use a cross-modal interaction block to couple features from different modalities. Combining features from different modalities is necessary because they provide parallel information from the same source and help eliminate ambiguity in emotional behavior. Zadeh et al. [1] proposed tensor fusion to explicitly capture interactions among unimodal, bimodal, and trimodal representations, but this method uses a threefold Cartesian product for feature fusion, which incurs a high computational cost. To address this, the authors of [2] proposed efficient low-rank multimodal fusion, which uses low-rank tensors to accelerate the fusion process. Yang et al. [3] introduced the Multichannel Graph Neural Network Model for Emotion Perception (MGNNS), which encodes the different modalities to capture hidden representations and employs a multichannel graph neural network to learn multimodal representations based on dataset-specific global features. Pham et al. [4] proposed a source-to-target modality translation approach to learning joint representations and introduced the Multimodal Cycle Translation Network (MCTN) to learn joint multimodal representations. Wu et al. [5] constructed a text-centric shared-private multimodal fusion framework (TCSP) that enriches the semantics of the text modality with shared and private semantics from the non-text modalities.

While the above methods enhance the representation of latent semantics by learning shared and complementary information between modalities, they may not adequately handle more complex contexts. Consider, for instance, a popular internet meme: one user bluntly states an opinion in a video comment, and other users subsequently quote that user's username to convey bluntness themselves. Interpreting such comments requires knowledge of the related event in order to recover the sentiment they contain. To address these challenges, this paper proposes a multichannel multimodal sentiment analysis model based on a knowledge graph and cross-modal feedback interaction. The main contributions of this paper are as follows: (a) a multichannel learning framework with two channels, one that learns complementary information between modalities and one that introduces a knowledge graph to learn latent emotional feature representations through text modality embedding; (b) a novel global fusion module that effectively integrates the features of the two channels.

2 Related Work

2.1 Sentiment Analysis Based on Single Modality

In the task of sentiment analysis, early research primarily focused on unimodal text sentiment analysis and achieved certain results. Based on the different methods used, text-based sentiment analysis approaches can be categorized into three types: lexicon-based sentiment analysis, traditional machine learning-based sentiment analysis, and deep learning-based sentiment analysis.

(1) Lexicon-based sentiment analysis approaches use sentiment lexicons to classify the sentiment polarity of texts at different granularities, based on the polarity of the sentiment words they contain. Rao et al. [6] constructed word-level and topic-level sentiment lexicons using three pruning rules to enhance the accuracy of sentiment analysis. Zainuddin et al. [7] proposed preprocessing the text (e.g., removing stopwords) for text feature analysis. Sharma et al. [8] considered text normalization, URL removal, and acronym expansion, and used sentiment lexicons with Part-of-Speech (PoS) tags for text-based sentiment analysis. Sailunaz et al. [9] demonstrated on a Twitter dataset how text pretraining improves the accuracy of text-based sentiment analysis. Pradha et al. [10] incorporated citations and references into text-based sentiment analysis and achieved significant results on a Twitter dataset.

(2) Machine learning-based sentiment analysis methods extract hand-crafted features of text sentiment and use different machine learning algorithms to learn from and classify these features. Sharma et al. [11] proposed integrating the Boosting algorithm with a Support Vector Machine (SVM) classifier, using the SVM as the base learner and improving classification accuracy through Boosting. However, simply accumulating sentiment words cannot accurately capture the semantic information contained in the text. Abbasi et al. [12] considered the importance of semantic information and reduced noise and redundancy in text features by combining them with syntactic relationships through diversified grammatical features.

(3) Deep learning-based sentiment analysis methods, which build neural network models that simulate the neural system of the human brain, have greatly improved classification accuracy in sentiment analysis. Giatsoglou et al. [13] encoded the text dataset with Word2Vec contextual embeddings and combined them with a sentiment lexicon for text classification. Tang et al. [14] encoded the context of sentiment words during sentiment embedding and applied loss trimming so that the model captures sentiment information more effectively. Hu et al. [15] improved the Long Short-Term Memory (LSTM) network and, based on it, constructed a text sentiment word repository to help capture deep text semantic information. Zhou et al. [16] used Convolutional Neural Networks (CNN) for sentence-level feature mapping and employed a Restricted Boltzmann Machine (RBM) to model latent text features.

2.2 Multimodal Sentiment Analysis

With the emergence of multimodal forms of emotional expression, such as images, audio, and video, it has become difficult for unimodal approaches to perform comprehensive and accurate sentiment analysis. Because different modalities complement each other in conveying emotional information, multimodal sentiment analysis has gained increasing attention. Zadeh et al. [17] proposed the Memory Fusion Network (MFN), which iteratively models view-specific and cross-modal interactions and summarizes them over time through a multiview gated memory module. Pham et al. [18] designed the Multimodal Cycle Translation Network (MCTN), which translates between modalities and learns a joint representation of multiple modalities through these mutual transformations. Wang et al. [19] developed the Recurrent Attended Variation Embedding Network (RAVEN), which uses attention over auxiliary non-linguistic signals to reweight word embeddings. Rahman et al. [20] improved on RAVEN by introducing multimodal adaptation gates at different layers of BERT. Yang et al. [21] addressed the problem that inter-modal information is captured with equal weight and proposed a Text-Core-based Multimodal Shared-Private Information Fusion Architecture (TCSP). In this architecture, the text modality is treated as the core modality, and the non-text modalities serve as auxiliary sources of information. The authors used masks to capture complementary and shared information between the modalities, thereby comprehensively enhancing the semantic representation of the text modality.

While many multimodal sentiment analysis methods focus on feature representation, modality fusion, and semantic enhancement, they often overlook hidden contextual information, leading to difficulties in capturing implicit semantic information and improving model efficiency.

2.3 Sentiment Analysis Based on Knowledge Graph

To address the challenge of capturing hidden semantic information in complex contexts, researchers have begun incorporating syntactic knowledge, graph neural networks, and knowledge graphs into sentiment analysis to enhance semantic features with external knowledge. Ma et al. [22] improved the LSTM model by leveraging commonsense knowledge to control the flow of information. While simple knowledge embedding helps enhance semantic features, it neglects syntactic relationships, which in certain cases can have the opposite effect and lead to poorer performance and weak robustness. To address this, Wu et al. [23] introduced multiple sources of knowledge, integrating syntactic structure knowledge and external sentiment knowledge into a unified sentiment analysis model. Zhou et al. [24] proposed the Syntax Tree and Knowledge-based Graph Convolutional Network (SK-GCN), which jointly models syntactic dependency trees and knowledge graphs, effectively combining syntactic knowledge and external knowledge.

In contrast to the aforementioned methods, our model incorporates only external knowledge into the sentiment task. We enhance semantic features through two channels: cross-modal information completion and external knowledge. This approach obtains more semantic information while keeping the model streamlined, resulting in a lighter and more efficient model.

3 Approach

In this section, we describe the proposed MMKGE (Multimodal Knowledge Graph Embedding) method for multimodal sentiment analysis. The overall architecture of MMKGE is illustrated in Fig. 1. It consists of two modules: the Cross-Modal Interaction module and the External Knowledge Embedding module. The left part of Fig. 1 shows the Cross-Modal Interaction module, which includes three components: Bi-directional Long Short-Term Memory networks (Bi-LSTM), an attention layer, and an LSTM information completion layer. The right part of Fig. 1 shows the External Knowledge Embedding module, whose main task is to extract semantic relationships between aspects from the Knowledge Graph (KG) and to transfer the semantic information learned from adjacent aspects to the current aspect through aspect embedding.

3.1 Cross-Modal Interaction Feature Representation

We employ a self-feedback cross-modal semantic interaction module to capture global feature representations of text, audio, and video. The input consists of the original feature sequences of the three modalities: text iT = {t1, t2, …, tn}, audio iA = {a1, a2, …, an}, and video iV = {v1, v2, …, vn}. We first use a Bi-LSTM to model each modality's sequence. The hidden states of each Bi-LSTM are then passed through a dot-product self-attention mechanism to obtain the corresponding single-modal feature encoding. We denote these encodings as MT, MA, and MV, where T, A, and V represent the text, audio, and video modalities, respectively.
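The single-modal encoding step can be sketched in plain Python. This is a minimal illustration, not the authors' implementation: it assumes the Bi-LSTM hidden states are already available, uses the hidden states directly as queries, keys, and values (no learned projections), and mean-pools the attended sequence into one vector; the function name `self_attention_encode` is hypothetical.

```python
import math
from typing import List

Vector = List[float]


def softmax(scores: List[float]) -> List[float]:
    """Numerically stable softmax."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention_encode(hidden: List[Vector]) -> Vector:
    """Encode a sequence of Bi-LSTM hidden states into one vector M_m.

    Each time step attends to every time step with scaled dot-product
    scores; queries, keys, and values are the hidden states themselves
    (a simplifying assumption). The attended sequence is mean-pooled.
    """
    d = len(hidden[0])
    scale = 1.0 / math.sqrt(d)
    attended = []
    for query in hidden:
        weights = softmax([scale * sum(q * k for q, k in zip(query, key)) for key in hidden])
        attended.append([sum(w * value[j] for w, value in zip(weights, hidden)) for j in range(d)])
    n = len(attended)
    return [sum(row[j] for row in attended) / n for j in range(d)]


# Toy Bi-LSTM outputs for a 3-step text sequence with hidden size 2.
h_text = [[0.1, 0.3], [0.5, -0.2], [0.0, 0.4]]
M_T = self_attention_encode(h_text)
print(len(M_T))  # 2
```

Because the attention weights form a convex combination, each component of the encoding stays within the range of the corresponding hidden-state component.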

To integrate global information and enhance the baseline system, we adopt a self-feedback mechanism. It involves three LSTM units connected in a feedback loop, followed by a sigmoid activation function (denoted as σ). The single-modal feature encodings MT, MA and MV are input into the feedback LSTM. The feedback LSTM generates hidden states hT, hA and hV. Applying the sigmoid activation function to the hidden states produces feedback masks fT, fA and fV, i.e., fm = σ(hm), where m ∈ {T, A, V}. The feedback masks are then utilized to iteratively combine the complementary modalities through element-wise multiplication with the original modalities, as shown in Eq. (1).
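The equation itself is missing from this version of the text. A reconstruction consistent with the mask values described for Eq. (1) (close to 1, 0.5, or 0) averages the feedback masks of the other two modalities and applies the result element-wise to the input of the remaining modality. Here \(\tilde{i}_m\), the masked input of modality m, is a symbol introduced for illustration, and the mask is assumed to be applied to the features of every time step; treat this as a reconstruction, not the authors' exact equation.

```latex
f_m = \sigma(h_m), \qquad
\tilde{i}_m = i_m \odot \frac{1}{2}\left(f_{m_1} + f_{m_2}\right),
\qquad m, m_1, m_2 \in \{T, A, V\} \text{ pairwise distinct}
```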

In Eq. (1), m, m1, and m2 ∈ {T, A, V} denote three distinct modalities, and ⊙ denotes element-wise multiplication. Equation (1) describes how the feedback masks from the other two modalities are applied to the input features of the third modality. For example, consider masking the text input features using the audio and video modalities. If a text feature is important for both the audio and video modalities, its mask value will be close to 1; if it is important for only one of them, the mask value will be close to 0.5; and if it is relevant to neither, the mask value will be close to 0. In this way, the cross-modal self-feedback learns complementary representations of the modalities based on their overall importance to the joint representation.
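The masking behaviour can be checked with a small sketch. It assumes, for illustration only, that each modality's mask is averaged from the other two modalities' masks and that all modalities share one feature dimension (the real features have different sizes, so some projection would be needed); the feedback LSTM is omitted, and the function names are hypothetical.

```python
import math
from typing import Dict, List

Vector = List[float]


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def feedback_masks(hidden: Dict[str, Vector]) -> Dict[str, Vector]:
    """f_m = sigmoid(h_m) for each modality m in {"T", "A", "V"}."""
    return {m: [sigmoid(x) for x in h] for m, h in hidden.items()}


def apply_feedback(inputs: Dict[str, List[Vector]], masks: Dict[str, Vector]) -> Dict[str, List[Vector]]:
    """Mask every time step of modality m with the mean of the other two masks.

    Assumes exactly three modalities sharing one feature dimension.
    """
    masked = {}
    for m, sequence in inputs.items():
        m1, m2 = [other for other in inputs if other != m]
        mask = [(a + b) / 2 for a, b in zip(masks[m1], masks[m2])]
        masked[m] = [[x * w for x, w in zip(step, mask)] for step in sequence]
    return masked


# Idealised mask values: only the audio mask marks the feature as relevant.
masks = {"T": [0.0], "A": [1.0], "V": [0.0]}
inputs = {"T": [[2.0]], "A": [[2.0]], "V": [[2.0]]}
out = apply_feedback(inputs, masks)
print(out["T"], out["A"], out["V"])  # [[1.0]] [[0.0]] [[1.0]]
```

The text and video inputs are halved (one of their two partner masks is 1), while the audio input is zeroed (neither partner mask marks it as relevant), matching the 0.5 and 0 cases described for Eq. (1).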

3.2 Merging of Textual Relations Based on Knowledge Graph

To map aspect relationships from the knowledge graph into the multimodal sentiment analysis task, the first step is to identify aspect entities that correspond to the aspect words in the context within the Wikipedia knowledge graph. This process can be achieved through Named Entity Disambiguation (NED) or wikification. In this study, we utilized the Wikifier API [25] tool for aspect entity mapping, which is a free and computationally efficient entity linking method.

Once aspect entity mapping is complete, the next step is to learn entity embeddings. We employ the popular GraphSAGE algorithm [26] for this purpose, which is suitable for both supervised and unsupervised tasks. We use the DBpedia knowledge graph to generate the entity relation graph, in which each entity is a node and the edges are given as ⟨h, r, t⟩ triples, where h and t are the head and tail entities and r is the predicate relating h to t. However, because the DBpedia knowledge graph is very large, it is not feasible to embed it with deep network representation learning techniques. To address this issue, we construct the Gs graph from the aspect entities in the dataset. However, the Gs graph is disconnected, so entity similarity in the embedding is consistent only within its connected components. This can lead to unexpected proximity between two highly dissimilar entities u and v. To overcome this, we propose a scalable two-stage network embedding technique that scales to DBpedia while avoiding this problem.
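The disconnectedness problem can be illustrated with a toy example. The sketch below uses hypothetical entities and triples, builds Gs by keeping only triples whose head and tail are both aspect entities (one possible reading of how Gs is induced), and counts its connected components with a breadth-first search.

```python
from collections import deque
from typing import Dict, Iterable, List, Set, Tuple

Triple = Tuple[str, str, str]  # (head h, relation r, tail t)


def induced_subgraph(triples: Iterable[Triple], aspects: Set[str]) -> Dict[str, Set[str]]:
    """Undirected adjacency of G_s: nodes are aspect entities, edges are KG
    triples whose head and tail are both aspect entities (relations dropped)."""
    adj: Dict[str, Set[str]] = {a: set() for a in aspects}
    for h, _r, t in triples:
        if h in aspects and t in aspects and h != t:
            adj[h].add(t)
            adj[t].add(h)
    return adj


def connected_components(adj: Dict[str, Set[str]]) -> List[Set[str]]:
    """Breadth-first search from every unvisited node."""
    seen: Set[str] = set()
    components: List[Set[str]] = []
    for start in sorted(adj):
        if start in seen:
            continue
        seen.add(start)
        component = {start}
        queue = deque([start])
        while queue:
            node = queue.popleft()
            for neighbor in adj[node]:
                if neighbor not in seen:
                    seen.add(neighbor)
                    component.add(neighbor)
                    queue.append(neighbor)
        components.append(component)
    return components


# Hypothetical triples and aspect entities.
triples = [
    ("Camera", "partOf", "Smartphone"),
    ("Battery", "partOf", "Smartphone"),
    ("Smartphone", "manufacturer", "Company"),  # "Company" is not an aspect: edge dropped
    ("Director", "relatedTo", "Film"),
]
aspects = {"Camera", "Battery", "Smartphone", "Director", "Film"}
components = connected_components(induced_subgraph(triples, aspects))
print(len(components))  # 2
```

An embedding trained only on this graph receives no signal relating "Camera" to "Director", so their distance is arbitrary; this is the kind of spurious proximity the two-stage technique is meant to avoid.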

We compute entity embeddings by constructing two graphs: (1) the Gk graph (Vk, Ek, Wk), which captures the global connectivity structure between entity clusters, and (2) the Gs graph (Vs, Es), induced by the aspect entities in the dataset. Because the Gs graph is disconnected, we combine the Gk embedding Zk(i) with the Gs embedding Zs(u) to capture the relationship between an aspect entity u and the cluster i that contains it. The Gk graph (Vk, Ek, Wk) is a compact representation of the DBpedia KG in which each node v ∈ Vk represents a cluster of entities in the DBpedia KG. We use the Louvain hierarchical graph clustering algorithm to cluster the entire knowledge graph. The edge set Ek is defined so that (i, j) ∈ Ek, for i, j ∈ Vk, whenever clusters i and j are connected by at least one edge in the knowledge graph. The weight Wk(i, j) between clusters i and j is computed from the number of actual edges between the two clusters relative to the maximum possible number of edges between them, |i| · |j|, where |i| denotes the number of nodes in cluster i. We compute the cluster embeddings Zk(i), i ∈ Vk, for the Gk graph with an improved GraphSAGE embedding algorithm by optimizing the loss shown in Eq. (2).
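A minimal sketch of building the weighted cluster graph Gk from a given node-to-cluster assignment (for example, the output of Louvain clustering, which is not reimplemented here). It follows one reading of the weight definition in the text: Wk(i, j) is the number of KG edges between clusters i and j divided by the maximum possible number |i| · |j|. The clustering, edges, and function name are hypothetical.

```python
from collections import Counter
from typing import Dict, List, Tuple


def cluster_graph_weights(edges: List[Tuple[str, str]], cluster_of: Dict[str, int]) -> Dict[Tuple[int, int], float]:
    """Return W_k as {(i, j): weight} with i < j.

    weight(i, j) = (number of KG edges between clusters i and j) / (|i| * |j|),
    so a pair of clusters is in E_k exactly when it has a nonzero weight.
    Each undirected edge is assumed to appear once in `edges`.
    """
    sizes = Counter(cluster_of.values())
    between: Counter = Counter()
    for u, v in edges:
        i, j = cluster_of[u], cluster_of[v]
        if i != j:  # edges inside a cluster do not create edges in G_k
            between[(min(i, j), max(i, j))] += 1
    return {pair: count / (sizes[pair[0]] * sizes[pair[1]]) for pair, count in between.items()}


# Hypothetical clustering (e.g. a Louvain result) and KG edges.
cluster_of = {"a": 0, "b": 0, "c": 1, "d": 1, "e": 2}
edges = [("a", "b"), ("a", "c"), ("b", "c"), ("b", "d"), ("d", "e")]
print(cluster_graph_weights(edges, cluster_of))  # {(0, 1): 0.75, (1, 2): 0.5}
```

Clusters 0 and 1 share three of the four possible edges, giving 0.75, while clusters 1 and 2 share one of two possible edges, giving 0.5.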

In this context, Zk(i), i ∈ Vk represents the embedded output representation, σ denotes the sigmoid function, Pn is the negative sampling distribution, Q is the number of negative samples, and k (k ∈ Vk) represents a negative sample.
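Eq. (2) is not reproduced in this version of the text. For reference, the standard unsupervised GraphSAGE objective, written with the symbols defined above, is shown below. Here j (a symbol introduced for illustration) is a cluster that co-occurs with i on a short random walk over Gk, and k in the argument of Zk(k) is the negative sample. The "improved" variant the authors optimize may differ, for example by incorporating the weights Wk(i, j), so this is a sketch of the baseline objective rather than the authors' exact loss.

```latex
J\big(Z_k(i)\big) = -\log \sigma\!\big(Z_k(i)^{\top} Z_k(j)\big)
  - Q \cdot \mathbb{E}_{k \sim P_n}\, \log \sigma\!\big(-Z_k(i)^{\top} Z_k(k)\big)
```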

For training Gs, we sample 25 nodes for layer 1 and 10 nodes for layer 2 using a random walk. The output hidden representation dimension is set to 50, and the number of negative samples Q is set to 5. Default values are used for all other parameters.
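The per-layer sample sizes can be illustrated with GraphSAGE-style fixed-size neighborhood sampling. This sketch assumes uniform sampling with replacement from each node's neighbors, as in the original GraphSAGE formulation; how the authors' random walk enters the sampling is not specified, and the configuration key names are hypothetical labels for the reported values.

```python
import random
from typing import Dict, List

# Values reported in the text; the key names are hypothetical.
CONFIG = {"fanouts": [25, 10], "embed_dim": 50, "num_negatives": 5}


def sample_computation_tree(adj: Dict[str, List[str]], root: str, fanouts: List[int], rng: random.Random) -> List[List[str]]:
    """GraphSAGE-style fixed-size neighbor sampling (uniform, with replacement).

    Returns one node list per hop: hop 0 is [root]; if every node has at
    least one neighbor, hop l holds len(hop l-1) * fanouts[l-1] nodes.
    """
    layers = [[root]]
    for size in fanouts:
        frontier: List[str] = []
        for node in layers[-1]:
            neighbors = adj[node]
            if neighbors:  # isolated nodes contribute no samples
                frontier.extend(rng.choices(neighbors, k=size))
        layers.append(frontier)
    return layers


adj = {"a": ["b", "c"], "b": ["a"], "c": ["a", "b"]}  # toy graph
tree = sample_computation_tree(adj, "a", CONFIG["fanouts"], random.Random(0))
print([len(layer) for layer in tree])  # [1, 25, 250]
```

Sampling a fixed number of neighbors per layer bounds the cost of each node's computation tree regardless of node degree, which is what makes GraphSAGE practical on large graphs.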

The Gs graph (Vs, Es) is the entity relation graph, where the Vs node set is composed of the aspect entities extracted from the dataset, and the edge set Es is a subset of edges from the DBpedia KG. We use the standard GraphSAGE embedding algorithm and loss function to construct the similar embedding Zs(u) for the aspect entity u in the Gs graph, where u ∈ Vs. To preserve both the local neighborhood information and the global graph structure in the knowledge graph, we combine the embeddings from the Gs graph and the Gk graph as our two-level entity embedding: Zu = [Zk(i); Zs(u)], where u ∈ Vs and i ∈ Vk, such that u represents an entity in cluster i.
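The two-level embedding is a plain concatenation. In the sketch below, the embeddings, entity, and cluster ID are hypothetical toy values; with the 50-dimensional Gs outputs reported in the text, Zu would have 100 dimensions if the Gk embeddings are also 50-dimensional (an assumption).

```python
from typing import Dict, List


def two_level_embedding(u: str, cluster_of: Dict[str, int], z_k: Dict[int, List[float]], z_s: Dict[str, List[float]]) -> List[float]:
    """Z_u = [Z_k(i); Z_s(u)], where i is the cluster that contains entity u."""
    i = cluster_of[u]
    return z_k[i] + z_s[u]  # list concatenation = vector concatenation


# Hypothetical 2-dimensional embeddings for illustration.
cluster_of = {"Camera": 3}
z_k = {3: [0.1, 0.2]}
z_s = {"Camera": [0.7, -0.4]}
print(two_level_embedding("Camera", cluster_of, z_k, z_s))  # [0.1, 0.2, 0.7, -0.4]
```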

3.3 Global Fusion of Multichannel Representations

Due to the different channels through which the representations {MT, MA, MV, Zs(u), Zk(i)} are learned, directly merging them is not conducive to the model capturing complementary information from multiple channels. To address this, we propose a Global Fusion module, as shown in Fig. 2, which performs a coordinated fusion of multichannel representations from local to global, thereby effectively improving the model's performance. For the sake of clarity, we use "input" to represent the output representations from the channels.

In the multichannel fusion process, we first use a symmetric attention mechanism to model pairwise interactions among the four feature representations, yielding four fused vector representations. These are then fed into four independent fully connected layers to obtain the predicted sentiment features, denoted RVT, RTA, RAV, and RMK, as shown in Eqs. (3) and (4).

Here, LN(·) represents the normalization operation, MH(·) denotes the symmetric attention mechanism, and FNN(·) indicates the feed-forward operation. The variables m and l represent the feature inputs from different channels.
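Eqs. (3) and (4) are not reproduced in this version of the text. Given the operators listed (LN, MH, FNN), one plausible reading is a transformer-style residual block; the sketch below assumes single-head scaled dot-product cross-attention in both directions, residual connections followed by normalization without learned parameters, a placeholder FNN with no learned weights, and symmetry obtained by averaging the two directions. None of these choices is confirmed by the text, the per-channel fully connected prediction layers are omitted, and all function names are hypothetical.

```python
import math
from typing import List

Vector = List[float]
Sequence = List[Vector]


def layer_norm(x: Vector, eps: float = 1e-5) -> Vector:
    """Layer normalization without learned gain or bias."""
    mean = sum(x) / len(x)
    var = sum((v - mean) ** 2 for v in x) / len(x)
    return [(v - mean) / math.sqrt(var + eps) for v in x]


def cross_attend(queries: Sequence, keys: Sequence) -> Sequence:
    """Single-head scaled dot-product attention; keys double as values."""
    d = len(queries[0])
    out = []
    for q in queries:
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in keys]
        peak = max(scores)
        weights = [math.exp(s - peak) for s in scores]
        total = sum(weights)
        out.append([sum(w * k[j] for w, k in zip(weights, keys)) / total for j in range(d)])
    return out


def ffn(x: Vector) -> Vector:
    """Placeholder for FNN(.): a ReLU with no learned weights."""
    return [max(0.0, v) for v in x]


def one_direction(q_seq: Sequence, kv_seq: Sequence) -> Vector:
    """LN(x + MH(x, y)), then LN(h + FNN(h)), then mean pooling over time."""
    attended = cross_attend(q_seq, kv_seq)
    h = [layer_norm([a + b for a, b in zip(x, y)]) for x, y in zip(q_seq, attended)]
    h = [layer_norm([a + b for a, b in zip(x, ffn(x))]) for x in h]
    return [sum(row[j] for row in h) / len(h) for j in range(len(h[0]))]


def symmetric_block(m: Sequence, l: Sequence) -> Vector:
    """Average the m->l and l->m directions so the result is symmetric in (m, l)."""
    forward, backward = one_direction(m, l), one_direction(l, m)
    return [(a + b) / 2 for a, b in zip(forward, backward)]


# Toy channel inputs with feature size 3 (e.g. text and audio sequences).
text = [[0.2, -0.1, 0.4], [0.0, 0.3, -0.2]]
audio = [[0.5, 0.1, 0.0], [-0.3, 0.2, 0.1], [0.1, 0.1, 0.1]]
r_ta = symmetric_block(text, audio)
print(len(r_ta))  # 3
```

Averaging the two directions is only one way to make the attention symmetric; concatenating the two directions and projecting them would be an equally plausible alternative.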

It is important to note that the parameters of these fully connected layers are not shared. Subsequently, to fully leverage the complementarity among the various sentiment features, we further fuse the obtained features at the global level. Specifically, we employ a multihead attention interaction mechanism to facilitate the interaction learning between multiple channels, selectively merging these features to enhance the efficiency of the model.

3.4 Sentiment Classification

Finally, we feed the fused feature representations into the top fully connected layer and apply the softmax function for emotion detection, as shown in Eq. (5):

where \({\text{w}}^{\text{s}}\) and \({\text{b}}^{\text{s}}\) are the weights and biases of the fully connected layer.
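Eq. (5) is not reproduced here; the standard form implied by the description is ŷ = softmax(w^s h + b^s), where h (a symbol introduced here) is the fused representation. A minimal sketch with hypothetical toy weights:

```python
import math
from typing import List


def classify(h: List[float], w_s: List[List[float]], b_s: List[float]) -> List[float]:
    """Return softmax(w_s h + b_s): one probability per sentiment class."""
    logits = [sum(wij * hj for wij, hj in zip(row, h)) + b for row, b in zip(w_s, b_s)]
    peak = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - peak) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


# Two classes (positive, negative) with a 2-dimensional fused vector.
probs = classify([1.0, -1.0], [[1.0, 0.0], [0.0, 1.0]], [0.0, 0.0])
print([round(p, 3) for p in probs])  # [0.881, 0.119]
```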

4 Experiments

4.1 Experimental Data and Preprocessing

We evaluated the performance of our model using the CMU-MOSI [27] and CMU-MOSEI [28] datasets, which are two publicly available multimodal sentiment analysis datasets. MOSI consists of 93 videos collected from YouTube, ranging in length from 2 to 5 min. These videos are divided into 2199 short video segments, each labeled with sentiment scores ranging from −3 (strongly negative) to 3 (strongly positive). MOSEI consists of 23,453 annotated video utterances from 1000 different speakers and 250 topics. Each utterance is labeled with sentiment scores ranging from −3 to 3. To compare with existing methods, we report results for binary sentiment classification, where values ≥ 0 indicate positive sentiment and values < 0 indicate negative sentiment.
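The binary mapping described above is a simple threshold at zero; the function name is hypothetical.

```python
from typing import List


def to_binary_labels(scores: List[float]) -> List[int]:
    """Map sentiment scores in [-3, 3] to labels: 1 = positive (>= 0), 0 = negative (< 0)."""
    return [1 if s >= 0 else 0 for s in scores]


print(to_binary_labels([-3.0, -0.2, 0.0, 2.4]))  # [0, 0, 1, 1]
```

Under this convention a neutral score of exactly 0 counts as positive.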

For the CMU-MOSEI dataset, we utilized GloVe embeddings to represent the textual modality, embedding each word in a 300-dimensional feature space. For the visual modality, we employed the Facet tool to extract visual features, yielding a 35-dimensional feature vector per frame. The audio data were processed with COVAREP [29], which extracts 12 Mel-frequency cepstral coefficients (MFCCs) along with other low-level acoustic features, yielding a 74-dimensional feature representation.

For the CMU-MOSI dataset, we employed CNNs to represent the textual modality at the utterance level. For the visual modality, we applied 3D CNNs to the video data and then used OpenFace 2.0 [30] to extract frame-level FACS features. The audio modality was represented by features extracted with openSMILE [31].

4.2 Experimental Setup

To ensure a fair comparison with the baseline methods, we used the following evaluation metrics: binary accuracy (Acc) and F1 score (F1). Because the model predicts continuous values, we first evaluated it with the mean absolute error (MAE) and the Pearson correlation (Corr), which measure, respectively, the average absolute error and the correlation between the predicted scores and the ground truth. We then mapped the sentiment scores to sentiment labels and used binary accuracy and the F1 score to evaluate the model's performance.
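The evaluation procedure can be sketched as follows. This is a minimal illustration under stated assumptions: Corr is taken to be the Pearson correlation, and F1 is computed as macro F1 over the two classes (the fusion comparison in this paper reports macro F1, while other works report weighted F1 instead). The function names and toy scores are hypothetical.

```python
import math
from typing import List, Tuple


def mae(pred: List[float], gold: List[float]) -> float:
    """Mean absolute error between predicted and gold sentiment scores."""
    return sum(abs(p - g) for p, g in zip(pred, gold)) / len(pred)


def pearson(pred: List[float], gold: List[float]) -> float:
    """Pearson correlation coefficient (assumed meaning of Corr)."""
    n = len(pred)
    mp, mg = sum(pred) / n, sum(gold) / n
    cov = sum((p - mp) * (g - mg) for p, g in zip(pred, gold))
    sp = math.sqrt(sum((p - mp) ** 2 for p in pred))
    sg = math.sqrt(sum((g - mg) ** 2 for g in gold))
    return cov / (sp * sg)


def binary_acc_macro_f1(pred: List[float], gold: List[float]) -> Tuple[float, float]:
    """Map scores to labels (>= 0 positive), then compute accuracy and macro F1."""
    y_pred = [1 if s >= 0 else 0 for s in pred]
    y_true = [1 if s >= 0 else 0 for s in gold]
    acc = sum(p == t for p, t in zip(y_pred, y_true)) / len(y_true)
    f1s = []
    for c in (0, 1):
        tp = sum(p == c and t == c for p, t in zip(y_pred, y_true))
        fp = sum(p == c and t != c for p, t in zip(y_pred, y_true))
        fn = sum(p != c and t == c for p, t in zip(y_pred, y_true))
        f1s.append(2 * tp / (2 * tp + fp + fn) if tp + fp + fn else 0.0)
    return acc, sum(f1s) / 2


pred = [2.0, -1.0, 0.5, -0.5]
gold = [1.5, -2.0, -0.5, -1.0]
print(mae(pred, gold))  # 0.75
print(binary_acc_macro_f1(pred, gold))
```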

In all experiments, we used a Bi-LSTM with a hidden size of 100, summing the outputs of the forward and backward passes. All attention module projections have 100 dimensions, and dropout is set to 0.2. We employed the Adam optimizer [32] with a learning rate of 0.0005 and halved the learning rate whenever the validation loss did not decrease for two epochs. Early stopping was applied on the validation loss with a patience of 10 epochs. During Stage I of each training step, gradients for the unimodal encoders are disabled. Table 1 presents statistics for the MOSI and MOSEI datasets, including the sizes of the training, validation, and test partitions; the two datasets exhibit distinct data distributions.
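The learning-rate schedule and early-stopping rule can be replayed on a sequence of validation losses. This is a hypothetical sketch of the rules as stated (halve after two epochs without improvement, stop after 10 epochs without improvement); it is not the authors' training code, and details such as whether the halving counter resets after each decay are assumptions.

```python
from typing import List, Tuple


def schedule(val_losses: List[float], lr: float = 5e-4, lr_patience: int = 2, stop_patience: int = 10) -> Tuple[List[float], int]:
    """Replay per-epoch validation losses.

    Returns the learning rate in effect after each epoch and the epoch index
    at which early stopping triggers (len(val_losses) if it never does).
    """
    best = float("inf")
    since_best = 0   # epochs without improvement, for early stopping
    since_decay = 0  # epochs without improvement since the last halving
    lrs: List[float] = []
    for epoch, loss in enumerate(val_losses):
        if loss < best:
            best, since_best, since_decay = loss, 0, 0
        else:
            since_best += 1
            since_decay += 1
            if since_decay >= lr_patience:
                lr /= 2
                since_decay = 0
        lrs.append(lr)
        if since_best >= stop_patience:
            return lrs, epoch
    return lrs, len(val_losses)


lrs, stop = schedule([1.0, 0.9, 0.95, 0.96, 0.97, 0.98])
print(lrs, stop)
```

In this run the loss stops improving after epoch 1, so the learning rate is halved at epochs 3 and 5, and early stopping never triggers within six epochs.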

4.3 Baselines

In order to thoroughly evaluate the performance of our proposed MMKGE model, we compared it with several baseline methods for multimodal sentiment analysis tasks, including:

(1) TFN [1]: Tensor Fusion Network captures unimodal, bimodal, and trimodal interactions by computing a multidimensional tensor from outer products of the modality representations.

(2) MFN [17]: Memory Fusion Network continuously models view-specific and cross-view interactions and summarizes them over time with a multiview gated memory.

(3) RAVEN [19]: Recurrent Attended Variation Embedding Network uses attention mechanisms to reweight word embeddings according to auxiliary non-linguistic information.

(4) MCTN [18]: A framework for learning joint representations that enforces a cycle-consistency loss so that the joint representation retains maximal information from all modalities.

(5) MAGCN [33]: The multichannel Graph Attention Convolutional Neural Network combines self-attention with Densely Connected Graph Convolutional Networks (DCGCN) to learn cross-modal feature representations. It extracts language-specific feature representations with a module composed of multi-head self-attention and DCGCN, and it strengthens the commonality of inter-modal feature representations through a consistency loss.

(6) SK-GCN [24]: This model combines syntactic dependency trees and knowledge graphs to effectively integrate syntactic knowledge with external knowledge.

(7) MVCL [34]: A multi-view contrastive learning framework that improves the modality representations used for multimodal sentiment analysis.

(8) AMLT [35]: An Adaptive Language-guided Multimodal Transformer (ALMT) designed to better model sentiment cues for robust Multimodal Sentiment Analysis (MSA).

After the three modality encodings, we applied the modality alignment method proposed in [36] to all models; it brings the benefits of early fusion into a late-fusion network through reverse connections, thereby improving model performance. Because adding this method degraded the performance of the two most recent models, MVCL and AMLT, we compare against their original versions. A baseline augmented with the method of [36] is marked with an asterisk (method *).

4.4 Experimental Results and Analysis

We compared our MMKGE model with the aforementioned baseline models on two publicly available datasets, MOSI and MOSEI, and the experimental results are shown in Tables 2 and 3. Firstly, it can be observed that our MMKGE model outperforms other baseline methods and state-of-the-art (SOTA) methods on most evaluation metrics. Specifically, in both datasets, our MMKGE method outperforms SK-GCN, which only combines external knowledge with a single context of syntactic information, demonstrating the superiority of multichannel representation learning and global fusion.

On MOSI, compared with the current SOTA models MVCL and AMLT, MMKGE achieves improvements of 0.155% in ACC over AMLT and 0.26% in F1 over MVCL, and it outperforms the other baseline methods by more than 2.09% in both ACC and F1. These results demonstrate the effectiveness of MMKGE in multimodal sentiment classification. For MOSEI, 'N/A' indicates results not reported in the original papers. As Table 3 shows, MMKGE achieves higher overall performance than the best-performing baseline, AMLT, surpassing it by 0.16% in ACC. However, the F1 score of our model on MOSI is lower than that of AMLT, possibly because redundant information in the visual and audio modalities affects the model as a whole. Nevertheless, our model performs well overall, which demonstrates that feedback-based cross-modal interaction and external commonsense knowledge improve the performance of MMKGE in sentiment analysis.

We observed that some methods that performed well on MOSI did not achieve satisfactory results on MOSEI. For example, for MAGCN, removing sentiment knowledge degraded performance more than removing the consistency loss. Compared with MOSEI, MOSI tends to emphasize information from the language modality; consequently, eliminating the consistency loss causes a sharp drop in the ACC and F1 scores of some methods. Tables 2 and 3 show that introducing external knowledge improves performance over most baseline models, enabling the model to capture more accurate deep semantic features and latent semantic features in complex text contexts. Compared with MAGCN, which also incorporates sentiment knowledge, and SK-GCN, which incorporates syntactic information and external knowledge, our multichannel structure and global fusion approach enable deeper interaction among the semantic features obtained from different channels, as reflected in the strong performance of MMKGE. Whereas AMLT uses multimodal information to supplement the language modality, our scalable method incorporates external commonsense knowledge into sentiment analysis, thereby improving model performance.

4.5 Ablation Study

In this section, we conducted extensive ablation experiments to investigate the efficacy of the cross-modal feedback interaction module, external knowledge embedding module, and the proposed multichannel global fusion module in MMKGE. Additionally, we analyzed the impact of different fusion methods and data sparsity on the experiments, as well as tested the robustness of the MMKGE model.

During the ablation experiments, for convenience, we used "W/O" to denote the removal of specific modules, while Cross-MFI, KGE, and MGI represented the cross-modal feedback interaction module, external knowledge embedding module, and multichannel global fusion module, respectively. Tables 4 and 5 present the results of the ablation experiments conducted on the MOSI and MOSEI datasets. From Tables 4 and 5, it is evident that the complete MMKGE model outperforms all other models in terms of performance. To showcase the performance of the Cross-MFI module, we replaced the Bi-LSTM with three LSTM units and removed the feedback mechanism. The removal of the Cross-MFI module resulted in a significant drop in performance, highlighting the effectiveness of our module in capturing modality semantics in multimodal sentiment analysis.

Removing the KGE module led to the worst performance of all variants. This indicates that the KGE module better captures the text's latent semantics and the complementary semantic information hidden in background knowledge, particularly in complex contexts, and underscores its crucial role in sentiment analysis. When the MGI module is replaced by fusion through simple feature concatenation, Tables 4 and 5 show slightly lower performance than the full MMKGE model on the final sentiment classification task.

To validate the effectiveness of our proposed multichannel global fusion module, we compared it with two typical information fusion methods: "CONCAT", which directly concatenates the multichannel feature representations and fuses them through fully connected layers, and "sum", which feeds the multichannel feature representations into four independent fully connected layers and fuses them by element-wise summation. Our proposed multichannel global fusion module is denoted "our". As shown in Tables 6 and 7, the proposed MGI module significantly outperforms the other fusion strategies in terms of both accuracy and macro F1 score. Specifically, on MOSEI, the macro F1 score improved by 1.64%, with a relative increase in accuracy of at least 1.14%. These results indicate that the MGI module is better suited to sentiment analysis tasks. Unlike these two strategies, the MGI module performs a complementary global fusion of the feature representations from different channels, leveraging both their complementarity and their differences to enhance the effectiveness of MMKGE in sentiment analysis tasks.
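The two baseline fusion strategies can be sketched in plain Python as below. This is a minimal illustration under stated assumptions: the channel size, output size, function names and randomly initialized weights are hypothetical, a single fully connected layer stands in for CONCAT's fully connected layers, and the MGI module itself is not reproduced because its internals are not described in this section.

```python
import random

_rng = random.Random(42)


def linear(in_dim, out_dim):
    """Hypothetical fully connected layer with random weights: (W, b), W is out x in."""
    W = [[_rng.uniform(-0.1, 0.1) for _ in range(in_dim)] for _ in range(out_dim)]
    b = [0.0] * out_dim
    return W, b


def forward(layer, x):
    """Compute W @ x + b for a (W, b) layer."""
    W, b = layer
    return [sum(w * xi for w, xi in zip(row, x)) + bi for row, bi in zip(W, b)]


def concat_fusion(channels, layer):
    """CONCAT: join all channel vectors end to end, then apply one FC layer."""
    joined = [v for ch in channels for v in ch]
    return forward(layer, joined)


def sum_fusion(channels, layers):
    """sum: one independent FC layer per channel, outputs added element-wise."""
    outs = [forward(layer, ch) for layer, ch in zip(layers, channels)]
    return [sum(col) for col in zip(*outs)]


DIM, OUT, N_CH = 8, 3, 4  # hypothetical channel size, output size; four channels
channels = [[_rng.gauss(0, 1) for _ in range(DIM)] for _ in range(N_CH)]
print(len(concat_fusion(channels, linear(DIM * N_CH, OUT))))  # 3
print(len(sum_fusion(channels, [linear(DIM, OUT) for _ in range(N_CH)])))  # 3
```

Without nonlinearities, the two baselines describe the same family of functions: splitting the CONCAT weight matrix column-wise into four blocks and using those blocks as the per-channel layers gives identical outputs when the biases add up to the same vector.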

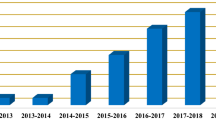

We also investigated the impact of limited training data on the model. Figure 3 compares the accuracy of the baseline model and our proposed model over all test aspects in the MOSI dataset. The test aspects are grouped by the number of their training examples; each bar reports the average accuracy of the aspects in a group, and the red line shows the number of test data points in each group. Our method outperforms the baseline for aspects with 0–20 training examples, and a significant portion of the test aspects have fewer than 20 training examples. We therefore conclude that, for aspects with few training examples, our approach improves the performance of multimodal sentiment analysis by leveraging information from nearby aspects in the knowledge graph (KG).
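The grouping behind Fig. 3 can be sketched as follows: each test aspect contributes its number of training examples and its accuracy, aspects are binned by training count, and the mean accuracy and the number of aspects are reported per bin. Only the 0–20 boundary comes from the text; the other bin edges, the function names and the toy values are hypothetical and are not results from the paper.

```python
from collections import defaultdict


def bucket_label(count, edges):
    """Label of the half-open bin [lo, hi) containing count; the last bin is open-ended."""
    for lo, hi in zip(edges, edges[1:]):
        if lo <= count < hi:
            return f"{lo}-{hi}"
    return f">={edges[-1]}"


def bucket_accuracy(aspects, edges=(0, 20, 40, 60)):
    """Average accuracy per training-count bin.

    aspects: iterable of (train_count, accuracy) pairs, one per test aspect.
    Returns {label: (mean_accuracy, number_of_aspects)}; empty bins are omitted.
    """
    groups = defaultdict(list)
    for train_count, acc in aspects:
        groups[bucket_label(train_count, edges)].append(acc)
    return {label: (sum(accs) / len(accs), len(accs)) for label, accs in groups.items()}


# Toy values for illustration only, not results from the paper.
toy = [(3, 0.6), (15, 0.8), (25, 0.9), (70, 1.0)]
print(bucket_accuracy(toy))
```

Running this once per model on the same bins gives the paired bars, and the per-bin counts correspond to the line drawn over them.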

4.6 Model Robustness Analysis

Because MMKGE incorporates external knowledge, one may be concerned that potentially incorrect, noisy information is introduced and affects the results of sentiment analysis. To address this concern, we injected different percentages of noise into the knowledge embeddings on the MOSI dataset to study whether our model is robust to noisy knowledge. Noise attacks are widely used in the NLP community to investigate model robustness, for example in neural machine translation. In this study, we randomly initialized a portion of the knowledge embeddings as noise. As shown in Fig. 4, MMKGE tolerates slight noise (1, 2, and 5%) while maintaining its performance to some extent. However, as the noise increases to 20%, performance declines significantly, indicating that noisy knowledge does not act as a regularizer. These results demonstrate the benefit of the knowledge integrated by MMKGE and its robustness to mild noise.
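The noise-injection procedure can be sketched as below, assuming the knowledge embeddings are stored as a table of row vectors. A given fraction of rows is chosen at random and replaced by freshly initialized vectors. The Gaussian initialization, its scale, the table size and the function name `inject_noise` are assumptions for illustration, since the exact initialization used for Fig. 4 is not specified.

```python
import random


def inject_noise(embeddings, ratio, seed=0, scale=1.0):
    """Hypothetical helper: copy the embedding table and re-initialize
    round(ratio * n) randomly chosen rows with Gaussian noise (assumed init).

    Returns (noisy_table, sorted indices of the replaced rows).
    """
    rng = random.Random(seed)
    noisy = [list(row) for row in embeddings]  # leave the original table intact
    n_noisy = round(ratio * len(noisy))
    hit = rng.sample(range(len(noisy)), n_noisy)
    for i in hit:
        noisy[i] = [rng.gauss(0.0, scale) for _ in noisy[i]]
    return noisy, sorted(hit)


# Hypothetical table of 100 four-dimensional knowledge embeddings.
table = [[float(i)] * 4 for i in range(100)]
for ratio in (0.01, 0.02, 0.05, 0.20):  # noise levels discussed for Fig. 4
    _, hit = inject_noise(table, ratio)
    print(ratio, len(hit))
```

Keeping the clean table untouched makes it straightforward to evaluate the same trained model at each noise level.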

Introducing external knowledge admittedly increases latency and model size. We therefore performed a comparative investigation of whether MMKGE achieves a good trade-off between efficiency and performance. For a fair comparison, all models were trained and tested on an Nvidia GTX 1660 SUPER. Compared with more recent models, MMKGE achieves better performance while maintaining comparable or lower latency. The main reason is that those models introduce sophisticated modules to capture semantic features, which leads to larger model sizes, whereas we only employ the original BiLSTM as the feature extractor and share its parameters among the three branches, greatly reducing the number of model parameters.
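The parameter saving from sharing one BiLSTM across the three branches can be estimated with the standard single-layer LSTM parameter count, following the layout used by PyTorch's `nn.LSTM` (input-to-hidden and hidden-to-hidden weights for the four gates plus two bias vectors, per direction). The sketch assumes the branch inputs are projected to a common dimension so that the weights can be shared; the input and hidden sizes are hypothetical, not MMKGE's actual configuration.

```python
def lstm_params(input_dim, hidden_dim, bidirectional=True):
    """Parameters of a single-layer LSTM in the nn.LSTM layout:
    per direction, W_ih (4h x d) + W_hh (4h x h) + two bias vectors of size 4h."""
    per_direction = 4 * hidden_dim * (input_dim + hidden_dim) + 8 * hidden_dim
    return per_direction * (2 if bidirectional else 1)


D, H, BRANCHES = 128, 64, 3  # hypothetical sizes; three branches as in the text
shared = lstm_params(D, H)               # one BiLSTM reused by every branch
separate = BRANCHES * lstm_params(D, H)  # one BiLSTM per branch
print(shared, separate)  # 99328 297984
```

With these sizes, sharing keeps about 99k extractor parameters instead of about 298k; the ratio equals the number of branches whatever sizes are chosen.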

5 Conclusions

In this paper, we propose a knowledge graph-based cross-modal feedback interaction method for multichannel multimodal sentiment analysis that specifically targets complex semantic backgrounds. The aim is to enhance the representation of complementary semantic information across modalities through feedback interaction learning. For the text modality, we extract aspect words and map them to the knowledge graph, using its rich associative information to incorporate external knowledge and capture latent semantic information in complex semantic backgrounds. Finally, we propose a global fusion of the multichannel feature representations to better accomplish the multimodal sentiment analysis task. Experiments on two public datasets, with comparisons against numerous baseline models, demonstrate the effectiveness and robustness of the proposed MMKGE.

In future work, we plan to address the redundancy of multimodal information in the model, as well as the problems of missing external commonsense knowledge and of detecting incorrect disambiguation during commonsense integration. We also aim to make the model structure more lightweight. Finally, we would like to integrate multimodal emotional knowledge graphs by embedding image modalities into knowledge graphs, to better capture the hidden semantic information between modalities.

References

Zadeh A, Chen M, Poria S, Cambria E, Morency L-P (2017) Tensor fusion network for multimodal sentiment analysis. arXiv:1707.07250

Liu Z, Shen Y, Lakshminarasimhan VB, Liang PP, Zadeh AB, Morency L-P (2018) Efficient low-rank multimodal fusion with modality-specific factors. In: Proceedings of the 56th Annual Meeting of the Association for Computational Linguistics 1:2247–2256

Yang X, Feng S, Zhang Y, Wang D (2021) Multimodal Sentiment Detection Based on multichannel Graph Neural Networks. In: Proceedings of the 59th Annual Meeting of the Association for Computational Linguistics and the 11th International Joint Conference on Natural Language Processing, pp 328–339

Pham H, Liang PP, Manzini T, Morency L-P, Póczos B (2019) Found in translation: Learning robust joint representations by cyclic translations between modalities. In: Proceedings of the AAAI Conference on Artificial Intelligence 33:6892–6899

Wu Y, Lin Z, Zhao Y, Qin B, Zhu L-N (2021) A text-centered shared-private framework via cross-modal prediction for multimodal sentiment analysis. Findings of the Association for Computational Linguistics: ACL-IJCNLP, pp 4730–4738

Yang-hui R, Jing-sheng L, Wen-yin L et al (2014) Building emotional dictionary for sentiment analysis of online news. World Wide Web-Internet Web Inform Syst 17(4):723–742

Zainuddin N, Selamat A, Ibrahim R (2018) Hybrid sentiment classification on twitter aspect-based sentiment analysis. Appl Intell 48(5):1218–1232

Sharma AK, Chaurasia S, Srivastava DK (2020) Sentimental short sentences classification by using CNN deep learning model with fine tuned Word2Vec. In: Procedia Computer Science, vol 167, pp 1139–1147. Elsevier B.V

Sailunaz K, Alhajj R (2019) Emotion and sentiment analysis from Twitter text. J Comput Sci. https://doi.org/10.1016/j.jocs.2019.05.009

Pradha S, Halgamuge MN, Vinh NTQ (2019) Effective text data preprocessing technique for sentiment analysis in social media data. In: 2019 11th International Conference on Knowledge and Systems Engineering (KSE), pp 1–8. IEEE

Sharma A, Dey S (2013) A boosted SVM based ensemble classifier for sentiment analysis of online reviews. ACM SIGAPP Appl Comput Rev 13(4):43–52

Abbasi A, France S, Zhang Z et al (2011) Selecting attributes for sentiment classification using feature relation networks. IEEE Trans Knowl Data Eng 23(3):447–462

Giatsoglou M, Vozalis MG, Diamantaras KI et al (2017) Sentiment analysis leveraging emotions and word embeddings. Expert Syst Appl 69:214–224

Du-yu T, Fu-ri W, Bing Q et al (2016) Sentiment embeddings with applications to sentiment analysis. IEEE Trans Knowl Data Eng 28(2):496–509

Fei H, Li L, Zi-li Z et al (2017) Emphasizing essential words for sentiment classification based on recurrent neural networks. J Comput Sci Technol 32(4):785–795

Yu Z, Rui-feng X, Lin G (2016) A sequence level latent topic modeling method for sentiment analysis via CNN based diversified restrict Boltzmann machine. In: Proc of International Conference on Machine Learning and Cybernetics, pp 356–361

Zadeh A, Liang PP, Mazumder N, Poria S, Cambria E, Morency L-P (2018) Memory fusion network for multi-view sequential learning. In: Proceedings of the AAAI Conference on Artificial Intelligence 32

Pham H, Liang PP, Manzini T, Morency L-P, Póczos B (2019) Found in translation: Learning robust joint representations by cyclic translations between modalities. In: Proceedings of the AAAI Conference on Artificial Intelligence 33:6892–6899

Wang Y, Shen Y, Liu Z, Liang PP, Zadeh A, Morency L-P (2019) Words can shift: Dynamically adjusting word representations using nonverbal behaviors. In: Proceedings of the AAAI Conference on Artificial Intelligence 33:7216–7223

Rahman W, Hasan MK, Lee S, Zadeh AB, Mao C, Morency L-P, Hoque E (2020) Integrating multimodal information in large pretrained transformers. In: Proceedings of the 58th Annual Meeting of the Association for Computational Linguistics, pp 2359–2369

Wu Y, Lin Z, Zhao Y, Qin B, Zhu L-N (2021) A text-centered shared-private framework via cross-modal prediction for multimodal sentiment analysis. ACL-IJCNLP, pp 4730–4738

Ma Y, Peng H, Khan T, Cambria E, Hussain A (2018) Sentic lstm: a hybrid network for targeted aspect-based sentiment analysis. Cogn Comput 10(4):639–650

Wu S, Xu Y, Wu F, Yuan Z, Huang Y, Li X (2019) Aspect-based sentiment analysis via fusing multiple sources of textual knowledge. Knowl-Based Syst 183:104868

Zhou J, Huang JX, Hu QV, He L (2020) SK-GCN: modeling syntax and knowledge via graph convolutional network for aspect-level sentiment classification. Knowl-Based Syst 205:106292

Brank J, Leban G, Grobelnik M (2018) Semantic annotation of documents based on wikipedia concepts. Informatica (Slovenia) 42:1

Hamilton WL, Ying Z, Leskovec J (2017) Inductive representation learning on large graphs. In: Advances in Neural Information Processing Systems 30: Annual Conference on Neural Information Processing Systems, 4–9 Dec 2017, Long Beach, CA, USA, pp 1024–1034

Zadeh A, Zellers R, Pincus E, Morency L-P (2016) MOSI: multimodal corpus of sentiment intensity and subjectivity analysis in online opinion videos. CoRR abs/1606.06259

Zadeh AB, Liang PP, Poria S, Cambria E, Morency L-P (2018) Multimodal language analysis in the wild: CMU-MOSEI dataset and interpretable dynamic fusion graph. In: Proceedings of the 56th Annual Meeting of the Association for Computational Linguistics, ACL, pp 2236–2246

Degottex G, Kane J, Drugman T, Raitio T, Scherer S (2014) COVAREP - A collaborative voice analysis repository for speech technologies. In: IEEE International Conference on Acoustics, Speech and Signal Processing, ICASSP, pp 960–964

Mao H, Yuan Z, Xu H, Yu W et al (2022) M-SENA: an integrated platform for multimodal sentiment analysis. In: Proceedings of the 60th Annual Meeting of the Association for Computational Linguistics: System Demonstrations, Dublin, Ireland, May 2022, pp 204–213

Eyben F, Weninger F, Gross F, Schuller B (2013) Recent developments in openSMILE, the Munich open-source multimedia feature extractor. In: Proceedings of the 21st ACM International Conference on Multimedia. ACM, pp 835–838

Kingma DP, Ba J (2015) Adam: a method for stochastic optimization. In: Bengio Y, LeCun Y (eds) 3rd International Conference on Learning Representations (ICLR)

Xiao L, Wu X, Wu W, Yang J, He L (2022) Multichannel attentive graph convolutional network with sentiment fusion for multimodal sentiment analysis. ICASSP 2022 - 2022 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), 37:4578–4582

Liu P et al (2023) Improving the Modality Representation with multi-view Contrastive Learning for Multimodal Sentiment Analysis. In: ICASSP 2023 - 2023 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Rhodes Island, Greece, pp 1–5, https://doi.org/10.1109/ICASSP49357.2023.10096470

Zhang H, Wang Y, Yin G, Liu K, Liu Y, Yu T (2023) Learning language-guided adaptive hyper-modality representation for multimodal sentiment analysis. EMNLP 2023 Main Conference

Shankar S et al (2022) Progressive fusion for multimodal integration. arXiv abs/2209.00302

Funding

This work was supported by the Xinjiang Uygur Autonomous Region Natural Science Foundation Project (2022D01A99); the National Natural Science Foundation of China (62066044, 62167008); and the Xinjiang Normal University 2022 Outstanding Youth Talent Program (XJNUQB2022-23).

Author information


Contributions

SD wrote the main manuscript text; XF and XM approved and consented to the publication of the manuscript.


Ethics declarations

Conflict of interest

The authors declare that they have no conflicts of interest.


Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Dong, S., Fan, X. & Ma, X. Multichannel Multimodal Emotion Analysis of Cross-Modal Feedback Interactions Based on Knowledge Graph. Neural Process Lett 56, 190 (2024). https://doi.org/10.1007/s11063-024-11641-w


DOI: https://doi.org/10.1007/s11063-024-11641-w