Abstract

The explosive growth of information on the internet has made information overload a real difficulty. Recommender systems address it and are rapidly being adopted in domains such as movies, travel, e-commerce, and music. Existing research has proposed many methods for single-user modeling; however, the massive rise of social connections increases the significance of group recommender systems (GRS). A GRS jointly recommends a list of items to a collection of individuals based on their interests. Moreover, single-user modeling poses several challenges to recommender systems, such as data sparsity, cold start, and long-tail problems. On the other hand, another active topic in group-based recommendation is modeling user preferences and interests based on the groups to which users belong, using effective aggregation strategies. To address these issues, this study proposes “KGR”, a novel group recommender system based on user-trust relations and kernel mapping techniques. In the proposed model, user-trust networks, an important behavioral and social aspect, are exploited to generate trust-based groups of users. More precisely, KGR exploits group kernels and group residual matrices and seeks a multi-linear mapping between encoded vectors of group-item interactions and a probability density function indicating how groups will rate the items. Moreover, to retain the individual preferences of users within the group to which they belong, a hybrid approach is also suggested in which group kernels and individual user kernels are merged through additive and multiplicative models. The proposed KGR is validated on two trust-based datasets, FilmTrust and CiaoDVD. KGR achieves RMSE values of 0.3306 and 0.3013 on FilmTrust and CiaoDVD, respectively, which are lower than the 1.8176 and 1.1092 observed with the original KMR.


1 Introduction

The Internet has evolved into a vital necessity in our digital age. People use many Internet technologies to fulfill their varied desires and interests, including shopping, watching movies, learning, communicating, following their favorite celebrities, developing the latest innovations, marketing, and entrepreneurship. These behaviors not only generate a large amount of data but also call for a systematic way of accessing data that is quick, dependable, and relevant to users. Recommender systems are useful tools for dealing with such challenges and recommending information relevant to users' interests [1]. Based on the past preferences of the users, the recommender filters the item space and recommends items that meet their needs and interests [2]. In other words, the objective of recommender systems is to help users locate only the content that is pertinent to their interests instead of browsing a huge volume of information [3, 4].

These recommender systems also acquire the feedback of users after providing the recommendations in the form of either explicit ratings or implicit feedback such as user clicks, and time spent on a particular page. This feedback gives additional information about the user's interests and preferences, allowing the recommender system to adjust and generate more meaningful recommendations. Many conventional recommender systems have focused only on single-user modeling. However, in everyday life, there exist numerous scenarios in which users interact in groups such as viewing a movie with their families, going to dinner with coworkers, organizing and going on trips with friends, studying in groups, and so on. This will ultimately emphasize the significance of an accurate group recommendation which is an immensely prominent concern to address [2]. More precisely, in conventional group recommender systems, the preferences and interests of all users are integrated and treated as one single set by the recommender system that generates the recommendation for a group of users [5].

However, it also raises another challenge that all members of the group should have similar preferences to generate more relevant recommendations. Some studies primarily concentrated on different ways to aggregate the individual preferences of a group. More precisely, for aggregation, early approaches used predetermined functions such as average, least misery, and maximum satisfaction [6,7,8]. Moreover, among different domains, the significance of group recommender systems has increased due to the rise in mobile phones and social communications platforms [9]. One of the ongoing research areas of group recommender systems is the incorporation of different elements that impact the group recommender systems such as behavioral and social elements, trust, similarities in preferences, and social contact list [9].
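The three predetermined aggregation functions named above can be illustrated with a minimal sketch. The function name `aggregate` and the strategy labels are hypothetical and chosen only for this illustration; the cited works may define the strategies with additional details.

```python
from statistics import mean


def aggregate(ratings, strategy="average"):
    """Combine the members' ratings for one item into a single group score."""
    if not ratings:
        raise ValueError("at least one member rating is required")
    if strategy == "average":
        # Every member contributes equally.
        return mean(ratings)
    if strategy == "least_misery":
        # The group is only as happy as its least satisfied member.
        return min(ratings)
    if strategy == "maximum_satisfaction":
        # The group score follows its most satisfied member.
        return max(ratings)
    raise ValueError(f"unknown strategy: {strategy}")


member_ratings = [4.0, 2.0, 5.0]  # toy ratings of one item by three members
for name in ("average", "least_misery", "maximum_satisfaction"):
    print(name, aggregate(member_ratings, name))
```

The choice of strategy matters most when members disagree: least misery protects the least satisfied member, while maximum satisfaction favors the most enthusiastic one.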

A group recommender comprises two distinct elements: “groups” and “items”. Groups rate items, and based on these interactions and ratings the recommender system recommends a new list of items. In this study, groups are represented by \(G=\{{g}_{1},{g}_{2},{g}_{3},\dots ,{g}_{M}\}\), where \(\left|G\right|=M\) is the total number of groups in the system, and the collection of items being recommended is represented by \(I=\{{i}_{1},{i}_{2},{i}_{3},\dots ,{i}_{N}\}\) with \(\left|I\right|=N\). Some, but not all, of the items have been rated by the users belonging to the different groups. The term \(({r}_{i,g}|(i,g)\in \mathcal{D})\) represents the group-wise ratings, where \(\mathcal{D}\subset I\times G\) is the collection of item-group pairs for which ratings have been provided, and the total number of ratings is \(\left|\mathcal{D}\right|=T\). Usually, every group has rated only a subset of the item space \(I\), so \(\left|\mathcal{D}\right|=T\ll \left|I\times G\right|=N\times M\), and \(T/(N\times M)\cong 0.01\) is not uncommon in real applications. The rating matrix \(R\), of size \(M\times N\), holds the ratings given by the groups of users. The items rated by group \(g\) are denoted by \({\mathcal{D}}_{g}\), while the groups that have rated item \(i\) are denoted by \({\mathcal{D}}_{i}\). The main objective of the recommender system is to forecast the rating \({r}_{i,g}\) for pairs \((i,g)\notin \mathcal{D}\). Some of the important notations and their meanings are given in Table 1.

Various strategies have been proposed in the realm of recommender systems, including content-based filtering [10], collaborative filtering [11], demographic [12], and context-based recommender systems [13]. Content-based methods suffer from the "limited content analysis" problem, while collaborative filtering-based methods suffer from data sparsity and cold-start issues. Furthermore, “context” is a broad term; user context, for example, includes "companion", "emotions", "time", "day type", and "mood". Apart from a few variables such as "time", these contextual variables are difficult for the recommender system to acquire automatically [14]. Some research studies have designed hybrid methods to overcome these limitations, but designing an effective recommender system remains an open research area.

These methods are mostly designed for single-user satisfaction. One major downside of single-user modeling is cold start, which happens when a user is new to the system and has provided only a few ratings. Another problem is the long-tail scenario, in which the item space is very large (e.g., on Amazon) and very few users rate each item. With such sparse ratings, the recommender system has relatively little knowledge about the users' interests and preferences. We therefore leverage user-trust data to address cold-start and long-tail scenarios: adding trust-based preferences helps the recommender learn about user interests and, to some extent, overcomes the cold-start problem. Accordingly, in this research study, a group recommender system known as “KGR” is designed using kernel mapping techniques [15]. The purpose of utilizing kernel mapping techniques is to investigate and further improve their performance by modeling user preferences through trust relationships with other users. The existing method relies on single-user modeling; in “KGR”, by contrast, group-based preference modeling of each user is proposed based on user-trust relations. Trust is a very promising indicator of similarity among users, since two users who trust each other tend to share the same taste [16,17,18]. Every user has their own social circle or group to which they belong, as shown in Fig. 1. The trustworthiness of users is indicated by the users themselves; e.g., in the recommender system domain, users are allowed to indicate how much they believe that the ratings provided by another user are meaningful and useful.

A trust network is used to depict the trust relations among users, and groups are formed based on these relations. This turns the single-user-item matrix into a group-item matrix containing the ratings given by each group to the items. The rationale for trust-based grouping is the notion that users who trust one another have similar preferences [19]. After the trust-based grouping of each user, a group residual matrix is computed, based on which group kernels are learned to find a multi-linear mapping between two vector spaces. The first vector space holds the information about the items that we wish to rate, while the second vector space consists of probability density functions that describe how a particular group will rate the items. In the original KMR, only single-user kernels or item kernels are learned to forecast the ratings of unknown items; in the proposed model, by contrast, each user’s preferences are viewed as a group, i.e., the user's own preferences together with the user-trust-based preferences. More precisely, these preferences consist of explicit feedback, i.e., ratings of the items. Since group preferences can be obtained by leveraging trust relationships among users, KMR is extended to a trust-based group recommender model, i.e., KGR.

Furthermore, it is also vital to keep the individual preferences of the user in the group to which he belongs. To solve this, a hybrid approach is suggested in which we utilize additive and multiplicative models to combine group-based rating kernels and user-based kernels. Moreover, the proposed “KGR” is validated on two different trust-based datasets and shows good performance in comparison with single-user modeling. Following are our contributions:

- A trust-aware group recommender system is proposed using kernel mapping techniques, in both item-based and group-based versions.
- A hybrid approach is designed in which single-user preferences are preserved within the groups using additive and multiplicative models.
- Cold-start and data sparsity concerns are addressed using trust data, achieving good performance over existing methods.

Similarly, the essential research questions that this study answers are as follows:

- What is the performance of kernel mapping recommender systems when users' trust data is incorporated?
- How are cold-start and data sparsity concerns addressed by incorporating trust data into kernel mapping recommenders?
- How are individual user preferences preserved when making recommendations based on group preferences derived from trust data?

The rest of the paper is organized as follows: Sect. 2 reviews the related work, Sect. 3 presents the proposed methodology, and Sect. 4 describes the results and their analysis, followed by a conclusion and references.

2 Related Work

For single-user and group modeling, there are numerous strategies for recommender systems, including content-based, collaborative, and hybrid models [20]. The following section provides a thorough discussion of many types of methods:

Generally, in recommender systems, content-based filtering is one of the most widely used techniques. For instance, Wang et al. [21] proposed the algorithm capable of recommending journals for computer science publications depending upon the content of the abstract of the manuscript. A web crawler is utilized to simultaneously update the training dataset as well as the training model. To obtain an interactive online result, feature selection reliant on chi-square and softmax regression is employed to design a hybrid model. Their suggested method shows an accuracy of 61.37% in recommending the best journals and conferences. Similarly, Chandra et al. [22] proposed the recommender system for citation recommendations. The input of the model is the query of the document which is then processed to find the nearest neighbors that act as a candidate item set for ranking. The suggested technique is adequate for recommending citations without using meta-data and achieves an 18% F1 score in the top 20 recommendations. Similarly, Asif et al. [23] proposed a KNN and Association Rule Mining (ARM) to forecast serious condition ICU patients to lessen mortality rates. For the management of hospital data, the IBM cloud platform is employed. Singla et al. [24] proposed a content-based movie recommender named FLEX. To extract features from the textual content, a hybrid model based on Doc2Vec and Tf-Idf is employed. Renuka et al. [25] proposed the unsupervised learning approach for recommender systems. More precisely, the suggested recommender system recommends articles based on natural language processing techniques (NLP). The TF-IDF method is used to acquire the word vectorization followed by unsupervised algorithms i.e. K-means and agglomerative clustering techniques to recommend articles based on user interests and preferences. These content-based approaches are more effective; nevertheless, they require content that describes the items in order to make effective recommendations.

Other than content-based methods, collaborative filtering techniques are also the most often used methods for recommender systems. For instance, Gazdar et al. [11] proposed a novel similarity measure for collaborative filtering-based recommender systems. The similarity measure is based on the conceptualization of mathematical equations including, integral, linear, differential, and non-linear systems. To achieve the kernel function for the similarity metric, these equations are used. Their proposed similarity measure works very well and shows good accuracy over different baseline methods. Xiong et al. [26] proposed a hybrid algorithm for web recommendations. More precisely, they have combined both collaborative filtering and textual content along with deep neural networks. In addition, the interaction of web applications and web services are also integrated into the neural networks to describe the various relationships among them. The proposed method is validated on a real-world web service dataset and shows state-of-the-art performance on web service recommendations. Xue et al. [27] proposed an item-based collaborative filtering (ICF) algorithm by taking into consideration nonlinear and higher-order interactions between items. In addition, the proposed method not only considers the similarity between two items but also examines the interaction between all interaction item pairs with the help of non-linear neural networks. In doing so, the algorithm also models the high-order associations between the items. The suggested model is validated on two distinct datasets including MovieLens and Pinterest and it was observed that such kind of high-order interactions show a positive impact on results. Furthermore, Wang et al. [28] proposed dynamic user modeling and collaborative filtering algorithms for recommender systems. More explicitly, the suggested algorithm is time-aware and comprised of two main parts. 
The first part assembles the short-term interactions of the users with the help of an attention mechanism, while in the second part the high-order user-item interactions are learned with the help of different algorithms, including deep matrix factorization and a multi-layer perceptron. The proposed model shows good results in comparison with existing time-aware and deep learning-based recommendation models.

Aside from single-user modeling, the literature also contains group recommender systems designed using various methodologies. For instance, Reza et al. [29] proposed a group recommender that accounts for the influence of the individuals present in the group as well as the leader's impact. More precisely, they consider some individuals to be the leaders of the group, since these individuals are trusted more than the others. A combination of fuzzy clustering and similarity metrics is employed to determine which users have similar preferences. The suggested method shows good MAE, RMSE, and group satisfaction measures. Similarly, Guo et al. [30] proposed a group recommender system that uses preference relations with the help of an extreme learning-based machine learning model. This model forecasts unseen preference relations in candidate sets, and the Borda voting rule is then used to make a recommendation from these sets. Ortega et al. [31] suggested group recommendation using matrix factorization (MF) based collaborative filtering. In this method, groups of users of different sizes, i.e., small, medium, and large, are mapped to a latent factor space. Movie rating datasets, including MovieLens and Netflix, are used to validate the proposed algorithm, which shows good performance in comparison with KNN and simple collaborative filtering-based group recommender systems.

Sadeghi et al. [32] proposed the multi-view recommender system (IMVGRS) with a group recommendation approach. This algorithm makes recommendations from two main viewpoints to a group. The first one is based on ratings while the second one is based on social connections i.e. trust. Singular value decomposition is utilized as a reduction technique for reducing the dimension of the data. The experimental findings demonstrate the efficacy of the suggested enhanced approach. Likewise, Shabnam et al. [33] exploit the influence of users' personality characteristics as well as preference choices according to privacy concerns about location along with emotional facts. They did a user study and experimented with group recommendation settings. Their research also emphasizes the need to give consumers the option of partially disclosing personal information, which seemed to be popular amongst those who took part. In another work [34], they considered explanations for users in addition to personality traits in group recommendation settings. In such group-based recommender models, clustering-based, machine learning algorithms including extreme machine learning, matrix factorization, and SVD are employed. All of these techniques have downsides such as low accuracy, lack of scalability, poor performance in sparse datasets, and cold-start concerns, which can be addressed with kernel mapping recommender systems [15]. In contrast to them, our approach possesses a novel and flexible structure learning technique in which different types of information including trust, user context, item context, and personality traits can be added as additional information using kernel tricks. Moreover, we are capable of developing a kernel-based learning system that can be trained in linear time in terms of data points (Fig. 2).

Some research studies also exploit social connections and trust in group recommender systems. For instance, Wang et al. [35] proposed a trust-based group recommender system named TruGRC. The method is based on virtual coordinators that integrate two aggregation techniques, i.e., profile and result aggregation. The role of the coordinator is to give a global view of the individual preferences of all group members and to reconcile conflicting preferences. Experiments are conducted on two benchmark datasets with groups of different sizes to demonstrate the effectiveness of the TruGRC method. Song et al. [36] proposed a trust-based group recommender system using deep learning and user interactions. The method is validated on the Epinions dataset and shows good performance in comparison with other algorithms in terms of RMSE and Hit Ratio. Moreover, Choudhary et al. [37] exploit similarity and knowledge-based trust relations to recommend items to a group of users. This study found that trust-based relations among group members can enhance the quality of group recommendations.

3 Methodology

In this section, the step-by-step explanation of the proposed “KGR” and hybrid approach-based recommender system is discussed. In the first stage, an original dataset containing users, items, and ratings is converted into a group-based dataset with the help of trust, and later, a kernel mapping technique is employed to predict the unknown ratings that are not provided by the group. Furthermore, with the help of additive and multiplicative models, single-user preferences, and group-based preferences (trust-based) are merged as a hybrid technique to generate recommendations. The following is a more detailed exposition, beginning with the original KMR approach and progressing to the suggested method:

3.1 Kernel Mapping Recommender Systems (KMR)

The KMR algorithms are kernel-based methods employed to address challenges such as data sparsity, imbalanced datasets, long-tail scenarios, and cold-start scenarios, and they have achieved state-of-the-art performance, i.e., the lowest errors and high precision [15, 38]. The main idea is to find a multilinear mapping between two vector spaces. For instance, the first vector space can consist of vectors encoding details about the items that we want to rate, while the second vector space can contain a probability density characterizing the way an individual rates an item. Learning a linear mapping between two vector spaces makes it possible to use the kernel trick, which offers an effective and inexpensive way of handling inner products in high-dimensional vector spaces. Kernels in the KMR method can be used to include additional information, such as features and genres, and the use of kernel functions improves the algorithm's ability to capture non-linear relationships. KMR can therefore incorporate multiple sorts of information, such as context and trust, through kernels to further improve performance. In our earlier study [38], we improved the performance of the original KMR by combining user and item context; in this study, the method is chosen in order to examine its performance with user trust data. We extend the approach by incorporating users’ trust data into the structured learning technique of the kernel mapping algorithm to improve its performance.

3.2 Trust-Based Grouping

Generally, in group recommender systems, groups are formed using different strategies, such as grouping users based on rating similarities, trust, or social connections. In this study, a group of users is formed based on trust and distrust values, indicated as 1 and 0, respectively. For example, if user 1 trusts only users 2, 3, 4, and 5, the trust values are 1 for these users and 0 for the remaining users. A group is then formed by merging the interactions of users 1, 2, 3, 4, and 5. When two or more members of the group have rated the same item, the group's rating of that item is the average of their ratings. This also reduces the sparsity of the dataset to some extent, since in the single-user case some users have very few rating records. When several users are merged into a single group, which is then treated as a single user, additional rating data for the items are gathered and combined. After trust-based grouping, the group-item matrix is used in KGR to predict the unknown ratings. Furthermore, in the hybrid approach, the single-user preferences for items and the group-based preferences are kept separately for each user, and these preferences are later modeled using separate kernels.
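The grouping step described above can be sketched as follows. This is a minimal illustration under stated assumptions: one group is built per user (the user plus everyone that user trusts), the function name `build_group_ratings` and the dictionary layout are hypothetical, and the toy data are not taken from FilmTrust or CiaoDVD.

```python
from collections import defaultdict


def build_group_ratings(trust, ratings):
    """Turn user-item ratings into group-item ratings.

    trust:   dict mapping a user to the set of users they trust (trust value 1).
    ratings: dict mapping (user, item) to a rating.
    Returns a dict mapping (group_owner, item) to the mean rating of the group.
    """
    by_user = defaultdict(dict)
    for (user, item), value in ratings.items():
        by_user[user][item] = value

    group_ratings = {}
    for owner in set(trust) | set(by_user):
        # Assumption: the group of a user is the user plus the users they trust.
        members = {owner} | trust.get(owner, set())
        collected = defaultdict(list)
        for member in members:
            for item, value in by_user.get(member, {}).items():
                collected[item].append(value)
        # Items rated by several members receive the average of those ratings.
        for item, values in collected.items():
            group_ratings[(owner, item)] = sum(values) / len(values)
    return group_ratings


trust = {1: {2, 3}}
ratings = {(1, "a"): 4.0, (2, "a"): 2.0, (3, "b"): 5.0, (2, "c"): 3.0}
groups = build_group_ratings(trust, ratings)
print(groups[(1, "a")], groups[(1, "b")], groups[(1, "c")])
```

In the toy data, user 1 has rated a single item, while the group built around user 1 has ratings for three items, which illustrates how grouping reduces sparsity.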

3.3 Proposed Kernel-Based Group Recommender (KGR) System

To tackle the recommendation problem, this study employs the kernel mapping technique together with user trust data. The intuition behind kernel mapping techniques is to determine a multi-linear mapping between two high-dimensional vector spaces. A quadratic optimization problem is solved to learn this multi-linear mapping. Moreover, this study adopts Joachims’ idea of carrying out training in linear time [39]. This idea was adapted to partially completed datasets, and the KMR algorithms were built on it using novel structure learning techniques [15, 40]. In the next section, we describe how the KGR approach is adopted in “item-based” and “group-based” kernel mapping recommender systems.

3.4 Item-Based KGR

In the conventional KGR algorithm, the input data is in the form of groups who provided the ratings to the subset of item space and the remaining ratings are considered as un-rated items:

In Eq. (1), \(\emptyset\) denotes the missing values (i.e., ratings that have not been provided). For the residual ratings, additive and multiplicative models are designed to make the recommendations. Equation (2) shows the additive model of residual ratings:
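The displayed equation is not reproduced in this text. A form consistent with the symbols defined next, given here as an assumption rather than as the source's exact expression, writes the residual as \(\widehat{r}_{i,g}\) and removes the item and group averages from each rating:

\[
\widehat{r}_{i,g} = r_{i,g} - \overline{r_{i}} - \overline{r_{g}} + \overline{r}
\]

Here \(\overline{r_{i}}\) is the average rating of item \(i\), \(\overline{r_{g}}\) is the average rating given by group \(g\), and \(\overline{r}\) is the overall average of the group-item matrix.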

In Eq. (2), \(\overline{{r }_{i}}\) is the average rating of item \(i\), \(\overline{{r }_{g}}\) is the average rating given by group \(g\), and \(\overline{r}\) is the overall average rating of the group-item matrix. Similarly, Eq. (3) provides the multiplicative model for the residual ratings.
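The multiplicative equation is likewise missing from this text. One form consistent with the geometric means described next, stated as an assumption (it is the log-domain analogue of an additive residual), is:

\[
\widehat{r}_{i,g} = \frac{r_{i,g}\,\widetilde{r}}{\widetilde{r_{i}}\;\widetilde{r_{g}}}
\]

Taking logarithms gives \(\log \widehat{r}_{i,g} = \log r_{i,g} - \log \widetilde{r_{i}} - \log \widetilde{r_{g}} + \log \widetilde{r}\), which mirrors the structure of subtracting the item and group averages and adding back the overall average. The exact expression used in the source may differ.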

In Eq. (3), \(\widetilde{{r}_{i}}\), \(\widetilde{{r}_{g}}\), and \(\widetilde{r}\) denote the geometric means of the ratings of item \(i\), of the ratings given by group \(g\), and of all ratings in the group-item matrix, respectively. Both the multiplicative and additive models are implemented in this study, and the multiplicative model produces better results. Szedmak et al. [40] proposed a technique for structure learning, which is modified here so that it can be applied to collaborative filtering. We assume that some information about each item is available, denoted \({q}_{i}\). One possible source of this information is the set of ratings given by the groups to the item, i.e., \({r}_{i,g}\) with \((i,g)\in \mathcal{D}\) and \(\mathcal{D}\subset I\times G\); textual features describing item \(i\) are another. A function \(\phi\) maps the input features \({q}_{i}\) to a Hilbert space, and a function \(\psi\) likewise maps the residual rating \({\widehat{r}}_{i,g}\) to another Hilbert space. In this study, all these objects are located in the function space \({L}_{2}({\mathbb{R}})\): every residual is represented by the density of a normal distribution with average \({\widehat{r}}_{i,g}\) and variance indicated by \(\sigma \).
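Representing each residual by a normal density in \(L_2(\mathbb{R})\) makes the inner product between two residuals available in closed form. The sketch below assumes, for illustration, that both densities share the same standard deviation `sigma`; the function names are hypothetical, and the numerical integral is included only to check the closed form.

```python
import math


def gaussian_pdf(x, mean, sigma):
    """Density of a normal distribution with the given mean and standard deviation."""
    return math.exp(-((x - mean) ** 2) / (2.0 * sigma ** 2)) / (sigma * math.sqrt(2.0 * math.pi))


def residual_kernel(r_a, r_b, sigma=1.0):
    """L2 inner product of the densities N(r_a, sigma^2) and N(r_b, sigma^2)."""
    return math.exp(-((r_a - r_b) ** 2) / (4.0 * sigma ** 2)) / (2.0 * sigma * math.sqrt(math.pi))


def normalized_residual_kernel(r_a, r_b, sigma=1.0):
    """Residual kernel divided by sqrt(K(a, a) * K(b, b)), so that K(a, a) equals 1."""
    return math.exp(-((r_a - r_b) ** 2) / (4.0 * sigma ** 2))


def numeric_inner_product(r_a, r_b, sigma=1.0, lo=-30.0, hi=30.0, steps=60000):
    """Trapezoidal approximation of the same inner product (illustration only)."""
    h = (hi - lo) / steps
    total = 0.0
    for k in range(steps + 1):
        x = lo + k * h
        weight = 0.5 if k in (0, steps) else 1.0
        total += weight * gaussian_pdf(x, r_a, sigma) * gaussian_pdf(x, r_b, sigma)
    return total * h


print(residual_kernel(0.3, -0.5), numeric_inner_product(0.3, -0.5))
```

The normalized form depends only on the distance between the two residuals, so nearby residuals are treated as similar even when the discrete rating values differ.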

The rationale for this choice is to account for errors in the assigned ratings, which can be caused by the discretization of the rating scale or by variability in how ratings are given. The algorithm learns the mapping between two vector spaces, namely the input space and the residual-rating space, which is then used to predict ratings. More explicitly, “KGR” seeks a collection of linear mappings \({W}_{g}\) from the Hilbert space containing the vectors \(\phi ({q}_{i})\), which represent group-item interactions, into the Hilbert space of the residual group-based rating representation \(\psi \left({\widehat{r}}_{i,g}\right)\). The optimization minimizes the Frobenius norm of \({W}_{g}\) together with the sum of the slack variables \({\xi }_{i}\), subject to a collection of maximum-margin constraints. The underlying optimization problem is given in Eq. (5):
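The displayed problem is missing from this text. A reconstruction consistent with the description above (Frobenius norm plus slack penalty, one margin constraint per rated pair), in which the trade-off constant \(C>0\) is an assumed symbol, reads:

\[
\begin{aligned}
\min_{\{W_{g}\},\,\xi}\quad & \frac{1}{2}\sum_{g\in G}\left\|W_{g}\right\|_{F}^{2} + C\sum_{i\in I}\xi_{i} \\
\text{subject to}\quad & \left\langle \psi\left(\widehat{r}_{i,g}\right),\, W_{g}\,\phi\left(q_{i}\right)\right\rangle \ge 1-\xi_{i} \quad \text{for all } (i,g)\in\mathcal{D}, \\
& \xi_{i}\ge 0 \quad \text{for all } i\in I .
\end{aligned}
\]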

In Eq. (5), \({\xi }_{i}\ge 0\) is a slack variable, and there is one constraint for every pair \((i,g)\in \mathcal{D}\). The optimum is achieved when the vectors \({W}_{g}\phi \left({q}_{i}\right)\) and \(\psi \left({\widehat{r}}_{i,g}\right)\) are aligned. Once the mappings \({W}_{g}\) have been found, the prediction for a new item \(k\) is obtained from \({W}_{g}\phi \left({q}_{k}\right)\). Lagrange multipliers are introduced to solve the optimization problem.
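The Lagrangian itself is not reproduced in this text. Under the assumption that the primal problem penalizes the slack variables with a constant \(C>0\) and has one margin constraint per pair \((i,g)\in\mathcal{D}\), it takes the standard form:

\[
\mathcal{L} = \frac{1}{2}\sum_{g\in G}\left\|W_{g}\right\|_{F}^{2} + C\sum_{i\in I}\xi_{i}
- \sum_{(i,g)\in\mathcal{D}}\alpha_{ig}\Big(\left\langle \psi\left(\widehat{r}_{i,g}\right),\, W_{g}\,\phi\left(q_{i}\right)\right\rangle - 1 + \xi_{i}\Big)
- \sum_{i\in I}\lambda_{i}\,\xi_{i}
\]

Each multiplier \(\alpha_{ig}\) is attached to one margin constraint and each \(\lambda_{i}\) to one non-negativity constraint on \(\xi_{i}\).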

In Eq. (6), \({\alpha }_{ig}\ge 0\) are the Lagrange multipliers that enforce the constraint \(\left\langle \psi \left({\widehat{r}}_{i,g}\right), {W}_{g}\phi \left({q}_{i}\right)\right\rangle \ge 1-{\xi }_{i}\), and \({\lambda }_{i}\ge 0\) are the Lagrange multipliers that enforce \({\xi }_{i}\ge 0\). The best mapping is obtained by solving:

subject to the constraints \({\alpha }_{ig}\ge 0\) for all \((i,g)\in \mathcal{D}\) and \({\lambda }_{i}\ge 0\) for all items \(i\in I\). For a general linear mapping \({W}_{g}\), Eq. (8) holds:

In Eq. (8), \(\otimes\) denotes the tensor product of two vectors. When the Hilbert spaces are finite-dimensional, the linear mapping \({W}_{g}\) is represented by a matrix. Taking the derivative of Eq. (6) with respect to \({W}_{g}\), we obtain:

Hence, the Lagrangian is minimized with respect to \({W}_{g}\) when:

Taking the derivative with respect to \({\xi }_{i}\), we obtain:

Setting this derivative to zero, the Lagrangian is minimized with respect to \({\xi }_{i}\) when:

where the inequality arises because \({\lambda }_{i}\ge 0\).
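The four displayed steps of this derivation are missing from this text. Assuming a primal objective \(\frac{1}{2}\sum_{g}\|W_{g}\|_{F}^{2}+C\sum_{i}\xi_{i}\) with an assumed constant \(C>0\), using the identity \(\langle \psi, W\phi\rangle = \langle W, \psi\otimes\phi\rangle_{F}\), and reading \(\mathcal{D}_{g}\) as the set of items rated by group \(g\) and \(\mathcal{D}_{i}\) as the set of groups that rated item \(i\), the stationarity conditions work out as:

\[
\begin{aligned}
\frac{\partial\mathcal{L}}{\partial W_{g}} &= W_{g} - \sum_{i\in\mathcal{D}_{g}}\alpha_{ig}\,\psi\left(\widehat{r}_{i,g}\right)\otimes\phi\left(q_{i}\right),
&\qquad W_{g} &= \sum_{i\in\mathcal{D}_{g}}\alpha_{ig}\,\psi\left(\widehat{r}_{i,g}\right)\otimes\phi\left(q_{i}\right), \\
\frac{\partial\mathcal{L}}{\partial \xi_{i}} &= C - \sum_{g\in\mathcal{D}_{i}}\alpha_{ig} - \lambda_{i},
&\qquad \sum_{g\in\mathcal{D}_{i}}\alpha_{ig} &= C-\lambda_{i} \le C .
\end{aligned}
\]

The last relation is where \(\lambda_{i}\ge 0\) turns an equality into the upper bound on the multipliers of each item.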

Substituting these expressions back into the Lagrangian of Eq. (6) yields the dual problem, i.e., a maximization problem in the Lagrange multipliers \({\alpha }_{ig}\). The dual problem is given in Eq. (13):

subject to the constraint \(\alpha \in Z(\alpha )\), where:
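Neither the dual objective nor the feasible set is reproduced in this text. Under the same assumptions as a standard maximum-margin derivation (slack penalty constant \(C>0\), \(\mathcal{D}_{g}\) the items rated by group \(g\), \(\mathcal{D}_{i}\) the groups that rated item \(i\)), substituting \(W_{g}=\sum_{i\in\mathcal{D}_{g}}\alpha_{ig}\,\psi(\widehat{r}_{i,g})\otimes\phi(q_{i})\) into the Lagrangian gives:

\[
f(\alpha) = \sum_{(i,g)\in\mathcal{D}}\alpha_{ig}
- \frac{1}{2}\sum_{g\in G}\;\sum_{i,i'\in\mathcal{D}_{g}}\alpha_{ig}\,\alpha_{i'g}\,
\left\langle \psi\left(\widehat{r}_{i,g}\right),\psi\left(\widehat{r}_{i',g}\right)\right\rangle
\left\langle \phi\left(q_{i}\right),\phi\left(q_{i'}\right)\right\rangle
\]

\[
Z(\alpha) = \Big\{\alpha \;\Big|\; \alpha_{ig}\ge 0 \ \text{for all } (i,g)\in\mathcal{D},\quad \sum_{g\in\mathcal{D}_{i}}\alpha_{ig}\le C \ \text{for all } i\in I\Big\}
\]

The data enter only through inner products, which is what allows kernels to replace them.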

At this point, the kernel trick can be applied. In this study, the kernel functions are defined as:

In the above Eqs. (15–16), \({K}_{\widehat{r}}\) is the residual kernel while \({K}_{q}\) is the input feature kernel. Hence, \(f\left(\alpha \right)\) can be written as:

There exist various types of kernels and this study selects the positive definite kernels. In addition, the mapping of residual ratings of groups is calculated with the help of these kernels with the normalization procedure.

3.5 Learning of Lagrange Multipliers

For large-scale recommender systems, solving the quadratic problem with a generic quadratic programming solver is exceedingly costly and impractical because of the enormous number of instances, groups, and items. This study therefore employs the conditional gradient method to solve the problem. For learning the Lagrange multipliers, the function \(f\left(\alpha \right)\) is written in matrix form. A more extensive discussion of the learning of the Lagrange multipliers is given in [15].
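The conditional gradient (Frank-Wolfe) idea can be sketched on a small dense problem. This is an illustration under stated assumptions, not the procedure of [15]: the dual is written as \(f(\alpha)=\mathbf{1}^{\top}\alpha-\frac{1}{2}\alpha^{\top}Q\alpha\) with a positive semi-definite matrix `Q`, the feasible set requires \(\alpha\ge 0\) and a per-item sum of at most `C`, and the names `conditional_gradient`, `dual_objective`, and `item_of` are hypothetical.

```python
import numpy as np


def dual_objective(Q, alpha):
    """f(alpha) = sum(alpha) - 0.5 * alpha^T Q alpha."""
    return alpha.sum() - 0.5 * alpha @ Q @ alpha


def conditional_gradient(Q, item_of, C=1.0, iters=200, tol=1e-10):
    """Maximize f over {alpha >= 0, per-item sum of alpha <= C}.

    Q:       (n, n) positive semi-definite matrix over rated (item, group) pairs.
    item_of: length-n integer array giving the item index of each pair.
    """
    n = Q.shape[0]
    alpha = np.zeros(n)  # the zero vector is feasible
    items = np.unique(item_of)
    for _ in range(iters):
        grad = 1.0 - Q @ alpha
        # Linear maximization step: within each item block, put the whole
        # budget C on the coordinate with the largest positive gradient.
        s = np.zeros(n)
        for i in items:
            idx = np.flatnonzero(item_of == i)
            best = idx[np.argmax(grad[idx])]
            if grad[best] > 0:
                s[best] = C
        d = s - alpha
        gap = grad @ d  # Frank-Wolfe gap; near zero at an optimum
        if gap <= tol:
            break
        curvature = d @ Q @ d
        # Exact line search for a concave quadratic, clipped to [0, 1].
        tau = 1.0 if curvature <= 0 else min(1.0, gap / curvature)
        alpha = alpha + tau * d
    return alpha


rng = np.random.default_rng(0)
A = rng.normal(size=(6, 6))
Q = A @ A.T
item_of = np.array([0, 0, 1, 1, 2, 2])
alpha = conditional_gradient(Q, item_of, C=1.0)
print(dual_objective(Q, alpha))
```

Each iteration needs only a gradient and a per-item maximum, which is why this family of methods avoids the cost of a general quadratic programming solver.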

3.6 Group-Based KGR

Depending on the features available in a dataset, the data can be represented in various forms, such as a matrix, and the underlying algorithm is then trained over the rows or columns of that matrix. Such a representation captures, for instance, the total number of groups in the system, the items available in the item space, and the ratings given by groups to a subset of items. As a result, several models or methods dealing with these properties have been developed. This study also proposes a group-based variant of KGR, which is likewise referred to as “KGR”. More precisely, \({q}_{g}\) denotes the information about the group \(g\), which is used together with the feature vectors \({q}_{u}\), the group residual rating \({\widehat{r}}_{i,g}\), and the linear mapping \({W}_{g}\). The derivation for the group-based variant is identical to that of the item-based variant; only the notation changes from \(i\) to \(g\).

3.7 Hybrid KGR

In the group- and item-based KGR above, we modeled the preferences of users as a group with the help of trust relations. In that setting, however, the individual preferences of a user for an item are ignored in favor of the accumulated group preferences. To address this and keep individual preferences alive within the group, we exploit both individual and group preferences by designing separate kernels, i.e., group-based kernels and user-based kernels. More explicitly, as shown in Fig. 3, we first form the groups of users using trust relations; in a subsequent step, the individual preferences (ratings) for an item are included as an additional feature. For example, group \({g}_{i}\) rates an item \({i}_{1}\) with some rating \({r}_{ig1}\), and a user \({u}_{i}\in {g}_{i}\) rates the same item \({i}_{1}\) with a rating \({r}_{ig2}\). The ratings \({r}_{ig1}\) and \({r}_{ig2}\) thus represent the group-based and user-based preferences (for users belonging to particular groups) that the recommender system models to generate future recommendations. To add this information to KGR, we introduce separate kernels for users and combine them with the group-based kernels to predict the ratings.
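The two-step idea described above (form trust-based groups, then keep each member's own rating alongside the group rating) can be sketched as follows. This is only an illustration under stated assumptions: a group is taken to be a truster plus the users they trust, and the group rating is taken to be the mean of the members' ratings. Neither the grouping rule nor the aggregation strategy is confirmed by the text, and the function names are hypothetical.

```python
from collections import defaultdict


def build_trust_groups(trust_edges):
    """Map each truster to a group containing the truster and trusted users.

    trust_edges: iterable of (truster, trustee, value) with value 1 = trust.
    """
    groups = defaultdict(set)
    for truster, trustee, value in trust_edges:
        groups[truster].add(truster)
        if value == 1:
            groups[truster].add(trustee)
    return dict(groups)


def group_rating(group, item, ratings):
    """Assumed aggregation: mean rating of the group members who rated the item."""
    member_ratings = [ratings[(user, item)] for user in sorted(group)
                      if (user, item) in ratings]
    if not member_ratings:
        return None  # No member of the group rated this item.
    return sum(member_ratings) / len(member_ratings)
```

For a user in a group, the pair (group rating, own rating) for the same item corresponds to the two ratings \({r}_{ig1}\) and \({r}_{ig2}\) in the example above.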

Similarly, a user kernel is defined as:

This source of user preferences for items can be integrated into KGR by combining kernels. This combination is done linearly as follows:

Moreover, the convex integration of both types of kernels is given in Eq. (21):

In Eq. (21), it is assumed that \({\beta }_{group\_rat}+{\beta }_{user}=1\); hence, both parameters are tuned within the range of 0.0 to 1.0. Integrating the kernels in this manner leads to the following representation of vectors:

The integration of these kernels is done either linearly or non-linearly with the help of additive and multiplicative models. In the additive model, the concatenation of kernels is done with the help of Eqs. (20) and (22):

In the above Eq. (23), the symbol \(\oplus\) denotes the sum of the vectors of features. In the multiplicative model, the integration of kernels can also be done non-linearly as follows:

And

where the symbol \( \cdot\) denotes the point-wise multiplication while \(\otimes\) denotes the tensor multiplication. This type of kernel integration will result in the aggregation of user individual preferences as well as trust-based group preferences.
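The link between the feature-level operations and the resulting kernels can be checked numerically. The sketch below assumes that \(\oplus\) acts as a concatenation (direct sum) of weighted feature vectors and that \(\otimes\) is the flattened outer product; under these assumptions the additive model yields a \(\beta\)-weighted sum of the group and user kernels, and the multiplicative model yields their product. The function names and the square-root weighting are illustrative choices, not taken from the equations above.

```python
import math


def dot(x, y):
    """Inner product of two equally long vectors."""
    return sum(a * b for a, b in zip(x, y))


def direct_sum(phi_group, phi_user, beta_group=0.5):
    """Additive model: concatenate feature vectors scaled by sqrt(beta)."""
    beta_user = 1.0 - beta_group  # convex weights, as assumed for Eq. (21)
    return ([math.sqrt(beta_group) * v for v in phi_group]
            + [math.sqrt(beta_user) * v for v in phi_user])


def tensor_product(phi_group, phi_user):
    """Multiplicative model: flattened outer product of the feature vectors."""
    return [g * u for g in phi_group for u in phi_user]


# Two (group, user) feature pairs with made-up values.
g1, u1 = [1.0, 2.0], [3.0, 0.0, 1.0]
g2, u2 = [0.5, -1.0], [2.0, 1.0, 1.0]
k_group, k_user = dot(g1, g2), dot(u1, u2)

additive = dot(direct_sum(g1, u1), direct_sum(g2, u2))
multiplicative = dot(tensor_product(g1, u1), tensor_product(g2, u2))
# additive equals 0.5 * k_group + 0.5 * k_user (up to rounding);
# multiplicative equals k_group * k_user.
```

In practice this means the combined kernels can be computed directly from the group and user Gram matrices without ever building the concatenated or tensor-product feature vectors.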

4 Experiments and Discussions

This section includes several types of experimental results as well as detailed analysis. In addition, the dataset utilized in this study and the evaluation metrics used to assess the model's performance are included in this section.

4.1 Datasets

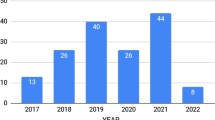

The MovieLens datasets [41] do not include information about users’ trust relations. Therefore, in this study, we employ datasets in which information about users’ trust in other users is available, namely FilmTrust [42] and CiaoDVD [43]. The rating scale of the FilmTrust dataset is in the range [0.5, 4]; we converted it to the standard rating scale of 1 to 5. The CiaoDVD dataset already uses a rating scale of 1 to 5. The FilmTrust dataset contains 1508 users with 1853 social connections, 2071 items, and 35,497 ratings. CiaoDVD has 7553 users with 111,781 social links, and its total numbers of items and ratings are 99,746 and 278,483 respectively. In these datasets, trust is indicated with a binary value: 1 indicates trust while 0 indicates distrust. A more detailed description of the datasets is given in Table 2, and Fig. 4 provides the rating distribution of both datasets.
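The conversion of FilmTrust ratings to the 1 to 5 scale is not spelled out in the text. One plausible reading is a linear (min-max) rescaling, sketched below; the function name and the choice of a linear map are assumptions.

```python
def rescale_rating(rating, old_min=0.5, old_max=4.0, new_min=1.0, new_max=5.0):
    """Linearly map a rating from [old_min, old_max] to [new_min, new_max]."""
    if not old_min <= rating <= old_max:
        raise ValueError(f"rating {rating} is outside [{old_min}, {old_max}]")
    scale = (new_max - new_min) / (old_max - old_min)
    return new_min + (rating - old_min) * scale
```

Under this mapping the lowest FilmTrust rating 0.5 becomes 1, the highest rating 4 becomes 5, and the midpoint 2.25 becomes 3.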

4.2 Evaluation Metrics

To assess the performance of the model in recommending items to groups, we employ several evaluation metrics: Root Mean Squared Error (RMSE), Precision, Recall, F1 measure, and Receiver Operating Characteristic (ROC) sensitivity. The mathematical definitions used to evaluate the recommender model are as follows:

4.2.1 Root Mean Square Error (RMSE)

RMSE is a frequently used assessment metric for recommender systems. It is closely related to the mean absolute error, but it squares each error before averaging and then takes the square root. It can be computed using Eq. (25):

In the above equation, the ratings predicted by the model are denoted by \({r}_{ig}^{\prime}\), the original ratings are denoted by \({r}_{ig}\), and \(|{T}_{test}|\) is the number of instances in the test set.
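As a worked illustration of the metric, the following sketch computes the standard RMSE over paired predicted and original ratings. The function name and the list-based interface are illustrative assumptions.

```python
import math


def rmse(predicted, actual):
    """Root mean squared error over paired predicted and original ratings."""
    if len(predicted) != len(actual) or not actual:
        raise ValueError("inputs must be non-empty and of equal length")
    squared_errors = [(p - a) ** 2 for p, a in zip(predicted, actual)]
    return math.sqrt(sum(squared_errors) / len(actual))
```

For example, predictions of 1 and 5 against true ratings of 3 and 3 give squared errors of 4 and 4, a mean of 4, and therefore an RMSE of 2.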

4.2.2 Precision

Precision is the likelihood that an item offered by the recommender agent is relevant, i.e., the fraction of recommended items that are relevant. It is represented mathematically in Eq. (26):

4.2.3 Recall

Recall is the likelihood that a relevant item is selected by the recommender agent, i.e., the fraction of all relevant items that appear among the recommendations. It is represented mathematically in Eq. (27):

4.2.4 F1 Measure

The F1 measure is another popular metric for assessing the performance of a recommender system. It combines precision and recall into a single score, their harmonic mean, and thus reflects how often the system recommends desirable items to users. Mathematically, it can be computed by Eq. (28):
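The three ranking metrics above can be sketched together for a top-N list. The code uses the standard definitions (hits over list length, hits over relevant items, and their harmonic mean); the function name, the cutoff parameter `n`, and the set-based notion of relevance are assumptions for illustration.

```python
def precision_recall_f1_at_n(recommended, relevant, n=20):
    """Compute precision@n, recall@n, and F1@n for one user or group.

    recommended: ranked list of item ids; relevant: set of relevant item ids.
    """
    top_n = recommended[:n]
    hits = sum(1 for item in top_n if item in relevant)
    precision = hits / len(top_n) if top_n else 0.0
    recall = hits / len(relevant) if relevant else 0.0
    if precision + recall == 0:
        return precision, recall, 0.0
    f1 = 2 * precision * recall / (precision + recall)
    return precision, recall, f1
```

For instance, if two of four recommended items are relevant and there are three relevant items in total, precision is 1/2, recall is 2/3, and F1 is 4/7.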

4.2.5 Receiver Operating Characteristics Sensitivity (ROC)

This metric evaluates the recommender system's capability of discriminating between good and bad items. It determines the likelihood with which a recommender system accepts the good items. Its values range from 0 to 1, i.e., values close to 0 are bad while values close to 1 are good. To evaluate the recommender system with this metric, we first determine which items are good for users and which are bad. To compute the ROC sensitivity in this study, we used the same approach as in [44].

4.3 Evaluation Criteria and Experimental Settings

To evaluate the performance of KGR, both datasets, FilmTrust and CiaoDVD, are randomly divided using an 80–20 train-test split, with three- and five-fold cross-validation. Moreover, during training, the training set is further divided to create a validation set for monitoring the performance of KGR. The implementation is done in MATLAB on an AMD A8-7410 APU with AMD Radeon R5 Graphics and 8 GB RAM. Some parameters of the model are given in Table 3. As shown there, we experimented with different kernels: the input kernels are linear, polynomial, Gaussian, and Poly Gaussian, while the output kernel type is set to Gaussian. The total number of iterations is set to 2000, and the value of C (which penalizes the slack variables in the Lagrange formulation) is set to 20. The values of \({\beta }_{group\_rat}\) and \({\beta }_{user}\) are both 0.5, and L2 normalization is used for dual bounds.
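A random k-fold partition of this kind can be sketched as below. With five folds, each test fold holds 20% of the instances, which matches the 80–20 split mentioned above; how the three-fold runs relate to that split is not specified, so the sketch simply takes the number of folds as a parameter. The function name and the fixed seed are illustrative assumptions, and the original experiments were run in MATLAB rather than with this code.

```python
import random


def k_fold_indices(n_samples, k, seed=0):
    """Yield (train_indices, test_indices) pairs for random k-fold validation."""
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)  # seeded for reproducibility
    folds = [indices[i::k] for i in range(k)]  # k near-equal disjoint folds
    for i in range(k):
        test = folds[i]
        train = [idx for j, fold in enumerate(folds) if j != i for idx in fold]
        yield train, test
```

A validation set for monitoring training can be carved out of each `train` list in the same way, for example by calling the function again on its length.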

4.4 Results and Discussions

To evaluate the proposed trust-based recommender system, we ran the experiments on each dataset separately and computed the metrics defined in the previous section. More precisely, we evaluate the recommender in both an item-based and a group-based version, denoted by \({KGR}_{ib}\) and \({KGR}_{gb}\). We then evaluate the variant named "Hybrid KGR", in which single-user preferences for items are added as additional information through user kernels. This information is integrated using the multiplicative and additive kernel models, denoted Hybrid \({KGR}_{ib\otimes }\) and Hybrid \({KGR}_{ib\oplus }\) respectively. Moreover, the proposed method is validated using 2-, 3-, 4-, and 5-fold cross-validation. The results of all these experimental settings on both datasets are given in Tables 4 and 5. More precisely, the RMSE with \({KGR}_{ib}\) on the FilmTrust dataset is about 0.3306, while on the CiaoDVD dataset it is about 0.3013, which is lower than on FilmTrust. The group-based version \({KGR}_{gb}\) exhibits similar error values on both datasets. Overall, the low RMSE scores on both datasets indicate that KGR performs well in predicting the ratings that groups give to items. Furthermore, the ranking metrics, i.e., precision, recall, F-score, and TopNROC, are also good on both datasets.

More precisely, the best precision@20 on the FilmTrust dataset is about 0.6704 with \({KGR}_{ib}\) and 0.6439 with \({KGR}_{gb}\). Similarly, on the CiaoDVD dataset, the best values of precision@20 with \({KGR}_{ib}\) and \({KGR}_{gb}\) are 0.6857 and 0.5763 respectively. By these figures, the item-based version achieves higher precision@20 than the group-based version on both datasets; in the best case, about 68.57% of the items on the top-20 recommendation list are relevant to the users belonging to the groups.

Similarly, the topNRoc on both datasets with \({{{K}}{{G}}{{R}}}_{{{i}}{{b}}}\) and \({{{K}}{{G}}{{R}}}_{{{g}}{{b}}}\) is also very good. The highest topNRoc@20 of 0.7996 is observed with the CiaoDVD dataset using \({{{K}}{{G}}{{R}}}_{{{g}}{{b}}}\). Similarly, the value of topNRoc@20 on FilmTrust using \({{{K}}{{G}}{{R}}}_{{{g}}{{b}}}\) is about 0.7758. These highest values of topNRoc@20 show the effective capability of the recommender algorithm in differentiating the good and bad items for the users belonging to several groups based on trust relations.

Subsequently, in the next stage, we have examined the performance of the proposed algorithm using the hybrid approach in which both user-group-level and individual-level preferences are exploited using the addition and multiplication of kernels. Initially, each user's group based on trust relationships is learned using group kernels, and later on, the additional information of user individual preferences for items is added using separate kernels which are then combined into group kernels using additive and multiplicative models.

The results of this hybrid approach using both additive and multiplicative methods are also given in Tables 4 and 5. On the FilmTrust dataset, the lowest RMSE value of 0.4244 is observed with the additive model, whereas on the CiaoDVD dataset the lowest RMSE value of 0.3385 is observed with the multiplicative model. These are the best results among the proposed hybrid variants. However, when the standalone KGR is compared with the hybrid method, the standalone method performs better. The reason is that the hybrid method exploits both individual-level and group-level preferences to forecast unknown ratings, which makes it difficult to balance user-trust-based preferences against actual user preferences. Furthermore, the highest topNRoc@20 on the FilmTrust dataset, obtained with the multiplicative hybrid method Hybrid \({KGR}_{ib\otimes }\), is about 0.5633, and the highest topNRoc@20 on the CiaoDVD dataset, obtained with the additive hybrid method Hybrid \({KGR}_{ib\oplus }\), is 0.5383.

In addition, the mean absolute error (MAE) values during training of "KGR" and "Hybrid KGR" using 2-, 3-, 4-, and 5-fold cross-validation on both datasets are depicted in Fig. 5. The comparison between different types of kernels (linear, polynomial, Gaussian, and polyGaussian) in terms of RMSE and precision@20 is presented in Fig. 6 for both datasets and methods. The polynomial kernel yields both the lowest RMSE and the highest precision@20. Hence, the polynomial kernel was found to be the best kernel for learning a multi-linear mapping between two vector spaces to forecast user preferences.

Furthermore, the impact of user trust on the RMSE is studied in Fig. 7, which shows that extending a user's trust circle increases performance and reduces errors. This is because increasing the number of trustees reduces the sparsity of the user's (truster's) rating data, allowing the recommender system to create more personalized recommendations. In addition, the contribution of each kernel (user-based and group-based) can be adjusted through Eq. (21) by setting different values of β for the two kernels. The RMSE obtained with different values of β under both the multiplicative and additive models is shown in Fig. 8, with the best values indicated. From Fig. 8, we can observe that with the multiplicative model, the best value of \(({\beta }_{group\_rat}, {\beta }_{user})\) for the FilmTrust dataset is (0.5, 0.5), while for CiaoDVD the best value is (0.6, 0.4). With the additive model, the best value of \(({\beta }_{group\_rat}, {\beta }_{user})\) for both the FilmTrust and CiaoDVD datasets is (0.9, 0.1).

Fig. 5 Error (mean absolute error) during training of “KGR” and “Hybrid KGR” with 2-, 3-, 4-, and 5-fold cross-validation. (a) KGR on the FilmTrust dataset with 3- and 5-fold cross-validation using both group- and item-based versions; (b) Hybrid KGR on the FilmTrust dataset with 3- and 5-fold cross-validation using both additive and multiplicative models; (c) KGR on the FilmTrust dataset with 2- and 4-fold cross-validation using both group- and item-based versions; (d) Hybrid KGR on the FilmTrust dataset with 2- and 4-fold cross-validation using both additive and multiplicative models; (e) KGR on the CiaoDVD dataset with 3- and 5-fold cross-validation using both group- and item-based versions; (f) Hybrid KGR on the CiaoDVD dataset with 3- and 5-fold cross-validation using both additive and multiplicative models; (g) KGR on the CiaoDVD dataset with 2- and 4-fold cross-validation using both group- and item-based versions; (h) Hybrid KGR on the CiaoDVD dataset with 2- and 4-fold cross-validation using both additive and multiplicative models

Fig. 6 Performance comparison in terms of precision@20 and RMSE using different types of input kernels. (a) RMSE comparison on the FilmTrust and CiaoDVD datasets using KGR; (b) precision@20 comparison on the FilmTrust and CiaoDVD datasets using KGR; (c) precision@20 comparison on FilmTrust using Hybrid KGR; (d) RMSE comparison on FilmTrust using Hybrid KGR; (e) precision@20 comparison on CiaoDVD using Hybrid KGR; (f) RMSE comparison on CiaoDVD using Hybrid KGR

Fig. 7 Impact on error values of increasing the trust factor (i.e., the trust relations of each user). (a–d) Performance comparison in terms of error values on the FilmTrust dataset; (e–h) performance comparison in terms of error values on the CiaoDVD dataset; (i–l) mean error values on both datasets

To further demonstrate the value of the proposed method, we compared KGR with the original KMR method [15]. The original KMR employs standalone user and item kernels to forecast the ratings, whereas the proposed KGR takes trust relations into account: for each user, recommendations are generated using their trust relations with other users together with their standalone preferences for items. The comparison results between the original KMR and the proposed methods for both datasets are given in Tables 6 and 7. As shown in Table 6, the RMSE of both the item-based \({KMR}_{ib}\) and user-based \({KMR}_{ub}\) versions is high across the different cross-validation folds. With the proposed group-based kernels, this error is reduced to 0.3303 on the FilmTrust dataset under the same cross-validation folds. Among all the proposed variants, \({KGR}_{gb}\) shows good performance. Similarly, when the hybrid approaches are compared with the original KMR versions \({KMR}_{ib}\) and \({KMR}_{ub}\), the proposed hybrid approach also performs well in terms of RMSE. In terms of the other metrics, i.e., precision@20, recall@20, F1 measure@20, and TopNRoc@20, the proposed method also shows a good improvement over the original method. More explicitly, the highest precision@20 and TopNRoc@20 of 0.6439 and 0.7607 are achieved with \({KGR}_{gb}\), which is better than the original KMR versions \({KMR}_{ib}\) and \({KMR}_{ub}\) having 0.4446 and 0.4400.

The identical set of experiments was carried out on the second dataset, CiaoDVD, as shown in Table 7. The proposed method again shows a good improvement over the original KMR: the RMSE is reduced to 0.3013 with the proposed method, whereas with the original KMR this error is 1.8370. Similarly, for topNRoc@20 the proposed method shows a substantial improvement, with a score of 0.7948 compared with 0.4400 for the original method.

Tables 8 and 9 show the results with the parameter C, i.e., the trade-off parameter between regularization and error, set at 20. As a parameter study, we also computed the results with C set to 10 (i.e., less penalization). The larger the value, the more heavily the slack variables in the Lagrangian formulation are penalized. Because C should be neither too high nor too low, a trade-off must be found.

All these results indicate that modeling user preferences using trust relationships solves the problem of cold start and data sparsity to some extent. For example, suppose a user does not provide ratings for the items, resulting in data sparsity.

To overcome such sparsity, user preferences can be modeled using a trust relationship that indicates their interests. Similarly, in the cold-start scenario, the user provides only a very small number of ratings, so the recommender algorithm does not have sufficient information about that user's preferences. In this study, the suggested KGR therefore models the preferences by utilizing trust relationships, i.e., the trust values that a user provides for other users, to learn about user preferences and overcome the data sparsity associated with that user. Following that, a comparison with other conventional and popular recommendation methods is also performed, as shown in Fig. 9. The algorithms used for comparison are listed in Table 10. The RMSE values resulting from these methods are first sorted before being shown as a line curve. As depicted in Fig. 9, the proposed algorithm has the lowest RMSE in comparison with these algorithms. Figure 9a shows the comparison on the FilmTrust dataset, while Fig. 9b shows the comparison on the CiaoDVD dataset. Based on this comparison, the proposed method achieves the lowest error when forecasting user preferences.

Furthermore, the proposed method has been compared with existing state-of-the-art approaches in the literature, as shown in Table 11. In existing studies, researchers have designed different methods to improve the performance of recommender systems by addressing challenges such as cold start, data sparsity, and data imbalance in datasets. As given in Table 11, various researchers use trust relationships to improve the effectiveness of recommender systems and produce good outcomes. For instance, Molaei et al. [54] suggest Collaborative Deep Forest Learning (CDFL), in which social latent features are learned and then integrated into the CF approach in the next stage based on a cascade tree forest, with an RMSE of about 0.857. Similarly, Zhu et al. [58] employ a deep learning approach of neural collaborative filtering along with an attention mechanism and report an RMSE of 0.4966. Bathla et al. [53] also employed a deep learning model along with a shared layer of auto-encoders and performed experiments on trust-based datasets. These results indicate that the proposed method compares favorably with state-of-the-art methods, since it achieves the lowest RMSE value of 0.30313 on the CiaoDVD dataset and 0.3294 on the FilmTrust dataset. Moreover, the proposed model is capable of addressing the data sparsity and cold-start issues by making personalized recommendations using the trust relations of the users. For example, if a new user creates a profile but has not yet rated any items, the system may gather information about their tastes from other users they trust.
In addition, another important strength of the proposed work is that kernel tricks make it flexible enough to integrate multiple types of additional features and information, such as contextual variables, trust information, personality traits, and text-based features. Since the algorithm is fairly adaptable, it can quickly be modified to be user-based or item-based. Moreover, an intriguing aspect of our technique is that we map the residues in the ratings onto a density function that represents the residue's uncertainties. These measures aid in performance improvement, especially when dealing with sparse data.

The above-mentioned findings lead us to the answers to the research questions identified in Sect. 1. The answer to the first research question is that involving the trust data of users improves the performance of the KMR algorithm by a significant margin. The answer to the second research question is that the sparsity and cold-start issues are adequately addressed by forming groups of users who trust one another. Lastly, the answer to the last research question is that individual user preferences are preserved by designing both individual- and group-level kernels and combining them using additive and multiplicative models.

4.5 Implications of the Research

The suggested extended version of KMR, referred to as “KGR”, produced encouraging findings with major implications and contributions to the existing literature. In general, researchers working in the domain of recommender systems keep proposing methodologies that improve recommender models to handle challenges such as cold start, data sparsity, scalability, and many more. As an additional contribution, this study presents another variant of KMR that involves users' trust data. The results presented in Tables 4, 5, 6, 7, 8, and 9 show that adding a trust factor while formulating a group improves the traditional KMR. The sparsity of the rating matrix is addressed by including user trust in ratings provided by other users. Moreover, Fig. 9 and Table 11 also demonstrate the effectiveness of the proposed KGR over different algorithms and state-of-the-art studies. The study points out the KGR algorithm's effectiveness, which allows for training the recommendation system in linear time. One interesting aspect of this technique is that we map the residues in the ratings onto a density function that captures the residue's uncertainty. We regard the mapping \({W}_{g}\phi \left({q}_{i}\right)\) for unknown residues as a rough estimate of a density function for the residue. Although this function is not a true density (it becomes negative in some locations and is not normalized), it is highly useful to treat its positive portion as a density function from which the mean, mode, median, and variance are calculated. These measures aid in performance improvement, especially when dealing with sparse data. Such advancements at the algorithm level open the way for a novel type of recommendation system that emphasizes personalization and effectiveness by addressing these challenges. As a result, one of the key research implications of this study is that it contributes to the development of research on this topic.
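The idea of treating the positive portion of the mapped function as a density can be sketched on a discrete rating grid. The code below clips negative values to zero, normalizes the remainder, and then computes the mean, mode, median, and variance. The discrete grid, the function name, and the example values are assumptions for illustration; the text does not state how these statistics are computed in KGR.

```python
def density_summary(ratings, values):
    """Summarize the positive part of a function as a discrete density.

    ratings: ascending rating grid; values: function value at each rating.
    """
    positive = [max(v, 0.0) for v in values]  # keep only the positive portion
    total = sum(positive)
    if total <= 0:
        raise ValueError("function has no positive mass")
    probs = [p / total for p in positive]  # normalize so the mass sums to 1
    mean = sum(r * p for r, p in zip(ratings, probs))
    variance = sum(p * (r - mean) ** 2 for r, p in zip(ratings, probs))
    mode = ratings[probs.index(max(probs))]
    median, cumulative = ratings[-1], 0.0
    for r, p in zip(ratings, probs):
        cumulative += p
        if cumulative >= 0.5:  # first rating reaching half the mass
            median = r
            break
    return {"mean": mean, "mode": mode, "median": median, "variance": variance}


summary = density_summary([1, 2, 3, 4, 5], [-0.2, 0.0, 1.0, 2.0, 1.0])
```

In this example the negative value at rating 1 is discarded, the remaining mass concentrates around rating 4, and the variance gives a rough indication of how uncertain that prediction is.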

Furthermore, the proposed KGR is scalable for use in various systems since it achieves faster convergence on diverse large-scale datasets while maintaining high performance and low error values in forecasting user tastes, expressed as ratings for different items. Depending on the underlying domain, these items can be of any sort, such as movies, products, and so on. In addition, the hybrid version of the proposed method is intended to describe an individual’s liking using both the user kernel and the group kernel, which is accomplished through the multiplicative and additive models. Because it is built on kernels, the suggested method is also flexible enough to include different information, such as user context. The cold-start scenario, in which users rate only a few items, is likewise addressed by formulating the problem at the group level with trust data. More specifically, the presented method tackles the challenge by forming groups of people who trust one another, meaning that they rely on the ratings provided by the other users. The strong performance of the proposed KGR gives it a competitive edge over other algorithms and may encourage researchers to use and develop kernel mapping recommender systems in future research. Another noteworthy aspect of this research is its adaptability in including many types of information through kernels, such as context (covering both the user and the item), trust, or other information.

Despite its good performance, the proposed work has some limitations. One potential shortcoming is that it does not adapt to changing user interests over time. To make it more effective, we will integrate it with reinforcement learning frameworks that adapt to evolving user interests. Another shortcoming is that KGR considers only user trust when forming groups of users to generate recommendations; additional factors such as personality traits, demographics, emotional states, and so on could also be included. Combining these factors may increase the effectiveness of KGR in delivering recommendations. However, there are no publicly available datasets that take all of these features, including trust, into consideration. Therefore, in the future, we plan to develop our own dataset that takes all of these factors into account.

5 Conclusion

Recommender systems are very helpful tools since they guide users in making decisions and in selecting a subset of items from a large item space according to their interests. These systems employ the previous interactions of the users to generate a new set of recommendations. In contrast to single-user modeling, group-based recommendation has become a promising research focus. Therefore, to best model user preferences, this study uses user-trust relations to form groups and designs group kernels to forecast the ratings for unrated items. In addition, it is necessary to preserve the individual preferences of a user belonging to a particular group. To address this, we design a hybrid approach in which additive and multiplicative models are used to integrate both group-based kernels and user-based kernels. The user kernels include information on single-user preferences, whereas the group kernels hold information concerning group similarities. Furthermore, the proposed method is validated on the trust-based FilmTrust and CiaoDVD datasets, and shows good improvement over the single-user modeling employed in the original KMR algorithm as well as over seventeen traditional algorithms and state-of-the-art studies.

References

Schafer JB, Konstan JA, Riedl J (2001) E-commerce recommendation applications. Data Min Knowl Disc 5:115–153

Dara S, Chowdary CR, Kumar C (2020) A survey on group recommender systems. J Intell Inf Syst 54:271–295

Alhijawi B, Kilani Y (2016) Using genetic algorithms for measuring the similarity values between users in collaborative filtering recommender systems. In: 2016 IEEE/ACIS 15th international conference on computer and information science (ICIS), pp 1–6

Alhijawi B, Kilani Y (2020) The recommender system: a survey. Int J Adv Intell Paradigms 15:229–251

Garcia I, Sebastia L, Onaindia E, Guzman C (2009) A group recommender system for tourist activities. In: International conference on electronic commerce and web technologies, pp 26–37

Jeong HJ, Lee KH, Kim MH (2021) DGC: Dynamic group behavior modeling that utilizes context information for group recommendation. Knowl-Based Syst 213:106659

Masthoff J (2004) Group modeling: selecting a sequence of television items to suit a group of viewers. Personalized digital television. Springer, Dordrecht, pp 93–141

Quijano-Sanchez L, Recio-Garcia JA, Diaz-Agudo B, Jimenez-Diaz G (2013) Social factors in group recommender systems. ACM Trans Intell Syst Technol (TIST) 4:1–30

Quijano-Sanchez L, Recio-Garcia JA, Diaz-Agudo B (2010) Personality and social trust in group recommendations. In: 2010 22nd IEEE international conference on tools with artificial intelligence, pp 121–126

Musto C, de Gemmis M, Lops P, Narducci F, Semeraro G (2022) Semantics and content-based recommendations. In: Ricci F, Rokach L, Shapira B (eds) Recommender systems handbook. Springer, New York, NY, pp 251–298

Gazdar A, Hidri L (2020) A new similarity measure for collaborative filtering based recommender systems. Knowl-Based Syst 188:105058

Safoury L, Salah A (2013) Exploiting user demographic attributes for solving cold-start problem in recommender system. Lect Notes Softw Eng 1:303–307

Sejwal VK, Abulaish M (2020) CRecSys: a context-based recommender system using collaborative filtering and LOD. IEEE Access 8:158432–158448

Villegas NM, Sánchez C, Díaz-Cely J, Tamura G (2018) Characterizing context-aware recommender systems: a systematic literature review. Knowl-Based Syst 140:173–200

Ghazanfar MA, Prügel-Bennett A, Szedmak S (2012) Kernel-mapping recommender system algorithms. Inf Sci 208:81–104

Azadjalal MM, Moradi P, Abdollahpouri A, Jalili M (2017) A trust-aware recommendation method based on Pareto dominance and confidence concepts. Knowl-Based Syst 116:130–143

Massa P, Avesani P (2007) Trust-aware recommender systems. In: Proceedings of the 2007 ACM conference on recommender systems, pp 17–24

Moradi P, Ahmadian S (2015) A reliability-based recommendation method to improve trust-aware recommender systems. Expert Syst Appl 42:7386–7398

Ahmadian S, Ahmadian M, Jalili M (2022) A deep learning based trust-and tag-aware recommender system. Neurocomputing 488:557–571

Ko H, Lee S, Park Y, Choi A (2022) A survey of recommendation systems: recommendation models, techniques, and application fields. Electronics 11:141

Wang D, Liang Y, Xu D, Feng X, Guan R (2018) A content-based recommender system for computer science publications. Knowl-Based Syst 157:1–9

Bhagavatula C, Feldman S, Power R, Ammar W (2018) Content-based citation recommendation. arXiv preprint arXiv:1802.08301

Neloy AA, Shafayat Oshman M, Islam MM, Hossain MJ, Zahir ZB (2019) Content-based health recommender system for ICU patient. In: International conference on multi-disciplinary trends in artificial intelligence, pp 229–237

Singla R, Gupta S, Gupta A, Vishwakarma DK (2020) FLEX: a content based movie recommender. In: 2020 international conference for emerging technology (INCET), pp 1–4

Renuka S, Raj Kiran GSS, Rohit Palakodeti (2021) An unsupervised content-based article recommendation system using natural language processing. In: Jeena Jacob I, Shanmugam SK, Piramuthu S, Falkowski-Gilski P (eds) Data intelligence and cognitive informatics: proceedings of ICDICI 2020. Springer, Singapore, pp 165–180

Xiong R, Wang J, Zhang N, Ma Y (2018) Deep hybrid collaborative filtering for web service recommendation. Expert Syst Appl 110:191–205

Xue F, He X, Wang X, Xu J, Liu K, Hong R (2019) Deep item-based collaborative filtering for top-n recommendation. ACM Trans Inf Syst (TOIS) 37:1–25

Wang R, Wu Z, Lou J, Jiang Y (2022) Attention-based dynamic user modeling and deep collaborative filtering recommendation. Expert Syst Appl 188:116036

Nozari RB, Koohi H (2020) A novel group recommender system based on members’ influence and leader impact. Knowl-Based Syst 205:106296

Guo Z, Zeng W, Wang H, Shen Y (2019) An enhanced group recommender system by exploiting preference relation. IEEE Access 7:24852–24864

Ortega F, Hernando A, Bobadilla J, Kang JH (2016) Recommending items to group of users using matrix factorization based collaborative filtering. Inf Sci 345:313–324

Sadeghi M, Asghari SA, Pedram MM (2020) An improved method multi-view group recommender system (IMVGRS). In: 2020 8th Iranian joint congress on fuzzy and intelligent systems (CFIS), pp 127–132

Najafian S, Draws T, Barile F, Tkalcic M, Yang J, Tintarev N (2021) Exploring user concerns about disclosing location and emotion information in group recommendations. In: Proceedings of the 32nd ACM conference on hypertext and social media, pp 155–164

Najafian S, Draws T, Tkalcic M, Yang J, Tintarev N (2021) Factors influencing privacy concern for explanations of group recommendation. In: Proceedings of the 29th ACM conference on user modeling, adaptation and personalization, pp 14–23

Wang X, Liu Y, Lu J, Xiong F, Zhang G (2019) TruGRC: Trust-aware group recommendation with virtual coordinators. Future Gener Comput Syst 94:224–236

Song Y, Ma W, Liu T (2020) Deep group recommender system model based on user trust. In: 2020 13th international congress on image and signal processing, biomedical engineering and informatics (CISP-BMEI), pp 1015–1019

Choudhary N, Bharadwaj K (2019) Leveraging trust behaviour of users for group recommender systems in social networks. Integrated intelligent computing, communication and security. Springer, New York, pp 41–47

Iqbal M, Ghazanfar MA, Sattar A, Maqsood M, Khan S, Mehmood I et al (2019) Kernel context recommender system (KCR): a scalable context-aware recommender system algorithm. IEEE Access 7:24719–24737

Joachims T (2006) Training linear SVMs in linear time. In: Proceedings of the 12th ACM SIGKDD international conference on knowledge discovery and data mining, pp 217–226

Szedmak S, Ni Y, Gunn SR (2010) Maximum margin learning with incomplete data: learning networks instead of tables. In: Proceedings of the first workshop on applications of pattern analysis, pp 96–102

MovieLens datasets. Available online: https://grouplens.org/datasets/movielens. Accessed 30 June 2023

FilmTrust dataset. Available online: https://guoguibing.github.io/librec/datasets.html. Accessed 30 June 2022

Guo G, Zhang J, Thalmann D, Yorke-Smith N (2014) ETAF: an extended trust antecedents framework for trust prediction. In: 2014 IEEE/ACM international conference on advances in social networks analysis and mining (ASONAM 2014), pp 540–547

Ghazanfar M, Prugel-Bennett A (2010) Building switching hybrid recommender system using machine learning classifiers and collaborative filtering. IAENG Int J Comput Sci 37:1–16

Rendle S, Freudenthaler C, Gantner Z, Schmidt-Thieme L (2012) BPR: Bayesian personalized ranking from implicit feedback. arXiv preprint arXiv:1205.2618

Lee D, Seung HS (2000) Algorithms for non-negative matrix factorization. Adv Neural Inf Process Syst 13:1–7

George T, Merugu S (2005) A scalable collaborative filtering framework based on co-clustering. In: Fifth IEEE international conference on data mining (ICDM'05), p 4

Lemire D, Maclachlan A (2005) Slope one predictors for online rating-based collaborative filtering. In: Proceedings of the 2005 SIAM international conference on data mining, pp 471–475

Mnih A, Salakhutdinov RR (2007) Probabilistic matrix factorization. Adv Neural Inf Process Syst 20:1–8

Koren Y, Bell R, Volinsky C (2009) Matrix factorization techniques for recommender systems. Computer 42:30–37

Koren Y (2008) Factorization meets the neighborhood: a multifaceted collaborative filtering model. In: Proceedings of the 14th ACM SIGKDD international conference on knowledge discovery and data mining, pp 426–434

Ricci F, Rokach L, Shapira B, Kantor PB (2010) Recommender systems handbook, 1st edn. Springer-Verlag, New York

Bathla G, Aggarwal H, Rani R (2020) AutoTrustRec: recommender system with social trust and deep learning using autoEncoder. Multimed Tools Appl 79:20845–20860

Molaei S, Havvaei A, Zare H, Jalili M (2021) Collaborative deep forest learning for recommender systems. IEEE Access 9:22053–22061

Nudrat S, Khan HU, Iqbal S, Talha MM, Alarfaj FK, Almusallam N (2022) Users’ rating predictions using collaborating filtering based on users and items similarity measures. Comput Intell Neurosci. https://doi.org/10.1155/2022/2347641

Butt MH, Zhang X, Khan GA, Masood A, Butt MA, Khudayberdiev O (2019) A novel recommender model using trust based networks. In: 2019 16th international computer conference on wavelet active media technology and information processing, pp 81–84

Fang G, Lei S, Jiang D, Liping W (2018) Group recommendation systems based on external social-trust networks. Wirel Commun Mobile Comput. https://doi.org/10.1155/2018/6709607

Zhu J, Li Z, Yue C, Liu Y (2019) Trust-aware group recommendation with attention mechanism in social network. In: 2019 15th international conference on mobile ad-hoc and sensor networks (MSN), pp 271–276

Li Y, Tong X (2022) Trust recommendation based on deep deterministic strategy gradient algorithm. IEEE Access 10:48274–48282

Author information

Contributions

M.M. and F.A. finalized the problem formulation; F.A. and M.B. completed the formal analysis; F.A. and M.M. carried out the implementation; M.M. and F.A. finalized the experiments and results; F.A. and M.M. finalized the figures and typesetting; M.B. and M.M. wrote the paper; M.B. and M.M. reviewed and finalized the draft.

Ethics declarations

Conflict of interest

The authors declare no conflict of interest.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Bukhari, M., Maqsood, M. & Aadil, F. KGR: A Kernel-Mapping Based Group Recommender System Using Trust Relations. Neural Process Lett 56, 201 (2024). https://doi.org/10.1007/s11063-024-11639-4
