Abstract

Transfer learning has made significant advancements; however, overfitting continues to pose a major challenge. Data augmentation has emerged as a highly promising technique to counteract this challenge. Current data augmentation methods are fixed in nature, requiring manual determination of the appropriate intensity prior to training, which entails substantial computational costs. Additionally, as the model approaches convergence, static data augmentation strategies can become suboptimal. In this paper, we introduce Dynamic Data Augmentation (DynamicAug), a method that autonomously adjusts the intensity of data augmentation according to the convergence state of the model. During each iteration of the model’s forward pass, we use a Gaussian-distribution-based sampler to stochastically sample the current intensity of data augmentation. To ensure that the sampled intensity is aligned with the convergence state of the model, we introduce a learnable expectation to the sampler and update the expectation iteratively. To assess the convergence state of the model, we introduce a novel loss function called the convergence loss. Through extensive experiments conducted over 27 vision datasets, we demonstrate that DynamicAug can significantly enhance the performance of existing transfer learning methods.

1 Introduction

Transfer learning has gained significant traction in the field of computer vision for a multitude of visual tasks. These tasks encompass image classification [1, 2], image segmentation [3, 4], and object detection [5, 6]. By leveraging transfer learning, practitioners can capitalize on pre-existing models that have been trained on large-scale datasets [5, 7,8,9]. This approach allows for the extraction of valuable features and knowledge, which can subsequently be transferred or fine-tuned to tackle specific tasks with limited available data. Consequently, transfer learning has emerged as a powerful technique, enabling enhanced performance and efficiency in various computer vision applications.

Fine-tuning pre-trained models can yield commendable performance. However, fine-tuning the entire model is often impractical [10]: it demands substantial computational resources and time, which can be prohibitive in real-world scenarios. To address this challenge, researchers have explored strategies such as transfer learning with partial fine-tuning [10,11,12,13] or selective layer freezing. These techniques update only specific layers or parts of the model while keeping the other components frozen. By doing so, the computational burden is significantly reduced, and the fine-tuning process becomes more feasible. These approaches strike a balance between leveraging pre-trained knowledge and adapting the model to the target task with limited updates. They offer a practical compromise that uses resources efficiently while still achieving satisfactory performance in various computer vision tasks.

Nevertheless, the datasets employed in transfer learning are often limited, particularly in size or scope [14]. Consequently, models fine-tuned on such datasets are susceptible to either overfitting or undertraining. Several mitigation strategies have been proposed, among which data augmentation has emerged as a particularly effective technique. Data augmentation applies a variety of transformations to the input images during model training to increase the diversity of the training data. By exposing the model to a broader range of data, data augmentation enhances the model’s capacity for generalization and improves its robustness to previously unseen inputs. Commonly employed data augmentation techniques include mixup-based methods [15, 16], RandAugment [17], and others. It is worth noting that the Drop method [18] can also be regarded as an implicit form of data augmentation that combats overfitting.

Current fine-tuning paradigms require manual determination of the strength of these data augmentation hyperparameters prior to training [13, 19]. However, the fine-tuning process is highly sensitive to the hyperparameters of data augmentation. To achieve optimal fine-tuning performance, a considerable number of data augmentation hyperparameters need to be searched (e.g., the drop ratio in Drop and the mixing ratio in mixup). Manually tuning these hyperparameters is inefficient and requires significant resource expenditure. Additionally, the hyperparameters determined via manual search are typically static, meaning that they do not change during the whole fine-tuning process. The effectiveness of static hyperparameters for model fine-tuning is a topic of debate, since dynamic learning rates have been shown to be a superior approach.

Fig. 1 In contrast to conventional static and manually curated data augmentation strategies, DynamicAug leverages the model itself to explore dynamic data augmentation strategies. We applied three conventional data augmentation methods to LoRA respectively and transformed them into dynamic paradigms. The experimental results are presented on the right side of the figure. LoRA-DA and LoRA represent the experimental outcomes obtained by applying DynamicAug and the static data augmentation method, respectively. Each of these results represents the average outcome derived from three separate experiments involving the Mixup-based method, RandAugment, or Drop. The experimental results clearly indicate that dynamic data augmentation methods outperform traditional static methods by a significant margin.

To make model fine-tuning more thorough and to mitigate overfitting, we investigate the impact of dynamic augmentation in fine-tuning tasks and propose a dynamic, model-aware augmentation strategy named DynamicAug, which adaptively adjusts the data augmentation intensity based on the model’s convergence state. Unlike traditional static augmentation techniques, dynamic augmentation methods adjust the augmentation strategy during training based on real-time feedback and the current state of the model. The intuition behind DynamicAug originates from the observation that the model exhibits varying levels of convergence at different training stages. Factors such as the dataset, the model, and the training strategy can significantly influence the convergence states a model passes through during training. Consequently, each degree of convergence calls for a different level of regularization, and it is essential to adjust the regularization level dynamically based on the model’s convergence state.

To achieve this, we implement a criterion that dynamically adjusts the augmentation strategy every iteration. It relies on a novel loss function that evaluates the convergence state of the model during training, and it keeps track of the adjusted augmentation intensity for future retraining. In addition, to preserve the exploratory nature of the learnable augmentation strategy, the hyperparameters required for data augmentation are all sampled from the Gaussian Distribution based Data Augmentation Sampler (DAS). We use DAS to obtain dynamic data augmentation strategies. The entire training process is end-to-end, without additional computational overhead.

For the specific data augmentation strategies, we primarily focus on the dynamic implementation of commonly employed static data augmentation techniques, including mixup-based methods, RandAugment, and Drop. The intensity of each method is controlled by a specific parameter. For the mixup-based methods, the mixing ratio regulates the intensity. For RandAugment, the magnitude controls the intensity. Lastly, for Drop, the drop ratio in each Transformer block regulates the intensity. By dynamically modifying the augmentation intensity, these methods are effective in addressing challenges related to overfitting and limited training data, thereby facilitating model training.

We evaluate DynamicAug on 27 classification datasets [20,21,22,23,24] in total and conduct extensive experiments on transfer learning tasks, covering different pre-trained weights, different augmentation methods, and different transfer learning models. In addition, DynamicAug requires few additional parameters and is easily extended to various model families. Figure 1 demonstrates the disparity between DynamicAug and fixed data augmentation methods. Furthermore, we present sufficient experimental outcomes to assess its overall effectiveness. It is noteworthy that, once optimized through dynamic data augmentation, the traditional fine-tuning approach can outperform the current state-of-the-art (SOTA) fine-tuning method SPT [25]. Our contributions can be summarized as follows:

1. We innovatively propose a dynamic model-aware augmentation approach. This approach can dynamically and adaptively adjust the data augmentation intensity based on the model’s convergence state. The model achieved through dynamic data augmentation fine-tuning demonstrates superior performance compared to the model obtained through static data augmentation fine-tuning.

2. The proposed DynamicAug method is not restricted to the modified LoRA [26], Adapter [27] and VPT [10] methods. It can be seamlessly combined with the mainstream fine-tuning methods.

3. Our experiments validate that DynamicAug is a valuable supplement to current fine-tuning strategies and significantly improves model performance. For instance, DynamicAug improves the LoRA fine-tuning method by achieving an average accuracy increase of +1.7% on VTAB-1k, which even surpasses that of the best fine-tuning architecture searched in NOAH [13] and SPT [25].

2 Related Work

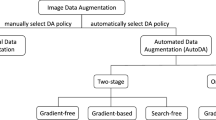

2.1 Data Augmentation

Data augmentation is a technique that artificially generates additional data while preserving the original data distribution within the training set [28]. Commonly employed techniques for data augmentation include mixup-based methods [15, 16] and RandAugment [17], among others. Moreover, the Drop method [18, 29] can also be regarded as an effective data augmentation strategy.

Drop: Methods like Drop achieve improved model performance or robustness by randomly deactivating parts of the model’s structure during training. For instance, in the dropout method [18], certain neurons stop working with a specific probability during the forward propagation process in the fully connected layer. This process significantly improves the model’s generalization and reduces its sensitivity to local features. Likewise, in the drop path method [29], random paths in the multi-branch network structure are deactivated, removing the dependence of weight update on the joint action of the fixed relationship between the various branches. Typically, these methods are included during model training as a means of regularization to prevent the model from overfitting.

RandAugment: RandAugment [17] is an improvement upon AutoAugment [30], where it maintains the probabilities of all image processing methods while varying only the number and intensity of the image processing types. These processing methods mainly include 14 different transformations such as identity and autocontrast, among others. Notably, the RandAugment algorithm primarily consists of three hyperparameters: the magnitude M, the standard deviation of the magnitude, and the number of transformations N. Increasing the values of M and N enhances the intensity of data augmentation, while the standard deviation of the magnitude is often assigned a value of 0.5.

CutMix and Mixup: Mixup [15] and CutMix [16] are data augmentation methods that fuse different parts of images to generate new training samples. The Mixup method randomly selects two samples and performs a linear weighted summation. The CutMix technique merges images in a region-based manner by cutting a part of one image and pasting it onto another. New samples generated using the Mixup and CutMix methods help compensate for a lack of training images and expand the training data to some extent. Given two input images, \(x_A\) and \(x_B\), with their corresponding labels \(y_A\) and \(y_B\), Mixup generates a new mixed image \({\hat{x}}\) with label \({\hat{y}}\), using a linear combination of the pixel values from A and B. This can be described as

\[ {\hat{x}} = \lambda x_A + (1-\lambda ) x_B, \qquad {\hat{y}} = \lambda y_A + (1-\lambda ) y_B, \tag{1} \]

where \(\lambda \) obeys the Beta distribution with the parameter 0.8. For CutMix, the processing can be formulated as

\[ {\hat{x}} = M \odot x_A + (1-M) \odot x_B, \qquad {\hat{y}} = \lambda y_A + (1-\lambda ) y_B, \tag{2} \]

where \(M \in \{0,1\}^{W \times H}\) represents a binary mask associated with \(\lambda \), \(\odot \) denotes element-wise multiplication, and W and H denote the width and height of the input images, respectively. \(\lambda \) here obeys the Beta distribution with the parameter 1.0.
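The two mixing rules can be illustrated with a minimal, dependency-free sketch. It assumes single-channel images stored as nested lists and one-hot labels; the function names and the way the CutMix box is sized (side lengths scaled by \(\sqrt{1-\lambda }\), as is common practice) are illustrative assumptions, not the authors' implementation.

```python
import random

def mixup(x_a, x_b, y_a, y_b, lam):
    """Blend two images (H x W nested lists) and their one-hot labels with weight lam."""
    x_hat = [[lam * a + (1.0 - lam) * b for a, b in zip(row_a, row_b)]
             for row_a, row_b in zip(x_a, x_b)]
    y_hat = [lam * a + (1.0 - lam) * b for a, b in zip(y_a, y_b)]
    return x_hat, y_hat

def cutmix(x_a, x_b, y_a, y_b, lam, rng=random):
    """Paste a box from x_b into x_a; the box covers roughly (1 - lam) of the area."""
    h, w = len(x_a), len(x_a[0])
    cut = (1.0 - lam) ** 0.5                 # side-length ratio of the pasted box
    bh, bw = int(h * cut), int(w * cut)
    top = rng.randint(0, h - bh)             # randint is inclusive on both ends
    left = rng.randint(0, w - bw)
    x_hat = [row[:] for row in x_a]          # copy so x_a is left untouched
    for i in range(top, top + bh):
        for j in range(left, left + bw):
            x_hat[i][j] = x_b[i][j]
    # Recompute the label weight from the area actually pasted (integer rounding).
    lam_adj = 1.0 - (bh * bw) / (h * w)
    y_hat = [lam_adj * a + (1.0 - lam_adj) * b for a, b in zip(y_a, y_b)]
    return x_hat, y_hat
```

In a training loop, `lam` would be drawn per batch, for example with `random.betavariate(0.8, 0.8)` for Mixup and `random.betavariate(1.0, 1.0)` for CutMix, matching the Beta parameters quoted above.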

These techniques have proven to be highly effective at incorporating richer variations in the training data, while simultaneously preserving local spatial information. Overall, data augmentation aims to enable the model to learn the characteristics of the data in a more comprehensive, multi-faceted manner instead of relying on one-sided information.

2.2 Transfer Learning

Pre-training followed by fine-tuning is the most frequently utilized transfer learning approach [10, 26, 27, 31,32,33], and it has consistently demonstrated remarkable empirical performance across various computer vision tasks. While fine-tuning pre-trained models offers notable efficiency and performance advantages, fine-tuning the entire model remains impractical in many scenarios. Hence, an innovative approach replaces full fine-tuning with the selective tuning of only a few trainable parameters, while keeping the majority of parameters, which are shared across multiple tasks, frozen. This approach typically fine-tunes less than 1% of the parameters of the complete model, yet its overall performance can match or surpass that of full fine-tuning. Tuning fewer parameters also significantly reduces the storage burden and makes optimization less challenging, so adapting pre-trained models to target datasets becomes more feasible. The current mainstream parameter-efficient tuning methods can be broadly categorized into two groups, namely addition-based parameter tuning methods [10, 27, 31] and reparameterization-based tuning methods [34,35,36].

In the addition-based parameter-efficient tuning approach, extra trainable parameters are appended to the backbone model, and solely these supplementary parameters are fine-tuned during the adaptation process. The existing parameters of the backbone model remain fixed throughout. Two notable examples of addition-based methods are the Visual Prompt Tuning (VPT) algorithm [10] and the Adapter algorithm [27]. VPT places a group of learnable tokens at the beginning of the input sequence for each Transformer block. The input of VPT can be represented by \(x_{vpt}=[\text {x}_{cls},\text {P}]\), where \(\text {x}_{cls}\) represents the class token, P represents the m added learnable tokens with \(\text {P} = \{ \text {p}_k | \text {p}_k \in {\mathbb {R}}^D, k = 1,\ldots , m \}\), and D is the dimension of each token. Adapter is a lightweight network inserted after the MLP layers in the Transformer, and it can be formulated as

where \(Linear_{up}\) and \(Linear_{down}\) are constructed with a small number of parameters, alongside QuickGELU which functions as the activation function.

The reparameterization-based methods adjust parameters that are either inherent to the backbone or can be reparameterized within it during the inference process. For example, LoRA [26] adds an additional parameter \(\Delta W\) for Q and K in each Transformer block, where \(\Delta W\) is composed of a matrix \(A_{q/k} \in {\mathbb {R}}^{r \times D}\) for ascending dimensions and a matrix \(B_{q/k} \in {\mathbb {R}}^{D \times r}\) for descending dimensions. Here r is the down-projection dimension (generally set to 8 or 16). During training, only A and B require iterative updates, while the other parameters of the model remain frozen. The forward process of Q and K can be formulated as
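As a rough illustration of the low-rank update described above, the following sketch computes a projection with a frozen weight plus the trainable pair \(B\) (down-projection, \(D \times r\)) and \(A\) (up-projection, \(r \times D\)). It assumes a row-vector convention, \(xW + (xB)A\), which is consistent with the shapes given in the text, and it omits any scaling factor; both choices, as well as the helper names, are assumptions rather than the exact formulation used in the paper.

```python
def matmul(a, b):
    """Multiply two matrices given as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))] for i in range(len(a))]

def lora_project(x, w, b_down, a_up):
    """Return x W + (x B) A; W stays frozen, only B (D x r) and A (r x D) are trained."""
    frozen = matmul(x, w)
    low_rank = matmul(matmul(x, b_down), a_up)   # D -> r -> D
    return [[f + l for f, l in zip(f_row, l_row)]
            for f_row, l_row in zip(frozen, low_rank)]

def lora_param_count(d, r):
    """Trainable parameters for one projection: A and B together."""
    return 2 * d * r
```

For a token dimension of \(D = 768\) (the hidden size of ViT-B) and \(r = 8\), `lora_param_count(768, 8)` gives 12,288 trainable values per projection, compared with \(768^2 = 589{,}824\) entries in the frozen weight, which is about 2%.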

3 Approach

Fig. 2 Overview of our DynamicAug method. On the basis of existing transfer learning methods, we dynamically update the intensity of data augmentation for fine-tuning. We first replace the conventional data augmentation methods with dynamic paradigms and incorporate additional parameters to update the intensity of data augmentation. The parameter \(\alpha \) is therefore a learnable hyperparameter embedded in the model. To maintain the exploratory nature of \(\alpha \), we introduce the Gaussian Distribution based Data Augmentation Sampler (DAS) for sampling and use the augmentation policies sampled from DAS for fine-tuning. Finally, we evaluate the convergence state of the model with a newly introduced loss, named the convergence loss (Eq. 9), and then update \(\alpha \).

In this section, we will begin by introducing DynamicAug, a dynamic optimization method that extends the traditional augmentation approach with minimal additional parameters. We show the overview of our method in Fig. 2. To preserve the exploratory nature of the learnable hyperparameters, we have incorporated a Gaussian Distribution based Data Augmentation Sampler for sampling. We leverage this sampler to sample an augmentation strategy, which is then used for fine-tuning purposes. Finally, to update the augmentation strategy based on a reasonable evaluation of the model’s convergence status, we propose a novel loss function referred to as the convergence loss.

3.1 Dynamic Data Augmentation

Models can exhibit distinct convergence states and therefore require appropriate training strategies. Fixed strategies used in previous studies may lead to insufficient training or overfitting under certain conditions [26, 27]. However, a suitable strategy can also aid in the training process. For instance, studies have demonstrated that applying the drop method only during the initial stages of training can improve model fitting to data compared to using it throughout the entire process in certain scenarios [37]. To comprehensively tackle this issue, we introduce the adaptive data augmentation method called DynamicAug. The purpose of DynamicAug is to determine the intensity of data augmentation during fine-tuning.

For Drop. The traditional drop method of the forward pass for each Transformer block can be generally expressed as follows:

where \(x_i\) and \(y_i\) represent the inputs and outputs of the i-th ViT block. The drop ratio of the corresponding block is denoted by \(r_i\), which increases or decreases as the depth i increases, as in [38]. In DynamicAug, however, we establish parameters for \(r_i\) and update them within each Transformer block during the iteration, so Eq. 5 can be rewritten as:

Here \(p^i_{d}\) is a dynamic drop ratio that is updated as the model improves. We add a learnable hyperparameter \(\alpha ^i_{d}\) for each \(p^i_{d}\). During fine-tuning, we sample \(p^i_{d}\) from a truncated normal sampler parameterized by \(\alpha ^i_{d}\) before each iteration, and continually update \(\alpha ^i_{d}\) according to the model’s convergence state.
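To make the role of the per-block drop ratio concrete, here is a minimal sketch under the assumption that Drop is applied as stochastic depth on the residual branch, i.e. \(y_i = x_i + \text{DropPath}(f_i(x_i), p^i_d)\). This specific form, the inverse-keep-probability rescaling, and the names `drop_path` and `block_forward` are illustrative assumptions and may differ from the paper's Eqs. 5 and 6.

```python
import random

def drop_path(branch, ratio, training=True, rng=random):
    """Zero the whole branch with probability `ratio`; otherwise rescale it."""
    if not training or ratio <= 0.0:
        return list(branch)
    if rng.random() < ratio:
        return [0.0 for _ in branch]
    keep = 1.0 - ratio
    return [v / keep for v in branch]        # keeps the expected value unchanged

def block_forward(x, block_fn, ratio, training=True, rng=random):
    """Residual block: y = x + drop_path(block_fn(x), ratio)."""
    branch = drop_path(block_fn(x), ratio, training, rng)
    return [a + b for a, b in zip(x, branch)]
```

In the dynamic setting described above, `ratio` would not be a constant: block i would receive a freshly sampled \(p^i_d\) at each iteration, and passing `training=False` reproduces the evaluation behaviour where Drop is disabled.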

For Mixup and CutMix. The conventional mixup-based methods mix the values and labels of two images using a linear ratio \(\lambda \). The prevalent training paradigm alternates between the Mixup and CutMix methods, with a default probability of 0.5 for switching between them. Regardless of the approach employed, the parameter \(\lambda \) is the governing factor that controls the intensity of data augmentation. Consequently, during the fine-tuning process, we dynamically adjust the value of \(\lambda \) with \([p_{m},p_{c}]\). To learn its optimal value based on the convergence state, we add two parameters \([\alpha _{m},\alpha _{c}]\) for \([p_{m},p_{c}]\).

For RandAugment. During the implementation of RandAugment, a predetermined set of transformation operations is randomly selected. Subsequently, these operations are applied to the samples in accordance with preset hyperparameters as

where m denotes the magnitude of the transformation and n signifies the number of transformations applied. p represents the specific transform operation.

In DynamicAug, we dynamically update the value of m with \(p_r\) and sample the \(p_r\) with \(\alpha _r\) to control the intensity of data augmentation. Hence, RandAugment requires only one parameter to achieve fine-tuning dynamics.

3.2 Gaussian Distribution Based Data Augmentation Sampler

The Gaussian Distribution based Data Augmentation Sampler (DAS) serves the purpose of introducing noise and enhancing the exploratory nature of the data augmentation hyperparameters. Our preference is for the model to prioritize capturing the trend of changes in augmentation strategies rather than specific hyperparameter values.

So in DynamicAug, we randomly sample the data augmentation strategies p from the DAS \(\Psi \) with the learnable expectation \(\alpha \) for fine-tuning in real time. The DAS can be formulated as:

where \(\sigma ^2\) is a fixed variance, and \(a_i\) and \(b_i\) refer to the left and right borders of the truncated distribution, respectively. For the strategies p, we have \(p=[p^1_{drop},\ldots ,p^{i}_{drop}]\) for the dynamic drop strategies in Transformer models with depth i, \(p=[p_{m},p_{c}]\) for the dynamic mixup-based methods and \(p=[p_r]\) for the dynamic RandAugment method. DynamicAug therefore introduces very few additional parameters. To update \(\alpha \) end to end, we use the straight-through estimator (STE) [39] to incorporate \(\alpha \) into the forward process. Details can be seen in Algorithm 1. Mixup-based methods and RandAugment share a similar characteristic, as they operate on the samples before they enter the model. In contrast, Drop functions during the whole forward process of the model. Therefore, the methods by which they are updated also diverge. For Drop, we incorporate the corresponding parameter \(\alpha _i\) in each block during the forward process. For the other two methods, \(\alpha \) is multiplied at the end of the model’s forward process to assess the state of the model.
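The sampling step can be sketched as follows, assuming a simple rejection sampler for the truncated normal. The function names, the `max_tries` safeguard, and the example values for \(\sigma \) and the borders are hypothetical; the paper does not specify how the truncation is implemented.

```python
import random

def sample_truncated_normal(mean, sigma, low, high, rng=random, max_tries=1000):
    """Draw from N(mean, sigma^2) restricted to [low, high] by rejection sampling."""
    for _ in range(max_tries):
        value = rng.gauss(mean, sigma)
        if low <= value <= high:
            return value
    # Fallback if the window is almost never hit: clip the mean into the window.
    return min(max(mean, low), high)

def sample_policy(alphas, sigma, low, high, rng=random):
    """Sample one augmentation intensity per learnable expectation in `alphas`."""
    return [sample_truncated_normal(a, sigma, low, high, rng) for a in alphas]

# Note on the straight-through estimator: in an autograd framework one common
# formulation (an assumption here) uses the sampled value in the forward pass
# but routes the gradient to alpha as if p == alpha, for example
#     p = alpha + stop_gradient(p_sampled - alpha)
```

For instance, `sample_policy([0.1] * 12, sigma=0.05, low=0.0, high=0.5)` would produce one drop ratio for each of twelve Transformer blocks, all centred on an expectation of 0.1.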

To provide further evidence, we conducted ablation studies comparing the use of DAS to not using one. The results demonstrate a significant decrease in model performance when a sampler is not utilized. Moreover, recent studies have also employed samplers to obtain corresponding strategies [40].

Since \(\alpha \) is a hyperparameter determined by the convergence behavior of the model, its optimization process differs from that of the model parameter w. Therefore, we introduced a new loss function specifically for optimizing \(\alpha \).

3.3 Loss for Model Convergence State

Since obtaining the model’s convergence state directly on the evaluation set is still a challenging problem, we have proposed an alternative approach to simulate the convergence state and subsequently update the \(\alpha \) parameter. Our approach centers on monitoring the alteration in model loss and entails reserving a designated number of samples from the training set to construct a validation set that emulates the evaluation data. Specifically, we have introduced a novel loss function, referred to as the Convergence loss, which serves as the optimization objective for \(\alpha \),

In the proposed loss function, \(L_{val}\) represents the Cross-entropy loss and w represents the common model weight. During the actual training process, we can estimate the change in loss by measuring the difference between iterations. Subsequently, Eq. 9 can be approximated as

where f is the update frequency of \(\alpha \) and t is the current training iteration.
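The finite-difference idea can be sketched in a few lines. The sketch assumes the approximation is the plain difference between the held-out loss at iteration t and at iteration t - f, recorded in a dictionary keyed by iteration; whether the paper additionally normalises this quantity is not recoverable from the text, and the function name is hypothetical.

```python
def incremental_loss(val_losses, t, f):
    """Return L_val at iteration t minus L_val at iteration t - f.

    `val_losses` maps an iteration index to the validation loss measured there.
    A strongly negative value means the loss is still falling quickly.
    """
    return val_losses[t] - val_losses[t - f]
```

With losses of 2.0, 1.5 and 1.45 recorded at iterations 0, 5 and 10 and f = 5, the signal is -0.5 at t = 5 and roughly -0.05 at t = 10, reflecting a loss curve that is flattening.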

Generally, a larger negative incremental loss indicates a greater potential for improvement in model performance, suggesting that the model has not yet converged. Therefore, our ultimate optimization goal can be expressed as follows:

To update \(\alpha \), we obtain its gradient \(\nabla _{\alpha }\) by storing the gradient at the previous update step and computing it again f iterations later.

During the update of \(\alpha \), we explicitly set the drop ratio to 0, or turn off the mixup and RandAugment methods, in order to assess the model’s performance in the evaluation state. On the held-out validation split, \(\alpha \) participates in the forward process of each block, and its gradient is maintained through the Straight-Through Estimator (STE) during the backward process. The pseudocode for updating \(\alpha \) is given in Algorithm 1. In the implementation, the augmentation parameter \(\alpha \) is updated only every few iterations, minimizing any potential computational overhead.

It is important to recognize that, while the augmentation parameters may not exert a noticeable impact on the loss during individual training iterations, they do have a subtle influence on the overall training process over an extended period of time.

4 Experiments

In this section, we begin by outlining our experimental setup, which encompasses the datasets, baseline methods, and implementation details employed in our study. We then proceed to showcase the effectiveness of DynamicAug across multiple mainstream transfer learning tasks. Furthermore, we conduct ablation experiments to evaluate the impact and efficacy of DynamicAug in comparison to other approaches. Lastly, we delve into in-depth analyses to enhance our comprehension of the role of augmentations in transfer learning tasks.

4.1 Experimental Settings

Datasets. Our experiments primarily utilize three distinctive types of datasets. (1) We employ the VTAB-1k [24] dataset, which serves as a benchmark for transfer learning in visual classification tasks. This dataset includes 19 classification tasks that are categorized into three domains: (i) natural images captured by standard cameras; (ii) specialized images captured by non-standard cameras, such as remote sensing and medical cameras; (iii) structured images synthesized from simulated environments. The benchmark consists of various tasks, including object counting and depth estimation, from diverse image domains. With only 1000 training samples per task and a high level of complexity, the benchmark is extremely challenging. (2) Another benchmark we utilize is Fine-Grained Visual Classification (FGVC), which focuses on fine-grained visual classification tasks. This benchmark comprises several datasets, including Stanford Dogs [20], Oxford Flowers [21], NABirds [22], CUB-200-2011 [23] and Stanford Cars [41]. Each FGVC dataset consists of 55 to 200 categories and several thousand images for training, validation, and testing. In cases where the validation sets are not provided, we follow the validation split specified in [13]. (3) For the few-shot tasks, we select five fine-grained visual recognition datasets, namely Food101 [42], OxfordFlowers102 [43], StanfordCars [44], OxfordPets [45], and FGVCAircraft [46]. These datasets comprise categories that depict a diverse range of visual concepts closely associated with our daily lives, such as food, plants, vehicles, and animals. To assess the effectiveness of our approach, we follow previous studies [33, 47] and evaluate the performance on 1, 2, 4, 8, and 16 shots, which are adequate for observing the trend.

Baselines. Following [25], our main experiments are conducted using a Vision Transformer backbone, ViT-B/16, which is pre-trained on ImageNet-21K. We incorporate the DynamicAug method into LoRA [26], Adapter [27] and Prompt-deep [10] to further enhance their performance. During the fine-tuning process, these three methods exclusively utilize the Drop data augmentation technique. To ensure a fair comparison, we conduct separate fine-tuning processes for the Mixup and RandAugment methods, thereby establishing baselines specific to these two data augmentation techniques. Furthermore, we perform additional experiments on the ViT-L/16 backbone to demonstrate the effectiveness of the DynamicAug method on larger models. In terms of training strategies, we explore both supervised and self-supervised pre-training. Specifically, we employ MAE [48] and MoCo v3 [49] to train our models. Finally, to assess the generalizability of DynamicAug, we incorporate it into the CLIP vision-language model [50, 51] in the appendix. This evaluation allows us to examine the effectiveness and applicability of the DynamicAug method beyond purely visual tasks, extending its potential to vision-language tasks as well.

Implementation Details. Following [13], we utilize the AdamW optimizer [52] with cosine learning rate decay for our experiments. Specifically, for the VTAB experiments, we set the batch size to 64, the learning rate to \(1\times 10^{-3}\), and the weight decay to \(1\times 10^{-4}\). To ensure fairness, we follow the standard data augmentation pipeline [13]. To update the augmentation parameters, we hold out a fixed ratio of the training samples as validation samples, such as 0.1, 0.2, or 0.4. The augmentation strategy is updated either every 5 iterations or at the maximum number of iterations remaining after the data are split. To optimize the performance of DynamicAug, we conduct a grid search on the hyperparameters involved. Importantly, DynamicAug only operates during training and is turned off during testing and validation. Therefore, it does not increase the model’s inference time, ensuring efficient and practical implementation.
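Two pieces of this setup, the cosine learning rate decay and the hold-out of validation samples, can be sketched as below. The sketch assumes no warmup, a floor learning rate of zero, and a uniformly random split; none of these details are stated in the text, and the function names are hypothetical.

```python
import math
import random

def cosine_lr(step, total_steps, base_lr=1e-3, min_lr=0.0):
    """Cosine decay from base_lr at step 0 down to min_lr at total_steps."""
    progress = min(step, total_steps) / total_steps
    return min_lr + 0.5 * (base_lr - min_lr) * (1.0 + math.cos(math.pi * progress))

def split_train_val(indices, val_ratio, seed=0):
    """Shuffle sample indices and hold out a `val_ratio` fraction for validation."""
    rng = random.Random(seed)
    shuffled = list(indices)
    rng.shuffle(shuffled)
    n_val = int(len(shuffled) * val_ratio)
    return shuffled[n_val:], shuffled[:n_val]
```

For a 1000-sample task with a ratio of 0.2, `split_train_val(range(1000), 0.2)` leaves 800 indices for weight updates and 200 for estimating the convergence state.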

4.2 Main Results

Experiments on VTAB-1k. We first choose the VTAB-1k [24] benchmark to evaluate the proposed DynamicAug method. Table 1 demonstrates that the benchmark models that utilize DynamicAug outperform the original data augmentation methods in both addition-based and reparameterization-based approaches. The abbreviations FD, FM, and FR correspond to Fixed Drop, Fixed Mixup, and Fixed RandAugment, respectively. Similarly, DD, DM, and DR represent Dynamic Drop, Dynamic Mixup, and Dynamic RandAugment, respectively. For the three fine-tuning methods LoRA [26], Adapter [27], and Prompt-deep [10], the DD (DM, DR) method outperforms the FD (FM, FR) method by 1.7% (1.1%, 0.9%), 1.2% (0.7%, 0.6%), and 2.1% (2.7%, 2.8%), respectively.

Remarkably, as shown in Table 2, LoRA-DD outperforms the SOTA methods NOAH [13] and SPT [25] with just 12 trainable augmentation parameters (equal to the depth of ViT-B/16). This suggests that the training strategy can sometimes affect model performance more than the architecture does.

Self-supervised learning strategies constitute an exceedingly crucial component of deep learning [48, 49, 53]. However, previous efficient fine-tuning methods have shown inconsistent results when applied to backbones with different learning strategies. To validate the effectiveness of DynamicAug under different pre-training strategies, we conducted experiments on MAE and MoCo v3 pre-trained backbones. The results are shown in Table 3. LoRA-DD achieves remarkable 1.2% and 2.1% mean top-1 accuracy gains over baseline method on VTAB-1k benchmark and obtains the state-of-the-art results. Due to the wider scope of application of Drop, we will mainly focus on the Drop strategy in subsequent experiments.

It should be noted that the 19 VTAB-1k datasets are incredibly small, comprising only 800 to 1000 samples per dataset. Dividing the dataset to evaluate the drop policy can have a considerable negative impact on the model’s performance. Consequently, after obtaining the drop strategy, we have to retrain the model using the full dataset.

Experiments on FGVC. We conducted experiments on five fine-grained datasets for FGVC. As shown in Table 2, LoRA-DD outperforms the LoRA baseline by a clear margin of 1.2% mean top-1 accuracy. The results show that DynamicAug also performs well on fine-grained tasks.

Experiments on Few-Shot Transfer Learning. Owing to the particular nature of the few-shot datasets, we held out a proportion of each dataset (0.1, 0.2, or 0.4) for evaluation. As depicted in Fig. 3, after applying DynamicAug (blue line), the average accuracy of LoRA improved significantly compared to using static drop (orange line). Furthermore, the average results were on par with the current SOTA method, NOAH (green line), and surpassed it in the low-data regime of 1-shot, 2-shot, and 4-shot. Notably, the performance of LoRA-DD on the FGVCAircraft dataset is outstanding. Although the other datasets did not show the same level of improvement, LoRA-DD outperformed LoRA with static drop on most of them.

4.3 Ablation Study

Effect of the DynamicAug. To assess the effectiveness of DynamicAug on VTAB-1k, we utilized different static drop rates as baselines and compared the dynamic drop strategy’s efficacy with the static drop strategy. The findings are presented in Table 4. On the original baseline with a 0.1 drop rate, the DynamicAug method increased the accuracy rate by 1.5%, 0.9%, and 2.8% in the Natural, Specialized, and Structured groups, respectively.

Although varying the drop rate affects model training to some degree, a static drop rate evidently yields a suboptimal training strategy. DynamicAug can partly compensate for insufficient or excessive training. In addition, the greedy update strategy can speed up the model’s convergence.

Effect of Gaussian Distribution based Data Augmentation Sampler. As mentioned previously, after the initial training on the incomplete dataset, the model must be retrained on the complete dataset. However, the number of strategies produced by the sampler during training differs from the number of strategies applied during retraining.

To address this issue, we examined the drop strategies generated by different approaches during the initial training process. We show the detailed drop curves in the appendix and the ablation experiments in Table 5. In the table, first, last, and ave denote, respectively, the strategy generated at the first update of each epoch, the strategy generated at the last update of each epoch, and the average of the strategies within that epoch during the initial training. We conducted ablation experiments on these three strategies by applying the first, last, or ave strategy during each epoch of the retraining process. The results demonstrate that the model primarily depends on the trend of the regularization level rather than on specific values. We therefore use the last drop policy of each epoch in retraining.
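The reduction from many sampled strategies per epoch to a single value per epoch can be sketched as follows. This is an illustrative sketch only: the function names and the list-of-lists layout of the recorded drop values are assumptions, not taken from the paper.

```python
from statistics import mean
from typing import Dict, List


def summarize_epoch(drop_values: List[float]) -> Dict[str, float]:
    """Reduce the drop values recorded within one epoch to three candidates.

    "first" and "last" are the values at the first and last update of the
    epoch; "ave" is the average over all updates in that epoch.
    """
    if not drop_values:
        raise ValueError("an epoch must contain at least one update")
    return {
        "first": drop_values[0],
        "last": drop_values[-1],
        "ave": mean(drop_values),
    }


def retraining_schedule(history: List[List[float]], mode: str = "last") -> List[float]:
    """Build one drop value per epoch for retraining.

    `history[e]` holds the drop values recorded during epoch e of the initial
    training (hypothetical layout). `mode` is "first", "last", or "ave".
    """
    return [summarize_epoch(epoch_values)[mode] for epoch_values in history]
```

With `mode="last"`, the default here, the schedule matches the choice reported in the text for retraining.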

In addition, the Gaussian Distribution based Data Augmentation Sampler introduces noise into the drop values and prevents the drop updates from falling into the Matthew effect, in which early updates keep reinforcing themselves. Table 5 also compares training with and without the Gaussian sampler. The results indicate that removing the sampler leads to a noticeable decline in model performance.
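A Gaussian sampler of this kind can be sketched in a few lines. The sketch below is an assumption-laden illustration: the standard deviation of 0.05, the clipping range, and the function name are hypothetical, and the update of the learnable expectation is deliberately left out because it depends on the convergence loss defined earlier in the paper.

```python
import random
from typing import Optional


def sample_drop_rate(expectation: float, std: float = 0.05,
                     low: float = 0.0, high: float = 1.0,
                     rng: Optional[random.Random] = None) -> float:
    """Draw the current drop rate from N(expectation, std^2), clipped to [low, high].

    `expectation` plays the role of the learnable expectation of the sampler.
    `std`, `low`, and `high` are hypothetical values chosen for illustration.
    """
    generator = rng if rng is not None else random
    value = generator.gauss(expectation, std)
    return min(max(value, low), high)
```

Setting `std` to 0 removes the noise and returns the expectation itself, which corresponds to the "without sampler" setting in spirit.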

Effect of the number of image divisions and retraining. The number of images held out for validation directly affects how accurately model convergence can be evaluated, and the convergence estimate in turn steers the optimization direction of the drop values. The split into training and validation sets should therefore leave enough data to fit the training process while still evaluating the convergence of the model as accurately as possible.

As a result, we split a validation set from the training set with ratios of 0.1, 0.2, and 0.4 for evaluation on VTAB-1k; the results are shown in Table 6. In addition, we report the results without retraining, marked as LoRA-DD-without retraining. The experiments show that a split ratio of 0.1 is sufficient to evaluate the convergence state of the model. Retraining further improves on the results of the first training stage.
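The split described above can be sketched as a simple random hold-out. The ratios 0.1, 0.2, and 0.4 come from the text; the use of a uniformly random (non-stratified) split, the fixed seed, and the function name are assumptions for illustration.

```python
import random
from typing import List, Sequence, Tuple, TypeVar

T = TypeVar("T")


def split_train_val(samples: Sequence[T], val_ratio: float,
                    seed: int = 0) -> Tuple[List[T], List[T]]:
    """Hold out a fraction of the training samples as a validation set.

    Returns (train, val). The split is uniformly random, which is an
    assumption; the text does not say whether the split is stratified.
    """
    if not 0.0 < val_ratio < 1.0:
        raise ValueError("val_ratio must lie strictly between 0 and 1")
    indices = list(range(len(samples)))
    random.Random(seed).shuffle(indices)
    n_val = int(round(len(samples) * val_ratio))
    val = [samples[i] for i in indices[:n_val]]
    train = [samples[i] for i in indices[n_val:]]
    return train, val
```

On a 1000-sample dataset, a ratio of 0.1 leaves 900 samples for the first training stage, which is why retraining on the full dataset is applied afterwards.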

Comparison of the manually scheduled drop and DynamicAug. Motivated by the success of manually designed learning rate schedules in deep learning, such as cosine and linear schedules, we designed an analogous drop ratio schedule to test whether a manually designed dynamic drop is feasible. We set the minimum and maximum drop values to 0 and 0.1, respectively, and increased the drop value with linear and cosine schedules as the number of epochs increased. The term “reversal” means that the drop value starts at 0.1 and decreases as the number of epochs increases. As shown in Table 7, because of the unique characteristics of the drop parameter, it does not behave like the learning rate, and manually setting a dynamic drop schedule can lead to a significant decrease in model performance. This also highlights the importance of DynamicAug.
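The manually scheduled baselines can be sketched as follows. The range of 0 to 0.1 and the linear, cosine, and reversal variants come from the text; the exact functional form of the cosine ramp and the function name are assumptions, since the paper does not give the formulas.

```python
import math


def manual_drop(epoch: int, total_epochs: int, kind: str = "linear",
                reverse: bool = False, low: float = 0.0, high: float = 0.1) -> float:
    """Hand-designed drop schedule over epochs (zero-based `epoch`).

    kind="linear" ramps the drop value linearly from low to high;
    kind="cosine" uses a half-cosine ramp (assumed form).
    reverse=True gives the "reversal" variant that starts at high and decays to low.
    """
    progress = epoch / max(total_epochs - 1, 1)
    if kind == "linear":
        fraction = progress
    elif kind == "cosine":
        fraction = 0.5 * (1.0 - math.cos(math.pi * progress))
    else:
        raise ValueError(f"unknown schedule kind: {kind}")
    if reverse:
        fraction = 1.0 - fraction
    return low + (high - low) * fraction
```

Unlike DynamicAug, these schedules depend only on the epoch index and ignore the convergence state of the model.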

4.4 Impacts of DynamicAug on Transfer Learning

Data augmentation is a crucial component of most deep learning tasks, serving as a regularizer during model training that mitigates overfitting to local features and encourages a focus on global features. To better evaluate the impact of DynamicAug on transfer learning tasks, we plot the drop values for the three VTAB-1k dataset groups, Natural (7), Specialized (4), and Structured (8), in the appendix. It is evident that different datasets require distinct optimization strategies. Additionally, we provide the loss difference curves for both the fixed regularization strategy and the dynamic regularization strategy obtained by DynamicAug. Fig. 4 shows that, with the static data augmentation strategy, the model overfits between the 30th and 40th epochs, as indicated by a positive loss difference. In contrast, DynamicAug continues to facilitate model training during the same period. Finally, we evaluate the generalizability of DynamicAug by incorporating it into the CLIP vision-language model in the appendix.

5 Conclusion

In this study, we investigate the impact of a dynamic data augmentation strategy on transfer learning tasks. Specifically, we propose a novel model-aware DynamicAug strategy that continuously adjusts the intensity of data augmentation based on the convergence state of the model. It is worth mentioning that our approach is not restricted to the LoRA, Adapter, and VPT fine-tuning methods that we have modified: any model that uses a data augmentation method can be improved with DynamicAug.

However, the convergence curves of different models on diverse datasets can vary significantly. As a result, it is challenging for our method to provide a universal drop strategy that applies to all models and datasets. Additionally, because evaluation requires splitting the dataset, training samples are lacking in the first fine-tuning stage. Consequently, after obtaining the drop strategy, the model needs to be retrained.

References

Kandel I, Castelli M (2020) Transfer learning with convolutional neural networks for diabetic retinopathy image classification. A review. Appl Sci 10(6):2021

Wang C, Chen D, Hao L, Liu X, Zeng Y, Chen J, Zhang G (2019) Pulmonary image classification based on inception-v3 transfer learning model. IEEE Access 7:146533–146541

Kirillov A, Mintun E, Ravi N, Mao H, Rolland C, Gustafson L, Xiao T, Whitehead S, Berg AC, Lo W-Y et al (2023) Segment anything. arXiv preprint arXiv:2304.02643

Oquab M, Darcet T, Moutakanni T, Vo H, Szafraniec M, Khalidov V, Fernandez P, Haziza D, Massa F, El-Nouby A et al (2023) Dinov2: learning robust visual features without supervision. arXiv preprint arXiv:2304.07193

Carion N, Massa F, Synnaeve G, Usunier N, Kirillov A, Zagoruyko S (2020) End-to-end object detection with transformers. In: ECCV. Springer, pp 213–229

He K, Gkioxari G, Dollár P, Girshick R (2017) Mask R-CNN. In: ICCV, pp 2961–2969

Chen L-C, Papandreou G, Kokkinos I, Murphy K, Yuille AL (2017) DeepLab: semantic image segmentation with deep convolutional nets, atrous convolution, and fully connected CRFs. TPAMI 40(4):834–848

Zhu D, Chen J, Shen X, Li X, Elhoseiny M (2023) MiniGPT-4: enhancing vision-language understanding with advanced large language models. arXiv preprint arXiv:2304.10592

Kwon W, Li Z, Zhuang S, Sheng Y, Zheng L, Yu C, Gonzalez J, Zhang H et al (2023) vLLM: easy, fast, and cheap LLM serving with PagedAttention

Jia M, Tang L, Chen B-C, Cardie C, Belongie S, Hariharan B, Lim S-N (2022) Visual prompt tuning. In: ECCV

Chen S, Ge C, Tong Z, Wang J, Song Y, Wang J, Luo P (2022) AdaptFormer: adapting vision transformers for scalable visual recognition. Adv Neural Inf Process Syst 35:16664–16678

Jie S, Deng Z-H (2022) Convolutional bypasses are better vision transformer adapters. arXiv preprint arXiv:2207.07039

Zhang Y, Zhou K, Liu Z (2022) Neural prompt search. arXiv preprint arXiv:2206.04673

Zhai X, Puigcerver J, Kolesnikov A, Ruyssen P, Riquelme C, Lucic M, Djolonga J, Pinto AS, Neumann M, Dosovitskiy A et al (2019) A large-scale study of representation learning with the visual task adaptation benchmark. arXiv preprint arXiv:1910.04867

Zhang H, Cisse M, Dauphin YN, Lopez-Paz D (2017) mixup: beyond empirical risk minimization. arXiv preprint arXiv:1710.09412

Yun S, Han D, Oh SJ, Chun S, Choe J, Yoo Y (2019) CutMix: regularization strategy to train strong classifiers with localizable features. In: Proceedings of the IEEE/CVF international conference on computer vision, pp 6023–6032

Cubuk ED, Zoph B, Shlens J, Le QV (2020) Randaugment: practical automated data augmentation with a reduced search space. In: Proceedings of the IEEE/CVF conference on computer vision and pattern recognition workshops, pp 702–703

Srivastava N, Hinton G, Krizhevsky A, Sutskever I, Salakhutdinov R (2014) Dropout: a simple way to prevent neural networks from overfitting. J Mach Learn Res 15(1):1929–1958

Luo G, Huang M, Zhou Y, Sun X, Jiang G, Wang Z, Ji R (2023) Towards efficient visual adaption via structural re-parameterization. arXiv preprint arXiv:2302.08106

Khosla A, Jayadevaprakash N, Yao B, Fei-Fei L (2011) Novel dataset for fine-grained image categorization. In: CVPRW

Nilsback M-E, Zisserman A (2008) Automated flower classification over a large number of classes. In: ICVGIP. IEEE, pp 722–729

Van Horn G, Branson S, Farrell R, Haber S, Barry J, Ipeirotis P, Perona P, Belongie S (2015) Building a bird recognition app and large scale dataset with citizen scientists: the fine print in fine-grained dataset collection. In: CVPR, pp 595–604

Wah C, Branson S, Welinder P, Perona P, Belongie S (2011) The Caltech-UCSD birds-200-2011 dataset. Tech. Rep. CNS-TR-2011-001, California Institute of Technology

Zhai X, Puigcerver J, Kolesnikov A, Ruyssen P, Riquelme C, Lucic M, Djolonga J, Pinto AS, Neumann M, Dosovitskiy A et al (2019) A large-scale study of representation learning with the visual task adaptation benchmark. arXiv preprint arXiv:1910.04867

He H, Cai J, Zhang J, Tao D, Zhuang B (2023) Sensitivity-aware visual parameter-efficient tuning. arXiv preprint arXiv:2303.08566

Hu EJ, Shen Y, Wallis P, Allen-Zhu Z, Li Y, Wang S, Wang L, Chen W (2022) LoRA: low-rank adaptation of large language models. In: ICLR

Houlsby N, Giurgiu A, Jastrzebski S, Morrone B, De Laroussilhe Q, Gesmundo A, Attariyan M, Gelly S (2019) Parameter-efficient transfer learning for NLP. In: ICML, pp 2790–2799

Khalifa NE, Loey M, Mirjalili S (2022) A comprehensive survey of recent trends in deep learning for digital images augmentation. Artif Intell Rev 55(3):2351–2377

Larsson G, Maire M, Shakhnarovich G (2016) FractalNet: ultra-deep neural networks without residuals. arXiv preprint arXiv:1605.07648

Cubuk ED, Zoph B, Mane D, Vasudevan V, Le QV (2018) Autoaugment: learning augmentation policies from data. arXiv preprint arXiv:1805.09501

He J, Zhou C, Ma X, Berg-Kirkpatrick T, Neubig G (2022) Towards a unified view of parameter-efficient transfer learning. In: ICLR

Zhong Z, Friedman D, Chen D (2021) Factual probing is [mask]: learning vs. learning to recall. arXiv preprint arXiv:2104.05240

Zhou K, Yang J, Loy CC, Liu Z (2022) Learning to prompt for vision-language models. IJCV 130(9):2337–2348

Caelles S, Maninis K-K, Pont-Tuset J, Leal-Taixé L, Cremers D, Van Gool L (2017) One-shot video object segmentation. In: CVPR, pp 221–230

Yosinski J, Clune J, Bengio Y, Lipson H (2014) How transferable are features in deep neural networks? NeurIPS 2:7

Zaken EB, Goldberg Y, Ravfogel S (2022) BitFit: simple parameter-efficient fine-tuning for transformer-based masked language-models. In: ACL, pp 1–9

Liu Z, Xu Z, Jin J, Shen Z, Darrell T (2023) Dropout reduces underfitting. arXiv preprint arXiv:2303.01500

Li B, Hu Y, Nie X, Han C, Jiang X, Guo T, Liu L (2022) Dropkey. arXiv preprint arXiv:2208.02646

Liu Z, Cheng K-T, Huang D, Xing EP, Shen Z (2022) Nonuniform-to-uniform quantization: towards accurate quantization via generalized straight-through estimation. In: Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, pp 4942–4952

Hu S, Xie S, Zheng H, Liu C, Shi J, Liu X, Lin D (2020) DSNAS: direct neural architecture search without parameter retraining. In: Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, pp 12084–12092

Gebru T, Krause J, Wang Y, Chen D, Deng J, Fei-Fei L (2017) Fine-grained car detection for visual census estimation. In: AAAI

Bossard L, Guillaumin M, Gool LV (2014) Food-101–mining discriminative components with random forests. In: European conference on computer vision (ECCV). Springer, pp 446–461

Nilsback M-E, Zisserman A (2006) A visual vocabulary for flower classification. In: Proceedings of the IEEE/CVF conference on computer vision and pattern recognition (CVPR), vol 2. IEEE, pp 1447–1454

Krause J, Stark M, Deng J, Fei-Fei L (2013) 3d object representations for fine-grained categorization. In: Proceedings of the IEEE international conference on computer vision workshops, pp 554–561

Parkhi OM, Vedaldi A, Zisserman A, Jawahar C (2012) Cats and dogs. In: Proceedings of the IEEE/CVF conference on computer vision and pattern recognition (CVPR). IEEE, pp 3498–3505

Maji S, Rahtu E, Kannala J, Blaschko M, Vedaldi A (2013) Fine-grained visual classification of aircraft. arXiv preprint arXiv:1306.5151

Radford A, Kim JW, Hallacy C, Ramesh A, Goh G, Agarwal S, Sastry G, Askell A, Mishkin P, Clark J et al (2021) Learning transferable visual models from natural language supervision. In: ICML, pp 8748–8763

He K, Chen X, Xie S, Li Y, Dollár P, Girshick R (2022) Masked autoencoders are scalable vision learners. In: CVPR, pp 16000–16009

Chen X, Xie S, He K (2021) An empirical study of training self-supervised vision transformers. In: ICCV, pp 9640–9649

Gao P, Geng S, Zhang R, Ma T, Fang R, Zhang Y, Li H, Qiao Y (2023) Clip-adapter: better vision-language models with feature adapters. Int J Comput Vis 132(2):581–595

Radford A, Kim JW, Hallacy C, Ramesh A, Goh G, Agarwal S, Sastry G, Askell A, Mishkin P, Clark J et al (2021) Learning transferable visual models from natural language supervision. In: International conference on machine learning. PMLR, pp 8748–8763

Loshchilov I, Hutter F (2018) Fixing weight decay regularization in Adam. https://openreview.net/forum?id=rk6qdGgCZ

Biswas M, Buckchash H, Prasad DK (2023) pNNCLR: stochastic pseudo neighborhoods for contrastive learning based unsupervised representation learning problems. arXiv preprint arXiv:2308.06983

Funding

This research was supported by the Baima Lake Laboratory Joint Funds of the Zhejiang Provincial Natural Science Foundation of China under Grant No. LBMHD24F030002 and the National Natural Science Foundation of China under Grant 62373329.



Appendix A


In this appendix, we first examine the behavior of the DynamicAug method in larger models. We then provide drop curves sampled under various acquisition strategies, which addresses the discrepancy between the number of strategies obtained during training and the number employed in the retraining process. Next, we present the detailed experimental results on VTAB-1k, including the direct results of DynamicAug without retraining, which further demonstrate the effectiveness of the dynamic regularization approach. We also provide the detailed results on five FGVC datasets, indicating that DynamicAug performs well even on fine-grained tasks. Finally, we validate the generality of our method within the CLIP-Adapter framework, where our approach also achieves remarkable performance on a vision-language model. In the appendix experiments, our primary focus remains on Dynamic Drop (DD) to demonstrate the efficacy of DynamicAug.

A.1 Effect of DynamicAug on Large Model

To demonstrate the effectiveness of the DynamicAug method on larger models, we performed additional experiments on the ViT-L/16 backbone. The results are reported in Table 8. They indicate that the effect of the DynamicAug strategy in preventing overfitting is more pronounced in larger models.

A.2 DynamicAug Strategies Obtained Through Different Strategy Acquisition Methods

We analyzed the drop strategies generated using various approaches during the initial training process with the Gaussian Distribution based Data Augmentation Sampler. We recorded the results of the experiments, capturing the strategies generated during the first update, the last update in each epoch, and the average of the strategies within that epoch in the initial training process. As illustrated in Fig. 5, different acquisition strategies yield similar drop values in each epoch. This aligns with our expectation of prioritizing regularization trends over specific values. Subsequently, we retrained with the last updated drop strategy in each epoch.

A.3 Detailed Experiment Results on VTAB-1k

We present the main results on VTAB-1k, including the drop strategy for the individual datasets and the per-task results, in Fig. 6 and Table 9. The experimental results show that after optimization with DynamicAug, the Adapter, Prompt-deep, and LoRA methods all significantly outperform the original methods, with significant improvements on every dataset. In addition, we provide the results without retraining. These results indicate that DynamicAug performs satisfactorily even when the datasets have been split. Relative to the original Adapter, Prompt-deep, and LoRA baselines, DynamicAug without retraining increases the mean accuracy by 0.8%, 1.5%, and 0.9%, respectively.

A.4 Detailed Experiment Results on FGVC

We present the main results on FGVC, including the per-task results, in Table 10. As the table shows, LoRA-DD outperforms the LoRA baseline by a clear margin of 1.2% mean top-1 accuracy. The results show that DynamicAug also performs well on fine-grained tasks.

A.5 Experiments on CLIP-Adapter

We provide the results of DynamicAug with CLIP models. The experimental setting follows CLIP-Adapter. Since the original paper did not include experimental results using ViT-B as the visual module, we reproduced the baseline ourselves. The final results are shown in Fig. 7, which shows improvement across all 11 datasets. It is worth noting that since the datasets involved in CLIP-Adapter already have validation sets, we do not need to partition additional samples from the training set. This avoids the extra work of retraining.

As depicted in Fig. 7, after applying DynamicAug to CLIP-Adapter, the average accuracy of CLIP-Adapter-DD improved significantly compared to using static drop with a drop ratio of 0.1. Compared with the baseline whose drop ratio is set to 0, CLIP-Adapter-DD achieves comparable results. This indicates that the CLIP model itself already fits the data very well, yet DynamicAug still outperforms it in some of the experiments.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Yu, X., Zhao, H., Zhang, M. et al. DynamicAug: Enhancing Transfer Learning Through Dynamic Data Augmentation Strategies Based on Model State. Neural Process Lett 56, 176 (2024). https://doi.org/10.1007/s11063-024-11626-9


DOI: https://doi.org/10.1007/s11063-024-11626-9