Abstract

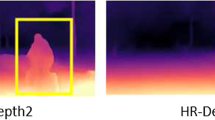

In the unsupervised domain adaptation (UDA) (Akada et al. Self-supervised learning of domain invariant features for depth estimation, in: 2022 IEEE/CVF winter conference on applications of computer vision (WACV), pp 3377–3387 (2022). 10.1109/WACV51458.2022.00107) depth estimation task, a new adaptive approach is to use the bidirectional transformation network to transfer the style between the target and source domain inputs, and then train the depth estimation network in their respective domains. However, the domain adaptation process and the style transfer may result in defects and biases, often leading to depth holes and instance edge depth missing in the target domain’s depth output. To address these issues, We propose a training network that has been improved in terms of model structure and supervision constraints. First, we introduce a edge-guided self-attention mechanism in the task network of each domain to enhance the network’s attention to high-frequency edge features, maintain clear boundaries and fill in missing areas of depth. Furthermore, we utilize an edge detection algorithm to extract edge features from the input of the target domain. Then we establish edge consistency constraints between inter-domain entities in order to narrow the gap between domains and make domain-to-domain transfers easier. Our experimental demonstrate that our proposed method effectively solve the aforementioned problem, resulting in a higher quality depth map and outperforming existing state-of-the-art methods.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

In the field of environment perception in navigation, computer vision-related tasks such as point cloud recognition, 3D reconstruction, and point cloud semantic segmentation often rely on the depth information provided by depth estimation algorithms [1,2,3,4,5,6]. Many advanced monocular depth estimation methods have a strong desire for annotated datasets [7, 8]. Unfortunately, obtaining accurate depth labels for real scenes often requires a significant amount of time and resources. Therefore, unsupervised monocular depth estimation has become a popular research direction in the field of depth estimation [9, 10]. Several unsupervised methods have been proposed, such as self-supervision using video sequences [11] and domain adaptation to generalize network models with rich annotation information to unannotated real data. Among them, the commonly used steps for UDA depth estimation include style transfer, feature extraction, and depth estimation. Earlier UDA depth estimation used a one-way translation network to convert synthetic images to the real domain, and then performed task network learning in the domain [12, 13]. In recent years, the approach has been gradually improved and iterated with the use of a CycleGAN [14] network to achieve bidirectional symmetric style transfer between two domains [14,15,16,17,18].The two types of conversion results are input into the synthetic domain and the real domain, respectively, and each uses an independent task network for learning. According to statistics from the latest relevant review papers, 33 of the 49 papers in the field of unsupervised domain adaptation in recent years are GAN-Based [19, 20]. The effectiveness of the cycleGAN model is particularly prominent. With the advantages of dual-cycle generative adversarial learning, it achieves high semantic and phase consistency between the target domain and the source domain, leading to better domain transfer. This advantage makes it an absolute mainstream method for unsupervised domain adaptation [19,20,21,22]. While the above strategies have achieved good results in domain-adaptive depth estimation, most of the research focuses on optimizing the style transfer between two domain inputs [16]. They ignored the style correction of two loop branches at the end, resulting in missing depth information at the edges of object instances in the predicted depth map, as well as depth missing holes (predicting distances close to infinity) caused by strong light reflections from glass, car windows, mirrors, etc.. This issue also affected their prediction accuracy metrics. In our work, we use a GAN-based UDA model, but specifically optimized the network for end-depth estimation tasks. Some research has shown that the self-attention mechanism is conducive to discovering contextual connections in a single image, making it useful for pixel-wise image processing such as depth estimation [23,24,25,26]. Edge constraints can also preserve more shape semantic information on the basis of geometric constraints [24, 27,28,29], which can effectively improve the quality of predicted depth maps. Therefore, we make full use of these two features: First, in the depth estimation network task of a single domain, we insert a self-attention mechanism module to enhance the pixel-wise feature extraction capability of the network and strengthen the pixel correlation of a single image, filling depth missing holes [30]. After the depth map output results of the respective networks in the two domains, we add an edge prediction network to guide attention to high-frequency boundary information in the entire network training process, so that the depth estimation network outputs a more accurate depth map of the target contour. We also use deep learning algorithms to extract edge information from raw images in the target domain, which is used as an unsupervised prior for unlabeled datasets in the real domain. The loss function is formed with the output of the edge extraction network in the two domains, respectively. The edge consistency constraints of similar targets in different domains are established to narrow the gap between domains, thereby solving the problem of missing depth of large-area targets caused by domain adaptive deviation. Our contributions can be summarized as:

-

1.

A new UDA depth estimation framework is proposed to establish edge consistency constraints between domains, which reduce domain gap and improve domain adaptation performance.

-

2.

We effectively introduced the edge-guided self-attention mechanism in single-domain depth estimation tasks to complete depth map holes and missing areas, resulting in high-quality predicted depth maps.

-

3.

Through a large number of experiments, We have successfully validated the efficacy of our approach on the KITTI dataset [31] and demonstrated its capability to generalize well on the Make3D dataset [32].

2 Related Work

2.1 UDA Depth Estimation

The depth estimation algorithm trains a neural network model based on the relationship between pixel values, enabling it to estimate depth information from a single image, which is a pioneering approach [5, 33, 34]. However, collecting the necessary datasets for training this algorithm is challenging. To address this issue, an unsupervised domain adaptive depth estimation algorithm has been introduced.

The approach involves a synthetic data corpus and their labeled counterparts as the source domain, with real but unlabeled data as the target domain. The objective is to train a depth estimation task network in the source domain in a supervised manner and then generalize it to the target domain, creating a network model that can predict depth in the target domain [1, 35,36,37].The UDA depth estimation algorithm has undergone multiple iterative improvements.

Earlier, Atapour et al. [38] developed a two-stage framework in which they first trained an adversarial network to translate natural images into synthetic images and then trained a depth estimation network in the synthetic image domain in a supervised manner. Then Kundu et al. [13] proposed a content congruent regularization method to tackle the model collapse issue caused by domain adaptation in high dimensional feature space.Zheng et al. [12] designed a wide-spectrum input translation network in T\(^2\)Net to further unify real images with synthetic images, leading to more robust translations. Zhao et al. [15] proposed a novel geometry-aware symmetric domain adaptation network, i.e., GASDA by exploiting the epipolar geometry of the stereo images, thereby suppressing undesired distortions during the image translation stage and obtaining better depth map quality.

GASDA [15] adopts the most advanced UDA algorithm framework: generative adversarial learning [14, 17, 37, 39, 40], there is still potential for improvement in certain aspects of the network structure, and we have developed our own algorithm framework based on it.

2.2 Attention Mechanism in Depth Estimation

The attention mechanism is a powerful tool in the field of deep learning image processing tasks, allowing the network model to focus on extracting effective information and improving the distinguishability of target features [6, 23,24,25, 41,42,43]. Woos’ proposed CBAM algorithm [44], aiming to guide the model to focus on spatial hierarchies and channel-wise spatial fusion hierarchies. Through the CBAM module, the model can dynamically learn channel and spatial attention weights in each convolutional block, adjusting the importance of different channels and spatial locations based on the content of the input feature map. In the context of pixel-wise monocular depth estimation, Xu et al. [43] proposed a multiscale spatial attention-guided block to enhance saliency of small objects and built a double-path prediction network to estimate depth map and semantic label simultaneously. CBAM tend to focus more on the importance of local regions, lacking global connections. However, self-attention mechanisms, with their unique per-pixel correlation mechanism, can simultaneously consider the global and local aspects of feature extraction, making them more suitable for pixel-wise image tasks with stronger requirements for contextual relationships. Chen et al. [41] propose a pixel-wise self-attention model for monocular depth estimation that can capture contextual information associated with each pixel. Huang et al. [24] effectively restore depth map integrity by using self-attention mechanism.

At present, attention mechanisms are usually widely used in supervised depth estimation networks [45].

Therefore, to address the issue of depth missing holes and large-area target depth loss in unsupervised domain adaptive depth estimation [12, 15, 30], we draw inspiration from the attention mechanism’s characteristics and add a self-attention module to the depth estimation network, resulting in excellent performance.

2.3 Edge Information for Pixel-wise Image Tasks

In deep learning-based image tasks, Xu et al. [46] proposed that the convolutional neural network tends to focus more on learning low-frequency content rather than high-frequency information in the image. The pixel-level image task has higher requirements on the learning ability of high-frequency regions such as edge contours [24, 28]. Liu et al. [27] developed a contour adaptation network to extract marginal information of brain tumors in magnetic resonance imaging (MRI) slices, aiding in the task of brain tumor cross-modality segmentation.

In the above pixel-wise image tasks, edge information is usually superimposed with the input to achieve the enhancement of high-frequency information in a single domain [27, 29, 36, 47]. While performing domain-adaptive depth estimation, we prefer to strengthen the connection between the two domains and use edge information to establish edge consistency constraints in the output section.

3 Methodology

3.1 Review Of GASDA and Modififications

An overview of the algorithm framework, the framework consists of four main parts:image style transfer,source domain task, target domain task, and unsupervised Cues. (i) The style transfer network contains two generators and two discriminators ( \(i.e. , \textrm{G}_{\textrm{T} \rightarrow \textrm{S}}, \textrm{G}_{\textrm{S} \rightarrow \textrm{T}}, \textrm{D}_{\textrm{T}}, \textrm{D}_{\textrm{S}}\) ),The way of transfer draws on CycleGAN [14]. (ii) The source domain and the target domain have a symmetric task network structure, including the improved attention mechanism depth estimation network (i.e., SA-F\(_{\textrm{S}}\) and SA-F\(_\textrm{T}\)) and auxiliary edge prediction network (i.e., Unet-s and Unet-t). (iii) Unsupervised cues including stereo Image pair with edge GT (edge ground truth). (iv) The green arrow is the source domain arrow flow path, the blue arrow is the target domain data flow path, GC is the geometric consistency loss, and L\(_{\textrm{1}}\) is the L1 loss

GASDA [15] is an advanced UDA monocular depth estimation algorithm based on adversarial training. In the style transfer stage, GASDA [15] uses the CycleGAN [14] network to obtain the style mapping from the target domain image to the source domain and from the source domain image to the target domain through adversarial training. In the depth estimation stage, the source domain and the target domain have independent encoder-decoders, and the input of the two encoders contains both the image datasets of each domain itself and the image map transferred from the neighborhood style.

We adopt the advanced UDA framework from the aforementioned GASDA algorithm [15] and incorporat some of its constraints. For the depth prediction output of the source domain image and its style transfer image, the ground-truth depth map of the source domain is used to construct the L1 loss function between them. For the depth prediction output of the target domain image and its style transfer image, since there is no direct ground truth, we introduce cross-domain consistency loss and binocular stereo image pairs to achieve constraints. Meanwhile, we design our own encoder-decoder. To enable the encoder to obtain more powerful feature extraction capabilities, we replace the original old encoder with EfficientNet-B5 [48] and implant a self-attention mechanism module. As shown in the Fig. 1, we add an edge detection network at the end of the depth estimation network and introduce additional edge information to construct inter-domain edge consistency constraints, which further enhanced the training effect and improved the network performance. Providing more details on each additional module will be done in the next few sections.

Self-attention architecture (Sect. 3.2), the feature layer size is represented as a tensor in the colored square, e.g., H\(\times \)W\(\times \)176 means the number of channels is 176, the resolution of a single channel is H\(\times \)W, 1\(\times \)1 conv represents a standard convolution process with the kernel size of 1\(\times \)1. (Because the batch parameter does not participate in the calculation, the batch parameter is omitted here)

3.2 Edge-guided Self-attention Mechanism

As a form of attention mechanism, the self-attention mechanism is commonly used to optimize pixel-wise tasks such as semantic segmentation and depth estimation. It emphasizes the correlation between different pixels within a single channel, thereby facilitating the exploration of contextual connections between pixels and resulting in an output with superior detail quality. This mechanism has proven to be particularly effective for the depth completion task [23,24,25, 41, 45, 49]. Meanwhile, the attention to edge information in the network prediction process can better generate a clear boundary and complete depth map [27, 28]. In UDA depth estimation, we attempt to incorporate self-attention guided by edge information into the network to address the problem of depth map missing holes. Therefore, as shown in the Fig 2, we draw inspiration from the non-local network structure [50] to develop a self-attention block and insert it between the encoder and decoder of the domain depth estimation task network (SA-F\(_{\textrm{S}}\) and SA-F\(_\textrm{T}\)). First, the output of the encoder is passed through three 1\(\times \)1 convolutions to obtain three feature vectors, namely query (q), key (k), and value (v). The query vector and key vector are then input into the pairing function, which can be expressed as:

where x is the output, i is a certain position in the input feature map matrix, j is the index of all positions,\(W_q\) and \(W_k\) are the weight matrices that need to be learned during the 1\(\times \)1 convolution process. The purpose of doing the Batch matrix multiplication operation is to calculate the correlation between the i-th pixel position and all other positions. We then use the softmax function to limit the output value of \(f\left( x_i, x_j\right) \) between 0 and 1, making the training gradient more stable.After performing the Batch matrix multiplication operation again on the correlation map and the value feature vector,a set of pixel matrices integrated into the self-attention mechanism are obtained. The overall function is expressed as:

where \(g(x)=W_v x\) is the value feature vector. After reconstructing the channel scale of the result through 1\(\times \)1 convolution, perform Element-wise add operation with shortcut, and finally get the output of the module.

Then we add an auxiliary edge prediction network (Unet-t and Unet-s) at the end of the depth prediction network in two domains, as shown the Fig. 3. This encourages the front network and self-attention module to prioritize the boundaries in the image, resulting in high-quality and clear boundary contours in the output depth map.

3.3 Consistency Constraint Between Two Domains

In previous domain-adaptive depth estimation approaches, since there are no supervised labels available in the target domain, the image in the target domain is input into the source domain task network, and a depth consistency loss is established with the target domain output as a form of supervision. Nevertheless, this approach lacks structural constraints, making it susceptible to producing distorted boundaries and even missing target depth in large areas, ultimately affecting the depth map’s quality. GASDA [15] addresses this issue by leveraging binocular stereo imaging pairs to create unsupervised prior constraints that suppress undesired distortions. Building upon this, in the single domain, we perform depth map edge prediction using the Encoder-Decoder structure i.e., Unet-t and Unet-s, followed by edge’s ground truth to establish constraints, further strengthening structural constraints and enhancing depth estimation’s quality. While in real dataset, we only have input images and lack the depth map used to generate the edge’s ground truth. Using classical algorithms such as the Sobel algorithm [51] to directly extract the input image generates a large number of internal textures that can be mistaken for real edges, impacting edge consistency. Nevertheless, deep learning edge detection networks can effectively mask these shallow texture details and represent deep feature information well [52]. Therefore, we use the RCF-Net [53] edge detection network to extract only the entity outlines in images, ignoring internal texture features. The extracted results are utilized as ground-truth supervision for training an edge prediction network. At the same time, a boundary consistency loss function is constructed using the output results of independent edge predictions in both domains, which helps to establish network attention association and narrow the gap between the two domains. This makes transferring between domains much easier.

Edge detection network structure diagram (Unet-t and Unet-s), the blue blocks is the feature layer of the downsampling process, the red blocks is the feature layer of the upsampling process, and the green is the part of the downsampling feature layer that participates in the skip link. The horizontal number represents the number of feature layers, and the vertical represents the number of feature channels.Regarding the arrows, Max pool, Conv2d and ConvTranspose2d represent the standard maximum pooling, convolution, and deconvolution operations in the pytorch library, respectively. 2\(\times \)2 and 3\(\times \)3 are the convolution kernel sizes, and ReLU is the activation function

3.4 Loss Function

In terms of loss function, we continue to use some of the same loss functions as GASDA [15], including the adversarial loss in style transfer:

Among them, the generator \(G_{s 2 t}\left( G_{t 2 s}\right) \) and the corresponding discriminator \(D_t\left( D_s\right) \) constitute a bidirectional style transfer network. \(X_t\) and \(X_s\) represent the target domain and the source domain, respectively, while \(x_t\left( x_s\right) \) is the corresponding input image. The overall loss function used is the GAN loss [14].

We establish a cycle consistency loss \(L_{c y c l e}\left( G_{t 2\,s}, G_{s 2 t}\right) \) to prevent mode crashes, and the geometric consistency loss \(L_{i nf}\left( G_{t 2\,s}, G_{s 2 t}, X_s, X_t\right) \) is adopted, which promotes the generator to prefer to preserve the geometric information.

The loss of the entire style transfer part can be summarized as:

where \(\lambda _1\) and \(\lambda _2\) are the trade-off parameters.

For the depth estimation task, we feed the source domain image into the source domain depth estimation network to obtain the depth map. Simultaneously, after the generator transfers the same source domain image to the target domain, we input it into a target domain depth estimation network to obtain a depth map from an alternative pathway. We then use these estimated depth maps with the ground truth of the source domain dataset to build the L1 loss \(L_{stask}\left( L_{ttask}\right) \). The source domain supervised depth estimation loss \(L_{task}\) can be summarized as:

Keeping the same as GASDA [15], we also exploit the epipolar geometry of real stereo images and unsupervised cues to implement stereo geometry constraints. And we employ L1 loss and single-scale SSIM [54] to construct a geometric consistency loss \(L_{sgc}\left( L_{tgc}\right) \) in the source (target) domain.

where \(L_{g c}\) is the full geometry consistency loss. In the stereo image pairs of target domain, \(x_{t 2 t}^{\prime }\left( x_{t 2\,s}^{\prime }\right) \) is the warp of the right image based on the estimated depth map of the left image, using bilinear sampling. \(\mu \) is set to be 0.85, and \(\eta \) is 0.15.

In the depth map, in order to suppress the local jump of depths in the target edge region, we establish the edge depth smoothing loss [1, 15]:

The depth consistency loss established by estimated depth map of the target domain is as follows:

The depth maps of the real scene image mapped in both domains constructs this constraint through the L1 loss function

Additionally, we have incorporated a boundary consistency loss function, where the edges represent obvious high-frequency information, and thus,we only require pixel-wise alignment constraints using L1 loss Thus, the supervision of the single-domain edge prediction network and the edge consistency constraints between domains are realized:

where \(L_{edge }\) is the overall edge consistency loss, \(L_{tedge }\) and \(L_{sedge }\) are the edge loss in the respective domain; \(U_t\) and \(U_s\) are the edge detection network in the target domain and the source domain, respectively. The input image of the target domain generates the edge truth label z through RCF-NET [53], and A loss constraint is established with the output of the edge detection network \(z_{t 2 t}\left( z_{t 2\,s}\right) \) in two domains.

Finally, integrating all the above loss functions, we can get:

where \(\gamma _n(n \in \{1,2,3,4,5\})\) are trade-off factors.

4 Experiments

4.1 Network Architecture

We construct a style transfer network based on CycleGAN [14], utilize EfficientNet-B5 [48] pre-trained on ImageNet [55] as the encoder in the depth estimation network (i.e., SA-F\(_{\textrm{S}}\) and SA-F\(_\textrm{T}\)), and follow the decoder in T\(^2\)net [12]. A self-attention mechanism module is inserted after the output of the last layer of EfficientNet-B5 [48]. At the end of the depth estimation network, a basic auxiliary U-net [56] (i.e., Unet-s and Unet-t) is designed to perform the edge prediction task. To obtain the edge ground truth of the target domain image, we use RCF-NET [53], which directly loads the open-source pre-training weights based on BSDS500 [47] and is not involved in network training.

4.2 Datasets

Our source domain dataset is the standard vKITTI dataset [7], which consists of 21,260 image-depth pairs with depth labels extracted from 50 synthetic videos of size 375\(\times \)1242. The target domain dataset is the KITTI dataset [31], which contains 42,382 stereo image pairs of size 375\(\times \)1242 with corresponding depth label information collected from real-world scenes. In our experiment, these labels are only used to evaluate the accuracy of the algorithm on the test dataset. We select 32 scenes from the KITTI dataset [31], using 22,600 images for training and 888 images for validation. We use 697 pictures selected from other 29 scenes to test the model performance. The 200 training images from the KITTI stereo 2015 [57] dataset are also used for further result testing, and 134 test images from the Make3D [32] dataset are used to evaluate the model’s generalization performance.

4.3 Training Details

Using PyTorch, our network is trained on a single NVIDIA GeForce RTX 3090 with 22GB memory over two phases. The first phase involves warm-up training to give the single-domain network some basic depth prediction and edge detection capabilities. We introduce the pre-trained weights of CycleGAN [14] and fix the weight parameters of this part. Then, we train SA-F\(_\textrm{T}\), Unet-t on \(\left\{ X_t, G_{s 2 t}\left( x_s\right) \right\} \), and SA-F\(_{\textrm{S}}\), \(\left\{ X_s, G_{t 2 s}\left( x_t\right) \right\} \) for 20 epochs, setting the momentum of \(\beta _1\)=0.9, \(\beta _2\)=0.999, and the initial learning rate of \(\alpha \) = 0.0001 using the ADAM solver [58]. Hyperparameters \(\gamma _1\) = 1.0, \(\gamma _2\) = 1.0, \(\gamma _4\) = 0.01 and \(\gamma _5\) = 1.0 are set in this step.

In the second step, the same training mode as GASDA [15] is used to achieve global network training. The network model ( SA-F\(_{\textrm{T}}\), Unet-t, SA-F\(_{\textrm{T}}\), Unet-s) trained in the warm-up stage is frozen, and the \(G_{s 2 t}\) and \(G_{t 2 s}\) in the style transfer network are trained in m batches. Then, we freeze them and train the remaining network n batches. We set m = 3,n = 7, and repeat the whole process for about 55 batches until the network converges. In this step, we set the hyperparameters as \(\beta _1\)=0.9, \(\beta _2\)=0.999, and set \(\alpha \) = 0.000002 in the first part of training and \(\alpha \) = 0.00001 in the latter part of training. The trade-off factors are set to \(\lambda _1\) = 10, \(\lambda _2\) = 30, \(\gamma _1\) = 50, \(\gamma _2\) = 50, \(\gamma _3\) = 50, \(\gamma _4\) = 0.5, \(\gamma _5\) = 100.The original KITTI image data is downscaled to a size of 640 \(\times \) 192 and the same data augmentation strategy as GASDA [15] is employed throughout the training phase.

4.4 Ablation Study

In order to effectively demonstrate the impact of various improvements in our model on enhancing the UDA depth estimation algorithm’s performance, we conduct several ablation experiments on the KITTI dataset [31] using feature segmentation. Building upon the GASDA [15] baseline model, we design the following models: 1) A baseline model that solely incorporates the self-attention mechanism, 2) A baseline model that only integrates edge constraints, 3) A complete model with edge constraints supervised by L2 loss and 4) A complete model with edge constraints supervised by L1 loss. To ensure consistency in other factors such as training methods and hyperparameters, we separately train these four models and obtain the subsequent results.

Table 1 highlights that our contributed baseline model performs the worst. Introducing a single self-attention mechanism or edge consistency constraint alone does not significantly improve performance. However, when we simultaneously introduce these two improvement schemes and use the complete improved model, it greatly enhances depth estimation performance. These observations suggest that the attention mechanism module enables the model to achieve pixel-level contextual relevance and the individual edge constraint technique helps optimize the depth map contours but provides limited improvement. By combining the advantages of attention mechanism and edge constraints, both can be mutually reinforced, allowing the model to focus more on high-frequency edge information ignored by traditional convolution structures and strengthen cross-domain association. Now for the loss function in the key edge constraint improvement, we have additionally conducted an L2 loss function ablation experiment. The results can be seen that the final model accuracy is lower than the L1 loss. Studies [6, 15] have shown that L1 loss is good at handling abnormal outliers because its penalty for outliers is fixed, while L2 loss performs better when handling small errors. The purpose of our additional edge constraint supervision is to solve the problems of poor edge quality and loss of local depth information in the predicted depth map. Such problems are caused by abnormal large errors in some areas, so L1 loss will have better results at this time. Ultimately, the entire network far exceeds the baseline model in multiple metrics, achieving significant improvements and outperforming current state-of-the-art techniques in some aspects.

4.5 Comparison with State-of-the-Art Methods

In Table 2 we use the scores obtained by Eigen split [2] as an evaluation criterion to achieve comparison with other existing algorithms, where the test split consists of 697 images in a total of 29 traffic scenes.And we take the range where ground true depth is less than 80 m as the evaluation area.

My algorithm is based on the GASDA algorithm, and it has a convincing improvement compared with the classical algorithm and the GASDA [15] algorithm. Compared with the state-of-the-art algorithms, the DESC algorithm proposed by Mikolajczyk et al. [36] requires additional semantic segmentation of the source domain and ground truth labels of edge images in order to effectively improve the performance of the algorithm.This process is complex and resource-intensive In contrast, we have achieved some surpassing indicators by using only simpler and less prior information.The following five scale-invariant metrics are used to measure the performance of the algorithm:

Abs Rel: \(\frac{1}{T} \sum _{k \in T} \frac{\left\| d_k-d_k^{g t}\right\| }{d_k^{g t}}\) Sq Rel: \(\frac{1}{T} \sum _{k \in T} \frac{\left\| d_k-d_k^{g t}\right\| ^2}{d_k^{g t}}\)

RMSE: \(\sqrt{\frac{1}{T} \sum _{k \in I}\left\| d_k-d_k^{g t}\right\| ^2}\) RMSE Log: \(\sqrt{\frac{1}{T} \sum _{k \in I}\left\| \log d_k-\log d_k^{g t}\right\| ^2}\)

Threshold: \(\delta =max \left( \frac{d_k}{d_k^{g t}}, \frac{d_k^{g t}}{d_k}\right) \)

Here, T represents the total number of pixels across all test images. \(d_k\),\(d_k^{g t}\) represent the predicted depth and ground-truth depth, respectively, corresponding to the kth pixel.

The KITTI 2015 stereo 200 training set [57] is more accurate than the laser depth annotations in KITTI, thanks to its denser ground truth labels. As seen from the first two experiments, our algorithm demonstrates excellent performance in terms of depth structure integrity, and dense annotations on instances such as cars and buildings can better highlight the strengths of our algorithm. Therefore, in the evaluation results presented in Table 3, the accuracy gap between our algorithm and GASDA [15] is greater than that in Table 1, while compared with the semi-supervised DESC algorithm that uses complex semantic segmentation, edge contours, and height pseudo-labels, our algorithm also has a part of performance advantages.

In Table 4, We conduct a model generalization quality evaluation on the Make3D [32] dataset, which includes 134 test images. Despite the large domain gap between datasets, good algorithmic performance is still achieved. Although we do not train on this dataset, our performance is superior to that of some methods trained on this dataset, indicating the strong generalization capability of our model. However, as our philosophy emphasizes bridging the gap between the target and source domains, the lack of training in certain areas can result in weaker performance of some metrics compared to classical algorithms.

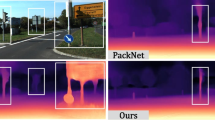

As shown in the Fig. 4, we compared our predicted depth maps with several classical algorithms. To address the issue of unmeasurable depth in images with sky scenes, we occluded the corresponding area at the top using the same processing method as T\(^2\)net [12] and GASDA [15]. The yellow box shows an enlarged view of the details in the red part. Note that the details of some image samples belonging to the T\(^2\)net [12] algorithm were not included in the comparison due to partial occlusion of the sky. Our results demonstrate that our algorithm outperforms the first two algorithms in terms of producing clearer and more complete representations of the buildings in the red boxes in the first and second rows of depth maps. Specifically, we found that the parts of the large vehicles indicated by the red boxes had obvious large-area depth loss in the previous algorithms, while in our algorithm these regions were patched. Moreover, in the last five rows, the leaves, glass, and brightly illuminated areas in the red box showed a significant number of abnormal deep missing holes in the results of past algorithms. By contrast, our algorithm solved this problem well, resulting in better quality depth maps.

5 Conclusion

In this paper, we present a method to enhance the performance of the UDA depth estimation algorithm by utilizing attention mechanism and edge consistency constraints. Specifically, we introduce edge-guided self-attention mechanisms in the depth estimation networks of both the source and target domains. Moreover, we establish consistency constraints between the ground truth edge contours of the target domain samples and the edge prediction outcomes of the two domains. By employing these techniques, we minimize the gap between the source and target domains, forcing the model to pay more attention to high-frequency edge information while suppressing geometrical distortions in the depth prediction process. It solves the problem of incomplete depth perception of objects such as vehicles and buildings in depth maps, as well as the frequent occurrence of missing depth holes, improving the accuracy and completeness of depth maps. Our experimental results demonstrate that our proposed approach outperforms the existing state-of-the-art methods on the KITTI and KITTI stereo 2015 dataset. Additionally, ablation studies validate the effectiveness of each component of our approach.Our model exhibits exceptional generalization performance on the Make3D dataset, further validating its efficacy. In future work, we would like to further extend the idea of combining attention mechanisms with edge information to Unsupervised Multi-Task Domain Adaptation

References

Akada H, Bhat SF, Alhashim I, Wonka P (2022) Self-supervised learning of domain invariant features for depth estimation. In: 2022 IEEE/CVF winter conference on applications of computer vision (WACV). pp. 3377–3387. https://doi.org/10.1109/WACV51458.2022.00107

Eigen D, Puhrsch C, Fergus R (2014) Depth map prediction from a single image using a multi-scale deep network. Advances in neural information processing systems 27. https://doi.org/10.48550/arXiv.1406.2283

Atapour-Abarghouei A, Breckon TP (2019) Veritatem dies aperit - temporally consistent depth prediction enabled by a multi-task geometric and semantic scene understanding approach. In: 2019 IEEE/CVF conference on computer vision and pattern recognition (CVPR). pp. 3368–3379. https://doi.org/10.1109/CVPR.2019.00349

Chen X, Chen X, Zha ZJ (2019) Structure-aware residual pyramid network for monocular depth estimation. In: Proceedings of the twenty-eighth international joint conference on artificial intelligence, IJCAI-19. pp. 694–700. https://doi.org/10.24963/ijcai.2019/98

Saxena A, Chung S, Ng A (2005) Learning depth from single monocular images. Advances in neural information processing systems 18

Zhang Y, Funkhouser T (2018) Deep depth completion of a single rgb-d image. In: Proceedings of the IEEE conference on computer vision and pattern recognition. pp. 175–185. https://doi.org/10.1109/cvpr.2018.00026

Gaidon A, Wang Q, Cabon Y, Vig E (2016) Virtual worlds as proxy for multi-object tracking analysis. In: Proceedings of the IEEE conference on computer vision and pattern recognition. pp. 4340–4349. https://doi.org/10.1109/cvpr.2016.470

Hu J, Zhang Y, Okatani T (2019) Visualization of convolutional neural networks for monocular depth estimation. In: Proceedings of the IEEE/CVF international conference on computer vision. pp. 3869–3878. https://doi.org/10.1109/iccv.2019.00397

Zhan H, Garg R, Weerasekera CS, Li K, Agarwal H, Reid I (2018) Unsupervised learning of monocular depth estimation and visual odometry with deep feature reconstruction. In: Proceedings of the IEEE conference on computer vision and pattern recognition. pp. 340–349. https://doi.org/10.1109/cvpr.2018.00043

Garg R, Bg VK, Carneiro G, Reid I (2016) Unsupervised cnn for single view depth estimation: Geometry to the rescue. In: Computer Vision–ECCV 2016: 14th European conference, Amsterdam, The Netherlands, October 11-14, 2016, Proceedings, Part VIII 14. pp. 740–756. Springer. https://doi.org/10.1007/978-3-319-46484-8_45

Godard C, Mac Aodha O, Firman M, Brostow GJ (2019) Digging into self-supervised monocular depth estimation. In: Proceedings of the IEEE/CVF international conference on computer vision. pp. 3828–3838. https://doi.org/10.1109/iccv.2019.00393

Zheng C, Cham TJ, Cai J (2018) T2net: Synthetic-to-realistic translation for solving single-image depth estimation tasks. In: Proceedings of the European conference on computer vision (ECCV). pp. 767–783. https://doi.org/10.1007/978-3-030-01234-2_47

Kundu JN, Uppala PK, Pahuja A, Babu RV (2018) Adadepth: Unsupervised content congruent adaptation for depth estimation. In: Proceedings of the IEEE conference on computer vision and pattern recognition. pp. 2656–2665 . https://doi.org/10.1109/cvpr.2018.00281

Zhu JY, Park T, Isola P, Efros AA (2017) Unpaired image-to-image translation using cycle-consistent adversarial networks. In: Proceedings of the IEEE international conference on computer vision. pp. 2223–2232. https://doi.org/10.1109/iccv.2017.244

Zhao S, Fu H, Gong M, Tao D (2019) Geometry-aware symmetric domain adaptation for monocular depth estimation. In: Proceedings of the IEEE/CVF conference on computer vision and pattern recognition. pp. 9788–9798. https://doi.org/10.1109/cvpr.2019.01002

Huang X, Liu MY, Belongie S, Kautz J (2018) Multimodal unsupervised image-to-image translation. In: Proceedings of the European conference on computer vision (ECCV). pp. 172–189. https://doi.org/10.1007/978-3-030-01219-9_11

Chen YC, Lin YY, Yang MH, Huang JB (2019) Crdoco: Pixel-level domain transfer with cross-domain consistency. In: Proceedings of the IEEE/CVF conference on computer vision and pattern recognition. pp. 1791–1800. https://doi.org/10.1109/cvpr.2019.00189

PNVR K, Zhou H, Jacobs D (2020) Sharingan: Combining synthetic and real data for unsupervised geometry estimation. In: Proceedings of the IEEE/CVF conference on computer vision and pattern recognition. pp. 13974–13983. https://doi.org/10.1109/cvpr42600.2020.01399

Schwonberg M, Niemeijer J, Termöhlen JA, Schäfer JP, Schmidt NM, Gottschalk H, Fingscheidt T (2023) Survey on unsupervised domain adaptation for semantic segmentation for visual perception in automated driving. IEEE Access. https://doi.org/10.1109/access.2023.3277785

Chiou E, Panagiotaki E, Kokkinos I (2022) Beyond deterministic translation for unsupervised domain adaptation. arXiv preprint arXiv:2202.07778 . https://doi.org/10.48550/arXiv.2006.08658

Thanh PTH, Bui MQV, Nguyen DD, Pham TV, Duy TVT, Naotake N (2024) Transfer multi-source knowledge via scale-aware online domain adaptation in depth estimation for autonomous driving. Image Vis Comput 141:104871. https://doi.org/10.1016/j.imavis.2023.104871

Liao Y, Zhou W, Yan X, Li Z, Yu Y, Cui S (2023) Geometry-aware network for domain adaptive semantic segmentation. Proc AAAI Conf Artif Intell 37:8755–8763. https://doi.org/10.1609/aaai.v37i7.26053

Chen Y, Zhao H, Hu Z, Peng J (2021) Attention-based context aggregation network for monocular depth estimation. Int J Mach Learn Cybern 12:1583–1596. https://doi.org/10.1007/s13042-020-01251-y

Huang YK, Wu TH, Liu YC, Hsu WH (2019) Indoor depth completion with boundary consistency and self-attention. In: Proceedings of the IEEE/CVF international conference on computer vision workshops. pp. 0–0. https://doi.org/10.1109/iccvw.2019.00137

Yu J, Lin Z, Yang J, Shen X, Lu X, Huang TS (2019) Free-form image inpainting with gated convolution. In: Proceedings of the IEEE/CVF international conference on computer vision. pp. 4471–4480 . https://doi.org/10.1109/iccv.2019.00457

Liu W, Rabinovich A, Berg AC (2015) Parsenet: Looking wider to see better. arXiv preprint arXiv:1506.04579 . https://doi.org/10.48550/arXiv.1506.04579

Liu X, Xing F, El Fakhri G, Woo J (2022) Self-semantic contour adaptation for cross modality brain tumor segmentation. In: 2022 IEEE 19th international symposium on biomedical imaging (ISBI). pp. 1–5. IEEE. https://doi.org/10.1109/isbi52829.2022.9761629

Tao Z, Shuguo P, Hui Z, Yingchun S (2021) Dilated u-block for lightweight indoor depth completion with sobel edge. IEEE Signal Process Lett 28:1615–1619. https://doi.org/10.1109/lsp.2021.3092280

Qi X, Liu Z, Liao R, Torr PHS, Urtasun R, Jia J (2022) Geonet++: Iterative geometric neural network with edge-aware refinement for joint depth and surface normal estimation. IEEE Trans Pattern Anal Mach Intell 44(2):969–984. https://doi.org/10.1109/tpami.2020.3020800

Camplani M, Salgado L (2012) Efficient spatio-temporal hole filling strategy for kinect depth maps. In: Three-dimensional image processing (3DIP) and applications Ii. vol. 8290, pp. 127–136. SPIE. https://doi.org/10.1117/12.911909

Menze M, Geiger A (2015) Object scene flow for autonomous vehicles. In: Proceedings of the IEEE conference on computer vision and pattern recognition. pp. 3061–3070. https://doi.org/10.1109/cvpr.2015.7298925

Saxena A, Sun M, Ng AY (2008) Make3d: learning 3d scene structure from a single still image. IEEE Trans Pattern Anal Mach Intell 31(5):824–840. https://doi.org/10.1109/tpami.2008.132

Liu F, Shen C, Lin G, Reid I (2015) Learning depth from single monocular images using deep convolutional neural fields. IEEE Trans Pattern Anal Mach Intell 38(10):2024–2039. https://doi.org/10.1109/tpami.2015.2505283

Eigen D, Puhrsch C, Fergus R (2014) Depth map prediction from a single image using a multi-scale deep network. Advances in neural information processing systems 27. https://doi.org/10.48550/arXiv.1406.2283

Zhao Y, Kong S, Shin D, Fowlkes C (2020) Domain decluttering: Simplifying images to mitigate synthetic-real domain shift and improve depth estimation. In: Proceedings of the IEEE/CVF conference on computer vision and pattern recognition. pp. 3330–3340. https://doi.org/10.1109/cvpr42600.2020.00339

Lopez-Rodriguez A, Mikolajczyk K (2022) Desc: Domain adaptation for depth estimation via semantic consistency. Int J Comput Vis 131(3):752–771. https://doi.org/10.1007/s11263-022-01718-1

Liu X, Guo Z, Li S, Xing F, You J, Kuo CCJ, El Fakhri G, Woo J (2021) Adversarial unsupervised domain adaptation with conditional and label shift: Infer, align and iterate. In: Proceedings of the IEEE/CVF international conference on computer vision. pp. 10367–10376 . https://doi.org/10.1109/iccv48922.2021.01020

Atapour-Abarghouei A, Breckon TP (2018) Real-time monocular depth estimation using synthetic data with domain adaptation via image style transfer. In: Proceedings of the IEEE conference on computer vision and pattern recognition. pp. 2800–2810. https://doi.org/10.1109/iccv48922.2021.01020

Kundu JN, Lakkakula N, Babu RV (2019) Um-adapt: Unsupervised multi-task adaptation using adversarial cross-task distillation. In: Proceedings of the IEEE/CVF international conference on computer vision. pp. 1436–1445. https://doi.org/10.1109/iccv.2019.00152

Goodfellow I, Pouget-Abadie J, Mirza M, Xu B, Warde-Farley D, Ozair S, Courville A, Bengio Y (2020) Generative adversarial networks. Commun ACM 63(11):139–144

Chen X, Wang Y, Chen X, Zeng W (2021) S2r-depthnet: Learning a generalizable depth-specific structural representation. In: Proceedings of the IEEE/CVF conference on computer vision and pattern recognition. pp. 3034–3043. https://doi.org/10.1109/cvpr46437.2021.00305

Vaswani A, Shazeer N, Parmar N, Uszkoreit J, Jones L, Gomez AN, Kaiser Ł, Polosukhin I (2017) Attention is all you need. Advances in neural information processing systems 30 . https://doi.org/10.48550/arXiv.1706.03762

Xu X, Chen Z, Yin F (2021) Multi-scale spatial attention-guided monocular depth estimation with semantic enhancement. IEEE Trans Image Process 30:8811–8822. https://doi.org/10.1109/tip.2021.3120670

Woo S, Park J, Lee JY, Kweon IS (2018) Cbam: Convolutional block attention module. In: Proceedings of the European conference on computer vision (ECCV). pp. 3–19. https://doi.org/10.48550/arXiv.1807.06521

Jaderberg M, Simonyan K, Zisserman A, et al. (2015) Spatial transformer networks. Advances in neural information processing systems 28. https://doi.org/10.48550/arXiv.1506.02025

Xu ZQJ, Zhang Y, Xiao Y (2019) Training behavior of deep neural network in frequency domain. In: Neural Information Processing: 26th international conference, ICONIP 2019, Sydney, NSW, Australia, December 12–15, 2019, Proceedings, Part I 26. pp. 264–274. Springer. https://doi.org/10.48550/arXiv.1807.01251

Arbelaez P, Maire M, Fowlkes C, Malik J (2010) Contour detection and hierarchical image segmentation. IEEE Trans Pattern Anal Mach Intell 33(5):898–916. https://doi.org/10.1109/tpami.2010.161

Tan M, Le Q (2019) Efficientnet: Rethinking model scaling for convolutional neural networks. In: international conference on machine learning. pp. 6105–6114. PMLR. https://doi.org/10.48550/arXiv.1905.11946

Zhang Y, Funkhouser T (2018) Deep depth completion of a single rgb-d image. In: Proceedings of the IEEE conference on computer vision and pattern recognition. pp. 175–185. https://doi.org/10.1109/cvpr.2018.00026

Wang X, Girshick R, Gupta A, He K (2018) Non-local neural networks. In: Proceedings of the IEEE conference on computer vision and pattern recognition. pp. 7794–7803. https://doi.org/10.48550/arXiv.1711.07971

Kittler J (1983) On the accuracy of the sobel edge detector. Image Vis Comput 1(1):37–42. https://doi.org/10.1016/0262-8856(83)90006-9

Xie S, Tu Z (2015) Holistically-nested edge detection. In: Proceedings of the IEEE international conference on computer vision. pp. 1395–1403. https://doi.org/10.1109/iccv.2015.164

Liu Y, Cheng MM, Hu X, Wang K, Bai X (2017) Richer convolutional features for edge detection. In: Proceedings of the IEEE conference on computer vision and pattern recognition. pp. 3000–3009. https://doi.org/10.1109/cvpr.2017.622

Wang Z, Bovik AC, Sheikh HR, Simoncelli EP (2004) Image quality assessment: from error visibility to structural similarity. IEEE Trans Image Process 13(4):600–612. https://doi.org/10.1109/tip.2003.819861

Deng J, Dong W, Socher R, Li LJ, Li K, Fei-Fei L (2009) Imagenet: A large-scale hierarchical image database. In: 2009 IEEE conference on computer vision and pattern recognition. pp. 248–255. Ieee . https://doi.org/10.1109/cvpr.2009.5206848

Ronneberger O, Fischer P, Brox T (2015) U-net: Convolutional networks for biomedical image segmentation. In: medical image computing and computer-assisted intervention–MICCAI 2015: 18th International Conference, Munich, Germany, October 5-9, 2015, Proceedings, Part III 18. pp. 234–241. Springer. https://doi.org/10.1007/978-3-662-54345-0_3

Geiger A, Lenz P, Urtasun R (2012) Are we ready for autonomous driving? the kitti vision benchmark suite. In: 2012 IEEE conference on computer vision and pattern recognition. pp. 3354–3361. IEEE. https://doi.org/10.1109/cvpr.2012.6248074

Kingma DP, Ba J (2014) Adam: A method for stochastic optimization. arXiv preprint arXiv:1412.6980 . https://doi.org/10.48550/arxiv.1412.6980

Kuznietsov Y, Stuckler J, Leibe B (2017) Semi-supervised deep learning for monocular depth map prediction. In: Proceedings of the IEEE conference on computer vision and pattern recognition. pp. 6647–6655. https://doi.org/10.1109/cvpr.2017.238

Zhou T, Brown M, Snavely N, Lowe DG (2017) Unsupervised learning of depth and ego-motion from video. In: Proceedings of the IEEE conference on computer vision and pattern recognition. pp. 1851–1858. https://doi.org/10.1109/aivr46125.2019.00059

Godard C, Mac Aodha O, Brostow GJ (2017) Unsupervised monocular depth estimation with left-right consistency. In: Proceedings of the IEEE conference on computer vision and pattern recognition. pp. 270–279. https://doi.org/10.1109/cvpr.2017.699

Cordts M, Omran M, Ramos S, Rehfeld T, Enzweiler M, Benenson R, Franke U, Roth S, Schiele B (2016) The cityscapes dataset for semantic urban scene understanding. In: Proceedings of the IEEE conference on computer vision and pattern recognition. pp. 3213–3223. https://doi.org/10.1109/cvpr.2016.350

Acknowledgements

This work was supported by the National Key Research and Development Program of China (No. 2021YFB3900804), the Research Fund of Ministry of Education of China and China Mobile (No. MCM20200J01).

Author information

Authors and Affiliations

Contributions

PG(First Author):Conceptualization, Methodology, Software, Investigation, Formal Analysis, Writing-Original Draft,Writing-Review & Editing,Visualization; Shuguo Pan (Corresponding Author):Conceptualization, Funding Acquisition, Resources, Supervision, Writing - Review & Editing; Peng Hu: Visualization, Investigation, Software; LP:Resources, Supervision,Writing-Review & Editing; BY:Writing-Review & Editing, Validation

Corresponding author

Ethics declarations

Conflict of interest

The authors declare that there is no Conflict of interest regarding the publication of this paper.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Guo, P., Pan, S., Hu, P. et al. Unsupervised Domain Adaptation Depth Estimation Based on Self-attention Mechanism and Edge Consistency Constraints. Neural Process Lett 56, 170 (2024). https://doi.org/10.1007/s11063-024-11621-0

Accepted:

Published:

DOI: https://doi.org/10.1007/s11063-024-11621-0